1. Introduction

Electricity load forecasting is essential for realizing an intelligent power grid [1] and for the economic operation of a power system. Because electric energy is difficult to store in large quantities, generation and consumption must occur almost simultaneously. It is therefore necessary to balance a stable power supply against load demand [2]. Accurate short-term load forecasting (STLF) can help ensure the safety of the smart grid and support sustainable urban development [3].

Generally, in terms of the time span, electricity load forecasting is divided into long-term load forecasting (LTLF), medium-term load forecasting (MTLF), and short-term load forecasting (STLF) [4]. LTLF forecasts the electricity load from several months to a year ahead. MTLF covers horizons from a week to a few months. In comparison, STLF forecasts the electricity load from the next hour to the next week [5]. STLF is a crucial component of power grid management, although it is a difficult task [6,7,8,9,10,11]. Several factors influence STLF performance: (1) Calendar factors can cause significant changes in electricity load. (2) Weather conditions, such as temperature and humidity, introduce large uncertainties and non-periodic effects. (3) Historical load reflects the strong randomness and trend characteristics of the load series.
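As a rough illustration of how the three factor groups above could be combined into a single model input, the following minimal sketch builds one feature record per forecast timestamp. It assumes hourly data; the function name `build_features`, the feature names, and the lag choices (1, 24, and 168 hours) are hypothetical and are not taken from the paper.

```python
from datetime import datetime
from typing import Dict, Sequence


def build_features(
    ts: datetime,
    temperature: float,
    humidity: float,
    past_load: Sequence[float],
    lags: Sequence[int] = (1, 24, 168),  # assumed: previous hour, day, week
) -> Dict[str, float]:
    """Combine calendar, weather, and historical-load inputs for one timestamp."""
    features = {
        # (1) calendar factors
        "hour": float(ts.hour),
        "day_of_week": float(ts.weekday()),  # Monday = 0
        "is_weekend": 1.0 if ts.weekday() >= 5 else 0.0,
        # (2) weather conditions
        "temperature": temperature,
        "humidity": humidity,
    }
    # (3) historical load: past_load[-1] is the most recent observation
    for lag in lags:
        if lag > len(past_load):
            raise ValueError(f"need at least {lag} past load values")
        features[f"load_lag_{lag}"] = float(past_load[-lag])
    return features
```

Calling `build_features(datetime(2024, 1, 6, 13), 21.5, 0.6, history)` with at least 168 hourly values in `history` returns a dictionary that can be passed to any downstream forecaster.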

Many STLF methods have been developed to model the historical load, and they have achieved high forecasting performance [12]. STLF models can be divided into statistical models and artificial intelligence (AI) models. Common statistical models include linear regression (LR) [13] and the autoregressive integrated moving average (ARIMA) [14]. Statistical models aim to build an explicit mathematical relationship between input and output. However, they struggle to produce accurate forecasts because the electricity load is often nonlinear and non-stationary [14]. The problem has become more serious as renewable energy is integrated into the smart grid.

Machine learning-based models have been adopted to alleviate this issue. The main machine learning models include the support vector machine (SVM) [15,16] and the artificial neural network (ANN) [17,18,19]. The SVM is based on structural risk minimization. Although the SVM can forecast the electricity load, selecting the best parameters is challenging, which limits its forecasting performance. The ANN can learn the relationship between input and output data, and the multi-layer perceptron (MLP) is one of the most widely applied ANN forecast models. However, the performance of an ANN-based model depends on its initial conditions and model complexity, and it is prone to over-fitting [19].

The deep learning method can overcome the training issue of the traditional neural network due to its strong nonlinear approximation ability [

20,

21,

22]. Deep learning, particularly CNN, has become one of the most widely applied forecast models [

23,

24], and the reason is that the global sharing ability of the CNN can effectively reduce the training period. Jiang et al. [

23] adopted CNN to extract the multi-scale load characteristics from the related household load data, improving model generalization. Ahmadian et al. [

24] designed the hybrid framework which consists of CNN and a modified grey wolf optimizer (EGWO). The parameters of the CNN are optimized by the enhanced EGWO algorithm, boosting its capacity for feature extraction. Nevertheless, the CNN-based methods still encounter some problems. Concretely, the requirement of adopting CNN is space invariance [

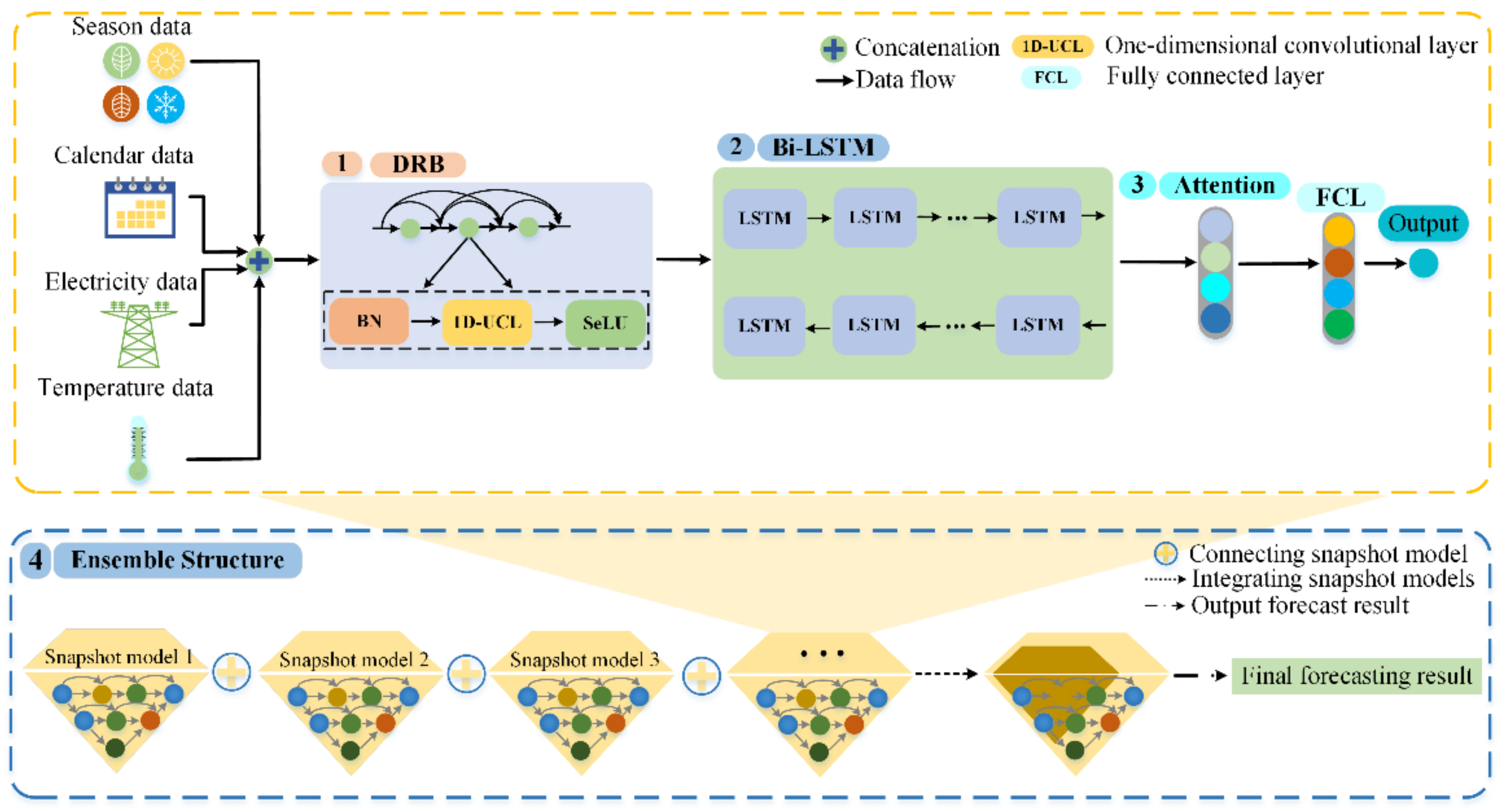

25], which is not satisfied by the collected data. The reason is that the input data usually include load features, calendar data, and other weather data. The different categories of the input data could offer various impacts on the forecasting ability, which is known as feature imbalance. In addition, the different timestamps include various information. This is named time imbalance. As a result, both imbalances result in the space variance of the input data. Thus, the paper proposes the densely residual block (DRB) based on the unshared convolutional neural network without the requirement of space invariance. It can efficiently capture the crucial features from input data. The LSTM and Bi-LSTM are recurrent neural networks that are capable of processing time series data [

26,

27]. Kong et al. [

7] designed an LSTM-based framework to forecast the residential load of the single energy user, which is essential for capturing volatile and uncertain load series. Wang et al. [

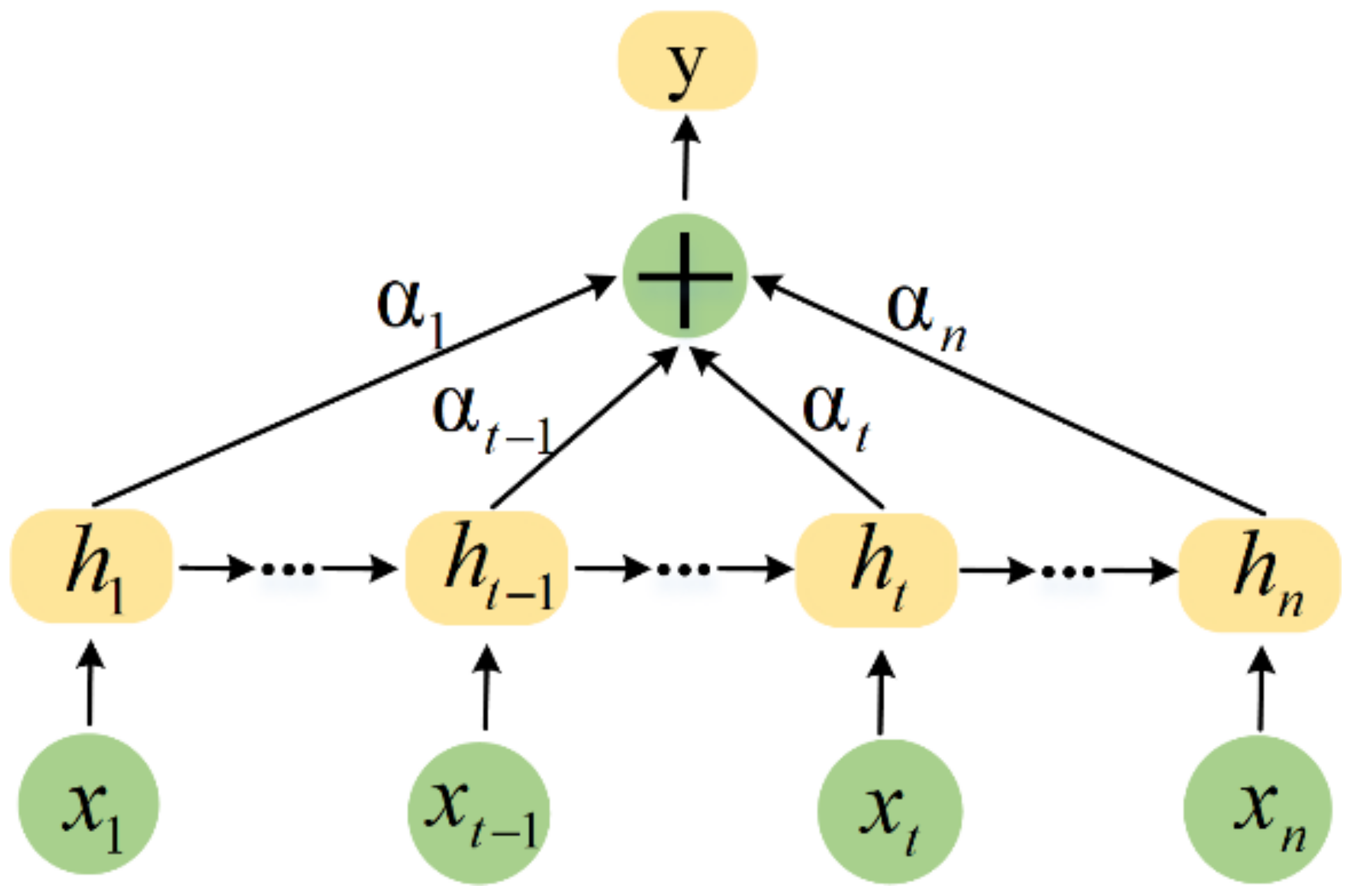

28] adopted the Bi-LSTM neural network to extract the nonlinear relationship between recent and past loads. Then, the attention mechanism is implemented to strengthen the influence of the crucial information.
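The difference between a shared and an unshared convolution discussed above can be made concrete with a small sketch. The code below is not the paper's DRB; it only illustrates, under simplifying assumptions (one input channel, one output channel, no padding, no bias), that a standard convolution reuses a single kernel at every position while an unshared (locally connected) convolution keeps a separate kernel per output position. The function names are hypothetical.

```python
from typing import List


def shared_conv1d(x: List[float], kernel: List[float]) -> List[float]:
    """Standard 1D convolution (valid padding): one kernel reused everywhere."""
    k = len(kernel)
    return [
        sum(kernel[j] * x[i + j] for j in range(k))
        for i in range(len(x) - k + 1)
    ]


def unshared_conv1d(x: List[float], kernels: List[List[float]]) -> List[float]:
    """Unshared 1D convolution: kernels[i] is used only at output position i."""
    k = len(kernels[0])
    n_out = len(x) - k + 1
    if len(kernels) != n_out:
        raise ValueError(f"expected {n_out} kernels, got {len(kernels)}")
    return [
        sum(kernels[i][j] * x[i + j] for j in range(k))
        for i in range(n_out)
    ]


x = [1.0, 2.0, 3.0, 4.0]
# Shared: the same averaging kernel slides over every window.
print(shared_conv1d(x, [0.5, 0.5]))  # [1.5, 2.5, 3.5]
# Unshared: each window has its own weights, so positions are treated differently.
print(unshared_conv1d(x, [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]))  # [1.0, 3.0, 3.5]
```

The unshared variant needs one kernel per output position, so its parameter count grows with the input length. That is the price paid for dropping the assumption that every position should be processed identically.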

It is widely accepted in the deep learning domain that hybrid models can produce more accurate forecasts than single models [29,30,31,32,33]. Lee et al. [31] designed a novel residual network that integrates 1D-CNN and Bi-LSTM layers, making it easier for the model to learn the crucial features from the input data. Their experiments demonstrate that the hybrid model achieves superior forecasting performance compared with LSTM and Bi-LSTM. Yang et al. [32] combined long short-term memory (LSTM) and an attention mechanism to forecast the heat load. The attention mechanism helps the model learn the relationship between temperature and historical heat load, yielding accurate load forecasts. Farsi et al. [33] proposed a parallel framework consisting of a 1D-CNN and an LSTM. The parallel CNN layer extracts spatial features from the input data, and the extracted features are then fed to the LSTM and dense layers to forecast the electricity load. However, ensemble methods usually encounter a few issues. For example, existing models cannot integrate the most accurate member models, and their training time is too long [30,34,35,36]. These problems reduce model generalization. Thus, this paper proposes an innovative two-stage ensemble strategy to overcome the above limitations. The contributions of the paper can be summarized as follows:

The paper designs an innovative densely residual block (DRB), based on residual connections and unshared convolutional layers, to efficiently extract the crucial features from the input data.

The paper proposes an innovative ensemble model comprising a densely residual block (DRB), attention-based bidirectional long short-term memory (Bi-LSTM) layers, and a two-stage ensemble strategy. The two-stage ensemble strategy helps the model combine the most accurate snapshot models, improving forecasting performance.
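The idea of combining only the most accurate snapshot models can be sketched as follows. This section does not specify the two stages, so the code shows just one plausible reading and should not be taken as the paper's procedure: first rank the snapshots by validation error, then average the forecasts of the best ones. The function names, the use of MAPE as the ranking metric, and the `top_k` parameter are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error in percent (actual values must be nonzero)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def select_and_average(
    val_actual: Sequence[float],
    val_preds: Sequence[Sequence[float]],   # one validation forecast per snapshot
    test_preds: Sequence[Sequence[float]],  # one test forecast per snapshot
    top_k: int,
) -> Tuple[List[int], List[float]]:
    """Keep the top_k snapshots by validation MAPE and average their test forecasts."""
    if not 1 <= top_k <= len(val_preds):
        raise ValueError("top_k must be between 1 and the number of snapshots")
    # Assumed stage 1: rank snapshots from most to least accurate on validation data.
    order = sorted(range(len(val_preds)), key=lambda i: mape(val_actual, val_preds[i]))
    chosen = order[:top_k]
    # Assumed stage 2: combine the selected snapshots with a simple mean.
    horizon = len(test_preds[0])
    combined = [sum(test_preds[i][t] for i in chosen) / top_k for t in range(horizon)]
    return chosen, combined
```

A simple mean is used here only because it is the easiest combiner to verify; weighted averaging or a learned combiner would fit the same interface.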

The rest of this paper is organized as follows:

Section 2 details the basic framework of the proposed method.

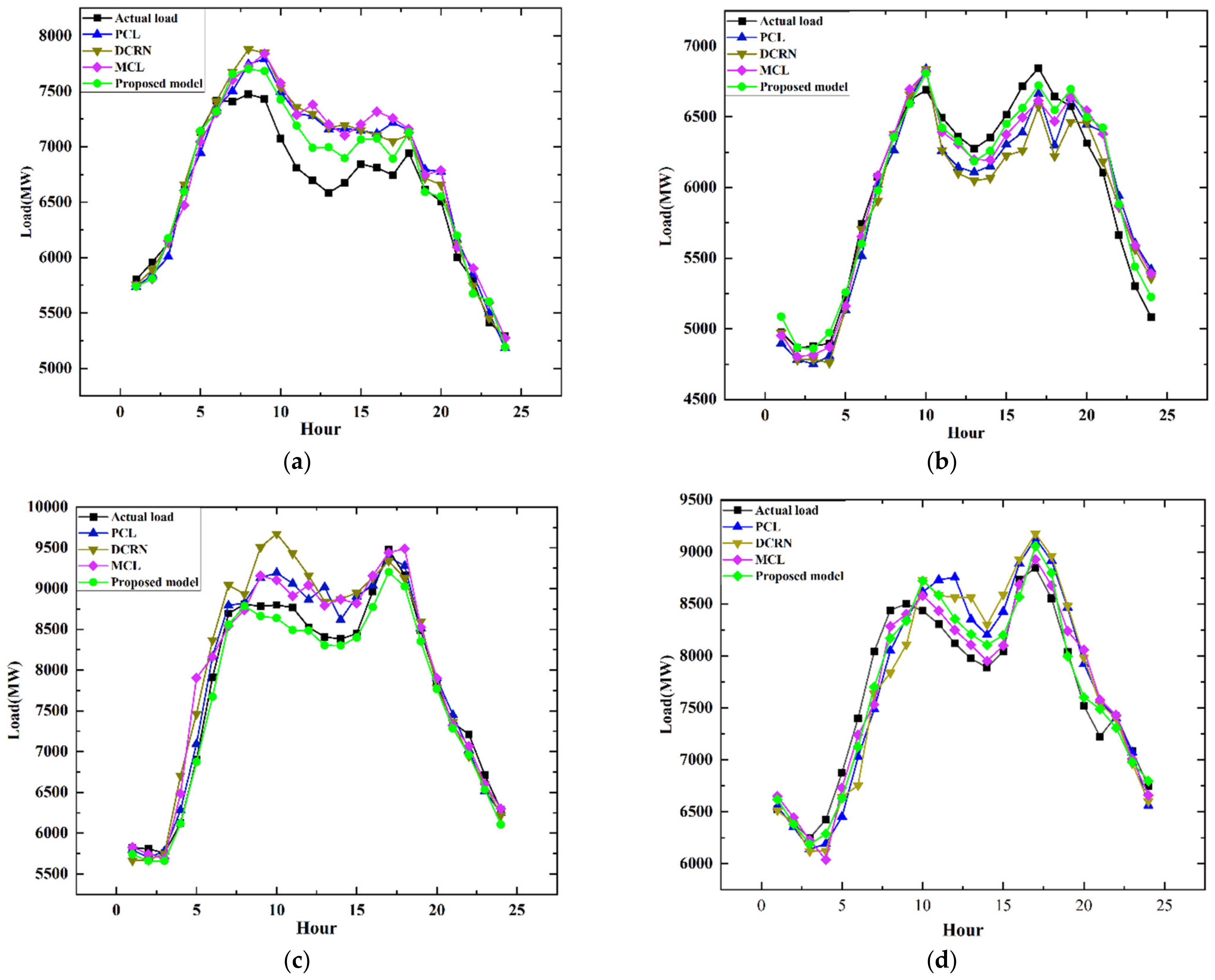

Section 3 presents the experimental setup and the simulation results on two datasets, the Australian dataset and the North American dataset.

Section 4 provides conclusions and future research directions.

4. Conclusions

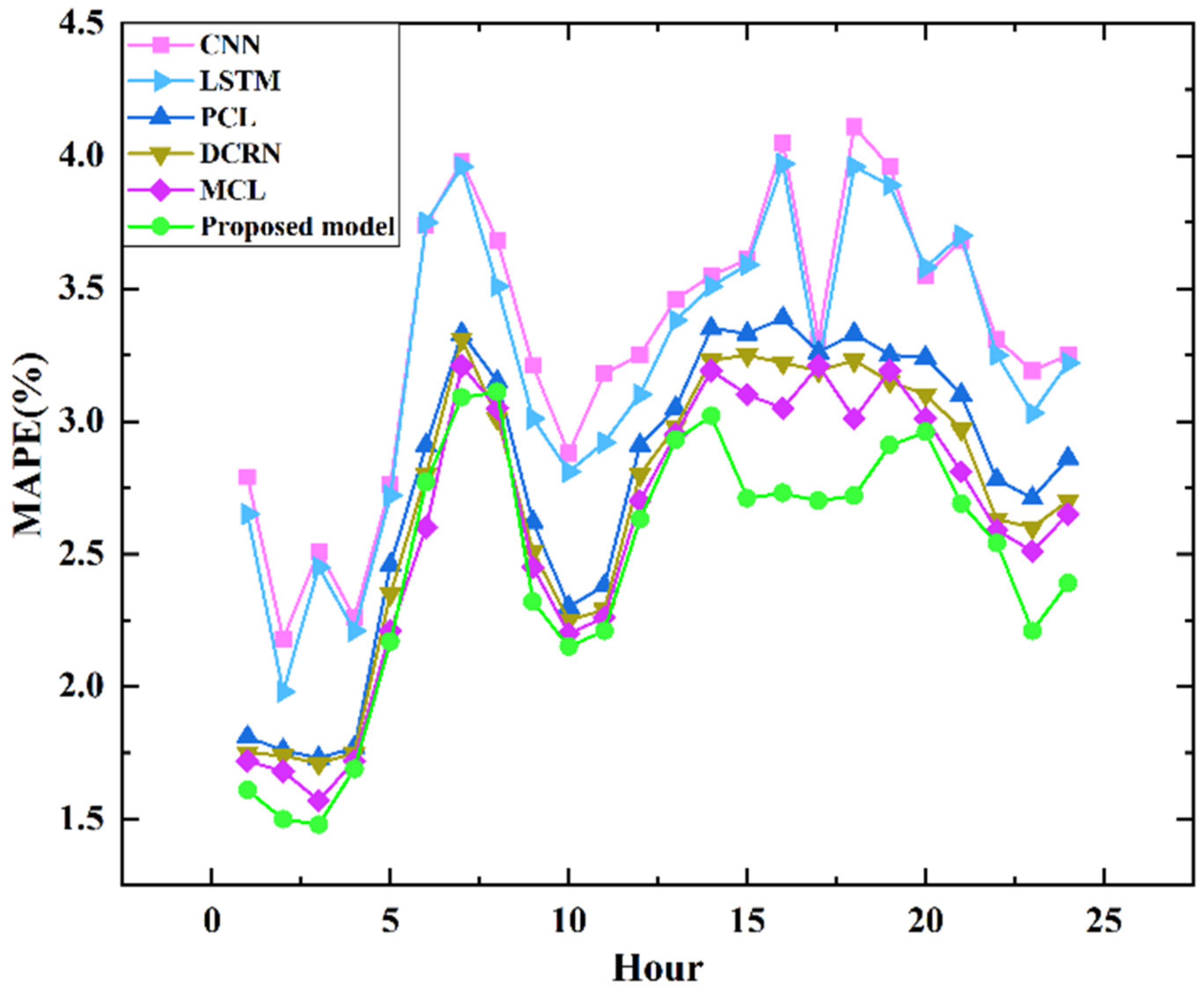

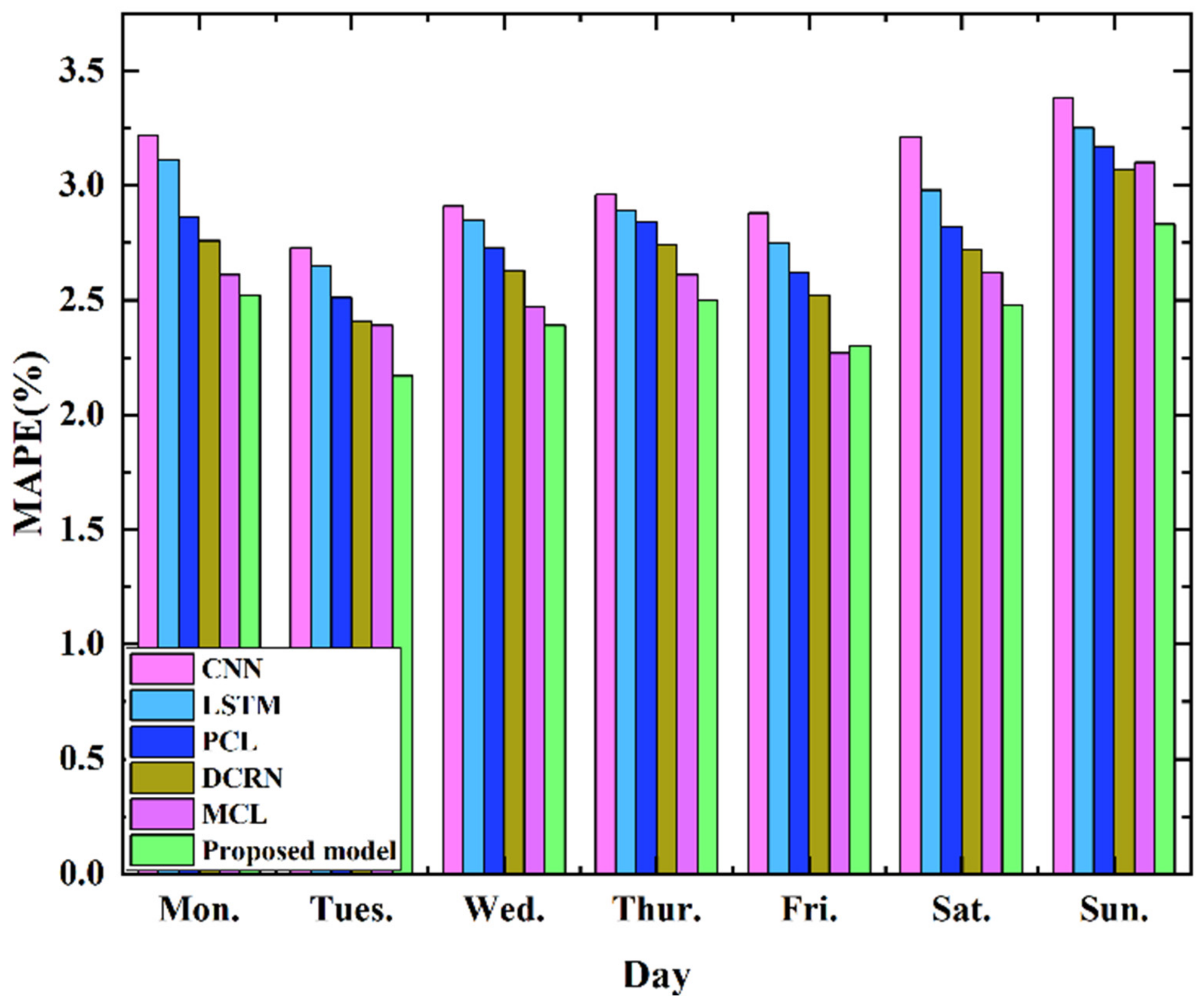

Short-term load forecasting is essential for the sustainable operation of the power system and helps mitigate problems caused by electricity supply shortages. This study therefore proposes an innovative STLF method consisting of the DRB, Bi-LSTM layers, and an attention mechanism. Specifically, the DRB, which is based on an unshared convolutional neural network, removes the space-invariance requirement that limits CNN-based models and can extract the crucial features from the input data. The paper reports the forecast results of the proposed model on two datasets. The experimental results demonstrate that the proposed model produces more accurate forecasts than the mainstream schemes.

This work still has some limitations. For instance, the proposed model is only used for deterministic load forecasting, which cannot express the uncertainty of the electricity load. Interval forecasting and probabilistic forecasting need to be implemented to address this problem. In future work, advanced optimization algorithms can be adopted to tune the parameters of the proposed method. External factors, such as wind speed and humidity, can also be introduced into the model to improve generalization.
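To indicate what a move from deterministic to probabilistic forecasting involves, the sketch below implements the pinball (quantile) loss, a standard score for quantile forecasts. It is not part of the proposed method. It only illustrates that training or evaluating against a quantile level `q`, rather than a point error, penalizes under- and over-forecasting asymmetrically. The function name and argument layout are assumptions.

```python
from typing import Sequence


def pinball_loss(actual: Sequence[float], predicted: Sequence[float], q: float) -> float:
    """Average pinball loss of quantile forecasts at level q (0 < q < 1)."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    total = 0.0
    for y, f in zip(actual, predicted):
        diff = y - f
        # Under-forecasts (diff >= 0) cost q per unit; over-forecasts cost (1 - q).
        total += q * diff if diff >= 0 else (q - 1.0) * diff
    return total / len(actual)
```

Forecasting two quantile levels, for example 0.05 and 0.95, and scoring each with this loss yields a 90% prediction interval. At `q = 0.5` the loss reduces to half the mean absolute error.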