Abstract

Emerging information technologies such as the Internet of Things (IoT) are being applied ever more deeply in education, and learning analytics based on children’s games is gradually moving toward practical application. However, few empirical studies have examined, at the micro level, how emerging information technology and learning analytics methods can be used to evaluate young children’s learning processes and learning outcomes. This study examines preschool children’s analogical reasoning abilities, which reflect their levels of thinking and processing abilities. Using a decision tree model from learning analytics, the study analyzes the process data and result data of children’s IoT-based analogical reasoning games and constructs a classification model of preschool children’s analogical reasoning. The results indicate that learning analytics of analogical reasoning based on IoT-mediated games is feasible and effective.

1. Introduction

Sustainable development is widely recognized as a pressing issue in modern society [1], and early education is increasingly recognized as an important component of sustainable education. Quality education and parenting are particularly important during early childhood [2], when they can provide effective support for children’s development. New educational technologies and methods create more opportunities for supporting sustainable early childhood development.

Within the context of education informatization, emerging technologies such as the Internet of Things and artificial intelligence are gradually being applied in education management and teaching, and they have provided substantial support for expanding learning and assessment methods. The combination of information technology and education has altered not only the educational landscape but also the methods of teaching and learning [3], and it offers numerous opportunities for the growth of learning analytics [4]. The prevailing trend in schools is to apply learning analytics in ways that promote the full integration of IT and education. However, the majority of earlier research has focused on online courses, specialized web-based courses, and well-known learning content management systems [5], with relatively little research on offline learning, particularly for young children. On the one hand, the learning styles and environments of young children differ vastly from those of university students and of elementary and secondary school students. Young children’s learning is informal, emphasizes interaction with the real world, and takes play as its primary activity. On the other hand, the application of emerging information technology in preschool education is still concentrated at the level of conceptual design and educational management, and empirical research at the micro level, such as evaluation of the process and effects of young children’s learning, is lacking.

In recent years, the development of IoT technology and research on its application in education have made it possible to evaluate the learning processes and learning outcomes of young children. IoT technology both preserves children’s interaction with the real world and collects and records data on the process and outcomes of children’s learning in play, providing dynamic and diverse data for learning analytics with preschool children. Learning analytics is a multidisciplinary method that incorporates data processing, enhanced learning techniques, educational data mining, and data visualization. The goal of learning analytics is to match educational challenges to an individual’s needs and skills by offering interventions, such as feedback and learning content, that can provide direction for enhancing the learning process and predicting learning outcomes [6,7,8]. However, technology may be employed merely as a recording tool instead of as a constructive augmentation for assessment-based learning [9]. With the help of IoT technology and learning analytics, therefore, the process and outcome data of preschool children’s learning in play can be effectively recorded and analyzed to provide strong support for teachers’ education and teaching as well as for children’s further learning and development, which is also an appropriate way to achieve educational informatization in preschool education.

2. Literature Review

2.1. Study of Learning Analytics Applied to Micro Learning Processes

Learning analytics has shifted from theoretical research, such as the exploration of principles, to practical application research, such as learning behavior analysis and learning prediction based on educational big data. This research focuses on the practical value of data models and explores the evaluation of teaching and learning and the prediction of learning outcomes behind the data [10,11]. Learning behavior diagnosis and intelligent monitoring of the learning process are among the research directions of learning analytics and data mining, and it is crucial to analyze how learning occurs [10]. Currently, learning analytics is applied mainly in online or blended learning environments, with secondary school and university students as research subjects, to predict students’ academic performance or issue early warnings [12,13,14,15] and to explore students’ online learning motivation or self-regulation characteristics [16,17,18]. Overall, with the development of online learning spaces, empirical learning analytics studies based on actual courses and teaching, using technologies such as intelligent recording systems, IoT sensing technologies, and online learning and management platforms, have gradually increased. However, analysis of individual learning characteristics and behavior remains insufficient [19], and studies that can support teaching and learning at the micro level are still lacking. In preschool education in particular, research on digital game-based learning using online platforms and resources is beginning to emerge, but learning analytics research on real, physical game-based learning supported by IoT technology is rare.

2.2. Research on the Application of Information Technology in Game-Based Learning and Assessment for Preschool Children

There is a deeper integration and development of artificial intelligence technology and learning analysis [20], and in the era of artificial intelligence, educational assessment should integrate learning and diagnostic aspects, record learning process data and assessment process data through technology, focus on the formative nature of assessment, compute, model and visualize various behaviors of children, and achieve dynamic, developmental and comprehensible assessment. On the one hand, it assesses students’ level of proficiency, and on the other hand, it supports further learning and reflection of individuals through appropriate forms of feedback [21]. With the dissatisfaction with traditional standardized tests and the increasing penetration of information technology in the field of education, game-based learning and assessment has received increasing attention [22]. Game-based assessment can examine children’s learning and development in an ecological context. The results of game-based assessment can vividly describe children’s relevant strengths and weaknesses [23], have good ecological validity, flexibility, and can motivate children [24], and the process of game-based assessment is also the process of children learning through play. There are currently learning analyses of students’ behavioral trajectories and learning behaviors based on the process of educational games in the field of primary and secondary schools, exploring the performance of students at different academic levels in educational games and the impact of behavioral patterns in educational games on academic performance [25,26].

In early childhood education, learning basic concepts through play can improve children’s early literacy, reading, writing, and math skills [27]. Learning analytics and game-based learning and assessment can encourage the creation of more effective educational games that also help to maximize the learning process [28]. Learning media and collaborative learning models from basic education can be used in early education through platforms such as desktop computers, laptops, and tablets. Young children can improve their knowledge and skills through the use of new technologies [29]. Research has found that children aged 2–5 spend a lot of time on devices such as tablets or smartphones [30,31], because the multimodal functions of these devices (e.g., auditory, visual, and touch) are very accessible to young children through swiping, dragging, and tapping [32]. Tablets and smartphones support learning and exploration with touch-based digital tools that keep children interested [33,34]. The latest technology provides learning experiences that give children the opportunity to gain more knowledge and fun from playing games on tablets or smartphones [35].

It should be noted that in learning and evaluation based on digital games, children are mainly in contact with a screen and cannot actually touch and manipulate objects. With the emergence of information technologies such as the Internet of Things and their educational applications, integrating IoT technology into game-based learning and evaluation ensures children’s physical interaction with games, in line with the cognitive development characteristics of children. These technologies are also able to provide more detailed and efficient data, enabling automated data collection and transmission. In current research, early childhood games based on digital games or IoT technology focus on learning results and ignore the analysis and evaluation of the learning process. Game-based evaluation also yields more diverse data types, and data mining technology provides the conditions for processing and analyzing them. With the development of data analysis technology, more process data and fragmented, personalized data can be analyzed and mined as variables in statistical models. Currently, educational data mining has a wide range of applications in classroom teaching behavior analysis and online learning analysis [36,37,38]. Educational data mining techniques such as decision trees and association rules provide analytical methods that support game-based learning and evaluation.

Analogical reasoning is a central mechanism of human learning [39], essential for the acquisition and integration of new knowledge and skills [40], and essential for other higher-order cognitive tasks such as concept shifting, scientific learning, creative thinking, problem solving, assessment, and decision making [41,42,43]. Analogical reasoning embodies important process abilities and levels of thinking in children, and it is important to analyze the processes and performance of learning in analogical reasoning games in young children. An analysis of the relevant literature reveals that few empirical studies have been conducted on the application of learning analytics and emerging information technologies to preschool children’s learning and developmental assessment, and that the application of IoT technology can automate the recording and transmission of data, and also provide technical support for the recording and immediate feedback of children’s learning and play processes. Currently, empirical research on the application of IoT technology to preschool children’s education, teaching, learning and development evaluation is urgently needed to meet the trend of education modernization, support children’s learning and development, and achieve the purpose of sustainable development education.

The research team previously classified young children’s analogical reasoning ability into four levels according to their mastery of cognitive attributes [44]; the levels are described in Section 3. Previous standardized tests of analogical reasoning are summative evaluations: the evaluation process lacks feedback and is based mainly on children’s operational results, and the evaluation data are analyzed mainly through classical test theory and item response theory. Building on existing research, this paper constructs and validates a game-based approach to analyzing and evaluating preschool children’s analogical reasoning learning, using learning analytics methods and IoT technology. For young children, the process of analogical reasoning learning is also the process of evaluation, and the game provides automated feedback so that children can make their own adjustments according to the prompts. The learning analytics approach incorporates process data and outcome data from young children’s analogical reasoning play into a classification prediction model, in order to explore effective learning analytics models that can evaluate and support young children’s learning and development in everyday play and learning. The study addresses the following research questions.

- In what ways is IoT technology able to contribute to the design of analogical reasoning play materials that collect and transmit data automatically?

- What analogical reasoning game process data and outcome data can be incorporated into classification prediction models?

- What is the accuracy of classifying children’s analogical reasoning skills based on the outcome data and the process data of analogical reasoning games?

3. Materials and Methods

3.1. Instruments for Research

The standardized analogical reasoning test instrument from the previous study and the game materials were adapted from the animal figure matrix task of Siegler and Svetina and Stevenson et al. [45,46], a classical analogical reasoning task. The task uses figures of horses and elephants, animals that are familiar to children. The figures vary along four dimensions, namely size, color (red, yellow, and blue), orientation (toward the left, toward the right), and species, consistent with the dimensions of variation in Siegler’s study, for a total of 24 animal figures. To make the materials easy to manipulate and fun, the study printed the 24 animal figures on a set of 4 dice, each a cube with 4.5 cm edges and 6 animal figures, for a total of 24 different animal figures. Because every face of every die is different, the materials offer more variation and are more interesting, which makes them well suited as learning materials for young children.
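The count of 24 figures follows from crossing the four dimensions. A minimal Python sketch of that enumeration is given here for illustration; the two size labels (`"large"`, `"small"`) and the way figures are dealt onto dice are assumptions, since the text states only the total of 24 figures and 4 dice with 6 faces each.

```python
from itertools import product

# Dimension values. The two size labels are an assumption: the text gives
# only the total of 24 figures, which implies two sizes.
SIZES = ("large", "small")
COLORS = ("red", "yellow", "blue")
ORIENTATIONS = ("left", "right")
SPECIES = ("horse", "elephant")

# Every figure is one combination of the four dimensions.
figures = list(product(SIZES, COLORS, ORIENTATIONS, SPECIES))

# Deal the figures onto 4 dice with 6 faces each. This assignment is
# arbitrary and hypothetical; the study's actual layout is not described.
dice = [figures[i:i + 6] for i in range(0, len(figures), 6)]

print(len(figures), "figures on", len(dice), "dice")
```

The product 2 × 3 × 2 × 2 gives the 24 distinct figures, and slicing them into groups of six yields the four dice.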

In a game-based analogical reasoning assessment, the specific analogical reasoning items and the number of tasks completed vary from child to child, whereas in a standardized analogical reasoning test, every child completes the same number of tasks (4 practice tasks and 20 formal tasks). Current analogical reasoning tests are presented in both verbal and non-verbal (graphics and pictures) forms. The verbal form requires a higher level of prior knowledge and reading skills, so graphics and pictures are more appropriate for younger children. The animal figures used in this study, horses and elephants, are familiar to children and are non-verbal, which avoids cultural restrictions and makes the materials widely suitable.

The analogical reasoning game uses an “analogical reasoning operation box” with an automatic feedback function. Sixteen animal figure dice are needed: four different dice form one set, and there are four sets in total. Each set of dice corresponds to one of the four compartments of the analogical reasoning box (compartments 1, 2, 3, and 4 from left to right). The child chooses any one of the four dice for compartment 1 and places it there with any face up, and then chooses any animal from the four dice corresponding to compartment 2 as the friend of the animal in compartment 1. The first two animals are a pair of good friends. The child then selects a third animal in the same way and helps it find a friend according to the relationship between the first two animals, as shown in Figure 1. If the child finds the right friend, the bottom of the fourth compartment lights up green; if the child finds the wrong friend, it lights up red. Children can adjust their choices according to the feedback and test again. The game time is not limited, and children can decide for themselves how long to play. Most children played for about 25–30 min, including about 5 min for becoming familiar with the materials and for explanation of the game rules and operating procedures. To better stimulate children’s interest and enthusiasm for participation, the game adds a reward mechanism: children obtain a sticker every time they find the right friend, and a certain number of stickers can be exchanged for animal models. Both the analogical reasoning game and the earlier standardized test were administered by doctoral students in preschool education who had received systematic training.

Figure 1.

Analogical reasoning game box.
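The feedback rule of the game box can be sketched in Python. The sketch below is illustrative only: it assumes that the fourth animal is "the right friend" when the third-to-fourth pair changes exactly the same dimensions, with the same values, as the first-to-second pair. The `Figure` type, the function names, and the size labels are hypothetical and are not taken from the study's firmware.

```python
from typing import NamedTuple


class Figure(NamedTuple):
    size: str
    color: str
    orientation: str
    species: str


def changes(a: Figure, b: Figure) -> dict:
    """Map each dimension that differs between a and b to its (from, to) pair."""
    return {dim: (x, y) for dim, x, y in zip(Figure._fields, a, b) if x != y}


def feedback(a: Figure, b: Figure, c: Figure, d: Figure) -> str:
    """Return 'green' if c:d repeats the a:b relationship, otherwise 'red'.

    Assumed rule (not confirmed by the source): both pairs must change the
    same dimensions in the same way.
    """
    return "green" if changes(a, b) == changes(c, d) else "red"


a = Figure("large", "red", "left", "horse")
b = Figure("small", "red", "left", "horse")        # only size changes
c = Figure("large", "blue", "left", "elephant")
d_right = Figure("small", "blue", "left", "elephant")
d_wrong = Figure("large", "blue", "right", "elephant")

print(feedback(a, b, c, d_right))  # green
print(feedback(a, b, c, d_wrong))  # red
```

Under this assumption, `len(changes(a, b))` also gives the number of dimensions transformed in a task, which is the quantity the level definitions in Section 3.3 refer to.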

3.2. IoT-Enabled Automatic Capture and Transmission of Game Data

The study uses optical image recognition, one of the technologies of the Internet of Things, to identify the analogical reasoning materials. Optical identification (OID) is an optical image recognition technology that serves as one implementation of the Internet of Things. Each OID invisible code is a graphic made up of many tiny dots arranged according to specific rules and corresponding to a specific set of values, and the code can be hidden under the color of the printed material. Each face of the analogical reasoning dice is therefore labeled with an invisible code that identifies the corresponding animal figure. To identify and transmit the data, the study designed the analogical reasoning box in which the dice are placed. The box has four compartments in its upper part, and components such as sensors, batteries, and microcomputers are hidden in its base. Each compartment has a point-and-shoot-like reading head (Sonix II technology) at the front and bottom left, which recognizes and reads the topmost animal figure of the die through a preset algorithm, converts the light signal into an electrical signal through a sensor, and transmits it to the computer.

The black box recognized the information on the dice, and the data were recorded, stored, and transmitted by an intelligent robot. The robot has a screen on its head on which the data can be displayed, so that the researcher or teacher can immediately see the record of children’s game data. To avoid distracting the children and influencing their analogical reasoning games, the robot was not introduced in detail during the game; the children were told only that the robot “beiya” was playing with them. Children’s play, data recording, and data transmission took place simultaneously, as shown in Figure 2.

Figure 2.

Analogy game data collection and transmission process.

3.3. Research Subjects

Subjects were randomly selected from 539 children aged 4~6 years who took the standardized test of analogical reasoning for preschool children. In China, preschoolers aged 3~6 years are educated in kindergartens, which are divided into small classes (3~4 year olds), middle classes (4~5 year olds), and large classes (5~6 year olds) according to the age of the children. This study was conducted in the fall semester, when the children in the small classes had been in kindergarten for less than six months and were mainly adjusting to kindergarten. Communication with the small class teachers and observation showed that children in the small classes had difficulty completing the analogical reasoning game alone, so the study took preschool children over 4 years old, i.e., 4~6-year-old preschool children, as the research subjects. As shown in Table 1, 74 children (40 boys and 34 girls) from two classes in the kindergarten participating in the research project were selected as participants, with no significant difference between the ages in months of boys and girls (p > 0.05). According to the results of the previous large-sample study, the children were divided into four levels of analogical reasoning: level 1, unable to complete the analogical reasoning task; level 2, able to complete only tasks similar to the practice task, i.e., able to understand the rules but not to transfer them; level 3, able to complete tasks with transformations on two dimensions (the dimensions being size, color, orientation, and species of animals); and level 4, able to complete tasks with transformations on three or more dimensions. All 74 children completed both the analogical reasoning standardized test and the analogical reasoning game, with a 3- to 5-day interval between the two tasks.

Table 1.

Participant characteristics.

3.4. Data Acquisition and Preparation

Data acquisition is an important part of game-based analogical reasoning learning and evaluation. This study identifies specific types of data based on the purpose of the evaluation and the possibility of technical implementation, and the key to the evaluation is the method of data processing and analysis, especially when process and outcome data coexist. Information technology-based learning and evaluation are increasingly available, and the amount and diversity of available data from learners, teachers, educational environments and learning environments is enormous. The decision tree is a basic classification and regression method that is widely used. The study uses a decision tree model to classify the performance of children’s analogical reasoning games, and the application of the decision tree model begins with the acquisition and preparation of data.

Data acquisition was based on the analogical reasoning toys designed with the help of IoT technology, and data preparation was performed on the raw data and preliminary statistics. In the game-based analogical reasoning learning analysis, the data from preschool children’s analogical reasoning games included outcome data and process data. The outcome data were mainly children’s game scores or game score rates, together with the analogical reasoning levels derived from the standardized tests of analogical reasoning. The process data included game duration, total number of tests, total number of adjustments, average game duration, average number of tests, and average number of adjustments.
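As an illustration of this data preparation step, the following Python sketch derives the total and average indicators from per-task records. The record layout (`TaskRecord`) and the definitions used here are assumptions: the score rate is taken as correct tasks divided by tasks attempted, and each average as the total divided by the number of tasks. The source does not spell out these formulas.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One constructed analogy task (hypothetical log format)."""
    duration_min: float   # time spent on the task, in minutes
    tests: int            # times the child asked the box for feedback
    adjustments: int      # times the child changed a die after feedback
    correct: bool         # whether the task ended with green feedback


def summarize(records: list) -> dict:
    """Derive outcome and process indicators for one child."""
    n = len(records)
    if n == 0:
        raise ValueError("at least one task record is required")
    total_duration = sum(r.duration_min for r in records)
    total_tests = sum(r.tests for r in records)
    total_adjustments = sum(r.adjustments for r in records)
    score = sum(r.correct for r in records)
    return {
        "game_score": score,
        "score_rate": score / n,              # assumed definition
        "total_duration": total_duration,
        "avg_duration": total_duration / n,   # assumed per-task average
        "total_tests": total_tests,
        "avg_tests": total_tests / n,
        "total_adjustments": total_adjustments,
        "avg_adjustments": total_adjustments / n,
    }


# Made-up records for illustration only, not study data.
records = [
    TaskRecord(1.0, 2, 1, True),
    TaskRecord(2.0, 3, 2, False),
    TaskRecord(1.5, 1, 0, True),
    TaskRecord(1.5, 2, 1, True),
]
summary = summarize(records)
print(summary)
```

Because children complete different numbers of tasks, normalizing by the number of tasks makes the indicators comparable across children.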

Based on the results of the standardized test of analogical reasoning in the preschool period, preschoolers were classified into four levels of analogical reasoning, which served as the Y values in the decision tree. The study then determined how best to classify children’s analogical reasoning levels based on the behavioral data from their analogical reasoning games. The decision tree model in data mining can automatically select the splitting attribute and its value for each splitting rule according to the characteristics of the data. The principle of the decision tree algorithm is to use the data set to calculate an impurity index (entropy) for candidate splits on each feature attribute, select the feature attribute and value that produce the largest reduction in impurity (the largest information gain) as the splitting attribute, divide the data set at that value so that samples greater than the value fall into one branch and the remaining samples into another, and repeat the process until further splitting cannot improve the classification accuracy, at which point the best decision tree model is obtained [47].
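The splitting criterion can be made concrete with a small Python sketch. It computes the entropy $H(S) = -\sum_k p_k \log_2 p_k$ of a set of labels and the information gain of a threshold split, $H(S) - \sum_j \frac{|S_j|}{|S|} H(S_j)$, and then picks the threshold with the largest gain. The function names and the toy data are illustrative assumptions, not the study's code or data.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Reduction in entropy from splitting at `values <= threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    if not left or not right:
        return 0.0  # a split that separates nothing gains nothing
    n = len(labels)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(labels) - weighted


def best_threshold(values, labels):
    """Try midpoints between sorted unique values; return the best one."""
    xs = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    if not candidates:
        return None  # all values identical, nothing to split on
    return max(candidates, key=lambda t: information_gain(values, labels, t))


# Toy data (not from the study): score rates and reasoning levels.
score_rates = [0.2, 0.4, 0.5, 0.7, 0.8, 0.9]
levels = [1, 1, 1, 4, 4, 4]

t = best_threshold(score_rates, levels)
print(t, information_gain(score_rates, levels, t))
```

In this toy case the midpoint between 0.5 and 0.7 separates the two levels perfectly, so the gain equals the full entropy of the parent set (1 bit). A decision tree applies the same search to every feature at every node.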

4. Results

The decision tree model in data mining was used to construct a classification model of children’s analogical reasoning learning, and to test the possibility and validity of evaluating preschool children’s analogical reasoning ability through analogical reasoning game behavior data. According to the decision tree model, the data mining and data analysis stages mainly include feature attribute screening, model construction, model testing, and model analysis and interpretation.

4.1. Screening of Predictor Variables

Feature attribute screening aims to identify key indicators for evaluating preschoolers’ analogical reasoning ability. Attribute selection means choosing, from the process and outcome data of children’s analogical reasoning games and from demographic variables, the feature attributes that correlate well with the classification levels derived from the standardized tests of analogical reasoning; this step is important for model construction. Gender, grade level, total game score, game score rate, total game duration, total number of tests, total number of adjustments, average game duration, average number of tests, and average number of adjustments were identified during the data preparation phase as the feature attributes available for selection. In general, feature attributes can be filtered by correlation analysis, manual judgment, and exploratory model building. The results of the correlation analysis between the classification results of the standardized measure of analogical reasoning (the Y-values in the decision tree analysis) and the available feature attributes are shown in Table 2. Except for the average duration, which was not significantly correlated with the Y-values, all the feature attributes were significantly correlated with the Y-values, with correlations ranging from weak to moderately strong.

Table 2.

Correlation between Y-values and feature attributes.
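A correlation screening of this kind can be sketched in plain Python. The source does not state which coefficient was used; because the Y-values are ordinal levels, the sketch assumes Spearman's rank correlation (Pearson correlation computed on average ranks). The helper names and the toy numbers are hypothetical.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; assumes neither input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation between a feature and the level labels."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy values for illustration only, not study data.
score_rate = [0.30, 0.45, 0.60, 0.70, 0.85, 0.90]
level = [1, 1, 2, 3, 4, 4]
print(round(spearman(score_rate, level), 3))
```

Each candidate attribute would be passed through `spearman` against the level labels, and attributes with weak or non-significant correlations become candidates for exclusion. A significance test is omitted here for brevity.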

Among the feature attributes, game score, game duration, number of tests, and number of adjustments are each available as a total and as an average. Because children complete different numbers of tasks in analogical reasoning games and have different total game durations, the game score rate, average game duration, average number of tests, and average number of adjustments better reflect individual children’s performance. Therefore, game score rate, average game duration, average number of tests, average number of adjustments, gender, and age in months were selected as the initial attribute features. In addition, exploratory decision tree modeling showed that models built on the average behavioral data were more accurate than models built on total behavioral data such as total duration. The gender and grade level of the children were significantly correlated with the Y value, but exploratory model construction showed that including gender and grade level in the attribute features did not increase the classification accuracy of the model. Moreover, the study aimed to judge children’s level of analogical reasoning from their specific performance in the analogical reasoning game, without including age and gender in advance. The final attribute features used for the decision tree analysis were therefore game score rate, average game duration, average number of tests, and average number of adjustments, for a total of four attribute features.

4.2. Classification Prediction Model Construction

4.2.1. Model Construction Process

The study selected “game score rate”, “average game duration”, “average number of tests”, and “average number of adjustments” as the four independent variables, and used the level from the analogical reasoning standardized test as the Y value, which is the predicted target attribute. Data input and analysis were carried out in Python using the Spyder IDE from the Anaconda distribution. The decision tree classifier in sklearn, “DecisionTreeClassifier”, was invoked in the Spyder editor to build a decision tree prediction model for classifying preschool children’s analogical reasoning levels. Model construction includes four stages: toolkit selection, data writing, model construction, and model visualization. The specific steps are as follows.

- (1) Toolkit selection. In the machine learning library sklearn, we identify the toolkits needed for model building, including the decision tree classifier “DecisionTreeClassifier”, “train_test_split” for dividing the proportion of training set and test set, “cross_val_score” for cross-validation and “metrics” for model evaluation.

- (2) Data writing, reading and extraction process. Using Python language, we entered the code in the editor to write, read and extract the data.

- (3) Model construction. The decision tree classifier uses information entropy (“entropy”) or Gini impurity (“gini”) as the criterion for splitting branches. The maximum depth of the tree is usually set by considering the number of feature attributes together with the sample size. The tree grows from the root node downward, generating child nodes level by level, until it reaches the maximum depth, at which point it stops growing and produces no more branches. The study compared maximum depths of 5 and 4 and found that the model fit the data better and the classification accuracy was higher when the maximum depth was set to 4. In addition, because the sample size of the study was small, setting the maximum depth to 4 helped to prevent over-fitting and an excessive number of nodes in the decision tree.

- (4)

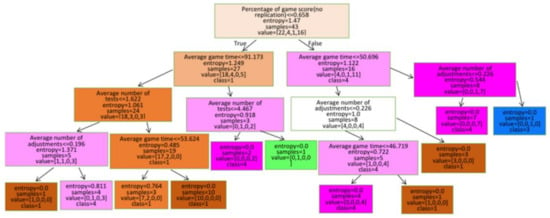

- Model visualization. Model visualization was used to present the constructed decision tree model by means of a tree. Visualization was mainly conducted with the help of graphviz package, and the visualized feature attributes were the above finalized game score rate, average game duration, average number of detections and average number of adjustments. The categories of the feature attributes predicting the final node rating were divided into four categories with the same level of Y-values. The final constructed decision tree classification model of preschoolers’ cognitive attribute mastery levels for analogical reasoning is shown in Figure 3.

Figure 3. Classification model of analogical reasoning in preschool children.


As shown in Figure 3, the decision tree model is an inverted tree diagram, with the root at the top and the leaves at the bottom, and the leaves are labeled with the classification results. The classification rules of the decision tree model are described by statements of the form “if ... then ...”, and according to Figure 3, there are 11 specific classification rules.
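The construction steps and the "if ... then ..." reading of the tree can be illustrated with a short scikit-learn sketch. It is not the study's code: the data are synthetic placeholders, the column names are hypothetical, and the rules are printed with `export_text` as a plain-text stand-in for the graphviz figure. Only the classifier, the entropy criterion, and the maximum depth of 4 come from the text.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

# Hypothetical column names for the four selected attributes.
FEATURES = ["score_rate", "avg_duration", "avg_tests", "avg_adjustments"]

# Synthetic stand-in data (NOT the study's data): 74 children, 4 features.
rng = np.random.default_rng(0)
X = np.column_stack([
    rng.uniform(0.0, 1.0, 74),   # game score rate
    rng.uniform(0.5, 3.0, 74),   # average duration (min)
    rng.uniform(1.0, 6.0, 74),   # average number of tests
    rng.uniform(0.0, 3.0, 74),   # average number of adjustments
])
# Placeholder levels 1-4 tied to the score rate so the tree has signal.
y = np.digitize(X[:, 0], [0.25, 0.5, 0.75]) + 1

# Entropy criterion and max_depth=4, as described in the text.
clf = DecisionTreeClassifier(criterion="entropy", max_depth=4, random_state=0)
clf.fit(X, y)

# Each root-to-leaf path in this printout is one "if ... then ..." rule.
rules = export_text(clf, feature_names=FEATURES)
print(rules)
print("number of rules (leaves):", clf.get_n_leaves())
```

Counting the leaves of the fitted tree gives the number of classification rules; on the study's data this count is reported as 11, while the synthetic data here will give a different number.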

4.2.2. Classification and Prediction Model Testing

The training set samples were used for model construction, and the test set samples were used for model testing. In model testing, the test set samples were classified with the decision tree model derived from the training set, in order to test the classification accuracy of the model and to derive the relative importance coefficients of the feature attributes. The relative importance coefficients of the four attributes of game score rate, average duration, average number of tests, and average number of adjustments were 0.218, 0.321, 0.229, and 0.233, respectively. The test results indicate that the model can classify preschool children’s analogical reasoning levels fairly accurately from the behavioral data in children’s games.
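A hedged sketch of this testing step, using the sklearn tools named in Section 4.2.1, is shown here. The data are synthetic, and the 70/30 split, the 5-fold cross-validation, and the column names are assumptions, since the source does not report these settings.

```python
import numpy as np
from sklearn import metrics
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Hypothetical column names for the four selected attributes.
FEATURES = ["score_rate", "avg_duration", "avg_tests", "avg_adjustments"]

# Synthetic stand-in data (NOT the study's data).
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(74, 4))
# Placeholder levels 1-4 that depend on the first two columns.
y = np.where(X[:, 0] > 0.6, 3, 1) + (X[:, 1] > 0.5).astype(int)

# Assumed 70/30 split; the source does not state the proportion.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

clf = DecisionTreeClassifier(criterion="entropy", max_depth=4, random_state=0)
clf.fit(X_train, y_train)

# Classification accuracy on the held-out test set.
accuracy = metrics.accuracy_score(y_test, clf.predict(X_test))

# Assumed 5-fold cross-validation on the full synthetic sample.
cv_scores = cross_val_score(
    DecisionTreeClassifier(criterion="entropy", max_depth=4, random_state=0),
    X, y, cv=5,
)

# Relative importance coefficients; they are normalized to sum to 1.
importances = dict(zip(FEATURES, clf.feature_importances_))

print("test accuracy:", accuracy)
print("cross-validation mean:", cv_scores.mean())
print("importances:", importances)
```

The importance coefficients returned by `feature_importances_` are normalized, which is consistent with the four reported values (0.218, 0.321, 0.229, 0.233) summing to 1 up to rounding.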

4.2.3. Classification and Prediction Model Analysis

Based on the decision tree classification model, the study constructed a classification model of the target attribute Y on four attribute features X, which included 11 judgment rules. Specifically, the game score rate, average game duration, average number of tests, and average number of adjustments in preschool children’s analogical reasoning games were able to indicate children’s level of analogical reasoning. The model takes into account both the outcome and the process performance of children’s games. Children with a game score rate less than or equal to 0.658 were distributed across level 1, level 2, and level 4, and children with a game score rate greater than 0.658 were distributed across level 1, level 3, and level 4. This indicates that the game score rate is an important basis for judgment, but not the only one. Children’s analogical reasoning level cannot be judged from the score rate alone; their play process data, both above and below the cutoff point of 0.658, must also be considered.

Under the judgment rules for analogical reasoning level 1, children whose game score rate was not higher than the 0.658 cutoff belonged to level 1 if their average duration was less than one and a half minutes and their average number of tests was more than two, regardless of the average number of adjustments. They also belonged to level 1 if the average duration was less than one and a half minutes, the average number of tests was not more than two, and the average number of adjustments was less than one. In other words, when the game score rate was not above the cutoff and the average game duration was short, children tended to belong to level 1 either when the number of tests was high or when both the number of tests and the number of adjustments were low. In the first case, children spent little time thinking while constructing and completing analogical reasoning tasks: task construction was fairly random, the task was tested many times, the judgment button was pressed frequently within a short period, and there was little time for reflection and learning. Children with few tests and adjustments, on the other hand, may have been unable to adjust the task because they did not know how to respond after receiving error feedback. Children were also classified as level 1 if their game score rate was above the cutoff, their average game duration was less than 1 min, and their average number of adjustments was less than one. These children spent less time on average constructing tasks and made few adjustments, which suggests that they may have constructed fewer types of tasks and less difficult tasks; although their game score rate was above the cutoff, they mainly constructed low-level tasks.

The rule sets for level 2 and level 3 were simpler, and level 2 and level 3 children were also less common in the sample data. When the game score rate was not above the cutoff, children belonged to level 2 if their average game duration was more than one and a half minutes and their average number of tests was more than four. Compared with level 1 children whose game score rate was likewise not above the cutoff, level 2 children spent more time constructing and testing analogical reasoning tasks and tested their results multiple times, indicating that level 2 children spend more time thinking and processing. Under the level 3 rule set, children’s game score rate was higher than the cutoff point, their average game duration was more than one minute, and their average number of adjustments was more than one. Level 3 children spent more time playing on average and made more adjustments than the level 1 children with higher score rates, which indicates that level 3 children process more deeply than level 1 children during task construction and response.

Under the level 4 rule set, a child belonged to level 4 if the game score rate was not higher than the cutoff point and either (a) the average game duration was not more than one and a half minutes, the average number of tests was less than two, and the average number of adjustments was more than 0.196, or (b) the average duration was more than one and a half minutes and the average number of tests was not more than four. In determining a child’s level, the conditional rules are applied as a step-by-step comparison; for example, the second rule of level 4 differs from the level 2 rule only in the average number of tests. Under the same game score rate and average duration conditions, children who averaged more than four tests were level 2 and children who averaged no more than four tests were level 4, which suggests that children at higher levels judge more carefully before testing and press the test button only after checking.
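The rules described in prose above can be collected into a single function. This is a minimal sketch, not the fitted tree from Figure 3. The function and parameter names are hypothetical, the handling of values that fall exactly on a threshold is an assumption, and so is reusing the 0.196 adjustment threshold from the level 4 rule to separate level 1 from level 4. Combinations for which the text gives no rule, such as level 4 above the score cutoff, return `None`.

```python
from typing import Optional

SCORE_CUTOFF = 0.658  # score-rate cutoff reported in the text


def classify(score_rate: float, avg_duration_min: float,
             avg_tests: float, avg_adjustments: float) -> Optional[int]:
    """Return the analogical reasoning level (1-4), or None when the prose
    does not describe a rule for the given combination."""
    if score_rate <= SCORE_CUTOFF:
        if avg_duration_min <= 1.5:
            if avg_tests > 2:
                return 1  # short games with many tests
            # Few tests: the adjustment count decides. The 0.196 threshold
            # comes from the level 4 rule; applying it to level 1 as well
            # is an assumption.
            return 4 if avg_adjustments > 0.196 else 1
        # Longer games: more than four tests is level 2, otherwise level 4.
        return 2 if avg_tests > 4 else 4
    # Score rate above the cutoff.
    if avg_duration_min < 1 and avg_adjustments < 1:
        return 1
    if avg_duration_min > 1 and avg_adjustments > 1:
        return 3
    return None  # e.g. the high-score level 4 rule is not spelled out
```

For example, `classify(0.5, 2.0, 5, 0)` returns 2 and `classify(0.5, 2.0, 3, 0)` returns 4, which mirrors the observation that these two rules differ only in the average number of tests.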

Through the specific analysis of the judgment rules and the specific performance of children’s play process, it is possible to understand the characteristics of children’s analogical reasoning at different levels, and also to know the differences between children of the same level in terms of game outcome data and process data. The determination of children’s analogical reasoning level integrates four attribute features: game score rate, average game duration, average number of tests and number of adjustments, which provides important information for understanding children’s analogical reasoning learning process.
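To make the four attribute features concrete, the following sketch aggregates per-task records into one feature set per child. The `TaskRecord` fields and the definition of score rate as the share of correctly completed tasks are assumptions for illustration; the study's actual log format and scoring scheme may differ.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """One game task as a hypothetical IoT log entry."""
    correct: bool        # whether the final answer was correct
    duration_min: float  # time spent on the task, in minutes
    tests: int           # presses of the judgment (test) button
    adjustments: int     # changes made after feedback


def child_features(records):
    """Aggregate one child's task records into the four attributes."""
    if not records:
        raise ValueError("at least one task record is required")
    return {
        "score_rate": sum(r.correct for r in records) / len(records),
        "avg_duration_min": mean(r.duration_min for r in records),
        "avg_tests": mean(r.tests for r in records),
        "avg_adjustments": mean(r.adjustments for r in records),
    }


# Two made-up task records for one child.
records = [
    TaskRecord(correct=True, duration_min=1.0, tests=2, adjustments=1),
    TaskRecord(correct=False, duration_min=2.0, tests=4, adjustments=0),
]
features = child_features(records)
```

Averaging per task keeps children who constructed different numbers of tasks comparable, which matters because children chose how many tasks to build.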

The study also examined the performance and strategies of young children in the analogical reasoning game and in task solving. Participants in the analogical reasoning game constructed an average of 13 analogical reasoning tasks, which varied widely in difficulty and type, with significant individual differences. The score rate of children’s initially constructed tasks was 52.51 percent, and after they made changes in response to correct and error feedback, the score rate of their final responses rose markedly to 78.9 percent (p < 0.001). This suggests that effective learning happens during young children’s play.

In addition, while solving problems that required analogical reasoning, children demonstrated a variety of solution strategies and approaches. Some children constructed and completed each task quite rapidly, tested and corrected just as quickly, and showed an element of randomness in their initial task construction. Other children worked through task construction and solution rather slowly, with some forethought, and tested and adjusted only once they had a clearer idea of the answer. When children received feedback that a game task was wrong, some continued picking up blocks and searching by trial and error, while others re-observed the first two animal figures, considered the relationship between the animal friends, and then searched for a specific animal figure. In general, children with lower levels of analogical reasoning tended to construct a single type of analogical reasoning task or more random game tasks, and the tasks they could complete were simpler and less likely to transfer to more complex tasks; children with higher levels of analogical reasoning judged more accurately whether they could complete a task, and the game tasks they constructed were frequently completed correctly. They were also eager to take the initiative in tackling more difficult analogy tasks. Overall, the data on children’s play processes and outcomes can be used to categorize children’s levels of analogical reasoning, as well as to identify the strategies and styles that children exhibit when solving analogical reasoning tasks during play, which provides teachers with crucial information for guiding children’s analogical reasoning learning.

5. Discussion

5.1. Game Design in Game-Based Learning and Assessment with IoT Technology

Game-based analogical reasoning assessment with the help of IoT technology provides a new assessment model for preschool children’s learning and development assessment, and an important reference for game-based assessment design. The application and integration of IoT technology as an emerging technology and game-based assessment as a new method provide new perspectives and approaches to child development and educational assessment, and provide new windows to our perception of children, teachers and educational approaches and methods. The integration and practical exploration of new technologies and methods support the redefinition and rethinking of children’s learning and assessment.

Play is an important way for children to learn, and play environments allow children to direct their own learning, at a pace consistent with their individual level of development [48]. The benefits of play have been validated in terms of personal and social development and individual learning [49,50]. Thus, the design of the game is crucial, and the selection of game materials and the development of game rules are subject to constant discussion and testing. The analogical reasoning game in the study was adapted from a classical analogical reasoning task, with interesting game materials and age-appropriate game rules. An important prerequisite for the reliability and validity of game-based assessment is good game design. Research has demonstrated that learning is optimized when game-design techniques are closely integrated with learning objectives and teacher–student interaction [51].

Feedback in educational contexts has been considered a key factor in improving knowledge and acquiring skills [52]. The main value of formative assessment is that it can provide immediate feedback based on the results of the learner’s assessment [53]. Learning is defined as a lifelong process of acquiring, interpreting, and evaluating information and experiences, and then transforming the information/experience into knowledge, skills, values, and dispositions. Good games can support deep and meaningful learning. Contextual learning theory suggests that knowledge acquired by mindful learning is considered meaningful and useful [54]. Learning is best achieved when it is active, goal-oriented, contextualized, and fun [55]. Therefore, teaching and learning environments should be interactive, provide continuous feedback, be able to engage and maintain children’s attention, and have appropriate and adaptive levels of challenge, which are also characteristics of good games. Thus, game-based formative assessment with the help of IoT technology provides the basis for children’s active learning. In preschool education, learning and play materials are essential for child development. Early childhood educators frequently select and create manipulative items based on the interests and educational objectives of their students. Current kindergarten theme and area activity materials frequently lack an element of feedback. The educational process does include a great deal of chance and uncertainty, and there are numerous instances in which children’s play and learning require explicit and rapid feedback to develop or improve.

The integration of IoT technology into toy materials can provide children with more natural and objective evaluation than external feedback provided by teachers or peers. On the one hand, the feedback provided by the toys themselves means that the play materials can naturally present results and suggest completion after children have completed the manipulation, excluding external intervention or interference, preserving an independent, complete and continuous exploration process for children, and also maintaining the integrity of children’s play to the greatest extent possible. On the other hand, the evaluation and feedback provided by IoT technology are relatively objective and open, giving children sufficient space for reflection. Specifically, the play materials only provide hints about the results of manipulation, but do not directly provide solutions. Children can think independently and decide how to respond to the feedback, how to improve the strategy and solve the problem, and children’s subjectivity can be manifested. Therefore, the immediate evaluation of IoT technology in games can encourage children’s independent inquiry and enhance the learning effect.

5.2. Validity and Accuracy of Game-Based Learning and Assessment Based on Learning Analytics Using IoT Technology

The study used learning analytics in the context of IoT technology to construct a classification model of preschool children’s analogical reasoning levels based on outcome data and process data from the analogical reasoning game. In the context of IoT technology, game-based assessment is formative in nature. Unlike traditional standardized tests, it lets each child construct their own game tasks and show different game processes and outcomes, and learning analytics can make effective use of the data and outcomes in games to evaluate and support children individually. The findings of this study are consistent with those of Shanshan Li’s study [47], which empirically demonstrates the effectiveness of IoT technology applied to the evaluation of preschool children’s learning and development, and they further validate the importance of both play process data and outcome data as evaluation indicators. Previous predictions of learning outcomes have mostly used regression analysis, with less research on prediction using machine learning models and less exploration of behavioral indicators for predicting learning outcomes [56]. In the context of educational informatization, this study explores the feasibility of using emerging information technology and learning analytics methods, supported by IoT technology, in the evaluation of preschool children’s learning.

The study illustrates that IT-supported game-based assessment is also a process of children’s learning. Information-technology-mediated game-based assessment has the potential of formative assessment. With the help of information technology, children’s play data can be collected in real time, covering both the results of play and the process of play, including process data on children’s play time, number of attempts, and play strategies. Internet and IoT technologies make available a wealth of data for evaluating and supporting student learning, which can inform personalized evaluation and targeted learning support. Game-based assessment can simultaneously measure and support learning. Children’s learning can be continuously monitored without the learning process being interrupted [57]. Children’s interactions with play can be recorded as interrelated data points, each providing concrete evidence of learning [58]. Game-based evaluation can provide continuous evaluation based on a continuous stream of data rather than the discrete data that characterize standardized tests. Because the evaluation is embedded in the game, children do not notice that they are being evaluated [59], which provides better ecological validity.

Due to low teacher-to-student ratios and large class sizes, it is difficult for teachers in China to provide constant attention to each student. In addition, teachers have diverse areas of competence, and the use of observation methods and instruments is not yet standardized in kindergartens, making it difficult to make reliable judgements about the progress of children in each area of development. Early childhood educators have a wide variety of responsibilities and operate under pressure, and they require additional professional assistance. Game design within the framework of IoT technology can give professional support to early childhood educators by providing them with high-quality play materials and data on children’s learning and developmental evaluation, helping them to better understand children. A game-based assessment focuses on the direct activities of children. In game-based assessment, the process of evaluation is also the process of learning for children, and children engage with the materials, allowing teachers to observe children’s daily behavioral performance and automating the recording of data on children’s play performance. The results of the game-based assessment provide a detailed description of the child’s strengths and weaknesses in specific domains and enable better support for the child by synthesizing the interrelationships between the child’s learning and development in each domain and exploring the factors that influence the child’s learning. The approach and design ideas employed in this work may be useful and offer a novel way of thinking about assessing the analogical reasoning skills of young children, a topic that merits further refinement and in-depth study.

5.3. Game-Based Learning Analytics Makes Optimal Use of Multidimensional Information about Learners (Learning Process and Learning Outcome Data)

The integration of emerging information technology and education is redefining learning and teaching and changing the traditional way of teaching and learning. The game-based analogical reasoning learning and assessment in this study is child-led: children construct their own game tasks, which fully reflects their autonomy, and they can construct game tasks of differing number and difficulty and set the goals of the game. The use of technology allows us to obtain specific process data about children’s play and to understand the correctness of the game tasks, the length of the game, the adjustments and the tests. Game-based learning and assessment, especially with the help of information technology, offers more possibilities for comprehensive and effective evaluation and support of children’s learning and development. Advocates of game-based learning believe that we should equip students to be innovative, creative, and adaptive to meet the needs of the twenty-first century and to meet their learning needs for complex and integrated domain content [60,61]. Games differ from other media in that they are situational and explorable and have a stronger appeal.

Previous game-based assessments of children’s learning and development have focused on children’s physical motor skills, cognitive abilities such as mathematics and language, and emotional and social development [62,63,64], but little attention has been paid to cognitive processing mechanisms or cognitive processes in children’s play [65]. Although games can evaluate and support children’s learning of relevant domain content, they are actually better suited to supporting more complex and integrated competencies. Thus, game assessment with the help of information technology can evaluate more complex abilities, such as children’s communication and cooperation, problem solving, reasoning, and creativity, and it can capture children’s specific behavioral performance and obtain detailed data about the learning process. Evaluating learners while they play provides insight into individuals’ underlying learning processes and makes it possible to understand the impact of children’s motivational, affective, and metacognitive characteristics on the learning process and learning outcomes. Therefore, with the support of emerging information technologies, game-based learning and development evaluation can evaluate a wider range of content and obtain more detailed and comprehensive data on individual children’s performance in multiple domains and dimensions, such as cognition, attitude, emotion and sociality, and complex problem solving, providing real and valuable information for children’s development and teachers’ instructional design. Game design in the context of IoT technology can provide professional support for early childhood teachers by providing them with high-quality play materials, and can also provide teachers with data on children’s learning and developmental assessment, allowing them to better understand children. Game-based assessment focuses on children’s direct activities. In game-based assessment, the process of evaluation is also a process of learning for children, and children interact with the materials, increasing the possibility for teachers to observe children’s daily behavioral performance and helping teachers to automate the recording of data on children’s play performance. This imposes new information literacy and data analysis literacy requirements on teachers [66].

6. Conclusions

The study analyzes the feasibility and accuracy of using learning analytics in the context of IoT technology to investigate game-based learning and the evaluation of young children’s analogical reasoning. Using information technology, the study automatically records and transmits process and outcome data from young children’s game-based learning. From these process and outcome data, a classification model of children’s analogical reasoning ability was constructed using a decision tree model in learning analytics, and the classification was reasonably accurate. The study provides a valuable reference for the application of emerging information technologies and novel methodologies in early childhood education and evaluation. On the one hand, we consider the concept and value orientation of emerging information technology applications in education; on the other, we investigate successful game design and technology implementation solutions and application models for game-based assessment.

7. Limitations

This exploratory empirical study examines the application of emerging information technology and innovative data analysis methodologies in the field of preschool education. The study has some limitations. The sample size is currently relatively small and will be expanded at a later stage. In addition, there is room for further improvement in the accuracy of the model constructed in the study.

8. Future Directions

Future research should entail close collaboration between game designers, researchers, psychometricians, subject matter experts, and other stakeholders in order to integrate teaching, learning, and assessment [67]. This assessment model is in its infancy, and its design, technological implementation, and application require the involvement of numerous parties. It is essential to consider how to protect personal information and uphold research ethics in future research and practice [68]. Future research in related fields will increase the sample size and consider integrating other information technologies, such as the collection of children’s physiological data and of data on facial expressions and emotions [69], in order to enable the analysis of multimodal data [70].

The study will also explore how teachers interpret and use data on children’s play processes and outcomes, and will examine individualized features of children’s play processes, such as problem-solving strategies in analogical reasoning games, in order to better practice sustainable education. The advent of new information technology, evaluation techniques, and paradigms does not directly result in positive educational outcomes. New technologies and methods offer opportunities to build new learning environments and assessment paradigms, but it is essential to think and act sensibly and cautiously throughout practical exploration and research. Information technology is an objective environmental factor in the ecosystem of human development. We need to reflect on how to use technology rationally and to consider what kinds of information technology can influence children’s learning, through what mechanisms, and under what temporal and spatial conditions. We also need to clarify the multiple theoretical foundations behind the application of information technology media to educational practice, together with the basic principles and specific rules of that application [71]. This area still calls for deeper rational thinking, as well as bottom-up reflection combined with in-depth practice.

Author Contributions

X.L. designed the study, conducted the statistical analyses and drafted the manuscript. L.L. and T.H. assisted with data collection, extraction and analysis and also revised the manuscript. S.L. was in charge of data checking and inspection. L.G. determined the research questions and focus, co-designed the survey instrument and edited the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This project was supported by the General Project of Jiangsu Province University Philosophy and Social Science Research in 2021—Game Based Evaluation of Preschool Children’s Analogical Reasoning Based on Data Mining (2021SJA1197); the Annual Project of the Fourteenth Five Year Plan for Educational Science in Jiangsu Province: Quality Evaluation of Preschool Children’s Regional Activities based on Internet of Things Technology (C-b/2021/01/37); Jiangsu University of Technology Social Science Foundation Project in 2021: Quality Evaluation of Preschool Children’s Regional Activities based on Internet of Things Technology (KYY21502); the General Project of the Fourteenth Five Year Plan for Educational Science in Jiangsu Province: Evaluation of Preschool Teachers’ Ability to Support Science Education from the Perspective of Preconcepts (C-c/2021/01/33).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of East China Normal University (HR 694-2020 and date of approval 5 March 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data from the studies described in this article are not yet available.

Acknowledgments

The authors would like to thank all the workers who participated in this study, as well as the kindergarten directors who facilitated the recruitment of participants in the survey.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Engdahl, I. Early childhood education for sustainability: The OMEP world project. Int. J. Early Child. 2015, 47, 347–366.

- Furu, A.C.; Heilala, C. Sustainability education in progress: Practices and pedagogies in Finnish early childhood education and care teaching practice settings. Int. J. Early Child. Environ. Educ. 2021, 8, 16–29.

- Zhao, Y.; Zhao, W.; Jiang, Q. Design and empirical study of an online self-regulated learning intervention for teachers in a learning analytics perspective. Mod. Distance Educ. 2020, 3, 79–88. (In Chinese)

- Gu, X.Q.; Hu, Y.L. Understanding, designing and serving learning: A review and prospective of learning analytics. Open Educ. Res. 2020, 26, 40–42. (In Chinese)

- Oinas, S.; Hotulainen, R.; Koivuhovi, S.; Brunila, K.; Vainikainen, M. Remote learning experiences of girls, boys and non-binary students. Comput. Educ. 2022, 183, 104499.

- Siemens, G.; Baker, R.S.J. Learning analytics and educational data mining: Towards communication and collaboration. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; pp. 252–254.

- Nkhoma, M.; Sriratanaviriyakul, N.; Cong, H.P.; Lam, T.K. Examining the mediating role of learning engagement, learning process and learning experience on the learning outcomes through localized real case studies. J. Educ. Train. 2014, 56, 287–302.

- Blumenstein, M. Synergies of learning analytics and learning design: A systematic review of student outcomes. J. Learn. Anal. 2020, 7, 13–32.

- Danniels, E.; Pyle, A.; DeLuca, C. The role of technology in supporting classroom assessment in play-based kindergarten. Teach. Teach. Educ. 2020, 88, 102966.

- Hu, H.; Li, Y.X.; Lang, Q.E.; Yang, H.R.; Zhao, Q.H.; Cao, Y.F. Occurrence process, design model and mechanism elucidation of deep learning. China Distance Educ. 2020, 1, 54–61, 77. (In Chinese)

- Wu, F.T.; Tian, H. Mining meaningful learning behavior characteristics: a framework for learning outcome prediction. Open Educ. Res. 2019, 6, 75–82. (In Chinese)

- Chen, Z.J.; Zhu, X.L. Research on predictive modeling of online learners’ academic performance based on educational data mining. China E-Learn. 2017, 12, 75–89. (In Chinese)

- Wang, C.H.; Fu, G.S. Prediction of online learning behavior and performance and design of learning intervention model. Chin. Distance Educ. 2019, 2, 39–48. (In Chinese)

- Chernobilsky, E.; Hayes, S. Utilizing Learning Analytics in Small Institutions: A Study of Performance of Adult Learners in Online Classes. In Utilizing Learning Analytics to Support Study Success; Ifenthaler, D., Mah, D.K., Yau, J.K., Eds.; Springer: Cham, Switzerland, 2019; pp. 201–220.

- Kim, D.; Yoon, M.; Jo, I.H.; Branch, R.M. Learning analytics to support self-regulated learning in asynchronous online courses: A case study at a women’s university in South Korea. Comput. Educ. 2018, 127, 233–251.

- Sun, F.Q.; Feng, R. A study of online learning motivation assessment in the context of learning analytics. Mod. Educ. Technol. 2022, 32, 10. (In Chinese)

- Xu, X.Q.; Zhao, W.; Liu, H.X. Research on the application framework and integration path of learning analytics in self-regulated learning. Res. E-Learn. 2021, 42, 9. (In Chinese)

- Lee, D.; Watson, S.L.; Watson, W.R. Systematic literature review on self-regulated learning in massive open online courses. Australas. J. Educ. Technol. 2019, 35, 28–41.

- Pan, Q.Q.; Yang, X.M.; Chen, S.C. International research progress and trends in learning analytics-an analysis based on Journal of Learning Analytics papers from 2014 to 2016. China Distance Educ. 2019, 3, 14–22. (In Chinese)

- Mou, Z.; Liu, S.; Gao, Y. A decade of international research in learning analytics: Hot spots, trends and prospects. Open Learn. Res. 2022, 27, 10.

- Zhang, S.; Wang, X.; Qi, Y. Artificial intelligence-enabled education evaluation: New concept and core elements of “integration of learning and assessment”. China Distance Educ. 2021, 2, 1–8. (In Chinese)

- Eisert, D.; Lamorey, S. Play as a window on child development the relationship of play and other developmental domains. Early Educ. Dev. 1996, 7, 221–235.

- Linder, T.W. Transdisciplinary Play-Based Assessment: A Functional Approach to Working with Young Children, Rev; Paul H Brookes Publishing: Baltimore, MD, USA, 1993.

- Ge, X.; Ifenthaler, D. Designing engaging educational games and assessing engagement in game-based learning. In The Handbook of Research on Serious Games for Educational Applications; Zheng, R., Gardner, M.K., Eds.; IGI Global: Hershey, PA, USA, 2018; pp. 253–270.

- Ali, M.; Shatabda, S.; Ahmed, M. Impact of learning analytics on product marketing with serious games in Bangladesh. In Proceedings of the 2017 IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Dhaka, Bangladesh, 21–23 December 2017; pp. 576–579.

- Sung, H.Y.; Wu, P.H.; Hwang, G.J.; Lin, D.C. A learning analytics approach to investigating the impacts of educational gaming behavioral patterns on students’ learning achievements. In Proceedings of the 2017 6th IIAI International Congress on Advanced Applied Informatics (IIAI-AAI), Hamamatsu, Japan, 9–13 July 2017; pp. 564–568.

- Aladé, F.; Lauricella, A.R.; Beaudoin-Ryan, L.; Wartella, E. Measuring with Murray: Touchscreen technology and preschoolers’ STEM learning. Comput. Hum. Behav. 2016, 62, 433–441.

- Freire, M.; Serrano-Laguna, Á.; Manero, B.; Martínez-Ortiz, I.; Moreno-Ger, P.; Fernández-Manjón, B. Learning Analytics for serious games. In Learning, Design, and Technology; Spector, M.J., Lockee, B.B., Childress, M.D., Eds.; Springer: Cham, Switzerland, 2016; pp. 1–29. [Google Scholar]

- Drigas, A.; Kokkalia, G.; Lytras, M.D. ICT and collaborative co-learning in preschool children who face memory difficulties. Comput. Hum. Behav. 2015, 51, 645–651. [Google Scholar] [CrossRef]

- Marsh, J.; Plowman, L.; Yamada-Rice, D.; Bishop, J.; Davenport, A.; Davis, S.; Piras, M. Exploring Play and Creativity in Pre-Schoolers’ Use of Apps: Final Project Report; Project Report; University of Sheffield: Sheffield, UK, 2015. [Google Scholar]

- Neumann, M.M. Using tablets and apps to enhance emergent literacy skills in young children. Early Child. Res. Q. 2018, 42, 239–246.

- Roskos, K.; Burstein, K.; Shang, Y.; Gray, E. Young children’s engagement with e-books at school: Does device matter? Sage Open 2014, 4, 2158244013517244.

- Huber, B.; Tarasuik, J.; Antoniou, M.N.; Garrett, C.; Bowe, S.J.; Kaufman, J. Young children’s transfer of learning from a touchscreen device. Comput. Hum. Behav. 2016, 56, 56–64.

- Neumann, M.M. Young children’s use of touch screen tablets for writing and reading at home: Relationships with emergent literacy. Comput. Educ. 2016, 97, 61–68.

- Hui, L.T.; Hoe, L.S.; Ismail, H.; Foon, N.H.; Michael, G.K.O. Evaluate children learning experience of multitouch flash memory game. In Proceedings of the 2014 4th World Congress on Information and Communication Technologies, Melaka, Malaysia, 8–11 December 2014; pp. 97–101.

- Chen, D.X.; Jim, Y.Y.; Yang, B. Analysis of deep learning techniques in the field of educational big data mining. Electrochem. Educ. Res. 2019, 40, 68–76. (In Chinese)

- Maseleno, A.; Sabani, N.; Huda, M.; Ahmad, R.B.; Jasmi, K.A.; Basiron, B. Demystifying learning analytics in personalised learning. Int. J. Eng. Technol. 2018, 7, 1124–1129.

- Alom, B.M.M.; Courtney, M. Educational data mining: A case study perspectives from primary to university education in Australia. Int. J. Inf. Technol. Comput. Sci. 2018, 10, 1–9.

- Gentner, D. Analogical Learning in Similarity and Analogical Reasoning; Vosniadou, S., Ortony, A., Eds.; Cambridge University Press: London, UK, 1989.

- Alexander, P.A.; Jablansky, S.; Singer, L.M.; Denis, D. Relational reasoning: What we know and why it matters. Policy Insights Behav. Brain Sci. 2016, 3, 36–44.

- Blanchette, I.; Dunbar, K. Representational change and analogy: How analogical inferences alter target representations. J. Exp. Psychol. Learn. Mem. Cogn. 2002, 28, 672–685.

- Gentner, D.; Smith, L.A. Analogical learning and reasoning. In The Oxford Handbook of Cognitive Psychology; Reisberg, D., Ed.; Oxford University Press: New York, NY, USA, 2013; pp. 668–681.

- Glynn, S.M.; Takahashi, T. Learning from analogy-enhanced science text. J. Res. Sci. Teach. 1998, 35, 1129–1149.

- Guo, L.P.; Lv, X.; Luo, Y.Y.; Li, Y.F.; Zhao, Y.X. A study on the application of IoT technology to preschool children’s analogical reasoning evaluation and learning support-based on cognitive diagnostic approach. Electrochem. Educ. Res. 2020, 41, 94–101.

- Siegler, R.S.; Svetina, M. A microgenetic/cross-sectional study of matrix completion: Comparing short-term and long-term change. Child Dev. 2002, 73, 793–809.

- Stevenson, C.E.; Touw, K.W.J.; Resing, W.C.M. Computer or paper analogy puzzles: Does assessment mode influence young children’s strategy progression? Educ. Child Psychol. 2011, 28, 67–84.

- Li, S.S. Application of IoT Technology and Data Mining for 3–4 Years Old Children’s Fetch-by-Number Game. Ph.D. Thesis, East China Normal University, Shanghai, China, 2020. (In Chinese)

- Weisberg, D.S.; Hirsh-Pasek, K.; Golinkoff, R.M. Guided play: Where curricular goals meet a playful pedagogy. Mind Brain Educ. 2013, 7, 104–112.

- Saracho, O.N.; Spodek, B. Young children’s literacy-related play. Early Child. Dev. Care 2006, 176, 707–721.

- Van Oers, B.; Duijkers, D. Teaching in a play-based curriculum: Theory, practice and evidence of developmental education for young children. J. Curric. Stud. 2013, 45, 511–534.

- Sawyer, R.K. Cambridge Handbook of the Learning Sciences; Cambridge University Press: London, UK, 2006.

- Shute, V.J. Focus on formative feedback. Rev. Educ. Res. 2008, 78, 153–189.

- Dalziel, J. Enhancing Web-Based Learning with Computer Assisted Assessment: Pedagogical and Technical Considerations; Loughborough University: Loughborough, UK, 2001.

- Brown, J.S.; Collins, A.; Duguid, P. Situated cognition and the culture of learning. Educ. Res. 1989, 18, 32–42.

- Bransford, J.D.; Brown, A.L.; Cocking, R.R. How People Learn; National Academy Press: Washington, DC, USA, 2000.

- Mou, Z.J.; Wu, F.T. Content analysis and design orientation of learning outcome prediction research in the context of big data in education. China E-Learn. 2017, 7, 26–32. (In Chinese)

- DiCerbo, K.; Shute, V.; Kim, Y.J. The future of assessment in technology rich environments: Psychometric considerations. In Learning, Design, and Technology; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–21.

- Shute, V.J.; Ventura, M.; Bauer, M. Melding the power of serious games and embedded assessment to monitor and foster learning. In Serious Games: Mechanisms and Effects; Ritterfeld, U., Cody, M., Vorderer, P., Eds.; Routledge: New York, NY, USA, 2009; pp. 295–321.

- Shute, V.J. Stealth assessment in computer-based games to support learning. In Computer Games and Instruction; Tobias, S.E., Fletcher, J.D., Eds.; IAP Information Age Publishing: Charlotte, NC, USA, 2011; pp. 503–524.

- Gee, J.P. What video games have to teach us about learning and literacy. Comput. Entertain. 2003, 1, 20.

- Shaffer, D.W. Epistemic frames for epistemic games. Comput. Educ. 2006, 46, 223–234.

- Dempsey, J.V.; Haynes, L.L.; Lucassen, B.A.; Casey, M.S. Forty simple computer games and what they could mean to educators. Simul. Gaming 2002, 33, 157–168.

- Ke, F. A qualitative meta-analysis of computer games as learning tools. In Gaming and Simulations: Concepts, Methodologies, Tools and Applications; Ferdig, R.E., Ed.; IGI Global: Hershey, PA, USA, 2011; pp. 1619–1665.

- Vogel, J.J.; Vogel, D.S.; Cannon-Bowers, J.; Bowers, C.A.; Muse, K.; Wright, M. Computer gaming and interactive simulations for learning: A meta-analysis. J. Educ. Comput. Res. 2006, 34, 229–243.

- Alkan, S.; Cagiltay, K. Studying computer game learning experience through eye tracking. Br. J. Educ. Technol. 2007, 38, 538–542.

- Ifenthaler, D.; Gibson, D.; Prasse, D.; Shimada, A.; Yamada, M. Putting learning back into learning analytics: Actions for policy makers, researchers, and practitioners. Educ. Technol. Res. Dev. 2020, 68, 1–20.

- Plass, J.L.; Homer, B.D.; Kinzer, C.K. Foundations of game-based learning. Educ. Psychol. 2015, 50, 258–283.

- Beerwinkle, A.L. The use of learning analytics and the potential risk of harm for K-12 students participating in digital learning environments. Educ. Technol. Res. Dev. 2020, 69, 327–330.

- Prinsloo, P.; Slade, S. Student Vulnerability, Agency, and Learning Analytics: An Exploration. J. Learn. Anal. 2016, 3, 159–182.

- Merceron, A.; Blikstein, P. Learning Analytics: From Big Data to Meaningful Data. J. Learn. Anal. 2016, 2, 4–8.

- Mayer, R.E. Cognitive theory of multimedia learning. In The Cambridge Handbook of Multimedia Learning; Mayer, R.E., Ed.; Cambridge University Press: London, UK, 2005.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).