A Transfer Learning-Based Artificial Intelligence Model for Leaf Disease Assessment

Abstract

1. Introduction

- (a) Create a transfer learning-based model to diagnose three paddy leaf diseases.

- (b) Analyze and evaluate the model's performance using several evaluation parameters.

- (c) Deploy and test the proposed method in a cloud environment.

- In segmentation, image brightness is a major concern.

- The selection of initial seeds is crucial for segmentation.

- Image texture is difficult to handle.

- To resolve these issues of conventional segmentation, semantic (vegetation) segmentation is used to extract the region of interest.

- The proposed approach considers only the lesion regions of the leaf, which improves detection accuracy.

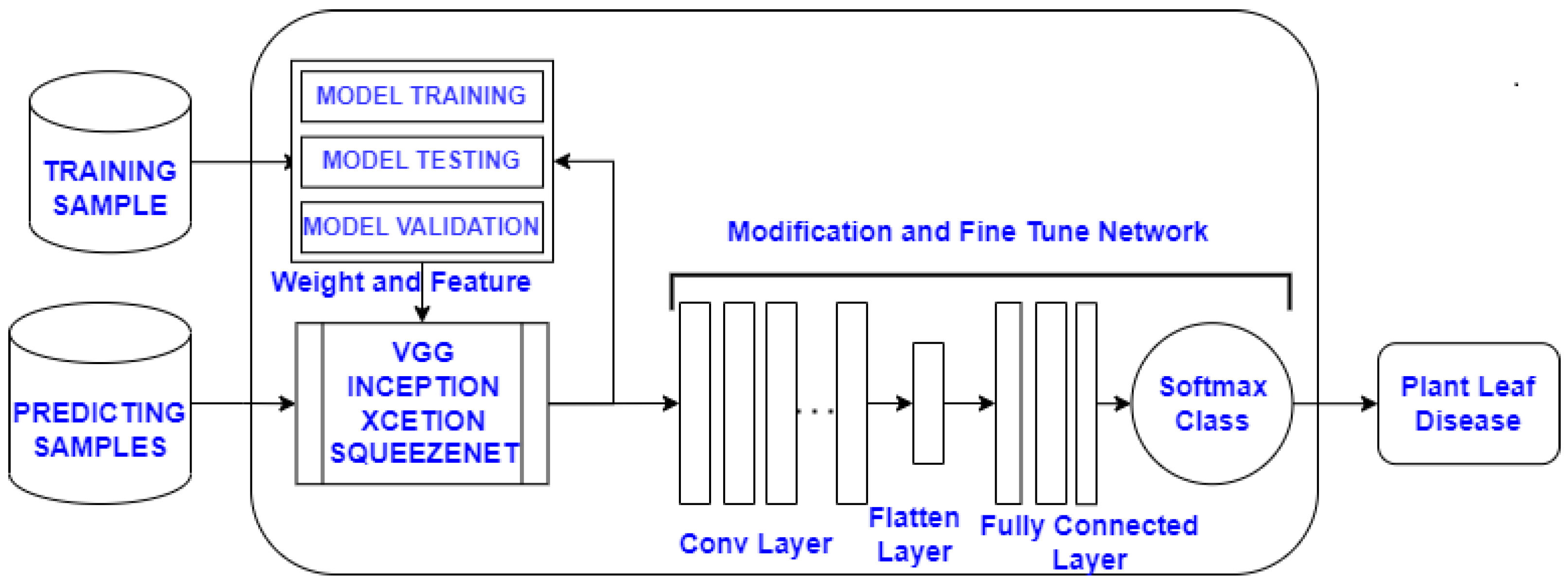

- The proposed approach uses state-of-the-art pretrained models, such as InceptionNet, SqueezeNet, VGG16, VGG19, and ResNet, for transfer learning.

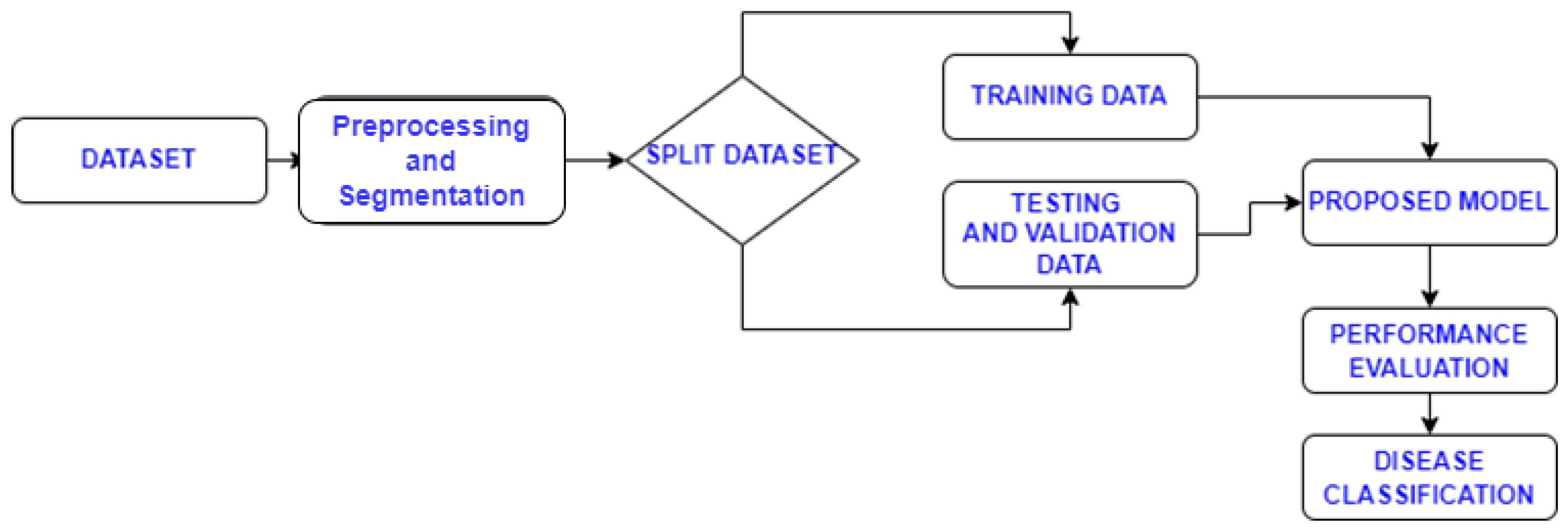

2. Materials and Methods

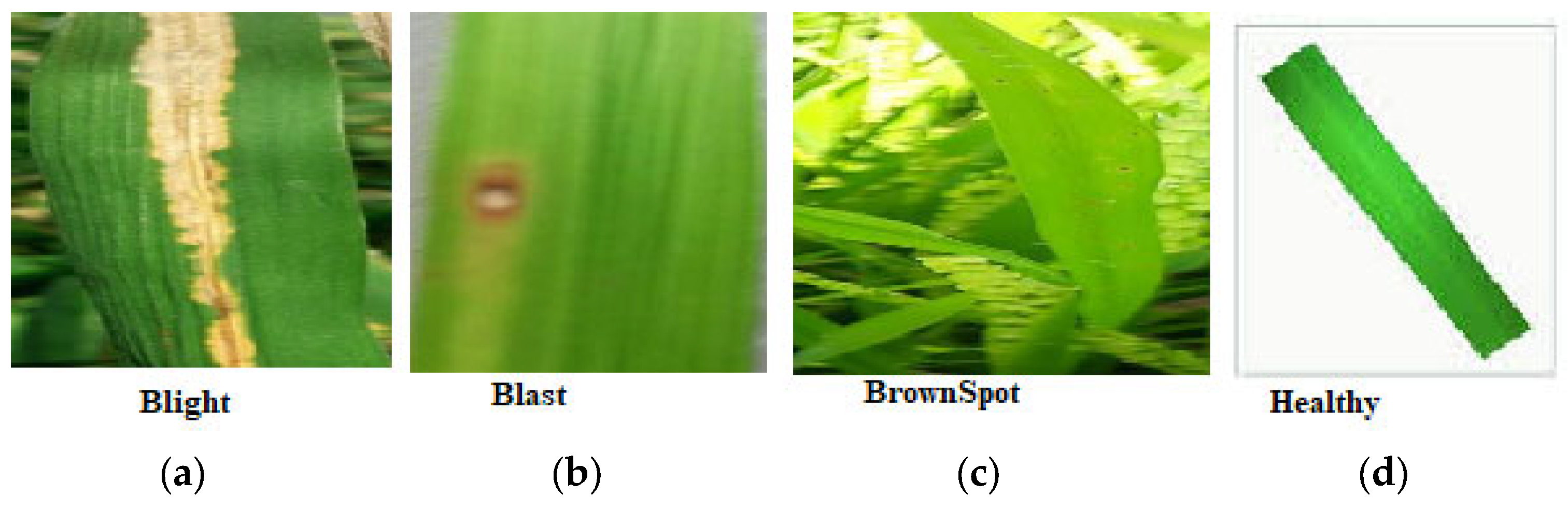

2.1. Dataset Description

2.2. Proposed Methodology

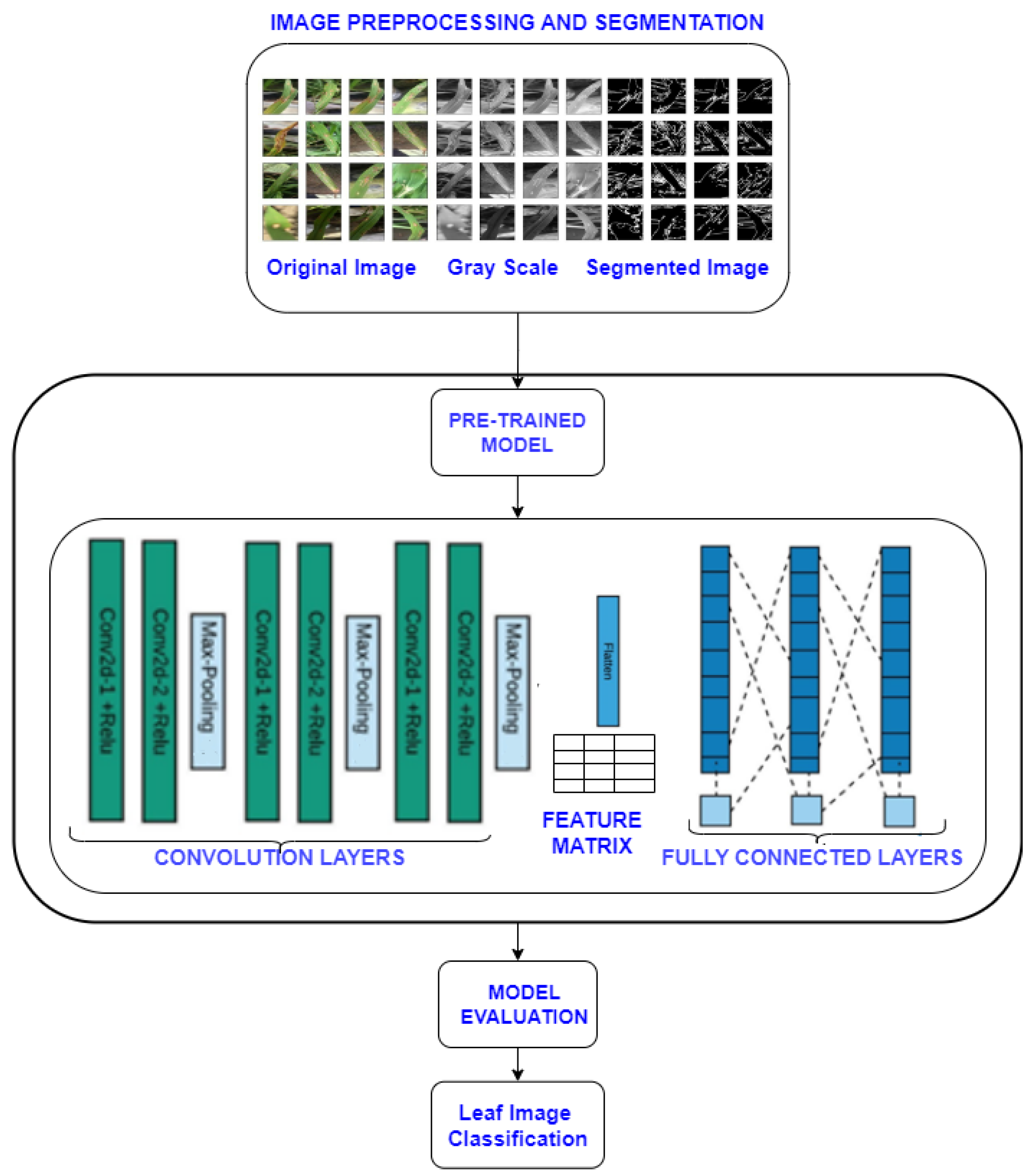

2.2.1. Image Processing and Augmentation

Algorithm 1: Semantic Masking

Input: Dataset images

Output: Masked image
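The steps of Algorithm 1 are not reproduced in this section. As a minimal sketch, assuming a semantic-segmentation network has already produced a per-pixel class-score array, the masking step reduces to a per-pixel argmax followed by zeroing every pixel outside the classes of interest. The function name `semantic_mask`, the `(H, W, C)` score layout and the class indices are hypothetical, not taken from the paper.

```python
import numpy as np


def semantic_mask(image: np.ndarray, class_scores: np.ndarray, keep_classes: set[int]) -> np.ndarray:
    """Return a copy of `image` with every pixel outside `keep_classes` set to zero.

    image        -- (H, W, 3) RGB array.
    class_scores -- (H, W, C) per-pixel class scores from a segmentation model
                    (hypothetical layout; any model producing it would do).
    keep_classes -- class indices to retain, e.g. {1} for an assumed "lesion" class.
    """
    if image.shape[:2] != class_scores.shape[:2]:
        raise ValueError("image and score map must share height and width")
    labels = class_scores.argmax(axis=-1)        # (H, W) most likely class per pixel
    roi = np.isin(labels, list(keep_classes))    # boolean region-of-interest mask
    masked = np.zeros_like(image)
    masked[roi] = image[roi]                     # copy only region-of-interest pixels
    return masked


# Toy 2 x 2 example with three hypothetical classes (0 = background, 1 = lesion, 2 = healthy leaf).
img = np.arange(12, dtype=np.uint8).reshape(2, 2, 3)
scores = np.zeros((2, 2, 3))
scores[0, 0, 0] = scores[0, 1, 1] = scores[1, 0, 2] = scores[1, 1, 1] = 1.0
out = semantic_mask(img, scores, keep_classes={1})
print(out[:, :, 0])
```

Only the two pixels labelled as the assumed lesion class keep their values; the rest become black, which is what lets a downstream classifier see lesion regions only.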

2.2.2. Training Phase

- AlexNet has five convolutional layers and three fully connected layers, the last of which feeds the softmax output, and contains about 62.3 million parameters.

- The Visual Geometry Group (VGG) family includes VGG16 and VGG19. These networks stack multiple 3 × 3 filters to extract complex features at low cost.

- ResNet starts from a 34-layer plain network inspired by VGG-19 and adds shortcut (residual) connections to it. ResNet50 and ResNet152 are deeper variants of ResNet.

- InceptionV4 has about 43 million parameters and an upgraded stem module; an ensemble of three residual Inception networks and one InceptionV4 achieves better performance.

- SqueezeNet is an 18-layer-deep CNN. Its small architecture reaches AlexNet-level accuracy with 50× fewer parameters.

- Xception has 71 layers and about 23 million parameters. It was heavily inspired by InceptionV3, with the Inception modules replaced by depthwise separable convolutions.
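Two of the efficiency claims above, VGG's stacked 3 × 3 filters and Xception's depthwise separable convolutions, can be checked by counting weights (biases ignored). The helper names and the channel count `C = 256` are illustrative assumptions, not values from the paper.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights of a standard k x k convolution layer (bias terms ignored)."""
    return k * k * c_in * c_out


def separable_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights of a depthwise k x k convolution (one filter per input channel)
    followed by a 1 x 1 pointwise convolution (bias terms ignored)."""
    return k * k * c_in + c_in * c_out


C = 256  # illustrative channel count, not a value taken from the paper

# VGG-style: two stacked 3 x 3 layers cover the same 5 x 5 receptive field as one 5 x 5 layer.
stacked_3x3 = 2 * conv_weights(3, C, C)   # 18 * C^2
single_5x5 = conv_weights(5, C, C)        # 25 * C^2

# Xception-style: standard versus depthwise separable 3 x 3 convolution.
standard = conv_weights(3, C, C)
separable = separable_weights(3, C, C)

print(f"two 3x3: {stacked_3x3:,} vs one 5x5: {single_5x5:,} (ratio {stacked_3x3 / single_5x5:.2f})")
print(f"standard 3x3: {standard:,} vs separable: {separable:,} ({standard / separable:.1f}x fewer)")
```

With C = 256 this gives 1,179,648 versus 1,638,400 weights (ratio 0.72) and 589,824 versus 67,840 weights (about 8.7× fewer). The stacked 3 × 3 design also inserts an extra non-linearity between the two layers.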

- i. Convolutional Layer

- ii. Pooling Layer

- iii. Fully Connected Layer
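The three layer types listed above compose as follows in a minimal NumPy sketch: a single-channel "valid" convolution (implemented, as in most CNN libraries, as cross-correlation) with ReLU, 2 × 2 max pooling, and a fully connected layer mapping the flattened features to four class scores, matching the four classes in the dataset. Sizes and random weights are illustrative; this is not the paper's network.

```python
import numpy as np


def conv2d_valid(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 'valid' 2-D cross-correlation (what CNN layers call convolution)."""
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Non-overlapping 2 x 2 max pooling; a trailing odd row or column is dropped."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def fully_connected(x: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Dense layer applied to the flattened feature map."""
    return weights @ x.ravel() + bias


rng = np.random.default_rng(0)
image = rng.random((8, 8))                                                     # toy 8 x 8 grayscale patch
features = np.maximum(conv2d_valid(image, rng.standard_normal((3, 3))), 0.0)  # conv + ReLU -> 6 x 6
pooled = max_pool2x2(features)                                                 # pooling -> 3 x 3
logits = fully_connected(pooled, rng.standard_normal((4, pooled.size)), np.zeros(4))  # 4 class scores
print(features.shape, pooled.shape, logits.shape)
```

Real CNNs repeat the convolution and pooling stages many times over multi-channel inputs before the fully connected layers; the shapes here only show how each stage changes the feature map.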

2.3. Model Evaluation

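The evaluation parameters are not spelled out in this section, but the k-fold results table reports accuracy, F1 score, precision and recall. Assuming the usual definitions (per-class precision and recall taken from a confusion matrix whose rows are actual classes and whose columns are predicted classes, then macro-averaged over classes), they can be computed as below. The macro averaging and the toy counts are assumptions for illustration.

```python
import numpy as np


def classification_metrics(cm: np.ndarray) -> dict[str, float]:
    """Accuracy and macro-averaged precision, recall and F1 from a confusion matrix.

    cm[i, j] counts samples whose actual class is i and predicted class is j.
    """
    cm = cm.astype(float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)   # column sums: how often each class was predicted
    actual = cm.sum(axis=1)      # row sums: how many samples truly belong to each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }


# Toy 2-class example (hypothetical counts, not from the paper).
cm = np.array([[8, 2],
               [1, 9]])
print(classification_metrics(cm))
```

The `where=` guards keep a class that is never predicted (or never present) from producing a division-by-zero warning; such a class simply contributes 0 to the average.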

3. Results and Discussion

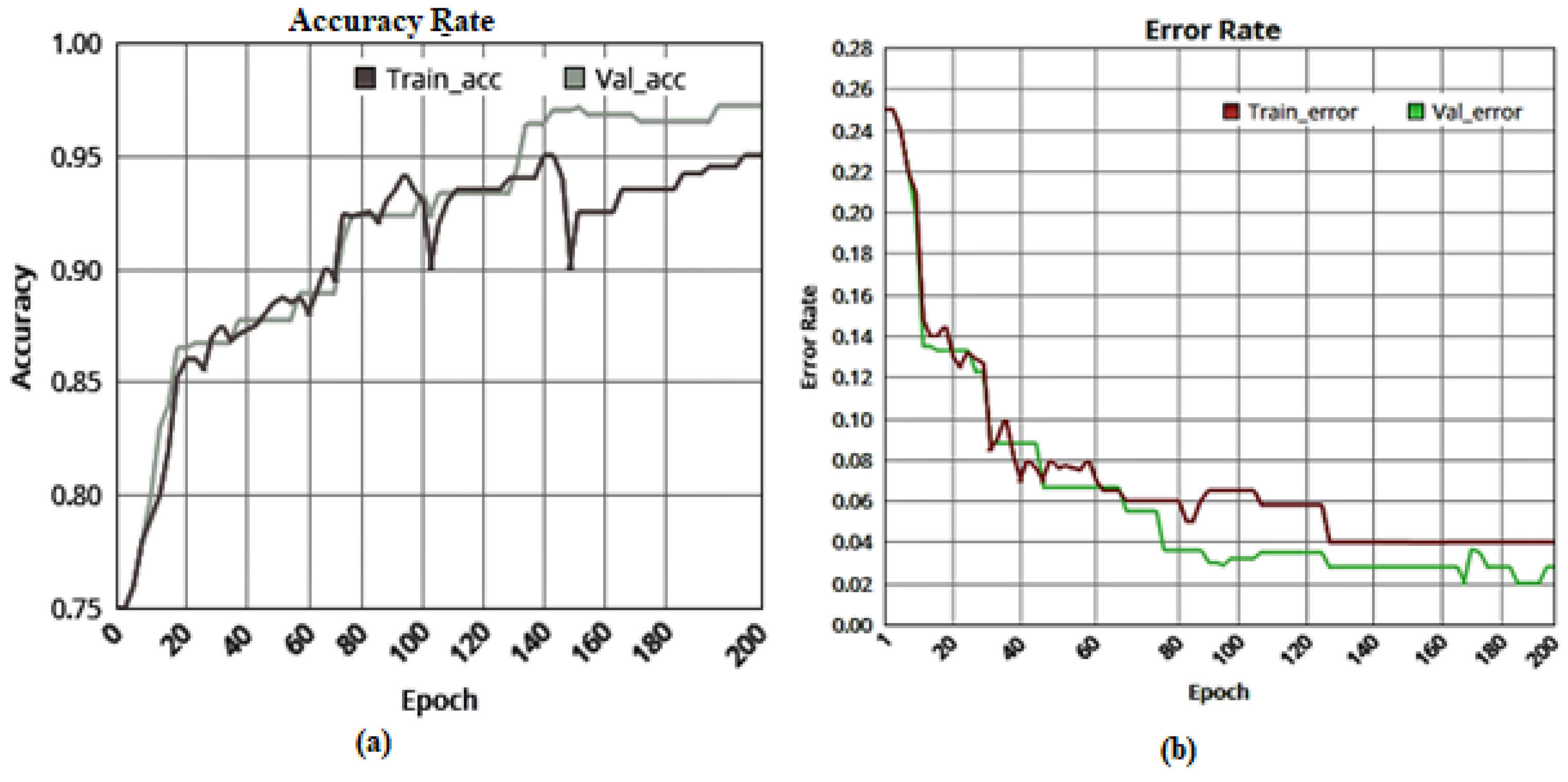

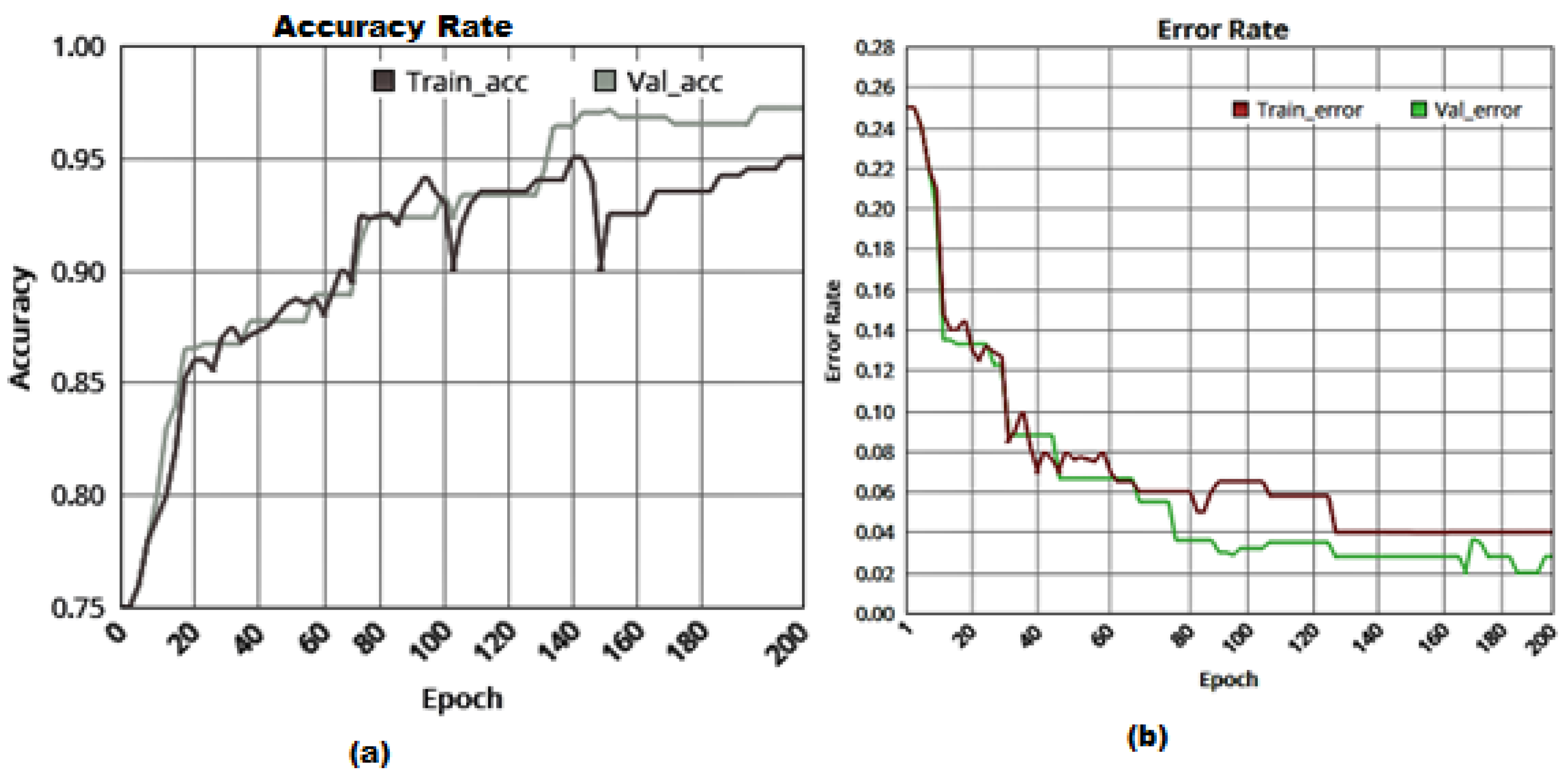

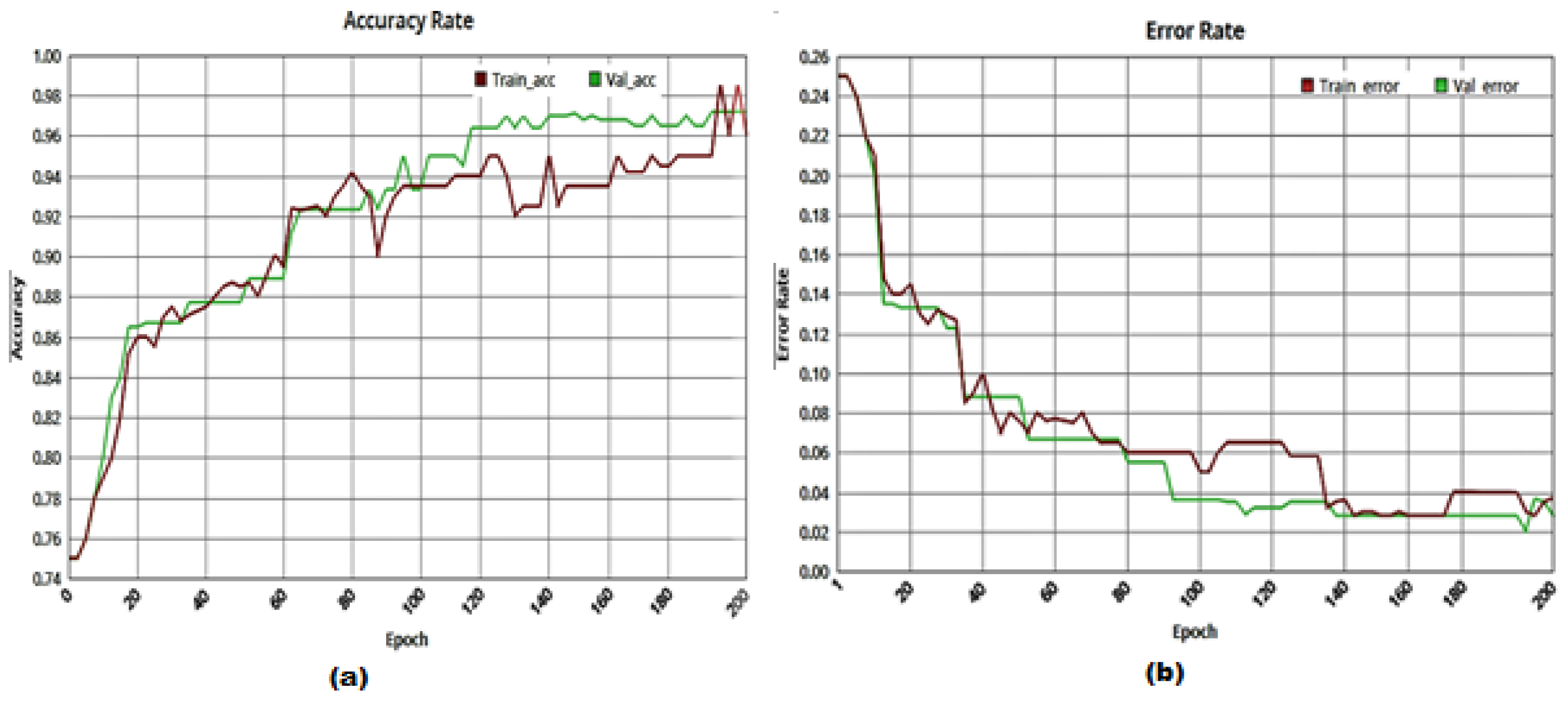

3.1. Analysis Using Sampling

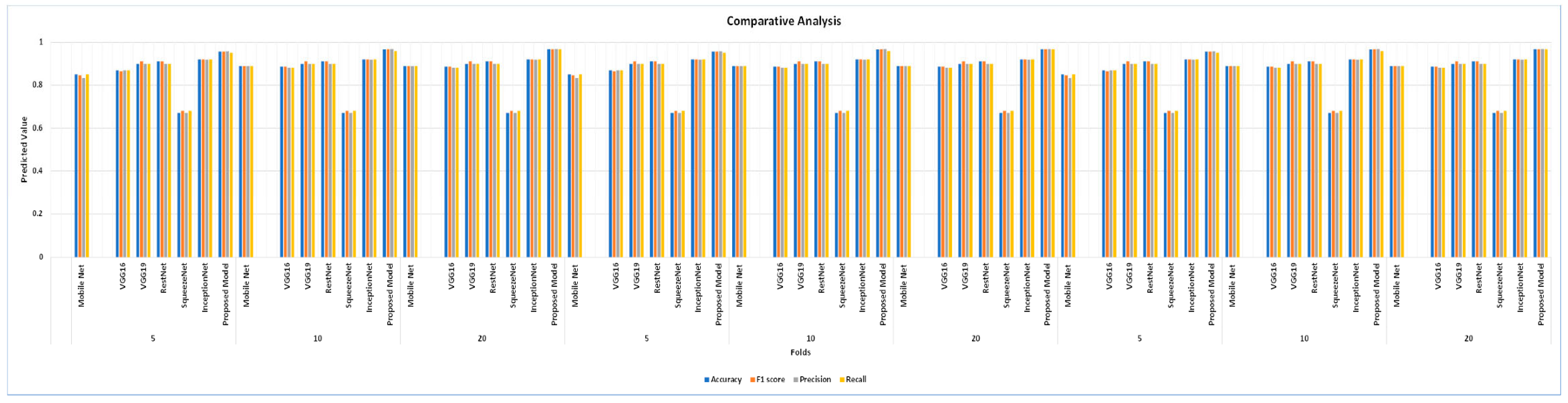

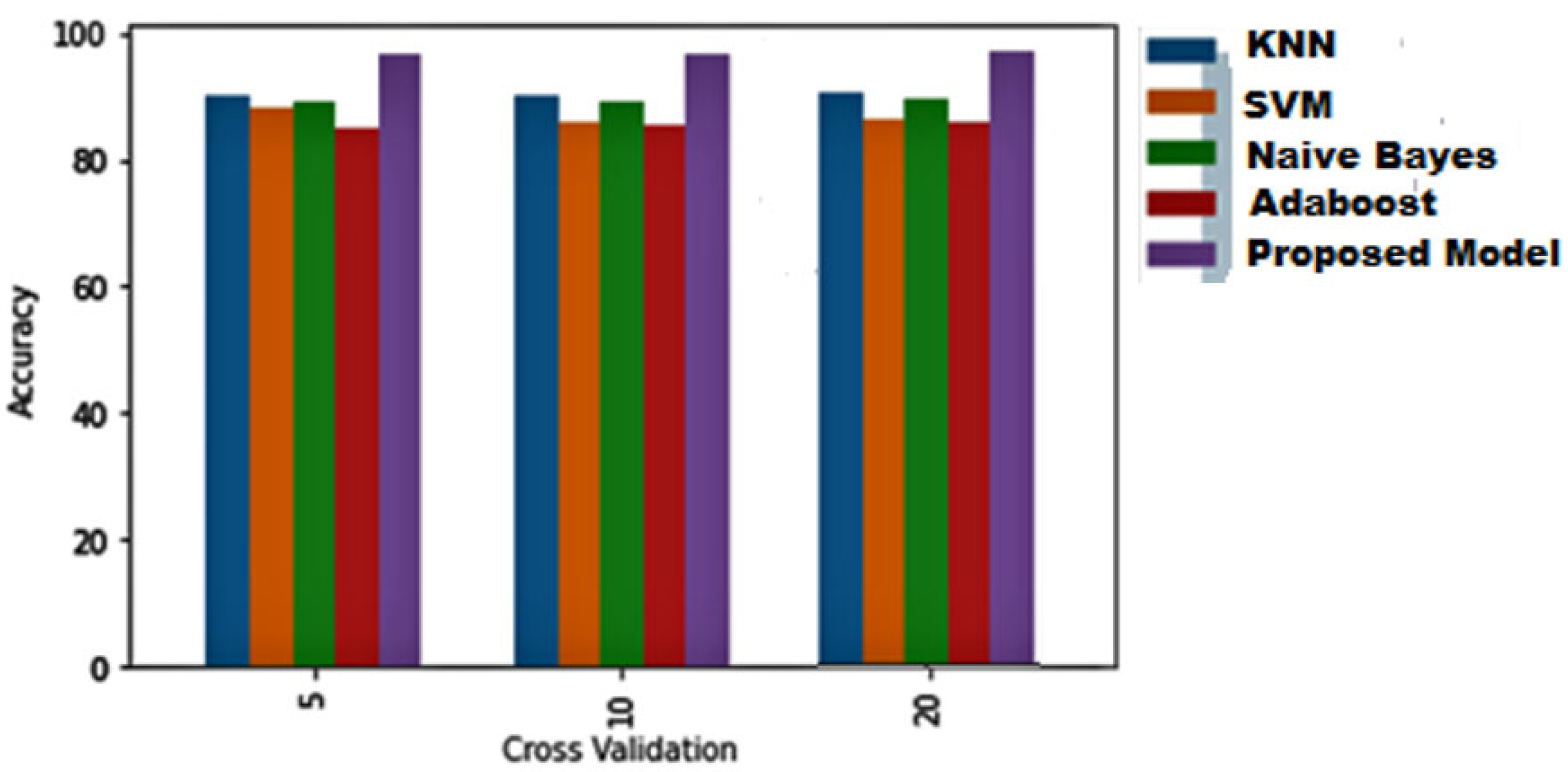

3.2. Analysis with K-Fold Cross-Validation
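The k-fold analysis (the results table uses k = 5, 10 and 20) splits the data into k disjoint folds, each used once for validation while the remaining k − 1 folds are used for training. A minimal, dependency-free sketch of that index bookkeeping follows; the function name and shuffling seed are illustrative, and the paper does not state whether its folds were stratified.

```python
import random
from collections.abc import Iterator


def k_fold_indices(n_samples: int, k: int, seed: int = 42) -> Iterator[tuple[list[int], list[int]]]:
    """Yield (train_indices, validation_indices) for each of k folds.

    Indices are shuffled once, then dealt into k contiguous folds whose sizes differ by at most one.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // k + (1 if f < n_samples % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


# 1500 images (the dataset total) split into 10 folds of 150 validation images each.
for fold, (train, val) in enumerate(k_fold_indices(1500, k=10)):
    print(fold, len(train), len(val))
```

Every index appears in exactly one validation fold, so averaging the k validation scores uses all 1500 images once for evaluation.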

3.3. Confusion Matrix with InceptionV3

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Van Eeuwijk, F.A.; Bustos-Korts, D.; Millet, E.J.; Boer, M.P.; Kruijer, W.; Thompson, A.; Malosetti, M.; Iwata, H.; Quiroz, R.; Kuppe, C.; et al. Modelling strategies for assessing and increasing the effectiveness of new phenotyping techniques in plant breeding. Plant Sci. 2019, 282, 23–39.

2. Martinelli, F.; Scalenghe, R.; Davino, S.; Panno, S.; Scuderi, G.; Ruisi, P.; Dandekar, A.M. Advanced methods of plant disease detection. A review. Agron. Sustain. Dev. 2015, 35, 1–25.

3. Kaur, P.; Gautam, V. Plant Biotic Disease Identification and Classification based on Leaf Image: A Review. In Proceedings of the 3rd International Conference on Computing Informatics and Networks, LNCS, Delhi, India, 29–30 July 2020; Springer: Berlin/Heidelberg, Germany, 2021.

4. Sethy, P.K.; Barpanda, N.K.; Rath, A.K.; Behera, S.K. Image processing techniques for diagnosing rice plant disease: A survey. Procedia Comput. Sci. 2020, 167, 516–530.

5. Gong, A.; Yu, J.; He, Y.; Qiu, Z. Citrus yield estimation based on images processed by an Android mobile phone. Biosyst. Eng. 2013, 115, 162–170.

6. Deng, R.; Jiang, Y.; Tao, M.; Huang, X.; Bangura, K.; Liu, C.; Qi, L. Deep learning-based automatic detection of productive tillers in rice. Comput. Electron. Agric. 2020, 177, 105703.

7. Xu, G.; Zhang, F.; Shah, S.G.; Ye, Y.; Mao, H. Use of leaf color images to identify nitrogen and potassium deficient tomatoes. Pattern Recognit. Lett. 2011, 32, 1584–1590.

8. Baresel, J.P.; Rischbeck, P.; Hu, Y.; Kipp, S.; Barmeier, G.; Mistele, B.; Schmidhalter, U. Use of a digital camera as alternative method for non-destructive detection of the leaf chlorophyll content and the nitrogen nutrition status in wheat. Comput. Electron. Agric. 2017, 140, 25–33.

9. Tao, M.; Ma, X.; Huang, X.; Liu, C.; Deng, R.; Liang, K.; Qi, L. Smartphone-based detection of leaf color levels in rice plants. Comput. Electron. Agric. 2020, 173, 105431.

10. Liu, H.; Ma, X.; Tao, M.; Deng, R.; Bangura, K.; Deng, X.; Qi, L. A plant leaf geometric parameter measurement system based on the Android platform. Sensors 2019, 19, 1872.

11. Jiang, H.; Zhang, C.; Qiao, Y.; Zhang, Z.; Zhang, W.; Song, C. CNN feature-based graph convolutional network for weed and crop recognition in smart farming. Comput. Electron. Agric. 2020, 174, 105450.

12. Liu, B.; Bruch, R. Weed detection for selective spraying: A review. Curr. Robot. Rep. 2020, 1, 19–26.

13. Asad, M.H.; Bais, A. Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network. Inf. Process. Agric. 2020, 7, 535–545.

14. Mishra, A.M.; Harnal, S.; Mohiuddin, K.; Gautam, V.; Nasr, O.A.; Goyal, N.; Singh, A. A Deep Learning-Based Novel Approach for Weed Growth Estimation. Intell. Autom. Soft Comput. 2022, 31, 1157–1172.

15. Ngugi, L.C.; Abelwahab, M.; Abo-Zahhad, M. Recent advances in image processing techniques for automated leaf pest and disease recognition—A review. Inf. Process. Agric. 2021, 8, 27–51.

16. Zhang, M.; Qin, Z.; Liu, X. Remote sensor spectral imagery to detect late blight in field tomatoes. Precis. Agric. 2005, 6, 489–508.

17. Strange, R.N.; Scott, P.R. Plant disease: A threat to global food security. Annu. Rev. Phytopathol. 2005, 43, 83–116.

18. Islam, T.; Sah, M.; Baral, S.; Choudhury, R. A faster technique on rice disease detection using image processing of affected area in agro-field. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 62–66.

19. Zhu, W.; Chen, H.; Ciechanowska, I.; Spaner, D. Application of infrared thermal imaging for the rapid diagnosis of crop disease. IFAC 2018, 51, 424–430.

20. Li, B.; Liu, Z.; Huang, J.; Zhang, L.; Zhou, W.; Shi, J. Hyperspectral identification of rice diseases and pests based on principal component analysis and probabilistic neural network. Trans. Chin. Soc. Agric. Eng. 2009, 25, 43–147.

21. Gautam, V. Qualitative model to enhance quality of metadata for data warehouse. Int. J. Inf. Technol. 2020, 12, 1025–1036.

22. Gunawan, P.A.; Kencana, E.N.; Sari, K. Classification of paddy leaf diseases using artificial neural network. J. Phys. Conf. Ser. IOP Publ. 2013, 2013, 1722.

23. Ozguven, M.M.; Adem, K. Automatic detection and classification of leaf spot disease in sugar beet using deep learning algorithms. Phys. A Stat. Mech. Appl. 2019, 535, 122537.

24. Karlekar, A.; Seal, A. SoyNet: Soybean leaf diseases classification. Comput. Electron. Agric. 2020, 172, 105342.

25. Agarwal, M.; Singh, A.; Arjaria, S.; Sinha, A.; Gupta, S. ToLeD: Tomato leaf disease detection using convolution neural network. Procedia Comput. Sci. 2020, 167, 293–301.

26. Rangarajan, A.K.; Purushothaman, R.; Ramesh, A. Tomato crop disease classification using pre-trained deep learning algorithm. Procedia Comput. Sci. 2018, 133, 1040–1047.

27. Trivedi, N.K.; Gautam, V.; Anand, A.; Aljahdali, H.M.; Villar, S.G.; Anand, D.; Kadry, S. Early Detection and Classification of Tomato Leaf Disease Using High-Performance Deep Neural Network. Sensors 2021, 21, 7987.

28. Sambasivam, G.; Opiyo, G.D. A predictive machine learning application in agriculture: Cassava disease detection and classification with imbalanced dataset using convolutional neural networks. Egypt. Inform. J. 2021, 22, 27–34.

29. Polder, G.; van de Westeringh, N.; Kool, J.; Khan, H.A.; Kootstra, G.; Nieuwenhuizen, A. Automatic detection of tulip breaking virus (TBV) using a deep convolutional neural network. IFAC 2019, 52, 12–17.

30. Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep neural networks with transfer learning in millet crop images. Comput. Ind. 2019, 108, 115–120.

31. Kamal, K.C.; Yin, Z.; Wu, M.; Wu, Z. Depth wise separable convolution architectures for plant disease classification. Comput. Electron. Agric. 2019, 165, 104948.

32. Hossain, S.M.; Tanjil, M.; Morhsed, M.; Ali, M.A.B.; Islam, M.Z.; Islam, M.; Mobassirin, S.; Sarker, I.H.; Islam, S.M. Rice leaf diseases recognition using convolutional neural networks. In Proceedings of the International Conference on Advanced Data Mining and Applications, Foshan, China, 12–15 November 2020; pp. 299–314.

33. Picon, A.; Seitz, M.; Alvarez-Gila, A.; Mohnke, P.; Ortiz-Barredo, A.; Echazarra, J. Crop conditional Convolutional Neural Networks for massive multi-crop plant disease classification over cell phone acquired images taken on real field conditions. Comput. Electron. Agric. 2019, 167, 105093.

34. Barbedo, J.G.A. Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 2019, 180, 96–107.

35. Natalia, K.; Rosemarie, W. Molecular biology of viroid–host interactions and disease control strategies. Plant Sci. 2014, 228, 48–60.

36. Wang, G.; Sun, Y.; Wang, J. Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 2017, 2917536.

37. Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep neural networks-based recognition of plant diseases by leaf image classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.

38. Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022.

39. Bhagawati, R.; Bhagawati, K.; Singh, A.; Nongthombam, R.; Sarmah, R.; Bhagawati, G. Artificial neural network assisted weather-based plant disease forecasting system. Int. J. Recent Innov. Trends Comput. Commun. 2015, 3, 4168–4173.

40. Atole, R.R.; Park, D. A multiclass deep convolutional neural network classifier for detection of common paddy plant anomalies. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 67–70.

41. Chawathe, S.S. Paddy Disease Detection by Image Analysis. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 524–530.

42. Narmadha, R.P.; Arulvadivu, G. Detection and measurement of paddy leaf disease symptoms using image processing. In Proceedings of the 2017 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 5–7 January 2017; pp. 1–4.

43. Velesaca, H.O.; Mira, R.; Suárez, P.L.; Larrea, C.X.; Sappa, A.D. Deep learning based corn kernel classification. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 66–67.

44. Hammad Masood, M.; Saim, H.; Taj, M.; Awais, M.M. Early Disease Diagnosis for Paddy Crop. In Proceedings of the ICLR 2020 Workshop on Computer Vision for Agriculture (CV4A), Addis Ababa, Ethiopia, 26 April 2020.

45. Chen, J.; Zhang, D.; Nanehkaran, Y.A.; Li, D. Detection of rice plant diseases based on deep transfer learning. J. Sci. Food Agric. 2020, 100, 3246–3256.

46. Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53.

47. Rasjava, A.R.I.; Sugiyarto, A.W.; Kurniasari, Y.; Ramadhan, S.Y. Detection of Paddy Plants Diseases Using Convolutional Neural Network (CNN). Int. Conf. Sci. Eng. 2020, 3, 393–396.

48. Baranwal, S.; Khandelwal, S.; Arora, A. Deep learning convolutional neural network for apple leaves disease detection. In Proceedings of the International Conference on Sustainable Computing in Science, Technology and Management (SUSCOM), Jaipur, India, 26–28 February 2019.

49. Karmokar, B.C.; Ullah, M.S.; Siddiquee, M.K.; Alam, K.M.R. Tea leaf diseases recognition using neural network ensemble. Int. J. Comput. Appl. 2015, 114, 975–8887.

50. Srinivas, B.; Satheesh, P.; Naidu, P.R.S.; Neelima, U. Prediction of Guava Plant Diseases Using Deep Learning. Int. Conf. Commun. Cyber Phys. Eng. (ICCCE) 2020, 698, 1495–1505.

51. Goluguri, N.R.R.; Devi, K.S.; Srinivasan, P. Paddy-net: An efficient artificial fish swarm optimization applied deep convolutional neural network model for identifying the Oryza sativa diseases. Neural Comput. Appl. 2020, 33, 5869–5884.

52. Trivedi, N.K.; Sarita, S.; Lilhore, U.K.; Sharma, S.K. COVID-19 Pandemic: Role of Machine Learning & Deep Learning Methods in Diagnosis. Int. J. Cur. Res. Rev. 2021, 13, 150–156.

53. Mahrishi, M.; Morwal, S.; Muzaffar, A.W.; Bhatia, S.; Dadheech, P.; Rahmani, M.K.I. Video index point detection and extraction framework using custom YoloV4 Darknet object detection model. IEEE Access 2021, 9, 143378–143391.

54. Bhalla, K.; Koundal, D.; Bhatia, S.; Khalid, M.; Rahmani, I.; Tahir, M. Fusion of infrared and visible images using fuzzy based siamese convolutional network. Comput. Mater. Contin. 2022, 70, 5503–5518.

55. Noon, S.K.; Amjad, M.; Qureshi, M.A.; Mannan, A. Use of deep learning techniques for identification of plant leaf stresses: A review. Sustain. Comput. Inform. Syst. 2020, 28, 100443.

56. Gandhi, P.; Bhatia, S.; Dev, K. Data Driven Decision Making Using Analytics; CRC Press: Boca Raton, FL, USA, 2021.

57. Gautam, V. Analysis and application of vehicular ad hoc network as intelligent transportation system. In Mobile Radio Communications and 5G Networks; Springer: Singapore, 2021; pp. 1–17.

58. Kansal, N.; Bhushan, B.; Sharma, S. Architecture, Security Vulnerabilities, and the Proposed Countermeasures in Agriculture-Internet-of-Things (AIoT) Systems. Internet Things Anal. Agric. 2021, 3, 329–353.

59. Sethi, R.; Bhushan, B.; Sharma, N.; Kumar, R.; Kaushik, I. Applicability of Industrial IoT in Diversified Sectors: Evolution, Applications and Challenges. Stud. Big Data Multimed. Technol. Internet Things Environ. 2020, 79, 45–67.

60. Sethy, P.K.; Barpanda, N.K.; Rath, A.K.; Behera, S.K. Deep feature-based rice leaf disease identification using support vector machine. Comput. Electron. Agric. 2020, 175, 105527.

61. Kahar, M.A.; Sofianita, M.; Shuzlina, A.R. Early detection and classification of paddy diseases with neural networks and fuzzy logic. In Proceedings of the International Conference Mathematical Computational Methods in Science and Engineering, Kuala Lumpur, Malaysia, 23–25 April 2015; pp. 248–257.

62. Khaing, W.H.; Chit, S.H. Development of Paddy Diseased Leaf Classification System Using Modified Color Conversion. Int. J. Softw. Hardw. Res. Eng. 2018, 6, 24–32.

63. Ganesan, G.; Chinnappan, J. Hybridization of ResNet with YOLO classifier for automated paddy leaf disease recognition: An optimized model. J. Field Robot. 2022, 39, 1087–1111.

64. Akila, M.; Deepan, P. Detection and Classification of Plant Leaf Diseases by using Deep Learning Algorithm. Int. J. Eng. Res. Technol. 2018, 6, 1–5.

65. Ramesh, S.; Vydeki, D. Recognition and classification of paddy leaf diseases using Optimized Deep Neural network with Jaya algorithm. Inf. Process. Agric. 2020, 7, 249–260.

66. Fahrul, A.; Muh, I.; Dyna, M.K.; Krishna, P.C. Expert System for Rice Plant Disease Diagnosis. F1000Research 2019, 7, 1902.

67. Toran, V.; Sipi, D. Optimizing Rice Plant Diseases Recognition in Image Processing and Decision Tree Based Model. In Proceedings of the International Conference on Next Generation Computing Technologies, Dehradun, India, 30–31 October 2017; pp. 733–751.

68. Xiao, M.; Ma, Y.; Feng, Z.; Deng, Z.; Hou, S.; Shu, L.; Lu, Z. Rice blast recognition based on principal component analysis and neural network. Comput. Electron. Agric. 2018, 154, 482–490.

69. Shampa, S.; Asit, K.D. Particle Swarm Optimization based incremental classifier design for rice disease prediction. Comput. Electron. Agric. 2017, 140, 443–451.

70. Huang, J.; Liao, H.; Zhu, Y.; Sun, J.; Sun, Q.; Liu, X. Hyperspectral detection of rice damaged by rice leaf folder (Cnaphalocrocis medinalis). Comput. Electron. Agric. 2012, 82, 100–107.

71. Wei, J.Y.; Jing, H.C.; Guo, N.C.; Shi, H.W.; Feng, F.F. The early diagnosis and fast detection of blast fungus, Magnaporthe grisea, in rice plant by using its chitinase as biochemical marker and a rice cDNA encoding mannose-binding lectin as recognition probe. Biosens. Bioelectron. 2013, 15, 820–826.

72. Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y.A. Using deep transfer learning for image-based plant disease identification. Comput. Electron. Agric. 2020, 173, 105393.

73. Uguz, S.; Uysal, N. Classification of olive leaf diseases using deep convolutional neural networks. Neural Comput. Appl. 2021, 33, 4133–4149.

74. Sharma, M.; Kumar, C.J.; Deka, A. Early diagnosis of rice plant disease using machine learning techniques. Arch. Phytopathol. Plant Prot. 2022, 55, 1–25.

75. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26–30 June 2016.

76. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

77. Peng, J.; Kang, S.; Ning, Z.; Deng, H.; Shen, J.; Xu, Y.; Zhang, J.; Zhao, W.; Li, X.; Gong, W. Residual convolutional neural network for predicting response of trans arterial chemoembolization in hepatocellular carcinoma from CT imaging. Eur. Radiol. 2020, 30, 413–424.

78. Ma, J.; Du, K.; Zheng, F.; Zhang, L.; Sun, Z. A segmentation method for processing greenhouse vegetable foliar disease symptom images. Inf. Process. Agric. 2019, 6, 216–223.

79. Suryanarayana, G.; Chandran, K.; Khalaf, O.I.; Alotaibi, Y.; Alsufyani, A.; Alghamdi, S.A. Accurate Magnetic Resonance Image Super-Resolution Using Deep Networks and Gaussian Filtering in the Stationary Wavelet Domain. IEEE Access 2021, 9, 71406–71417.

80. Li, G.; Liu, F.; Sharma, A.; Khalaf, O.I.; Alotaibi, Y.; Alsufyani, A.; Alghamdi, S. Research on the natural language recognition method based on cluster analysis using neural network. Math. Probl. Eng. 2021, 2021, 9982305.

81. Singh, V.; Misra, A.K. Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agric. 2017, 4, 41–49.

82. Rice Leaf Dataset. 2022. Available online: https://www.kaggle.com/minhhuy2810/rice-diseases-image-dataset (accessed on 2 February 2022).

83. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

84. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

85. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

86. Chung, C.L.; Huang, K.J.; Chen, S.Y.; Lai, M.H.; Chen, Y.C.; Kuo, Y.F. Detecting bakanae disease in rice seedlings by machine vision. Comput. Electron. Agric. 2016, 121, 404–411.

87. Lu, Y.; Yi, S.; Zeng, N.; Liu, Y.; Zhang, Y. Identification of rice diseases using deep convolutional neural networks. Neurocomputing 2017, 267, 378–384.

88. Rafeed, R.C.; Saha, A.P.; Eunus, A.M.; Khan, M.A.I.; Hasan, A.S.; Nowrin, F.; Wasif, A. Identification and recognition of rice diseases and pests using convolutional neural networks. Biosyst. Eng. 2020, 194, 112–120.

89. Liang, W.J.; Zhang, H.; Zhang, G.F.; Cao, H. Rice blast disease recognition using a deep convolutional neural network. Sci. Rep. 2019, 9, 1–10.

90. Chen, W.L.; Lin, Y.B.; Ng, F.L.; Liu, C.Y.; Lin, Y.W. Ricetalk: Rice blast detection using internet of things and artificial intelligence technologies. IEEE Internet Things J. 2019, 7, 1001–1010.

91. Duong-Trung, N.; Quach, L.D.; Nguyen, M.H.; Nguyen, C.N. Classification of grain discoloration via transfer learning and convolutional neural networks. In Proceedings of the International Conference on Machine Learning and Soft Computing, Da Lat, Vietnam, 25–28 January 2019; pp. 27–32.

92. Shrivastava, V.K.; Pradhan, M.K.; Minz, S.; Thakur, M.P. Rice plant disease classification using transfer learning of deep convolution neural network. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-3/W6, 631–635.

93. Deng, R.; Tao, M.; Xing, H.; Yang, X.; Liu, C.; Liao, K.; Qi, L. Automatic diagnosis of rice diseases using deep learning. Front. Plant Sci. 2021, 12, 701038.

| Class | Total Images | Training Images | Testing/Validation Images |
|---|---|---|---|
| Blight | 300 | 250 | 50 |
| Blast | 365 | 300 | 65 |
| Brown spot | 335 | 270 | 65 |
| Healthy | 500 | 400 | 100 |
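The dataset table implies a per-class hold-out split of 1220 training and 280 testing/validation images. A sketch that reproduces those per-class counts by shuffling each class independently is shown below; the file names, the function name and the seed are hypothetical, and the paper does not say how its images were drawn.

```python
import random

# Per-class test/validation counts taken from the dataset table.
TEST_COUNTS = {"Blight": 50, "Blast": 65, "Brown spot": 65, "Healthy": 100}


def per_class_split(files_by_class: dict[str, list[str]], test_counts: dict[str, int], seed: int = 0):
    """Shuffle each class separately and hold out the requested number of test images."""
    rng = random.Random(seed)
    train, test = [], []
    for label, files in files_by_class.items():
        shuffled = files[:]                  # copy so the caller's list is untouched
        rng.shuffle(shuffled)
        n_test = test_counts[label]
        test += [(f, label) for f in shuffled[:n_test]]
        train += [(f, label) for f in shuffled[n_test:]]
    return train, test


# Hypothetical file lists sized like the dataset (300, 365, 335 and 500 images).
sizes = {"Blight": 300, "Blast": 365, "Brown spot": 335, "Healthy": 500}
files = {label: [f"{label}_{i:03d}.jpg" for i in range(n)] for label, n in sizes.items()}
train, test = per_class_split(files, TEST_COUNTS)
print(len(train), len(test))  # 1220 280
```

Splitting per class keeps every disease represented in the test set even though the classes are imbalanced (Healthy has 500 images, Blight 300).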

| Hyperparameter | Value(s) |
|---|---|
| No. of convolutional layers | 15 |
| No. of max-pooling layers | 15 |
| Dropout rate | 0.25, 0.5 |
| Network weight initialization | Uniform |
| Activation function | ReLU |
| Learning rates | 0.001, 0.01, 0.1 |
| Epochs | 50, 100, 200, 250 |
| Batch sizes | 32, 50, 60, 100 |
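The hyperparameter table lists several candidate values for dropout, learning rate, epochs and batch size. If every combination were tried exhaustively (an assumption; the epoch and learning-rate results table reports only a subset), the grid could be enumerated as below. The `GRID` keys and the `iter_configs` helper are illustrative names.

```python
from itertools import product

# Candidate values copied from the hyperparameter table.
GRID = {
    "dropout": [0.25, 0.5],
    "learning_rate": [0.001, 0.01, 0.1],
    "epochs": [50, 100, 200, 250],
    "batch_size": [32, 50, 60, 100],
}


def iter_configs(grid: dict[str, list]) -> list[dict]:
    """Expand a parameter grid into one dict per combination (Cartesian product)."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


configs = iter_configs(GRID)
print(len(configs))   # 2 * 3 * 4 * 4 = 96
print(configs[0])     # {'dropout': 0.25, 'learning_rate': 0.001, 'epochs': 50, 'batch_size': 32}
```

Each configuration would then drive one training run, keeping the one with the best validation accuracy; at 96 full runs, random or staged search is often used instead to limit compute.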

| Epoch | Learning Rate | Accuracy (%) |
|---|---|---|
| 50 | 0.1 | 96.23 |
| 50 | 0.01 | 96.42 |
| 50 | 0.001 | 96.35 |
| 50 | 0.001 | 96.52 |
| 100 | 0.1 | 96.23 |
| 100 | 0.01 | 96.36 |
| 100 | 0.001 | 96.35 |
| 100 | 0.001 | 96.32 |
| 150 | 0.1 | 96.65 |
| 150 | 0.01 | 96.36 |
| 150 | 0.001 | 96.33 |
| 150 | 0.001 | 96.32 |
| 200 | 0.1 | 96.62 |
| 200 | 0.01 | 96.47 |
| 200 | 0.001 | 97.47 |
| 200 | 0.001 | 96.47 |

| Year | No. of Diseases | Techniques | Accuracy (%) | Reference |
|---|---|---|---|---|
| 2016 | 1 | SVM, GA | 87.90 | [86] |
| 2017 | 10 | CNN | 95.48 | [87] |
| 2018 | 9 | CNN | 93.30 | [88] |
| 2019 | 1 | CNN, SVM, LBPH | 95.83 | [89] |
| 2019 | 1 | RiceTalk | 89.40 | [90] |
| 2019 | 1 | InceptionV3 | 88.20 | [91] |
| 2019 | 3 | AlexNet, CNN, SVM | 91.37 | [92] |
| 2021 | 3 | Ensemble DL | 91 | [93] |

| Dataset Used | Model | Number of Folds | Accuracy | F1 Score | Precision | Recall |
|---|---|---|---|---|---|---|
| Paddy Leaf [88] | MobileNet | 5 | 0.85 | 0.845 | 0.834 | 0.85 |
| Paddy Leaf [88] | VGG16 | 5 | 0.87 | 0.8654 | 0.87 | 0.87 |
| Paddy Leaf [88] | VGG19 | 5 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [88] | ResNet | 5 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [88] | SqueezeNet | 5 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [88] | InceptionNet | 5 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [88] | Proposed Model | 5 | 0.9574 | 0.9578 | 0.9588 | 0.95 |
| Paddy Leaf [88] | MobileNet | 10 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [88] | VGG16 | 10 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [88] | VGG19 | 10 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [88] | ResNet | 10 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [88] | SqueezeNet | 10 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [88] | InceptionNet | 10 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [88] | Proposed Model | 10 | 0.9674 | 0.9678 | 0.9688 | 0.96 |
| Paddy Leaf [88] | MobileNet | 20 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [88] | VGG16 | 20 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [88] | VGG19 | 20 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [88] | ResNet | 20 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [88] | SqueezeNet | 20 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [88] | InceptionNet | 20 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [88] | Proposed Model | 20 | 0.968 | 0.968 | 0.9688 | 0.968 |
| Paddy Leaf [89] | MobileNet | 5 | 0.85 | 0.845 | 0.834 | 0.85 |
| Paddy Leaf [89] | VGG16 | 5 | 0.87 | 0.8654 | 0.87 | 0.87 |
| Paddy Leaf [89] | VGG19 | 5 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 5 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 5 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 5 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 5 | 0.9574 | 0.9578 | 0.9588 | 0.95 |
| Paddy Leaf [89] | MobileNet | 10 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [89] | VGG16 | 10 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [89] | VGG19 | 10 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 10 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 10 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 10 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 10 | 0.9674 | 0.9678 | 0.9688 | 0.96 |
| Paddy Leaf [89] | MobileNet | 20 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [89] | VGG16 | 20 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [89] | VGG19 | 20 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 20 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 20 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 20 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 20 | 0.968 | 0.968 | 0.9688 | 0.968 |
| Paddy Leaf [89] | MobileNet | 5 | 0.85 | 0.845 | 0.834 | 0.85 |
| Paddy Leaf [89] | VGG16 | 5 | 0.87 | 0.8654 | 0.87 | 0.87 |
| Paddy Leaf [89] | VGG19 | 5 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 5 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 5 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 5 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 5 | 0.9574 | 0.9578 | 0.9588 | 0.95 |
| Paddy Leaf [89] | MobileNet | 10 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [89] | VGG16 | 10 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [89] | VGG19 | 10 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 10 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 10 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 10 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 10 | 0.9674 | 0.9678 | 0.9688 | 0.96 |
| Paddy Leaf [89] | MobileNet | 20 | 0.89 | 0.89 | 0.89 | 0.89 |
| Paddy Leaf [89] | VGG16 | 20 | 0.887 | 0.887 | 0.88 | 0.88 |
| Paddy Leaf [89] | VGG19 | 20 | 0.9 | 0.91 | 0.9 | 0.9 |
| Paddy Leaf [89] | ResNet | 20 | 0.91 | 0.91 | 0.90 | 0.9 |
| Paddy Leaf [89] | SqueezeNet | 20 | 0.67 | 0.68 | 0.67 | 0.68 |
| Paddy Leaf [89] | InceptionNet | 20 | 0.92 | 0.92 | 0.9185 | 0.92 |
| Paddy Leaf [89] | Proposed Model | 20 | 0.968 | 0.968 | 0.9688 | 0.968 |

| Actual \ Predicted | Blight | Blast | Brown Spot | Healthy | ∑ |
|---|---|---|---|---|---|
| Blight | 96.46% | 1.90% | 1.00% | 0.50% | 300 |
| Blast | 1.85% | 96.49% | 0.70% | 0.55% | 365 |
| Brown Spot | 0.50% | 0.70% | 98.00% | 0.80% | 335 |
| Healthy | 0.50% | 0.60% | 2.10% | 96.80% | 500 |
| ∑ | 300 | 365 | 335 | 500 | 1500 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gautam, V.; Trivedi, N.K.; Singh, A.; Mohamed, H.G.; Noya, I.D.; Kaur, P.; Goyal, N. A Transfer Learning-Based Artificial Intelligence Model for Leaf Disease Assessment. Sustainability 2022, 14, 13610. https://doi.org/10.3390/su142013610
