The Core of Smart Cities: Knowledge Representation and Descriptive Framework Construction in Knowledge-Based Visual Question Answering

Abstract

1. Introduction

- •

- A knowledge description framework is proposed to express the knowledge composition, morphology and mathematical model in visual question answering;

- •

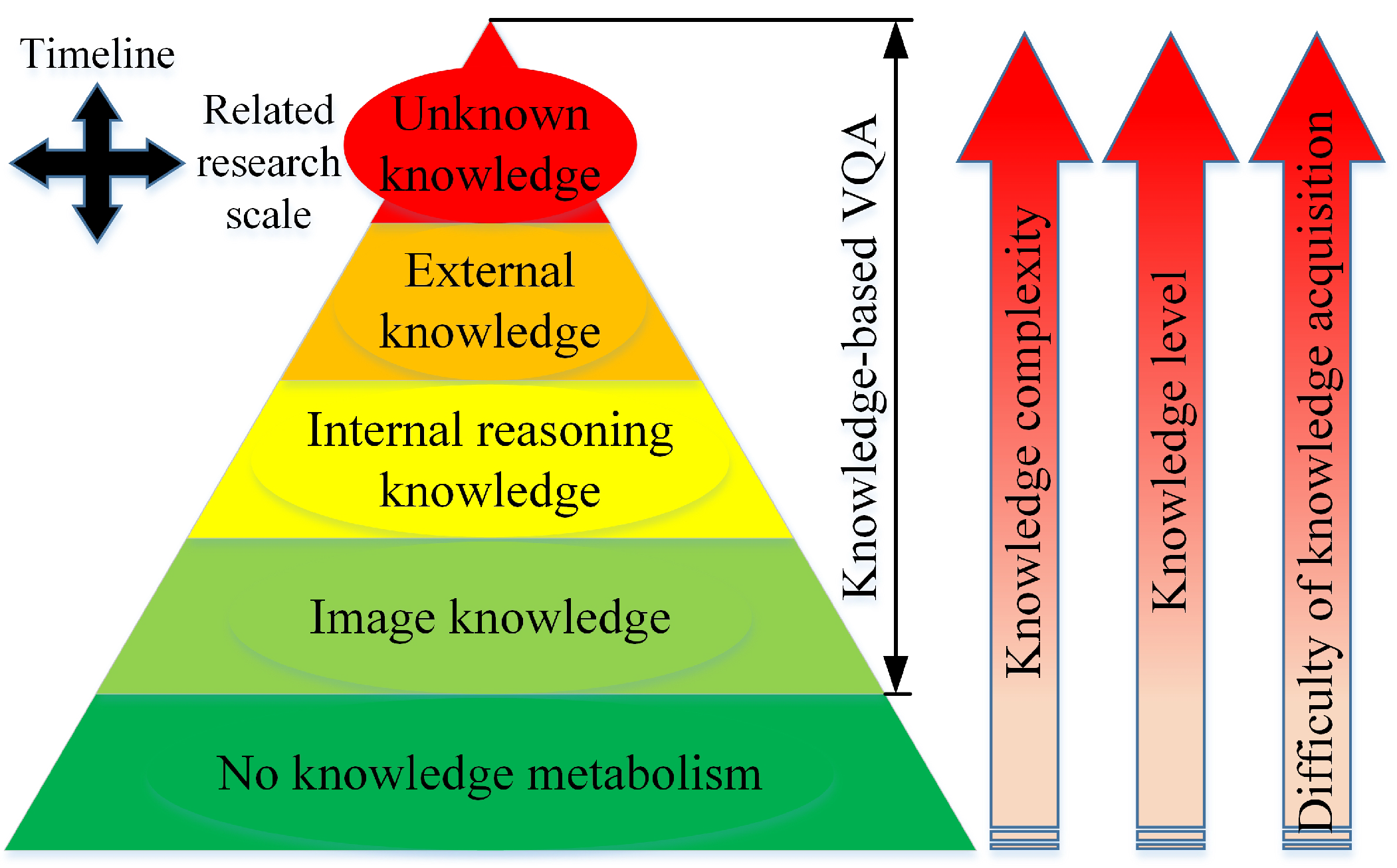

- The concept of knowledge pyramid is proposed to describe the knowledge content and participation forms in different visual question answering;

- •

- The basic mathematical model of knowledge-based visual question answering is proposed;

- •

- The rationality of the research content of this paper is explained by means of example elaboration and experimental verification.

2. Knowledge Pyramid

3. Theoretical Model

4. Knowledge Description Framework

| Category | Type | Method |
|---|---|---|
| Data | Data set | VQA1.0 [5], VQA2.0 [42], FVQA [39], Visual 7W [43], GQA [44], ⋯ |
| Data | Knowledge base | ConceptNet [45], VGR [46], DBpedia [47], Webchild [48], ⋯ |
| Network Type | Convolutional Neural Network | Faster R-CNN [49], YOLO v3 [50], Resnet [51], VggNet [52], ⋯ |
| Network Type | Recurrent Neural Network | LSTM [53], GRU [54], BERT [55], GPT-3 [56], ⋯ |
| Network Type | Attention Mechanism | Transformer [57], Re-Attention [58], Rahman [34], ⋯ |
| Index | Evaluation indicators | Accuracy, WUPS, BLEU, Consensus et al. [8] |

5. Experiment and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Materials Used in this Paper

References

- [1] Sheng, H.; Zhang, Y.; Wang, W.; Shan, Z.; Fang, Y.; Lyu, W.; Xiong, Z. High confident evaluation for smart city services. Front. Environ. Sci. 2022, 1103.
- [2] Li, C.; Xuan, W. Green development assessment of smart city based on PP-BP intelligent integrated and future prospect of big data. Acta Electron. Malays. (AEM) 2017, 1, 1–4.
- [3] Fang, Y.; Shan, Z.; Wang, W. Modeling and key technologies of a data-driven smart city system. IEEE Access 2021, 9, 91244–91258.
- [4] Lu, H.P.; Chen, C.S.; Yu, H. Technology roadmap for building a smart city: An exploring study on methodology. Future Gener. Comput. Syst. 2019, 97, 727–742.
- [5] Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. VQA: Visual question answering. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 7–13 December 2015; pp. 2425–2433.
- [6] Farazi, M.; Khan, S.; Barnes, N. Accuracy vs. complexity: A trade-off in visual question answering models. Pattern Recognit. 2021, 120, 108106.
- [7] Teney, D.; Wu, Q.; van den Hengel, A. Visual question answering: A tutorial. IEEE Signal Process. Mag. 2017, 34, 63–75.
- [8] Manmadhan, S.; Kovoor, B.C. Visual question answering: A state-of-the-art review. Artif. Intell. Rev. 2020, 53, 5705–5745.
- [9] Hosseinioun, S. Knowledge grid model in facilitating knowledge sharing among big data community. Comput. Sci. 2018, 2, 8455–8459.
- [10] Aditya, S.; Yang, Y.; Baral, C. Explicit reasoning over end-to-end neural architectures for visual question answering. AAAI Conf. Artif. Intell. 2018, 32, 629–637.
- [11] Agrawal, A.; Batra, D.; Parikh, D.; Kembhavi, A. Don’t just assume; look and answer: Overcoming priors for visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4971–4980.
- [12] Wu, Y.; Ma, Y.; Wan, S. Multi-scale relation reasoning for multi-modal Visual Question Answering. Signal Process. Image Commun. 2021, 96, 116319.
- [13] Ma, Z.; Zheng, W.; Chen, X.; Yin, L. Joint embedding VQA model based on dynamic word vector. PeerJ Comput. Sci. 2021, 7, e353.
- [14] Bai, Y.; Fu, J.; Zhao, T.; Mei, T. Deep attention neural tensor network for visual question answering. Proc. Eur. Conf. Comput. Vis. 2018, 11216, 20–35.
- [15] Gordon, D.; Kembhavi, A.; Rastegari, M.; Redmon, J.; Fox, D.; Farhadi, A. IQA: Visual question answering in interactive environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4089–4098.
- [16] Li, W.; Yuan, Z.; Fang, X.; Wang, C. Knowing where to look? Analysis on attention of visual question answering system. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 145–152.
- [17] Zhang, W.; Yu, J.; Zhao, W.; Ran, C. DMRFNet: Deep multimodal reasoning and fusion for visual question answering and explanation generation. Inf. Fusion 2021, 72, 70–79.
- [18] Liang, W.; Jiang, Y.; Liu, Z. GraphVQA: Language-Guided Graph Neural Networks for Graph-based Visual Question Answering. arXiv 2021, arXiv:2104.10283.
- [19] Kim, J.J.; Lee, D.G.; Wu, J.; Jung, H.G.; Lee, S.W. Visual question answering based on local-scene-aware referring expression generation. Neural Netw. 2021, 139, 158–167.
- [20] Zhu, Y.; Zhang, C.; Ré, C.; Fei-Fei, L. Building a Large-scale Multimodal Knowledge Base for Visual Question Answering. arXiv 2015, arXiv:1507.05670.
- [21] Wu, Q.; Wang, P.; Shen, C.; Dick, A.; Van Den Hengel, A. Ask me anything: Free-form visual question answering based on knowledge from external sources. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4622–4630.
- [22] Zhu, Y.; Lim, J.J.; Fei-Fei, L. Knowledge acquisition for visual question answering via iterative querying. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1154–1163.
- [23] Su, Z.; Zhu, C.; Dong, Y.; Cai, D.; Chen, Y.; Li, J. Learning visual knowledge memory networks for visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7736–7745.
- [24] Yu, J.; Zhu, Z.; Wang, Y.; Zhang, W.; Hu, Y.; Tan, J. Cross-modal knowledge reasoning for knowledge-based visual question answering. Pattern Recognit. 2020, 108, 107563.
- [25] Zhang, L.; Liu, S.; Liu, D.; Zeng, P.; Li, X.; Song, J.; Gao, L. Rich visual knowledge-based augmentation network for visual question answering. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4362–4373.
- [26] Zheng, W.; Yin, L.; Chen, X.; Ma, Z.; Liu, S.; Yang, B. Knowledge base graph embedding module design for Visual question answering model. Pattern Recognit. 2021, 120, 108153.
- [27] Liu, L.; Wang, M.; He, X.; Qing, L.; Chen, H. Fact-based visual question answering via dual-process system. Knowl.-Based Syst. 2022, 237, 107650.
- [28] Uehara, K.; Duan, N.; Harada, T. Learning To Ask Informative Sub-Questions for Visual Question Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21 June 2022; pp. 4681–4690.
- [29] Cudic, M.; Burt, R.; Santana, E.; Principe, J.C. A flexible testing environment for visual question answering with performance evaluation. Neurocomputing 2018, 291, 128–135.
- [30] Lioutas, V.; Passalis, N.; Tefas, A. Explicit ensemble attention learning for improving visual question answering. Pattern Recognit. Lett. 2018, 111, 51–57.
- [31] Liu, F.; Xiang, T.; Hospedales, T.M.; Yang, W.; Sun, C. Inverse visual question answering: A new benchmark and VQA diagnosis tool. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 460–474.
- [32] Lu, P.; Li, H.; Zhang, W.; Wang, J.; Wang, X. Co-attending free-form regions and detections with multi-modal multiplicative feature embedding for visual question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 1–8.
- [33] Mun, J.; Lee, K.; Shin, J.; Han, B. Learning to Specialize with Knowledge Distillation for Visual Question Answering. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 8092–8102.
- [34] Rahman, T.; Chou, S.H.; Sigal, L.; Carenini, G. An Improved Attention for Visual Question Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1653–1662.
- [35] Zhang, W.; Yu, J.; Hu, H.; Hu, H.; Qin, Z. Multimodal feature fusion by relational reasoning and attention for visual question answering. Inf. Fusion 2020, 55, 116–126.
- [36] Bajaj, G.; Bandyopadhyay, B.; Schmidt, D.; Maneriker, P.; Myers, C.; Parthasarathy, S. Understanding Knowledge Gaps in Visual Question Answering: Implications for Gap Identification and Testing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 386–387.
- [37] Marino, K.; Rastegari, M.; Farhadi, A.; Mottaghi, R. OK-VQA: A visual question answering benchmark requiring external knowledge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3195–3204.
- [38] Wu, Q.; Shen, C.; Wang, P.; Dick, A.; Van Den Hengel, A. Image captioning and visual question answering based on attributes and external knowledge. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1367–1381.
- [39] Wang, P.; Wu, Q.; Shen, C.; Dick, A.; Van Den Hengel, A. FVQA: Fact-based visual question answering. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2413–2427.
- [40] Wang, P.; Wu, Q.; Shen, C.; Hengel, A.V.D.; Dick, A. Explicit Knowledge-based Reasoning for Visual Question Answering. Proc. Conf. Artif. Intell. 2017, 1290–1296.
- [41] Teney, D.; Liu, L.; van Den Hengel, A. Graph-structured representations for visual question answering. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3233–3241.
- [42] Goyal, Y.; Khot, T.; Summers-Stay, D.; Batra, D.; Parikh, D. Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering. Int. J. Comput. Vis. 2019, 398–414.
- [43] Zhu, Y.; Groth, O.; Bernstein, M.; Fei-Fei, L. Visual7W: Grounded question answering in images. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4995–5004.
- [44] Hudson, D.A.; Manning, C.D. GQA: A new dataset for real-world visual reasoning and compositional question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6700–6709.
- [45] Speer, R.; Chin, J.; Havasi, C. ConceptNet 5.5: An open multilingual graph of general knowledge. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.
- [46] Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual Genome: Connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 2017, 123, 32–73.
- [47] Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A nucleus for a web of open data. Semant. Web. 2017, 722–735.
- [48] Tandon, N.; Melo, G.; Weikum, G. Acquiring comparative commonsense knowledge from the web. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; p. 28.
- [49] Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6077–6086.
- [50] Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
- [51] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
- [52] Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
- [53] Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
- [54] Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.
- [55] Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.
- [56] Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://www.bibsonomy.org/bibtex/273ced32c0d4588eb95b6986dc2c8147c/jonaskaiser (accessed on 24 September 2022).
- [57] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. Available online: https://papers.nips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html (accessed on 24 September 2022).
- [58] Guo, W.; Zhang, Y.; Yang, J.; Yuan, X. Re-attention for visual question answering. IEEE Trans. Image Process. 2021, 30, 6730–6743.

| Number | Existing Form | Sample Image | Sample Question | Standard Answer |
|---|---|---|---|---|
| 1 | No knowledge | Figure A1a | Null | Null |
| 2 | Image knowledge | Figure A1a | What fruits are in the image? | Banana |
| 3 | Internal reasoning knowledge | Figure A1a | What is the mustache made of? | Banana |
| 4 | External knowledge | Figure A1a | What kind of banana is it? | Emperor banana |
| 5 | Unknown knowledge | Figure A1a | What are the girls doing? | Play? |
| 6 | No knowledge | Figure A1e | Null | Null |
| 7 | Image knowledge | Figure A1e | How many people are there in the image? | Two |
| 8 | Internal reasoning knowledge | Figure A1e | What’s in front of the woman? | Computer |
| 9 | External knowledge | Figure A1e | What brand of computer does the woman in the image use? | Apple |
| 10 | Unknown knowledge | Figure A1e | Are they working overtime? | Maybe |
| 11 | No knowledge | Figure A1h | Null | Null |
| 12 | Image knowledge | Figure A1h | How many people are there in the image? | Two |
| 13 | Internal reasoning knowledge | Figure A1h | What is the man holding in his hand? | Umbrella |
| 14 | External knowledge | Figure A1h | What are the two of them doing? | Taking wedding photos |
| 15 | Unknown knowledge | Figure A1h | Where is this? | Do not know |

| Level | Existing Form | Related Research Work |
|---|---|---|
| 1 | No knowledge | Agrawal [11], Cudic [29], Lioutas [30], Liu [31], Lu [32], Mun [33], Wu [12] |
| 2 | Image knowledge | Bai [14], Gordon [15], Li [16], Rahman [34] |
| 3 | Internal reasoning knowledge | Zhang [17], Liang [18], Kim [19], Zhang [35], Bajaj [36] |
| 4 | External knowledge | Zhang [25], Yu [24], Marino [37], Su [23], Aditya [10], Zhu [22], Wu [38], Wang [39,40], Wu [21], Zhu [20] |
| 5 | Unknown knowledge | None |
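The five levels in the table above form an ordered scale, which can be sketched as an enumeration. This is only an illustrative encoding: the names `KnowledgeLevel`, `LEVEL_NAMES`, and `describe` are hypothetical and do not come from the paper; only the level numbers and their labels are taken from the table.

```python
from enum import IntEnum


class KnowledgeLevel(IntEnum):
    """Hypothetical encoding of the five levels, numbered as in the table."""
    NO_KNOWLEDGE = 1
    IMAGE = 2
    INTERNAL_REASONING = 3
    EXTERNAL = 4
    UNKNOWN = 5


# Labels copied from the "Existing Form" column of the table.
LEVEL_NAMES = {
    KnowledgeLevel.NO_KNOWLEDGE: "No knowledge",
    KnowledgeLevel.IMAGE: "Image knowledge",
    KnowledgeLevel.INTERNAL_REASONING: "Internal reasoning knowledge",
    KnowledgeLevel.EXTERNAL: "External knowledge",
    KnowledgeLevel.UNKNOWN: "Unknown knowledge",
}


def describe(level: int) -> str:
    """Return 'Level N: label' for a level number from 1 to 5."""
    lvl = KnowledgeLevel(level)  # raises ValueError for numbers outside 1..5
    return f"Level {lvl.value}: {LEVEL_NAMES[lvl]}"
```

Using an `IntEnum` keeps the levels comparable, so a check such as `KnowledgeLevel.IMAGE < KnowledgeLevel.EXTERNAL` reflects their order in the table.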

| Level | Method | Publication Year | Dataset | Accuracy (%) |
|---|---|---|---|---|
| 1 | Agrawal [11] | 2018 | VQA v2 [42] | 48.24 |
| 1 | Cudic [29] | 2018 | — | — |
| 1 | Lioutas [30] | 2018 | Visual7W [43] | 66.6 |
| 1 | Liu [31] | 2018 | VQA v2 | 62.19 |
| 1 | Lu [32] | 2018 | VQA v1 | 69.97 |
| 1 | Mun [33] | 2018 | — | — |
| 1 | Wu [12] | 2021 | VQA v1 | 68.47 |
| 2 | Bai [14] | 2018 | VQA v2 | 67.94 |
| 2 | Gordon [15] | 2018 | — | — |
| 2 | Li [16] | 2018 | VQA v2 | 65.19 |
| 2 | Rahman [34] | 2021 | VQA v2 | 70.90 |
| 3 | Zhang [35] | 2020 | VQA v2 | 67.34 |
| 3 | Bajaj [36] | 2020 | — | — |
| 3 | Zhang [17] | 2021 | VQA v2 | 71.27 |
| 3 | Liang [18] | 2021 | GQA [44] | 94.78 |
| 3 | Kim [19] | 2021 | VQA v2 | 70.79 |
| 4 | Zhu [22] | 2017 | VQA v1 | 68.90 |
| 4 | Wu [38] | 2017 | VQA v1 | 59.50 |
| 4 | Wang [40] | 2017 | VQA v1 | 69.60 |
| 4 | Su [23] | 2018 | VQA v1 | 66.10 |
| 4 | Aditya [10] | 2018 | VQA v2 | — |
| 4 | Zhang [25] | 2020 | VQA v2 | 71.84 |
| 4 | Yu [24] | 2020 | FVQA [39] | 79.63 |
| 4 | Yu [24] | 2020 | Visual7W | 69.03 |
| 5 | — | — | — | — |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, R.; Wu, S.; Wang, X. The Core of Smart Cities: Knowledge Representation and Descriptive Framework Construction in Knowledge-Based Visual Question Answering. Sustainability 2022, 14, 13236. https://doi.org/10.3390/su142013236
