An Ensemble Model with Adaptive Variational Mode Decomposition and Multivariate Temporal Graph Neural Network for PM2.5 Concentration Forecasting

Abstract

1. Introduction

2. Methods

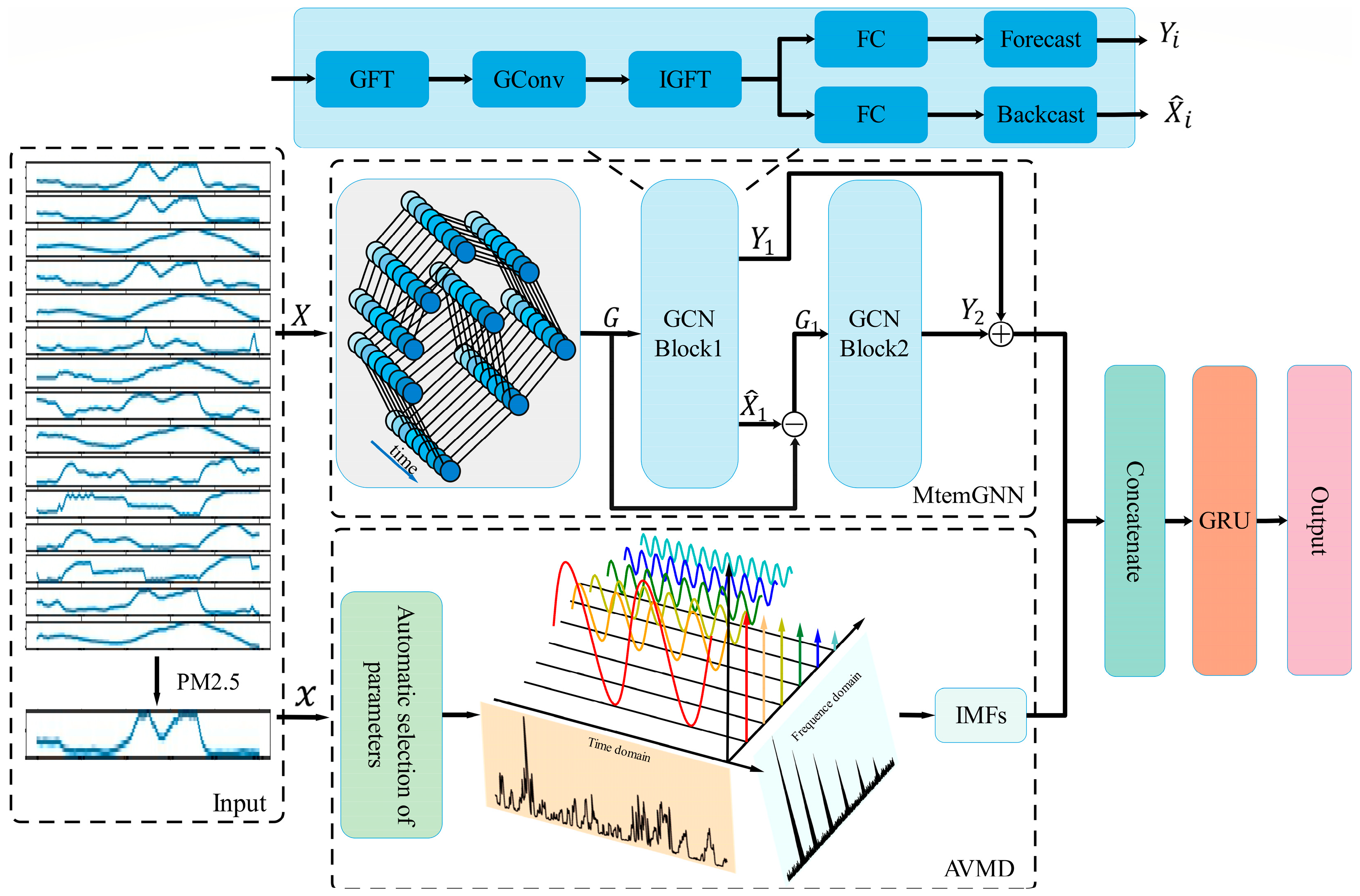

2.1. Overview of the PMNet

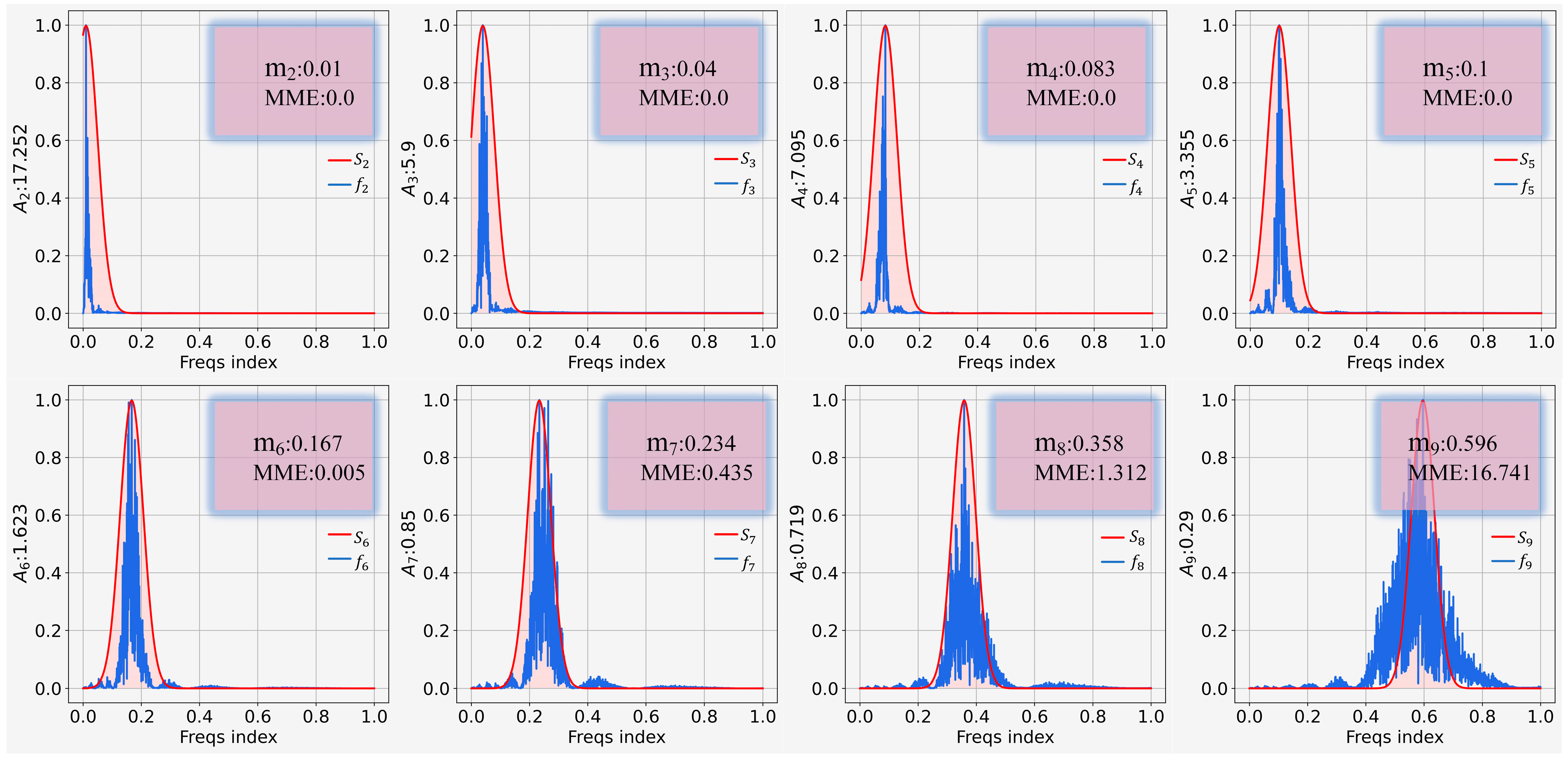

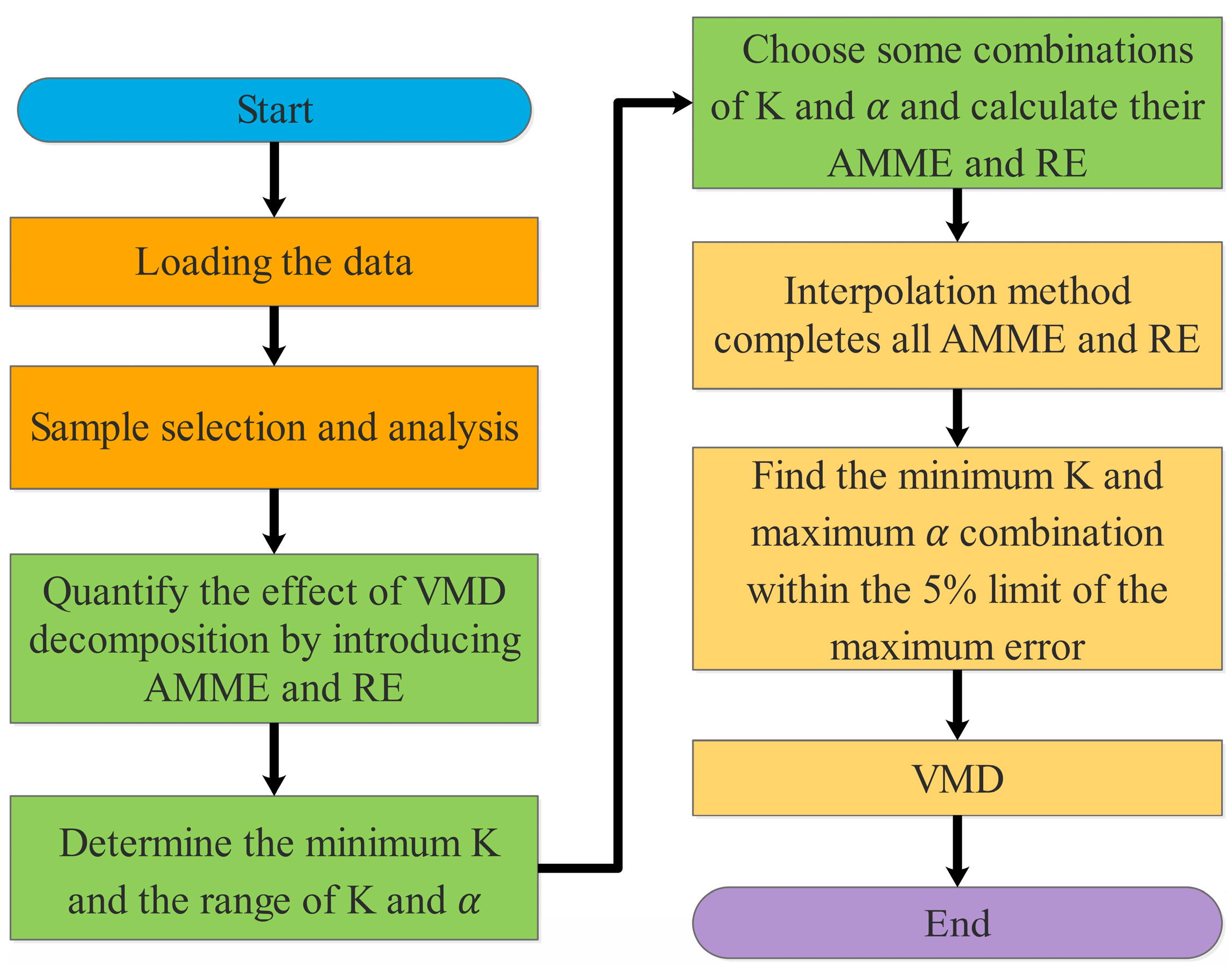

2.2. AVMD

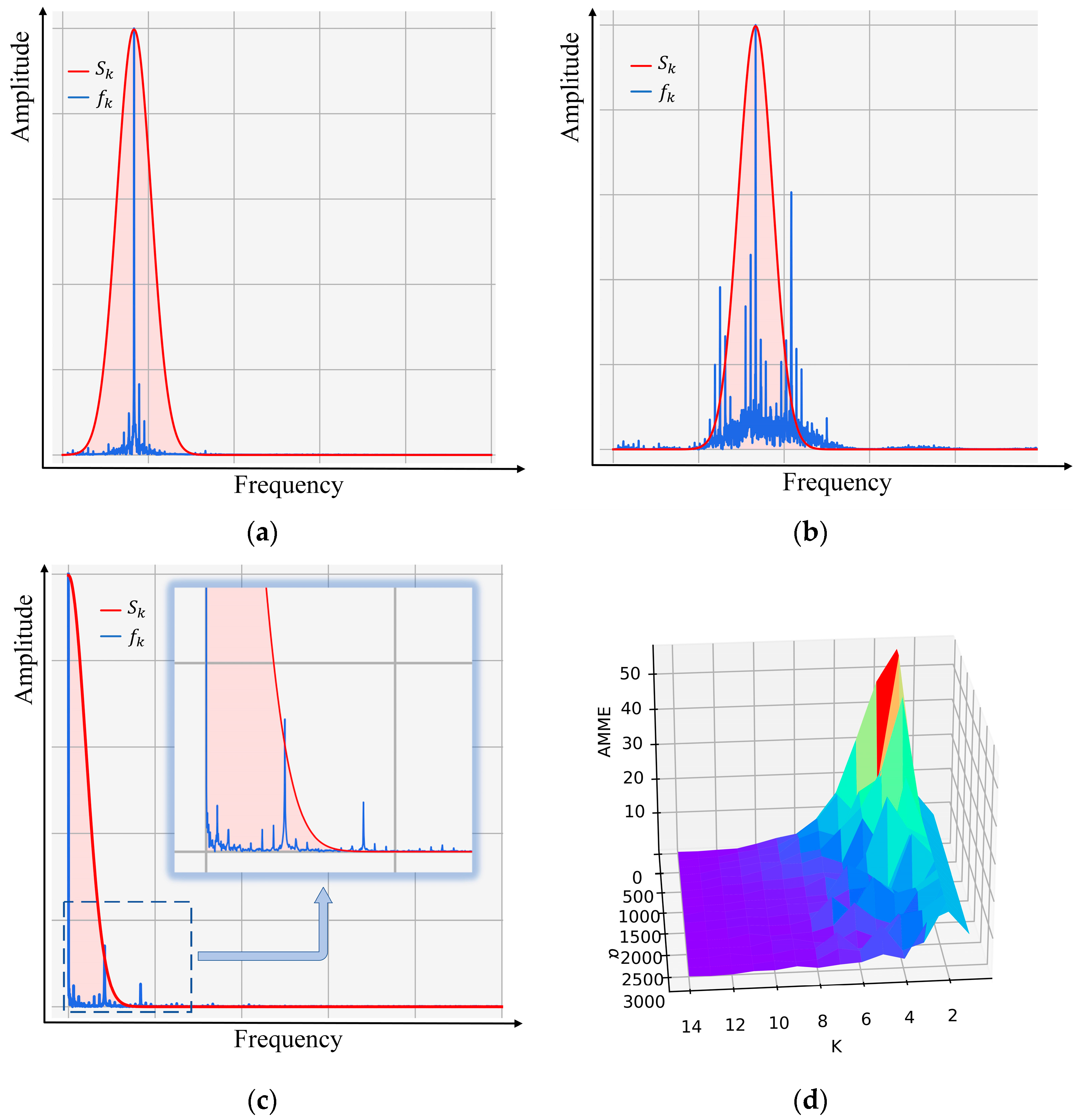

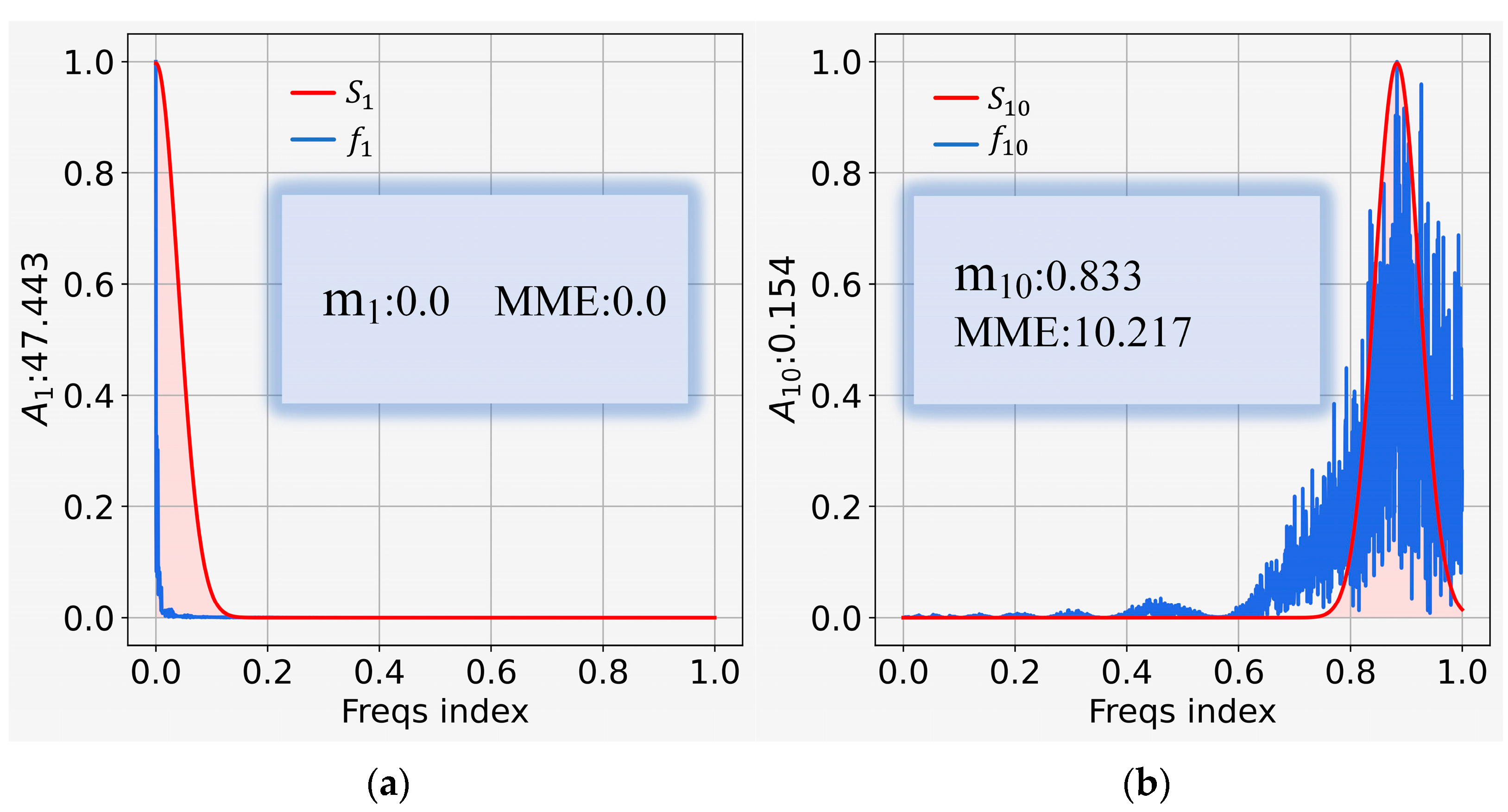

2.2.1. Quantification of VMD Decomposition Effect

2.2.2. Selection of Optimal Parameter Combination

2.3. MtemGNN

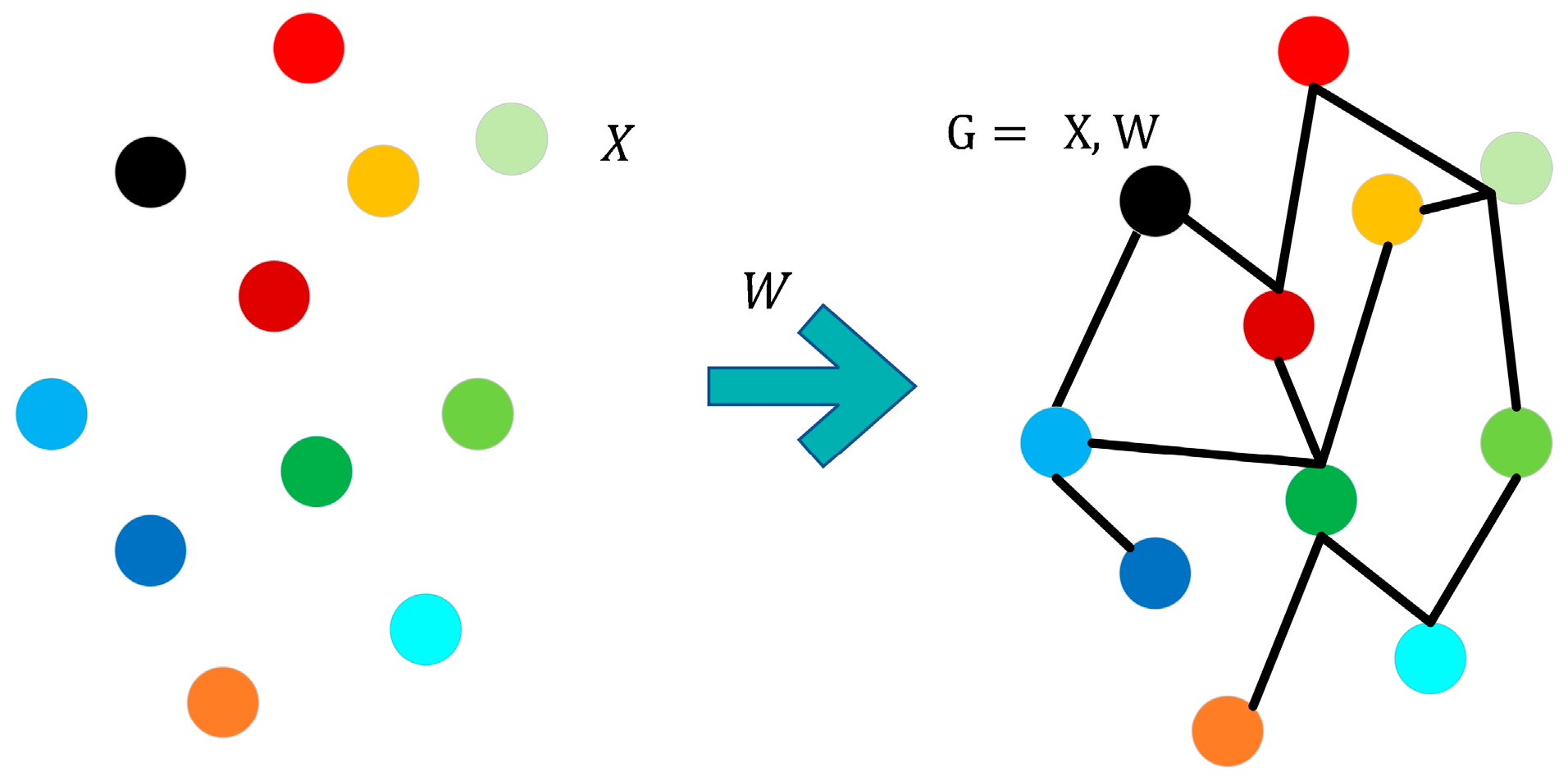

2.3.1. Latent Correlation Layer

2.3.2. GCN Layer

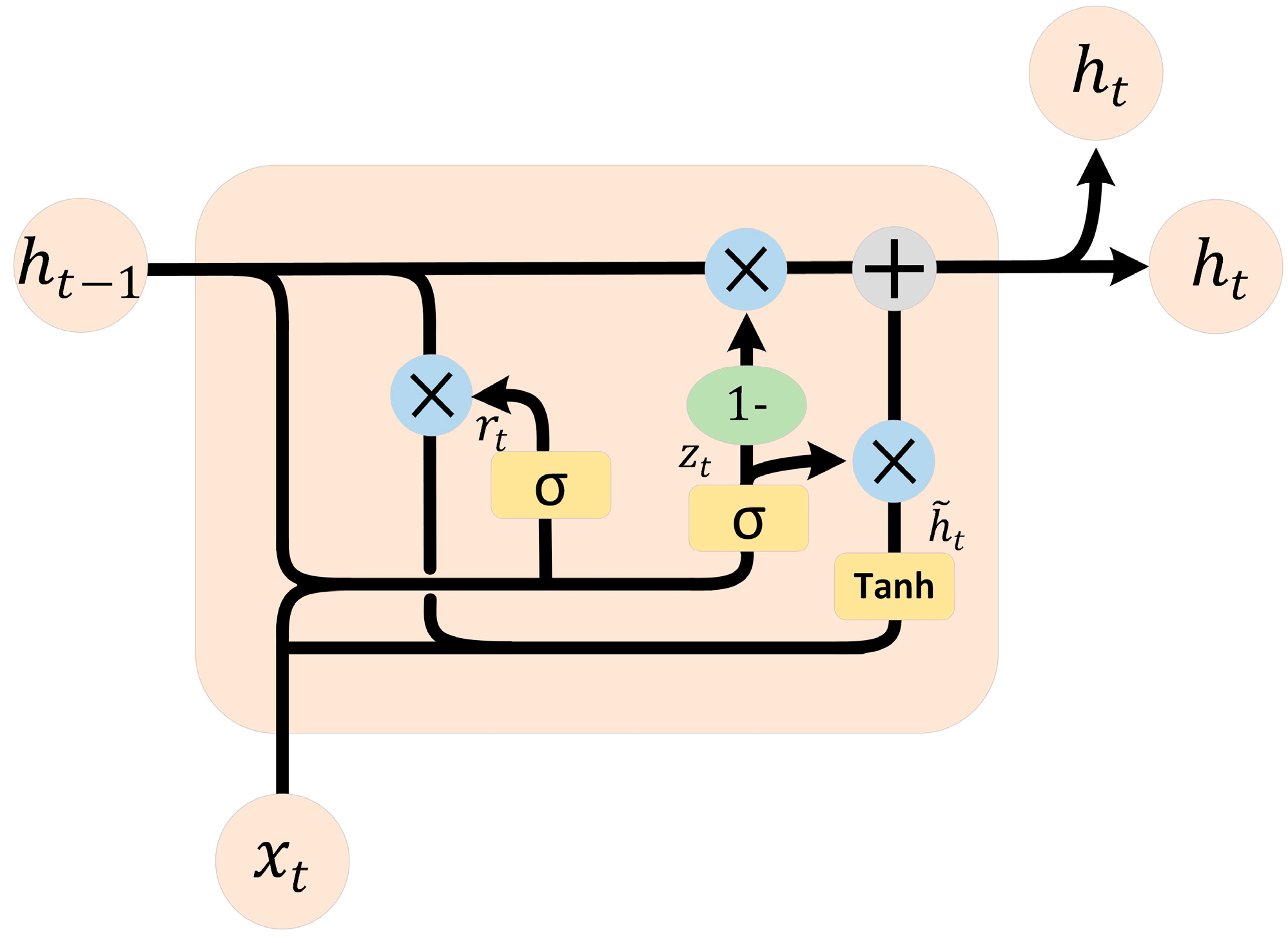

2.4. GRU

3. Experimental Results

3.1. Settings

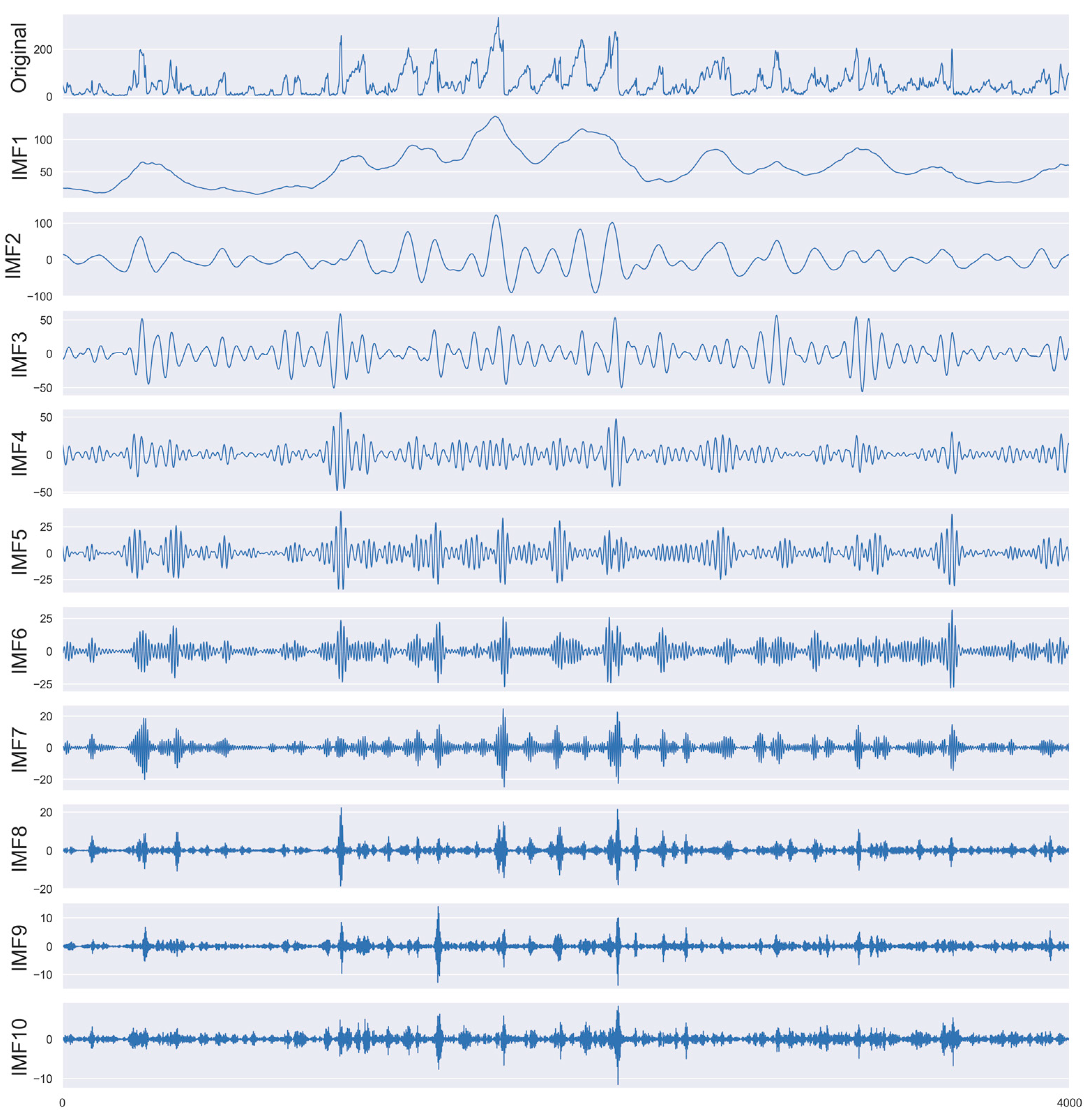

3.2. AVMD Performance

3.3. Performance Evaluation Indices

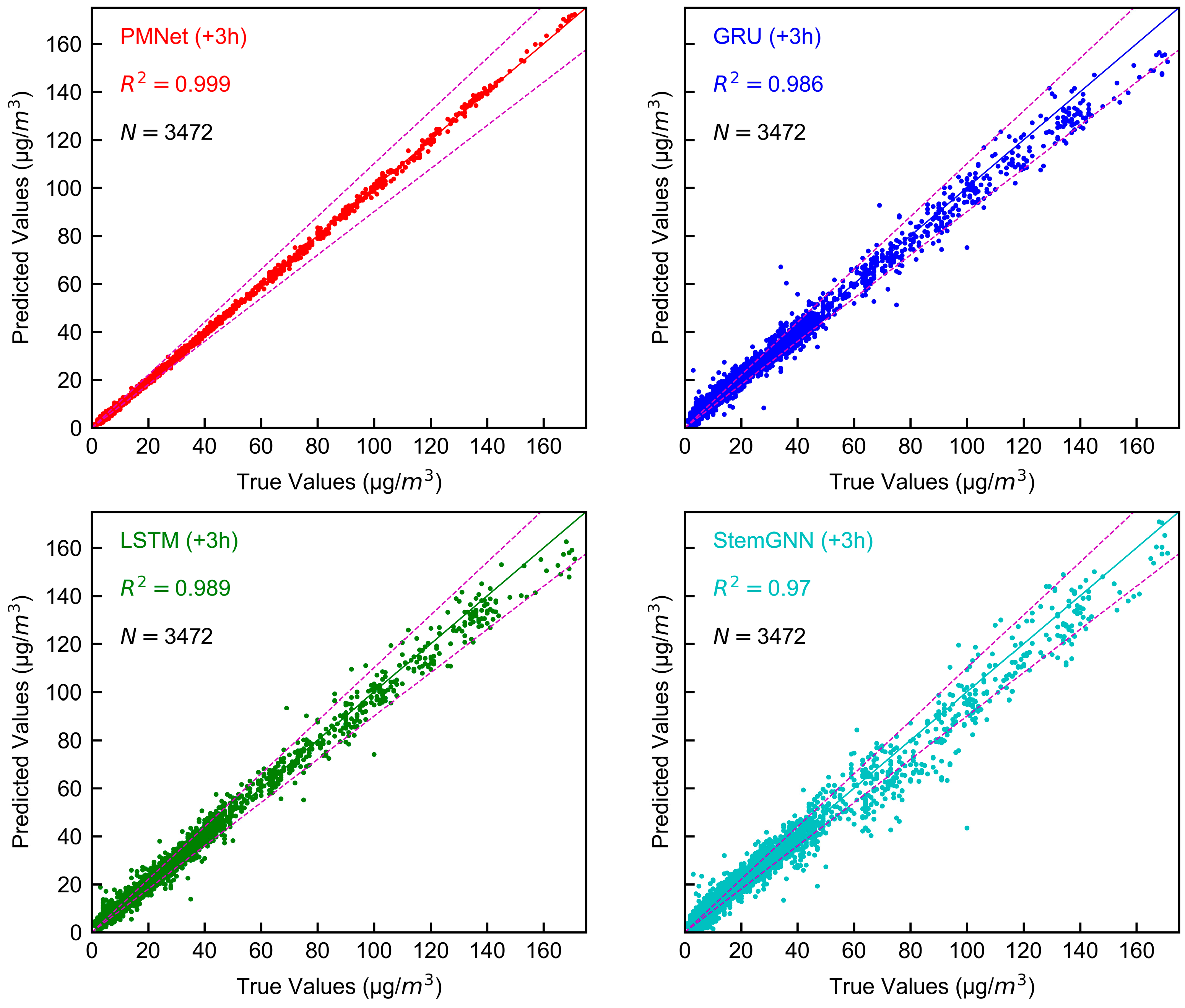

3.4. Comparison of the PMNet with Other Prediction Methods

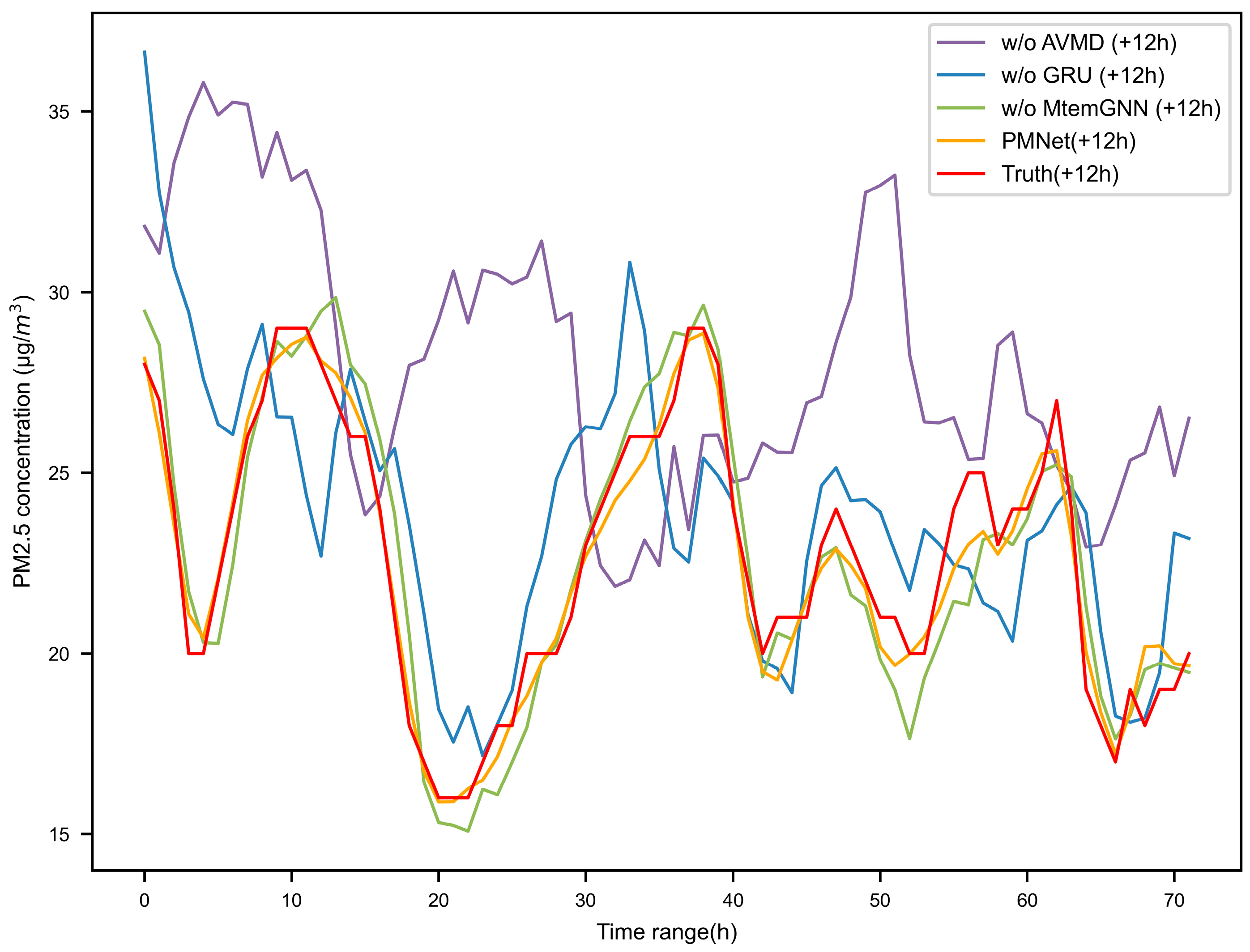

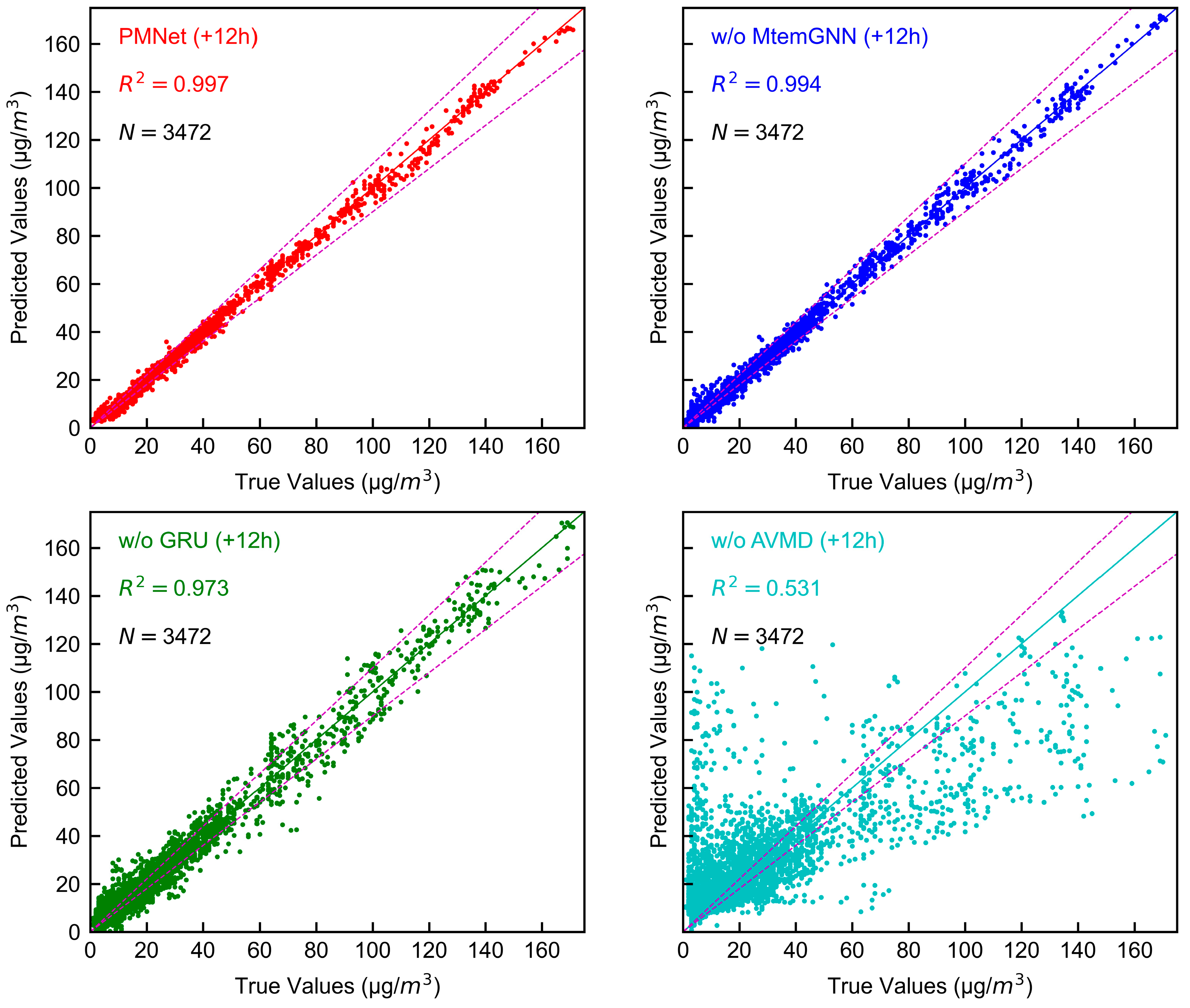

3.5. Ablation Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. VMD

Appendix B. Submodularity and Supermodularity

Appendix C. The Architecture of GRU

Appendix D. Visualization of Mode Mixing for the Remaining IMFs

References

- WHO. Available online: https://www.who.int/health-topics/air-pollution#tab=tab_1 (accessed on 18 September 2022).

- Meo, S.A.; Almutairi, F.J.; Abukhalaf, A.A.; Alessa, O.M.; Al-Khlaiwi, T.; Meo, A.S. Sandstorm and its effect on particulate matter PM 2.5, carbon monoxide, nitrogen dioxide, ozone pollutants and SARS-CoV-2 cases and deaths. Sci. Total Environ. 2021, 795, 148764.

- Yang, B.-Y.; Qian, Z.M.; Li, S.; Fan, S.; Chen, G.; Syberg, K.M.; Xian, H.; Wang, S.-Q.; Ma, H.; Chen, D.-H.; et al. Long-term exposure to ambient air pollution (including PM1) and metabolic syndrome: The 33 Communities Chinese Health Study (33CCHS). Environ. Res. 2018, 164, 204–211.

- Schneider, R.; Vicedo-Cabrera, A.; Sera, F.; Masselot, P.; Stafoggia, M.; de Hoogh, K.; Kloog, I.; Reis, S.; Vieno, M.; Gasparrini, A. A Satellite-Based Spatio-Temporal Machine Learning Model to Reconstruct Daily PM2.5 Concentrations across Great Britain. Remote Sens. 2020, 12, 3803.

- Guo, H.; Li, W.; Wu, J. Ambient PM2.5 and Annual Lung Cancer Incidence: A Nationwide Study in 295 Chinese Counties. Int. J. Environ. Res. Public Health 2020, 17, 1481.

- Aldegunde, J.A.Á.; Sánchez, A.F.; Saba, M.; Bolaños, E.Q.; Caraballo, L.R. Spatiotemporal Analysis of PM2.5 Concentrations on the Incidence of Childhood Asthma in Developing Countries: Case Study of Cartagena de Indias, Colombia. Atmosphere 2022, 13, 1383.

- Cao, X.; Shen, L.; Wu, S.; Yan, C.; Zhou, Y.; Xiong, G.; Wang, Y.; Liu, Y.; Liu, B.; Tang, X.; et al. Urban fine particulate matter exposure causes male reproductive injury through destroying blood-testis barrier (BTB) integrity. Toxicol. Lett. 2017, 266, 1–12.

- Wei, Y.; Cao, X.-N.; Tang, X.-L.; Shen, L.-J.; Lin, T.; He, D.-W.; Wu, S.-D.; Wei, G.-H. Urban fine particulate matter (PM2.5) exposure destroys blood–testis barrier (BTB) integrity through excessive ROS-mediated autophagy. Toxicol. Mech. Methods 2018, 28, 302–319.

- Coccia, M. How do low wind speeds and high levels of air pollution support the spread of COVID-19? Atmos. Pollut. Res. 2021, 12, 437–445.

- Namdar-Khojasteh, D.; Yeghaneh, B.; Maher, A.; Namdar-Khojasteh, F.; Tu, J. Assessment of the relationship between exposure to air pollutants and COVID-19 pandemic in Tehran city, Iran. Atmos. Pollut. Res. 2022, 13, 101474.

- van Beest, M.R.R.S.; Arpino, F.; Hlinka, O.; Sauret, E.; van Beest, N.R.T.P.; Humphries, R.S.; Buonanno, G.; Morawska, L.; Governatori, G.; Motta, N. Influence of indoor airflow on particle spread of a single breath and cough in enclosures: Does opening a window really ‘help’? Atmos. Pollut. Res. 2022, 13, 101473.

- Setti, L.; Passarini, F.; De Gennaro, G.; Barbieri, P.; Perrone, M.G.; Borelli, M.; Palmisani, J.; Di Gilio, A.; Torboli, V.; Fontana, F.; et al. SARS-Cov-2RNA found on particulate matter of Bergamo in Northern Italy: First evidence. Environ. Res. 2020, 188, 109754.

- Soh, P.-W.; Chang, J.-W.; Huang, J.-W. Adaptive Deep Learning-Based Air Quality Prediction Model Using the Most Relevant Spatial-Temporal Relations. IEEE Access 2018, 6, 38186–38199.

- Zhou, Y.; Chang, F.-J.; Chang, L.-C.; Kao, I.-F.; Wang, Y.-S. Explore a deep learning multi-output neural network for regional multi-step-ahead air quality forecasts. J. Clean. Prod. 2019, 209, 134–145.

- Woody, M.C.; Wong, H.-W.; West, J.J.; Arunachalam, S. Multiscale predictions of aviation-attributable PM2.5 for U.S. airports modeled using CMAQ with plume-in-grid and an aircraft-specific 1-D emission model. Atmos. Environ. 2016, 147, 384–394.

- Zhou, G.; Xu, J.; Xie, Y.; Chang, L.; Gao, W.; Gu, Y.; Zhou, J. Numerical air quality forecasting over eastern China: An operational application of WRF-Chem. Atmos. Environ. 2017, 153, 94–108.

- Yu, S.; Mathur, R.; Schere, K.; Kang, D.; Pleim, J.; Young, J.; Tong, D.; Pouliot, G.; McKeen, S.A.; Rao, S.T. Evaluation of real-time PM 2.5 forecasts and process analysis for PM 2.5 formation over the eastern United States using the Eta-CMAQ forecast model during the 2004 ICARTT study. J. Geophys. Res. 2008, 113, D06204.

- Cobourn, W.G. An enhanced PM2.5 air quality forecast model based on nonlinear regression and back-trajectory concentrations. Atmos. Environ. 2010, 44, 3015–3023.

- Aldegunde, J.A.Á.; Sánchez, A.F.; Saba, M.; Bolaños, E.Q.; Palenque, J.Ú. Analysis of PM2.5 and Meteorological Variables Using Enhanced Geospatial Techniques in Developing Countries: A Case Study of Cartagena de Indias City (Colombia). Atmosphere 2022, 13, 506.

- Zhang, J.; Ding, W. Prediction of Air Pollutants Concentration Based on an Extreme Learning Machine: The Case of Hong Kong. Int. J. Environ. Res. Public Health 2017, 14, 114.

- Li, X.; Peng, L.; Yao, X.; Cui, S.; Hu, Y.; You, C.; Chi, T. Long short-term memory neural network for air pollutant concentration predictions: Method development and evaluation. Environ. Pollut. 2017, 231, 997–1004.

- Kristiani, E.; Lin, H.; Lin, J.R.; Chuang, Y.H.; Huang, C.Y.; Yang, C.T. Short-Term Prediction of PM2.5 Using LSTM Deep Learning Methods. Sustainability 2022, 14, 2068.

- Zhou, X.; Xu, J.; Zeng, P.; Meng, X. Air Pollutant Concentration Prediction Based on GRU Method. J. Phys. Conf. Ser. 2019, 1168, 032058.

- Gocheva-Ilieva, S.; Ivanov, A.; Stoimenova-Minova, M. Prediction of Daily Mean PM10 Concentrations Using Random Forest, CART Ensemble and Bagging Stacked by MARS. Sustainability 2022, 14, 798.

- Zhao, J.; Yuan, L.; Sun, K.; Huang, H.; Guan, P.; Jia, C. Forecasting Fine Particulate Matter Concentrations by In-Depth Learning Model According to Random Forest and Bilateral Long- and Short-Term Memory Neural Networks. Sustainability 2022, 14, 9430.

- Huang, C.-J.; Kuo, P.-H. A Deep CNN-LSTM Model for Particulate Matter (PM2.5) Forecasting in Smart Cities. Sensors 2018, 18, 2220.

- Pak, U.; Ma, J.; Ryu, U.; Ryom, K.; Juhyok, U.; Pak, K.; Pak, C. Deep learning-based PM2.5 prediction considering the spatiotemporal correlations: A case study of Beijing, China. Sci. Total Environ. 2020, 699, 133561.

- Cao, D.; Wang, Y.; Duan, J.; Zhang, C.; Zhu, X.; Huang, C.; Tong, Y.; Xu, B.; Bai, J.; Tong, J.; et al. Spectral Temporal Graph Neural Network for Multivariate Time-series Forecasting. Adv. Neural Inf. Process. Syst. 2021, 33, 17766–17778.

- Zhu, S.; Lian, X.; Liu, H.; Hu, J.; Wang, Y.; Che, J. Daily air quality index forecasting with hybrid models: A case in China. Environ. Pollut. 2017, 231, 1232–1244.

- Huang, G.; Li, X.; Zhang, B.; Ren, J. PM2.5 concentration forecasting at surface monitoring sites using GRU neural network based on empirical mode decomposition. Sci. Total Environ. 2021, 768, 144516.

- Li, G.; Chen, L.; Yang, H. Prediction of PM2.5 concentration based on improved secondary decomposition and CSA-KELM. Atmos. Pollut. Res. 2022, 13, 101455.

- Zheng, G.; Liu, H.; Yu, C.; Li, Y.; Cao, Z. A new PM2.5 forecasting model based on data preprocessing, reinforcement learning and gated recurrent unit network. Atmos. Pollut. Res. 2022, 13, 101475.

- Shen, Y.; Ma, Y.; Deng, S.; Huang, C.-J.; Kuo, P.-H. An Ensemble Model based on Deep Learning and Data Preprocessing for Short-Term Electrical Load Forecasting. Sustainability 2021, 13, 1694.

- Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

- Hu, W.; Jin, J.; Liu, T.-Y.; Zhang, C. Automatically Design Convolutional Neural Networks by Optimization With Submodularity and Supermodularity. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 3215–3229.

- Shepard, D. Two-dimensional interpolation function for irregularly-spaced data. In Proceedings of the 1968 23rd ACM National Conference, online, 1 January 1968; pp. 517–524.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The Graph Neural Network Model. IEEE Trans. Neural Netw. 2009, 20, 61–80.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017; pp. 1–14.

- Oreshkin, B.N.; Carpov, D.; Chapados, N.; Bengio, Y. N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. arXiv 2019, arXiv:1905.10437.

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. Adv. Neural Inf. Process. Syst. 2016, 29, 3844–3852.

- Ando, R.K.; Zhang, T. Learning on Graph with Laplacian Regularization. In Advances in Neural Information Processing Systems 19; The MIT Press: Cambridge, MA, USA, 2007; pp. 25–32. ISBN 9780262195683.

- Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder–Decoder Approaches. In Proceedings of the SSST-8, Eighth Workshop on Syntax, Semantics and Structure in Statistical Translation, Doha, Qatar, 25 October 2014; pp. 103–111.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Huang, C.-J.; Shen, Y.; Chen, Y.; Chen, H. A novel hybrid deep neural network model for short-term electricity price forecasting. Int. J. Energy Res. 2021, 45, 2511–2532.

- Sun, N.; Zhou, J.; Chen, L.; Jia, B.; Tayyab, M.; Peng, T. An adaptive dynamic short-term wind speed forecasting model using secondary decomposition and an improved regularized extreme learning machine. Energy 2018, 165, 939–957.

- Wang, J.; Zhang, Y.; Zhang, F.; Li, W.; Lv, S.; Jiang, M.; Jia, L. Accuracy-improved bearing fault diagnosis method based on AVMD theory and AWPSO-ELM model. Measurement 2021, 181, 109666.

- Yang, H.; Wang, C.; Li, G. A new combination model using decomposition ensemble framework and error correction technique for forecasting hourly PM2.5 concentration. J. Environ. Manag. 2022, 318, 115498.

- Gao, X.; Li, W. A graph-based LSTM model for PM2.5 forecasting. Atmos. Pollut. Res. 2021, 12, 101150.

- Zhu, M.; Xie, J. Investigation of nearby monitoring station for hourly PM2.5 forecasting using parallel multi-input 1D-CNN-biLSTM. Expert Syst. Appl. 2023, 211, 118707.

- Lei, T.M.T.; Siu, S.W.I.; Monjardino, J.; Mendes, L.; Ferreira, F. Using Machine Learning Methods to Forecast Air Quality: A Case Study in Macao. Atmosphere 2022, 13, 1412.

- Ho, C.H.; Park, I.; Kim, J.; Lee, J.B. PM2.5 Forecast in Korea using the Long Short-Term Memory (LSTM) Model. Asia-Pac. J. Atmos. Sci. 2022, 1, 1–14.

- Yeo, I.; Choi, Y.; Lops, Y.; Sayeed, A. Efficient PM2.5 forecasting using geographical correlation based on integrated deep learning algorithms. Neural Comput. Appl. 2021, 33, 15073–15089.

- Unser, M.; Sage, D.; Van De Ville, D. Multiresolution Monogenic Signal Analysis Using the Riesz–Laplace Wavelet Transform. IEEE Trans. Image Process. 2009, 18, 2402–2418.

- Rockafellar, R.T. A dual approach to solving nonlinear programming problems by unconstrained optimization. Math. Program. 1973, 5, 354–373.

| Parameter Name | Value |
|---|---|
| Initial learning rate | 0.001 |
| Epochs | 150 |
| Batch size | 24 |
| Loss function | Mean squared error (MSEloss) |
| Optimizer | Root Mean Square Propagation (RMSProp) |
| Window size | 24 h |
| Forecast horizon | 12 h |
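As a minimal sketch of how the settings in this table could be carried into code, the block below stores them in a small config object and slices an hourly series into input and target windows. The names `TrainConfig` and `make_windows`, the stride of one hour, and the placeholder series are assumptions made for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Values copied from the settings table; the field names are hypothetical."""
    learning_rate: float = 0.001
    epochs: int = 150
    batch_size: int = 24
    loss: str = "mse"
    optimizer: str = "rmsprop"
    window_size: int = 24       # hours of history per input sample
    forecast_horizon: int = 12  # hours to predict per sample


def make_windows(series, window_size, horizon):
    """Slice a series into (input, target) pairs, moving one step at a time (assumed stride)."""
    pairs = []
    last_start = len(series) - window_size - horizon
    for start in range(last_start + 1):
        x = series[start:start + window_size]
        y = series[start + window_size:start + window_size + horizon]
        pairs.append((x, y))
    return pairs


cfg = TrainConfig()
hourly = list(range(48))  # placeholder readings, not real PM2.5 data
samples = make_windows(hourly, cfg.window_size, cfg.forecast_horizon)
print(len(samples), len(samples[0][0]), len(samples[0][1]))
```

With 48 hourly readings, each sample pairs 24 h of history with the following 12 h, so only the first 13 start positions yield complete pairs.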

| Methods | Metric | +1 h | +2 h | +3 h | Average |
|---|---|---|---|---|---|
| StemGNN | MAPE (%) | 16.126 | 22.167 | 30.212 | 22.835 |
| StemGNN | MAE | 2.014 | 3.268 | 4.726 | 3.336 |
| StemGNN | RMSE | 2.917 | 5.228 | 7.808 | 5.681 |
| LSTM | MAPE (%) | 11.991 | 19.332 | 24.618 | 18.647 |
| LSTM | MAE | 1.728 | 2.755 | 3.911 | 2.798 |
| LSTM | RMSE | 2.598 | 4.811 | 7.001 | 5.129 |
| GRU | MAPE (%) | 12.594 | 17.513 | 25.688 | 18.598 |
| GRU | MAE | 1.802 | 2.805 | 4.059 | 2.889 |
| GRU | RMSE | 2.790 | 4.697 | 6.990 | 5.122 |
| PMNet | MAPE (%) | 5.401 | 5.112 | 5.103 | 5.205 |
| PMNet | MAE | 0.558 | 0.646 | 0.666 | 0.623 |
| PMNet | RMSE | 0.731 | 0.855 | 0.863 | 0.816 |
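The three indices reported in this table can be sketched as follows, assuming the standard textbook definitions of MAE, RMSE, and MAPE; the paper's own formulas are not reproduced in the text here. The function names and the sample values are illustrative only and are not measurements from the study.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error in percent; assumes no actual value is zero."""
    ratios = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * ratios / len(actual)


# Made-up values for illustration only.
actual = [10.0, 20.0, 40.0]
predicted = [12.0, 18.0, 44.0]
print(mae(actual, predicted), rmse(actual, predicted), mape(actual, predicted))
```

Because MAPE divides by the observed value, it becomes unstable when concentrations approach zero, which is one reason MAE and RMSE are usually reported alongside it.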

| Methods | MAPE (%) | MAE | RMSE |
|---|---|---|---|
| PMNet | 7.348 | 0.834 | 1.201 |
| w/o MtemGNN | 11.909 | 1.403 | 1.978 |
| w/o GRU | 26.020 | 3.168 | 4.387 |
| w/o AVMD | 61.711 | 8.459 | 14.454 |
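One way to read the ablation table is as the relative error increase of each reduced variant over the full PMNet. The sketch below computes that percentage from the tabulated values; the helper `relative_increase` is a hypothetical name, and this derived measure is an illustration rather than a quantity the paper is known to report.

```python
# Scores copied from the ablation table above.
ablation = {
    "PMNet": {"MAPE": 7.348, "MAE": 0.834, "RMSE": 1.201},
    "w/o MtemGNN": {"MAPE": 11.909, "MAE": 1.403, "RMSE": 1.978},
    "w/o GRU": {"MAPE": 26.020, "MAE": 3.168, "RMSE": 4.387},
    "w/o AVMD": {"MAPE": 61.711, "MAE": 8.459, "RMSE": 14.454},
}


def relative_increase(baseline, variant):
    """Percentage by which each metric of `variant` exceeds `baseline`."""
    return {
        metric: 100.0 * (variant[metric] - baseline[metric]) / baseline[metric]
        for metric in baseline
    }


full = ablation["PMNet"]
increases = {
    name: relative_increase(full, scores)
    for name, scores in ablation.items()
    if name != "PMNet"
}
for name, deltas in increases.items():
    print(name, {metric: round(value, 1) for metric, value in deltas.items()})
```

On every metric the increase is smallest without MtemGNN and largest without AVMD, which matches the ordering of the rows in the table.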

| Authors and Ref. | Forecast Horizon | Data Sources | Outcome | Algorithms |
|---|---|---|---|---|
| Huang et al. [30] | One hour | Beijing | RMSE = 11.372 | EMD-GRU |
| Li et al. [31] | One day | Beijing | RMSE = 1.2289 | CEEMDAN-DSE-BVMD-CSA-KELM 1 |
| Yang et al. [48] | One hour | Xi’an | RMSE = 0.8909 | AIVMD-RBF-IOWA-LSTM-EC 2 |
| Gao et al. [49] | One hour | Gansu | RMSE = 3.405 | Graph-based Long Short-Term Memory (GLSTM) |
| Zhu et al. [50] | One hour | Shanghai | RMSE = 4.2489 | 1D-CNN-biLSTM |
| Lei et al. [51] | One hour | Macao | RMSE = 3.72 | Random forest (RF) |
| Ho et al. [52] | One day | Korea | RMSE = 8.2 | LSTM |
| Yeo et al. [53] | One hour | Seoul | RMSE = 7.962 | CNN-GRU |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pei, Y.; Huang, C.-J.; Shen, Y.; Ma, Y. An Ensemble Model with Adaptive Variational Mode Decomposition and Multivariate Temporal Graph Neural Network for PM2.5 Concentration Forecasting. Sustainability 2022, 14, 13191. https://doi.org/10.3390/su142013191