2.1. The Shifting Perspective on Education

There are four key elements of education: learners, teachers, content, and contexts [13]. Learners can gather information by themselves or collaborate with their peers to sustain and improve their knowledge [14]. Teachers can supervise learners and help them acquire knowledge correctly. Furthermore, teachers can deliver content to learners using a suitable learning approach [15]. However, most teachers lack the time to teach learners in depth [16]. Previous studies have demonstrated that technology can help teachers overcome these time limitations [17,18].

These studies applied technology to support educational settings because the technology has become more mature and reliable. For example, recognition technologies, such as AI-supported speech recognition, could allow learners to practice pronouncing English texts independently [18]. Another study suggested that learners could learn geometry meaningfully in their surroundings, which serve as authentic learning contexts, through AI-based recognition of geometric objects [19]. These studies also demonstrated that applying technology in education can sustain learners' motivation and enhance learning achievement [18,19].
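To make the pronunciation-practice example more concrete, one common way to score a learner's attempt is to compare the speech recognizer's transcript with the target text using the word error rate (WER). The sketch below assumes that a transcript is already available from some speech recognition service. It illustrates the general idea only and is not the method used in [18].

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # dp[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,         # deletion
                           dp[i][j - 1] + 1,         # insertion
                           dp[i - 1][j - 1] + cost)  # substitution or match
    return dp[len(ref)][len(hyp)] / max(len(ref), 1)


target = "the cat sat on the mat"
recognized = "the cat sat on a mat"  # hypothetical speech recognizer output
print(f"WER = {word_error_rate(target, recognized):.2f}")  # WER = 0.17
```

A lower WER means the recognized speech is closer to the target text. A practice application could then highlight the mismatched words for the learner.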

Recently, researchers have used deep learning to train NLP models on large datasets and improve their recognition accuracy [20]. As a result, NLP models can understand human language better based on the knowledge they acquire. For example, GPT-3, a large language model with 175 billion parameters, can take human language as input and generate new text based on its knowledge [21]. This mechanism resembles an educational setting, in which a teacher educates learners and their knowledge gradually increases. Lu et al. [22] used GPT-2 in a question-answering (Q&A) system both to author questions and to grade answers. They first trained the model to suit their purpose and then used it to generate short questions for learners. Later, they used it to grade the students' answers. This approach could help teachers prepare questions and grade students' answers. In this case, the AI itself also learns and gains knowledge. Moreover, AI could act like a teacher, helping humans and others become more knowledgeable. The next subsection describes how education could accommodate not only humans but also all things.
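Lu et al. [22] graded answers with a trained GPT-2 model. The sketch below uses a much simpler technique in its place to show what an automatic grader has to decide. It scores a student's short answer against a teacher's reference answer using token-overlap F1, the metric popularized by the SQuAD benchmark. The 0.5 threshold and the `grade` helper are hypothetical.

```python
from collections import Counter


def token_f1(reference: str, answer: str) -> float:
    """Harmonic mean of token precision and recall (SQuAD-style F1)."""
    ref_tokens = reference.lower().split()
    ans_tokens = answer.lower().split()
    # Multiset intersection: each shared token counts at most min(count) times.
    overlap = sum((Counter(ref_tokens) & Counter(ans_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(ans_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def grade(reference: str, answer: str, threshold: float = 0.5) -> str:
    # Hypothetical rule: answers at or above the threshold count as correct.
    return "correct" if token_f1(reference, answer) >= threshold else "needs review"


print(grade("water boils at 100 degrees celsius", "it boils at 100 degrees"))  # correct
print(grade("photosynthesis produces oxygen", "plants produce oxygen"))        # needs review
```

The second answer is reasonable but scores poorly because "produce" and "produces" do not match. This kind of surface-level limitation is one reason language-model-based graders are attractive.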

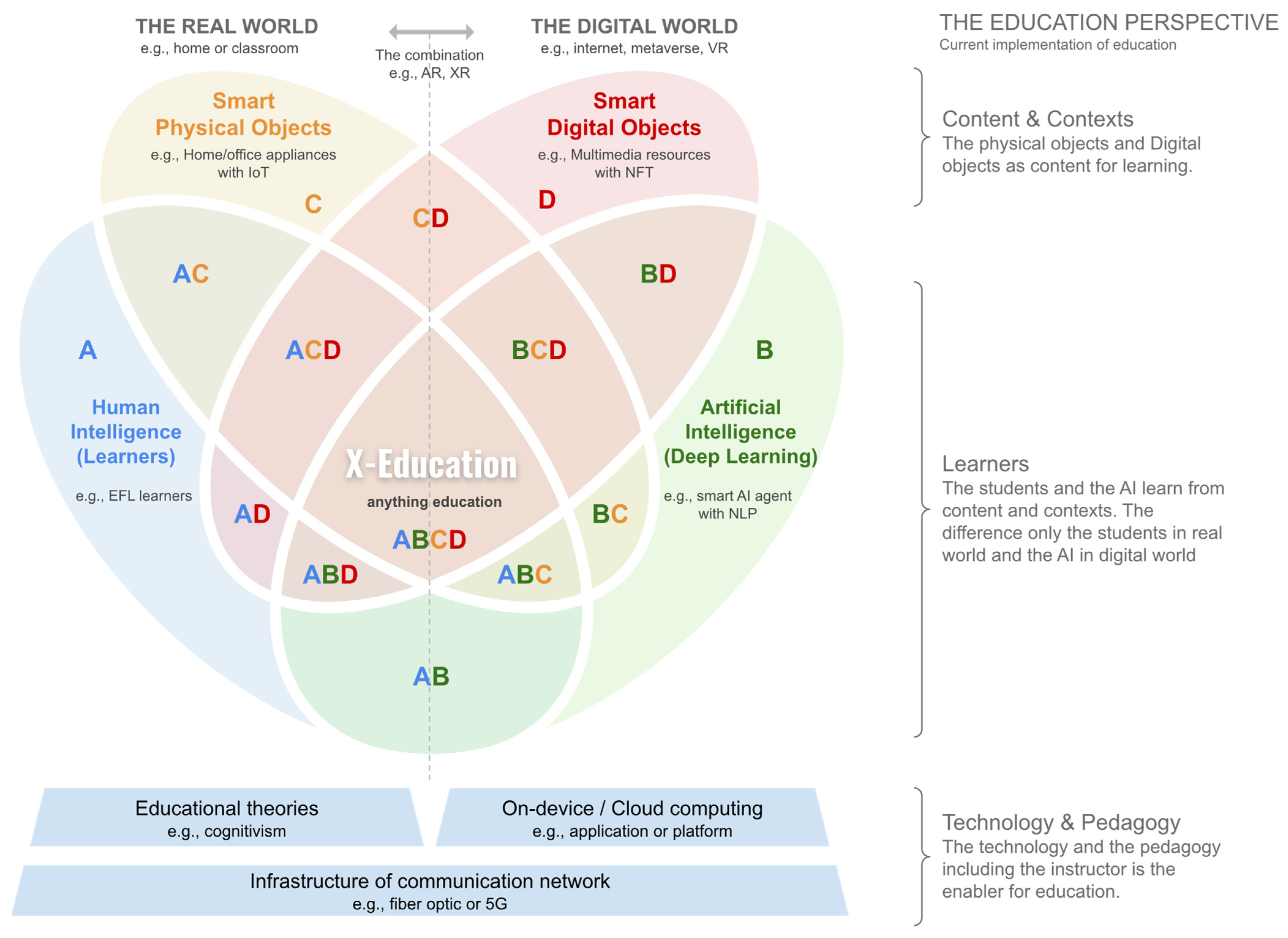

2.2. Education for All Things

Everything in this world can be regarded as a physical object, a digital object, or another form, such as AI. Some physical objects are alive, such as plants, whereas others are inanimate, such as chairs. For example, the IoT consists of physical objects that control their hardware using microprocessors and sensors [23]. However, IoT deployments are usually limited to data collection and hardware management through task scheduling [24]. In addition, IoT devices are often operated remotely through a cloud server, which requires internet connectivity [3]. Because IoT devices have limited computing resources [25], they rely on the computing resources of cloud servers.
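The data-collection and task-scheduling role of IoT devices described above can be pictured as a loop that fires periodic jobs, such as reading a sensor or uploading a batch of readings to the cloud. The sketch below simulates such a scheduler with Python's `heapq` on a simulated clock. The task names, periods, and actions are hypothetical, and real devices often rely on firmware timers or a real-time operating system instead.

```python
import heapq
import itertools
from typing import Callable, List, Tuple

# A task is (name, period in seconds, action); the action receives the simulated time.
Task = Tuple[str, float, Callable[[float], str]]


def run_schedule(tasks: List[Task], horizon: float) -> List[Tuple[float, str, str]]:
    """Simulate periodic IoT tasks on a simulated clock up to `horizon` seconds."""
    seq = itertools.count()  # tie-breaker so equal times never compare functions
    queue = [(0.0, next(seq), name, period, action) for name, period, action in tasks]
    heapq.heapify(queue)
    log = []
    while queue and queue[0][0] <= horizon:
        t, _, name, period, action = heapq.heappop(queue)
        log.append((t, name, action(t)))
        # Re-schedule the same task one period later.
        heapq.heappush(queue, (t + period, next(seq), name, period, action))
    return log


tasks: List[Task] = [
    ("read_temperature", 2.0, lambda t: "sample stored locally"),
    ("upload_batch", 5.0, lambda t: "batch sent to cloud server"),
]
for t, name, msg in run_schedule(tasks, horizon=6.0):
    print(f"t={t:4.1f}s  {name}: {msg}")
```

Such a loop only collects and forwards data. Any language understanding would still happen elsewhere, which is the limitation the following paragraphs address.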

In daily life, most people interact with digital objects, such as pictures, videos, or music, on their mobile devices. Currently, NFTs allow digital objects to have their own identities and single authorship [11]. A digital object uploaded to an NFT marketplace receives a unique address or token [26]. Another form is AI, which can understand the human world through mathematical representations [24]. Unlike physical or digital objects, AI cannot be seen directly, but humans can still benefit from it. However, recent research has shown that AI still has considerable room to improve its ability to help humans in different fields [6,24].
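A toy, in-memory registry can illustrate the idea that a digital object receives a unique token with a single owner. This is only an analogy under stated assumptions. It uses a SHA-256 content hash and sequential integer IDs, and it omits everything that makes a real NFT work, such as a blockchain, smart contracts (for example, ERC-721), and wallet addresses. The class and method names are hypothetical.

```python
import hashlib
from typing import Dict


class ToyTokenRegistry:
    """Hypothetical in-memory stand-in for an NFT marketplace ledger (no blockchain)."""

    def __init__(self) -> None:
        self.owner_of: Dict[int, str] = {}   # token_id -> single owner
        self._by_hash: Dict[str, int] = {}   # content hash -> token_id

    def mint(self, content: bytes, owner: str) -> int:
        digest = hashlib.sha256(content).hexdigest()
        # Rule specific to this toy: the same content cannot be minted twice.
        # Real marketplaces do not necessarily enforce this.
        if digest in self._by_hash:
            raise ValueError("this content already has a token")
        token_id = len(self.owner_of) + 1    # sequential IDs, one simple choice
        self.owner_of[token_id] = owner
        self._by_hash[digest] = token_id
        return token_id


registry = ToyTokenRegistry()
token_id = registry.mint(b"<bytes of a learner's drawing>", owner="alice")
print(token_id, registry.owner_of[token_id])  # 1 alice
```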

Human intelligence, in turn, is the natural form of intelligence that distinguishes humans from other things in this world [6]. Human intelligence also commonly needs to be improved with help from others. For example, EFL learners could improve their English writing skills after being taught by an experienced English teacher [27,28]. However, learners cannot receive help from teachers whenever they need it because learning time in school is limited. AI could overcome this limitation because it is available 24 h a day to help learners, in and out of school, through ubiquitous technologies and cloud services. Several studies have addressed this issue and investigated how AI support can fill the gap in learning time [28,29]. For example, EFL learners could practice writing at any time with the help of AI-based grammar checking. Through these mechanisms, EFL learners could benefit from learning with AI [28].

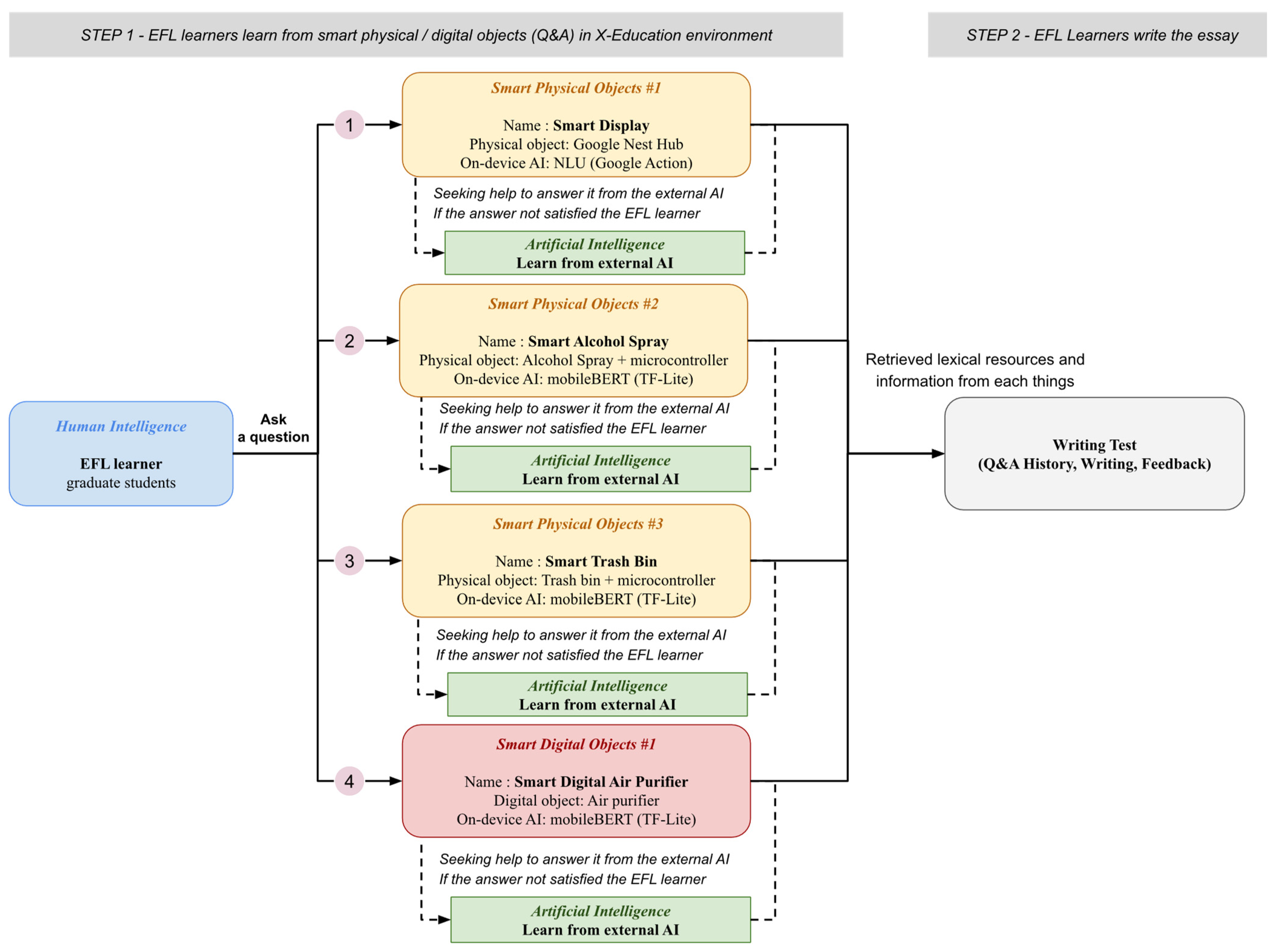

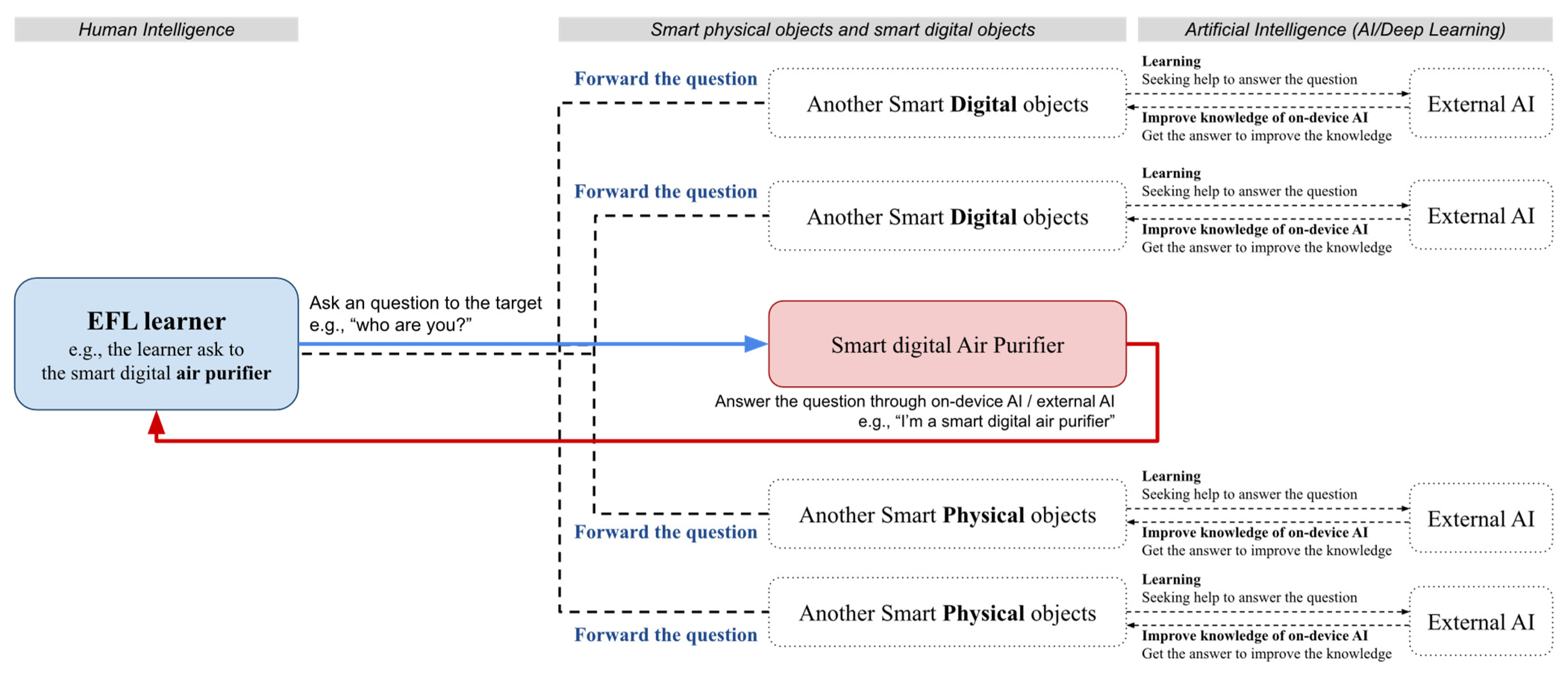

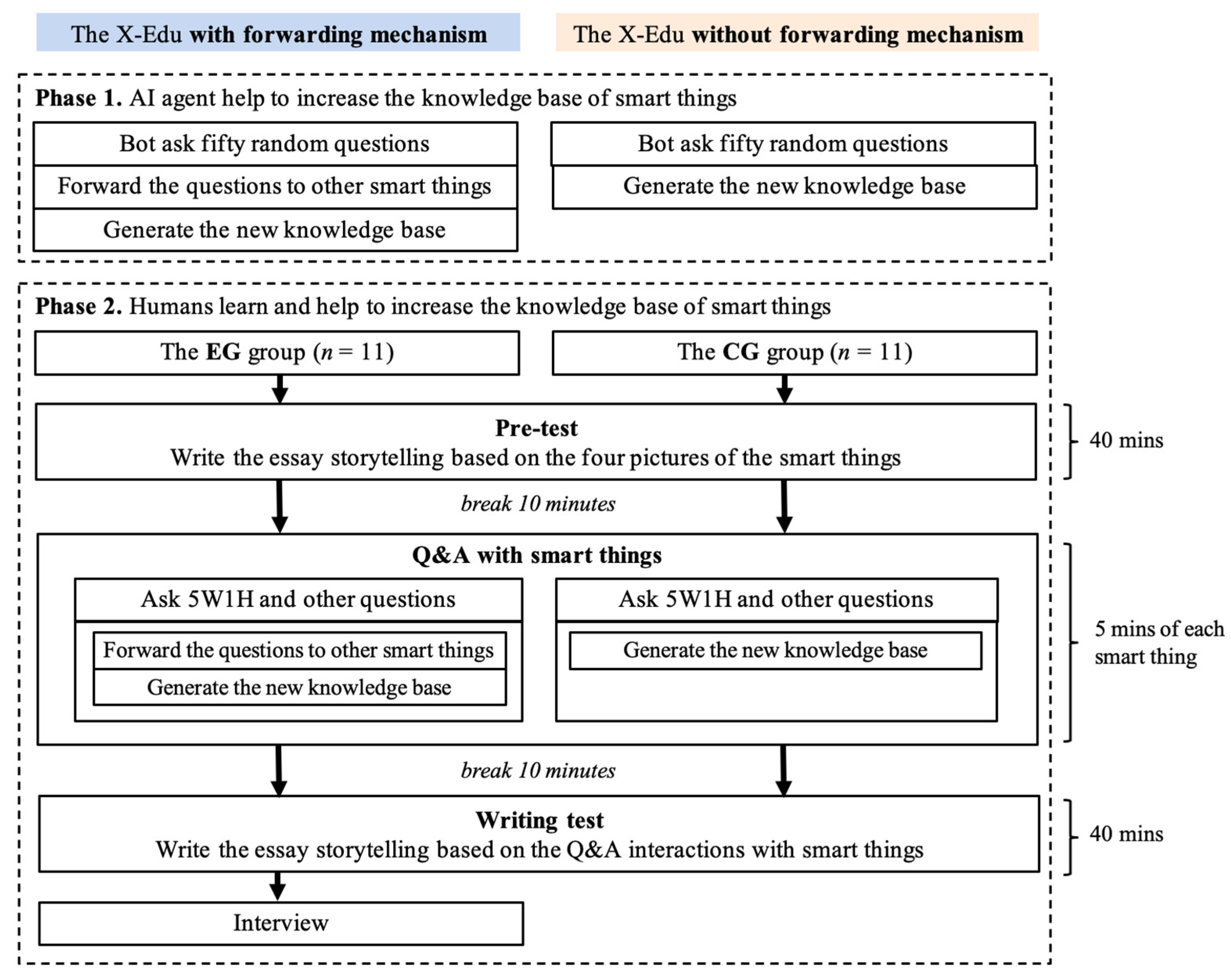

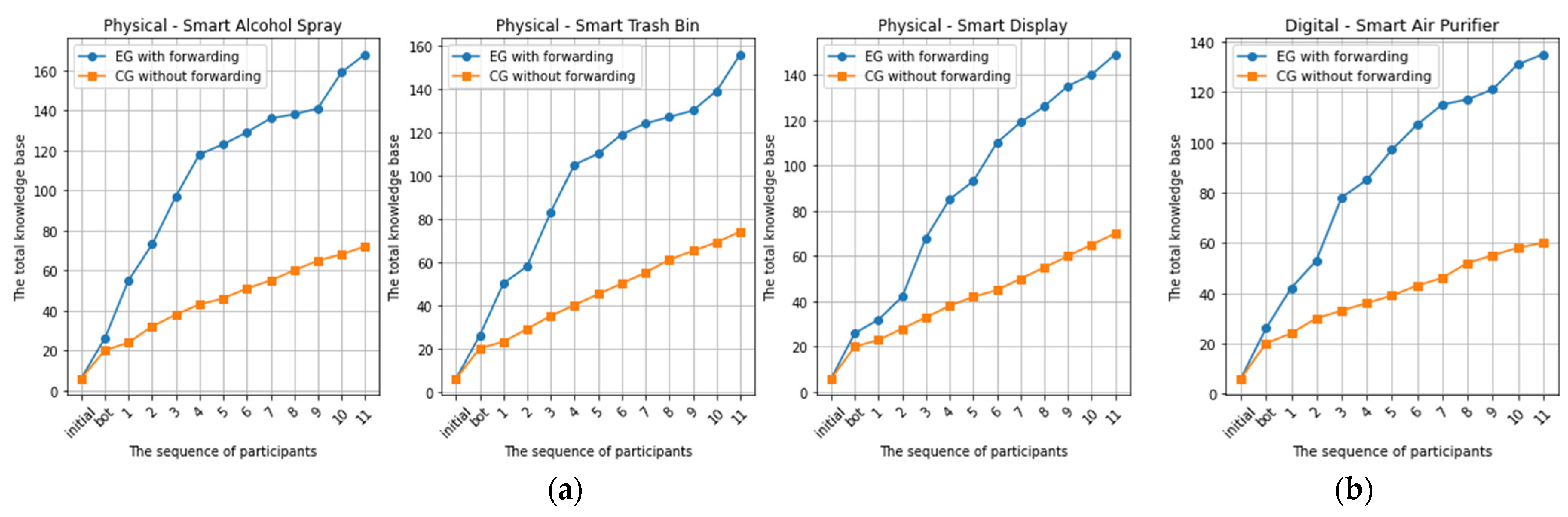

Similarly, this AI mechanism can also be used to teach the IoT, because AI can learn from physical objects and from digital objects such as pictures, thereby increasing its knowledge. In this arrangement, the IoT acts as the learner and the AI acts as the teacher. Meanwhile, all things could connect and learn from each other without time limitations. All things, including people, can learn not only from AI but also from other smart things, such as digital objects. For example, EFL learners could learn from smart physical objects to understand their properties, and physical objects can also learn from digital objects through interaction.

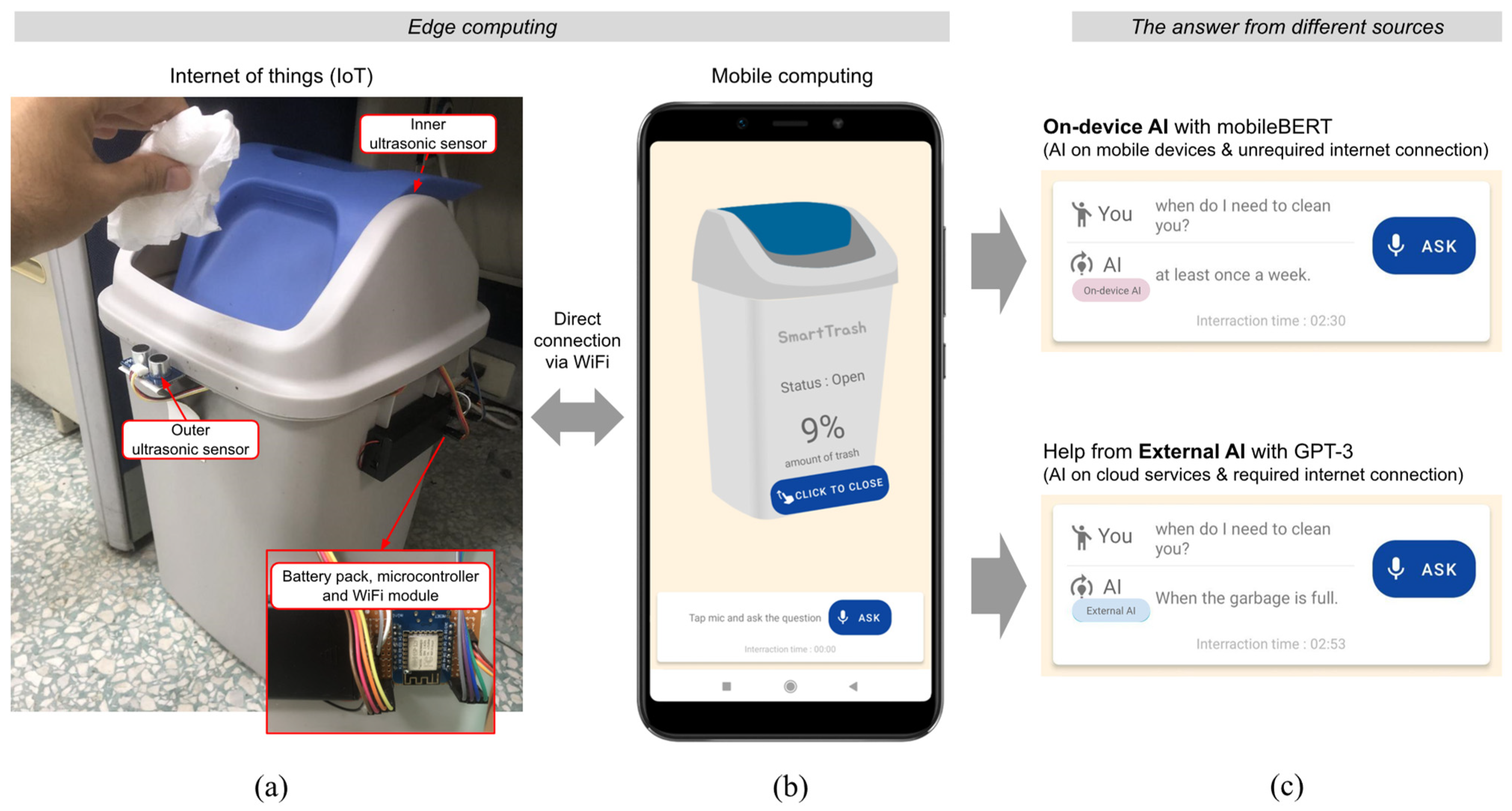

The problem is that physical and digital objects cannot understand human language directly. Moreover, for the IoT to understand human language with NLP, it would need substantial computing resources from a cloud server [30]. Relying on the cloud causes high latency, that is, long waits to receive computational results. To address this problem, previous studies have suggested edge computing, in which computing resources are placed near the user or the IoT device to reduce connection delay [3,7]. Edge computing can also collaborate with mobile computing to share computing resources. Hence, AI, especially NLP, could be deployed on mobile devices to form an edge computing layer that understands human language with low delay, with inference performed on shared edge resources rather than in the cloud [6,7].
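The latency argument can be made concrete with a simple additive model: response time ≈ payload transfer time + network round trip + inference time. All numbers below are hypothetical placeholders, not measurements from [3,7,30]. They only show why a slower edge model can still answer sooner than a faster cloud model when the network round trip dominates.

```python
from dataclasses import dataclass


@dataclass
class ComputeSite:
    name: str
    bandwidth_kbps: float  # link speed between the object and this site
    round_trip_ms: float   # network round-trip time to this site
    inference_ms: float    # time for the NLP model to produce an answer here


def response_time_ms(site: ComputeSite, payload_kb: float) -> float:
    """Additive latency model: upload transfer + round trip + inference."""
    transfer_ms = payload_kb * 8 / site.bandwidth_kbps * 1000  # kB -> kbit -> s -> ms
    return transfer_ms + site.round_trip_ms + site.inference_ms


# Hypothetical placeholder values, not measurements.
cloud = ComputeSite("cloud", bandwidth_kbps=1_000, round_trip_ms=120, inference_ms=20)
edge = ComputeSite("edge", bandwidth_kbps=50_000, round_trip_ms=5, inference_ms=60)

for site in (cloud, edge):
    print(f"{site.name}: {response_time_ms(site, payload_kb=2):.1f} ms")
# cloud: 156.0 ms
# edge: 65.3 ms
```

Under these assumptions, the edge site wins even though its model runs three times slower, because it avoids most of the network delay.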

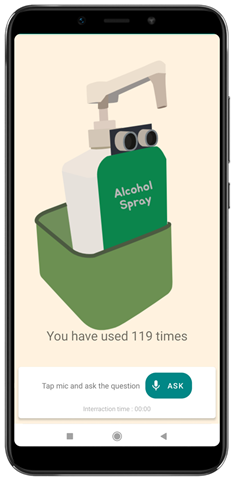

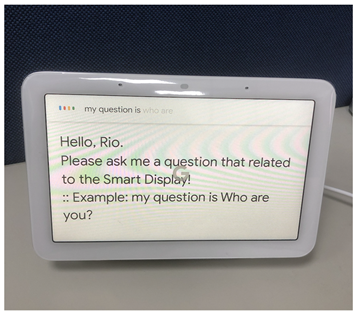

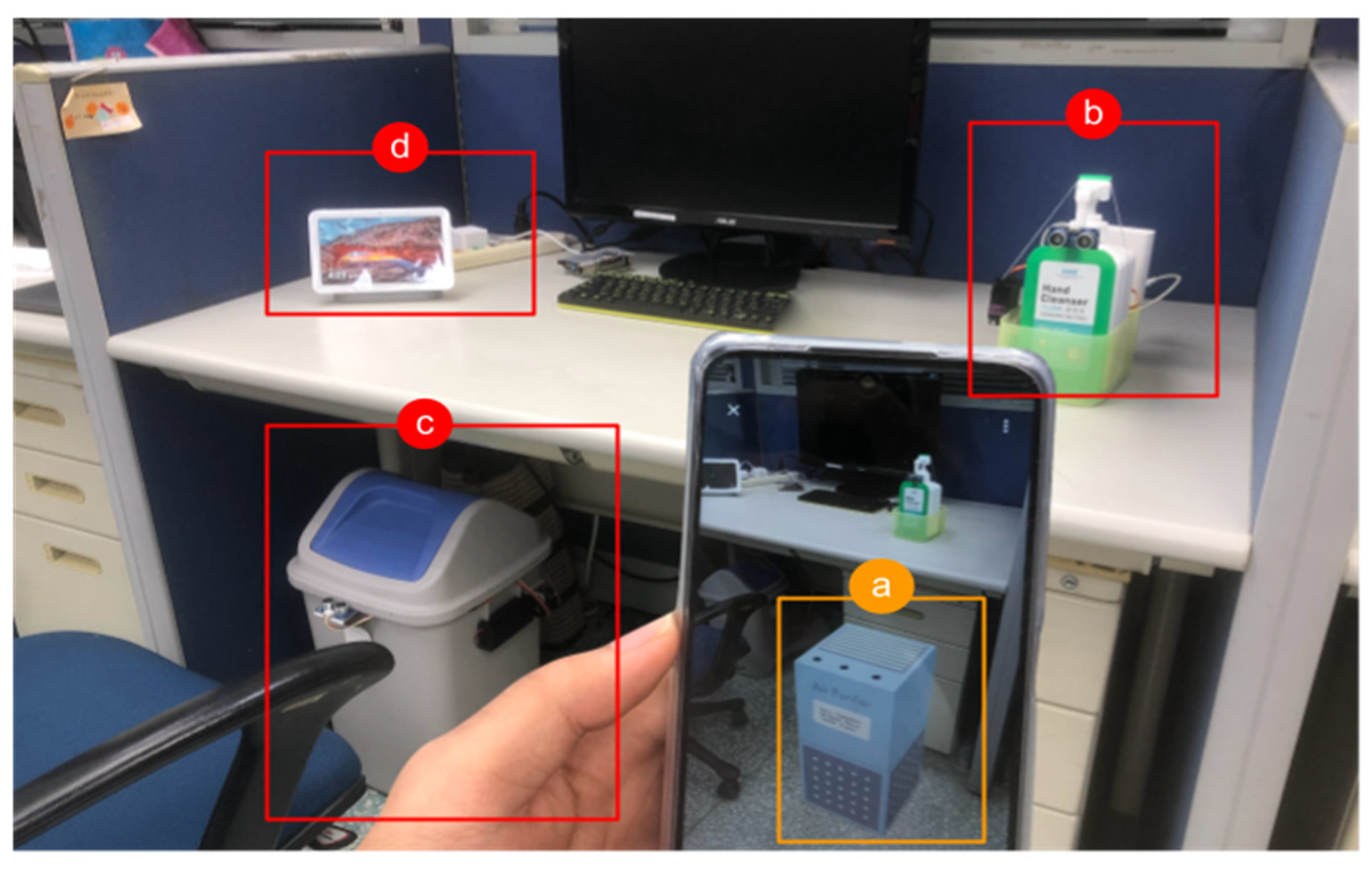

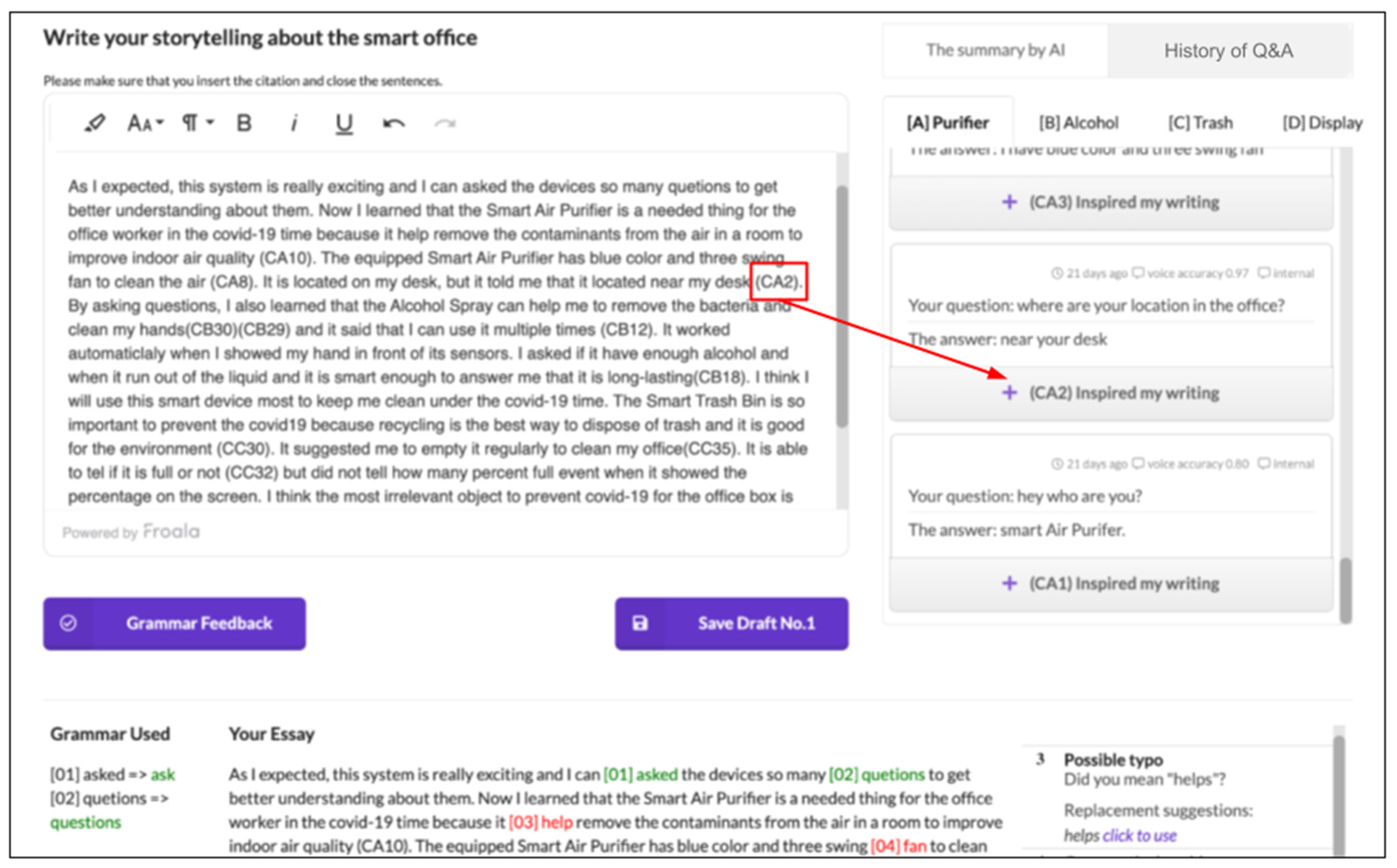

Implementing on-device AI at the edge with TensorFlow Lite (TF-Lite) and a pre-trained model, such as MobileBERT, could enable Q&A interactions between on-device AI and humans [31,32]. Thus, physical and digital objects equipped with on-device AI and edge computing could answer human questions quickly. Physical objects with on-device AI can therefore become smart physical objects, because they have the intelligence to understand human language. For this reason, smart physical objects are not limited to IoT devices. They can also include other objects, such as plants and furniture. For example, if edge computing is deployed alongside a plant, the plant could understand human language, and humans could ask it questions. As with human intelligence, an on-device AI also needs an initial knowledge base about its own properties to answer questions from EFL learners. Later, the on-device AI could improve its knowledge by learning from other things, such as other AI models, including external AI [22]. External AI refers to large language models, such as OpenAI's GPT-3, which have more knowledge than on-device AI [21]. Hence, external AI could help interpret human language and improve the knowledge of on-device AI by generating answers that extend its knowledge base.
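The learning loop described above, in which an object starts with an initial knowledge base and then learns from external AI, can be sketched as follows. This is not MobileBERT or TF-Lite. The exact-match lookup stands in for on-device Q&A, and `ask_external_ai` is a hypothetical callback that stands in for a large language model such as GPT-3. All class and function names are illustrative.

```python
from typing import Callable, Dict


class SmartObject:
    """Hypothetical smart object that answers from its own knowledge base
    and learns a new answer from an external AI when it cannot answer."""

    def __init__(self, name: str, knowledge: Dict[str, str],
                 ask_external_ai: Callable[[str], str]) -> None:
        self.name = name
        self.knowledge = {self._key(q): a for q, a in knowledge.items()}  # initial knowledge
        self.ask_external_ai = ask_external_ai  # stand-in for a large language model

    @staticmethod
    def _key(question: str) -> str:
        # Normalize case, surrounding spaces/question marks, and inner whitespace.
        return " ".join(question.lower().strip(" ?").split())

    def answer(self, question: str) -> str:
        key = self._key(question)
        if key in self.knowledge:
            return self.knowledge[key]            # answered on-device
        learned = self.ask_external_ai(question)  # fall back to the external AI
        self.knowledge[key] = learned             # remember it for next time
        return learned


def placeholder_llm(question: str) -> str:
    # Placeholder only; a real system would call an external model here.
    return "(answer generated by the external AI)"


plant = SmartObject("basil", {"What are you?": "I am a basil plant."}, placeholder_llm)
print(plant.answer("what are you"))                  # from the initial knowledge base
print(plant.answer("How often do you need water?"))  # learned from the external AI
print(len(plant.knowledge))                          # 2
```

Caching the external answer means the next learner who asks the same question is answered on-device, without another round trip to the external model.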

Therefore, EFL learners could learn from all things, such as smart physical objects, smart digital objects, and AI, to gather more English lexical resources and practice English. Beyond human beings, all things can learn from each other. For example, smart physical objects can learn from external AI to improve their knowledge about their properties, functions, and benefits.

In addition, the data that all things generate through the IoT, edge computing, mobile devices, and AI algorithms take new and emerging forms [33]. Using these new forms of data for education requires careful consideration of the risks of using AI [34], because the successful orchestration and thorough integration of data from different sources are key to effectively supporting education. Moreover, humans also need to consider the risks of AI support in education. Besides improving the security of AI algorithms, humans can reduce these risks by monitoring and evaluating the computational results that AI produces.
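One simple way to let humans monitor and evaluate AI output is a review gate. Results whose model-reported confidence falls below a threshold are held for a human before they are used, for example before they are added to a smart object's knowledge base. The sketch assumes that the model reports a confidence score and uses a hypothetical threshold of 0.8. It illustrates the monitoring idea only and does not address the security of AI algorithms.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AIResult:
    question: str
    answer: str
    confidence: float  # assumed to be reported by the model, in [0, 1]


@dataclass
class ReviewGate:
    threshold: float = 0.8  # hypothetical cut-off
    accepted: List[AIResult] = field(default_factory=list)
    pending_review: List[AIResult] = field(default_factory=list)

    def submit(self, result: AIResult) -> str:
        # Confident results pass through; the rest wait for a human.
        if result.confidence >= self.threshold:
            self.accepted.append(result)
            return "accepted"
        self.pending_review.append(result)
        return "sent to human reviewer"

    def human_decision(self, index: int, approve: bool) -> None:
        # A human either approves the held result or discards it.
        result = self.pending_review.pop(index)
        if approve:
            self.accepted.append(result)


gate = ReviewGate()
print(gate.submit(AIResult("What is a leaf?", "A plant organ for photosynthesis.", 0.95)))  # accepted
print(gate.submit(AIResult("Is this plant edible?", "Yes.", 0.40)))  # sent to human reviewer
gate.human_decision(0, approve=False)
print(len(gate.accepted), len(gate.pending_review))  # 1 0
```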