Smart Traffic Data for the Analysis of Sustainable Travel Modes

Abstract

1. Introduction

2. Materials and Methods

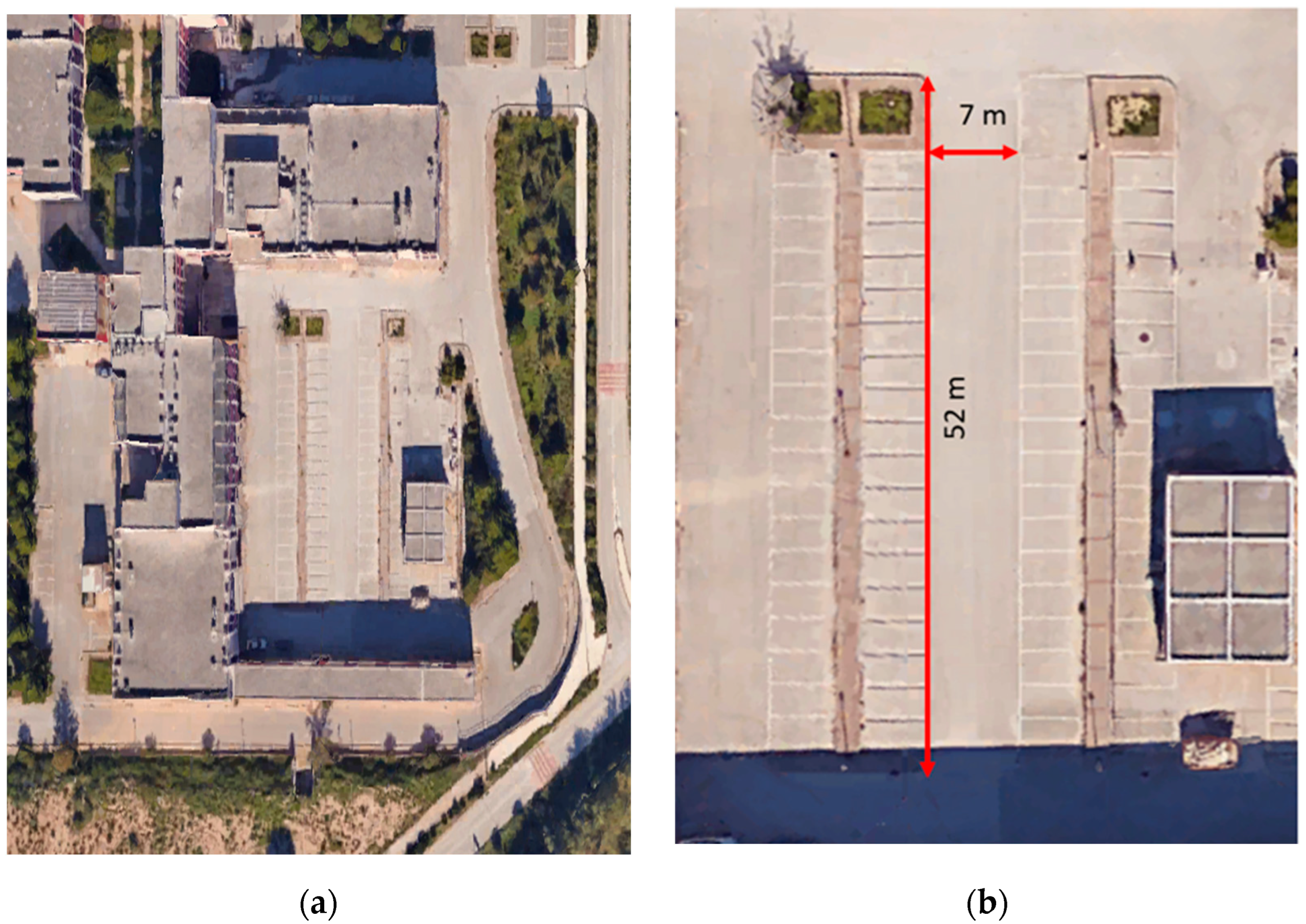

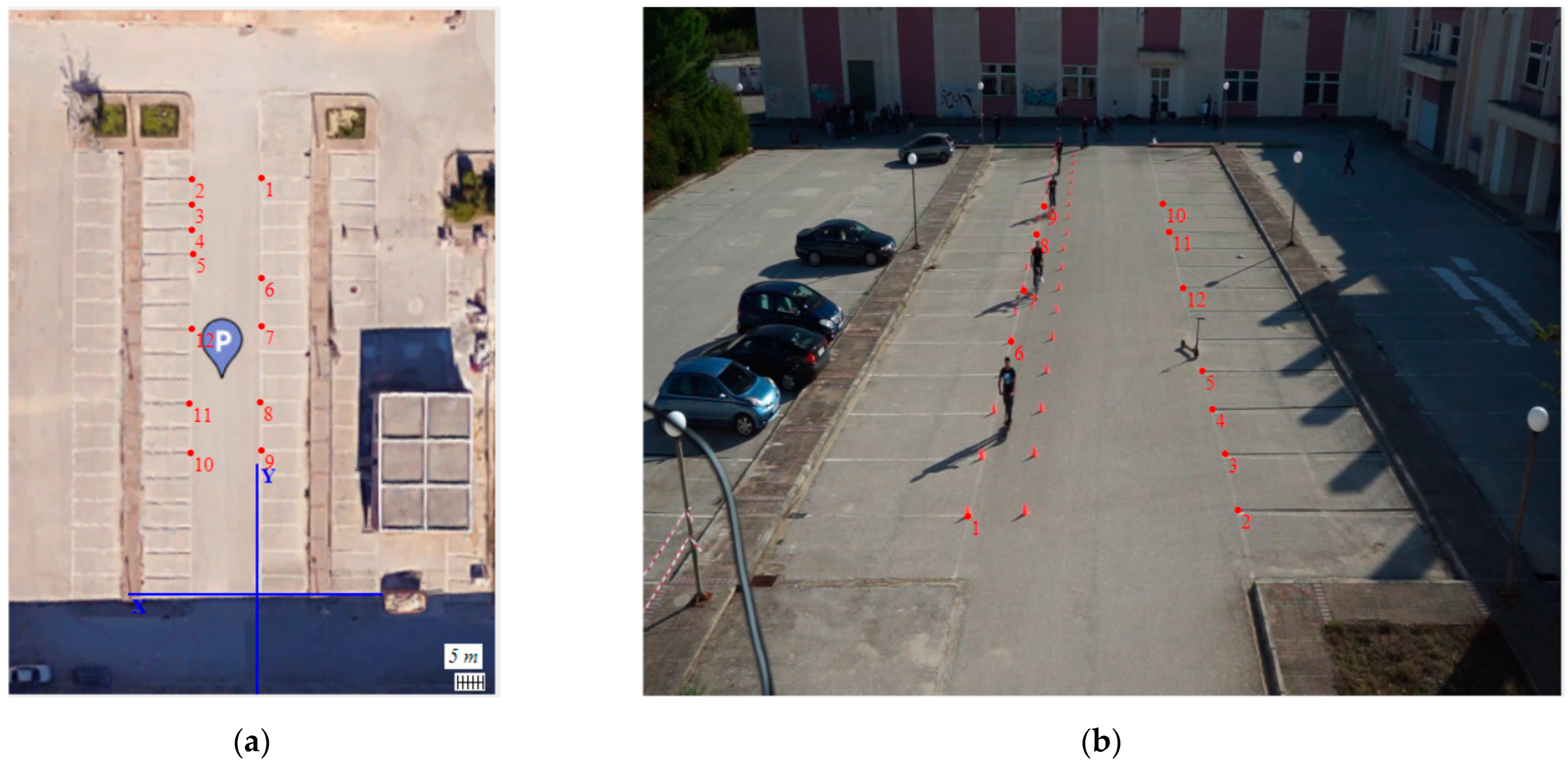

2.1. Experimental Set-Up

2.1.1. E-Scooter and Smartphone Characteristics

2.1.2. Experimental Scenarios

2.2. Image Analysis Software (μ-Scope)

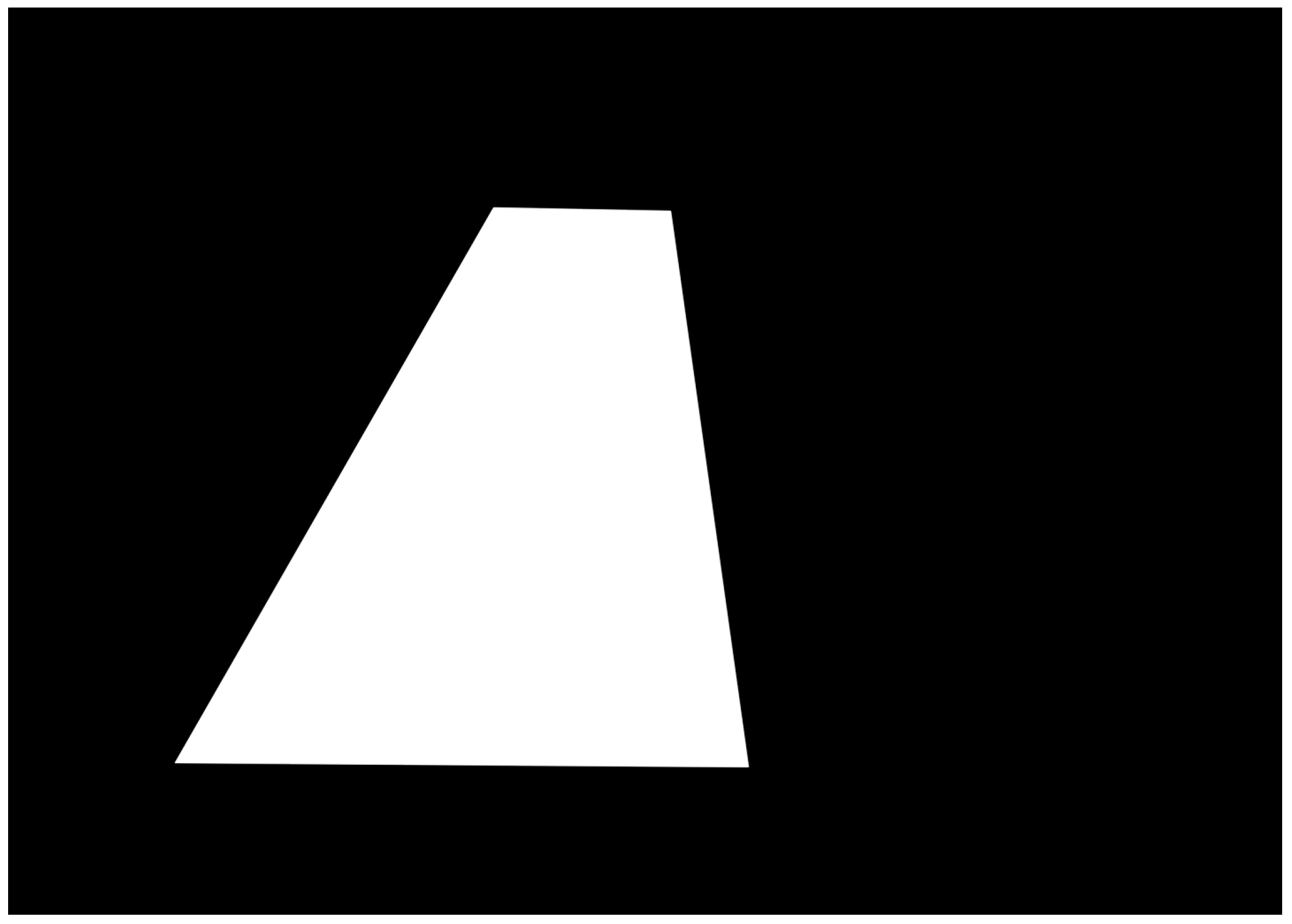

2.2.1. Preprocessing

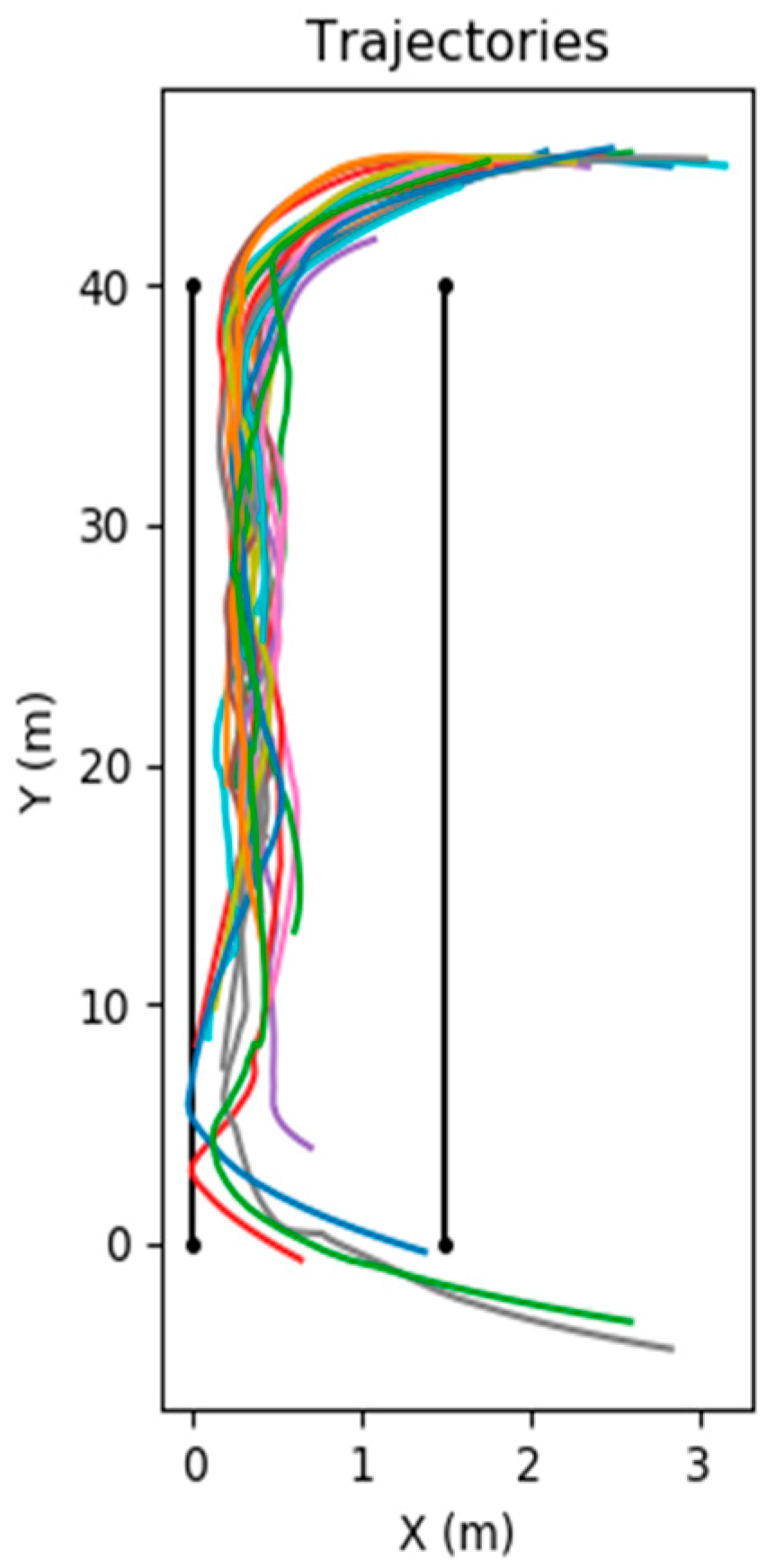

2.2.2. Processing: Trajectory Extraction

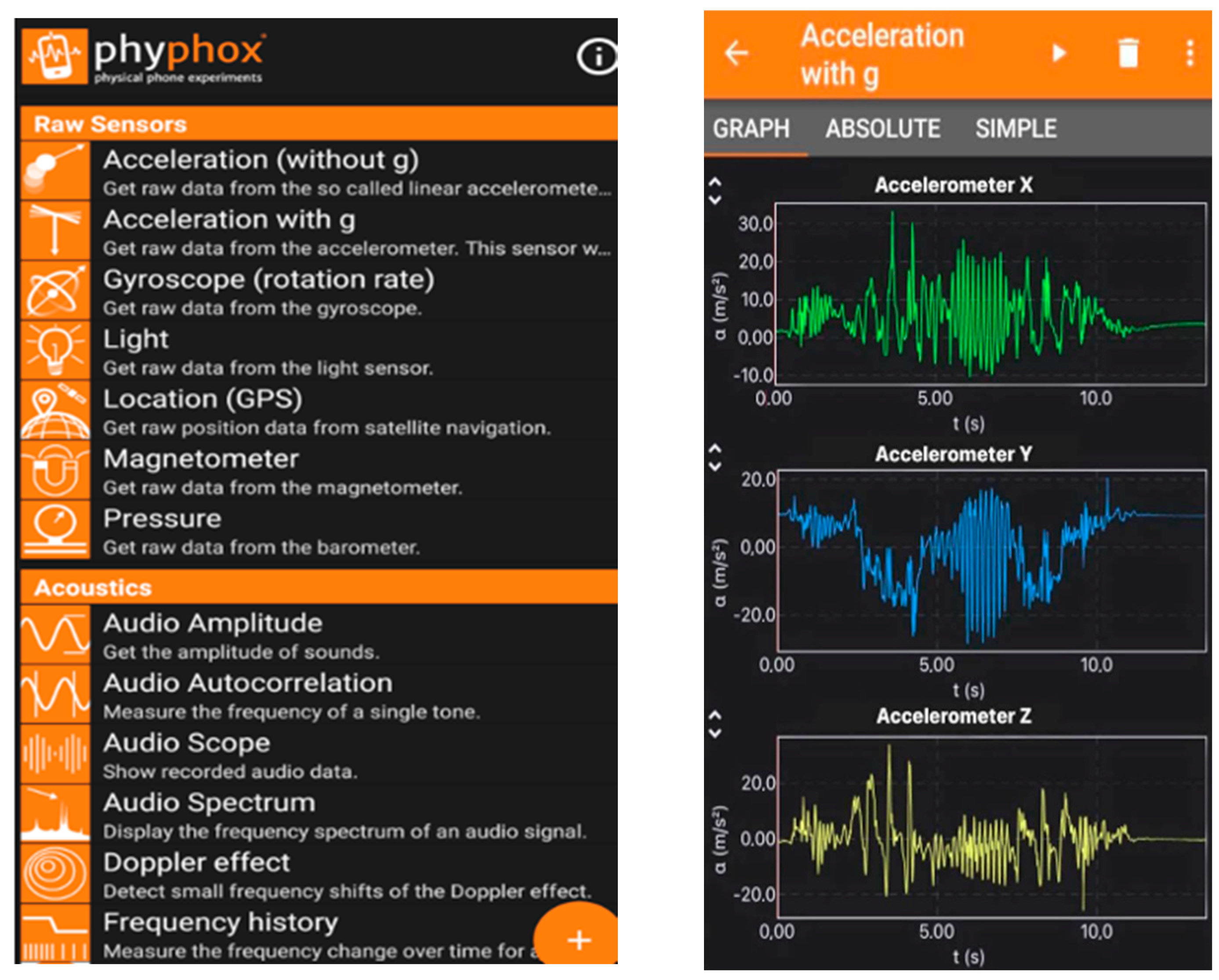

2.3. Phyphox

2.4. Assessment Methodology

3. Results

3.1. Error Analysis: Factors Influencing the Accuracy of Measurements

- Presence of pedestrians

- Rider being distracted

- Road width

- Direction of PMDs

- Direction of pedestrians

3.1.1. Presence of Pedestrians across the Study Area

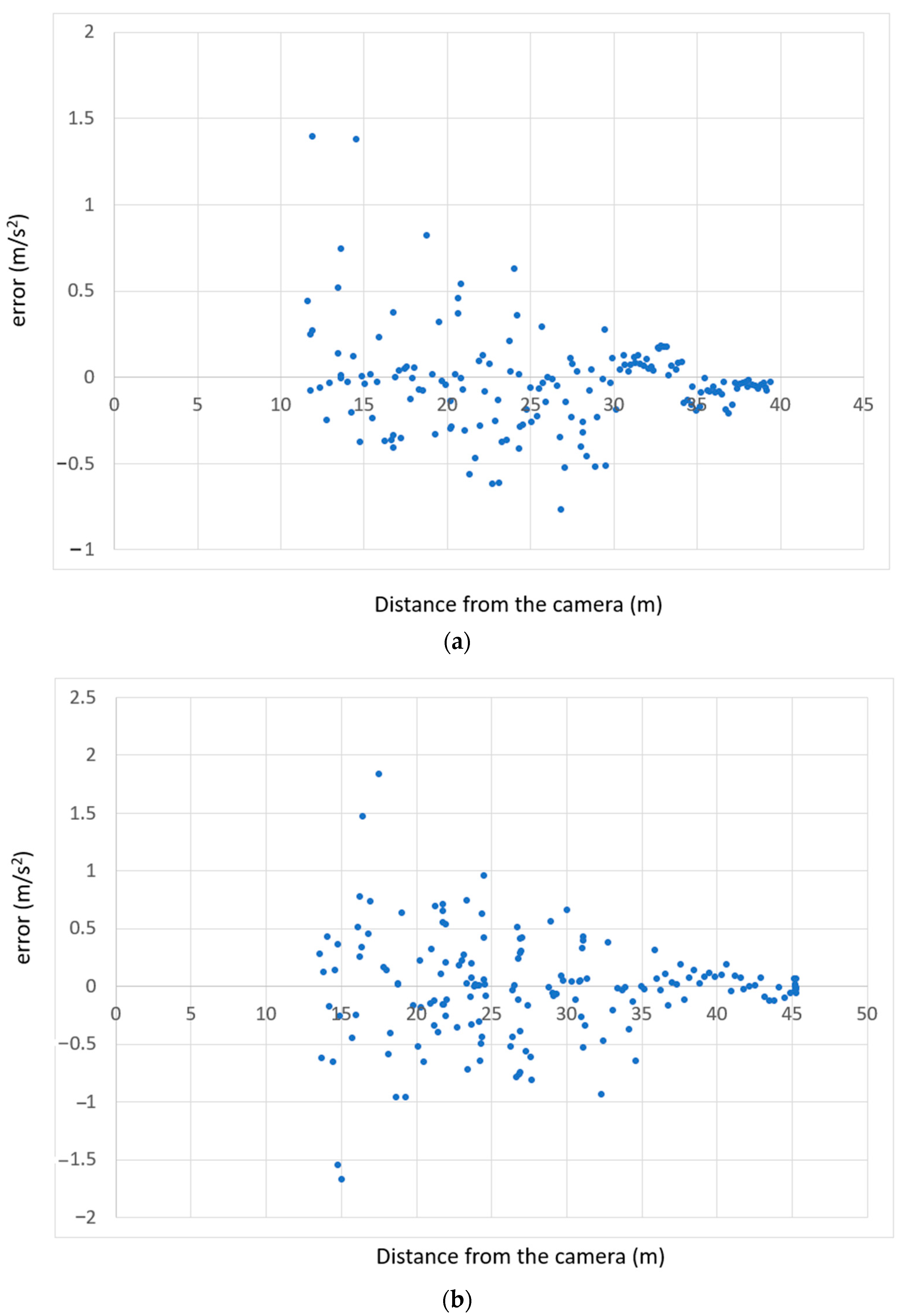

3.1.2. Road Width

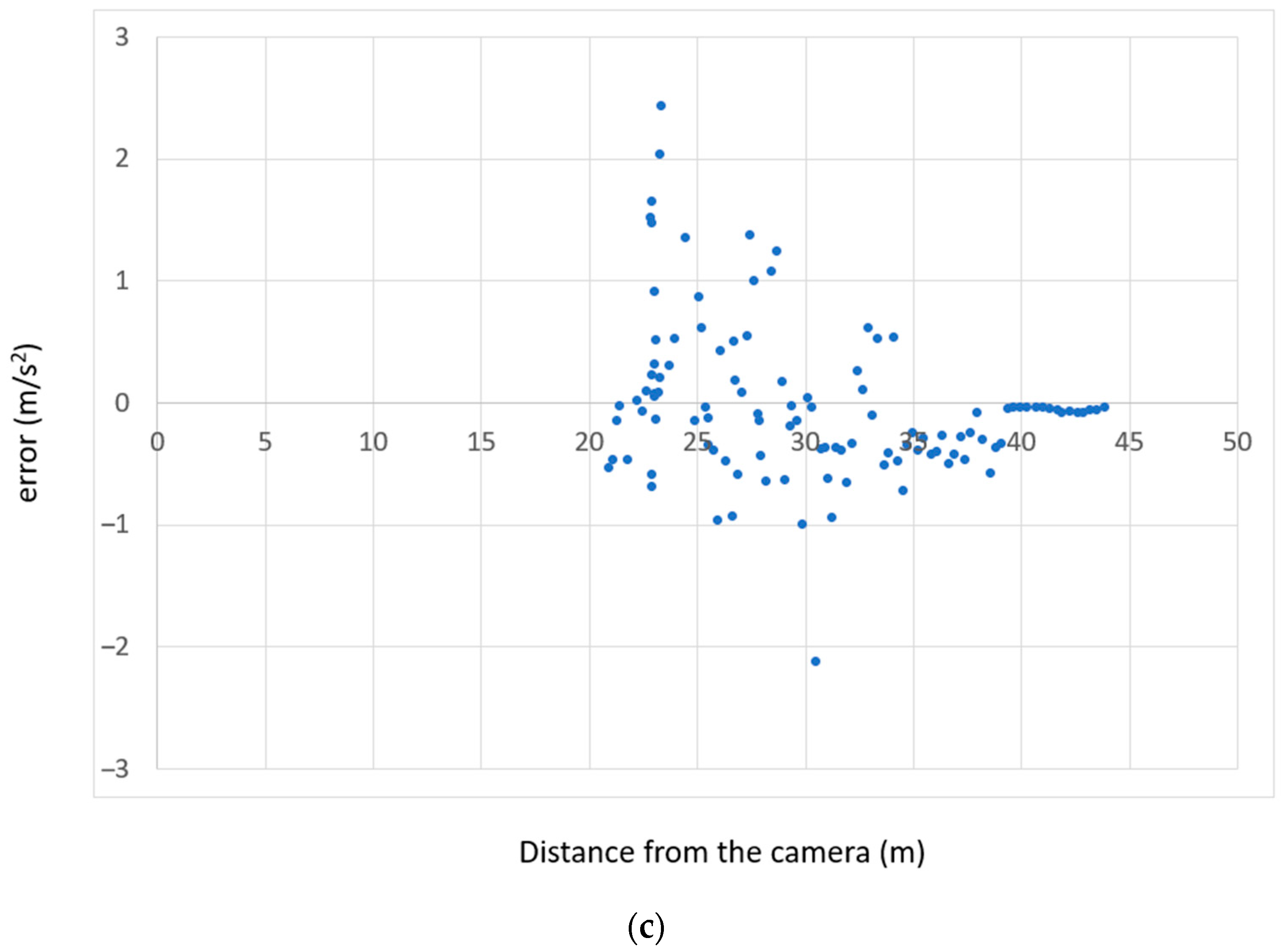

3.1.3. E-Scooter Direction

3.1.4. Pedestrian Direction

3.1.5. Rider Distraction

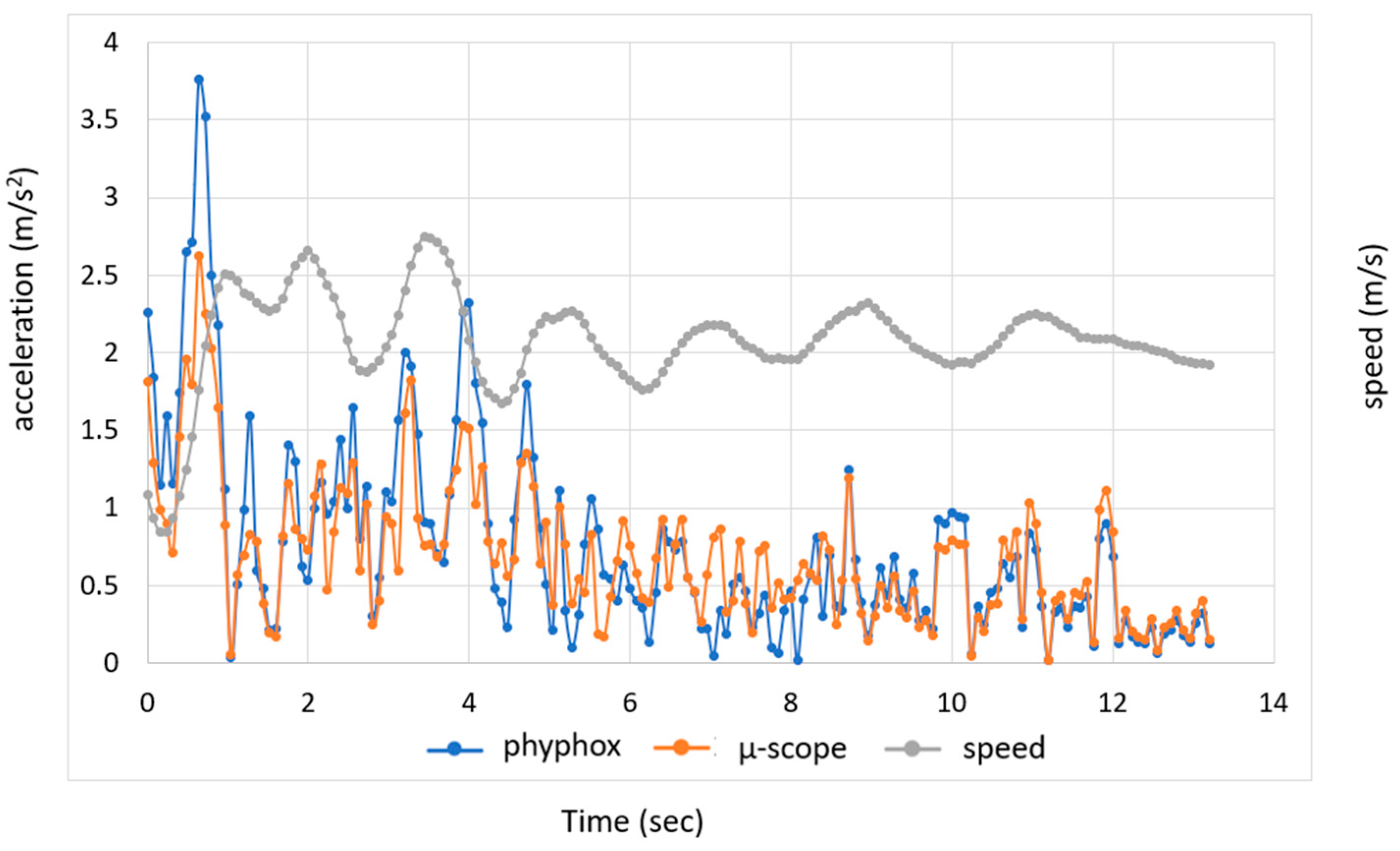

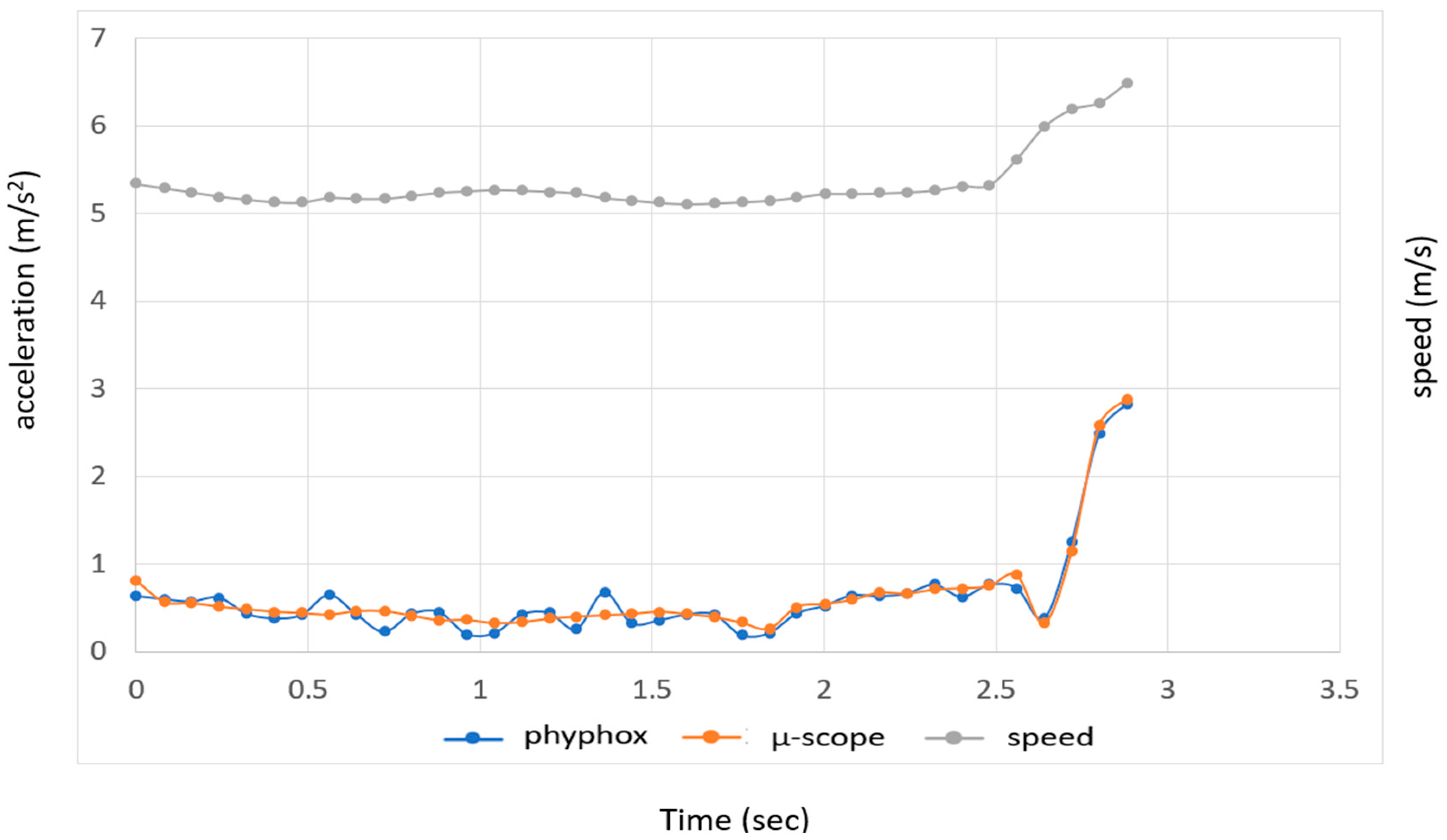

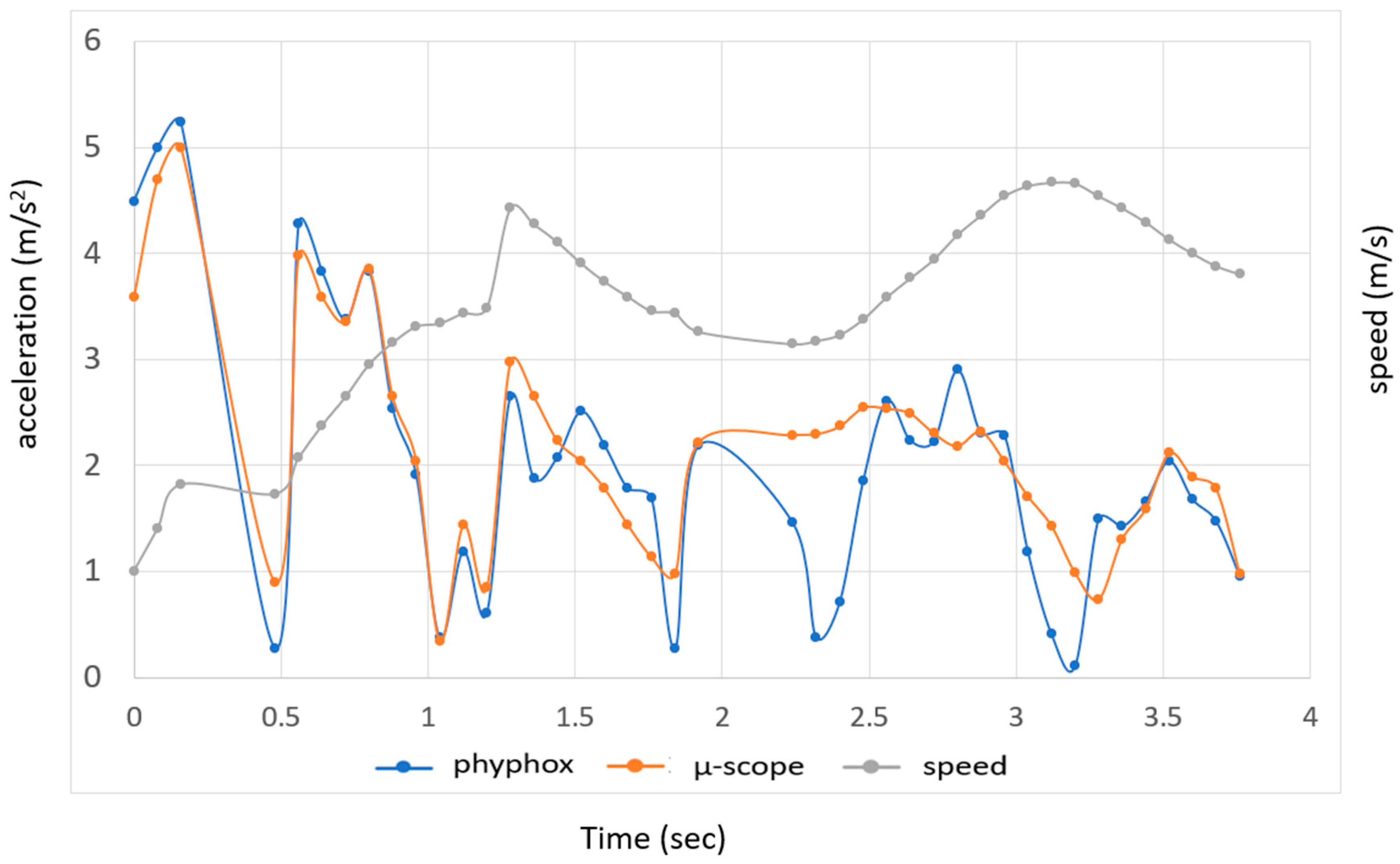

3.2. Error Analysis: Impact of Riding Style

4. Discussions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | Model: Xiaomi | Model: Fiat F500-F85K |
|---|---|---|
| Maximum speed (km/h) | 18 | 20 |
| Wheel diameter | 8.5″ | 8.5″ |
| Weight (kg) | 12 | 14 |
| Engine power | 250 W | 350 W |
| Maximum range (km) | 20 | 24.9 |
| Maximum user weight (kg) | 100 | 120 |
| Cruise control | Yes | Yes |

| Vehicle | 1st | 2nd | 3rd |
|---|---|---|---|
| Device model | SM-A515F | Mi Note 10 Lite | Redmi Note 9 |
| Device brand | Samsung | Xiaomi | Redmi |
| Device board | exynos9611 | toco | joyeuse |
| Device manufacturer | Samsung | Xiaomi | Xiaomi |
| Accelerometer range | 78.4532 | 78.45318 | 78.45318 |
| Accelerometer analysis | 0.0023942 | 0.002392823 | 0.002392823 |
| Accelerometer MinDelay | 2000 | 2404 | 2404 |
| Accelerometer MaxDelay | 160,000 | 1,000,000 | 1,000,000 |
| Accelerometer power | 0.15 | 0.17 | 0.15 |
| Accelerometer version | 15,932 | 142,338 | 140,549 |
| Linear acceleration range | 78.4532 | 156.98999 | 156.98999 |
| Linear acceleration analysis | 0.0023942 | 0.01 | 0.01 |
| Linear acceleration MinDelay | 10,000 | 5000 | 5000 |
| Linear acceleration MaxDelay | 0 | 200,000 | 200,000 |
| Linear acceleration power | 1.9 | 0.515 | 0.515 |
| Linear acceleration version | 1 | 1 | 1 |

| Scenario | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 |
|---|---|---|---|---|---|---|---|---|---|
| Width (m) | 1.5 | 1.5 | 1.5 | 1.5 | 2.5 | 2.5 | 2.5 | 2.5 | 3.5 |
| Distraction | No | Yes | No | Yes | No | Yes | No | Yes | No |
| E-scooter direction | CW | CW | CCW | CCW | CW | CW | CCW | CCW | CW |
| Bicycle direction | CCW | CCW | CW | CW | CCW | CCW | CW | CW | CCW |
| Pedestrian crowd | High | High | Very high | High | Average | Average | Low | Very low | |
| Pedestrian crossing point | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No |

RMSE value: >0.1, >0.2, >0.3, >0.4, >0.5, >0.6, >0.7, >0.8, >0.9

| E-Scooter | S9 | S7 | S8 | S2 | S5 | S1 | S6 | S3 | S4 |
|---|---|---|---|---|---|---|---|---|---|
| 1st | 0.1038 | 0.2716 | 0.2895 | 0.3577 | 0.2396 | 0.4531 | 0.4761 | 0.6925 | 0.6146 |
| | 0.2920 | 0.2768 | 0.3342 | 0.2724 | 0.4472 | 0.6920 | 0.7229 | 0.8356 | 0.7677 |
| | | 0.3466 | 0.2548 | 0.3254 | 0.5859 | 0.4212 | 0.6663 | 0.6803 | 0.6171 |
| | | 0.3794 | | 0.3628 | 0.5338 | 0.3943 | 0.7216 | 0.6023 | 0.6992 |
| | | 0.3702 | | 0.4305 | 0.4950 | 0.5413 | 0.6157 | 0.7223 | |
| 2nd | 0.1806 | 0.3924 | 0.2305 | 0.3985 | 0.5129 | 0.4607 | 0.6014 | 0.6765 | 0.9265 |
| | 0.1224 | 0.3373 | 0.2492 | 0.3493 | 0.4126 | 0.5932 | 0.5829 | 0.9102 | 0.7914 |
| | | 0.3347 | 0.2887 | 0.3393 | 0.4668 | 0.3912 | 0.5192 | 0.7697 | 0.7070 |
| | | 0.3361 | | 0.4908 | 0.4803 | 0.4206 | 0.5289 | 0.6451 | 0.7839 |
| | | 0.2836 | | 0.3060 | 0.3793 | 0.3643 | 0.4998 | 0.6217 | |
| 3rd | 0.2057 | 0.3517 | 0.3553 | 0.3014 | 0.4304 | 0.4482 | 0.5065 | 0.6179 | 0.6497 |
| | 0.1041 | 0.2150 | 0.3045 | 0.3222 | 0.3156 | 0.4656 | 0.3709 | 0.9065 | 0.9823 |
| | | 0.3893 | 0.2997 | 0.4492 | 0.3517 | 0.3913 | 0.4671 | 0.7015 | 0.6774 |
| | | 0.3332 | | 0.3635 | 0.4717 | 0.5563 | 0.3503 | 0.7643 | 0.9098 |
| | | 0.3169 | | 0.3364 | 0.5533 | 0.4420 | 0.4861 | 0.4221 | |

RMSE value: >0.1, >0.2, >0.3, >0.4, >0.5, >0.6, >0.7, >0.8, >0.9

| E-Scooter | S9 | S5 | S6 | S7 | S8 | S1 | S2 | S3 | S4 |
|---|---|---|---|---|---|---|---|---|---|
| Width | 1.5 m | 2.5 m | 2.5 m | 2.5 m | 2.5 m | 3.5 m | 3.5 m | 3.5 m | 3.5 m |
| 1st | 0.1038 | 0.2396 | 0.4761 | 0.2716 | 0.2895 | 0.4531 | 0.3577 | 0.6925 | 0.6146 |
| | 0.2920 | 0.4472 | 0.7229 | 0.2768 | 0.3342 | 0.6920 | 0.2724 | 0.8356 | 0.7677 |
| | | 0.5859 | 0.6663 | 0.3466 | 0.2548 | 0.4212 | 0.3254 | 0.6803 | 0.6171 |
| | | 0.5338 | 0.7216 | 0.3794 | | 0.3943 | 0.3628 | 0.6023 | 0.6992 |
| | | 0.4950 | 0.6157 | 0.3702 | | 0.5413 | 0.4305 | 0.7223 | |
| 2nd | 0.1806 | 0.5129 | 0.6014 | 0.3924 | 0.2305 | 0.4607 | 0.3985 | 0.6765 | 0.9265 |
| | 0.1224 | 0.4126 | 0.5829 | 0.3373 | 0.2492 | 0.5932 | 0.3493 | 0.9102 | 0.7914 |
| | | 0.4668 | 0.5192 | 0.3347 | 0.2887 | 0.3912 | 0.3393 | 0.7697 | 0.7070 |
| | | 0.4803 | 0.5289 | 0.3361 | | 0.4206 | 0.4908 | 0.6451 | 0.7839 |
| | | 0.3793 | 0.4998 | 0.2836 | | 0.3643 | 0.3060 | 0.6217 | |
| 3rd | 0.2057 | 0.4304 | 0.5065 | 0.3517 | 0.3553 | 0.4482 | 0.3014 | 0.6179 | 0.6497 |
| | 0.1041 | 0.3156 | 0.3709 | 0.2150 | 0.3045 | 0.4656 | 0.3222 | 0.9065 | 0.9823 |
| | | 0.3517 | 0.4671 | 0.3893 | 0.2997 | 0.3913 | 0.4492 | 0.7015 | 0.6774 |
| | | 0.4717 | 0.3503 | 0.3332 | | 0.5563 | 0.3635 | 0.7643 | 0.9098 |
| | | 0.5533 | 0.4861 | 0.3169 | | 0.4420 | 0.3364 | 0.4221 | |

| S2 | | | | | | S6 | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E-Scooter | RMSE | Average Error | Aver. μ-Scope | Accel. Phyphox | Speed | E-Scooter | RMSE | Average Error | Aver. μ-Scope | Accel. Phyphox | Speed |
| 1st | 0.358 | −0.096 | 0.586 | 0.682 | 2.031 | 1st | 0.476 | −0.029 | 1.196 | 1.225 | 2.500 |
| | 0.272 | −0.062 | 0.906 | 1.447 | 1.542 | | 0.723 | 0.016 | 0.721 | 0.605 | 2.134 |
| | 0.325 | −0.058 | 0.749 | 1.706 | 1.726 | | 0.666 | −0.179 | 0.695 | 0.874 | 2.532 |
| | 0.363 | −0.061 | 1.082 | 1.760 | 1.834 | | 0.722 | −0.142 | 0.815 | 0.957 | 4.109 |
| | 0.431 | −0.064 | 1.079 | 1.632 | 1.855 | | 0.616 | −0.030 | 1.255 | 1.185 | 2.011 |
| 2nd | 0.399 | −0.043 | 0.678 | 0.846 | 1.715 | 2nd | 0.601 | −0.169 | 1.131 | 1.300 | 0.484 |
| | 0.349 | −0.109 | 0.965 | 1.082 | 3.088 | | 0.583 | 0.020 | 1.290 | 1.269 | 2.667 |
| | 0.339 | −0.095 | 1.239 | 0.977 | 4.043 | | 0.519 | −0.349 | 2.720 | 2.069 | 2.116 |
| | 0.491 | 0.133 | 1.988 | 1.855 | 2.147 | | 0.529 | 0.193 | 1.340 | 1.146 | 3.204 |
| | | | | | | | 0.500 | −0.022 | 1.706 | 1.711 | 0.378 |
| 3rd | 0.301 | −0.121 | 0.577 | 0.901 | 3.204 | 3rd | 0.507 | −0.026 | 0.946 | 0.972 | 2.405 |
| | 0.322 | −0.041 | 1.115 | 1.156 | 4.189 | | 0.371 | 0.092 | 1.074 | 1.082 | 2.708 |
| | 0.449 | −0.174 | 2.038 | 1.489 | 5.199 | | 0.467 | −0.403 | 1.212 | 1.215 | 2.287 |
| | 0.363 | −0.083 | 0.417 | 0.999 | 4.979 | | 0.350 | −0.123 | 0.859 | 0.682 | 2.747 |
| | 0.336 | −0.068 | 0.737 | 0.806 | 2.165 | | 0.486 | −0.006 | 0.799 | 0.705 | 2.419 |
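
In many rows of the table above, the average error matches the difference between the mean μ-Scope acceleration and the mean Phyphox acceleration (for example, 0.586 − 0.682 = −0.096 in the first S2 row). The following is a minimal sketch of how an RMSE and an average error between two acceleration series could be computed, assuming the series are already time-aligned and equally long. The function names and sample values are hypothetical and are not taken from the study, and the units (m/s²) are an assumption.

```python
import math


def rmse(estimates, references):
    """Root-mean-square error between two equally long, time-aligned series."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("series must be non-empty and equally long")
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))


def average_error(estimates, references):
    """Mean signed difference (estimate minus reference)."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("series must be non-empty and equally long")
    return sum(e - r for e, r in zip(estimates, references)) / len(estimates)


# Hypothetical acceleration samples (assumed m/s^2), NOT data from the study.
video_accel = [0.50, 0.70, 0.40, 0.90]   # stands in for a video-based estimate
phone_accel = [0.60, 0.65, 0.55, 1.00]   # stands in for a smartphone reading

print("RMSE:", round(rmse(video_accel, phone_accel), 4))
print("Average error:", round(average_error(video_accel, phone_accel), 4))
```

Because the average error is a signed mean, it equals the difference of the two series means, whereas the RMSE also penalises sample-by-sample scatter. This is why a run can show a near-zero average error alongside a comparatively large RMSE.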

| S1 | | | | | | S3 | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E-Scooter | RMSE | Av. Er. | Accel. μ-Scope | Accel. Phyphox | Speed | E-Scooter | RMSE | Av. Er. | Accel. μ-Scope | Accel. Phyphox | Speed |
| 1st | 0.4531 | −0.1076 | 0.6661 | 0.7737 | 1.0857 | 1st | 0.6925 | −0.1156 | 1.0724 | 1.1880 | 3.2952 |
| | 0.4920 | 0.6920 | 2.0281 | 1.3550 | 1.5660 | | 0.8356 | −0.0566 | 1.1769 | 1.2543 | 2.1451 |
| | 0.4212 | −0.3246 | 1.4295 | 1.7541 | 1.9186 | | 0.6803 | −0.1244 | 0.6624 | 0.7867 | 2.6110 |
| | 0.3943 | −0.0785 | 0.8217 | 0.9003 | 1.1819 | | 0.6023 | −0.0825 | 0.8987 | 0.9812 | 2.8299 |
| | 0.5413 | −0.0602 | 0.8956 | 0.9558 | 1.6780 | | 0.7223 | −0.0415 | 0.5518 | 0.5933 | 4.2778 |
| 2nd | 0.4607 | −0.0228 | 1.0721 | 1.0493 | 0.9119 | 2nd | 0.6765 | −0.1223 | 1.2961 | 1.4184 | 3.1886 |
| | 0.5932 | −0.0177 | 1.5021 | 1.5459 | 1.6828 | | 0.9102 | −0.1491 | 1.8534 | 2.0446 | 2.1460 |
| | 0.3912 | −0.0112 | 1.4907 | 1.5018 | 2.7937 | | 0.7697 | −0.0103 | 2.3175 | 2.3277 | 2.4185 |
| | 0.4206 | −0.1669 | 0.7439 | 0.9108 | 1.1714 | | 0.6451 | −0.0712 | 1.2652 | 1.3142 | 3.5030 |
| | 0.3643 | −0.0785 | 0.6414 | 0.7249 | 1.6760 | | 0.6217 | −0.0712 | | | |
| 3rd | 0.4482 | −0.0161 | 0.6984 | 0.7145 | 1.4459 | 3rd | 0.6179 | −0.1538 | 0.9878 | 1.1416 | 4.5734 |
| | 0.4656 | −0.0197 | 0.9773 | 0.9972 | 1.5293 | | 0.9065 | −0.1049 | 1.4452 | 1.5102 | 2.3506 |
| | 0.3913 | −0.2639 | 0.6883 | 0.7405 | 1.5222 | | 0.7015 | 0.0191 | 1.1888 | 1.1697 | 2.4805 |
| | 0.5563 | −0.0522 | 0.9463 | 1.2101 | 2.2565 | | 0.7643 | −0.0591 | 1.5204 | 1.5795 | 2.7048 |
| | 0.4420 | −0.0355 | 0.7369 | 0.7993 | 1.4801 | | 0.4221 | −0.1039 | 0.9385 | 1.0424 | 3.9259 |

| S5 | | | | | | S7 | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| E-Scooter | RMSE | Av. Er. | Accel. μ-Scope | Accel. Phyphox | Speed | E-Scooter | RMSE | Av. Er. | Accel. μ-Scope | Accel. Phyphox | Speed |
| 1st | 0.2396 | 0.0452 | 0.3130 | 0.2679 | 4.1537 | 1st | 0.2716 | −0.0023 | 0.7596 | 0.7619 | 2.2257 |
| | 0.4472 | −0.4165 | 0.8171 | 1.2336 | 3.2815 | | 0.2768 | −0.0333 | 0.3065 | 0.3398 | 0.4194 |
| | 0.5859 | 0.1761 | 0.6627 | 0.4866 | 3.4829 | | 0.3466 | −0.2373 | 1.1271 | 1.6645 | 2.3519 |
| | 0.5338 | 0.0850 | 1.0541 | 0.9691 | 3.5603 | | | | | | |
| 2nd | 0.5129 | −0.0919 | 1.0341 | 1.1259 | 4.0967 | 2nd | 0.3924 | −0.0234 | 0.5151 | 0.5335 | 0.5969 |
| | 0.4126 | 0.1035 | 1.0820 | 0.9785 | 0.3133 | | 0.3373 | 0.0906 | 0.4357 | 0.3452 | 0.6318 |
| | 0.4668 | −0.1224 | 0.6187 | 1.0352 | 3.0831 | | 0.3347 | 0.0910 | 1.5233 | 1.9323 | 4.6892 |
| | 0.4803 | −0.3217 | 0.4642 | 0.2881 | 3.2844 | | | | | | |
| | 0.3793 | −0.4788 | 0.8556 | 0.7707 | 3.3619 | | | | | | |
| 3rd | 0.4304 | −0.0112 | 0.9894 | 1.0006 | 4.1605 | 3rd | 0.3517 | −0.0060 | 0.3502 | 0.3562 | 0.5948 |
| | 0.3156 | −0.0352 | 0.8996 | 0.9348 | 4.2043 | | 0.2150 | 0.0399 | 1.2158 | 1.1759 | 2.8876 |
| | 0.3517 | −0.0310 | 0.7473 | 0.7784 | 4.0477 | | 0.3893 | 0.0605 | 0.6672 | 0.6067 | 2.5315 |
| | 0.4717 | 0.1735 | 0.6328 | 0.4593 | 3.9034 | | | | | | |
| | 0.5533 | 0.1846 | 0.6796 | 0.4949 | 3.3578 | | | | | | |

RMSE value: >0.1, >0.2, >0.3, >0.4, >0.5, >0.6, >0.7, >0.8, >0.9

| E-Scooter | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 |
|---|---|---|---|---|---|---|---|---|---|
| 1st | 0.4531 | 0.3577 | 0.5925 | 0.6145 | 0.2396 | 0.6761 | 0.2715 | 0.2895 | 0.1037 |
| | 0.4920 | 0.2723 | 0.6355 | 0.7676 | 0.4471 | 0.7229 | 0.2768 | 0.3341 | 0.2220 |
| | 0.4212 | 0.3253 | 0.5803 | 0.6170 | 0.5858 | 0.6662 | 0.3466 | 0.2547 | |
| | 0.3942 | 0.3628 | 0.6023 | 0.6991 | 0.5337 | 0.7215 | 0.3794 | | |
| | 0.5413 | 0.4305 | 0.6222 | | 0.4949 | 0.6157 | 0.3701 | | |
| 2nd | 0.4607 | 0.3985 | 0.4065 | 0.9265 | 0.5129 | 0.6014 | 0.3924 | 0.2305 | 0.1806 |
| | 0.5932 | 0.3493 | 0.9102 | 0.7914 | 0.4126 | 0.5829 | 0.3373 | 0.2492 | 0.1224 |
| | 0.3912 | 0.3393 | 0.4697 | 0.7070 | 0.4668 | 0.5192 | 0.3347 | 0.2887 | |
| | 0.4206 | 0.4908 | 0.4451 | 0.7839 | 0.4803 | 0.5289 | 0.3361 | | |
| | 0.3643 | 0.3060 | 0.6217 | | 0.3793 | 0.4998 | 0.2836 | | |
| 3rd | 0.4482 | 0.3014 | 0.4179 | 0.6497 | 0.4304 | 0.5065 | 0.3517 | 0.3553 | 0.2057 |
| | 0.4656 | 0.3222 | 0.9065 | 0.9823 | 0.3156 | 0.3709 | 0.2150 | 0.3045 | 0.1041 |
| | 0.3913 | 0.4492 | 0.6015 | 0.6774 | 0.3517 | 0.4671 | 0.2093 | 0.2997 | |
| | 0.5563 | 0.3635 | 0.5643 | 0.9098 | 0.4717 | 0.3503 | 0.3332 | | |
| | 0.4420 | 0.3364 | 0.4221 | | 0.5533 | 0.4861 | 0.3169 | | |

| E-Scooter | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 | S9 |
|---|---|---|---|---|---|---|---|---|---|
| 1st | −0.1076 | −0.0958 | −0.1156 | 0.3550 | 0.0452 | −0.0290 | −0.0023 | −0.0714 | −0.0218 |
| | 0.0673 | −0.0622 | −0.0566 | −0.0339 | −0.4165 | 0.0164 | −0.0333 | −0.2883 | −0.0060 |
| | −0.3246 | −0.0583 | −0.1244 | 0.2467 | 0.1761 | −0.1786 | −0.2373 | −0.1010 | |
| | −0.0785 | −0.0605 | −0.0825 | −0.1943 | 0.0850 | −0.1418 | −0.0027 | | |
| | −0.0602 | −0.0643 | −0.0415 | | | −0.0305 | −0.0875 | | |
| 2nd | −0.0228 | −0.0433 | −0.1223 | 0.0116 | −0.0919 | −0.1687 | −0.0234 | −0.0617 | −0.0276 |
| | −0.0177 | −0.1091 | 0.4914 | 0.2475 | 0.1035 | 0.0204 | −0.0906 | −0.1632 | −0.0290 |
| | −0.0112 | −0.0949 | −0.0103 | 0.6073 | −0.1224 | −0.3493 | 0.0910 | −0.0569 | |
| | −0.1669 | 0.1333 | −0.0712 | −0.4161 | −0.3217 | 0.1932 | −0.0391 | | |
| | −0.0785 | | −0.0712 | | −0.4788 | −0.0217 | 0.0238 | | |
| 3rd | −0.0161 | −0.1212 | −0.1538 | −0.0554 | −0.0112 | −0.0261 | −0.0060 | −0.1911 | −0.0598 |
| | −0.0197 | −0.0413 | −0.1049 | −0.2540 | −0.0352 | 0.0916 | −0.0399 | −0.1643 | −0.0788 |
| | −0.2639 | −0.1741 | 0.0191 | −0.1156 | −0.0310 | −0.4031 | −0.0605 | 0.3347 | |
| | −0.0522 | −0.0826 | −0.0591 | −0.4121 | 0.1735 | −0.1227 | −0.2627 | | |
| | −0.0355 | −0.0683 | −0.1039 | | 0.1846 | −0.0063 | −0.2615 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Christoforou, Z.; Gioldasis, C.; Valero, Y.; Vasileiou-Voudouris, G. Smart Traffic Data for the Analysis of Sustainable Travel Modes. Sustainability 2022, 14, 11150. https://doi.org/10.3390/su141811150
