Abstract

Automated Vehicles (AVs) are attracting attention as a safer mobility option thanks to recent advances in sensing technologies that achieve a much shorter Perception–Reaction Time (PRT) than that of Human-Driven Vehicles (HVs). However, AVs are not entirely free from the risk of accidents, and we currently lack a systematic and reliable method to improve AV safety functions. The manual composition of accident scenarios does not scale. Simulation-based methods do not fully cover the peculiar AV accident patterns that can occur in the real world. Artificial Intelligence (AI) techniques are employed to identify the moments of accidents in ego-vehicle videos. However, most AI-based approaches fall short in accounting for the probable causes of the accidents, and none of these AI-driven methods offers details for authoring the accident scenarios used in AV safety testing. In this paper, we present a customized Vision Transformer (named ViT-TA) that accurately classifies the critical situations around traffic accidents and automatically points out the objects that are probable causes based on an Attention map. Using 24,740 frames from the Dashcam Accident Dataset (DAD) as training data, ViT-TA detected critical moments at Time-To-Collision (TTC) ≤ 1 s with 34.92% higher accuracy than the state-of-the-art approach. ViT-TA's Attention map, which highlights the critical objects, helped us understand how the situations unfold to put hypothetical ego vehicles with AV functions at risk. Based on the ViT-TA-assisted interpretation, we systematized the composition of Functional scenarios, as conceptualized by the PEGASUS project, that describe a high-level plan to improve AVs' capability of evading critical situations. We also propose a novel framework for automatically deriving Logical and Concrete scenarios specified with the 6-Layer situational variables defined by the PEGASUS project.
We believe our work is an important step towards systematically generating highly reliable and trustworthy safety improvement plans for AVs in a scalable manner.

1. Introduction

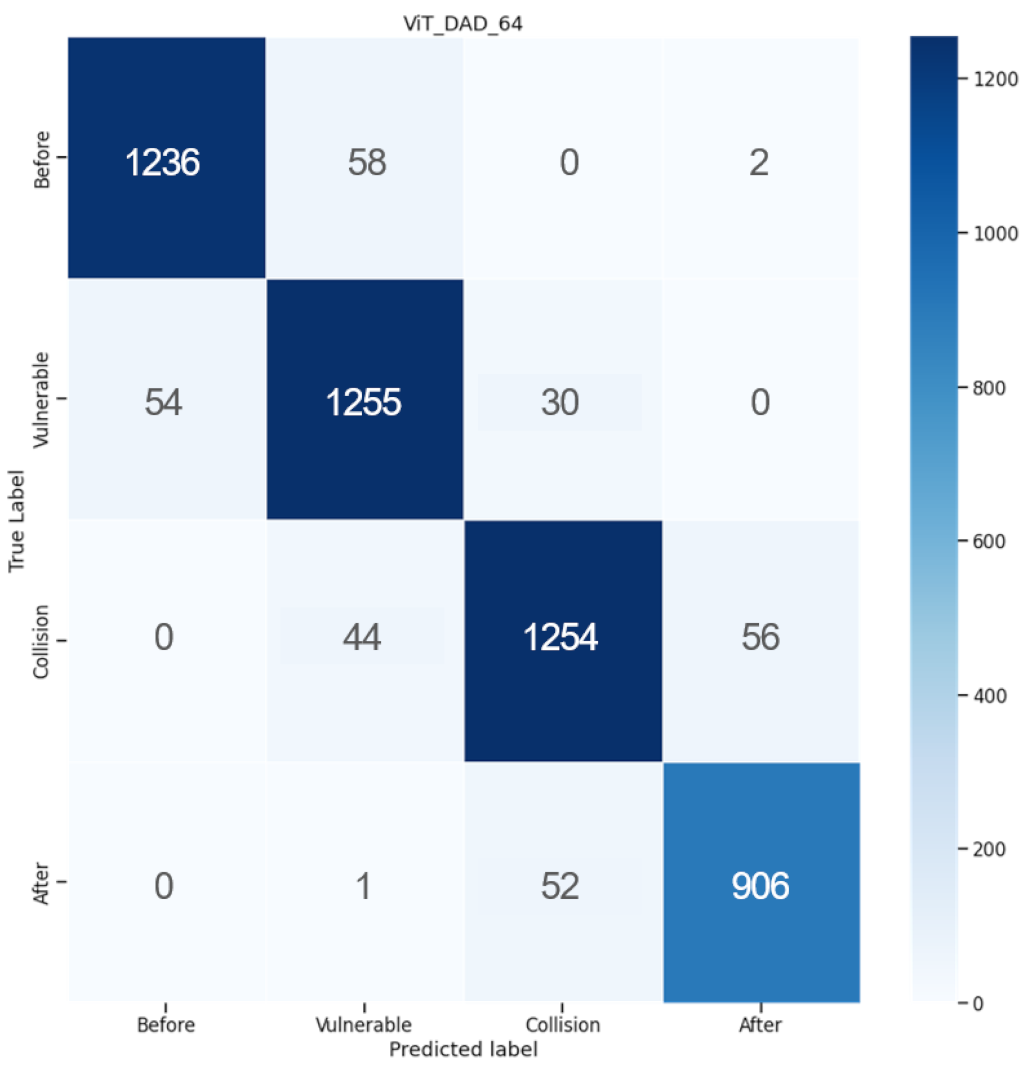

With the advancement of autonomous driving technology, expectations for the commercial success of Automated Vehicles (AVs) are rapidly increasing [1,2]. The Society of Automotive Engineers (SAE) defines AVs as vehicles capable of navigating, controlling, and avoiding risks partly or entirely without human assistance and divides them into six levels of driving automation [3]. In reality, most current commercial AVs are rated at Level 2 or 3, i.e., capable of partial or conditional automation [4]. AVs are viewed as critical components of Intelligent Transportation Systems that contribute to traffic flow enhancement. In particular, as driver negligence accounts for more than 90% of the causes of traffic accidents, AVs are attracting attention as a safer alternative transportation mode [5,6]. However, hurdles to meeting these expectations remain, owing to errors in recognizing surrounding objects during autonomous driving and in transferring operational control at moments of emergency [7]. AVs may put human drivers and passengers at risk, especially when they transfer control back to the human drivers [8,9]. Some researchers have tried to leverage Vehicle-To-Everything (V2X) technologies to help AVs become more promptly and accurately aware of dangerous circumstances based on information provided by nearby vehicles, pedestrians, and traffic infrastructure over a high-speed wireless network [7,10,11,12]. Evaluation criteria for safety standards and operations specific to AVs are being discussed [10]. AV safety assessment is a part of the New Car Assessment Program, which tests the safety level of vehicles. Various evaluation criteria have been proposed based on ISO 26262 [13], an international automotive functional safety standard for preventing accidents caused by defects in Electrical and Electronic (E/E) systems. Simulation-based accident scenario studies for safety evaluation have also been discussed [14,15].
However, most previous studies were based on limited evaluation criteria for temporary test-drive permits. Current scenarios do not sufficiently reflect the traffic conditions and unique accident patterns of the various countries and regions of concern. Test plans based on such imperfect accident scenarios cannot fully assess AVs' safety functions on the road. Therefore, it is necessary to develop test scenarios based on actual accident data to obtain a broader spectrum of trustworthy safety criteria for AVs [16,17]. However, obtaining actual AV accident data is highly challenging and costly. To address the limited availability of actual AV accident data for research purposes, we employ real accident data of human-driven vehicles (HVs) as a source of scenario extraction, because recent studies report that HVs exhibit accident patterns similar to those of AVs [18,19,20]. Specifically, we base our analysis on the Dashcam Accident Dataset (DAD), which contains video clips of collisions [21]. Accident scenarios composed through the intervention of human experts lack objectivity; hence, data-oriented analysis has been emphasized [17]. Artificial Intelligence (AI) has emerged as a replacement for human experts in deriving unbiased accident scenarios from data [22]. Virdi et al. [23] used deep recurrent neural networks such as Long Short-Term Memory (LSTM) networks to analyze the sequential patterns of accidents. However, the machine learning algorithms previously employed for accident modeling fall short in accountability. Hence, explainable Artificial Intelligence (XAI) technologies are emerging to interpret the inferences made by AI transparently. XAI improves the trustworthiness of AI by analyzing the relationship between input data, model parameters, and inference outcomes. XAI has produced highly reliable results in various problem domains [24,25,26].
In particular, the Transformer neural network, with its Self-Attention-based feature encoding layers, identifies critical parts of the input data [27]. Along with this explanation capability, Transformers have shown outstanding performance in sequence analysis for Natural Language Processing (NLP) [27], Speech-to-Text [28,29], Reinforcement Learning (RL) [30,31], and Computer Vision (CV) [32,33,34]. Motivated by the success of Transformers in these previous works, this study makes the following novel contributions through the overall approach illustrated in Figure 1. We accurately classify actual road accidents and critical situations using Vision Transformer-Traffic Accident (named ViT-TA), an Attention-based Transformer algorithm customized for computer vision data. Specifically, ViT-TA was applied to a stream of accident video frames recorded by HVs. Here, we assume that the ego vehicles in the videos have AV safety functions. From the time series of visual Attention maps, we can identify the Spatio-temporal characteristics of critical situations around accidents, such as road conditions and the dangerous movements of the vehicles, referred to as Target Vehicles (TVs), that cause the accidents [35]. Based on the ViT-TA-assisted situation interpretation, we derive intuitive Functional accident scenarios using the 6-Layer information defined by the PEGASUS project [14,36,37,38].

Figure 1.

Overview of Vision Transformer-assisted safety assessment scenario extraction.

Our study is presented in the following order. In Section 2, we establish the novelty of this study by reviewing related work on the safety evaluation of AVs and traffic accident scenarios. In Section 3, we describe the preprocessing of the Dashcam Accident Dataset (DAD) and the ViT-TA architecture for modeling situations around traffic accidents. In Section 4, we evaluate the performance of ViT-TA. Section 5 explains how we derived Functional accident scenarios and proposes a framework that automatically generates more concrete and highly objective safety test scenarios. Finally, Section 6 makes concluding remarks.

2. Related Work

In this section, we review research on various methods for evaluating the safety of AVs. AVs have to fulfill safe driving tasks under various operating circumstances. Traditionally, human experts have composed the operational scenarios based on their knowledge and experience. However, experts' subjectivity and intervention can make scenarios non-systematic and impractical [36]. Safety assessment procedures written by human experts cannot wholly and quantitatively cover the range of critical situations [17]. To resolve the shortcomings of manual scenario authoring, various studies have considered alternative approaches based on simulations and AI algorithms. Simulation-based methods can systematically generate scenarios in a scalable manner. Lim et al. [39] simulated AVs to assess their capability of satisfying the minimum safety requirements under various scenarios. Kim et al. [40] evaluated AVs' controllability during fault-injected test simulations. Their study concluded with a list of safety concepts and indicators that should appear in test scenarios. Park et al. [41] developed scenarios for evaluating safety measures during take-over situations on virtually simulated highways. Similarly, Abbas et al. [42] demonstrated various dangerous situations during autonomous driving through a virtual simulator based on the Grand Theft Auto V game. Recently, simulation methods have been studied in conjunction with AI technologies. Strickland et al. [43] used a Bayesian Convolutional LSTM (Conv-LSTM) to predict collision risks in autonomous and assisted driving modes. They generated collision scenarios using traffic simulation tools such as VISSIM and SSAM. Jenkins et al. [10] created accident scenarios by feeding V2X data, such as vehicle speed and direction, to Recurrent Neural Networks. They measured the Kernel Density similarity between the synthesized scenarios and the actual accident data. Fremont et al.
[44] proposed comprehensive scenario-based experiments to generate 1294 safety assessment cases. However, some simulation-based scenarios may not be valid in the real world. Therefore, it is imperative to look into actual accident data as a source of more concrete and realistic scenarios. Yao et al. [45] implemented a method for tracking traffic participants recorded in first-person-view videos to predict potential accidents. Maaloul et al. [46] used a heuristic method based on Farneback Optical Flow to detect motions that can lead to highway accidents. Morales et al. [47] presented a semantic segmentation method for detecting road obstacles in ego-vehicle videos. They computed obstacle trajectories using monocular vision and an extended Kalman filter. Ghahremannezhad et al. [48] devised a real-time road boundary detection method to point out vehicles unsafely crossing the boundaries. Agrawal et al. [49] developed a system that promptly alerts emergency services to traffic accidents detected by a deep neural network. Kim et al. [26] presented a traffic accident detection method based on a Spatio-temporal 3D convolutional neural network that consists of a pre-activation ResNet and a Feature Pyramid Network. These works focused on improving accident detection accuracy and paid less attention to the causality analysis necessary for accident scenario composition. Sui et al. [50] clustered accident reports to obtain six crash scenarios based on the time of the crash, visual obstruction, and the pre-crash behavior of cars and two-wheeled vehicles. Some of the scenarios they discovered resembled those provided by the Euro New Car Assessment Program. Furthermore, the scenarios reflected the unique characteristics of different countries. Holland et al. [51] designed virtual roads on which crashes occurred based on OpenStreetMap and applied an NLP technique to generate crash scenarios from text descriptions of crash events.
The scenarios specified the real features of roads, such as curvature, the number of lanes, and speed limits. Geng et al. [52] constructed an ontology of road elements, traffic participants, and their inter-relationships. They computed the probability of accidents on urban roadways given the ontological information. They claimed that the explicit specification of the inter-relationships can be used to interpret accidents in detail, and that the result of such interpretation can be reflected in safety test scenarios for AVs. Yuan et al. [53] identified nine field variables, such as time, spatial environment, and accident participants, from real accident data to describe 4320 compelling risk scenarios. Given those variables, they used an artificial neural network to compute the probability of accidents. Park et al. [35] mined text reports of accidents on community roads to extract keywords for traffic safety situations. Using an NLP technique and the keywords, they followed a 5-layer format to compose 54 functional scenarios that can be tested on urban arterials and intersections. Lenard et al. [54] studied typical patterns of pedestrian accidents and proposed pattern variation methods to generate scenarios pertaining to less common and severe accident circumstances. These previous research works did not examine accident patterns in the context of AVs. The AI-based methods mentioned above could not automatically pinpoint the objects that caused accidents. Thus, these methods still relied on human experts to determine causality. As mentioned earlier, the correctness of interpretations made by human experts is questionable. Karim et al. [55] proposed the Dynamic Spatial-Temporal Attention (DSTA) model not only for anticipating traffic accidents but also for identifying their probable causes, evaluating it on the Car Crash Dataset (CCD) [56].
While their work proposed an intriguing technical direction for interpreting accident scenes, they did not fine-tune their model to reliably detect the exact points at which critical situations start to develop. None of these works aimed at a method for generating safety test scenarios for AVs. To address the limitations of previous works, we customized an Attention-based Transformer neural network (ViT-TA). Our network is trained to account for the causes of traffic accidents recorded in the Dashcam Accident Dataset [21]. Given accurately pinpointed causes, we can more reliably reflect the interpretation of critical situations in safety test scenarios for AVs. Unlike DSTA, ViT-TA does not restrict its understanding of accidents to pre-recognized surrounding cars. Therefore, ViT-TA can also highlight non-vehicle objects as probable causes of accidents that could otherwise be overlooked by DSTA or human experts. We derived AV-oriented safety test scenarios for moments at which the Time-To-Collision (TTC) is shorter than the typical Perception-Reaction Time (PRT) of human drivers in critical situations. We believe our method can improve the objectivity of safety test scenarios for AVs, as they are based on ViT-TA's accurate accounting of actual accidents.

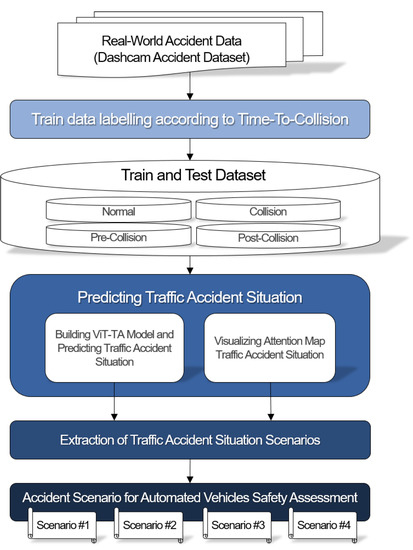

3. Methodology

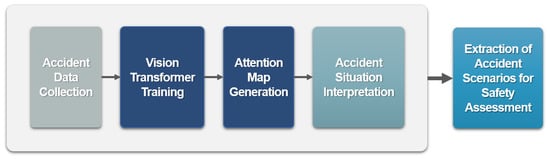

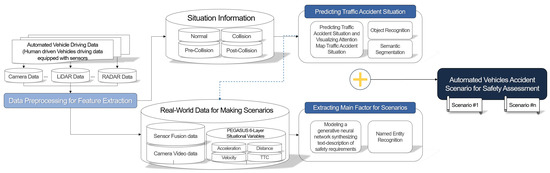

In this section, we present the overall procedure for deriving AV accident scenarios for safety assessment, as shown in Figure 2. The process consists of three steps. First, image frames are extracted from ego-vehicle video clips containing traffic accidents between Target Vehicles (TVs). Each frame is categorized into one of four classes, Normal, Pre-Collision, Collision, and Post-Collision, according to its TTC value, considering the conventional PRT of AVs. Second, a ViT-TA neural network model is fine-tuned to produce Attention maps that visually indicate the critical factors of dangerous situations. Finally, accident scenarios are composed based on the analysis results returned by ViT-TA.

Figure 2.

Framework for deriving AV accident scenarios based on real-world accident data set.

3.1. Dataset

We used the Dashcam Accident Dataset (DAD), which contains 620 First-Person View (FPV) accident videos collected between 2014 and 2016 through dash cameras mounted on HVs in six Taiwanese cities (Taipei, Taichung, Yilan, Tainan, Kaohsiung, and Hsinchu) [21]. The proportions of the accident types are as follows: 42.6% motorbike-to-car accidents, 19.7% car-to-car accidents, 15.6% motorbike-to-motorbike accidents, and 20% other miscellaneous types; 3% of the accidents occurred at nighttime. In addition to accident clips, 1130 normal traffic videos are included. The DAD contains relatively challenging videos showing accidents taking place on complicated roads and crowded streets. We used 455 videos for training and 165 videos for testing. Each 4 s video, recorded at 720p resolution, comprises 100 frames played back at 25 FPS (Frames Per Second); the frames used as model input are 224 × 224 pixels. Note that we assumed the HV recording each FPV video to be an ego vehicle with AV functions.

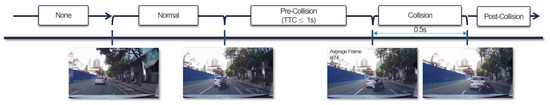

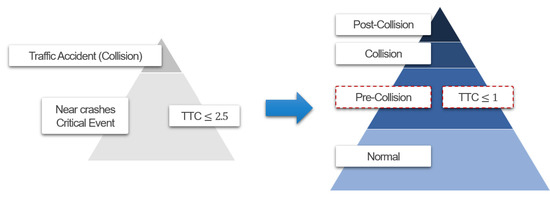

Most previous studies focus mainly on the very moment of the collision itself. As shown in Figure 3, we classified each video frame into one of four classes based on TTC to understand the precursors and aftermaths of accidents. The four classes are the normal situation before an accident (Normal), a situation that can lead to a collision (Pre-Collision), the moment of collision (Collision), and the situation after the crash (Post-Collision). Most studies of HV accidents focus on situations at TTC < 2.5 s, considering the typical PRT of human drivers [57]. PRT is defined as the time interval between the moment an object or situation appears in the driver's view and the driver's first response to it. PRT is divided into four stages: perception, identification, motion, and reaction. The American Association of State Highway and Transportation Officials (AASHTO) also uses a cognitive response time of 2.5 s to design safe roads [58]. However, technical advancements have helped HVs avoid unexpected critical situations much more quickly. For example, most recent vehicles have an automatic transmission that eliminates the overhead of changing forward gears [59,60,61]. Advanced Driver Assistance Systems (ADAS), such as Lane Keeping Assist and Forward Collision-Avoidance Assist, have helped vehicles respond to critical situations with PRT < 2.5 s [62,63,64,65].

Figure 3.

Labeling the DAD video frames with critical situations.

Considering that AVs react more quickly than HVs, we define the Pre-Collision situation as the moment when TTC < 1 s, as shown in Figure 4. We assume that AV passengers are accustomed to the reduced PRT and do not experience fear in near-collision situations. Videos in the DAD mainly show accidents between nearby TVs, not accidents involving the FPV ego vehicles. However, the FPV ego vehicles, which we assumed to be AVs, were well within reach of the dangerously moving TVs at TTC < 1 s. Therefore, the situation around the nearby TVs' accidents can be regarded as a critical situation in which the automated ego vehicles become vulnerable to being involved in another accident as a chain reaction.
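For readers implementing a similar labeling rule, the sketch below shows one common way to obtain TTC: the gap between two vehicles divided by their closing speed, under a constant-speed assumption. The paper does not state its TTC formula, so the constant-speed model and the function names (`time_to_collision`, `is_pre_collision`) are illustrative assumptions; only the 1 s Pre-Collision threshold and the 2.5 s human-driver convention come from the text.

```python
# Sketch: constant-speed TTC and the 1 s Pre-Collision threshold.
# Assumption: the two vehicles move along the same line; the paper does not
# specify how TTC was computed.

PRE_COLLISION_TTC_S = 1.0  # AV-oriented threshold used in this study
HUMAN_PRT_TTC_S = 2.5      # conventional human-driver threshold, for comparison


def time_to_collision(gap_m: float, follower_speed_mps: float,
                      leader_speed_mps: float) -> float:
    """Return TTC in seconds, or infinity when the gap is not closing."""
    closing_speed = follower_speed_mps - leader_speed_mps
    if closing_speed <= 0.0:
        return float("inf")
    return gap_m / closing_speed


def is_pre_collision(ttc_s: float, threshold_s: float = PRE_COLLISION_TTC_S) -> bool:
    """True when a collision is imminent (0 < TTC < threshold) but has not occurred."""
    return 0.0 < ttc_s < threshold_s


if __name__ == "__main__":
    # Hypothetical values: 12 m gap, follower at 20 m/s, leader braking to 5 m/s.
    ttc = time_to_collision(12.0, 20.0, 5.0)  # 12 / 15 = 0.8 s
    print(f"TTC = {ttc:.2f} s")
    print("Pre-Collision (AV, 1 s):", is_pre_collision(ttc))
    print("Critical for a human driver (2.5 s):", is_pre_collision(ttc, HUMAN_PRT_TTC_S))
```

With the same hypothetical speeds, a 30 m gap gives TTC = 2 s: critical under the 2.5 s human-driver convention, but still Normal under the 1 s AV threshold.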

Figure 4.

Accident video frame labeling based on TTC more suitable for understanding critical situations for AVs.

After screening 45,500 frames, we found that Pre-Collision situations started to be captured at the 74th frame on average. Frames from the 25th frame up to the frame just before the Pre-Collision state were labeled Normal. The accident scene lasted 0.5 s on average, so frames more than 0.5 s after the onset of the collision were labeled Post-Collision. We removed the first 24 frames, all labeled Normal, from every video to reduce the imbalance among the four classes in terms of data amount. Hence, the total number of frames for training and testing ViT-TA was 24,740, as shown in Table 1.
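The per-video labeling rule above can be sketched as follows. The sketch assumes that the Pre-Collision onset and collision onset frames of each video are known (for example, from manual screening). The function name `label_frames`, the 1-based frame indexing, and truncating 0.5 s × 25 FPS = 12.5 to 12 Collision frames are our assumptions, since the paper does not say how it rounds.

```python
from collections import Counter
from typing import Dict

FPS = 25                     # DAD playback rate
DROPPED_LEADING_FRAMES = 24  # first 24 (Normal) frames removed to reduce class imbalance
COLLISION_DURATION_S = 0.5   # average duration of the accident scene


def label_frames(num_frames: int, pre_collision_start: int, collision_start: int,
                 fps: int = FPS) -> Dict[int, str]:
    """Map 1-based frame indices to Normal / Pre-Collision / Collision / Post-Collision.

    pre_collision_start and collision_start are hypothetical per-video annotations.
    """
    collision_len = int(COLLISION_DURATION_S * fps)  # 12 frames at 25 FPS (12.5 truncated)
    labels: Dict[int, str] = {}
    for frame in range(DROPPED_LEADING_FRAMES + 1, num_frames + 1):
        if frame < pre_collision_start:
            labels[frame] = "Normal"
        elif frame < collision_start:
            labels[frame] = "Pre-Collision"
        elif frame < collision_start + collision_len:
            labels[frame] = "Collision"
        else:
            labels[frame] = "Post-Collision"
    return labels


if __name__ == "__main__":
    # Hypothetical 100-frame clip: Pre-Collision from frame 61 and collision at
    # frame 86 (25 frames = 1 s later, matching the TTC < 1 s definition).
    labels = label_frames(100, pre_collision_start=61, collision_start=86)
    print(Counter(labels.values()))
```

In this hypothetical clip, 76 of the 100 frames survive the cropping step, split into 36 Normal, 25 Pre-Collision, 12 Collision, and 3 Post-Collision frames.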

Table 1.

The Number of Frames per Class.

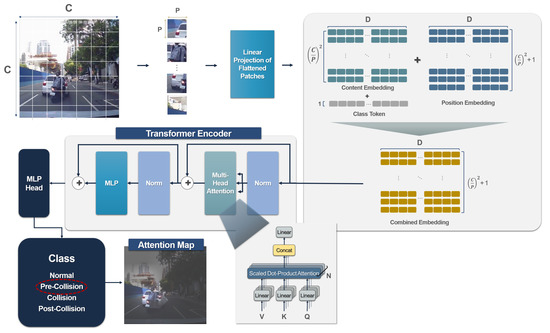

3.2. Vision Transformer-Traffic Accident (ViT-TA)

Vision Transformer (ViT) [66] is a Transformer algorithm [27] applied to images and videos. The original Transformer was designed for Sequence-to-Sequence (seq2seq) modeling based on an Encoder and a Decoder whose layers compute scaled dot-product Attention values. The Attention values represent the importance of a token in converting one sequence to another. For ViT, a token is a patch of image pixels, whereas a token for seq2seq modeling is conventionally a natural language word. In this study, we customized ViT to devise ViT-TA (ViT-Traffic Accident). We first present how ViT-TA computes the embeddings of objects and their positions. ViT-TA dissects a C × C image into (C/P)² non-overlapping P × P patches. The content of each patch is embedded into a latent vector of length D through linear projection. A class-label token is also embedded into a D-length latent vector so that ViT-TA can learn to classify the scene in an image. We refer to the latent vectors constructed through this process as content embeddings. Then, ViT-TA encodes each patch position according to Equation (1), where p is the offset of the patch from the top left-hand corner of the image, D is the length of the encoding vector, and i indexes the dimensions of that vector. The position embedding has the same dimensions as the content embedding. The position encoding plays a crucial role in representing the location of a patch relative to the others. Moreover, the patch positions are essential for interpreting the critical situations leading to accidents.
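To make the shapes concrete, the sketch below computes the number of patches for the configuration reported in Section 4 (C = 224, P = 32, D = 768) and builds a position-encoding table. Equation (1) is not reproduced in this text, so we assume the sinusoidal encoding of the original Transformer [27], PE(p, 2i) = sin(p / 10000^(2i/D)) and PE(p, 2i+1) = cos(p / 10000^(2i/D)); note that the reference ViT [66] learns its position embeddings instead. The three RGB channels per patch and the function names are also assumptions.

```python
import math
from typing import List


def num_patches(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping P x P patches in a C x C image: (C/P)^2."""
    if image_size % patch_size != 0:
        raise ValueError("image size must be divisible by patch size")
    return (image_size // patch_size) ** 2


def sinusoidal_position_encoding(num_positions: int, d_model: int) -> List[List[float]]:
    """Assumed form of Equation (1): sin on even dimensions, cos on odd ones."""
    table = []
    for p in range(num_positions):  # p: raster offset of the patch from the top-left
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share the same frequency
            angle = p / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


if __name__ == "__main__":
    C, P, D = 224, 32, 768           # frame size, patch size, embedding length
    n = num_patches(C, P)            # 7 x 7 = 49 patches
    patch_values = P * P * 3         # 3,072 RGB values flattened per patch
    pe = sinusoidal_position_encoding(n, D)
    print(n, patch_values, len(pe), len(pe[0]))  # 49 3072 49 768
```

Each of the 49 flattened patches (3,072 values) is projected to a 768-length content embedding, and the position table has exactly the same 49 × 768 shape, which is what allows the two to be added element-wise.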

ViT-TA combines the content and position embeddings by vector addition, producing a (C/P)² × D input matrix. ViT-TA feeds the input matrix to the Transformer Encoder block, whose architecture is shown in Figure 5. Each Transformer Encoder block is a network of normalization (Norm), Multi-Head Attention (MHA), and Multi-Layer Perceptron (MLP) blocks. Each Attention Head in the MHA block computes scaled dot-product Attention scores of the image patches according to Equation (2). Q, K, and V are the query, key, and value matrices, obtained by projecting the combined embeddings with learnable, randomly initialized parameter matrices, and d_k is the dimension of the segment of the combined embedding assigned to each of the N Attention Heads.
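For reference, the standard scaled dot-product attention of [27], which we assume Equation (2) follows, is softmax(QKᵀ/√d_k)V. The pure-Python sketch below implements it together with the head split; with D = 768 and N = 12 heads, each head works on d_k = 768 / 12 = 64 dimensions. To keep it short, the learnable projections that produce Q, K, and V (and the output projection) are replaced by the identity, so this illustrates the computation rather than ViT-TA's actual layer.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x k)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return softmax(Q K^T / sqrt(d_k)) V and the attention weights."""
    d_k = len(q[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


def split_heads(x: Matrix, num_heads: int) -> List[Matrix]:
    """Split each D-length token vector into num_heads segments of length D / num_heads."""
    d_k = len(x[0]) // num_heads
    return [[row[h * d_k:(h + 1) * d_k] for row in x] for h in range(num_heads)]


def multi_head_self_attention(x: Matrix, num_heads: int) -> Matrix:
    """Self-attention per head (identity projections), then concatenate the heads."""
    head_outputs = [scaled_dot_product_attention(h, h, h)[0] for h in split_heads(x, num_heads)]
    return [sum((out[r] for out in head_outputs), []) for r in range(len(x))]


if __name__ == "__main__":
    print("d_k for ViT-TA:", 768 // 12)  # 64
    tokens = [[1.0, 0.0, 0.0, 1.0],      # three toy 4-dimensional tokens
              [0.0, 1.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 0.0]]
    result = multi_head_self_attention(tokens, num_heads=2)
    print(len(result), "x", len(result[0]))  # 3 x 4
```

Because each head only sees its own d_k-wide slice, the heads can be computed independently and in parallel, which is the property the text relies on when comparing training speed with RNNs.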

Figure 5.

Processing of accident scenes through Vision Transformer for Traffic Accidents (ViT-TA).

ViT-TA computes Attention scores in parallel and feeds the normalized output of every Attention Head to the MLP, which uses the Gaussian Error Linear Unit (GELU) as its non-linear activation function. We stripped the decoder block from the original Transformer and let the MLP determine the class of a given image. During training, the parameters used to compute Q, K, and V converge, and the Transformer Encoder block learns to associate the computed Attention scores of patches with the classification result of the MLP. Upon detecting critical situations, we regard the objects appearing in the image patches with high Attention scores as the probable causes of accidents. Note that we did not have to encode the sequence of image frames explicitly using RNNs such as LSTM, which many previous traffic studies favored. Instead, the explicit class labeling and the position encoding prevent ViT-TA from becoming confused about the proper order of transitions during critical situations. Because the MHA in ViT-TA computes rich semantic representations of image frames in parallel, ViT-TA also has a training speed advantage over RNNs.
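The paper does not specify how the per-patch scores behind its Attention maps are aggregated. One common choice for ViT, assumed here, is to take the attention weights from the class token to each patch and average them over heads; attention rollout across layers is an alternative. The hypothetical helper `top_attention_patches` ranks the patches and converts raster indices into (row, column) grid positions, which is how high-scoring patches could be mapped back to the image regions containing probable causes.

```python
from typing import List, Tuple


def top_attention_patches(cls_attention: List[List[float]], grid_size: int,
                          k: int = 3) -> List[Tuple[int, int, float]]:
    """Return (row, col, score) for the k patches receiving the most attention.

    cls_attention[h][p] is the weight from the class token to patch p in head h;
    patches are numbered in raster order from the top-left corner of the frame.
    """
    num_heads = len(cls_attention)
    num_patches = grid_size * grid_size
    # Average over heads to obtain one score per patch.
    mean_scores = [sum(head[p] for head in cls_attention) / num_heads
                   for p in range(num_patches)]
    ranked = sorted(range(num_patches), key=lambda p: mean_scores[p], reverse=True)
    return [(p // grid_size, p % grid_size, mean_scores[p]) for p in ranked[:k]]


if __name__ == "__main__":
    # Hypothetical 3 x 3 grid with two heads (ViT-TA's grid would be 7 x 7).
    head0 = [0.05, 0.05, 0.05, 0.05, 0.40, 0.30, 0.05, 0.03, 0.02]
    head1 = [0.02, 0.03, 0.05, 0.05, 0.50, 0.20, 0.05, 0.05, 0.05]
    print(top_attention_patches([head0, head1], grid_size=3, k=2))  # patches (1, 1) and (1, 2)
```

Multiplying a (row, column) position by the patch size P gives the pixel region of the frame to inspect for the highlighted object.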

4. Evaluation of ViT-TA Model

We preprocessed DAD with OpenCV (v4.5) and implemented ViT-TA with Python (v3.9.7) and TensorFlow (v2.6.0). We empirically configured the ViT-TA structure to produce an optimal model, as shown in Table 2 and Table 3. The Dense network at the end is connected to a Softmax layer to classify the scene in an image frame. We set the patch size to 32 × 32. The Transformer Encoder had a total of 12 Attention Heads. The length (D) of the combined embedding of each patch was 768. The set of 24,740 video frames from DAD was divided into training and test data at a ratio of 8:2. We varied the batch size among 16, 32, and 64. For regularization and better generalization, we used Categorical Cross Entropy as the loss function with label smoothing set to 0.2 [67]. For optimization, we employed Rectified Adam (RAdam) [68], which addresses the limitations of Adaptive Moment Estimation (Adam). We iterated the training process for up to 1000 epochs with early stopping enabled. We trained and evaluated ViT-TA on a Windows 10 deep learning machine with an Intel(R) Xeon(R) Gold 5220R CPU, 192 GB of RAM, and an NVIDIA GeForce RTX 3090 GPU. The resulting fine-tuned model has a total of 87,466,855 parameters.
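Label smoothing replaces each one-hot target y with (1 − ε)·y + ε/K, where K is the number of classes; this is the formula applied by the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`. The pure-Python sketch below, with hypothetical function names, shows what ε = 0.2 does to a four-class target: the true class keeps 0.85 and each other class receives 0.05, so the model is never pushed towards fully saturated probabilities.

```python
import math
from typing import List


def smooth_labels(one_hot: List[float], smoothing: float) -> List[float]:
    """Label smoothing: (1 - eps) * y + eps / K for each of the K classes."""
    k = len(one_hot)
    return [(1.0 - smoothing) * y + smoothing / k for y in one_hot]


def categorical_cross_entropy(target: List[float], probs: List[float],
                              eps: float = 1e-12) -> float:
    """-sum_k t_k * log(p_k); probabilities are clipped away from 0 before log."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, probs))


if __name__ == "__main__":
    # Classes: Normal, Pre-Collision, Collision, Post-Collision; true class = Collision.
    target = smooth_labels([0.0, 0.0, 1.0, 0.0], smoothing=0.2)
    print("smoothed target:", [round(t, 2) for t in target])
    confident = [0.01, 0.01, 0.97, 0.01]   # hypothetical Softmax outputs
    calibrated = [0.05, 0.05, 0.85, 0.05]
    print("loss (confident): ", categorical_cross_entropy(target, confident))
    print("loss (calibrated):", categorical_cross_entropy(target, calibrated))
```

With smoothing enabled, the calibrated prediction attains a lower loss than the over-confident one, which is the regularizing effect label smoothing is meant to provide.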

Table 2.

Configuration of ViT-TA Structure.

Table 3.

Configuration of ViT-TA Training.

For the evaluation of the model, we use the following metrics: (1) accuracy (Equation (3)); (2) precision (Equation (4)); (3) recall (Equation (5)); and (4) F1-score (Equation (6)), which combines precision and recall. A True Positive (TP) is an outcome where the model correctly predicts the positive class. A True Negative (TN) is an outcome where the model correctly predicts the negative class. A False Positive (FP) is an outcome where the model incorrectly predicts the positive class. A False Negative (FN) is an outcome where the model incorrectly predicts the negative class.
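Equations (3) to (6) are not reproduced in this text; the sketch below assumes their standard one-vs-rest definitions, accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = 2·precision·recall/(precision + recall), computed per class from a four-class confusion matrix. The confusion matrix in the example is made up for illustration and is not the one reported for ViT-TA.

```python
from typing import Dict, List

CLASSES = ["Normal", "Pre-Collision", "Collision", "Post-Collision"]


def per_class_metrics(cm: List[List[int]]) -> Dict[str, Dict[str, float]]:
    """cm[i][j] = number of frames of true class i predicted as class j."""
    n = len(cm)
    total = sum(map(sum, cm))
    results = {}
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                       # actually c, predicted another class
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[CLASSES[c]] = {"accuracy": (tp + tn) / total, "precision": precision,
                               "recall": recall, "f1": f1}
    return results


def overall_accuracy(cm: List[List[int]]) -> float:
    """Fraction of all frames on the diagonal of the confusion matrix."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


if __name__ == "__main__":
    # Hypothetical counts: some Pre-Collision frames predicted as Collision.
    cm = [[50, 0, 0, 0],
          [0, 40, 10, 0],
          [0, 0, 45, 5],
          [0, 0, 0, 50]]
    print("overall accuracy:", overall_accuracy(cm))  # 185 / 200
    for name, m in per_class_metrics(cm).items():
        print(name, {k: round(v, 3) for k, v in m.items()})
```

In the example, the Pre-Collision frames mistaken for Collision lower the Pre-Collision recall and the Collision precision, while the Normal row and column stay perfect.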

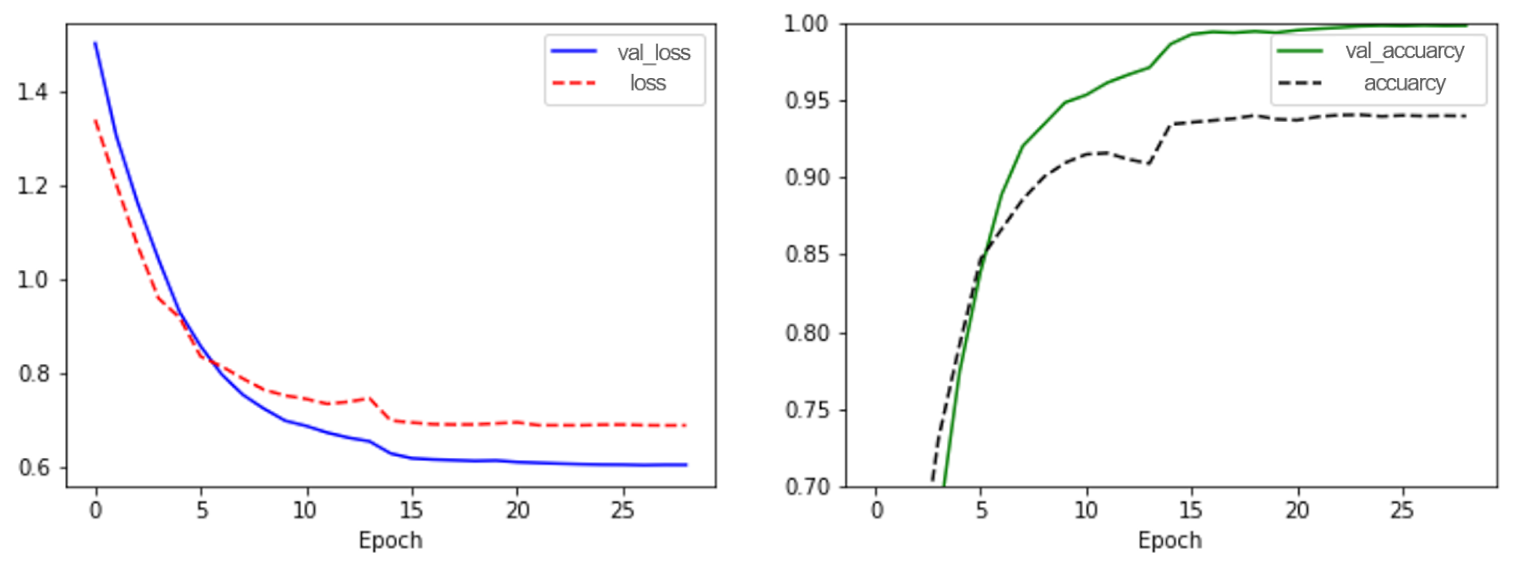

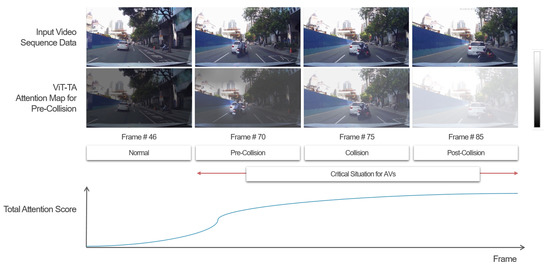

Training continued until the validation accuracy reached a peak of 99%, as shown in Table 4. After training, ViT-TA achieved its highest test accuracy of 94% with a batch size of 64. According to the confusion matrix, ViT-TA never misclassified Collisions as Normal situations. A small portion of Pre-Collision situations was falsely identified as Collisions. Despite a few FPs and FNs, the Attention maps still allowed us to identify the precursors of crashes, as shown in Figure 6.

Table 4.

ViT-TA learning Result.

Figure 6.

Illustrating the critical situation with Attention maps and scores generated by ViT-TA.

Attention scores of the image patches are visualized as transparency: high transparency indicates a high Attention score. For Normal situations, the entire image was nearly opaque, as the Attention scores of the patches were universally low. When a Pre-Collision situation was detected, ViT-TA pinned a focal point on the objects involved in the collision, as shown in Frame #70 in Figure 6. Interestingly, the focal point fades out over the subsequent frames that eventually contain the scenes of Collision and Post-Collision. Starting from the Pre-Collision state, the total Attention scores of the image frames continuously increased until they reached their peak at Post-Collision (Frame #85). This visually salient transition of the Attention scores over the frames, together with the critical objects highlighted at the Pre-Collision state, helped us understand how the situation unfolded. Karim et al. (2022) [55] also trained DSTA with the DAD to point out the entities involved in a collision. Karim et al. set an arbitrary threshold of crash probability exceeding 50% to identify a critical point. With such a threshold, the mean TTC was approximately 4.5 s. However, according to our in-depth screening of the DAD videos, the critical moments actually occurred mainly at TTC ≤ 1 s. The Perception-Reaction Times (PRTs) of human drivers and AVs are typically 2.5 s and 1.5 s, respectively. Considering that these PRTs are significantly shorter than the 4.5 s mean TTC, the credibility and usefulness of the arbitrary threshold set for DSTA are questionable. Focusing on the critical moments at TTC < 1 s, ViT-TA achieved an average accuracy 34.92% higher than that of DSTA. As introduced earlier, DSTA recognizes cars prior to classifying the video scenes. However, we argue that such object recognition should be performed afterward, especially when accident scenario writing is a concern, because DSTA can overlook objects outside its recognized categories that are crucial factors in accidents.
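How a detection threshold determines the reported TTC can be illustrated with a short sketch: given per-frame crash probabilities, the critical point is the first frame whose probability exceeds the threshold, and the TTC is the remaining time to the collision frame at 25 FPS. The function name and the probability curves are hypothetical; they only show how an early threshold crossing yields a TTC of several seconds even when the truly critical moment lies within the last second.

```python
from typing import Optional, Sequence

FPS = 25  # DAD playback rate


def ttc_at_threshold(crash_probs: Sequence[float], collision_frame: int,
                     threshold: float = 0.5, fps: int = FPS) -> Optional[float]:
    """Seconds from the first frame whose crash probability exceeds `threshold`
    to the collision frame (0-based indices); None if it is never exceeded."""
    for frame, prob in enumerate(crash_probs):
        if prob > threshold:
            return (collision_frame - frame) / fps
    return None


if __name__ == "__main__":
    # Hypothetical curve for a 100-frame clip with the collision at frame 90:
    # the model becomes "confident" early (frame 15) and stays above 0.5.
    probs = [0.2] * 15 + [0.6] * 85
    print(ttc_at_threshold(probs, collision_frame=90))                 # 3.0
    print(ttc_at_threshold(probs, collision_frame=90, threshold=0.9))  # None
```

Under the same 0.5 threshold, a curve that only rises at frame 70 would yield a TTC of 0.8 s, inside the AV-relevant window; the threshold, not the scene, largely decides which moment is labeled critical.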
Removing this bias by not restricting ViT-TA to vehicles was another reason that ViT-TA outperformed DSTA.

5. Composition of Vulnerable Traffic Accident Scenarios Based on ViT-TA

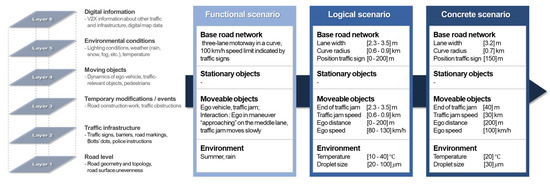

In this section, we share our experience of composing a safety assessment scenario for AVs based on the preliminary analysis results returned by ViT-TA. Research projects such as PEGASUS [38], CETRAN [69], and ENABLE-S3 [70] have studied AV safety scenarios. In particular, through the PEGASUS project, academic researchers and industry partners, such as BMW and Audi, systematized methods and requirements for the safety of autonomous driving functions. In this study, we defined a template for composing what PEGASUS refers to as Functional scenarios, which describe critical situations at a high level, as shown in Figure 7 [37]. The Functional scenarios specify the AV functions to be evaluated under certain circumstances expressed with 6-Layer information: road level, traffic infrastructure, events, objects, environment, and digital information.

Figure 7.

Functional, Logical, and Concrete scenarios defined by PEGASUS based on 6-Layer information.

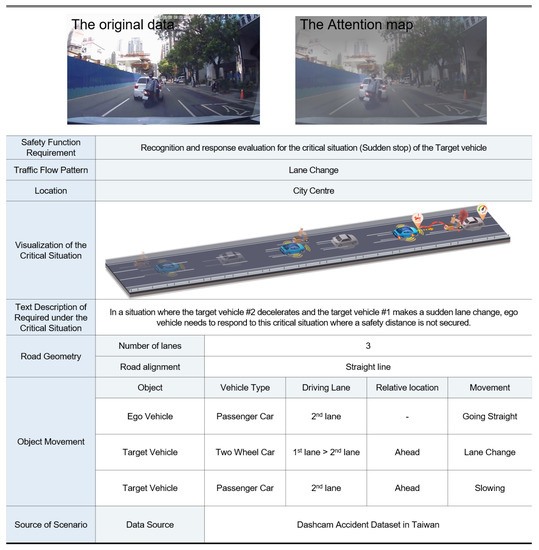

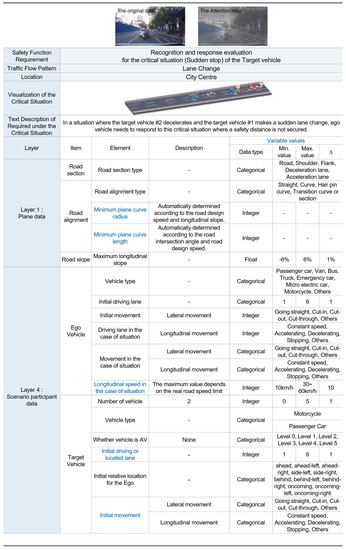

In the following, we explain each section of the sample Functional scenario illustrated in Figure 8. The Safety Function Requirement section states the objective of the safety evaluation; for instance, the goal may be to test how AVs respond to the sudden stopping of target vehicles ahead. The Traffic Flow Pattern section describes how the target vehicles moved, for example, by changing lanes. The Location section specifies where the critical situation took place. The Visualization section illustrates the critical situation. The template also offers an area for a full-text description of the safety functions required under the critical situation. The Road Geometry section describes road-level information such as the number of lanes and road alignment. The Object Movement section describes the maneuvers of the objects involved in the critical situation, specifying details such as vehicle types, the driving lanes of the vehicles, and the locations of the target vehicles relative to the ego vehicle. Finally, the raw snapshot of the scene showing the critical situation and its Attention map is attached at the top of the scenario template to highlight the factors contributing to the critical situation. Examples of the Attention maps for some of the critical situations recorded in the DAD are shown in Figure 8.
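The template sections can be captured in a simple record type so that scenarios are stored and rendered consistently. The sketch below is hypothetical: the class and field names merely mirror the section names described above, and the example values loosely paraphrase the first case discussed in the following paragraphs (a two-wheeled vehicle cutting in ahead).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FunctionalScenario:
    """Hypothetical record mirroring the sections of the Functional scenario template."""
    safety_function_requirement: str
    traffic_flow_pattern: str
    location: str
    visualization: str        # path to a sketch of the critical situation
    description: str          # full-text description of the required safety functions
    road_geometry: str        # e.g., number of lanes, road alignment
    object_movements: List[str] = field(default_factory=list)
    attention_snapshot: str = ""  # raw frame plus its ViT-TA Attention map

    def to_text(self) -> str:
        """Render the scenario as plain text, one section per line."""
        sections = [
            ("Safety Function Requirement", self.safety_function_requirement),
            ("Traffic Flow Pattern", self.traffic_flow_pattern),
            ("Location", self.location),
            ("Visualization", self.visualization),
            ("Description", self.description),
            ("Road Geometry", self.road_geometry),
            ("Object Movement", "; ".join(self.object_movements)),
            ("Attention Snapshot", self.attention_snapshot),
        ]
        return "\n".join(f"{name}: {value}" for name, value in sections)


if __name__ == "__main__":
    scenario = FunctionalScenario(
        safety_function_requirement="Respond to a sudden accident between Target Vehicles ahead",
        traffic_flow_pattern="Two-wheeled vehicle cuts in",
        location="Urban road, daytime",
        visualization="case1_sketch.png",  # hypothetical file name
        description="The AV must detect the cut-in and avoid a chain-reaction collision.",
        road_geometry="Straight road; collision in the third lane",
        object_movements=["TV1 (two-wheeled): cuts in", "TV2 (car): slows down ahead"],
        attention_snapshot="case1_attention.png",  # hypothetical file name
    )
    print(scenario.to_text())
```

Keeping the sections as typed fields, rather than free text, makes it straightforward to later attach the value ranges of a Logical scenario to the same record.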

Figure 8.

Critical situations highlighted by ViT-TA Attention maps for extracting Functional AV scenarios.

The first case shows a two-wheeled vehicle suddenly cutting in and crashing into the car ahead, which was slowing down in the third lane of a straight road, during the daytime. The second case shows a car suddenly switching lanes and colliding with another vehicle in the first lane during the nighttime. The third case shows an accident ahead caused by a car that ran a red light. The last case shows a collision between two vehicles ahead that were simultaneously crossing lanes on a curved road during the nighttime. ViT-TA was able to pinpoint the objects involved in the collisions. We can regard the highlighted objects as likely to create a critical situation for the ego vehicle with AV functions. The visual cues provided by ViT-TA can help safety inspectors describe Functional accident scenarios. We can then plan a test that evaluates the AV's capability of recognizing and evading the critical situation described in the Functional scenario. Figure 9 shows a Functional scenario describing the first case shown in Figure 8.

Figure 9.

Functional Scenario #1: Evaluation plan for the sudden accident between Target Vehicles ahead.

However, the Functional scenarios do not provide the configuration of situational values necessary to define a precise and concrete safety evaluation plan. The PEGASUS project introduced Logical and Concrete scenarios that specify the ranges and the initial values of the situational variables, respectively, as shown in Figure 7. It was not feasible to extract the exact values of situational variables such as TTC, vehicle speed, acceleration, the distance between objects, lane numbers, and object trajectories from 2D camera footage. Values of the situational variables can be obtained from 3D point cloud data recorded by depth sensors such as LiDAR. Given such depth data, we propose a reference framework for automating the writing of Logical and Concrete scenarios with minimal human intervention, as illustrated in Figure 10 and Figure 11.
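In PEGASUS terms, a Logical scenario fixes a range for each situational variable and a Concrete scenario picks one value per variable. The sketch below illustrates that relationship; the variable names and ranges are invented for illustration (in the proposed framework they would be derived from depth data such as LiDAR point clouds), and uniform sampling with a seeded `random.Random` is just one possible choice of sampling strategy.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LogicalScenario:
    """Parameter ranges (min, max) for situational variables (hypothetical names)."""
    ranges: Dict[str, Tuple[float, float]]

    def sample_concrete(self, rng: random.Random) -> Dict[str, float]:
        """Draw one Concrete scenario: a single value for every variable."""
        return {name: rng.uniform(lo, hi) for name, (lo, hi) in self.ranges.items()}

    def sample_many(self, n: int, seed: int = 0) -> List[Dict[str, float]]:
        """Draw n reproducible Concrete scenarios from a seeded generator."""
        rng = random.Random(seed)
        return [self.sample_concrete(rng) for _ in range(n)]


if __name__ == "__main__":
    cut_in = LogicalScenario(ranges={
        "ego_speed_kph": (40.0, 60.0),
        "tv_speed_kph": (20.0, 40.0),
        "initial_gap_m": (5.0, 20.0),
        "cut_in_lateral_speed_mps": (0.5, 2.0),
    })
    for concrete in cut_in.sample_many(3, seed=42):
        print({k: round(v, 1) for k, v in concrete.items()})
```

Seeding the generator makes every Concrete scenario reproducible, which matters when a failed test must be re-run with exactly the same initial values.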

Figure 10.

Framework for automation of Logical and Concrete scenarios.

Figure 11.

An example of a Logical and Concrete Scenario for AV safety assessment.

We first extract features from the data collected by the sensors on AVs. We then train ViT-TA with the feature embeddings of Normal, Pre-collision, Collision, and Post-collision situations. After training, ViT-TA detects and highlights the objects contributing to critical situations. The types and geometry of the highlighted objects can then be inferred through object recognition [71], semantic segmentation, and image classification techniques [72,73]. Once the critical objects first appear, the frames from that point up to the Post-collision point are processed to compute the values of the situational variables, such as the speed and direction of the vehicles and the distance between critical objects. These variables can be randomly varied to generate scenario variations that cover peculiar AV accident patterns not typically seen in HV accidents [74]. Finally, text generation techniques [51] can be adopted to write the natural language description of the safety function required in the critical situation. Understanding textual accident reports requires recognizing named entities and their inter-relations, which can be mined with various machine learning techniques [75,76]. We expect such a framework to minimize human intervention and yield highly objective scenarios for the safety assessment of AVs. To further improve the credibility of the scenarios, we plan to employ recent statistical explanation techniques in addition to Transformer-based visual interpretation approaches [25,26,77,78].
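The per-frame computation of situational variables in this framework could look roughly like the sketch below. It assumes that each critical object has already been tracked as a sequence of ground-plane positions (for example, centroids of LiDAR point clusters) sampled every `dt` seconds. The helper names are hypothetical, and the constant-closing-speed TTC is a common simplification rather than the definition used in this paper.

```python
import math
from typing import List, Optional, Tuple

# (x, y) ground-plane position in metres, e.g. an object centroid from a LiDAR track.
Point = Tuple[float, float]


def speed_and_heading(track: List[Point], dt: float) -> Tuple[float, float]:
    """Speed (m/s) and heading (degrees from the +x axis, counter-clockwise),
    estimated by a finite difference over the last two frames."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return math.hypot(vx, vy), math.degrees(math.atan2(vy, vx))


def time_to_collision(ego: List[Point], other: List[Point], dt: float) -> Optional[float]:
    """TTC = current gap / closing speed, assuming the closing speed stays constant.

    Uses centre-to-centre distance, so vehicle extents are ignored.
    Returns None when the gap is not shrinking.
    """
    gap_prev = math.dist(ego[-2], other[-2])
    gap_now = math.dist(ego[-1], other[-1])
    closing_speed = (gap_prev - gap_now) / dt
    if closing_speed <= 0:
        return None
    return gap_now / closing_speed


# Ego moves 1 m per 0.1 s frame toward a stationary vehicle 10 m ahead.
dt = 0.1
ego_track = [(0.0, 0.0), (1.0, 0.0)]
lead_track = [(11.0, 0.0), (11.0, 0.0)]
speed, heading = speed_and_heading(ego_track, dt)
ttc = time_to_collision(ego_track, lead_track, dt)
gap = math.dist(ego_track[-1], lead_track[-1])
print(f"speed={speed:.1f} m/s, heading={heading:.1f} deg, gap={gap:.1f} m, TTC={ttc:.2f} s")
# speed=10.0 m/s, heading=0.0 deg, gap=10.0 m, TTC=1.00 s
```

Two-frame differences are sensitive to sensor noise; smoothing the tracks (for example, with a Kalman filter) before differencing would be a natural refinement.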

6. Conclusions

In this paper, we presented a custom Vision Transformer (ViT-TA) that automatically points out clues in videos of critical situations leading to potential traffic accidents. Trained with 24,740 frames from the Dashcam Accident Dataset (DAD), ViT-TA classified video scenes with an average F1-score of 94%. In detecting the critical moment at TTC ≤ 1 s, a threshold chosen in view of AVs’ typical Perception–Reaction Time (PRT), ViT-TA’s average accuracy was 34.92% higher than that of DSTA. While most previous AI-based methods focus on detecting accidents, ViT-TA’s attention map, which highlights the critical objects, helped us understand how the situations unfolded to put hypothetical ego vehicles with AV functions at risk. Based on the ViT-TA-assisted interpretation, we demonstrated the composition of Functional scenarios, as conceptualized by the PEGASUS project, to describe a high-level plan for testing AVs’ capability of evading critical situations. Compared with manual composition by humans, our approach can improve the objectivity and trustworthiness of Functional scenarios. By using actual accident data, we can derive plausible scenarios that simulation-based methods may not foresee. However, from 2D video frames alone, we could not derive Logical and Concrete scenarios specified with the values of the situational variables defined as 6-Layer information by the PEGASUS project, such as TTC, vehicle speed, direction, and distance between objects. These situational variables can be initialized by analyzing depth information captured by sensors such as LiDAR. We laid out a framework for automating the scenario authoring process that combines ViT-TA modeling of LiDAR data, object recognition algorithms, and generative adversarial networks that synthesize text descriptions of the safety function requirements in Logical and Concrete scenarios.
Our study is a significant step toward generating highly reliable safety improvement plans for AVs.

Author Contributions

Conceptualization, Y.Y., M.K. and W.L.; methodology, W.L. and Y.Y.; software, W.L. and M.K.; validation, W.L. and M.K.; formal analysis, W.L. and M.K.; investigation, W.L. and M.K.; resources, W.L.; data curation, W.L.; writing—original draft preparation, W.L.; writing—review and editing, Y.Y. and M.K.; visualization, M.K.; supervision, K.H. and Y.Y.; project administration, K.H. and Y.Y.; funding acquisition, K.H. and Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a Korea Agency for Infrastructure Technology Advancement (KAIA) grant funded by the Ministry of Land, Infrastructure, and Transport (Grant 22AMDP-C161754-02).

Data Availability Statement

This work used the Dashcam Accident Dataset (DAD), available at https://aliensunmin.github.io/project/dashcam/ (accessed on 29 June 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kim, B.J.; Lee, S.B. Safety Evaluation of Autonomous Vehicles for a Comparative Study of Camera Image Distance Information and Dynamic Characteristics Measuring Equipment. IEEE Access 2022, 10, 18486–18506.

- Wang, Y.; Wang, L.; Guo, J.; Papamichail, I.; Papageorgiou, M.; Wang, F.Y.; Bertini, R.; Hua, W.; Yang, Q. Ego-efficient lane changes of connected and automated vehicles with impacts on traffic flow. Transp. Res. Part C Emerg. Technol. 2022, 138, 103478.

- SAE. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; SAE International: Warrendale, PA, USA, 2018.

- U.S. Department of Transportation. Vision for Safety 2.0 Guidance for Automated Vehicles; National Highway Traffic Safety Administration: Washington, DC, USA, 2017.

- Masmoudi, M.; Ghazzai, H.; Frikha, M.; Massoud, Y. Object detection learning techniques for autonomous vehicle applications. In Proceedings of the 2019 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Cairo, Egypt, 4–6 September 2019; pp. 1–5.

- Luettel, T.; Himmelsbach, M.; Wuensche, H.J. Autonomous ground vehicles—Concepts and a path to the future. Proc. IEEE 2012, 100, 1831–1839.

- Zhao, L.; Chai, H.; Han, Y.; Yu, K.; Mumtaz, S. A collaborative V2X data correction method for road safety. IEEE Trans. Reliab. 2022, 71, 951–962.

- Boggs, A.M.; Arvin, R.; Khattak, A.J. Exploring the who, what, when, where, and why of automated vehicle disengagements. Accid. Anal. Prev. 2020, 136, 105406.

- Song, Y.; Chitturi, M.V.; Noyce, D.A. Automated vehicle crash sequences: Patterns and potential uses in safety testing. Accid. Anal. Prev. 2021, 153, 106017.

- Jenkins, I.R.; Gee, L.O.; Knauss, A.; Yin, H.; Schroeder, J. Accident scenario generation with recurrent neural networks. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3340–3345.

- Arena, F.; Pau, G.; Severino, A. V2X communications applied to safety of pedestrians and vehicles. J. Sens. Actuator Netw. 2019, 9, 3.

- Wang, J.; Shao, Y.; Ge, Y.; Yu, R. A survey of vehicle to everything (V2X) testing. Sensors 2019, 19, 334.

- Palin, R.; Ward, D.; Habli, I.; Rivett, R. ISO 26262 Safety Cases: Compliance and Assurance. International Standard ISO/FDIS 26262; ISO: Berlin, Germany, 2011.

- Riedmaier, S.; Ponn, T.; Ludwig, D.; Schick, B.; Diermeyer, F. Survey on scenario-based safety assessment of automated vehicles. IEEE Access 2020, 8, 87456–87477.

- Hallerbach, S.; Xia, Y.; Eberle, U.; Koester, F. Simulation-based identification of critical scenarios for cooperative and automated vehicles. SAE Int. J. Connect. Autom. Veh. 2018, 1, 93–106.

- Ulbrich, S.; Menzel, T.; Reschka, A.; Schuldt, F.; Maurer, M. Defining and substantiating the terms scene, situation, and scenario for automated driving. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 982–988.

- Erdogan, A.; Ugranli, B.; Adali, E.; Sentas, A.; Mungan, E.; Kaplan, E.; Leitner, A. Real-world maneuver extraction for autonomous vehicle validation: A comparative study. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 267–272.

- Kang, M.; Song, J.; Hwang, K. For Preventative Automated Driving System (PADS): Traffic Accident Context Analysis Based on Deep Neural Networks. Electronics 2020, 9, 1829.

- Favarò, F.M.; Nader, N.; Eurich, S.O.; Tripp, M.; Varadaraju, N. Examining accident reports involving autonomous vehicles in California. PLoS ONE 2017, 12, e0184952.

- Lee, W.; Kang, M.H.; Song, J.; Hwang, K. The Design of Preventive Automated Driving Systems Based on Convolutional Neural Network. Electronics 2021, 10, 1737.

- Chan, F.H.; Chen, Y.T.; Xiang, Y.; Sun, M. Anticipating accidents in dashcam videos. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 136–153.

- Demetriou, A.; Allsvåg, H.; Rahrovani, S.; Chehreghani, M.H. Generation of driving scenario trajectories with generative adversarial networks. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

- Virdi, J. Using Deep Learning to Predict Obstacle Trajectories for Collision Avoidance in Autonomous Vehicles; University of California: San Diego, CA, USA, 2018.

- Das, A.; Rad, P. Opportunities and challenges in explainable artificial intelligence (XAI): A survey. arXiv 2020, arXiv:2006.11371.

- Wang, P.Y.; Galhotra, S.; Pradhan, R.; Salimi, B. Demonstration of generating explanations for black-box algorithms using Lewis. Proc. VLDB Endow. 2021, 14, 2787–2790.

- Kim, Y.J.; Yoon, Y. Speed Prediction and Analysis of Nearby Road Causality Using Explainable Deep Graph Neural Network. J. Korea Converg. Soc. 2022, 13, 51–62.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Di Gangi, M.A.; Negri, M.; Cattoni, R.; Dessi, R.; Turchi, M. Enhancing transformer for end-to-end speech-to-text translation. In Proceedings of the Machine Translation Summit XVII: Research Track, Dublin, Ireland, 19–23 August 2019; pp. 21–31.

- Kano, T.; Sakti, S.; Nakamura, S. Transformer-based direct speech-to-speech translation with transcoder. In Proceedings of the 2021 IEEE Spoken Language Technology Workshop (SLT), Shenzhen, China, 19–22 January 2021; pp. 958–965.

- Chen, L.; Lu, K.; Rajeswaran, A.; Lee, K.; Grover, A.; Laskin, M.; Abbeel, P.; Srinivas, A.; Mordatch, I. Decision transformer: Reinforcement learning via sequence modeling. Adv. Neural Inf. Process. Syst. 2021, 34, 15084–15097.

- Parisotto, E.; Song, F.; Rae, J.; Pascanu, R.; Gulcehre, C.; Jayakumar, S.; Jaderberg, M.; Kaufman, R.L.; Clark, A.; Noury, S.; et al. Stabilizing transformers for reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 7487–7498.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. ViViT: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 6836–6846.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578.

- Park, S.; Park, S.; Jeong, H.; Yun, I.; So, J. Scenario-mining for level 4 automated vehicle safety assessment from real accident situations in urban areas using a natural language process. Sensors 2021, 21, 6929.

- Menzel, T.; Bagschik, G.; Maurer, M. Scenarios for development, test and validation of automated vehicles. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1821–1827.

- Menzel, T.; Bagschik, G.; Isensee, L.; Schomburg, A.; Maurer, M. From functional to logical scenarios: Detailing a keyword-based scenario description for execution in a simulation environment. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2383–2390.

- Audi AG; Volkswagen AG. Pegasus Method: An Overview. Available online: https://www.pegasusprojekt.de/files/tmpl/Pegasus-Abschlussveranstaltung/PEGASUS-Gesamtmethode.pdf (accessed on 7 May 2022).

- Lim, H.; Chae, H.; Lee, M.; Lee, K. Development and validation of safety performance evaluation scenarios of autonomous vehicle based on driving data. J. Auto-Veh. Saf. Assoc. 2017, 9, 7–13.

- Kim, H.; Park, S.; Paik, J. Pre-Activated 3D CNN and Feature Pyramid Network for Traffic Accident Detection. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2020; pp. 1–3.

- Park, S.; Jeong, H.; Kwon, C.; Kim, J.; Yun, I. Analysis of Take-over Time and Stabilization of Autonomous Vehicle Using a Driving Simulator. J. Korea Inst. Intell. Transp. Syst. 2019, 18, 31–43.

- Abbas, H.; O’Kelly, M.; Rodionova, A.; Mangharam, R. Safe at any speed: A simulation-based test harness for autonomous vehicles. In Proceedings of the International Workshop on Design, Modeling, and Evaluation of Cyber Physical Systems, Seoul, Korea, 15–20 October 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 94–106.

- Strickland, M.; Fainekos, G.; Amor, H.B. Deep predictive models for collision risk assessment in autonomous driving. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4685–4692.

- Fremont, D.J.; Kim, E.; Pant, Y.V.; Seshia, S.A.; Acharya, A.; Bruso, X.; Wells, P.; Lemke, S.; Lu, Q.; Mehta, S. Formal scenario-based testing of autonomous vehicles: From simulation to the real world. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–8.

- Yao, Y.; Xu, M.; Wang, Y.; Crandall, D.J.; Atkins, E.M. Unsupervised traffic accident detection in first-person videos. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 273–280.

- Maaloul, B.; Taleb-Ahmed, A.; Niar, S.; Harb, N.; Valderrama, C. Adaptive video-based algorithm for accident detection on highways. In Proceedings of the 2017 12th IEEE International Symposium on Industrial Embedded Systems (SIES), Toulouse, France, 14–16 June 2017; pp. 1–6.

- Morales Rosales, L.A.; Algredo Badillo, I.; Hernández Gracidas, C.A.; Rangel, H.R.; Lobato Báez, M. On-road obstacle detection video system for traffic accident prevention. J. Intell. Fuzzy Syst. 2018, 35, 533–547.

- Ghahremannezhad, H.; Shi, H.; Liu, C. A real time accident detection framework for traffic video analysis. In Proceedings of the 16th International Conference on Machine Learning and Data Mining, New York, NY, USA, 18–23 July 2020; pp. 77–92.

- Agrawal, A.K.; Agarwal, K.; Choudhary, J.; Bhattacharya, A.; Tangudu, S.; Makhija, N.; Rajitha, B. Automatic traffic accident detection system using ResNet and SVM. In Proceedings of the 2020 Fifth International Conference on Research in Computational Intelligence and Communication Networks (ICRCICN), Online, 26–27 November 2020; pp. 71–76.

- Sui, B.; Lubbe, N.; Bärgman, J. A clustering approach to developing car-to-two-wheeler test scenarios for the assessment of Automated Emergency Braking in China using in-depth Chinese crash data. Accid. Anal. Prev. 2019, 132, 105242.

- Holland, J.C.; Sargolzaei, A. Verification of autonomous vehicles: Scenario generation based on real world accidents. In Proceedings of the 2020 SoutheastCon, Raleigh, NC, USA, 12–15 March 2020; Volume 2, pp. 1–7.

- Geng, X.; Liang, H.; Yu, B.; Zhao, P.; He, L.; Huang, R. A scenario-adaptive driving behavior prediction approach to urban autonomous driving. Appl. Sci. 2017, 7, 426.

- Yuan, Q.; Xu, X.; Zhau, J. Paving the Way for Autonomous Vehicle Testing in Accident Scenario Analysis of Yizhuang Development Zone in Beijing. In Proceedings of the CICTP 2020, Xi’an, China, 14–16 August 2020; pp. 62–72.

- Lenard, J.; Badea-Romero, A.; Danton, R. Typical pedestrian accident scenarios for the development of autonomous emergency braking test protocols. Accid. Anal. Prev. 2014, 73, 73–80.

- Karim, M.M.; Li, Y.; Qin, R.; Yin, Z. A Dynamic Spatial-Temporal Attention Network for Early Anticipation of Traffic Accidents. IEEE Trans. Intell. Transp. Syst. 2022, 23, 9590–9600.

- Bao, W.; Yu, Q.; Kong, Y. Uncertainty-based traffic accident anticipation with spatio-temporal relational learning. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2682–2690.

- Olson, P.L.; Sivak, M. Perception-response time to unexpected roadway hazards. Hum. Factors 1986, 28, 91–96.

- AASHTO. A Policy on Geometric Design of Highways and Streets; American Association of State Highway and Transportation Officials: Washington, DC, USA, 2001; Volume 1, p. 158.

- Wortman, R.H.; Matthias, J.S. An Evaluation of Driver Behavior at Signalized Intersections; Arizona Department of Transportation: Phoenix, AZ, USA, 1983; Volume 904.

- Mussa, R.N.; Newton, C.J.; Matthias, J.S.; Sadalla, E.K.; Burns, E.K. Simulator evaluation of green and flashing amber signal phasing. Transp. Res. Rec. 1996, 1550, 23–29.

- Zhi, X.; Guan, H.; Yang, X.; Zhao, X.; Lingjie, L. An Exploration of Driver Perception Reaction Times under Emergency Evacuation Situations. In Proceedings of the Transportation Research Board 89th Annual Meeting, Washington, DC, USA, 10–14 January 2010.

- Fildes, B.; Keall, M.; Bos, N.; Lie, A.; Page, Y.; Pastor, C.; Pennisi, L.; Rizzi, M.; Thomas, P.; Tingvall, C. Effectiveness of low speed autonomous emergency braking in real-world rear-end crashes. Accid. Anal. Prev. 2015, 81, 24–29.

- Isaksson-Hellman, I.; Lindman, M. Evaluation of the crash mitigation effect of low-speed automated emergency braking systems based on insurance claims data. Traffic Inj. Prev. 2016, 17, 42–47.

- Cicchino, J.B. Effectiveness of forward collision warning and autonomous emergency braking systems in reducing front-to-rear crash rates. Accid. Anal. Prev. 2017, 99, 142–152.

- Cicchino, J.B. Effects of automatic emergency braking systems on pedestrian crash risk. Accid. Anal. Prev. 2022, 172, 106686.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Liu, L.; Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Han, J. On the variance of the adaptive learning rate and beyond. arXiv 2019, arXiv:1908.03265.

- de Gelder, E.; den Camp, O.O.; de Boer, N. Scenario Categories for the Assessment of Automated Vehicles; CETRAN: Singapore, 2020; Volume 1.

- ENABLE-S3 Consortium. Testing and Validation of Highly Automated Systems. Technical Report. ENABLE-S3. Available online: https://www.tugraz.at/fileadmin/user_upload/Institute/IHF/Projekte/ENABLE-S3_SummaryofResults_May2019.pdf (accessed on 7 May 2022).

- Yoon, Y.; Hwang, H.; Choi, Y.; Joo, M.; Oh, H.; Park, I.; Lee, K.H.; Hwang, J.H. Analyzing basketball movements and pass relationships using realtime object tracking techniques based on deep learning. IEEE Access 2019, 7, 56564–56576.

- Hwang, H.; Choi, S.M.; Oh, J.; Bae, S.M.; Lee, J.H.; Ahn, J.P.; Lee, J.O.; An, K.S.; Yoon, Y.; Hwang, J.H. Integrated application of semantic segmentation-assisted deep learning to quantitative multi-phased microstructural analysis in composite materials: Case study of cathode composite materials of solid oxide fuel cells. J. Power Sources 2020, 471, 228458.

- Hwang, H.; Ahn, J.; Lee, H.; Oh, J.; Kim, J.; Ahn, J.P.; Kim, H.K.; Lee, J.H.; Yoon, Y.; Hwang, J.H. Deep learning-assisted microstructural analysis of Ni/YSZ anode composites for solid oxide fuel cells. Mater. Charact. 2021, 172, 110906.

- Goodall, N.J. Ethical decision making during automated vehicle crashes. Transp. Res. Rec. 2014, 2424, 58–65.

- Yoon, Y. Per-service supervised learning for identifying desired WoT apps from user requests in natural language. PLoS ONE 2017, 12, e0187955.

- Lee, H.; Yoon, Y. Interest Recognition from Online Instant Messaging Sessions Using Text Segmentation and Document Embedding Techniques. In Proceedings of the 2018 IEEE International Conference on Cognitive Computing (ICCC), San Francisco, CA, USA, 2–7 July 2018; pp. 126–129.

- Garreau, D.; Luxburg, U. Explaining the explainer: A first theoretical analysis of LIME. In Proceedings of the International Conference on Artificial Intelligence and Statistics, PMLR, Online, 26–28 August 2020; pp. 1287–1296.

- Antwarg, L.; Miller, R.M.; Shapira, B.; Rokach, L. Explaining anomalies detected by autoencoders using Shapley Additive Explanations. Expert Syst. Appl. 2021, 186, 115736.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).