1. Introduction

Since 1990, the flexible job-shop scheduling problem (FJSP) has attracted attention because of its wide applicability and high complexity, and many results have been reported over the past decades. To narrow the gap between the problem and practical manufacturing, the general FJSP has been extended with additional practical factors, of which setup time is among the most commonly considered. In many real-life manufacturing systems, setup operations such as cleaning or changing tools are not only required between jobs but also depend strongly on the immediately preceding process on the same machine. This motivates the study of the FJSP with sequence-dependent setup time (SDST), referred to here as the FJSP-SDST. The general FJSP is known to be NP-hard, and the FJSP-SDST, as an extension of it, is at least as hard. Efficient methods are therefore needed to obtain high-quality solutions in a reasonable computational time. Because exact methods are intractable for this problem, heuristic algorithms have received extensive attention from scholars. A summary of optimization algorithms for the FJSP-SDST is presented in Table 1. Various heuristic algorithms have been adopted in previous work, but no heuristic performs best for all types of SDST problems or for all instances of the same problem, which is in accordance with the “no free lunch” theorem [1]. This is the main motivation for presenting a new heuristic algorithm for the considered SDST problem.

Exploration and exploitation are regarded as the most important features of heuristic algorithms, and the trade-off between them is crucial to computational performance. Among well-known heuristics, some have better global search ability, such as particle swarm optimization (PSO), ant colony optimization (ACO), the genetic algorithm (GA), and the whale optimization algorithm (WOA) [25], while others have better local search ability, such as simulated annealing (SA), variable neighborhood search (VNS), the crow search algorithm (CSA) [26], and tabu search (TS). Cat swarm optimization (CSO), a swarm intelligence algorithm proposed by Chu et al. [27], is inspired by two behavioral modes of cats in nature, the seeking mode and the tracing mode, which correspond to global search and local search in the algorithm, respectively. The main advantage of CSO is that local and global search can be performed simultaneously during the evolutionary process, which makes it possible to balance exploration and exploitation through careful design of the algorithm. Since it was proposed, CSO has been successfully applied to various optimization problems [28,29,30,31,32,33,34,35,36,37]. However, to the best of our knowledge, it has seldom been adopted for SDST problems. The aim of this paper is therefore to apply CSO to the FJSP-SDST. To enhance the search ability, the quantum computing principle is incorporated into conventional CSO to form quantum cat swarm optimization (QCSO). QCSO makes the following improvements: (1) Quantum encoding is employed to enhance the search ergodicity of the algorithm. (2) The individual positions of cats are updated by adjusting the quantum rotation angle to improve the search efficiency and speed of the algorithm. (3) A dynamic adjustment strategy for the mixture ratio of the two search modes (seeking and tracing) is adopted to maintain the balance between exploration and exploitation. Extensive experimental results demonstrate that the proposed QCSO is effective in solving the considered problem.

The remainder of this paper is structured as follows: Section 2 describes the presented problem. Section 3 presents the proposed QCSO algorithm. Section 4 describes the extensive experiments and analyzes the computational results. Section 5 provides the conclusions and future work.

3. Implementation of Proposed QCSO

3.1. Encoding Approach

To implement QCSO, the first task is to design an appropriate encoding approach. Here, the probability amplitude is used to represent the current position of each individual cat. This paper maintains a population of Q-bit individuals, $Q(t) = \{q_1^t, q_2^t, \ldots, q_N^t\}$, at generation $t$, where $N$ is the size of the population and $q_j^t$ is a Q-bit individual, defined as:

$$q_j^t = \begin{bmatrix} \alpha_1^t & \alpha_2^t & \cdots & \alpha_{n \times m}^t \\ \beta_1^t & \beta_2^t & \cdots & \beta_{n \times m}^t \end{bmatrix}$$

where $(\alpha_i^t, \beta_i^t)^T$ ($i = 1, 2, \ldots, n \times m$) is a Q-bit that should satisfy the normalization condition $|\alpha_i^t|^2 + |\beta_i^t|^2 = 1$. $|\alpha_i^t|^2$ gives the probability that the Q-bit will be found in the 0 state and $|\beta_i^t|^2$ gives the probability that the Q-bit will be found in the 1 state. When observed, $Q(t)$ collapses to a binary string $P(t)$ composed of 0s and 1s: a random number $r$ is generated from the range [0, 1]; if $r > |\alpha_i^t|^2$, the corresponding bit of the binary string is set to 1, and otherwise it is set to 0. Thus, a binary string of length $L$ is formed from the Q-bit individual. Each group of binary digits is then converted to a decimal number in the range of 0 to $n$, so that a decimal string of length $n \times o$ is formed. The decimal string is sorted in ascending order to obtain the positions from which the operation sequence is encoded.

For the FJSP with O procedures, n jobs, and m machines, the individual length of a quantum bit is defined as , where [x] represents an integer that is not more than x.
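The observation step described in this subsection can be illustrated with a minimal Python sketch. It assumes the usual rule of quantum-inspired evolutionary algorithms (a bit becomes 1 when the random number exceeds $|\alpha_i|^2$) and the common $1/\sqrt{2}$ initialization; the function names `init_qbit_individual` and `observe` are hypothetical and are not taken from the paper.

```python
import math
import random


def init_qbit_individual(length):
    """Return `length` Q-bits as (alpha, beta) pairs, each set to 1/sqrt(2).

    Assumption: equal amplitudes, so every binary string is equally likely.
    """
    amp = 1.0 / math.sqrt(2.0)
    return [(amp, amp) for _ in range(length)]


def observe(individual, rng):
    """Collapse a Q-bit individual to a list of 0/1 values.

    Bit i becomes 1 when a uniform random number exceeds |alpha_i|^2,
    which happens with probability |beta_i|^2 under normalization.
    """
    return [1 if rng.random() > alpha * alpha else 0 for alpha, _ in individual]


rng = random.Random(0)          # fixed seed, used only for reproducibility
q = init_qbit_individual(36)    # 36 Q-bits, the length used in the 4 x 3 example
x = observe(q, rng)
print(len(x), sum(x))
```

Each call to `observe` may return a different binary string for the same Q-bit individual, which is what allows a single individual to represent many candidate solutions.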

3.2. Decoding Mechanism

This paper investigates the FJSP-SDST with the aim of optimizing performance indices such as the makespan, subject to the process constraints and the precedence relations among the operations of each job, while keeping the setup time between consecutive operations on the same machine as small as possible. The quantum individual is a linear superposition of solutions expressed through probability amplitudes, so it should be translated into a decimal solution through the decoding mechanism [38]. Because , every binary string should be converted to a decimal number, and finally a decimal string of length m × n is formed. The decimal numbers are ranked in ascending order while the relative position of each number in the string is kept unchanged. The m smallest numbers represent the first job, the next m smallest numbers represent the second job, and so on. In this way, an operation-based code is obtained from the decimal string.

Step 1: Set P(t) as a Q-bit individual with amplitude pairs $(\alpha_i^t, \beta_i^t)$, where t represents the generation of the qubit. In order to increase the chance that each solution will be searched, $\alpha_i^t$ and $\beta_i^t$ are initialized with $1/\sqrt{2}$.

Step 2: Generate a random number r from the range [0, 1]; if $r > |\alpha_i^t|^2$, let xi(t) = 1, else let xi(t) = 0 (i = 1, 2, …, n). Observing P(t) in this way yields a binary string X(t) of length n.

Step 3: Convert each binary substring of X(t) to a decimal number, forming a decimal string D(t) of length m × n.

Step 4: Rank the numbers in D(t) in ascending order; the m smallest numbers represent the first job, the next m smallest numbers represent the second job, and so on. The relative position of each number in D(t) is kept constant during the process. This gives a permutation W(t) in which each of the n job numbers appears m times. The j-th occurrence of the number i in W(t) represents operation j of job i. If two or more numbers in D(t) are equal, the one with the smaller position index is ranked first.

The data processing of the 4 × 3 scale problem is shown in Table 3, and the specific decoding process is as follows:

For example, in the 4 × 3 scale JSP, which includes 4 jobs and 3 machines, P(t) is a 36-bit qubit chromosome. Suppose that observing P(t) gives the 36-bit binary string X(t) = {0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0}; then D(t) = (2, 2, 2, 1, 2, 0, 3, 0, 1, 1, 1, 2). Positions 4, 6, and 8 therefore belong to job 1; positions 9, 10, and 11 belong to job 2; positions 1, 2, and 3 belong to job 3; and positions 5, 7, and 12 belong to job 4, so that W(t) = (3, 3, 3, 1, 4, 1, 4, 1, 2, 2, 2, 4). The number 3 in position 1 represents the first operation of job 3, the number 1 in position 6 represents the second operation of job 1, and the number 4 in position 12 represents the third operation of job 4. Once the process genes have been generated, for example 101 201 202 102 (where x0y represents operation y of job x), the corresponding machine genes are selected. For example, if the candidate machines of x0y are JM = {a, b, c}, a random integer is generated within the index range of JM; if the generated number is 2, the corresponding machine b is selected. Finally, the fitness function is calculated according to the process genes and machine genes. Any Q-bit individual can be decoded into a feasible scheduling solution, so the method has the advantage of never generating infeasible solutions.
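The 4 × 3 example can be reproduced with a short Python sketch. One assumption is needed: the D(t) listed in the example coincides with the number of 1 bits in each consecutive group of three bits of X(t), so the sketch uses that count as the binary-to-decimal conversion. The helper names are hypothetical.

```python
def bits_to_decimals(bits, group):
    """Map each consecutive group of `group` bits to the number of 1 bits.

    Assumption: this rule is inferred from the worked example, where it
    reproduces the listed D(t) exactly.
    """
    return [sum(bits[i:i + group]) for i in range(0, len(bits), group)]


def decimals_to_jobs(decimals, ops_per_job):
    """Rank positions by value (ties: earlier position first) and give
    every block of `ops_per_job` ranked positions the next job number."""
    order = sorted(range(len(decimals)), key=lambda p: (decimals[p], p))
    jobs = [0] * len(decimals)
    for rank, pos in enumerate(order):
        jobs[pos] = rank // ops_per_job + 1
    return jobs


def operations(jobs):
    """Label each position as (job, k), the k-th occurrence of that job."""
    seen = {}
    labels = []
    for job in jobs:
        seen[job] = seen.get(job, 0) + 1
        labels.append((job, seen[job]))
    return labels


X = [0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0,
     1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0]
D = bits_to_decimals(X, 3)
W = decimals_to_jobs(D, 3)
ops = operations(W)
print(D)
print(W)
print(ops[0], ops[5], ops[11])
```

Because every job number is assigned to exactly `ops_per_job` positions regardless of the observed bits, the resulting operation sequence always respects the per-job operation count, which is consistent with the feasibility claim in the example.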

3.3. Seeking Mode

The seeking mode corresponds to a global search in the search space of the optimization problem. According to the value of MR, the individuals of the cat swarm that are in seeking mode are determined first, and a global search is carried out for each of them. A mutation operator exchanges positions in the quantum coding of the individual, after which its fitness is evaluated. If the result is better than the current solution, the current optimal solution is replaced. The steps involved in this mode are as follows:

Step 1: Make j copies of the cat’s current location $c_k$ and place them in the memory pool; the size of the memory pool is j, and j = SMP (seeking memory pool). If the value of SPC (self-position considering) is true, then j = (SMP − 1), and the current position is kept as one of the candidate solutions.

Step 2: For each copy in the memory pool, select the dimensions to change according to the value of CDC (counts of dimension to change), randomly add or subtract SRD (seeking range of the selected dimension) percent of the current value in those dimensions, and replace the original values.

Step 3: Calculate the fitness value (FS) of each candidate solution separately.

Step 4: Select the candidate point with the highest FS from the memory pool to replace the current cat’s position and update the cat’s position.

Step 5: Randomly select one of the cat’s candidate positions to move to, and replace the position $c_k$.

If the objective is to minimize the fitness function, then FSb = FSmax; otherwise FSb = FSmin.
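The seeking-mode steps can be sketched in Python as follows. This is a simplified illustration under stated assumptions rather than the authors' implementation: it works on a plain real-valued position instead of a quantum coding, it treats the problem as minimization, and it picks the best candidate greedily (Step 4) instead of selecting probabilistically. The function name and its arguments are hypothetical.

```python
import random


def seeking_mode(position, fitness, smp, srd, cdc, spc, rng):
    """One seeking-mode move for a real-valued position (minimization).

    smp: number of candidates in the memory pool
    srd: relative perturbation size, e.g. 0.2 for +/-20 percent
    cdc: fraction of dimensions perturbed in each copy (0 < cdc <= 1)
    spc: if True, the unchanged current position is one of the candidates
    """
    n_copies = smp - 1 if spc else smp
    n_dims = max(1, round(cdc * len(position)))
    candidates = [list(position)] if spc else []
    for _ in range(n_copies):
        copy = list(position)
        # Perturb a random subset of dimensions by +srd or -srd (relative).
        for d in rng.sample(range(len(position)), n_dims):
            sign = rng.choice((-1.0, 1.0))
            copy[d] *= 1.0 + sign * srd
        candidates.append(copy)
    # Assumption: greedy choice of the candidate with the lowest objective.
    return min(candidates, key=fitness)


def sphere(x):
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


rng = random.Random(1)
cat = [1.0, -2.0, 0.5, 3.0]
new_cat = seeking_mode(cat, sphere, smp=5, srd=0.2, cdc=0.5, spc=True, rng=rng)
print(sphere(cat), sphere(new_cat))
```

With `spc=True` the current position is always among the candidates, so under greedy selection the move can never make the objective worse.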

3.4. Tracing Mode

The tracing mode corresponds to a local search in the optimization problem. In this mode, each cat moves in every dimension according to its own speed; individual cats approach the local optimal position, and each position is updated by comparing it with the optimal position of the group. The crossover operator is used for local search, and each cat is improved by tracking its own historical best and the best individual of the current cat population. The crossover operator is as follows:

Individual: α1, α2, …, |αi, …, αj|, …, αl

Individual historical extremes: β1, β2, …, |βi, …, βj|, …, βl

New individual after crossing: α1, α2, …, |βi, …, βj|, …, αl
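A minimal Python sketch of this segment crossover is given below. The function name `segment_crossover` and the 0-based inclusive indices are assumptions made for illustration.

```python
def segment_crossover(individual, best, i, j):
    """Return a copy of `individual` whose segment [i, j] (0-based,
    inclusive) is replaced by the same segment of `best`."""
    if len(best) != len(individual) or not (0 <= i <= j < len(individual)):
        raise ValueError("invalid segment or length mismatch")
    child = list(individual)
    child[i:j + 1] = best[i:j + 1]
    return child


alpha = ["a1", "a2", "a3", "a4", "a5"]
beta = ["b1", "b2", "b3", "b4", "b5"]
child = segment_crossover(alpha, beta, 1, 3)
print(child)
```

Copying a segment position by position keeps the individual length unchanged, which suits amplitude or angle vectors; a permutation encoding would generally need an additional repair step.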

The steps of tracing mode can be described as follows:

Step 1: Update the speed $v_{i,d}$ in each dimension. The best position that the entire cat group has experienced is the current optimal solution, and it is denoted as $x_{best}$. The speed of each cat is denoted as $v_i = \{v_{i1}, v_{i2}, \ldots, v_{id}\}$, and each cat updates its speed according to Equation (11), which in the standard CSO formulation reads:

$$v_{i,d}(t+1) = v_{i,d}(t) + r_1 c_1 \left( x_{best,d}(t) - x_{i,d}(t) \right), \quad d = 1, 2, \ldots, M \tag{11}$$

where $x_{best,d}(t)$ is the position, in dimension d, of the cat with the best fitness value in the current swarm; $x_{i,d}$ is the position of $c_k$; $c_1$ is a constant; $r_1$ is a random value in the range [0, 1]; $v_{i,d}$ is the updated speed of cat i in dimension d; and M is the dimension size.

Step 2: Determine whether the speed is within the maximum range. To prevent the variation from becoming too large, which would turn the search into a blind random search of the solution space, a limit is placed on the variation of each dimension. SRD is given in advance; if the changed value of a dimension is beyond the limits of the SRD, it is set to the given boundary value.

Step 3: Update location. Update the position of the cat according to Equation (12), which in the standard CSO formulation reads:

$$x_{i,d}(t+1) = x_{i,d}(t) + v_{i,d}(t+1) \tag{12}$$

In CSO, each cat represents a feasible solution to the optimization problem to be solved. Some cats operate in seeking mode, and the rest operate in tracing mode. The two modes are combined through the mixture ratio (MR), which is the number of cats in tracing mode as a proportion of the entire cat swarm. Most of the time, cats are resting and observing the environment, and the actual tracing and capturing time is quite short, so MR should be set to a small value in the program.
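The three tracing-mode steps can be sketched in Python as follows, assuming the standard CSO update in which the speed is pulled toward the best position by a random multiple of $c_1$. The clamping bound `v_max` stands in for the limit described in Step 2 and, like the function name, is a hypothetical choice for this illustration.

```python
import random


def tracing_mode(x, v, x_best, c1, v_max, rng):
    """Return (new_x, new_v) after one tracing-mode step.

    x, v:    current position and speed of one cat (lists of floats)
    x_best:  best position found by the swarm so far
    c1:      acceleration constant
    v_max:   bound applied to the speed in every dimension (assumption)
    """
    new_x, new_v = [], []
    for xd, vd, bd in zip(x, v, x_best):
        r1 = rng.random()
        vd = vd + r1 * c1 * (bd - xd)        # Step 1: speed update
        vd = max(-v_max, min(v_max, vd))     # Step 2: clamp to the allowed range
        new_v.append(vd)
        new_x.append(xd + vd)                # Step 3: position update
    return new_x, new_v


rng = random.Random(0)
x = [0.0, 5.0, -3.0]
v = [0.0, 0.0, 0.0]
best = [1.0, 1.0, 1.0]
nx, nv = tracing_mode(x, v, best, c1=2.0, v_max=1.5, rng=rng)
print(nx, nv)
```

A cat that already sits at the best position with zero speed is left unchanged by this update, so only the remaining cats are drawn toward the best position.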

3.5. Updating Quantum Rotation Angle

Quantum gates are the mechanism that carries out the evolutionary operation, and the gate can be chosen to suit the problem at hand. Many kinds of quantum gates exist. In view of the computational features of QCSO, this paper uses the quantum rotation gate to update the positions of the cat swarm. The operation of the quantum rotation gate is as follows:

The update process is as follows:

In tracing mode, the increment of the qubit argument of cat Pi is updated as follows:

Let ; if the value is out of range, it should be plus or minus .

In seeking mode, random disturbance is achieved by small range fluctuation of qubit argument.

where c1 and c2 are two constants and r1 is a random value in the range [0, 1].
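As an illustration of how a rotation gate acts on a single Q-bit, the Python sketch below applies the standard 2 × 2 rotation matrix for a given angle increment. How the increment itself is computed in tracing and seeking mode is specific to the paper and is not reproduced here; the function name `rotate` and the increment value used in the example are assumptions.

```python
import math


def rotate(alpha, beta, delta_theta):
    """Apply the standard rotation gate
        [cos t, -sin t]
        [sin t,  cos t]
    to the amplitude pair (alpha, beta) and return the new pair."""
    c, s = math.cos(delta_theta), math.sin(delta_theta)
    return c * alpha - s * beta, s * alpha + c * beta


amp = 1.0 / math.sqrt(2.0)
# Hypothetical increment: a small positive rotation.
new_alpha, new_beta = rotate(amp, amp, 0.05 * math.pi)
print(new_alpha ** 2, new_beta ** 2)
```

The rotation preserves the normalization condition, so the updated pair is still a valid Q-bit; starting from equal amplitudes, a positive increment raises the probability of observing 1 and a negative increment lowers it.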

Meanwhile, the standard CSO allocates fixed proportions of the entire cat swarm to seeking mode and tracing mode. However, the requirements for global and local search differ over the course of the evolutionary process, so a fixed proportion cannot effectively improve the search capability of the algorithm. To address this problem, this paper selects the behavior mode of the cat swarm with a mixture ratio that varies with the number of iterations:

where nmax is the maximum number of iterations and L is the current run time.

To improve the global search ability and the convergence rate, the algorithm uses a larger proportion of seeking cats in the early run period; to improve the local search ability, it uses a larger proportion of tracing cats in the later run period, which supports the convergence of the algorithm.
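The idea of an iteration-dependent mixture ratio can be illustrated with the Python sketch below. The linear ramp, its end points `mr_start` and `mr_end`, and both function names are hypothetical choices made for illustration; the sketch does not reproduce the schedule defined in the paper and only shows the qualitative behavior of few tracing cats early and many tracing cats late.

```python
import random


def mixture_ratio(iteration, n_max, mr_start=0.1, mr_end=0.9):
    """Fraction of cats in tracing mode at `iteration` (linear ramp).

    Assumption: a linear increase from mr_start to mr_end over n_max
    iterations, chosen only to illustrate a dynamic mixture ratio.
    """
    if n_max <= 0:
        raise ValueError("n_max must be positive")
    frac = min(max(iteration / n_max, 0.0), 1.0)
    return mr_start + (mr_end - mr_start) * frac


def split_swarm(n_cats, mr, rng):
    """Randomly assign round(mr * n_cats) cats to tracing mode.

    Returns (tracing_indices, seeking_indices).
    """
    n_tracing = round(mr * n_cats)
    tracing = set(rng.sample(range(n_cats), n_tracing))
    seeking = [i for i in range(n_cats) if i not in tracing]
    return sorted(tracing), seeking


rng = random.Random(0)
for it in (0, 50, 100):
    tracing, seeking = split_swarm(20, mixture_ratio(it, 100), rng)
    print(it, len(tracing), len(seeking))
```

Any monotonically increasing schedule would give the same qualitative effect; the linear form is simply the easiest to inspect.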

3.6. Fitness Function

The optimization objective of this paper is to minimize the makespan, and the fitness function is built on this objective. When the population is large, the elitist strategy can be used to select individuals for quantum crossover. Due to the large population size, the probability that the optimal individual and the worst individual will be selected is very high. To give better individuals a larger probability of being selected, we create the fitness function:

In Equation (18), Mt(x) is the completion time of the current individual and MB (min) is the current minimum makespan in generation t, that is, the current optimal solution.
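The makespan that enters the fitness function can be computed from the process genes and machine genes. The Python sketch below shows one way to do this for sequence-dependent setup times. It assumes that a setup starts only once both the machine and the job are available and that the setup depends on the job processed immediately before on the same machine; the data layout and the small instance are hypothetical.

```python
def makespan(sequence, machine_of, proc_time, setup_time):
    """Makespan of an operation sequence with sequence-dependent setups.

    sequence:   list of job ids; the k-th occurrence of a job is its k-th operation
    machine_of: {(job, op): machine}
    proc_time:  {(job, op, machine): processing time}
    setup_time: {(prev_job, job, machine): setup time}; missing keys count as 0
    """
    job_ready = {}    # completion time of the latest operation of each job
    mach_ready = {}   # completion time of the latest operation on each machine
    mach_last = {}    # job processed most recently on each machine
    op_count = {}
    for job in sequence:
        op = op_count.get(job, 0) + 1
        op_count[job] = op
        m = machine_of[(job, op)]
        prev = mach_last.get(m)
        setup = setup_time.get((prev, job, m), 0) if prev is not None else 0
        # Assumption: setup begins when both the machine and the job are free.
        start = max(mach_ready.get(m, 0), job_ready.get(job, 0))
        finish = start + setup + proc_time[(job, op, m)]
        job_ready[job] = mach_ready[m] = finish
        mach_last[m] = job
    return max(job_ready.values())


# Hypothetical 2-job, 2-machine instance.
machine_of = {(1, 1): "a", (1, 2): "b", (2, 1): "a", (2, 2): "b"}
proc_time = {(1, 1, "a"): 3, (1, 2, "b"): 2, (2, 1, "a"): 2, (2, 2, "b"): 4}
setup_time = {(1, 2, "a"): 1, (1, 2, "b"): 2}
print(makespan([1, 2, 1, 2], machine_of, proc_time, setup_time))
print(makespan([2, 1, 2, 1], machine_of, proc_time, setup_time))
```

The two sequences use the same operations and machines but give different makespans, which illustrates why the operation order matters when setup times depend on the preceding job.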

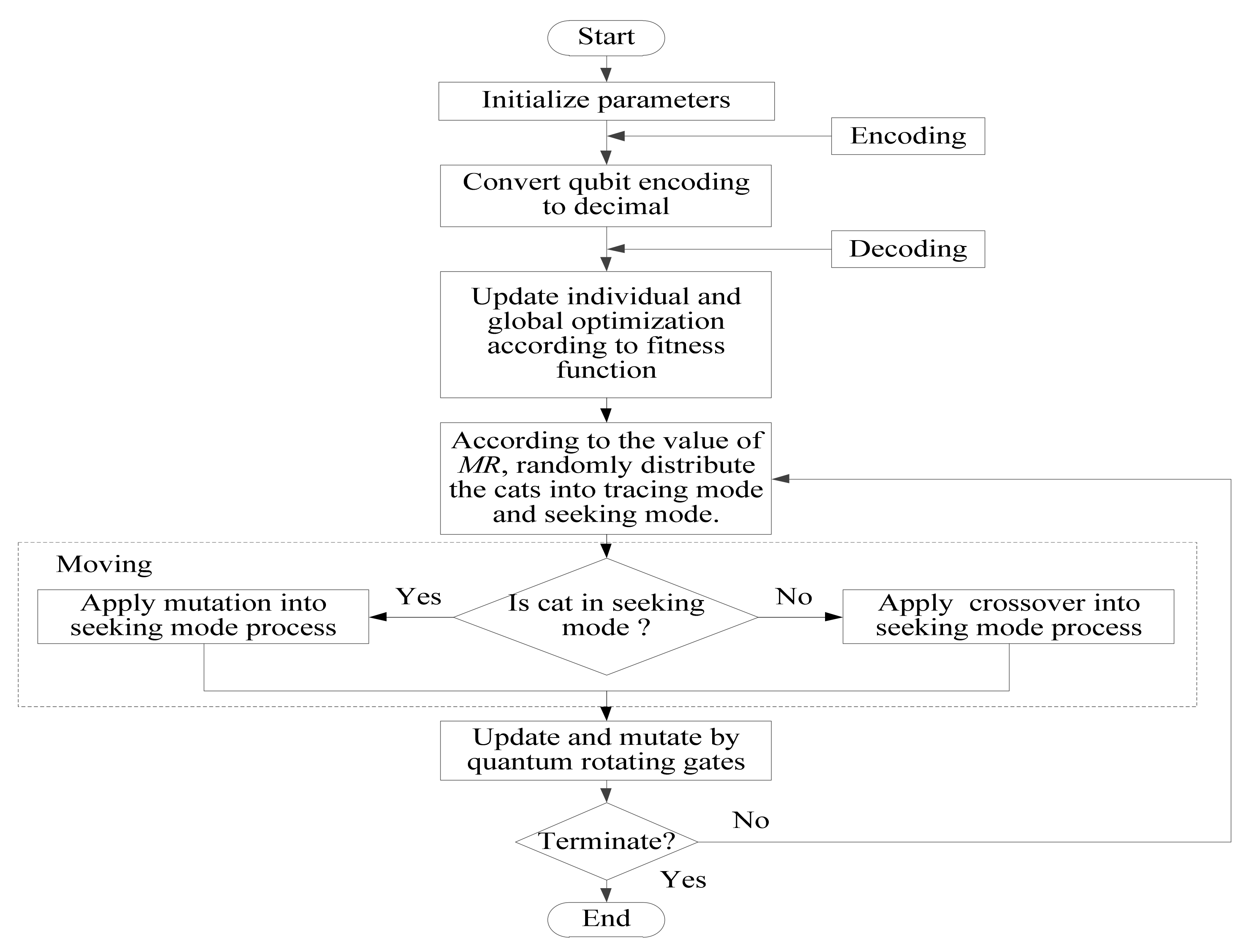

3.7. Flowchart of QCSO

The flow of QCSO is as follows:

① Initialize the population Q(t0), and randomly create n chromosomes that are encoded by qubits.

② Decode chromosomes and convert qubit encoding to decimal.

③ Measure each individual in initial population Q(t0), and get a definite solution P(t0).

④ Evaluate the fitness value of each solution, and save the optimal individual and its corresponding fitness value.

⑤ According to the value of MR, determine whether each individual of the cat swarm is in seeking or tracing mode, and check whether the end condition is satisfied. If it is, exit; otherwise, continue the calculation.

⑥ Measure each individual in population Q(t), and get the corresponding definite solution.

⑦ Evaluate the fitness value of each definite solution.

⑧ Use the quantum rotation gate G(t) to update the individual position of the cat swarm, and get the new population Q(t + 1).

⑨ Save the optimal cat swarm, optimal individual, and corresponding fitness value.

⑩ Increase the number of iterations by 1, and return to step ⑤.

The flowchart of the quantum cat swarm optimization algorithm is shown in Figure 1.