A Study of Bibliometric Trends in Automotive Human–Machine Interfaces

Abstract

1. Introduction

2. Materials and Methods

2.1. Method

2.1.1. Co-Citation Network Analysis Relationship Formula

2.1.2. Algorithm Formulation for Different Research Elements

1. Cosine algorithm

2. Betweenness centrality algorithm formula

3. Q-value and S-value
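The formulas for the three measures listed above are not reproduced in this extract. As a hedged reference, the block below gives the standard textbook definitions of the cosine link strength, betweenness (mediated) centrality, modularity Q, and silhouette S. The symbols are assumptions chosen for illustration and may differ from the notation of the original formulation.

```latex
% Cosine-normalized link strength between items i and j.
% c_{ij}: co-occurrence (or co-citation) count of i and j;
% s_i, s_j: individual occurrence frequencies of i and j.
\[
\operatorname{Cosine}(c_{ij}, s_i, s_j) = \frac{c_{ij}}{\sqrt{s_i \, s_j}}
\]

% Betweenness centrality of node i.
% g_{st}: number of shortest paths from s to t;
% n_{st}^{i}: number of those paths that pass through i.
\[
BC_i = \sum_{s \neq i \neq t} \frac{n_{st}^{i}}{g_{st}}
\]

% Modularity Q of a clustering.
% A_{ij}: adjacency matrix; k_i: degree of node i; m: number of edges;
% \delta(c_i, c_j) = 1 if i and j belong to the same cluster, 0 otherwise.
\[
Q = \frac{1}{2m} \sum_{i,j} \left( A_{ij} - \frac{k_i k_j}{2m} \right) \delta(c_i, c_j)
\]

% Silhouette S of item i.
% a(i): mean distance from i to the members of its own cluster;
% b(i): mean distance from i to the members of the nearest other cluster.
\[
S(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}
\]
```

Under these definitions, S(i) lies between −1 and 1, and larger values of Q and S indicate a more clearly separated cluster structure. CiteSpace-based studies commonly treat Q > 0.3 and S > 0.5 as rules of thumb for acceptable clustering, but the thresholds applied in this study are not stated in this extract.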

2.2. Data Sources

2.3. Technology Roadmap

3. Results

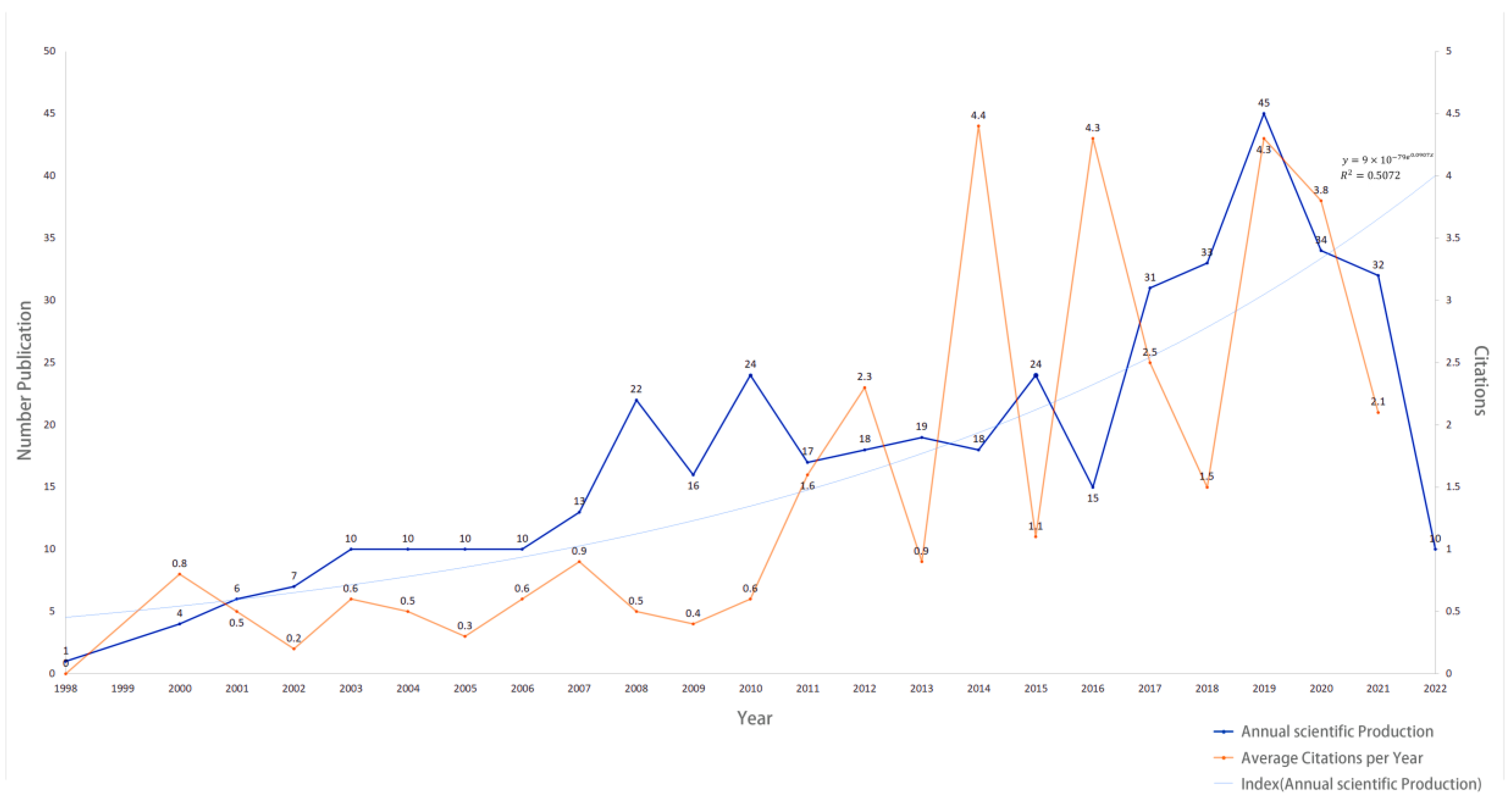

3.1. Literature Trend Analysis

3.2. Network Approach

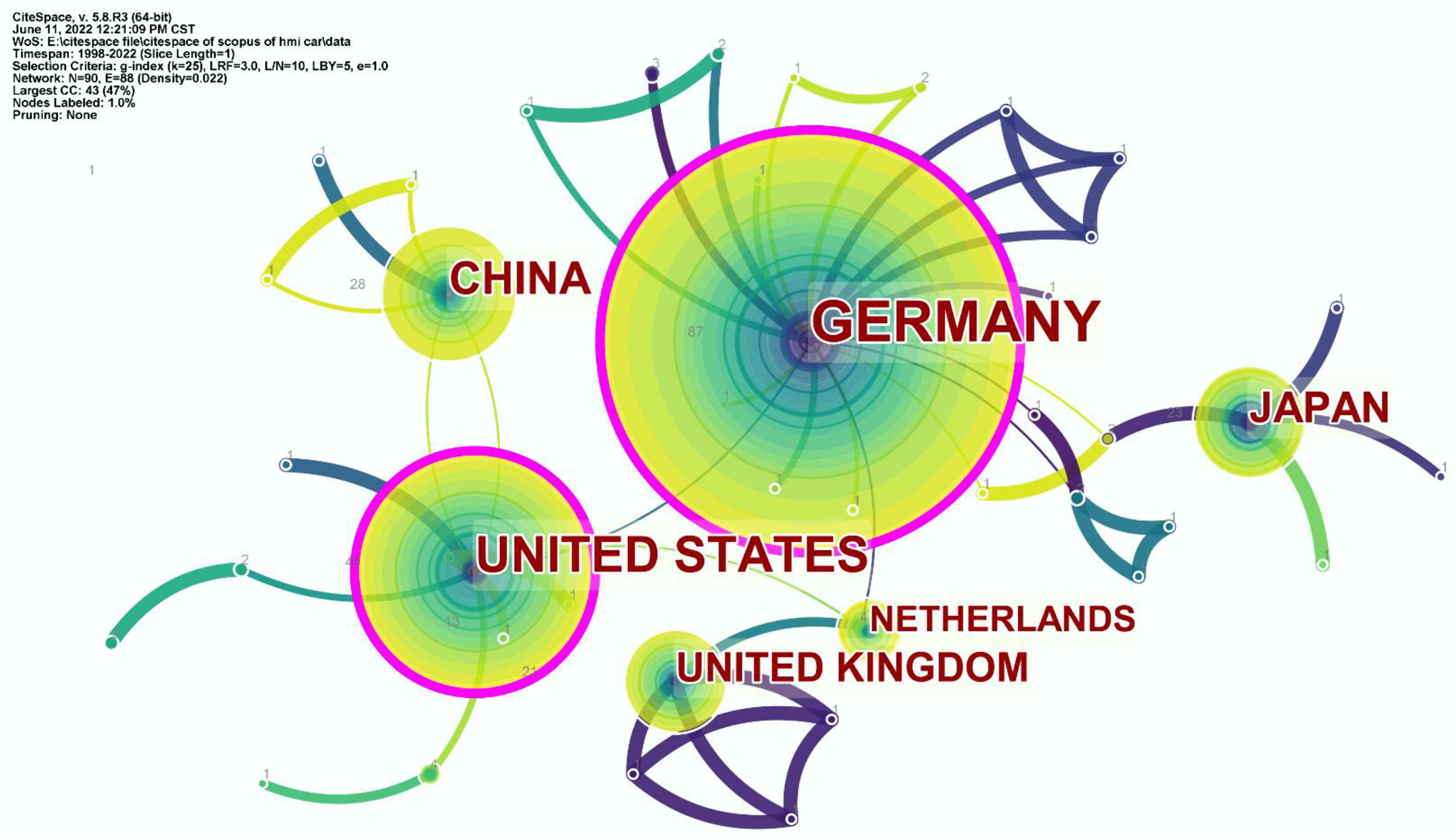

3.2.1. Country Cooperation Network

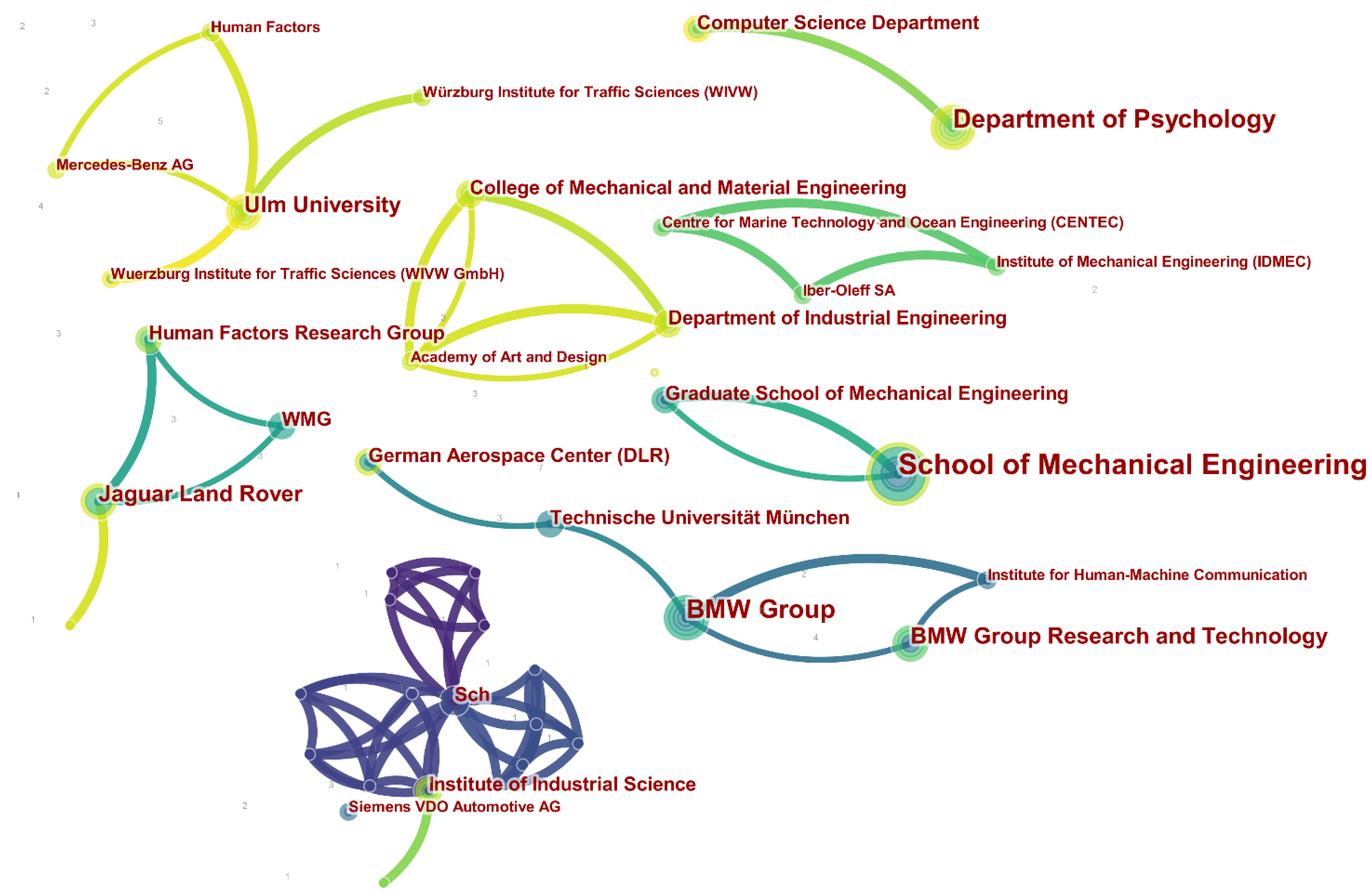

3.2.2. Institutional Cooperation Network

3.2.3. Author Collaboration Network

3.3. Research Hotspots

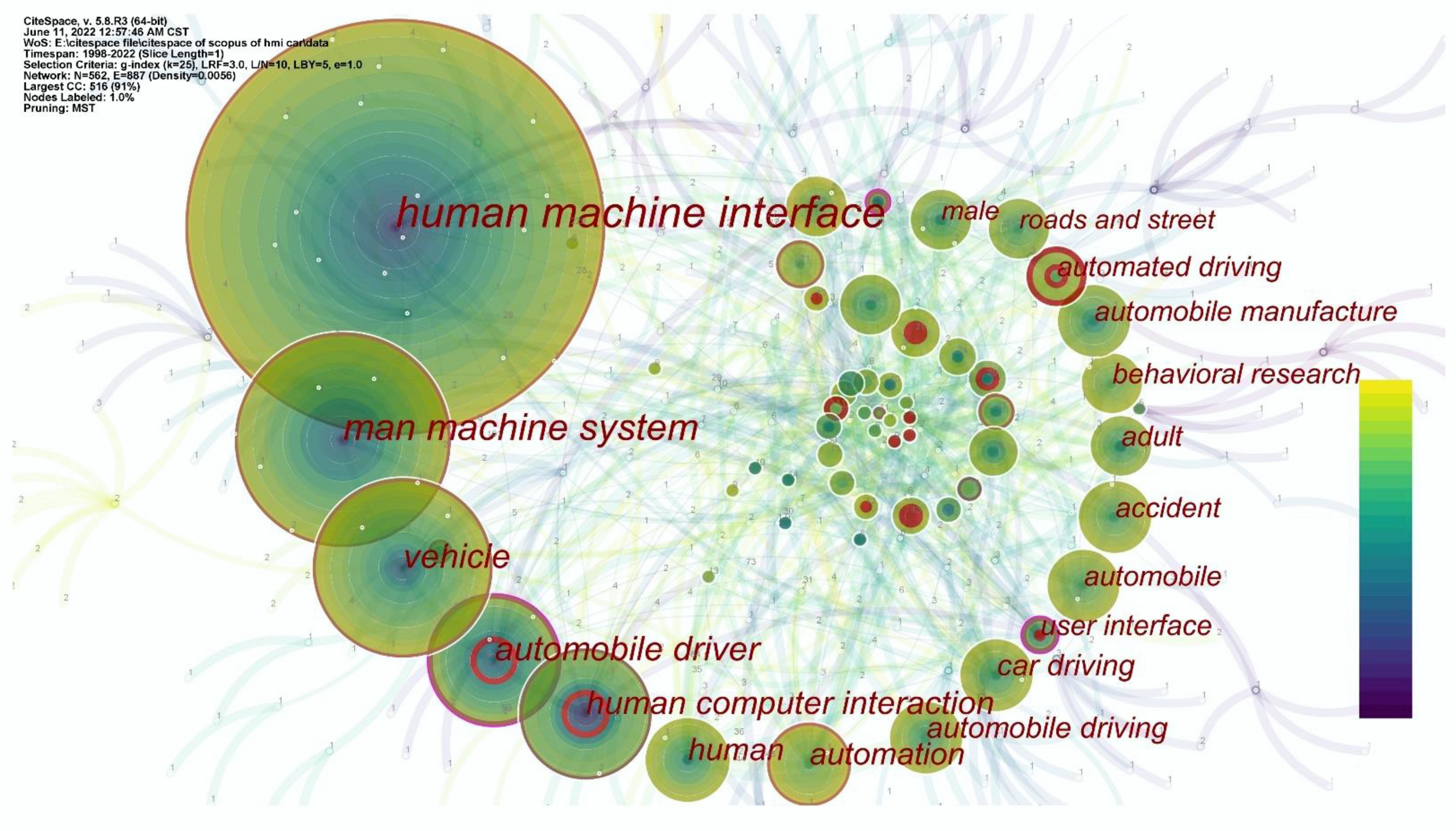

3.3.1. Keyword Co-Occurrence Network Analysis

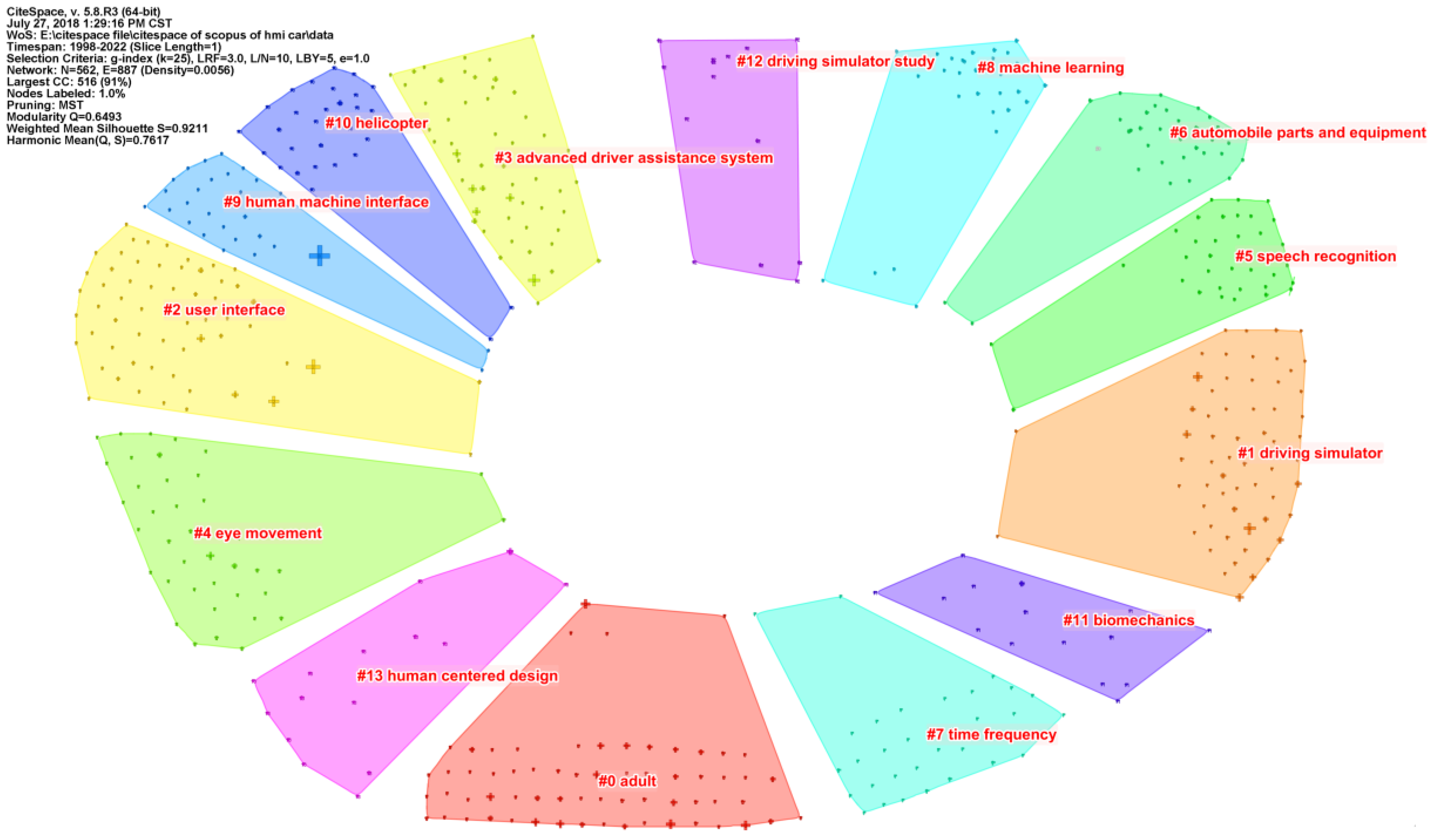

3.3.2. Keyword Clustering

- (1) User studies of automotive HMIs. Based on the observed clusters, user studies of automotive HMIs focus on the following keywords: adult, eye movement, time frequency, biomechanics, and human-centric design. Existing research on the user side of in-vehicle HMIs has mainly been conducted from the perspectives of both drivers and pedestrians and covers information transfer, multisensory feedback, contextual perception, age-level analysis, biosignals, and mental workload. In terms of information transfer, international research on the HMI and e-HMI has made more in-depth progress. For example, Koen de Clercq et al. studied the influence of an external HMI on pedestrians’ willingness to cross roads and concluded that the external HMI can effectively transfer information and improve the efficiency of interaction between pedestrians and self-driving cars [10]. In terms of multisensory feedback, Francesco Bellotti et al. studied the feasibility of auditory interaction and verified that spatially localized sound can convey information about orientation, location, and distance, and that such interaction can significantly reduce the time that the driver’s eyes are off the road and can cope with low-visibility environments [11]. In terms of contextual perception, Alexandra Voinescu et al. investigated the relationship between individual differences in contextual perception and the perceived usability of CAV interfaces for older adults. Notably, they concluded that HMIs that are simple to use and require less interaction are favored by older adults [12].
In terms of age-level analysis, Shuo Li et al. studied an HMI design adapted to older drivers and showed that informing drivers of the vehicle status and providing the reason for a manual driving takeover request achieved better takeover performance while yielding lower perceived workload and more positive attitudes, thereby representing the most beneficial and effective human–machine interaction design [13]. In terms of biosignals, Junghwan Ryu et al. proposed a deep-learning-based driver’s real emotion recognizer (DRER) that recognizes real emotions that cannot be accurately identified from the driver’s facial expressions alone. In real driving experiments, the recognition rate reached 86.8% when identifying drivers’ induced emotions [14]. Regarding mental workload, Xiaomeng Li et al. investigated the effects of an in-vehicle HMI for eco-safe driving on drivers’ mental workload and visual demand. The study concluded that visual measures revealed driver mental workloads consistent with subjectively reported workload levels, and that drivers experienced higher mental workload and blinked more when receiving and processing additional information [15].

- (2) Interface research on automotive HMIs. As can be seen from the clusters, the keywords of the interface research cluster include user interface, advanced driver assistance system, HMI, and helicopters. The literature in this cluster was read and analyzed from the perspective of interface research. Existing international studies have mainly addressed the interaction between the interface and the driver, covering feedback and trust, communication warning, driver assistance, information transformation, e-HMI, multimodal HMIs, collaborative navigation, innovative interaction methods, and autonomous driving switching. In terms of feedback and trust, Rachel H.Y. Ma et al. used simulated scenarios to assess participants’ trust in a self-driving car and its HMI as well as their willingness to use and accept them. The results showed that the high visual feedback group had the highest trust level, which was significantly higher than that of the group without visual feedback [16]. In terms of communication warning, Oliver M. Winzer et al. developed a user-centered HMI warning system that can help drivers avoid potential collisions with cyclists [8]. In terms of driver assistance, Sofia Sánchez-Mateo et al. proposed a merge assist system based on inter-vehicle communication that allows vehicles to share position and speed variables. This system was implemented on a mobile device within a vehicle, and the associated algorithm determined when and where the vehicle can merge under safe conditions while providing appropriate information to the driver [17]. In terms of information transformation, Frederik Schewe et al. designed and evaluated an eco-HMI for speed and distance control that uses a distance-speedometer to influence the driver’s choice of speed without conscious effort, thereby improving drivers’ perception and control of speed [18].
In terms of e-HMI, Dylan Moore et al. investigated the information interaction capabilities of external HMIs and showed that self-driving vehicles could avoid accidents and conflicts if they presented universally understandable information externally when interacting with other road users [19]. Regarding multimodal HMIs, Takuma Nakagawa et al. developed an intuitive multimodal interface system that uses speech, gesture, and eye gaze recognition for human–computer interaction, with finite-state transducers used to design the multimodal understanding component and the dialogue control system [20]. In terms of collaborative navigation, Vicki Antrobus et al. conducted an on-road study aimed at providing a preliminary theoretical basis for the development of an in-vehicle intelligent HMI, using the traditional navigation relationship between drivers and passengers to provide data support for novel designs. The results showed that drivers using collaborative navigation had significantly better knowledge of landmarks and routes than those using satellite navigation systems, which strongly indicates the potential of collaborative navigation [21]. In terms of innovative interaction methods, Gang Tang et al. proposed a minimalist self-powered interaction patch based on the complementary integration of triboelectric and piezoelectric sensing mechanisms to achieve multi-parameter sensing of finger interaction (i.e., the simultaneous detection of contact position, sliding trajectory, and applied pressure). Their experimental results showed that the patch has high applicability and immediate practicality in various human–computer interactions [22]. Regarding autonomous driving switching, Xinyu Ge et al. used deep neural network technology to develop a multimodal HMI that enables drivers to interact with self-driving cars and switch smoothly between manual control and autonomous driving [7].

- (3) Research on the external environment of the automotive HMI. Here, the external environment refers to the entire environment associated with the driver of a car, and the articles of this cluster were included on that basis. After reading and analyzing the articles, it was concluded that research on the external environment has mainly focused on safety information, spanning e-HMIs, early warning, and prediction. For e-HMIs, the research has mainly focused on identifying vehicle information and transforming it into a form that people can understand. For example, Mathilde François et al. investigated whether external HMIs can bridge the communication gap between autonomous vehicles and pedestrians by comparing information from an e-HMI with the different driving behaviors of autonomous vehicles yielding to pedestrians, in order to understand whether pedestrians pay more attention to the motion of the vehicle or to the e-HMI when deciding to cross a road. Ultimately, they concluded that the two together achieve the best transfer effect [23]. In terms of early warning, Oliver M. Winzer et al. investigated the user acceptance of a preventive Car-2-X communication warning system that helps drivers avoid potential collisions with cyclists [8]. In terms of prediction, Pavlo Bazilinskyy et al. developed various algorithms, based on a test lane, that use the position predicted from the instantaneous steering angle to provide drivers with appropriate auditory feedback for maintaining a normal lane position, thereby reducing the occurrence of certain traffic accidents [24].

- (4) Research on the technical implementation of the automotive HMI. As can be seen from the clusters, the keywords of this cluster include driving simulators, speech recognition, automotive parts and equipment, machine learning, and HMI. The literature related to this cluster was also read and analyzed from the perspective of technical implementation. Research on technical implementation was found to center on myoelectric control, early warning technology, immersive augmented displays, recognition technology, and wearable devices. Regarding myoelectric control, Edric John Nacpil et al. used surface electromyography (sEMG) so that operators can generate electromyographic signals to control a robotic arm or prosthesis and thereby control a vehicle. This work may prove critical to the future driving experience of people with disabilities [25]. In terms of early warning technology, Angelos Amditis et al. developed a warning manager that handles decision making and tracking at the levels of perception, decision making, and behavior. The warning manager generates images of all possible strategies and their associated risks in a given scenario and then evaluates driver behavior and suggests better options [26]. In terms of immersive augmented displays, Ali Özgür Yöntem et al. developed an immersive augmented reality HUD concept that generates images from multiple optical apertures/image sources and displays them on the windshield according to the requirements of the human visual system (HVS), combining multiple display elements to create an immersive driving experience. This technology is also expected to be used in other parts of the car interior [27].
In terms of recognition technology, Shigeyuki Tateno et al. used infrared array sensors to build a gesture recognition system for in-vehicle devices. The system operates devices through combinations of seven hand signals and four directions of movement, using a Gaussian mixture model (GMM) for background subtraction and a convolutional neural network (CNN) for gesture recognition [28]. In the context of wearable devices, posture control is an emerging technological target in the field of HMI. Xinqin Liao et al. fabricated soft, deformable, high-performance fabric strain sensors with ultra-high sensitivity and stretchability by printing silver ink directly onto pre-stretched textiles with stencils. Using Bluetooth communication and a simple auxiliary signal processing circuit, the team produced a smart glove assembled with textile strain sensors that detects the degree of finger flexion in all directions and translates it into wireless control commands. This could have a disruptive impact on the future paradigm of car driving [29].

4. Evolutionary Analysis of the Research Topic

4.1. Experimental Process

4.2. Analysis of Experimental Phenomena

4.3. Research Stage Division

4.4. Summary of This Section

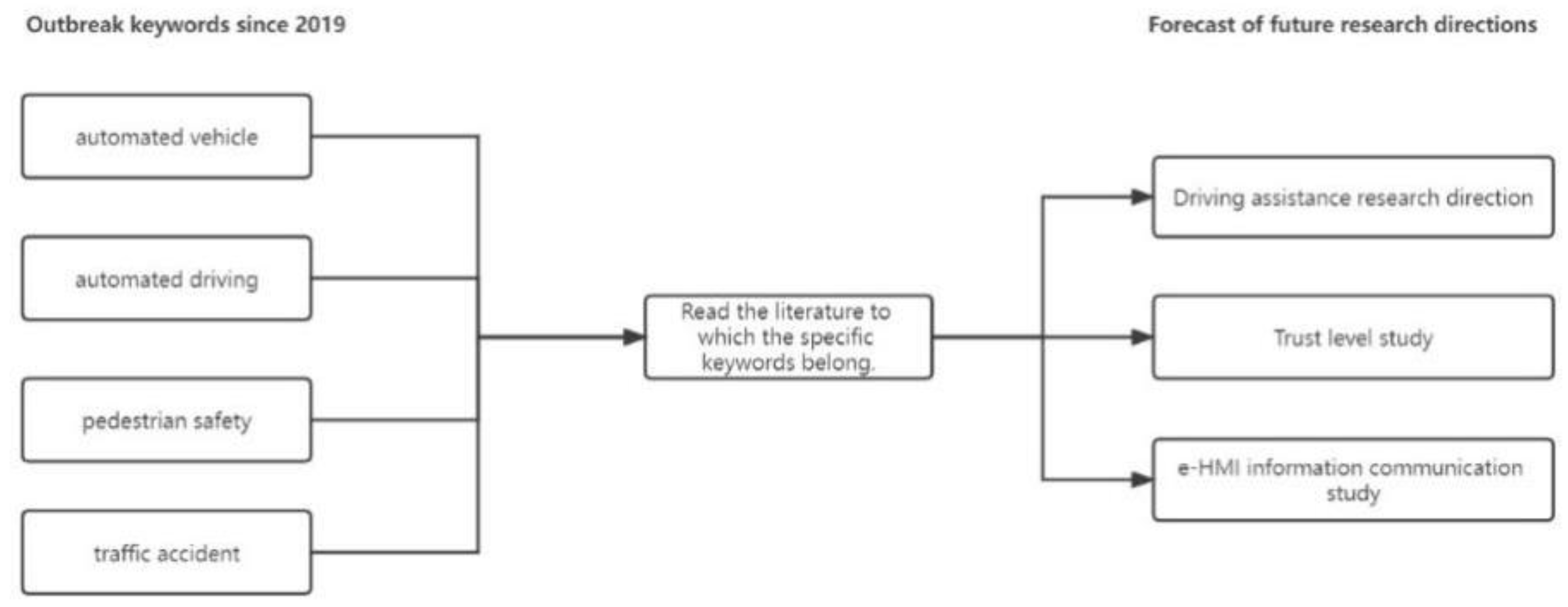

5. Burst Detection

5.1. Description of Experimental Phenomena

5.2. Analysis of Experimental Phenomena

- (1) Driving assistance research direction. Safety and comfort have always been the goals of autonomous driving technology (i.e., to remove users from the driving role as far as safety allows and to alleviate or eliminate the physical and mental burden of driving). Autonomous driving technology is advanced by numerous driver assistance technologies and by the interaction design of HMIs. Li Chao Yang et al. designed an intelligent HMI for safe and smooth control switching by investigating drivers’ involvement in non-driving activities, using a system based on a 3D convolutional neural network to recognize driver behavior from two types of visual feedback, namely head and hand movements. In the experiments, non-driving activities were classified into active and passive modes based on driver–object interaction, and the system recognized up to 85.87% of activities. This study serves as an important guideline for research on intelligent recognition technology for self-driving cars [41]. Sayaka Ono et al. proposed and studied an HMI that constantly indicates the future position of the vehicle. The results showed that this HMI yields a significantly higher avoidance rate than an HMI that only indicates trajectory changes. The study confirmed that continuously indicating the future vehicle position during the operation of an automated driving system (ADS) facilitates active driver intervention. Based on the findings, the team derived two future research questions: to what extent human–computer interaction generates a sense of agency, and whether driver-initiated intervention remains beneficial once the driver is fully accustomed to the ADS and CTP-based HMIs. Notably, assistive technology in information display remains an important research topic for the future [42].
Shuo Li et al. designed three HCI concepts based on the needs of older drivers and conducted a driving simulator study with 76 drivers to assess the impact of each concept on driver takeover performance, workload, and attitude. Their results showed that informing the driver of the vehicle status and providing the reason for the manual driving takeover request achieved the best results on each measure [13]. In this regard, future research should explore human–computer interaction design for highly automated vehicles (HAVs) in more depth. The focus should be on the optimal placement of the visual interface, which will have a significant impact on the safety, usability, and acceptability of HAV systems. Additionally, future research should explore, at the user level, how visual ability, psychomotor ability, reaction time, and cognitive ability affect driver–HAV interaction. The above analysis outlines the future direction of driver assistance research at two levels, from the overall research direction to the specific research content.

- (2) Trust level study. Trust level is an important indicator that can be used to improve the reliability of autonomous driving technology. At present, trust level research focuses on the extent to which drivers respond to signals from intelligent systems (i.e., the assessment and refinement of the trustworthiness of information). Rachel H.Y. Ma et al. investigated whether visual feedback affects drivers’ trust in self-driving cars, focusing on the circumstances under which an appropriate level of trust is elicited. Participants’ trust was found to depend on whether the driver was in a safe or unsafe situation. Therefore, different levels of feedback may be required to elicit user trust under different driving conditions. Future research should identify the optimal combination of feedback types that affect driver trust in an autonomous vehicle under specific driving conditions (e.g., highways). Additionally, future studies should investigate whether providing only visual feedback about what the vehicle is about to do affects driver trust in highly automated vehicles [16]. Ann-Kathrin Kraft et al. investigated the effect of explanations provided by a cooperative system when specific system failures occur during manual and partially automated driving. The study analyzed how system malfunctions affect driver trust in the system and whether the explanatory information provided by the system increases driver acceptance. The results showed that drivers’ trust in the system declined after experiencing a system failure; however, no long-term trust effects were observed [43]. Noé Monsaingeon et al. compared the features and on-road use of driver-centric and vehicle-centric HMIs to determine which approach helps drivers better understand vehicle status and functionality.
The results showed that drivers using the driver-centric HMI reacted faster to speed problems and also looked at the HMI longer and more often [44]. In response to this finding, future research should focus on identifying the information that fosters an appropriate level of trust in HMIs, because the level of trust determines the degree of human–computer interaction. Based on the above analysis, future trust level research should be conducted in depth from the perspective of the information process in human–machine interaction.

- (3) e-HMI information communication study. As an effective means of conveying information from an autonomous vehicle to its surroundings, e-HMIs can play a vital role in reducing traffic accidents by presenting as much vehicle information as possible to vulnerable road users. Y. B. Eisma et al. concluded that positioning an e-HMI display on a vehicle’s grille, windshield, and roof was the clearest option and also evoked the highest degree of compliance with the displayed information as the vehicle approached. They also concluded that projection-based e-HMIs have limitations in terms of legibility and the distribution of participants’ visual attention; the results therefore suggest that an e-HMI should be visible on a vehicle from multiple directions [45]. Debargha Dey et al. studied the type of information pedestrians seek about cars and where they look for it. Through an eye-tracking study, the team analyzed pedestrians’ gaze behavior when interacting with a manually driven vehicle during deceleration and yielding at close range. The results showed that pedestrians lose their willingness to cross a road when they are approximately 40 m away from a vehicle. They also found that a pedestrian’s decision to cross a road changes as the distance from a car changes. As a car approaches, a pedestrian’s gaze gradually moves from the bumper to the windshield. This study marked the beginning of research on pedestrian gaze behavior patterns when crossing a street [46]. Joost de Winter et al. studied what pedestrians look at when walking through a parking lot. This study showed that eye movement largely helps pedestrians pass safely through parking lots. It also found that pedestrians view all sides and features of cars. The study concluded that the future displays of self-driving cars should be visible from all directions [47].
Based on the analysis of current research, future research on e-HMI information communication should draw more on physiological data related to human eye movement to study how information is communicated and presented. Moreover, e-HMI presentation technology and the placement of e-HMI displays should also become areas of focus.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, C.; Li, J. Text Mining and Visualization in Scientific Literature, 2nd ed.; Capital University of Economics and Business Press: Beijing, China, 2017; Volume 2. [Google Scholar]

- Zhou, X.; Li, T.; Ma, X. A bibliometric analysis of comparative research on the evolution of international and Chinese green supply chain research. Environ. Sci. Pollut. Res. 2021, 28, 6302–6323. [Google Scholar] [CrossRef]

- Chen, C. CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 359–377. [Google Scholar] [CrossRef] [Green Version]

- Price, D.J.d.S. Little Science, Big Science; Columbia University Press: New York, NY, USA, 1963. [Google Scholar]

- Hataoka, N.; Kokubo, H.; Obuchi, Y.; Amano, A. Compact and robust speech recognition for embedded use on microprocessors. In Proceedings of the 2002 IEEE Workshop on Multimedia Signal Processing, Thomas, VI, USA, 9–11 December 2002. [Google Scholar] [CrossRef]

- Lin, Z.; Zhang, G.; Xiao, X.; Au, C.; Zhou, Y.; Sun, C.; Zhou, Z.; Yan, R.; Fan, E.; Si, S.; et al. A Personalized Acoustic Interface for Wearable Human–Machine Interaction. Adv. Funct. Mater. 2021, 32, 2109430. [Google Scholar] [CrossRef]

- Ge, X.; Li, X.; Wang, Y. Methodologies for evaluating and optimizing Multimodal human-machine-interface of Autonomous Vehicles. SAE Tech. Pap. Ser. 2018. [Google Scholar] [CrossRef]

- Winzer, O.M.; Dietrich, A.; Tondera, M.; Hera, C.; Eliseenkov, P.; Bengler, K. Feasibility analysis and investigation of the user acceptance of a preventive information system to increase the road safety of cyclists. In Human Systems Engineering and Design II, Proceeding of the 2nd International Conference on Human Systems Engineering and Design (IHSED2019): Future Trends and Applications, Munich, Germany, 16–18 September 2019; Springer: Cham, Switzerland, 2019; pp. 236–242. [Google Scholar] [CrossRef]

- Rößger, P.; Hofmeister, J. Human machine interfaces for advanced multi media applications in commercial vehicles. SAE Tech. Pap. Ser. 2001. [Google Scholar] [CrossRef]

- De Clercq, K.; Dietrich, A.; Núñez Velasco, J.P.; de Winter, J.; Happee, R. External human- machine interfaces on automated vehicles: Effects on pedestrian crossing decisions. Hum. Factors J. Hum. Factors Ergon. Soc. 2019, 61, 1353–1370. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bellotti, F.; Berta, R.; De Gloria, A.; Margarone, M. Using 3D sound to improve the effectiveness of the advanced driver assistance systems. Pers. Ubiquitous Comput. 2002, 6, 155–163. [Google Scholar] [CrossRef]

- Voinescu, A.; Morgan, P.L.; Alford, C.; Caleb-Solly, P. The utility of psychological measures in evaluating perceived usability of automated vehicle interfaces—A study with older adults. Transp. Res. Part F Traffic Psychol. Behav. 2020, 72, 244–263. [Google Scholar] [CrossRef]

- Li, S.; Blythe, P.; Guo, W.; Namdeo, A.; Edwards, S.; Goodman, P.; Hill, G. Evaluation of the effects of age-friendly human-machine interfaces on the driver’s takeover performance in highly automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2019, 67, 78–100. [Google Scholar] [CrossRef]

- Oh, G.; Ryu, J.; Jeong, E.; Yang, J.H.; Hwang, S.; Lee, S.; Lim, S. Drer: Deep learning-based driver’s real emotion recognizer. Sensors 2021, 21, 2166. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Vaezipour, A.; Rakotonirainy, A.; Demmel, S.; Oviedo-Trespalacios, O. Exploring drivers’ mental workload and visual demand while using an in-vehicle HMI for eco-safe driving. Accid. Anal. Prev. 2020, 146, 105756. [Google Scholar] [CrossRef]

- Ma, R.H.Y.; Morris, A.; Herriotts, P.; Birrell, S. Investigating what level of visual information inspires trust in a user of a highly automated vehicle. Appl. Ergon. 2021, 90, 103272. [Google Scholar] [CrossRef] [PubMed]

- Sánchez-Mateo, S.; Pérez-Moreno, E.; Jiménez, F. Driver monitoring for a driver-centered design and assessment of a merging assistance system based on V2V communications. Sensors 2020, 20, 5582. [Google Scholar] [CrossRef]

- Schewe, F.; Vollrath, M. Visualizing distances as a function of speed: Design and evaluation of a distance-speedometer. Transp. Res. Part F Traffic Psychol. Behav. 2019, 64, 260–273. [Google Scholar] [CrossRef]

- Moore, D.; Strack, G.E.; Currano, R.; Sirkin, D. Visualizing Implicit Ehmi for Autonomous Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands, 21–25 September 2019. [Google Scholar] [CrossRef]

- Nakagawa, T.; Nishimura, R.; Iribe, Y.; Ishiguro, Y.; Ohsuga, S.; Kitaoka, N. A Human Machine Interface Framework for Autonomous Vehicle Control. In Proceedings of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE), Las Vegus, NV, USA, 24–27 October 2017. [Google Scholar] [CrossRef]

- Antrobus, V.; Burnett, G.; Krehl, C. Driver-passenger collaboration as a basis for human- machine interface design for Vehicle Navigation Systems. Ergonomics 2016, 60, 321–332. [Google Scholar] [CrossRef]

- Tang, G.; Shi, Q.; Zhang, Z.; He, T.; Sun, Z.; Lee, C. Hybridized wearable patch as a multi-parameter and multi-functional human-machine interface. Nano Energy 2021, 81, 105582. [Google Scholar] [CrossRef]

- François, M.; Osiurak, F.; Fort, A.; Crave, P.; Navarro, J. Automotive HMI design and participatory user involvement: Review and Perspectives. Ergonomics 2016, 60, 541–552. [Google Scholar] [CrossRef]

- Bazilinskyy, P.; Beaumont, C.; van der Geest, X.; de Jonge, R.; van der Kroft, K.; de Winter, J. Blind driving by means of a steering-based predictor algorithm. In Advances in Intelligent Systems and Computing, Proceedings of the AHFE 2017 International Conference on Human Factors in Transportation, Los Angeles, CA, USA, 17–21 July 2017; Springer: Cham, Switzerland, 2017; pp. 457–466. [Google Scholar]

- Nacpil, E.J.; Zheng, R.; Kaizuka, T.; Nakano, K. Implementation of a SEMG-machine interface for steering a virtual car in a driving simulator. In Advances in Human Factors in Simulation and Modeling, Proceedings of the AHFE 2017 International Conference on Human Factors in Simulation and Modeling, Los Angeles, CA, USA, 17–21 July 2017; Springer: Cham, Switzerland, 2017; pp. 274–282. [Google Scholar] [CrossRef]

- Amditis, A.; Bertolazzi, E.; Bimpas, M.; Biral, F.; Bosetti, P.; Da Lio, M.; Danielsson, L.; Gallione, A.; Lind, H.; Saroldi, A.; et al. A holistic approach to the integration of safety applications: The INSAFES subproject within the European framework programme 6 integrating project PReVENT. IEEE Trans. Intell. Transp. Syst. 2010, 11, 554–566. [Google Scholar] [CrossRef]

- Yontem, A.O.; Li, K.; Chu, D.; Meijering, V.; Skrypchuk, L. Prospective immersive human- machine interface for future vehicles: Multiple zones turn the full windscreen into a head-up display. IEEE Veh. Technol. Mag. 2021, 16, 83–92. [Google Scholar] [CrossRef]

- Tateno, S.; Zhu, Y.; Meng, F. Hand gesture recognition system for in-car device control based on Infrared Array Sensor. In Proceedings of the 2019 58th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Hiroshima, Japan, 10–13 September 2019. [Google Scholar] [CrossRef]

- Liao, X.; Song, W.; Zhang, X.; Huang, H.; Wang, Y.; Zheng, Y. Directly printed wearable electronic sensing textiles towards human-machine interfaces. J. Mater. Chem. C 2018, 6, 12841–12848. [Google Scholar] [CrossRef]

- Clamann, M.; Aubert, M.; Cummings, M.L. Evaluation of Vehicle-to-Pedestrian Communication Displays for Autonomous Vehicles. In Proceedings of the Transportation Research Board 96th Annual Meeting, Washington, DC, USA, 8–12 January 2017. [Google Scholar]

- Walch, M.; Lange, K.; Baumann, M.; Weber, M. Autonomous driving. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 3 September 2015. [Google Scholar] [CrossRef]

- Merat, N.; Jamson, A.H.; Lai, F.C.H.; Daly, M.; Carsten, O.M.J. Transition to manual: Driver behaviour when resuming control from a highly automated vehicle. Transp. Res. Part F Traffic Psychol. Behav. 2014, 27, 274–282. [Google Scholar] [CrossRef] [Green Version]

- Banks, V.A.; Eriksson, A.; O’Donoghue, J.; Stanton, N.A. Is partially automated driving a bad idea? observations from an on-road study. Appl. Ergon. 2018, 68, 138–145. [Google Scholar] [CrossRef] [Green Version]

- Habibovic, A.; Lundgren, V.M.; Andersson, J.; Klingegård, M.; Lagström, T.; Sirkka, A.; Fagerlönn, J.; Edgren, C.; Fredriksson, R.; Krupenia, S.; et al. Communicating intent of automated vehicles to pedestrians. Front. Psychol. 2018, 9, 1336. [Google Scholar] [CrossRef]

- Dey, D.; Terken, J. Pedestrian Interaction with Vehicles: Roles of Explicit and Implicit Communication. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Oldenburg, Germany, 27 September 2017. [Google Scholar] [CrossRef]

- Dey, D.; Habibovic, A.; Pfleging, B.; Martens, M.; Terken, J. Color and animation preferences for a light band ehmi in interactions between automated vehicles and pedestrians. In Proceedings of the 2020 CHI Conference on Human Factors in Computing System, Honolulu, HI, USA, 25–30 April 2020. [Google Scholar] [CrossRef]

- Yang, H.; Zhao, Y.; Wang, Y. Identifying modeling forms of instrument panel system in intelligent shared cars: A study for perceptual preference and in-vehicle behaviors. Environ. Sci. Pollut. Res. 2019, 27, 1009–1023. [Google Scholar] [CrossRef]

- Faas, S.M.; Mathis, L.-A.; Baumann, M. External HMI for self-driving vehicles: Which information shall be displayed? Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 171–186. [Google Scholar] [CrossRef]

- Ackermans, S.; Dey, D.; Ruijten, P.; Cuijpers, R.H.; Pfleging, B. The effects of explicit intention communication, conspicuous sensors, and pedestrian attitude in interactions with automated vehicles. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020. [Google Scholar] [CrossRef]

- Pu, X.; Tang, Q.; Chen, W.; Huang, Z.; Liu, G.; Zeng, Q.; Chen, J.; Guo, H.; Xin, L.; Hu, C. Flexible triboelectric 3D touch pad with unit subdivision structure for effective XY positioning and pressure sensing. Nano Energy 2020, 76, 105047. [Google Scholar] [CrossRef]

- Yang, L.; Babayi Semiromi, M.; Xing, Y.; Lv, C.; Brighton, J.; Zhao, Y. The identification of non-driving activities with associated implication on the take-over process. Sensors 2021, 22, 42. [Google Scholar] [CrossRef]

- Ono, S.; Sasaki, H.; Kumon, H.; Fuwamoto, Y.; Kondo, S.; Narumi, T.; Tanikawa, T.; Hirose, M. Improvement of driver active interventions during automated driving by displaying trajectory pointers-a driving simulator study. Traffic Inj. Prev. 2019, 20, S152–S156. [Google Scholar] [CrossRef] [Green Version]

- Kraft, A.-K.; Maag, C.; Cruz, M.I.; Baumann, M.; Neukum, A. Effects of explaining system failures during maneuver coordination while driving manual or Automated. Accid. Anal. Prev. 2020, 148, 105839. [Google Scholar] [CrossRef]

- Monsaingeon, N.; Caroux, L.; Mouginé, A.; Langlois, S.; Lemercier, C. Impact of interface design on drivers’ behavior in partially automated cars: An on-road study. Transp. Res. Part F Traffic Psychol. Behav. 2021, 81, 508–521. [Google Scholar] [CrossRef]

- Eisma, Y.B.; van Bergen, S.; ter Brake, S.M.; Hensen, M.T.; Tempelaar, W.J.; de Winter, J.C. External human-machine interfaces: The effect of display location on crossing intentions and Eye Movements. Information 2019, 11, 13. [Google Scholar] [CrossRef] [Green Version]

- Dey, D.; Walker, F.; Martens, M.; Terken, J. Gaze patterns in pedestrian interaction with vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019.

- De Winter, J.; Bazilinskyy, P.; Wesdorp, D.; de Vlam, V.; Hopmans, B.; Visscher, J.; Dodou, D. How do pedestrians distribute their visual attention when walking through a parking garage? An eye-tracking study. Ergonomics 2021, 64, 793–805.

| Ranking | Count | Centrality | Year | Country |
|---|---|---|---|---|
| 1 | 87 | 0.34 | 2000 | Germany |
| 2 | 49 | 0.21 | 1998 | United States |
| 3 | 28 | 0.06 | 2006 | China |
| 4 | 23 | 0.06 | 2001 | Japan |
| 5 | 21 | 0.06 | 2001 | United Kingdom |
| 6 | 13 | 0 | 2007 | Netherlands |
| 7 | 12 | 0 | 2007 | Sweden |
| 8 | 11 | 0 | 2008 | France |
| 9 | 11 | 0 | 2002 | Italy |
| 10 | 10 | 0 | 2003 | South Korea |

| Ranking | Count | Centrality | Year | Institution |
|---|---|---|---|---|
| 1 | 7 | 0 | 2006 | School of Mechanical Engineering, Sungkyunkwan University |
| 2 | 5 | 0 | 2016 | Department of Psychology, Ruhr University Bochum |
| 3 | 5 | 0 | 2007 | BMW Group |
| 4 | 4 | 0 | 2013 | Department of Biomechanical Engineering and Faculty of Mechanical, Maritime, and Materials Engineering, Delft University of Technology |
| 5 | 4 | 0 | 2012 | Jaguar Land Rover |
| 6 | 4 | 0 | 2019 | Eindhoven University of Technology |
| 7 | 4 | 0 | 2014 | Institute of Ergonomics, Technical University of Munich |
| 8 | 4 | 0 | 2007 | Delft University of Technology |
| 9 | 4 | 0 | 2001 | Volkswagen AG |
| 10 | 4 | 0 | 2004 | Department of Mechanical Engineering, Bilkent University |

| Number | Key Authors | Year Range of Publication | Literature Publications | Associated Authors |
|---|---|---|---|---|
| 1 | Ying Wang | 2017–2020 | 6 | Xinyu Li |
| 2 | Klaus Bengler | 2016–2020 | 6 | André Dietrich, Michael Rettenmaier |
| 3 | Zhiwei Lin | 2022 | 1 | Zhiwei Lin, Gaoqiang Zhang, Xiao Xiao, Christian Au, Yihao Zhou, Chenchen Sun, Zhihao Zhou, Rong Yan, Endong Fan, Shaobo Si, Lei Weng, Shaurya Mathur, Jin Yang, and Jun Chen |
| 4 | N Hataoka | 2002 | 1 | A. Amano |

| Number | Cluster Label | Keywords |
|---|---|---|
| 0 | adult | adult; female; male; middle-aged; human–machine interface |
| 1 | driving simulator | driving simulator; automation; human factor; automated vehicle; human engineering |
| 2 | user interface | user interface; gesture recognition; user-centered design; adaptive cruise control (ACC); man-machine system |
| 3 | advanced driver assistance system | advanced driver assistance system; automation; in-vehicle information system; road safety; motor transportation |
| 4 | eye movement | eye movement; vehicle; visual behavior; field operation test (FOT); HMI design |
| 5 | speech recognition | speech recognition; temperature sensitive; source separation; wearable acoustic sensor; flexible sensor |
| 6 | automobile parts and equipment | automobile parts and equipment; automobile electronic equipment; collision warning algorithm; safety state; car following |
| 7 | time frequency | time frequency; motion sickness; driving cognition; brain; estimation |
| 8 | machine learning | machine learning; collision avoidance; human-automation interaction; phoning while driving; psychomotor performance |
| 9 | human–machine interface | human–machine interface; telematics; wireless telecommunication system; speech dialog system; visualization |
| 10 | helicopter | helicopter; head-mounted display; helmet-mounted display; HoloLens; pilot assistance |
| 11 | biomechanics | biomechanics; anthropometry; abdomen; motor activity; head movement |
| 12 | human–machine interface; driving simulator study | human–machine interface (HMI); driving simulator study; eye gaze; mental model; finite-state transducer |
| 13 | human-centered design | human-centered design; vehicle navigation system; ubiquitous computing; emotion detection; navigation system |

| Number | Research Hotspots |
|---|---|
| 1 | User studies of automotive HMI |
| 2 | Interface research regarding automotive human–machine interfaces |
| 3 | The study of the external environment of the automotive human–machine interface |
| 4 | The study of the technical implementation of the automotive human–machine interface |

| Stage | Topics |
|---|---|
| 1998–2008 | Driver assistance and information recognition concept building |
| 2009–2018 | Driver assistance system refinement and technology construction |
| 2019–2022 | Technology deepening and user perception research |

| First Author | Year | Article | Journal | Co-Citations | Year | Centrality | Cluster |
|---|---|---|---|---|---|---|---|
| Clamann M | 2017 | Evaluation of vehicle-to-pedestrian communication displays for autonomous vehicles [33] | Transportation Research Board | 9 | 2017 | 0 | 7 |
| Walch M | 2015 | Autonomous driving [34] | ACM Journals | 7 | 2015 | 0 | 10 |
| Merat N | 2014 | Transition to manual [35] | Transportation Research Part F | 6 | 2014 | 0 | 6 |
| Banks VA | 2018 | Is partially automated driving a bad idea? [36] | Applied Ergonomics | 6 | 2018 | 0.04 | 4 |
| Habibovic A | 2018 | Communicating intent of automated vehicles to pedestrians [37] | Frontiers in Psychiatry | 6 | 2018 | 0 | 2 |
| Dey D | 2017 | Pedestrian interaction with vehicles [38] | ACM Journals | 5 | 2017 | 0 | 7 |

| Keywords | Year | Strength | Begin | End | 1998–2022 |
|---|---|---|---|---|---|
| human–computer interaction | 1998 | 4.4 | 2001 | 2007 | ▂▂▂▃▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂ |
| user interface | 1998 | 3.88 | 2001 | 2007 | ▂▂▂▃▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂ |
| ergonomics | 1998 | 3.86 | 2001 | 2010 | ▂▂▂▃▃▃▃▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▂ |
| automobile driver | 1998 | 6.29 | 2003 | 2011 | ▂▂▂▂▂▃▃▃▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂ |
| virtual reality | 1998 | 3.82 | 2008 | 2011 | ▂▂▂▂▂▂▂▂▂▂▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂ |
| automobile simulator | 1998 | 3.44 | 2008 | 2013 | ▂▂▂▂▂▂▂▂▂▂▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂ |
| experiment | 1998 | 3.39 | 2008 | 2013 | ▂▂▂▂▂▂▂▂▂▂▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂ |
| human engineering | 1998 | 3.62 | 2017 | 2019 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃▂▂▂ |
| human factor | 1998 | 3.98 | 2018 | 2019 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▂▂▂ |
| automated vehicle | 1998 | 5.1 | 2019 | 2022 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃▃ |
| automated driving | 1998 | 4.01 | 2019 | 2022 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃▃ |
| pedestrian safety | 1998 | 3.28 | 2019 | 2022 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃▃ |
| traffic accident | 1998 | 4.79 | 2020 | 2022 | ▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃ |
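
The timeline column of the keyword burst table can be read programmatically. The following is a minimal sketch, assuming that each glyph stands for one year starting in 1998 and that "▃" marks a burst year while "▂" marks a non-burst year; the function name `burst_span` is hypothetical and is not part of any bibliometric tool.

```python
# Assumption: one glyph per year, "▃" = burst year, "▂" = non-burst year.
BURST = "▃"


def burst_span(timeline: str, first_year: int = 1998) -> tuple[int, int]:
    """Return (begin, end) years covered by the burst glyphs in a timeline."""
    years = [first_year + i for i, glyph in enumerate(timeline) if glyph == BURST]
    if not years:
        raise ValueError("timeline contains no burst years")
    return years[0], years[-1]


# Two rows copied from the table above (keyword -> timeline string).
rows = {
    "human–computer interaction": "▂▂▂▃▃▃▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂",
    "traffic accident": "▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▂▃▃▃",
}

for keyword, timeline in rows.items():
    begin, end = burst_span(timeline)
    print(f"{keyword}: {begin}-{end}")
```

Under these assumptions, the decoded spans can be compared with the Begin and End columns as a consistency check on the table.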

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Liao, X.-P.; Tu, J.-C. A Study of Bibliometric Trends in Automotive Human–Machine Interfaces. Sustainability 2022, 14, 9262. https://doi.org/10.3390/su14159262