Design Aspects in Repairability Scoring Systems: Comparing Their Objectivity and Completeness

Abstract

1. Introduction

Scoring Systems for Repairability

- The criteria for these scoring systems are publicly available in the English language.

- The evaluation method used is quantitative or at least semi-quantitative in nature, to provide a more objective assessment and enable ranked comparisons of products.

- The assessment system is the latest iteration or version released by the organisation/group.

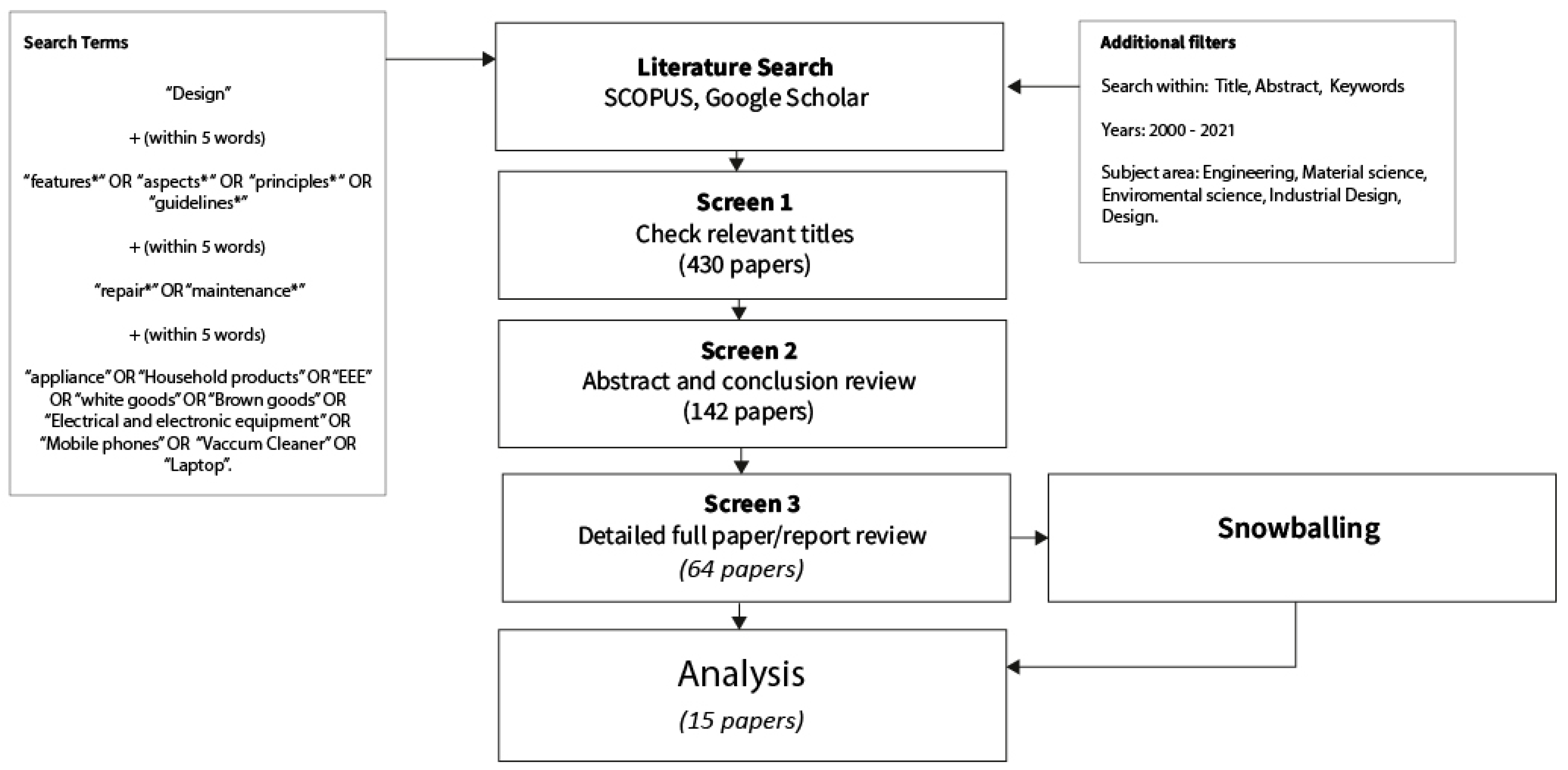

2. Method

2.1. Assessing Completeness of the Scoring Systems

2.2. Assessing Objectivity of the Scoring Systems

- Objective: Every achievable score level is clearly defined, and the testing action needed to achieve the score can be quantified and is operator-independent.

- Semi-objective: Whilst the testing action can be quantified, no clear indication is given of how each score level is achieved, causing a degree of operator dependence.

- Subjective: One or more testing actions cannot be quantified objectively; the result is operator-dependent.
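The three objectivity levels above amount to a decision rule over two properties of a criterion: whether its testing action can be quantified, and whether every score level is clearly defined. The Python sketch below encodes that reading. The `Criterion` class, its two flags, and the example criteria are hypothetical, and the flags given to the examples are illustrative rather than results of this study.

```python
from dataclasses import dataclass
from enum import Enum


class Objectivity(Enum):
    OBJECTIVE = "objective"
    SEMI_OBJECTIVE = "semi-objective"
    SUBJECTIVE = "subjective"


@dataclass(frozen=True)
class Criterion:
    """One assessment criterion of a scoring system (hypothetical structure)."""
    name: str
    testing_quantifiable: bool  # can the testing action be quantified?
    levels_defined: bool        # is it clear how each score level is achieved?


def classify(criterion: Criterion) -> Objectivity:
    """Apply the three-level objectivity rule to one criterion."""
    if not criterion.testing_quantifiable:
        # A testing action cannot be quantified: the result is operator-dependent.
        return Objectivity.SUBJECTIVE
    if not criterion.levels_defined:
        # Quantifiable test, but unclear level boundaries leave room for operator judgement.
        return Objectivity.SEMI_OBJECTIVE
    return Objectivity.OBJECTIVE


# Illustrative criteria only; the flags are assumptions, not findings.
examples = [
    Criterion("number of disassembly steps", True, True),
    Criterion("safety instructions present", True, False),
    Criterion("intuitiveness of device operation", False, False),
]
for c in examples:
    print(f"{c.name}: {classify(c).value}")
```

Checking quantifiability first reflects the definitions: a criterion with any unquantifiable testing action is subjective regardless of how well its levels are described.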

3. Results and Discussion

3.1. Aspects not Addressed or only Partially Addressed by the Scoring System

3.2. Interdependencies between Design Elements

3.3. Comparing Scoring Systems

4. Recommendations for Future Work


- Assessments of health and safety were semi-objective across the majority of the scoring systems. Therefore, there is an opportunity to develop objective criteria and testing methodologies for assessing health and safety of the user and the product during and after repair.


- The eDiM method database could be expanded and further simplified so that ease of disassembly can be measured more universally. Additionally, it should be considered whether the extra accuracy that eDiM provides compared with counting disassembly steps justifies the greater testing effort.


- Since reassembly sometimes takes longer than disassembly, ease of reassembly may need to be treated as a separate criterion whenever eDiM is not used.


- In terms of repair information content, it is important to establish what information is most critical to promote repair. Additionally, information that is dependent on specific faults/components should be addressed at the fault/component level instead of the product level.
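The trade-off between counting disassembly steps and estimating disassembly time with eDiM can be illustrated with a small sketch. In broad terms, a time-based method such as eDiM sums reference times for the elementary operations within each step, so two designs with the same number of steps can still differ in time. The operation names and reference times below are hypothetical placeholders, not values from the eDiM database.

```python
# Hypothetical reference times (seconds) for elementary disassembly operations.
# eDiM draws such values from a MOST-based database; these numbers are placeholders.
REFERENCE_TIME_S = {
    "tool_change": 4.0,
    "identify_fastener": 1.5,
    "manipulate_product": 3.0,
    "position_tool": 1.0,
    "unscrew": 5.0,
    "remove_part": 2.0,
}

# A disassembly sequence is a list of steps; each step is a list of operations.
Sequence = list[list[str]]


def step_count(sequence: Sequence) -> int:
    """Step-based metric: every step counts equally."""
    return len(sequence)


def estimated_time(sequence: Sequence) -> float:
    """Time-based metric in the spirit of eDiM: sum the reference times of all operations."""
    return sum(REFERENCE_TIME_S[op] for step in sequence for op in step)


# Two hypothetical designs with the same number of steps.
design_a = [["position_tool", "unscrew", "remove_part"]] * 3
design_b = [
    ["tool_change", "identify_fastener", "position_tool", "unscrew", "remove_part"],
    ["manipulate_product", "position_tool", "unscrew", "remove_part"],
    ["identify_fastener", "remove_part"],
]

for name, seq in [("A", design_a), ("B", design_b)]:
    print(f"design {name}: {step_count(seq)} steps, {estimated_time(seq):.1f} s")
```

With these placeholder times, both designs score 3 steps, but design A takes 24.0 s and design B 28.0 s. The step count cannot separate them; the time estimate can, at the cost of needing reference data for every operation. The same summation could be applied to a reassembly sequence to score reassembly separately.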

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bakker, C.; Wang, F.; Huisman, J.; den Hollander, M. Products that go round: Exploring product life extension through design. J. Clean. Prod. 2014, 69, 10–16.

- Baldé, C.; Forti, V.; Gray, V.; Kuehr, R.; Stegmann, P. Suivi des Déchets d’équipements Électriques et Électroniques à l’échelle Mondiale 2017: Quantités, Flux et Ressources; UNITAR: Geneva, Switzerland, 2017.

- OECD. Material Resources, Productivity and the Environment; OECD: Paris, France, 2015; pp. 1–14.

- European Commission. Circular Economy Action Plan. 2020. Available online: https://ec.europa.eu/environment/topics/circular-economy/first-circular-economy-action-plan_en (accessed on 10 August 2021).

- Sanfelix, J.; Cordella, M.; Alfieri, F. Methods for the Assessment of the Reparability and Upgradability of Energy-Related Products: Application to TVs Final Report; European Commission Publications Office: Seville, Spain, 2019.

- Bracquené, E.; Brusselaers, J.; Dams, Y.; Peeters, J.; de Schepper, K.; Duflou, J.; Dewulf, W. ASMER BENELUX Repairability Criteria for Energy Related Products; Study in the BeNeLux Context to Evaluate the Options to Extend the Product Life Time; BeNeLux: Brussels, Belgium, 2018.

- ONR 192102; Label of Excellence for Durable, Repair Friendly, Designed Electrical and Electronic Appliances. Beuth Publishing: Berlin, Germany, 2014.

- Flipsen, B.; Huisken, M.; Opsomer, T.; Depypere, M. IFIXIT Smartphone Reparability Scoring: Assessing the Self-Repair Potential of Mobile ICT Devices. PLATE Conf. 2019, 2019, 18–20.

- iFixit. Smartphone Repairability Scores 2021. Available online: https://www.ifixit.com/smartphone-repairability (accessed on 2 August 2021).

- Ademe, M.H.; Ciarabelli, L.; Alma, D.; Eric, E.W.M.; Virginie, L.; Guillaume, D.; Benjamin, M.; Astrid, L.F. Benchmark International Du Secteur De La Reparation; Agence de l’Environnement et de la Maîtrise de l’Énergie: Paris, France, 2018.

- Franceschini, F.; Galetto, M.; Maisano, D. Management by Measurement: Designing Key Indicators and Performance Measurement Systems: With 87 Figures and 62 Tables; Springer: Berlin/Heidelberg, Germany, 2010.

- Bracquené, E.; Peeters, J.R.; Burez, J.; de Schepper, K.; Duflou, J.R.; Dewulf, W. Repairability evaluation for energy related products. Procedia CIRP 2019, 80, 536–541.

- Bracquené, E.; Peeters, J.; Alfieri, F.; Sanfelix, J.; Duflou, J.; Dewulf, W.; Cordella, M. Analysis of evaluation systems for product repairability: A case study for washing machines. J. Clean. Prod. 2021, 281, 125122.

- Indice de Réparabilité. 2021. Available online: https://www.ecologie.gouv.fr/indice-reparabilite (accessed on 19 April 2022).

- EN 45554. General Methods for the Assessment of the Ability to Repair, Reuse and Upgrade Energy-Related Products; European Committee for Electrotechnical Standardization: Brussels, Belgium, 2021.

- Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the EASE ’14: 18th International Conference on Evaluation and Assessment in Software Engineering, London, UK, 13–14 May 2014.

- Bovea, M.D.; Pérez-Belis, V. Identifying design guidelines to meet the circular economy principles: A case study on electric and electronic equipment. J. Environ. Manag. 2018, 228, 483–494.

- Den Hollander, M.C. Design for Managing Obsolescence: Design Methodology for Preserving Product Integrity in a Circular Economy. Ph.D. Thesis, Delft University of Technology, Delft, The Netherlands, 2018.

- EN 62542:2013; Environmental Standardization for Electrical and Electronic Products and Systems—Glossary of Terms. European Committee for Electrotechnical Standardization: Brussels, Belgium, 2013.

- Vanegas, P.; Peeters, J.R.; Cattrysse, D.; Tecchio, P.; Ardente, F.; Mathieux, F.; Dewulf, W.; Duflou, J.R. Ease of disassembly of products to support circular economy strategies. Resour. Conserv. Recycl. 2018, 135, 323–334.

- Peeters, J.R.; Tecchio, P.; Vanegas, P. eDIM: Further Development of the Method to Assess the Ease of Disassembly and Reassembly of Products: Application to Notebook Computers; Publications Office of the European Union: Luxembourg, 2018.

- Bonvoisin, J.; Halstenberg, F.; Buchert, T.; Stark, R. A systematic literature review on modular product design. J. Eng. Des. 2016, 27, 488–514.

- Pozo Arcos, B.; Bakker, C.A.; Flipsen, B.; Balkenende, R. Practices of Fault Diagnosis in Household Appliances: Insights for Design. J. Clean. Prod. 2020, 265, 121812.

- Cordella, M.; Sanfelix, J.; Alfieri, F. Development of an Approach for Assessing the Reparability and Upgradability of Energy-related Products. Procedia CIRP 2018, 69, 888–892.

- Pozo Arcos, B.; Bakker, C.A.; Flipsen, B.; Balkenende, R. Faults in consumer products are difficult to diagnose, and design is to blame: A user observation study. J. Clean. Prod. 2021, 319, 128741.

- Dangal, S.; van den Berge, R.; Pozo Arcos, B.; Faludi, J.; Balkenende, R. Perceived capabilities and barriers for do-it-yourself repair. In Proceedings of the 4th PLATE 2021 Conference, Virtual, 26–28 May 2021.

- Moss, M. Designing for Minimal Maintenance Expense: The Practical Application of Reliability and Maintainability. Quality and Reliability Series Part 1; Marcel Dekker: New York, NY, USA, 1985.

- Perera, H.S.C.; Nagarur, N.; Tabucanon, M.T. Component part standardization: A way to reduce the life-cycle costs of products. Int. J. Prod. Econ. 1999, 60, 109–116.

- Deloitte. Study on Socioeconomic Impacts of Increased Reparability—Final Report; Prepared for the European Commission, DG ENV; Publications Office of the European Union: Luxembourg, 2016.

- Shahbazi, S.; Jönbrink, A.K. Design guidelines to develop circular products: Action research on nordic industry. Sustainability 2020, 12, 3679.

- Pérez-Belis, V.; Braulio-Gonzalo, M.; Juan, P.; Bovea, M.D. Consumer attitude towards the repair and the second-hand purchase of small household electrical and electronic equipment. A Spanish case study. J. Clean. Prod. 2017, 158, 261–275.

- Keoleian, G.; Menerey, D. Life Cycle Design Guidance Manual: Environmental Requirements and the Product System; Office of Research and Development: Washington, DC, USA, 1993.

- Viegand Maagøe A/S; Van Holsteijn en Kemna B.V. Review Study on Vacuum Cleaners Final Report; European Commission, Directorate-General for Energy: Brussels, Belgium, 2019.

- Tecchio, P.; Ardente, F.; Mathieux, F. Understanding lifetimes and failure modes of defective washing machines and dishwashers. J. Clean. Prod. 2019, 215, 1112–1122.

- Sabbaghi, M.; Cade, W.; Behdad, S.; Bisantz, A.M. The current status of the consumer electronics repair industry in the U.S.: A survey-based study. Resour. Conserv. Recycl. 2017, 116, 137–151.

- Dewberry, E.; Saca, L.; Moreno, M.; Sheldrick, L.; Sinclair, M. A Landscape of Repair. Sustain Innov. 2016, 2016, 76–85.

- iFixit. Repair Market Observations from iFixit; iFixit: San Luis Obispo, CA, USA, 2019.

- Flipsen, B.; Bakker, C.; van Bohemen, G. Developing a reparability indicator for electronic products. In Proceedings of the 2016 Electron Goes Green 2016+ (EGG), Berlin, Germany, 6–9 September 2016; pp. 1–9.

- Jaeger-Erben, M.; Frick, V.; Hipp, T. Why do users (not) repair their devices? A study of the predictors of repair practices. J. Clean. Prod. 2020, 286, 125382.

- Ackermann, L.; Mugge, R.; Schoormans, J. Consumers’ perspective on product care: An exploratory study of motivators, ability factors, and triggers. J. Clean. Prod. 2018, 183, 380–391.

- Peeters, J.R.; Vanegas, P.; Cattrysse, D.; Tecchio, P.; Mathieux, F.; Ardente, F. Study for a Method to Assess the Ease of Disassembly of Electrical and Electronic Equipment. Method Development and Application to a Flat Panel Display Case Study; Publications Office of the European Union: Luxembourg, 2016.

- Laitala, K.; Klepp, I.G.; Haugrønning, V.; Throne-Holst, H.; Strandbakken, P. Increasing repair of household appliances, mobile phones and clothing: Experiences from consumers and the repair industry. J. Clean. Prod. 2021, 282, 125349.

- Willems, G. Electronics Design-for-eXcellence Guideline, Design-for-Robustness of Electronics; IMEC: Louvain, Belgium, 2019; pp. 1–36.

- Ingemarsdotter, A.E.; Stolk, M.; Balkenende, R. Design for Safe Repair in a Circular Economy; Technical University Delft: Delft, The Netherlands, 2021.

- Svensson-Hoglund, S.; Richter, J.L.; Maitre-Ekern, E.; Russell, J.D.; Pihlajarinne, T.; Dalhammar, C. Barriers, enablers and market governance: A review of the policy landscape for repair of consumer electronics in the EU and the U.S. J. Clean. Prod. 2021, 288, 125488.

- Cordella, M.; Alfieri, F.; Sanfelix, J. Analysis and Development of a Scoring System for Repair and Upgrade of Products—Final Report; European Commission Publications Office: Seville, Spain, 2019.

- Zandin, K.B. MOST Work Measurement Systems, 4th ed.; Taylor & Francis Group: Abingdon, UK, 2002.

| Scoring System | Mainly Based on | Products That Can Be Tested | Details |
|---|---|---|---|
| EN 45554 (2020) | | All EEE | The general method of assessment for repair, reuse, and upgrade. Provides a generic set of tools and is not tailored to specific products. Intended for both professional repairers and self-repairers. |
| FRI (2020) | | Washing machines, TVs, laptops, smartphones, lawnmowers | Based on five criteria: documentation, disassembly, spare part availability, spare part price, and additional product-based criteria. Intended for both professional repairers and self-repairers. |
| iFixit (2019) | | Mobile phones | Eight criteria focused on assessing ease of self-repair. |
| RSS (2019) | | Vacuum cleaners (VCs), laptops, TVs, mobile phones, washing machines (WMs), dishwashers (DWs) | Assessment of repairability, reusability, and upgradability. Intended for professional repairers. |
| AsMer (2018) | | All EEE | Based on five main repair steps: product identification, failure diagnosis, disassembly and reassembly, spare part replacement, and restoration to working condition. Three repairability criteria: information provision, product design, and service. Intended for professional repairers and self-repairers. |
| ONR 192102 (2014) | | Brown goods and white goods | Assessment of both durability and repairability. Criteria relate to product design and to the provision of information and services. Intended for professional repairers. |

| Design Features and Principles | Definition and How It Relates to Repair |
|---|---|
| Disassembly | The product is taken apart so that it can subsequently be reassembled and made operational [19]. Required to access components for most repairs [20]. |
| Reassembly | Assembling a product to its original configuration after disassembly [21]. Required to return a product to operation. |
| Fastener removability and reusability | Facilitation of removability of fasteners while ensuring that there is no impairment of the parts [or product] due to the process. Required for disassembly and ease of reassembly. |
| Fastener visibility | Whether more than 0.5 mm² of the fastener surface area is visible when looking in the fastening direction [20], and the presence of visual cues [8]. Facilitates product disassembly. |
| Tools required | Number and type of tools necessary for repair of the product [15]. |
| Modularity | The product design is composed of different modules. A module can consist of one or more components. Modules can be separated from the rest of the product as self-contained, semi-autonomous chunks, and they can be recombined with other components [22]. Modularity improves diagnosis [23], product disassembly [24], and spare part price. The degree of modularity needs to be balanced: bundling components into bigger modules decreases disassembly time but makes spare parts more expensive, and vice versa. |
| Diagnosis | Process of isolating the reason for product failure. Diagnosis is facilitated by designed signals (text, light, sound, or movement) [23]. Even without these features, visible surfaces and component accessibility for inspection can also promote failure isolation [25]. |
| Health and safety | Health and safety risks to the user during and after repair. Features minimizing safety risks also increase confidence in product disassembly and reassembly [26]. |
| Standard parts and interface | Enforcing “the conformance of commonly used parts and assemblies to generally accepted design standards for configuration, dimensional tolerances, performance ratings, and other functional design attributes” [27]. Standardization beneficially affects spare part cost and availability, tooling, component identification complexity, and skill levels required, and increases the interchangeability of components during maintenance and repair [28]. |
| Information accessibility | Information available to the product user and repairers. Whilst this is not directly a design element, manuals and labels are provided with the product. Guides the repair process [23,25,29,30,31]. |
| Design simplicity/complexity | A minimal number of disassembly steps and/or minimal disassembly time [24], and simplicity in understanding the interface and malfunction feedback to assist failure diagnosis [25]. |
| Adaptability/upgradability | Adaptability allows performance of the designed functions in a changing environment. Upgrading enhances the functionality of a product [18]. Software-related issues in the product can sometimes be repaired through updates. |
| Ease of handling | Features such as small size, low centre of gravity, and the presence of handles all promote ease of product handling [17,18]. Facilitates the disassembly process during product manipulation. |
| Interchangeability | Assuring components can be replaced in the field with no reworking required to achieve a physical fit. Allows for component testing [23,25] and facilitates component replacement. |
| Robustness | Selecting designs that are robust. Assures products do not break during repair [8]; increases confidence during disassembly [25]. |
| Redundancy | Providing an excess of functionality and/or material in products or parts. Allows removal of material as part of a recovery intervention [32]. Functional redundancy assists fault location and isolation [23]. |
| Firmware reset | Software- and electronics-related issues can be fixed via a reset [33]. Reset functions facilitate cause-oriented diagnosis [23]. |
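The fastener-visibility definition in the table above is a simple threshold test. The sketch below applies it to individual fasteners and, as an additional assumption not taken from any scoring system, summarises a product by the share of its fasteners that pass. The measured areas are hypothetical.

```python
# Threshold from the fastener-visibility definition: more than 0.5 mm² visible.
VISIBILITY_THRESHOLD_MM2 = 0.5


def is_visible(visible_area_mm2: float) -> bool:
    """A fastener counts as visible if more than 0.5 mm² of its surface can be seen
    when looking in the fastening direction (strictly greater than)."""
    return visible_area_mm2 > VISIBILITY_THRESHOLD_MM2


def visible_share(visible_areas_mm2: list[float]) -> float:
    """Fraction of a product's fasteners that pass the visibility threshold
    (a hypothetical summary measure)."""
    if not visible_areas_mm2:
        raise ValueError("no fasteners given")
    return sum(is_visible(a) for a in visible_areas_mm2) / len(visible_areas_mm2)


# Hypothetical measured visible areas (mm²) for four fasteners.
areas = [3.2, 0.5, 0.0, 1.1]
print(visible_share(areas))  # 0.5: the 0.5 mm² fastener does not exceed the threshold
```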

| Design aspect related to repairability | Scoring System | Literature | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| EN 45554 | RSS(JRC) | AsMer (Benelux) | ONR 192102 | FRI | iFixit 2018 | Bovea et al. [17] | Den Hollander [18] | Shahbazi et al. [30] | Tecchio et al. [34] | Pozo Arcos et al. [23] | Pérez-Belis et al. [31] | Deloitte [29] | Sabbaghi et al. [35] | Dewberry et al. [36] | iFixit [37] | Flipsen et al. [38] | Jaeger-Erben et al. [39] | Ackermann et al. [40] | Peeters et al. [41] | Laitala et al. [42] | |

| Disassembly | • | • | • | • | • | • | 24 | 6 | • | • | • | • | • | • | • | • | • | • | |||

| Reassembly | • | • | • | 6 | • | ||||||||||||||||

| Fastener removability and Reusability | • | • | • | • | 16 | • | • | • | • | • | • | ||||||||||

| Fastener Visibility | • | • | 11 | • | |||||||||||||||||

| Tools Required | • | • | • | • | • | • | 3 | • | • | • | • | • | • | • | |||||||

| Modularity | • | • | 13 | 5 | • | • | • | ||||||||||||||

| Diagnosis | ⃝ | ⃝ | ⃝ | ⃝ | 1 | 3 | • | • | • | • | • | • | |||||||||

| Health and safety risk (design) | ⃝ | ⃝ | ⃝ | • | • | ||||||||||||||||

| Standard parts and interface | • | • | • | • | 4 | • | |||||||||||||||

| Repair Information to user | • | • | • | • | • | • | • | • | • | • | • | • | • | • | • | ||||||

| Updatability/Adaptability | • | • | • | 28 | 2 | • | |||||||||||||||

| Design simplicity/complexity | • | 29 | 1 | • | • | • | • | ||||||||||||||

| Handling | 7 | 1 | |||||||||||||||||||

| Interchangeability | 2 | • | • | ||||||||||||||||||

| Material selection/robustness | 1 | • | • | ||||||||||||||||||

| Redundancy | 2 | ||||||||||||||||||||

| Firmware Reset | • | • | • | • | • | • | |||||||||||||||
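The table above appears to distinguish aspects that a scoring system addresses (•) from aspects it addresses only partially (⃝). One way to condense such marks into a single completeness figure is a weighted share of the design aspects covered. In this sketch, the 0.5 weight for partial coverage and the example marks are assumptions for illustration, not values read from the table or the method used in this study.

```python
from enum import Enum


class Coverage(Enum):
    NONE = "not addressed"
    PARTIAL = "partially addressed"  # cf. the ⃝ marks
    FULL = "addressed"               # cf. the • marks


# Assumed weights: counting a partial mark as half is an illustrative choice.
WEIGHTS = {Coverage.NONE: 0.0, Coverage.PARTIAL: 0.5, Coverage.FULL: 1.0}


def completeness(marks: dict[str, Coverage], aspects: list[str]) -> float:
    """Weighted share of the listed design aspects that one scoring system covers.
    Aspects missing from `marks` are treated as not addressed."""
    if not aspects:
        raise ValueError("no aspects given")
    return sum(WEIGHTS[marks.get(a, Coverage.NONE)] for a in aspects) / len(aspects)


ASPECTS = ["Disassembly", "Reassembly", "Diagnosis", "Health and safety risk (design)"]
# Hypothetical marks for one scoring system.
example_marks = {
    "Disassembly": Coverage.FULL,
    "Reassembly": Coverage.FULL,
    "Diagnosis": Coverage.PARTIAL,
}
print(completeness(example_marks, ASPECTS))  # 0.625
```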

| Design Element | | EN 45554 | RSS (JRC) | AsMer (Benelux) | ONR 192102 | FRI | iFixit | Testing Method Details |
|---|---|---|---|---|---|---|---|---|
| Disassembly | Test | Dis. time or # steps | Dis. time or # steps | Dis. time | Dis. possibility | Dis. # steps | Dis. time, path | • Dis. required • “Possibility” = possibility of full Dis. • * = c. with reference value • cont.: continuous levels |
| | Scoring levels | Levels not determined; Dis. step or time (eDiM) * | 4 levels of Dis. step/time (eDiM) | 4 levels of Dis. step/time (eDiM) | 10 levels for possibility of Dis.; 5 levels of Dis. effort | 4 levels of Dis. step | Continuous Dis. time, path of entry * | |
| Reassembly | Test | Rea. time | Rea. time, c. info | Rea. time | ------ | ------ | ------ | • Dis. & Rea. required • “c. info” = check information on Rea. • * = c. with reference value |
| | Scoring levels | Rea. time (eDiM) * | 2: Description of Rea., Rea. time (eDiM) * | 4: Rea. time (eDiM) * | N/A | N/A | N/A | |
| Modularity | Test | ------ | ------ | Dis. ability | Dis. ability | ------ | ------ | • Dis. & c. disassembly & possibility for critical components to be reducible |
| | Scoring levels | N/A | N/A | 3: 50% replaceable, 75% replaceable, all replaceable | 10: all reducible to individual components | N/A | N/A | |
| Fastener type | Test | Dis. & c. type | Dis. & c. type | ------ | Dis. & c. type | Dis. & c. type | ------ | • Disassemble & check fastener type • * = (reusable > removable > non-removable) |
| | Scoring levels | 3 * | 3 * | ------ | 10: Non-removable | 3 * | N/A | |
| Fastener visibility | Test | Dis. & c. visibility | ------ | ------ | ------ | ------ | Dis. & c. visibility | • check fastener visibility during Dis. • Dis. required |
| | Scoring levels | 3: Visible, not visible > hidden | N/A | N/A | N/A | N/A | 3: Highlighted, visible, not visible | |
| Tools required | Test | Dis. & c. tools | Dis. & c. tools | Dis. & c. tools | Dis. & c. tools | Dis. & c. tools | Dis. & c. tools | • check tools needed during Dis. • Dis. required • “prop.” = proprietary |
| | Scoring levels | 4: No or basic, product specific, commercially available, prop., not removable | 3: Basic, product specific, prop. | 3: Basic, product specific, prop. | 5: Intuitive device operation | 4: Basic or supplied, product specific, prop., not removable | 4: Basic, product specific, prop., requires heat gun | |
| Diagnosis | Test | cause f. & c. interface operability, c. interface, c. available documents | cause f. & c. interface operability, c. interface, c. available documents | cause f. & c. interface operability, c. interface | cause f. & c. interface operability | ------ | ------ | • “f.” = fault • document availability could be via manual, official website or service centre call |
| | Scoring levels | 4: Intuitive, coded, additional software/hardware & prop. | 4: Intuitive, coded, additional software/hardware & prop. | 4: Intuitive interface, coded, additional software/hardware & prop. | 10: display & test mode; 10: low-level operation, operation after cover removal | ------ | N/A | |
| Health & safety risk during repair (design) | Test | ------ | c. mfr. instructions | ------ | Dis. & c. features | ------ | Dis. & c. features | Instructions could be provided via manual, official website or service centre call |
| | Scoring levels | N/A | 1: Instruction from mfr. | N/A | 5: Protection in control processors; 4: danger warning signs; 5: warnings on sensitive components | N/A | 8: Battery case type, adhesive use & type, requirement of heating & sharp tools | |
| Working environment (safety) | Test | c. mfr. instruction | c. mfr. instruction | ------ | ------ | ------ | ------ | check instruction from mfr. for the work environment required for repair (via manual, official website or service centre call) |
| | Scoring levels | 3: Any condition, workshop, production environment | 3: Any condition, workshop, production environment | N/A | N/A | N/A | N/A | |
| Skill required (safety) | Test | c. mfr. instruction | c. mfr. instruction | ------ | ------ | ------ | ------ | check instruction from mfr. for the skill required for repair (via manual, official website or service centre call) |
| | Scoring levels | 4: Layman, generalist, expert, mfr., not feasible | 3: Layman, expert, mfr. | N/A | N/A | N/A | N/A | |
| Information media | Test | ------ | ------ | c. info media | c. info media | ------ | c. info media | check information media as listed in the criteria |
| | Scoring levels | N/A | N/A | 4: Attached to product, manual, website, not available | 4: Attached to product, manual, website, toll-free contact support, local-fee contact support | N/A | 3: Attached to product, video, on website | |
| Information content | Test | c. mfr. instructions, c. media | mfr., c. media | mfr., c. media | mfr., c. media | mfr. | c. media | • check actual availability in different media • check manufacturer’s declaration |
| | Scoring levels | 9: c. presence (Table 5) | 9: c. presence (Table 5) | 9: c. presence (Table 5) | 13: c. presence (Table 5) | 13: c. presence (Table 5) | 5: c. presence (Table 5) | |
| Std. parts & interface | Test | c. mfr. info. | c. mfr. info. | c. mfr. info. | Dis. & c. type | ------ | ------ | • check manufacturer information • “std.” = standardised • “prop.” = proprietary |
| | Scoring levels | 3: std. part & interface, prop. part with std. interface, prop. part with non-std. interface | 2: non-prop. & has a std. interface, prop. or lacks std. interface | 3: all parts std., few parts std., no std. parts | 2: std. interface, non-std. interface | N/A | N/A | |
| Reset (firmware & card) | Test | c. possibility | c. possibility, c. information | c. information | c. possibility, c. information | c. instruction | ------ | • c. possibility to reset by trying to reset the product • c. information & instruction on firmware reset |
| | Scoring levels | 4: Integrated, external, service, not possible | 4: Integrated, external, service, not possible | 1: Possibility to reset | 1: Possibility to reset | 1: Possibility to reset | N/A | |
| Design simplicity | Test | ------ | ------ | ------ | operate & c. | ------ | ------ | operate: operate the device & c. intuitiveness |
| | Scoring levels | N/A | N/A | N/A | 5: Intuitive device operation | N/A | N/A | |
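Many rows in the table above define a small number of ordered levels, for example reusable > removable > non-removable fasteners, or basic, product-specific, and proprietary tools in RSS. The sketch below maps such ordinal levels onto a 0 to 1 scale. Equal spacing between levels and rating a product by its least favourable observation are assumptions chosen for illustration, not necessarily the rules used by any of the listed scoring systems.

```python
# Ordinal levels listed from least to most favourable for repair.
FASTENER_LEVELS = ["non-removable", "removable", "reusable"]
TOOL_LEVELS = ["proprietary", "product specific", "basic"]


def level_score(observed: str, levels: list[str]) -> float:
    """Map an ordinal level to [0, 1], assuming equally spaced levels.
    Raises ValueError if `observed` is not one of `levels`."""
    if len(levels) < 2:
        raise ValueError("need at least two levels")
    return levels.index(observed) / (len(levels) - 1)


def product_score(observations: list[str], levels: list[str]) -> float:
    """Assumed aggregation: rate the product by its least favourable observation."""
    return min(level_score(o, levels) for o in observations)


fasteners_seen = ["reusable", "removable", "reusable"]
print(product_score(fasteners_seen, FASTENER_LEVELS))  # 0.5
print(level_score("basic", TOOL_LEVELS))  # 1.0
```

A weakest-link rule reflects that a single non-removable fastener can block a repair; an average would instead reward designs where most fasteners are favourable.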

| Information Availability | Scoring System | |||||

|---|---|---|---|---|---|---|

| EN 45554 | RSS (JRC) | AsMer | ONR 192102 | FRI | iFixit | |

| Features being claimed in update | • | |||||

| Update method | • | |||||

| Documentation of updates offered after the point of sale | • | |||||

| repair Instructions/manual/bulletin | • | • | • | • | ||

| Product identification | • | • | • | |||

| Component identification | • | |||||

| exploded view | • | • | • | • | • | |

| Regular maintenance instructions | • | • | • | |||

| Diagnosis information/testing procedure/ Troubleshooting chart | • | • | • | • | • | |

| Repair/Upgrade service offered by the manufacturer | • | • | ||||

| safety measures related to use, maintenance, and repair | • | • | • | • | ||

| List of available updates | • | |||||

| Disassembly instruction | • | • | • | • | ||

| Reassembly sequence | • | |||||

| Product identification | • | |||||

| Fault detection software | • | |||||

| PCB/Electronic board diagram | • | • | ||||

| Error codes | • | • | • | • | ||

| 3D printing of spare parts | • | |||||

| Reconditioning | • | |||||

| Procedure to reset to working condition | • | • | • | • | • | |

| Service centre accessibility | • | |||||

| Transportation instructions | • | |||||

| Circuit/Wiring diagram | • | • | • | |||

| Replacement supplier/supply information | • | • | • | |||

| Tools required | • | • | • | |||

| Service plan of electrical boards | • | |||||

| Training materials for repair | • | • | ||||

| Recommended torque for fasteners | • | |||||

| Compatibility of parts with other products | • | |||||

| functional specification of parts | • | |||||

| reference values for measurements | • | |||||
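The table above is essentially a presence checklist: each scoring system asks whether particular items of repair information are available. A minimal sketch of scoring such a checklist as the share of required items that a manufacturer provides is shown below. The required-item subset and the provided set are hypothetical, and the actual systems define their own item lists and scoring rules.

```python
# Hypothetical subset of the information items listed in the table above.
REQUIRED_ITEMS = {
    "exploded view",
    "disassembly instruction",
    "error codes",
    "circuit/wiring diagram",
    "procedure to reset to working condition",
}


def coverage(provided: set[str], required: set[str]) -> float:
    """Share of required information items that are actually available."""
    if not required:
        raise ValueError("required item list is empty")
    return len(provided & required) / len(required)


# Hypothetical manufacturer offering; items outside the checklist are ignored.
provided = {"exploded view", "error codes", "user manual"}
print(coverage(provided, REQUIRED_ITEMS))  # 0.4
```

The same function could be applied per fault or per component, using a separate required set for each, which would address fault-specific information at the component level rather than the product level.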


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dangal, S.; Faludi, J.; Balkenende, R. Design Aspects in Repairability Scoring Systems: Comparing Their Objectivity and Completeness. Sustainability 2022, 14, 8634. https://doi.org/10.3390/su14148634
