Learning Loss Recovery Dashboard: A Proposed Design to Mitigate Learning Loss Post Schools Closure

Abstract

1. Introduction

2. Related Work and Background (Needs for Designing a Learning Analytics Dashboard—LAD)

3. Theoretical Background

3.1. The Design Thinking Approach

3.2. Backward Instructional Design

4. Methodology

- How can potential learning loss after the COVID-19 pandemic be mitigated by using the design-thinking approach to redesign the learning experience in an LMS?

- How can learners’ experience with the LMS be adapted to support recovery from potential learning loss after the COVID-19 pandemic?

5. System Architecture (Designing a Learning Analytics Dashboard—LAD)

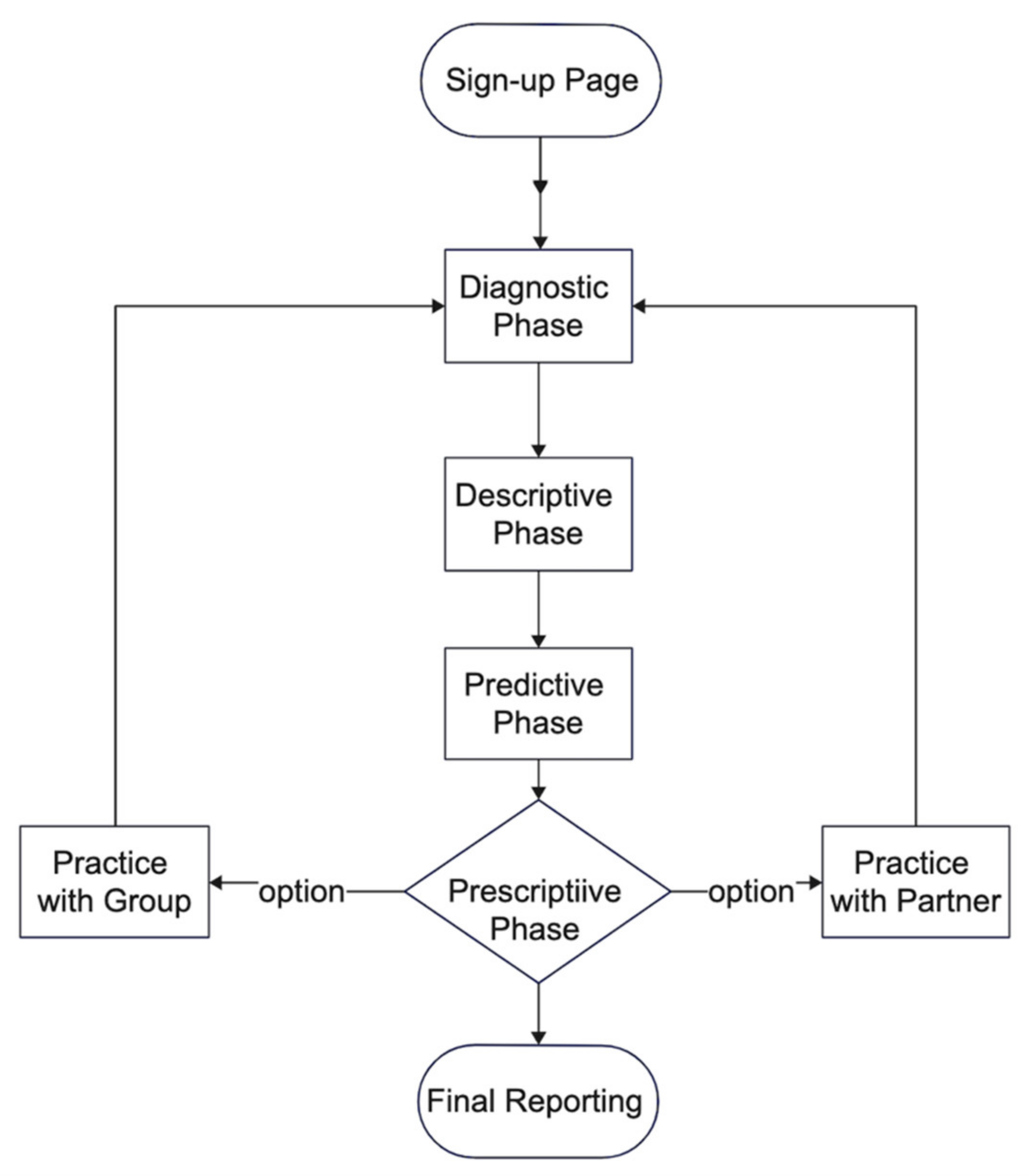

5.1. Prototype Architecture

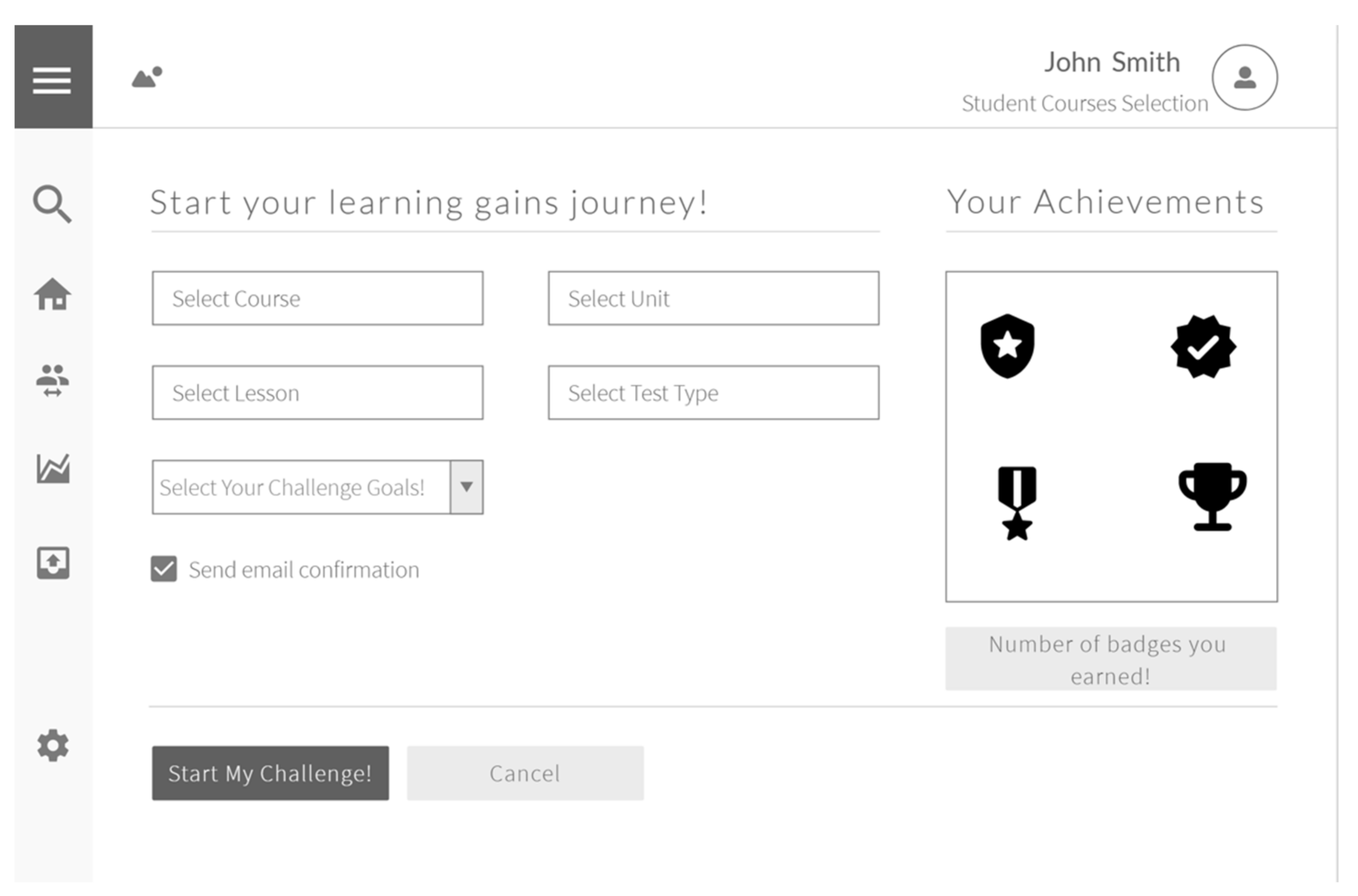

5.2. Sign-Up Page

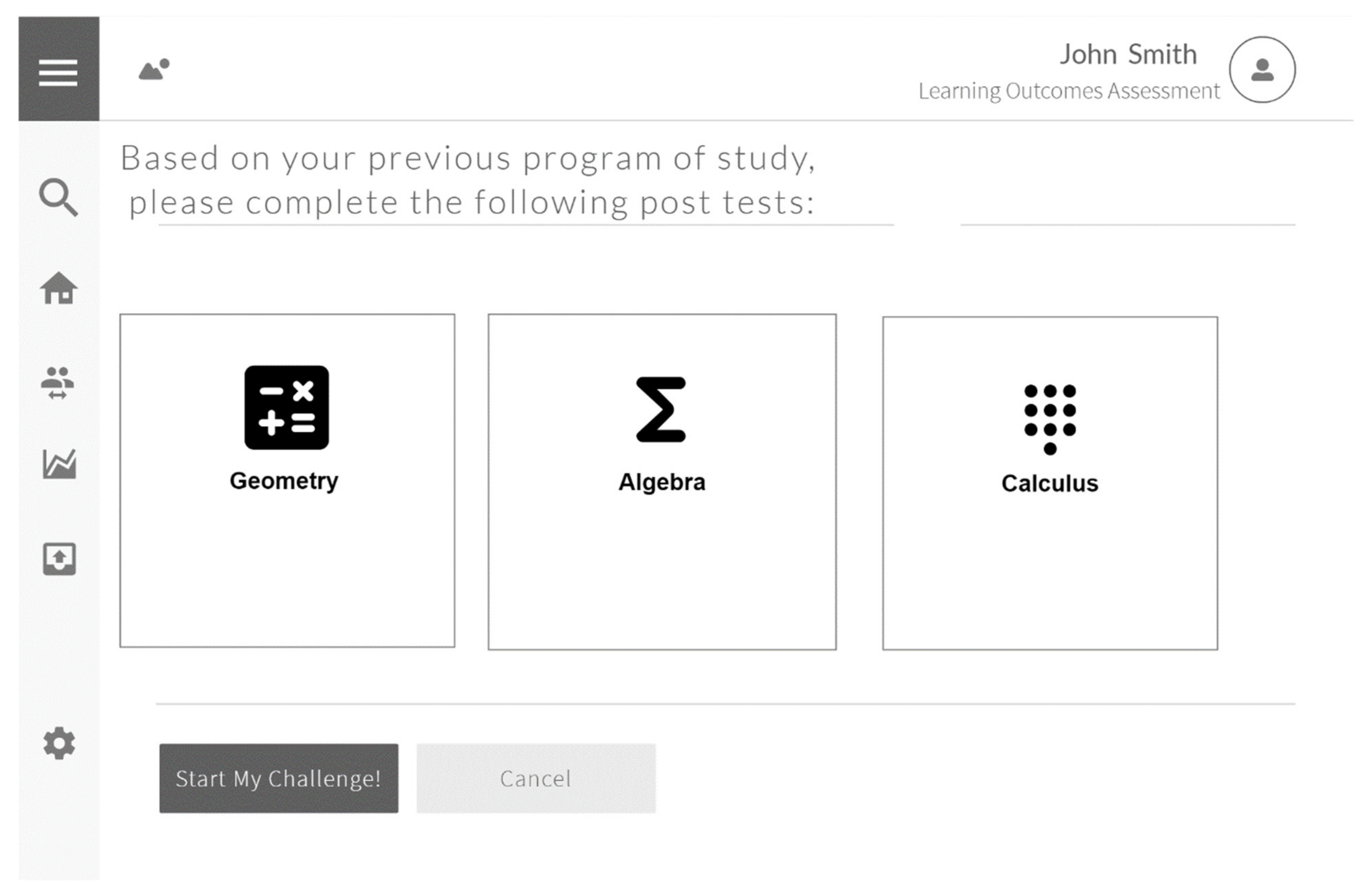

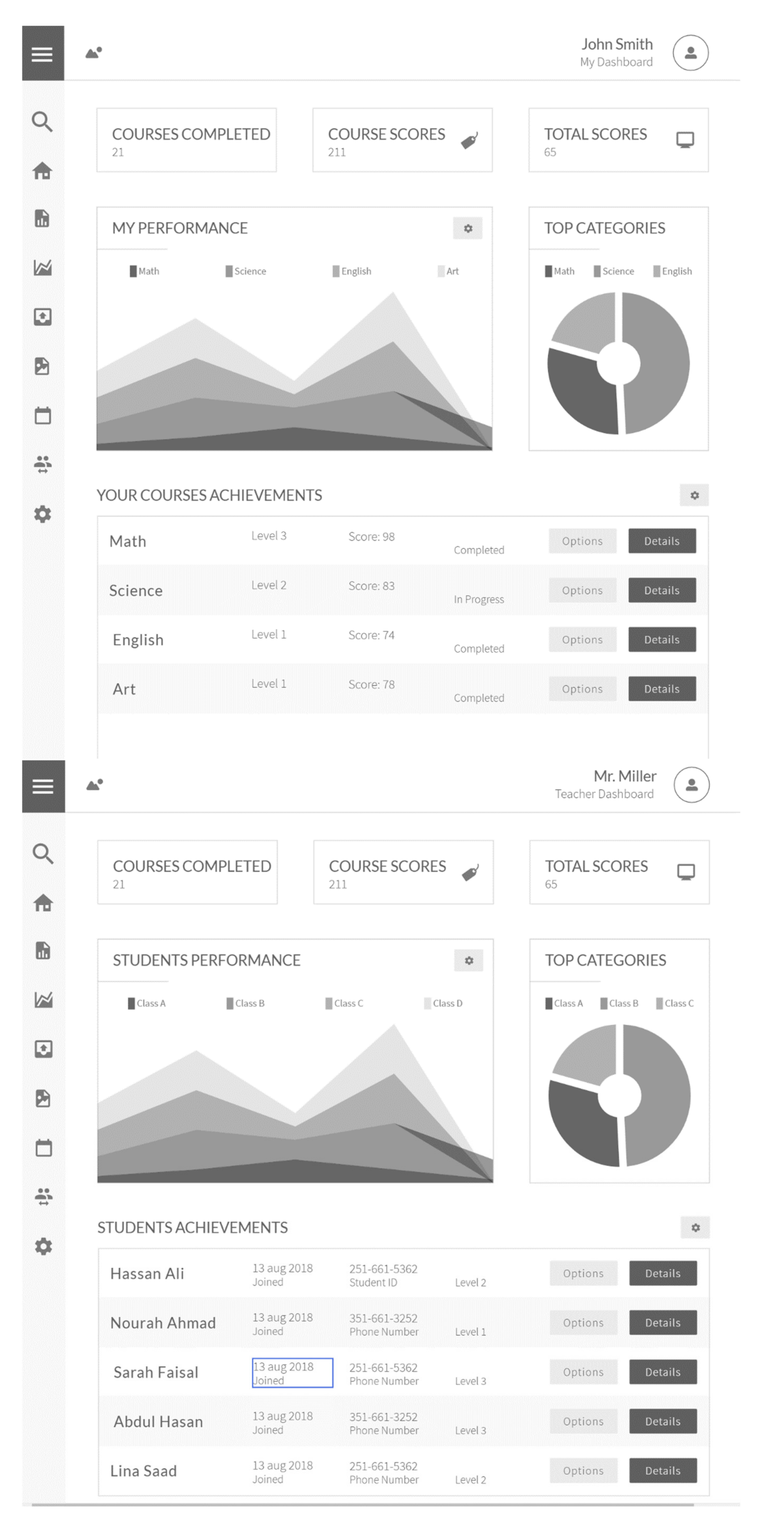

5.3. Diagnostic Phase (1st Page Interface)

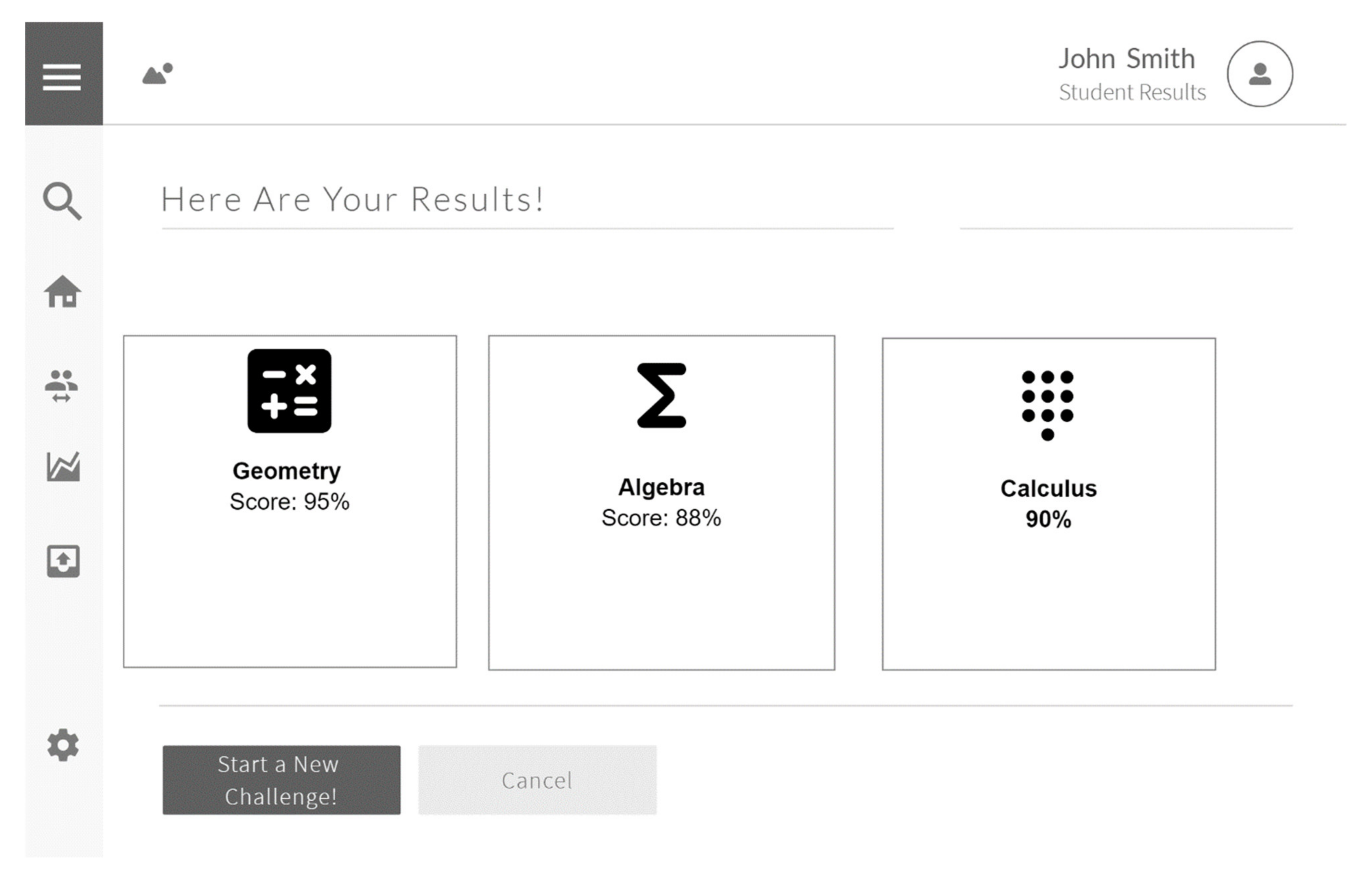

5.4. Descriptive Phase (2nd Page Interface)

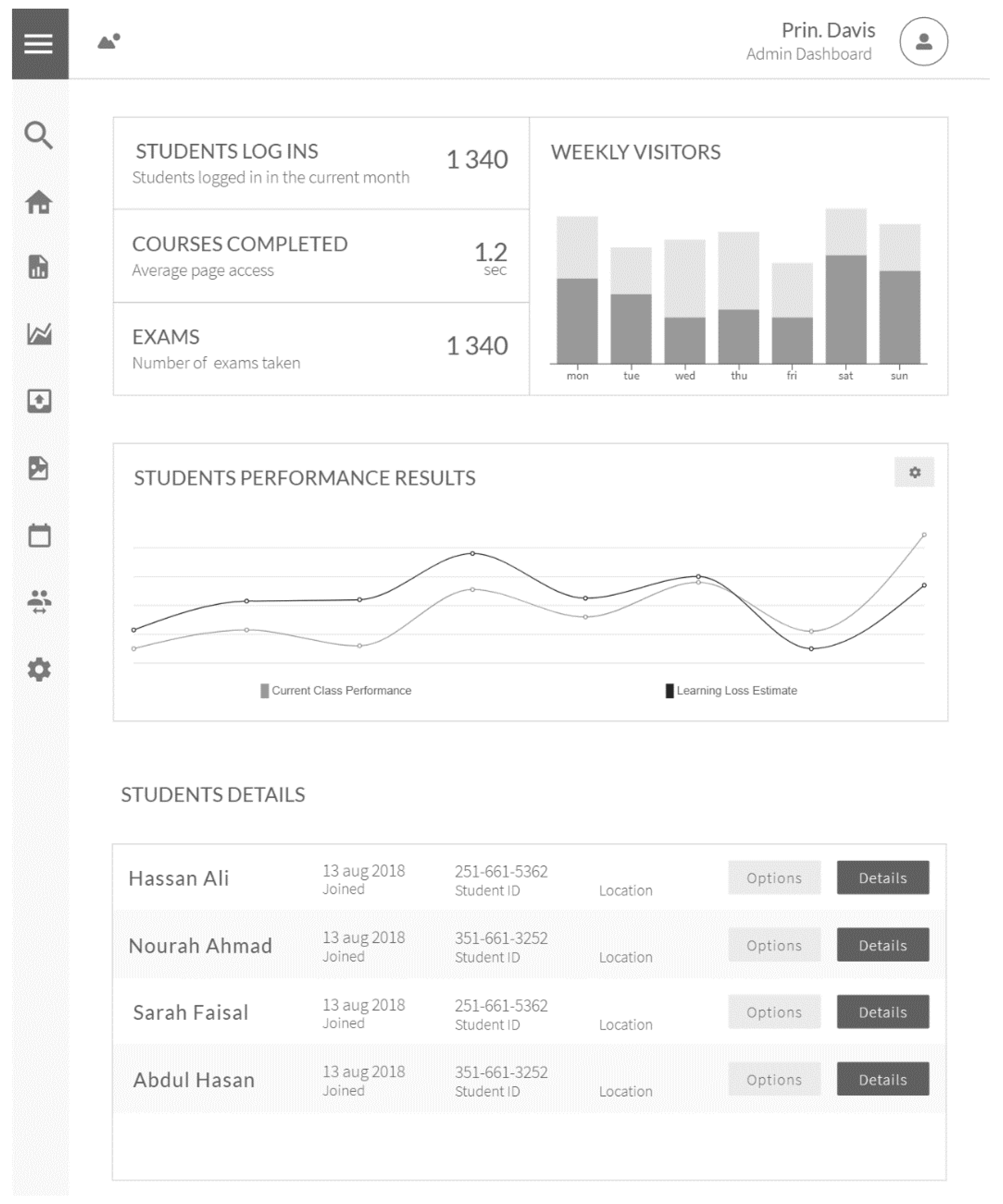

5.5. Predictive Phase (3rd Page Interface)

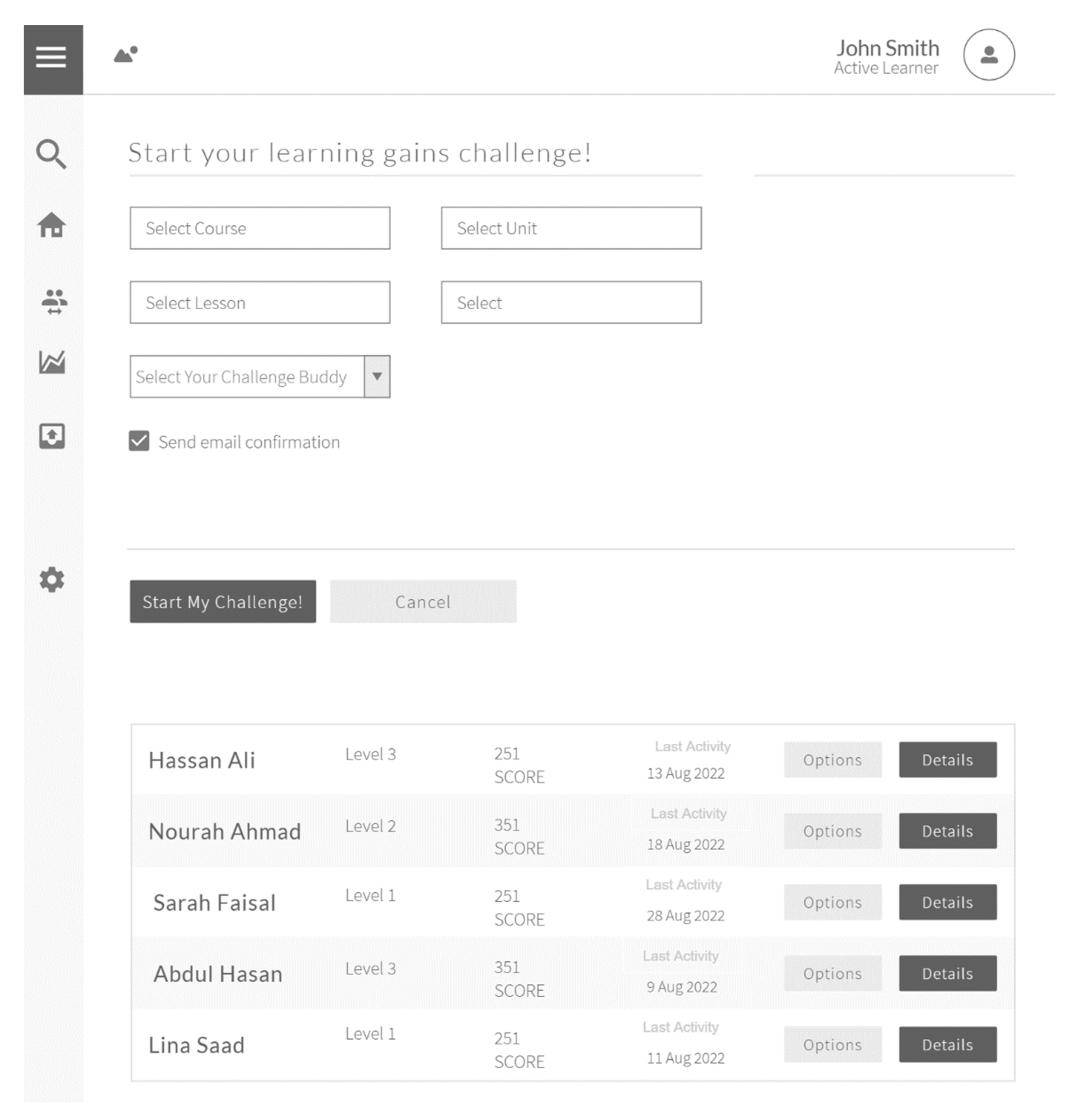

5.6. Prescriptive Phase (4th Page Interface)
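The headings in this section number the four analytics phases as consecutive page interfaces: diagnostic (1st page), descriptive (2nd), predictive (3rd) and prescriptive (4th). As a minimal sketch of that ordering only, the following Python models the pages as an enum and steps through them in sequence. The names `Phase`, `next_phase` and `page_sequence` are hypothetical and purely illustrative. They are not taken from the authors' prototype, and the sketch makes no assumption about what each page computes or displays.

```python
from enum import Enum
from typing import List, Optional


class Phase(Enum):
    """Hypothetical model of the dashboard phases.

    Each member's value is its page number (1st to 4th page interface),
    following the order of the section headings.
    """
    DIAGNOSTIC = 1
    DESCRIPTIVE = 2
    PREDICTIVE = 3
    PRESCRIPTIVE = 4


def next_phase(current: Phase) -> Optional[Phase]:
    """Return the phase on the following page, or None after the last page."""
    try:
        return Phase(current.value + 1)
    except ValueError:
        # No member has page number 5: the prescriptive page ends the flow.
        return None


def page_sequence() -> List[Phase]:
    """Walk the pages in order, starting from the diagnostic (1st) page."""
    pages: List[Phase] = []
    phase: Optional[Phase] = Phase.DIAGNOSTIC
    while phase is not None:
        pages.append(phase)
        phase = next_phase(phase)
    return pages


if __name__ == "__main__":
    for p in page_sequence():
        print(f"Page {p.value}: {p.name.title()} phase")
```

Storing the page number as the enum value keeps the navigation rule in one place: moving forward is `value + 1`, and the failed lookup past the 4th page marks the end of the sequence.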

6. Evaluation (Issues to Consider for a Learning Analytics Dashboard—LAD)

6.1. Teachers’ Role

6.2. Learners’ Performance Role

6.3. Digital Content Role

7. Discussion

7.1. Self-Regulated Learning

7.2. Microlearning

7.3. Scaffolding ZPD

7.4. Gamification

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bao, H.; Li, Y.; Su, Y.; Xing, S.; Chen, N.; Rosé, C. The effects of a learning analytics dashboard on teachers’ diagnosis and intervention in computer-supported collaborative learning. Technol. Pedagog. Educ. 2021, 30, 287–303.

- Bodily, R.; Verbert, K. Review of research on student-facing learning analytics dashboards and educational recommender systems. IEEE Trans. Learn. Technol. 2017, 10, 1–15.

- Vieira, C.; Parsons, P.; Byrd, V. Visual learning analytics of educational data: A systematic literature review and research agenda. Comput. Educ. 2018, 122, 119–135.

- Gutiérrez, F.; Seipp, K.; Ochoa, X.; Chiluiza, K.; De Laet, T.; Verbert, K. LADA: A learning analytics dashboard for academic advising. Comput. Hum. Behav. 2020, 107, 105826.

- Zheng, J.; Huang, L.; Li, S.; Lajoie, S.P.; Chen, Y.; Hmelo-Silver, C.E. Self-regulation and emotion matter: A case study of instructor interactions with a learning analytics dashboard. Comput. Educ. 2021, 161, 104061.

- Choi, S.P.; Lam, S.S.; Li, K.C.; Wong, B.T.M. Learning analytics at low cost: At-risk student prediction with clicker data and systematic proactive interventions. J. Educ. Technol. Soc. 2018, 21, 273–290.

- Aguilar, S.J. Learning analytics: At the nexus of big data, digital innovation, and social justice in education. TechTrends 2018, 62, 37–45.

- Romero, C.; Ventura, S. Educational data mining and learning analytics: An updated survey. WIREs Data Min. Knowl. Discov. 2020, 10, e1355.

- Rienties, B.; Toetenel, L. The Impact of 151 Learning Designs on Student Satisfaction and Performance: Social Learning (Analytics) Matters. In Proceedings of the Sixth International Conference on Learning Analytics and Knowledge, Online, 25 April 2016; pp. 339–343.

- Baker, R. Using Learning Analytics in Personalized Learning. In Handbook on Personalized Learning for States, Districts, and Schools; Murphy, M., Redding, S., Twyman, J.S., Eds.; Center on Innovations in Learning, Temple University: Philadelphia, PA, USA, 2016; pp. 165–174. Available online: https://www.adi.org/downloads/Personalized_learning_entirehandbook.pdf (accessed on 10 September 2021).

- Charleer, S.; Klerkx, J.; Duval, E.; De Laet, T.; Verbert, K. Creating Effective Learning Analytics Dashboards: Lessons Learnt. In Adaptive and Adaptable Learning: European Conference on Technology Enhanced Learning; Verbert, K., Sharples, M., Klobučar, T., Eds.; Springer: New York, NY, USA, 2016; pp. 42–56.

- Hwang, G.J.; Chu, H.C.; Yin, C. Objectives, methodologies and research issues of learning analytics. Interact. Learn. Environ. 2017, 25, 143–146.

- Liu, M.; Kang, J.; Zou, W.; Lee, H.; Pan, Z.; Corliss, S. Using data to understand how to better design adaptive learning. Technol. Knowl. Learn. 2017, 22, 271–298.

- Siemens, G.; Gašević, D. Guest editorial–learning and knowledge analytics. Educ. Technol. Soc. 2012, 15, 1–2.

- Marzouk, Z.; Rakovic, M.; Liaqat, A.; Vytasek, J.; Samadi, D.; Stewart-Alonso, J.; Ram, I.; Woloshen, S.; Winne, P.H.; Nesbit, J.C. What if learning analytics were based on learning science? Australas. J. Educ. Technol. 2016, 32, 6.

- Suthers, D.; Verbert, K. Learning Analytics as a “Middle Space”. In Proceedings of the Third International Conference on Learning Analytics and Knowledge, Leuven, Belgium, 8–12 April 2013; pp. 1–4.

- Gasevic, D.; Tsai, Y.S.; Dawson, S.; Pardo, A. How do we start? An approach to learning analytics adoption in higher education. Int. J. Inf. Learn. Technol. 2019, 36, 342–353.

- Wise, A.F. Designing Pedagogical Interventions to Support Student use of Learning Analytics. In Proceedings of the Fourth International Conference on Learning Analytics and Knowledge, Online, 24 March 2014; pp. 203–211.

- Jivet, I. The Dashboard That Loved Me: Designing Adaptive Learning Analytics for Self-Regulated Learning. Ph.D. Thesis, Open Universiteit, Heerlen, The Netherlands, 2021.

- Schwendimann, B.A.; Rodriguez-Triana, M.J.; Vozniuk, A.; Prieto, L.P.; Boroujeni, M.S.; Holzer, A.; Gillet, D.; Dillenbourg, P. Perceiving learning at a glance: A systematic literature review of learning dashboard research. IEEE Trans. Learn. Technol. 2016, 10, 30–41.

- Winne, P.H. A metacognitive view of individual differences in self-regulated learning. Learn. Individ. Differ. 1996, 8, 327–353.

- Gašević, D.; Dawson, S.; Rogers, T.; Gasevic, D. Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. Internet High. Educ. 2016, 28, 68–84.

- Brooks, S.K.; Smith, L.E.; Webster, R.K.; Weston, D.; Woodland, L.; Hall, I.; Rubin, G.J. The impact of unplanned school closure on children’s social contact: Rapid evidence review. Eurosurveillance 2020, 25, 2000188.

- Viner, R.M.; Russell, S.J.; Croker, H.; Packer, J.; Ward, J.; Stansfield, C.; Mytton, O.; Bonell, C.; Booy, R. School closure and management practices during coronavirus outbreaks including COVID-19: A rapid systematic review. Lancet Child Adolesc. Health 2020, 4, 397–404.

- Von Hippel, P.T.; Hamrock, C. Do test score gaps grow before, during, or between the school years? Measurement artifacts and what we can know in spite of them. Sociol. Sci. 2019, 6, 43–80.

- Kuhfeld, M.; Soland, J.; Tarasawa, B.; Johnson, A.; Ruzek, E.; Liu, J. Projecting the potential impacts of COVID-19 school closures on academic achievement. Educ. Res. 2020, 49, 549–565.

- Adams-Prassl, A.; Boneva, T.; Golin, M.; Rauh, C. Inequality in the impact of the coronavirus shock: Evidence from real time surveys. J. Public Econ. 2020, 189, 104245.

- Andrew, A.; Cattan, S.; Costa Dias, M.; Farquharson, C.; Kraftman, L.; Krutikova, S.; Phimister, A.; Sevilla, A. Inequalities in children’s experiences of home learning during the COVID-19 lockdown in England. Fisc. Stud. 2020, 41, 653–683.

- Grätz, M.; Lipps, O. Large loss in studying time during the closure of schools in Switzerland in 2020. Res. Soc. Stratif. Mobil. 2021, 71, 100554.

- Azevedo, J.P.; Hasan, A.; Goldemberg, D.; Iqbal, S.A.; Geven, K. Simulating the Potential Impacts of COVID-19 School Closures on Schooling and Learning Outcomes: A Set of Global Estimates. Available online: https://pubdocs.worldbank.org/en/798061592482682799/covid-and-education-June17-r6.pdf (accessed on 5 September 2021).

- Von Hippel, P.T. How will the coronavirus crisis affect children’s learning? Unequally. Educ. Next 2020, 22, 2. Available online: https://www.educationnext.org/how-will-coronavirus-crisis-affect-childrens-learning-unequally-covid-19/ (accessed on 10 December 2021).

- Fernández-Nieto, G.M.; Kitto, K.; Buckingham Shum, S.; Martinez-Maldonado, R. Beyond the Learning Analytics Dashboard: Alternative Ways to Communicate Student Data Insights Combining Visualisation, Narrative and Storytelling. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, Online, 21–25 March 2022; pp. 219–229.

- Susnjak, T.; Ramaswami, G.S.; Mathrani, A. Learning analytics dashboard: A tool for providing actionable insights to learners. Int. J. Educ. Technol. High Educ. 2022, 19, 12.

- Fleur, D.S.; van den Bos, W.; Bredeweg, B. Learning Analytics Dashboard for Motivation and Performance. In Lecture Notes in Computer Science; Springer: New York, NY, USA, 2020; pp. 411–419.

- Baneres, D.; Rodriguez, M.E.; Serra, M. An early feedback prediction system for learners at-risk within a first-year higher education course. IEEE Trans. Learn. Technol. 2019, 12, 249–263.

- Kokoç, M.; Altun, A. Effects of learner interaction with learning dashboards on academic performance in an e-learning environment. Behav. Inf. Technol. 2021, 40, 161–175.

- Karaoglan Yilmaz, F.G.; Yilmaz, R. Learning analytics as a metacognitive tool to influence learner transactional distance and motivation in online learning environments. Innov. Educ. Teach. Int. 2020, 58, 575–585.

- Han, J.; Kim, K.H.; Rhee, W.; Cho, Y.H. Learning analytics dashboards for adaptive support in face-to-face collaborative argumentation. Comput. Educ. 2021, 163, 104041.

- Majumdar, R.; Akçapınar, A.; Akçapınar, G.; Flanagan, B.; Ogata, H. LAViEW: Learning Analytics Dashboard Towards Evidence-based Education. In Proceedings of the 9th International Conference on Learning Analytics and Knowledge, Tempe, AZ, USA, 4–8 May 2019; Society for Learning Analytics Research (SoLAR): Irvine, CA, USA, 2019; pp. 1–6.

- Chatti, M.A.; Muslim, A.; Guliani, M.; Guesmi, M. The LAVA Model: Learning Analytics Meets Visual Analytics. In Advances in Analytics for Learning and Teaching; Springer: New York, NY, USA, 2020.

- Gras, B.; Brun, A.; Boyer, A. For and by Student Dashboards Design to Address Dropout. 2020. Available online: https://hal.inria.fr/hal-02974682 (accessed on 12 October 2021).

- He, H.; Dong, B.; Zheng, Q.; Li, G. VUC. In Proceedings of the ACM Conference on Global Computing Education, Online, 9 May 2019; pp. 99–105.

- Kia, F.S.; Teasley, S.D.; Hatala, M.; Karabenick, S.A.; Kay, M. How patterns of students dashboard use are related to their achievement and self-regulatory engagement. In Proceedings of the Tenth International Conference on Learning Analytics and Knowledge, Online, 23 March 2020; pp. 340–349.

- Naranjo, D.M.; Prieto, J.R.; Moltó, G.; Calatrava, A. A visual dashboard to track learning analytics for educational cloud computing. Sensors 2019, 19, 2952.

- Owatari, T.; Shimada, A.; Minematsu, T.; Hori, M.; Taniguchi, R. Real-time learning analytics dashboard for students in online classes. In Proceedings of the International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Takamatsu, Japan, 8–11 December 2020.

- Ulfa, S.; Fattawi, I.; Surahman, E.; Yusuke, H. Investigating learners’ perception of learning analytics dashboard to improve learning interaction in online learning system. In Proceedings of the 5th International Conference on Education and Technology (ICET), Online, 4–5 October 2019.

- Aljohani, N.R.; Daud, A.; Abbasi, R.A.; Alowibdi, J.S.; Basheri, M.; Aslam, M.A. An integrated framework for course adapted student learning analytics dashboard. Comput. Hum. Behav. 2019, 92, 679–690.

- Mueller-Roterberg, C. Handbook of Design Thinking: Tips and Tools for How to Design Thinking; Independently Published: Chicago, IL, USA, 2018.

- Wiggins, G.; McTighe, J. Backward Design. In Understanding by Design; ASCD: Alexandria, VA, USA, 1998; pp. 13–34.

- Jaakkola, E. Designing conceptual articles: Four approaches. AMS Rev. 2020, 10, 18–26.

- Gilson, L.L.; Goldberg, C.B. Editors’ comment: So, what is a conceptual paper? Group Organ. Manag. 2015, 40, 127–130.

- MacInnis, D.J.; De Mello, G.E. The concept of hope and its relevance to product evaluation and choice. J. Mark. 2005, 69, 1–14.

- Park, Y.; Jo, I.H. Development of the learning analytics dashboard to support students’ learning performance. J. Univers. Comput. Sci. 2015, 21, 110–133.

- Ali, L.; Asadi, M.; Gašević, D.; Jovanović, J.; Hatala, M. Factors influencing beliefs for adoption of a learning analytics tool: An empirical study. Comput. Educ. 2013, 62, 130–148.

- Venant, R.; Vidal, P.; Broisin, J. Evaluation of learner performance during practical activities: An experimentation in computer education. In Proceedings of the 16th IEEE International Conference on Advanced Learning Technologies, Austin, TX, USA, 25–28 July 2016; pp. 237–241.

- Pintrich, P.R. Multiple goals, multiple pathways: The role of goal orientation in learning and achievement. J. Educ. Psychol. 2000, 92, 544–555.

- Butler, D.L.; Winne, P.H. Feedback and self-regulated learning: A theoretical synthesis. Rev. Educ. Res. 1995, 65, 245–281.

- Winne, P.H. Learning Analytics for Self-Regulated Learning. In Handbook of Learning Analytics; Lang, C., Siemens, G., Wise, A., Gašević, D., Eds.; Society for Learning Analytics Research: Online, 2017; pp. 241–249.

- Ochoa, X.; Wise, A.F. Supporting the shift to digital with student-centered learning analytics. Educ. Technol. Res. Dev. 2021, 69, 357–361.

- Sedrakyan, G.; Malmberg, J.; Verbert, K.; Järvelä, S.; Kirschner, P.A. Linking learning behavior analytics and learning science concepts: Designing a learning analytics dashboard for feedback to support learning regulation. Comput. Hum. Behav. 2020, 107, 105512.

- Tempelaar, D.; Rienties, B.; Nguyen, Q. The contribution of dispositional learning analytics to precision education. Educ. Technol. Soc. 2021, 24, 109–122.

- Schunk, D.H. Attributions as motivators of self-regulated learning. In Motivation and Self-Regulated Learning: Theory, Research, and Applications; Schunk, D.H., Zimmerman, B.J., Eds.; Routledge: London, UK, 2008; pp. 245–266.

- Fansury, A.H.; Januarty, R.; Ali Wira Rahman, S. Digital content for millennial generations: Teaching the English foreign language learner on COVID-19 pandemic. J. Southwest Jiaotong Univ. 2020, 55, 3.

- Zimmerman, B.J.; Martinez-Pons, M. Construct validation of a strategy model of student self-regulated learning. J. Educ. Psychol. 1988, 80, 284–290.

- Moore, M.G. Editorial: Distance education theory. Am. J. Distance Educ. 1991, 5, 1–6.

- Moore, M.G. Theory of transactional distance. In Theoretical Principles of Distance Education; Keegan, D., Ed.; Routledge: London, UK, 1993; pp. 22–38.

- Zimmerman, B.J. Self-regulated learning and academic achievement: An overview. Educ. Psychol. 1990, 25, 3–17.

- Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The Difference between Emergency Remote Teaching and Online Learning. Available online: https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teaching-and-online-learning (accessed on 11 December 2021).

- Hug, T. Didactics of Microlearning: Concepts, Discourses and Examples; Waxmann Verlag: Münster, Germany, 2007.

- Sun, G.; Cui, T.; Yong, J.; Shen, J.; Chen, S. Drawing micro learning into MOOC: Using fragmented pieces of time to enable effective entire course learning experiences. In Proceedings of the IEEE 19th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Calabria, Italy, 6–8 May 2015; pp. 308–313.

- Buchem, I.; Hamelmann, H. Microlearning: A strategy for ongoing professional development. eLearning Pap. 2010, 21, 1–15.

- Beaudin, J.S.; Intille, S.S.; Morris, M. Microlearning on a Mobile Device. In UbiComp; Dourish, P., Friday, A., Eds.; Springer: New York, NY, USA, 2006; Volume 4206, pp. 1–2.

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978.

- Antonaci, A.; Klemke, R.; Specht, M. The effects of gamification in online learning environments: A systematic literature review. Informatics 2019, 6, 32.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Aldosemani, T.I.; Al Khateeb, A. Learning Loss Recovery Dashboard: A Proposed Design to Mitigate Learning Loss Post Schools Closure. Sustainability 2022, 14, 5944. https://doi.org/10.3390/su14105944

AMA Style: Aldosemani TI, Al Khateeb A. Learning Loss Recovery Dashboard: A Proposed Design to Mitigate Learning Loss Post Schools Closure. Sustainability. 2022; 14(10):5944. https://doi.org/10.3390/su14105944

Chicago/Turabian Style: Aldosemani, Tahani I., and Ahmed Al Khateeb. 2022. "Learning Loss Recovery Dashboard: A Proposed Design to Mitigate Learning Loss Post Schools Closure" Sustainability 14, no. 10: 5944. https://doi.org/10.3390/su14105944

APA Style: Aldosemani, T. I., & Al Khateeb, A. (2022). Learning Loss Recovery Dashboard: A Proposed Design to Mitigate Learning Loss Post Schools Closure. Sustainability, 14(10), 5944. https://doi.org/10.3390/su14105944