Abstract

Educational institutions are transferring analytics computing to the cloud to reduce costs. Any data transfer and storage outside institutions involves serious privacy concerns, such as student identity exposure, the rise of untrusted and unnecessary third-party actors, data misuse, and data leakage. Institutions that adopt a “local first” approach instead of a “cloud computing first” approach can minimize these problems. This work aims to foster the use of local analytics computing by offering adequate tools that did not previously exist. The results are useful for any educational role, including researchers, to conduct data analysis locally. The novel results are twofold: an open-source JavaScript library to locally analyze any educational log schema from any LMS, and a front-end to analyze Moodle logs, built as a proof of concept of the library, with different educational metrics and indicator visualizations. A Nielsen heuristic user experience evaluation is carried out to reduce potential data literacy barriers for users. Visualizations are validated by surveying teachers with Likert and open-ended questions; teachers consider them of interest but suggest that adding more data sources could improve the indicators. The work reinforces that local educational data analysis is feasible and opens up new ways of analyzing data without transferring it to third parties, while generating debate around adopting a “local technologies first” approach.

1. Introduction

In this work, educational data is considered to be any raw, aggregated, or transformed academic or behavioral data point generated by students’ interactions in a teaching–learning process. Students’ interactions within an LMS, such as browsing course contents, communicating with teachers or other students via chat or forum, submitting assessment assignments, or even the grades themselves, generate data that can be used in analytical approaches. However, educational data is not generated only through LMS use. Any use by students of educational technology (Edtech), such as academic management platforms, learning tools, or even social media applications, in teaching–learning processes generates data and is therefore considered educational data.

1.1. Context in Big Data and Small Data

Considering the above, the educational data generated in an educational institution’s classroom can be considered Small Data, which is even preferable to Big Data for analysis [1]. Context is the most important element in educational analysis, and it is also the defining feature of Small Data. The Big Data movement requires constant and varied data sources to achieve high accuracy levels. However, decontextualized datasets generate biased results [2,3]. For this reason, using Big Data algorithms that bring together realities from different educational institutions blurs the educational context of a particular academic organization. It is localized data that provide context to the algorithms; therefore, using Small Data makes analytical results more contextualized to the educational reality, more concrete, and more useful [4].

Even though the data is small, the available analytical techniques are designed to analyze large amounts of data [5,6]. Machine learning techniques are the most commonly used, and they typically run on cloud computing infrastructure. Therefore, educational data is transferred outside academic institutions and usually sent to private remote servers owned by contracted third parties [7,8].

1.2. Current Situation: Sensitive and Fragile

The situation described above presents a complex problem of sensitivity and fragility regarding students’ confidentiality, privacy, and identity [9,10,11]:

- Educational data is fragmented. The more third-party educational tools are used, the more dispersed students’ data becomes and the less control there is over privacy.

- Data protection is evidently low. Third parties access data freely and even share it in ways that identify students.

- There is no control over the data. Anyone who has access to the data can modify it at will and share it with third parties. Consequently, no one can ensure that the data remains in its original form or free from unauthorized access.

Data privacy and security are connected to ethical aspects [12,13]. Educational technology evolves at a rapid pace and increasingly integrates data analysis processes. We are familiar with some of the analytical practices, issues, and ethical implications arising from the use of educational data [14]. However, we cannot foresee what future dangers may emerge, nor can we rely on legislation, which is always one step behind emerging technology. That is why we must take the utmost care when processing educational data, precisely to protect it from both known dangers and those yet to come.

1.3. Local Instead of Outside

Despite these problems, educational data analysis offers positive opportunities. Educational data analytics helps improve processes along three fundamental axes: academic management, teaching, and learning [15,16]. Data-driven academic management requires reliable analytical results on course performance, drawn from teacher and student satisfaction. Personalization, individualization, and learning adaptation are teaching tasks enhanced by data-driven decision-making. Self-regulation of learning is an educational goal that students can achieve by making data-driven decisions based on their interactions with learning environments. Moreover, data collection is a real-time process tied to the digital tools students use. These tools can run in the cloud or within the institution itself. However, the data need not be stored, nor its analysis executed, in the cloud when this can be done locally [17].

Current research on data fragility analyzes solutions based on cloud computing or blockchain, that is, centralized or distributed databases, but always outside the control of educational institutions [18,19]. Outsourced cloud computing solutions may offer benefits that bring antifragility to educational institutions, as stated by Gong et al. [20] when describing cloud computing as “service-oriented, loose coupling, strong fault-tolerant, business model and eas[y to] use”. Antifragility allows rapid adaptation to change, even to pandemics such as COVID-19, when education in general adopted an emergency virtual learning methodology ipso facto [21,22,23]. In terms of sustainability, having data and platforms available in the cloud seems to be the most suitable scenario, given its economic advantages, cost reduction, low resource usage, and fault tolerance [20]. By contrast, working inside the educational institution from the outside through virtual private networks seems to be an underestimated and underrated solution. Gaps in the current data privacy debate and in the scientific community’s research on local technologies as a solution motivate the authors of this manuscript to work in this direction.

1.4. Ethical Principles to Foster Local-First Technology

This project falls within the LEDA principles [1]: (i) legality, (ii) transparency, information, and expiration, (iii) data control, (iv) anonymous transactions, (v) interoperability, (vi) responsibility in the code, and (vii) local first. The work is mainly based on the concept of “local-first”, which refers to the distance between devices: privacy concerns decrease as the device that processes educational data gets closer to the educational role. Therefore, the main motivation of this work is to offer alternatives to cloud-based Learning Analytics Dashboards. These alternatives are oriented to local execution to reduce problems related to data privacy and security.

Client-side analytics operations open up a wide range of possibilities, such as new relationships between IT support and teachers, data exchange via portable and encrypted memory, new procedural considerations regarding data, or compliance with legislation such as the GDPR [24]. Adopting local-first technology goes beyond using local technology, as it involves rethinking processes, management, and internal actions in educational institutions. At the same time, both applying educational data mining techniques and interpreting visualizations require levels of data literacy [25] that correspond to the analytical and visual complexity of the process. To help users acquire the knowledge and skills needed to perform analyses and understand the results, ad hoc tools should offer functionalities matched to the data literacy skills teachers have acquired and pass some minimum usability tests [26]. This situation requires tools that can meet ad hoc analytical needs according to the educational context and the data literacy level of academics, while protecting student data by running locally and meeting the following requirements (EDPP):

- Avoid transferring data to remote servers outside the control of institutions;

- Distribute analysis to local educational role devices to reduce centralized computing complexity;

- Enable analysis of data from any log of educational tools;

- Enable ad hoc visualizations to be generated from logs.

1.5. Research Gaps and Our Contribution

Considering the EDPP requirements, we performed a literature search for tools that run and analyze data locally without an Internet connection, are developed with web technologies, and are open source. These tools should also run on any device, analyze any data schema, and provide educational metrics, indicators, and visualizations. We found no tool that meets all the requirements, nor any research that proposes local-first technology as a solution to data fragility issues. Some tools are offered as proprietary services; others are open source but must run in the cloud or in the LMS as a plugin [27], relying on a whole structure of services that, in one way or another, does not meet the requirements.

In this article, our main research question is whether it is possible to develop a solution that meets the above requirements. As our main contribution, we develop and share, as open source, an analytics and visualization tool that meets all the stated requirements using HTML, CSS, and JavaScript web technologies. Throughout the article, we also present the process of development, validation, and adaptation for teaching purposes and open publication.

The paper is structured in five sections. Section 1 is the introduction. Section 2 describes the methodology followed and the fundamentals used to develop the analytical tool. Section 3 presents the results of the development, the survey, and the updates made to the tool based on the survey results. Finally, Sections 4 and 5 present the discussion and conclusions. Together, these sections show how our work makes available (i) to educators, an easy-to-use Moodle log analysis tool; (ii) to developers, an open-source tool for analyzing any log format that they can integrate into their developments or extend; and (iii) to researchers, a versatile tool to support the visualization of their research results.

2. Materials and Methods

The tool was developed through several phases, following a mixed approach, before its final open distribution:

- Design and implementation of the JavaScript library.

- Design and implementation of a front-end dashboard as a pilot test of the library.

- Usability improvement via an evaluation of Nielsen heuristics.

- Teacher survey to validate the interest and usefulness of the tool, the metrics and indicators used, and the visualizations presented.

Executing these four phases made it possible to release a tool of teaching interest [28] that still leaves room for improvement and evolution. In the conclusions, we present different lines of research derived from the first iteration presented in this work.

2.1. JavaScript Learning Analytics Library

The first aim of the project is to develop a configurable library to analyze any log format. To be EDPP-compliant, the tool should be able to:

- Run in local environments, such as personal devices, e.g., computers or smartphones;

- Run with technologies available in personal devices;

- Visualize data and insights from any learning tool’s log of students’ data and interactions.

To meet these requirements, we considered web technologies adequate for the solution, because they are available by default on every computer and smartphone with a web browser installed. The resulting library, called JavaScript Learning Analytics (JSLA), is a set of interdependent JavaScript classes. The library:

- Loads a log into an array.

- Transforms the log array items to the desired format.

- Analyzes and computes data to represent it.
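The three steps above can be sketched as a small pipeline. This is a minimal illustration, not the JSLA API: the function names `loadLog`, `transformLog`, and `analyzeLog`, the one-level flattening of nested arrays, and the shape of the schema and widget objects are all hypothetical.

```javascript
// Hypothetical sketch of a load -> transform -> analyze pipeline (not the real JSLA API).

// Step 1: load a log (JSON text) into an array of records.
function loadLog(jsonText) {
  const parsed = JSON.parse(jsonText);
  // Assumption: some exports wrap records in an outer array; flatten one level if so.
  return Array.isArray(parsed[0]) ? parsed.flat() : parsed;
}

// Step 2: transform every record with a schema function.
function transformLog(records, schema) {
  return records.map((record) => schema(record));
}

// Step 3: run every widget's computation over the transformed records.
function analyzeLog(records, widgets) {
  const results = {};
  for (const widget of widgets) {
    results[widget.id] = widget.compute(records);
  }
  return results;
}

// Example usage with a trivial schema and a single widget.
const rawJson = '[{"user":" Ana ","component":"File"},{"user":"Luis","component":"Forum"}]';
const schema = (r) => ({ user: r.user.trim(), component: r.component });
const widgets = [{ id: "totalInteractions", compute: (rs) => rs.length }];

const results = analyzeLog(transformLog(loadLog(rawJson), schema), widgets);
console.log(results); // { totalInteractions: 2 }
```

Keeping the schema and the widgets as plain functions passed into the pipeline is what lets the same loading code serve any log format.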

To accomplish the above, JSLA defines two main components:

- Schema: This is a JavaScript function that the JSLA library uses to transform each field of every record in the log. Developers can implement this function according to the original log format and the transformations required to visualize the analysis results. Hence, the schema defines the transformations to be applied to raw data.

- Widget: This is a JSON object that the JSLA library uses to create visualizations. Each widget can specify CSS styles, JavaScript chart libraries, and JavaScript code that the web browser executes to analyze data and create visualizations. Hence, a widget can represent any result computed by JSLA.

With these two components, any developer can create a front-end that easily transforms data from logs and either uses the visualizations predefined in the JSLA library or creates new ones.
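As an illustration of the two components, the sketch below shows a schema function for a Moodle-like record and a widget described by metadata plus a compute function. It assumes, for illustration only, that each raw record has the columns `Time` (formatted `dd/mm/yy, HH:MM`), `User full name`, `Component`, `Event name`, and `Event context`; real export field names and date formats depend on the Moodle version and language. The widget is a plain JavaScript object standing in for the widget JSON, and all names in it are hypothetical.

```javascript
// Hypothetical schema for a Moodle-like log record.
// Assumed field names and an assumed "dd/mm/yy, HH:MM" time format.
function moodleSchema(raw) {
  const match = /^(\d{1,2})\/(\d{1,2})\/(\d{2,4}),\s*(\d{1,2}):(\d{2})/.exec(raw["Time"]);
  if (!match) {
    throw new Error(`Unrecognized time format: ${raw["Time"]}`);
  }
  const [, day, month, year, hour, minute] = match.map(Number);
  // Two-digit years are interpreted as 20yy.
  const fullYear = year < 100 ? 2000 + year : year;
  return {
    // Local-time Date; the Date constructor uses zero-based months.
    time: new Date(fullYear, month - 1, day, hour, minute),
    user: raw["User full name"],
    component: raw["Component"],
    event: raw["Event name"],
    context: raw["Event context"],
  };
}

// Hypothetical widget: metadata plus the code that computes its value.
const filesWidget = {
  id: "fileInteractions",
  type: "kpi",
  title: "Interactions with files",
  compute: (records) => records.filter((r) => r.component === "File").length,
};

const raw = {
  "Time": "15/03/21, 10:05",
  "User full name": "Ana",
  "Component": "File",
  "Event name": "Course module viewed",
  "Event context": "File: Syllabus",
};
const record = moodleSchema(raw);
console.log(record.time.getFullYear(), record.time.getHours()); // 2021 10
console.log(filesWidget.compute([record])); // 1
```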

2.2. JavaScript Moodle Learning Analytics Front End

The second aim of the project can be separated into two main ideas:

- Develop a schema and different widgets based on the information collected and extracted from the Moodle LMS.

- Develop a front-end application to generate a dashboard with built-in drill-down capability and predefined visualizations.

As a result of this project, we developed a front-end application called JavaScript Moodle Learning Analytics (JSMLA). This open-source project is available on GitHub [28] and is developed using HTML, CSS, and Vanilla JavaScript on top of the JSLA library. It is a tool to easily track students’ performance, engagement, and interactions with the resources and activities available in any Moodle course in which they are enrolled, providing a meaningful analysis of the information stored in Moodle.

This project is based on the concept of “local first” [1]. Thus, data is analyzed not in the cloud but in a local environment: the data must be downloaded from the online LMS and stored as a JSON file. Supporting other file formats, e.g., CSV or Experience API (xAPI) [2], is a future line of work. The JSMLA front-end application loads the downloaded JSON using the JSLA library, which processes the data according to the provided schema. Finally, the widgets are processed and the visualizations are presented as a reporting dashboard.

The widgets offer teachers an approach to the different behavior patterns their students exhibit in a specific subject, allowing them to explore the data required to personalize methodologies and understand its meaning. This differentiates JSMLA from other tools such as Learnglish [29] or other analytical tools.

2.2.1. Widgets

As the first iteration of JSMLA, we developed a descriptive Learning Analytics Dashboard (LAD). The dashboard contains widgets, which are categorized so that the user can easily understand the information given. The widgets were developed ad hoc, based on the authors’ needs in their teaching practice. We consider this first iteration the starting point of the research, intended to validate its interest and usefulness for the educational community. The validation methodology is presented below.

We developed four types of widgets:

- Key Performance Indicators (KPI);

- Line chart;

- Pie chart;

- Table.

The selection of the widget type depends on the information we would like to display to let the user get the maximum benefit from the dashboard.

A default JSMLA KPI widget, as shown in Figure 1, is essentially a single numerical figure that reflects the value of the represented metric (or metrics). The metrics shown in this widget can be either absolute or relative [3]. Relative metrics are percentages computed from the given information to provide a more specific analysis, including ratios when different values of the same metric are compared. In JSMLA, we represented the totals of each metric as a numerical figure.
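The difference between absolute and relative KPI values can be illustrated with a short sketch. It assumes normalized records with a `component` field (a hypothetical format, not necessarily JSMLA's). The absolute value is a plain count, the relative value is that count as a percentage of all interactions, and a ratio compares two values of the same metric.

```javascript
// Absolute and relative KPI values over normalized log records (hypothetical format).
function countWhere(records, predicate) {
  return records.filter(predicate).length;
}

function kpi(records, predicate) {
  const absolute = countWhere(records, predicate);
  // Relative value: share of all interactions, as a percentage rounded to one decimal.
  const relative = records.length === 0 ? 0 : Math.round((absolute / records.length) * 1000) / 10;
  return { absolute, relative };
}

const records = [
  { component: "File" }, { component: "File" }, { component: "Forum" },
  { component: "Assignment" }, { component: "File" }, { component: "Forum" },
];

console.log(kpi(records, (r) => r.component === "File")); // { absolute: 3, relative: 50 }

// A ratio compares two values of the same metric, e.g. file vs. forum interactions.
const files = countWhere(records, (r) => r.component === "File");
const forum = countWhere(records, (r) => r.component === "Forum");
console.log(files / forum); // 1.5
```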

Figure 1.

KPI offered by JSMLA on its dashboard.

A default JSMLA line chart widget, shown in Figure 2, is a graphical representation where several data points are connected to show the evolution of information over time. Timelines are used to organize the information in a chronological sequence. These plots give a better understanding of the growth, change, recurring events, cause and effect, and main events of historical, social, and scientific significance [30]. The overview of the information over a certain period allows teachers to generate valuable insights. For instance, the evolution of student interaction with the course materials can be a key indicator.
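A timeline such as the one in Figure 2 needs interactions bucketed by day. The sketch below assumes records with a `time` field holding a JavaScript `Date` (a hypothetical normalized format) and works in local time. Days without activity are filled with zero so that periods of inactivity remain visible on the line instead of being skipped.

```javascript
// Local-time day key in YYYY-MM-DD form.
function dayKey(date) {
  const y = date.getFullYear();
  const m = String(date.getMonth() + 1).padStart(2, "0");
  const d = String(date.getDate()).padStart(2, "0");
  return `${y}-${m}-${d}`;
}

// Count interactions per calendar day, filling days without activity with 0.
function dailySeries(records) {
  if (records.length === 0) return [];
  const counts = new Map();
  let first = records[0].time;
  let last = records[0].time;
  for (const { time } of records) {
    const key = dayKey(time);
    counts.set(key, (counts.get(key) || 0) + 1);
    if (time < first) first = time;
    if (time > last) last = time;
  }
  const series = [];
  // Walk day by day from the first to the last active day.
  const cursor = new Date(first.getFullYear(), first.getMonth(), first.getDate());
  const end = new Date(last.getFullYear(), last.getMonth(), last.getDate());
  while (cursor <= end) {
    const key = dayKey(cursor);
    series.push({ day: key, count: counts.get(key) || 0 });
    cursor.setDate(cursor.getDate() + 1);
  }
  return series;
}

const log = [
  { time: new Date(2021, 2, 1, 9, 0) },
  { time: new Date(2021, 2, 1, 18, 30) },
  { time: new Date(2021, 2, 3, 11, 15) },
];
console.log(dailySeries(log).map((p) => `${p.day}:${p.count}`).join(" "));
// 2021-03-01:2 2021-03-02:0 2021-03-03:1
```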

Figure 2.

A line-based plot offered by JSMLA.

Thresholds in line graphs are often indicated with horizontal lines. This feature can be implemented in the JSMLA line chart widget, which uses the CanvasJS library to generate charts.
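As an illustration of how such a threshold could be drawn, the sketch below builds an options object for CanvasJS, whose axes accept `stripLines`. A strip line with a single `value` is rendered as a horizontal line across the Y axis. The data points and the threshold of 5 interactions per day are made up, the helper name is hypothetical, and this is not necessarily how JSMLA configures its charts.

```javascript
// Build CanvasJS line chart options with a horizontal threshold line.
// In a browser with CanvasJS loaded, the options would be rendered with:
//   new CanvasJS.Chart("chartContainer", options).render();
function lineChartWithThreshold(title, points, threshold) {
  return {
    title: { text: title },
    axisY: {
      title: "Interactions",
      // A strip line with a single value is drawn as a horizontal line.
      stripLines: [{ value: threshold, label: `Threshold (${threshold})` }],
    },
    data: [
      {
        type: "line",
        dataPoints: points.map((p) => ({ x: p.date, y: p.count })),
      },
    ],
  };
}

const options = lineChartWithThreshold(
  "Daily interactions",
  [
    { date: new Date(2021, 2, 1), count: 7 },
    { date: new Date(2021, 2, 2), count: 3 },
  ],
  5
);
console.log(options.axisY.stripLines[0].value); // 5
```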

A default JSMLA pie chart widget, as shown in Figure 3, provides a visual representation of nominal or ordinal data, percentage or proportional data, and any categorized data with relative sizes [31]. Pie charts show how the information is divided among the different indicators and therefore provide a deeper view of the distribution of information and clearer metrics.
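The data behind a pie chart is a set of shares that add up to 100%. The sketch below assumes records with a `component` field (a hypothetical format). It computes the share of each category and merges categories below a chosen minimum share into an "Other" slice, so that tiny slices do not clutter the chart. The 10% cut-off is an arbitrary example, not a JSMLA setting.

```javascript
// Compute pie chart slices: percentage of records per category.
// Categories below minShare (in percent) are merged into an "Other" slice.
function pieSlices(records, field, minShare = 10) {
  const counts = new Map();
  for (const r of records) {
    counts.set(r[field], (counts.get(r[field]) || 0) + 1);
  }
  const total = records.length;
  const slices = [];
  let other = 0;
  for (const [label, count] of counts) {
    const share = (count * 100) / total;
    if (share < minShare) {
      other += count;
    } else {
      slices.push({ label, count, share });
    }
  }
  if (other > 0) {
    slices.push({ label: "Other", count: other, share: (other * 100) / total });
  }
  // Largest slices first.
  return slices.sort((a, b) => b.count - a.count);
}

const records = [
  ...Array(10).fill({ component: "File" }),
  ...Array(6).fill({ component: "Forum" }),
  ...Array(3).fill({ component: "Quiz" }),
  ...Array(1).fill({ component: "Page" }),
];
console.log(pieSlices(records, "component").map((s) => `${s.label} ${s.share}%`).join(", "));
// File 50%, Forum 30%, Quiz 15%, Other 5%
```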

Figure 3.

Sector-based plot generated by JSMLA.

A default JSMLA table, as shown in Figure 4, can contain a wide range of information. Unlike the KPI widget, a table may contain alphanumeric values. Tables show top metrics by sorting them by column. Each column represents a category or attribute of the rows, so a table may contain multiple rows with different values for each attribute. Structured tables offer better user comprehension, clarity, and consistency. The teacher may choose how the information is ordered and grouped.

Figure 4.

A table generated by JSMLA.

2.2.2. Basic Functionalities

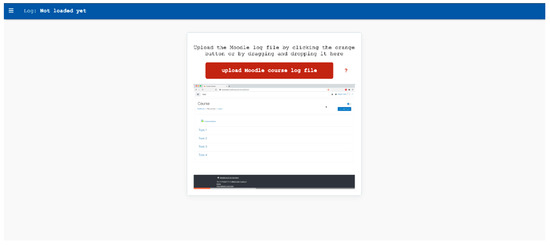

To further understand and visualize JSMLA, we provide a walkthrough of its basic functionalities. When the front-end application is launched, the homepage has a button that allows the user to upload the JSON file containing the student logs. First-time users may find this screen confusing, so in this iteration we added a tooltip that opens a video explaining how the tool works (see Figure 5).

Figure 5.

JSMLA landing screen. It offers a dialog to load Moodle’s log and a video with a short guide to extracting this information.

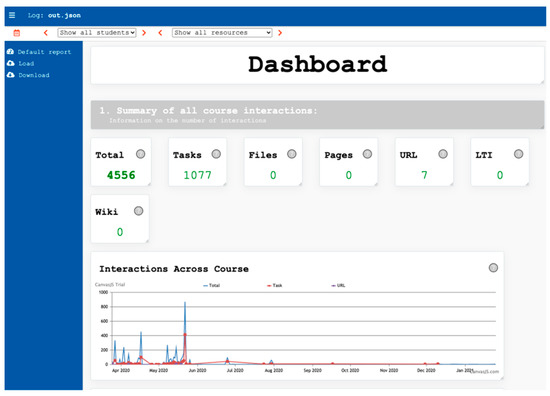

Once the data is loaded into the tool, the widgets explained in the previous section are displayed (see Figure 6), categorized so that the user finds them useful when analyzing students’ participation and engagement in the course.

Figure 6.

JSMLA once a log has been loaded.

As mentioned previously, the widgets are grouped into different sections.

The first section, Summary of all course interactions (see Figure 7), contains widgets that represent the total interactions in the course. Specifically, this part of the dashboard contains the KPI widgets and a line graph showing the total number of interactions with the resources in the course. The first widgets show the total number of interactions per Moodle element type, for example, Total: the number of student interactions with all course elements.

Figure 7.

JSMLA’s counters of interactions related to different components available on Moodle.

The next widget is a line-based plot that represents the evolution over time of all students’ interactions (see Figure 8) as three series, each represented by a color: Total interactions, Tasks, and Files. Hovering the mouse over the plot shows the data for each element as well as the date being consulted. This widget is useful for comparing overall analytics over time and identifying the months with the most and least interaction with different types of resources.
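The three lines in Figure 8 can be produced by counting interactions per month for several series at once. In the sketch below, each series is a hypothetical predicate over normalized records with `time` and `component` fields. The component names "Assignment" and "File" are assumptions standing in for tasks and files, and only months with some activity appear on the x-axis.

```javascript
// Local-time month key in YYYY-MM form.
function monthKey(date) {
  return `${date.getFullYear()}-${String(date.getMonth() + 1).padStart(2, "0")}`;
}

// Count interactions per month for several named series (name -> predicate).
function monthlySeries(records, seriesDefs) {
  const months = [...new Set(records.map((r) => monthKey(r.time)))].sort();
  const result = {};
  for (const [name, predicate] of Object.entries(seriesDefs)) {
    const counts = Object.fromEntries(months.map((m) => [m, 0]));
    for (const r of records) {
      if (predicate(r)) counts[monthKey(r.time)] += 1;
    }
    result[name] = months.map((m) => ({ month: m, count: counts[m] }));
  }
  return result;
}

const log = [
  { time: new Date(2021, 1, 10), component: "File" },
  { time: new Date(2021, 1, 12), component: "Assignment" },
  { time: new Date(2021, 2, 3), component: "File" },
  { time: new Date(2021, 2, 5), component: "Forum" },
  { time: new Date(2021, 2, 8), component: "File" },
];

const series = monthlySeries(log, {
  Total: () => true,
  Tasks: (r) => r.component === "Assignment",
  Files: (r) => r.component === "File",
});
console.log(series.Files.map((p) => `${p.month}:${p.count}`).join(" ")); // 2021-02:1 2021-03:2
```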

Figure 8.

JSMLA’s timeline of interactions across a period of activity. Each line represents a different kind of interaction, and an extra line represents the combination of all activities.

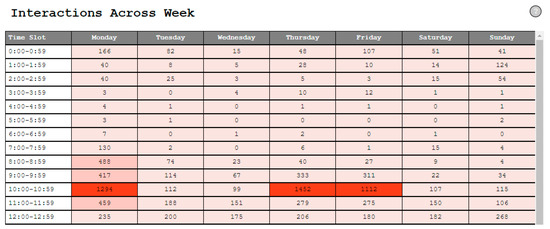

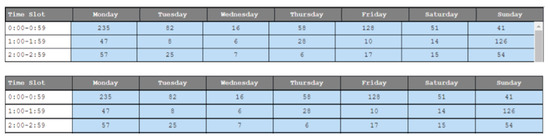

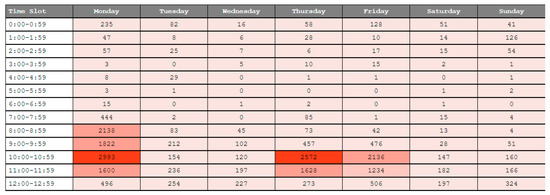

The next widget represents the distribution of all students’ interactions with all elements over a week, as the number of interactions per hour in a table (see Figure 9). It is designed to determine on which days and at which hours students are most likely to work on and access the subject’s Moodle. Every table has a heat map to help visually identify higher and lower values.
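The weekly table can be computed as a 7 × 24 matrix of counts indexed by weekday and hour. The sketch assumes records with a `time` field holding a `Date` (hypothetical format) and uses local time. The heat map intensity is each cell's count relative to the busiest cell, which is one simple choice among many possible color scales.

```javascript
const DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"];

// Build a 7x24 matrix: rows are weekdays (Monday first), columns are hours 0-23.
function weekHourMatrix(records) {
  const matrix = Array.from({ length: 7 }, () => new Array(24).fill(0));
  for (const { time } of records) {
    // getDay() returns 0 for Sunday; shift so that Monday is row 0.
    const row = (time.getDay() + 6) % 7;
    matrix[row][time.getHours()] += 1;
  }
  return matrix;
}

// Heat map intensity in [0, 1]: each cell relative to the busiest cell.
function heatIntensity(matrix) {
  const max = Math.max(...matrix.flat());
  return matrix.map((row) => row.map((v) => (max === 0 ? 0 : v / max)));
}

const log = [
  { time: new Date(2021, 2, 1, 10, 5) }, // Monday 10:05
  { time: new Date(2021, 2, 1, 10, 40) }, // Monday 10:40
  { time: new Date(2021, 2, 7, 22, 0) }, // Sunday 22:00
];
const matrix = weekHourMatrix(log);
const intensity = heatIntensity(matrix);
console.log(DAYS[0], matrix[0][10], intensity[0][10]); // Mon 2 1
console.log(DAYS[6], matrix[6][22], intensity[6][22]); // Sun 1 0.5
```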

Figure 9.

JSMLA’s analysis table, where the different interactions across the evaluated period of time are distributed across the week hourly.

The second section, Students, contains widgets that give more specific information about all the students in the uploaded log and their individual participation statistics (see Figure 10). The first widget is a line-based plot that represents all students’ last access to the course’s Moodle. The x-axis represents days, and the y-axis represents the number of students whose last access was on that day. Hovering the mouse over a point shows the given day and the list of students whose last connection was on that day. This widget helps analyze students’ participation in the course by showing who connected last and when, and therefore whether students are following the course; it also helps detect students at risk of dropping out.
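The last-access timeline first needs each student's most recent interaction and then the number of students whose last access falls on each day, keeping their names for the hover tooltip. The sketch below assumes records with `user` and `time` fields (a hypothetical format) and uses local days.

```javascript
// Most recent interaction per user.
function lastAccessByUser(records) {
  const last = new Map();
  for (const { user, time } of records) {
    const prev = last.get(user);
    if (!prev || time > prev) last.set(user, time);
  }
  return last;
}

function localDay(date) {
  const m = String(date.getMonth() + 1).padStart(2, "0");
  const d = String(date.getDate()).padStart(2, "0");
  return `${date.getFullYear()}-${m}-${d}`;
}

// Group users by the day of their last access: { day, count, users }, sorted by day.
function lastAccessTimeline(records) {
  const byDay = new Map();
  for (const [user, time] of lastAccessByUser(records)) {
    const day = localDay(time);
    if (!byDay.has(day)) byDay.set(day, []);
    byDay.get(day).push(user);
  }
  return [...byDay.entries()]
    .sort(([a], [b]) => a.localeCompare(b))
    .map(([day, users]) => ({ day, count: users.length, users }));
}

const log = [
  { user: "Ana", time: new Date(2021, 2, 1, 9) },
  { user: "Ana", time: new Date(2021, 2, 4, 12) },
  { user: "Luis", time: new Date(2021, 2, 2, 16) },
  { user: "Marta", time: new Date(2021, 2, 4, 8) },
];
for (const p of lastAccessTimeline(log)) {
  console.log(p.day, p.count, p.users.join(", "));
}
// 2021-03-02 1 Luis
// 2021-03-04 2 Ana, Marta
```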

Figure 10.

JSMLA’s timeline of last accesses of students across a period of activity. The y-axis represents the number of students who last saw the course or its contents in Moodle on that date.

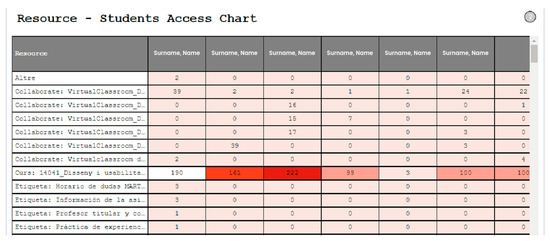

The next widget is a table that represents the number of times each member of the course has interacted with each course resource (see Figure 11). It can be used to analyze more specific data about each student and their relationship with the course elements, showing the number of times every student has interacted with every course element.
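A student-by-resource table like the one in Figure 11 is a pivot of the log: one row per student, one column per resource, and interaction counts in the cells. The sketch assumes records with `user` and `context` fields, where `context` names the resource (a hypothetical format); the `pivot` helper is illustrative, not part of JSMLA.

```javascript
// Pivot records into a rows x columns count table (e.g. users x resources).
function pivot(records, rowField, colField) {
  const rows = [...new Set(records.map((r) => r[rowField]))].sort();
  const cols = [...new Set(records.map((r) => r[colField]))].sort();
  const rowIndex = new Map(rows.map((v, i) => [v, i]));
  const colIndex = new Map(cols.map((v, i) => [v, i]));
  const cells = rows.map(() => new Array(cols.length).fill(0));
  for (const r of records) {
    cells[rowIndex.get(r[rowField])][colIndex.get(r[colField])] += 1;
  }
  return { rows, cols, cells };
}

const log = [
  { user: "Ana", context: "Forum: Questions" },
  { user: "Ana", context: "File: Syllabus" },
  { user: "Ana", context: "File: Syllabus" },
  { user: "Luis", context: "Forum: Questions" },
];
const table = pivot(log, "user", "context");
console.log(table.cols.join(" | ")); // File: Syllabus | Forum: Questions
table.rows.forEach((user, i) => console.log(user, table.cells[i].join(" | ")));
// Ana 2 | 1
// Luis 0 | 1
```

Students who never touched a resource get an explicit zero, which is what makes gaps visible in the heat map.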

Figure 11.

JSMLA’s table of interactions which distributes interactions across resources and students.

The following widget shows, for each student, the percentage and total number of interactions with all course elements (see Figure 12). This widget makes it easy to detect which students participate most and least in the course.

Figure 12.

JSMLA’s list of relative and absolute interactions across a period of activity.

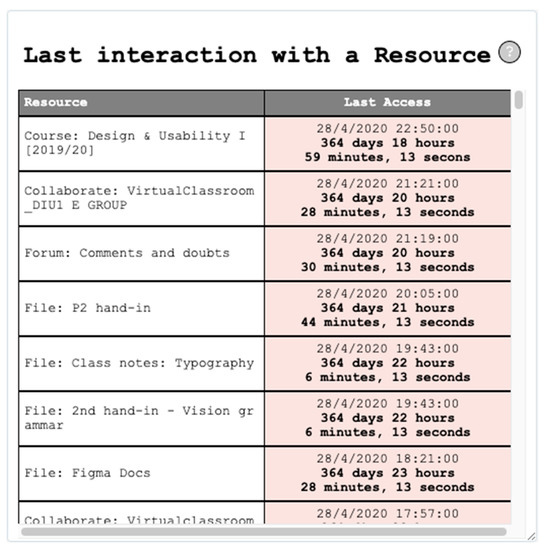

The following widget adds information about the last connection of each course member, in a table showing the exact date of the last connection and how long ago it was made (see Figure 13). This widget helps identify which students have not participated for the longest time, which can help, for example, to prevent dropout.
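The "how long ago" column can be derived from each student's last access and a reference date, for example the moment the log was exported. The sketch below assumes records with `user` and `time` fields (a hypothetical format) and counts whole elapsed 24-hour periods. It sorts entries so that the longest-inactive appear first. The same function can serve a per-resource table, such as the one in Figure 14, by passing a different key.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Last access per key (e.g. "user" or "context") and whole days elapsed until `now`.
function inactivity(records, key, now) {
  const last = new Map();
  for (const r of records) {
    const prev = last.get(r[key]);
    if (!prev || r.time > prev) last.set(r[key], r.time);
  }
  return [...last.entries()]
    .map(([name, time]) => ({
      name,
      lastAccess: time,
      daysAgo: Math.floor((now - time) / MS_PER_DAY),
    }))
    // Longest-inactive first.
    .sort((a, b) => b.daysAgo - a.daysAgo);
}

const now = new Date(2021, 2, 20, 12, 0);
const log = [
  { user: "Ana", time: new Date(2021, 2, 18, 9, 0) },
  { user: "Luis", time: new Date(2021, 2, 5, 17, 0) },
  { user: "Ana", time: new Date(2021, 2, 2, 10, 0) },
];
for (const s of inactivity(log, "user", now)) {
  console.log(s.name, s.daysAgo);
}
// Luis 14
// Ana 2
```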

Figure 13.

JSMLA’s list of last access to course for each student.

The third section, Resources, contains widgets that provide specific information about each course resource and the number of interactions with it. The first widget is a table that represents the last time each resource was accessed by anyone and how long ago that was (see Figure 14). The heat map helps visually identify which course elements students are currently interacting with least and which they are interacting with most.

Figure 14.

JSMLA’s list of last access to a resource by any student.

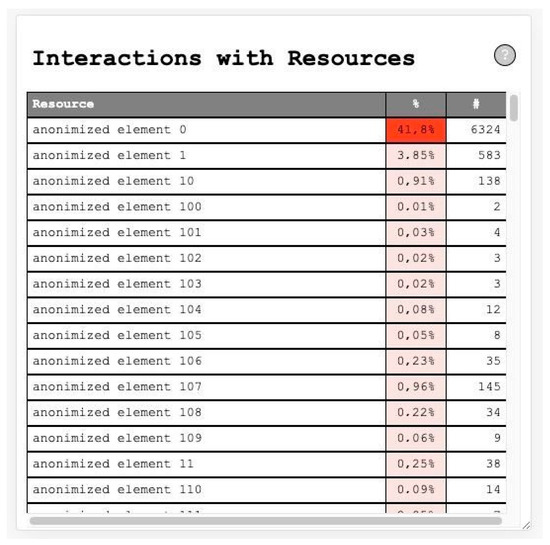

The next four widgets are tables that show the percentage and number of interactions with different resources. The first table shows the percentage of interaction with all kinds of resources (see Figure 15).

Figure 15.

JSMLA’s list of resources and their relative and absolute interaction for said course.

The second table represents the percentage of interaction with the components of the course (see Figure 16).

Figure 16.

JSMLA’s list of components, also known as type of resource, and their relative and absolute interaction for said course.

The third table represents the percentage of interaction with the events of the course (see Figure 17).

Figure 17.

JSMLA’s list of events and their relative and absolute interaction for said course.

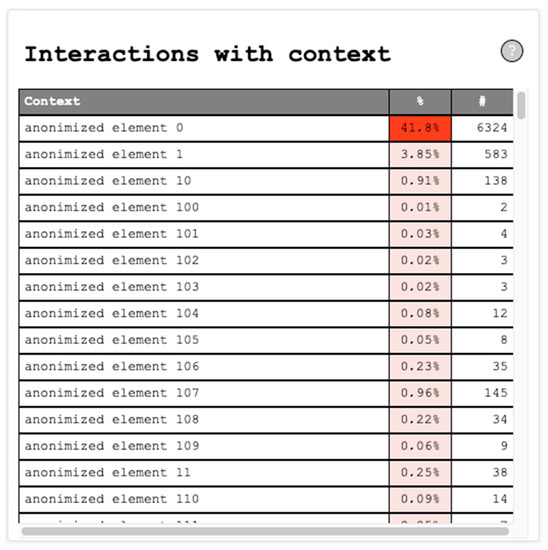

The fourth table represents the percentage of interaction with the context of the course (see Figure 18).

Figure 18.

JSMLA’s list of context elements and their relative and absolute interaction for said course.
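The four tables above can share one computation that groups by a different field each time. The sketch below is minimal and assumes records with `component` and `event` fields (hypothetical names). It counts interactions per value and expresses each count as a percentage of all interactions, rounded to two decimals for display.

```javascript
// Relative and absolute interactions per value of `field`, most frequent first.
function percentageTable(records, field) {
  const counts = new Map();
  for (const r of records) {
    counts.set(r[field], (counts.get(r[field]) || 0) + 1);
  }
  const total = records.length;
  return [...counts.entries()]
    .map(([value, count]) => ({
      value,
      count,
      // Percentage rounded to two decimals.
      percent: Math.round((count * 10000) / total) / 100,
    }))
    .sort((a, b) => b.count - a.count);
}

const log = [
  { component: "File", event: "Course module viewed" },
  { component: "File", event: "Course module viewed" },
  { component: "Forum", event: "Post created" },
];
for (const field of ["component", "event"]) {
  const rows = percentageTable(log, field);
  console.log(field, rows.map((row) => `${row.value}: ${row.percent}% (${row.count})`).join("; "));
}
// component File: 66.67% (2); Forum: 33.33% (1)
// event Course module viewed: 66.67% (2); Post created: 33.33% (1)
```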

The last widget is a pie plot that represents the number of different kinds of elements in the course (see Figure 19).

Figure 19.

JSMLA’s pie plot to represent the amount of interaction each component represents in a course.

In addition to these sections, a general filter at the top of the application shows data only for a specific student and/or a specific resource (tasks, files, pages, etc.). This gives the user easy access to specific data rather than only a general visualization. To further improve data filtering, we added a calendar icon that opens a modal to modify the date range (see Figure 20).
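The general filter and the date range can be combined into a single predicate applied before any widget is computed. The sketch below is minimal and assumes records with `user`, `component`, and `time` fields (hypothetical names). An omitted criterion matches everything, and the end date includes the whole of its last day.

```javascript
// Filter records by optional user, component, and date range (inclusive, local days).
function filterLog(records, { user, component, from, to } = {}) {
  const start = from ? new Date(from.getFullYear(), from.getMonth(), from.getDate()) : null;
  // Exclusive upper bound: midnight after the `to` day.
  const end = to ? new Date(to.getFullYear(), to.getMonth(), to.getDate() + 1) : null;
  return records.filter(
    (r) =>
      (!user || r.user === user) &&
      (!component || r.component === component) &&
      (!start || r.time >= start) &&
      (!end || r.time < end)
  );
}

const log = [
  { user: "Ana", component: "File", time: new Date(2021, 2, 1, 10) },
  { user: "Ana", component: "Forum", time: new Date(2021, 2, 5, 23, 30) },
  { user: "Luis", component: "File", time: new Date(2021, 2, 6, 8) },
];
console.log(filterLog(log, { user: "Ana" }).length); // 2
console.log(filterLog(log, { from: new Date(2021, 2, 2), to: new Date(2021, 2, 5) }).length); // 1
console.log(filterLog(log, { component: "File", to: new Date(2021, 2, 5) }).length); // 1
```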

Figure 20.

JSMLA date filter to show only relevant data for a certain period.

The educational data shown on the dashboard may also be handled in different ways. We provide three options on the menu situated on the left side of the application: Default report, Load and Download.

The tool is designed to be simple to use, and its basic functionalities are intended to be intuitive from the user’s perspective. Its usability was evaluated through the heuristic analysis described in the next section.

2.3. Heuristic Analysis

Every widget must make its information, such as data displayed in rows and columns, fast and efficient to read. The widget structure is flexible enough to adapt to any data source. However, the amount of information displayed in a single widget also matters: too much information makes the facts and meaningful data harder to understand. Hence, widgets also require deeper revision and polishing so that they are not overloaded with meaningless data. For example, table widgets must limit the number of rows and columns [32].
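One way to enforce such limits is to sort a table by the numeric column the teacher selects and then keep only the first rows and columns. The sketch below is a hypothetical helper, not part of JSMLA, and the limits in the example are arbitrary. It also returns the number of hidden rows, so that the interface can show that the table was truncated.

```javascript
// Sort table rows by one numeric column (descending) and keep at most
// maxRows rows and maxCols columns. `rows` are arrays aligned with `header`.
function limitTable(header, rows, sortCol, maxRows, maxCols) {
  const colIdx = header.indexOf(sortCol);
  if (colIdx === -1) throw new Error(`Unknown column: ${sortCol}`);
  const sorted = [...rows].sort((a, b) => b[colIdx] - a[colIdx]);
  return {
    header: header.slice(0, maxCols),
    rows: sorted.slice(0, maxRows).map((row) => row.slice(0, maxCols)),
    hiddenRows: Math.max(0, rows.length - maxRows),
  };
}

const header = ["Student", "Interactions", "Files", "Forum"];
const rows = [
  ["Ana", 40, 25, 15],
  ["Luis", 12, 10, 2],
  ["Marta", 55, 30, 25],
  ["Pablo", 3, 3, 0],
];
const view = limitTable(header, rows, "Interactions", 2, 2);
console.log(view.header.join(" | ")); // Student | Interactions
view.rows.forEach((row) => console.log(row.join(" | ")));
// Marta | 55
// Ana | 40
console.log(view.hiddenRows); // 2
```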

After creating the first version of JSMLA, we reviewed the site to find and solve its user experience weaknesses and to reinforce its strong points, making it more intuitive, clear, and accessible to the average user. To analyze the usability of the JSMLA front-end website, we decided to create a heuristic report [33], which consists of testing a series of usability hypotheses against business objectives to draw conclusions and propose improvements. This analysis is useful to understand the current state of the product, where it is deployed, and what needs to be developed to accomplish the objectives, without involving end users.

2.4. Survey

A survey was conducted in Spanish with 65 professors from different education levels, both compulsory and post-compulsory, specifically primary and secondary education, higher education, basic and intermediate vocational training, and university education, which includes both Bachelor’s and Master’s cycles. Participants were recruited by announcing the survey on social media [34,35] and through the authors’ mail chains. Considering that each professor is an active participant in the educational sector, we did not set any minimum requirement, since this study aims to examine teachers’ perception of JSMLA’s current features and to explore new possibilities directly with its main target audience.

The survey consisted of a thorough validation of the indicators shown in the dashboard to determine whether the information is useful for evaluating their students [36]. We presented a set of questions answered on a Likert scale from 1 to 5, where the lowest value, 1, means strongly disagree and the highest value, 5, means strongly agree. The survey also asked teachers to briefly describe why each indicator is useful to them (through a very short open-ended question).
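Responses on a 1 to 5 Likert scale are ordinal, so they are commonly summarized with the frequency of each value, the median, and the share of agreement (answers of 4 or 5), sometimes alongside the mean. The sketch below shows such a summary. It is not the authors' analysis procedure, and the responses in the example are invented for illustration rather than taken from the survey results.

```javascript
// Summarize 1-5 Likert responses: frequencies, median, mean, and % agreement (4 or 5).
function summarizeLikert(responses) {
  if (responses.length === 0) throw new Error("No responses");
  const frequencies = [1, 2, 3, 4, 5].map((v) => responses.filter((r) => r === v).length);
  const sorted = [...responses].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median = sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  const mean = responses.reduce((sum, r) => sum + r, 0) / responses.length;
  const agreement = ((frequencies[3] + frequencies[4]) * 100) / responses.length;
  return { frequencies, median, mean, agreement };
}

// Invented responses for one indicator (not the study's data).
const summary = summarizeLikert([5, 4, 4, 3, 5, 2, 4, 5]);
console.log(summary.frequencies.join(" ")); // 0 1 1 3 3
console.log(summary.median, summary.mean, summary.agreement); // 4 4 75
```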

We do not consider this study experimental, as it has no control group; however, it includes enough participants to be considered a representative sample providing significant findings. Nevertheless, it must be noted that the survey was conducted in Spanish and that its population comes largely from similar and nearby regions.

An expected outcome of the survey is feedback on each indicator, its visualization, and its type of data, together with new parameters that teachers would like to observe. This may open new lines of development for JSMLA based on the study results, expanding the metrics the system can represent in the future and providing meaning for other tools that exploit the same data, such as Learnglish or similar tools [29,37].

Structure

The survey is divided into four different sections (see Figure 21):

- Course totals;

- Timeline of accesses to the course;

- Student participation;

- Student interactions with resources.

Figure 21.

Resources evaluated through the study survey.

Each section refers to a specific group of closely related indicators. It explains the current JSMLA features and asks participants to rate their perceived usefulness on the Likert scale, followed by a short open question to justify the rating and state whether they would use the feature.

The first section, course totals, refers to the widgets that indicate the total number of accesses (i) to the course, (ii) to each type of resource, and (iii) to each activity type. In this section, the indicators allow the user to understand the activity of the course and the types of resources and activities that are highly relevant to the students.

The second section, timeline of accesses to the course, refers to the graphs that show the total number of (i) accesses to the course, (ii) last accesses, and (iii) accesses to each resource and activity over time. This set of indicators allows the user to understand how students organize their work. The user can see who is actively participating in the course and carrying out their tasks daily. These indicators also show the most used resources. The user can thus understand the behavior of each student and act on this information to prevent students at risk from abandoning the course.

The third section, called student participation, contains widgets that show (i) the percentage of accesses to the course, (ii) the total number of accesses to the subject webpage, and (iii) to each resource and activity. These indicators help us understand the student information individually, offering an opportunity to pinpoint those who are making an effort and those waiting until the last moment to work on the proposed projects and activities. According to the information gained from these indicators, the teachers will know when to send notifications to some students to increase their active participation in the course.

The fourth and last section, student interactions with resources, offers indicators of how students interact with each resource. These include (i) the percentage of accesses, (ii) the total number of accesses, and (iii) the date and time of the last access to each resource. A resource can be any activity, task, or Moodle component, such as files, forums, or registers, as well as user events. A user event can be a submitted task, a viewed activity, a created group, etc. Together, these indicators give the user a better understanding of students’ behavior with each resource available in Moodle and in the course itself. The main objective is to detect the most and least used resources. The teacher can also see the most common actions and which students interact most with the resources.
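The sketch below ranks resources from most to least used. For each resource it records the number of accesses, the share of all accesses, the distinct students involved, and the last access. It assumes a hypothetical record shape with `user`, `resource`, and `time` fields, which is not JSMLA’s schema.

```javascript
// Hypothetical, simplified log record shape (assumption): { user: string, resource: string, time: Date }

// Aggregate per-resource usage and rank resources from most to least used.
function resourceUsage(records) {
  const usage = new Map();
  for (const { user, resource, time } of records) {
    let entry = usage.get(resource);
    if (!entry) {
      entry = { resource, accesses: 0, users: new Set(), lastAccess: null };
      usage.set(resource, entry);
    }
    entry.accesses += 1;
    entry.users.add(user); // distinct students who touched the resource
    if (entry.lastAccess === null || time > entry.lastAccess) entry.lastAccess = time;
  }
  const total = records.length;
  return [...usage.values()]
    .map((entry) => ({ ...entry, share: (100 * entry.accesses) / total }))
    // Most used first; ties broken by name so the order is deterministic.
    .sort((a, b) => b.accesses - a.accesses || (a.resource < b.resource ? -1 : a.resource > b.resource ? 1 : 0));
}

const log = [
  { user: "ana", resource: "Syllabus.pdf", time: new Date("2021-03-01T09:00:00Z") },
  { user: "ben", resource: "Syllabus.pdf", time: new Date("2021-03-03T08:00:00Z") },
  { user: "ana", resource: "Syllabus.pdf", time: new Date("2021-03-02T12:00:00Z") },
  { user: "ben", resource: "Q&A forum", time: new Date("2021-03-02T10:00:00Z") },
];
const ranking = resourceUsage(log);
console.log(ranking.map((r) => `${r.resource}: ${r.share}%`)); // [ 'Syllabus.pdf: 75%', 'Q&A forum: 25%' ]
```

The first and last entries of the ranking give the most and least used resources directly.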

These four sections only gather opinions on the current widgets in the dashboard. After rating the indicators, respondents were asked whether any indicators were still missing and, if so, to describe them briefly.

Respondents were also asked to suggest improvements and changes to the current JSMLA widgets that would enhance the user experience. Their answers are intended to make the dashboard useful in more contexts and to guide the development of future indicators.

3. Results

We present the results of the heuristic analysis and the survey. These results were used to improve the user experience of the predefined JSMLA dashboard and to create new widgets that address the needs of the surveyed teachers.

3.1. Heuristic Analysis Results

JSMLA was evaluated against Jakob Nielsen’s 10 usability heuristics [26]. The website was assessed under each of the 10 principles (the heuristics):

- Visibility of system status: the system always informs users about internal processes.

- Match between system and the real world: the system speaks a language that the user easily understands.

- User control and freedom: the system supports undo and redo of every function.

- Consistency and standards: the same meanings are always represented in the same way, and icons are used consistently.

- Error prevention: errors are prevented from occurring in the first place.

- Recognition rather than recall: actions are visible to minimize the user’s memory load.

- Flexibility and efficiency of use: users can tailor frequent actions.

- Aesthetic and minimalist design: no irrelevant or unnecessary information is shown.

- Help users recognize, diagnose, and recover from errors: error messages are expressed in plain language.

- Help and documentation: the system provides help and documentation.

These principles are widely used by designers to evaluate usability and are therefore the most common basis for heuristic analysis.

The use case is a written description of the actions users perform on the page, i.e., the steps they would take to complete certain tasks. Writing it helps anticipate how a given user will behave on the site. It is therefore important to define a situation that fits the site’s normal use. This includes the hypothetical user’s personality, environment, and motivations, why they use the site, which external factors influence them, and what their real objective is.

The use case for this analysis was a teacher who teaches several subjects and uses the platform to analyze how his students actually engage with the material he shares.

Accessibility was approached from an inclusive design perspective. The analysis checked whether the page complies with current regulations, specifically the “Royal Decree 1112/2018 on the accessibility of websites and applications for mobile devices of the public sector” [38].

The result of this analysis is a list of web usability problems (the hypotheses) grouped by severity. The analysis produced 20 findings, classified by importance as priority, significant, or relevant.

As Figure 22 shows, most usability errors in the first version of the site relate to the eighth principle, aesthetic and minimalist design. The poor layout of the content and the design of the graphs and tables made the information confusing to interpret.

Figure 22.

Distribution of the findings of the heuristic analysis: the x-axis shows the number of each Jakob Nielsen heuristic principle, and the y-axis shows the number of findings.

Priority findings also concern page consistency and design patterns, which corresponds to the fourth principle, because a consistent design pattern is not maintained across all sections. This reduces ease of use and clarity of design.

Under the first principle, “visibility of system status”, the findings point to a lack of feedback. Users may be confused because they do not understand what the page is doing at a given moment.

Under the third principle, “the user’s freedom to move around the web page and undo actions”, the findings show that the user had to reload the page to continue using it. This was a major failure of usability and fluidity of use.

The tenth principle deals with “the help and explanation that the page offers to the user”. Two significant findings were identified in which the user may not understand the content, which limits a clear understanding of the information.

The second and fifth principles each have only one significant finding. They deal with matching the system to human language and with error prevention, respectively.

The results of the heuristic analysis led to several significant improvements to the first version of JSMLA, visible in Figure 23. Since clear interpretation of data is fundamental to JSMLA, all findings related to the aesthetics of tables and content layout are resolved, for example:

- Remove elements that contain no information and nonfunctioning buttons;

- Enable resizing of tables and graphs;

- Correctly align the content of tables.

Figure 23.

Table alignment.

JSMLA uses a color code to highlight the highest values in each table, so that users can find and understand the data quickly. Initially, this code used several different colors across tables. The analysis showed that the information it was meant to convey was unclear and confused the interpretation of the data. The system was therefore simplified from several colors to a single color with different levels of brightness (Figure 24).

Figure 24.

Color coding of the tables: darker shades represent higher values, and lighter shades represent lower values.
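A single-hue scale of this kind can be produced by mapping each value linearly onto the lightness channel of a CSS `hsl()` color. The following sketch is illustrative only. The hue (210, a blue), the 70% saturation, and the 95% to 35% lightness range are assumptions, not JSMLA’s actual palette.

```javascript
// Map a table cell value to a single-hue CSS hsl() colour: higher values get darker shades.
// Hue, saturation, and the lightness range are illustrative assumptions.
function cellColor(value, min, max, hue = 210) {
  const lightest = 95; // lightness (%) used for the minimum value
  const darkest = 35; // lightness (%) used for the maximum value
  // Normalise to [0, 1]; a column where every value is equal gets the lightest shade.
  const t = max > min ? (value - min) / (max - min) : 0;
  const clamped = Math.min(1, Math.max(0, t));
  const lightness = Math.round(lightest - clamped * (lightest - darkest));
  return `hsl(${hue}, 70%, ${lightness}%)`;
}

// Colour a whole column relative to its own minimum and maximum.
function colorColumn(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  return values.map((v) => cellColor(v, min, max));
}

console.log(colorColumn([0, 5, 10])); // [ 'hsl(210, 70%, 95%)', 'hsl(210, 70%, 65%)', 'hsl(210, 70%, 35%)' ]
```

Keeping one hue and varying only brightness means the shades can be read as a single ordered scale, which matches the simplification described above.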

JSMLA is an open-source tool whose main users are teachers of different subjects and teaching areas, so it must be clear, useful, and easy to use for any user. To facilitate the understanding of the information that the page shows to the user, a tooltip is added to each widget, see Figure 25, which consists of a floating box with a brief explanation.

Figure 25.

Tooltip for each widget.

Another oversight of the first version lies in the user’s freedom in using the page, along with the lack of visibility of the system status. The version tested does not react when an erroneous file is loaded; it simply stops the progress bar, giving the user a false sense that the tool is continuing to load the data. This error forces the user to close the page and reopen it to continue using it, which also happens when the user wants to load a different file than the current one.

Both problems are solved by adding a button that lets the user upload another file at any time, as shown in Figure 26. To strengthen error detection, filters are also added so that only uncorrupted files with the proper file extension can be uploaded.

Figure 26.

Load a custom dashboard.
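The upload filters can be sketched as a validation step that returns an explicit error message. This addresses the visibility-of-system-status problem: the tool reports the error instead of leaving the progress bar stalled. The function name, the `.json`-only extension list, and the rule that a valid log is a non-empty JSON array are assumptions made for illustration. They are not JSMLA’s actual checks.

```javascript
// Allowed extensions are an assumption for this sketch; the paper mentions JSON log files.
const ALLOWED_EXTENSIONS = [".json"];

// Validate an uploaded log before analysis. Returns { ok: true, data } or { ok: false, error }.
function validateLogUpload(fileName, contents) {
  const lower = fileName.toLowerCase();
  if (!ALLOWED_EXTENSIONS.some((ext) => lower.endsWith(ext))) {
    return { ok: false, error: `Unsupported file type: ${fileName}` };
  }
  let data;
  try {
    data = JSON.parse(contents); // corrupted or truncated files fail here
  } catch (err) {
    return { ok: false, error: `The file could not be read as JSON: ${err.message}` };
  }
  // Assumed minimal shape check: a log must be a non-empty array of records.
  if (!Array.isArray(data) || data.length === 0) {
    return { ok: false, error: "The file does not contain any log records." };
  }
  return { ok: true, data };
}

console.log(validateLogUpload("logs.csv", "[]").error); // Unsupported file type: logs.csv
console.log(validateLogUpload("logs.json", '[{"user":"ana"}]').ok); // true
```

In a browser front end, the `contents` string would typically come from reading the selected file with the File API before this check runs.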

This Nielsen analysis detected multiple usability and accessibility errors in the web page. Fixing them makes the page more efficient and accessible.

One important finding remains unresolved. The filter could be improved by grouping the listed categories into subsets to reduce its size, and by adding a text field with autocomplete suggestions. This improvement is considered relevant but was left for future work. The priority was to make data and colors clear to interpret, to make the tool and its functions easy to use and understand, and to keep a simple design that helps users understand the information.

3.2. Teacher Survey

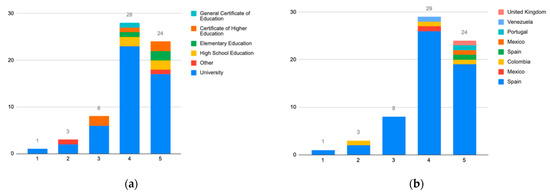

The questionnaire population consists of teaching staff from different types of educational institutions in several countries.

As shown in Figure 27, most respondents are located in Spain (91.8%), followed by Colombia (4.8%) and Mexico (3.2%). There are also participants located in the UK, Venezuela, and Portugal. Likewise, most respondents work at universities (75.4%), followed by high schools (7.7%) and secondary schools (6.2%). To a lesser extent, there are educational staff from primary schools (4.6%), high schools (1.5%), and middle schools (1.5%), and five others (3.1%).

Figure 27.

Teachers’ educational stage by their country.

As shown in Figure 28, the participating institutions include several well-known universities, such as La Salle (Universitat Ramon Llull), UA, UCA, and UPM.

Figure 28.

Teacher distribution by institution.

For the “course totals” section of the survey, most respondents chose the high end of the scale, from both a regional and an educational stage perspective (Figure 29). Teachers in Certificate of Higher Education, Other, and part of the University group perceive this section as less useful.

Figure 29.

(a) Perceived usefulness of course totals by teachers across educational stages. (b) Perceived usefulness of course totals by teachers across countries.

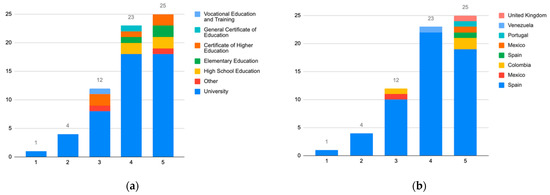

The scores shown in Figure 29 are justified by the following comments:

Figure 30.

(a) Perceived usefulness of last access and timelines by teachers across educational stages. (b) Distribution of perceived usefulness of last access and timelines by teachers across countries.

- It makes it possible to determine which content is more attractive to learners and thus to make adjustments, among other things.

- It gives an idea, but the exact reason for the access is not known.

- It shows how the resources are used and makes it possible to follow up on students’ activity. Knowing how resources are used supports their (re)design in a cycle of continuous improvement of the subject design.

For the “last access and timeline” section, most respondents chose the high end of the scale, from both regional and educational perspectives (Figure 30). In this case, the distribution of teachers across countries is similar to that across educational stages.

The following responses help explain the distribution shown in Figure 30:

Figure 31.

(a) Distribution of perceived usefulness by teachers across educational stages about student participation widgets. (b) Distribution of perceived usefulness by teachers across countries about student participation widgets.

- It is useful information for contrasting students’ perceptions of the work.

- For continuous assessment perhaps, but if they have entered earlier or later, it is not so interesting.

- It allows the detection of periods of more and less activity. It would be interesting to provide the ability to compare between subjects to detect moments of less activity and to be able to plan deliveries in those moments.

For the student participation section, most respondents chose the higher values of the scale, from both a regional and an educational stage perspective (Figure 31).

Figure 32.

(a) Distribution of perceived usefulness by teachers across educational stages on resource interactions. (b) Distribution of perceived usefulness by teachers across countries on resource interactions.

The following comments offer feedback on the results shown in Figure 31:

- It can be interesting, although the result depends on the Campus activities, which often have static information. Thus, a very organized student who downloads a PDF may not download it again but may review it more than another student who accesses it multiple times.

- It would facilitate the attention to diversity, to dedicate more support to those who need it most, among many other things.

- We can know whether a specific student is using the platform or not, and their degree of interaction with the subject.

For the resource interactions section, most respondents are clustered in the upper range of the scale, from both the regional and the educational stage perspective (Figure 32).

The following answers help explain the distribution shown in Figure 32:

Figure 33.

(a) Distribution of teachers who perceive a necessary feature development across educational stages. (b) Distribution of teachers who perceive a necessary feature development across countries.

- I find this information very useful to be able to identify which resources are ‘preferred’ by students. This information can help a teacher to improve the quality of a course by focusing on generating resources that students ‘like’.

- Information to revamp the course or update.

- Depends on the resource and how easy it is to show that the resource is being used.

Lastly, teachers were asked which elements of the JSMLA widgets’ functionality and content they would change. As shown in Figure 33, teachers from the University and Other educational stages are the most demanding.

The survey also gathered the elements that users would like to add to or modify in the tool. The most frequently mentioned suggestions are summarized below:

- Time indicator (graph): determine how much time a student spends in the subject from the interactions.

- Indicator of the relationship between the number of interactions of a student and the grade obtained (dedication vs. grade obtained).

- Indicator that includes academic results.

- Include more graphs instead of using so many tables, text, or numerical data.

- Include the possibility of downloading a file with information from the tool in Excel or HTML format.

- Indicator that lets teachers define their own metrics (for example, an indicator built by combining other indicators, each with its own weight, or similar).
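Logs record only timestamped events, not durations. A time-dedication indicator like the first suggestion must therefore estimate time from the gaps between events. The sketch below uses a common session-timeout heuristic. It is offered as one possible approach, not as the method JSMLA implements. Consecutive events of the same student closer than a timeout (a hypothetical default of 30 minutes) count as active time. Longer gaps are treated as breaks between sessions. The estimate ignores time spent after the last event of each session, so it tends to undercount.

```javascript
// Hypothetical, simplified log record shape (assumption): { user: string, time: Date }

// Estimate each student's active minutes with a session-timeout heuristic:
// gaps between consecutive events up to `timeoutMinutes` count as active time,
// longer gaps are treated as breaks between sessions and are not counted.
function estimateDedication(records, timeoutMinutes = 30) {
  const timeoutMs = timeoutMinutes * 60 * 1000;
  const timesByUser = new Map();
  for (const { user, time } of records) {
    if (!timesByUser.has(user)) timesByUser.set(user, []);
    timesByUser.get(user).push(time.getTime());
  }
  const minutesByUser = new Map();
  for (const [user, times] of timesByUser) {
    times.sort((a, b) => a - b); // logs are not guaranteed to be in order
    let activeMs = 0;
    for (let i = 1; i < times.length; i++) {
      const gap = times[i] - times[i - 1];
      if (gap <= timeoutMs) activeMs += gap;
    }
    minutesByUser.set(user, activeMs / 60000);
  }
  return minutesByUser;
}

const log = [
  { user: "ana", time: new Date("2021-03-01T09:00:00Z") },
  { user: "ana", time: new Date("2021-03-01T09:25:00Z") },
  { user: "ana", time: new Date("2021-03-01T09:10:00Z") },
  { user: "ana", time: new Date("2021-03-01T14:00:00Z") },
  { user: "ben", time: new Date("2021-03-01T10:00:00Z") },
];
console.log(estimateDedication(log).get("ana")); // 25
```

Here the 09:00, 09:10, and 09:25 events form one session (10 + 15 minutes), and the 14:00 event starts a new one. The timeout is the main tuning choice, and different values can change the estimates considerably.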

4. Discussion

Teachers consider the indicators offered by the JSMLA dashboard to be of interest, since the majority rated each section positively. Nevertheless, some comments on the information shown suggest that the content should be adapted to certain teachers’ needs. The university sector shows a greater need to adapt the tool, especially in the last section of the survey (see Figure 32 and Figure 33). For instance, one group of teachers is eager to incorporate (i) the students’ motivations for using the resources and (ii) the possibility of comparing subjects, that is, loading more than one JSON log file at a time. However, some needs cannot be met. Either the logs do not include the required data, or the tool’s functionality is too basic to support them. Examples include associating grades with students and letting teaching staff create new indicators. The study population is somewhat biased, as most respondents come from a few geographic regions. We considered this acceptable because the required profile is defined by the respondents’ current job: teaching. Overall, the resulting JSMLA dashboard offers interesting visualizations of Moodle’s logs. However, the survey results show that Moodle’s log data is limited. JSMLA could be improved by adding new kinds of visualizations from other data sources, such as academic records, that meet teachers’ needs.

The dashboard presents information through different types of visualizations (KPIs, line charts, pie charts, and tables). Despite this variety, teachers find the information too similar. This may be because all the visualizations are built from the same Moodle log data, which reinforces the need to consider multiple data sources.

Table widgets offer a plain representation of data. When a large group of students and/or resources is analyzed, tables grow large and confuse end-users. The survey results suggest that some widgets were complex and hard to process. In particular, sections such as interactions and student accesses need visual aids to extract helpful information. Such aids could be an interpretation or a plot that conveys the data through means other than tables, such as color and interactivity. In some cases, the data literacy of some surveyed teachers seems insufficient to understand the data presented. Evaluating educators’ data literacy is therefore another line of future research.

Based on the results of this survey, several actions can be taken that may also interest other dashboard developers and researchers:

- Replace tables with graphs when possible so that it is more intuitive to extract the information shown.

- Incorporate new metrics that relate student involvement to the information displayed, such as the time students spend on resources or reviewing the tool.

- Add a flowchart that allows users to interpret the students’ paths through the resources.

- Offer more export formats for the generated data.

In JSMLA, many widgets have moved from tables to visual representations, such as pie plots and scatter plots. Specifically, this applies to the widgets student participation, interactions with components, interactions with events, interactions with URL, interactions with context, last access and students, and the resource-students access chart.

When a widget has few rows, as with interactions with components, events, or URLs, it is represented as a pie plot, since only a small group of elements must be shown. Pie plots are also used for student participation and interactions with context. These plots make it easy to identify the largest groups, and end-users can inspect each group by hovering over it. When there are many rows and/or students, a scatter plot is used instead. It gives each student a position on an axis, can show how many times each element has been interacted with, and can be easily filtered. The last access of each user is now shown in an unbiased plot that represents the data without any interpolation. New widgets were also developed, such as students’ time dedication to the course and detailed resources. These changes are openly available in the GitHub repository [28].
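The representation rule described above can be sketched as a chart-type choice driven by the number of rows. A second helper turns raw interactions into scatter points with an optional element filter. The cut-off of eight slices, the function names, and the record fields `user` and `element` are hypothetical. The paper does not state the exact threshold JSMLA uses.

```javascript
// Hypothetical cut-off: the paper does not state at how many rows JSMLA switches chart type.
const MAX_PIE_SLICES = 8;

// Few rows -> pie plot; many rows and/or students -> scatter plot.
function chooseChart(rowCount) {
  if (rowCount === 0) return "none";
  return rowCount <= MAX_PIE_SLICES ? "pie" : "scatter";
}

// One scatter point per (student, element) pair, with its interaction count.
// `elementFilter` lets the front end narrow the plot to the elements of interest.
function toScatterPoints(records, elementFilter = () => true) {
  const counts = new Map();
  for (const { user, element } of records) {
    if (!elementFilter(element)) continue;
    const key = JSON.stringify([user, element]); // collision-free composite key
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  return [...counts].map(([key, count]) => {
    const [student, elementName] = JSON.parse(key);
    return { student, element: elementName, count };
  });
}

const interactions = [
  { user: "ana", element: "URL A" },
  { user: "ana", element: "URL A" },
  { user: "ben", element: "URL A" },
  { user: "ben", element: "Forum" },
];
console.log(chooseChart(3), chooseChart(40)); // pie scatter
console.log(toScatterPoints(interactions, (e) => e === "Forum")); // [ { student: 'ben', element: 'Forum', count: 1 } ]
```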

Findings across Previous and Current Studies

As mentioned in previous sections, JSMLA was studied through a heuristic review as part of a user experience focus.

The previous findings [33] focused on providing a standardized tool to analyze Moodle’s logs. The present study identifies new concepts related to the following elements:

- Time spent on different resources and types of activities, and a flowchart to interpret the student’s path. This concept was not explored previously because it was outside the scope of the previous study.

- Replace tables with graphs when possible so that it is more intuitive to extract the information shown. Because this study considers respondents’ ability to understand data, this result emerged from incorporating users’ voices into the content they see. The principles followed in the heuristic analysis did not address data visualization or standards specific to the educational sector.

5. Conclusions

The data generated by an educational institution can be considered Small Data rather than Big Data. Even though the data is small, the same analytical techniques used for large amounts of data can be applied. Moreover, these techniques are usually executed through cloud computing on third-party servers. This means that educational data is transferred outside academic institutions and is often beyond their control. The same happens when third-party educational technology is integrated into educational processes. Consequently, the educational context presents a serious problem of sensitivity and fragility in terms of the confidentiality, privacy, and identity of students’ data. The more educational tools are used and the more data is transferred to third-party servers, the more dispersed student data becomes and the less control over privacy remains.

Notwithstanding the above, these issues are accompanied by positive opportunities. The analysis of educational data helps to improve the learning context, teaching activity, and academic management. However, the benefits are not reason enough to put aside the problems related to data. These analytical processes must comply with the law to ensure adequate levels of privacy and security.

Teachers can use local technology to reduce data transfers and third-party actors. Since the data generated in a class can be considered Small Data, developing a local analysis tool makes sense. Building on a novel theoretical framework that makes local technology the first option when adopting analytical technology [17], we present an analytical and visualization tool built with web technologies. It runs locally on any device with a web browser and does not require an internet connection.

The tool was developed with an iterative software development methodology in four phases. The first phase consists of developing the base library. The second phase, conceived as a pilot to support the research, consists of creating a schema and visualizations to analyze data from the Moodle logs report. These visualizations are based on the needs of teachers at the authors’ institution. The third phase consists of a Nielsen heuristic analysis. The fourth and final phase consists of improving the visualizations based on a survey of teachers from different educational stages. Teachers consider the indicators and visualizations provided by the tool to be of interest. However, some comments suggest the need to add other datasets and views beyond an interaction log. The resulting code is published as open source [28].

The heuristic analysis improved the usability of the tool, and the teacher survey revealed other needs and points of view. The results of both actions improve the tool by generating new widgets and adapting existing ones to teachers’ perceptions. The tool currently provides descriptive analytics that lay the foundations for future developments and/or adaptations of educational indicators.

In summary, our work provides educators with an easy-to-use Moodle educational data analysis and visualization tool, developers an open-source JavaScript library to develop front ends, and researchers a versatile tool with a configurable data schema to support their research.

New lines of research are opened: finding new ways of displaying relevant information for teachers, creating new metrics and indicators from multiple data sources, and assessing teachers’ level of data literacy to improve their interaction with the tool.

Author Contributions

Conceptualization, D.A.; methodology, D.A. and P.G.; software, D.A., S.C., N.M.J. and P.G.; formal analysis, P.G.; investigation, D.A. and P.G.; writing—original draft preparation, D.A., S.C., N.M.J. and P.G.; writing—review and editing, D.A., P.G. and D.F.; visualization, P.G.; supervision, D.A.; project administration, D.A., P.G. and D.F.; funding acquisition, D.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Secretariat of Universities and Research of the Department of Business and Knowledge of the Generalitat de Catalunya and Ramon Llull University, with grant number 2020-URL-Proj-058.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data supporting reported results can be found at https://lasalleuniversities-my.sharepoint.com/:f:/g/personal/daniel_amo_salle_url_edu/Erzj5js8gctIlYTdyJ4fGTQB86MrJSNT3QTk3WR24cU0KA?e=D1nFaE (accessed on 25 April 2021).

Acknowledgments

We thank Rogelio Sansaloni for integrating new widgets into the tool, and Luis Ribó and Oleksiy Byelyayev for creating the first version of the developer manual.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Faraway, J.J.; Augustin, N.H. When small data beats big data. Stat. Probab. Lett. 2018, 136, 142–145. [Google Scholar] [CrossRef]

- Lambrecht, A.; Tucker, C. Algorithmic bias? An empirical study of apparent gender-based discrimination in the display of stem career ads. Manag. Sci. 2019, 65, 2966–2981. [Google Scholar] [CrossRef]

- Zagitova, V.; Bokulich, A.N. Gender Biased Algorithms: Word Embedding Models’ impact on Gender Equality. Available online: https://d1wqtxts1xzle7.cloudfront.net/63551362/Gender_Biased_Algorithms_Zagitova20200607-19380-es6je5.pdf?1591513955=&response-content-disposition=inline%3B+filename%3DGender_Biased_Algorithms_Word_Embedding.pdf&Expires=1619777016&Signature=bcC1dWPv33Fri5i~vvs-8CRrt9HGO6-WAs4DKfUV4Qw9Ihm3SLrHMZxxnFxh5ldOGQIioGRJDd4p7iH40IwlnqUveulWlKXUg5Hk83YzSrPgV3f0YLwW1JotwPfGysjU83qLysDuHKvHgYnKU~rw9CXA6UQM~~yPjm1pkW4-eFCHqtEIqX1S2zExbBgf-Jxbh4vP5MJj8jGu190juDu7TzqAghmGkJ8u2Yg6hz7JEJp0-vQE~ROxbPV5O7gjd-6sC9hVTt-7qnMo-KBgNLHvac13if-ERSE89WgwPfBr0ks~VPMblJto156U6IgyXzOaRO~j3Kaef0ZlHID63LzXBw__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA (accessed on 25 April 2021).

- Broos, T.; Verbert, K.; Langie, G.; Van Soom, C.; De Laet, T. Small data as a conversation starter for learning analytics. J. Res. Innov. Teach. Learn. 2017, 10, 94–106. [Google Scholar] [CrossRef]

- Berland, M.; Baker, R.S.; Blikstein, P. Educational data mining and learning analytics: Applications to constructionist research. Technol. Knowl. Learn. 2014, 19, 205–220. [Google Scholar] [CrossRef]

- Duval, E. Learning Analytics and Educational Data Mining. Available online: https://www.google.com.hk/url?sa=t&rct=j&q=&esrc=s&source=web&cd=&ved=2ahUKEwihsda2zqXwAhVLM94KHVLvC6IQFjAGegQIChAD&url=https%3A%2F%2Fwww.upenn.edu%2Flearninganalytics%2Fryanbaker%2FLAKs%2520reformatting%2520v2.pdf&usg=AOvVaw2cNyNbifMEbNFMbuTKVofa (accessed on 25 April 2021).

- Williamson, B. Digital education governance: Data visualization, predictive analytics, and ‘real-time’ policy instruments. J. Educ. Policy 2016, 31, 123–141. [Google Scholar] [CrossRef]

- Lupton, D.; Williamson, B. The datafied child: The dataveillance of children and implications for their rights. New Media Soc. 2017, 19, 780–794. [Google Scholar] [CrossRef]

- Amo, D.; Alier, M.; García-Peñalvo, F.J.; Fonseca, D.; Casañ, M.J. Protected Users: A Moodle Plugin To Improve Confidentiality and Privacy Support through User Aliases. Sustainability 2020, 12, 2548. [Google Scholar] [CrossRef]

- Amo, D.; Alier, M.; García-Peñalvo, F.J.; Fonseca, D.; Casany, M.J. GDPR security and confidentiality compliance in LMS’ a problem analysis and engineering solution proposal. In Proceedings of the ACM International Conference Proceeding Series; Conde, M.Á., Rodríguez, F.J., Fernández, C., García-Peñalvo, F.J., Eds.; ACM: New York, NY, USA, 2019; pp. 253–259. [Google Scholar]

- Hoel, T.; Chen, W. Implications of the European Data Protection Regulations for Learning Analytics Design. Available online: https://www.estandard.no/files/LAEDM_Kanazawa_Sep2016_Hoel_Chen_final_w_header.pdf (accessed on 25 April 2021).

- Pardo, A.; Siemens, G. Ethical and privacy principles for learning analytics. Br. J. Educ. Technol. 2014, 45, 438–450. [Google Scholar] [CrossRef]

- Ethics & Privacy in Learning Analytics—A DELICATE Issue—LACE—Learning Analytics Community Exchange. Available online: https://learning-analytics.info/index.php/JLA/article/view/4912 (accessed on 25 April 2021).

- Drachsler, H.; Greller, W. Privacy and analytics: It’s a DELICATE issue a checklist for trusted learning analytics. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; ACM Press: New York, NY, USA, 2016; pp. 89–98. [Google Scholar]

- Chatti, M.A.; Dyckhoff, A.L.; Schroeder, U.; Thüs, H. A reference model for learning analytics. Int. J. Technol. Enhanc. Learn. 2012, 4, 318–331. [Google Scholar] [CrossRef]

- Lang, C.; Siemens, G.; Wise, A.; Gasevic, D. Handbook of Learning Analytics. Available online: https://www.solaresearch.org/publications/hla-17/ (accessed on 25 April 2021).

- Amo, D.; Torres, R.; Canaleta, X.; Herrero-Martín, J.; Rodríguez-Merino, C.; Fonseca, D. Seven Principles to Foster Privacy and Security in Educational Tools: Local Educational Data Analytics. Available online: https://dl.acm.org/doi/abs/10.1145/3434780.3436637 (accessed on 25 April 2021).

- Amo, D.; Fonseca, D.; Alier, M.; García-Peñalvo, F.J.; Casañ, M.J.; Alsina, M. Personal Data Broker: A Solution to Assure Data Privacy in EdTech. In Proceedings of the HCII: International Conference on Human-Computer Interaction. Learning and Collaboration Technologies, Designing Learning Experiences (LNCS), Orlando, FL, USA, 26–31 July 2019; Zaphiris, P., Ioannou, A., Eds.; Springer: Cham, Switzerland; Volume 11590, pp. 3–14. [Google Scholar]

- Amo, D.; Alier, M.; García-Peñalvo, F.; Fonseca, D.; Casañ, M.J. Privacidad, seguridad y legalidad en soluciones educativas basadas en Blockchain: Una Revisión Sistemática de la Literatura. RIED Rev. Iberoam. Educ. Distancia 2020, 23, 213. [Google Scholar] [CrossRef]

- Gong, C.; Liu, J.; Zhang, Q.; Chen, H.; Gong, Z. The characteristics of cloud computing. In Proceedings of the International Conference on Parallel Processing Workshops, San Diego, CA, USA, 13–16 September 2010; pp. 275–279. [Google Scholar]

- Daniel, S.J. Education and the COVID-19 pandemic. Prospects 2020, 49, 91–96. [Google Scholar] [CrossRef]

- Prat, J.; Llorens, A.; Alier, M.; Salvador, F.; Amo, D. Impact of Covid-19 on UPC’s Moodle platform and ICE’s role. Available online: https://dl.acm.org/doi/10.1145/3434780.3436687 (accessed on 28 April 2021).

- Corell, A.; García-Peñalvo, F.J. COVID-19: La Encerrona que Transformó las Universidades en Virtuales. Available online: https://repositorio.grial.eu/handle/grial/2182 (accessed on 28 April 2021).

- EP and the CEU Regulation (EU) 2016/679 GDPR. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 (accessed on 28 April 2021).

- Mandinach, E.B.; Gummer, E.S. A Systemic View of Implementing Data Literacy in Educator Preparation. Educ. Res. 2013, 42, 30–37. [Google Scholar] [CrossRef]

- Nielsen, J. 10 Usability Heuristics for User Interface Design. Available online: https://www.nngroup.com/articles/ten-usability-heuristics/ (accessed on 28 April 2021).

- Distante, D.; Villa, M.; Sansone, N.; Faralli, S. MILA: A SCORM-compliant interactive learning analytics tool for Moodle. In Proceedings of the IEEE 20th International Conference on Advanced Learning Technologies (ICALT 2020), Tartu, Estonia, 6–9 July 2020; pp. 169–171.

- Amo, D. JSMLA GitHub Repository. Available online: https://github.com/danielamof/jsmla (accessed on 1 December 2020).

- Troussas, C.; Krouska, A.; Sgouropoulou, C.; Voyiatzis, I. Ensemble learning using fuzzy weights to improve learning style identification for adapted instructional routines. Entropy 2020, 22, 735.

- Hines, A. Using Timelines to Enhance Comprehension. Available online: https://www.readingrockets.org/article/using-timelines-enhance-comprehension#:~:text=Botheducatorsandparentscan,significance(Moline%2C1995) (accessed on 5 February 2021).

- University of Leicester Numeracy Skills. Available online: https://www.le.ac.uk/oerresources/ssds/numeracyskills/page_53.htm (accessed on 5 February 2021).

- Pharmacoepidemiological Research on Outcomes of Therapeutics by a European Consortium (PROTECT) Visualisations—Tables. Available online: https://protectbenefitrisk.eu/tables.html#:~:text=Tables (accessed on 5 February 2021).

- Villegas, E.; Gómez, P. jsMLA Heuristic Report. Available online: https://lasalleuniversities-my.sharepoint.com/:b:/g/personal/pablo_gp_salle_url_edu/EZSyl5hGBhhFt10hT9Sv1W4BU18lpErNrhISakkyxmEWyA?e=l2F3gx (accessed on 25 March 2021).

- Amo, D. Call to Teachers across Social Media. Available online: https://twitter.com/danielamof/status/1353992372670107654?s=20 (accessed on 25 March 2021).

- Post | Feed | LinkedIn. Available online: https://www.linkedin.com/feed/update/urn:li:activity:6763045426766606337/?updateEntityUrn=urn%3Ali%3Afs_feedUpdate%3A%28V2%2Curn%3Ali%3Aactivity%3A6763045426766606337%29 (accessed on 25 March 2021).

- Amo, D.; Gómez, P.; Cea, S.; Marie, J.N. JSMLA Validation Tool. Available online: https://docs.google.com/forms/d/e/1FAIpQLSetPbr9IH4MLE9hZ7VyUO6EYao0HFDdMSU1MX7xPU0FpDun6A/viewform (accessed on 25 March 2021).

- El Haji, E.; Mohamed, M.; Harzli, E.L. Using KNN Method for Educational and Vocational Guidance. Int. J. Comput. Appl. 2014, 100, 975–8887.

- Ministerio de la Presidencia. Real Decreto 1112/2018, de 7 de Septiembre, Sobre Accesibilidad de los Sitios web y Aplicaciones para Dispositivos Móviles del Sector Público [Royal Decree 1112/2018, of 7 September, on the Accessibility of Public Sector Websites and Mobile Applications]. Available online: https://www.boe.es/diario_boe/txt.php?id=BOE-A-2018-12699 (accessed on 1 September 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).