Prioritising CAP Intervention Needs: An Improved Cumulative Voting Approach

Abstract

1. Introduction

2. Theoretical Framework


- Nominal scale techniques sort items into groups according to perceived importance, such as ‘very important’ or ‘somewhat important’. Although nominal scales are usually easy to use, they cannot distinguish the relative importance of items within the same group. Popular nominal-level techniques include the ‘Top 10’ and MoSCoW methods (MoSCoW stands for Must have, Should have, Could have, and Won’t have).


- Ordinal scale techniques produce a list of items ranked by relative importance. They show which items matter more than others, but not by how much. Numerical assignment and Wiegers’ method are two examples of ordinal scale techniques.


- Interval scales rank items and reveal information on the size of the difference between the ordered options. Interval-level techniques are not commonly employed; however, one notable example is the requirement uncertainty prioritisation approach (RUPA).


- Ratio scales have a true zero that represents the complete absence of the measured quantity, so all values can be multiplied or divided by a positive constant without changing the information the scale conveys. These scales produce an ordered list of items in which the ratio between each pair of values is known. Items are typically evaluated on a 0–100 or similar scale, which makes it easy to quantify both their absolute and their relative importance. Because they can order items, measure intervals, relative distances and ratios between them, and support refined statistical computations, ratio scale techniques are regarded as the most valuable category of prioritisation techniques [15,16].
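To make the difference between the scale levels concrete, the following Python sketch starts from one hypothetical ratio-scale allocation (100 points split across four needs, as in cumulative voting) and derives the ordinal and nominal views from it. The need labels N1 to N4, the point values and the grouping cut-offs are illustrative assumptions, not data from this study.

```python
from statistics import mean, median, mode

# Hypothetical ratio-scale data: one participant splits 100 points across
# four needs, as in cumulative voting ("100 $").
scores = {"N1": 50, "N2": 25, "N3": 15, "N4": 10}

# Ordinal view: keep only the order (rank 1 = most important).
ranking = sorted(scores, key=scores.get, reverse=True)
ranks = {item: position + 1 for position, item in enumerate(ranking)}


# Nominal view: collapse the points into MoSCoW-like groups.
# The cut-offs (40 and 20 points) are illustrative assumptions.
def group(points: int) -> str:
    if points >= 40:
        return "Must"
    if points >= 20:
        return "Should"
    return "Could"


groups = {item: group(points) for item, points in scores.items()}

# Ratio view: the true zero makes "N1 is twice as important as N2" meaningful.
ratio_n1_n2 = scores["N1"] / scores["N2"]

# Typical summary statistic at each level (cf. the Statistics column of the comparison table).
print(mode(list(groups.values())))   # nominal: most frequent group
print(median(list(ranks.values())))  # ordinal: median rank
print(mean(list(scores.values())))   # ratio: mean points per need
print(ratio_n1_n2)
```

The ranks differ by one step both for the 25-point gap between N1 and N2 and for the 5-point gap between N3 and N4, which is exactly the information an ordinal scale discards; only the ratio view supports a statement such as ‘N1 is twice as important as N2’.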

3. Main Methodological Issues and a Proposed Improved Methodology

- The process should be ‘transparent’, both in the expression of individual preferences and in how those preferences will be aggregated to compute the final ranking;

- The process should be ‘easy to understand and execute’, yet still allow for expressive votes. Expressing preferences in complex domains is cognitively demanding, so the prioritisation method itself should not add to the decision maker’s burden;

- The process should be ‘flexible’ enough to yield coherent results with small, medium, and large sets of items and with varying numbers of participants;

- The process should be ‘software-based’, so that stakeholders in different locations (in Italy, the regional administrations) can be involved. Methods that require face-to-face interaction are impractical and not recommended, especially during the COVID-19 pandemic;

- The process should be able to ‘run iteratively’. Prioritising the needs specified in the CAP is a complex process that requires multiple rounds to refine individual evaluations, so the method must not be so time-consuming that it cannot be repeated.
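A minimal sketch of a transparent aggregation rule for cumulative voting, assuming that each participant spends a fixed budget of points and that the final ranking is simply the sum of points per need. The budget of 100 points, the ballots and the function names `validate` and `aggregate` are hypothetical; the aggregation used in this study may differ.

```python
from collections import Counter

BUDGET = 100  # hypothetical number of points each participant must spend


def validate(ballot: dict[str, int], budget: int = BUDGET) -> None:
    """Reject ballots with negative allocations or that do not spend exactly the budget."""
    if any(points < 0 for points in ballot.values()):
        raise ValueError("negative allocations are not allowed")
    spent = sum(ballot.values())
    if spent != budget:
        raise ValueError(f"ballot spends {spent} points, expected {budget}")


def aggregate(ballots: list[dict[str, int]]) -> list[tuple[str, int]]:
    """Sum the points per need across all valid ballots and rank the needs."""
    totals: Counter = Counter()
    for ballot in ballots:
        validate(ballot)
        totals.update(ballot)  # Counter.update adds the points to the running totals
    # Highest total first; ties are broken alphabetically so the ranking is reproducible.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


ballots = [
    {"N1": 60, "N2": 30, "N3": 10},
    {"N1": 20, "N2": 50, "N3": 30},
    {"N2": 40, "N3": 60},
]
print(aggregate(ballots))  # [('N2', 120), ('N3', 100), ('N1', 80)]
```

Validating every ballot before summing keeps the rule auditable, and the deterministic tie-break means that rerunning a round on the same ballots always yields the same ranking.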

4. Results

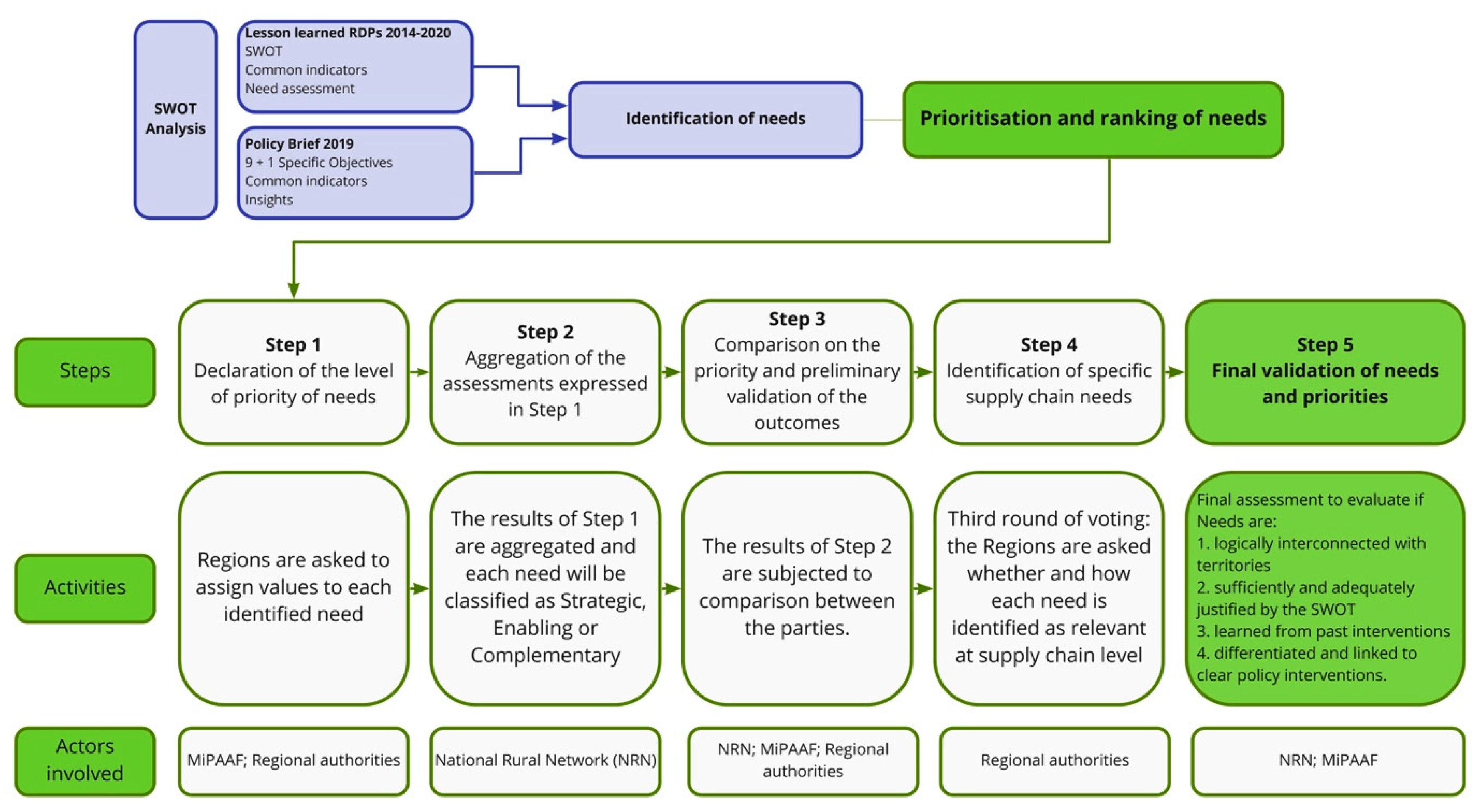

4.1. The Analysis of Results and Criticism from Round I

4.2. Arrangements and Voting Shape in Round II

- the implementation and strengthening of telematics and digital infrastructure to favour the spread of broadband and ultra-broadband in rural areas;

- the increase in the attractiveness of the territories and support for sustainable tourism;

- the raising of the quality of life in rural areas by improving the quality and accessibility of services to the population and businesses, in order to stop depopulation and support entrepreneurship.

5. Discussion: Policy Implications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- European Commission. Proposal for a Regulation of the European Parliament and of the Council establishing Rules on Support for Strategic Plans to be Drawn Up by Member States under the Common Agricultural Policy (CAP Strategic Plans) and Financed by the European Agricultural Guarantee Fund (EAGF) and by the European Agricultural Fund for Rural Development (EAFRD); COM/2018/392 final - 2018/0216 (COD); European Commission: Brussels, Belgium, 2018.

- Carey, M. The Common Agricultural Policy’s New Delivery Model Post-2020: National Administration Perspective. EuroChoices 2019, 18, 11–17.

- Massot, A.; Negre, F. Towards the Common Agricultural Policy beyond 2020: Comparing the Reform Package with the Current Regulations; Directorate-General for Internal Policies; European Parliament: Brussels, Belgium, 2018; pp. 1–82.

- Matthews, A. Evaluating the Legislative Basis for the New CAP Strategic Plans. Available online: http://capreform.eu/evaluating-the-legislative-basis-for-the-new-cap-strategic-plans/ (accessed on 15 February 2021).

- Metta, M. How Transparent and Inclusive is the Design Process of the National CAP Strategic Plans? Available online: https://www.arc2020.eu/how-transparent-and-inclusive-is-the-design-process-of-the-national-cap-strategic-plans/ (accessed on 19 February 2021).

- Angeli, S.; Cagliero, R.; De Franco, R.; Mazzocchi, G.; Monteleone, A.; Tarangioli, S. La definizione delle esigenze nel Piano Strategico della PAC 2023–2027; Working Document, Rete Rurale Nazionale 2014–2020; Mipaaf: Roma, Italy, 2020; preprint.

- ARC. CAP Reform Post 2020: Lost in Ambition? Final Report; ARC: Brussels, Belgium, 2020; pp. 1–72.

- Regulation (EU) 2020/2220 of the European Parliament and of the Council of 23 December 2020 Laying Down Certain Transitional Provisions for Support from the European Agricultural Fund for Rural Development (EAFRD) and from the European Agricultural Guarantee Fund (EAGF) in the Years 2021 and 2022 and Amending Regulations (EU) No 1305/2013, (EU) No 1306/2013 and (EU) No 1307/2013 as Regards Resources and Application in the Years 2021 and 2022 and Regulation (EU) No 1308/2013 as Regards Resources and the Distribution of Such Support in Respect of the Years 2021 and 2022; Council of the European Union: Brussels, Belgium, 2020.

- Juristo, N.; Vegas, S.; Solari, M.; Abrahão, S.; Ramos, I. A process for managing interaction between experimenters to get useful similar replications. Inf. Softw. Technol. 2013, 55, 215–225.

- Phillips, L.D.; Costa, C.A.B. Transparent prioritisation, budgeting and resource allocation with multi-criteria decision analysis and decision conferencing. Ann. Oper. Res. 2007, 154, 51–68.

- Smaoui, H.; Lepelley, D. Le système de vote par note à trois niveaux: Étude d’un nouveau mode de scrutin. Rev. D’économie Polit. 2013, 123, 827–850.

- Marcatto, F. Dot Voting: Because One Vote Is Not Enough. Available online: https://mindiply.com/blog/post/dot-voting-because-one-vote-is-not-enough (accessed on 15 February 2021).

- Kato, T.; Asa, Y.; Owa, M. Positionality-Weighted Aggregation Methods on Cumulative Voting. arXiv 2020, arXiv:2008.08759v1. Available online: https://arxiv.org/abs/2008.08759v1 (accessed on 19 February 2021).

- Quarfoot, D.; von Kohorn, D.; Slavin, K.; Sutherland, R.; Goldstein, D.; Konar, E. Quadratic voting in the wild: Real people, real votes. Public Choice 2017, 172, 283–303.

- Tufail, H.; Qasim, I.; Faisal Masood, M.; Tanvir, S.; Haider Butt, W. Towards the selection of Optimum Requirements Prioritization Technique: A Comparative Analysis. In Proceedings of the 5th International Conference on Information Management (ICIM 2019), Cambridge, UK, 24–27 March 2019; IEEE Digital Library; pp. 227–231.

- Achimugu, P.; Selamat, A.; Ibrahim, R.; Mahrin, M.N. A systematic literature review of software requirements prioritization research. Inf. Softw. Technol. 2014, 56, 568–585.

- Saaty, T.L. What is the analytic hierarchy process? In Mathematical Models for Decision Support; Mitra, G., Ed.; NATO ASI Series, F48; Springer: Berlin, Germany, 1988; pp. 109–121.

- Saaty, T.L.; Vargas, L.G. The analytic network process. In Decision Making with the Analytic Network Process; Springer: Boston, MA, USA, 2013; pp. 1–40.

- Karlsson, J.; Wohlin, C.; Regnell, B. An evaluation of methods for prioritizing software requirements. Inf. Softw. Technol. 1998, 39, 939–947.

- Vestola, M. A Comparison of Nine Basic Techniques for Requirements Prioritization; Helsinki University of Technology: Helsinki, Finland, 2010; pp. 1–8.

- Perini, A.; Ricca, F.; Susi, A. Tool-supported requirements prioritization: Comparing the AHP and CBRank methods. Inf. Softw. Technol. 2009, 51, 1021–1032.

- Ahl, V. An Experimental Comparison of Five Prioritization Methods: Investigating Ease of Use, Accuracy and Scalability. Master’s Thesis, School of Engineering, Blekinge Institute of Technology, Ronneby, Sweden, August 2005.

- Leffingwell, D.; Widrig, D. Managing Software Requirements: A Use Case Approach, 2nd ed.; Addison-Wesley: Boston, MA, USA, 2003; p. 540. ISBN 978-0321122476.

- Bhagat, S.; Brickley, J.A. Cumulative Voting: The Value of Minority Shareholder Voting Rights. J. Law Econ. 1984, 27, 339–365.

- Cooper, D.; Zillante, A. A comparison of cumulative voting and generalized plurality voting. Public Choice 2010, 150, 363–383.

- Palander, T.; Laukkanen, S. A Vote-Based Computer System for Stand Management Planning. Int. J. For. Eng. 2006, 17, 13–20.

- Hoover, A.; Goldbaum, M.H. Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans. Med. Imaging 2003, 22, 951–958.

- Heikkilä, V.; Jadallah, A.; Rautiainen, K.; Ruhe, G. Rigorous support for flexible planning of product releases: A stakeholder-centric approach and its initial evaluation. In Proceedings of the 43rd Hawaii International Conference on System Sciences, Honolulu, HI, USA, 5–8 January 2010; IEEE Digital Library; pp. 1–10.

- Knapp, J.; Zeratsky, J.; Kowitz, B. Sprint: How to Solve Big Problems and Test New Ideas in just Five Days, 1st ed.; Simon and Schuster: New York, NY, USA, 2016; p. 274. ISBN 978-1501121746.

- Skowron, P.; Slinko, A.; Szufa, S.; Talmon, N. Participatory Budgeting with Cumulative Votes. arXiv 2020, arXiv:2009.02690v1. Available online: https://arxiv.org/abs/2009.02690 (accessed on 19 February 2021).

- Asch, S.E. Effects of group pressure upon the modification and distortion of judgments. In Groups, Leadership and Men; Guetzkow, H., Ed.; Carnegie Press: Pittsburgh, PA, USA, 1951; pp. 177–190.

- Milgram, S. Behavioral Study of Obedience. J. Abnorm. Soc. Psychol. 1963, 67, 371–378.

- Kohavi, R.; Henne, R.M.; Sommerfield, D. Practical Guide to Controlled Experiments on the Web: Listen to Your Customers not to the Hippo. In Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Jose, CA, USA, 12–15 August 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 959–967.

- Cialdini, R.B. Influence: Science and Practice, 1st ed.; Pearson Education Inc.: London, UK, 1984.

- Nadeau, R.; Cloutier, E.; Guay, J.-H. New Evidence about the Existence of a Bandwagon Effect in the Opinion Formation Process. Int. Polit. Sci. Rev. 1993, 14, 203–213.

- Van Erkel, P.F.; Thijssen, P. The first one wins: Distilling the primacy effect. Elect. Stud. 2016, 44, 245–254.

- Svahnberg, M.; Karasira, A. A Study on the Importance of Order in Requirements Prioritisation. In Proceedings of the 3rd International Workshop on Software Product Management (IWSPM 2009), Atlanta, GA, USA, 1 September 2009; IEEE Digital Library; pp. 35–41.

- Marcatto, F. How to Make Better Group Decision with Dot Voting. Available online: https://mindiply.com/blog/post/how-to-make-better-group-decision-with-dot-voting (accessed on 15 February 2021).

- Gibbons, S. Dot Voting: A Simple Decision-Making and Prioritizing Technique. Available online: https://www.nngroup.com/articles/dot-voting/ (accessed on 15 February 2021).

- Dennison, M. Voting with Dots. Thread from a Discussion on the Electronic Discussion on Group Facilitation. Available online: https://www.albany.edu/cpr/gf/resources/Voting_with_dots.html (accessed on 19 February 2021).

- Weaver, R.G.; Farrell, J.D. Managers as Facilitators: A Practical Guide to Getting Work Done in a Changing Workplace; Berrett-Koehler Publishers Inc.: San Francisco, CA, USA, 1997; p. 248. ISBN 978-1576750544.

- Amrhein, J. Dot Voting Tips. Michigan State University Extension. Available online: https://www.canr.msu.edu/news/dot-voting-tips (accessed on 15 February 2021).

- Barykin, S.Y.; Kapustina, I.V.; Kirillova, T.V.; Yadykin, V.K.; Konnikov, Y.A. Economics of Digital Ecosystems. J. Open Innov. Technol. Mark. Complex. 2020, 6, 124.

- Chatzipetrou, P.; Angelis, L.; Rovegård, P.; Wohlin, C. Prioritization of Issues and Requirements by Cumulative Voting: A Compositional Data Analysis Framework. In Proceedings of the 36th EUROMICRO Conference on Software Engineering and Advanced Applications (SEAA 2010), Lille, France, 1–3 September 2010; IEEE Digital Library; pp. 361–370.

- Bolli, M.; Cagliero, R.; Cisilino, F.; Cristiano, S.; Licciardo, L. L’analisi SWOT per la costruzione delle strategie regionali e nazionale della PAC post-2020. In Working Document Rete Rurale Nazionale 2014–2020; Mipaaf: Roma, Italy, 2019; p. 54.

- European Commission. Commission Recommendations for Italy’s CAP Strategic Plan; SWD(2020) 396 final; European Commission: Brussels, Belgium, 2020.

- Everitt, B. The Cambridge Dictionary of Statistics, 1st ed.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 1998; p. 410. ISBN 978-0521766999.

- Upton, G.; Cook, I. (Eds.) Understanding Statistics; Oxford University Press: New York, NY, USA; Oxford, UK, 1996; p. 55. ISBN 978-0199143917.

- Diceman, J. Dotmocracy Handbook; Version 2.2; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2010; p. 49. ISBN 978-1451527087.

- Erjavec, E.; Lovec, M.; Šumrada, T. New CAP Delivery Model, Old Issues. Intereconomics 2020, 55, 112–119.

- Matthews, A. Developing CAP Strategic Plans. Available online: http://capreform.eu/developing-cap-strategic-plans/ (accessed on 15 February 2021).

- European Court of Auditors. Rural Development Programming: Less Complexity and More Focus on Results Needed. Available online: https://www.eca.europa.eu/Lists/ECADocuments/SR17_16/SR_RURAL_DEV_EN.pdf (accessed on 31 March 2021).

- Linstone, H.A.; Turoff, M. (Eds.) The Delphi Method, Techniques and Applications; Addison-Wesley Educational Publishers Inc.: Boston, MA, USA, 2002; p. 621.

- European Court of Auditors. Future of the CAP (Briefing Paper). Available online: https://www.eca.europa.eu/Lists/ECADocuments/Briefing_paper_CAP/Briefing_paper_CAP_EN.pdf (accessed on 31 March 2021).

- Erjavec, E.; Lovec, M.; Juvančič, L.; Šumrada, T.; Rac, I. Research for AGRI Committee: The CAP Strategic Plans beyond 2020. In Assessing the Architecture and Governance Issues in Order to Achieve the EU-Wide Objectives; Study Requested by the AGRI Committee; European Parliament, Policy Department for Structural and Cohesion Policies: Brussels, Belgium, 2018; p. 52.

- NUVAL Piemonte. Programma di Sviluppo Rurale 2014–2020 della Regione Piemonte. Valutazione ex Ante: Rapporto Finale; Regione: Piemonte, Italy, 2015; p. 168.

| Scale | Examples | Complexity | Ease of Use | Accuracy | Statistics |
|---|---|---|---|---|---|
| Nominal Scale | Top 10 | Very easy | Yes | Yes | Mode and chi-square |
| | MoSCoW | Easy | Yes | No | |
| Ordinal Scale | Numerical assignment | Easy | Yes | Yes | Median and percentile |
| | Ranking | Easy | N/A | N/A | |
| | Game planning | Easy | Yes | Yes | |
| | Wiegers’ method (WM) | Complex | Yes | Yes | |
| Interval Scale | Requirement uncertainty prioritisation approach (RUPA) | Complex | N/A | N/A | Mean, standard deviation, correlation, regression, variance |
| Ratio Scale | Value-oriented prioritisation | Complex | Yes | Yes | All forms |
| | Analytic hierarchy process (AHP) | Very complex | Yes | Yes | |
| | Cost value ranking | Easy | Yes | No | |
| | Cumulative voting (CV; 100 $) | Complex | Yes | Yes | |

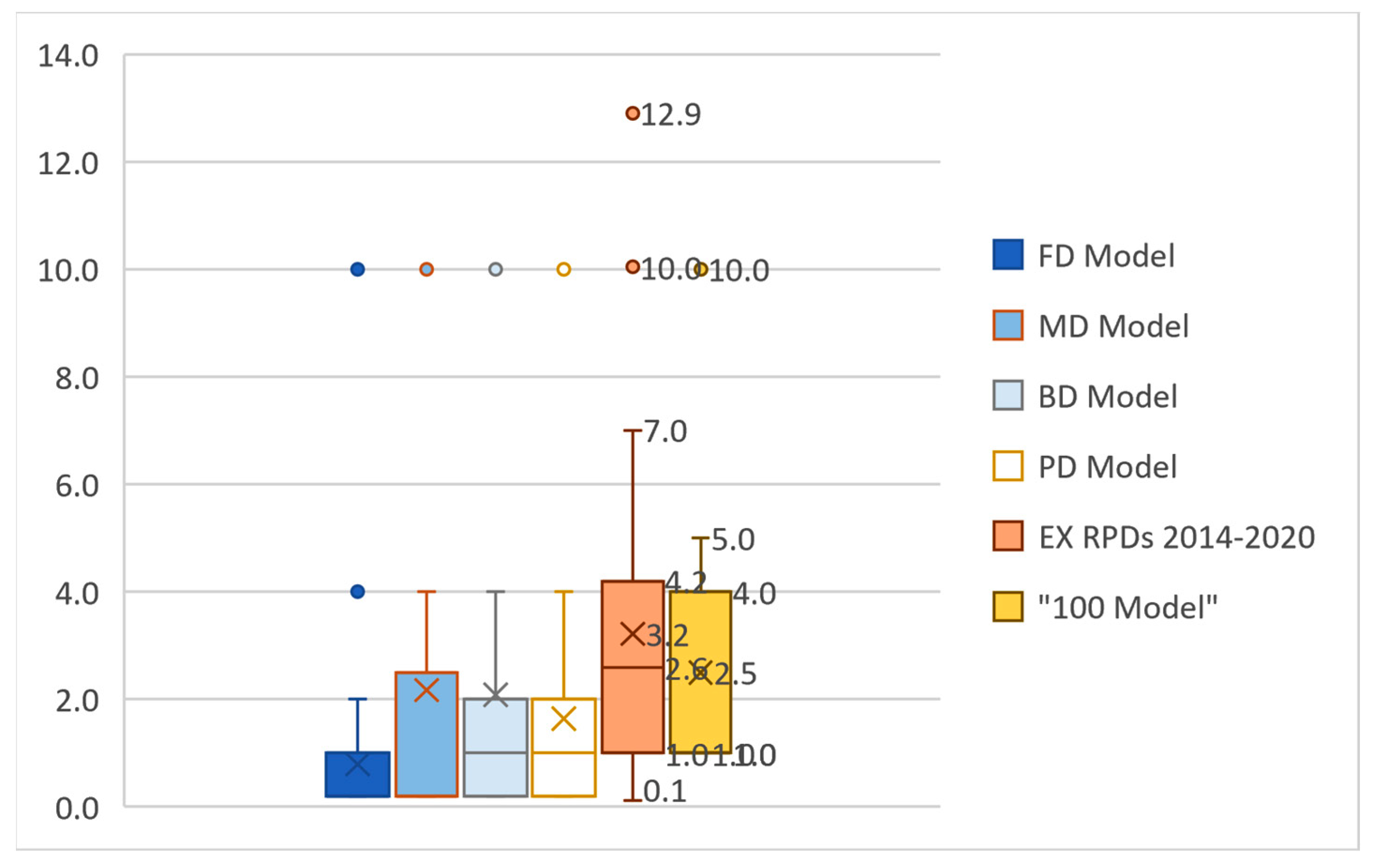

| Model | Total Dots (N) | Dots with “500” Value (N) | Dots with “200” Value (N) | Dots with “100” Value (N) | Dots with “50” Value (N) | Dots with “10” Value (N) |
|---|---|---|---|---|---|---|
| FD | 126 | 1 | 5 | 10 | 35 | 75 |
| MD | 61 | 3 | 7 | 11 | 15 | 25 |
| BD | 48 | 5 | 5 | 8 | 10 | 20 |
| PD | 46 | 6 | 5 | 5 | 5 | 25 |
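The dot counts in the table imply that all four models distribute the same total budget, only across different numbers of dots. The short Python check below multiplies each count by its dot value; the `DOTS` dictionary simply transcribes the table, and the helper name `summarise` is illustrative.

```python
DOT_VALUES = (500, 200, 100, 50, 10)

# Dot counts per model, transcribed from the table (same column order as DOT_VALUES).
DOTS = {
    "FD": (1, 5, 10, 35, 75),
    "MD": (3, 7, 11, 15, 25),
    "BD": (5, 5, 8, 10, 20),
    "PD": (6, 5, 5, 5, 25),
}


def summarise(counts: tuple[int, ...]) -> tuple[int, int]:
    """Return (total number of dots, total points) for one model."""
    total_dots = sum(counts)
    total_points = sum(n * value for n, value in zip(counts, DOT_VALUES))
    return total_dots, total_points


for model, counts in DOTS.items():
    total_dots, total_points = summarise(counts)
    print(f"{model}: {total_dots} dots, {total_points} points")
```

Every model yields 5,000 points in total: FD spreads them over 126 dots and PD over only 46, so the models differ in how finely the budget is split rather than in its overall size.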

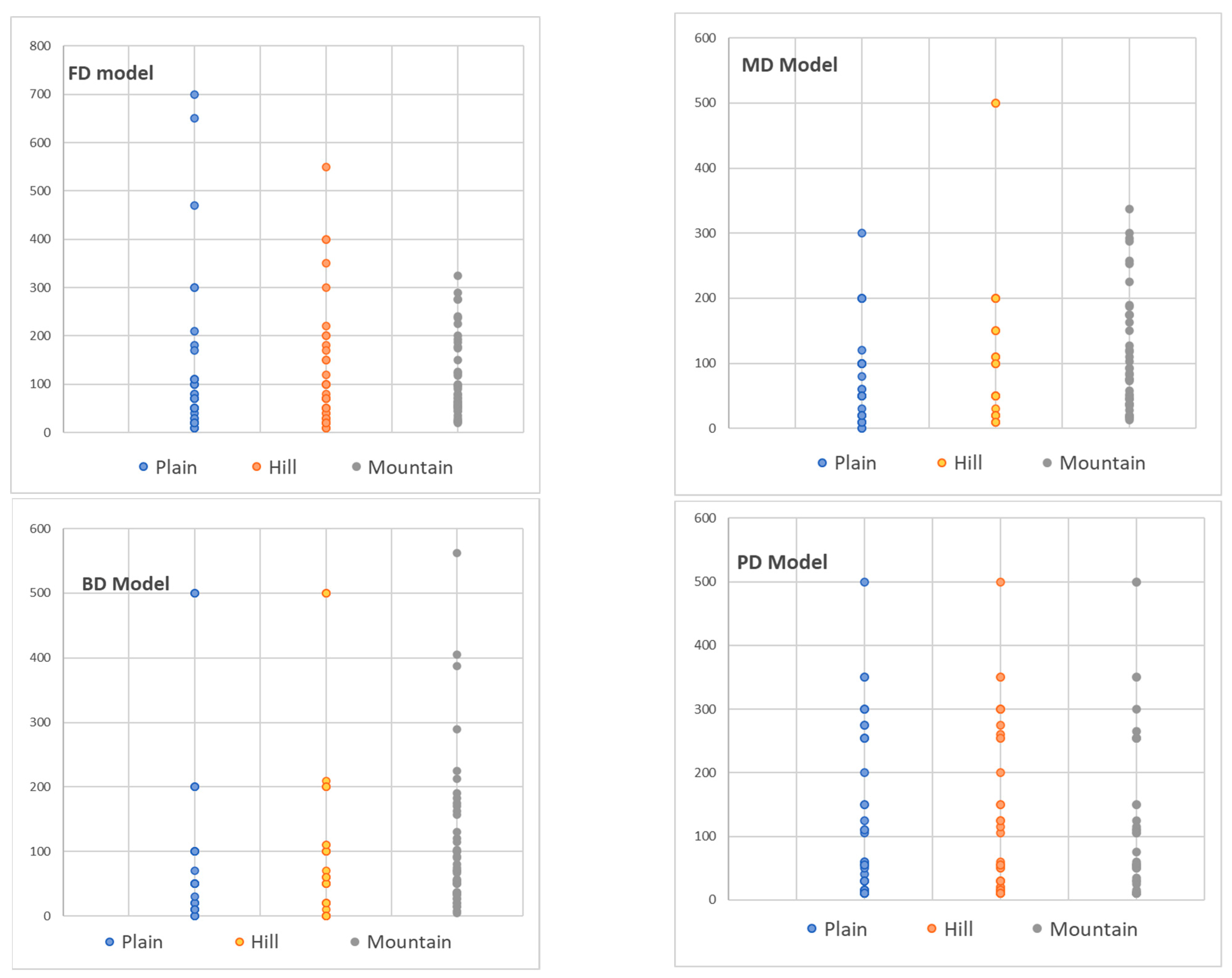

| Model | Area | Range | Average | Standard Deviation | Coefficient of Variation | IQR |
|---|---|---|---|---|---|---|
| FD | Plain | 690 | 112.73 | 153.22 | 1.36 | 82.50 |
| | Hill | 540 | 112.73 | 121.22 | 1.08 | 120.00 |
| | Mountain | 305 | 112.05 | 83.74 | 0.75 | 123.13 |
| MD | Plain | 300 | 78.41 | 70.45 | 0.90 | 80.00 |
| | Hill | 490 | 112.50 | 124.40 | 1.11 | 130.00 |
| | Mountain | 325 | 112.90 | 89.83 | 0.80 | 131.88 |
| BD | Plain | 500 | 113.41 | 152.09 | 1.34 | 82.50 |
| | Hill | 500 | 113.41 | 152.89 | 1.35 | 92.50 |
| | Mountain | 558 | 112.84 | 115.58 | 1.02 | 121.88 |
| PD | Plain | 490 | 114.43 | 127.33 | 1.11 | 198.75 |
| | Hill | 490 | 115.00 | 128.57 | 1.12 | 198.75 |
| | Mountain | 490 | 115.00 | 131.22 | 1.14 | 136.25 |
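Statistics of the kind shown in the table (range, average, standard deviation, coefficient of variation and IQR) can be computed from a list of scores with Python's standard `statistics` module. This sketch assumes the sample standard deviation and Python's default (‘exclusive’) quartile method; the paper does not state which conventions were used, and other quartile definitions give slightly different IQR values. The input scores and the helper name `describe` are hypothetical.

```python
from statistics import mean, quantiles, stdev


def describe(scores: list[float]) -> dict[str, float]:
    """Range, average, sample SD, coefficient of variation and IQR of a list of scores."""
    q1, _, q3 = quantiles(scores, n=4)  # default 'exclusive' method; other tools may differ
    avg = mean(scores)
    sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
    return {
        "range": max(scores) - min(scores),
        "average": avg,
        "sd": sd,
        "cv": sd / avg,
        "iqr": q3 - q1,
    }


# Hypothetical scores, for illustration only.
example = describe([10, 50, 60, 100, 110, 200, 500])
print(example["range"], example["iqr"])  # 490 150.0

# The coefficient of variation column is SD / average, e.g. FD, Plain:
print(round(153.22 / 112.73, 2))  # 1.36
```

The last line is a consistency check against the table itself: dividing the standard deviation by the average reproduces the coefficient of variation reported for each row.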

| Model | Dots with Value “500” | Dots with Value “200” | Dots with Value “100” | Dots with Value “50” | Dots with Value “10” |
|---|---|---|---|---|---|
| Number of Dots | | | | | |
| FD Model | 1% | 4% | 8% | 28% | 60% |
| MD Model | 5% | 11% | 18% | 25% | 41% |
| BD Model | 10% | 10% | 17% | 21% | 42% |
| PD Model | 13% | 11% | 11% | 11% | 54% |
| Value of Dots | | | | | |
| FD Model | 10% | 20% | 20% | 35% | 15% |
| MD Model | 30% | 28% | 22% | 15% | 5% |
| BD Model | 50% | 20% | 16% | 10% | 4% |
| PD Model | 60% | 20% | 10% | 5% | 5% |
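These percentages can be derived directly from the dot counts in the earlier table of dots: the share by number divides each count by the model's total number of dots, and the share by value divides each count multiplied by its dot value by the model's 5,000-point total. The Python sketch below, with the counts transcribed and an illustrative helper named `shares`, reproduces both halves of the table; rounding to whole percentages is why some rows, such as FD by number, add up to 101%.

```python
DOT_VALUES = (500, 200, 100, 50, 10)

# Dot counts per model, transcribed from the table of dots (same order as DOT_VALUES).
DOTS = {
    "FD": (1, 5, 10, 35, 75),
    "MD": (3, 7, 11, 15, 25),
    "BD": (5, 5, 8, 10, 20),
    "PD": (6, 5, 5, 5, 25),
}


def shares(counts: tuple[int, ...]) -> tuple[list[int], list[int]]:
    """Percentage of dots and percentage of points held by each dot value, rounded to whole numbers."""
    points = [n * value for n, value in zip(counts, DOT_VALUES)]
    total_dots, total_points = sum(counts), sum(points)
    by_number = [round(100 * n / total_dots) for n in counts]
    by_value = [round(100 * p / total_points) for p in points]
    return by_number, by_value


for model, counts in DOTS.items():
    by_number, by_value = shares(counts)
    print(model, by_number, by_value)
```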

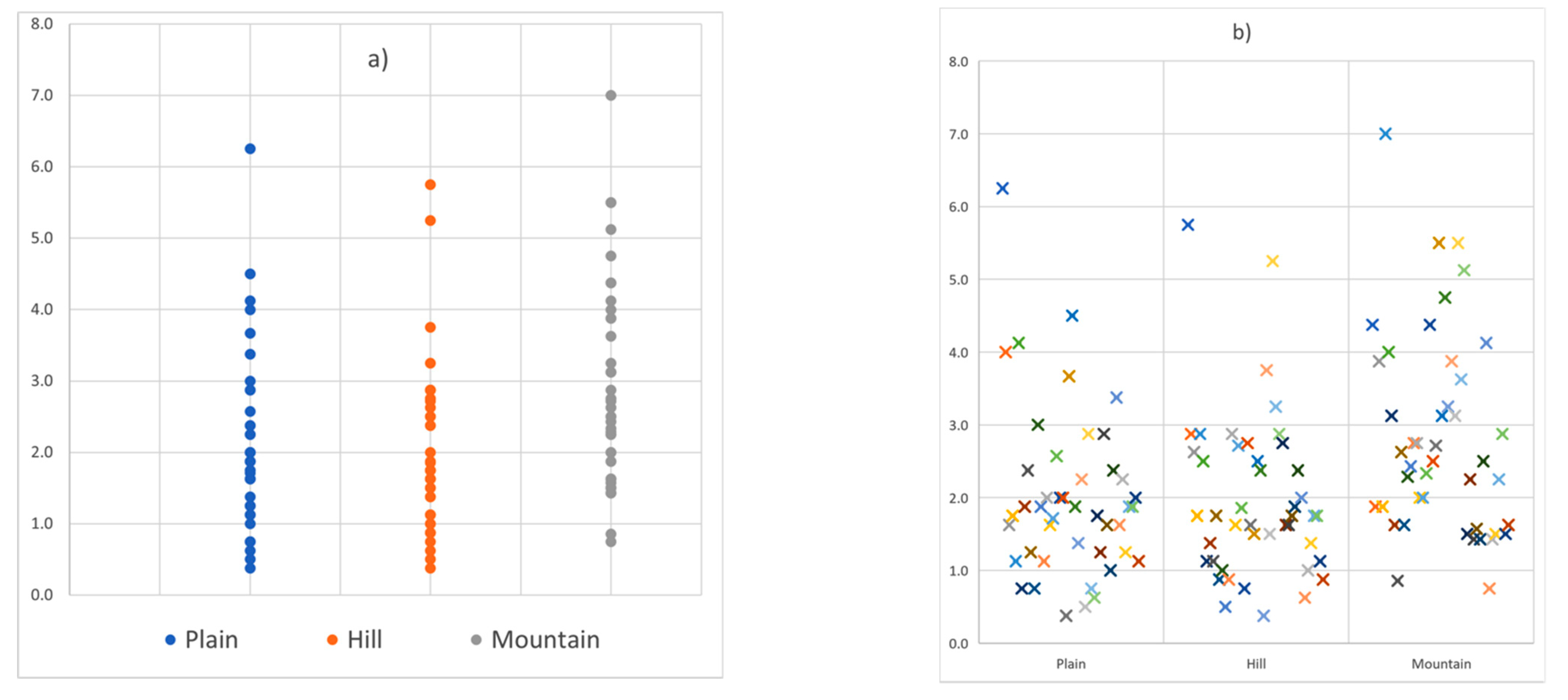

| Priority Class | Plain | Hill | Mountain |
|---|---|---|---|
| By number of votes | | | |
| Class 1 (high priority) | 11% | 11% | 23% |
| Class 2 (medium priority) | 31% | 39% | 49% |
| Class 3 (low priority) | 47% | 37% | 52% |
| By values | | | |
| Class 1 (high priority) | 12% | 13% | 19% |
| Class 2 (medium priority) | 35% | 45% | 39% |
| Class 3 (low priority) | 53% | 42% | 42% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cagliero, R.; Bellini, F.; Marcatto, F.; Novelli, S.; Monteleone, A.; Mazzocchi, G. Prioritising CAP Intervention Needs: An Improved Cumulative Voting Approach. Sustainability 2021, 13, 3997. https://doi.org/10.3390/su13073997
