Public Opinions about Online Learning during COVID-19: A Sentiment Analysis Approach

Abstract

1. Introduction

- RQ1: Were the extracted articles positive, negative, or neutral in sentiment?

- RQ2: Were the articles fact-based or opinion-based?

- RQ3: Is there a significant difference in people’s sentiments between the news articles and blogs?

2. Related Works

2.1. Web Scraping

2.2. Sentiment Analysis

3. Methodology

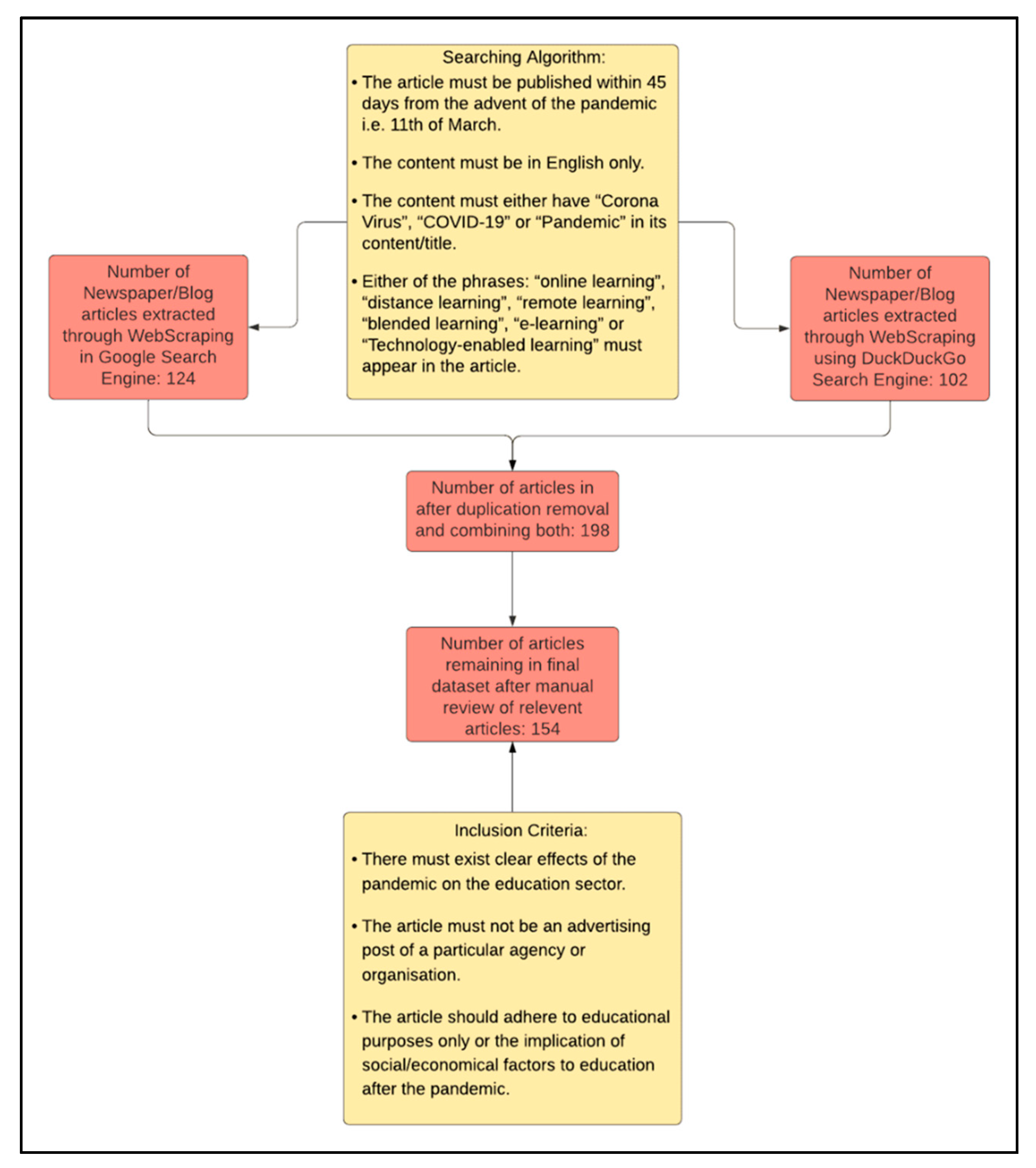

3.1. Dataset and Pre-Processing

- (a) Convert all text to lowercase.

- (b) Eliminate all URLs.

- (c) Remove all hashtags, user mentions, and emoji.

- (d) Remove all punctuation.

- (e) Remove all numbers.

- (f) Remove parentheses.
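The six pre-processing steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' script: the helper name `preprocess`, the regular expressions, and the emoji code-point ranges are assumptions, and step (f) is read as deleting the parenthesis characters rather than the text inside them. The steps run in a slightly different order from the list because URLs, hashtags, and mentions must be removed before punctuation; otherwise stripping `/`, `#`, and `@` would leave their fragments behind as ordinary words.

```python
import re
import string

# Assumed patterns; the exact rules used in the study are not published here.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
TAG_RE = re.compile(r"[#@]\w+")  # hashtags (#topic) and user mentions (@user)
# Approximate emoji coverage: pictograph/emoticon blocks plus misc symbols and dingbats
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
PAREN_RE = re.compile(r"[()]")
DIGIT_RE = re.compile(r"\d+")
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(text: str) -> str:
    """Apply steps (a) to (f) to one article (hypothetical helper)."""
    text = text.lower()                 # (a) lowercase
    text = URL_RE.sub(" ", text)        # (b) URLs, before punctuation is stripped
    text = TAG_RE.sub(" ", text)        # (c) hashtags and user mentions
    text = EMOJI_RE.sub(" ", text)      # (c) emoji
    text = PAREN_RE.sub(" ", text)      # (f) parenthesis characters only
    text = text.translate(PUNCT_TABLE)  # (d) ASCII punctuation
    text = DIGIT_RE.sub(" ", text)      # (e) numbers
    return " ".join(text.split())       # tidy leftover whitespace


print(preprocess("Online learning (Zoom) is GREAT!!! See https://example.com #COVID19 @teacher 2020 😀"))
# online learning zoom is great see
```

Python's `string.punctuation` already contains `(` and `)`, so step (d) alone would also cover step (f); the explicit step is kept only to mirror the list.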

3.2. Sentiment Analysis

- New polarity = (initial polarity of the word ‘great’) × (intensity of the word ‘very’)

- New subjectivity = (initial subjectivity of the word ‘great’) × (intensity of the word ‘very’)
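The modifier rule above can be written as a small function. This sketch assumes the rule is a plain multiplication followed by clipping, so that polarity stays in [-1, 1] and subjectivity in [0, 1]; it also assumes an intensity of 1.3 for "very", which should be checked against the lexicon actually used. The names `apply_intensifier` and `VERY_INTENSITY` are hypothetical. The example inputs are two sense scores of "great" taken from the sense table for that word.

```python
from typing import Tuple

VERY_INTENSITY = 1.3  # assumed intensity of "very"; verify against the lexicon in use


def apply_intensifier(
    polarity: float, subjectivity: float, intensity: float
) -> Tuple[float, float]:
    """Scale a word's scores by the intensity of a preceding modifier.

    Clipping keeps polarity in [-1, 1] and subjectivity in [0, 1]
    (an assumption about how out-of-range products are handled).
    """
    new_polarity = max(-1.0, min(1.0, polarity * intensity))
    new_subjectivity = max(0.0, min(1.0, subjectivity * intensity))
    return new_polarity, new_subjectivity


# Sense "relatively large in size or number or extent": (0.4, 0.2)
print(apply_intensifier(0.4, 0.2, VERY_INTENSITY))  # approximately (0.52, 0.26)
# Sense "very good": (1.0, 1.0); both products (1.3) are clipped to 1.0
print(apply_intensifier(1.0, 1.0, VERY_INTENSITY))  # (1.0, 1.0)
```

Clipping matters for strongly scored words: without it, "very" applied to an already maximal score would push polarity outside its defined range.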

4. Results and Discussion

4.1. Research Question 1

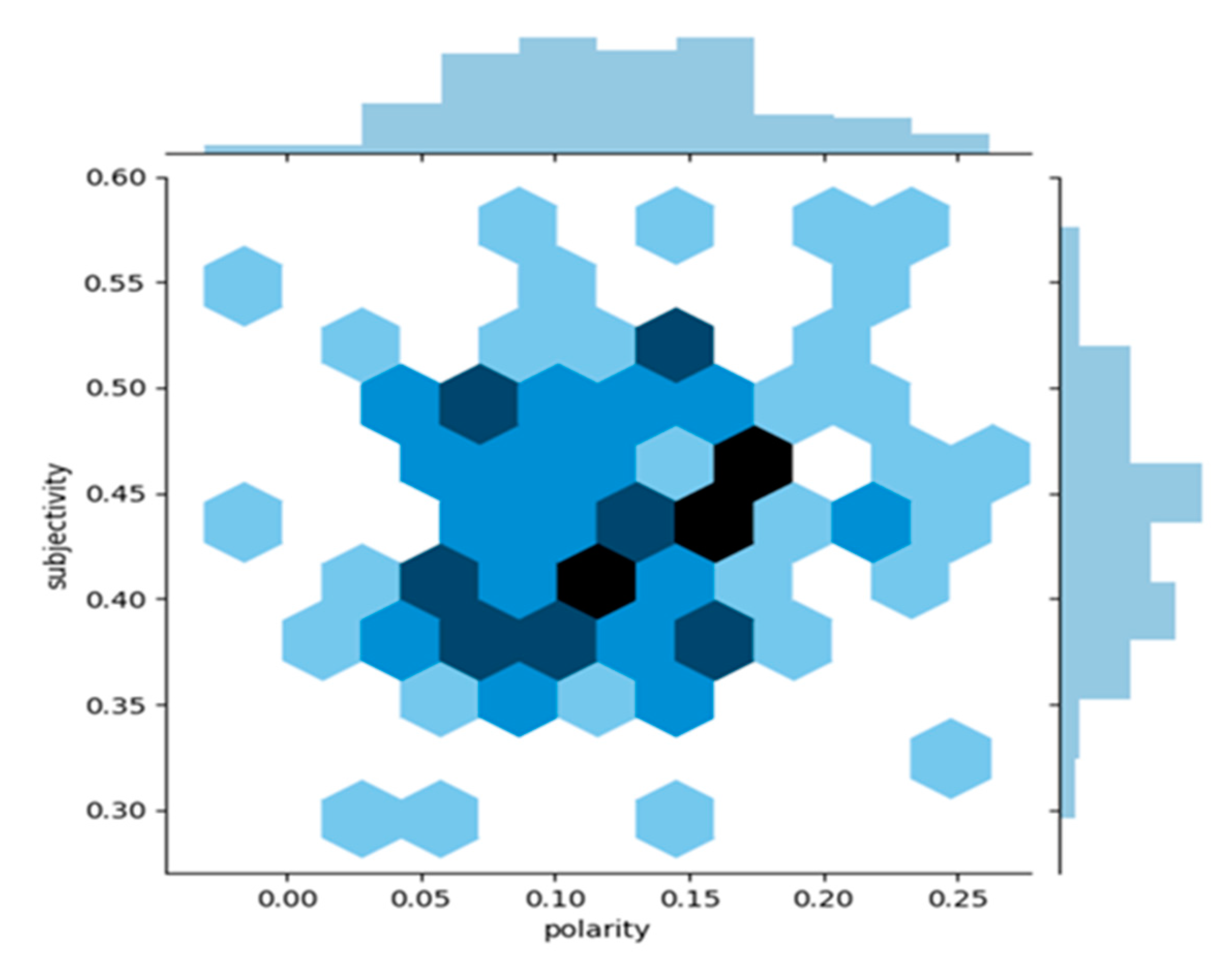

4.2. Research Question 2

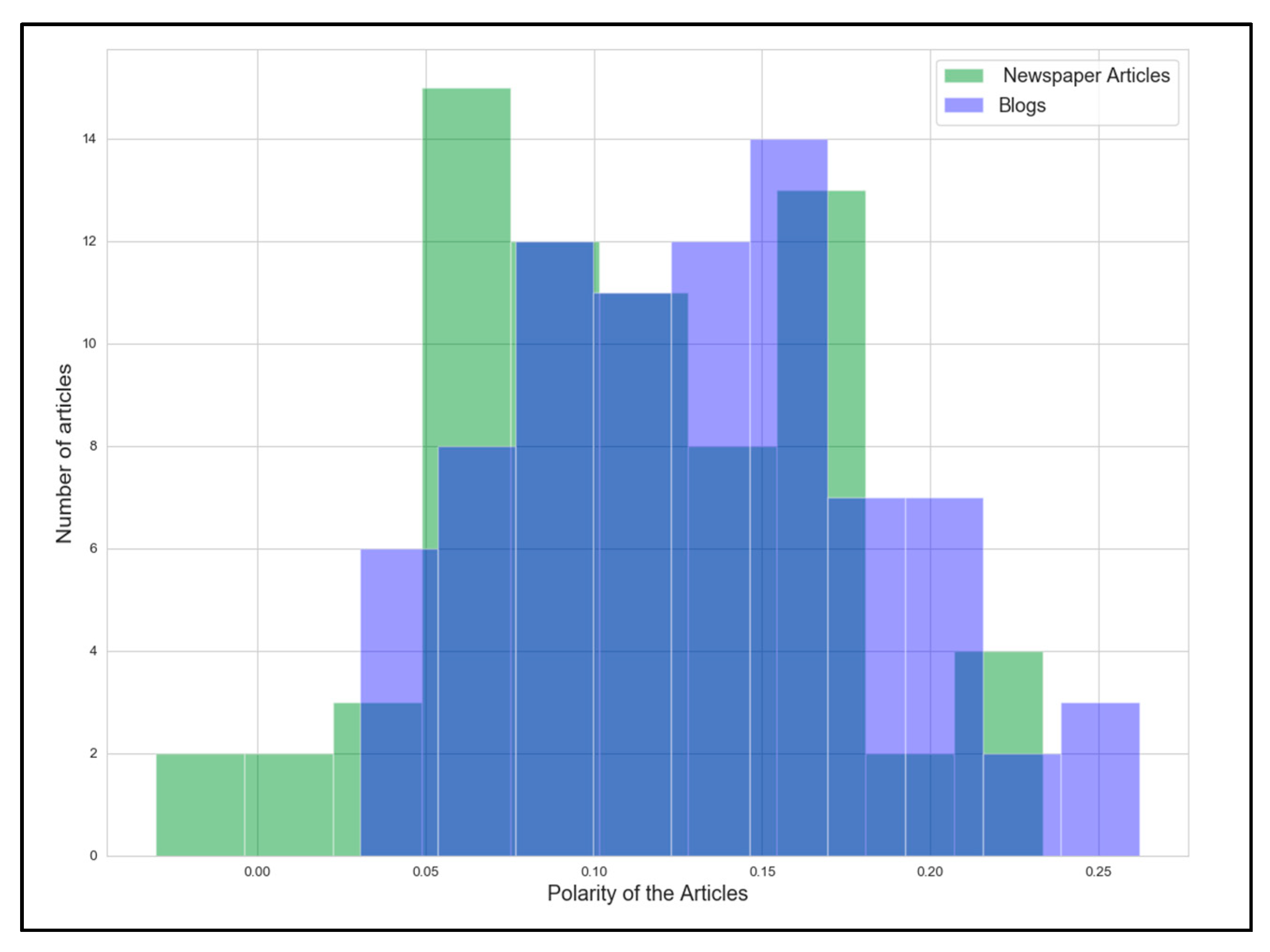

4.3. Research Question 3

5. Conclusions

6. Limitations and Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- UNESCO. COVID-19 Impact on Education; UNESCO: Paris, France, 2020; Available online: https://en.unesco.org/covid19/educationresponse (accessed on 11 September 2020).

- Kanwar, A.; Daniel, J. Report to Commonwealth Education Ministers: From Response to Resilience; Commonwealth of Learning: Burnaby, BC, Canada, 2020.

- World Bank. World Development Report 2016: Digital Dividends; World Bank Publications: Washington, DC, USA, 2016; Available online: https://www.worldbank.org/en/publication/wdr2016 (accessed on 12 March 2020).

- Bozkurt, A.; Jung, I.; Xiao, J.; Vladimirschi, V.; Schuwer, R.; Egorov, G.; Lambert, S.; Al-Freih, M.; Pete, J.; Olcott, D., Jr.; et al. A global outlook to the interruption of education due to COVID-19 pandemic: Navigating in a time of uncertainty and crisis. Asian J. Distance Educ. 2020, 15, 1–126.

- Altbach, P.G.; de Wit, H. Responding to COVID-19 with IT: A Transformative Moment? Int. High. Educ. 2020, 103, 3–5.

- Daniel, S.J. Education and the COVID-19 pandemic. Prospects 2020, 49, 91–96.

- Toquero, C.M. Emergency remote teaching amid COVID-19: The turning point. Asian J. Distance Educ. 2020, 15, 185–188.

- Lynch, M. E-Learning during a global pandemic. Asian J. Distance Educ. 2020, 15, 189–195.

- Williamson, B.; Eynon, R.; Potter, J. Pandemic politics, pedagogies and practices: Digital technologies and distance education during the coronavirus emergency. Learn. Media Technol. 2020, 45, 107–114.

- Williamson, B.; Hogan, A. Commercialisation and Privatisation in/of Education in the Context of COVID-19; Education International Research: Birmingham, UK, 2020.

- Teräs, M.; Suoranta, J.; Teräs, H.; Curcher, M. Post-Covid-19 education and education technology ‘solutionism’: A Seller’s Market. Postdigital Sci. Educ. 2020, 2, 863–878.

- Anderson, J. The Coronavirus Pandemic is Reshaping Education. 2020. Available online: https://qz.com/1826369/how-coronavirus-is-changing-education/ (accessed on 14 April 2020).

- Zimmerman, J. Coronavirus and the great online-learning experiment: Let’s determine what our students actually learn online. Chronicle, 10 March 2020. Available online: https://www.chronicle.com/article/Coronavirusthe-Great/248216 (accessed on 11 March 2020).

- Zhao, Y. COVID-19 as a catalyst for educational change. Prospects 2020, 49, 29–33.

- Wallsten, K. Agenda setting and the blogosphere: An analysis of the relationship between mainstream media and political blogs. Rev. Policy Res. 2007, 24, 567–587.

- Aruguete, N. The agenda setting hypothesis in the new media environment. Comun. Soc. 2017, 28, 35–58.

- Wu, Y.; Atkin, D.; Lau, T.Y.; Lin, C.; Mou, Y. Agenda setting and micro-blog use: An analysis of the relationship between Sina Weibo and newspaper agendas in China. J. Soc. Media Soc. 2013, 8, 53–62.

- Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Eng. J. 2014, 5, 1093–1113.

- Ku, L.-W.; Liang, Y.-T.; Chen, H.-H. Opinion Extraction, Summarization and Tracking in News and Blog Corpora. 2006. Available online: http://www.aaai.org/Library/Symposia/Spring/2006/ss06-03-020.php (accessed on 15 March 2020).

- Ng, C.; Law, K.M. Investigating consumer preferences on product designs by analyzing opinions from social networks using evidential reasoning. Comput. Ind. Eng. 2020, 139, 106180.

- Hage, G. The haunting figure of the useless academic: Critical thinking in coronavirus time. Eur. J. Cult. Stud. 2020, 23, 662–666.

- Mooney, S.J.; Westreich, D.J.; El-Sayed, A.M. Commentary. Epidemiology 2015, 26, 390–394.

- Saurkar, A.V.; Pathare, K.G.; Gode, S.A. An overview on web scraping techniques and tools. Int. J. Future Revolut. Comput. Sci. Commun. Eng. 2018, 4, 363–367.

- Hillen, J. Web scraping for food price research. Br. Food J. 2019, 121, 3350–3361.

- Haddaway, N.R. The use of web-scraping software in searching for grey literature. Grey J. 2015, 11, 186–190.

- Herrmann, M.; Hoyden, L. Applied webscraping in market research. In Proceedings of the 1st International Conference on Advanced Research Methods and Analytics, Valencia, Spain, 6–7 July 2016; Universitat Politecnica de Valencia: Valencia, Spain, 2016.

- Chen, Z.; Zhang, R.; Xu, T.; Yang, Y.; Wang, J.; Feng, T. Emotional attitudes towards procrastination in people: A large-scale sentiment-focused crawling analysis. Comput. Hum. Behav. 2020, 110, 106391.

- Lee, S.-W.; Jiang, G.; Kong, H.-Y.; Liu, C. A difference of multimedia consumer’s rating and review through sentiment analysis. Multimed. Tools Appl. 2020.

- Bai, X. Predicting consumer sentiments from online text. Decis. Support Syst. 2011, 50, 732–742.

- Duan, W.; Yu, Y.; Cao, Q.; Levy, S. Exploring the impact of social media on hotel service performance. Cornell Hosp. Q. 2015, 57, 282–296.

- Godbole, N.; Srinivasaiah, M.; Skiena, S. Large-scale sentiment analysis for news and blogs. In Proceedings of the International Conference on Weblogs and Social Media, ICWSM’07, Boulder, CO, USA, 26–28 March 2007.

- Moreo, A.; Romero, M.; Castro, J.; Zurita, J. Lexicon-based Comments-oriented News Sentiment Analyzer system. Expert Syst. Appl. 2012, 39, 9166–9180.

- Balaguer, P.; Teixidó, I.; Vilaplana, J.; Mateo, J.; Rius, J.; Solsona, F. CatSent: A Catalan sentiment analysis website. Multimed. Tools Appl. 2019, 78, 28137–28155.

- Li, S.; Wang, Y.; Xue, J.; Zhao, N.; Zhu, T. The impact of COVID-19 epidemic declaration on psychological consequences: A study on active Weibo users. Int. J. Environ. Res. Public Health 2020, 17, 2032.

- Pandey, A.C.; Rajpoot, D.S.; Saraswat, M. Twitter sentiment analysis using hybrid cuckoo search method. Inf. Process. Manag. 2017, 53, 764–779.

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment classification using machine learning techniques. In Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing (EMNLP’02), Philadelphia, PA, USA, 6–7 July 2002.

- Dave, K.; Lawrence, S.; Pennock, D.M. Mining the peanut gallery: Opinion extraction and semantic classification of product reviews. In Proceedings of the 12th International Conference on World Wide Web, Budapest, Hungary, 20–24 May 2003.

- Jiang, H.; Qiang, M.; Lin, P. Assessment of online public opinions on large infrastructure projects: A case study of the Three Gorges Project in China. Environ. Impact Assess. Rev. 2016, 61, 38–51.

- Chang, W.-L.; Chen, L.-M.; Verkholantsev, A. Revisiting online video popularity: A sentimental analysis. Cybern. Syst. 2019, 50, 563–577.

- Tseng, C.-W.; Chou, J.-J.; Tsai, Y.-C. Text mining analysis of teaching evaluation questionnaires for the selection of outstanding teaching faculty members. IEEE Access 2018, 6, 72870–72879.

- Hew, K.F.; Hu, X.; Qiao, C.; Tang, Y. What predicts student satisfaction with MOOCs: A gradient boosting trees supervised machine learning and sentiment analysis approach. Comput. Educ. 2020, 145, 103724.

- Kastrati, Z.; Imran, A.S.; Kurti, A. Weakly supervised framework for aspect-based sentiment analysis on students’ reviews of MOOCs. IEEE Access 2020, 8, 106799–106810.

- Loria, S. TextBlob Documentation; TextBlob: Brooklyn, NY, USA, 2018.

- Onyenwe, I.; Nwagbo, S.; Mbeledogu, N.; Onyedinma, E. The impact of political party/candidate on the election results from a sentiment analysis perspective using #AnambraDecides2017 tweets. Soc. Netw. Anal. Min. 2020, 10, 1–17.

- Yaqub, U.; Sharma, N.; Pabreja, R.; Chun, S.A.; Atluri, V.; Vaidya, J. Analysis and visualization of subjectivity and polarity of Twitter location data. In Proceedings of the 19th Annual International Conference on Digital Government Research: Governance in the Data Age, Delft, The Netherlands, 30 May–1 June 2018; ACM: New York, NY, USA, 2018; p. 67.

- Micu, A.; Micu, A.E.; Geru, M.; Lixandroiu, R.C. Analyzing user sentiment in social media: Implications for online marketing strategy. Psychol. Mark. 2017, 34, 1094–1100.

- Hasan, A.; Moin, S.; Karim, A.; Shamshirband, S. Machine learning-based sentiment analysis for Twitter accounts. Math. Comput. Appl. 2018, 23, 11.

- Subirats, L.; Conesa, J.; Armayones, M. Biomedical holistic ontology for people with rare diseases. Int. J. Environ. Res. Public Health 2020, 17, 6038.

- Yang, L.; Li, Y.; Wang, J.; Sherratt, R.S. Sentiment analysis for e-commerce product reviews in Chinese based on sentiment lexicon and deep learning. IEEE Access 2020, 8, 23522–23530.

- Commonwealth of Learning. Guidelines on Distance Education during COVID-19; Commonwealth of Learning: Burnaby, BC, Canada, 2020; Available online: http://oasis.col.org/handle/11599/3576 (accessed on 20 March 2020).

- McBurnie, C. The Use of Virtual Learning Environments and Learning Management Systems during the COVID-19 Pandemic; Zenodo: Geneva, Switzerland, 2020.

- Mahajan, S. Technological, social, pedagogical issues must be resolved for online teaching. Indian Express, 29 April 2020. Available online: https://indianexpress.com/article/opinion/columns/india-coronavirus-lockdown-online-educationlearning-6383692/ (accessed on 20 March 2020).

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 1988.

- Boykoff, M.T.; Roberts, J.T. Media Coverage of Climate Change: Current Trends, Strengths, Weaknesses. Human Development Report; United Nations: New York, NY, USA, 2017; Available online: http://hdr.undp.org/sites/default/files/boykoff_maxwell_and_roberts_j._timmons.pdf (accessed on 30 March 2020).

- Jia, M.; Tong, L.; Viswanath, P.V.; Zhang, Z. Word power: The impact of negative media coverage on disciplining corporate pollution. J. Bus. Ethics 2016, 138, 437–458.

- Czerniewicz, L.; Agherdien, N.; Badenhorst, J.; Belluigi, D.; Chambers, T.; Chili, M.; De Villiers, M.; Felix, A.; Gachago, D.; Gokhale, C.; et al. A wake-up call: Equity, inequality and Covid-19 emergency remote teaching and learning. Postdigital Sci. Educ. 2020, 2, 946–967.

| Word form | Part of speech | Sense | Polarity | Subjectivity | Intensity | Example |
|---|---|---|---|---|---|---|
| Great | Adjective | “very good” | 1.0 | 1.0 | 1.0 | “Owing to his exceptional practice, he is becoming great at tennis.” |
| Great | Adjective | “of major significance or importance” | 1.0 | 1.0 | 1.0 | “The low cost of these products gives them great appeal.” |
| Great | Adjective | “remarkable or out of the ordinary in degree or magnitude or effect” | 0.8 | 0.8 | 1.0 | “She is an actress of great charm.” |
| Great | Adjective | “relatively large in size or number or extent” | 0.4 | 0.2 | 1.0 | “All creatures great and small.” |
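The table lists four senses of "great", each with its own polarity and subjectivity. Assuming that a word's overall score is the unweighted mean over its listed senses (an assumption; the combination step is not stated here), the word-level values can be computed as below. `GREAT_SENSES` and `word_score` are illustrative names, and the numbers are copied from the table.

```python
from statistics import mean
from typing import List, Tuple

# (polarity, subjectivity) for each sense of "great", copied from the table above
GREAT_SENSES: List[Tuple[float, float]] = [
    (1.0, 1.0),  # "very good"
    (1.0, 1.0),  # "of major significance or importance"
    (0.8, 0.8),  # "remarkable or out of the ordinary in degree or magnitude or effect"
    (0.4, 0.2),  # "relatively large in size or number or extent"
]


def word_score(senses: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Average polarity and subjectivity over all senses (assumed unweighted)."""
    polarity = mean(p for p, _ in senses)
    subjectivity = mean(s for _, s in senses)
    return polarity, subjectivity


print(word_score(GREAT_SENSES))  # approximately (0.8, 0.75)
```

Under this assumption "great" scores about 0.8 in polarity and 0.75 in subjectivity, and a modifier such as "very" would then scale these averaged values rather than a single sense.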

| Score | Mean | SD |
|---|---|---|
| Polarity | 0.12 | 0.05 |
| Subjectivity | 0.43 | 0.05 |
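RQ1 and RQ2 require turning per-article scores into labels, and the table above summarizes those scores by mean and standard deviation. The labelling thresholds are not given in this section, so the sketch below assumes a common convention: polarity above 0 is positive, below 0 negative, and exactly 0 neutral; subjectivity of 0.5 or more is opinion-based, and below 0.5 fact-based. It also assumes the reported SD is the sample standard deviation. The scores in `sample` are made up purely for illustration, and all function names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def polarity_label(polarity: float) -> str:
    """Assumed rule: the sign of the polarity score decides the label."""
    if polarity > 0:
        return "positive"
    if polarity < 0:
        return "negative"
    return "neutral"


def subjectivity_label(subjectivity: float, threshold: float = 0.5) -> str:
    """Assumed rule: scores at or above the threshold count as opinion-based."""
    return "opinion-based" if subjectivity >= threshold else "fact-based"


def summarize(scores: List[Tuple[float, float]]) -> Dict[str, Tuple[float, float]]:
    """Mean and sample standard deviation of each score across articles."""
    polarities = [p for p, _ in scores]
    subjectivities = [s for _, s in scores]
    return {
        "polarity": (mean(polarities), stdev(polarities)),
        "subjectivity": (mean(subjectivities), stdev(subjectivities)),
    }


# Hypothetical per-article (polarity, subjectivity) scores
sample = [(0.15, 0.40), (0.05, 0.48), (-0.02, 0.55), (0.10, 0.38)]
for p, s in sample:
    print(polarity_label(p), subjectivity_label(s))
print(summarize(sample))
```

Many studies use a small neutral band around zero instead of the exact-zero rule; changing `polarity_label` is the only edit needed to try that variant.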

| Group | N | Mean | SD | t-Value |
|---|---|---|---|---|
| News articles | 72 | 0.42 | 0.06 | 2.39 * |
| Blogs | 82 | 0.44 | 0.05 | |
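The statistical test behind these t-values is not described in this section. As an assumption, the sketch below recomputes a pooled-variance (Student's) independent-samples t statistic from the summary rows of the table above; `pooled_t_test` is a hypothetical helper. Because the means are reported to only two decimals, the recomputed value (about 2.26) does not match the reported 2.39 exactly, a gap that rounding of the means could plausibly explain.

```python
import math
from typing import Tuple


def pooled_t_test(
    n1: int, mean1: float, sd1: float, n2: int, mean2: float, sd2: float
) -> Tuple[float, int]:
    """Student's t for two independent groups, assuming equal variances.

    Returns (t, degrees of freedom); t is positive when group 2 has the larger mean.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean2 - mean1) / standard_error, df


# News articles (group 1) vs. blogs (group 2), values from the table above
t, df = pooled_t_test(72, 0.42, 0.06, 82, 0.44, 0.05)
print(f"t = {t:.2f}, df = {df}")
```

If the group variances were not assumed equal, a Welch test would be the usual alternative; it changes only the standard error and the degrees of freedom.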

| Group | N | Mean | SD | t-Value |
|---|---|---|---|---|
| News articles | 72 | 0.11 | 0.06 | 2.61 * |
| Blogs | 82 | 0.13 | 0.05 | |
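The reference list includes Cohen's Statistical Power Analysis for the Behavioral Sciences, the usual source for the standardized mean difference d. Assuming d is computed with the pooled standard deviation, the sketch below derives it from the summary rows of the table above. `cohens_d` and `magnitude` are hypothetical helpers, the binning into labels is one common reading of Cohen's benchmarks, and the rounded inputs make the result approximate.

```python
import math


def cohens_d(n1: int, mean1: float, sd1: float, n2: int, mean2: float, sd2: float) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (mean2 - mean1) / pooled_sd


def magnitude(d: float) -> str:
    """Bin |d| using Cohen's conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# News articles (group 1) vs. blogs (group 2), values from the table above
d = cohens_d(72, 0.11, 0.06, 82, 0.13, 0.05)
print(f"d = {d:.2f} ({magnitude(d)})")
```

With these rounded inputs d is roughly 0.36, between Cohen's "small" and "medium" benchmarks; a value computed from unrounded per-article scores could differ.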

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bhagat, K.K.; Mishra, S.; Dixit, A.; Chang, C.-Y. Public Opinions about Online Learning during COVID-19: A Sentiment Analysis Approach. Sustainability 2021, 13, 3346. https://doi.org/10.3390/su13063346

Bhagat KK, Mishra S, Dixit A, Chang C-Y. Public Opinions about Online Learning during COVID-19: A Sentiment Analysis Approach. Sustainability. 2021; 13(6):3346. https://doi.org/10.3390/su13063346

Chicago/Turabian Style: Bhagat, Kaushal Kumar, Sanjaya Mishra, Alakh Dixit, and Chun-Yen Chang. 2021. "Public Opinions about Online Learning during COVID-19: A Sentiment Analysis Approach" Sustainability 13, no. 6: 3346. https://doi.org/10.3390/su13063346

APA Style: Bhagat, K. K., Mishra, S., Dixit, A., & Chang, C.-Y. (2021). Public Opinions about Online Learning during COVID-19: A Sentiment Analysis Approach. Sustainability, 13(6), 3346. https://doi.org/10.3390/su13063346