Refugees Welcome? Online Hate Speech and Sentiments in Twitter in Spain during the Reception of the Boat Aquarius

Abstract

1. Introduction

1.1. Online Hate Speech

1.1.1. Refugees as a Subject in Social Media

1.1.2. High-Profile Events and Hate Speech

2. Materials and Methods

2.1. Sample

2.2. Measures

2.3. Software Used

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

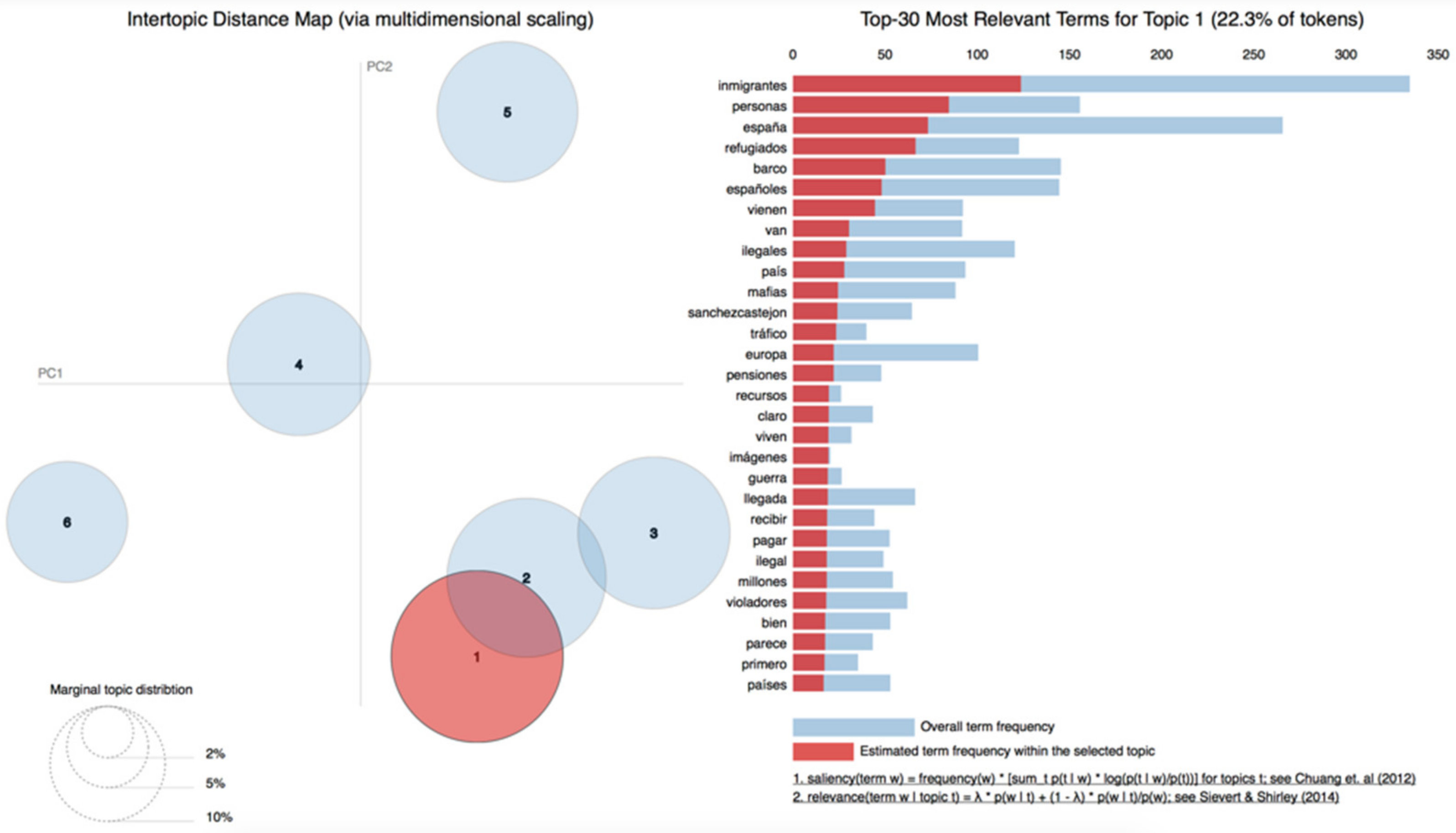

| Topic | Most Common Words and Their Frequency within the Topic (the Underlined Words are the Most Determinant for the Labelling of the Topic) |
|---|---|
| Pull effect and consequences | “inmigrantes” (0.008) + “españa” (0.007) + “barco” (0.005) + “efecto” (0.005) + “llamada” (0.004) + “va” (0.004) + “valencia” (0.004) + “consecuencias” (0.004) + “europa” (0.004) + “concentración” (0.004) |
| Pull effect and not welcoming “illegals” | “inmigrantes” (0.013) + “personas” (0.008) + “españa” (0.008) + “llamada” (0.007) + “efecto” (0.007) + “ilegales” (0.007) + “defiendetusfronteras” (0.007) + “españoles” (0.006) + “puerto” (0.006) + “aquariusnotwelcome” (0.006) |
| Not welcoming and terrorism | “van” (0.008) + “españa” (0.008) + “personas” (0.006) + “aquariusnotwelcome” (0.005) + “país” (0.005) + “boko” (0.005) + “haram” (0.005) + “inmigrantes” (0.005) + “barco” (0.004) + “tener” (0.004) |
| Smugglers and NGOs | “inmigrantes” (0.023) + “españa” (0.010) + “españoles” + “vienen” (0.008) + “mafias” (0.008) + “personas” (0.007) + “van” (0.006) + “ilegales” (0.006) + “ongs” (0.006) + “barco” (0.006) |
| Money and jobs | “refugiados” (0.011) + “inmigrantes” (0.009) + “pagar” (0.007) + “españa” (0.007) + “países” (0.005) + “barco” (0.005) + “españoles” (0.005) + “personas” (0.005) + “solución” (0.005) + “trabajo” (0.005) |
| Entrance to Europe | “españa” (0.018) + “inmigrantes” (0.009) + “europa” (0.006) + “barco” (0.006) + “valencia” (0.005) + “españoles” (0.005) + “gobierno” (0.005) + “inmigración” (0.005) + “norte” (0.004) + “millones” (0.005) |
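The tokens in these topic tables appear lowercased, with accents kept, with hashtags and user handles reduced to plain words (“defiendetusfronteras”, “aquariusnotwelcome”, “sanchezcastejon”), and with articles and prepositions absent. The following is a minimal preprocessing sketch that would yield tokens of this shape; the stop-word list and the URL rule are assumptions for illustration, not the study's documented pipeline.

```python
import re
import unicodedata

# Hypothetical, deliberately tiny Spanish stop-word list (illustration only).
STOPWORDS = {"a", "al", "de", "del", "el", "en", "la", "las", "los", "que", "y"}

URL_RE = re.compile(r"https?://\S+")
# In Python 3, \w matches Unicode letters, so "españa" stays one token.
# '#' and '@' are not word characters, so hashtags and handles survive as
# plain words ("aquariusnotwelcome", "sanchezcastejon").
WORD_RE = re.compile(r"\w+")


def preprocess(tweet: str) -> list[str]:
    """Lowercase a tweet, drop URLs and stop words, keep accents."""
    text = unicodedata.normalize("NFC", tweet).lower()
    text = URL_RE.sub(" ", text)
    return [tok for tok in WORD_RE.findall(text) if tok not in STOPWORDS]


print(preprocess("#AquariusNotWelcome Los inmigrantes llegan a España "
                 "https://t.co/xyz @sanchezcastejon"))
# ['aquariusnotwelcome', 'inmigrantes', 'llegan', 'españa', 'sanchezcastejon']
```

Keeping accents matters for Spanish, where stripping them would merge distinct words (for example “país” and “pais” are harmless, but “españa” and “espana” would split the same word across two spellings if only some tweets were normalised).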

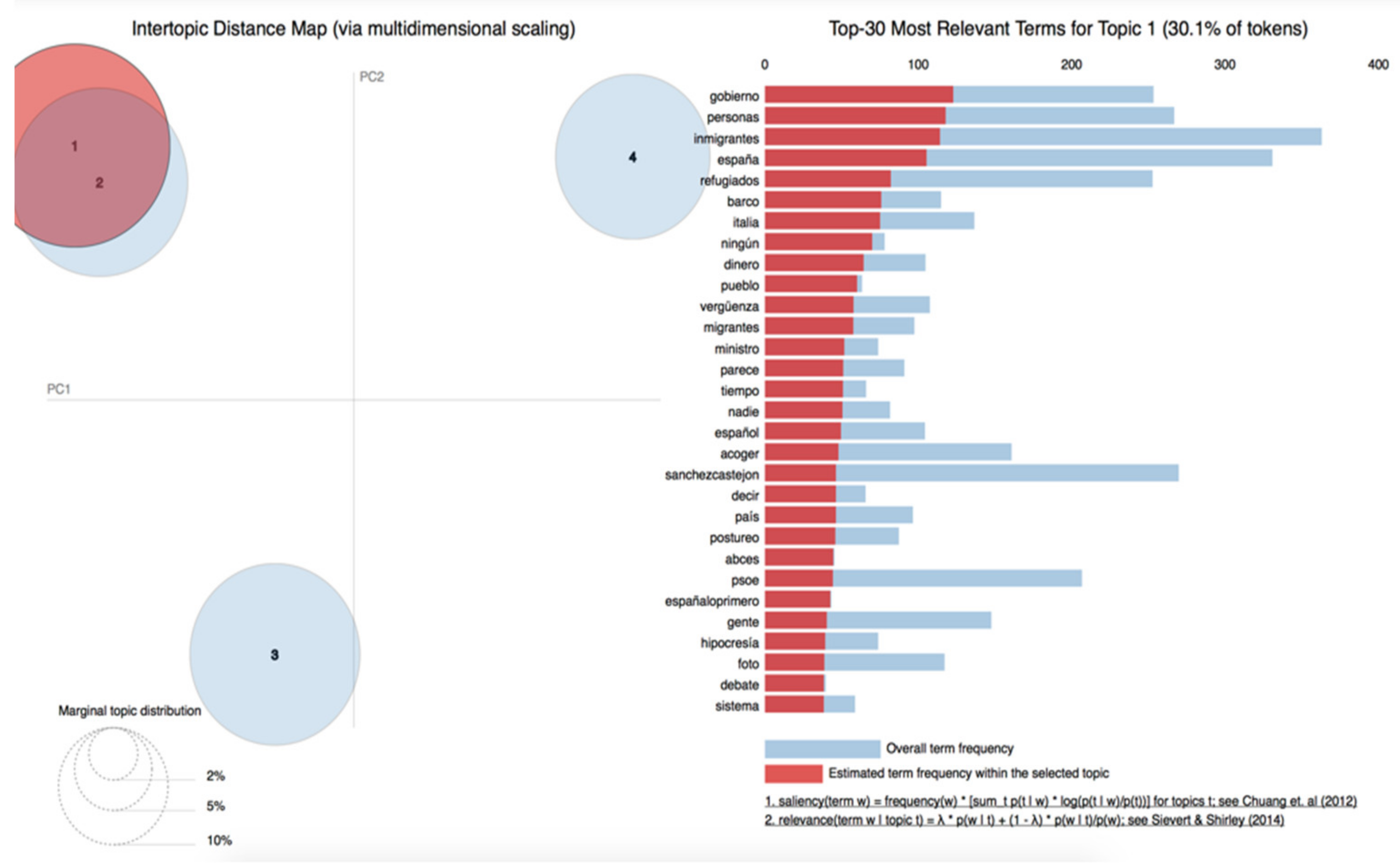

| Topic | Most Common Words and Their Frequency within the Topic (the Underlined Words are the Most Determinant for the Labelling of the Topic) |
|---|---|
| Shame of politicians | “inmigrantes” (0.006) + “urdangarín” (0.005) + “personas” (0.005) + “europa” (0.005) + “gobierno” (0.004) + “pp” (0.004) + “italia” (0.004) + “vergüenza” (0.004) + “hipocresía” (0.004) + “abierto” (0.003) |
| Media coverage | “valencia” (0.009) + “refugiados” (0.009) + “llegan” (0.006) + “españa” (0.006) + “foto” (0.006) + “personas” (0.005) + “español” (0.005) + “periodistas” (0.004) + “sánchez” (0.004) + “españoles” (0.004) |
| National politics | “inmigrantes” (0.010) + “españa” (0.009) + “gobierno” (0.008) + “gente” (0.007) + “sanchezcastejon” (0.006) + “personas” (0.006) + “política” (0.005) + “propaganda” (0.004) + “valencia” (0.004) + “años” (0.004) |
| Demanding Government to accept migrants | “psoe” (0.010) + “sanchezcastejon” (0.008) + “inmigrantes” (0.007) + “españa” (0.007) + “acoger” (0.006) + “refugiados” (0.005) + “circo” (0.005) + “personas” (0.004) + “dios” (0.004) + “dejo” (0.004) |

|  | Frequency | Percentage | Sentiment | Positive Words | Negative Words |
|---|---|---|---|---|---|
| Non-hateful messages | 17,711 | 73% | 0.067 | 1.950 | −1.880 |
| Hateful messages | 6543 | 27% | −0.114 | 1.980 | −2.090 |
| Hate against refugees | 2397 | 9.9% | −0.073 | 1.960 | −2.040 |
| Hate against politicians | 4146 | 17.1% | −0.137 | 1.980 | −2.120 |
| Final sample | 24,254 | 100% | 0.018 | 1.960 | −1.940 |
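In every row of this table the Sentiment value is close to the sum of the Positive Words and Negative Words values (for non-hateful messages, 1.950 + (−1.880) = 0.070 against 0.067), which is consistent with a dual-scale scorer in the style of SentiStrength, where each text receives a positive strength from 1 to 5 and a negative strength from −1 to −5. The sketch below assumes that reading and uses a simplified "strongest word wins" rule; the lexicon is a hypothetical toy, not the one used in the study. It also shows that the final-sample mean is the frequency-weighted mean of the subgroup means.

```python
# Hypothetical toy lexicon (not the study's): word -> strength.
# Positive words score 2..5 and negative words -2..-5, mirroring
# SentiStrength's dual scales, where 1 / -1 mean "no sentiment".
LEXICON = {"bienvenidos": 3, "gracias": 2, "vergüenza": -3, "ilegales": -2, "odio": -4}


def dual_score(tokens: list[str], lexicon: dict[str, int]) -> tuple[int, int, int]:
    """Score one message as (positive, negative, overall).

    positive is the strongest positive word (1 if none), negative the
    strongest negative word (-1 if none), and overall = positive + negative."""
    pos = max((lexicon[t] for t in tokens if lexicon.get(t, 0) > 0), default=1)
    neg = min((lexicon[t] for t in tokens if lexicon.get(t, 0) < 0), default=-1)
    return pos, neg, pos + neg


def weighted_mean(groups: list[tuple[int, float]]) -> float:
    """Pool subgroup means given as (n, mean) pairs into one overall mean."""
    total = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total


print(dual_score(["vergüenza", "ilegales", "gracias"], LEXICON))  # (2, -3, -1)
# Non-hateful and hateful rows pooled by frequency:
print(round(weighted_mean([(17711, 0.067), (6543, -0.114)]), 3))  # 0.018
```

Because the mean of a sum equals the sum of the means, averaging overall = positive + negative per message gives exactly the averaged positive plus averaged negative scores, up to the rounding of the published values. Pooling the two hate subcategories the same way, weighted_mean([(2397, -0.073), (4146, -0.137)]), gives about −0.114, matching the Hateful messages row.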

| Topic | Most Common Words and Their Frequency within the Topic (the Underlined Words are the Most Determinant for the Labelling of the Topic) |
|---|---|
| Pull effect and consequences | “immigrants” (0.008) + “spain” (0.007) + “boat” (0.005) + “effect” (0.005) + “pull” (0.004) + “goes” (0.004) + “valencia” (0.004) + “consequences” (0.004) + “europe” (0.004) + “concentration” (0.004) |
| Pull effect and not welcoming “illegals” | “immigrants” (0.013) + “people” (0.008) + “spain” (0.008) + “pull” (0.007) + “effect” (0.007) + “illegal” (0.007) + “protectyourborders” (0.007) + “spaniards” (0.006) + “harbor” (0.006) + “aquariusnotwelcome” (0.006) |
| Not welcoming and terrorism | “go” (0.008) + “spain” (0.008) + “people” (0.006) + “aquariusnotwelcome” (0.005) + “country” (0.005) + “boko” (0.005) + “haram” (0.005) + “immigrants” (0.005) + “boat” (0.004) + “have” (0.004) |
| Smugglers and NGOs | “immigrants” (0.023) + “spain” (0.010) + “spaniards” + “come” (0.008) + “mafias” (0.008) + “people” (0.007) + “go” (0.006) + “illegal” (0.006) + “ngos” (0.006) + “boat” (0.006) |
| Money and jobs | “refugees” (0.011) + “immigrants” (0.009) + “pay” (0.007) + “spain” (0.007) + “countries” (0.005) + “boat” (0.005) + “spaniards” (0.005) + “people” (0.005) + “solution” (0.005) + “work” (0.005) |
| Entrance to Europe | “spain” (0.018) + “immigrants” (0.009) + “europe” (0.006) + “boat” (0.006) + “valencia” (0.005) + “spaniards” (0.005) + “government” (0.005) + “immigration” (0.005) + “north” (0.004) + “millions” (0.005) |
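Assuming the topics come from an LDA-style model, in which each topic is a probability distribution over the whole vocabulary, the values in parentheses can be read as within-topic word probabilities, which would explain why even the top-ranked words stay below 0.025. The sketch below shows how a topic's top words and their probabilities could be extracted and printed in the format used in the table; the function names and the toy weights are hypothetical.

```python
def top_words(weights: dict[str, float], k: int = 10) -> list[tuple[str, float]]:
    """Return the k most probable words of one topic with P(word | topic).

    `weights` maps every vocabulary word to its (unnormalised) weight in the
    topic; normalising over the whole vocabulary is why even the top words
    get small probabilities when the vocabulary is large."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("topic has no positive weight")
    probs = {word: w / total for word, w in weights.items()}
    # Highest probability first; ties broken alphabetically for stable output.
    ranked = sorted(probs.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


def format_topic(ranked: list[tuple[str, float]]) -> str:
    """Render ranked words in the table's style: “word” (0.008) + ..."""
    return " + ".join(f"“{word}” ({p:.3f})" for word, p in ranked)


toy = {"immigrants": 13, "people": 8, "spain": 8, "boat": 1}
print(format_topic(top_words(toy, k=3)))
# “immigrants” (0.433) + “people” (0.267) + “spain” (0.267)
```

With a realistic vocabulary of thousands of terms the same normalisation yields values of the order shown above, so the probabilities are best compared within a topic rather than across topics.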

| Topic | Most Common Words and Their Frequency within the Topic (the Underlined Words are the Most Determinant for the Labelling of the Topic) |
|---|---|
| Shame of politicians | “immigrants” (0.006) + “urdangarín” (0.005) + “people” (0.005) + “europe” (0.005) + “government” (0.004) + “pp” (0.004) + “italy” (0.004) + “shame” (0.004) + “hypocrisy” (0.004) + “open” (0.003) |
| Media coverage | “valencia” (0.009) + “refugees” (0.009) + “arrive” (0.006) + “spain” (0.006) + “picture” (0.006) + “people” (0.005) + “spanish” (0.005) + “journalists” (0.004) + “sánchez” (0.004) + “spaniards” (0.004) |
| National politics | “immigrants” (0.010) + “spain” (0.009) + “government” (0.008) + “people” (0.007) + “sanchezcastejon” (0.006) + “persons” (0.006) + “politics” (0.005) + “propaganda” (0.004) + “valencia” (0.004) + “years” (0.004) |
| Demanding Government to accept migrants | “psoe” (0.010) + “sanchezcastejon” (0.008) + “immigrants” (0.007) + “spain” (0.007) + “host” (0.006) + “refugees” (0.005) + “circus” (0.005) + “people” (0.004) + “god” (0.004) + “leave” (0.004) |

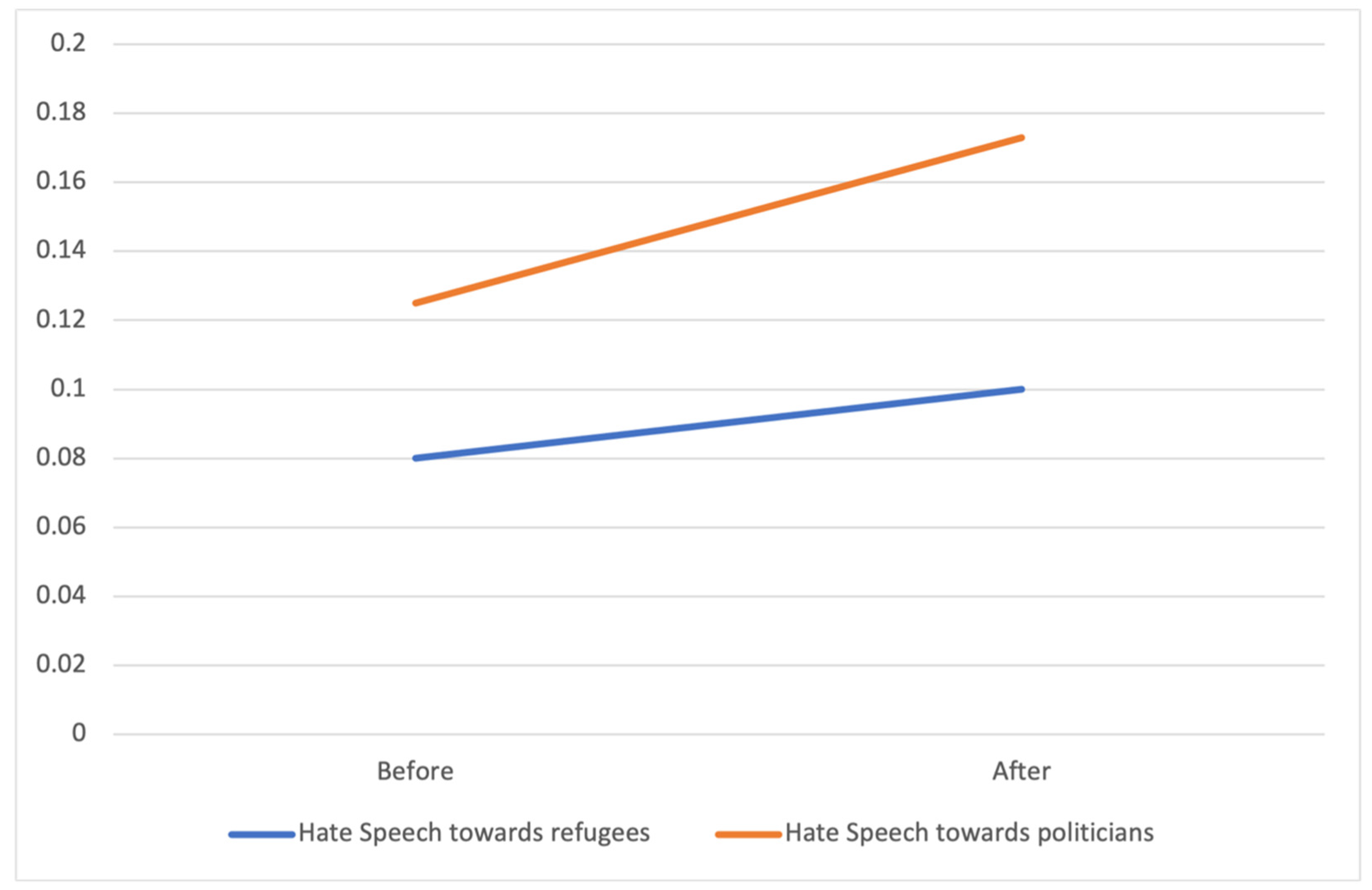

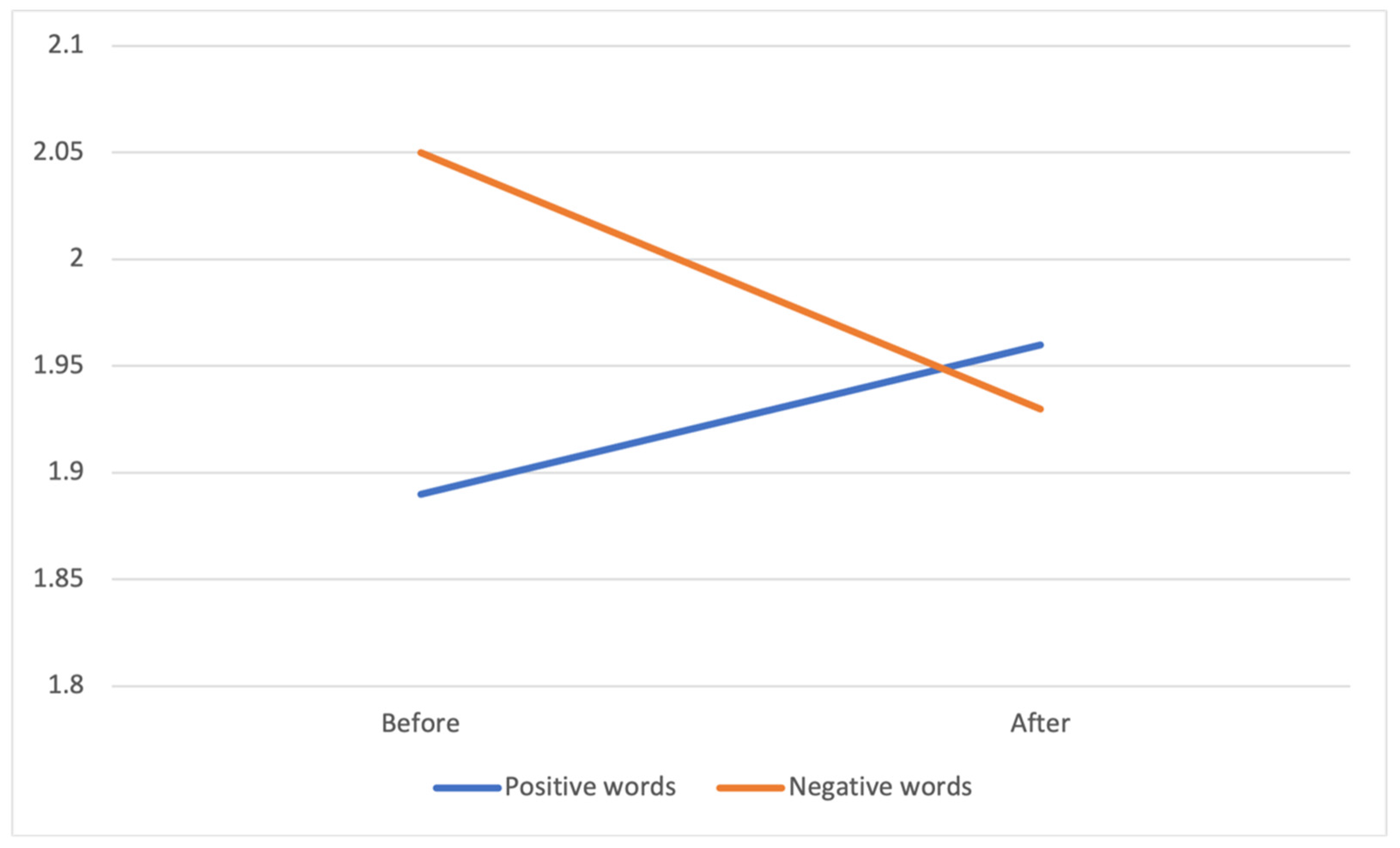

|  | Before: n | Before: M | Before: SD | After: n | After: M | After: SD |
|---|---|---|---|---|---|---|
| Hate Speech towards refugees * | 76 | 0.08 | 0.267 | 2320 | 0.10 | 0.300 |
| Hate Speech towards politicians *** | 119 | 0.125 | 0.331 | 4023 | 0.173 | 0.378 |
| Sentiment *** | - | −0.162 | 1.620 | - | 0.026 | 1.644 |
| Positive words * | - | 1.89 | 1.091 | - | 1.96 | 1.141 |
| Negative words ** | - | −2.05 | 1.334 | - | −1.93 | 1.278 |
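The asterisks appear to flag before/after differences reported as statistically significant; the test behind them is not stated in this section. One common way to compare two group means from summary statistics (M, SD and group size) is Welch's t-test, sketched below under that assumption. Note that the n column above counts hateful messages rather than the size of each period's sample, so the group sizes passed to the function must come from the full dataset; none are assumed here.

```python
import math


def welch_t(m1: float, sd1: float, n1: int,
            m2: float, sd2: float, n2: int) -> tuple[float, float, float]:
    """Welch's t-test for the difference m2 - m1 from summary statistics.

    Returns (t, df, p). p is a two-sided p-value from the normal
    approximation, which is adequate only when df is large (as it is
    with thousands of tweets); small samples need the t distribution."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1
    v2 = sd2 ** 2 / n2  # squared standard error of group 2
    t = (m2 - m1) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    p = math.erfc(abs(t) / math.sqrt(2))  # equals 2 * (1 - Phi(|t|))
    return t, df, p


t, df, p = welch_t(0.0, 1.0, 100, 1.0, 1.0, 100)
print(round(t, 4), round(df, 1))  # 7.0711 198.0
```

For the Sentiment row, the call would take the form welch_t(-0.162, 1.620, n_before, 0.026, 1.644, n_after), where n_before and n_after are the (hypothetical here) numbers of tweets collected before and after the event. Welch's version is chosen because it does not assume equal variances or equal group sizes, and the two periods differ greatly in size.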

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Arcila-Calderón, C.; Blanco-Herrero, D.; Frías-Vázquez, M.; Seoane-Pérez, F. Refugees Welcome? Online Hate Speech and Sentiments in Twitter in Spain during the Reception of the Boat Aquarius. Sustainability 2021, 13, 2728. https://doi.org/10.3390/su13052728
