Abstract

Increasingly, the evaluation of complex projects is related to the capacity of the project to deal with crucial social, economic, and environmental issues that society is responsible for and to activate systemic changes. Within this “mission” perspective, growing attention is given to learning in action. This paper aims at (i) conceptualizing a methodological framework for complex project evaluation within the context of the Triple-Loop Learning mechanism and (ii) showing its application in a European project, including the toolbox developed in coherence with the elaborated framework. It does so by looking at the case of an ongoing Horizon 2020 project aiming to develop language-oriented technologies supporting the inclusion of migrants in Europe. In particular, the paper looks at Triple-Loop Learning as pushed by the reflection on three dimensions: the “what”, the “how”, and the “why” of collective actions in complex projects. The consequent learning process is expected to have transformational potential at the individual, institutional/organizational, and (in the long term) societal scale. By exploring the opportunities offered by the evaluation tools in the easyRights project, the study highlights the potential of nurturing a wider, and arguably as yet neglected, learning space for understanding, engaging, and transforming real contexts and thus developing a more effective contribution to the needed transition.

1. Introduction

The evaluation of complex participatory projects and processes demands the inclusion of the perspectives of a large number of different actors [1,2], their learning and interaction processes, as well as their involvement in and agreement on the design, recording of guiding criteria, and implementation of the evaluation [3].

The imperative to capture project impact in its multidimensionality and contextuality, and with regard to systemic change and sustainability, is evident in the evaluation of complex projects, particularly those that are based on co-creational approaches and thus often involve a variety of different actors from practice and scientific disciplines [4]. The cooperation of diverse groups of actors is often accompanied by various challenges. Some authors [5] identify several of these challenges based on the analysis of evaluation frameworks from different scientific disciplines that claim to capture societal value. They comprise the affiliation to scientific disciplines and the hierarchies and ratings of evaluation instruments defined therein, as well as the simplified definition of impact. Such a simplified definition disregards indirect effects (unintended positive and negative consequences) and non-linear effects, as well as the adaptation of evaluation methods to the concrete research conditions and thus to their specific impacts, pathways to impact, and contexts. In this context, the joint process and a strong consensus of all internal and external stakeholders on the evaluation framework and tool kit, in terms of collaborative knowledge production and integration [4], is a key factor.

In the end, the evaluation of complex projects represents a recurring challenge for professionals, researchers, and, in general, people in charge of project management and coordination. Traditionally, the final goal of a project’s evaluation ranges from output validation to cost-effectiveness and efficacy, to impact assessment. More and more, the evaluation of complex projects is being related to the capacity of the project to deal with global challenges, i.e., to activate deep reflections and learning mechanisms able to contribute to long-lasting changes.

Based on the overall objectives of the easyRights project to approach services as interfaces between migrants and their rights and also to activate higher level reflections of the integration of minorities in societies, a multi-method approach is set as the central reference point for the project evaluation. This concerns both the traditional evaluation activities associated with the easyRights project methodological approach to ICT development and the requirement to implement an evaluation framework consistent with a bottom–up co-creational approach that looks at learning as an additional strategic goal.

This article proceeds as follows: in Section 2, we conceptualize a methodological framework for complex project evaluation within the context of the Triple-Loop Learning mechanism; Section 3 presents the key elements of the easyRights project as an action field for operationalizing Triple-Loop Learning in complex projects; in Section 4, we examine how learning is targeted in the easyRights project; Section 5 applies the proposed methodological framework for complex project evaluation to evaluate the easyRights project. In coherence with the elaborated framework, Section 6 provides an ad hoc toolbox developed for the easyRights project; finally, Section 7 reflects on the envisaged limitations of the framework while suggesting that learning spaces be considered an effective opportunity to understand, engage, and transform real contexts toward transition.

2. Complex Projects Evaluation: A Learning Activity

2.1. Triple-Loop Learning: An Imperative for Change

Triple-Loop Learning, also called transformational learning [6], is required when problems are wicked and unstructured and especially when the deep underlying causes and context have to be taken into account in redefining, relearning, and “unlearning” what we have already learned [7]. In Triple-Loop Learning, constant questioning and modification help to create a shift in perspective (also including mental models [8]) that is ultimately a systemic change. Learning in this model is relevant in the perspective of a systemic change especially when it is coherent with innovation processes and their potential contribution to a social inclusion transition. The notion of transition (in many domains also referred to as a systemic change process) is crucial if we consider the global scale at which the migration phenomenon is experienced and the need to achieve a systemic change, i.e., one occurring in the global society’s mindset. In transition theory, learning (social learning) is central due to its contribution to a robust strategy for accelerating and guiding societal innovation processes [9,10]; still, the related learning process has hardly been conceptualized [11].

In Triple-Loop Learning, participants reflect on how they think; according to Bateson, Triple-Loop Learning means “learning about learning” or “learning to learn” [6], i.e., adopting learning as a means for change. Therefore, an organization also learns how to improve its learning. Moreover, the examination of the values and principles that guide actions is core to this kind of learning.

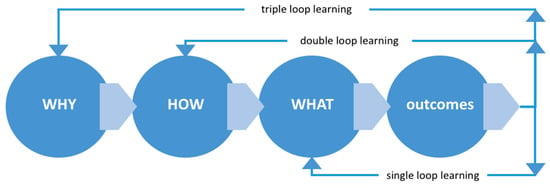

In Stacey’s terms, Triple-Loop Learning is manifested as a form of collective awareness [12]; the relationship between organizational structure and human behavior changes as the organization learns how to learn and understands more about the values and assumptions that lie below the patterns of actions [13]. Triple-Loop Learning allows not only individuals but also organizations to question whether the values and assumptions are locking them into a recurring cycle in which today’s solutions become tomorrow’s problems. In this way, the values, as well as the strategies and expectations, can be modified [14]. In the same vein, each learning loop (single, double, triple) in Triple-Loop Learning has been conceptualized by some authors [13,14] as a possible joint view of the three learning modes. They focus on the goal each loop reflects on—the what, the how, and the why—as shown in the graph below (see Figure 1).

Figure 1.

Triple-Loop Learning and the what, how, and why questions (source: Debategraph, which reworked the concept of Triple-Loop Learning considering the “what”, the “how”, and the “why” and reinterpreting Sinek [15] and Engelbart [16]; see https://debategraph.org/Details.aspx?nid=250157, accessed on 15 March 2020).

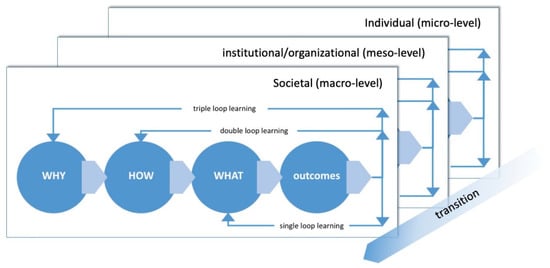

Change, targeted as a transition to improvement, may require cognitive energy, and the depth of the resulting outcomes depends on the level of learning. Triple-Loop Learning acts at the most sophisticated level and reflects the maturity of a transition. In other words, the more deeply changes are embedded and internalized in what is learned, however complex, the better Triple-Loop Learning is achieved. The more disruptive the change to be achieved, the more Triple-Loop Learning needs to engage all involved actors, from individuals (micro-level) to institutional and organizational infrastructure (meso-level), up to the societal scale (macro-level) [17] (see Figure 2).

Figure 2.

Triple-Loop Learning and the three learning dimensions for transition: micro- (individual), meso- (institutional/organizational), and macro- (societal) levels, such as empowerment, self-esteem, (strengthening of) social cohesion, and autonomy [18].

2.2. Quadruple Helix Ecosystems: An Effective Reference for Complex Projects’ Environments

As innovation environments, Quadruple Helix ecosystems involve the constructive interaction of national and/or local government, academia, business, and the general public [19]. Evidence is growing that these ecosystems are made of “open” communities [20] with permeable boundaries [21]. Such communities show that open innovation has more complex characteristics; as conceived by Von Hippel [22], openness is not just related to the inclusion of end-users in the process of innovation; rather, it implies a wide, complex knowledge production process in which participants with self-organization abilities make experimental approaches emerge from unexpected collective intelligence, creativity, and innovation.

Open communities as described above are often seamless aggregations of relevant actors and involve collective, insurgent actions rather than simply being planned by the explicit will of existing organizations and institutions. When these communities act in the public realm, the interplay between the “individual” and the “collective” dimensions becomes particularly rich and productive, especially thanks to the “openness” of involved actors, such as public and private parties (municipalities and individuals), to act outside—and free from—existing organizational constraints. Open communities in the public realm have been identified in many different contexts and as a result of different types of innovation initiatives, the most relevant and known being the Living Labs.

The Living Lab concept has been considered by many observers as a major paradigm shift for innovation, which has started to move out of laboratories into open-air, real-life contexts (including cities, regions, rural areas, factories, homes, hospitals, etc., and sometimes building virtual spaces of collaboration between public and private actors). In Living Lab settings, users are co-producers of innovation, and the researchers are themselves part of the game. One could see their respective roles as blurred or interchangeable [23]. This human-centric, experience-based perspective ensures not only a user-driven design and development of products, services, or applications but also better user acceptance. The goal is to reach a more sustainable innovation by taking advantage of the ideas, experiences, and knowledge of the people involved concerning their daily needs, in their everyday lives, encompassing all their societal roles. (The network of actors involved in Living Labs can be looked at as a community of practice. Such communities are characterized by being emergent and autonomous and by sharing knowledge openly. They are defined by the participation of people sharing a common interest, although differentiated by specific application domain, toward a certain practice: members engage in an informal manner in the development and testing of practices through iterative processes where members give feedback and contribute to the development and improvement of those practices; see [21,22].)

Complex projects differ from other projects in that they trigger different activities to be experimented with in different contexts, which are usually real-life contexts. In this manner, they grow in complexity because of the uncertainty introduced by the interaction with real life as well as the need for the involved real-life contexts to interact with each other and develop a common reflection having general value. Therefore, complex project ecosystems may be assimilated to Living Lab networks, as they are made of ephemeral communities comprising a diversified mix of people, expertise, and experiences. The existence of these ecosystems is related to the synergic effort developed by participants to better understand a certain domain of practices and to develop solutions in real-life environments. In the collaborative work of such complex project communities, the open sharing of knowledge, information, and innovation is crucial. Participants are motivated to share their work or understandings and engage in collaboration due to the benefit they gain from experience and knowledge exchange.

As underlined by Wenger [24], such ephemeral communities coming to life around complex projects are “about” something; they are not mere relational systems. They share “an identity (...) as members engage in a collective process of learning”. This being “about something” allows Wenger [25] to consider learning as a mode of belonging to such communities since (a) learning by doing in real-life environments transforms the individual experience into a common good; (b) being part of the same experiment makes the individual sphere of action a collective resource that benefits from the individual spheres of action of others; (c) having a long-lasting vision (image) of the collective, shared perspective of an (individual) action implies a more valuable idea of the individual action per se within the larger collective action, thus making motivation and commitment stronger.

Such ecosystems require individual actions to align with each other as well as with other processes sharing the same long-lasting vision (a sort of transitional tension considered the engine activating and (possibly) maintaining the Triple-Loop Learning mode along the different levels of socio-technical systems: individual (micro-scale), institutional/organizational (meso-scale), and societal (macro-scale)). Therefore, they guarantee the evolving alignment of the collective action and the synergies with other actions toward the vision.

2.3. Evaluation in Action: A Learning Driven Framework

By connecting the Triple-Loop Learning mechanisms to Quadruple Helix ecosystems, the present study aims at conceptualizing an evaluation framework oriented to learning and targeting societal value creation. In other words, the key research question of the work described in this paper is: What evaluation framework can drive the socio-technical ecosystems activated by complex projects toward systemic changes? To answer it, we look at learning as a mechanism to feed the value production chain in complex project ecosystems viewed as complex multi-actor environments.

Very often, in complex projects having a Living Lab approach, the evaluation process distinguishes summative from formative evaluation [26,27]. Summative evaluation emphasizes an overall judgment of the project’s effectiveness. Conducted with reference to the entire project, summative evaluation focuses on measuring and documenting project achievements and outcomes. Although information gained from summative evaluation may be used to improve future activities in general (e.g., policymaking by the use of big data), the information is not provided in time to allow revision or modification of the project strategies while the project itself is still in progress.

Therefore, summative evaluation is designed to measure project performance over the entire duration of the project with the focus on identifying the effectiveness of its implementation, and it provides a means of accountability in assessing the extent to which the project met its driving goals. Since summative evaluation is a central component of gauging instructional effectiveness at most institutions, its high-stakes nature mandates that these evaluations be valid and reliable. Summative evaluations provide information concerning project adherence to its declared goals; a means of determining the effectiveness of project activities; a comparison of pilots to determine general lessons learnt from the project; and information about strengths and weaknesses in project implementation.

Formative evaluation aims at gaining quick feedback about the effectiveness of test-bed strategies (typically at the pilots’ level) with the explicit goal of enhancing and improving pilots’ implementation during the project time. The focus of formative evaluation is on soliciting feedback that enables revisions of a test-bed’s implementation plan to enhance and nurture the learning process.

For formative evaluation to be effective, it must be goal-directed, have a clear purpose, provide feedback that enables actionable revisions, and be implemented in a timely manner within the action plan. Formative evaluations are most effective when they are focused on specific activities at the scale of planned test-beds. This requires a clear understanding of the challenges/problems in relation to each specific test-bed’s action plan; well-defined expectations in relation to specific actions of the plan; a clear definition of achievements/tests to be completed at each iteration in case many are planned; and a preliminary list of data/information to be collected.

These two evaluation levels are not independent. The formative evaluation is assumed to feed the summative by providing feedback to the general project outcomes and performances at each iteration.

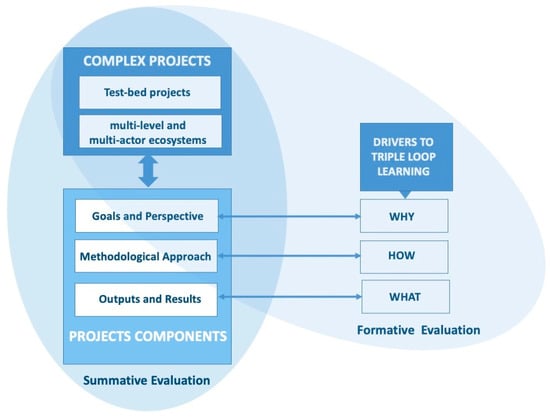

Figure 3 presents a synthetic representation of the evaluation framework that this paper proposes, considering the role of both formative and summative evaluation as they can be interpreted according to: (1) the two key characteristics of complex projects, i.e., the inclusion of several test-bed projects and the consequent presence of multi-level/multi-actor ecosystems; (2) the three basic components of any project, i.e., its goals and perspectives, the defined methodological approach, and its expected outputs and results; and (3) the three Triple-Loop Learning drivers related to the three reflective questions what, how, and why.

Figure 3.

The evaluation framework proposed for complex projects.

In the following paragraphs, the easyRights project is presented in coherence with the methodological framework just described.

3. The easyRights Project: An Action Field for Operationalizing Triple-Loop Learning in Complex Projects

3.1. A Project Overview

easyRights is an ongoing European Horizon 2020 project that aims to combine co-creation and intelligent language-oriented technologies to make it easier for migrants to understand and access the services to which they are entitled. The project is being developed and deployed in four pilot locations: Birmingham, Larissa, Palermo, and Malaga. The overarching objective of easyRights is to develop a complex, multi-level co-creative ecosystem in which different actors belonging to the project partnership, the local governance system for service supply, and innovation teams involved through hackathons (in the easyRights project, hackathon events are used for the creation of the Quadruple Helix communities; see Section 4.2.2 for more detailed information) cooperate in increasing the quality and performance of digital public services available to the migrants. The specific aims of the project are to improve the current personalization and contextualization levels of some services to the migrants, to empower prospective beneficiaries of existing services to get better access and bring opportunities to fruition, and to engage in that effort with various actors and stakeholders from a wide range of disciplines.

It is crucial to answer the question: “How in a complex project do Triple-Loop Learning, digital innovation, and services for migrants relate to each other?” This not only acknowledges the cognitive framework activated by easyRights but also looks at the complexity of the project implementation process in each pilot at both the service governance level and the level that includes hackathon activities. Each of them involves a complex ecosystem of actors, roles, and collaborations. This ecosystem operates as a large open community [28] that can be described by using the Quadruple Helix analogy. To understand why this is so, consider, e.g., the involvement of academia in the project partnership, the direct link with the ICT industry coming from both the project partners and those to be involved through the hackathons, and the key role played by local NGOs in the provision of services, which are essentially government services.

3.2. The easyRights Quadruple Helix Ecosystems

According to Lundvall [29], knowledge and learning constitute the most fundamental resources in a modern economy. More recently, Carayannis and Campbell [19] highlighted knowledge as a broad and contextualized concept in the societal realm. This is especially due to the inclusion of innovation in the conceptualization of learning as being a knowledge-driven and targeted activity [30,31,32]. In easyRights, knowledge is considered as a social learning process accompanied by significant potential in problem-solving.

As the easyRights project is strongly concerned and linked with the production, diffusion, and use of knowledge, it focuses on features to aid problem-solving for society, organized around particular applications, services, and procedures. In this mode of knowledge production, continuous communication and negotiation between knowledge producers in the Quadruple Helix ecosystem are crucial [33]. The activation of Quadruple Helix ecosystems, and the consequent emergence of effective alignment dynamics among them, enables easyRights to implement further inter-institutional collaborations and to consolidate strategic alliances in the local contexts.

The easyRights Quadruple Helix ecosystems are built in each of the pilot sites and are charged with managing the local co-creation activities as well as the systemic changes that the project innovations will ultimately bring about, both in the governance of migration-related services and in the related policies. This pluralism of knowledge coming from Quadruple Helix actors specifically activated by the easyRights project allows for the emergence, co-existence, and co-evolution of various knowledge and innovation paradigms (see [28]). As highlighted in several studies, in a multi-level and complex ecosystem, the existence and co-evolution of pluralism and diversity of knowledge and innovation modes are pivotal [19,28].

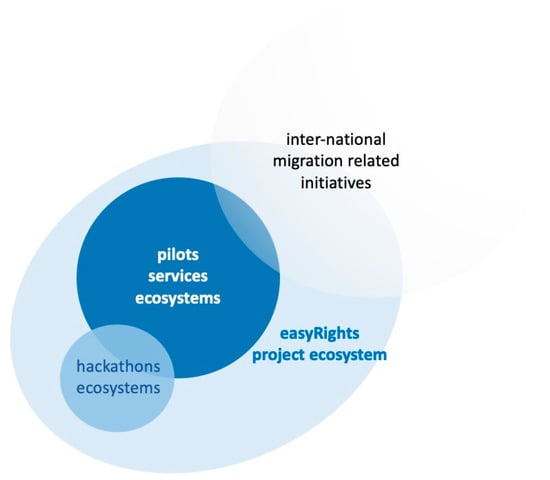

At a higher level, the easyRights project also develops a learning network by activating complex relations between the different Quadruple Helix ecosystems and actors having their powerful engines in the pilot activities. In other words, the knowledge and innovation activities in easyRights are characterized by a pluralism of cross-cutting multi-level interactions between organizations active at the local, national, and transnational levels (Figure 4).

Figure 4.

Toward a multi-level network of Quadruple Helix ecosystems.

As already underlined, the pilot activities will be the engine for learning within the service ecosystems. Each service ecosystem will be related to the others as well as to the Quadruple Helix ecosystems activated by the hackathon initiatives (being productive along the three phases: pre-hack, hack event, and post-hack) and to the project ecosystem, which is itself a Quadruple Helix example. All these represent the elements of a larger Quadruple Helix community that the project as a whole will activate.

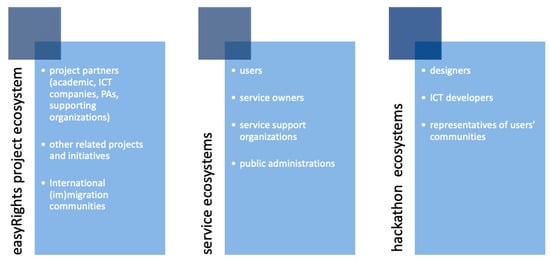

Each ecosystem includes different individual actors and organizations (public or not) and targets a wider portion of civil society, surely involving components of it in different manners. In each of the activated Quadruple Helix ecosystems (Figure 5), the learning processes will start from a transitional perspective including individuals, institutional/organizational structures, and, over a long time horizon, the societal scale (see again Figure 2).

Figure 5.

The easyRights Quadruple Helix ecosystems.

4. Targeting Learning in the easyRights Project

4.1. Inter-Organizational Learning as Value-Production Chain

As already underlined, learning is widely considered a key to knowledge generation and is often a purposeful goal of collaboration [34]. More and more, even in the business world, which traditionally shows resistance to collaboration, we witness a learning orientation in collaborative efforts. This is often defined as the development of new knowledge with the potential to influence behavior through its values and beliefs within the culture of an organization [35]. Paladino [36] emphasizes that it is crucial for organizations to learn by the continuous renewal of operations, processes, and resources, that is, learning by reflecting on values underlying visions and actions adopted by the organization (i.e., reflecting upon the what/how/why).

Triple-Loop Learning is stimulated in easyRights by creating conditions for inter-organizational collaboration and inter-organizational thinking. In particular, inter-organizational thinking is targeted to guarantee that the project contributes to the transition toward a more inclusive society. Collaboration takes place between different organizations at different stages of project activities. This creates an extended and networked learning process where feedback between different actors and collaboration dynamics involves the mobilization of, and the reflection on, a complex system of values related to the targeted vision, that of a “more inclusive society”. The outcomes of learning will be enhanced capabilities for managing change and making decisions [37].

Change (systemic change in this respect) is value-driven, and the values that the project will mobilize are those in operation in a more inclusive society. By approaching services as interfaces between migrants and their rights, easyRights activates higher-level reflections on the integration of ethnic minorities in their hosting contexts. For instance, services represent the lens through which to observe “values at work”: the right to have a recognized identity, the right to escape from violence and torture, the right to stay with one’s own family, the right to have a job, the right to have a safe life, etc. These rights should be (and often are) guaranteed by easy and accessible services that operationalize collective, human values for social integration. The easyRights project reinforces such values by considering that the easier the access to these services is for migrants, the more operational these values become in making social inclusion achievable.

Lepak, Smith, and Taylor [38] consider value creation as having three different scales: individual, organizational, and societal; they look at the three scales from inside the organization or in relation to it. In particular, “value creation at the individual level involves creativity and job performance, at the organizational level, it may mean innovation and knowledge creation, and at the societal level, it may involve firm-level innovation and entrepreneurship, as well as policies and incentives for entrepreneurship” [38] (p. 187).

In easyRights, entering the service environments to ignite a reflection on the level at which a certain service meets the migrants’ needs implies locating value creation at least at the organizational and societal levels. This takes into account the possible revision of involved entities to achieve a wider, more systemic change of the service ecosystem and infrastructure [39]. This is the mechanism for systemic change toward inclusion that easyRights implements. In doing so, actors and institutions involved in the identified Quadruple Helix communities also collaborate to reinforce or develop the results of one another and to make the services more accessible and the entire community more inclusive.

4.2. The easyRights Methodological Framework and Its “How” Perspective in Triple-Loop Learning

4.2.1. Service Design as Value-Driven Learning Approach (Section 3.2 is Part of the Deliverable 5.1 of the easyRights Project)

The easyRights project looks at services as interfaces between the culture of public organizations and the citizens in general, in particular the migrants. The project is based on the assumption that a direct connection exists between the level of accessibility and usability of public services and the operational capacity of local public administration to be friendly and inclusive to migrants. To implement this assumption, easyRights introduces service design as a human-centered design approach to release services centered on the needs of their users in the context of the project and also to support migrants in interacting with local bureaucracy and therefore fully exercising their rights.

The capacity of the easyRights service ecosystems to design and deliver more usable and accessible services for the migrants strongly affects the possibility to fully develop their rights toward powerful citizenships and to put in place significant “acts of citizenship” [40]. This capacity of the local ecosystems depends on the culture of the actors that populate them with respect to innovation. easyRights aims to promote a cultural transformation of these ecosystems toward the experimentation of a user-centric service culture.

The easyRights pilots are considered context-based experiments. As such, they can be interpreted not only as a means for providing better services but also as vehicles for deeper transformation through the iterative cycles of analysis and synthesis of the co-design process [41]. The project considers these experiments as learning spheres leading to organizational transformations [41,42]. By overlapping the co-design process with Kolb’s [43] experiential learning model, based on four iterative steps (experiencing, reflecting, thinking, and acting), easyRights exploits a design-based learning framework [42,44] for reflective learning already used in other contexts.

However, these processes can often encounter difficulties in achieving the goal of embedding a co-design approach in public sector organizations or in co-designing better services from a user-centered perspective. Often, related practices conflict with the long-established organizational practices and cognitive patterns that shape the organizational culture of the public sector and run in stark contrast with those found in the assembled design teams [45,46,47]. This is related to the low absorptive capacity [48] of these organizations and their need for transformation, which, in short, calls for more knowledge creation and learning.

Co-design, defined as the creativity of designers and people not trained in design working together in the design development process [49], thus represents a powerful means for pushing change in government. The inclusion of a wider set of actors in the design and development of products and services threatens existing power structures by dismissing and going beyond the “expert” mindset. While user-centered design was widespread in the 1990s in consumer product development, it has failed to address the complexity of 21st-century problems, which require a shift to a more egalitarian view based on idea sharing and knowledge holding, seeing users as experts and active co-designers rather than passive participants.

Building on the theoretical framework discussed above, easyRights explores how service co-design can apply new knowledge to organizational change, enhancing the co-creation capacity at both individual and organizational levels. To implement this ambitious plan, the easyRights project conceives the process of service design as a learning framework for all the actors of the service ecosystems. The idea is that by situating the process of user-centered service design in the local ecosystems and by engaging the local network of stakeholders in co-designing the new services, it would be possible to trigger powerful “learning by doing” cycles based on the co-design methodology. This can eventually nurture the development of the user-centered innovation capacity in each of the actors involved.

To operationalize this goal, easyRights is based on the hypothesis that the introduction of a user-centered service design approach should be primarily based on its practice, that is, on a learning-by-doing framework that can be complemented with reflections to achieve a sustainable transformation in the local ecosystem. This is in line not only with generic organizational learning principles [48,50] but also with how service design knowledge and culture are built, which is historically bound to practice. In such a setting, the role of prototypes and context-based experimentations, as the core ingredients of the service design approach, can also be regarded as key for developing co-creation knowledge and for its appropriation by the easyRights local ecosystems. Service design processes are thus seen not only as part of the development process, typically meant to prototype solutions and interactively improve them within a real situation, but also as long-term learning experiences.

4.2.2. Hackathons as a Learning Approach to Include Newcomers in Service Ecosystems

The solutions in easyRights will be developed and deployed through integrating diverse yet crucial competencies coming from three distinct teams and communities: (1) the local authorities and NGOs working with and for the immigrants’ rights at the grassroots level; (2) part of the academic and industrial partners bringing their experiences and expertise in the design and development of ICT solutions and interfaces, with a special focus on Artificial Intelligence and language training systems; (3) another part of the academic partners with distinctive competencies in urban Living Labs, social and policy analysis with the emphasis on integration and migration, service and communication design, and the management of hackathon events.

In easyRights, innovation, service co-design, and co-production are directly linked to the hackathon events (to be) organized in each pilot site. Often, there is the impression that hackathons are somehow restricted to IT-savvy participants—coders and technical developers able to generate self-sustained apps and similar software tools within the narrow time frame of a 2-day round-the-clock marathon. In the easyRights project, the development of tailor-made service innovations is based on a series of multi-stakeholder hackathons organized in the participating pilots and learning co-design environments. Such an approach has been already tested and successfully implemented in Open4Citizens, which is an EU project funded under the CAPS (Community Awareness Platforms) initiative (https://ec.europa.eu/digital-single-market/en/caps-projects, accessed on 11 November 2018).

Focusing on immigrants’ integration will help attract and aggregate very motivated and skilled people (to be looked at as newcomers of the service ecosystem or community of practice) while generating knowledge with special attention to related social matters and therefore mobilizing values. In turn, this will help the migrants (and especially those who participate in the hackathon event) fight against the barriers and cultural resistances they have to face in the hosting countries. In easyRights, the hackathon experience itself is an integration experience whereby migrants interact on a peer basis with coders and other non-IT savvy citizens in the development of solutions that eventually achieve public service transformation.

Moreover, hackathon events are important drivers for the creation of ecosystems of Quadruple Helix actors that allow effective alignment dynamics to emerge, which are productive for further inter-institutional collaboration and the consolidation of strategic alliances in the local contexts. The continuous involvement of the representatives of user communities at all three stages of the hackathon organization process, which we label the “pre-hack”, “hack”, and “post-hack” phases, creates a socio-digital innovation environment based on multi-stakeholder partnerships, as a derivative of the Living Lab approach. This effectively involves target users in the co-creation and co-production of new or reformed public services and infrastructures. The essence of a Living Lab partnership is to involve actors from the Quadruple Helix. The underlying concept is to adopt and apply citizen-centric and participatory methods to the co-design and co-experimentation of innovative urban services together with their prospective beneficiaries.

4.2.3. Interactions through Learning Dialogues

To support Triple-Loop Learning, easyRights uses an open multi-method approach to facilitate interaction and therefore the creation of a collaborative way to work. Coherently with the analyses of human activity systems [51], this approach is aimed at integrating the social dimension of the constitutive dynamics of collective action [52]. Learning in communities is expected to be enabled when members interact by reflecting critically and communicating about their reflections, thus scaling the individual critical reflection up to a collective social level [53].

Section 4.2.1 and Section 4.2.2 explained the two key methodological approaches adopted by easyRights to stimulate Triple-Loop Learning by creating conditions for reflections at the individual, institutional, and societal levels. Although co-design and hackathon practices and methodologies supply several suggestions for managing interactions, clear details are missing as to the way they can become productive for knowledge production and inter-organizational learning.

Peter Senge, in his book The Fifth Discipline, identifies tools for dialogue that widen the effectiveness of collective action, stating that, “In dialogue, individuals gain insights that could not be achieved individually. A new kind of mind begins to come into being which is based on the development of common meaning. (…) People are no longer primarily in opposition, nor can they be said to be interacting, rather they are participating in this pool of common meaning, which is capable of constant development and change” [50] (p. 224). Dialogues are widely considered a strategic means for interactive processes aiming at long-lasting systemic changes. They enable ideas to be generated, visions to be explored and developed, and strategic thinking to take place. According to Buchanan and Dawson, dialogues help make sense of complex change processes, as they open established assumptions, question the validity of single claims to “truth”, and highlight the multi-story nature of change processes [54].

For easyRights to support learning during interaction activities (be they co-design workshops, hackathon group work, or interviews), dialogues will be structured according to five of the seven dimensions of the Critically Reflective Work Behavior (CRWB) framework [55,56,57]. The CRWB framework has been developed for the observation of organizational learning, especially in business organizations. It considers that critical reflection is not a hidden process occurring in individual minds; rather, it is a behavior within a group as a result of individual thinking associated with social interaction. The CRWB framework proposes seven dimensions for such observation. However, in easyRights, we first adopt five of them (Table 1), as they lend themselves better to a prescriptive interpretation and can be transformed into guiding principles for supporting dialogues. Given the project focus on work-related learning in organizations, not all seven dimensions appear suitable for such a use. The two dimensions not adopted by easyRights are “career awareness” and “reflective working”: the first mainly applies to a closed, competitive organizational context, while the second relates more to the individual sphere in the work environment and is therefore not suitable to guide interactive dialogues. The five dimensions adopted by easyRights are challenging groupthink, critical opinion sharing, openness about mistakes, asking for feedback, and experimentation, as suggested by de Groot et al. [58].

Table 1.

Critically Reflective Work Behavior (CRWB)-driven guiding principles for learning dialogues (adapted from [57]).

Coherently with the CRWB framework, the learning dialogues in easyRights are operationally aimed at considering the points of view of the other people and their diversities, widening the vision of the world, and overcoming the “individual” toward the “collective”, thus supporting the creation of the Quadruple Helix communities. These guidelines will be adopted for interaction in all project activities.

5. Applying the Evaluation Framework to the easyRights Project

5.1. Evaluation for Collaborative and Critical Reflections

Both theory and experience confirm that transitions are enabled by context-dependent feedback processes that affect the system over time [65]. For the easyRights project, this implies that an assessment framework is necessary to guarantee continuous feedback provision from the local systems (pilots) to the project system and vice versa. Feedback provision is a fundamental mechanism for learning that is also highlighted at the micro-scale of learning dialogues, as well as of innovation development, which is typical for the Quadruple Helix ecosystem and Living Labs approach that will characterize the pilots’ implementation.

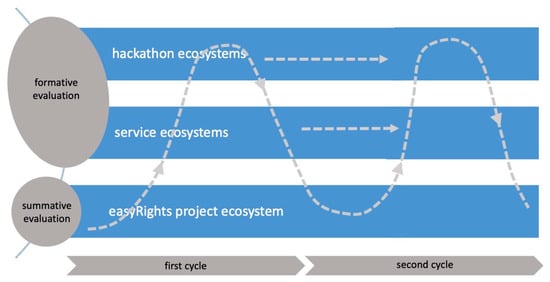

Coherently with the distinction between summative and formative evaluation [26,27] described in Section 2.3, learning will be further supported and pushed by evaluation in each of the Quadruple Helix ecosystems (namely the easyRights project ecosystem, the service ecosystems, and the hackathon ecosystem) by exploring in detail the what/how/why triplet. In easyRights, evaluation can be considered the additional methodological approach to push learning and will consider the three Quadruple Helix ecosystem categories as proper environments. The easyRights project ecosystem will be the environment for summative evaluation, and the hackathon and service ecosystems will be appropriate for formative evaluation (Figure 6).

Figure 6.

The evaluation process through the Quadruple Helix ecosystems activities.

In this perspective, the evaluation framework of the easyRights project will be strictly focused on the implementation of the what/how/why key Triple-Loop Learning concepts at both the formative and summative levels.

5.2. Key Learning Drivers in the easyRights Project

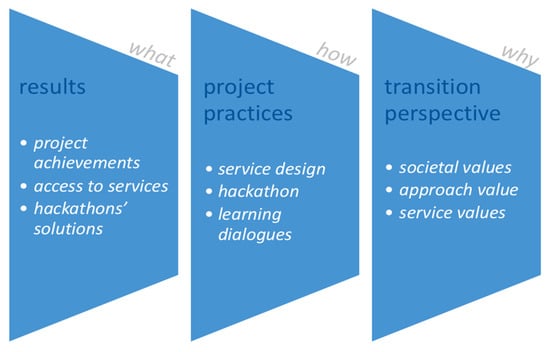

Coherently with the framework described above about the role of evaluation in easyRights, it is possible to identify three key learning drivers, objects for reflection to be activated in the different Quadruple Helix ecosystems of the project. These are the complex objects on which the evaluation plan will focus. They are spheres for reflections that the evaluation framework can target to support learning for and from the project: (1) the results; (2) the project key methodological approaches (here referred to as practices); and (3) the transition perspective. These key learning drivers are explained, one by one, in the following paragraphs.

The first learning driver, the results, is a synthesis of the “what” each Quadruple Helix ecosystem category should reflect on to activate first loop learning. Key questions for the “what” are: Is the “what” properly done? Is it the best “what” possible? Such questions will be investigated and explored through evaluation within the three Quadruple Helix ecosystems: respectively, the easyRights project, the service, and the hackathon ecosystems. The results will thus represent the key bridge between the evaluation and the Quadruple Helix ecosystems (see Figure 7).

Figure 7.

Three learning drivers for the easyRights evaluation framework.

The reflections at the level of the easyRights project ecosystem (the summative level) are mainly oriented toward project achievements and outcomes. In this respect, the monitoring and evaluation of the project’s progress will be crucial for the revision or modification of its trajectories while the project itself is still in progress. Coherently, the evaluation at this level will support a reflection on the project’s performances following the entire duration of the project with the focus on identifying the effectiveness of its implementation and providing a means of accountability in assessing the extent to which the project is moving toward the call expectations. Key objects for critical reflections at this level are (a) project adherence to the call expectations, (b) effectiveness of project activities, (c) lessons learned from the project, and (d) strengths and weaknesses in the project implementation.

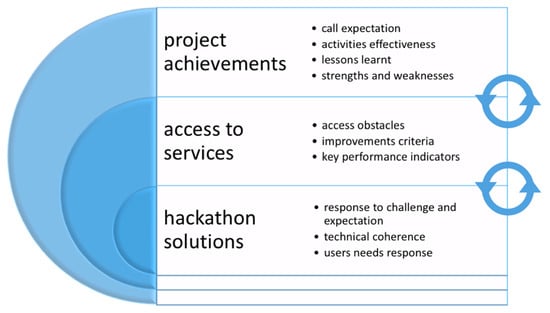

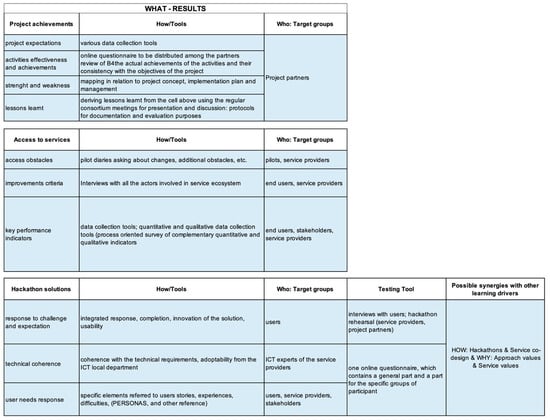

At the formative level, the “what” reflection aims to gain feedback about the effectiveness of the work at the pilot level within the service and hackathon Quadruple Helix ecosystems, with the explicit goal of enhancing and improving pilot implementation during the project time and along the two cycles foreseen. For the service Quadruple Helix ecosystems, the “what” focus will be on access to service and will deal with service access obstacles, improvement criteria, and related key performance indicators. For the hackathon Quadruple Helix ecosystems, the “what” exploration will focus on the response to the hackathon challenge, the technical coherence of the developed solutions, and their ability to respond to users’ needs (Figure 8).

Figure 8.

The results (what) learning driver for the easyRights evaluation framework.

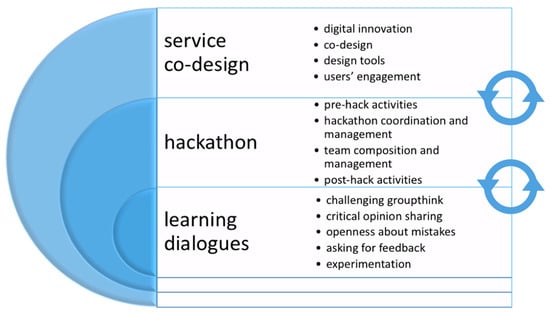

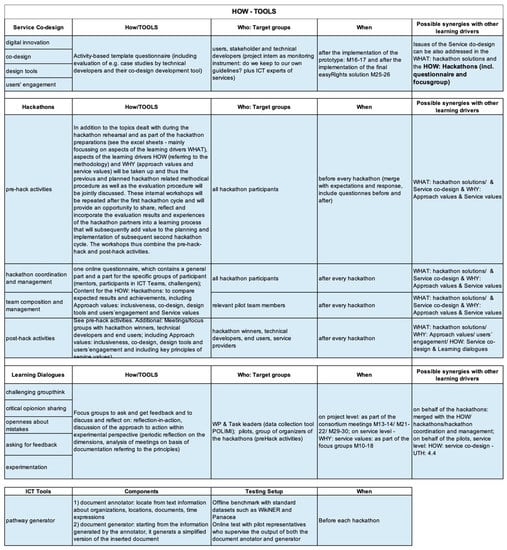

easyRights focuses on three different methodological approaches (here referred to as practices) that the project will use and consolidate. These approaches form the learning driver related to the “how”; to reflect on the “how”, the questions to be explored are as follows: Is the way we work the right one? Do we work in the best possible manner? The reflections on the “how” will target the three methodological approaches in easyRights: the service design, the hackathons, and the learning dialogues.

Service design represents the key methodological approach for the innovation of services for migrants, as underlined in easyRights. The services are considered the ignition means to a higher level of innovation, eventually affecting the organizations supplying them and activating reflections on the normative and regulative framework related to them. Thanks to co-design tools and methodologies, at the pilot level, the service design approach enables migrants (end-users) and the wide set of supporting organizations (volunteers, NGOs, offices, etc.) to come closer to the services and to become quasi-owners of the services in a co-production perspective.

Service design is strongly related to the hackathon practice. The way easyRights approaches this practice is fully coherent with the service co-production perspective just described. In fact, hackathons are not meant to be exclusively reserved for ICT developers and experts; they are rather intended to be open to the entire service ecosystem (including end-users, supporting organizations, service providers, etc.) so that the generated solutions are easier to adopt and put into use.

Finally, the third methodological approach is the learning dialogues practice and is related to the exploratory and reflective atmosphere that will be created by the project whenever interactions occur: from co-design workshops to interviews, from pilot alignment meetings to project meetings.

For the three methodological approaches, the evaluation activity will develop dedicated tools/instruments to explore responses to the “how” questions and (possibly) activate the second loop learning process (see Figure 9).

Figure 9.

Project practices (how) as a learning driver.

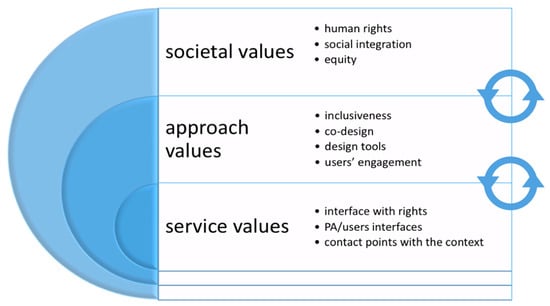

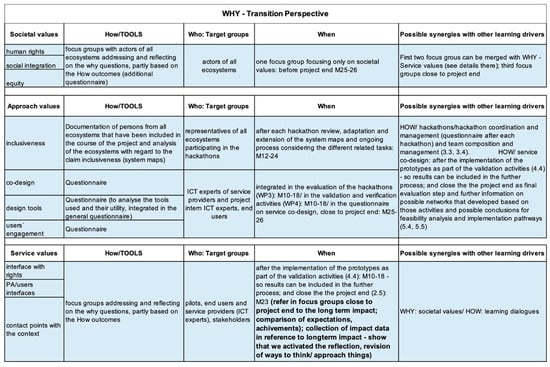

The third learning driver is strictly related to the transition perspective. Dealing with migrants and the dimensions that the migration phenomenon is assuming in contemporary times requires the introduction of a systemic change perspective (i.e., a socio-technical transition tension). Here, the reflection focus is on the “why”: the need for effective inclusion and human rights guarantees makes explicit the driving values of the project. The questions looking for answers here are as follows: Why are we making such an effort? What are the long-lasting changes we all desire and share?

Values play a key role in reflecting on the “why”. At a societal level, values activate strategic reflections related to human rights, social integration, and equity. Values at this level are mobilized by visions, that is, images of a more inclusive and just future orienting the final aims of the project activities, especially those carried out at the level of policy-making and public debate. The easyRights methodological approach represents a value per se, as it is strongly oriented to guarantee a large involvement of the service ecosystem and, in doing so, makes values the drivers of a collective learning environment. This has a transitional potential, as it allows the vision to guide the decisions and the discussions among the many actors involved in the service innovation work. Finally, there is the service value; easyRights does not look at services in a limited operational or functional manner. Services in easyRights are intended as interfaces between migrants and their rights, between users and institutions, as well as contact points between people (with a specific emphasis on immigrants) and their new context (Figure 10).

Figure 10.

Transition perspective (why) as a learning driver.

The overall evaluation approach of the easyRights project takes up previous statements in several respects: all three mentioned levels, as well as their interaction processes, are a continuous and constituent part of the evaluation of individual actors’ project activities as well as of the actor-specific and project-related objectives. In the sense of a context-sensitive bottom–up approach, all actors are involved in feedback and learning loops in a targeted and proactive manner, e.g., as part of the presentations and discussions of (interim) project results and of feedback provision between the ecosystems.

6. Toward the easyRights Evaluation Toolbox

6.1. Mapping Evaluation Processes Toward a Shared Vision on Learning Drivers

Understanding the easyRights evaluation framework and its tools as a synergy between evaluation and the Triple-Loop Learning mechanism requires the involvement of all the actors within the three different Quadruple Helix ecosystems. The complex and multi-level co-creative network of these ecosystems and the interaction among the actors play a significant role in the development of the evaluation instruments as well as in their implementation. Such a process requires additional resources and a deeper examination of the evaluation (in the sense of activating learning processes) by the persons involved. To ensure this and to build collaborative teams that can contribute the relevant expertise and experience and continuously support a learning and feedback process internally and across ecosystems, a common understanding of and agreement on the objectives and the added value of the joint process have to be reached.

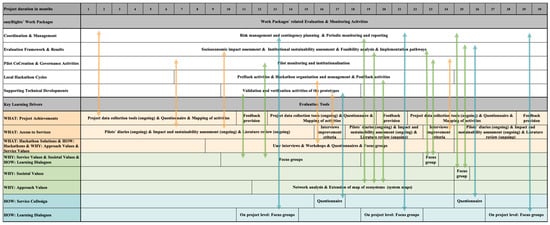

Due to the complex and multi-layered structure of the easyRights project, with a large number of connected and simultaneous tasks, the first stage of the evaluation framework design consisted of the creation of an overview and mapping of all evaluation and monitoring activities. Later on, the contents of the tasks were specified and directly related to the learning dimensions to define the appropriate evaluation tools in coordination with the work package and task leaders. This overview served several purposes, including a clear understanding of the following (see Figure A1 in Appendix A):

- Links between the different evaluation tasks in the course of the project and thus the need to bring together different actors and expertise for different evaluation activities;

- The key learning drivers (related to the triplet what/how/why), which are addressed as part of different evaluation activities and in varying combinations, thus influencing the choice of specific evaluation tools. These decisions have to be made in a common process and under consideration of the (project) objectives and context;

- The concrete time frame in relation to the project activities and milestones to be evaluated in the pre-, peri-, and post-sequence;

- Evaluation results and related discussions, feedback, and learning processes, which activate dynamics and exchange between the three ecosystems (easyRights ecosystem, hackathon ecosystems, and service ecosystems) and will influence and shape further project activities.
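The overview described in the list above can be pictured as a small data structure. The following is a minimal sketch in Python and not the project's actual tooling: the three learning drivers, the three ecosystems, and the pre/peri/post sequence come from the text, whereas the activity names, the work package labels (`WP-A`, `WP-B`, `WP-C`), and the helper functions are hypothetical and serve only as illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# Vocabulary taken from the text; everything else below is illustrative.
DRIVERS = frozenset({"what", "how", "why"})
ECOSYSTEMS = frozenset({"project", "service", "hackathon"})
PHASES = ("pre", "peri", "post")


@dataclass(frozen=True)
class EvaluationActivity:
    name: str              # hypothetical activity label
    work_package: str      # hypothetical work package label
    drivers: frozenset     # learning drivers the activity addresses
    ecosystems: frozenset  # ecosystems whose actors are involved
    phase: str             # position in the pre/peri/post sequence

    def __post_init__(self):
        # Reject entries that fall outside the agreed vocabulary.
        if not self.drivers or not self.drivers <= DRIVERS:
            raise ValueError(f"invalid drivers: {sorted(self.drivers)}")
        if not self.ecosystems or not self.ecosystems <= ECOSYSTEMS:
            raise ValueError(f"invalid ecosystems: {sorted(self.ecosystems)}")
        if self.phase not in PHASES:
            raise ValueError(f"invalid phase: {self.phase}")


def activities_by_driver(activities):
    """Group activity names under each learning driver they address."""
    index = defaultdict(list)
    for activity in activities:
        for driver in sorted(activity.drivers):
            index[driver].append(activity.name)
    return dict(index)


def cross_ecosystem(activities):
    """Names of activities that bring more than one ecosystem together."""
    return [a.name for a in activities if len(a.ecosystems) > 1]


# Hypothetical entries, for illustration only.
ACTIVITIES = [
    EvaluationActivity("pilot interviews", "WP-A",
                       frozenset({"what", "how"}), frozenset({"service"}), "pre"),
    EvaluationActivity("hackathon feedback workshop", "WP-B",
                       frozenset({"how", "why"}),
                       frozenset({"hackathon", "service"}), "peri"),
    EvaluationActivity("final review", "WP-C",
                       frozenset({"what"}), frozenset({"project"}), "post"),
]
```

Grouping by driver shows which activities jointly inform a given reflection, while the cross-ecosystem filter highlights where actors from different ecosystems need to be brought together. Both views are simplifications of the mapping discussed here.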

As shown in Figure A1 in Appendix A, the easyRights evaluation framework is characterized by varying combinations of evaluation tools associated with one or more learning drivers and also related to different work packages. The evaluation tools for reflecting on the learning driver “what” (the results), as a synthesis of the “what” of each Quadruple Helix ecosystem category, are seen as triggers for the activation of the first learning loop. In turn, the resulting synergies of the ecosystems represent a central starting point for reflections on the learning driver “how” in connection with the “what”. The predominantly qualitative and semi-quantitative evaluation activities in connection with the learning driver “why” stand out to a particularly high degree as a connecting element between the ecosystems and the project activities. The reflection on the central values of the easyRights project in relation to societal values, the methodological approaches (approach values) of the project, and the view of services beyond their functionality (service values) shall mobilize visions and initiate the transition.

The first draft of the evaluation framework was discussed, adapted, and expanded together with possible associated tools in several meetings with project participants and the responsible task leaders. The task leaders are representatives of different work packages in the easyRights project. They are actors of the project ecosystem and have crucial functions in the interlinkage with the service ecosystems and hackathon ecosystems by pushing the establishment of complex relations and a learning network between the different Quadruple Helix ecosystems. The implementation of the evaluation activities, the discussion of (interim) evaluation results, and conclusions based on feedback and learning loops are for them also supportive tools in the process of creating a larger Quadruple Helix community, consisting of all ecosystems (see Appendix B, Figure A2, Figure A3 and Figure A4).

6.2. The Definition of Context-Embedded Indicators and Evaluation Tools

The assessment of the context at the micro-level (individual), meso-level (institutional/organizational), and macro-level (societal) plays an important role in developing and exploiting successful ICT solutions as well as in identifying relevant indicators and evaluation tools. The definition of the micro-, meso-, and macro-levels of the evaluation process and context assessment corresponds to the composition of the actors of the three ecosystems as well as to the learning processes that involve all actors and initiate a transitional perspective at the individual (micro-) and institutional/organizational (meso-) levels, which continue in a long-term perspective at the societal (macro-) level (see also Figure 3 and Figure 5). In the literature, there is an increasing number of examples, often from the practice of ICT projects, that emphasize the importance of contextual embedding and comprise historical, organizational, economic, infrastructural, and political factors (e.g., [66,67,68,69]) up to the needs, characteristics, and social norms of the prospective end-users (e.g., [70,71,72]).

In the easyRights project, context assessment related to the personalization and contextualization of the ICT solutions takes place in the service and hackathon ecosystems in different project stages: before, during, and after the implementation of the ICT project solutions by the pilot communities. This assessment is an ongoing task including qualitative interviews with the prospective end-users, stakeholders, focus groups, questionnaires, and the exchange with project-external experts on migration, integration, and legal issues. Of course, this requires continuous reflection on the learning driver “how” and whether the methodological approaches and associated values of the easyRights project are consistently pursued and implemented. The central milestone in reviewing the achievement of the targeted personalization and contextualization level is the validation and verification process of the prototypes. On the level of “what”, the evaluation process of the prototypes allows a first assessment of the targeted achievements on the project level, concerning the access to the services (based on the statements of the test users and prospective service providers) and the targeted hackathon solutions. As a result of this micro-level evaluation, the ICT-related process can also become the point of reference for the definition of indicators, evaluation tools, and the implementation of the evaluation.

The definition of indicators and their operationalization lies in a field of tension between two demands. On the one hand, there is the demand (i) for quantifiability, verifiability, and standardization to ensure comparability and to serve the project objective of identifying alternative pathways for future implementation of project solutions on a broader scale. This comprises upscaling (namely, the replication of project methods, tools, and solutions at a broader territorial or community level) and outscaling, or the successful transfer/reuse in contexts different from those of the project pilots. On the other hand, there is the qualitative need (ii) to contextualize the indicators to a high degree to ensure their relevance at the local level [73,74,75]. The combination of local and global indicators should also counteract the disadvantages of the individual approaches: top–down indicator sets support comparability but do not consider the community-specific context, while bottom–up assessment approaches that are selected by and are meant for the community might be subjective and require interpretation and translation to achieve comparability [76]. For a forward-looking longitudinal perspective and the identification of indicators relevant for sustainability, the macro-, meso-, and micro-levels have to be considered, focusing on the importance of the micro-level: “(...), to be successful and sustainable, it is at the micro-level that sustainable ICT infrastructures should have the most pronounced impacts on the lives of individuals, businesses and communities. Hence, the participatory nature of the proposed evaluation method.” [77] (p. 1).

Following a bottom–up approach and to account for the multi-layered context, the first definition of key performance indicators for the impact assessment of the easyRights pilots draws on the material gathered up to this point through the exchange with, and the data assessment in, the service Quadruple Helix ecosystems. The key performance indicators represent reference points for the achievement of two dimensions of the learning driver “what”, namely (i) project achievements and (ii) access to services, which are subsequently crucial to the targeted project impact and sustainability.

The indicators were further discussed and confirmed with the pilot representatives concerning the accessibility of the required data and the associated process. However, it is necessary to keep in mind that the relevance of indicators might be time-bound and that contexts change; from this point of view, impact evaluation has to be regarded as tentative [5]. In that sense, the definition of indicators is an ongoing collaborative process crucial to the reflection and assessment of the project impacts, as well as the associated learning and adaptation processes.

As the evaluation claim of the easyRights project goes beyond an indicator-based evaluation and is also to be seen coherently with Triple-Loop Learning mechanisms, a comprehensive mixed-methods approach is adopted that combines quantitative, semi-quantitative, and qualitative methods. Such an alignment, which encompasses a variety of methodological approaches, is in line with the need to evaluate the achievement of objectives (learning driver “what”) and also with a consistent implementation and integration of the dimensions of the learning driver “how” into the evaluation framework, such as the learning dialogues based on the CRWB framework and co-creation principles. The choice, combination, and temporal sequence of the application of the tools were discussed in the previously mentioned process of mapping the evaluation processes. In this process, the design and combination of the respective tools were further defined with a special focus on the requirements of the respective context. The persons responsible for the implementation were identified, and their tasks in connection with the project timeline, existing resources, contact persons, and requirements were specified.

As shown in Figure A1 in Appendix A, the complementary design and implementation of qualitative and quantitative evaluation tools in the different work packages corresponds to a partially mixed methods design: quantitative and qualitative methods are implemented in different stages of the work packages (sequentially or concurrently) [78]. The mix of methods represents a combination of different types of research impact evaluations used in formative and/or summative mode and comprises social network analysis and system mapping (systems analysis methods); interviews and focus groups (textual, oral, and arts-based methods); theory of change and logical framework analysis (indicator-based approaches); and narrative synthesis and systematic reviews (evidence synthesis approaches) [5]. The results of work-related evaluation activities are combined in the data interpretation stage and as part of the formative feedback, learning, and reporting processes. During the design and implementation of the evaluation activities, the composition of the project-internal responsible persons and the external participants varies, since the outcomes of qualitative evaluation tools, especially those with a focus on the learning driver “why”, can span several work packages of the project and be included in their further design and process. The integration of the value-based learning driver “why” and the orientation toward action and process are dimensions of a transformative design, as defined by Greene and Caracelli: “Designs are transformative in that they offer opportunities for reconfiguring the dialog across ideological differences and, thus, they have the potential to restructure the evaluation context” [79] (p. 1).

However, feedback and learning mechanisms are anchored not only in the context of reporting but also in qualitative measures such as focus groups, interviews, workshops, and diaries of the pilots, which bring stakeholders from all ecosystems into an exchange in variable settings and as contributors to different work packages. They are likewise anchored in the quantitative evaluation tools, which continue and build on qualitative evaluation results to integrate and evaluate further learning and project processes. Thus, work package-related qualitative and quantitative evaluation tools are not to be considered in a work package-limited framework but rather link the different project activities and stages with each other. The mixed methods design of the easyRights evaluation framework takes up this cross-referencing and interaction between the different work packages of the project. Hence, the easyRights evaluation framework corresponds to a fully mixed methods design, taking into account the following [78]: the research objectives include quantitative and qualitative objectives, such as exploration (e.g., mapping of actors and context, learning and transformative processes), as well as feasibility analyses and implementation pathways; quantitative and qualitative survey and analysis methods are combined; and the analysis results are processed and synthesized into conclusions.

6.3. Integration of Results Triggering Learning Mechanism

The analysis of the different evaluation activities and the triangulation of data are an ongoing process. On the one hand, the results are presented and discussed in appropriate qualitative formats with the involvement of external actors from all Quadruple Helix ecosystems. On the other hand, evaluation measures are systematically incorporated into the project ecosystems, contributing to the conclusions on the achievement of objectives, the further course of the project, and further analysis of the scalability and sustainability of the project’s methodological approach and outcomes.

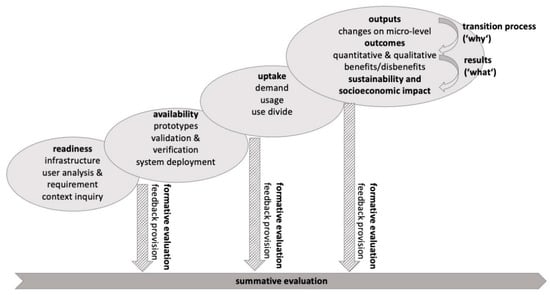

ICT development processes are usually thought of as linear. However, the key learning drivers and the associated reflection and communication processes of the actors of the easyRights Quadruple Helix ecosystems cannot be assigned to a specific project (time) phase; they are dynamic, continuous, and repetitive processes. In Heeks and Alemayehu’s terms, the ICT-related achievements are milestones, namely readiness, availability, uptake, and impact [80], which are used as reference points for the timing of the formative evaluation activities at different stages of the project. The formative evaluation contributes to the summative evaluation by ensuring that feedback on the general project outcomes and performance is provided at each iteration. This approach supports the reciprocal transfer, recording, and integration of experiences and knowledge and creates a joint effort of problem-solving and learning processes [81] across the easyRights project Quadruple Helix ecosystems (Figure 11).

Figure 11.

The contribution of formative evaluations to summative evaluation in the easyRights project, based on the evaluation process adapted from Heeks and Alemayehu [80].

As the figure above shows, the visualization as a value chain of impact assessment referring to outputs (changes on the micro-level associated with the easyRights ICT solutions), outcomes (benefits associated with the easyRights project), and impacts (the contribution of the easyRights project solutions to the defined project goals) is useful for the understanding of the evaluation process and interlinked feedback and learning activities.

The successful transition from the ICT development stage of readiness to that of availability represents an essential step for the easyRights project achievements (“what”), which has a strong influence on the further course of the project. E-readiness assessment typically measures the prerequisites for any ICT initiative, e.g., the presence of ICT infrastructure (access) and ICT skills of the target communities (use), as well as drivers and demand analysis [80,82]. Furthermore, strategically linked, project-specific inputs in terms of money, labor, values, and political support are components of readiness [80]. The context inquiry, which is part of readiness, has already been described above in the setting of the easyRights project. The release and testing of prototypes mark the phase of availability; therefore, the first feedback round including actors from all ecosystems takes place when the prototype delivery is in progress and the first hackathons are completed. In the following project sequence, eight assistants (two services per pilot) are integrated into public services, the hackathons are continued, and the final system deployment takes place. After the final system deployment, the second iteration of feedback provision is implemented from the local systems (pilots) to the project system and vice versa to push learning.

The uptake and impact sequences start as soon as the easyRights project solutions (eight assistants) are integrated into the services of the pilot cities, and they depend on the readiness and availability targets having been achieved. In those project stages, assessment typically measures the extent to which the project’s ICT solutions are being used by its target groups and their impact on the communities [80]. The uptake assessment also allows us to draw initial conclusions as to the extent to which the easyRights project solutions and their availability are known within the target groups and meet their needs. The easyRights impact analysis focuses on the socio-economic impact on the pilots’ communities and the organization of public administration. Only this sequence focuses on the assessment of the project’s impact on the direct (migrants, communities) and indirect target groups (public administration and NGOs).

A broader assessment approach supports further conclusions—based on analysis of the project impact—on the sustainability and the reusability and scalability of project methods and solutions. The assessment of those dimensions is already considered starting from the uptake [80], and it is further supplemented by the four evaluation results of new contextualization of the eight assistants. It already includes the results of impact assessment and contributes to reflections on the implementation of project solutions on a broader scale and sustainability planning.

The pilot activities and related ICT development achievements are reference points for learning actions and the development of learning networks of the three ecosystems. The knowledge produced, ongoing feedback, and negotiations between knowledge producers of the Quadruple Helix ecosystems are central [33] to supporting the dynamics between the actors for the further implementation of inter-institutional strategic alliances and cooperation in the sense of the easyRights project. The continuous provision of feedback contributes to the creation of a framework for inter-organizational collaboration and inter-organizational thinking among actors of all ecosystems in different project stages and therefore ensures the mobilization of, and the reflection on, a complex system of values contributing to the transition toward a more inclusive society. The design of the settings (the composition of actors and location), the presentation of (interim) results, and guided discussion and feedback provision (bottom–up, interactive, and discursive according to the learning dialogues principles) are in many ways essential instruments for the co-creative approach of the project and the interplay of formative and summative evaluation, as well as a push factor to support learning and reflection mechanisms in the sense of Triple-Loop Learning:

- Content-related impulses and reflections on (interim) project results and methods contribute directly to the design and content of communication and interaction within the service ecosystems and thus have an activating effect;

- Evaluation results and the associated discussions influence the design and methodological implementation in the easyRights project;

- Reflection and learning processes of the different actors of the Quadruple Helix community on the project progress and impact can be documented, and associated learning processes can be traced.

The three interrelated easyRights key learning drivers, which are also objects of reflection in the three ecosystem categories of the easyRights project, are the results linked to the what, the practice related to the how, and the transition perspective referring to the why. The three key learning drivers and the interlinked contents and questions to be reflected on as part of the formative evaluation are meant to influence the achievement of objectives, the methodological implementation, and the project impact, and therefore the summative evaluation. The context inquiry and the assessment methods chosen for it, the evaluation activities on the progress and achievements at pilot level, and the pre-hack activities prepare the ground for the activation of the first-loop learning mechanism and the resulting learning cycles in the three project ecosystem categories.

7. Conclusions

When it occurs, Triple-Loop Learning takes place in different dimensions and at different levels of complex systems of communities and actors. Triple-Loop Learning helps to reflect on “what we learned”, “how we learned”, and “why we learned”. This learning process has, according to several authors [10,11], a transitional power at different levels of socio-technical ecosystems. This paper proposes a methodological model for the evaluation of complex projects to exploit such potentials; it discusses the Triple-Loop Learning mechanisms in relation to Quadruple Helix ecosystems, and it introduces a conceptual framework reflecting on societal value creation by looking at learning as a mechanism to feed the value production chain in complex multi-actor environments.

The study identifies three main learning drivers for the easyRights project, adopted as a test-bed for the developed evaluation framework: (a) results, synthesized from what we learned; (b) project practice, as to how we learned through a service co-design approach, hackathons, and learning dialogue; and (c) transition perspective, synthesized from why we learned as mobilizing the societal, approach, and service values. These three learning drivers can be implemented in a manner that makes the evaluation activities a driver of learning.

The presented evaluation framework, its application to the easyRights project, and the associated evaluation toolbox aim to assess tangible and intangible project impacts, including a variety of perspectives and actors, interlinking them and pushing a joint learning experience. Through a participatory co-creational setting, the described approach meets the need for reflexive evaluation, enabling a process of parallel learning and intervention and, hopefully, a social inclusion transition as relevant for the sustainability transition [83,84].

At the level of the easyRights project, the paper discusses a timeline and a composition of the evaluation instruments in the manner of a bottom–up mixed-methods design, which records the activities of the three ecosystems in different project phases, includes network actors of the different ecosystems, and places them in an ongoing process-oriented (learning) dialogue. Therefore, the development and implementation of the easyRights evaluation tools support the alignment of processes going on in the ecosystems, the development of a value-related shared vision and synergies, as well as a learning process and collective actions that form the basis for sustainable transition pushed by the Triple-Loop Learning mechanism.

Moreover, we envision two possible limitations of our proposed methodological framework for complex project evaluation. One major limitation relates to the evaluation of a complex project as a “project in a project”. Often, complex projects with multi-level ecosystems require high managerial efforts in handling complexities while also proposing to engage project actors in learning processes. This implies additional complexity and challenge for an evaluation framework that is also oriented to collective learning. The second limitation is hidden in the way we look at complex projects as including defined goals, a methodological approach, and clear and specific expected results and outcomes. We consider these essential elements as already existing at the beginning of the project implementation and thus not depending on any of the evaluation outcomes.

Finally, we conclude that targeting the innovation of services for migrants can be a pathway to explore what, how, and why we want to evaluate; by reflecting on these questions, we will eventually be able to activate a Triple-Loop Learning mechanism. By exploring the opportunities offered by, and the chance to operationalize, Triple-Loop Learning, the easyRights project highlights the potential of nurturing a wider, arguably neglected as yet, learning space for understanding, engaging, and transforming real contexts toward transition.

Author Contributions

Conceptualization, G.C. and M.K.; methodology, G.C. and M.K.; investigation, G.C., M.K. and L.R.; writing—original draft preparation, G.C. and M.K.; writing—review and editing, G.C., M.K. and L.R.; visualization, G.C., M.K. and L.R.; supervision, G.C.; project administration, G.C.; M.K. All authors have read and agreed to the published version of the manuscript.

Funding

The easyRights project is funded by EU Horizon 2020, grant number 870980. However, the opinions expressed herewith are solely of the authors and do not necessarily reflect the point of view of any EU institution.

Acknowledgments

The authors would like to express their gratitude to Francesco Molinari and Maria Cerreta for their comments on early drafts of the present manuscript. Finally, the authors are thankful to the reviewers for their rich and inspiring suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

(Temporary) illustration of work package-associated tasks, evaluation activities, and the three addressed learning drivers as What (results), How (project practices), and Why (transition perspective).

Appendix B

The three figures in this appendix show the easyRights evaluation toolbox within the shared vision of the three learning drivers applied (what, how, why). Each figure summarizes the list of tools, the target groups, the time to use the specific tools according to the duration of the project, and the possible synergies with other learning drivers.

Figure A2.

Toolbox related to the “what” (results) dimension.

Figure A3.

Toolbox related to the “how” (practices) dimension.

Figure A4.

Toolbox related to the “why” (transition perspective) dimension.

References

- Rowe, G.; Marsh, R.; Frewer, L.J. Evaluation of a Deliberative Conference. Sci. Technol. Hum. Values 2004, 29, 88–121.

- Bellamy, J.A.; Walker, D.H.; McDonald, G.T.; Syme, G.J. A Systems Approach to the Evaluation of Natural Resource Management Initiatives. J. Environ. Manag. 2001, 63, 407–423.