Digital Health and Care Study on Elderly Monitoring

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

- Age over 65 years old.

- Most of the household work is completed independently.

In addition, the following exclusion criteria were considered:

- Unable to perform seated leg training with an exercise trainer for about 20 min on a maximum of 10 days over a two-week period.

- Unable to stand up, walk 3 m, walk back 3 m, and sit down again without the active assistance of another person.

- Unable to understand and complete paper-based questionnaires.

- Advanced dementia.
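The inclusion and exclusion criteria listed above can be read as a simple screening rule. The following is a minimal sketch of that rule, not the procedure the authors used; the `Candidate` type and all field names are hypothetical, and the age check assumes "over 65" means strictly greater than 65.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical screening record; field names are illustrative only."""
    age: int
    mostly_independent_household: bool
    can_do_seated_leg_training: bool
    can_complete_walk_test_unassisted: bool
    can_complete_paper_questionnaires: bool
    has_advanced_dementia: bool


def is_eligible(c: Candidate) -> bool:
    """Apply the inclusion criteria, then reject on any exclusion criterion."""
    # Assumption: "over 65" is interpreted as strictly greater than 65.
    included = c.age > 65 and c.mostly_independent_household
    excluded = (
        not c.can_do_seated_leg_training
        or not c.can_complete_walk_test_unassisted
        or not c.can_complete_paper_questionnaires
        or c.has_advanced_dementia
    )
    return included and not excluded


# Illustrative candidate, not study data.
example = Candidate(
    age=72,
    mostly_independent_household=True,
    can_do_seated_leg_training=True,
    can_complete_walk_test_unassisted=True,
    can_complete_paper_questionnaires=True,
    has_advanced_dementia=False,
)
print(is_eligible(example))
```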

- The sleep monitoring device was placed under the mattress across the bed. According to the device’s instruction manual, its position should be approximately below the chest area, as described in reference [29].

- The heart rate monitoring device was attached to the test subject's arm, and the organizers explained how to use and charge it.

- A third hardware element was installed at the subjects’ homes to ensure that the devices used had proper Internet connectivity through an access point with an Internet-capable SIM card. The system was therefore able to function autonomously and did not depend on the Wi-Fi network of the test subjects.

- Sleep diary.

- Graphical questionnaire on sleep quality.

- Pittsburgh Sleep Quality Index.

- Questionnaire to assess the acceptance of the technologies used, including free-form comments.

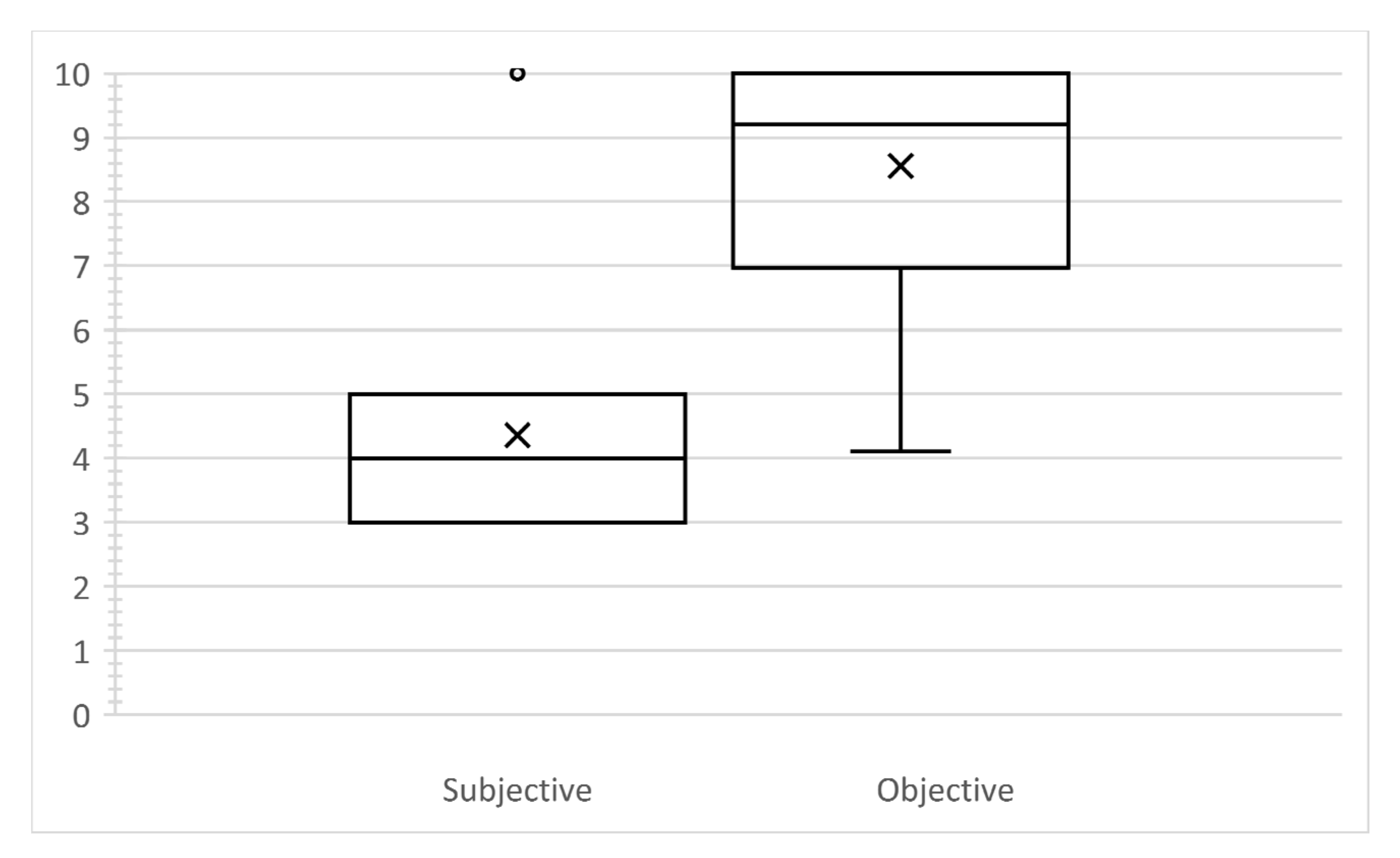

2.2. Subjective and Objective Measurement Using Home-Health Technologies

2.3. Acceptance of Technologies by the Elderly

- The technologies should be self-explanatory and should not require extensive training.

- The devices should function automatically as much as possible.

- The devices should be comfortable and safe to use.

- Surveying with a questionnaire.

- Free conversations with test participants to receive unstructured feedback.

- Systematic analysis of occurrences and irregularities during the study.

3. Results

- The study in reference [46] analysed a broad demographic, whereas our study included only subjects over 65 years of age. The results may therefore differ significantly because of the different age groups.

- About 20 years have passed between the study in reference [46] and our study. During this period, the prevalence of sleep-related disorders may have changed.

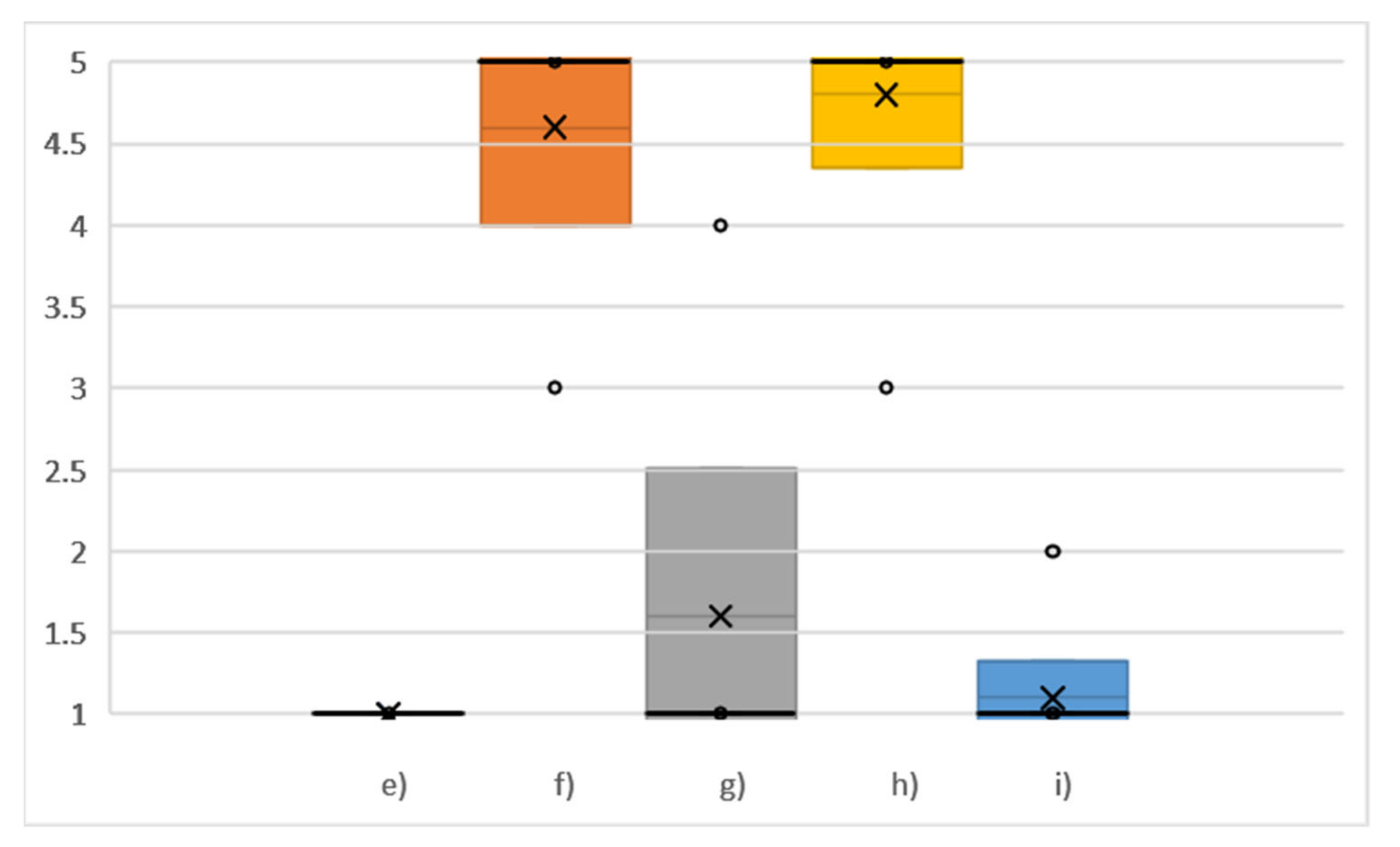

- There was no agreement among test participants on whether they would use the devices regularly. It should be noted that the devices were used for only two weeks, so the subjects had no long-term experience with them and therefore could not reliably assess whether they would use them regularly in the long term.

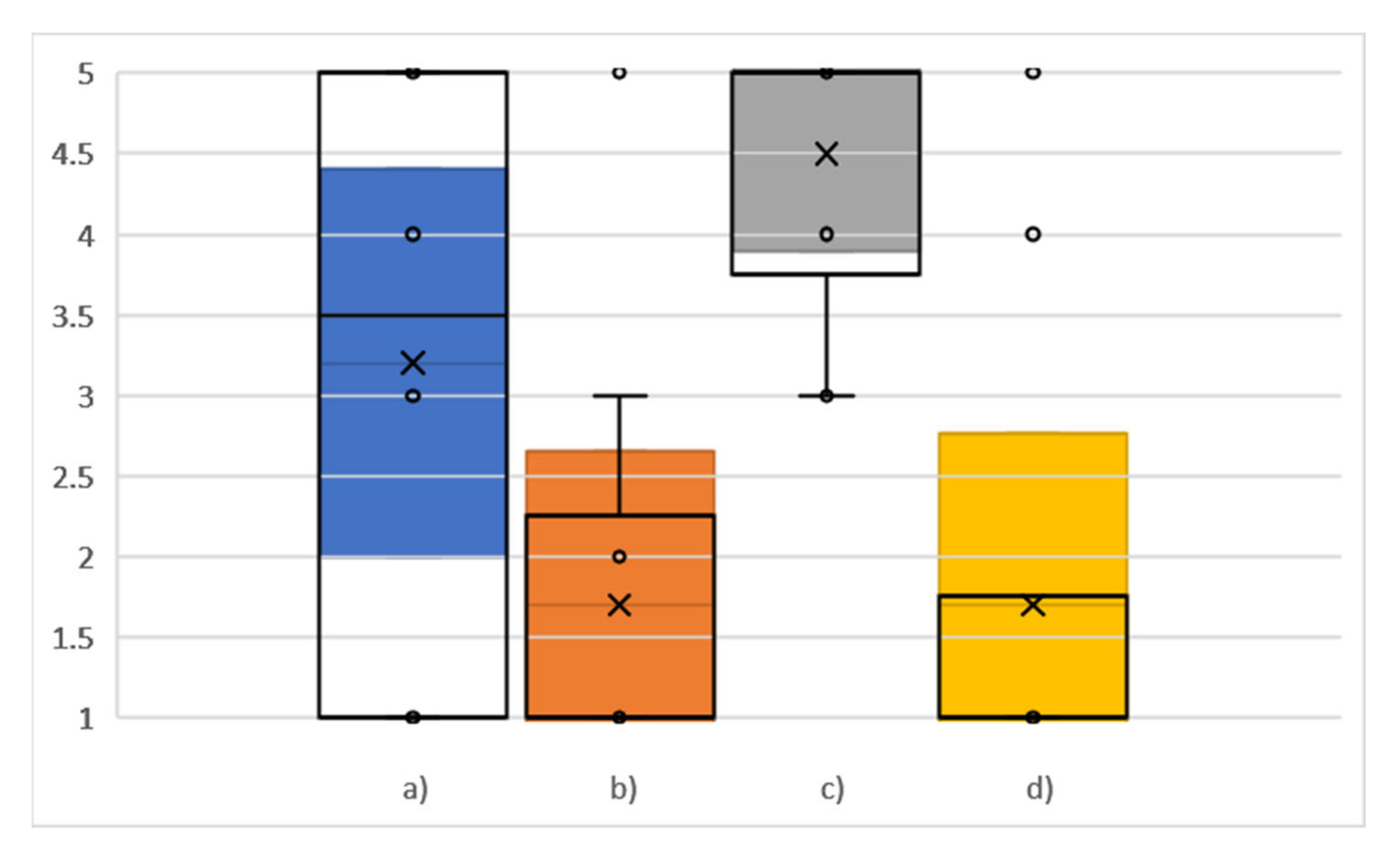

- For the questions regarding the complexity of the devices, ease of use, and the need for support, the answers show a clear trend: the devices were perceived as relatively easy to use independently, without external support. Moreover, despite the novelty of these types of devices for the subjects, no unnecessary complexity was perceived. According to the technology acceptance model, these points indicate that the proposed concept can increase acceptance [51].

- The subjects do not believe that the devices used contain too many inconsistencies (confusing or unclear functions or components).

- According to the subjects’ opinions, the majority of people can quickly learn how to handle the devices.

- The operation of the devices is not very complicated according to the subjective perception of the test participants. However, it should be noted that several people noticed some irregularities in the functioning of the proposed devices, which are described in detail below.

- It was possible to use the devices without having to learn much new information beforehand. In summary, the answers to questions (b), (c), and (e) suggest that the presented concept, in which the subjects had to interact with the devices as little as possible, gives users a sense of simplicity, which can also increase the acceptance of such systems [54,55,56].

- First, the on/off button was tiny and partially recessed into the device’s body. This made it difficult for the subjects to press the button, especially when their fine motor skills were impaired.

- The second problem was the lack of direct feedback from the device. Therefore, the test subjects did not immediately recognize whether the device was already switched on or not. After evaluating the test results, we found that several recordings were only a few seconds long. This means that the subjects switched on the device, but because they were unsure whether it worked, they tried to switch on the device again, which eventually led to the device being turned off.
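The observation that several recordings were only a few seconds long suggests a simple screening step during data evaluation. The following is a minimal sketch of such a step, assuming each recording is available as a start and end timestamp; the `Recording` type, its field names, and the 10-second threshold are hypothetical and not taken from the study.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical threshold: anything shorter is treated as an accidental
# on/off toggle rather than a real measurement.
MIN_DURATION = timedelta(seconds=10)


@dataclass(frozen=True)
class Recording:
    """Hypothetical recording record; not the study's data format."""
    subject_id: int
    start: datetime
    end: datetime

    @property
    def duration(self) -> timedelta:
        return self.end - self.start


def split_by_duration(recordings, min_duration=MIN_DURATION):
    """Return (usable, suspicious) lists based on recording length."""
    usable = [r for r in recordings if r.duration >= min_duration]
    suspicious = [r for r in recordings if r.duration < min_duration]
    return usable, suspicious


# Illustrative data only: a 4-second recording followed by a longer one.
t0 = datetime(2021, 1, 1, 9, 0, 0)
demo = [
    Recording(1, t0, t0 + timedelta(seconds=4)),
    Recording(1, t0 + timedelta(seconds=6), t0 + timedelta(minutes=30)),
]
usable, suspicious = split_by_duration(demo)
print(len(usable), len(suspicious))
```

Flagging rather than silently dropping the short recordings keeps them available as evidence of the usability problem itself.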

4. Conclusions

- The devices used required minimal action on the part of the users. For example, the sleep analysis device required no action apart from the (voluntary) unplugging and subsequent re-plugging into the power socket. For the device measuring heart rate during the day, only pressing a button was necessary, and the evaluation of the study showed that even this minimal action led to problems. A possible solution would be to use a device with a more prominent and easier-to-use button to turn it on.

- The technologies should be self-explanatory, and no complex training or support from a third person should be necessary. This goal was achieved according to the results of the interview, as explained in detail below.

- When using electronic devices, there should be a sense of safety. This means that the devices should not contain any parts that could be considered dangerous. Additionally, users should be assured that the technologies cannot be easily broken during use. For this, the devices should be robust and have as few easily breakable parts as possible.

- Only a few technologies could be used and evaluated within the study framework. Therefore, it cannot be excluded that the results could deviate with a different selection of devices. However, it is always necessary to select a specific subset of technologies because it is impossible to test all available devices at once.

- The number of test subjects was limited to 10. In order to obtain statistically relevant data, the period of 14 days was chosen for the study, resulting in a total of 140 person-days of study. In addition, the same proportion of male and female test subjects was ensured. Furthermore, the proposed study contained a certain type of usability testing, which included questionnaires and a free-form interview to understand if there were any problems with the usability of devices. According to reference [63], only 10 ± 2 subjects are necessary to discover 80% of usability problems. Moreover, the analysis of the transferability of the results to the entire population of persons aged 65 and older with the approach of the statistical parameter “margin of error” was carried out and presented in the section “Results”. The margin of error scientifically confirmed the significance of the results that were obtained.

- Since the study target group was people aged 65 years and older, the results cannot be directly transferred to other age groups.

- The technologies were not connected to a common platform that could be accessed by the participants directly. Therefore, it was impossible to assess whether the possibility of directly viewing the results of the recordings by the test subjects could have a positive impact on acceptance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. United Nations General Assembly. Transforming Our World: The 2030 Agenda for Sustainable Development; United Nations: New York, NY, USA, 2015.

2. Haken, I.T.; Ben Allouch, S.; Van Harten, W.H. The use of advanced medical technologies at home: A systematic review of the literature. BMC Public Health 2018, 18, 284.

3. Nedungadi, P.; Jayakumar, A.; Raman, R. Personalized Health Monitoring System for Managing Well-Being in Rural Areas. J. Med. Syst. 2017, 42, 22.

4. Wang, J. Mobile and Connected Health Technologies for Older Adults Aging in Place. J. Gerontol. Nurs. 2018, 44, 3–5.

5. Guan, K.; Shao, M.; Wu, S. A Remote Health Monitoring System for the Elderly Based on Smart Home Gateway. J. Healthc. Eng. 2017, 2017, 5843504.

6. Morato, J.; Sanchez-Cuadrado, S.; Iglesias, A.; Campillo, A.; Fernández-Panadero, C. Sustainable Technologies for Older Adults. Sustainability 2021, 13, 8465.

7. Pekkarinen, S.; Melkas, H.; Hyypiä, M. Elderly Care and Digital Services: Toward a Sustainable Sociotechnical Transition. In Human-Centered Digitalization and Services; Toivonen, M., Saari, E., Eds.; Springer: Singapore, 2019; pp. 259–284. ISBN 978-981-13-7724-2.

8. Malwade, S.; Abdul, S.S.; Uddin, M.; Nursetyo, A.A.; Fernandez-Luque, L.; Zhu, X.K.; Cilliers, L.; Wong, C.-P.; Bamidis, P.; Li, Y.-C.J. Mobile and wearable technologies in healthcare for the ageing population. Comput. Methods Programs Biomed. 2018, 161, 233–237.

9. Conti, M.; Orcioni, S.; Madrid, N.M.; Gaiduk, M.; Seepold, R. A Review of Health Monitoring Systems Using Sensors on Bed or Cushion. In Bioinformatics and Biomedical Engineering; Rojas, I., Ortuño, F., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 347–358. ISBN 978-3-319-78758-9.

10. Sovacool, B.K.; Del Rio, D.F. Smart home technologies in Europe: A critical review of concepts, benefits, risks and policies. Renew. Sustain. Energy Rev. 2020, 120, 109663.

11. Asghar, I.; Cang, S.; Yu, H. Usability evaluation of assistive technologies through qualitative research focusing on people with mild dementia. Comput. Hum. Behav. 2018, 79, 192–201.

12. Wildenbos, G.; Jaspers, M.; Schijven, M.; Peute, L. Mobile health for older adult patients: Using an aging barriers framework to classify usability problems. Int. J. Med. Inform. 2019, 124, 68–77.

13. Cifter, A.S. Blood Pressure Monitor Usability Problems Detected Through Human Factors Evaluation. Ergon. Des. Q. Hum. Factors Appl. 2017, 25, 11–19.

14. Sultan, M.; Kuluski, K.; McIsaac, W.J.; Cafazzo, J.A.; Seto, E. Turning challenges into design principles: Telemonitoring systems for patients with multiple chronic conditions. Health Inform. J. 2019, 25, 1188–1200.

15. Carayon, P.; Hoonakker, P. Human Factors and Usability for Health Information Technology: Old and New Challenges. Yearb. Med. Inform. 2019, 28, 071–077.

16. Agnisarman, S.O.; Madathil, K.C.; Smith, K.; Ashok, A.; Welch, B.; McElligott, J.T. Lessons learned from the usability assessment of home-based telemedicine systems. Appl. Ergon. 2017, 58, 424–434.

17. Moon, N.W.; Baker, P.M.; Goughnour, K. Designing wearable technologies for users with disabilities: Accessibility, usability, and connectivity factors. J. Rehabil. Assist. Technol. Eng. 2019, 6, 205566831986213.

18. Taherdoost, H. A review of technology acceptance and adoption models and theories. Procedia Manuf. 2018, 22, 960–967.

19. Rahimi, B.; Nadri, H.; Afshar, H.L.; Timpka, T. A Systematic Review of the Technology Acceptance Model in Health Informatics. Appl. Clin. Inform. 2018, 9, 604–634.

20. Thoits, P.A. Stress and Health: Major Findings and Policy Implications. J. Health Soc. Behav. 2010, 51 (Suppl. 1), S41–S53.

21. Knutson, K.L.; Phelan, J.; Paskow, M.J.; Roach, A.; Whiton, K.; Langer, G.; Hillygus, D.S.; Mokrzycki, M.; Broughton, W.A.; Chokroverty, S.; et al. The National Sleep Foundation’s Sleep Health Index. Sleep Health 2017, 3, 234–240.

22. Benjafield, A.V.; Ayas, N.T.; Eastwood, P.R.; Heinzer, R.; Ip, M.S.M.; Morrell, M.J.; Nunez, C.M.; Patel, S.R.; Penzel, T.; Pépin, J.-L.; et al. Estimation of the global prevalence and burden of obstructive sleep apnoea: A literature-based analysis. Lancet Respir. Med. 2019, 7, 687–698.

23. Uddin, B.; Chow, C.-M.; Su, S. Classification methods to detect sleep apnea in adults based on respiratory and oximetry signals: A systematic review. Physiol. Meas. 2018, 39, 03TR01.

24. Gaiduk, M.; Orcioni, S.; Conti, M.; Seepold, R.; Penzel, T.; Madrid, N.M.; Ortega, J.A. Embedded system for non-obtrusive sleep apnea detection. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; Volume 2020, pp. 2776–2779.

25. Gulia, K.K.; Kumar, V.M. Sleep disorders in the elderly: A growing challenge. Psychogeriatrics 2018, 18, 155–165.

26. Sugaya, N.; Arai, M.; Goto, F. The effect of vestibular rehabilitation on sleep disturbance in patients with chronic dizziness. Acta Otolaryngol. 2017, 137, 275–278.

27. Leonidis, A.; Korozi, M.; Sykianaki, E.; Tsolakou, E.; Kouroumalis, V.; Ioannidi, D.; Stavridakis, A.; Antona, M.; Stephanidis, C. Improving Stress Management and Sleep Hygiene in Intelligent Homes. Sensors 2021, 21, 2398.

28. Littlejohns, P.; Kieslich, K.; Weale, A.; Tumilty, E.; Richardson, G.; Stokes, T.; Gauld, R.; Scuffham, P. Creating sustainable health care systems. J. Health Organ. Manag. 2019, 33, 18–34.

29. Gaiduk, M.; Seepold, R.; Ortega, J.A.; Madrid, N.M. Comparison of sleep characteristics measurements: A case study with a population aged 65 and above. Procedia Comput. Sci. 2020, 176, 2341–2349.

30. Park, K.S.; Choi, S.H. Smart technologies toward sleep monitoring at home. Biomed. Eng. Lett. 2019, 9, 73–85.

31. Gaiduk, M.; Seepold, R.; Madrid, N.M.; Ortega, J.A.; Conti, M.; Orcioni, S.; Penzel, T.; Scherz, W.D.; Perea, J.J.; Alarcón, Á.S.; et al. A Comparison of Objective and Subjective Sleep Quality Measurement in a Group of Elderly Persons in a Home Environment. In Applications in Electronics Pervading Industry, Environment and Society; Saponara, S., de Gloria, A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 286–291. ISBN 978-3-030-66728-3.

32. Ibáñez, V.; Silva, J.; Cauli, O. A survey on sleep questionnaires and diaries. Sleep Med. 2018, 42, 90–96.

33. Ibáñez, V.; Silva, J.; Cauli, O. A survey on sleep assessment methods. PeerJ 2018, 6, e4849.

34. Harvey, A.G.; Stinson, K.; Whitaker, K.; Moskovitz, D.; Virk, H. The Subjective Meaning of Sleep Quality: A Comparison of Individuals with and without Insomnia. Sleep 2008, 31, 383–393.

35. Merilahti, J.; Saarinen, A.; Pärkkä, J.; Antila, K.; Mattila, E.; Korhonen, I. Long-Term Subjective and Objective Sleep Analysis of Total Sleep Time and Sleep Quality in Real Life Settings. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2007, 2007, 5202–5205.

36. Huysmans, D.; Borzée, P.; Testelmans, D.; Buyse, B.; Willemen, T.; van Huffel, S.; Varon, C. Evaluation of a Commercial Ballistocardiography Sensor for Sleep Apnea Screening and Sleep Monitoring. Sensors 2019, 19, 2133.

37. Kortelainen, J.M.; van Gils, M.; Pärkkä, J. Multichannel Bed Pressure Sensor for Sleep Monitoring. In Proceedings of the 39th Computing in Cardiology, Krakow, Poland, 9–12 September 2012; pp. 313–316.

38. Gaiduk, M.; Seepold, R.; Penzel, T.; Ortega, J.A.; Glos, M.; Madrid, N.M. Recognition of Sleep/Wake States analyzing Heart Rate, Breathing and Movement Signals. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 2019, 5712–5715.

39. Gaiduk, M.; Penzel, T.; Ortega, J.A.; Seepold, R. Automatic sleep stages classification using respiratory, heart rate and movement signals. Physiol. Meas. 2018, 39, 124008.

40. Tonetti, L.; Mingozzi, R.; Natale, V. Comparison between paper and electronic sleep diary. Biol. Rhythm. Res. 2016, 47, 743–753.

41. Jungquist, C.R.; Pender, J.J.; Klingman, K.J.; Mund, J. Validation of Capturing Sleep Diary Data via a Wrist-Worn Device. Sleep Disord. 2015, 2015, 758937.

42. Buysse, D.J.; Reynolds, C.F., III; Monk, T.H.; Berman, S.R.; Kupfer, D.J. The Pittsburgh sleep quality index: A new instrument for psychiatric practice and research. Psychiatry Res. 1989, 28, 193–213.

43. Backhaus, J.; Junghanns, K.; Broocks, A.; Riemann, D.; Hohagen, F. Test–retest reliability and validity of the Pittsburgh Sleep Quality Index in primary insomnia. J. Psychosom. Res. 2002, 53, 737–740.

44. Carpenter, J.S.; Andrykowski, M.A. Psychometric evaluation of the pittsburgh sleep quality index. J. Psychosom. Res. 1998, 45, 5–13.

45. Riemann, D.; Backhaus, J. Behandlung von Schlafstörungen. In Materialien für die Psychosoziale Praxis; Psychologische Verlags Union: Weinheim, Germany, 1996.

46. Zeitlhofer, J.; Schmeiser-Rieder, A.; Tribl, G.; Rosenberger, A.; Bolitschek, J.; Kapfhammer, G.; Saletu, B.; Katschnig, H.; Holzinger, B.; Popovic, R.; et al. Sleep and quality of life in the Austrian population. Acta Neurol. Scand. 2000, 102, 249–257.

47. Scherz, W.D.; Seepold, R.; Madrid, N.M.; Crippa, P.; Ortega, J.A. RR interval analysis for the distinction between stress, physical activity and no activity using a portable ECG. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2020, 2020, 4522–4526.

48. Castaldo, R.; Montesinos, L.; Melillo, P.; James, C.; Pecchia, L. Ultra-short term HRV features as surrogates of short term HRV: A case study on mental stress detection in real life. BMC Med. Inform. Decis. Mak. 2019, 19, 12.

49. Schäfer, A.; Vagedes, J. How accurate is pulse rate variability as an estimate of heart rate variability? A review on studies comparing photoplethysmographic technology with an electrocardiogram. Int. J. Cardiol. 2013, 166, 15–29.

50. Zubair, M.; Yoon, C. Multilevel mental stress detection using ultra-short pulse rate variability series. Biomed. Signal. Process. Control. 2020, 57, 101736.

51. Li, J.; Ma, Q.; Chan, A.H.; Man, S. Health monitoring through wearable technologies for older adults: Smart wearables acceptance model. Appl. Ergon. 2019, 75, 162–169.

52. Dowling, G.R.; Staelin, R. A Model of Perceived Risk and Intended Risk-Handling Activity. J. Consum. Res. 1994, 21, 119–134.

53. Yang, H.; Yu, J.; Zo, H.; Choi, M. User acceptance of wearable devices: An extended perspective of perceived value. Telemat. Inform. 2016, 33, 256–269.

54. Choi, J.; Kim, S. Is the smartwatch an IT product or a fashion product? A study on factors affecting the intention to use smartwatches. Comput. Hum. Behav. 2016, 63, 777–786.

55. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–339.

56. Karahoca, A.; Karahoca, D.; Aksöz, M. Examining intention to adopt to internet of things in healthcare technology products. Kybernetes 2018, 47, 742–770.

57. Cochran, W.G. Sampling Techniques, 3rd ed.; Wiley: New York, NY, USA, 1977; ISBN 0-471-16240-X.

58. Moore, D.S.; McCabe, G.P.; Craig, B.A. Introduction to the Practice of Statistics, 6th ed.; W.H. Freeman: New York, NY, USA, 2009; ISBN 978-1-4292-1623-4.

59. Riemann, D.; Baglioni, C.; Bassetti, C.; Bjorvatn, B.; Groselj, L.D.; Ellis, J.G.; Espie, C.A.; Garcia-Borreguero, D.; Gjerstad, M.; Gonçalves, M.; et al. European guideline for the diagnosis and treatment of insomnia. J. Sleep Res. 2017, 26, 675–700.

60. Berry, R.B.; Quan, S.F.; Abreu, A.R.; Bibbs, M.L.; DelRosso, L.; Harding, S.M.; Mao, M.-M.; Plante, D.T.; Pressman, M.R.; Troester, M.R.; et al. The AASM Manual for the Scoring of Sleep and Associated Events: Rules, Terminology and Technical Specifications; Version 2.6; American Academy of Sleep Medicine: Darien, IL, USA, 2020.

61. Nielsen, J. Enhancing the explanatory power of usability heuristics. In Proceedings of the SIGCHI conference on Human Factors in Computing Systems Celebrating Interdependence-CHI ′94, Boston, MA, USA, 24–28 April 1994; Adelson, B., Dumais, S., Olson, J., Eds.; ACM Press: New York, NY, USA, 1994; pp. 152–158.

62. Gaiduk, M.; Perea, J.J.; Seepold, R.; Madrid, N.M.; Penzel, T.; Glos, M.; Ortega, J.A. Estimation of Sleep Stages Analyzing Respiratory and Movement Signals. IEEE J. Biomed. Health Inform. 2021. (Early Access).

63. Hwang, W.; Salvendy, G. Number of people required for usability evaluation. Commun. ACM 2010, 53, 130–133.

| PSQI Value | Percentage |
|---|---|
| ≤5 | 40% |
| >5 | 60% |

| Measure (by question) | (a) | (b) | (c) | (d) | (e) | (f) | (g) | (h) | (i) |
|---|---|---|---|---|---|---|---|---|---|
| SD | 1.6865 | 1.3375 | 0.8498 | 1.4944 | 0.0000 | 0.8433 | 1.2649 | 0.6325 | 0.3162 |
| Mean | 3.2000 | 1.7000 | 4.5000 | 1.7000 | 1.0000 | 4.6000 | 1.6000 | 4.8000 | 1.1000 |
| Sample standard error | 0.5333 | 0.4230 | 0.2687 | 0.4726 | 0.0000 | 0.2667 | 0.4000 | 0.2000 | 0.1000 |
| Margin of error | 1.2065 | 0.9568 | 0.6079 | 1.0691 | 0.0000 | 0.6032 | 0.9049 | 0.4524 | 0.2262 |
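
The standard error and margin of error rows above are numerically consistent with the usual small-sample formulas, SE = SD/√n and ME = t·SE. The sketch below reproduces them under the assumption that n = 10 subjects and a two-sided 95% confidence level were used. The critical value of Student's t for 9 degrees of freedom is inferred from the tabulated numbers rather than stated in the table, and the function names are illustrative.

```python
import math

# Assumption: two-sided 95% critical value of Student's t for n - 1 = 9
# degrees of freedom, hard-coded to keep the sketch dependency-free.
T_CRIT_95_DF9 = 2.2622


def standard_error(sd: float, n: int) -> float:
    """Standard error of the mean from the sample standard deviation."""
    return sd / math.sqrt(n)


def margin_of_error(sd: float, n: int, t_crit: float = T_CRIT_95_DF9) -> float:
    """Half-width of the confidence interval around the sample mean."""
    return t_crit * standard_error(sd, n)


# Question (a) from the table: SD = 1.6865, assumed n = 10 subjects.
se_a = standard_error(1.6865, 10)
me_a = margin_of_error(1.6865, 10)
print(f"SE = {se_a:.4f}, ME = {me_a:.4f}")
```

With these assumptions the values for question (a) agree with the tabulated 0.5333 and 1.2065 to within rounding. A different sample size or confidence level would need a different critical value.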

| Measurement (by subject) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Subjective | 8 | 10 | 14 | 9 | 11 | 13 | 7 | 7 | 5 | 6 |
| Objective | 7 | 6 | 14 | 14 | 14 | 14 | 9 | 10 | 13 | 14 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gaiduk, M.; Seepold, R.; Martínez Madrid, N.; Ortega, J.A. Digital Health and Care Study on Elderly Monitoring. Sustainability 2021, 13, 13376. https://doi.org/10.3390/su132313376
