Ultra Short-Term Wind Power Forecasting Based on Sparrow Search Algorithm Optimization Deep Extreme Learning Machine

Abstract

1. Introduction

- The proposed SSA-DELM wind power prediction model is based on time series, and it is less dependent on input data than models based on numerical weather prediction (NWP) data;

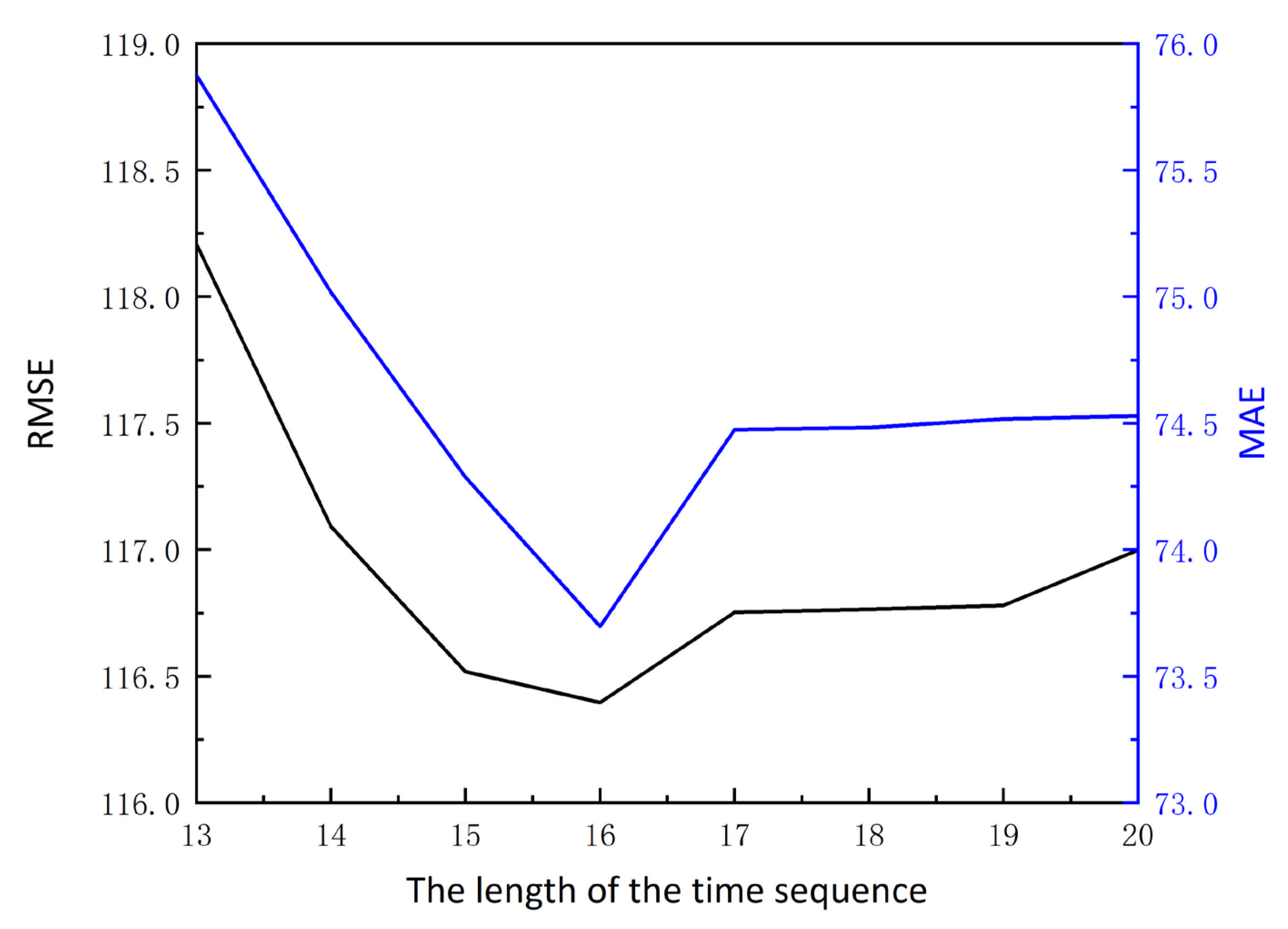

- The effect of the time series’ length on the prediction accuracy of the neural network model is verified. The method of optimizing the length of the time series is explained in detail;

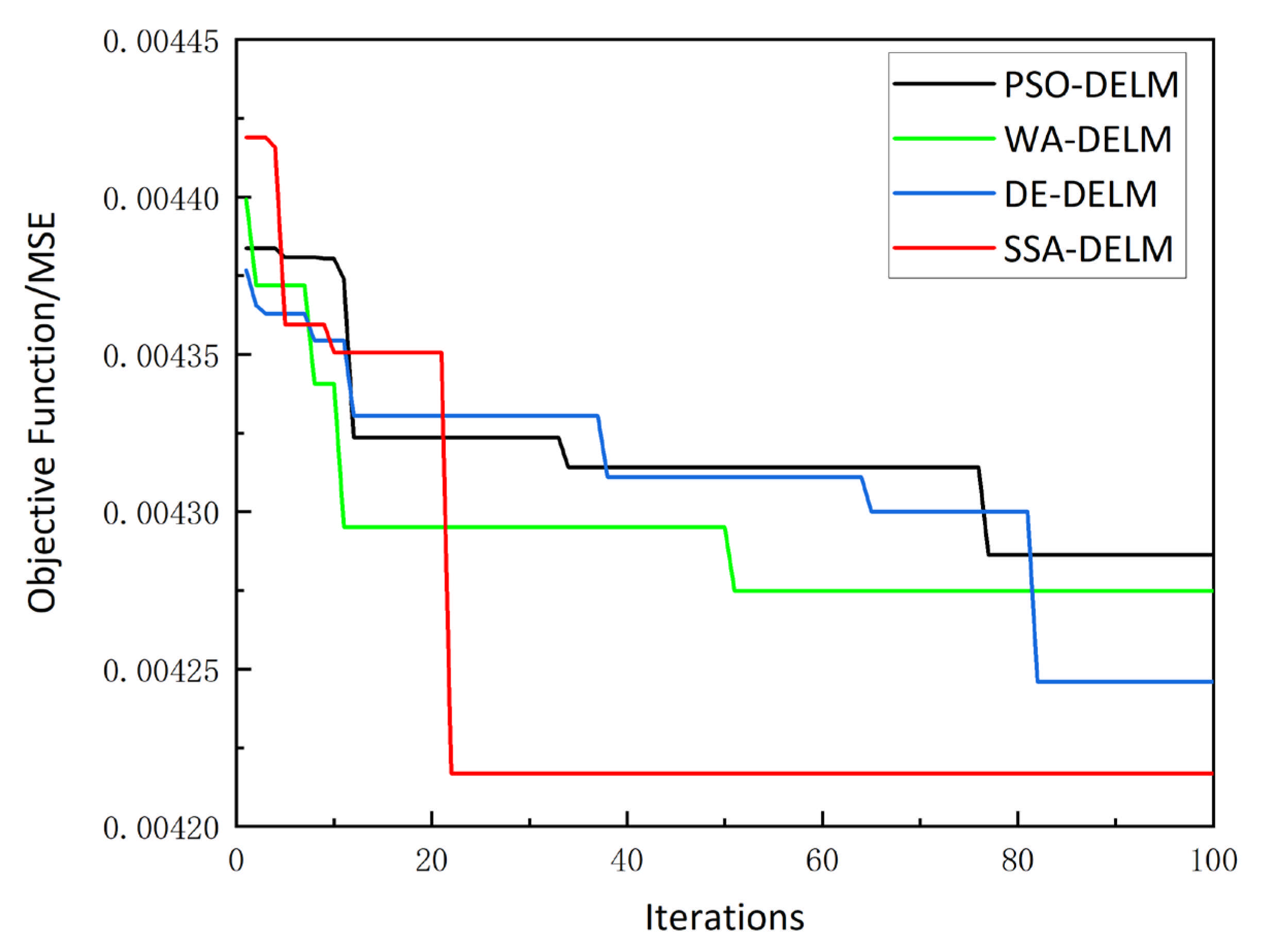

- The sparrow search algorithm (SSA) is combined with the deep extreme learning machine (DELM) to forecast wind power for the first time. The sparrow population is divided into three categories (discoverers, entrants, and guards) to optimize the input weights and thresholds of the DELM. The prediction results are compared with those of several other optimized neural network models. The results show that the proposed model converges faster and effectively keeps the optimization process from falling into a local optimum.
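The second contribution above concerns the length of the input time series. As a minimal sketch, assuming a one-step-ahead rolling setup (the helper name `make_windows` and the window length of 3 are illustrative and not taken from the paper), a power series can be turned into input/target pairs for one candidate length as follows. The toy values are the five wind power samples listed in the sample-point table.

```python
import numpy as np

def make_windows(series, length):
    """Build (X, y) pairs: each row of X holds `length` consecutive
    values and y holds the value that immediately follows each row."""
    series = np.asarray(series, dtype=float)
    if length < 1 or length >= len(series):
        raise ValueError("length must be in [1, len(series) - 1]")
    n = len(series) - length
    X = np.stack([series[i:i + length] for i in range(n)])
    y = series[length:]
    return X, y

# Wind power samples (kW) from the sample-point table.
power = [752.73, 589.07, 1109.13, 1482.46, 1523.43]
X, y = make_windows(power, length=3)
print(X.shape, y)
```

Repeating this for several candidate lengths and comparing the validation error of the model trained on each is one plausible way to choose the length; the paper's exact procedure is not reproduced in this section.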

2. Materials and Methods

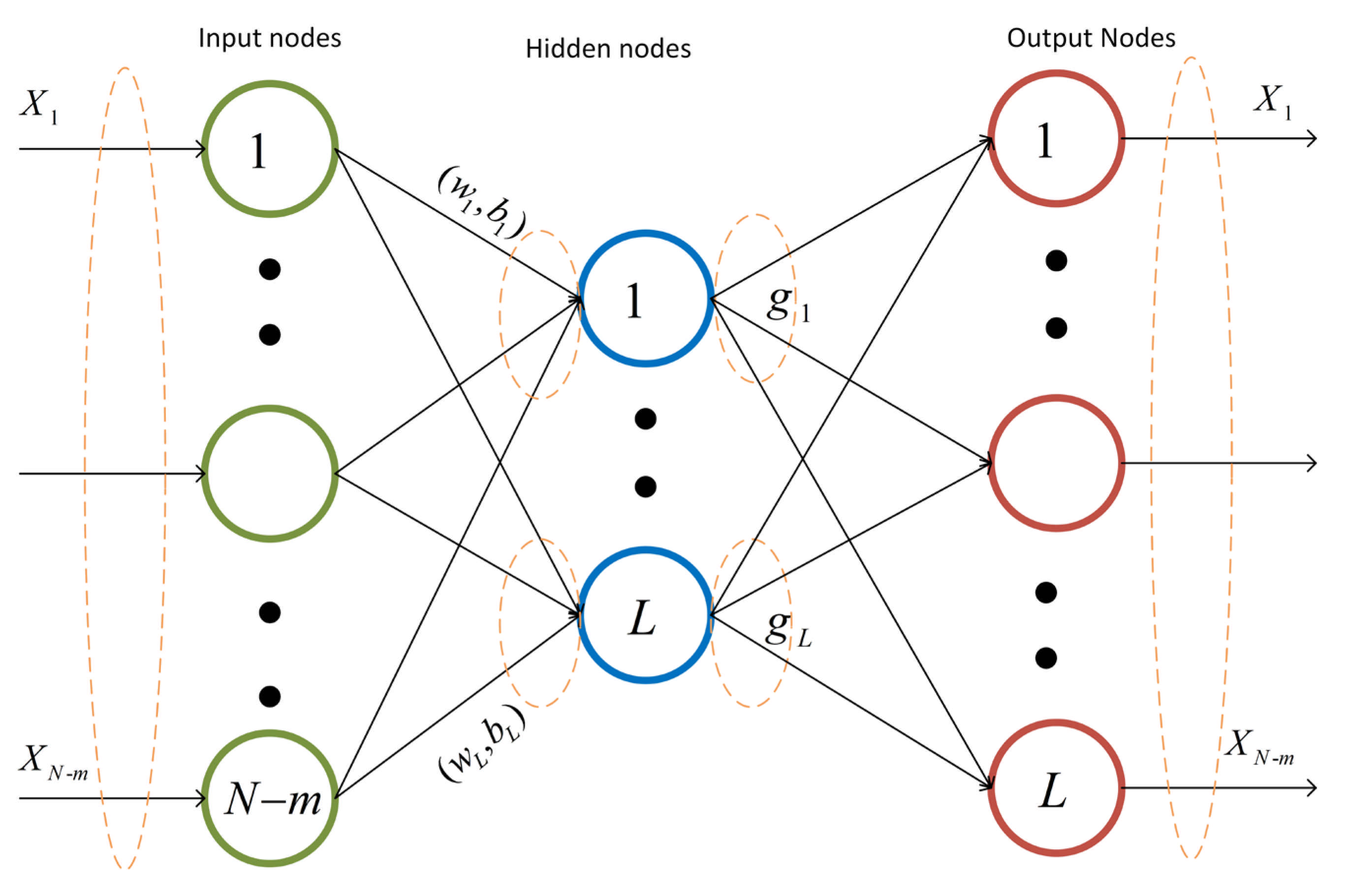

2.1. Extreme Learning Machine

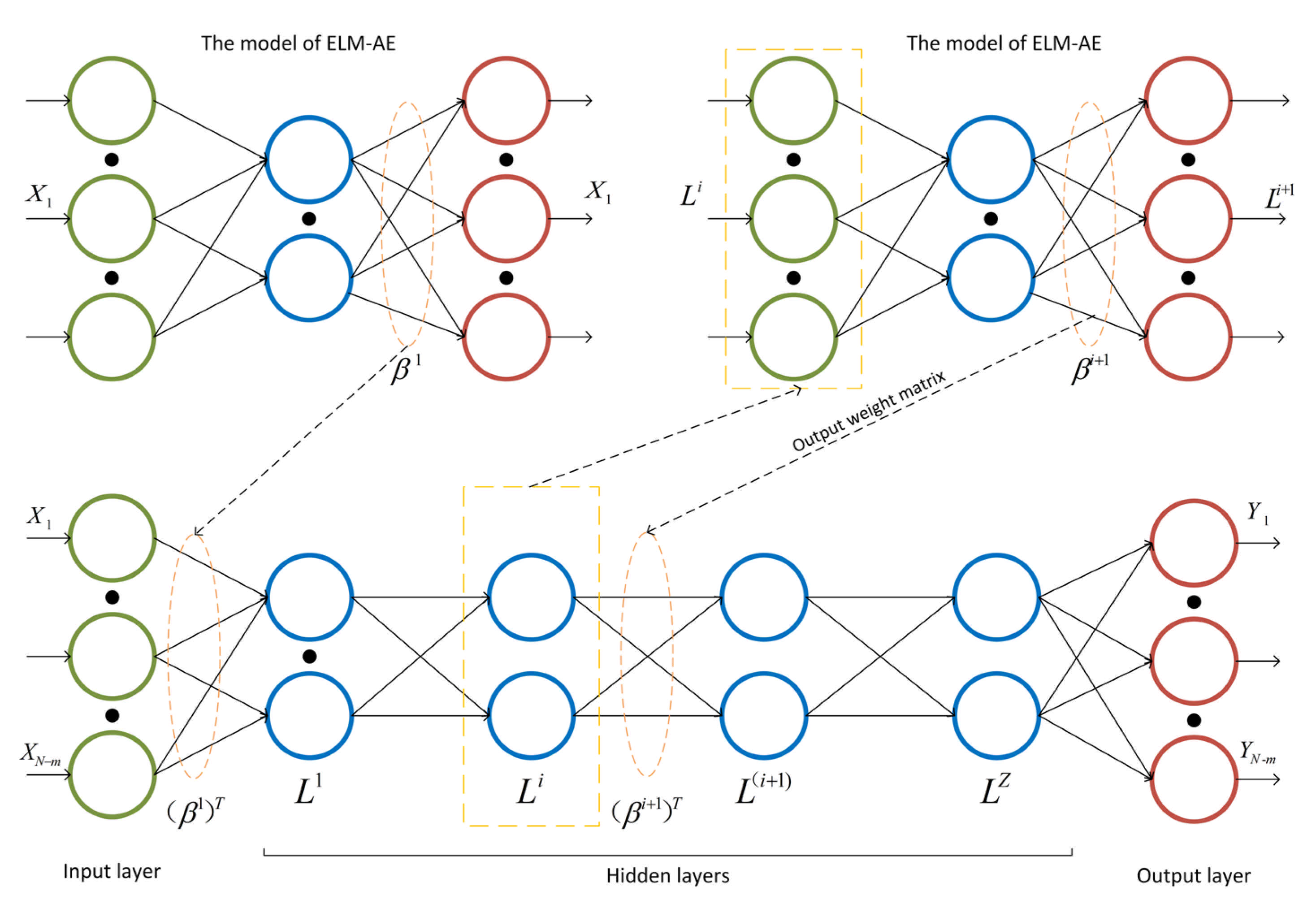
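As a rough illustration of the basic extreme learning machine, the sketch below uses the commonly published formulation: input weights and thresholds are drawn at random and kept fixed, and only the output weights are solved for with a pseudo-inverse. The class name `ELM`, the sigmoid activation, the uniform initialization range, and the sine toy data are assumptions made for this example and may differ from the paper's setup.

```python
import numpy as np

class ELM:
    """Single-hidden-layer ELM for regression (illustrative sketch)."""

    def __init__(self, n_hidden, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # Sigmoid activation of the randomly projected inputs.
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Random input weights and thresholds; they are never trained.
        self.W = self.rng.uniform(-1, 1, (X.shape[1], self.n_hidden))
        self.b = self.rng.uniform(-1, 1, self.n_hidden)
        H = self._hidden(X)
        # Output weights: least-squares solution via the pseudo-inverse.
        self.beta = np.linalg.pinv(H) @ y
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta

# Toy usage: fit one period of a sine curve.
x_demo = np.linspace(0, 2 * np.pi, 50).reshape(-1, 1)
y_demo = np.sin(x_demo).ravel()
elm = ELM(n_hidden=20).fit(x_demo, y_demo)
elm_mse = float(np.mean((elm.predict(x_demo) - y_demo) ** 2))
print(elm_mse)
```

Because the hidden layer is fixed, training reduces to one linear solve, which is the reason the random input weights and thresholds are a natural target for a metaheuristic optimizer.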

2.2. Deep Extreme Learning Machine

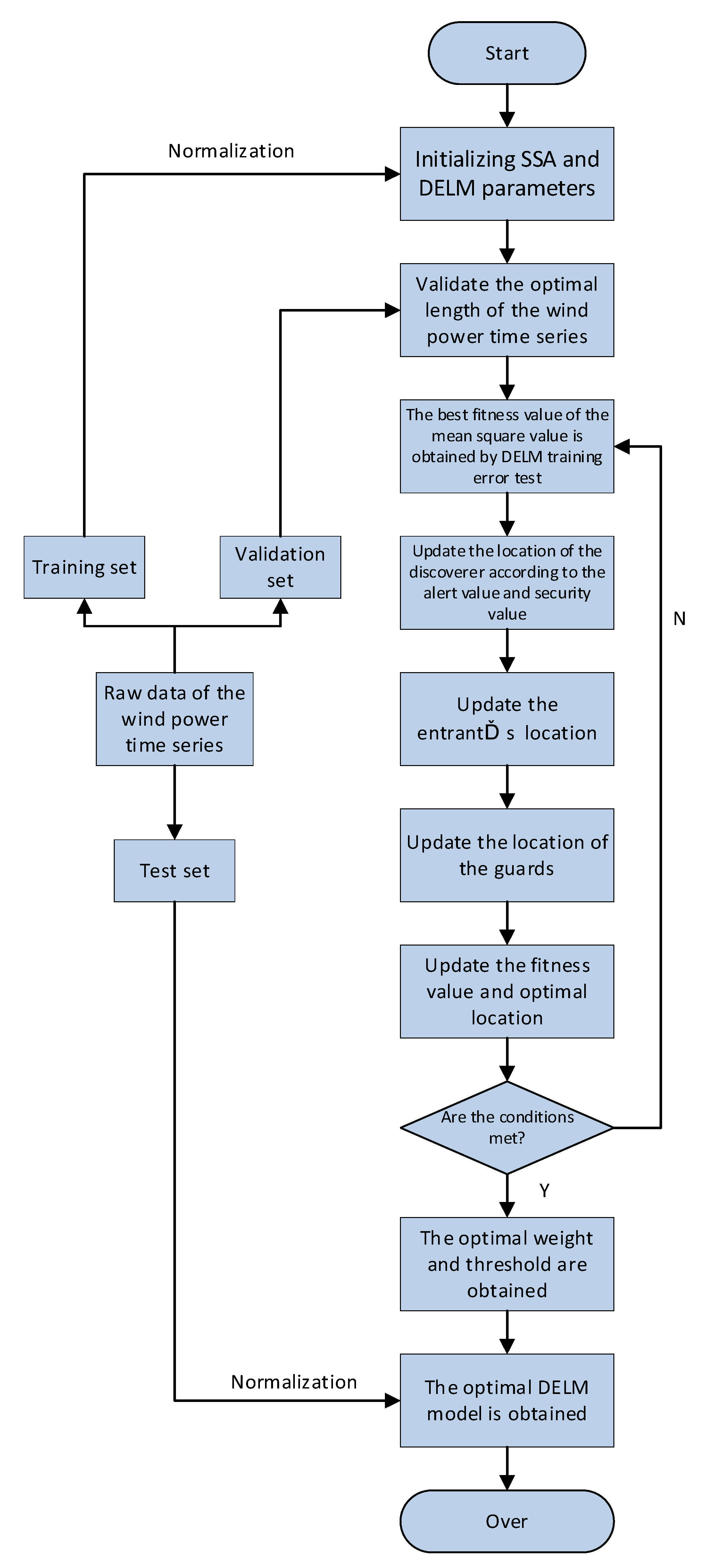
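A deep extreme learning machine is usually described as a stack of ELM autoencoders followed by a least-squares output layer. The sketch below follows that common formulation under stated assumptions: the function names `fit_delm` and `predict_delm`, the sigmoid activation, the regularization constant `C`, and the layer sizes are all hypothetical choices for illustration, not the paper's configuration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ridge_solve(H, T, C):
    """Solve min ||H B - T||^2 + ||B||^2 / C for B."""
    return np.linalg.solve(H.T @ H + np.eye(H.shape[1]) / C, H.T @ T)

def fit_delm(X, y, layer_sizes, C=1e3, seed=0):
    rng = np.random.default_rng(seed)
    A = np.asarray(X, dtype=float)
    betas = []
    for n_hidden in layer_sizes:
        # ELM autoencoder: random weights and thresholds, then solve for
        # the weights that reconstruct the layer input from H.
        W = rng.uniform(-1, 1, (A.shape[1], n_hidden))
        b = rng.uniform(-1, 1, n_hidden)
        H = sigmoid(A @ W + b)
        beta = ridge_solve(H, A, C)      # shape (n_hidden, n_inputs)
        betas.append(beta)
        # The transposed reconstruction weights encode the next layer.
        A = sigmoid(A @ beta.T)          # shape (n_samples, n_hidden)
    out = ridge_solve(A, np.asarray(y, dtype=float), C)
    return betas, out

def predict_delm(model, X):
    betas, out = model
    A = np.asarray(X, dtype=float)
    for beta in betas:
        A = sigmoid(A @ beta.T)
    return A @ out

# Toy usage on synthetic data (not wind power data).
rng_demo = np.random.default_rng(1)
X_demo = rng_demo.uniform(0, 1, (200, 6))
y_demo = X_demo.mean(axis=1)
model = fit_delm(X_demo, y_demo, layer_sizes=[10, 8])
pred = predict_delm(model, X_demo)
print(float(np.mean((pred - y_demo) ** 2)))
```

In this sketch the randomly drawn `W` and `b` of each autoencoder play the role of the input weights and thresholds that the paper hands to the sparrow search algorithm instead of leaving them random.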

2.3. Deep Extreme Learning Machine Optimized by Sparrow Search Algorithm

2.3.1. Principles of Sparrow Search Algorithm

2.3.2. The Process of the SSA-DELM Model

| Algorithm 1: DELM optimized by the sparrow search algorithm |
|---|
| Input: Population: P; Maximum number of iterations: T; Dimensions: E; The number of discoverers: DS; The number of guards: GD; The warning value: R2. |
| Output: The best vector (solution), Dbest |
| 1: while (t < T) |
| 2: Rank the fitness values and find the current best individual and the worst individual; |
| 3: R2 = rand (0, 1) |
| 4: for c = 1: DS |
| 5: Use Formula (6) to update the location of the discoverers; |
| 6: end for |
| 7: for c = (DS + 1): P |
| 8: Use Formula (7) to update the location of the entrants; |
| 9: end for |
| 10: for c = 1: GD |
| 11: Use Formula (8) to update the location of the guards; |
| 12: end for |
| 13: Obtain the current new location; |
| 14: If the new location is better than before, replace the location with the new one; |
| 15: t = t + 1 |
| 16: end while |
| 17: return Dbest, fg; |
| 18: Substitute the Dbest vector into the DELM model. |
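The loop in Algorithm 1 can be sketched in Python as follows. Formulas (6) to (8) are not reproduced in this section, so the update rules below are the commonly published sparrow search updates and are only assumed to correspond to them. The safety threshold `st`, the bounds `lb` and `ub`, the default sizes, and the sphere test function are hypothetical; in the paper's setting the vector would hold the DELM input weights and thresholds and the fitness would be a prediction error.

```python
import numpy as np

def ssa_minimize(fitness, dim, pop=30, max_iter=100, n_disc=6, n_guard=6,
                 st=0.8, lb=-1.0, ub=1.0, seed=0):
    """Minimize `fitness` over [lb, ub]^dim with a basic sparrow search."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, (pop, dim))
    fit = np.array([fitness(x) for x in X])
    ones, eps = np.ones(dim), 1e-12
    for t in range(max_iter):
        # Step 2: rank the population, best individual first.
        order = np.argsort(fit)
        X, fit = X[order], fit[order]
        best, worst = X[0].copy(), X[-1].copy()
        f_best, f_worst = fit[0], fit[-1]
        new = X.copy()
        r2 = rng.random()                      # step 3: warning value
        for c in range(n_disc):                # steps 4-6: discoverers
            if r2 < st:
                alpha = rng.random() + eps
                new[c] = X[c] * np.exp(-(c + 1) / (alpha * max_iter))
            else:
                new[c] = X[c] + rng.normal() * ones
        # Best position currently held by the updated discoverers.
        disc = np.clip(new[:n_disc], lb, ub)
        xp = disc[int(np.argmin([fitness(x) for x in disc]))]
        for c in range(n_disc, pop):           # steps 7-9: entrants
            if c + 1 > pop / 2:
                new[c] = rng.normal() * np.exp((worst - X[c]) / (c + 1) ** 2)
            else:
                a = rng.choice([-1.0, 1.0], size=dim)
                new[c] = xp + (np.abs(X[c] - xp) @ a) / dim * ones
        # Steps 10-12: a random subset of sparrows acts as guards.
        for c in rng.choice(pop, size=n_guard, replace=False):
            if fit[c] > f_best:
                new[c] = best + rng.normal() * np.abs(X[c] - best)
            else:
                k = rng.uniform(-1.0, 1.0)
                new[c] = X[c] + k * np.abs(X[c] - worst) / (fit[c] - f_worst + eps)
        # Steps 13-14: keep a new location only if it improves the fitness.
        new = np.clip(new, lb, ub)
        new_fit = np.array([fitness(x) for x in new])
        better = new_fit < fit
        X[better], fit[better] = new[better], new_fit[better]
    i = int(np.argmin(fit))
    return X[i], fit[i]

def sphere(x):
    """Toy objective standing in for the DELM prediction error."""
    return float(np.sum(x ** 2))

x_best, f_best = ssa_minimize(sphere, dim=5)
print(f_best)
```

The greedy replacement in steps 13 and 14 means the best fitness never gets worse from one iteration to the next, which is a convenient property to check when swapping in a different objective.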

3. Case Analysis

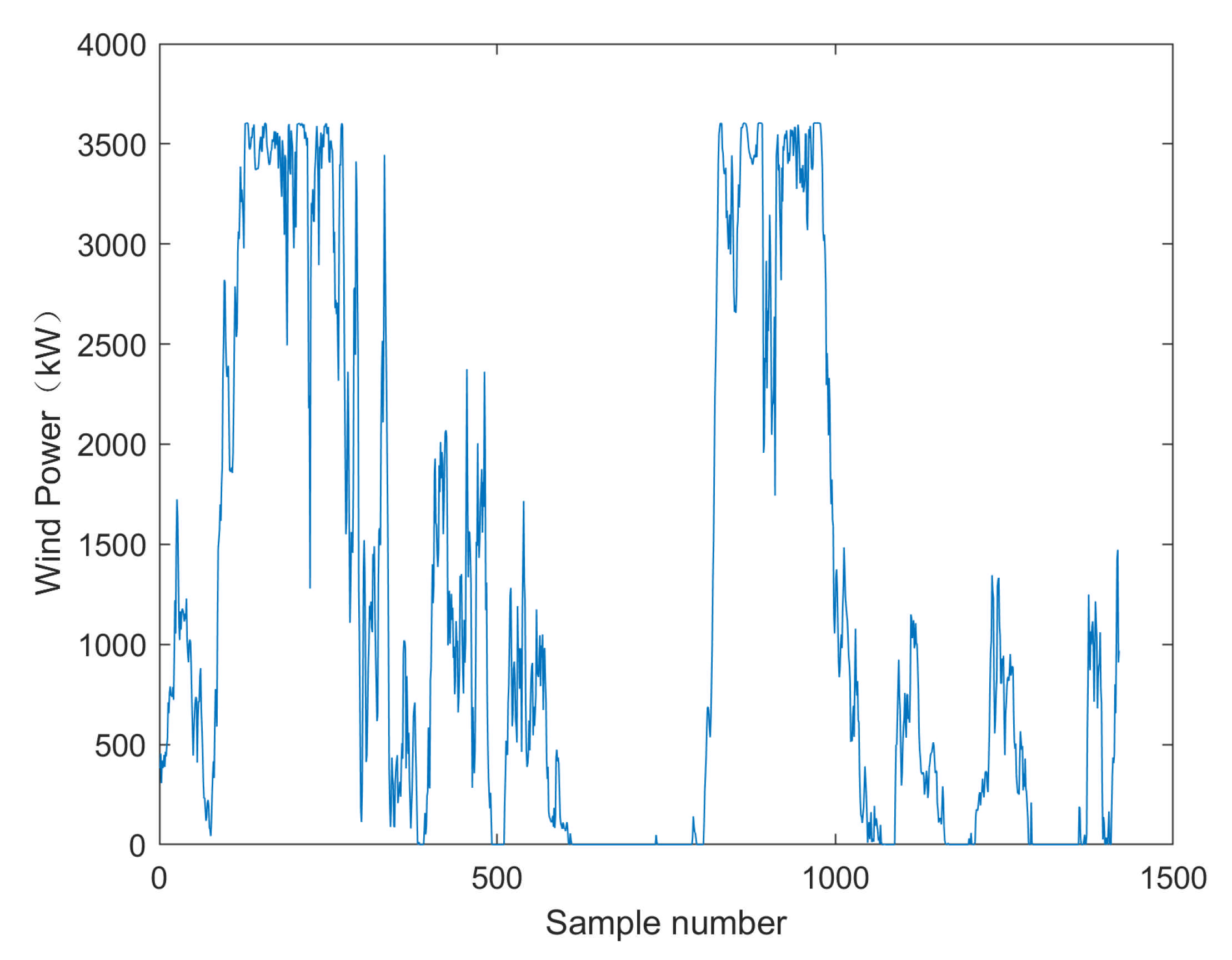

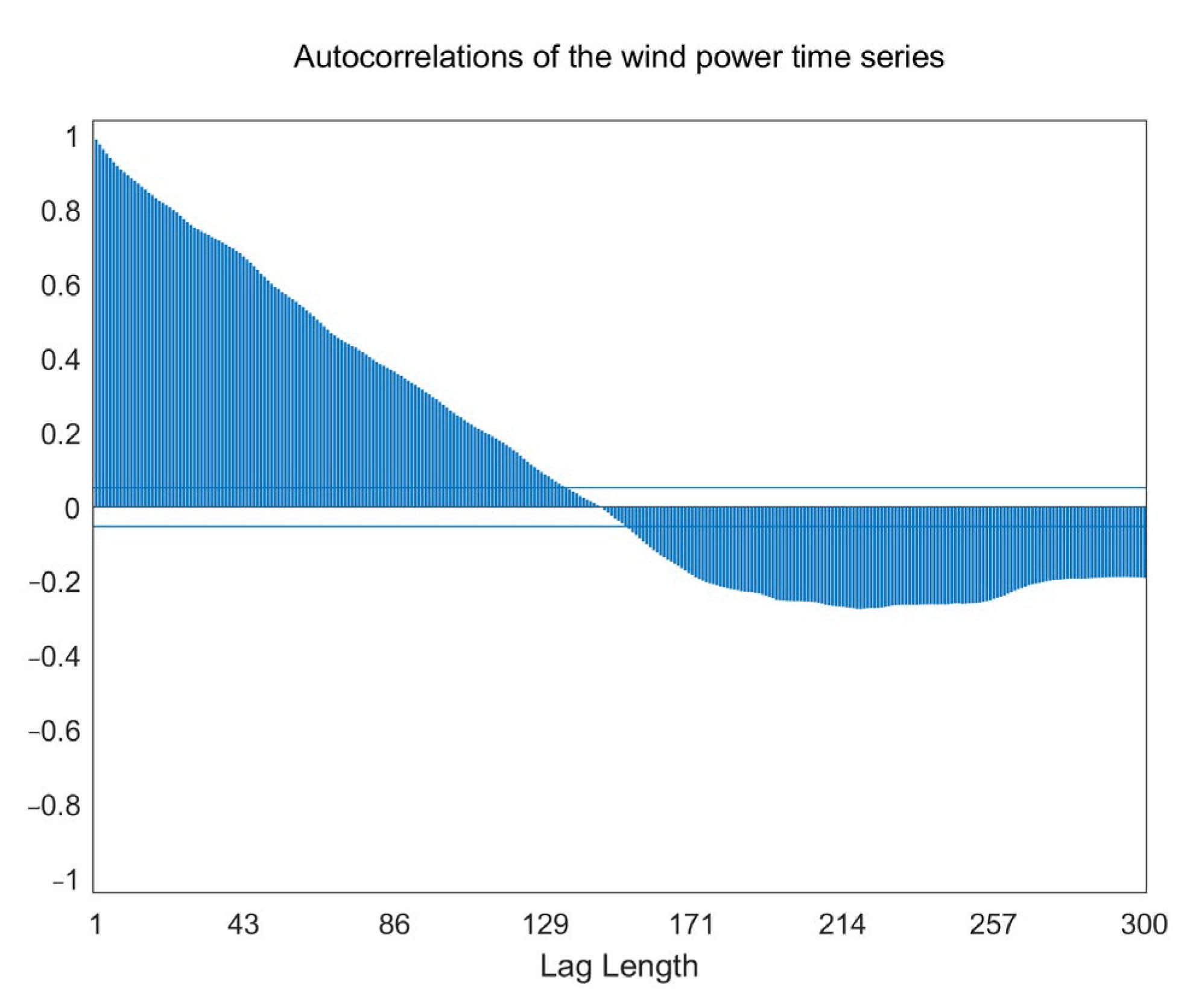

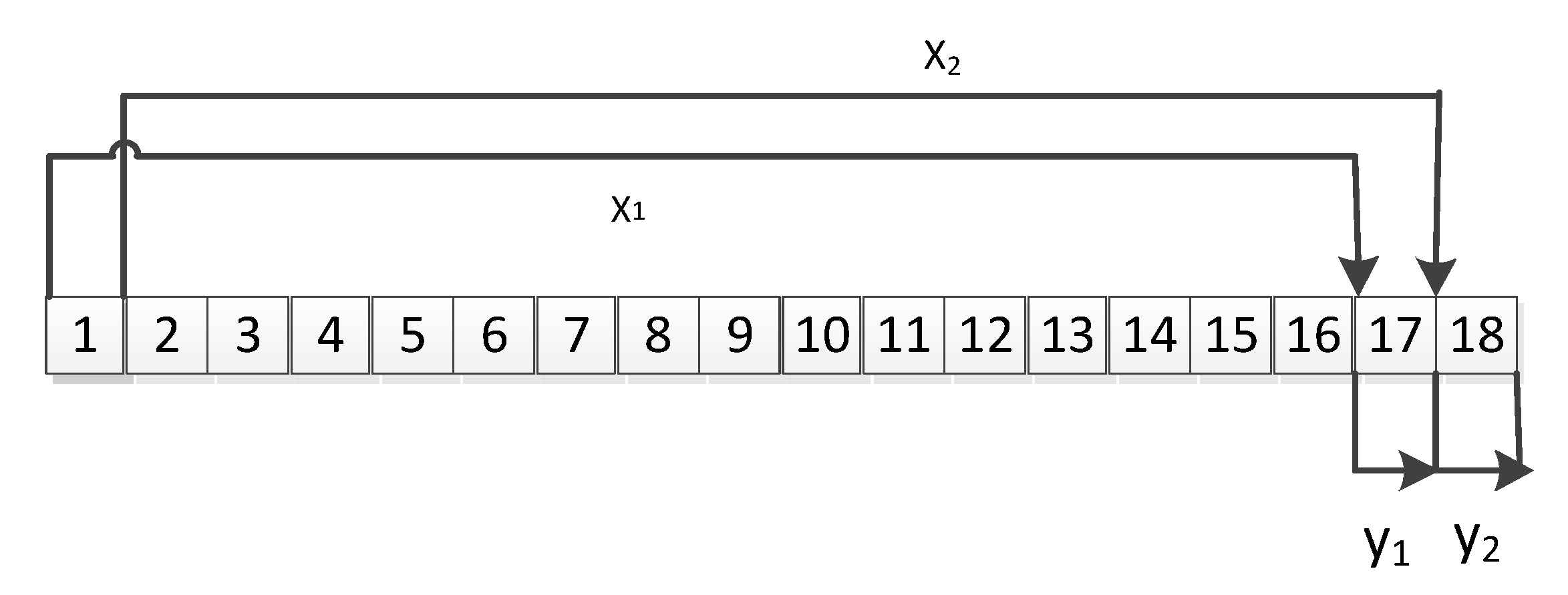

3.1. Sample Selection and Processing

3.2. Optimizing Performance Analysis

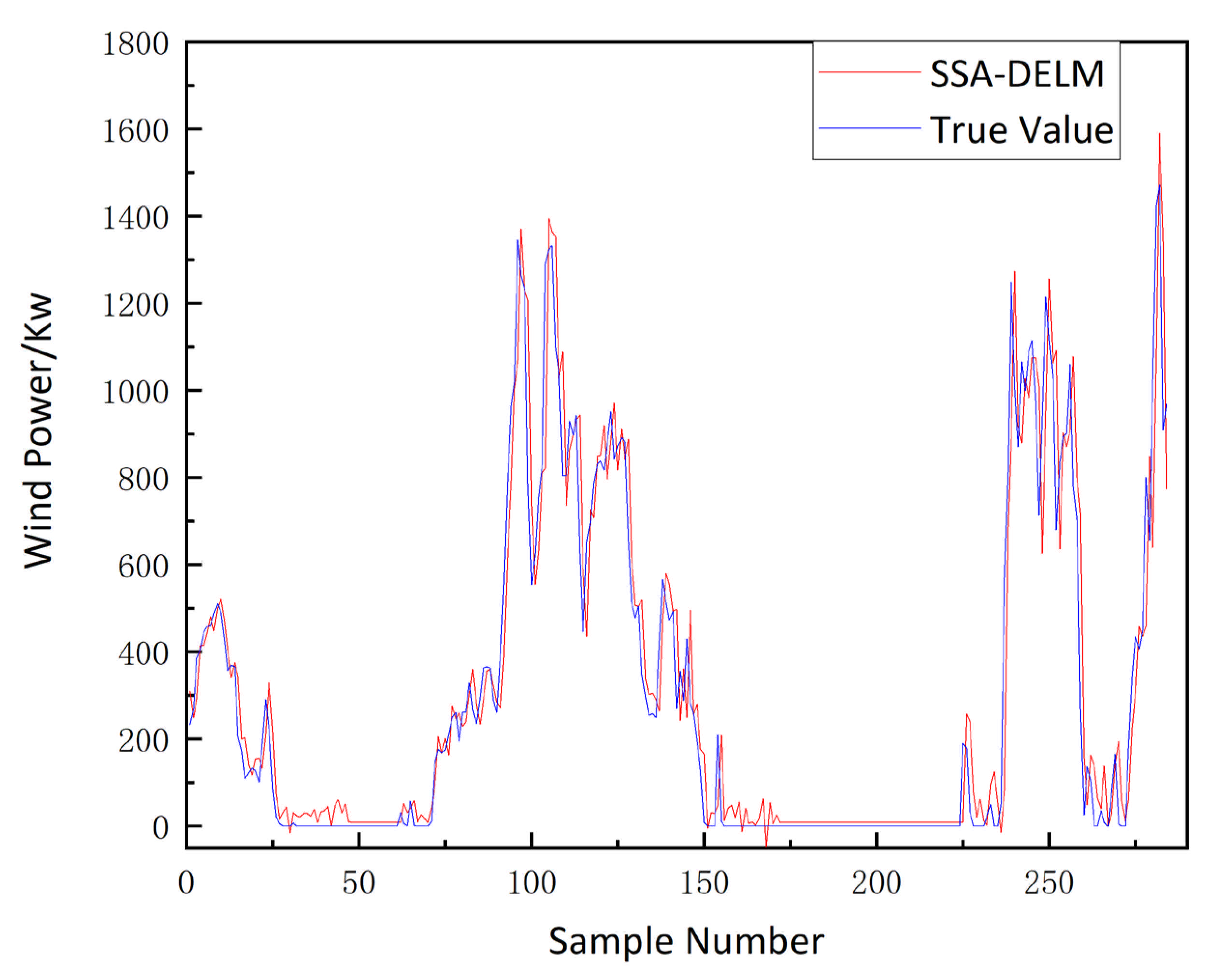

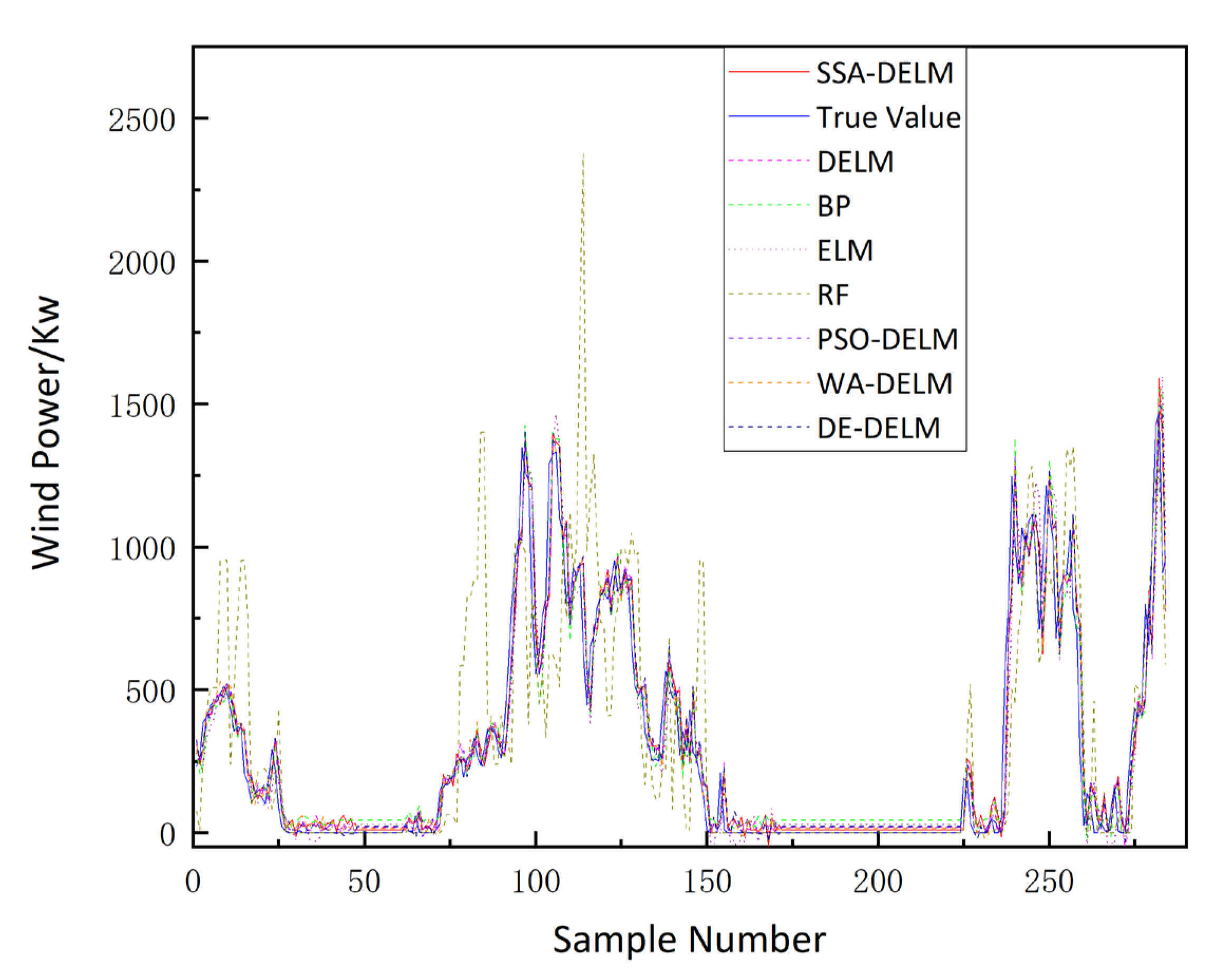

3.3. Analysis of Prediction Results

4. Conclusions

- (1) The method based on the DELM model to optimize the time series’ length for rolling sequence prediction can meet the requirements of the proposed SSA-DELM model and achieve higher training efficiency;

- (2) The SSA-DELM wind power prediction model proposed in this paper performs better than the four models of RF, BP, ELM, and SSA-ELM in terms of MAE, RMSE, and R². Compared with the traditional DELM, the proposed combination of SSA and DELM reduces RMSE and MAE by 4.801% and 5.566%, respectively, and increases R² by 2.589%. Compared with DE-DELM, PSO-DELM, and WA-DELM, the proposed model reduces RMSE by 1.726%, 0.686%, and 0.609%, reduces MAE by 4.215%, 3.970%, and 3.676%, and increases R² by 1.726%, 1.294%, and 0.647%, respectively;

- (3) In the current wind power forecasting model, the input and output samples are normalized power time series, which are sensitive to noise and abnormal data. In the future, we will consider using advanced data processing methods to decompose the original data and reduce noise, then predict each sequence obtained from the decomposition separately and fuse the prediction results to improve the robustness of the model.
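The percentages quoted in conclusion (2) can be rechecked from the DELM and SSA-DELM rows of the results table. The short calculation below is only an arithmetic check; the variable names are illustrative. The quoted R² gain of 2.589% appears to be obtained by dividing the difference by the SSA-DELM value (0.927); dividing by the DELM baseline (0.903) would give about 2.658% instead.

```python
# RMSE, MAE, and R2 values copied from the results table.
delm = {"RMSE": 121.268, "MAE": 73.917, "R2": 0.903}
ssa_delm = {"RMSE": 115.446, "MAE": 69.803, "R2": 0.927}

# Relative reductions with respect to the DELM baseline.
rmse_drop = (delm["RMSE"] - ssa_delm["RMSE"]) / delm["RMSE"] * 100
mae_drop = (delm["MAE"] - ssa_delm["MAE"]) / delm["MAE"] * 100

# R2 gain computed two ways: relative to SSA-DELM and relative to DELM.
r2_gain_vs_new = (ssa_delm["R2"] - delm["R2"]) / ssa_delm["R2"] * 100
r2_gain_vs_base = (ssa_delm["R2"] - delm["R2"]) / delm["R2"] * 100

print(f"RMSE reduction: {rmse_drop:.3f}%")
print(f"MAE reduction:  {mae_drop:.3f}%")
print(f"R2 gain (vs SSA-DELM): {r2_gain_vs_new:.3f}%")
print(f"R2 gain (vs DELM):     {r2_gain_vs_base:.3f}%")
```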

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| Sample Point | Wind Power (kW) | Wind Speed (m/s) | Wind Direction (°) |
|---|---|---|---|
| 1 | 752.73 | 6.60 | 242.78 |
| 2 | 589.07 | 5.98 | 234.98 |
| 3 | 1109.13 | 7.42 | 235.15 |
| 4 | 1482.46 | 8.19 | 238.48 |
| 5 | 1523.43 | 8.27 | 237.03 |

| Model | RMSE | MAE | R² |
|---|---|---|---|
| RF | 285.881 | 159.592 | 0.601 |
| BP | 132.592 | 94.360 | 0.826 |
| ELM | 128.356 | 77.146 | 0.893 |
| DELM | 121.268 | 73.917 | 0.903 |
| DE-DELM | 117.473 | 72.875 | 0.911 |
| PSO-DELM | 116.243 | 72.689 | 0.915 |
| WA-DELM | 116.153 | 72.467 | 0.921 |
| SSA-DELM | 115.446 | 69.803 | 0.927 |
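For reference, the three metrics reported in the table above can be computed as in the sketch below. It uses the standard definitions of MAE, RMSE, and the coefficient of determination, which are assumed here to match the paper's; the function name `evaluate` and the toy arrays are illustrative only.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """Return MAE, RMSE, and R2 for two equally long sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mae = float(np.mean(np.abs(err)))
    rmse = float(np.sqrt(np.mean(err ** 2)))
    # R2 = 1 - residual sum of squares / total sum of squares.
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    r2 = 1.0 - float(np.sum(err ** 2)) / ss_tot
    return {"MAE": mae, "RMSE": rmse, "R2": r2}

# Toy usage with made-up numbers (not results from the paper).
scores = evaluate([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
print(scores)
```

Lower MAE and RMSE and a higher R² indicate a better fit, which is the ordering used to compare the models in the table.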

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

An, G.; Jiang, Z.; Chen, L.; Cao, X.; Li, Z.; Zhao, Y.; Sun, H. Ultra Short-Term Wind Power Forecasting Based on Sparrow Search Algorithm Optimization Deep Extreme Learning Machine. Sustainability 2021, 13, 10453. https://doi.org/10.3390/su131810453
