A Framework for Evaluating and Disclosing the ESG Related Impacts of AI with the SDGs

Abstract

1. Introduction

2. Sustainability, the SDGs, and Efforts to Tackle the Sustainability of Corporations

There are several reasons for the current concern with corporate social responsibility. In recent years, the level of criticism of the business system has risen sharply. Not only has the performance of business been called into question, but so too have the power and privilege associated with large corporations. Some critics have even questioned the corporate system’s ability to cope with future problems.[14] (p. 59)

3. The Sustainability Impacts of AI

… we considered as AI any software technology with at least one of the following capabilities: perception—including audio, visual, textual, and tactile (e.g., face recognition), decision-making (e.g., medical diagnosis systems), prediction (e.g., weather forecast), automatic knowledge extraction and pattern recognition from data (e.g., discovery of fake news circles in social media), interactive communication (e.g., social robots or chat bots), and logical reasoning (e.g., theory development from premises). This view encompasses a large variety of subfields, including machine learning.

Sustainability reporting, as promoted by the GRI Standards, is an organisation’s practice of reporting publicly on its economic, environmental, and/or social impacts, and hence its contributions—positive or negative—towards the goal of sustainable development.[38] (p. 3)

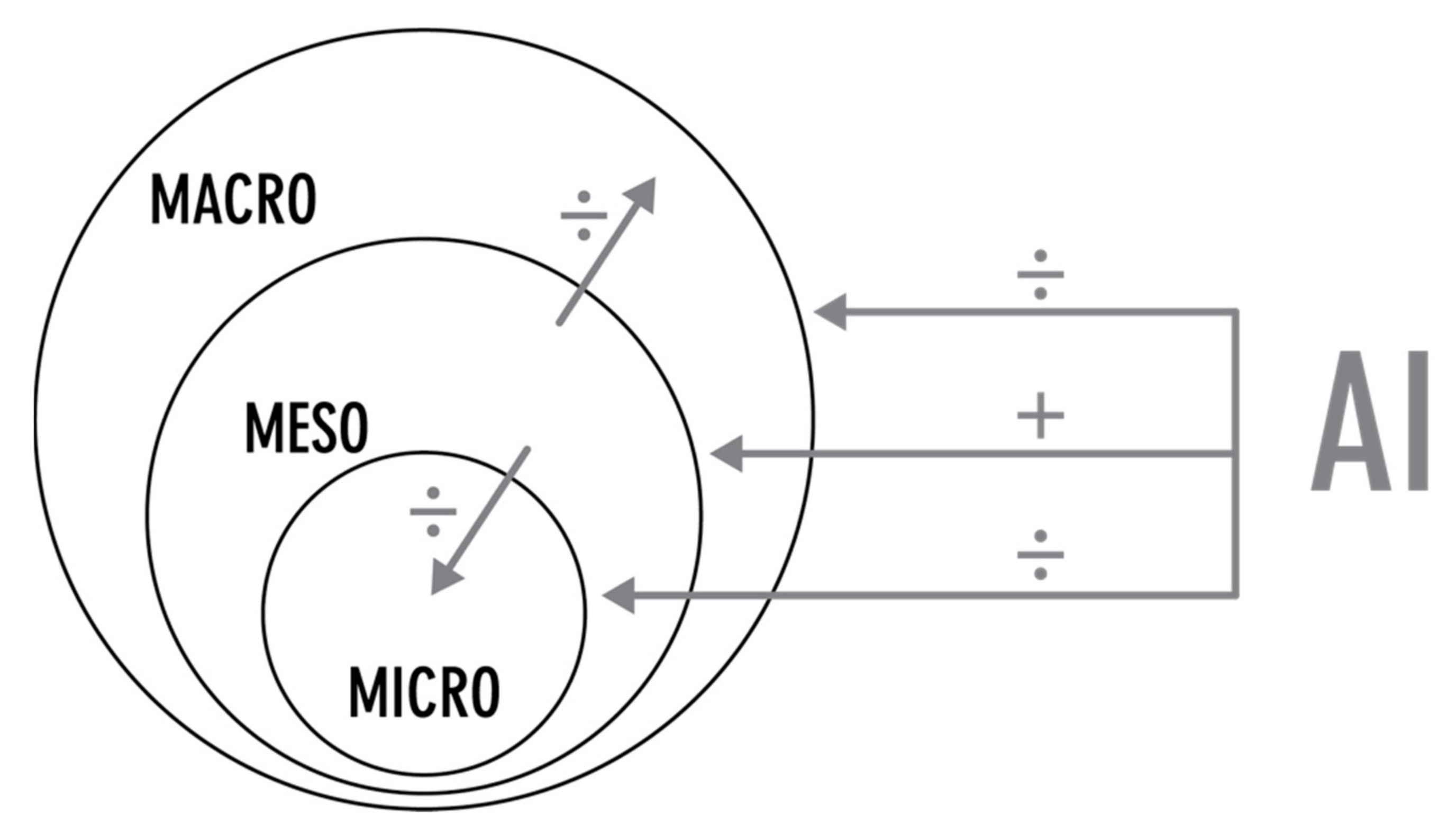

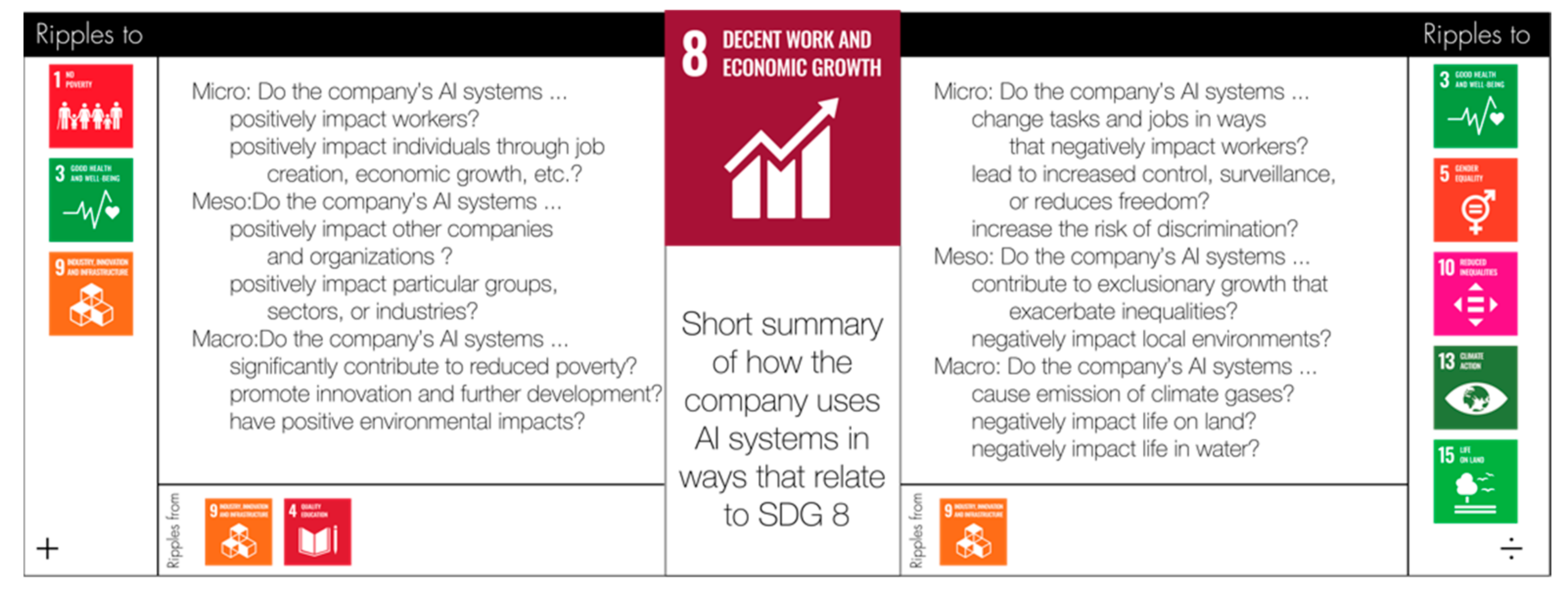

4. Using the SDGs to Evaluate AI System Impacts

4.1. The Process and the Framework

4.2. AI Evaluation in Practice

And, as we make advancements in AI, we are asking ourselves tough questions—like not only what computers can do, but what should they do. That’s why we are investing in tools for detecting and addressing bias in AI systems and advocating for thoughtful government regulation.[60] (p. 12)

… is a powerful force for good, and all of us here at Microsoft are working together to foster a sustainable future where everyone has access to the benefits it provides and the opportunities it creates.[42] (p. 4)

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Makridakis, S. The forthcoming Artificial Intelligence (AI) revolution: Its impact on society and firms. Futures 2017, 90, 46–60.

2. De Sousa, W.G.; de Melo, E.R.P.; Bermejo, P.H.D.S.; Farias, R.A.S.; Gomes, A.O. How and where is artificial intelligence in the public sector going? A literature review and research agenda. Gov. Inf. Q. 2019, 36, 101392.

3. Di Vaio, A.; Palladino, R.; Hassan, R.; Escobar, O. Artificial intelligence and business models in the sustainable development goals perspective: A systematic literature review. J. Bus. Res. 2020, 121, 283–314.

4. Brynjolfsson, E.; McAfee, A. The business of artificial intelligence. Harv. Bus. Rev. 2017, 7, 3–11.

5. Walker, J.; Pekmezovic, A.; Walker, G. Sustainable Development Goals: Harnessing Business to Achieve the SDGs through Finance, Technology and Law Reform; John Wiley & Sons: Hoboken, NJ, USA, 2019.

6. Verbin, I. Corporate Responsibility in the Digital Age: A Practitioner’s Roadmap for Corporate Responsibility in the Digital Age; Routledge: London, UK, 2020.

7. United Nations. Transforming Our World: The 2030 Agenda for Sustainable Development; Division for Sustainable Development Goals: New York, NY, USA, 2015.

8. Van Wynsberghe, A. Sustainable AI: AI for sustainability and the sustainability of AI. AI Ethics 2021, 1–6.

9. Brundtland, G.H.; Khalid, M.; Agnelli, S.; Al-Athel, S.; Chidzero, B. Our Common Future; Oxford University Press: New York, NY, USA, 1987; Volume 8.

10. Demuijnck, G.; Fasterling, B. The social license to operate. J. Bus. Ethics 2016, 136, 675–685.

11. Moon, J. Corporate Social Responsibility: A Very Short Introduction; OUP Oxford: Oxford, UK, 2014.

12. Marczewska, M.; Kostrzewski, M. Sustainable business models: A bibliometric performance analysis. Energies 2020, 13, 6062.

13. Nosratabadi, S.; Mosavi, A.; Shamshirband, S.; Kazimieras Zavadskas, E.; Rakotonirainy, A.; Chau, K.W. Sustainable business models: A review. Sustainability 2019, 11, 1663.

14. Jones, T.M. Corporate social responsibility revisited, redefined. Calif. Manag. Rev. 1980, 22, 59–67.

15. Petit, N. Big Tech and the Digital Economy: The Moligopoly Scenario; Oxford University Press: Oxford, UK, 2020; p. 11.

16. Zuboff, S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power; PublicAffairs: New York, NY, USA, 2019.

17. Vinuesa, R.; Azizpour, H.; Leite, I.; Balaam, M.; Dignum, V.; Domisch, S.; Felländer, A.; Langhans, S.D.; Tegmark, M.; Nerini, F.F. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 2020, 11, 1–10.

18. Berenberg. Understanding the SDGs in Sustainable Investing; Joh Berenberg, Gossler & Co. KG: Hamburg, Germany, 2018.

19. Esty, D.C.; Cort, T. (Eds.) Values at Work: Sustainable Investing and ESG Reporting; Palgrave Macmillan: Cham, Switzerland, 2020.

20. Eckhart, M. Financial Regulations and ESG Investing: Looking Back and Forward. In Values at Work; Esty, D.C., Cort, T., Eds.; Palgrave Macmillan: Cham, Switzerland, 2020; pp. 211–228.

21. European Commission. The European Green Deal; European Commission: Geneva, Switzerland, 2019.

22. EU Technical Expert Group on Sustainable Finance. Taxonomy: Final Report of the Technical Expert Group on Sustainable Finance; EU Technical Expert Group on Sustainable Finance: Brussels, Belgium, 2020.

23. Bose, S. Evolution of ESG Reporting Frameworks. In Values at Work; Esty, D.C., Cort, T., Eds.; Palgrave Macmillan: Cham, Switzerland, 2020; pp. 13–33.

24. World Economic Forum. Measuring Stakeholder Capitalism: Towards Common Metrics and Consistent Reporting of Sustainable Value Creation; World Economic Forum: Graubunden, Switzerland, 2020.

25. SDG Compass. SDG Compass: The Guide for Business Action on the SDGs; Global Reporting Initiative: Amsterdam, The Netherlands, 2015.

26. Chui, M.; Harryson, M.; Manyika, J.; Roberts, R.; Chung, R.; van Heteren, A.; Nel, P. Notes from the AI Frontier: Applying AI for Social Good; McKinsey Global Institute: San Francisco, CA, USA, 2018.

27. Sætra, H.S. AI in context and the sustainable development goals: Factoring in the unsustainability of the sociotechnical system. Sustainability 2021, 13, 1738.

28. Truby, J. Governing Artificial Intelligence to benefit the UN Sustainable Development Goals. Sustain. Dev. 2020, 28, 946–959.

29. Khakurel, J.; Penzenstadler, B.; Porras, J.; Knutas, A.; Zhang, W. The rise of artificial intelligence under the lens of sustainability. Technologies 2018, 6, 100.

30. Toniolo, K.; Masiero, E.; Massaro, M.; Bagnoli, C. Sustainable business models and artificial intelligence: Opportunities and challenges. In Knowledge, People, and Digital Transformation; Springer: Cham, Switzerland, 2020; pp. 103–117.

31. Yigitcanlar, T.; Cugurullo, F. The sustainability of artificial intelligence: An urbanistic viewpoint from the lens of smart and sustainable cities. Sustainability 2020, 12, 8548.

32. Dignum, V. Responsible Artificial Intelligence: How to Develop and Use AI in a Responsible Way; Springer: Cham, Switzerland, 2019.

33. Floridi, L.; Cowls, J.; Beltrametti, M.; Chatila, R.; Chazerand, P.; Dignum, V.; Luetge, C.; Madelin, R.; Pagallo, U.; Rossi, F. AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds Mach. 2018, 28, 689–707.

34. ITU. AI4Good Global Summit. Available online: https://aiforgood.itu.int (accessed on 31 January 2021).

35. Tomašev, N.; Cornebise, J.; Hutter, F.; Mohamed, S.; Picciariello, A.; Connelly, B.; Belgrave, D.C.; Ezer, D.; van der Haert, F.C.; Mugisha, F. AI for social good: Unlocking the opportunity for positive impact. Nat. Commun. 2020, 11, 1–6.

36. Google. AI for Social Good: Applying AI to Some of the World’s Biggest Challenges. Available online: https://ai.google/social-good/ (accessed on 20 February 2021).

37. Berberich, N.; Nishida, T.; Suzuki, S. Harmonizing Artificial Intelligence for Social Good. Philos. Technol. 2020, 33, 613–638.

38. GRI. Consolidated Set of GRI Sustainability Reporting Standards 2020; GRI: Amsterdam, The Netherlands, 2020.

39. GRI. Linking the SDGs and the GRI Standards; GRI: Amsterdam, The Netherlands, 2020.

40. Nižetić, S.; Djilali, N.; Papadopoulos, A.; Rodrigues, J.J. Smart technologies for promotion of energy efficiency, utilization of sustainable resources and waste management. J. Clean. Prod. 2019, 231, 565–591.

41. Kaab, A.; Sharifi, M.; Mobli, H.; Nabavi-Pelesaraei, A.; Chau, K.-w. Combined life cycle assessment and artificial intelligence for prediction of output energy and environmental impacts of sugarcane production. Sci. Total Environ. 2019, 664, 1005–1019.

42. Microsoft. Microsoft and the United Nations Sustainable Development Goals; Microsoft: Redmond, WA, USA, 2020.

43. Google. Environmental Report 2019; Google: Menlo Park, CA, USA, 2019.

44. Google. Working Together to Apply AI for Social Good. Available online: https://ai.google/social-good/impact-challenge (accessed on 23 February 2021).

45. Nilashi, M.; Rupani, P.F.; Rupani, M.M.; Kamyab, H.; Shao, W.; Ahmadi, H.; Rashid, T.A.; Aljojo, N. Measuring sustainability through ecological sustainability and human sustainability: A machine learning approach. J. Clean. Prod. 2019, 240, 118162.

46. Houser, K.A. Can AI Solve the Diversity Problem in the Tech Industry: Mitigating Noise and Bias in Employment Decision-Making. Stan. Tech. L. Rev. 2019, 22, 290.

47. Noble, S.U. Algorithms of Oppression: How Search Engines Reinforce Racism; New York University Press: New York, NY, USA, 2018.

48. Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; pp. 77–91.

49. Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event Canada, 3–10 March 2021.

50. Solove, D.J. Privacy and power: Computer databases and metaphors for information privacy. Stan. L. Rev. 2000, 53, 1393.

51. Yeung, K. ‘Hypernudge’: Big Data as a mode of regulation by design. Inf. Commun. Soc. 2017, 20, 118–136.

52. Sætra, H.S. When nudge comes to shove: Liberty and nudging in the era of big data. Technol. Soc. 2019, 59, 101130.

53. Culpepper, P.D.; Thelen, K. Are we all Amazon primed? Consumers and the politics of platform power. Comp. Political Stud. 2020, 53, 288–318.

54. Gillespie, T. The politics of ‘platforms’. New Media Soc. 2010, 12, 347–364.

55. Sagers, C. Antitrust and Tech Monopoly: A General Introduction to Competition Problems in Big Data Platforms: Testimony Before the Committee on the Judiciary of the Ohio Senate. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3471823 (accessed on 17 October 2019).

56. Sætra, H.S. The tyranny of perceived opinion: Freedom and information in the era of big data. Technol. Soc. 2019, 59, 101155.

57. Sunstein, C.R. #Republic: Divided Democracy in the Age of Social Media; Princeton University Press: Princeton, NJ, USA, 2018.

58. Brevini, B. Black boxes, not green: Mythologizing artificial intelligence and omitting the environment. Big Data Soc. 2020, 7, 2053951720935141.

59. Microsoft. Awards & Recognition. Available online: https://www.microsoft.com/en-us/corporate-responsibility/recognition (accessed on 15 February 2021).

60. Microsoft. Microsoft 2018: Corporate Social Responsibility Report; Microsoft: Redmond, WA, USA, 2018.

61. Microsoft. Microsoft 2019: Corporate Social Responsibility Report; Microsoft: Redmond, WA, USA, 2019.

62. Microsoft. Our Commitment to Sustainable Development. Available online: https://www.microsoft.com/en-us/corporate-responsibility/un-sustainable-development-goals (accessed on 20 February 2021).

63. Microsoft. The Future Computed: Artificial Intelligence and Its Role in Society; Microsoft: Redmond, WA, USA, 2018.

64. Smith, B.; Browne, C.A. Tools and Weapons: The Promise and the Peril of the Digital Age; Penguin: New York, NY, USA, 2019.

65. Manokha, I. The Implications of Digital Employee Monitoring and People Analytics for Power Relations in the Workplace. Surveill. Soc. 2020, 18, 540–554.

66. European Commission. Europe Fit for the Digital Age: Commission Proposes New Rules and Actions for Excellence and Trust in Artificial Intelligence; European Commission: Geneva, Switzerland, 2021.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sætra, H.S. A Framework for Evaluating and Disclosing the ESG Related Impacts of AI with the SDGs. Sustainability 2021, 13, 8503. https://doi.org/10.3390/su13158503