Complex Thinking and Sustainable Social Development: Validity and Reliability of the COMPLEX-21 Scale

Abstract

1. Introduction

2. Literature Review

2.1. Complex Thinking as an Epistemological Approach

2.2. Complex Thinking as Higher-Order Thinking

2.3. Complex Thinking as a Macro-Competence

2.4. Complex Thinking as an or Comprehensive Performance

2.5. Scales to Assess Complex Thinking

3. Materials and Methods

3.1. Participants

3.2. Procedure

3.3. Ethical Aspects

4. Results

4.1. Stage 1. Design and Peer Review Process

4.2. Stage 2. Content Validity

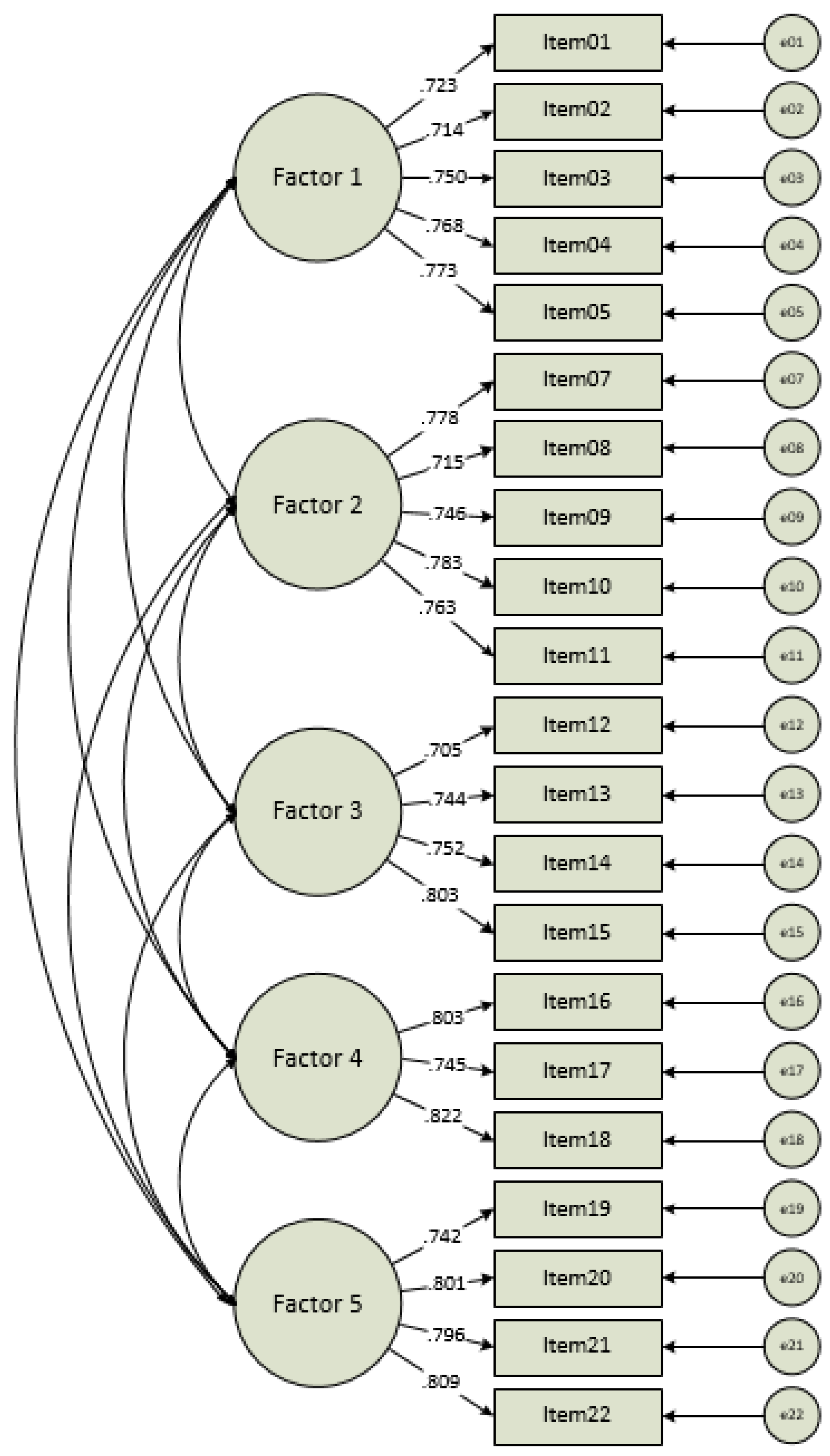

4.3. Stage 3. Construct Validity

4.4. Stage 4. Convergent Validity

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Complex Thinking Scale in University Students

- There are 21 questions classified in five dimensions assessing complex thinking.

- Read each question carefully and choose how often you carry out the process: never, practically never, sometimes, practically always, or always. Choose the option that best fits what you have done in the last six months.

- All of the questions must be answered.

- Your answers are fully confidential.

- This is simply a self-assessment instrument that allows you to assess your level of development in complex thinking. It is not a personality or intelligence test.

- By answering the instrument, you agree to participate in the process. All of the information will be strictly confidential.

| Problem Solving | Never | Practically Never | Sometimes | Practically Always | Always |
|---|---|---|---|---|---|
| 1. Are you able to identify, detect and/or deal with a problem to be solved? | | | | | |
| 2. Do you understand what a problem is and the different aspects composing it, such as the need that must be solved, the context of the problem and the challenge to overcome? | | | | | |
| 3. Do you understand problems by establishing the causes and consequences, as well as the side effects and the appropriateness of possible solutions? | | | | | |
| 4. Do you propose alternatives to solve problems, analyze them, compare them to each other and then pick the best option while considering possible situations of uncertainty in the context? | | | | | |
| 5. While facing a problem, do you find the solution by analyzing the several factors, relating them to each other, taking into account the possible side consequences and considering the uncertainty elements? | | | | | |
| Critical Analysis | Never | Practically Never | Sometimes | Practically Always | Always |
| * 6. Do you question the facts to find opportunity areas and to implement improvements? | | | | | |
| 7. Do you verify information, taking into account the bibliographic resources and the facts of the context? | | | | | |
| 8. Do you analyze your own and other people's ideas, recognize the positive aspects and detect possible weak points to suggest new improvements? | | | | | |
| 9. Do you argue about situations and problems while avoiding generalizations and acknowledging the possible weak points of your analysis? | | | | | |
| 10. Do you make your choices by considering both the positive and negative aspects of a situation in order to achieve a specific goal? | | | | | |
| 11. Do you think and act in a flexible way and are you able to adapt to the situations of the context to resolve the problems? | | | | | |
| Metacognition | Never | Practically Never | Sometimes | Practically Always | Always |
| 12. Do you think about how you are going to carry out activities with the purpose of focusing on them, finishing them and achieving a specific purpose, while trying to correct possible errors? | | | | | |
| 13. Do you make changes in the way actions are carried out by thinking about them and do you correct your errors with the purpose of finishing the activities and achieving a specific goal? | | | | | |
| 14. Do you self-assess achievements and aspects to improve in the implementation of activities, and are you aware of the learning generated in order to use it in new situations? | | | | | |
| 15. Do you self-assess your moral actions, acknowledge your mistakes and make changes for the better in your actions? | | | | | |
| Systemic Analysis | Never | Practically Never | Sometimes | Practically Always | Always |
| 16. Do you face a problem from different points of view or perspectives while looking for their complementarity? | | | | | |
| 17. Do you intend to join forces with others to understand and solve problems of context more efficiently? | | | | | |
| 18. Do you intend to identify uncertain situations while addressing problems and do you face them with flexible strategies? | | | | | |
| Creativity | Never | Practically Never | Sometimes | Practically Always | Always |
| 19. Do you find solutions to the problems without letting yourself be carried away by tradition or authority? | | | | | |
| 20. Are you the one proposing solutions to the problems and are these different from the ones already established in the context and the reported ones in bibliography resources? | | | | | |
| 21. Do you change the way in which you explain and solve a problem, through a different synthesis, a question that changes the analysis or even a new solution? | | | | | |
| 22. Do you intend to make a great impact in the problem-solving process regarding what has been done up to now and by following new strategies? | | | | | |
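
The appendix groups the items into five dimensions but does not say how responses are turned into scores. The following is a minimal scoring sketch, not the authors' procedure. It assumes a 1 to 5 coding of the five response options and a simple mean per dimension, and it scores all 22 items as they are listed in the table. Item 6 is marked with an asterisk whose meaning is not explained in this section, so whether it belongs in the final score is left open. The names `DIMENSIONS`, `CODES` and `score` are hypothetical.

```python
from statistics import mean

# Item numbers per dimension, following the order of the appendix table.
DIMENSIONS = {
    "Problem solving": range(1, 6),
    "Critical analysis": range(6, 12),
    "Metacognition": range(12, 16),
    "Systemic analysis": range(16, 19),
    "Creativity": range(19, 23),
}

# Assumed numeric coding of the response options (not stated in the appendix).
CODES = {
    "Never": 1,
    "Practically never": 2,
    "Sometimes": 3,
    "Practically always": 4,
    "Always": 5,
}


def score(responses):
    """Return the mean coded response per dimension.

    `responses` maps item number -> response label. Every listed item
    must be answered, as the instructions require.
    """
    expected = {item for items in DIMENSIONS.values() for item in items}
    missing = expected - set(responses)
    if missing:
        raise ValueError(f"unanswered items: {sorted(missing)}")
    return {
        name: mean(CODES[responses[item]] for item in items)
        for name, items in DIMENSIONS.items()
    }


# Hypothetical respondent: "Sometimes" everywhere except the creativity items.
example = {item: "Sometimes" for item in range(1, 23)}
example.update({item: "Always" for item in range(19, 23)})
profile = score(example)
```

Reporting a mean per dimension keeps the five scores on the same 1 to 5 range even though the dimensions have different numbers of items. A sum score would not.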

References

- Mylek, M.R.; Schirmer, J. Understanding acceptability of fuel management to reduce wildfire risk: Informing communication through understanding complexity of thinking. For. Policy Econ. 2020, 113, 102120.

- Axpe, M.R.V. Ciencias de la complejidad vs. pensamiento complejo. Claves para una lectura crítica del concepto de cientificidad en Carlos Reynoso. Pensam. Rev. Investig. Inf. Filos. 2019, 75, 87–106.

- Costa, V.T.; Meirelles, B.H.S. Adherence to treatment of young adults living with HIV/AIDS from the perspective of complex thinking. Texto Context. Enferm. 2019, 28, 1–15.

- Stoian, A.P.; Mitrofan, G.; Colceag, F.; Suceveanu, A.I.; Hainarosie, R.; Pituru, S.; Diaconu, C.C.; Timofte, D.; Nitipir, C.; Poiana, C.; et al. Oxidative Stress in Diabetes. A model of complex thinking applied in medicine. Rev. Chim. 2018, 69, 2515–2519.

- Block, M. Complex Environment Calls for Complex Thinking: About Knowledge Sharing Culture. J. Rev. Glob. Econ. 2019, 8, 141–152.

- Lin, Y.-T. Impacts of a flipped classroom with a smart learning diagnosis system on students’ learning performance, perception, and problem solving ability in a software engineering course. Comput. Hum. Behav. 2019, 95, 187–196.

- Krevetzakis, E. On the Centrality of Physical/Motor Activities in Primary Education. J. Adv. Educ. Res. 2019, 4, 24–33.

- Hanlon, J.P.; Prihoda, T.J.; Verrett, R.G.; Jones, J.D.; Haney, S.J.; Hendricson, W.D. Critical Thinking in Dental Students and Experienced Practitioners Assessed by the Health Sciences Reasoning Test. J. Dent. Educ. 2018, 82, 916–920.

- Peeters, M.J.; Zitko, K.L.; Schmude, K.A. Development of Critical Thinking in Pharmacy Education. Innov. Pharm. 2016, 7.

- Tran, T.B.L.; Ho, T.N.; MacKenzie, S.V.; Le, L.K. Developing assessment criteria of a lesson for creativity to promote teaching for creativity. Think. Ski. Creat. 2017, 25, 10–26.

- Martinsen, Ø.L.; Furnham, A. Cognitive style and competence motivation in creative problem solving. Pers. Individ. Differ. 2019, 139, 241–246.

- Morin, E. The Seven Knowledge Necessary for the Education of the Future; Santillana-Unesco: Paris, France, 1999.

- Morin, E. Introduction to Complex Thinking; Gedisa: Barcelona, Spain, 1995.

- Terrado, P.R. Aplicación de las teorías de la complejidad a la comprensión del territorio. Estud. Geogr. 2018, 79, 237–265.

- Morin, E. La Mente Bien Ordenada. Repensar la Reforma. Reformar el Pensamiento; Seix Barral: Barcelona, Spain, 2000.

- Lipman, M. Pensamiento Complejo y Educación [Complex Thinking and Education]; Ediciones de la Torre: Madrid, Spain, 1997.

- Saremi, H.; Bahdori, S. The Relationship between Critical Thinking with Emotional Intelligence and Creativity among Elementary School Principals in Bojnord City, Iran. Int. J. Life Sci. 2015, 9, 33–40.

- Klimenko, O.; Aristizábal, A.; Restrepo, C. Pensamiento crítico y creativo en la educación preescolar: Algunos aportes desde la neuropsicopedagogia. Katharsis 2019, 28, 59–89.

- Hasan, R.; Lukitasari, M.; Utami, S.; Anizar, A. The activeness, critical, and creative thinking skills of students in the Lesson Study-based inquiry and cooperative learning. J. Pendidik. Biol. Indones. 2019, 5, 77–84.

- Siburian, O.; Corebima, A.D.; Saptasari, M. The Correlation between Critical and Creative Thinking Skills on Cognitive Learning Results. Eurasian J. Educ. Res. 2019, 19, 1–16.

- Avetisyan, N.; Hayrapetyan, L.R. Mathlet as a new approach for improving critical and creative thinking skills in mathematics. Int. J. Educ. Res. 2017, 12. Available online: https://cutt.ly/otxYbSB (accessed on 8 May 2021).

- Beaty, R.E.; Benedek, M.; Silvia, P.J.; Schacter, D.L. Creative Cognition and Brain Network Dynamics. Trends Cogn. Sci. 2016, 20, 87–95.

- Asefi, M.; Imani, E. Effects of active strategic teaching model (ASTM) in creative and critical thinking skills of architecture students. Archnet-IJAR Int. J. Arch. Res. 2018, 12, 209–222.

- Beaty, R.E.; Seli, P.; Schacter, D.L. Network neuroscience of creative cognition: Mapping cognitive mechanisms and individual differences in the creative brain. Curr. Opin. Behav. Sci. 2019, 27, 22–30.

- Pacheco, C.S. Art Education for the Development of Complex Thinking Metacompetence: A Theoretical Approach. Int. J. Art Des. Educ. 2019, 39, 242–254.

- Murrain, E.; Barrera, N.F.; Vargas, Y. Cuatro reflexiones sobre la docencia. Rev. Repert. Med. Cirugía 2017, 26, 242–248.

- Puziol, J.K.P.; Barreyro, G.B. Alfa tuning Latin America project: The relationship between elaboration and implementation in participating Brazilian universities. Acta Sci. 2018, 40, e37338.

- Gomez, J.T.A. La competencia europeísta: Una competencia integradora. Bordón. Rev. Pedagog. 2015, 67, 35.

- Cuadra-Martínez, D.J.; Castro, P.J.; Juliá, M.T. Tres Saberes en la Formación Profesional por Competencias: Integración de Teorías Subjetivas, Profesionales y Científicas. Form. Univ. 2018, 11, 19–30.

- Palma, M.; Rios, I.D.L.; Miñán, E. Generic competences in engineering field: A comparative study between Latin America and European Union. Procedia Soc. Behav. Sci. 2011, 15, 576–585.

- Beneitone, P.; Esquetini, C.; González, J.; Maletá, M.M.; Siufi, G.; Wagenaar, R. Reflexões e Perspectivas da Educação Superior na América Latina: Informe Final do Projeto Tuning—2004–2007; Universidad de Deusto: Bilbao, Spain, 2007.

- Anastacio, M.R. Proposals for Teacher Training in the Face of the Challenge of Educating for Sustainable Development: Beyond Epistemologies and Methodologies. In Universities and Sustainable Communities: Meeting the Goals of the Agenda 2030; Springer: Cham, Switzerland, 2020.

- Luna-Nemecio, J.; Tobón, S.; Juárez-Hernández, L.G. Sustainability-based on socioformation and complex thought or sustainable social development. Resour. Environ. Sustain. 2020, 2, 100007.

- Luna-Nemecio, J. Para pensar el Desarrollo Social Sostenible: Múltiples Enfoques, un Mismo Objetivo. 2020. Available online: https://www.researchgate.net/profile/Josemanuel-Luna-Nemecio/publication/339628029_Para_pensar_el_desarrollo_social_sostenible_multiples_enfoques_un_mismo_objetivo/links/5e5d2bfaa6fdccbeba138607/Para-pensar-el-desarrollo-social-sostenible-multiples-enfoques-un-mismo-objetivo.pdf (accessed on 8 May 2021).

- Jarquín-Cisneros, L.M. How to generate ethical teachers through Socioformation to achieve Sustainable Social Development? Ecocience Int. J. 2019, 1, 29–32.

- Santoyo-Ledesma, D. Approach to Sustainable Social Development and Human Talent Management in the context of socioformation. Ecocience Int. J. 2019, 1, 112–123.

- Maury Mena, S.C.; Marín Escobar, J.C.; Ortiz Padilla, M.; Gravini Donado, M. Competencias genéricas en estudiantes de educación superior de una universidad privada de Barranquilla Colombia, desde la perspectiva del Proyecto Alfa Tuning América Latina y del Ministerio de Educación Nacional de Colombia (MEN). Rev. Espac. 2018, 39. Available online: https://cutt.ly/vtnRKmb (accessed on 8 May 2021).

- Batrićević, A.; Joldžić, V.; Stanković, V.; Paunović, N. Solving the Problems of Rural as Environmentally Desirable Segment of Sustainable Development. Econ. Anal. 2018, 51, 79–91.

- Kung, C.-C.; Mu, J.E. Prospect of China’s renewable energy development from pyrolysis and biochar applications under climate change. Renew. Sustain. Energy Rev. 2019, 114, 109343.

- Gherheș, V.; Obrad, C. Technical and Humanities Students’ Perspectives on the Development and Sustainability of Artificial Intelligence (AI). Sustainability 2018, 10, 3066.

- Prado, R.A. La socioformación: Un enfoque de cambio educativo. Rev. Iberoam. Educ. 2018, 76, 57–82.

- Martínez, J.E.; Tobón, S.; López, E. Complex Thought and Quality Accreditation of Curriculum in Online Higher Education. Adv. Sci. Lett. 2019, 25, 54–56.

- Universidad Tecnológica Indoamérica. Modelo Educativo; Universidad Indoamérica: Quito, Ecuador, 2019; Available online: https://issuu.com/cife/docs/modelo_educativo_uti (accessed on 8 May 2021).

- CIFE. Modelo Educativo Socioformativo; CIFE: Morelos, Mexico, 2017; Available online: www.cife.edu.mx (accessed on 8 May 2021).

- Universidad Nacional Hermilio Valdizan. Modelo Educativo; Unheval: Huánuco, Peru, 2017.

- UNFV. Modelo Educativo de la UNFV, Socioformativo-Humanista; UNFV: Lima, Peru, 2017; Available online: https://issuu.com/cife/docs/modelo_educativo_unfv (accessed on 8 May 2021).

- Serrano, R.; Macias, W.; Rodriguez, K.; Amor, M.I. Validating a scale for measuring teachers’ expectations about generic competences in higher education. J. Appl. Res. High. Educ. 2019, 11, 439–451.

- Burgos, J.A.B.; Salvador, M.R.A.; Narváez, H.O.P. Del pensamiento complejo al pensamiento computacional: Retos para la educación contemporánea. Sophía 2016, 2, 143.

- Ortega-Carbajal, M.F.; Hernández-Mosqueda, J.S.; Tobón-Tobón, S. Análisis documental de la gestión del conocimiento mediante la cartografía conceptual. Ra Ximhai 2015, 11, 141–160.

- Kember, D.; Leung, D.Y.P. Development of a questionnaire for assessing students’ perceptions of the teaching and learning environment and its use in quality assurance. Learn. Environ. Res. 2009, 12, 15–29.

- Gargallo, B.; Suárez-Rodríguez, J.M.; Almerich, G.; Verde, I.; Iranzo, M.I.; Àngels, C. Validación del cuestionario SEQ en población universitaria española. Capacidades del alumno y entorno de enseñanza/aprendizaje. Anales Psicol. 2018, 34, 519–530.

- Watson, G. Watson-Glaser Critical Thinking Appraisal; Psychological Corporation: San Antonio, TX, USA, 1980; Available online: https://cutt.ly/3tWodUY (accessed on 8 May 2021).

- D’Alessio, F.A.; Avolio, B.E.; Charles, V. Studying the impact of critical thinking on the academic performance of executive MBA students. Think. Ski. Creat. 2019, 31, 275–283.

- Facione, P.; Facione, N. The California Critical Thinking Dispositions Inventory (CCTDI): And the CCTDI Test Manual; The California Academic Press: Millbrae, CA, USA, 1992.

- Bayram, D.; Kurt, G.; Atay, D. The Implementation of WebQuest-supported Critical Thinking Instruction in Pre-service English Teacher Education: The Turkish Context. Particip. Educ. Res. 2019, 6, 144–157.

- Insight Assessment. California Critical Thinking Skills Test: CCTST Test Manual: “The Gold Standard” Test of Critical Thinking; California Academic Press: San Jose, CA, USA, 2013.

- Heilat, M.Q.; Seifert, T. Mental motivation, intrinsic motivation and their relationship with emotional support sources among gifted and non-gifted Jordanian adolescents. Cogent Psychol. 2019, 6, 1587131.

- Kaufmann, G.; Martinsen, Ø. The AE Scale. Revised; Department of General Psychology, University of Bergen: Bergen, Norway, 1992; (revised 30 October 2020).

- Toapanta-Pinta, P.; Rosero-Quintana, M.; Salinas-Salinas, M.; Cruz-Cevallos, M.; Vasco-Morales, S. Percepción de los estudiantes sobre el proyecto integrador de saberes: Análisis métricos versus ordinales. Educ. Médica 2019.

- Leach, S.; Immekus, J.C.; French, B.F.; Hand, B. The factorial validity of the Cornell Critical Thinking Tests: A multi-analytic approach. Think. Ski. Creat. 2020, 37, 100676.

- Amrina, Z.; Desfitri, R.; Zuzano, F.; Wahyuni, Y.; Hidayat, H.; Alfino, J. Developing Instruments to Measure Students’ Logical, Critical, and Creative Thinking Competences for Bung Hatta University Students. Int. J. Eng. Technol. 2018, 7, 128–131.

- Poondej, C.; Lerdpornkulrat, T. The reliability and construct validity of the critical thinking disposition scale. J. Psychol. Educ. Res. 2015, 23, 23–36.

- Said-Metwaly, S.; Kyndt, E.; Noortgate, W.V.D. The factor structure of the Verbal Torrance Test of Creative Thinking in an Arabic context: Classical test theory and multidimensional item response theory analyses. Think. Ski. Creat. 2020, 35, 100609.

- Burin, D.I.; Gonzalez, F.M.; Barreyro, J.P.; Injoque-Ricle, I. Metacognitive regulation contributes to digital text comprehension in E-learning. Metacogn. Learn. 2020, 15, 391–410.

- Gok, T. Development of problem solving strategy steps scale: Study of validation and reliability. Asia-Pac. Educ. Res. 2011, 20, 151–161.

- Penfield, R.D.; Giacobbi, J.P.R. Applying a Score Confidence Interval to Aiken’s Item Content-Relevance Index. Meas. Phys. Educ. Exerc. Sci. 2004, 8, 213–225.

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Raykov, T. Estimation of Composite Reliability for Congeneric Measures. Appl. Psychol. Meas. 1997, 21, 173–184.

- Hair, J.F.; Black, W.; Babin, B.; Anderson, R. Multivariate Data Analysis, 7th ed.; Pearson: London, UK, 2014; Available online: https://files.pearsoned.de/inf/ext/9781292035116 (accessed on 8 May 2021).

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39.

- Borgonovi, F.; Greiff, S. Societal level gender inequalities amplify gender gaps in problem solving more than in academic disciplines. Intelligence 2020, 79, 101422.

- Fitriani, H.; Asy’Ari, M.; Zubaidah, S.; Mahanal, S. Exploring the Prospective Teachers’ Critical Thinking and Critical Analysis Skills. J. Pendidik. IPA Indones. 2019, 8, 379–390.

- Flavell, J.H. Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. Am. Psychol. 1979, 34, 906–911.

- Velkovski, V. Application of the systemic analysis for solving the problems of dependent events in agricultural lands-characteristics and methodology. Trakia J. Sci. 2019, 17, 319–323.

- Curran, P.J.; West, S.G.; Finch, J.F. The robustness of test statistics to nonnormality and specification error in confirmatory factor analysis. Psychol. Methods 1996, 1, 16–29.

- Fabrigar, L.R.; Wegener, D.T.; Maccallum, R.C.; Strahan, E.J. Evaluating the use of exploratory factor analysis in psychological research. Psychol. Methods 1999, 4, 272–299.

- Hu, L.T.; Bentler, P.M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. 1999, 6, 1–55.

- Kaplan, D. Evaluating and Modifying Covariance Structure Models: A Review and Recommendation. Multivar. Behav. Res. 1990, 25, 137–155.

- Putnick, D.L.; Bornstein, M.H. Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Dev. Rev. 2016, 41, 71–90.

- Cheung, G.W.; Rensvold, R.B. Evaluating Goodness-of-Fit Indexes for Testing Measurement Invariance. Struct. Equ. Model. A Multidiscip. J. 2002, 9, 233–255.

- Chen, F.F. Sensitivity of Goodness of Fit Indexes to Lack of Measurement Invariance. Struct. Equ. Model. A Multidiscip. J. 2007, 14, 464–504.

- Wu, H.; Leung, S.-O. Can Likert Scales be Treated as Interval Scales?—A Simulation Study. J. Soc. Serv. Res. 2017, 43, 527–532.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis: A Global Perspective; Prentice Hall: Upper Saddle River, NJ, USA, 2010.

- Luna-Nemecio, J. Determinaciones socioambientales del COVID-19 y vulnerabilidad económica, espacial y sanitario-institucional. Rev. Cienc. Soc. 2020, 26, 21–26.

- Roozenbeek, J.; Schneider, C.R.; Dryhurst, S.; Kerr, J.; Freeman, A.L.J.; Recchia, G.; van der Bles, A.M.; van der Linden, S. Susceptibility to misinformation about COVID-19 around the world. R. Soc. Open Sci. 2020, 7, 1–15.

- Lawrence, R.J. Responding to COVID-19: What’s the Problem? J. Hered. 2020, 97, 583–587.

- Connelly, L.M. What Is Factor Analysis? Medsurg Nurs. 2019, 28, 322–330.

- Disabato, D.J.; Goodman, F.R.; Kashdan, T.B.; Short, J.L.; Jarden, A. Different types of well-being? A cross-cultural examination of hedonic and eudaimonic well-being. Psychol. Assess. 2016, 28, 471–482.

- Gau, J.M. The Convergent and Discriminant Validity of Procedural Justice and Police Legitimacy: An Empirical Test of Core Theoretical Propositions. J. Crim. Justice 2011, 39, 489–498.

- Cseh, M.; Crocco, O.S.; Safarli, C. Teaching for Globalization: Implications for Knowledge Management in Organizations. In Social Knowledge Management in Action; Springer: Berlin/Heidelberg, Germany, 2019; pp. 105–118.

- Knutson, J.S.; Friedl, A.S.; Hansen, K.M.; Hisel, T.Z.; Harley, M.Y. Convergent Validity and Responsiveness of the SULCS. Arch. Phys. Med. Rehabil. 2019, 100, 140–143.e1.

- Vaughn, M.G.; Roberts, G.; Fall, A.-M.; Kremer, K.; Martinez, L. Preliminary validation of the dropout risk inventory for middle and high school students. Child. Youth Serv. Rev. 2020, 111, 104855.

- Degener, S.; Berne, J. Complex Questions Promote Complex Thinking. Read. Teach. 2016, 70, 595–599.

- Viguri, M. Science of complexity vs. complex thinking keys for a critical reading of the concept of scientific in Carlos Reynoso. Pensam. Rev. Investig. Inf. Filos. 2019, 75, 87–106.

- Mara, J.; Lavandero, J.; Hernández, L.M. The educational model as the foundation of university action: The experience of the Technical University of Manabi, Ecuador. Rev. Cuba. Educ. Super. 2018, 37, 151–164.

| Name of the Scale or Questionnaire | Type | Dimensions or Aspects Evaluated | Number of Items | Reference |
|---|---|---|---|---|
| Study Engagement Questionnaire (SEQ) | General | Critical thinking; creative thinking; self-managed learning; adaptability; problem solving; communication skills; interpersonal skills; active learning; teaching for understanding; assessment; coherence of curriculum; teacher–students relationship; feedback to assist learning; relationship with other students; cooperative learning | 35 | Kember and Leung [50]; Gargallo et al. [51] |
| Watson–Glaser Critical Thinking Appraisal | General | Inference; recognition of assumptions; deduction; interpretation; evaluation of arguments | 80 | Watson-Glaser [52] and D’Alessio et al. [53] |
| California Critical Thinking Disposition Inventory | General | Open-mindedness; self-confidence; maturity; analyticity; systematicity; inquiry; truth seeking | 75 | Facione and Facione [54] and Bayram et al. [55] |
| California Measure of Mental Motivation (CM3) | General | Learning orientation; creative problem solving; cognitive integrity; scholarly rigor; technological orientation | 74 | Insight Assessment [56] and Heilat and Seifert [57] |
| A-E scale | General | Motivation; understanding; problem solving; cognitive process | 30 | Kaufmann and Martinsen [58] and Martinsen and Furnham [11] |
| Knowledge Integrator Project (PIS-1) | General | Critical analysis; problem solving; knowledge integration; promotion of different learning activities; generation of learning; ICT promotion; academic performance | 28 | Toapanta-Pinta et al. [59] |
| Cornell Critical Thinking Tests | Specific | Critical thinking | 71 | Leach et al. [60] |
| R & D research | Specific | Critical thinking | 9 | Amrina et al. [61] |
| Critical Thinking Disposition Scale | Specific | Critical thinking | 20 | Poondej and Lerdpornkulrat [62] |
| Torrance Tests of Creative Thinking (TTCT) | Specific | Creative thinking | 18 | Said-Metwaly et al. [63] |
| Metacognitive inventory (MCI) | Specific | Metacognition | 18 | Burin et al. [64] |
| Problem Solving Strategy Steps scale (PSSS) | Specific | Problem solving | 25 | Gok [65] |

| N | 16 Judges |
|---|---|
| Gender (%) | Women: 50%; Men: 50% |
| Age (mean ± standard deviation) | 50.6 (±16.88) |
| Last degree (%) | Master’s degree: 50%; PhD: 50% |
| Years of experience as a university professor (mean ± standard deviation) | 17.8 (±9.08) |
| Years of research experience (mean ± standard deviation) | 11.8 (±6.93) |
| Articles published in the area (mean ± standard deviation) | 16.7 (±18.25) |
| Books published in the area (mean ± standard deviation) | 3.8 (±7.21) |
| Book chapters published in the area (mean ± standard deviation) | 7.8 (±11.28) |
| Presentations (mean ± standard deviation) | 25.5 (±37.79) |
| Conferences in the area (mean ± standard deviation) | 46.4 (±36.07) |
| Reviewer experience, rated from 1 to 4 (mean ± standard deviation) | 3.8 (±0.58) |
| Continuous training courses taken in the area (mean ± standard deviation) | 7.9 (±7.12) |

| Complex Thinking Ability | Definition | References | Number of Items |
|---|---|---|---|
| Problem solving | “Capacity to engage in cognitive processing to understand and resolve problem situations where a method of solution is not immediately obvious. It includes the willingness to engage with such situations in order to achieve one’s potential” | Borgonovi and Greiff [71] (p. 3) | 5 |
| Critical analysis | “Is an intellectual process that is actively and skillfully conceptualizing, applying, analyzing, synthesizing, and or evaluating information collected, or produced by observing, reflecting, considering, or communicating, as a guide to trust and do” | Fitriani et al. [72] (p. 380) | 6 |
| Metacognition | “One’s knowledge concerning one’s own cognitive processes and products or anything related to them […] refers, among other things, to the active monitoring and consequent regulation and orchestration of these processes […] usually in the service of some concrete goal or objective” | Flavell [73] (p. 232) | 4 |
| Systemic analysis | “Is a methodology for analyzing and solving problems using systemic research and comparison of alternatives that are performed on the basis of the cost-to-cost ratio for their implementation and the expected results.” | Velkovski [74] (p. 322) | 3 |
| Creativity | “Creatively is a divergent thinking process, which is the competences to provide alternative answers based on the information provided” | Amrina et al. [61] (p. 129) | 4 |

| Variable | Criterion | Mean | SD | Aiken’s V | 95% CI Lower Limit | 95% CI Upper Limit |
|---|---|---|---|---|---|---|
| Problem solving | Suitability | 3.81 | 0.390 | 0.938 | 0.832 | 0.979 |
| | Understandability | 3.63 | 0.484 | 0.875 | 0.753 | 0.941 |
| Critical analysis | Suitability | 3.75 | 0.559 | 0.917 | 0.804 | 0.967 |
| | Understandability | 3.88 | 0.331 | 0.958 | 0.860 | 0.988 |
| Metacognition | Suitability | 3.65 | 0.310 | 0.85 | 0.76 | 0.91 |
| | Understandability | 3.54 | 0.40 | 0.85 | 0.72 | 0.92 |
| Systemic analysis | Suitability | 3.81 | 0.390 | 0.938 | 0.832 | 0.979 |
| | Understandability | 3.75 | 0.433 | 0.917 | 0.804 | 0.967 |
| Creativity | Suitability | 3.81 | 0.390 | 0.938 | 0.832 | 0.979 |
| | Understandability | 3.69 | 0.583 | 0.896 | 0.778 | 0.955 |
| General scale Total | Suitability | 3.69 | 0.583 | 0.896 | 0.778 | 0.955 |
| | Understandability | 3.75 | 0.559 | 0.917 | 0.804 | 0.967 |
| | Satisfaction | 4.50 | 0.791 | 0.875 | 0.772 | 0.935 |
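
The Aiken's V values and their intervals can be recomputed from the judges' mean ratings. The sketch below uses the score confidence interval described by Penfield and Giacobbi, which the reference list cites. The rating ranges are assumptions, since this section does not state them: 1 to 4 for suitability and understandability, and 1 to 5 for satisfaction. They were chosen because they reproduce the tabulated values for most rows. The function names are illustrative.

```python
import math


def aiken_v(mean_rating, lowest, highest):
    """Aiken's V: the mean rating rescaled to the 0-1 range."""
    return (mean_rating - lowest) / (highest - lowest)


def score_interval(v, n_judges, lowest, highest, z=1.96):
    """Score (Wilson-type) confidence interval for Aiken's V."""
    k = highest - lowest          # width of the rating scale
    nk = n_judges * k
    root = z * math.sqrt(4 * nk * v * (1 - v) + z ** 2)
    denom = 2 * (nk + z ** 2)
    lower = (2 * nk * v + z ** 2 - root) / denom
    upper = (2 * nk * v + z ** 2 + root) / denom
    return lower, upper


# Satisfaction row: mean 4.50 from 16 judges, assumed 1-5 rating range.
v = aiken_v(4.50, 1, 5)
lower, upper = score_interval(v, n_judges=16, lowest=1, highest=5)
print(f"V = {v:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})")
```

Under the same assumptions, a mean of 3.8125 on a 1 to 4 range gives V = 0.938 with limits 0.832 and 0.979, which matches the rows that report a rounded mean of 3.81.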

| Item | Mean | Standard Deviation | Skewness | Kurtosis |
|---|---|---|---|---|
| Question 01 | 2.83 | 0.815 | −0.039 | −0.122 |
| Question 02 | 3.04 | 0.786 | −0.195 | 0.294 |
| Question 03 | 3.04 | 0.872 | −0.028 | −0.157 |
| Question 04 | 3.12 | 0.817 | −0.081 | −0.139 |
| Question 05 | 3.12 | 0.892 | 0.069 | −0.191 |
| Question 06 | 3.34 | 0.819 | −0.602 | 0.654 |
| Question 07 | 2.70 | 0.937 | 0.135 | 0.129 |
| Question 08 | 2.94 | 0.902 | −0.085 | 0.136 |
| Question 09 | 2.84 | 0.940 | −0.014 | 0.472 |
| Question 10 | 2.81 | 0.992 | −0.082 | −0.231 |
| Question 11 | 2.68 | 0.944 | 0.030 | −0.403 |
| Question 12 | 3.03 | 0.864 | −0.232 | −0.189 |
| Question 13 | 2.80 | 0.900 | −0.153 | −0.127 |
| Question 14 | 2.98 | 0.880 | 0.063 | 0.197 |
| Question 15 | 2.75 | 1.013 | 0.003 | −0.083 |
| Question 16 | 2.78 | 0.923 | 0.036 | −0.190 |
| Question 17 | 2.95 | 0.899 | −0.009 | −0.092 |
| Question 18 | 2.82 | 0.945 | 0.235 | −0.084 |
| Question 19 | 2.97 | 0.890 | 0.039 | −0.114 |
| Question 20 | 2.82 | 0.953 | 0.200 | −0.095 |
| Question 21 | 2.81 | 0.896 | 0.226 | −0.072 |
| Question 22 | 2.93 | 0.945 | 0.179 | −0.330 |
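
Item-level skewness and kurtosis are usually read as a screen for non-normality before factor analysis. The sketch below is only an illustration of that screen. It uses the simple moment-based estimators, whereas statistical packages often apply a small-sample correction, so results on raw data may differ slightly from the table. The limits of 2 for skewness and 7 for kurtosis are the values commonly attributed to Curran et al., who appear in the reference list; that these were the limits the authors applied is an assumption. The response vector is hypothetical.

```python
def skewness_kurtosis(values):
    """Moment-based skewness and excess kurtosis (no small-sample correction)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def within_limits(skew, kurt, skew_limit=2.0, kurt_limit=7.0):
    """True when both statistics fall inside the assumed screening limits."""
    return abs(skew) < skew_limit and abs(kurt) < kurt_limit


# Hypothetical responses to one item, coded 1-5.
responses = [1, 2, 3, 4, 5]
skew, kurt = skewness_kurtosis(responses)

# Most extreme pair reported in the table (Question 06).
table_ok = within_limits(-0.602, 0.654)
```

Question 06 has the largest absolute skewness and kurtosis in the table, so if it passes the screen every other item does as well.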

| Factor | Indicator | Symbol | Estimate | Std. Error | z-Value | p | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|
| Problem solving | ITEM 1 | λ11 | 0.596 | 0.029 | 20.297 | <0.001 | 0.538 | 0.653 |
| | ITEM 2 | λ12 | 0.560 | 0.029 | 19.581 | <0.001 | 0.504 | 0.616 |
| | ITEM 3 | λ13 | 0.657 | 0.031 | 21.277 | <0.001 | 0.596 | 0.717 |
| | ITEM 4 | λ14 | 0.605 | 0.029 | 20.798 | <0.001 | 0.548 | 0.662 |
| | ITEM 5 | λ15 | 0.669 | 0.032 | 21.134 | <0.001 | 0.607 | 0.731 |
| Critical analysis | ITEM 6 | λ21 | 0.425 | 0.032 | 13.410 | <0.001 | 0.363 | 0.487 |
| | ITEM 7 | λ22 | 0.724 | 0.032 | 22.277 | <0.001 | 0.660 | 0.787 |
| | ITEM 8 | λ23 | 0.646 | 0.032 | 20.029 | <0.001 | 0.583 | 0.709 |
| | ITEM 9 | λ24 | 0.679 | 0.034 | 20.282 | <0.001 | 0.614 | 0.745 |
| | ITEM 10 | λ25 | 0.774 | 0.034 | 22.567 | <0.001 | 0.707 | 0.841 |
| | ITEM 11 | λ26 | 0.732 | 0.033 | 22.375 | <0.001 | 0.668 | 0.796 |
| Metacognition | ITEM 12 | λ31 | 0.618 | 0.031 | 19.873 | <0.001 | 0.557 | 0.679 |
| | ITEM 13 | λ32 | 0.676 | 0.032 | 21.209 | <0.001 | 0.613 | 0.738 |
| | ITEM 14 | λ33 | 0.663 | 0.031 | 21.352 | <0.001 | 0.602 | 0.723 |
| | ITEM 15 | λ34 | 0.798 | 0.035 | 22.731 | <0.001 | 0.729 | 0.867 |
| Systemic analysis | ITEM 16 | λ41 | 0.740 | 0.032 | 23.173 | <0.001 | 0.677 | 0.802 |
| | ITEM 17 | λ42 | 0.678 | 0.032 | 21.310 | <0.001 | 0.616 | 0.740 |
| | ITEM 18 | λ43 | 0.778 | 0.032 | 24.139 | <0.001 | 0.715 | 0.842 |
| Creativity | ITEM 19 | λ51 | 0.650 | 0.032 | 20.400 | <0.001 | 0.587 | 0.712 |
| | ITEM 20 | λ52 | 0.758 | 0.033 | 22.979 | <0.001 | 0.694 | 0.823 |
| | ITEM 21 | λ53 | 0.709 | 0.031 | 22.850 | <0.001 | 0.648 | 0.770 |
| | ITEM 22 | λ54 | 0.762 | 0.032 | 23.527 | <0.001 | 0.698 | 0.825 |
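
The z-values and interval bounds in the table above behave like standard Wald statistics: the estimate divided by its standard error, and the estimate plus or minus 1.96 standard errors. The sketch below assumes that this is how the software derived them, which the section does not confirm, and the function name is illustrative.

```python
def wald_summary(estimate, std_error, z_crit=1.96):
    """z statistic and 95% Wald interval for a factor loading."""
    z_value = estimate / std_error
    lower = estimate - z_crit * std_error
    upper = estimate + z_crit * std_error
    return z_value, lower, upper


# ITEM 1 from the table: estimate 0.596, standard error 0.029.
z_value, lower, upper = wald_summary(0.596, 0.029)
print(round(z_value, 2), round(lower, 3), round(upper, 3))
```

Because the table rounds the standard error to three decimals, the recomputed values (about 20.55, 0.539 and 0.653) differ slightly from the printed 20.297, 0.538 and 0.653.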

| Index | Expected Value (Hair et al., 2014) | Value Obtained |
|---|---|---|
| Chi-square (χ²) | Non-significant | 409.513, p < 0.001 |
| Degrees of freedom (df) | - | 179 |
| χ²/df ratio | <3.0 | 2.28 |
| Tucker–Lewis index (TLI) | >0.90 | 0.963 |
| Comparative fit index (CFI) | >0.90 | 0.971 |
| Root-mean-square error of approximation (RMSEA) | <0.08 | 0.045 |
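
The comparison between obtained and expected values in the table above can be written as a small check. This is only a sketch: the cutoffs are copied from the "Expected Value" column, the dictionary and function names are illustrative, and the chi-square test itself is left out because its criterion is a p-value rather than a numeric threshold.

```python
# Values from the table above.
chi_square, df = 409.513, 179
obtained = {"chi2/df": chi_square / df, "TLI": 0.963, "CFI": 0.971, "RMSEA": 0.045}

# Cutoffs from the "Expected Value" column: (comparison, threshold).
cutoffs = {
    "chi2/df": ("<", 3.0),
    "TLI": (">", 0.90),
    "CFI": (">", 0.90),
    "RMSEA": ("<", 0.08),
}


def meets_cutoff(value, rule):
    """Apply a '<' or '>' rule to a single fit index."""
    comparison, threshold = rule
    return value < threshold if comparison == "<" else value > threshold


results = {name: meets_cutoff(obtained[name], cutoffs[name]) for name in cutoffs}
for name, ok in results.items():
    print(f"{name}: {obtained[name]:.3f} -> {'meets' if ok else 'misses'} cutoff")
```

The recomputed ratio is about 2.288, which the table reports as 2.28.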

| Model | χ² | df | χ²/df | RMSEA | TLI | CFI | ΔRMSEA | ΔTLI | ΔCFI |
|---|---|---|---|---|---|---|---|---|---|
| Configural | 635.341 | 358 | 1.775 | 0.036 | 0.954 | 0.964 | - | - | - |
| Metric | 663.238 | 374 | 1.773 | 0.035 | 0.954 | 0.963 | 0.001 | 0.000 | 0.001 |
| Scalar | 688.940 | 395 | 1.744 | 0.035 | 0.956 | 0.962 | 0.001 | −0.002 | 0.002 |
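
Invariance is usually judged by how much fit deteriorates from one nested model to the next. The sketch below recomputes those differences from the table above. The cutoffs (a change in CFI of at most 0.01 and in RMSEA of at most 0.015) are commonly used values and are an assumption here, since this section does not state which criteria were applied.

```python
# Fit of the nested invariance models, copied from the table above:
# (name, chi-square, df, RMSEA, CFI).
models = [
    ("Configural", 635.341, 358, 0.036, 0.964),
    ("Metric", 663.238, 374, 0.035, 0.963),
    ("Scalar", 688.940, 395, 0.035, 0.962),
]

# Assumed cutoffs (common in the invariance literature, not stated here).
MAX_DELTA_CFI = 0.01
MAX_DELTA_RMSEA = 0.015

comparisons = []
for previous, current in zip(models, models[1:]):
    delta_chi2 = current[1] - previous[1]
    delta_df = current[2] - previous[2]
    delta_rmsea = abs(current[3] - previous[3])
    delta_cfi = abs(current[4] - previous[4])
    holds = delta_cfi <= MAX_DELTA_CFI and delta_rmsea <= MAX_DELTA_RMSEA
    comparisons.append((current[0], delta_chi2, delta_df, delta_rmsea, delta_cfi, holds))

for name, d_chi2, d_df, d_rmsea, d_cfi, holds in comparisons:
    print(f"{name}: dchi2={d_chi2:.3f}, ddf={d_df}, "
          f"dRMSEA={d_rmsea:.3f}, dCFI={d_cfi:.3f}, holds={holds}")
```

Differences recomputed from the rounded indices can deviate by 0.001 from the Δ columns, which were presumably calculated from unrounded values.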

| Factor | Number of Items | Composite Reliability | AVE | Standardized Factor Loadings |
|---|---|---|---|---|
| Problem solving | 5 | 0.862 | 0.56 | Item01 (0.72); Item02 (0.71); Item03 (0.75); Item04 (0.77); Item05 (0.77) |
| Critical analysis | 5 | 0.794 | 0.57 | Item07 (0.78); Item08 (0.72); Item09 (0.75); Item10 (0.78); Item11 (0.76) |
| Metacognition | 4 | 0.838 | 0.57 | Item12 (0.71); Item13 (0.74); Item14 (0.75); Item15 (0.80) |
| Systemic analysis | 3 | 0.833 | 0.63 | Item16 (0.80); Item17 (0.75); Item18 (0.82) |
| Creativity | 4 | 0.867 | 0.62 | Item19 (0.74); Item20 (0.80); Item21 (0.80); Item22 (0.81) |
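
Composite reliability and average variance extracted (AVE) are both functions of the standardized loadings listed in the last column. The sketch below uses the usual formulas, assuming uncorrelated errors and an error variance of one minus the squared loading for each item. The function names are illustrative, and the section does not say which software produced the reported values.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    sum_loadings = sum(loadings)
    error_variance = sum(1 - x ** 2 for x in loadings)
    return sum_loadings ** 2 / (sum_loadings ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(x ** 2 for x in loadings) / len(loadings)


# Standardized loadings of the systemic analysis factor (Items 16-18).
systemic = [0.80, 0.75, 0.82]
cr = composite_reliability(systemic)
ave = average_variance_extracted(systemic)
print(round(cr, 3), round(ave, 3))
```

For the systemic analysis factor this yields roughly 0.833 and 0.625, in line with the reported 0.833 and 0.63. Since the loadings are rounded to two decimals, recomputed values for other factors need not match the table to the third decimal.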

| Factor | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Factor 1 | 0.746 | | | | |
| Factor 2 | 0.818 | 0.757 | | | |
| Factor 3 | 0.844 | 0.858 | 0.752 | | |
| Factor 4 | 0.800 | 0.839 | 0.870 | 0.791 | |
| Factor 5 | 0.780 | 0.940 | 0.865 | 0.821 | 0.787 |
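
A matrix of this shape is typically read with the Fornell-Larcker layout, in which the diagonal holds the square root of each factor's AVE and the off-diagonal cells hold inter-factor correlations. That reading is an assumption, because the section does not label the cells. Under it, the comparison of each correlation with the two relevant diagonal entries can be sketched as follows; the function name is illustrative.

```python
# Lower triangle copied from the table above; the last entry of each row
# is the diagonal value for that factor.
matrix = [
    [0.746],
    [0.818, 0.757],
    [0.844, 0.858, 0.752],
    [0.800, 0.839, 0.870, 0.791],
    [0.780, 0.940, 0.865, 0.821, 0.787],
]


def pairs_exceeding_diagonal(lower_triangle):
    """Return (i, j, r) where r is above the diagonal entry of factor i or j."""
    flagged = []
    for i, row in enumerate(lower_triangle):
        for j in range(i):
            r = row[j]
            if r > lower_triangle[i][i] or r > lower_triangle[j][j]:
                flagged.append((i + 1, j + 1, r))
    return flagged


flagged = pairs_exceeding_diagonal(matrix)
for i, j, r in flagged:
    print(f"Factor {i} vs Factor {j}: r = {r}")
```

The function only lists the factor pairs whose off-diagonal value is larger than either diagonal entry; how those pairs should be interpreted depends on what the cells actually contain.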

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tobón, S.; Luna-Nemecio, J. Complex Thinking and Sustainable Social Development: Validity and Reliability of the COMPLEX-21 Scale. Sustainability 2021, 13, 6591. https://doi.org/10.3390/su13126591
