A Framework for Evaluating Agricultural Ontologies

Abstract

1. Introduction

2. Materials and Methods

A Review of Ontology Evaluation Methods

- Evaluation against a gold standard: This method compares an ontology with common standards or with another ontology that is considered a benchmark. It is typically used when the ontology was generated automatically or semi-automatically. In many cases, the method cannot be applied because no such gold standard exists.

- Application-based evaluation: Application-based evaluation (or task-based evaluation [45]) uses the ontology to complete tasks within an application and measures its effectiveness. Since comparing several alternative ontologies within a given application environment is usually not feasible, the proposed ontology is often evaluated quantitatively or qualitatively by measuring its suitability for performing tasks within the application. The advantage of this method is that it allows assessing how well the ontology fulfils its objectives. However, the evaluation is relevant only to that particular application; if the ontology is to be used in another application, the evaluation does not carry over.

- Criteria-based evaluation: This method evaluates the ontology against a set of predefined criteria. Depending on the criteria, the evaluation is conducted either automatically [45] or manually, usually by experts [9]. Various criteria have been used in the literature. Gruber [40] defines five criteria: Clarity (the ontology should effectively and objectively communicate the definitions of terms); Coherence (the ontology should support inferences that are consistent with the definitions and have no contradictions); Extendibility (it should be possible to extend the ontology to support possible uses of the shared vocabulary without altering the ontology); Minimal encoding bias (the conceptualization should be as independent as possible of the particular encoding being used); and Minimal ontological commitment (the ontology should define as few restrictions on the domain of discourse as possible). Gómez-Pérez [46] also defines five criteria, which partially overlap with Gruber’s: Consistency and Expandability, which are similar to Gruber’s coherence and extendibility, respectively; Conciseness (definitions should be clear and unambiguous, yet expressed in few words); Completeness (the ontology captures all that is known about the real world in a finite structure); and Sensitiveness (how sensitive the ontology is to small changes in a given definition). Some criteria (e.g., expandability, clarity and completeness) are difficult to evaluate and require manual (and subjective) inspection by domain experts or ontology engineers [45]. Other criteria can be measured quantitatively. For example, consistency can be measured by the number of circularity errors, partition errors and semantic inconsistency errors, and conciseness can be measured by the number of redundancy errors, grammatical redundancy errors and identical formal definitions of classes [47]. The selection of appropriate criteria and measures for the evaluation of an ontology depends on the ontology requirements and goals, as demonstrated by [45].

- Data-driven evaluation: In this method, the ontology is compared with relevant sources of data (e.g., documents and dictionaries) about the domain of discourse. For example, Brewster et al. [48] use such a method to determine the degree of structural fit between an ontology and a corpus of documents.
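Two of the quantitative ideas in the list above can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not the procedure used in [47] or [48]: the dictionary-based hierarchy format and the function names `count_circularity_errors` and `lexical_overlap` are hypothetical, the circularity count is only a simple proxy (the number of classes that are their own ancestor), and the overlap measure reduces a gold-standard or data-driven comparison to exact matching of lower-cased labels.

```python
from typing import Dict, Iterable, List, Set


def count_circularity_errors(subclass_of: Dict[str, List[str]]) -> int:
    """Count classes that can reach themselves by following superclass links.

    `subclass_of` maps a class name to its direct superclasses. This input
    format is an assumption made for the sketch, not an ontology-library API.
    """
    def reaches_itself(start: str) -> bool:
        # Depth-first walk up the hierarchy, starting from the direct superclasses.
        stack = list(subclass_of.get(start, []))
        seen: Set[str] = set()
        while stack:
            node = stack.pop()
            if node == start:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(subclass_of.get(node, []))
        return False

    return sum(1 for cls in subclass_of if reaches_itself(cls))


def lexical_overlap(
    ontology_labels: Iterable[str], reference_terms: Iterable[str]
) -> Dict[str, float]:
    """Precision and recall of ontology labels against a reference vocabulary.

    The reference can be the labels of a benchmark ontology (gold standard)
    or terms extracted from domain documents (data-driven). Matching is
    exact after trimming and lower-casing, which is a deliberate simplification.
    """
    onto = {label.strip().lower() for label in ontology_labels}
    ref = {term.strip().lower() for term in reference_terms}
    shared = onto & ref
    precision = len(shared) / len(onto) if onto else 0.0
    recall = len(shared) / len(ref) if ref else 0.0
    return {"precision": precision, "recall": recall}
```

For a toy hierarchy in which `Pest` and `Organism` are declared subclasses of each other, `count_circularity_errors` returns 2, since both classes lie on the cycle. Low precision in `lexical_overlap` suggests labels that the reference does not support, while low recall suggests domain terms that the ontology does not cover.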

3. Results

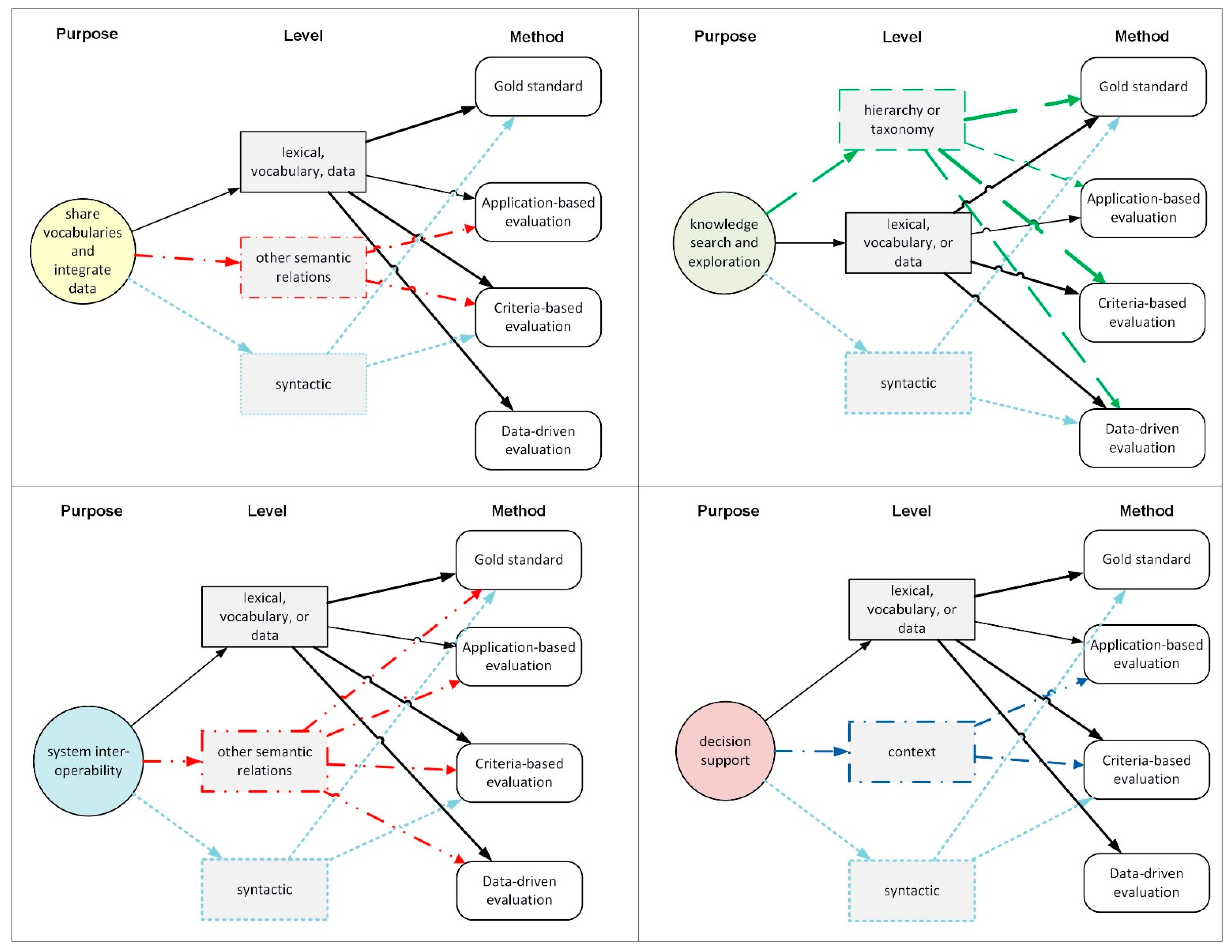

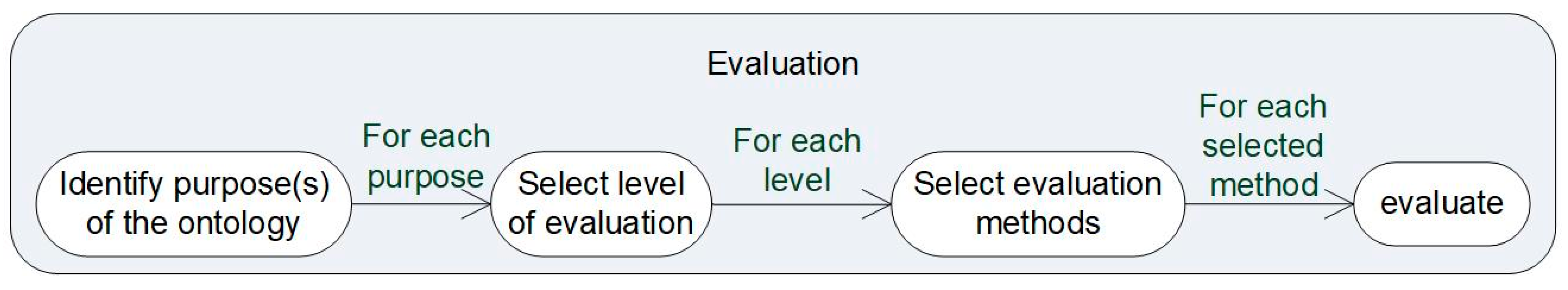

3.1. Proposed Framework for Agricultural Ontology Evaluation

3.2. Framework Application: The Case of a Pest-Control Ontology

- Step 1: Identifying purposes

- Step 2: Selecting levels of evaluation

- Step 3: Selecting evaluation methods

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chandrasekaran, B.; Josephson, J.R.; Benjamins, V.R. What are ontologies, and why do we need them? IEEE Intell. Syst. 1999, 14, 20–26.

- W3C. Ontology Driven Architecture and Potential Uses of the Semantic Web in Systems and Software Engineering. 2006. Available online: http://www.w3.org/2001/sw/BestPractices/SE/ODA/ (accessed on 3 June 2021).

- Gruber, T.R. A translation approach to portable ontology specifications. Knowl. Acquis. 1993, 5, 199–220.

- Chukkapalli, S.S.L.; Mittal, S.; Gupta, M.; Abdelsalam, M.; Joshi, A.; Sandhu, R.; Joshi, K. Ontologies and Artificial Intelligence Systems for the Cooperative Smart Farming Ecosystem. IEEE Access 2020, 8, 164045–164064.

- Noy, N.F.; McGuinness, D.L. Ontology Development 101: A Guide to Creating Your First Ontology; Technical Report; Knowledge Systems Laboratory. 2001. Available online: https://protege.stanford.edu/publications/ontology_development/ontology101.pdf (accessed on 3 June 2021).

- Roussey, C.; Soulignac, V.; Champomier, J.C.; Abt, V.; Chanet, J.P. Ontologies in agriculture. In Proceedings of the International Conference on Agricultural Engineering (AgEng 2010), Clermont-Ferrand, France, 6–8 September 2010.

- Berners-Lee, T.; Hendler, J.; Lassila, O. The Semantic Web: A new form of Web content that is meaningful to computers will unleash a revolution of new possibilities. In The Future of the Web, 1st ed.; The Rosen Publishing Group: New York, NY, USA, 2007; pp. 70–80.

- Bose, R.; Sugumaran, V. Semantic Web Technologies for Enhancing Intelligent DSS Environments. In Decision Support for Global Enterprises; Springer: Boston, MA, USA, 2007.

- Delir Haghighi, P.; Burstein, F.; Zaslavsky, A.; Arbon, P. Development and evaluation of ontology for intelligent decision support in medical emergency management for mass gatherings. Decis. Support Syst. 2013, 54, 1192–1204.

- Yu, J.; Thom, J.A.; Tam, A. Evaluating Ontology Criteria for Requirements in a Geographic Travel Domain. In On the Move to Meaningful Internet Systems 2005: CoopIS, DOA, and ODBASE; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Pandu Rangan, C., Steffen, B., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1517–1534. ISBN 978-3-540-29738-3.

- Yang, J.; Lin, Y. Study on Evolution of Food Safety Status and Supervision Policy—A System Based on Quantity, Quality, and Development Safety. Sustainability 2019, 11, 6656.

- Meng, X.; Xu, C.; Liu, X.; Bai, J.; Zheng, W.; Chang, H.; Chen, Z. An Ontology-Underpinned Emergency Response System for Water Pollution Accidents. Sustainability 2018, 10, 546.

- Spoladore, D.; Pessot, E. Collaborative Ontology Engineering Methodologies for the Development of Decision Support Systems: Case Studies in the Healthcare Domain. Electronics 2021, 10, 1060.

- Spoladore, D.; Mahroo, A.; Trombetta, A.; Sacco, M. DOMUS: A domestic ontology managed ubiquitous system. J. Ambient. Intell. Hum. Comput. 2021, 42, 1190.

- Ali, F.; Ali, A.; Imran, M.; Naqvi, R.A.; Siddiqi, M.H.; Kwak, K.-S. Traffic accident detection and condition analysis based on social networking data. Accid. Anal. Prev. 2021, 151, 105973.

- Ali, F.; El-Sappagh, S.; Islam, S.R.; Ali, A.; Attique, M.; Imran, M.; Kwak, K.-S. An intelligent healthcare monitoring framework using wearable sensors and social networking data. Future Gener. Comput. Syst. 2021, 114, 23–43.

- Beck, H.; Kim, S.; Hagan, D. A crop-pest ontology for extension publications. In Proceedings of the EFITA/WCCA Joint Congress on IT in Agriculture, Vila Real, Portugal, 25–28 July 2005.

- Chang, C.; Guojian, X.; Guangda, L. Thesaurus and ontology technology for the improvement of agricultural information retrieval. In Agricultural Information and IT, Proceedings of the IAALD AFITA WCCA 2008, Tokyo University of Agriculture, Tokyo, Japan, 24–27 August 2008; Nagatsuka, T., Ninomiya, S., Eds.; International Association of Agricultural Information Specialists (IAALD): Tokyo, Japan; Asian Federation of Information Technology in Agriculture (AFITA): Tokyo, Japan, 2008; ISBN 978-4-931250-02-4.

- Li, D.; Kang, L.; Cheng, X.; Li, D.; Ji, L.; Wang, K.; Chen, Y. An ontology-based knowledge representation and implement method for crop cultivation standard. Math. Comput. Model. 2013, 58, 466–473.

- Liao, J.; Li, L.; Liu, X. An integrated, ontology-based agricultural information system. Inf. Dev. 2015, 31, 150–163.

- Song, G.; Wang, M.; Ying, X.; Yang, R.; Zhang, B. Study on Precision Agriculture Knowledge Presentation with Ontology. AASRI Procedia 2012, 3, 732–738.

- Tomic, D.; Drenjanac, D.; Hoermann, S.; Auer, W. Experiences with creating a Precision Dairy Farming Ontology (DFO) and a Knowledge Graph for the Data Integration Platform in agriOpenLink. Agrárinform. J. Agric. Inform. 2015, 6, 115–126.

- Zhang, N.; Wang, M.; Wang, N. Precision agriculture—A worldwide overview. Comput. Electron. Agric. 2002, 36, 113–132.

- Aminu, E.F.; Oyefolahan, I.O.; Abdullahi, M.B.; Salaudeen, M.T. An OWL Based Ontology Model for Soils and Fertilizations Knowledge on Maize Crop Farming: Scenario for Developing Intelligent Systems. In Proceedings of the 15th International Conference on Electronics, Computer and Computation (ICECCO), Abuja, Nigeria, 10–12 December 2019; pp. 1–8.

- Su, X.-L.; Li, J.; Cui, Y.-P.; Meng, X.-X.; Wang, Y.-Q. Review on the Work of Agriculture Ontology Research Group. J. Integr. Agric. 2012, 11, 720–730.

- Liu, X.; Bai, X.; Wang, L.; Ren, B.; Lu, S.; Lin, L. Review and Trend Analysis of Knowledge Graphs for Crop Pest and Diseases. IEEE Access 2019, 7, 62251–62264.

- AGROVOC. AGROVOC Thesaurus. Available online: http://aims.fao.org/vest-registry/vocabularies/agrovoc-multilingual-agricultural-thesaurus (accessed on 3 June 2021).

- Sánchez-Alonso, S.; Sicilia, M.-A. Using an AGROVOC-based ontology for the description of learning resources on organic agriculture. In Metadata and Semantics; Sicilia, M.-A., Lytras, M.D., Eds.; Springer: Boston, MA, USA, 2009; pp. 481–492. ISBN 978-0-387-77744-3.

- Alfred, R.; Chin, K.O.; Anthony, P.; San, P.W.; Im, T.L.; Leong, L.C.; Soon, G.K. Ontology-Based Query Expansion for Supporting Information Retrieval in Agriculture. In The 8th International Conference on Knowledge Management in Organizations; Uden, L., Wang, L.S.L., Corchado Rodríguez, J.M., Yang, H.-C., Ting, I.-H., Eds.; Springer: Dordrecht, The Netherlands, 2014; pp. 299–311. ISBN 978-94-007-7286-1.

- Aqeel-ur, R.; Zubair, S.A. ONTAgri: Scalable Service Oriented Agriculture Ontology for Precision Farming. In Proceedings of the International Conference on Agricultural and Biosystems Engineering (ICABE 2011), Hong Kong, China, 20 February 2011.

- Goumopoulos, C.; Kameas, A.D.; Cassells, A. An ontology-driven system architecture for precision agriculture applications. Int. J. Metadata Semant. Ontol. 2009, 4, 72–84.

- Gaire, R.; Lefort, L.; Compton, M.; Falzon, G.; Lamb, D.; Taylor, K. Demonstration: Semantic Web Enabled Smart Farm with GSN. In Proceedings of the International Semantic Web Conference (Posters & Demos), Sydney, Australia, 21–25 October 2013.

- Palavitsinis, N.; Manouselis, N. Agricultural Knowledge Organization Systems: An Analysis of an Indicative Sample. In Handbook of Metadata, Semantics and Ontologies; Sicilia, M.-A., Ed.; World Scientific: London, UK, 2014; pp. 279–296. ISBN 978-981-283-629-8.

- Arnaud, E.; Laporte, M.-A.; Kim, S.; Aubert, C.; Leonelli, S.; Miro, B.; Cooper, L.; Jaiswal, P.; Kruseman, G.; Shrestha, R.; et al. The Ontologies Community of Practice: A CGIAR Initiative for Big Data in Agrifood Systems. Patterns 2020, 1, 100105.

- Antle, J.M.; Basso, B.; Conant, R.T.; Godfray, H.C.J.; Jones, J.W.; Herrero, M.; Howitt, R.E.; Keating, B.A.; Munoz-Carpena, R.; Rosenzweig, C.; et al. Towards a new generation of agricultural system data, models and knowledge products: Design and improvement. Agric. Syst. 2017, 155, 255–268.

- Valin, M. Connecting Data Sources for Sustainability. 2021. Available online: https://www.precisionag.com/sponsor/skyward/connecting-data-sources-for-sustainability/ (accessed on 26 May 2021).

- Visoli, M.; Ternes, S.; Pinet, F.; Chanet, J.P.; Miralles, A.; Bernard, S.; de Sousa, G. Computational architecture of OTAG project. EFITA 2009, 2009, 165–172.

- Fonseca, F.T.; Egenhofer, M.J.; Davis, C.A.; Borges, K.A.V. Ontologies and knowledge sharing in urban GIS. Comput. Environ. Urban Syst. 2000, 24, 251–272.

- Grüninger, M.; Fox, M.S. Methodology for the Design and Evaluation of Ontologies. In Workshop on Basic Ontological Issues in Knowledge Sharing; International Joint Conference on AI (IJCAI-95): Montreal, QC, Canada, 1995.

- Gruber, T.R. Toward principles for the design of ontologies used for knowledge sharing? Int. J. Hum. Comput. Stud. 1995, 43, 907–928.

- Pinet, F.; Ventadour, P.; Brun, T.; Papajorgji, P.; Roussey, C.; Vigier, F. Using UML for Ontology Construction: A Case Study in Agriculture. In Seventh Agricultural Ontology Service (AOS) Workshop on “Ontology-Based Knowledge Discovery: Using Metadata and Ontologies for Improving Access to Agricultural Information”; Macmillan Edition, India Ltd.: Bangalore, India, 2006; pp. 735–739.

- Uschold, M.; King, M. Towards a Methodology for Building Ontologies. In Workshop on Basic Ontological Issues in Knowledge Sharing, Held in Conjunction with IJCAI-95; The University of Edinburgh: Edinburgh, UK, 1995.

- Goldstein, A.; Fink, L.; Raphaeli, O.; Hetzroni, A.; Ravid, G. Addressing the ‘Tower of Babel’ of pesticide regulations: An ontology for supporting pest-control decisions. J. Agric. Sci. 2019, 157, 493–503.

- Brank, J.; Grobelnik, M.; Mladenić, D. A survey of ontology evaluation techniques. In Proceedings of the Conference on Data Mining and Data Warehouses (SiKDD 2005), Citeseer Ljubljana, Slovenia, 17 October 2005.

- Yu, J.; Thom, J.A.; Tam, A. Ontology evaluation using wikipedia categories for browsing. In Proceedings of the Sixteenth ACM Conference on Information and Knowledge Management, Lisbon, Portugal, 6–10 November 2007.

- Gómez-Pérez, A. Towards a framework to verify knowledge sharing technology. Expert Syst. Appl. 1996, 11, 519–529.

- Gómez-Pérez, A. Evaluation of ontologies. Int. J. Intell. Syst. 2001, 16, 391–409.

- Brewster, C.; Alani, H.; Dasmahapatra, S.; Wilks, Y. Data driven ontology evaluation. In Proceedings of the International Conference on Language Resources and Evaluation, Lisbon, Portugal, 26–28 May 2004.

- Maedche, A.; Staab, S. Measuring Similarity between Ontologies. In Proceedings of the European Conference on Knowledge Acquisition and Management, Sigüenza, Spain, 1–4 October 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 251–263.

- Bournaris, T.; Papathanasiou, J. A DSS for planning the agricultural production. IJBIR 2012, 6, 117.

- Guarino, N.; Welty, C. Evaluating ontological decisions with OntoClean. Commun. ACM 2002, 45.

| Study | Goals Addressed (one ✓ per goal among Share Vocabularies/Integrate Data, Knowledge Search and Exploration, System Interoperability, Decision Support) | Construction Method Described | Evaluation Method Described |
|---|---|---|---|
| OTAG [6,37] | ✓ ✓ | − | − |
| Urban GIS [6,38] | ✓ ✓ | Partial | − |
| Chinese Agricultural Thesaurus [18] | ✓ ✓ | − | − |
| PLANTS [31] | ✓ | + | + |
| ONTAgri [30] | ✓ | − | − |
| Smart Farm with GSN [32] | ✓ ✓ | − | − |
| Precision Dairy Farming [22] | ✓ | + | − |
| Crop Cultivation Standards [19] | ✓ | + | − |
| Precision Agriculture Knowledge [21] | ✓ | − | − |
| Ontology-based AIS [20] | ✓ ✓ | Partial | + |
| Crop-pest Ontology [17] | ✓ | + | − |
| Organic Agriculture Learning [28] | ✓ ✓ | Partial | − |
| Soil and Fertilization [24] | ✓ | + | + |
| Crop Pest and Disease [26] | ✓ ✓ | + | Partial |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Goldstein, A.; Fink, L.; Ravid, G. A Framework for Evaluating Agricultural Ontologies. Sustainability 2021, 13, 6387. https://doi.org/10.3390/su13116387