Abstract

As a form of open innovation, emerging crowdsourcing platforms have attracted significant attention from users and companies. This study examines how online seeker signals affect solvers' participation behavior in open innovation crowdsourcing communities, with the aim of supporting the long-term sustainable development of emerging crowdsourcing platforms. We conducted a quantitative analysis using a system-of-regression-equations approach. We found that online reputation and salary comparison positively influence user participation behavior, and that interpersonal trust is a strong mediator of the relationship between salary comparison and user participation behavior. In addition, task information diversification positively moderates the effect of online seeker signals on user participation behavior, whereas task information overload negatively moderates it. The contributions of this article include the application of an innovative signal transmission model; moreover, the findings on online task information quality offer practical guidance on how emerging crowdsourcing platforms can design task descriptions that stimulate user participation.

1. Introduction

The rapid advancement of the service economy has created an environment in which a company’s product development and design are no longer restricted to its own internal innovation. Today, a growing number of companies use the Internet to acquire key knowledge from outside the firm [], and users are progressively becoming value co-creators for companies []. Crowdsourcing platforms use the Internet to supply external knowledge to companies for open innovation []. The term “crowdsourcing” was first proposed by the American journalist Jeff Howe []. Owing to advances in modern information technology, crowdsourcing platforms offer low costs and multiple channels, which enable many users to work on research and development problems, program design, and other innovative tasks through platforms such as Innocentive, ZBJ.COM, and Taskcn.com.

In China, open innovation takes place on several types of platforms, among which crowdsourcing platforms are the leading and most valuable. Domestic crowdsourcing platforms host numerous seekers and tasks, and a seeker may be a company or an individual. Although transactions are frequent, the outcome may be unsatisfactory if solver participation is insufficient. For example, many domestic crowdsourcing websites, such as Taskcn.com, cannot attract enough solvers to their tasks, which undermines the sustainable development of these platforms, and these sites currently face many problems. Even when seekers release substantial information, solvers may lack the motivation to participate. Previous research on user participation behavior has mainly focused on participation intentions [] and continuous participation intentions [,]. Few studies have used objective data to measure which variables actually affect users' participation behavior, so a considerable gap remains between prior research and actual user behavior. To help fill this gap, this article uses Python to crawl objective data from task webpages and measures user participation behavior objectively.

The biggest concern for companies and users is the information asymmetry between them. To address this problem, we draw on signaling theory, which is principally concerned with reducing information asymmetry between two parties []. Theoretical research acknowledges trust as a vital factor in user participation on platforms []. In addition, we aim to help users discover more tasks that interest them and increase their involvement in those tasks, which in turn gives companies more submissions from which to choose the best. The literature shows that research on trust mechanisms has focused primarily on e-commerce industries and platforms [,]. Crowdsourcing platforms can be regarded as a typical example of e-commerce. Meanwhile, seekers and solvers clearly need to build trust before knowledge transactions take place, so it is important to understand how trust between seeker and solver is built. Signaling theory can therefore be applied to the relationship between them. Signaling theory helps to reduce uncertainty, so that the solver can make better decisions about whether to participate in a task. To resolve information asymmetry and facilitate exchange, providers can signal the quality of their products or services using indicators such as price, guarantees, or reputation. In our context, the seeker uses signals to draw more solvers' attention to its tasks and make them willing to participate, so that both parties can reach an exchange or cooperation. Taking signaling theory as our theoretical perspective, we treat seeker reputation and task price as design mechanisms for decreasing information asymmetry.
We further examined the effects of these design mechanisms on solvers’ participation and used interpersonal trust as a mediating variable to investigate whether the design mechanisms affect solvers’ participation through trust. Furthermore, effects can differ across situations. For example, a company’s description of its product may not directly affect users’ behavior, yet it may change the strength of the relationship between a design mechanism and behavior. Hence, we assume that online task information quality acts as a moderating variable that shapes the influence of the design mechanisms on solvers’ participation.

The findings contribute to both theory and practice. Theoretically, we apply signaling theory to the context of open innovation, extending the boundaries of its application. We also take user participation behavior in open innovation as the dependent variable, so this study focuses on users' actual participation behavior rather than their intention to participate. In practice, the findings can help seekers attract more participants and help solvers establish trust in and understanding of seekers, thereby protecting solvers' rights and interests. They can also help improve the platform's management mechanisms, including its trust mechanism, so that the platform can develop more sustainably.

Drawing primarily on signaling theory, this study uses online seeker signals to explain user participation behavior and explores how task information diversification and task information overload moderate this relationship. Section 2 presents the theoretical background and hypotheses; Section 3 presents the methods; Section 4 presents the empirical models and results; and Section 5 provides the discussion.

2. Theoretical Background and Hypotheses

2.1. Theoretical Background

2.1.1. Crowdsourcing Platforms

The crowdsourcing platform is a product of open innovation, and the online crowdsourcing community has become a critical source of innovation and knowledge dissemination, enabling the online public to make full use of their talents []. Based on task form and on whether monetary compensation is offered, some studies divide crowdsourcing platforms into three categories: virtual labor markets (VLMs), tournament crowdsourcing (TC), and open collaboration (OC) []. VLMs are IT-mediated markets in which solvers can complete online services from anywhere, exchanging microtasks for monetary compensation []. TC is another type of crowdsourcing with monetary compensation. On IT-mediated crowdsourcing platforms such as Kaggle, or on internal platforms such as Challenge.gov, the seeker holds a competition, publishes its task, and sets the rules and rewards, and the solver who wins the competition receives the reward []. In the OC model, organizations publish problems to the platform through an IT system, and the public participates voluntarily without monetary compensation. Examples include Wikipedia and the use of social media and online communities to solicit contributions [].

The crowdsourcing platform studied here has several features. First, it is an online service trading platform for microtask crowdsourcing in the Chinese context. Microtasks span design, development, copywriting, marketing, decoration, lifestyle services, and corporate services. Second, the seeker selects the best one or several of the many solvers to receive a reward. Solvers therefore compete with one another, and the more solvers take part in a task, the more likely the seeker is to find a satisfactory winning work. Raising the quality of competition by increasing the number of bidders can thus improve platform efficiency. Finally, although not all solvers become successful bidders, the works they submit can be viewed by everyone on the platform, so user participation contributes to the platform as a whole.

Prior studies on crowdsourcing platforms have tended to explore user intentions [,], whereas this study focuses on users' actual participation. Hence, we measure users' participation behavior as the ratio of the number of bidders to the number of viewers.

2.1.2. Information Asymmetry and Trust

Information asymmetry arises when “different people know different information” []. Because some information is private, asymmetry exists between those who hold the information and those who do not, and those who hold it may make better decisions. Information asymmetry between two parties has been reported to cause inefficient transactions and even market failure []. Two types of information are particularly important for asymmetry: information about quality and information about intention []. When the solver does not know the seeker's characteristics, asymmetry about quality matters; conversely, when the seeker is concerned about the solver's behavior or intentions, asymmetry about intention matters []. The seeker can send observable information about itself to the less informed solver in order to promote communication [].

Signaling theory treats reputation as a vital “signal” that external stakeholders use to assess a company []. Reputation is defined as outsiders' assessment of the target's desirability []. On e-commerce platforms, reputation is buyers' timely assessment of a seller's products or services. On crowdsourcing platforms, reputation comprises solvers' assessments of the seeker together with the seeker's own credit level. The reward price can also serve as a signal []. On crowdsourcing platforms, the solver, as the party completing the task, receives the corresponding reward from the seeker. Through the reward, the solver can form an understanding of the seeker, which reduces the information asymmetry between them.

In the sharing economy, products and services are primarily provided by individuals, so the sharing economy places more emphasis on trust between users. Gefen et al. [] termed this “interpersonal trust”: one party feels that it can rely on the other party's behavior and gains a sense of security and comfort. On crowdsourcing platforms, from the solver's standpoint, the seeker's reputation (e.g., ratings and text comments), feedback responses (e.g., response speed and feedback content), personal authentication, and display of personal characteristics all have a positive impact on the solver's trust [].

2.2. Hypotheses

When two parties (individuals or companies) have access to different information, signaling theory helps explain their behavior []. In this study, the two parties are the seeker and the solver. Strategic signaling refers to actions the seeker takes to influence the solver's views and behaviors []. Information technology (IT) features have been reported to act as signals in online communities that can affect the solver's trust and participation []. Moreover, IT features such as reputation mechanisms can help the seeker signal its identity level and contribution to the platform []. Providing such IT features can also enhance interpersonal trust [] and directly increase user participation.

The existing literature identifies six ways to enhance user participation in online communities []: getting information, giving information, reputation building, relationship development, recreation, and self-discovery. As mentioned earlier, signaling reputation can directly increase user participation in crowdsourcing, because the seeker's reputation derives mostly from solvers' previous assessments and from the seeker's own contract volume on the platform. Both factors are likely to increase solvers' participation directly. Hence, we hypothesize the following:

Hypothesis 1.

Online reputation positively correlates with user participation behavior.

Yao et al. [] argued that, in C2C online trading, seller reputation systems can reduce severe information asymmetry. Likewise, on crowdsourcing platforms, the seeker's reputation can be used to reduce information asymmetry, and mitigating information asymmetry can build interpersonal trust between the two parties []. We therefore expect the seeker's online reputation to build trust between both parties, so that more solvers participate. Hence, we hypothesize the following:

Hypothesis 2.

Interpersonal trust positively mediates the correlation between online reputation and user participation behavior.

Rewards offered through network services are another way to encourage users to participate in crowdsourcing. Reward is an external form of motivation that increases participation in virtual communities [], and users will not participate unless they receive a satisfactory reward that compensates for the resources they invest []. In buying and selling markets, consumers are keen to obtain price information, which has given rise to price comparison services through which consumers compare the prices of different retailers to make better decisions. Similarly, on our crowdsourcing platform, the solver decides whether to participate in a task by evaluating its reward; if the reward level is high, he or she is more likely to participate. Hence, we hypothesize the following:

Hypothesis 3.

Salary comparison positively correlates with user participation behavior.

The signals of price comparison services are closely related to the usefulness of information, including whether information is asymmetric or symmetric []. Consumers use tools such as price-watch services to address information asymmetry. Moreover, Lee et al. [] reported that some products on the market are homogeneous and can be distinguished only by price. Access to price comparisons lowers consumers' search costs, which in turn reduces information asymmetry.

In our crowdsourcing context, the solver can see the salary levels of all seekers releasing tasks on the market. Naturally, the solver expects the reward for a task he or she participates in to be above the average level. Salary comparison thus lets the solver reduce search costs and avoid unnecessary losses, thereby establishing interpersonal trust between the two parties. Trust has been identified as the most critical issue in determining why users continue to use Web services [], and once interpersonal trust is established, the solver is more likely to participate in the task. Hence, we hypothesize the following:

Hypothesis 4.

Interpersonal trust positively mediates the correlation between salary comparison and user participation behavior.

According to the existing literature, information quality captures e-commerce content issues: if potential buyers or suppliers are to initiate transactions over the Internet, the Web content should be personalized, complete, relevant, and easy to understand []. On crowdsourcing platforms, information quality depends primarily on online task information quality. Task information diversification means that the seeker describes its task comprehensively and clearly through multiple forms, such as text and pictures; this is a manifestation of high-quality task information. By contrast, task information overload arises when the seeker's task description is excessively long, burdening the solver with reading and leaving him or her with an insufficient understanding; this is an example of low-quality task information. Online task information quality, in the form of task information diversification and task information overload, is itself a type of signal, and we treat it as a contextual variable that moderates the impact of online seeker signals on user participation behavior.

Hence, we expect task information diversification to play a positive role, strengthening the positive correlation between online seeker signals and user participation behavior. Thus, we hypothesize the following:

Hypothesis 5a.

Task information diversification positively moderates (strengthens) the positive correlation between online reputation and user participation behavior.

Hypothesis 5b.

Task information diversification positively moderates (strengthens) the positive correlation between salary comparison and user participation behavior.

By contrast, task information overload should weaken the positive correlation between online seeker signals and user participation behavior, because it can lead the solver to form a negative attitude toward the task and regard it as too complicated. Hence, we hypothesize the following:

Hypothesis 6a.

Task information overload negatively moderates (weakens) the positive correlation between online reputation and user participation behavior.

Hypothesis 6b.

Task information overload negatively moderates (weakens) the positive correlation between salary comparison and user participation behavior.

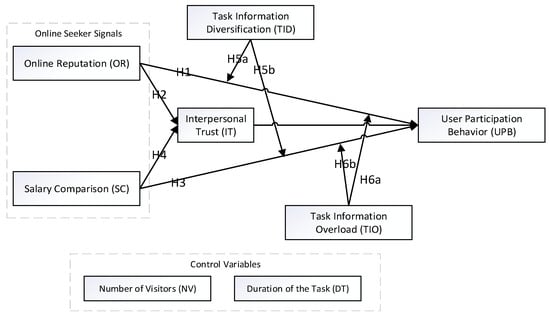

2.3. Theoretical Framework

Figure 1 presents the theoretical framework of the research, comprising four main hypotheses and four subhypotheses.

Figure 1.

Theoretical framework.

3. Material and Methods

3.1. Research Context and Data Collection

This study tested the proposed hypotheses with transaction data collected from EPWK.com, one of China’s three innovative knowledge and skill sharing service platforms. As of the end of June 2020, the platform had over 22 million registered users. In addition, the website's past and present data are relatively publicly accessible, and its task models are diverse. For these reasons, we selected this website as the data source.

The crowdsourcing platform in this study offered six task models: “single reward,” “multiperson reward,” “tendering task,” “employment task,” “piece-rate reward,” and “direct employment.” In direct employment tasks, there was a single bidder, determined by the seeker; the other task models involved multiperson bidding, in which the seeker selected the best works. We used Python to crawl data covering June 30, 2010 to December 31, 2019. To ensure data integrity, we collected only tasks with “completed” status. After data cleaning, we obtained sample data for 110,000 tasks. Because data on direct employment tasks were lacking, this task type could not be analyzed comprehensively and was excluded. Finally, we obtained 28,887 valid samples, which were standardized. Examples of raw data before standardization are shown in Table 1.
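The filtering and standardization steps can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the record fields (`status`, `model`, `reward`) are hypothetical, the crawling step is omitted because the page structure is not described here, and standardization is assumed to be a z-score (subtract the mean, divide by the sample standard deviation).

```python
from statistics import mean, stdev

# Hypothetical task records; field names are illustrative, not EPWK.com's schema.
tasks = [
    {"status": "completed", "model": "single reward", "reward": 500.0},
    {"status": "completed", "model": "tendering task", "reward": 1200.0},
    {"status": "in progress", "model": "single reward", "reward": 300.0},
    {"status": "completed", "model": "direct employment", "reward": 800.0},
    {"status": "completed", "model": "piece-rate reward", "reward": 200.0},
]


def clean(records):
    """Keep completed tasks and drop the direct-employment task model."""
    return [r for r in records
            if r["status"] == "completed" and r["model"] != "direct employment"]


def zscore(values):
    """Assumed standardization: mean 0 and sample standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


sample = clean(tasks)
z_reward = zscore([r["reward"] for r in sample])
print(len(sample), [round(v, 3) for v in z_reward])
```

Standardizing every variable this way puts coefficients on a common scale, which is one plausible reason the reported β values are small and directly comparable.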

Table 1.

Examples of raw data before standardization.

3.2. Variables and Overall Approach

3.2.1. Variables

Table 2 shows all variables used in this study. The dependent variable was user participation behavior, defined as the ratio of the number of solvers for a task to the number of visitors to that task. A higher ratio means that more solvers were involved in creating works for the task, giving the seeker more choices and sharing more ideas with everyone involved. The independent variables were online reputation and salary comparison. The mediating variable was interpersonal trust; trust between the two parties was reflected in the number of participating solvers of different credit ratings. The moderating variables were task information diversification and task information overload. Most of these variables were displayed directly on the task release homepage and the seeker's homepage and could therefore be seen by solvers.
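The dependent variable is a simple ratio, sketched below for one task. The function name is hypothetical, and returning 0.0 for a task with no recorded visitors is our own assumed convention; the text does not say how such tasks were handled.

```python
def participation_ratio(n_bidders: int, n_visitors: int) -> float:
    """User participation behavior: solvers (bidders) divided by visitors for one task."""
    if n_visitors <= 0:
        # Assumed convention: a task with no recorded visitors has no measurable participation.
        return 0.0
    return n_bidders / n_visitors


# Illustrative numbers, not taken from the dataset.
print(participation_ratio(12, 480))  # 0.025
```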

Table 2.

Constructs and measurement.

Online reputation represents the seeker's trust level shown on his or her homepage and is measured by the seeker's credit rating. Salary comparison reflects the level of reward offered to solvers and is measured by the salary difference between similar tasks. Table 2 shows the specific calculation methods.
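Because the exact formula is given only in Table 2, the sketch below assumes one plausible reading of "salary difference between similar tasks": a task's reward minus the mean reward of all tasks in the same category (including itself). The category labels, task IDs, and reward values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical tasks: (task_id, category, reward); values are illustrative only.
tasks = [
    ("t1", "logo design", 800.0),
    ("t2", "logo design", 500.0),
    ("t3", "logo design", 200.0),
    ("t4", "copywriting", 300.0),
    ("t5", "copywriting", 100.0),
]


def salary_comparison(rows):
    """Assumed definition: reward minus the mean reward of tasks in the same category."""
    by_cat = defaultdict(list)
    for _, cat, reward in rows:
        by_cat[cat].append(reward)
    cat_mean = {cat: mean(rewards) for cat, rewards in by_cat.items()}
    return {tid: reward - cat_mean[cat] for tid, cat, reward in rows}


sc = salary_comparison(tasks)
print(sc["t1"], sc["t5"])  # 300.0 -100.0
```

A positive value marks a task paying above the going rate for comparable work, which is the signal the hypotheses assume solvers respond to.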

Task information diversification refers to the seeker's use of different forms to describe task information and is measured by the number of attachments, which represent forms other than the text on the task release homepage. Both the task requirements and the supplementary requirements describe the seeker's request in text form. Some tasks also include attachments, which may contain text-like and other forms of task description; our measure therefore counts the non-text forms of task description. Task information overload is measured by the number of bytes of attachment text on the task release homepage: if the task description contains too many bytes, it imposes a degree of information overload on the solver.
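Both moderators can be computed from a task's attachment list. The sketch assumes a hypothetical attachment structure (a `kind` label plus optional text `content`), treats only `"text"` attachments as text, and assumes UTF-8 encoding when counting bytes; none of these details is specified in the text.

```python
TEXT_KINDS = {"text"}  # assumption: which attachment kinds count as text


def diversification(atts):
    """Number of attachments in a non-text form (images, video, and so on)."""
    return sum(1 for a in atts if a["kind"] not in TEXT_KINDS)


def overload(atts, encoding="utf-8"):
    """Total bytes of attachment text; UTF-8 is an assumed encoding."""
    return sum(len(a["content"].encode(encoding))
               for a in atts if a["kind"] in TEXT_KINDS)


# Hypothetical attachments for one task.
attachments = [
    {"kind": "text", "content": "需要一个简洁的标志"},  # 9 CJK characters
    {"kind": "image", "content": None},
    {"kind": "video", "content": None},
]
print(diversification(attachments), overload(attachments))  # 2 27
```

Counting bytes rather than characters matters for Chinese text: each CJK character above occupies 3 bytes in UTF-8, so 9 characters yield 27 bytes.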

The control variables were the number of visitors and the duration of the task. Visitors to a task may or may not become solvers. Task duration is the number of days from the release of the requirements to the acceptance of payment.

Table 3 presents the descriptive statistics, and Table 4 reports the correlations between key variables. These correlations suggest that multicollinearity is unlikely to be a major issue in this study.

Table 3.

Descriptive statistics.

Table 4.

Correlations between key variables.

3.2.2. Overall Approach

In this study, we compared results from a system-of-regressions approach and structural equation modeling (SEM). This article follows the guidelines for quantitative models []. Covariance-based SEM tools such as AMOS and LISREL provide fitting algorithms, but these tend to work well only for normally distributed survey data, and the data used in this article do not follow a multivariate normal distribution. We therefore chose the system-of-regressions approach together with SEM analysis (partial least squares SEM, as implemented in SmartPLS), both of which have well-understood fit measures. We used Stata 15 and SmartPLS 3 for the analysis.

4. Empirical Models and Data Results

4.1. Adoption of Independent Variables and User Participation Behavior

Equations (1) and (2) specify our empirical models for Hypotheses 1 and 3. We index tasks by i. In all equations, we controlled for task duration and the number of visits. We estimated Equations (1) and (2) by OLS regression (OLS:1 and OLS:2, respectively). Columns 2 and 3 of Table 5 report the results: column 2 adds the effect of online reputation on user participation behavior, and column 3 adds the effect of salary comparison on user participation behavior.
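Because Equations (1) and (2) are not reproduced here, the sketch assumes the linear form UPB_i = β0 + β1·OnlineReputation_i + β2·Duration_i + β3·Visits_i + ε_i and estimates it by OLS on synthetic standardized data. The solver uses the normal equations with Gauss-Jordan elimination so the example needs only the standard library; the coefficients used to generate the data are illustrative and are not the paper's estimates.

```python
import random


def ols(X, y):
    """OLS coefficients via the normal equations (X'X)b = X'y, solved by Gauss-Jordan elimination."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[piv] = A[piv], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic standardized data (illustrative effect sizes, not the paper's data).
rng = random.Random(42)
n = 2000
rows, y = [], []
for _ in range(n):
    rep, dur, vis = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
    rows.append([1.0, rep, dur, vis])  # intercept, online reputation, duration, visits
    y.append(0.03 * rep + 0.01 * dur - 0.02 * vis + rng.gauss(0, 0.1))

beta = ols(rows, y)
print([round(b, 3) for b in beta])  # estimates near [0, 0.03, 0.01, -0.02]
```

Swapping the reputation column for salary comparison gives the analogous sketch of Equation (2).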

Table 5.

Estimation results for user participation behavior.

Based on the results shown in column 2, we find that there is a significant positive correlation between online reputation and user participation behavior (β = 0.03, p < 0.01). Thus, Hypothesis 1, which predicts a positive effect of online reputation on user participation behavior, is supported. Meanwhile, we also observed a positive and significant correlation between salary comparison and user participation behavior (β = 0.063, p < 0.01). Thus, Hypothesis 3, which predicts a positive effect of salary comparison on user participation behavior, is supported.

4.2. Mediating Effect Analysis

Equations (1)–(6) specify our empirical models for Hypotheses 2 and 4. We index tasks by i and, in all equations, control for task duration and the number of visits. We estimated Equations (1)–(6) by OLS regression; columns 2–7 of Table 6 report the results. Columns 2–4 test the mediating effect of interpersonal trust on the relationship between online reputation and user participation behavior, and columns 5–7 test the mediating effect of interpersonal trust on the relationship between salary comparison and user participation behavior.

Table 6.

Estimation results for the mediating effects of interpersonal trust.

Column 2 shows a positive and significant correlation between online reputation and user participation behavior (β = 0.03, p < 0.01). Column 3 shows a negative and significant correlation between online reputation and interpersonal trust (β = −0.022, p < 0.01). Column 4 shows positive and significant correlations between online reputation and user participation behavior (β = 0.031, p < 0.01) and between interpersonal trust and user participation behavior (β = 0.051, p < 0.01). Thus, interpersonal trust mediates the relationship between online reputation and user participation behavior. However, because the coefficient of online reputation in column 3 (OLS:3) is negative (−0.022) while that of interpersonal trust in column 4 is positive, the indirect effect is negative. Thus, Hypothesis 2, which predicts a positive mediating role of interpersonal trust between online reputation and user participation behavior, is not supported.
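The three regressions in columns 2–4 can be sketched on synthetic data. For brevity the sketch omits the control variables (including the same controls in all three regressions leaves the logic unchanged), and the data-generating coefficients are hypothetical, chosen only to reproduce the sign pattern reported for Hypothesis 2 (negative a path, positive b path). In OLS the total effect decomposes exactly as c = c′ + a·b, which matches the reported 0.031 + (−0.001) = 0.030.

```python
import random


def slope(x, y):
    """Simple-regression slope of y on x (intercept included)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def two_slopes(x1, x2, y):
    """Partial slopes of y on x1 and x2 (intercept included), via centered cross-products."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def mediation(x, m, y):
    """Causal-steps regressions: total effect, path a, then direct effect and path b."""
    c = slope(x, y)                   # total effect: Y on X
    a = slope(x, m)                   # path a: mediator M on X
    c_prime, b = two_slopes(x, m, y)  # direct effect c' and path b: Y on X and M
    return {"c": c, "a": a, "b": b, "c_prime": c_prime, "indirect": a * b}


# Synthetic data (hypothetical coefficients) with a negative a path.
rng = random.Random(11)
x = [rng.gauss(0, 1) for _ in range(1000)]
m = [-0.4 * xi + rng.gauss(0, 1) for xi in x]
y = [0.3 * xi + 0.5 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
res = mediation(x, m, y)
print({k: round(v, 3) for k, v in res.items()})
```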

We performed Sobel and bootstrapping tests in Stata; Table 7 reports the results. For Hypothesis 2, the indirect effect is −0.001, the direct effect is 0.031, and the total effect is 0.030. The p value of the Sobel test is 0.003, which is below 0.05, indicating a significant mediation effect. The mediation effect accounts for −3.75% of the total effect; that is, interpersonal trust exerts a small negative mediating effect between online reputation and user participation behavior. This result runs contrary to the prediction, so Hypothesis 2 is not supported (Table 7).
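The Sobel test divides the indirect effect a·b by its first-order standard error, sqrt(b²·SE_a² + a²·SE_b²), and compares the result with the standard normal distribution. The sketch below plugs in the rounded Hypothesis 2 coefficients from Table 6; the standard errors are not reported in the text, so the values used here are hypothetical placeholders and the resulting z and p are illustrative only.

```python
from math import erfc, sqrt


def sobel(a, se_a, b, se_b):
    """Sobel z test for the indirect effect a*b (first-order standard error)."""
    ab = a * b
    se_ab = sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = ab / se_ab
    p = erfc(abs(z) / sqrt(2))  # two-sided p value under the standard normal
    return ab, z, p


# a and b are the rounded Hypothesis 2 coefficients; se_a and se_b are hypothetical.
ab, z, p = sobel(a=-0.022, se_a=0.006, b=0.051, se_b=0.006)
total = 0.030
print(round(ab, 6), round(ab / total, 4))  # -0.001122 -0.0374
```

With the rounded coefficients the indirect share is about −3.74%, close to the reported −3.75%; the small gap presumably reflects rounding of the published coefficients.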

Table 7.

Significance analysis of the direct and indirect effects by regression analysis.

Column 5 of Table 6 shows a positive and significant correlation between salary comparison and user participation behavior (β = 0.063, p < 0.01). Column 6 shows a positive and significant correlation between salary comparison and interpersonal trust (β = 0.358, p < 0.01). Column 7 shows positive and significant correlations between salary comparison and user participation behavior (β = 0.046, p < 0.01) and between interpersonal trust and user participation behavior (β = 0.047, p < 0.01). Thus, interpersonal trust mediates the relationship between salary comparison and user participation behavior. Because the coefficient of salary comparison in column 6 (OLS:5) is positive (0.358) and that of interpersonal trust in column 7 is also positive, the indirect effect is positive. Thus, Hypothesis 4, which predicts a positive mediating role of interpersonal trust between salary comparison and user participation behavior, is supported.

For Hypothesis 4, the Sobel and bootstrapping tests in Stata (Table 7) give an indirect effect of 0.017, a direct effect of 0.046, and a total effect of 0.063. The p value of the Sobel test is 0 to the reported precision, which is below 0.05, indicating a significant mediation effect. The mediation effect accounts for 26.8% of the total effect; that is, interpersonal trust partially and positively mediates the relationship between salary comparison and user participation behavior. These results support Hypothesis 4 (Table 7).
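The bootstrapping test mentioned above can be sketched as a percentile bootstrap: resample tasks with replacement, re-estimate a·b each time, and take the 2.5th and 97.5th percentiles; an interval that excludes zero indicates mediation. The data are synthetic with a hypothetical positive indirect path (true a·b = 0.2), the controls are omitted for brevity, and 1,000 resamples is our assumed setting, not one reported in the text.

```python
import random


def _center(v):
    m = sum(v) / len(v)
    return [x - m for x in v]


def indirect_effect(x, m, y):
    """a*b, with a from M on X and b the partial slope of M in Y on X and M."""
    dx, dm, dy = _center(x), _center(m), _center(y)
    sxx = sum(v * v for v in dx)
    smm = sum(v * v for v in dm)
    sxm = sum(u * v for u, v in zip(dx, dm))
    sxy = sum(u * v for u, v in zip(dx, dy))
    smy = sum(u * v for u, v in zip(dm, dy))
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a * b


def bootstrap_ci(x, m, y, reps=1000, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(reps):
        idx = [rng.randrange(n) for _ in range(n)]  # resample tasks with replacement
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    return draws[int(alpha / 2 * reps)], draws[int((1 - alpha / 2) * reps) - 1]


# Synthetic data with a positive indirect path (true a*b = 0.5 * 0.4 = 0.2).
rng = random.Random(7)
x = [rng.gauss(0, 1) for _ in range(300)]
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.3 * xi + 0.4 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
lo, hi = bootstrap_ci(x, m, y)
print(f"95% CI for a*b: [{lo:.3f}, {hi:.3f}]")
```

Unlike the Sobel test, the percentile bootstrap does not assume that a·b is normally distributed, which is why the two are often reported together.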

To further verify the mediating effects in Hypotheses 2 and 4, we also performed Sobel and bootstrapping tests in SmartPLS; Table 8 reports the results. For Hypothesis 2, the indirect effect is 0.005 and the direct effect is 0.143. The p value of the Sobel test is 0 to the reported precision, which is below 0.05, indicating a significant mediation effect. Unlike the regression-based estimate in Table 7, the SEM estimate of this indirect effect is positive, indicating that interpersonal trust positively mediates the relationship between online reputation and user participation behavior; on this basis, Table 8 supports Hypothesis 2. For Hypothesis 4, the indirect effect is 0.049 and the direct effect is 0.173. The p value of the Sobel test is again 0, below 0.05, indicating that interpersonal trust positively mediates the relationship between salary comparison and user participation behavior. These results support Hypothesis 4 (Table 8).

Table 8.

Significance analysis of the direct and indirect effects by SEM analysis.

4.3. Moderating Effect Analysis

Equations (1)–(2) and (7)–(10) specify our empirical models for Hypotheses 5a and 5b. We index tasks by i and, in all equations, control for task duration and the number of visits. We estimated these equations by OLS regression; columns 2–7 of Table 9 report the results. Columns 2–4 test the moderating effect of task information diversification on the relationship between online reputation and user participation behavior, and columns 5–7 test its moderating effect on the relationship between salary comparison and user participation behavior.

Table 9.

Estimation results for the moderating effects of task information diversification.

Column 4 shows a positive and highly significant interaction effect of online reputation and task information diversification on user participation behavior (β = 0.008, p < 0.01). Because the ANOVA test is significant (p = 1.563 × 10⁻⁷ < 0.05), Equations (7) and (8) differ significantly, indicating that task information diversification has a positive moderating effect []. Thus, Hypothesis 5a, which predicts that task information diversification positively moderates the relationship between online reputation and user participation behavior, is supported.
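The moderation test can be sketched as a comparison of two nested OLS models: a restricted model with X and the moderator Z (in the spirit of Equation (7)) and a full model that adds the product X·Z (in the spirit of Equation (8)). The ANOVA comparison of nested models is an F test on the drop in residual sum of squares. The data below are synthetic, the controls are omitted, and the effect sizes are hypothetical.

```python
import random


def ols_rss(X, y):
    """Fit OLS by the normal equations (Gauss-Jordan) and return (coefficients, RSS)."""
    k = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[piv] = A[piv], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [p - f * q for p, q in zip(A[r], A[col])]
    beta = [A[i][k] / A[i][i] for i in range(k)]
    rss = sum((v - sum(b * xv for b, xv in zip(beta, r))) ** 2 for r, v in zip(X, y))
    return beta, rss


def nested_f(rss_r, rss_f, q, n, k_f):
    """F statistic for adding q terms to a restricted model (full model has k_f parameters)."""
    return ((rss_r - rss_f) / q) / (rss_f / (n - k_f))


rng = random.Random(3)
n = 1000
x = [rng.gauss(0, 1) for _ in range(n)]  # e.g., standardized online reputation
z = [rng.gauss(0, 1) for _ in range(n)]  # e.g., standardized task information diversification
y = [0.3 * a + 0.2 * b + 0.25 * a * b + rng.gauss(0, 1) for a, b in zip(x, z)]

_, rss_r = ols_rss([[1.0, a, b] for a, b in zip(x, z)], y)
beta_f, rss_f = ols_rss([[1.0, a, b, a * b] for a, b in zip(x, z)], y)
F = nested_f(rss_r, rss_f, q=1, n=n, k_f=4)
print(f"interaction = {beta_f[3]:.3f}, F(1, {n - 4}) = {F:.1f}")
```

With one added term, F equals the squared t statistic of the interaction coefficient; its p value comes from the F(1, n − 4) distribution, for example via `scipy.stats.f.sf` if SciPy is available.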

Column 7 shows a positive and highly significant interaction effect of salary comparison and task information diversification on user participation behavior (β = 0.005, p < 0.01). Because the ANOVA test is significant (p = 0.018 < 0.05), Equations (9) and (10) differ significantly, indicating that task information diversification has a positive moderating effect []. Thus, Hypothesis 5b, which predicts that task information diversification positively moderates the relationship between salary comparison and user participation behavior, is supported.

Equations (1), (2) and (11)–(14) specify our empirical models for Hypotheses 6a and 6b, where i indexes tasks. All equations control for task duration and the number of visits, and we estimate them by OLS. Columns 2–7 of Table 10 report the results: columns 2–4 add the moderating effect of task information overload on the relationship between online reputation and user participation behavior, and columns 5–7 add its moderating effect on the relationship between salary comparison and user participation behavior.

Table 10.

Estimation results for the moderating effects of task information overload.

As column 4 shows, the interaction between online reputation and task information overload has a negative and significant effect on user participation behavior (β = −0.005, p < 0.01). The ANOVA comparison of Equations (11) and (12) is also significant (p = 4.027 × 10⁻⁵ < 0.05), indicating that the two models differ significantly and that task information overload has a negative moderating effect []. Hypothesis 6a, which predicts that task information overload negatively moderates the relationship between online reputation and user participation behavior, is therefore supported.

As column 7 shows, the interaction between salary comparison and task information overload has a negative and significant effect on user participation behavior (β = −0.011, p < 0.01). The ANOVA comparison of Equations (13) and (14) is also significant (p = 4.502 × 10⁻⁸ < 0.05), indicating that the two models differ significantly and that task information overload has a negative moderating effect []. Hypothesis 6b, which predicts that task information overload negatively moderates the relationship between salary comparison and user participation behavior, is therefore supported.

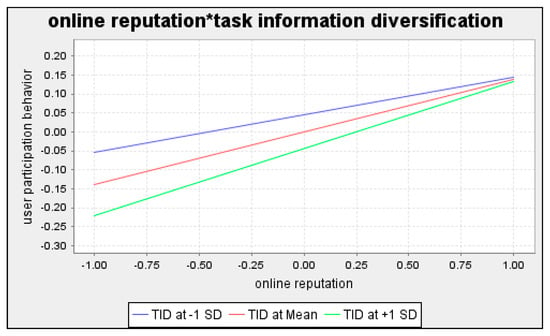

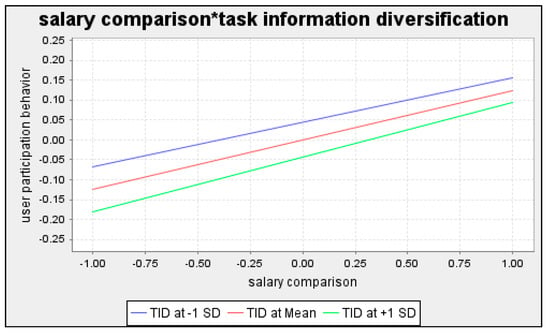

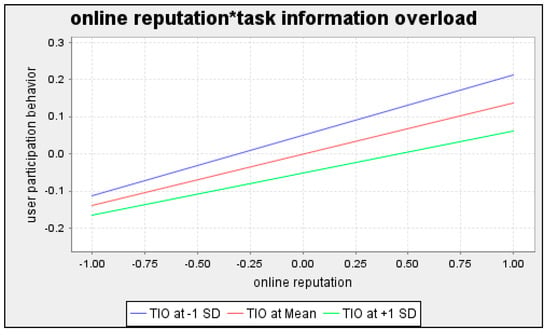

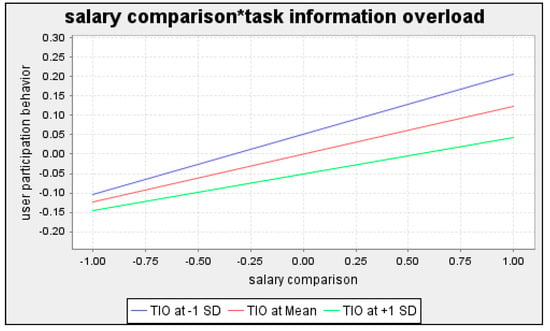

To further verify Hypotheses 5 and 6, we also tested the moderating effects with SEM; the results are shown in Figures 2–5. In Figures 2 and 4, the three lines depict the relationship between online reputation and user participation behavior; in Figures 3 and 5, they depict the relationship between salary comparison and user participation behavior. The middle line shows this relationship at the average level of the moderator (task information diversification in Figures 2 and 3, task information overload in Figures 4 and 5) [], and the other two lines show it at higher and lower levels of the moderator.
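The three lines in each simple slope plot follow from the interaction model: if Y = b0 + b_x·X + b_z·Z + b_xz·X·Z + controls, the slope of Y on X at moderator level Z is b_x + b_xz·Z. The sketch below evaluates this slope at the mean of Z and one standard deviation above and below it. The ±1 SD choice is a common convention and an assumption here, since this section does not state which higher and lower levels the figures use; the coefficients and moderator values in the usage example are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def simple_slopes(b_x: float, b_xz: float, moderator: Sequence[float], k: float = 1.0) -> Dict[str, float]:
    """Conditional slope of Y on X at low / mean / high levels of moderator Z.

    Assumes Y = b0 + b_x*X + b_z*Z + b_xz*X*Z + controls, so dY/dX = b_x + b_xz*Z.
    Levels are mean - k*SD, mean, and mean + k*SD of the observed moderator.
    """
    mean_z = statistics.fmean(moderator)
    sd_z = statistics.stdev(moderator)  # sample standard deviation
    levels = {"low": mean_z - k * sd_z, "mean": mean_z, "high": mean_z + k * sd_z}
    return {name: b_x + b_xz * z for name, z in levels.items()}


if __name__ == "__main__":
    diversification = [2.0, 4.0, 6.0, 3.0, 5.0]  # hypothetical moderator values
    for level, slope in simple_slopes(0.2, 0.05, diversification).items():
        print(f"{level:>4}: slope of participation on reputation = {slope:.3f}")
```

A positive b_xz fans the lines out so that the high-moderator line is steepest (the pattern expected under Hypothesis 5); a negative b_xz makes the high-moderator line flattest (the pattern expected under Hypothesis 6).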

Figure 2.

Moderating effect simple slope analysis for Hypothesis 5a.

Figure 3.

Moderating effect simple slope analysis for Hypothesis 5b.

Figure 4.

Moderating effect simple slope analysis for Hypothesis 6a.

Figure 5.

Moderating effect simple slope analysis for Hypothesis 6b.

Figures 2 and 3 show that the middle lines slope upward: at average levels of task information diversification, higher levels of online reputation and of salary comparison are both associated with higher levels of user participation behavior. Moreover, the relationship between online reputation and user participation behavior is stronger when task information diversification is high and weaker when it is low, so the simple slope plot is consistent with the positive interaction term for Hypothesis 5a. Likewise, the relationship between salary comparison and user participation behavior is stronger when task information diversification is high and weaker when it is low, consistent with the positive interaction term for Hypothesis 5b.

Figures 4 and 5 show that the middle lines also slope upward: at average levels of task information overload, higher levels of online reputation and of salary comparison are both associated with higher levels of user participation behavior. However, the relationship between online reputation and user participation behavior is weaker when task information overload is high and stronger when it is low, so the simple slope plot is consistent with the negative interaction term for Hypothesis 6a. Likewise, the relationship between salary comparison and user participation behavior is weaker when task information overload is high and stronger when it is low, consistent with the negative interaction term for Hypothesis 6b.

5. Discussion

5.1. Conclusions

This study suggests that online seeker signals, namely online reputation and salary comparison, affect user participation behavior, and that they can also positively affect user participation behavior by building interpersonal trust. Moreover, online task information quality, comprising task information diversification and task information overload, moderates the relationship between online seeker signals and user participation behavior.

Several results corroborate our expectations (Hypotheses 1, 3, and 4). Online reputation exerts a positive impact on user participation behavior. A seeker's reputation derives from the seeker's own credit, which in turn derives from his/her past performance. However, the regression analysis indicates that interpersonal trust plays a negative mediating role in the relationship between online reputation and user participation behavior. One possible reason is that, for solvers with different credit ratings, the seeker's credit alone is not enough to generate sufficient trust. Future work should therefore consider other forms of seeker reputation that could increase solvers' trust. By contrast, the SEM-based mediation analysis supported Hypothesis 2; the evidence for Hypothesis 2 is therefore mixed, and we intend to examine it in more depth in future research.

Salary comparison also exerts a positive impact on user participation behavior. Salary comparison captures the reward level of the target task relative to other tasks of the same type; this information is not currently displayed on the platform and must be calculated manually. We therefore suggest that future versions of the platform display it, to help users decide whether to participate in a task. Seeing such comparisons directly would likely increase solvers' trust in the seeker and reassure them that their labor will be fairly rewarded, making them more willing to participate in the task.
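The manual calculation described above could be automated. A minimal Python sketch of one such calculation follows, assuming (our assumption, which may differ from the measure defined elsewhere in the paper) that salary comparison is the ratio of a task's reward to the average reward of the other tasks of the same type. The tuple layout and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def salary_comparison(tasks: List[Tuple[str, str, float]]) -> Dict[str, Optional[float]]:
    """Reward of each task divided by the mean reward of the OTHER tasks of its type.

    tasks: (task_id, task_type, reward) tuples with positive rewards.
    A value above 1.0 means the task pays more than comparable tasks.
    Returns None for a task that is the only one of its type.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for _, task_type, reward in tasks:
        totals[task_type] += reward
        counts[task_type] += 1

    result: Dict[str, Optional[float]] = {}
    for task_id, task_type, reward in tasks:
        others = counts[task_type] - 1
        if others == 0:
            result[task_id] = None  # nothing of the same type to compare with
            continue
        # Leave-one-out mean: the target task is excluded from its own benchmark
        mean_others = (totals[task_type] - reward) / others
        result[task_id] = reward / mean_others
    return result
```

Excluding the target task from its own benchmark follows the wording "compared to other tasks of the same type"; including it would simply divide by the overall type mean instead.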

This study demonstrates that task information diversification, one dimension of online task information quality, positively moderates the relationship between online reputation and user participation behavior (Hypothesis 5a). A plausible explanation is that diversified task information expresses the task more clearly, which improves the solver's perception of the seeker and thereby implicitly strengthens the positive relationship between online reputation and user participation behavior. The results also support Hypothesis 5b: task information diversification positively moderates the relationship between salary comparison and user participation behavior. The more diversified the task information, the clearer and more understandable the task appears to the solver. For the same reward, solvers therefore expect to spend less effort understanding the task, which strengthens the relationship between salary comparison and user participation behavior.

Conversely, because task information overload places a burden on the solver, it negatively moderates the impact of online seeker signals on user participation behavior, supporting Hypotheses 6a and 6b. This suggests that seekers should describe tasks concisely: even when more task information is required, the description text should be kept as short as possible to avoid placing an unnecessary burden on the solver.

5.2. Contributions

This study quantitatively validated a mechanism model of solvers' user participation behavior on crowdsourcing platforms using a system of regression equations and SEM analysis; the findings have both theoretical and practical significance.

5.2.1. Theoretical Contributions

First, from the perspective of information signals, this study applies signaling theory to the context of open innovation, extending the boundaries of the theory's application. Research on signaling theory has focused mainly on online commerce [] and online communities [], and has seldom addressed open innovation; this extension is one of our theoretical contributions.

Second, the dependent variable in this study, user participation behavior, is distinctive to the open innovation setting. Although it is calculated as a conversion rate, it captures the proportion of solvers who browse a task and actually participate in it. Previous studies of information signals usually used user perceptions or user intentions as the dependent variable [], whereas our study focuses on users' actual participation behavior; this is another theoretical contribution of this study.
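Written as a formula, the conversion rate described above for task i is shown below. The symbols are ours, introduced for illustration, and the worked numbers are hypothetical: a task browsed by 400 solvers, 12 of whom participate, has a user participation behavior of 0.03.

```latex
\mathrm{UPB}_i = \frac{N^{\mathrm{participate}}_i}{N^{\mathrm{browse}}_i},
\qquad \text{e.g. } \mathrm{UPB}_i = \frac{12}{400} = 0.03
```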

Third, the moderating variable in this article is online task information quality. We examined whether the impact of online seeker signals on user participation behavior depends on this variable. Researchers have often overlooked the seeker's task description; yet, as a form of online task information, its quality, reflected in task information diversification and task information overload, indirectly affects solver behavior. Proposing this moderating variable and examining its effect on user behavior are further theoretical contributions of this study.

5.2.2. Practical Implications

First, for seekers, both independent variables positively affect user participation behavior, which shows that seekers need to attend to their online reputation and the task reward. Seekers should maintain their own credit and set competitive reward levels in order to attract more solvers, so that they receive more and higher-quality submissions from which to select the winning solver. In addition, the results for online task information quality suggest that solvers prefer clearer task descriptions: task information diversification positively moderates, and task information overload negatively moderates, the effect of seeker signals on user participation behavior. Seekers should therefore pay close attention to how they write task descriptions.

Second, for solvers, salary comparison makes the reward level of each task easier to assess, giving them an initial impression of the seeker and helping them decide whether a task is worth bidding on. Improved online task information quality can also help solvers better understand tasks and reduce unnecessary information asymmetry.

Third, this article addresses the problem of platform sustainability. Crowdsourcing platforms are two-sided sharing platforms; enabling more solvers to participate in a given task can raise seekers' satisfaction with the task and increase the platform's traffic and activity. With the help of digital technology, optimizing and standardizing the platform interface can attract more capable solvers and improve task quality, allowing the platform to develop more sustainably, especially in the current post-pandemic era.

5.3. Limitations and Future Research

First, we used data from only one crowdsourcing platform; because different platforms have their own preferences and development priorities, our findings may not generalize to all platforms. Future research should study other types of platforms, such as ZBJ.com. Second, we used only objective secondary data. Future studies could use other methods, such as surveys or experiments, to shed further light on the underlying mechanisms. We plan to interview solvers on the platform and to conduct a questionnaire survey of platform users focused on page design, in order to further study the optimization of open innovation crowdsourcing communities. Third, this research is set in China. Future work should extend the model to crowdsourcing platforms in other countries to test the generality of the conclusions.

Author Contributions

Conceptualization, S.G. and X.J.; methodology, X.J.; software, X.J.; validation, S.G., X.J. and Y.Z.; formal analysis, X.J.; investigation, X.J.; resources, S.G.; data curation, X.J.; writing—original draft preparation, X.J.; writing—review and editing, Y.Z.; visualization, X.J.; supervision, Y.Z.; project administration, Y.Z.; funding acquisition, S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Social Science Fund of China (17BJY033).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pohlisch, J. Internal Open Innovation–Lessons Learned from Internal Crowdsourcing at SAP. Sustainability 2020, 12, 4245.

- Wooten, J.O.; Ulrich, K.T. Idea Generation and the Role of Feedback: Evidence from Field Experiments with Innovation Tournaments. Prod. Oper. Manag. 2016, 26, 80–99.

- Cui, T.; Ye, H. (Jonathan); Teo, H.H.; Li, J. Information technology and open innovation: A strategic alignment perspective. Inf. Manag. 2015, 52, 348–358.

- Howe, J. The Rise of Crowdsourcing. Wired 2006, 14, 1–4.

- Ahn, Y.; Lee, J. The Effect of Participation Effort on CSR Participation Intention: The Moderating Role of Construal Level on Consumer Perception of Warm Glow and Perceived Costs. Sustainability 2019, 12, 83.

- Wang, M.-M.; Wang, J.-J. Understanding Solvers’ Continuance Intention in Crowdsourcing Contest Platform: An Extension of Expectation-Confirmation Model. J. Theor. Appl. Electron. Commer. Res. 2019, 14, 17–33.

- Liu, Y.; Liu, Y. The Effect of Workers’ Justice Perception on Continuance Participation Intention in the Crowdsourcing Market. Internet Res. 2019, 29, 1485–1508.

- Spence, M. Signaling in Retrospect and the Informational Structure of Markets. Am. Econ. Rev. 2002, 92, 434–459.

- Zhou, T. Understanding online community user participation: A social influence perspective. Internet Res. 2011, 21, 67–81.

- Lu, Y.; Zhao, L.; Wang, B. From virtual community members to C2C e-commerce buyers: Trust in virtual communities and its effect on consumers’ purchase intention. Electron. Commer. Res. Appl. 2010, 9, 346–360.

- Wu, X.; Shen, J. A Study on Airbnb’s Trust Mechanism and the Effects of Cultural Values–Based on a Survey of Chinese Consumers. Sustainability 2018, 10, 3041.

- García Martinez, M.; Walton, B. The wisdom of crowds: The potential of online communities as a tool for data analysis. Technovation 2014, 34, 203–214.

- Taeihagh, A. Crowdsourcing, Sharing Economies and Development. J. Dev. Soc. 2017, 33, 191–222.

- Luz, N.; Silva, N.; Novais, P. A survey of task-oriented crowdsourcing. Artif. Intell. Rev. 2015, 44, 187–213.

- Glaeser, E.L.; Hillis, A.; Kominers, S.D.; Luca, M. Crowdsourcing City Government: Using Tournaments to Improve Inspection Accuracy. Am. Econ. Rev. 2016, 106, 114–118.

- Mergel, I. Open collaboration in the public sector: The case of social coding on GitHub. Gov. Inf. Q. 2015, 32, 464–472.

- Ye, H. (Jonathan); Kankanhalli, A. Solvers’ participation in crowdsourcing platforms: Examining the impacts of trust, and benefit and cost factors. J. Strat. Inf. Syst. 2017, 26, 101–117.

- Stiglitz, J.E. Information and the Change in the Paradigm in Economics. Am. Econ. Rev. 2002, 92, 460–501.

- Akerlof, G.A. The Market for “Lemons”: Quality Uncertainty and the Market Mechanism. Q. J. Econ. 1970, 84, 488–500.

- Stiglitz, J.E. The Contributions of the Economics of Information to Twentieth Century Economics. Q. J. Econ. 2000, 115, 1441–1478.

- Elitzur, R.; Gavious, A. Contracting, signaling, and moral hazard: A model of entrepreneurs, ‘angels,’ and venture capitalists. J. Bus. Ventur. 2003, 18, 709–725.

- Spence, M. Job Market Signaling. Q. J. Econ. 1973, 87, 355–374.

- Connelly, B.L.; Certo, S.T.; Ireland, R.D.; Reutzel, C.R. Signaling Theory: A Review and Assessment. J. Manag. 2010, 37, 39–67.

- Standifird, S.S.; Weinstein, M.; Meyer, A.D. Establishing Reputation on the Warsaw Stock Exchange: International Brokers as Legitimating Agents. Acad. Manag. Proc. 1999, 1999, K1–K6.

- Chakraborty, S.; Swinney, R. Signaling to the Crowd: Private Quality Information and Rewards-Based Crowdfunding. Manuf. Serv. Oper. Manag. 2020, 23, 155–169.

- Gefen, D.; Benbasat, I.; Pavlou, P. A Research Agenda for Trust in Online Environments. J. Manag. Inf. Syst. 2008, 24, 275–286.

- Teubner, T.; Hawlitschek, F.; Dann, D. Price Determinants on Airbnb: How Reputation Pays Off in the Sharing Economy. J. Self-Governance Manag. Econom. 2017, 5, 53–80.

- Zmud, R.W.; Shaft, T.; Zheng, W.; Croes, H. Systematic Differences in Firm’s Information Technology Signaling: Implications for Research Design. J. Assoc. Inf. Syst. 2010, 11, 149–181.

- Benlian, A.; Hess, T. The Signaling Role of IT Features in Influencing Trust and Participation in Online Communities. Int. J. Electron. Commer. 2011, 15, 7–56.

- Ba, S. Establishing online trust through a community responsibility system. Decis. Support Syst. 2001, 31, 323–336.

- Leimeister, J.M.; Ebner, W.; Krcmar, H. Design, Implementation, and Evaluation of Trust-Supporting Components in Virtual Communities for Patients. J. Manag. Inf. Syst. 2005, 21, 101–131.

- Khan, M.L. Social media engagement: What motivates user participation and consumption on YouTube? Comput. Hum. Behav. 2017, 66, 236–247.

- Yao, Z.; Xu, X.; Shen, Y. The Empirical Research About the Impact of Seller Reputation on C2C Online Trading: The Case of Taobao. WHICEB Proc. 2014, 60, 427–435.

- Malaquias, R.F.; Hwang, Y. An empirical study on trust in mobile banking: A developing country perspective. Comput. Hum. Behav. 2016, 54, 453–461.

- Horng, S.-M. A Study of Active and Passive User Participation in Virtual Communities. J. Electron. Comm. Res. 2016, 17, 289–311.

- Yang, D.; Xue, G.; Fang, X.; Tang, J. Incentive Mechanisms for Crowdsensing: Crowdsourcing With Smartphones. IEEE/ACM Trans. Netw. 2015, 24, 1732–1744.

- Xu, Q.; Liu, Z.; Shen, B. The Impact of Price Comparison Service on Pricing Strategy in a Dual-Channel Supply Chain. Math. Probl. Eng. 2013, 2013, 1–13.

- Lee, H.G.; Lee, S.C.; Kim, H.Y.; Lee, R.H. Is the Internet Making Retail Transactions More Efficient?: Comparison of Online and Offline CD Retail Markets. Electron. Commer. Res. Appl. 2003, 2, 266–277.

- DeLone, W.H.; McLean, E.R. Measuring e-Commerce Success: Applying the DeLone & McLean Information Systems Success Model. Int. J. Electron. Commer. 2004, 9, 31–47.

- Pesämaa, O.; Zwikael, O.; Hair, J.F.; Huemann, M. Publishing quantitative papers with rigor and transparency. Int. J. Proj. Manag. 2021, 39, 217–222.

- Hong, Z.; Zhu, H.; Dong, K. Buyer-Side Institution-Based Trust-Building Mechanisms: A 3S Framework with Evidence from Online Labor Markets. Int. J. Electron. Commer. 2020, 24, 14–52.

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2016.

- Mavlanova, T.; Benbunan-Fich, R.; Koufaris, M. Signaling theory and information asymmetry in online commerce. Inf. Manag. 2012, 49, 240–247.

- Liang, Y.; Ow, T.T.; Wang, X. How do group performances affect users’ contributions in online communities? A cross-level moderation model. J. Organ. Comput. Electron. Commer. 2020, 30, 129–149.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).