Development of Platform Independent Mobile Learning Tool in Saudi Universities

Abstract

1. Introduction

1.1. Research Motivation

1.2. Problem Formulation

1.3. Research Questions

- Developing a platform-independent m-learning tool using structured programming as a case study;

- Analysing students’ academic performance at KAU when using the mobile learning tool (MLT) in a structured programming course;

- Evaluating the effectiveness and usability of the m-learning tool using various research instruments.

2. Related Works

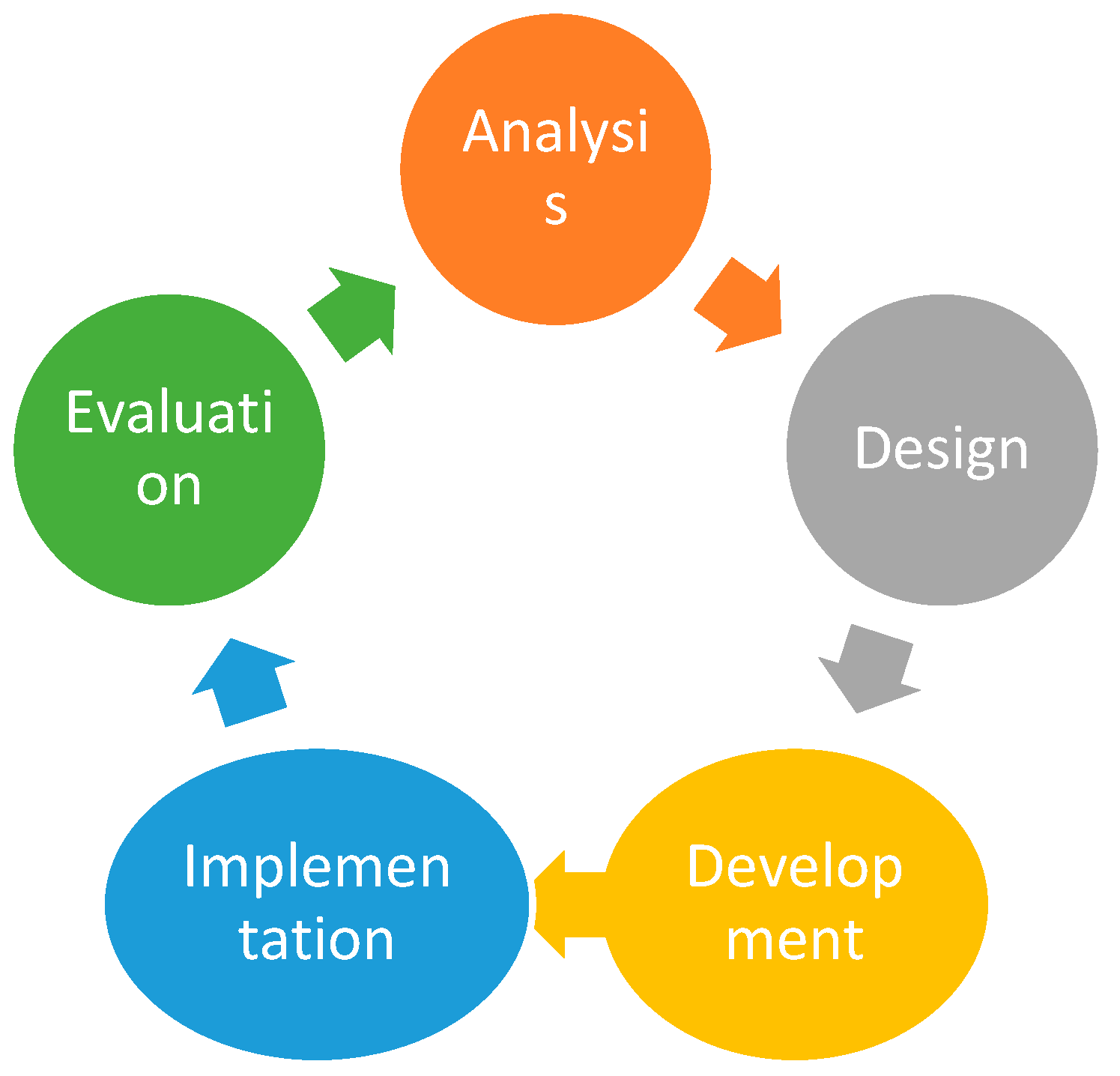

3. Proposed Architecture

3.1. Analysis Phase

3.2. Design Phase

3.3. Development Phase

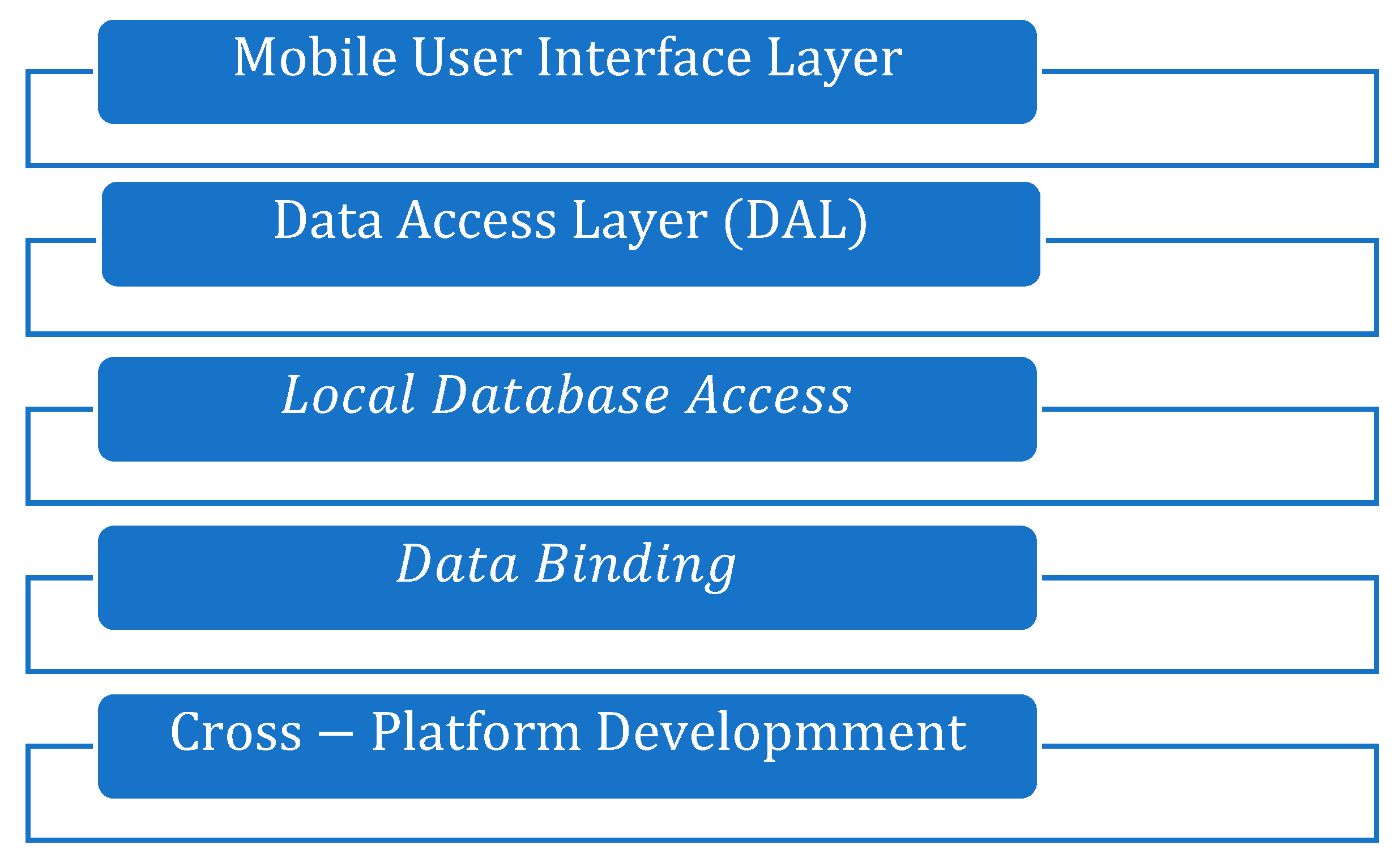

3.3.1. Layered Architecture of Xamarin Framework


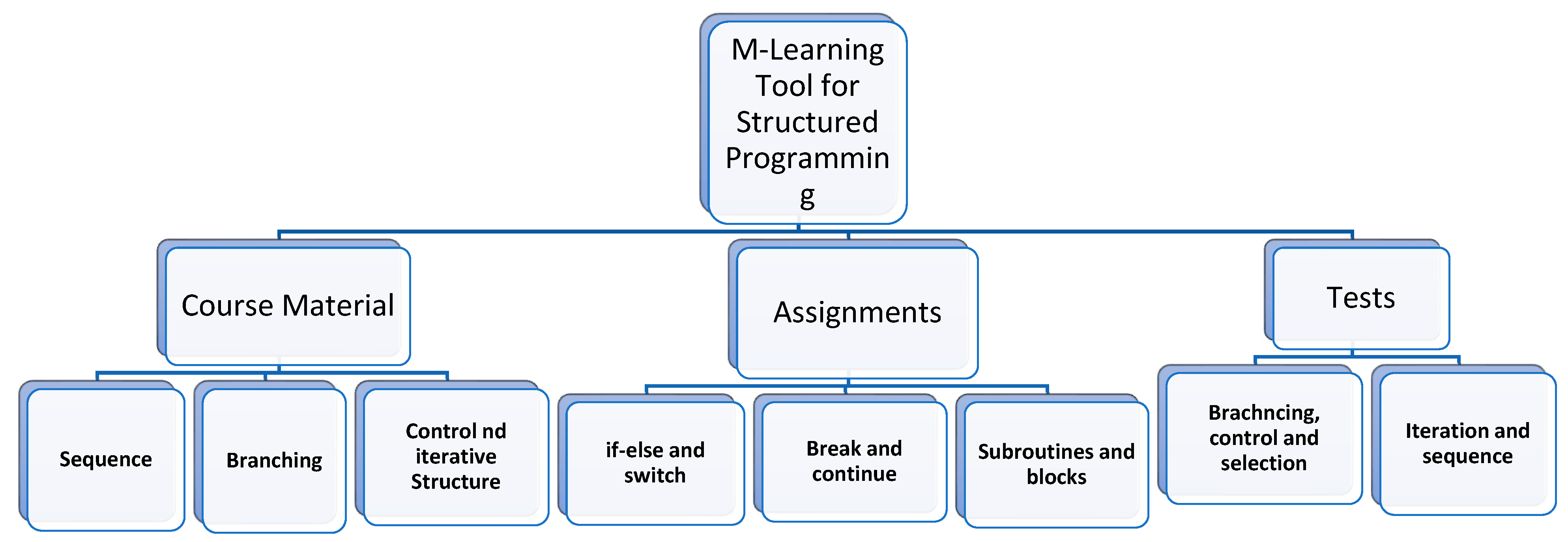

3.3.2. Content Selection

3.3.3. Output Screen

Algorithm 1. Calculating and Displaying the Outcome

Input: the answer chosen for a specific question.

Output: the response key and the outcome (total questions, correct responses, obtained ranking, and accuracy).
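Algorithm 1 is specified only by its inputs and outputs, so the following is a minimal sketch of one way to compute them, not the authors' implementation. It assumes the answer key and the student's chosen answers are stored as mappings from question number to option letter, that unanswered questions count as incorrect, and that accuracy is the percentage of correct responses. The ranking bands in `RANKING_BANDS`, like all names here, are hypothetical, because the section does not say how the "obtained ranking" is derived.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    total_questions: int
    correct_responses: int
    accuracy: float  # percentage of correct responses (0-100)
    ranking: str     # hypothetical performance band


# Hypothetical ranking bands as (minimum accuracy %, label), checked top-down.
# The paper's actual ranking rule is not given in this section.
RANKING_BANDS = [
    (90.0, "Excellent"),
    (75.0, "Good"),
    (50.0, "Average"),
    (0.0, "Needs improvement"),
]


def calculate_outcome(
    answer_key: dict[int, str], chosen: dict[int, str]
) -> tuple[dict[int, str], Outcome]:
    """Score the chosen answers against the key and build the outcome summary.

    Questions missing from `chosen` are treated as unanswered (incorrect).
    """
    total = len(answer_key)
    correct = sum(1 for qid, key in answer_key.items() if chosen.get(qid) == key)
    accuracy = 100.0 * correct / total if total else 0.0
    # First band whose threshold the accuracy reaches; the 0.0 band always matches.
    ranking = next(label for threshold, label in RANKING_BANDS if accuracy >= threshold)
    # Return a copy of the key so the output screen can show the correct answers.
    return dict(answer_key), Outcome(total, correct, accuracy, ranking)
```

For example, with the key `{1: "B", 2: "A", 3: "D", 4: "C"}` and the answers `{1: "B", 2: "C", 3: "D"}`, two of four questions are correct, giving 50.0% accuracy and the hypothetical "Average" band.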

3.4. Implementation Stage

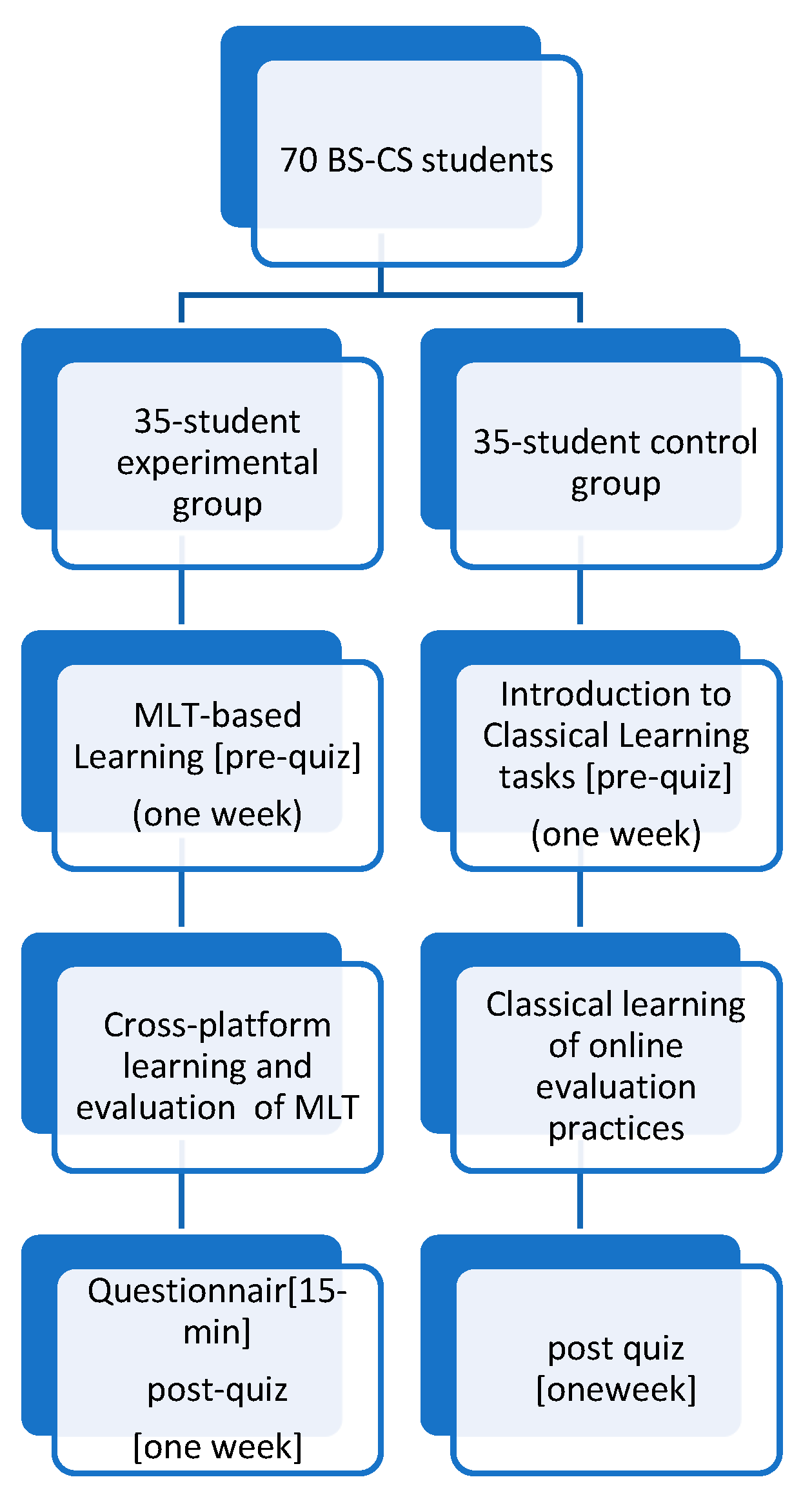

3.4.1. Participants

3.4.2. Research Instruments

4. Results and Discussion

4.1. Addressing RQ1

4.1.1. Setup

Listing: Implementation on the Android platform.

4.1.2. Performance Evaluation

4.2. Addressing RQ2

4.3. Addressing RQ3

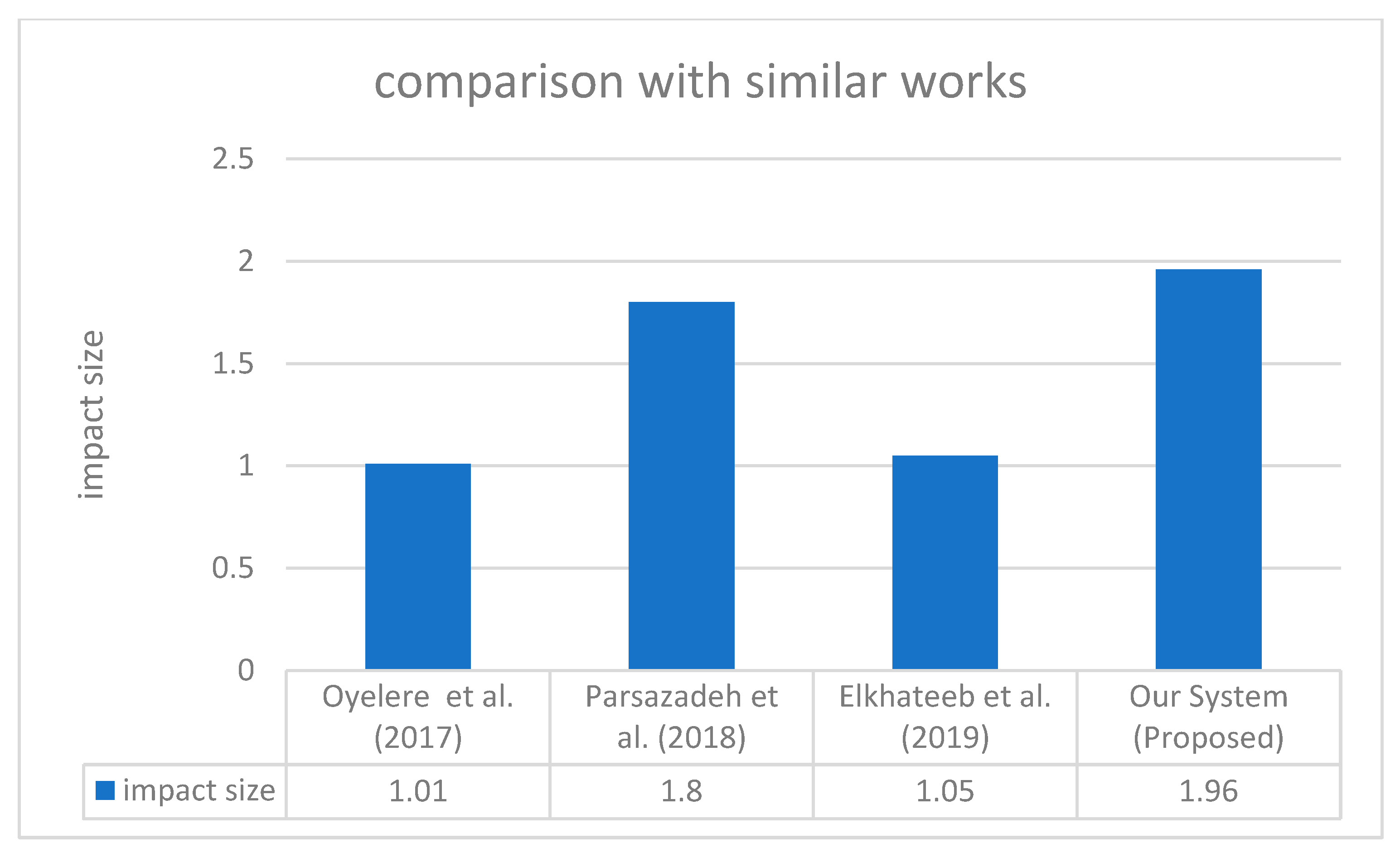

4.4. Comparison to Similar Works

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Multiple-Choice Questions

1. In Java, what is the default return type of a method?

2. Which of the following are Java compound-assignment arithmetic operators?

3. In Java, an ELSE clause must be preceded by a …….. statement.

4. In the Java language, what is an alternative to SWITCH?

5. Select a valid Java loop name from the list below.

Appendix B. Open-Ended Questions

Pre-test Questions

1. What is conditional transfer of control? Provide examples.

2. What are the advantages of functions?

Post-test Questions

3. Which iterative constructs are supported in structured programming? Provide examples.

4. What are pointers? Explain.

References

1. Education (n.d.). Available online: https://www.saudiembassy.net/education (accessed on 6 April 2021).

2. Zydney, J.M.; Warner, Z. Mobile Apps for Science Learning: Review of Research. Comput. Educ. 2016, 94, 1–17.

3. Elkhateeb, M.; Shehab, A.; El-bakry, H. Mobile Learning System for Egyptian Higher Education Using Agile-Based Approach. Educ. Res. Int. 2019, 2019, 7531980.

4. Zubair, M.; Sana, I.; Nasir, K.; Iqbal, H.; Masud, F.; Ismail, S. Quizzes: Quiz Application Development Using Android-Based MIT APP Inventor Platform. Int. J. Adv. Comput. Sci. Appl. 2016, 7.

5. Yassine, A.; Berrada, M.; Tahiri, A.; Chenouni, D. A Cross-Platform Mobile Application for Learning Programming Basics. Int. J. Interact. Mob. Technol. 2018, 12, 139.

6. Martinez, M.; Lecomte, S. Towards the Quality Improvement of Cross-Platform Mobile Applications. In Proceedings of the 2017 IEEE/ACM 4th International Conference on Mobile Software Engineering and Systems (MOBILESoft), Buenos Aires, Argentina, 22–23 May 2017.

7. Huber, S.; Demetz, L.; Felderer, M. Analysing the Performance of Mobile Cross-Platform Development Approaches Using UI Interaction Scenarios. In Communications in Computer and Information Science; Springer International Publishing: Cham, Switzerland, 2020; pp. 40–57.

8. El-Kassas, W.S.; Abdullah, B.A.; Yousef, A.H.; Wahba, A.M. Taxonomy of Cross-Platform Mobile Applications Development Approaches. Ain Shams Eng. J. 2017, 8, 163–190.

9. Parsazadeh, N.; Ali, R.; Rezaei, M. A Framework for Cooperative and Interactive Mobile Learning to Improve Online Information Evaluation Skills. Comput. Educ. 2018, 120, 75–89.

10. Viberg, O.; Andersson, A.; Wiklund, M. Designing for Sustainable Mobile Learning: Re-Evaluating the Concepts “Formal” and “Informal”. Interact. Learn. Environ. 2021, 29, 130–141.

11. Al-Emran, M.; Mezhuyev, V.; Kamaludin, A. Towards a Conceptual Model for Examining the Impact of Knowledge Management Factors on Mobile Learning Acceptance. Technol. Soc. 2020, 61, 101247.

12. Nikolopoulou, K.; Gialamas, V.; Lavidas, K.; Komis, V. Teachers’ Readiness to Adopt Mobile Learning in Classrooms: A Study in Greece. Technol. Knowl. Learn. 2021, 26, 53–77.

13. Pedro, L.F.M.G.; de Barbosa, C.M.M.O.; das Santos, C.M.N. A Critical Review of Mobile Learning Integration in Formal Educational Contexts. Int. J. Educ. Technol. High. Educ. 2018, 15.

14. Kumar Basak, S.; Wotto, M.; Bélanger, P. E-Learning, M-Learning and D-Learning: Conceptual Definition and Comparative Analysis. E-Learn. Digit. Media 2018, 15, 191–216.

15. Connolly, C.; Hijón-Neira, R.; Grádaigh, S.Ó. Mobile Learning to Support Computational Thinking in Initial Teacher Education: A Case Study. Int. J. Mob. Blended Learn. 2021, 13, 49–62.

16. Oyelere, S.S.; Suhonen, J. Design and Implementation of MobileEdu M-Learning Application for Computing Education in Nigeria: A Design Research Approach. In Proceedings of the 2016 International Conference on Learning and Teaching in Computing and Engineering (LaTICE), Mumbai, India, 31 March–3 April 2016.

17. Rodríguez, C.D.; Cumming, T.M. Employing Mobile Technology to Improve Language Skills of Young Students with Language-Based Disabilities. Assist. Technol. 2017, 29, 161–169.

18. Viberg, O.; Grönlund, Å. Understanding Students’ Learning Practices: Challenges for Design and Integration of Mobile Technology into Distance Education. Learn. Media Technol. 2017, 42, 357–377.

19. Lehner, F.; Nosekabel, H. The Role of Mobile Devices in E-Learning: First Experiences with a Wireless E-Learning Environment. In Proceedings of the IEEE International Workshop on Wireless and Mobile Technologies in Education, Vaxjo, Sweden, 30 August 2002.

20. Sharples, M.; Corlett, D.; Westmancott, O. The Design and Implementation of a Mobile Learning Resource. Pers. Ubiquitous Comput. 2002, 6, 220–234.

21. Chan, Y.-Y.; Leung, C.-H.; Wu, A.K.W.; Chan, S.-C. MobiLP: A Mobile Learning Platform for Enhancing Lifewide Learning. In Proceedings of the 3rd IEEE International Conference on Advanced Technologies, Athens, Greece, 9–11 July 2003.

22. Singh, D.; Zaitun, A.B. Mobile Learning in Wireless Classrooms. Malays. Online J. Instr. Technol. 2006, 3, 26–42.

23. Hudaya, A.; Mahmud, A.; Izuddin, A.; Rahman, M.A. M-Learning Management Tool Development in Campus-Wide Environment. In Proceedings of the 2006 InSITE Conference; Informing Science Institute: Manchester, UK, 2006.

24. Motiwalla, L.F. Mobile Learning: A Framework and Evaluation. Comput. Educ. 2007, 3, 581–596.

25. Evgeniya, G.; Angel, S.; Tsvetozar, G. A General Classification of Mobile Learning Systems. In Proceedings of the International Conference on Computer Systems and Technologies, Valencia, Spain, 16–17 June 2005.

26. Niazi, R.; Mahmoud, Q.H. A Web-Based Tool for Generating Quizzes for Mobile Devices. Poster presented at the 39th ACM Technical Symposium on Computer Science Education (SIGCSE), Portland, OR, USA, 12–15 March 2008.

27. Tan, T.-H.; Liu, T.-Y. The MObile-Based Interactive Learning Environment (MOBILE) and a Case Study for Assisting Elementary School English Learning. In Proceedings of the IEEE International Conference on Advanced Learning Technologies, Joensuu, Finland, 30 August–1 September 2004.

28. Statti, A.; Villegas, S. The Use of Mobile Learning in Grades K–12: A Literature Review of Current Trends and Practices. Peabody J. Educ. 2020, 95, 139–147.

29. Seels, B.B.; Richey, R.C. Instructional Technology: The Definition and Domains of the Field, 1994 ed.; Information Age Publishing: Charlotte, NC, USA, 2012.

30. Heinich, R.; Molenda, M.; Russell, J.D. Instructional Media and the New Technologies of Instruction: Tchrs’; John Wiley & Sons: Nashville, TN, USA, 1982.

31. ELM Learning. Iterative Design Models: ADDIE vs. SAM. Elmlearning.com, 2020. Available online: https://elmlearning.com/iterative-design-models-addie-vs-sam/ (accessed on 17 May 2021).

32. Wang, S.-K.; Hsu, H.-Y. Using ADDIE Model to Design Second Life Activities for Online Learners. TechTrends 2009, 53.

33. Martinez, M. Two Datasets of Questions and Answers for Studying the Development of Cross-Platform Mobile Applications Using Xamarin Framework. In Proceedings of the 2019 IEEE/ACM 6th International Conference on Mobile Software Engineering and Systems (MOBILESoft), Montreal, QC, Canada, 25 May 2019.

34. Oakleaf, M. Staying on Track with Rubric Assessment: Five Institutions Investigate Information Literacy Learning. Available online: https://www.aacu.org/publications-research/periodicals/staying-track-rubric-assessment (accessed on 18 May 2021).

35. So, S. Mobile Instant Messaging Support for Teaching and Learning in Higher Education. Internet High. Educ. 2016, 31, 32–42.

36. Lai, P.C. The Literature Review of Technology Adoption Models and Theories for the Novelty Technology. J. Inf. Syst. Technol. Manag. 2017, 14.

37. Alshabeb, A.M.; Alharbi, O.; Almaqrn, R.K.; Albazie, H.A. Studies Employing the Unified Theory of Acceptance and Use of Technology (UTAUT) as a Guideline for the Research: Literature Review of the Saudi Context. Adv. Soc. Sci. Res. J. 2020, 7, 18–23.

38. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319.

39. Hermes, D. Xamarin Mobile Application Development: Cross-Platform C# and Xamarin.Forms Fundamentals, 1st ed.; Apress: New York, NY, USA, 2015.

40. Why Is 1 GB of RAM Enough for an iPhone or Windows Phone but Not an Android? Quora. Available online: https://www.quora.com/Why-is-1-GB-of-RAM-enough-for-an-iPhone-or-Windows-phone-but-not-an-Android (accessed on 17 May 2021).

41. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Elsevier Science: Amsterdam, The Netherlands, 2013.

| Research Question | Motivation |
|---|---|
| RQ1: How can a platform-independent m-learning tool be designed using structured programming as a case study? | To develop the proposed cross-platform m-learning application following the analysis, design, development, implementation, and evaluation (ADDIE) model, and to use it to help students learn structured programming. |
| RQ2: Does using a mobile learning tool (MLT) in structured programming courses increase student academic performance at KAU? | To assess students’ learning progress with pre- and post-class information tests, comparing post-test outcomes between the control and experimental classes with the pre-test results as the covariate. |
| RQ3: To what degree do users consider the m-learning tool beneficial and an effective learning tool? | To determine, from questionnaire responses, the degree to which the developed m-learning application helps students improve their online information testing abilities. |
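RQ2 compares post-test scores across groups with the pre-test score as the covariate, which is a one-way ANCOVA. A minimal sketch of how covariate-adjusted group means (the "Adj. Mean" column of the pre-/post-test results) can be computed from raw paired scores is below. The function name and the input layout are illustrative assumptions; the section does not describe the authors' statistical software or procedure. The adjustment uses the pooled within-group slope $b_w$ of post-test on pre-test: $\bar{Y}_{j,\text{adj}} = \bar{Y}_j - b_w(\bar{X}_j - \bar{X})$.

```python
from statistics import mean


def ancova_adjusted_means(
    groups: dict[str, tuple[list[float], list[float]]]
) -> dict[str, float]:
    """Covariate-adjusted group means for a one-way ANCOVA.

    `groups` maps a group name to (pre_scores, post_scores), paired by student.
    Adjusted mean = post mean - b_w * (group pre mean - grand pre mean),
    where b_w is the pooled within-group regression slope of post on pre.
    """
    sxy = 0.0  # pooled within-group cross-products of pre and post deviations
    sxx = 0.0  # pooled within-group sum of squared pre-test deviations
    all_pre: list[float] = []
    for pre, post in groups.values():
        if len(pre) != len(post):
            raise ValueError("pre and post scores must be paired per student")
        mx, my = mean(pre), mean(post)
        sxy += sum((x - mx) * (y - my) for x, y in zip(pre, post))
        sxx += sum((x - mx) ** 2 for x in pre)
        all_pre.extend(pre)
    if sxx == 0:
        raise ValueError("covariate has no within-group variance")
    b_w = sxy / sxx
    grand_pre = mean(all_pre)  # covariate mean over all students
    return {
        name: mean(post) - b_w * (mean(pre) - grand_pre)
        for name, (pre, post) in groups.items()
    }
```

With made-up scores, group A (pre 10, 12, 14; post 20, 24, 28) and group B (pre 6, 8, 10; post 14, 18, 22), the pooled slope is 2 and the adjusted means are 20 for A and 22 for B: B's lower raw post-test mean (18 vs. 24) is offset by its lower starting point on the covariate.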

| Week | Course Content |
|---|---|
| Week 1 | Fundamentals of structured programming |
| Week 2 | Control structures |
| Week 3 | Basics of functions |
| Week 4 | Parameters and overloading |
| Week 5 | Single and multidimensional arrays |
| Week 6 | Structures and classes |
| Week 7 | Pointers |
| Week 8 | Dynamic arrays |
| Week 9 | Recursion |
| Week 10 | File handling |

| Device | CPU Usage (s) | Avg. Memory Usage (MB) |
|---|---|---|
| Android | 33.2 | 450 |
| Windows Phone | 3.8 | 530 |
| iOS | 2.9 | 244 |

| Learner Group | n | Pre-Test Mean | Pre-Test SD | Pre-Test p | Post-Test Mean | Post-Test SD | Post-Test p | Adj. Mean | Std. Error | F | d |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Experimental | 31 | 17.25 | 1.4142 | 0.261 | 29.04 | 5.725 | 0.251 | 29.04 | 1.15 | 6.09 | 1.96 |
| Control | 30 | 17.19 | 1.3102 | 0.242 | 25.36 | 5.185 | 0.818 | 25.37 | 1.15 | | |
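The d column reports an effect size, for which Cohen's *Statistical Power Analysis for the Behavioral Sciences* (in the reference list) is the standard source. A minimal sketch of the common pooled-standard-deviation form of Cohen's d for two independent groups follows. The section does not state which variant (for example, raw or covariate-adjusted means, or which standard deviation) produced the reported value, so this function is illustrative and should not be read as a reproduction of it.

```python
from math import sqrt


def cohens_d(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> float:
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / sqrt(pooled_var)
```

For instance, two groups of 10 with means 10 and 8 and a common SD of 2 give d = 1.0, i.e., the means differ by one pooled standard deviation.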

| Questions | Student Group | N | Mean | SD | t |
|---|---|---|---|---|---|
| Questions 1–12 | Experimental | 71 | 5.12 | 0.85 | −15.45 |
| | Control | 71 | 3.01 | 0.76 | |
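The t column compares group means using only summary statistics (N, mean, SD). A minimal sketch of Student's independent-samples t statistic computed from such summaries is below; the section does not state which test the authors ran, so this is an assumption. With equal group sizes (N = 71 per group here), Student's pooled t and Welch's t yield the same statistic. The sign only reflects subtraction order: since the experimental mean is higher, the negative values in the table are consistent with subtracting the experimental mean from the control mean.

```python
from math import sqrt


def pooled_t(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> float:
    """Student's independent-samples t statistic from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    # Standard error of the difference between the two means.
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se
```

Swapping the two groups flips the sign of t but not its magnitude, so the order of arguments should match the order used when reporting.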

| Questions (by dimension) | Experimental Mean | Experimental SD | Control Mean | Control SD | t |
|---|---|---|---|---|---|
| Effectiveness | 3.46 | 1.24 | 2.01 | 1.03 | −6.47 |
| | 4.02 | 0.77 | 2.24 | 1.21 | −9.85 |
| Satisfaction | 3.47 | 1.41 | 2.33 | 1.05 | −6.73 |
| | 5.11 | 0.77 | 2.62 | 1.12 | −10.66 |
| | 4.04 | 1.32 | 2.08 | 1.03 | −7.21 |
| | 5.42 | 0.91 | 2.42 | 1.23 | −11.61 |
| Learnability | 4.43 | 0.61 | 3.44 | 1.20 | −4.23 |
| | 5.10 | 0.72 | 2.41 | 1.09 | −8.41 |
| | 4.08 | 1.01 | 3.11 | 1.27 | −9.05 |
| Performance | 3.07 | 1.33 | 2.50 | 1.07 | −5.71 |
| | 5.26 | 1.32 | 3.84 | 1.46 | −6.33 |
| Timeliness | 5.01 | 1.54 | 2.63 | 1.50 | −7.09 |
| Recognizability | 3.09 | 1.44 | 2.60 | 1.08 | −5.81 |
| | 3.16 | 0.89 | 2.01 | 1.67 | −9.24 |
| Perceived Stress | 5.12 | 1.44 | 2.54 | 1.42 | −6.01 |
| Malfunction | 4.02 | 1.32 | 2.21 | 1.40 | −6.68 |
| | 3.06 | 0.78 | 1.98 | 1.47 | −8.11 |

| Authors | Year/Publication | Architecture | Platform | Results |
|---|---|---|---|---|
| Oyelere et al. [16] | 2017 | ANOVA, t-test, DSR, MobileEdu model | Android | Mean = 4.57, SD = 1.56, t-value = −7.87, effect size = 1.01 |
| Parsazadeh et al. [9] | 2018 | ADDIE and cooperative and interactive mobile learning application (CIMLA) | Android | Effect size = 1.91 |
| Elkhateeb et al. [3] | 2019 | Agile-based approach | iOS | Mean = 43.5600, SD = 3.08492, effect size = 1.05 |
| Our system (proposed) | 2021 | ADDIE model implemented in the Xamarin framework | Platform-independent (iOS, Android, and Windows Phone) | Effect size = 1.96 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alghazzawi, D.M.; Hasan, S.H.; Aldabbagh, G.; Alhaddad, M.; Malibari, A.; Asghar, M.Z.; Aljuaid, H. Development of Platform Independent Mobile Learning Tool in Saudi Universities. Sustainability 2021, 13, 5691. https://doi.org/10.3390/su13105691
