Using Virtual Reality to Assess Landscape: A Comparative Study Between On-Site Survey and Virtual Reality of Aesthetic Preference and Landscape Cognition

Abstract

1. Introduction

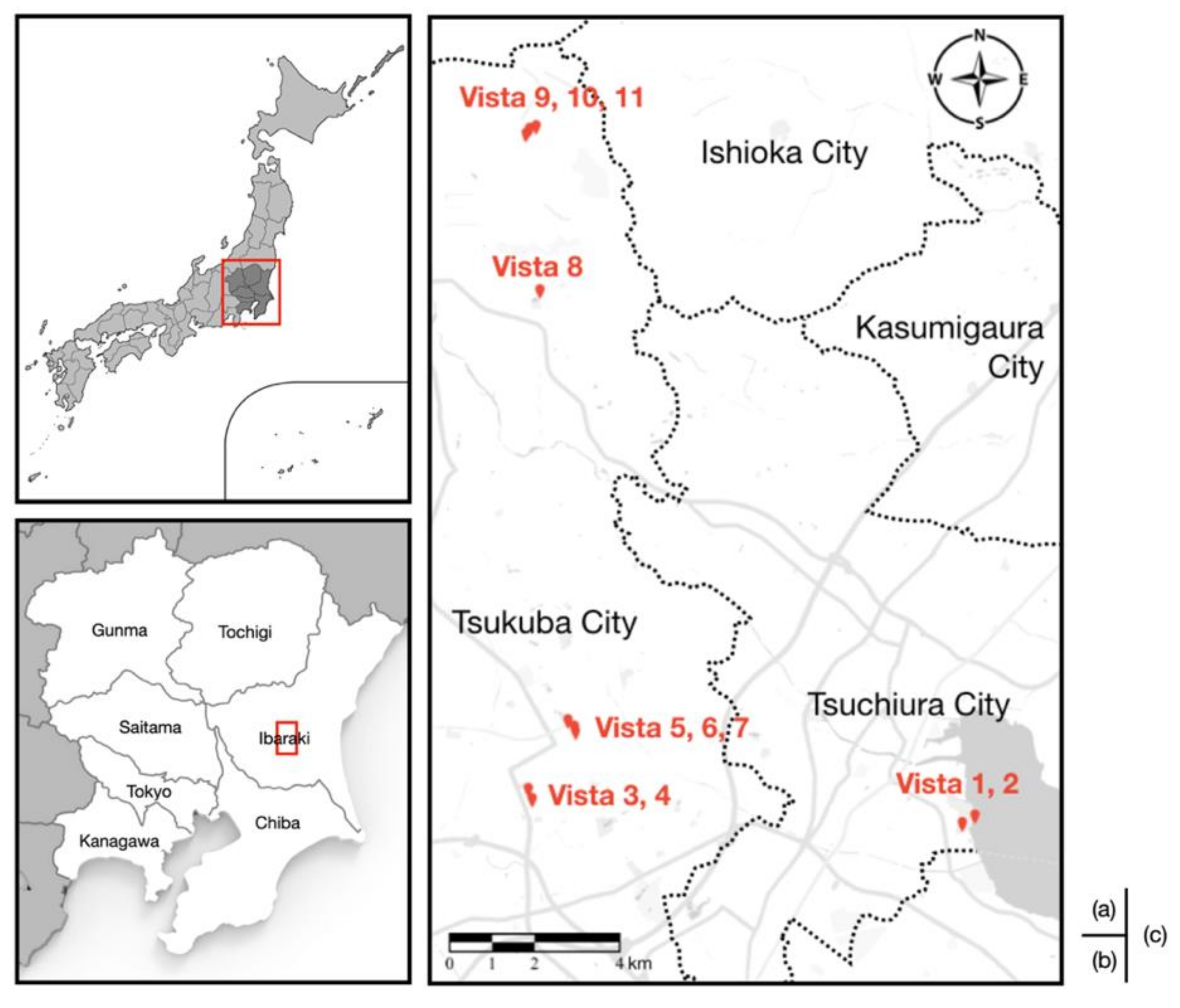

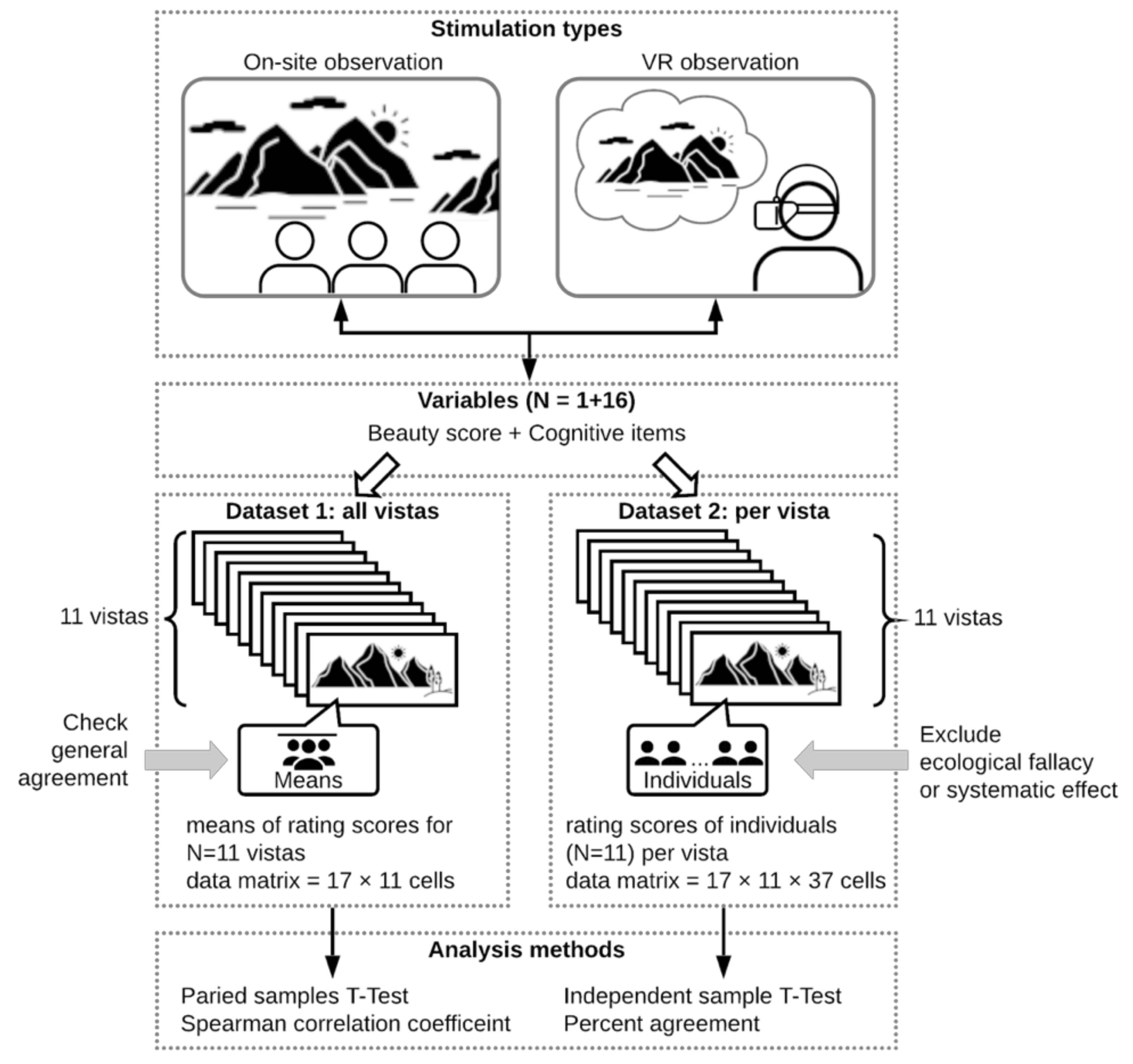

2. Materials and Methods

2.1. Questionnaire

2.2. On-Site Survey Data Collection

2.3. VR Data Collection

2.4. Respondents

2.5. Statistical Analysis

3. Results

3.1. Reliability Analysis and Descriptive Statistics

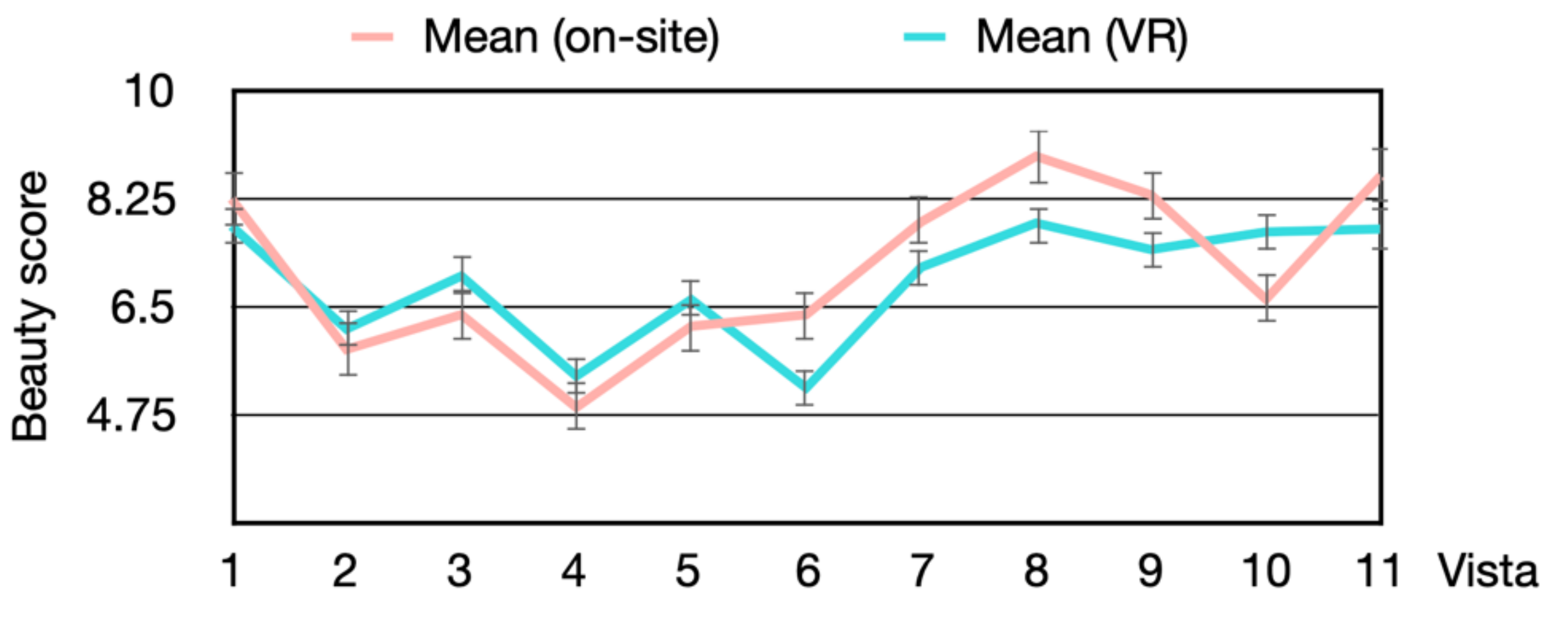

3.2. Aesthetic Preference

3.2.1. Agreement of Mean “Beauty” Scores for All Vistas

3.2.2. Agreement of “Beauty” Scores of Individuals per Vista

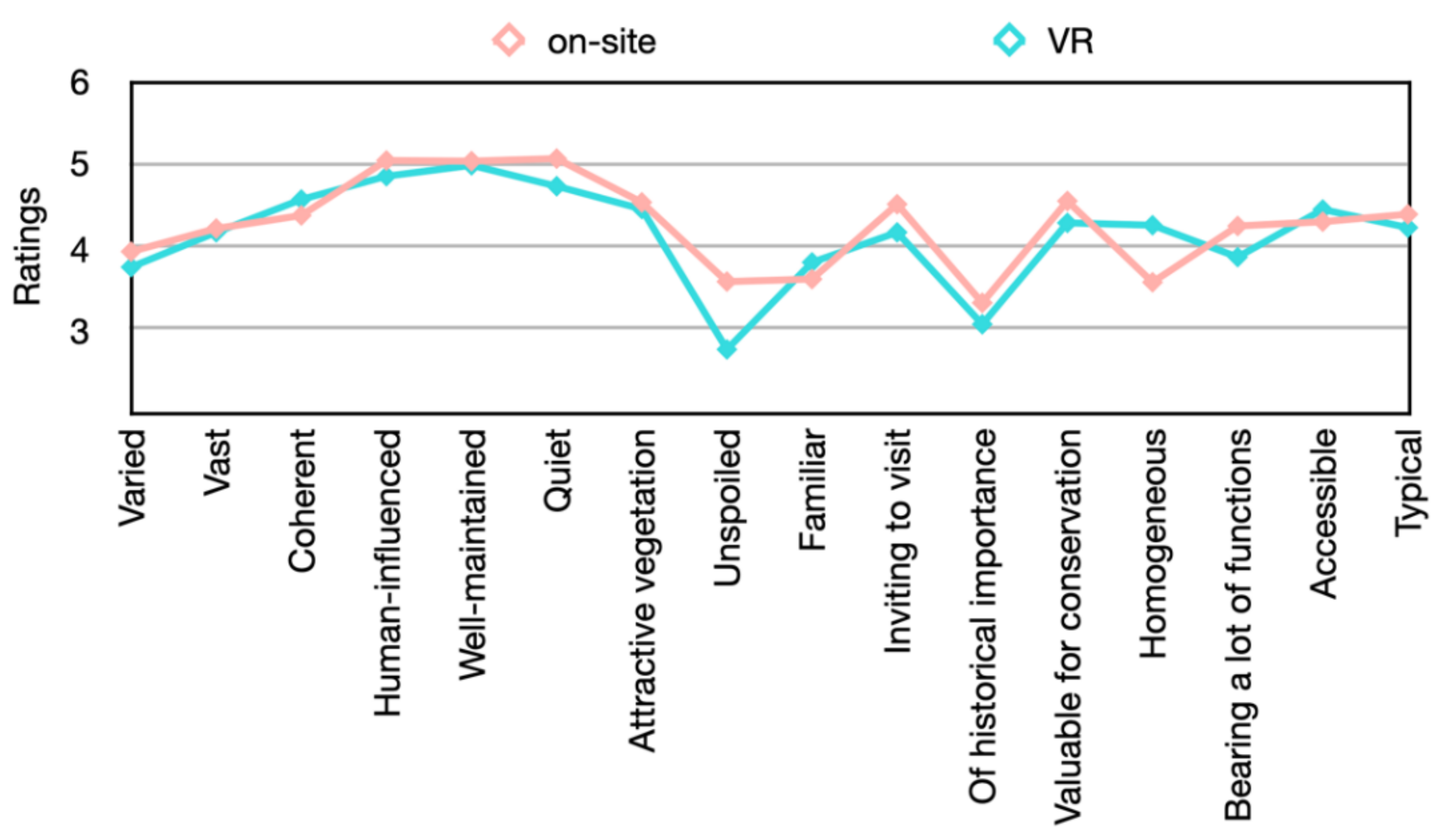

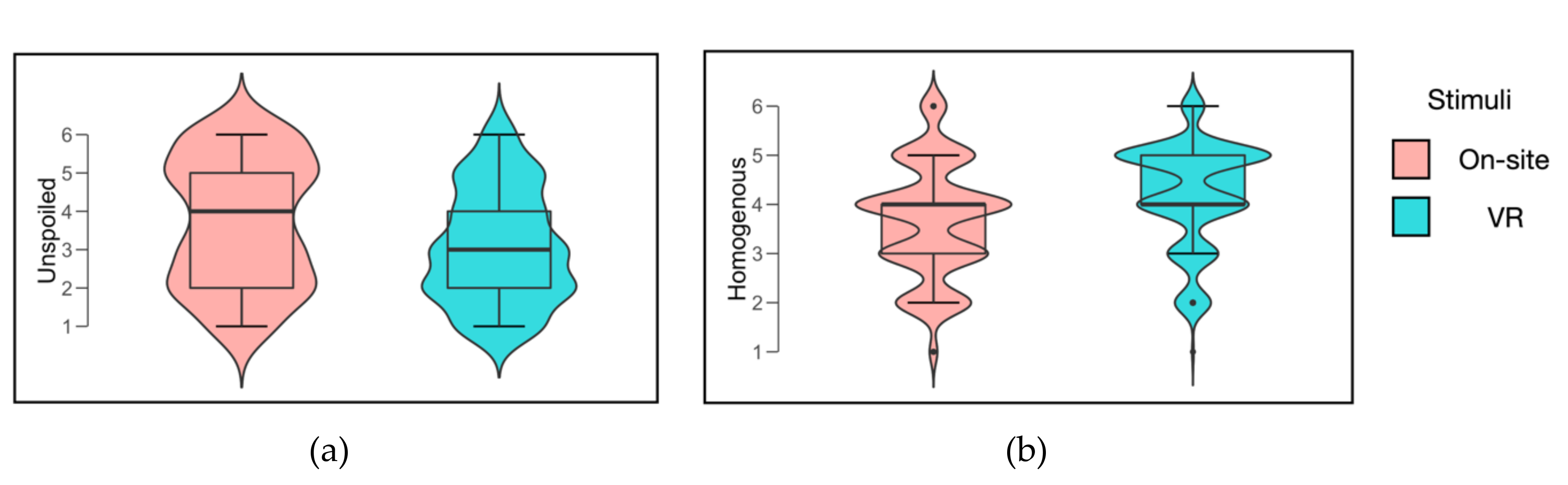

3.3. Cognitive Attributes

3.3.1. Agreement of Mean Cognitive Ratings for All Vistas

3.3.2. Agreement of Cognitive Ratings of Individuals per Vista

4. Discussion

4.1. Overview of Findings

4.2. Ecological Fallacy and Systematic Effect

4.3. Comparing with the Validity of Using Photographs

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


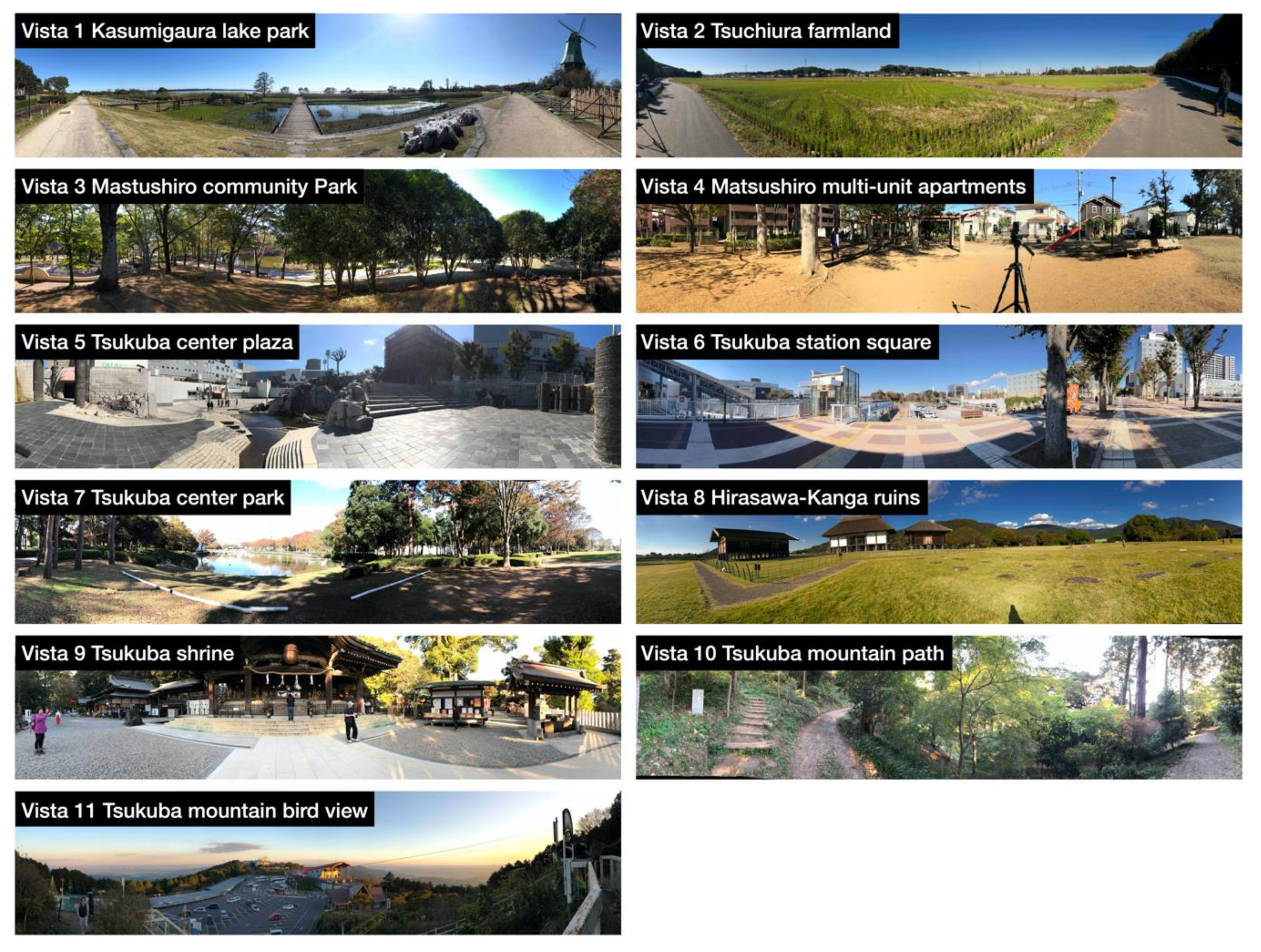

| No. | Description | Landcover | Hydrology | Cultural Features |
|---|---|---|---|---|
| Vista 1 | Kasumigaura lake park | Park and waters | Lake | Recreation structures |
| Vista 2 | Tsuchiura farmland | Farmland and bushland | Paddy | Farming |
| Vista 3 | Matsushiro community park | Park and structures | Pond | Recreation structures |
| Vista 4 | Matsushiro multi-unit apartments | Structures | None | Multi-storey and recreation structures |
| Vista 5 | Tsukuba center plaza | Structures | Channel | Commercial buildings and recreation structures |
| Vista 6 | Tsukuba station square | Structures | None | Infrastructures and commercial buildings |
| Vista 7 | Tsukuba center park | Park, water and forest | Pond | Monument |
| Vista 8 | Hirasawa-Kanga ruins | Meadow and structures | None | Ancient houses |
| Vista 9 | Tsukuba shrine | Structures and forest | None | Religious structures |
| Vista 10 | Tsukuba mountain path | Forest | None | None |
| Vista 11 | Tsukuba mountain bird view | Structures and farmland | None | Infrastructures and farming |

| Vista | On-Site Mean | On-Site SD | VR Mean | VR SD |
|---|---|---|---|---|
| Vista 1 | 8.3 | 1.4 | 7.8 | 1.3 |
| Vista 2 | 5.8 | 1.8 | 6.1 | 1.2 |
| Vista 3 | 6.4 | 2.2 | 7.0 | 1.4 |
| Vista 4 | 4.9 | 1.7 | 5.4 | 1.4 |
| Vista 5 | 6.2 | 1.8 | 6.6 | 1.2 |
| Vista 6 | 6.4 | 1.6 | 5.2 | 1.5 |
| Vista 7 | 7.9 | 1.5 | 7.1 | 1.4 |
| Vista 8 | 8.9 | 1.8 | 7.9 | 1.7 |
| Vista 9 | 8.3 | 1.4 | 7.4 | 1.2 |
| Vista 10 | 6.6 | 2.2 | 7.7 | 1.5 |
| Vista 11 | 8.6 | 0.9 | 7.8 | 1.3 |

| Beauty Score | t | p | Cohen’s d |
|---|---|---|---|
| Vista 1 | 0.98 | 0.33 | 0.33 |
| Vista 2 | −0.67 | 0.51 | −0.22 |
| Vista 3 | −1.04 | 0.31 | −0.34 |
| Vista 4 | −1.00 | 0.32 | −0.33 |
| Vista 5 | −0.88 | 0.39 | −0.29 |
| Vista 6 | 2.29 | 0.03 * | 0.76 |
| Vista 7 | 1.49 | 0.14 | 0.50 |
| Vista 8 | 1.88 | 0.07 | 0.62 |
| Vista 9 | 2.13 | 0.04 * | 0.71 |
| Vista 10 | −1.79 | 0.08 | −0.59 |
| Vista 11 | 2.33 | 0.03 * | 0.77 |
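The effect sizes in the Cohen’s d column can be approximated from the on-site and VR means and standard deviations tabulated earlier. The following is a minimal sketch, not the authors' computation: it assumes equal group sizes and the simple pooled-SD form of Cohen's d, and the function name `cohens_d` is hypothetical. Because the inputs are rounded to one decimal, the results should land near the reported values rather than match them exactly.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d from summary statistics.

    Assumption: both groups have the same size, so the pooled SD is
    the root mean square of the two standard deviations.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# (on-site mean, on-site SD, VR mean, VR SD), copied from the
# descriptive statistics table for three of the vistas.
beauty = {
    "Vista 6": (6.4, 1.6, 5.2, 1.5),
    "Vista 9": (8.3, 1.4, 7.4, 1.2),
    "Vista 11": (8.6, 0.9, 7.8, 1.3),
}

for vista, stats in beauty.items():
    print(f"{vista}: d = {cohens_d(*stats):.2f}")
```

A positive d means the on-site mean exceeds the VR mean, which matches the sign convention of the table (for example, Vista 2, where VR scored higher, has a negative d).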

| Item | t | p | Cohen’s d |
|---|---|---|---|
| Varied | 0.89 | 0.40 | 0.27 |
| Vast | 0.36 | 0.73 | 0.11 |
| Coherent | −1.07 | 0.31 | −0.32 |
| Human-influenced | 1.89 | 0.09 | 0.57 |
| Well-maintained | 0.33 | 0.75 | 0.10 |
| Quiet and silent | 1.50 | 0.17 | 0.45 |
| Attractive vegetation | 0.59 | 0.57 | 0.18 |
| Unspoiled | 6.32 | 0.00 *** | 1.90 |
| Familiar | −0.97 | 0.36 | −0.29 |
| Inviting to visit | 1.55 | 0.15 | 0.47 |
| Of historical importance | 0.99 | 0.34 | 0.30 |
| Valuable for conservation | 1.71 | 0.12 | 0.52 |
| Homogenous | −3.55 | 0.01 * | −1.07 |
| Bearing a lot of functions | 2.15 | 0.06 | 0.65 |
| Accessible | −1.30 | 0.23 | −0.39 |
| Typical | 0.73 | 0.49 | 0.22 |

| Item | Vista 1 | Vista 2 | Vista 3 | Vista 4 | Vista 5 | Vista 6 | Vista 7 | Vista 8 | Vista 9 | Vista 10 | Vista 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Varied | 0.36 | 0.83 | 0.20 | 0.31 | 0.13 | 0.03 | 0.01 | 0.88 a | 0.23 | 0.44 a | 0.28 |
| Vast | 0.96 | 0.04 a | 0.99 | 0.82 | 0.68 | 0.03 | 0.88 | 0.64 | 0.35 | 0.50 | 0.77 |
| Coherent | 0.54 | 0.00 a | 0.23 a | 0.04 | 0.84 | 0.82 | 0.66 | 0.75 | 0.71 | 0.33 a | 0.78 |
| Human-influenced | 0.60 | 0.57 | 0.13 | 0.16 | 0.88 | 0.49 | 0.10 | 0.29 | 0.98 | 0.81 | 0.95 |
| Well-maintained | 0.12 | 0.08 | 0.18 | 0.05 | 0.72 | 0.35 | 0.61 | 0.02 | 0.56 | 0.61 | 0.48 |
| Quiet and silent | 0.00 | 0.00 a | 0.60 | 0.96 | 0.68 a | 0.67 | 0.62 | 0.02 a | 0.02 | 0.03 | 0.09 |
| Attractive vegetation | 0.46 | 0.21 | 0.68 | 0.13 | 1.00 | 0.59 | 0.55 | 0.26 | 0.08 | 0.93 | 0.74 |
| Unspoiled | 0.01 | 0.08 a | 0.15 | 0.27 | 0.77 | 0.19 | 0.38 | 0.53 | 0.15 | 0.20 | 0.07 |
| Familiar | 0.02 | 0.34 | 0.86 | 0.32 | 0.44 a | 0.00 | 0.01 | 0.63 | 0.44 | 0.87 | 0.48 |
| Inviting to visit | 0.82 | 0.99 | 0.71 | 0.04 | 0.46 | 0.00 | 0.06 | 0.05 | 0.05 | 0.52 | 0.47 |
| Of historical importance | 0.26 a | 0.21 | 0.42 | 0.22 | 0.15 | 0.03 | 0.01 | 0.22 a | 0.47 | 0.08 a | 0.39 |
| Valuable for conservation | 0.83 | 0.63 a | 0.49 a | 0.45 | 0.40 | 0.05 a | 0.05 | 0.98 | 0.94 | 0.43 | 0.69 |
| Homogenous | 0.86 | 0.01 | 0.00 | 0.01 | 0.74 | 0.00 | 0.00 | 0.95 a | 0.96 | 0.07 | 0.94 |
| Bearing a lot of functions | 0.54 | 0.15 | 0.10 | 0.33 | 0.04 | 0.38 | 0.03 | 0.60 | 0.65 | 0.82 | 0.03 a |
| Accessible | 0.94 | 0.82 | 0.90 | 0.06 a | 0.40 a | 0.17 | 0.59 | 0.70 | 0.81 | 0.85 | 0.76 |
| Typical | 0.96 a | 0.21 | 0.48 | 0.06 | 0.25 | 0.50 | 0.31 a | 0.07 | 0.11 | 0.38 | 0.16 |

| Rating Variable / Pair of Stimulus Types | On-Site & Panorama Photo | On-Site & Normal Photo | On-Site & VR |
|---|---|---|---|
| Beauty | 0.84 *** | 0.77 ** | 0.87 *** |
| Varied | 0.55 | 0.41 | 0.63 * |
| Vast | 0.90 *** | 0.87 *** | 0.95 *** |
| Coherent | 0.91 *** | 0.63 * | 0.50 |
| Well-maintained | 0.71 *** | 0.85 *** | 0.65 * |
| Quiet and silent | 0.71 ** | 0.70 * | 0.58 |
| Attractive vegetation | 0.72 ** | 0.81 *** | 0.79 ** |
| Unspoiled | 0.92 *** | 0.85 *** | 0.93 *** |
| Familiar | 0.62 * | 0.36 | 0.73 * |
| Inviting to visit | 0.87 *** | 0.69 * | 0.86 *** |
| Of historical importance | 0.59 * | 0.28 | 0.80 ** |
| Valuable for conservation | 0.85 *** | 0.74 ** | 0.95 *** |
| Bearing a lot of functions | 0.75 ** | 0.57 | 0.76 ** |
| Accessible | 0.56 | 0.63 * | 0.88 *** |
| Human-influenced | 0.77 ** | 0.84 *** | 0.93 *** |
| Homogenous | 0.77 ** | 0.72 ** | 0.61 * |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shi, J.; Honjo, T.; Zhang, K.; Furuya, K. Using Virtual Reality to Assess Landscape: A Comparative Study Between On-Site Survey and Virtual Reality of Aesthetic Preference and Landscape Cognition. Sustainability 2020, 12, 2875. https://doi.org/10.3390/su12072875