1. Introduction

The role of telecommunication networks in the evolution of society as a whole continues to grow. Fifth-generation (5G) telecommunication networks are currently being created, and, in the foreseeable future, Networks 2030 will play a decisive role in creating an efficient digital economy [1]. At the same time, the requirements placed on modern networks are new and demanding. Existing traditional networks cannot fulfill these requirements; thus, new approaches are needed for both types of networks: 5G and Networks 2030 [2].

It is now generally accepted that the 5G network is the main direction of communication network development. Initially, 5G networks were based on the Internet of Things (IoT) concept, which requires the creation of ultra-high-density communication networks with one million devices per square kilometer [3]. This non-trivial problem has recently been complicated by the Tactile Internet concept, which limits the round-trip delay to 1 ms for the transmission of tactile sensations [4,5]. Because of the fundamental light-speed limit, this requirement leads to a reconsideration of the basic design of communication networks in favor of decentralization. The requirement applies not only to tactile information, but also to unmanned vehicles with network support, augmented reality applications, etc. Therefore, during the implementation of the 5G concept, a new concept of an ultra-low-latency network has appeared. According to the available studies of the future Network 2030, this idea will determine the basics of communication network design in the long term [6].

The Tactile Internet builds a real-time interactive system between the human and the machine, and introduces a new evolution in human-to-machine (H2M) communication [7,8]. It enables the remote transfer of physical skills and thus introduces a paradigm shift towards skill-based networks instead of content-based networks. The Tactile Internet is expected to revolutionize the communication industry because of its massive impact on human life. It will have important applications in many fields, such as health care (including remote surgery), industrial automation, Virtual and Augmented Reality, gaming, and education [9].

In the communication networks of 2030 (and even earlier, in our opinion), H2M and, in particular, human-to-avatar (H2A) interactions will play an important role. In addition, many network requirements will be determined by the implementation of Industry 4.0, which is now taking a well-defined form due to work on the Industrial Internet of Things. Furthermore, these network and industry interactions require a new approach to the compatibility of technical tools and facilities, services, classes, and quality of service and experience. However, the existing investigations in this area are still at the level of primary system studies and, unfortunately, do not yet allow the parametric requirements of such networks to be defined and verified.

The emergence of the concept of telecommunication networks with ultra-low latency is associated with the work in the Tactile Internet field. In 2014, the International Telecommunication Union issued a special report, called Tactile Internet [10], which was developed under the guidance of Prof. Gerhard Fettweis. This report formulated the requirement of a 1 ms round-trip delay for the transmission of tactile sensations. Work on the Tactile Internet also appeared in well-known journals [11,12]. Later, several studies on fifth-generation communication networks treated this requirement as an ultra-low-latency service [13,14,15]. Understanding of the broad prospects for the introduction of such services has led to the emergence of the concept of communication networks with ultra-low latency [5,16]. The existing works on ultra-low-latency services for fifth-generation telecommunication networks focused on the physical and channel levels of radio networks [14,15], using the millimeter-wave capabilities for peak speeds in 5G networks to achieve ultra-low latency [13,17]. The 5G network core [5] and the cores of subsequent generations [18] are expected to rely on the capabilities of software-defined networks (SDN) and mesh networks. The assumption is that communication networks with ultra-low latency will be one of the main technologies for creating networks in 2030 [19,20,21], along with the transfer of tactile sensations and holographic telecommunications.

With recent innovations in control systems, replacing human control with autonomous control has become a demand for vehicle systems. This, in turn, drives the development of reliable communication systems that can provide a communication medium for such networks and their applications. However, designing such networks faces many challenges due to the high mobility of cars and the latency required by the applications that are expected to run. In addition, such networks should support an enormous amount of traffic and a high density of vehicles, so the development of new technologies and infrastructure is inevitable. Motivated by these considerations, we propose a novel multilevel cloud system for autonomous vehicles, which is built over the Tactile Internet. Base stations at the edge of the radio-access network (RAN) with different antenna technologies are used in our system, and the core network is designed based on SDN technology with the deployment of a multi-controller scheme.

The rest of the study is organized as follows. An overview of related works is presented in Section 2. Our system model, in terms of communication, computation, and security models, is introduced in Section 3. Simulation-based experiments are reported and discussed in Section 4. Finally, Section 5 concludes the paper.

2. Related Works

Numerous studies have addressed Vehicular Ad-Hoc Networks (VANET) and autonomous vehicles at different layers [22,23]. In [24], Raza et al. studied the functioning of VANET networks based on a three-layer architecture: Cloud Layer, Edge Cloud Layer, and Smart Vehicular Layer. The authors discussed the possibilities that edge computing provides, describing it as an implementation option of SDN and in terms of the functionality of its network elements when implemented in a VANET network. The authors also described in detail the main components of automobiles and the network infrastructure technologies that must be supported to organize a modern intelligent network. Additionally, the article pays considerable attention to the mobility problem and offers several approaches to it: Routing, SDN, and 5G approaches. Finally, the paper concludes with a summary of the most pressing issues that Vehicular Edge Computing faces.

Liu et al. [25] explored the security issue for autonomous vehicles within an automotive network built on the Edge Computing architecture. They consider the threats that exist for various categories of automotive devices that provide autonomy (sensors, control systems, etc.) and suggest ways to protect against these threats. The paper helps to understand the main aspects of building autonomous driving systems: safety, the computing power and energy consumption of automobile devices, and the interaction of autonomous vehicles.

In [26], a mathematical analysis of automobile network models that provide effective communication between vehicles was carried out. The paper clearly shows the main problems in providing ultra-reliable low-latency communications (URLLC). The authors proposed extreme value theory and Lyapunov optimization as powerful mathematical tools for modeling extremely long bursts and power distribution.

Recently, Nkenyereye et al. [27] proposed an architecture for an automobile network that is based on a software-defined network (SDN) and mobile edge computing (MEC), and that can distribute the radio resources efficiently and reduce transmission delays. The authors took as the basis for the network a multi-access edge computing network, which implements distributed computing on the evolved node B (eNB). The architecture is presented in four logical levels: forwarding level, control level, multiple-access level, and access level. The paper described an example scenario of the interaction of the logical levels when the network detects packets that have no matching entry in the OpenFlow table.

In contrast to the above-mentioned studies, we propose a novel multilevel cloud system for autonomous vehicles, which is built over the Tactile Internet. Base stations at the edge of the radio-access network (RAN) with different antenna technologies are used in our system, and the core network is designed based on SDN technology with the deployment of a multi-controller scheme.

3. System Model and Problem Formulation

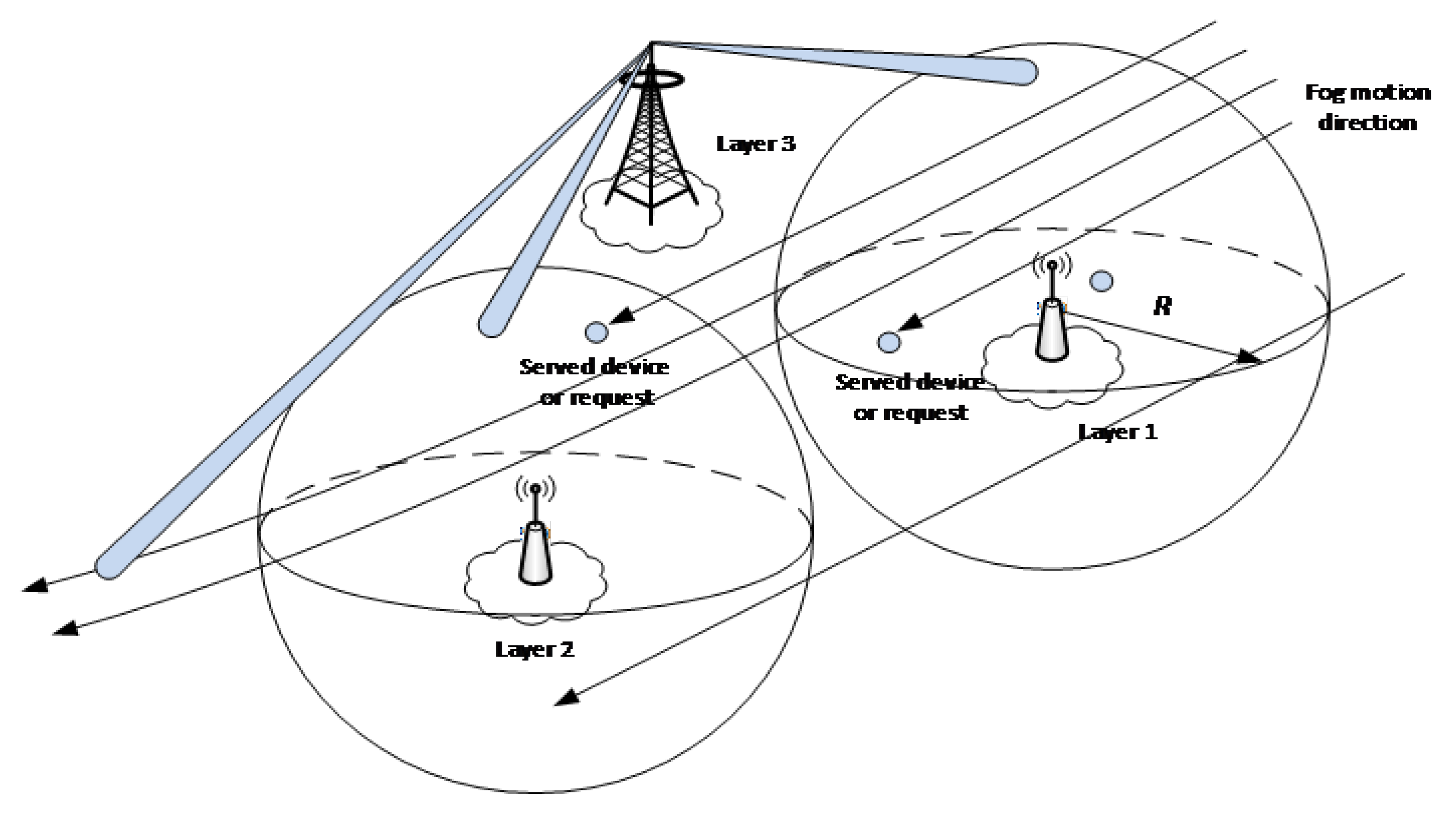

As outlined in the preceding section, our work considers a multi-level cloud computing system for autonomous vehicles whose architecture consists of four layers, as presented in Figure 1. The first layer represents the physical hardware: roadside units (RSUs) are distributed along the roads, and vehicles equipped with On-Board Units (OBUs) can communicate with them. Centralized remote management units are connected to the RSUs and are responsible for forwarding and exchanging service control packets. The second layer consists of heterogeneous edge cloud servers deployed at the edge of the radio-access network. In our proposed system, edge-cloud servers are classified into two main types: micro-cloud edge servers and mini-cloud edge servers. The micro-cloud edge servers (cellular base stations), to which each RSU is connected, have limited energy and computing capabilities. Each set of micro-cloud edge servers is grouped and connected to a mini-cloud edge server, which provides higher energy and computing capabilities. The third layer is the SDN network, which is deployed at the core network and consists of two sub-layers: the data plane sub-layer and the control plane sub-layer. The data plane sub-layer has a set of distributed OpenFlow switches that support the appropriate version of the OpenFlow protocol. The control plane sub-layer contains the distributed SDN controllers, which are responsible for controlling and managing the whole network. Finally, application programming interfaces (APIs) are used to manage and control the SDN network, and the core network provides an interface to the last layer, which is represented by the application server.

In the layers of our proposed system, there are two main network interfaces: the car–car and car–infrastructure networks. Both can be realized over the appropriate IEEE 802.11 communication interface. A high-speed fiber wireless (FiWi) network connects the distributed edge servers in the second layer. Because the MEC server provides computing capabilities at the edge of the access network and connects to the RSU, it can take over offloaded computation and reduce the traffic passed to the core network. As a result, network congestion and packet loss are also reduced.

MEC is a promising technology for service provisioning in future networks [28,29,30]. It improves network quality for new services, such as the Internet of Things, Tactile Internet, Augmented Reality, and others. In addition, it has vital uses in many applications, including vehicular networks such as VANET. Therefore, for the structure described above, we study two scenarios: a VANET-based environment and a Fog-based environment. In the following subsections, the system models and the problems for the simulation of these scenarios are presented in more detail.

3.1. System Model

3.1.1. VANET-Based Environment

The vehicular network, provided by VANET functionality or by any other approach, delivers messages from and to a number of cars equipped with the necessary devices. These messages create a traffic flow directed to the service-provisioning equipment, i.e., this traffic is directed to the nearest access point and then, through the core network, to the server and the data storage unit. The server and the data storage unit are part of the cloud resources.

The star network structure with the cloud in the center is the classical approach to service implementation. In this case, a large number of traffic sources (cars), powerful servers, and data storage provide, in theory, good resource utilization. This approach has some important disadvantages: low reliability due to the single server, high concentration of network traffic at the server access point due to the star structure, and high delay due to network delivery and server processing. The Edge Computing or MEC approach removes these problems. MEC provides low delivery time due to the cloud's proximity to the users and the short path in the network, and short service time due to the low traffic. In this case, however, resource usage becomes lower due to the instability of the traffic generated by a relatively small group of users.

Our assumption is that the modeling of the service of a message in the cloud is as a queuing system. According to this assumption, the mean delay caused by the service of a message in the cloud is proportional to the following equation:

where

is the performance (throughput) of the server in the number of messages per unit of time,

—is the traffic intensity, which may be expressed by the ratio of the rate of incoming messages to the performance

, and

—is the incoming message rate (the number of incoming messages per unit of time).

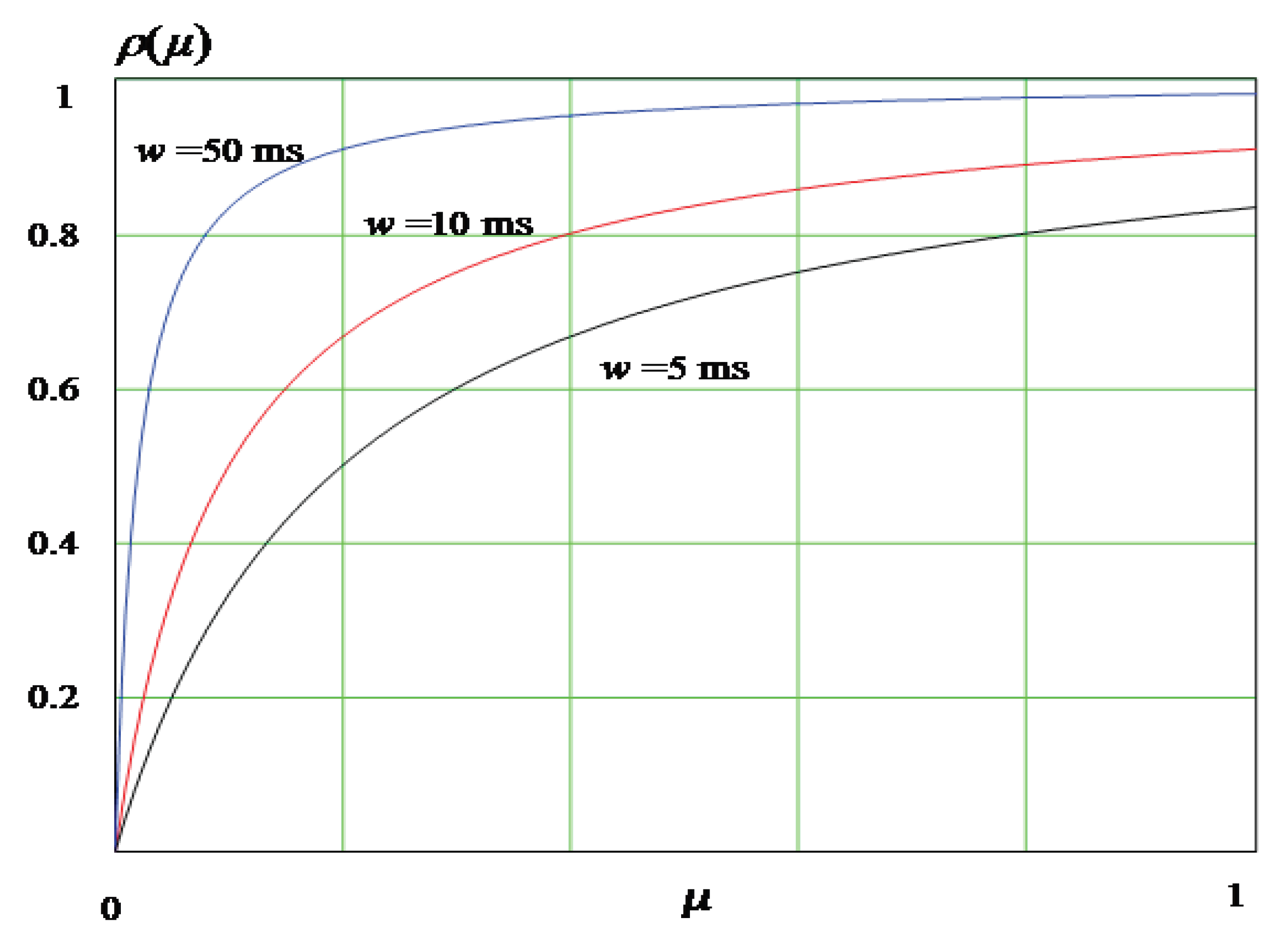

If there are no losses in the system,

is equal to the server usage. If we fix the delay value

it is easy to show from (

1) that the utilization

depends on the performance value

, as shown in

Figure 2. It is clear from the shown dependence that to provide high utilization, we have to increase the performance. This means that to provide high utilization, we have to choose high traffic and high performance values. These conditions correspond to the star structure network conditions.
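The dependence of utilization on performance at a fixed delay can be checked numerically. The following is a minimal sketch, assuming that Equation (1) is the standard M/M/1 mean delay, so that the utilization at delay T is 1 - 1/(μT); the function name and the sample values are illustrative and do not come from the paper.

```python
def utilization_at_fixed_delay(mu: float, delay: float) -> float:
    """Utilization that gives mean delay `delay` on an M/M/1 server of rate `mu`.

    Solves delay = 1 / (mu * (1 - rho)) for rho (assumed form of Equation (1)).
    Returns 0.0 when the server cannot meet the delay target even when idle.
    """
    rho = 1.0 - 1.0 / (mu * delay)
    return max(rho, 0.0)


if __name__ == "__main__":
    target_delay = 0.01  # seconds, hypothetical delay budget
    for mu in (50, 200, 500, 1000, 5000):  # messages per second
        print(mu, round(utilization_at_fixed_delay(mu, target_delay), 3))
```

For a 10 ms delay budget, a server handling 200 messages per second can be loaded only to 0.5, whereas a server handling 1000 messages per second can be loaded to 0.9, which illustrates why a small MEC cloud with little traffic is hard to utilize well.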

According to these results, MEC by itself is not a very efficient solution in terms of resource utilization. An MEC cloud covers a relatively small territory with a relatively small number of users, which means a relatively low traffic intensity. Using high-performance equipment under these conditions is inefficient, but using low-performance servers negatively affects the quality of service. This problem is caused by the relatively low traffic (number of users) and by traffic instability. Therefore, when MEC is used to improve the quality of service, equipment utilization must be improved as well, and these two requirements are in conflict. In this paper, we propose a multi-level structure to improve equipment utilization for MEC solutions, as shown in Figure 3.

In this structure, the first MEC layer of the system covers medium or small cells (micro- or mini-cells). Each of these cells is equipped with a base station with an isotropic antenna and the necessary computational resources (cloud). These cells serve users around the antennas. The second MEC layer consists of a cell equipped with a beamforming antenna. This cell can serve any subscriber in the first-layer cells through beamforming technology. When the first-layer resources are busy, the second-layer cell serves the requests from subscribers.

3.1.2. Fog-Based Environment

The moving Fog denotes a large number of network-connected devices carried by any objects, such as vehicles, bikes, copters, people, animals, and so on. It is a mixture of different devices that are used for different services. These can also be devices installed in the road environment, in rubbish bins, on building walls, on trees, on the ground, and so on. Unlike the VANET environment, this environment has a higher density and can consist of many different devices with different requirements for the quality of service. This means that we have to consider not only the traffic originating from them, but also the different types and behaviors of the devices, including their motion characteristics. Some of these devices have to be served immediately (with minimal delay), while others tolerate relatively long delays (delay-tolerant services, such as telemetry services).

A possible structure of this system is shown in Figure 4. There are a number of levels in this system, and these levels can have different or identical realizations. For example, a base station with an isotropic antenna and a micro-cloud mounted on a vehicle serves requests from the incoming Fog that must be served as soon as possible. The next layer of the cloud, with the same realization, serves requests that were not served by the first cloud and are still awaiting service. The third cloud, realized in a third base station with a directional antenna, serves requests that present excess traffic for both lower clouds.

To model the originated traffic, we propose a model of the Fog. We assume that the Fog is a flow of devices moving in a single direction (Figure 4). In general, each device could move in its own direction, but this case is not realistic. In addition, we assume that the service area of a base station is a sphere with radius R. Therefore, we assume a single common direction for all devices in the considered area.

This approach allows any number of layers to be used. These layers can be implemented as stationary base stations on road elements or as moving base stations on vehicles or drones.

3.2. Problem Formulation

3.2.1. VANET Environment

We assume that the user traffic is a Poisson flow and that the service time follows an exponential distribution. The queuing model, in general, corresponds to an M/M/1/k system with a finite buffer. An arriving message leaves the system unserved (loss probability) with probability

$$p_k = \frac{(1 - \rho)\,\rho^{k}}{1 - \rho^{k+1}}. \tag{2}$$

In this equation, $\rho = a/\mu$ is the incoming traffic intensity, where $a$ is the incoming message intensity and $\mu$ is the server performance.

The mean queue size can be calculated from the following equation with $\rho \neq 1$:

$$\bar{N} = \frac{\rho}{1 - \rho} - \frac{(k + 1)\,\rho^{k+1}}{1 - \rho^{k+1}}. \tag{3}$$

The mean response time for the first-level server can be calculated by:

$$T_k = \frac{\bar{N}}{a\,(1 - p_k)}. \tag{4}$$

To compare a finite-queue-size system with an infinite-queue-size system, we calculate the response time for the M/M/1 system:

$$T_\infty = \frac{1}{\mu\,(1 - \rho)}. \tag{5}$$

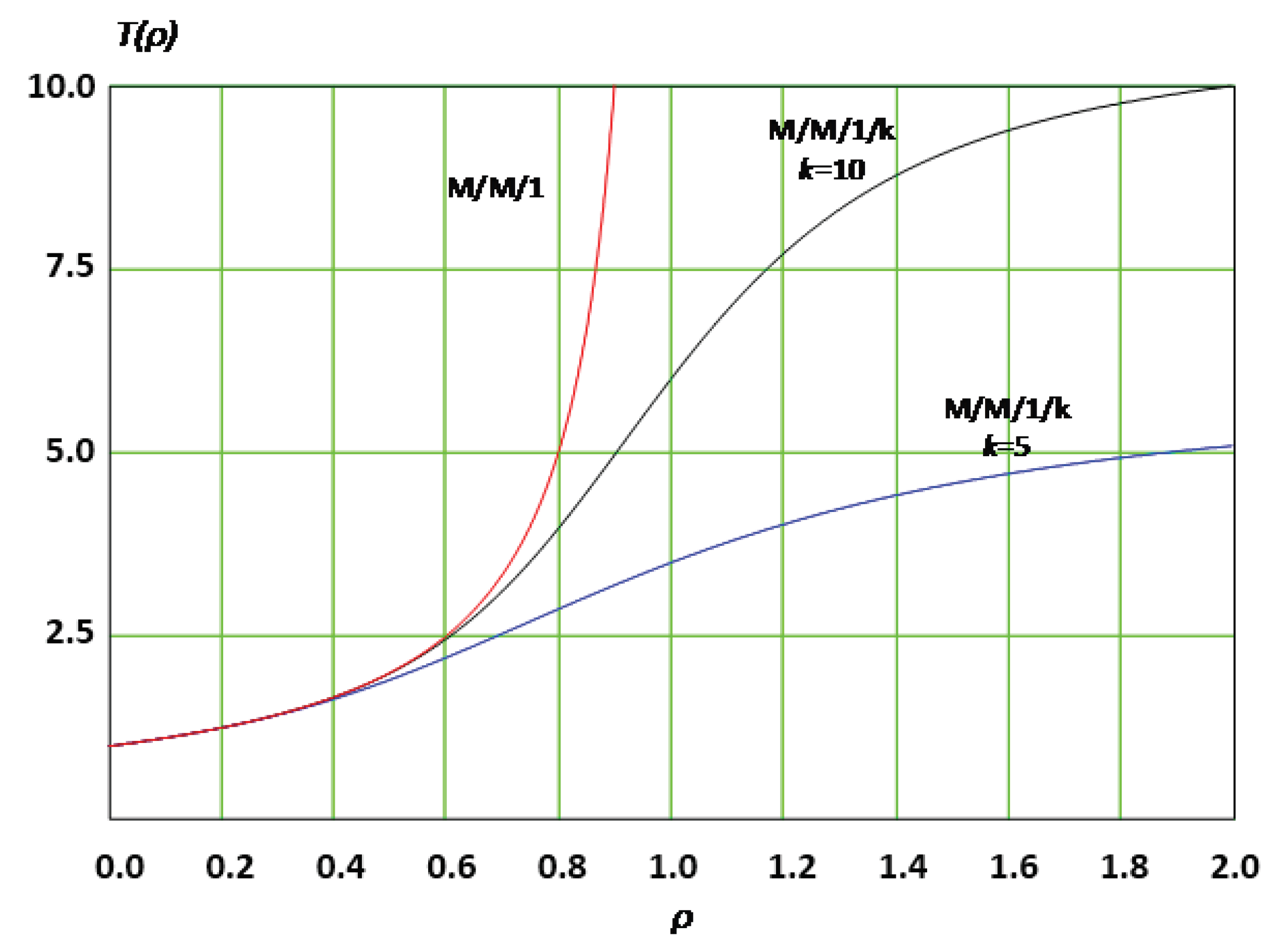

These dependences are shown in Figure 5.

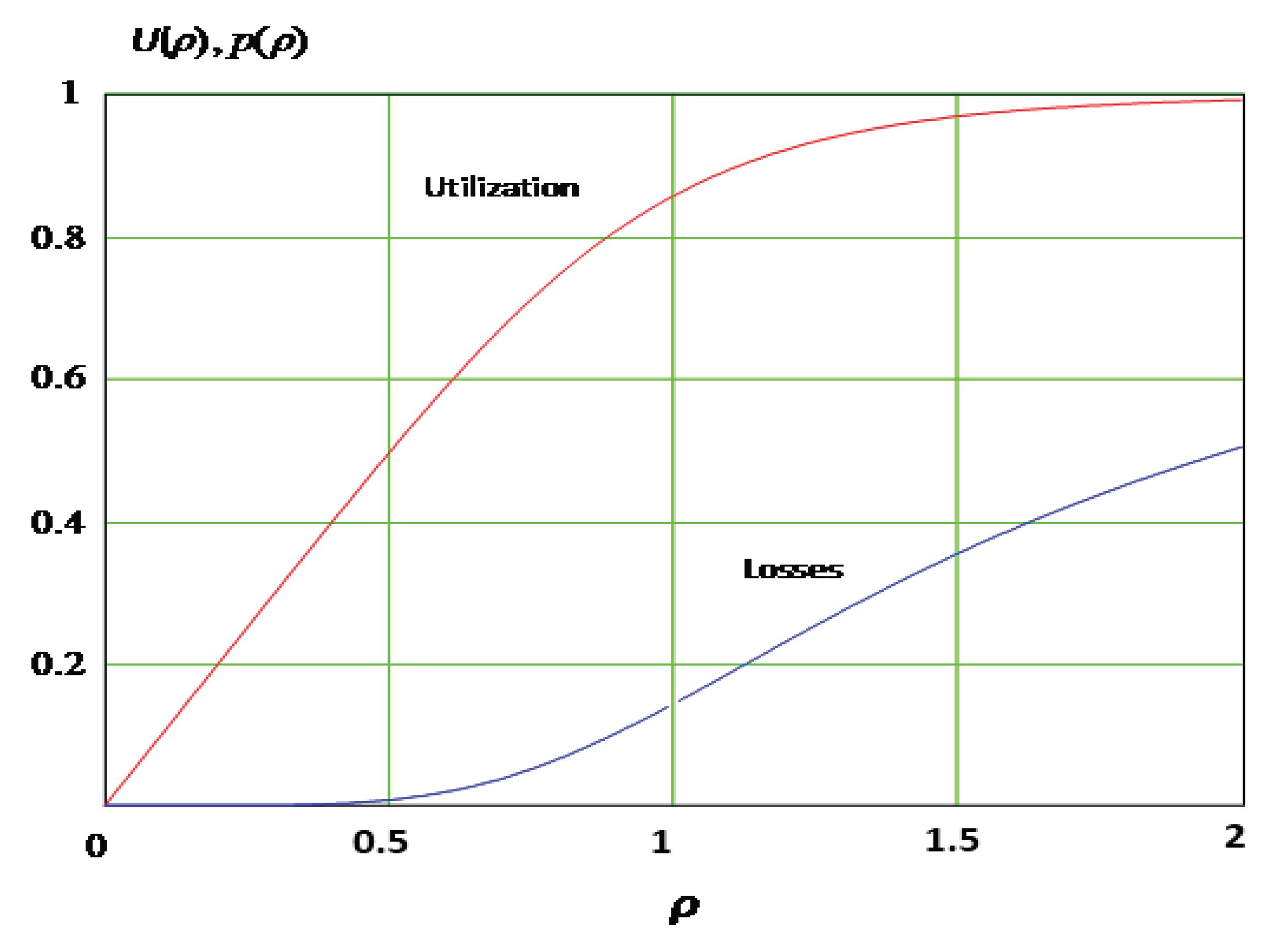

Additionally, we consider the dependence of the server usage on the traffic. For the M/M/1 system, the utilization of the server is equal to the offered traffic; for the M/M/1/k system, the utilization of the server can be calculated as follows:

$$U = 1 - p_0, \tag{6}$$

where $p_0$ is the probability that the server has no job at the moment.

Considering both figures, we can conclude that the response time for the finite-size queue is significantly lower than the response time for the infinite-size queue. High utilization of the infinite-size queue (more than 0.8) brings a rapid growth of the response time, but the finite-size queue has a bounded mean response time. Utilization of the server with a traffic intensity near 1 (Figure 6) is greater than 0.8 (Figure 2). Therefore, using a finite-size queue (a relatively small queue) and a relatively high traffic intensity brings low response time and high usage of the resources, but under these conditions, the loss probability is relatively high (more than 0.1).
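The trade-off between response time, utilization, and losses can be illustrated with the textbook M/M/1/k steady-state distribution. The sketch below is an illustration under stated assumptions, not the computation used for the figures: k is taken as the largest number of messages the system can hold (including the one in service), the mean service time is normalized to 1, and the function names are hypothetical.

```python
def mm1k_metrics(rho, k, service_time=1.0):
    """Steady-state metrics of an M/M/1/k queue.

    rho is the offered traffic intensity (rho > 0); k is the largest number
    of messages the system can hold, including the one in service.
    """
    if rho <= 0 or k < 1:
        raise ValueError("rho must be positive and k at least 1")
    weights = [rho ** n for n in range(k + 1)]  # unnormalised state probabilities
    total = sum(weights)
    probs = [w / total for w in weights]
    loss = probs[k]  # an arrival finds the system full
    mean_in_system = sum(n * p for n, p in enumerate(probs))
    accepted_rate = (rho / service_time) * (1.0 - loss)
    return {
        "loss": loss,
        "utilization": 1.0 - probs[0],  # the server is busy unless the system is empty
        "response": mean_in_system / accepted_rate,  # Little's law on accepted messages
    }


def mm1_response(rho, service_time=1.0):
    """Mean response time of the infinite-buffer M/M/1 queue."""
    return service_time / (1.0 - rho) if rho < 1.0 else float("inf")


if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.95, 1.0):
        print(rho, mm1k_metrics(rho, k=4), mm1_response(rho))
```

With ρ = 1 and k = 4, this gives a utilization of 0.8, a loss probability of 0.2, and a mean response time of 2.5 service times, while the M/M/1 response time is unbounded at the same load.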

We propose a model of MEC implementation that takes into account the assumptions about the queuing model given above. To reach high utilization of the MEC equipment (micro- or mini-cloud), we have to choose a high-utilization mode of the MEC cloud. However, this leads to quality-of-service degradation, i.e., the response time or the losses grow. To address this, we propose using several layers, each including clouds that are intended to serve excess traffic coming from the nearest layers. The structure of the model is shown in Figure 5.

The proposed system works in the following order. Messages originate in the area of VANET, restricted by the EDC area, and come to the first-layer cloud, represented by the queuing system M/M/1/k (Layer 1). We assume that the performance of the cloud is defined by the service time $t$. We choose the value of $t$ according to the requirement for delivery time and the requirements for the utilization of the server.

3.2.2. Fog Environment

Firstly, in this section, we assume that the number of devices coming to the first cloud over the time

is:

where

, and

denote the area of the sphere, the speed of the Fog (meters per second), the time interval (in seconds), and the number of devices;

R denotes the radius of the sphere (in meters). In addition,

where

denotes the probability of losses in the layer

.

Any device can be in the area of the

ith cloud over random time

with average:

The probability density function for the

is defined as shown in the [

31]:

The probability that the device will not be served in the base station is the probability that the stay time in the area is not greater than the response time

:

Ideally, this system would be described by a queuing system with impatient multi-priority requests that leave the queue before service if the waiting time exceeds the stay time in the area of the base station, but no closed-form expression is available for such a system. Therefore, we propose using the M/M/1/prio queuing model, in which the mean response time must be less than the stay time in the base station area.

We can calculate

from Equation (

7) as:

where

is the probability that the time in the service area will be less than the

. We can consider

as the probability of losses at the given layer.
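The stay-time statistics can be illustrated for one simple geometry. The sketch below assumes that devices cross the spherical service area along straight parallel paths, spread uniformly over the cross-section, at a constant speed; this is an assumption consistent with the single-direction Fog model, and it may differ from the density taken from [31]. Under this assumption the mean stay time is 4R/(3v) and the probability of staying no longer than t is (vt/(2R))². All function names are hypothetical.

```python
import math
import random


def mean_stay_time(radius, speed):
    """Mean time inside a sphere for uniformly spread parallel straight paths."""
    return 4.0 * radius / (3.0 * speed)


def prob_stay_shorter_than(t, radius, speed):
    """P(stay time <= t) under the same parallel-path assumption."""
    if t <= 0.0:
        return 0.0
    if t >= 2.0 * radius / speed:  # the longest path is the diameter
        return 1.0
    return (speed * t / (2.0 * radius)) ** 2


def sample_stay_time(radius, speed, rng):
    """Draw one stay time: the path offset is uniform over the cross-section disc."""
    offset = radius * math.sqrt(rng.random())
    chord = 2.0 * math.sqrt(max(0.0, radius ** 2 - offset ** 2))
    return chord / speed


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [sample_stay_time(100.0, 10.0, rng) for _ in range(20000)]
    print(sum(samples) / len(samples), mean_stay_time(100.0, 10.0))
```

If the response time of a layer is known, `prob_stay_shorter_than` evaluated at that response time gives the fraction of devices that leave the area before being served, which corresponds to the loss probability of the layer in this simplified picture.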

We use the M/M/1/prio model to describe the waiting time of each layer, where:

where

M denotes the number of priorities,

denotes the traffic intensity of the priority

j, and

denotes the mean service time for the

priority request.
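The waiting time of each priority class can be evaluated numerically. The following is a minimal sketch that assumes the non-preemptive M/M/1 priority queue (Cobham's formula) with exponential service; whether the paper uses the non-preemptive or the preemptive discipline is not stated in the text, so this choice, like the function name, is an assumption.

```python
def priority_waiting_times(arrival_rates, service_means):
    """Mean queueing delay per class in a non-preemptive M/M/1 priority queue.

    Index 0 is the highest priority. For exponential service, the mean
    residual work seen by an arrival is sum_j rho_j * t_j.
    """
    rhos = [lam * t for lam, t in zip(arrival_rates, service_means)]
    if sum(rhos) >= 1.0:
        raise ValueError("total traffic intensity must be below 1")
    residual = sum(rho * t for rho, t in zip(rhos, service_means))
    waits, sigma_prev = [], 0.0
    for rho in rhos:
        sigma = sigma_prev + rho  # load of this class and all higher ones
        waits.append(residual / ((1.0 - sigma_prev) * (1.0 - sigma)))
        sigma_prev = sigma
    return waits


if __name__ == "__main__":
    # Three classes with equal rates and unit mean service time (illustrative values).
    print(priority_waiting_times([0.2, 0.2, 0.2], [1.0, 1.0, 1.0]))
```

For three classes with intensity 0.2 each and unit service time, the mean waits are 0.75, 1.25, and 2.5, which shows how quickly the lower priorities fall behind as the total load grows.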

Implementing multilayer MEC according to this model, we have to choose the system parameters so that they satisfy the following condition:

This inequality means that the mean response time is less than the time stayed in the base station area with probability

. If

is very small, Equation (

15) indicates that, on average,

i priority requests are served in the area of the base station.

Using Equations (

13)–(

15), we can estimate the necessary performance of the cloud for each priority and number of clouds (layers).

4. Simulation Model and Discussion

The structure of the simulation model is shown in Figure 7. It is a queuing system in which each layer corresponds to one server and one queue. Each queue has one input and two outputs. The input receives the requests coming to this layer. The first output leads from the head of the queue to the server. Requests that are not served at this layer (lost requests) leave the queue through the second output and go to the next layer. The reasons why requests are not served differ between the environments.

The structure of the model is identical for both environments that we considered above: the VANET and Fog environments. In the first case, each layer describes the stationary cell of a base station, and the lost requests are caused by excess traffic. In the second case, the lost requests are caused by waiting longer than the stay time in the base station area.

In general, we can use multi-priority requests in both models, but we assume that in the VANET environment, all requests (messages) are homogeneous, so we use queues without priorities. In the Fog environment, we assume a number of different devices for different services; therefore, we use a multi-priority model in the second case.

4.1. Analysis of Simulation Results Obtained for the VANET Environment

The traffic flows at the second and upper layers are an aggregation of the requests that were lost at the lower layer. From Reference [32], it is known that this is Palm's flow [33].

We created a discrete event-driven simulation model to study the behavior of the two-layer system. The model includes two layers of the model in Figure 7.

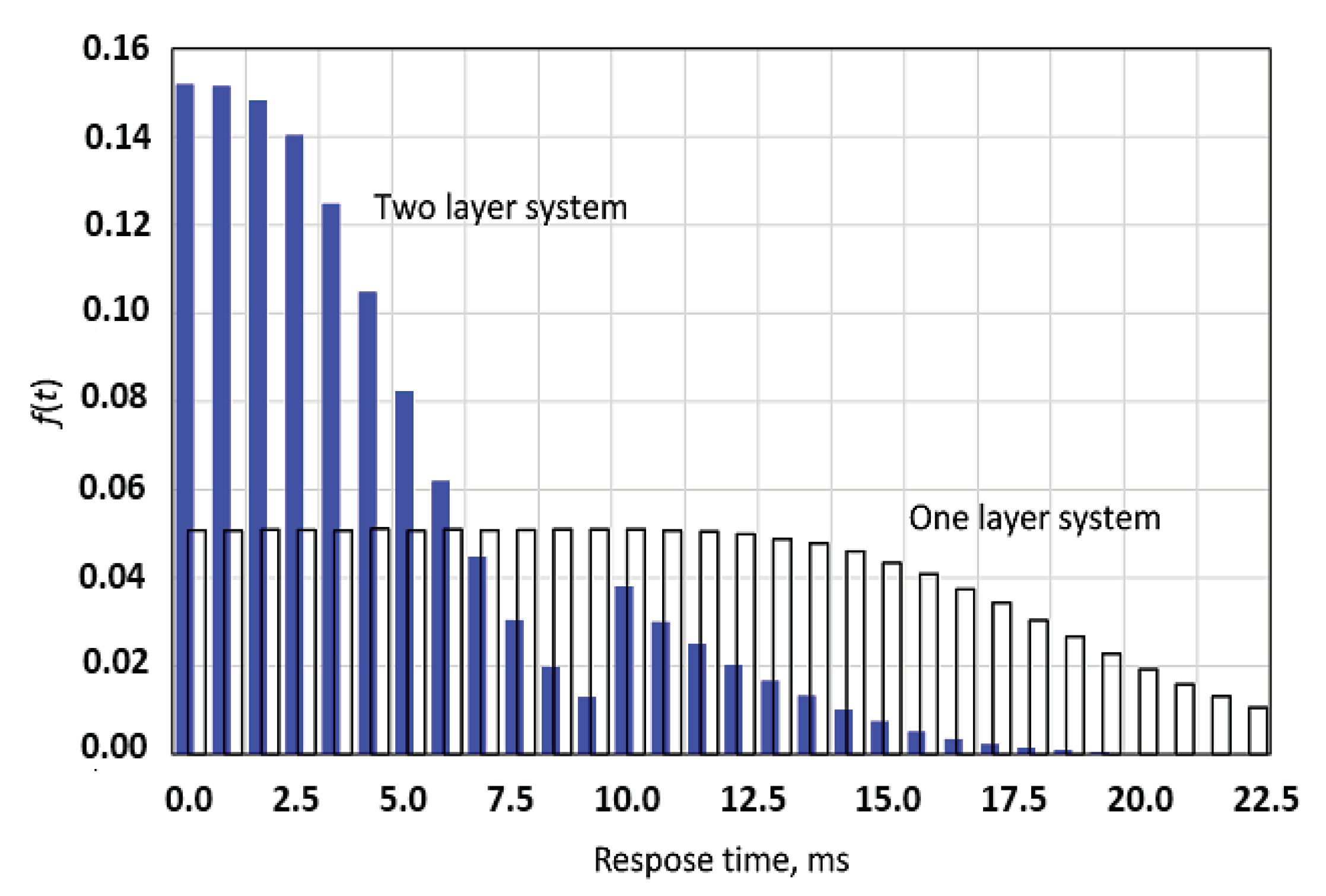

We describe the service delay according to the response time. The distribution of the response time from the simulation results is shown in Figure 8.

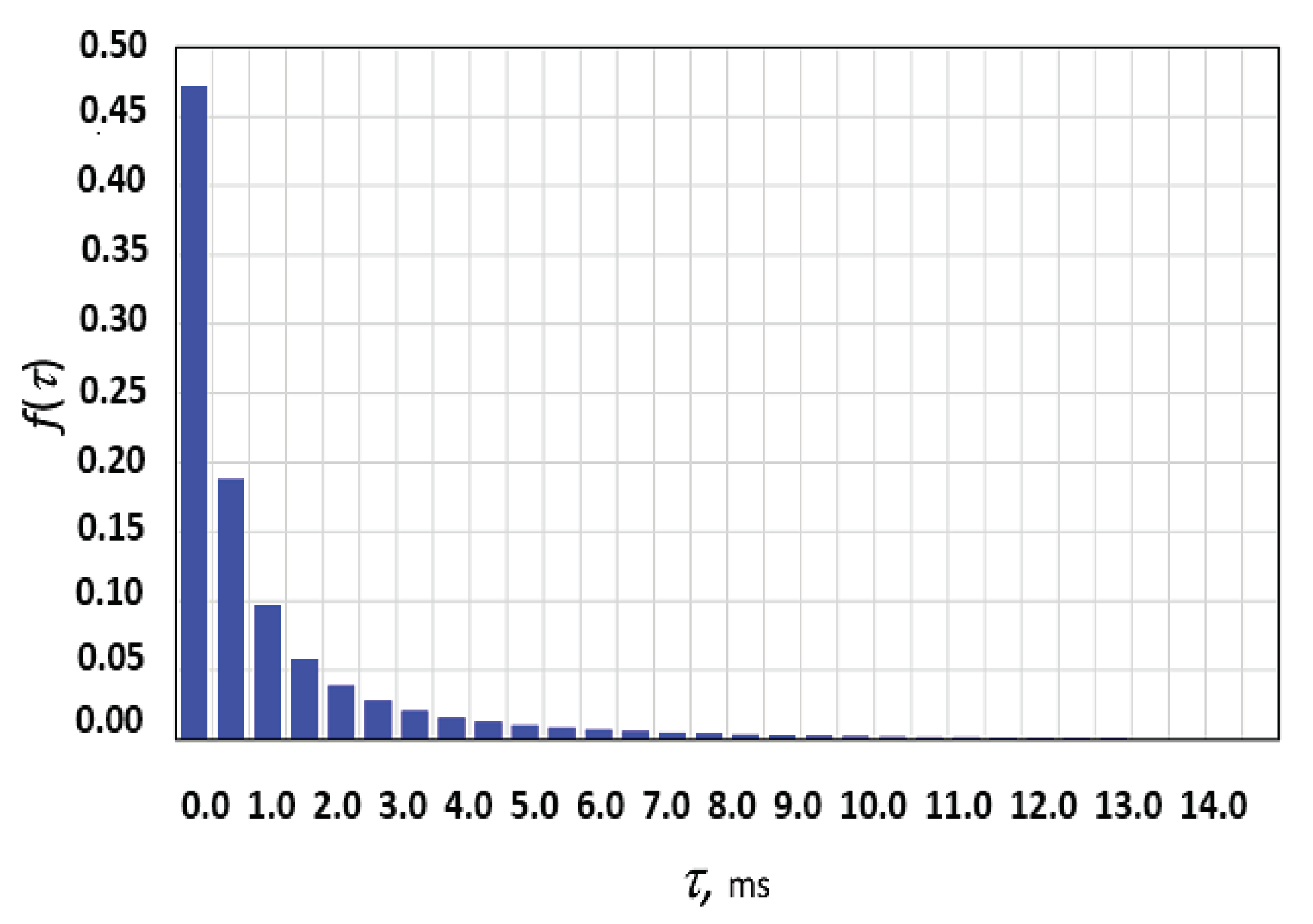

The inter-arrival time distribution of the traffic coming to the second layer is shown in Figure 9.

A study of the second-layer traffic shows that this traffic is Palm's flow. The coefficient of variation was evaluated for the system with five (5) first-layer clouds and one (1) second-layer cloud. Increasing the number of first-layer clouds leads to a decrease in the coefficient of variation. Thus, we can suppose that the aggregation of a large number of flows from the first layer changes the properties of the second-layer traffic, bringing it close to Poissonian traffic.
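The two-layer experiment can be sketched in a few dozen lines. The code below is an illustrative reconstruction under stated assumptions, not the simulator used for the reported results: every cloud is an M/M/1/k queue with unit mean service time, k counts the message in service, excess messages reach the second layer with no transfer delay, and all names and default values are hypothetical.

```python
import random
import statistics
from collections import deque


def poisson_arrivals(rate, horizon, rng):
    """Arrival instants of a Poisson flow with the given rate on [0, horizon]."""
    t, times = 0.0, []
    while True:
        t += rng.expovariate(rate)
        if t > horizon:
            return times
        times.append(t)


def serve_layer(arrivals, service_mean, capacity, rng):
    """One M/M/1/k cloud: returns (response_times, overflow_instants)."""
    in_system = deque()  # departure instants of messages still in the cloud
    responses, overflow = [], []
    for t in arrivals:
        while in_system and in_system[0] <= t:
            in_system.popleft()  # these messages have already left
        if len(in_system) >= capacity:
            overflow.append(t)  # system full: the message becomes excess traffic
            continue
        start = in_system[-1] if in_system else t  # FIFO, single server
        depart = start + rng.expovariate(1.0 / service_mean)
        in_system.append(depart)
        responses.append(depart - t)
    return responses, overflow


def interarrival_cv(instants):
    """Coefficient of variation of the gaps between consecutive instants."""
    gaps = [b - a for a, b in zip(instants, instants[1:])]
    return statistics.pstdev(gaps) / statistics.fmean(gaps)


def simulate_two_layers(rho, n_first=5, capacity=5, horizon=20000.0, seed=1):
    """n_first first-layer clouds overflow into one second-layer cloud."""
    rng = random.Random(seed)
    offered, first_resp, excess = 0, [], []
    for _ in range(n_first):
        arrivals = poisson_arrivals(rho, horizon, rng)  # unit service time, so rate = rho
        resp, over = serve_layer(arrivals, 1.0, capacity, rng)
        offered += len(arrivals)
        first_resp += resp
        excess += over
    excess.sort()  # merge the excess flows in time order
    second_resp, lost = serve_layer(excess, 1.0, capacity, rng)
    return {
        "offered": offered,
        "served_first": len(first_resp),
        "served_second": len(second_resp),
        "lost": len(lost),
        "mean_response": statistics.fmean(first_resp + second_resp),
        "excess_cv": interarrival_cv(excess) if len(excess) > 2 else None,
    }


if __name__ == "__main__":
    for rho in (0.5, 0.8, 1.0):
        print(rho, simulate_two_layers(rho))
```

Varying `n_first` in this sketch gives a quick way to observe how the coefficient of variation of the aggregated excess flow changes with the number of first-layer clouds.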

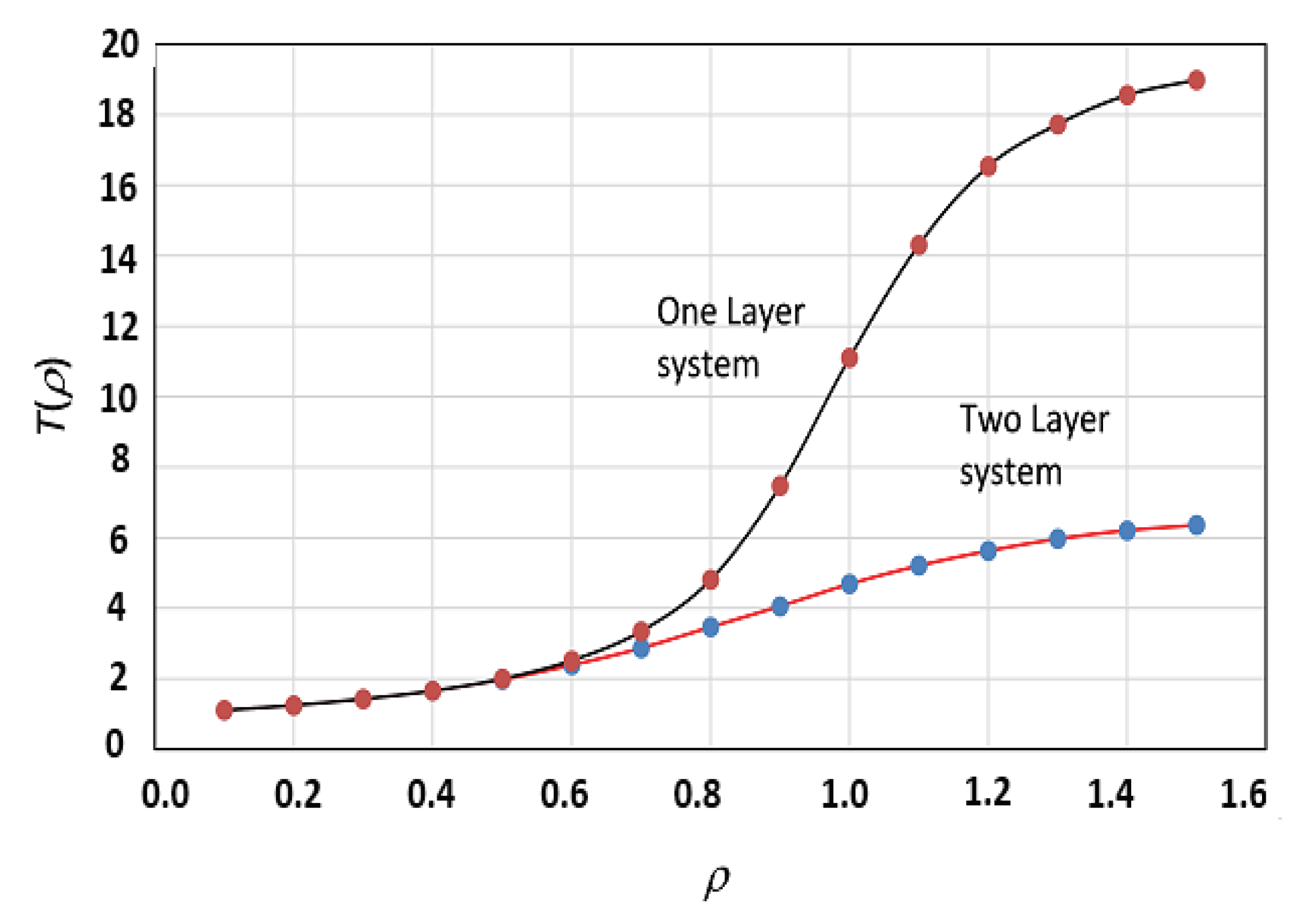

The dependence of the response time on the traffic intensity for the one-layer and two-layer systems is shown in Figure 10.

It is clear from the given results that the two-layer system provides high and stable performance over a wide range of user traffic intensity. The one-layer system provides stable performance only in the range from 0 to approximately 0.8.

Therefore, we can conclude that the multilayer MEC system provides better resource utilization and better quality of service.

4.2. Analysis of Simulation Results Obtained for the Fog Environment

The input traffic is a Poissonian flow consisting of three types of requests with different priorities. We assume that the numbers of devices of each priority are equal. Devices come to the first-layer cloud and stay there for random times, distributed according to (10) with mean (9). The order of requests in the queue is defined by priorities. If a request is not served during its stay time, it leaves the queue and goes to the next layer. By choosing the parameters of the server, we can provide a mode in which the main portion of the highest-priority requests is served by the first-layer cloud, the next-priority requests are served by the next-layer cloud, and so on.
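The layered priority service with departing devices can be sketched as a small event-driven simulation. The code below is a simplified illustration under several assumptions that are not taken from the paper: service is non-preemptive, a request counts as served if its service starts before its stay time expires, a fresh stay time is drawn at every layer, stay times follow the parallel-path sphere-crossing model, and all names and parameter values are hypothetical.

```python
import heapq
import math
import random


def serve_fog_layer(requests, service_mean, stay_time, rng):
    """One priority layer. requests: list of (arrival, priority), 0 = highest.

    Returns (served, forwarded): served holds (priority, response_time) pairs,
    forwarded holds (leave_instant, priority) pairs for the next layer.
    """
    pending = sorted(requests)
    queue = []  # heap of (priority, arrival, leave_instant)
    served, forwarded = [], []
    free_at, i = 0.0, 0
    while i < len(pending) or queue:
        # Next decision instant: server release, or next arrival if idle.
        now = free_at if queue else max(free_at, pending[i][0])
        while i < len(pending) and pending[i][0] <= now:
            arrival, prio = pending[i]
            heapq.heappush(queue, (prio, arrival, arrival + stay_time(rng)))
            i += 1
        while queue:
            prio, arrival, leave = heapq.heappop(queue)
            if leave < now:  # the device left the area before service began
                forwarded.append((leave, prio))
                continue
            free_at = now + rng.expovariate(1.0 / service_mean)
            served.append((prio, free_at - arrival))
            break
    return served, forwarded


def simulate_fog(rate, n_layers=3, n_priorities=3, service_mean=0.2,
                 radius=100.0, speed=10.0, horizon=2000.0, seed=1):
    rng = random.Random(seed)

    def stay_time(r):
        offset = radius * math.sqrt(r.random())
        return 2.0 * math.sqrt(max(0.0, radius ** 2 - offset ** 2)) / speed

    t, requests = 0.0, []
    while True:
        t += rng.expovariate(rate)
        if t > horizon:
            break
        requests.append((t, rng.randrange(n_priorities)))  # equal shares per priority
    offered = len(requests)
    served_per_layer = []
    for _ in range(n_layers):
        served, requests = serve_fog_layer(requests, service_mean, stay_time, rng)
        served_per_layer.append(served)
    return {"offered": offered, "served": served_per_layer, "unserved": len(requests)}


if __name__ == "__main__":
    result = simulate_fog(rate=6.0)
    for layer, served in enumerate(result["served"], start=1):
        counts = [sum(1 for p, _ in served if p == k) for k in range(3)]
        print("layer", layer, "served per priority:", counts)
    print("unserved:", result["unserved"])
```

Running the sketch with an overloaded first layer shows the intended separation: the first layer mostly serves the highest priority, and lower priorities drift to the following layers.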

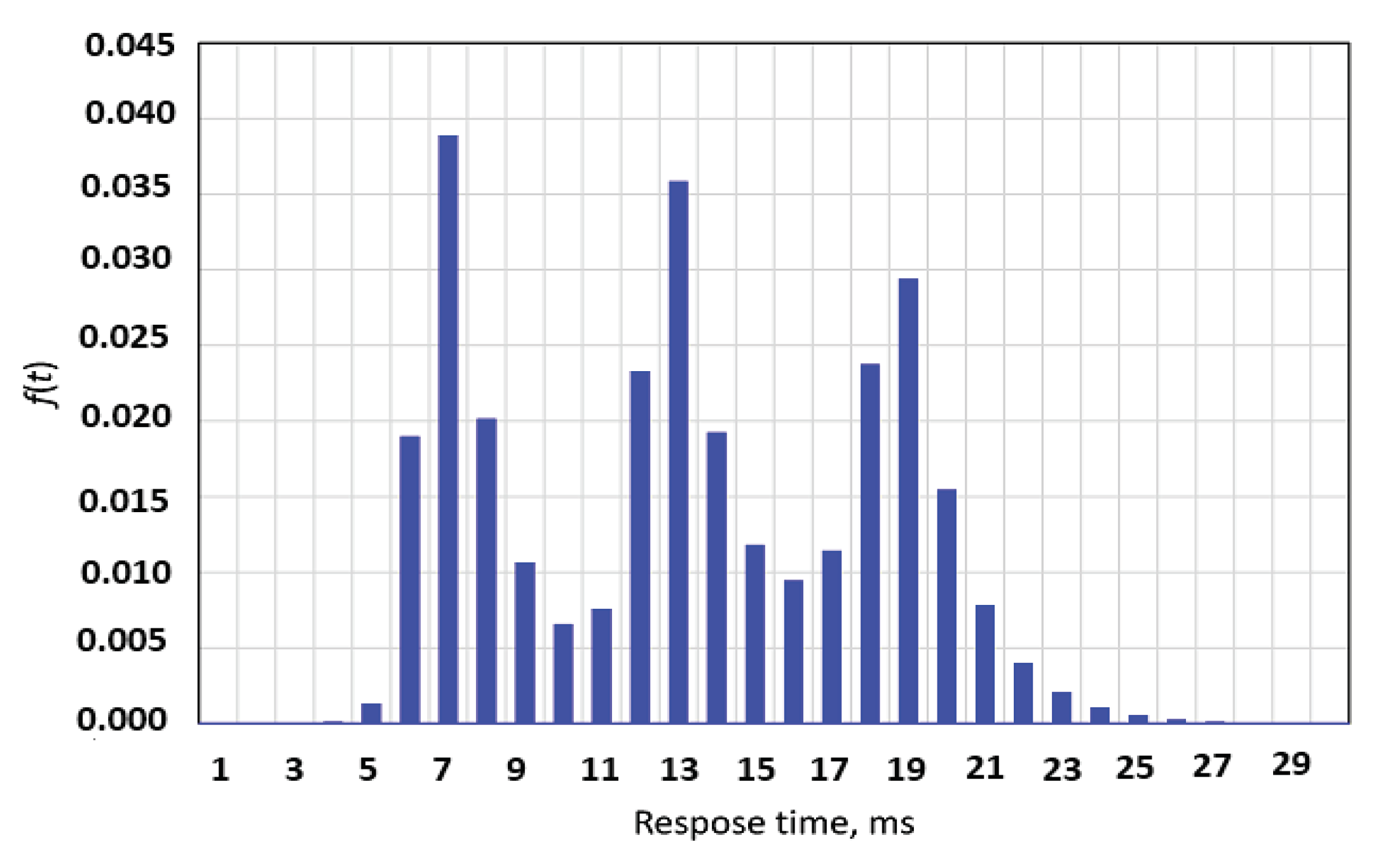

The response time distribution obtained by the simulation model with three priorities for requests and three layers is shown in Figure 11.

Response time distribution is a multimodal distribution with a number of modes corresponding to the number of layers. This is an average response time for all priorities.

The system parameters in this example ensure that more than 80% of the first-priority requests are served by the first layer, more than 80% of the second-priority requests by the second layer, and likewise for the third layer. By choosing the number of layers, we can provide the necessary quality of service for requests of different priorities. The performance of one layer depends on the access network throughput and on the server (cloud) performance. These can be changed by using the necessary number of frequency channels or servers, respectively.

The response time increases with the traffic rate. At a low traffic rate, the first layer can serve all requests. As the traffic rate increases, the difference between the response times of the different priorities increases too. In practice, the high-traffic mode is more important. If each layer is a vehicle equipped with an access point and a server, the number of layers can be changed dynamically: a layer can be added when necessary by moving a vehicle to the corresponding point.

The Fog environment service model considered above describes the motion of the Fog relative to the base stations, but with the same model, we can describe a general case where the Fog and base stations are mobile. In this case, we have to choose speed and direction parameters as a vector sum for all elements of the system.
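When both the Fog and a base station move, the speed that enters the stay-time model is the speed of the Fog relative to the station. The following small sketch assumes planar motion and two-component velocity tuples; the function name is hypothetical.

```python
import math


def relative_speed(fog_velocity, station_velocity):
    """Speed of the Fog as seen from a moving base station.

    Both arguments are (vx, vy) tuples in meters per second. The relative
    velocity is the Fog velocity plus the negated station velocity.
    """
    dx = fog_velocity[0] - station_velocity[0]
    dy = fog_velocity[1] - station_velocity[1]
    return math.hypot(dx, dy)


if __name__ == "__main__":
    # A station following the Fog at 4 m/s sees a 10 m/s flow as 6 m/s.
    print(relative_speed((10.0, 0.0), (4.0, 0.0)))
```

A station that moves along with the Fog therefore lengthens the stay time of the devices in its area, while a station moving against the flow shortens it.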

The impact of the traffic on the response time is shown in Figure 12.

5. Conclusions

In conclusion, MEC technology is a good approach to increasing the quality of service in a local area, but a small service area can lead to unstable traffic and low utilization of equipment. This case is most relevant for VANET networks, in which the number of users (car flow) is unstable and highly time-dependent. To improve the quality of MEC solutions, we propose a method that improves the utilization of equipment by implementing a multilayer system of clouds. This structure allows local (first-layer) MEC clouds to operate at high equipment utilization, while the next-layer cloud prevents quality degradation in the lower-layer cloud. Several lower-layer clouds can be connected to one upper-layer cloud. The upper-layer cloud can serve only the excess traffic coming from the lower layer, or also additional traffic coming from any area. Base stations with different antenna technologies can help realize this method. In theory, several layers can be used; the main idea is that the upper layer serves the excess traffic of the lower layer. Modeling of the proposed method shows that it can significantly improve the utilization of resources with no quality degradation in the case of stationary traffic. In the case of non-stationary traffic, the efficiency of this method may increase. Additionally, using the MEC approach for the Fog environment allows the service system to be implemented easily with a number of mobile stations placed on vehicles. A group of such stations can be considered a multilayer service system, in which a number of priorities must be used for the different devices in the Fog. The Fog environment model given above may be useful for estimating the parameters of the system.

In this paper, we considered VANET and Fog environments as two important applications of MEC technology for future networks. The models proposed in the paper allow the choice of the parameters of the service system. The main idea of the proposed methods involves increasing the utilization of the resources by using a number of layers, which may be dynamically changed if it is necessary.

We presume that future work in this direction will involve descriptions of non-stationary traffic and the method of the resource distribution by service layers.