Enhancing Preservice Teachers’ Key Competencies for Promoting Sustainability in a University Statistics Course

Abstract

1. Introduction

The study addresses three research questions:

- What statistical knowledge do preservice teachers have?
  - What is the self-perceived degree of statistical knowledge?
  - What is the measured degree of statistical knowledge?
  - What is the relationship between the self-perceived, premeasured, and post-measured degrees of statistical knowledge?
- How effective is the project with statistical investigation in terms of enhancing preservice teachers’ statistical knowledge?
  - What is the degree of increase in statistical knowledge resulting from the statistical investigation project?
  - How did the increase in statistical knowledge occur?
- How did the project support preservice teachers’ development of statistical thinking?
  - How were preservice teachers engaged in groupwork presentations?
  - How did the project support preservice teachers in making data-driven decisions?

2. Literature Review

2.1. Statistical Knowledge and Statistical Thinking

2.2. Teacher Education with Statistical Investigation Projects and Sustainability

- Collaboration competency: the abilities to learn from others; to understand and respect the needs, perspectives, and actions of others (empathy); to understand, relate to, and be sensitive to others (empathic leadership); to deal with conflicts in a group; and to facilitate collaborative and participatory problem solving.

3. Materials and Methods

3.1. Participants

3.2. The Statistical Investigation Project

3.3. The Pre- and Posttest Sheet

3.4. Data Analyses for Each Research Question

3.4.1. Research Question 1

3.4.2. Research Question 2

3.4.3. Research Question 3

4. Results

4.1. What Statistical Knowledge Do Preservice Teachers Have?

4.1.1. What Is the Self-Perceived Degree of Statistical Knowledge?

4.1.2. What Is the Measured Degree of Statistical Knowledge?

4.1.3. What Is the Relationship between the Self-Perceived, Premeasured, and Post-Measured Degrees of Statistical Knowledge?

4.2. How Effective Is the Project with Statistical Investigation in Terms of Enhancing Preservice Teachers’ Statistical Knowledge?

4.2.1. What Is the Degree of Increase in Statistical Knowledge Because of the Statistical Investigation Project?

4.2.2. How Did the Increase in Statistical Knowledge Occur?

Topic 1. Meaning of Population and Sample

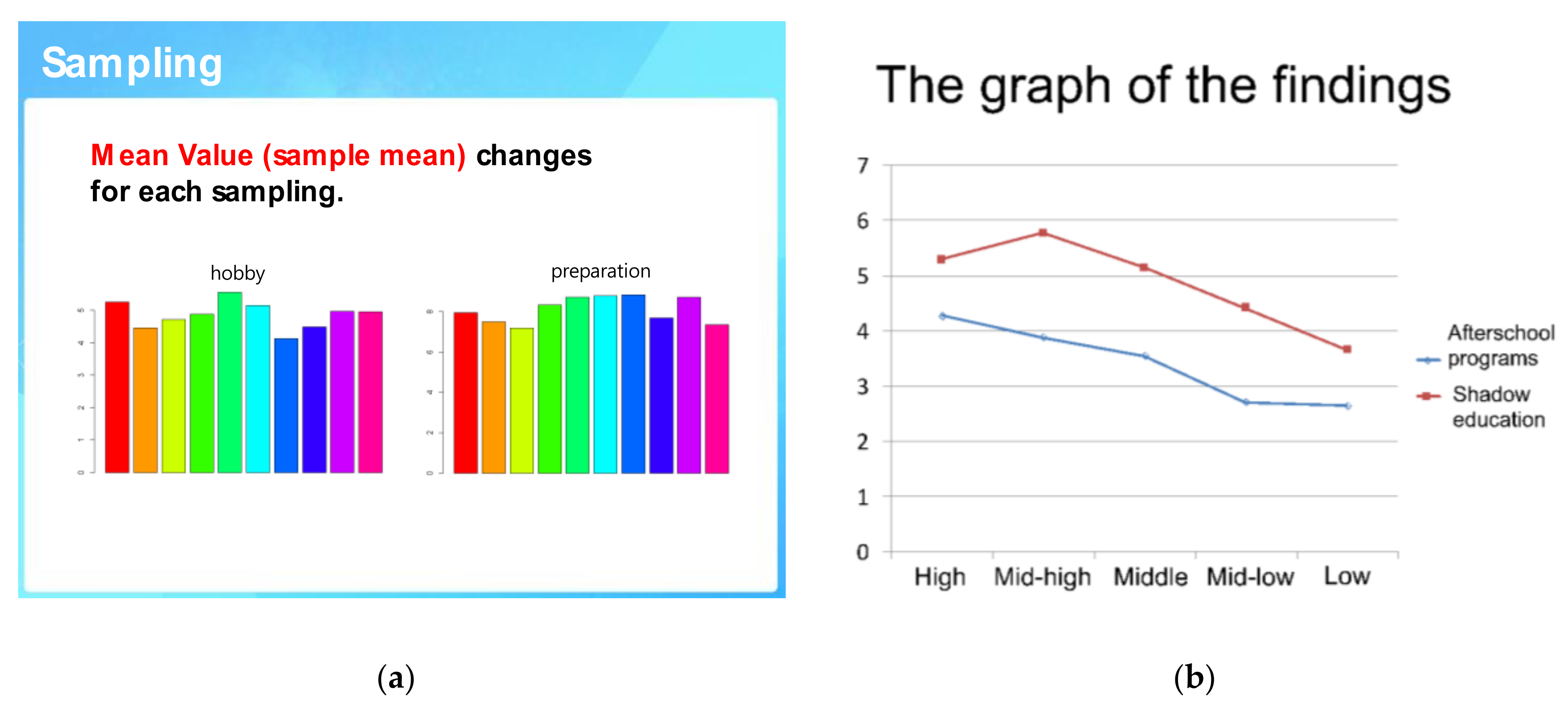

Topic 2. Sampling

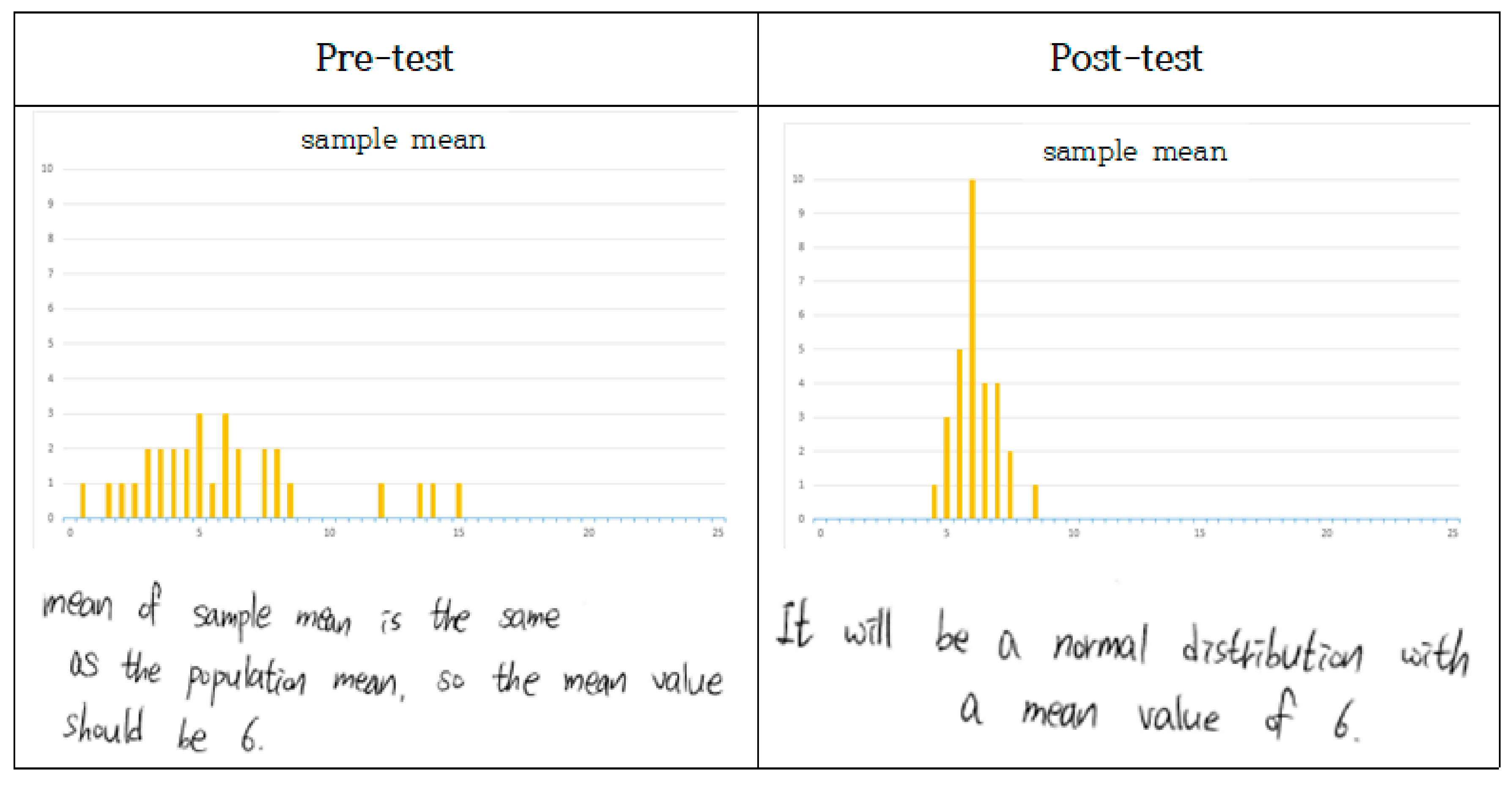

Topic 3. Population Means and Sample Means

Topic 4. Estimating the Population Mean and Its Interpretation
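Topic 4 concerns estimating a population mean and interpreting the estimate. As an illustration only, the sketch below uses the known-σ z-interval x̄ ± 1.96σ/√n, a common textbook form; the population mean (50), σ (10), sample size (25), and sample values are hypothetical and are not taken from the study's test items. The simulation shows the repeated-sampling reading of "95%": about 95% of intervals built this way contain μ, which is not the same as saying that one particular computed interval contains μ with probability 0.95.

```python
import math
import random

Z_95 = 1.96  # two-sided 95% critical value of the standard normal distribution


def mean_ci_known_sigma(sample, sigma, z=Z_95):
    """z-interval for the population mean when sigma is known (illustrative)."""
    n = len(sample)
    xbar = sum(sample) / n
    half_width = z * sigma / math.sqrt(n)
    return xbar - half_width, xbar + half_width


def coverage(pop_mean, sigma, n, trials, seed=0):
    """Share of intervals, over repeated samples of size n, that contain pop_mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Draw one hypothetical sample from a normal population.
        sample = [rng.gauss(pop_mean, sigma) for _ in range(n)]
        low, high = mean_ci_known_sigma(sample, sigma)
        if low <= pop_mean <= high:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # Hypothetical sample of four scores with an assumed sigma of 10.
    print(mean_ci_known_sigma([48, 52, 55, 45], sigma=10))
    # Long-run proportion of intervals containing the true mean (near 0.95).
    print(coverage(50, 10, 25, trials=10_000))
```

Reporting the interval together with the coverage simulation makes the distinction explicit: the randomness lies in the interval endpoints, not in the fixed population mean.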

4.3. How Did the Project Support Preservice Teachers’ Development of Statistical Thinking?

4.3.1. How Were Preservice Teachers Engaged in Groupwork Presentations?

4.3.2. How Did the Project Support Preservice Teachers in Making Data-Driven Decisions?

- Before getting the results, I had originally anticipated that the primary purpose (of shadow education) would be supplementing and deepening lessons at school. In fact, it was preparation for entrance to the next level of the schooling system. I am certain that, for this purpose, shadow education includes consultation on the statement of purpose, training for on-site interviews, etc. Such services are expensive, yet they last only a short period. During the project, I did not consider time as a variable, which might be the reason (for the discrepancy between my anticipation and the results from the data analysis).

5. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Session Number | Activity |
|---|---|
| 1 | |
| 2 | |
| 3 | |
| 4 | |
| 5 | |
| 6 | |

| Statistics | Topic 1 Sel (6) | Topic 1 Pre (6) | Topic 1 Pos (6) | Topic 2 Sel (6) | Topic 2 Pre (5) | Topic 2 Pos (5) | Topic 3 Sel (6) | Topic 3 Pre (5) | Topic 3 Pos (5) | Topic 4 Sel (6) | Topic 4 Pre (10) | Topic 4 Pos (10) | Mean of Topics 1–4 Sel (6) | Mean of Topics 1–4 Pre (6) | Mean of Topics 1–4 Pos (6) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Min. | 5 | 3 | 4 | 4 | 2 | 3 | 5 | 1 | 2 | 4 | 0 | 1 | | | |
| Med. | 5.5 | 5 | 5 | 5 | 5 | 5 | 5.5 | 3 | 3 | 5 | 7 | 8 | | | |
| IQR | 1 | 1 | 1 | 1.5 | 1 | 1 | 1.25 | 2 | 2 | 1.33 | 4 | 2 | | | |
| Max. | 6 | 6 | 6 | 6 | 5 | 5 | 6 | 5 | 4 | 6 | 9 | 9 | | | |
| Mean | 5.91 | 4.87 | 5.22 | 5.36 | 4.35 | 4.57 | 5.68 | 2.91 | 3.04 | 5.37 | 6.17 | 7.35 | 5.58 | 4.32 | 4.69 |
| M. Mean | 5.91 | 4.87 | 5.22 | 5.36 | 5.22 | 5.48 | 5.68 | 3.49 | 3.65 | 5.37 | 3.70 | 4.41 | | | |
| SD | 0.294 | 0.757 | 0.671 | 0.658 | 0.982 | 0.662 | 0.395 | 1.04 | 0.928 | 0.619 | 2.76 | 1.95 | | | |

Sel = self-perceived; Pre = pretest; Pos = posttest. The number in parentheses is the maximum possible score.
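The "M. Mean" row appears to rescale each topic's mean to the 6-point maximum used for the self-perceived ratings (M. Mean = Mean × 6 / maximum score), and the "Mean of Topics 1–4" columns appear to average these rescaled means. This is an inference from the reported numbers rather than a formula stated in the text. The self-perceived ratings already use a 6-point scale, so their raw and rescaled means coincide, and (5.91 + 5.36 + 5.68 + 5.37) / 4 = 5.58 matches the table. The sketch below reproduces the pre- and posttest values under that assumption; the helper names are illustrative.

```python
# Maximum possible score of each topic on the pre/posttest (from the table header).
MAX_SCORE = {"Topic 1": 6, "Topic 2": 5, "Topic 3": 5, "Topic 4": 10}

# (pretest mean, posttest mean) copied from the "Mean" row of the table.
RAW_MEANS = {
    "Topic 1": (4.87, 5.22),
    "Topic 2": (4.35, 4.57),
    "Topic 3": (2.91, 3.04),
    "Topic 4": (6.17, 7.35),
}

TARGET_MAX = 6  # common scale shared with the self-perceived ratings (assumed)


def rescale(mean, max_score, target_max=TARGET_MAX):
    """Map a mean on a 0..max_score scale onto a 0..target_max scale."""
    return mean * target_max / max_score


def rescaled_means():
    """Rescaled (pre, post) means per topic, rounded to two decimals as in the table."""
    return {
        topic: tuple(round(rescale(m, MAX_SCORE[topic]), 2) for m in pair)
        for topic, pair in RAW_MEANS.items()
    }


def mean_of_topics(rows):
    """Average the rescaled pre and post means across the four topics."""
    pres = [pre for pre, _ in rows.values()]
    posts = [post for _, post in rows.values()]
    return round(sum(pres) / len(pres), 2), round(sum(posts) / len(posts), 2)


if __name__ == "__main__":
    rows = rescaled_means()
    print(rows)
    print(mean_of_topics(rows))
```

Averaging the rounded rescaled means, as the table's M. Mean row reports them, gives 4.32 (pretest) and 4.69 (posttest), the values shown for the Mean of Topics 1–4.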

| Topic | Variables | Self-Perceived | Post |
|---|---|---|---|
| Mean of Topics 1–4 | Self-perceived | 1 | −0.065 |
| | Pre | −0.051 | 0.400 |
| Topic 1 | Self-perceived | 1 | −0.117 |
| | Pre | 0.177 | 0.055 |
| Topic 2 | Self-perceived | 1 | −0.079 |
| | Pre | 0.295 | 0.519 * |
| Topic 3 | Self-perceived | 1 | −0.244 |
| | Pre | −0.441 * | 0.450 * |
| Topic 4 | Self-perceived | 1 | 0.377 |
| | Pre | 0.388 | 0.561 ** |
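The stars in the correlation table can be checked with the usual t test for a correlation coefficient, t = r√df / √(1 − r²) with df = n − 2. The sketch below assumes n = 23 participants (the pre- and posttest means in the descriptive table are all consistent with 23 scores, but the Participants text is not part of this version) and the common convention * p < 0.05, ** p < 0.01, two-tailed. Under these assumptions the cutoffs are |r| ≈ 0.413 and |r| ≈ 0.526, which reproduce every marker in the table. The critical t values are standard t-table entries for df = 21; the function names are illustrative.

```python
import math

N = 23             # assumed number of participants (inferred, see lead-in)
DF = N - 2         # degrees of freedom for testing a correlation coefficient
T_CRIT_05 = 2.080  # two-tailed critical t, alpha = 0.05, df = 21 (t-table value)
T_CRIT_01 = 2.831  # two-tailed critical t, alpha = 0.01, df = 21 (t-table value)


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, df=DF):
    """t statistic for H0: rho = 0."""
    return r * math.sqrt(df) / math.sqrt(1 - r * r)


def critical_r(t_crit, df=DF):
    """Smallest |r| whose t statistic reaches t_crit."""
    return t_crit / math.sqrt(t_crit ** 2 + df)


def stars(r):
    """Significance marker: '**' for p < .01, '*' for p < .05, '' otherwise."""
    t = abs(t_from_r(r))
    if t >= T_CRIT_01:
        return "**"
    if t >= T_CRIT_05:
        return "*"
    return ""


if __name__ == "__main__":
    for r in (0.400, 0.519, -0.441, 0.561):
        print(r, stars(r))
    print(round(critical_r(T_CRIT_05), 3), round(critical_r(T_CRIT_01), 3))
```

With raw paired scores, `pearson_r` would give the coefficient itself; here only the reported coefficients are available, so the sketch checks the markers rather than recomputing r.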

| t-Test | Mean of Topics 1–4 | Topic 1 | Topic 2 | Topic 3 | Topic 4 |
|---|---|---|---|---|---|
| Pre score | 4.32 | 4.87 | 4.35 | 2.91 | 6.17 |
| Post score | 4.69 | 5.22 | 4.57 | 3.04 | 7.35 |
| t-value | −3.147 | 1.734 | 1.186 | 0.504 | −2.526 |
| p-value | 0.005 * | 0.083 | 0.236 | 0.614 | 0.019 * |
| Cohen’s d | −0.67 | −0.49 | −0.26 | −0.13 | −0.49 |
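The Cohen's d values for Topics 1–4 can be reproduced from the descriptive-statistics table if d is taken as the pretest mean minus the posttest mean, divided by the pooled SD for two equal-sized groups, √((SD_pre² + SD_post²) / 2). That standardizer is an inference from the reported numbers, not a formula stated in the text, and it ignores the correlation between each participant's pre- and posttest scores (a paired alternative, d_z, divides the mean difference by the SD of the differences). The value for the Mean of Topics 1–4 (−0.67) cannot be checked this way because its SDs are not reported.

```python
import math


def cohens_d(mean_pre, sd_pre, mean_post, sd_post):
    """Cohen's d using the pooled SD of two equal-sized groups.

    Negative when the posttest mean exceeds the pretest mean, matching the
    sign convention of the table.
    """
    pooled_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_pre - mean_post) / pooled_sd


# (pre mean, pre SD, post mean, post SD) copied from the descriptive-statistics table.
TOPICS = {
    "Topic 1": (4.87, 0.757, 5.22, 0.671),
    "Topic 2": (4.35, 0.982, 4.57, 0.662),
    "Topic 3": (2.91, 1.04, 3.04, 0.928),
    "Topic 4": (6.17, 2.76, 7.35, 1.95),
}

if __name__ == "__main__":
    for topic, stats in TOPICS.items():
        print(topic, round(cohens_d(*stats), 2))
```

By Cohen's conventional benchmarks (about 0.2 small, 0.5 medium, 0.8 large), Topics 1 and 4 sit near a medium effect and Topic 3 below a small one.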


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Suh, H.; Kim, S.; Hwang, S.; Han, S. Enhancing Preservice Teachers’ Key Competencies for Promoting Sustainability in a University Statistics Course. Sustainability 2020, 12, 9051. https://doi.org/10.3390/su12219051


Chicago/Turabian Style: Suh, Heejoo, Sohyung Kim, Seonyoung Hwang, and Sunyoung Han. 2020. "Enhancing Preservice Teachers’ Key Competencies for Promoting Sustainability in a University Statistics Course" Sustainability 12, no. 21: 9051. https://doi.org/10.3390/su12219051
