Abstract

The enormous volume and widely varying quality of available reviews make it difficult to find the most helpful reviews. While the Naive Bayesian Network (NBN) is one of the mature artificial intelligence approaches for business decision support, NBN has rarely been used to predict the helpfulness of online reviews. This study proposes HPNBN (a helpfulness prediction model using NBN), which adopts NBN for helpfulness prediction. The sample data were crawled from the Amazon website, and 8699 reviews comprise the final sample. Twenty-one predictors represent reviewer and textual traits as well as product traits of the reviews. We investigate how the expanded list of predictors, including product, reviewer, and textual characteristics of eWOM (online word-of-mouth), affects helpfulness by presenting conditional probabilities of the binned determinants. The prediction accuracy of NBN exceeded that of the k-nearest neighbor (kNN) method and the neural network (NN) model. The results of this study can support determining helpfulness and designing websites that encourage helpful reviews. This study will help decision-makers predict the helpfulness of the review comments posted to their websites and manage more effective customer satisfaction strategies. When prospective customers perceive reviews as helpful, they will have a stronger intention to visit the target website regularly.

1. Introduction

eWOM (online word-of-mouth) plays an increasingly important role in how consumers obtain information for purchase decisions, and, as one form of WOM communication, reviews represent the bulk of user-generated content [1]. One of the critical challenges for market-driven product design that satisfies customer needs is to analyze vast amounts of online reviews and identify helpful reviews accurately [2]. Online reviews are described as “peer-generated product evaluations posted on company or third party websites” [3] or “a type of product information created by users based on personal usage experience” [4]. Online reviews increase consumer awareness and represent a credible source of information regarding product and service quality [5]. They can help manufacturers predict consumer reactions to their products in order to improve them [6].

Recently, researchers have shown that customers, in creating online reviews, are under social influence from others [7,8]. The importance of explaining or predicting review helpfulness can be understood from its role in shaping customers’ behaviors. Review helpfulness can be treated as promoting purchasing behavior [9]. Consumers’ intention to purchase online is greatly influenced by perceived review usefulness [10]. The usefulness of eWOM information has a significant influence on customer trust [10]. Consumers place greater trust in review content if reviews are valuable in satisfying their information needs [11], which makes a rigorous analytics method crucial for predicting online review helpfulness. Thus, the so-called “social navigation” community-based voting technique [12] is widely adopted to support readers in sorting out useful reviews, especially for products with a huge number of online reviews. Online reviews can bring diagnostic value, representing their helpfulness at several stages of the purchase decision process. Major websites like Amazon.com present a “Most Helpful First” option that sorts reviews along the “usefulness” dimension, using the count of readers who responded affirmatively to the question asking whether the review is useful.

This study has the following objectives. First, this study examines the previous literature on predicting helpfulness [13,14,15,16], offers an expanded list of predictors, including product, reviewer, and textual characteristics, in order to explain helpfulness, and, through NBN, indicates the importance of each determinant using the conditional probabilities of the binned determinants. This may be especially insightful because review helpfulness can be affected by product, reviewer, and textual traits. If, for class 1 of the helpfulness class, the conditional probability of the bin with the greater value exceeds that of the other bins and also exceeds the probability of the same bin for class 0, this shows that the determinant positively affects helpfulness. Further, by comparing the conditional probabilities of different determinants, we can indicate which determinant has a greater effect on helpfulness than the others. This will improve understanding of the impact of product, reviewer, and textual traits on online review usefulness in a consumer-oriented mechanism where reviews are highlighted according to their expected helpfulness.

Second, while there are several studies using business intelligence (BI) in online reviews, studies using NBN to predict helpfulness are rare. This study intends to fill this gap by proposing and validating a data mining model that uses NBN to determine the helpfulness of reviews. Because of its simplicity and high computational efficiency, the Naive Bayesian Network (NBN) is a machine learning tool that provides high prediction performance and is especially appropriate for samples with high dimensions. NBN is a data-driven machine learning algorithm that performs well on noisy datasets. NBN is simple and computationally efficient because it is a nonparametric method: it does not require assumptions about the distribution of the data, nor does it estimate or optimize parameters or weights as parametric methods such as regression or neural networks do. Given that an enormous number of review records must be processed, this study focuses on investigating the performance of NBN by comparing it with other methodologies, building on studies of the factors affecting helpfulness [3,14,15,16,17,18]. NBN is well suited to predicting helpfulness because the application of a Naive Bayesian network requires less complexity and fewer computational resources than other methods, given the large volume of review data to be processed.

The sample data crawled from Amazon.com are used to predict review helpfulness based on the traits of product, reviewer, and text. The resulting model is called HPNBN (a helpfulness prediction model using NBN), in which review helpfulness is transformed into a categorical target variable representing a high or low value. Our study enables the automatic analysis of review content and improves the ability of BI to identify targeted customer opinions. In order to validate our model, the predictive performance of NBN is contrasted with that of multivariate discriminant analysis (MDA), k-nearest neighbor (kNN), and the neural network (NN) method in terms of prediction error.

2. Research Background

Online review classification has become a critical task because of the growing availability of online reviews, driven by the advent of big data analytics technologies, and the increasing need to access them in order to inform marketing strategy. In the past several years, many text classification methods based on machine learning and statistical theory have been suggested to overcome information overload problems in predicting the helpfulness of reviews. An enormous number of product reviews on online sites compete for customers’ attention. Data analytics becomes much more necessary as it becomes crucial for website management to select helpful reviews and improve review helpfulness, both to lower the likelihood that consumers stop visiting their websites and to improve the attractiveness of those websites [9]. While researchers have suggested effective solutions in the field of text categorization [19], studies on data mining for determining online review helpfulness need to be expanded in order to provide coherent and cohesive insights [20].

Recent studies using data mining techniques in various online product review analyses include applying data mining models to predict review helpfulness [16,17,21,22,23]. Various BI methods are employed such as k-nearest neighbors (kNN) [24], inductive learning based on rough set theory [25], ordinal logistic regression analysis [21], backpropagation neural networks [26], a neural deep learning model [27,28,29,30], support vector regression [31], and content analysis to assess text comments using logistic regression [18].

Although many studies use BI in online reviews, studies using NBN to predict helpfulness are scarce. Adopting NBN for helpfulness prediction is worthwhile because the application of a Naive Bayesian network demands less complexity and fewer computational resources than other methods.

3. Determinants of Helpfulness

Quality assessment becomes crucial as a great number of reviews of differing quality are posted every day [32]. Helpfulness can be described as the ratio of positive votes to the total votes a review receives [33]. As helpfulness is often treated as a binary measure according to whether the original percentage is greater than a threshold value [20,23], our study treats helpfulness as a binary value, “helpful” or “unhelpful”, after applying a threshold of 0.5, which is a widely adopted threshold in the previous literature [21,24]: when helpfulness is greater than 0.5, the review is “helpful”; otherwise, the review is “unhelpful”.
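The thresholding rule described above can be illustrated with a minimal sketch. The function name `helpfulness_label` and its parameter names are hypothetical and are not taken from the study; the only elements grounded in the text are the vote ratio and the 0.5 threshold, and the handling of reviews without votes is an assumption.

```python
def helpfulness_label(helpful_votes: int, total_votes: int, threshold: float = 0.5) -> int:
    """Return 1 ("helpful") if the share of positive votes exceeds the threshold, else 0."""
    if total_votes <= 0:
        # Assumption: a review without votes has no defined helpfulness ratio.
        raise ValueError("a review needs at least one vote to have a helpfulness ratio")
    ratio = helpful_votes / total_votes  # positive votes out of total votes
    return 1 if ratio > threshold else 0  # strictly greater than 0.5 means "helpful"


# Illustrative vote counts only, not values from the sample.
print(helpfulness_label(7, 10))
print(helpfulness_label(5, 10))
```

Because the rule uses a strict inequality, a review with exactly half of its votes positive falls into the “unhelpful” class in this sketch.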

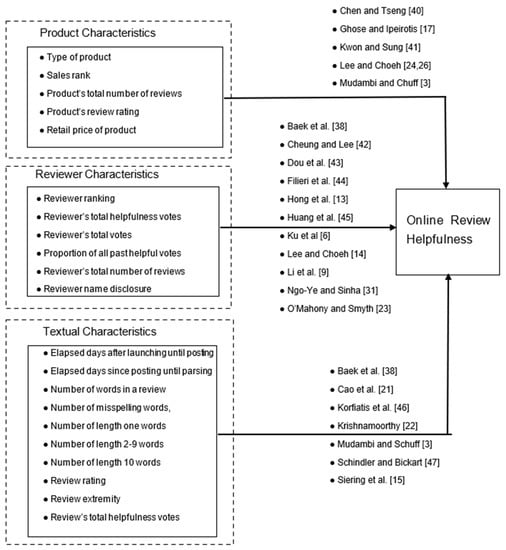

While there are a large number of studies on review helpfulness prediction, the rationale for the specific selection of features is still vague and needs further research [34,35,36,37]. As this study intends to encompass various aspects of reviews beyond text, it categorizes the factors affecting review usefulness according to Baek et al. [38], Filieri [11], Filieri et al. [39], Ghose and Ipeirotis [17], and Lee and Cheoh [26]. Our study classifies the determinants of helpfulness as product, reviewer, and text-related features, as shown in Figure 1, which also denotes the specific previous studies supporting their effects.

Figure 1.

The model of determinants for review helpfulness (See [3,6,9,13,14,15,17,21,22,23,24,26,31,38,40,41,42,43,44,45,46,47]).

For product traits, the review usefulness of a specific product is affected by the review activity level of that product [17,26,41]. Chen and Tseng [40] discovered that reviews commenting on multiple features of a product turned out to be high-quality reviews. Consumers demand different information to support purchase decisions on search or experience goods [3]. For a search good, it is easier for customers to obtain objective and comparable information about product quality before purchasing. An experience good has subjective attributes that are hard to compare, which makes it difficult to obtain information regarding product quality before purchasing.

Based on the previous literature, which covers product, reviewer, and textual characteristics separately, this study integrates these previous models and proposes a model of determinants for review helpfulness, classified into product, reviewer, and text as in Figure 1. The product data encompass (1) type of product (experience goods = 1, search goods = 0), (2) sales rank, (3) product’s total number of reviews, (4) product’s review rating, and (5) retail price of product.

For reviewer traits, reviewer rank, disclosure of reviewer name, visibility of source, expertise, and trustworthiness affect individuals’ perception of product, message, and reviewer [9,14,43,44,45]. O’Mahony and Smyth [23] suggest the categories of the features for helpfulness as user reputation, social, and sentiment. The authorship of a product review is positively associated with review persuasiveness [9]. A reviewer’s reputation, represented by reviewer helpfulness, is a crucial intrinsic quality for review helpfulness [48] and is directly related to review trustfulness [6]. Consumers are encouraged to post reviews in order to maintain their reputation. Reviewer ranking is crucial for the review helpfulness of experience goods [38]. A meta-analysis of the eWOM literature found the reviewer’s expertise and disclosure of reviewer name to be important predictors of helpfulness [13]. Reviewer engagement features include reputation, commitment, and current activity (the total number of reviews by the reviewer) [31].

Reviewer attributes also have an effect on consumer perceptions of reviews [18]. The reviewer traits include (6) reviewer ranking, (7) reviewer’s total helpfulness votes, (8) reviewer’s total votes, (9) proportion of all past helpful votes (among the total count of votes) which is the reviewer history macro, (10) reviewer’s total number of reviews, and (11) reviewer name disclosure.

For textual traits, review depth and review rating are important determinants to helpfulness [46]. The review content-related signals are the most important predictors for helpfulness [15,22,46]. Data filtering with sentiment analysis is likely to increase the evaluation performance in determining useful reviews [49]. Reviews with extreme arguments tend to be considered as more helpful [21]. Review depth (the number of arguments) is positively related to agreements by providing readers more to think about [38], and can improve diagnosticity and helpfulness [3] and perceived value of the reviews. The linguistic style cues (such as counts of wh-words like where and which, sentences, and words) exert a positive influence on helpfulness.

The textual traits represent the stylistic traits which can have an effect on helpfulness [17,21]. The textual traits include (12) elapsed days after launching until posting, (13) elapsed days since posting until parsing, (14) number of words in a review, (15) number of misspelt words, (16) number of one letter long words, (17) number of words 2–9 letters long, (18) number of words 10+ letters long, (19) review rating, (20) review extremity indicating the absolute deviation of the reviewers’ ratings from the average ratings, and (21) review’s total helpfulness votes.
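Several of the textual predictors listed above are simple counts, so they can be illustrated with a short sketch. The tokenization rule (runs of letters and apostrophes) and the function names `word_length_features` and `review_extremity` are assumptions made for illustration; the study does not state how words were tokenized.

```python
import re


def word_length_features(text: str) -> dict:
    """Count words by length band, mirroring predictors (14) and (16)-(18)."""
    # Assumption: a "word" is a run of letters or apostrophes.
    words = re.findall(r"[A-Za-z']+", text)
    lengths = [len(w) for w in words]
    return {
        "n_words": len(words),
        "n_one_letter": sum(1 for n in lengths if n == 1),
        "n_2_to_9": sum(1 for n in lengths if 2 <= n <= 9),
        "n_10_plus": sum(1 for n in lengths if n >= 10),
    }


def review_extremity(review_rating: float, average_rating: float) -> float:
    """Absolute deviation of a review's rating from the average rating (predictor 20)."""
    return abs(review_rating - average_rating)


# Illustrative inputs only, not records from the sample.
print(word_length_features("I bought a surprisingly good camera"))
print(review_extremity(1, 4.2))
```

Counting misspelt words (predictor 15) would additionally require a dictionary or spell checker, which is left out of this sketch.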

We indicate how product, reviewer, and textual characteristics of eWOM affect helpfulness by presenting the conditional probabilities of the variables. If, for class 1 of the helpfulness class, the conditional probability of the bin with the greater value is greater, this shows that the determinant positively affects helpfulness. For instance, the last bin of the product’s review rating has the greatest probability among all bins for class 1 of the helpfulness class, and this probability is greater than the corresponding probability for class 0, which shows that the product’s review rating positively affects helpfulness. Further, by comparing the conditional probabilities of different determinants, we can infer which determinant has a greater effect on helpfulness than the others.

4. Naive Bayesian Network (NBN) in This Study

The Bayes classifier is based on three principles [50]: (1) Find the other cases whose predictor values are identical to those of the new case; (2) Determine which classes they belong to and which class is the majority class; (3) Take that class as the predicted class for the new case. The Bayesian network, which is a directed acyclic graph, is widely used where training time is linear in both the number of instances and the number of attributes. The nodes and arcs of the Bayesian network are attributes and attribute dependencies, respectively. The conditional probabilities pertaining to each node show the attribute dependencies. The nodes in a Bayesian network are represented by the set of attributes $X_1, X_2, \ldots$, and $X_p$. The top node belonging to a Bayesian network is represented by the class variable $C$, and the value which $C$ has is $c$. The following represents the Bayesian network classifier for a case with attribute values $x_1, x_2, \ldots, x_p$:

$$\hat{c}(x_1, \ldots, x_p) = \arg\max_{c} \; P(c)\, P(x_1, x_2, \ldots, x_p \mid c)$$

The Bayes modification includes the following procedures: (1) For class 1, estimate the individual probabilities that each predictor value in the case to be classified occurs in class 1; (2) Multiply these probabilities by each other, and then by the proportion of cases belonging to class 1; (3) Repeat steps 1 and 2 for all classes; (4) Calculate a probability for class i by taking the value produced in step 2 for class i and dividing it by the sum of such values for all classes; (5) For the set of predictor values, the predicted class is the class with the highest probability value. The Bayes formula for the probability that a case with predictor values $x_1, \ldots, x_p$ has outcome class $C_i$ among $m$ classes is:

$$P(C_i \mid x_1, \ldots, x_p) = \frac{P(x_1, \ldots, x_p \mid C_i)\, P(C_i)}{\sum_{k=1}^{m} P(x_1, \ldots, x_p \mid C_k)\, P(C_k)}$$

Given that all attributes are conditionally independent within each class, the Naive Bayes formula to calculate the probability that a case with a given set of predictor values belongs to class 1 among $m$ classes is as follows:

$$P_{nb}(C_1 \mid x_1, \ldots, x_p) = \frac{P(C_1) \prod_{j=1}^{p} P(x_j \mid C_1)}{\sum_{k=1}^{m} P(C_k) \prod_{j=1}^{p} P(x_j \mid C_k)}$$

Due to its computational simplicity, a Naive Bayes classifier is easy to construct. Its prediction performance is close to that of perceptron networks or decision trees [51]. The assumption of conditional independence leads to the computational efficiency and simplicity of NBN. As the simple form of the Bayesian network classifier, the classifier for Naive Bayes is as follows, where $x_j$ is the value of the $j$-th of $p$ attributes and $c$ ranges over the classes:

$$\hat{c}(x_1, \ldots, x_p) = \arg\max_{c} \; P(c) \prod_{j=1}^{p} P(x_j \mid c)$$
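The five-step Naive Bayes procedure described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names `fit_naive_bayes` and `predict_proba` and the toy data are hypothetical, predictors are assumed to be already categorical (binned), and no smoothing is applied.

```python
from collections import Counter, defaultdict


def fit_naive_bayes(rows, labels):
    """Count class frequencies and, per class, how often each predictor value occurs."""
    class_counts = Counter(labels)
    # value_counts[c][j][v] = number of class-c cases whose predictor j equals v
    value_counts = defaultdict(lambda: defaultdict(Counter))
    for row, c in zip(rows, labels):
        for j, v in enumerate(row):
            value_counts[c][j][v] += 1
    return class_counts, value_counts


def predict_proba(row, class_counts, value_counts):
    """Apply steps 1-4: per-class products of probabilities, then normalization."""
    n = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / n  # proportion of cases belonging to class c
        for j, v in enumerate(row):
            score *= value_counts[c][j][v] / n_c  # estimate of P(x_j | c)
        scores[c] = score
    total = sum(scores.values())
    if total == 0:
        # Without smoothing, an unseen value in every class leaves nothing to normalize.
        raise ValueError("every class has zero probability for this case")
    return {c: s / total for c, s in scores.items()}


# Hypothetical binned predictors (rating bin, name disclosed) and helpfulness classes.
rows = [("high", "yes"), ("high", "no"), ("low", "yes"),
        ("low", "no"), ("high", "no"), ("low", "no")]
labels = [1, 1, 1, 0, 0, 0]

counts, values = fit_naive_bayes(rows, labels)
proba = predict_proba(("high", "no"), counts, values)
predicted_class = max(proba, key=proba.get)  # step 5: class with the highest probability
print(proba, predicted_class)
```

In practice a smoothing correction is often added so that a predictor value never observed in a class does not force that class's probability to zero; the sketch omits it to stay close to the steps as described.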

Although many studies use BI in online reviews, studies adopting NBN to predict helpfulness are scarce. This study addresses this gap by developing and testing a data mining model for predicting the helpfulness of reviews. Given the enormous number of review records to be analyzed, NBN is competitive in predicting helpfulness because it requires less complexity and fewer computational resources than other methods.

Our model based on the Naive Bayesian Network has two advantages. First, it employs crucial determinants of helpfulness, as suggested in Figure 1. Second, it requires no underlying assumptions about the determinants and provides simplicity and computational efficiency by adopting a simple cutoff probability method, which establishes a cutoff probability (mostly 0.5) for the helpfulness class. While the conditional independence assumption results in biased posterior probabilities, it is easy to build a Naive Bayes classifier because of the simplicity of computing the probability that a case has outcome class $C_i$. Our model has an important merit in selecting crucial determinants affecting online review helpfulness. To this end, this study crawled sample data from Amazon.com; 8699 reviews comprise the final sample, and 21 predictors represent reviewer and textual traits as well as product traits of the reviews. The sample was crawled in June 2014, and the review dates range from 1998 to 2013 (see Table 1 and Table A1 (Appendix A) for the distributions of sample and variables). All the reviews are in Korean. We crawled the site with the aid of software, namely Python and Beautiful Soup. We include all the records once they were crawled from Amazon.

Table 1.

The sample of the study.

The types of products are shown in Table 1. Among the independent variables, only product type and the disclosure of reviewer name are categorical variables. In order to experiment with the Bayesian classifier, we discretize continuous variables into multiple bins (see last column in Table A1). The number of bins for each variable is determined such that the count of values pertaining to each bin is the same. The value for each bin is given by the mean of the values belonging to that bin. In order to examine the importance of each variable for helpfulness, the mean difference for each of the 21 variables between the helpful group and the non-helpful group is contrasted based on the training sample in Partition ID of 1 (Table 2). Except for the number of words one letter long, all variables show a significant difference between these groups. This study continues to use the number of words one letter long because it is theoretically posited to be an important variable in previous studies such as Cao et al. [21] and Ghose and Ipeirotis [17].
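The equal-frequency binning described above, in which each bin is represented by the mean of its members, can be sketched as follows. The function name `equal_frequency_bins` and the example values are hypothetical, and the treatment of ties and of counts that do not divide evenly is an assumption, since the study does not specify it.

```python
def equal_frequency_bins(values, n_bins):
    """Replace each value with the mean of its equal-frequency bin."""
    n = len(values)
    # Indices of the values in ascending order of value.
    order = sorted(range(n), key=lambda i: values[i])
    binned = [0.0] * n
    for b in range(n_bins):
        # Integer arithmetic keeps bin sizes as equal as possible.
        start = b * n // n_bins
        end = (b + 1) * n // n_bins
        members = order[start:end]
        if not members:
            continue  # more bins than values: this bin stays empty
        bin_mean = sum(values[i] for i in members) / len(members)
        for i in members:
            binned[i] = bin_mean  # the bin's value is the mean of its members
    return binned


# Illustrative values only, e.g. word counts of six reviews.
print(equal_frequency_bins([10, 1, 7, 3, 8, 2], 3))
```

One consequence of splitting purely by rank is that identical values can land in adjacent bins; a production version would need an explicit rule for ties.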

Table 2.

Mean difference between helpfulness classes (based on training sample in Partition ID of 1).

5. Results

To validate our model performance, this study compares the predictive performance of the NBN model with that of MDA, kNN, and NN, which are widely used statistical and artificial intelligence (AI) analytics models for forecasting categorical outcomes. kNN (best k method), like case-based reasoning (CBR), supports experience-based problem solving and learning by retrieving past solutions and adapting them to solve new problems [50]. MDA is chosen as a reference method because it is a highly popular and powerful statistical classification method used for benchmarking, like regression [50,52]. When a linear relationship among variables is assumed, MDA provides computationally fast results when classifying a large number of records. kNN is chosen as a reference method in order to compare the predictive capability of NBN with that of another nonparametric AI method. kNN considers values of k starting from one nearest neighbor and determines the best k, which results in the lowest error rate in the test set. Our study compares NBN with NN because NN is a popular method with high tolerance to noisy data and the ability to capture highly complicated relationships among variables [53], traits that nonparametric methods like kNN or NBN do not provide. NN thus allows NBN to be compared with a parametric method based on optimized network weights. This study uses one hidden layer with the same number of nodes as the input layer.

To investigate the prediction performance of NBN, MDA, kNN, and NN, the study sample is divided into two subsamples, a training sample and a test sample, which are composed of 8449 and 250 records, respectively. n-fold cross validation is used to show the stability of the comparison results. A total of 8699 records are divided into 35 subsets, each of which has 250 records. Each of these subsets is used in turn as a test sample, and the remaining records are used as the training sample (Table 3). That is, to compare the prediction performance of the data mining methods, 35 pairs of training and test samples are built, each having 8449 and 250 records, respectively. Based on each training sample, the BI methods create a prediction result for the target variable in the corresponding test sample. The prediction error is computed as the misclassification rate for the helpfulness class. The misclassification rates are then averaged across the test samples. Further, in order to reduce the possibility of overfitting and collinearity, our study reduces the number of variables to 12 after considering their theoretical significance in relation to helpfulness and their number of bins (variables with a larger number of bins are avoided as they can cause overfitting in NBN): (1) type of product (experience goods = 1, search goods = 0), (2) product’s total number of reviews, (3) product’s review rating, (4) reviewer name disclosure, (5) elapsed days after launching until posting, (6) elapsed days since posting until parsing, (7) number of misspelt words, (8) number of one letter long words, (9) number of words 2–9 letters long, (10) number of words 10+ letters long, (11) review rating, and (12) review extremity indicating the absolute deviation of the reviewers’ ratings from the average ratings. From the 35 test samples, the predictive ability of NBN, MDA, kNN, and NN can be assessed from their misclassification ratios (Table 3).
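The construction of the 35 training and test pairs can be illustrated with a short sketch. The helper names `make_folds` and `train_test_pairs` are hypothetical, and the records are split in their stored order, which is an assumption. Note that 8699 is not an exact multiple of 250, so in this sketch the final subset is smaller than the others; how the study handled the remainder is not stated.

```python
def make_folds(n_records, fold_size):
    """Split record indices into consecutive subsets of at most fold_size records."""
    indices = list(range(n_records))
    return [indices[i:i + fold_size] for i in range(0, n_records, fold_size)]


def train_test_pairs(n_records, fold_size):
    """Yield (training indices, test indices), using each subset once as the test sample."""
    for test in make_folds(n_records, fold_size):
        held_out = set(test)
        train = [i for i in range(n_records) if i not in held_out]
        yield train, test


folds = make_folds(8699, 250)
pairs = list(train_test_pairs(8699, 250))
first_train, first_test = pairs[0]
print(len(folds), len(first_train), len(first_test), len(folds[-1]))
```

Averaging the misclassification rate over all pairs then gives one summary figure per method.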

Table 3.

Comparison of misclassification rate (t-value for NBN-MDA = −0.023, significance = 0.982; t-value for NBN-kNN = −5.319, significance = 0.000, t-value for NBN-NN = −7.244, significance = 0.000).

The prediction of the helpfulness class in the corresponding test sample is produced using each trained NBN, MDA, kNN, and NN model. In order to examine whether there is a significant difference between the errors of NBN and those of the other three models, a paired t-test is applied to the misclassification ratios from the 35 test samples. NBN is comparable to MDA, as the difference is not significant (t = −0.023, p = 0.982). NBN largely outperformed kNN and NN, as the t-values are large and significant (t = −5.319, p < 0.001; t = −7.244, p < 0.001). We used a smaller number (12) of input variables for the comparison of prediction performance, and thus overfitting or multicollinearity due to the number of variables is likely to be a smaller issue for the classification rate. The significant difference in classification rate between NBN and kNN or NN may be due to the binned input variables, which probably lower the prediction performance of kNN or NN.
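The paired t-statistic used for this comparison can be computed directly from the per-fold misclassification rates of two methods. The sketch below uses a hypothetical function name and made-up error rates for four folds purely to show the arithmetic; they are not the values behind Table 3.

```python
import math


def paired_t_statistic(errors_a, errors_b):
    """t-statistic for the mean of paired differences (errors_a minus errors_b)."""
    diffs = [a - b for a, b in zip(errors_a, errors_b)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    # Sample variance of the differences, with n - 1 in the denominator.
    variance = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    standard_error = math.sqrt(variance / n)
    return mean_diff / standard_error


# Hypothetical misclassification rates on the same four test folds.
method_a = [0.20, 0.22, 0.18, 0.21]
method_b = [0.25, 0.24, 0.23, 0.27]
t_value = paired_t_statistic(method_a, method_b)
print(round(t_value, 3))
```

A negative t-value means the first method has the lower average error; the significance level then follows from the t distribution with n − 1 degrees of freedom (34 for 35 folds).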

We investigate how the past traits of eWOM affect helpfulness by presenting the conditional probabilities of the variables. To obtain the conditional probabilities of NBN, the 8699 cases are randomly divided into a training sample (60%) and a test sample (40%), having 5219 and 3480 cases, respectively. The conditional probabilities are presented in Table 4. The greater the conditional probability for a specific value of a predictor, the greater the importance of that variable at that specific value. For instance, the conditional probability that the binned review rating equals 5, given that the output class (helpfulness class) equals 1, is 0.66, which is greater than the 0.51 obtained when the helpfulness class equals 0. This shows that review rating contributes to the helpfulness class.
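A conditional probability of this kind is simply the share of cases in a class that fall into a given bin. The following sketch uses a hypothetical function name and invented ratings to show the calculation, and then forms the ratio of the two probabilities reported in the text (0.66 and 0.51) as one possible way to compare them; the ratio itself is not a quantity reported by the study.

```python
from collections import Counter


def conditional_probabilities(bin_values, classes):
    """Estimate P(bin value | class) as a dict keyed by (bin value, class)."""
    class_totals = Counter(classes)
    joint_counts = Counter(zip(bin_values, classes))
    return {
        (value, c): count / class_totals[c]
        for (value, c), count in joint_counts.items()
    }


# Hypothetical binned review ratings and helpfulness classes for eight reviews.
ratings = [5, 5, 4, 5, 3, 5, 4, 5]
classes = [1, 1, 1, 0, 0, 0, 0, 1]
table = conditional_probabilities(ratings, classes)
print(table[(5, 1)], table[(5, 0)])

# Ratio of the probabilities quoted in the text for a review rating of 5.
ratio = 0.66 / 0.51
print(round(ratio, 2))
```

A ratio above 1 indicates that the bin is more typical of helpful reviews than of unhelpful ones.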

Table 4.

Conditional probabilities for example variables (using the first random partition).

Table 5 shows one example of the prediction performance of NBN on the training and test samples. The accuracy on the test data is not much lower than that on the training sample, suggesting that the prediction model is trained appropriately for effective prediction.

Table 5.

Classification matrix for predicting helpfulness class (using the first test sample in n-fold cross validation of samples).
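A classification matrix of this kind tabulates actual against predicted classes, and the misclassification rate is the share of off-diagonal cases. The sketch below uses hypothetical function names and invented labels for six reviews; it does not reproduce the counts in Table 5.

```python
def classification_matrix(actual, predicted):
    """Count cases for every (actual class, predicted class) pair of a binary target."""
    matrix = {(a, p): 0 for a in (0, 1) for p in (0, 1)}
    for a, p in zip(actual, predicted):
        matrix[(a, p)] += 1
    return matrix


def misclassification_rate(matrix):
    """Share of cases whose predicted class differs from the actual class."""
    total = sum(matrix.values())
    errors = matrix[(0, 1)] + matrix[(1, 0)]
    return errors / total


# Hypothetical helpfulness classes for six reviews.
actual = [1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0]
matrix = classification_matrix(actual, predicted)
print(matrix, misclassification_rate(matrix))
```

Comparing this rate between the training and test samples gives the overfitting check described in the text.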

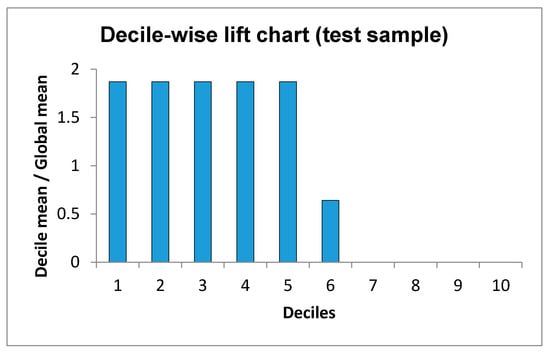

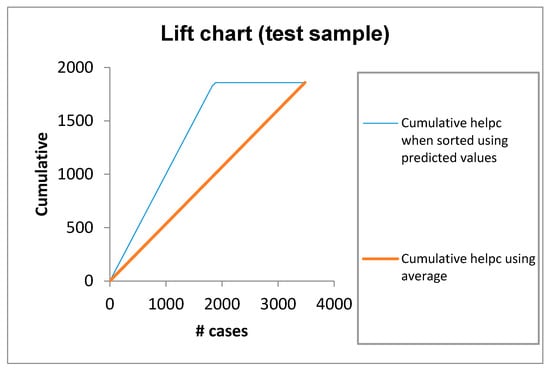

The lift chart in Figure 2 validates the performance of the NBN model against the “no model, pick randomly” benchmark, which classifies the class for all cases in the test sample as the most frequent class and is usually adopted as the benchmark [50]. The large gap between the two lines in the lift curve indicates the ability of the NBN model to predict the important class (i.e., helpfulness class = 1), relative to its average prevalence.

Figure 2.

Lift chart for test sample (Partition ID = 1).
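The two lines of a cumulative lift chart can be computed from predicted probabilities and actual classes. In the sketch below, the function name and the six example cases are hypothetical, and the reference line is taken to be the expected number of class 1 cases under the average prevalence, which is one common way to draw the benchmark and is an assumption here.

```python
def cumulative_gains(probabilities, actual):
    """Return the model curve and the prevalence-based reference line."""
    # Rank cases from the highest to the lowest predicted probability of class 1.
    ranked = sorted(zip(probabilities, actual), key=lambda pair: pair[0], reverse=True)
    total_positive = sum(actual)
    n = len(ranked)
    model_curve, reference_line = [], []
    found = 0
    for k, (_, y) in enumerate(ranked, start=1):
        found += y  # class 1 cases captured among the top k ranked cases
        model_curve.append(found)
        reference_line.append(k * total_positive / n)  # expected count without a model
    return model_curve, reference_line


# Hypothetical predicted probabilities and actual helpfulness classes.
probs = [0.9, 0.2, 0.8, 0.4, 0.6, 0.1]
actual = [1, 0, 1, 0, 0, 1]
model_curve, reference_line = cumulative_gains(probs, actual)
print(model_curve)
print(reference_line)
```

The further the model curve lies above the reference line, the better the model concentrates helpful reviews among its top-ranked cases.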

Using product, reviewer, and textual traits of eWOM, and a crawled sample of 8699 reviews with 21 predictors, we explain how the past characteristics of eWOM influence helpfulness by presenting the conditional probabilities of the determinants. A greater conditional probability for a specific value of a determinant represents a greater effect of that determinant at that specific value. The classification accuracy of NBN exceeded that of the k-nearest neighbor (kNN) method, which supports the main premise of our method. This shows that NBN can be used to find helpful reviews, providing an effective marketing solution. It also points to better marketing practice in online reviews because NBN can decrease the processing load required to identify helpful reviews among a large number of review records.

6. Implications and Conclusions

6.1. Implications for Researchers

This study provides several implications for researchers. First, this study is one of the few research works to use NBN in predicting helpfulness. Given the enormous volume of review records to be processed, this study focuses on examining the performance of NBN by comparing it with other methodologies. The NBN model turns out to be comparable to conventional statistical analysis, i.e., MDA, and largely outperformed the other BI methods, i.e., kNN and NN, in prediction performance. This indicates that the proposed NBN method has merits especially for high dimensional data, as in this sample of online reviews, where the relationships between the independent variables and the dependent variable (helpfulness) are complex. NBN is a machine learning algorithm that is computationally based and data-driven; unlike statistical models, it does not require assumptions about the data distribution, it performs well on noisy datasets [54,55], and it is a rigorous machine learning method particularly suitable for samples with high dimensions [56]. NBN in this study can help overcome the prediction difficulty caused by the large variation in review content and quality, and it enables the analysis of a large number of online consumer reviews whose characteristics have not yet been fully analyzed. NBN is well suited to learning nonlinear relationships among variables without demanding prior knowledge of a specific functional form. Our study tested a smaller number of determinants for the comparison of prediction performance, which suggests that overfitting or multicollinearity arising from the number of determinants can be less of an issue in predicting helpfulness. The significant difference between NBN and kNN or NN may be explained by the binned input variables, which possibly decrease the prediction performance of kNN or NN.

Second, this study extends the previous literature on predicting helpfulness by providing an expanded list of predictors, including product, reviewer, and textual data, in order to explain helpfulness. This may be especially insightful because review helpfulness can be affected by product, reviewer, and textual characteristics. If the conditional probability of the bin with the greater value is greater for class 1 of the helpfulness class than for class 0, this indicates that the determinant positively affects helpfulness. For example, the last bin of the product’s review rating has the greatest probability among all bins for class 1 of the helpfulness class, and this probability is greater than the corresponding probability for class 0; this shows that the product’s review rating positively affects helpfulness. Further, by comparing the conditional probabilities of different determinants, we can infer which determinant has a greater effect on helpfulness than the others. This will improve understanding of the impact of product, reviewer, and textual traits on online review helpfulness in a consumer-oriented mechanism where reviews are highlighted according to their expected helpfulness.

There are limitations and issues for future research. Our study is data-driven, and thus the results can differ with another dataset. When a different dataset is used, the selection of variables can be adjusted or reduced to focus on more crucial variables, and the sample size of this study can be expanded to provide more stable results. The set of determinants in this study can also be extended to include more recent data, and future studies can consider other variables with significant relationships with helpfulness. The relationship between determinants and helpfulness should be further investigated on the theoretical basis of eWOM research. Further, the NBN method used in this study can be extended to accommodate hybrid methods for predicting the helpfulness of online review comments. For instance, deep learning methods like LSTM (Long Short-Term Memory) and CNN (Convolutional Neural Network) can be integrated with NBN to create a more differentiated approach.

6.2. Implications for Practitioners

This study provides implications for practice. NBN offers a promising method to rate and highlight very recently posted reviews that readers have not yet had enough time to rate. With a selection of variables that have a more crucial relationship with helpfulness, a larger and more recent dataset than our current sample may provide more useful results for online marketing practice. This can help in finding helpful reviews, and e-commerce and social media websites can improve their existing filtering and rating processes. This study can benefit social network sites by helping them evaluate new reviews rapidly and adjust their ranking for presentation in an efficient way. Our model helps buyers create better reviews and thus assists other buyers in making their buying decisions. The results of this study can offer guidelines for writing helpful reviews and for implementing consumer online review sites that facilitate helpfulness votes. The study provides several clues about online review site design by indicating the impact of eWOM traits on review helpfulness. The effective prediction of helpfulness is important because helpful reviews can greatly enhance attitudes toward purchasing online and bring potential benefits to companies in terms of sales [14]. Review sites that support the eWOM traits crucial for helpfulness can contribute to the sales and market share of companies.

The findings can be used to concentrate on the factors that enhance helpfulness, such as review extremity, reviewer ranking, and reviewer name disclosure, to adjust the design of review websites and to rank reviews according to these traits. Marketers should evaluate the aspects of eWOM that matter for helpfulness when building e-marketing strategies. Using the prediction results, an online review site can rank new reviews rapidly so that the more helpful reviews are displayed prominently. Those helpful reviews tend to attract consumer attention to the product. Manufacturers could evaluate helpful reviews when building improved manufacturing and marketing strategies.
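As a rough illustration of the ranking step, the sketch below orders newly posted reviews by a predicted probability of being helpful. It is not the implementation used in this study: the function `rank_reviews`, the stand-in scorer `toy_score`, its weights, and the three example reviews are all hypothetical and serve only to show the data flow. In practice the scorer would be the fitted NBN.

```python
from typing import Callable, Dict, List, Tuple

Review = Dict[str, int]


def rank_reviews(
    reviews: Dict[str, Review],
    score: Callable[[Review], float],
) -> List[Tuple[str, float]]:
    """Return (review_id, score) pairs sorted from most to least helpful."""
    scored = [(review_id, score(traits)) for review_id, traits in reviews.items()]
    # sorted() is stable, so reviews with equal scores keep their input order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def toy_score(traits: Review) -> float:
    """Hypothetical stand-in for a fitted model's P(helpful | traits).

    The weights are made up for illustration and are NOT estimates
    from the paper.
    """
    return 0.2 + 0.3 * traits["extreme"] + 0.3 * traits["name_disclosed"]


# Hypothetical new reviews that have not yet received helpfulness votes.
new_reviews = {
    "r1": {"extreme": 0, "name_disclosed": 0},
    "r2": {"extreme": 1, "name_disclosed": 1},
    "r3": {"extreme": 0, "name_disclosed": 1},
}

ranking = rank_reviews(new_reviews, toy_score)
for review_id, probability in ranking:
    print(review_id, round(probability, 2))
```

Keeping the scorer as a plain callable means the ranking logic does not depend on which prediction model supplies the probabilities.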

6.3. Conclusions

Applying a data mining approach to eWOM is invaluable for recommending helpful reviews [22] because eWOM is a crucial way to understand and predict consumer needs and purchase decisions. This paper predicts review helpfulness with NBN using a crawled sample of 8699 reviews and 21 predictors of eWOM. We investigate how the product, reviewer, and textual characteristics of eWOM affect helpfulness by presenting the conditional probabilities of the binned variables. A greater conditional probability for a specific value of a predictor indicates the greater importance of that variable at that value. The prediction accuracy of NBN exceeded that of the k-nearest neighbor (kNN) method, which supports the validity of our method. Our study can be used to find helpful reviews, which can serve as a useful marketing tool. It can also support practice in online review marketing, as NBN would reduce the effort needed to predict review helpfulness from an enormous volume of eWOM data.
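To make the role of the conditional probabilities concrete, the following minimal sketch fits a naive Bayes classifier on binned (categorical) predictors and exposes the table of P(bin | class) for each predictor. It rests on several assumptions that are not taken from the paper: the helper names `fit_naive_bayes` and `posterior`, the Laplace smoothing constant `alpha`, the two toy predictors, and the six toy rows are all hypothetical.

```python
from collections import Counter, defaultdict


def fit_naive_bayes(rows, labels, alpha=1.0):
    """Estimate class priors and smoothed P(bin | class) for each predictor."""
    classes = sorted(set(labels))
    n_features = len(rows[0])
    class_counts = Counter(labels)
    # value_counts[j][c][v]: number of class-c rows whose predictor j is in bin v
    value_counts = [defaultdict(Counter) for _ in range(n_features)]
    bins = [set() for _ in range(n_features)]
    for row, c in zip(rows, labels):
        for j, v in enumerate(row):
            value_counts[j][c][v] += 1
            bins[j].add(v)
    priors = {c: class_counts[c] / len(labels) for c in classes}
    # Laplace smoothing (an assumption of this sketch) avoids zero probabilities.
    cond = [
        {
            c: {
                v: (value_counts[j][c][v] + alpha)
                / (class_counts[c] + alpha * len(bins[j]))
                for v in bins[j]
            }
            for c in classes
        }
        for j in range(n_features)
    ]
    return priors, cond


def posterior(priors, cond, row):
    """Return P(class | row) under the naive independence assumption."""
    scores = {}
    for c, p in priors.items():
        for j, v in enumerate(row):
            # A bin never seen in training is skipped (neutral factor 1.0).
            p *= cond[j][c].get(v, 1.0)
        scores[c] = p
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}


# Toy data: (review extremity bin, reviewer name disclosure); 1 = helpful.
rows = [
    ("extreme", "disclosed"), ("extreme", "hidden"), ("moderate", "hidden"),
    ("moderate", "hidden"), ("extreme", "disclosed"), ("moderate", "disclosed"),
]
labels = [1, 1, 0, 0, 1, 0]

priors, cond = fit_naive_bayes(rows, labels)
post = posterior(priors, cond, ("extreme", "disclosed"))
print(cond[0][1])  # P(extremity bin | helpful)
print(post)        # posterior over the two helpfulness classes
```

In this toy setting, a predictor bin whose conditional probability differs strongly between the two classes is the kind of value that the conclusions describe as important for helpfulness.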

Author Contributions

Conceptualization, S.L.; methodology, S.L., J.Y.C.; software, S.L., J.Y.C.; validation, J.Y.C.; formal analysis, S.L., J.Y.C.; resources, K.C.L.; data curation, S.L. and J.Y.C.; writing—original draft preparation, S.L.; writing—review and editing, K.C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Distribution of research variables (NA = not applicable).

| Category | Variables | Min | Max | Mean | Standard Deviation | Number of Bins |
|---|---|---|---|---|---|---|
| Product | Type of Product | 0 | 1 | 0.41 | 0.49 | NA |
| | Sales Rank | 13 | 1,183,280 | 30,481.50 | 48,288.011 | 394 |
| | Product’s Total Number of Reviews | 1 | 385 | 37.29 | 40.275 | 124 |
| | Product’s Review Rating | 1.50 | 5.00 | 4.37 | 0.46 | 69 |
| | Retail Price of Product | 0.01 | 82.99 | 11.75 | 9.19 | 149 |
| Reviewer | Reviewer Ranking | 0 | 9,712,959 | 1,572,362.55 | 3,448,559.04 | 664 |
| | Reviewer’s Total Helpfulness Votes | 0 | 74,151 | 3755.59 | 8203.176 | 232 |
| | Reviewer’s Total Votes | 0 | 81,380 | 4884.28 | 9675.629 | 187 |
| | Proportion of all Past Helpful Votes | 0.00 | 1.00 | 0.55 | 0.43 | 11 |
| | Reviewer’s Total Number of Reviews | 1 | 11,351 | 902.98 | 1495.14 | 135 |
| | Reviewer Name Disclosure | 0 | 1 | 0.35 | 0.48 | NA |
| Review | Elapsed Days after Launching until Posting | 0 | 5013 | 2243.51 | 1231.303 | 27 |
| | Elapsed Days since Posting until Parsing | 1 | 5047 | 1960.87 | 1150.16 | 30 |
| | Number of Words in a Review | 6 | 1361 | 140.76 | 130.36 | 111 |
| | Number of Misspelt Words | 1 | 26 | 2.64 | 2.04 | 129 |
| | Number of Words One Letter Long | 1 | 12 | 1.57 | 0.77 | 114 |
| | Number of Words 2–9 Letters Long | 1 | 351 | 45.01 | 26.29 | 117 |
| | Number of Words 10 Letters Long | 1 | 26 | 2.56 | 2.02 | 165 |
| | Review Rating | 1 | 5 | 4.34 | 1.01 | 42 |
| | Review Extremity | 0.00 | 3.80 | 0.66 | 0.63 | 76 |
| | Reviews’ Total Helpfulness Votes | 0 | 205 | 3.67 | 10.178 | 703 |
| Output Variable | Helpfulness Class | 0.00 | 1.00 | 0.55 | 0.43 | NA |

References

1. Kroenke, D.M. Experiencing MIS, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2012.

2. Liu, Y.; Jin, J.; Ji, P.; Harding, J.A.; Fung, R.Y.K. Identifying helpful online reviews: A product designer’s perspective. Comput. Aided Des. 2013, 45, 180–194.

3. Mudambi, S.M.; Schuff, D. What makes a helpful online review? A study of customer reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

4. Chen, Y.; Xie, J. Online consumer review: Word-of-mouth as a new element of marketing communication mix. Manag. Sci. 2008, 54, 477–491.

5. Li, X.; Hitt, L.M. Self-selection and information role of online product reviews. Inf. Syst. Res. 2008, 19, 456–474.

6. Ku, Y.-C.; Wei, C.-P.; Hsiao, H.-W. To whom should I listen? Finding reputable reviewers in opinion-sharing communities. Decis. Support Syst. 2012, 53, 534–542.

7. Kunja, S.R.; Gvrk, A. Examining the effect of eWOM on the customer purchase intention through value co-creation (VCC) in social networking sites (SNSs): A study of select Facebook fan pages of smartphone brands in India. Manag. Res. Rev. 2018, 43, 245–269.

8. Wang, A.; Zhang, M.; Hann, I.H. Socially nudged: A quasi-experimental study of friends’ social influence in online product ratings. Inf. Syst. Res. 2018, 29, 641–655.

9. Li, M.; Huang, L.; Tan, C.-H.; Wei, K.-K. Helpfulness of online product reviews as seen by consumers: Source and content features. Int. J. Electron. Commer. 2013, 17, 101–136.

10. Elwalda, A.; Lü, K.; Ali, M. Perceived derived attributes of online customer reviews. Comput. Hum. Behav. 2016, 56, 306–319.

11. Filieri, R. What makes online reviews helpful? A diagnosticity-adoption framework to explain informational and normative influences in e-WOM. J. Bus. Res. 2015, 68, 1261–1270.

12. Gilbert, E.; Karahalios, K. Understanding deja reviewers. In Proceedings of the CSCW 2010: The 2010 ACM Conference on Computer Supported Cooperative Work, Savannah, GA, USA, 6–10 February 2010; pp. 225–228.

13. Hong, H.; Xu, D.; Wang, G.A.; Fan, W. Understanding the determinants of online review helpfulness: A meta-analytic investigation. Decis. Support Syst. 2017, 102, 1–11.

14. Lee, S.; Choeh, J.Y. The determinants of helpfulness of online reviews. Behav. Inf. Technol. 2016, 35, 853–863.

15. Siering, M.; Muntermann, J.; Rajagopalan, B. Explaining and predicting online review helpfulness: The role of content and reviewer-related signals. Decis. Support Syst. 2018, 108, 1–12.

16. Singh, J.P.; Irani, S.; Rana, N.P.; Dwivedi, Y.K.; Saumya, S.; Roy, P.K. Predicting the “helpfulness” of online consumer reviews. J. Bus. Res. 2017, 70, 346–355.

17. Ghose, A.; Ipeirotis, P.G. Estimating the helpfulness and economic impact of product reviews: Mining text and reviewer characteristics. IEEE Trans. Knowl. Data Eng. 2011, 23, 1498–1512.

18. Weathers, D.; Swain, S.D.; Grover, V. Can online product reviews be more helpful? Examining characteristics of information content by product type. Decis. Support Syst. 2016, 79, 12–23.

19. Bai, X. Predicting consumer sentiments from online text. Decis. Support Syst. 2011, 50, 732–742.

20. Pan, Y.; Zhang, J.Q. Born unequal: A study of the helpfulness of user-generated product reviews. J. Retail. 2011, 87, 598–612.

21. Cao, Q.; Duan, W.; Gan, Q. Exploring determinants of voting for the ‘helpfulness’ of online user reviews: A text mining approach. Decis. Support Syst. 2011, 50, 511–521.

22. Krishnamoorthy, S. Linguistic features for review helpfulness prediction. Expert Syst. Appl. 2015, 42, 3751–3759.

23. O’Mahony, M.P.; Smyth, B. A classification-based review recommender. Knowl. Based Syst. 2010, 23, 323–329.

24. Lee, S.; Choeh, J.Y. Exploring the determinants of and predicting the helpfulness of online user reviews using decision trees. Manag. Decis. 2017, 55, 681–700.

25. Chung, W.; Tseng, T.-L. Discovering business intelligence from online product reviews: A rule-induction framework. Expert Syst. Appl. 2012, 39, 11870–11879.

26. Lee, S.; Choeh, J.Y. Predicting the Helpfulness of Online Reviews using Multilayer Perceptron Neural Networks. Expert Syst. Appl. 2014, 41, 3041–3046.

27. Du, J.; Rong, J.; Michalska, S.; Wang, H.; Zhang, Y. Feature selection for helpfulness prediction of online product reviews: An empirical study. PLoS ONE 2019, 14, e0226902.

28. Fan, M.; Feng, C.; Guo, L.; Sun, M.; Li, P. Product-Aware Helpfulness Prediction of Online Reviews. In Proceedings of the WWW’19: The World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2715–2721.

29. Malik, M.S.I. Predicting users’ review helpfulness: The role of significant review and reviewer characteristics. Soft Comput. 2020, 24, 13913–13928.

30. Olatunji, I.E.; Li, X.; Wai Lam, W. Context-aware helpfulness prediction for online product reviews. In Proceedings of the 15th Asia Information Retrieval Societies Conference, AIRS 2019, Hong Kong, China, 7–9 November 2019.

31. Ngo-Ye, T.L.; Sinha, A.P. The influence of reviewer engagement characteristics on online review helpfulness: A text regression model. Decis. Support Syst. 2014, 61, 47–58.

32. Min, H.-J.; Park, J.C. Identifying helpful reviews based on customer’s mentions about experiences. Expert Syst. Appl. 2012, 39, 11830–11838.

33. Shannon, K.M. Predicting Amazon review helpfulness ratio. Tech. Rep. 2017.

34. Almutairi, Y.; Abdullah, M.; Alahmadi, D. Review helpfulness prediction: Survey. Period. Eng. Nat. Sci. 2019, 7, 420–432.

35. Du, J.; Rong, J.; Wang, H.; Zhang, Y. Helpfulness prediction for online reviews with explicit content-rating interaction. In Web Information Systems Engineering—WISE 2019; Cheng, R., Mamoulis, N., Sun, Y., Huang, X., Eds.; Springer: Cham, Switzerland, 2019; Volume 11881.

36. Chiriatti, G.; Brunato, D.; Dell’Orletta, F.; Venturi, G. What Makes a Review Helpful? Predicting the Helpfulness of Italian TripAdvisor Reviews; Istituto di Linguistica Computazionale “Antonio Zampolli” (ILC–CNR): Pisa, Italy, 2019.

37. Haque, M.E.; Tozal, M.E.; Islam, A. Helpfulness prediction of online product reviews. In Proceedings of the 18th ACM Symposium on Document Engineering 2018, Halifax, NS, Canada, 28–31 August 2018.

38. Baek, H.; Ahn, J.; Choi, Y. Helpfulness of online consumer reviews: Readers’ objectives and review cues. Int. J. Electron. Commer. 2012, 17, 99–126.

39. Filieri, R.; Raguseo, E.; Vitari, C. When are extreme ratings more helpful? Empirical evidence on the moderating effects of review characteristics and product type. Comput. Hum. Behav. 2018, 88, 134–142.

40. Chen, C.C.; Tseng, Y.-D. Quality evaluation of product reviews using an information quality framework. Decis. Support Syst. 2011, 50, 755–768.

41. Kwon, O.; Sung, Y. Shifting selves and product reviews: How the effects of product reviews vary depending on the self-views and self-regulatory goals of consumers. Int. J. Electron. Commer. 2012, 17, 59–82.

42. Cheung, C.M.; Lee, M.K. What drives consumers to spread electronic word of mouth in online consumer-opinion platforms. Decis. Support Syst. 2012, 53, 218–225.

43. Dou, X.; Walden, J.A.; Lee, S.; Lee, J.Y. Does source matter? Examining source effects in online product reviews. Comput. Hum. Behav. 2012, 28, 1555–1563.

44. Filieri, R.; Raguseo, E.; Vitari, C. What moderates the influence of extremely negative ratings? The role of review and reviewer characteristics. Int. J. Hosp. Manag. 2019, 77, 333–341.

45. Huang, A.H.; Chen, K.; Yen, D.C.; Tran, T.P. A study of factors that contribute to online review helpfulness. Comput. Hum. Behav. 2015, 48, 17–27.

46. Korfiatis, N.; García-Bariocanal, E.; Sánchez-Alonso, S. Evaluating content quality and helpfulness of online product reviews: The interplay of review helpfulness vs. review content. Electron. Commer. Res. Appl. 2012, 11, 205–217.

47. Schindler, R.M.; Bickart, B. Perceived helpfulness of online consumer reviews: The role of message content and style. J. Consum. Behav. 2012, 11, 234–243.

48. Lee, S.; Choeh, J.Y. The interactive impact of online word-of-mouth and review helpfulness on box office revenue. Manag. Decis. 2018, 56, 849–866.

49. Lubis, F.F.; Rosmansyah, Y.; Supangkat, S.H. Improving course review helpfulness prediction through sentiment analysis. In Proceedings of the 2017 International Conference on ICT for Smart Society (ICISS), Tangerang, Indonesia, 18–19 September 2017.

50. Shmueli, G.; Patel, N.R.; Bruce, P.C. Data Mining for Business Analytics, 3rd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2018.

51. Koc, L.; Mazzuchi, T.A.; Sarkani, S. A network intrusion detection system based on a Hidden Naïve Bayes multiclass classifier. Expert Syst. Appl. 2012, 39, 13492–13500.

52. Olson, D.; Shi, Y. Introduction to Data Mining; McGraw-Hill International Edition: New York, NY, USA, 2007.

53. Bogaert, M.; Ballings, M.; Hosten, M.; Van den Poel, D. Identifying soccer players on Facebook through predictive analytics. Decis. Anal. 2017, 14, 274–297.

54. Farid, D.M.; Zhang, L.; Rahman, C.M.; Hossain, M.A.; Strachan, R. Hybrid decision tree and naïve Bayes classifiers for multi-class classification tasks. Expert Syst. Appl. 2014, 41, 1937–1946.

55. Gao, Y.; Xu, A.; Hu, P.J.-H.; Cheng, T.-H. Incorporating association rule networks in feature category-weighted Naive Bayes model to support weaning decision making. Decis. Support Syst. 2017, 96, 27–38.

56. Hernández-González, J.; Inza, I.; Lozano, J.A. Learning Bayesian network classifiers from label proportions. Pattern Recognit. 2013, 46, 3425–3440.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).