Evaluation for Teachers and Students in Higher Education

Abstract

1. Introduction

- (a) At different points in time: not only at the end but also at the beginning of and during the process;

- (b) With different tools, complementary to the anachronistic monopoly of the traditional exam [20];

- (c)

- (d) Finally, with different purposes: not only to reward, sanction, categorize or school the student (modality, promotion, etc.), but also to redirect efforts and raise awareness.

- (a) Persistent rigidity of educational institutions and systems;

- (b) Traditional attitudes toward teacher training;

- (c)

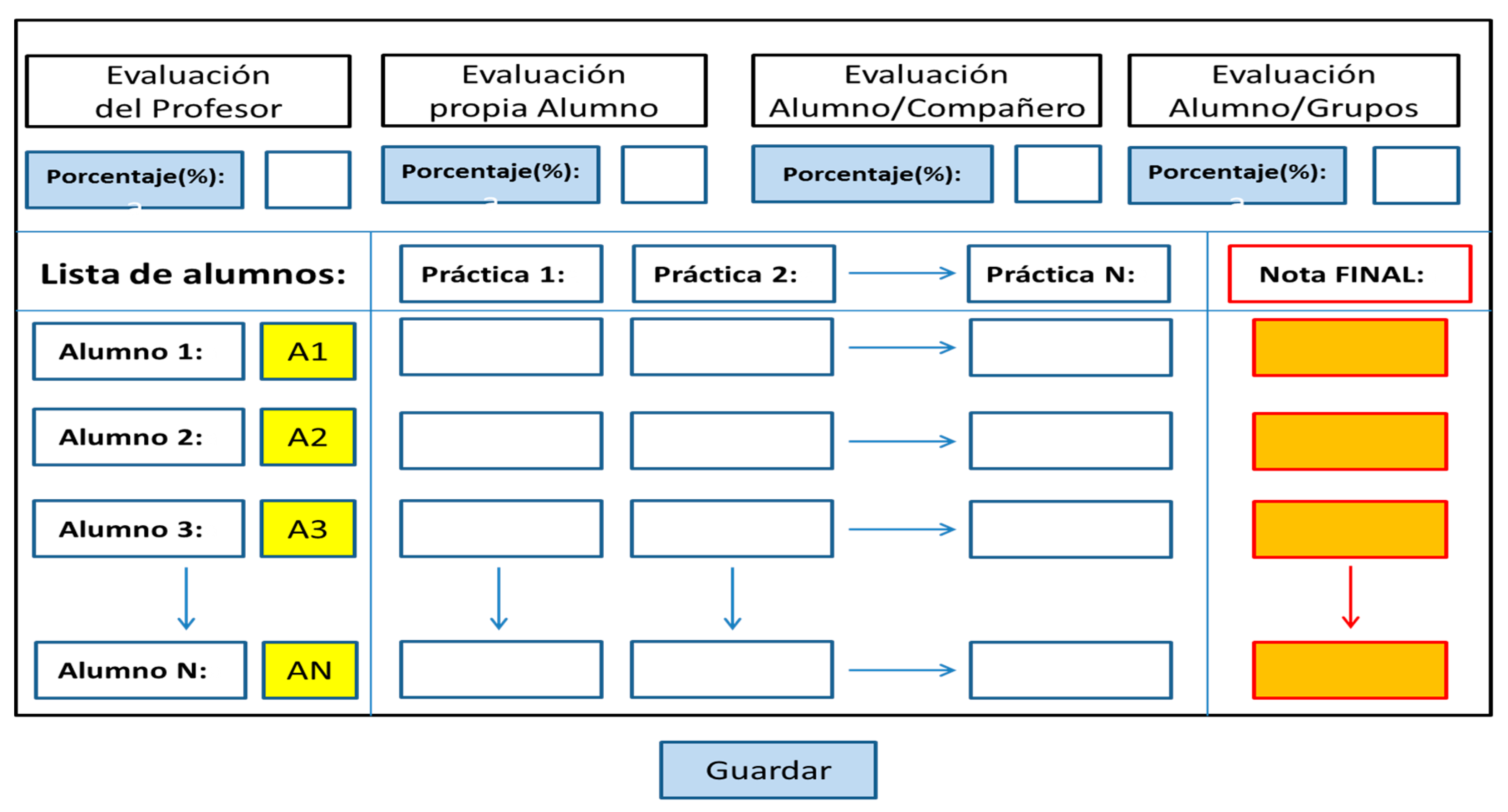

- The additional complexity of recording diverse scores and their weighted calculation to obtain the overall score, as well as the lack of resources to facilitate this.
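The weighted calculation mentioned in the last item can be illustrated with a minimal sketch. The modality names, the weights, and the 0-10 scale below are assumptions chosen for illustration; the source does not state how each modality is weighted.

```python
def overall_score(scores, weights):
    """Weighted mean of per-modality scores.

    scores  -- dict mapping a modality name to a score on a common scale
    weights -- dict mapping the same names to non-negative weights
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same modalities")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    # Dividing by the total weight means the weights need not sum to 1.
    return sum(scores[m] * weights[m] for m in scores) / total_weight


# Hypothetical example: teacher (hetero), peer (co) and self assessment, 0-10 scale.
example_scores = {"hetero": 7.0, "co": 8.0, "self": 9.0}
example_weights = {"hetero": 0.6, "co": 0.2, "self": 0.2}
print(round(overall_score(example_scores, example_weights), 2))  # prints 7.6
```

Normalizing by the sum of the weights lets a teacher change one weight without rebalancing the others by hand.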

2. Material and Methods

- A definition process, comprising a sub-phase that gathers the requirements of potential users and plans activities and timing.

- A development process, in which two sub-phases are addressed:

  - The design of a pattern, developed using the corresponding programming language.

  - Software maintenance to optimize the product in its non-final version.

- A constant maintenance process, in which technical problems of the final version are solved and, where appropriate, that version is replaced by an upgraded one, in a regular cycle.

- Content analysis, based on voluntary and anonymous statements made by participants (teachers and students) who were duly instructed and experienced in the use of the platform.

- Statistical analysis, based on the data obtained using a self-administered Likert-type rating scale.
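As a minimal sketch of the descriptive side of this statistical analysis, the snippet below summarizes one Likert item by its mean (M), standard deviation (SD), mode (Mo) and the percentage of respondents choosing the mode. The response data are hypothetical, and the use of the sample standard deviation is an assumption; the source does not say which variant was computed.

```python
from collections import Counter
from statistics import mean, stdev


def describe_item(responses):
    """Return M, sample SD, mode and the mode's share (%) for one Likert item."""
    counts = Counter(responses)
    # most_common(1) returns the first-encountered value if several are tied.
    mode_value, mode_count = counts.most_common(1)[0]
    return {
        "M": mean(responses),
        "SD": stdev(responses),  # sample SD (n - 1 denominator), an assumption
        "Mo": mode_value,
        "Mo_pct": 100 * mode_count / len(responses),
    }


# Hypothetical responses to a single item on the 1-4 agreement scale.
item_responses = [4, 4, 3, 4, 2, 4, 3, 4, 4, 3]
summary = describe_item(item_responses)
print(summary["M"], round(summary["SD"], 2), summary["Mo"], summary["Mo_pct"])
```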

2.1. Participants

- Thirty teachers from different specialties at the University of Granada attended the specific course on this topic (entitled “Combined evaluation of students, classmates, and teachers through PLEVALUA digital platform”, organized by the Quality, Innovation and Prospective Unit of the University of Granada (2018)). Each had under 12 years of teaching experience (M = 6.50, SD = 2.76), and there were more women (56.67%) than men (43.33%).

- A total of 140 students working towards a primary education teaching degree at the same university took part, in their second (41.43%) and fourth stage (58.57%), which implies that they already had some university experience (M = 3.17, SD = 1.72). The proportion of women to men was even greater in this case (74.62% and 25.38%, respectively), which is consistent with the reality of the classrooms in these studies.

2.2. Instruments and Procedure

2.3. Analysis of Data

- Multiple evaluation assessment (MEA), which encompasses partial assessments by modalities provided they are conceived as part of the whole.

- Assessment of the use of PLEVALUA (AUP), with all categories on concrete and global aspects of the platform.

- Critical review of the multiple evaluation (CME), either in general or of any of its constituent modalities, as well as an exclusive commitment to some of them.

- Critical review of the use of PLEVALUA (CUP): difficulties, limitations, missing functionality, etc. that affect the task for which it was devised.

- Relating: Pearson’s r (parametric) for the group of students (n = 140), whose data were normally distributed according to the Kolmogorov-Smirnov (KS) test and had similar variances according to Levene’s test of homoscedasticity; and Spearman’s rho (ρ, non-parametric) for the teachers (n = 30), whose data, despite following a normal distribution according to KS, did not show the homoscedasticity required for parametric tests.

- Differentiating: Student’s t for the same sample, applied to the dependent variables on the perception of each specific evaluation modality and on the functionality of the platform for that modality (the two blocks of the scale).
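The correlation and comparison statistics named above can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' analysis: the function names and the data are hypothetical, and p-values are omitted because they require the t distribution, which the standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson's r computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


def paired_t(x, y):
    """t statistic for paired samples (same respondents, two measures).

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical per-respondent means for the two blocks of the scale.
perception = [3.5, 3.0, 4.0, 2.5, 3.5, 3.0]
platform = [3.7, 3.2, 4.0, 3.0, 3.5, 3.3]
print(round(pearson_r(perception, platform), 3))
print(round(spearman_rho(perception, platform), 3))
print(round(paired_t(perception, platform), 3))
```

Computing Spearman's rho as Pearson's r on average ranks handles ties, which are frequent with 1-4 Likert responses.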

3. Results

3.1. Platform Description

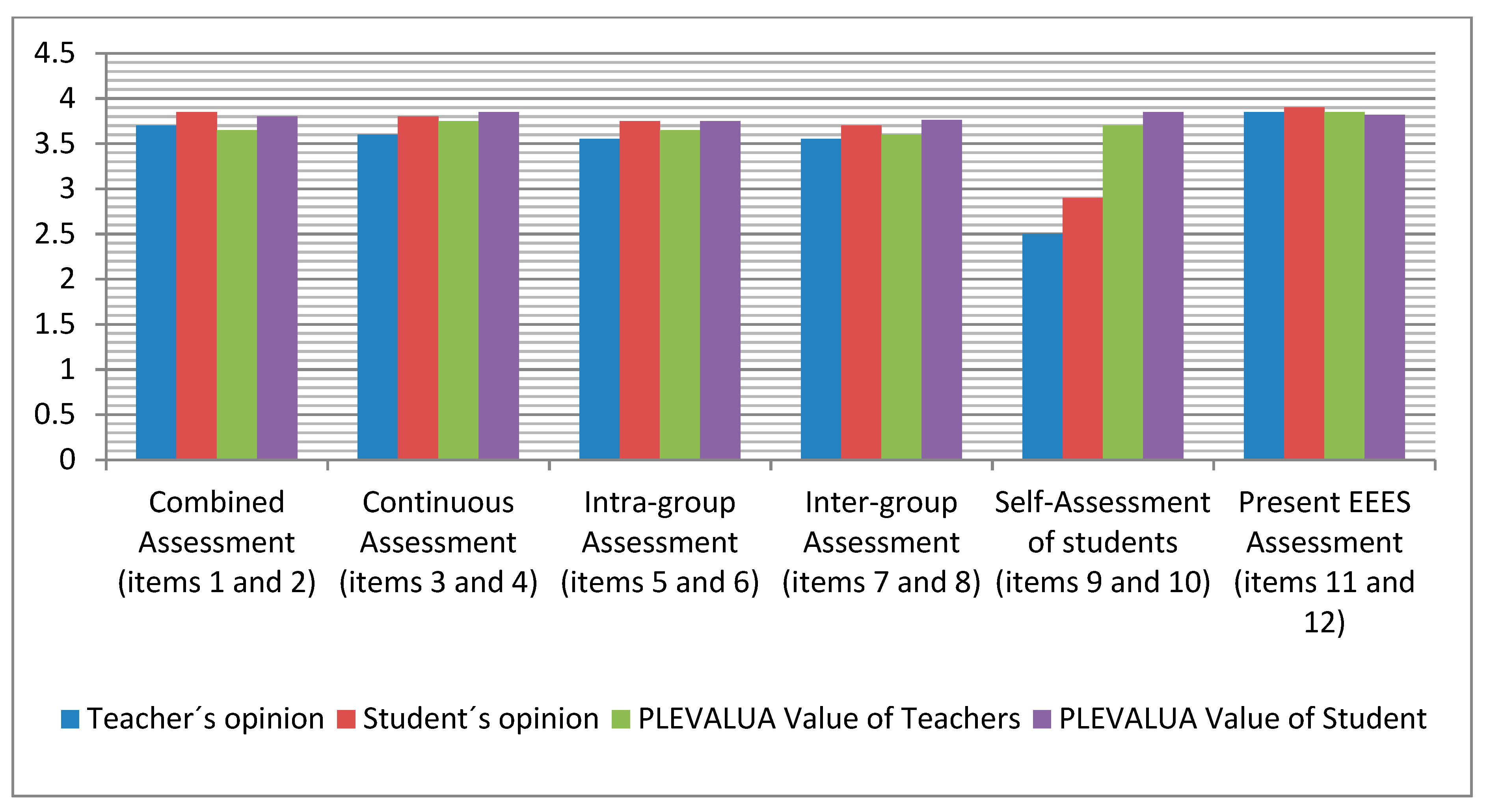

3.2. Platform Validation

- “I like this, (…) the platform allows you to evaluate at any time and anonymously so that other students do not know because it is something that requires privacy” (ST13);

- “(…) does not pose any difficulty once you look at the tutorials” (ST4);

- “(…) the tutorials are better than the written explanation for teachers and students that other platforms offer” (TE7).

- “(…) some classmates overestimated their effort and their mark, and also that of their friends, but it has been gradually controlled by the teacher’s emphasis” (ST10);

- “(…) delivering evaluation to students can bring about issues, both about their evaluation and that of others, even if it is very modern (…)” (TE5);

- “I regard the employment of a platform for the serious task of evaluating as inappropriate (…)” (ST9).

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Value From 1 (Total Disagreement) to 4 (Total Agreement) | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. Does it seem appropriate to combine traditional (hetero), peer (co) and self (self) assessments? | | | | |
| 2. Does the platform enable or favour this combined assessment in a university class group? | | | | |
| 3. The teacher must begin to share the evaluation process with the students themselves | | | | |
| 4. Do you think that the continuous evaluation by the teacher through the platform is viable? | | | | |
| 5. The operational co-workers of each practice group should also be evaluators? | | | | |
| 6. Do you think the platform makes the evaluation of colleagues in the same work group viable? | | | | |
| 7. The practices presented in class by each group must be evaluated by the rest of the groups? | | | | |
| 8. Do you think it allows the continuous evaluation of other work groups within the classroom? | | | | |
| 9. Each student is a builder of their own learning and must also be an evaluator of it? | | | | |
| 10. Do you think it allows the student’s continuous self-assessment during their subject practices? | | | | |
| 11. Do you think the evaluation methodology is consistent with the current EHEA where the student is more active? | | | | |
| 12. Do you think the evaluation platform involves a current evaluation according to the EHEA in class? | | | | |

References

- Pérez, R. Quo vadis, evaluación? Reflexiones pedagógicas en torno a un tema tan manido como relevante. Rev. Investig. Educ. 2019, 34, 13–30.

- Moro, A.I. La evaluación de las técnicas de evaluación en la enseñanza universitaria: La experiencia de macroeconomía. Cult. Educ. 2016, 28, 843–862. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=5738670 (accessed on 11 April 2019).

- Barriopedro, M.; de Subijana, L.; Gómez Ruano, M.A.; Rivero, A. La coevaluación como estrategia para mejorar la dinámica del trabajo en grupo: Una experiencia en Ciencias del Deporte. Rev. Complut. Educ. 2016, 27, 571–584.

- Gallego Noche, B.; Quesada, M.A.; Gómez Ruiz, J.; Cubero, J. La evaluación y retroalimentación electrónica entre iguales para la autorregulación y el aprendizaje estratégico en la universidad: La percepción del alumnado. REDU 2017, 15, 127–146.

- Ibarra, M.S.; Rodríguez Gómez, G. Modalidades participativas de evaluación. Un análisis de la percepción del profesorado y de los estudiantes universitarios. Rev. Investig. Educ. 2014, 32, 339–361.

- Rodríguez Gómez, G.; Ibarra, M.S.; García Jiménez, E. Autoevaluación, evaluación entre iguales y coevaluación: Conceptualización y práctica en las universidades españolas. REINED 2013, 11, 198–210. Available online: File:///C:/Users/T101/Downloads/Dialnet-AutoevaluacionEvaluacionEntreIgualesYCoevaluacion-4734976%20(2).pdf (accessed on 1 February 2019).

- Ibarra-Sáiz, M.S.; Rodríguez Gómez, G. EvalCOMIX®: A web-based programme to support collaboration in assessment. In Smart Technology Applications in Business Environments; Issa, T., Kommers, P., Issa, T., Isaías, P., Issa, T.B., Eds.; IGI Global: Hershey, PA, USA, 2017; pp. 249–275.

- Lukas, J.F.; Santiago, K.; Lizasoain, L.; Etxebarria, J. Percepciones del alumnado universitario sobre su evaluación. Bordón 2017, 69, 103–122. Available online: https://recyt.fecyt.es/index.php/BORDON/article/view/43843 (accessed on 5 February 2020).

- Rodríguez Espinosa, H.; Retrespo, L.F.; Luna, G. Percepción del estudiantado sobre la evaluación del aprendizaje en la educación superior. Educare 2016, 20, 1–17.

- Gallego Ortega, J.L.; Rodríguez Fuentes, A. Alternancia de roles en la evaluación universitaria: Docentes y discentes evaluadores y evaluados. REDU 2017, 15, 349–366.

- Arnáiz, C.M.; Bernardino, A.C. Los resultados de los estudiantes en un proceso de evaluación con metodologías distintas. Rev. Investig. Educ. 2013, 31, 275–293.

- Pascual-Gómez, I.; Lorenzo-Llamas, E.M.; Monge-López, C. Análisis de validez en la evaluación entre iguales: Un estudio en educación superior. RELIEVE 2015, 21, 1–17.

- Quesada, V.; García-Jiménez, E.; Gómez-Ruiz, M.A. Student Participation in Assessment Processes. In Learning and Performance Assessment; Khosrow, M., Ed.; IGI Global: Hershey, PA, USA, 2020; pp. 1226–1247. Available online: https://www.igi-global.com/chapter/student-participation-in-assessment-processes/237579 (accessed on 1 May 2020).

- Mok, M.M.; Lung, L.; Cheng, D.P.W.; Cheung, H.P.; Ng, M.L. Self-assessment in Higher Education: Experience in using a metacognitive approach in five case studies. Assess. Eval. High. Educ. 2017, 31, 415–433.

- Yan, Z.; Brown, G.T.L. A cyclical self-assessment process: Towards a model of how students engage in self-assessment. Assess. Eval. High. Educ. 2017, 42, 1247–1262.

- Yan, Z. Self-assessment in the process of self-regulated learning and its relationship with academic achievement. Assess. Eval. High. Educ. 2019, 44, 224–238.

- Boud, D.; Falchikov, N. Rethinking Assessment in Higher Education. Learning Longer Term; Routledge: London, UK, 2017.

- Boud, D.; Molloy, E. Feedback in Higher and Professional Education; Routledge: London, UK, 2013.

- Carrizosa, E.; Gallardo, J.I. Autoevaluación, Coevaluación y Evaluación de los aprendizajes. In III Jornadas sobre docencia del Derecho y TIC; Barcelona, E., Khosrow-Pour, M., Eds.; Information Resources Management Association: New York, NY, USA, 2020; Available online: http://www.uoc.edu/symposia/dret_tic2012/pdf/4.6.carrizosa-esther-y-gallardo-jospdf (accessed on 10 October 2019).

- López Lozano, L.; Solís Ramírez, E. Con qué evalúan los estudiantes de magisterio en formación. Campo. Abierto. 2016, 35, 55–67. Available online: file:///C:/Users/T101/Downloads/Dialnet-ConQueEvaluanLosEstudiantesDeMagisterioEnFormacion-5787079.pdf (accessed on 15 May 2019).

- Ion, G.; Cano, E. El proceso de implementación de la evaluación por competencias en la Educación Superior. REINED 2011, 9, 246–258. Available online: http://reined.webuviges/index.php/reined/article/view/128 (accessed on 25 June 2019).

- Tejada Fernández, J.; Ruiz Bueno, C. Evaluación de competencias profesionales en educación superior: Retos e implicaciones. Educación XXI 2015, 19, 17–38.

- Morrell, L.J. Iterated assessment and feedback improves student outcomes. Stud. High. Educ. 2019, 44, 105–123.

- Rodríguez Gómez, G.; Ibarra, M.S.; Gómez Ruiz, M.Á. e-Autoevaluación en la universidad: Un reto para profesores y estudiantes. Rev. Educ. 2011, 356, 401–430. Available online: http://www.educacionyfp.gob.es/revista-de-educacion/numeros-revista-educacion/numeros-anteriores/2011/re356/re356-17.html (accessed on 2 July 2019).

- García Jiménez, E. La evaluación del aprendizaje: De la retroalimentación a la autorregulación. El papel de las tecnologías. RELIEVE 2015, 21, 1–24.

- Saiz, M.; Bol, A. Aprendizaje basado en la evaluación mediante rúbricas en educación superior. Suma Psicológica 2014, 21, 28–35. Available online: http://www.sciencedirect.com/science/article/pii/S0121438114700049 (accessed on 1 July 2019).

- De Pablos, J.; Colás, M.; López Gracia, A.; García-Lázaro, I. Los usos de las plataformas digitales en la enseñanza universitaria. Perspectivas desde la investigación educativa. Rev. Docencia Univ. 2019, 17, 59–72.

- De Benito, B.; Salinas, J.M. La investigación basada en diseño en Tecnología Educativa. Rev. Interuniv. Investig. Tecnol. Educ. 2016, 44–59.

- Pressman, S. Ingeniería del Software: Un Enfoque Práctico, 5th ed.; McGraw-Hill/Interamericana de España: Madrid, España, 2002.

- Plataforma de Evaluación UGr. Available online: http://www.linyadoo.com/plevalua_ug (accessed on 14 May 2019).

- Quesada, V.; Rodríguez Gómez, G.; Ibarra, M.S. Planificación e innovación de la evaluación en educación superior: La perspectiva del profesorado. Rev. Investig. Educ. 2017, 35, 53–70.

| Item | M (SD), TE | M (SD), ST | Mo (%), TE | Mo (%), ST | Student's t and p-value |
|---|---|---|---|---|---|
| 1. Does it seem appropriate to you to combine traditional (hetero) assessment with peer (co) and self (self) assessment? | 3.70 (0.85) | 3.85 (0.78) | 4 (50%) | 4 (85.02%) | t = 2.54, p = 0.85 |
| 3. The teacher must begin to share the evaluation process with the students themselves | 3.60 (0.81) | 3.80 (0.75) | 4 (70.25%) | 4 (70.15%) | t = 1.35, p = 0.61 |
| 5. The operational co-workers of each practice group should also be evaluators … | 3.55 (0.61) | 3.75 (0.84) | 3 (61.11%) | 4 (58.50%) | t = 3.21, p = 0.09 |
| 7. The practices presented in class by each group must be evaluated by the rest of the groups … | 3.55 (0.69) | 3.70 (0.77) | 3 (55.55%) | 4 (63.88%) | t = 0.94, p = 0.08 |
| 9. Each student is a builder of their own learning and must also be an evaluator of it … | 2.50 (0.96) | 2.90 (0.95) | 3 (40.80%) | 3 (50%) | t = 3.68, p = 0.04 |
| 11. Do you think the current assessment needs to adapt to the current EHEA where the student is most active? | 3.85 (0.49) | 3.90 (0.64) | 4 (75.33%) | 4 (85.85%) | t = 1.82, p = 0.16 |
| TOTAL average-mode dispersion | 3.46 (0.74) | 3.65 (0.79) | 4 - 3 | | |

| Item | M (SD), TE | M (SD), ST | Mo (%), TE | Mo (%), ST | Student's t and p-value |
|---|---|---|---|---|---|
| 2. Does the platform enable or favour this combined assessment in a university class group? | 3.65 (0.62) | 3.80 (0.53) | 4 (65.50%) | 4 (80.20%) | t = 1.23, p = 0.45 |
| 4. Do you think that the continuous evaluation by the teacher through the platform is viable? | 3.75 (0.61) | 3.75 (0.42) | 4 (61.75%) | 4 (84.54%) | t = 2.20, p = 0.86 |
| 6. Do you think the platform makes the evaluation of colleagues in the same work group viable? | 3.65 (0.61) | 3.76 (0.46) | 4 (55.40%) | 4 (78.56%) | t = 4.23, p = 0.77 |
| 8. Do you think it allows the continuous evaluation of other work groups within the classroom? | 3.60 (0.60) | 3.70 (0.49) | 3 (57.33%) | 4 (79.89%) | t = 0.94, p = 0.56 |
| 10. Do you think it allows the student’s continuous self-assessment during their subject practices? | 3.70 (0.51) | 3.85 (0.40) | 4 (58.50%) | 4 (83.33%) | t = 1.89, p = 0.68 |
| 12. Do you think that the evaluation platform involves a current evaluation according to the EHEA in class? | 3.85 (0.51) | 3.82 (0.45) | 4 (54.45%) | 4 (81.05%) | t = 0.95, p = 0.06 |
| TOTAL average-mode dispersion | 3.70 (0.56) | 3.81 (0.48) | 4 | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alain Botaccio, L.; Gallego Ortega, J.L.; Navarro Rincón, A.; Rodríguez Fuentes, A. Evaluation for Teachers and Students in Higher Education. Sustainability 2020, 12, 4078. https://doi.org/10.3390/su12104078
