1. Introduction

Advanced machine learning technology makes the automatically style transfer possible because of its powerful fitting ability [

1,

2,

3,

4,

5]. Style transfer as a popular method applicated in artistic creation has attracted much attention. It commonly combines the style information from a style image with the original content image [

6,

7,

8]. The fused picture preserves the features of the content image and style image simultaneously. The strong ability of features extraction using convolution neural network improves the quality of the synthetic image by style transfer. By adopting style transfer, people can create works of art easily and don’t need to care how to professionally draw a picture [

9,

10]. Additionally, much repetitive work can be omitted and the business costs can be reduced. The improved quality and the automated process make style transfer popular in artistic creation [

11,

12,

13], font style transformation [

14,

15,

16], movie effects rendering [

17,

18] and some engineering fields [

19,

20,

21,

22].

Traditional style transfer mainly adopts the following methods.

- (1)

Stroke-Based Rendering: Stroke-based rendering refers to the method of adding a virtual stroke to a digital canvas to render a picture with a particular style [

23,

24,

25]. The obvious disadvantage is that its application scenarios are only limited to oil paintings, watercolors, and sketches and it’s not flexible enough.

- (2)

Image Analogy: Image analogy is used to learn the mapping relationship between a pair of source images and target images. The source images are transferred by a supervised way. The training set includes a pair of uncorrected source images and corresponding stylized images with a particular pattern [

26,

27]. The analogy method owns an effective performance and its shortage is that the paired training data is difficult to obtain.

- (3)

Image Filtering: Image filtering method adopts a combination of different filters (such as bilateral and Gaussian filters) to render a given image [

28,

29].

- (4)

Texture Synthesis: The texture denotes the repetitive visual pattern in an image. In texture synthesis, similar textures are added to the source image [

30,

31]. However, those texture synthesis-based algorithms only use low-level features and their performance is limited.

Recent years, Gatys et al. [

6] present a new solution for style transfer combined with the convolution neural network. It regards the style transfer as the optimization problem and adopts iterations to optimize each pix in the stylized picture. The pretrained Visual Geometry Group 19 (VGG19) network is introduced to extract the content feature and style feature from the content image and style image, respectively. Owing the greatly improved performance to the neural network, the method Gatys et al. proposed is also called neural style transfer. Though neural style transfer performs much better than some traditional methods, some drawbacks still trouble the researchers. Firstly, neural style transfer needs to iterate to optimize each pix of the stylized image and it’s not applicable to some delay-sensitive applications especially those needing real-time processing. Secondly, though the style features can be well integrated into the stylized image, the content information is inevitable lost. For example, the color in the content image will be mixed with the color in the style image and the lines in the stylized image will show varying degrees of distortion.

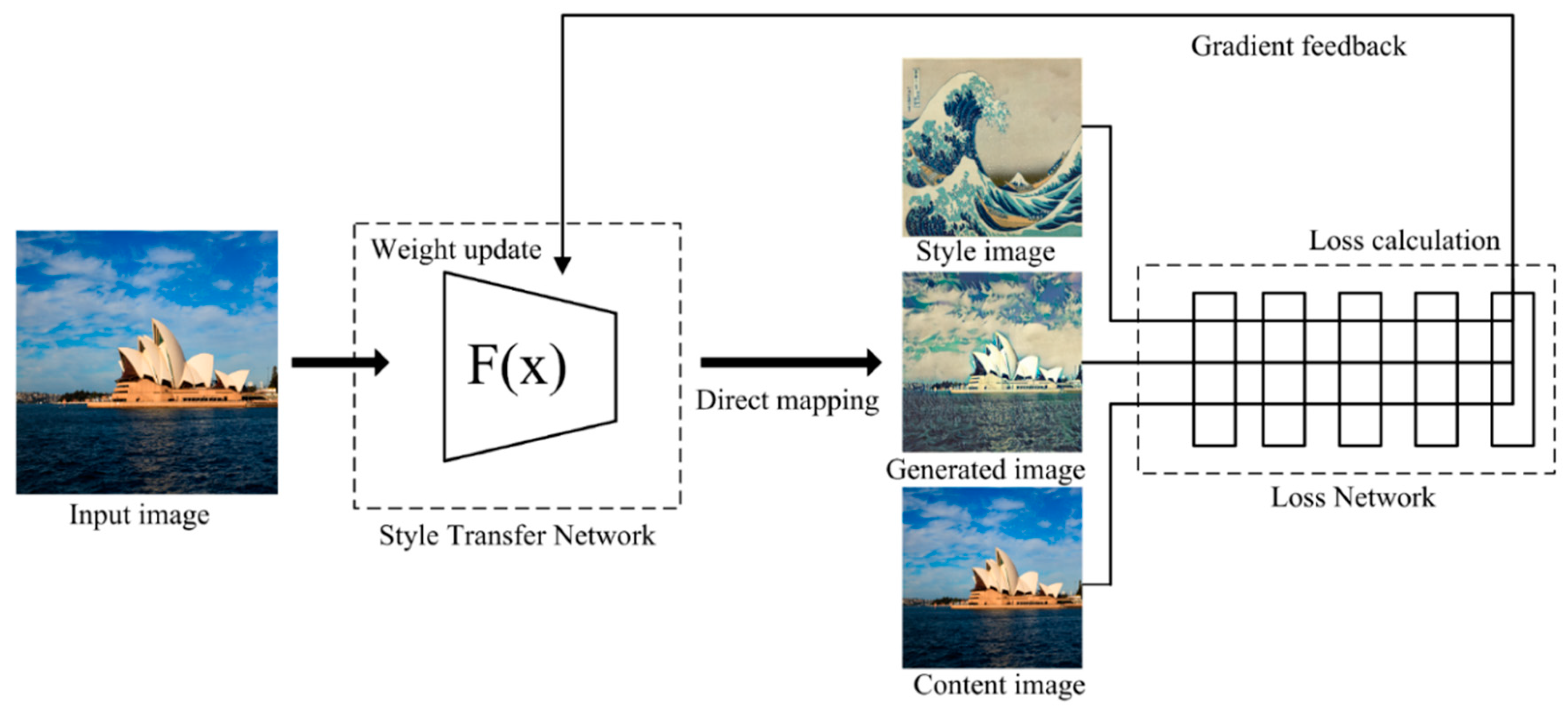

In order to make up for the lack of the classic neural style transfer, an improved style transfer algorithm adopting a deep neural network structure is presented. The whole network is composed of two parts, style transfer network and loss network. The style transfer network conducts a direct mapping between the content image and the stylized image. The loss network computes the content loss, style loss, and TV loss between the content image, style image, and stylized image generated by the style transfer network. Then the weight of the style transfer network can be updated according to the calculated loss. The style transfer network needs to be trained while the loss network adopts the first few layers of the pretrained VGG19. A cross training strategy is presented to make the style transfer network to preserve more detailed information. Finally, numerous experiments are conducted and the performances are compared between our presented algorithm and the classic neural style transfer algorithm.

We outline the paper as follows.

Section 1 introduces the background of style transfer. Some parallel works are summarized in

Section 2.

Section 3 demonstrates the effects of feature extraction from different layers of VGG19.

Section 4 has a specific illustration of our proposed algorithm.

Section 5 conducts the experiments and analyzes the experiment results. Merits and demerits are discussed in

Section 6.

Section 7 makes a conclusion for the whole paper.

2. Related Work

A deep network structure with multiple convolutional layers is proposed for image classification [

32]. Small filters are introduced for detailed features extraction and less parameters need to be trained simultaneously. Due to the favorable expansibility of the well trained VGG network, many other researchers adopt it as a pretrained model for further training.

In order to solve the content losing problem, a deep convolution neural network with dual streams is introduced for feature extraction and an edge capture filter is introduced for synthetic image quality improving [

33]. The convolution network contains two parts, detail recognizing network and style transfer network. A detail filter and a style filter are respectively applied to process the synthesized images from the detail recognizing network and style transfer network for detail extraction and color extraction. Finally, a style fuse model is used to integrate the detailed image and the color image into a high-quality style transfer image.

A style transfer method for color sketch synthesis is proposed by adopting dilated residual blocks to fuse the semantic content with the style image and it works without dropping the spatial resolution [

34]. Additionally, a filtering process is conducted after the liner color converts.

A novel method combined with the local and global style losses is presented to improve the quality of stylized images [

35]. The local style preserves the details of style image while the global style captures more global structural information. The fused architecture can well preserve the structure and color of the content image and it reduces the artifacts.

An end-to-end learning schema is created to optimize both the encoder and the decoder for better features extraction [

36]. The original pretrained VGG is fine-tuned to adequately extract features from style or content image.

In order to preserve the conspicuous regions in style and content images, the authors adopt a localization network to calculate the region loss from the SqueezeNet network [

37]. The stylized image can preserve the conspicuous semantics regions and simple texture.

Advanced Generative Adversarial Networks (GAN) technology is introduced to style transfer for cartoon images [

38]. Network training using the unpaired images makes the training set easier to build. To simulate the sharp edges of cartoon images, the edge loss is added to the loss function. The Gaussian smoothing method is first used to blur the content image, and then the discriminator determines the blurred image as a negative sample. A pre-trained process is executed in the previous several epochs for the generator to make the GAN network converge more quickly.

A multiple style transfer method based on GAN is proposed in [

39]. The generator is composed of an encoder, a gated transformer, and a decoder. The gated transformer contains different branches and different styles can be adopted by passing different branches.

3. Features Extraction from VGG19

In a pretrained convolution neural network, the convolution kernels own the ability to extract the features from a picture. Therefore, similarly as the classic neural style transfer, we adopted VGG19 to extract the features from content and style images. VGG19 is a very deep convolution neural network trained with ImageNet dataset and has excellent performance in image classification, object positioning, etc. VGG19 owns good versatility for features extractions and many works adopt it as the pretrained model. Different from the classic neural style transfer, we first analyze the extracted features by the VGG19 to select the suitable layers for feature extraction.

Since the features extracted by each layer of the VGG19 have multiple channels and cannot be directly visualized, we adopt the gradient descent algorithm to reconstruct the original image according to the features extracted by the different layer of the VGG19. The reconstructed images are initialized with Gaussian noise and then we put the initialized images into the VGG19. Then the extracted features are compared in the same layer and their

loss are calculated. Next, the

loss is back propagated to the reconstructed image and the reconstructed image is updated according to the gradient. When reconstructing the content image, we directly use the extracted features to calculate the

loss, as shown in Formula (1).

where

and

denote the original image and the reconstructed image, respectively.

denotes the output value of the

j-th layer of VGG19 network.

denote the width, height, and number of channels of the

j-th layer of VGG19 network.

denotes the Euclidean norm of the vector

. When reconstruct the style image, the Gram matrix needs to be firstly calculated by Formula (2).

where

denotes the reshaped matrix using the extracted features of the

j-th layer of VGG19. Then the style loss can be defined as Formula (3).

We exchange the layers used for features extraction and conduct much experiments. The experiment results are shown as follows.

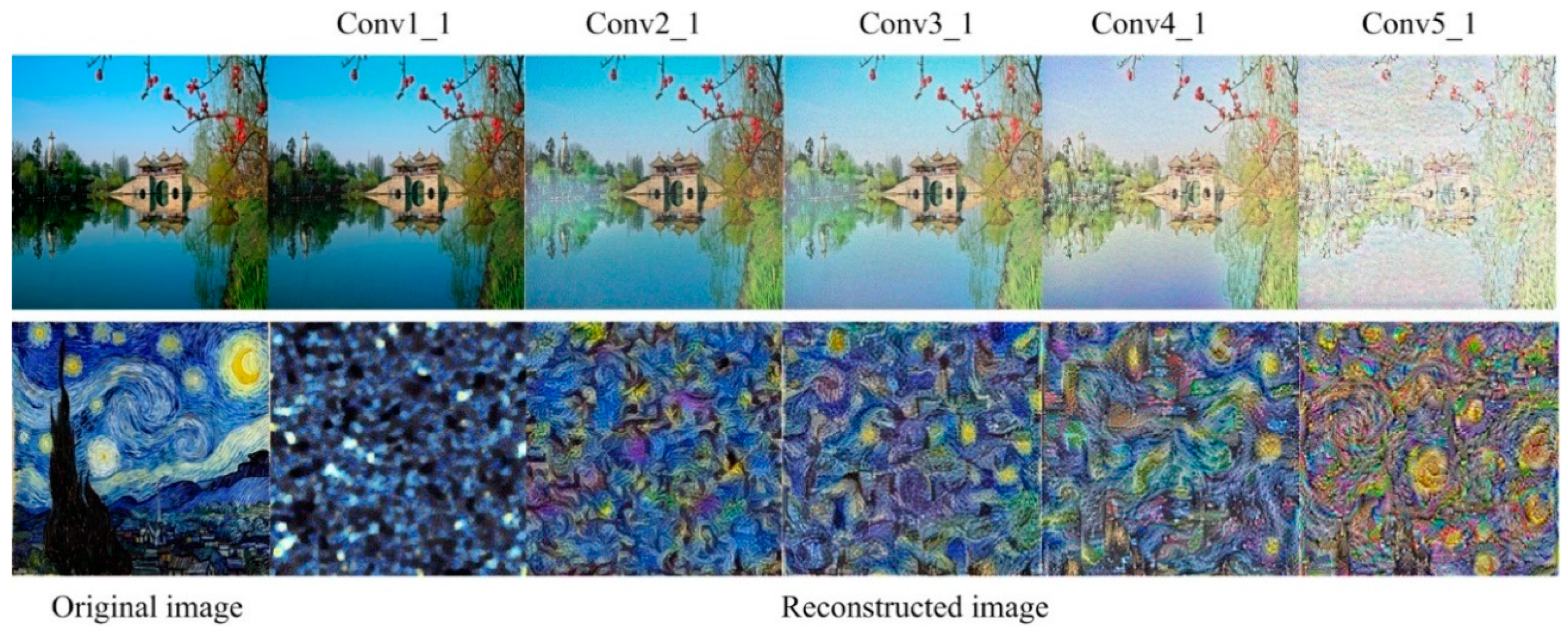

As we can clearly see from

Figure 1, the lower layers of VGG19 can preserve much more detailed information of content images. While, the deeper layers of VGG19 are more interested in the regular texture which represents the style of a picture. Therefore, the lower layers of VGG19 are more suitable for content features extraction and the deeper layers are more suitable for style features extraction.

4. Proposed Method

4.1. Data Processing

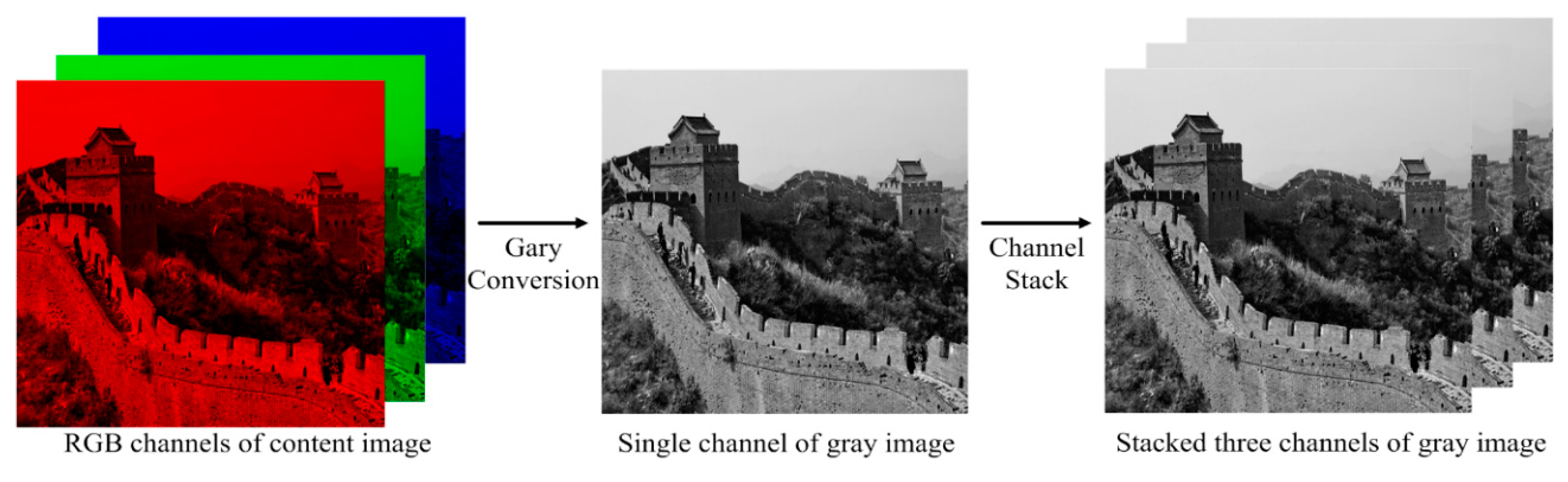

We firstly process the input data of the network. In order to avoid the problem of color mixing in the classic neural style transfer, a gray conversion is conducted for the content image. We use the classic physiology formula to transform each RGB pixel of content image into grey pixel as Formula (4).

where

R(

x),

G(

x), and

B(

x) denote the value of the Red, Green, and Blue (RBG) channels of the pixel

x in content image respectively. After the gray conversion, the original content image with three RGB channels is transformed into a gray image with one grayed channel. Whereas, the style image is an RGB picture with three channels, the grayed content image still needs to be converted to the form of three channels to match the format of the style image. Thus, we just simply stack three identical grayed channels as the content image with RGB format. The gray conversion is shown in

Figure 2.

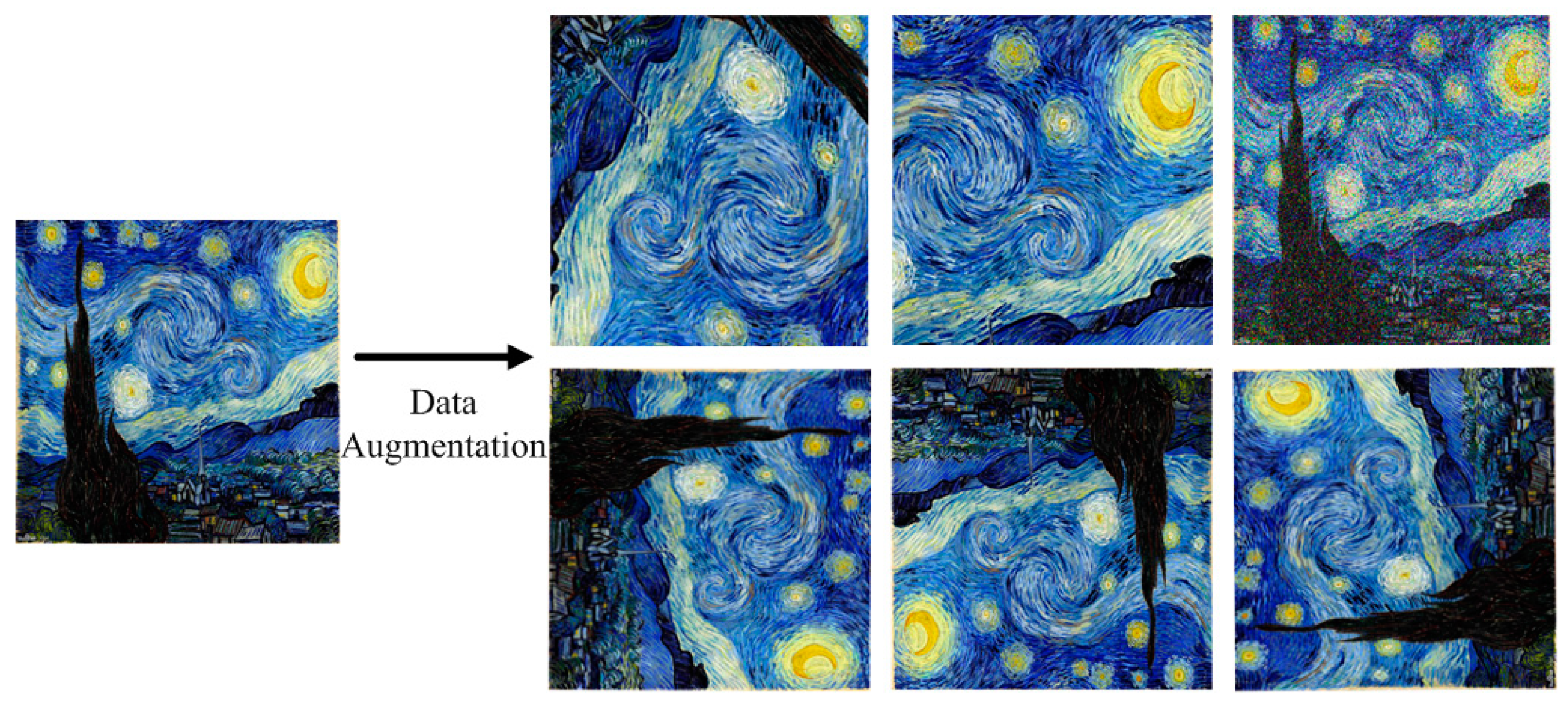

Another problem we need to solve is that the style image we used is not limited to the fully texture image. Some regular texture may only concentrate in a centralized area, and we need to use those local features to render the whole content image. Therefore, it’s necessary to conduct the data augmentation for the style image to enhance the local features. Following operations are taken for data augmentation.

- (1)

Zoom in on the original image and then crop the image of the same size.

- (2)

Randomly rotate the image at a certain angle and change the orientation of the image content.

- (3)

Flip the image horizontally or vertically.

- (4)

Randomly occlude part of the image.

- (5)

Randomly perturb RGB value of each pixel of the image by adding salt and pepper noise or Gaussian noise.

The data augmentation is illustrated as

Figure 3.

4.2. Network Model

The whole network contains two components and they are style transfer network and loss network. The style transfer network realizes a direct mapping between the content image and the stylized image. Then the stylized image is inputted to the loss network to calculate the content and style losses with inputted content and the style image. Next, the weight of the style transfer network will be updated according to the losses calculated in the loss network using gradient descent algorithm. The style transfer network is a deep neural network composed of multiple convolution layers and residual blocks. The weight of each layer in the style transfer network is randomly initialized while the loss network adopts the first few convolution layers of the pretrained VGG19 network. During the training process, only the weight of the style transfer network will be updated. The size of the images must be the same during the training phase, while in the test phase, we can input different sizes of images. The whole network structure and the operation flow are shown in

Figure 4.

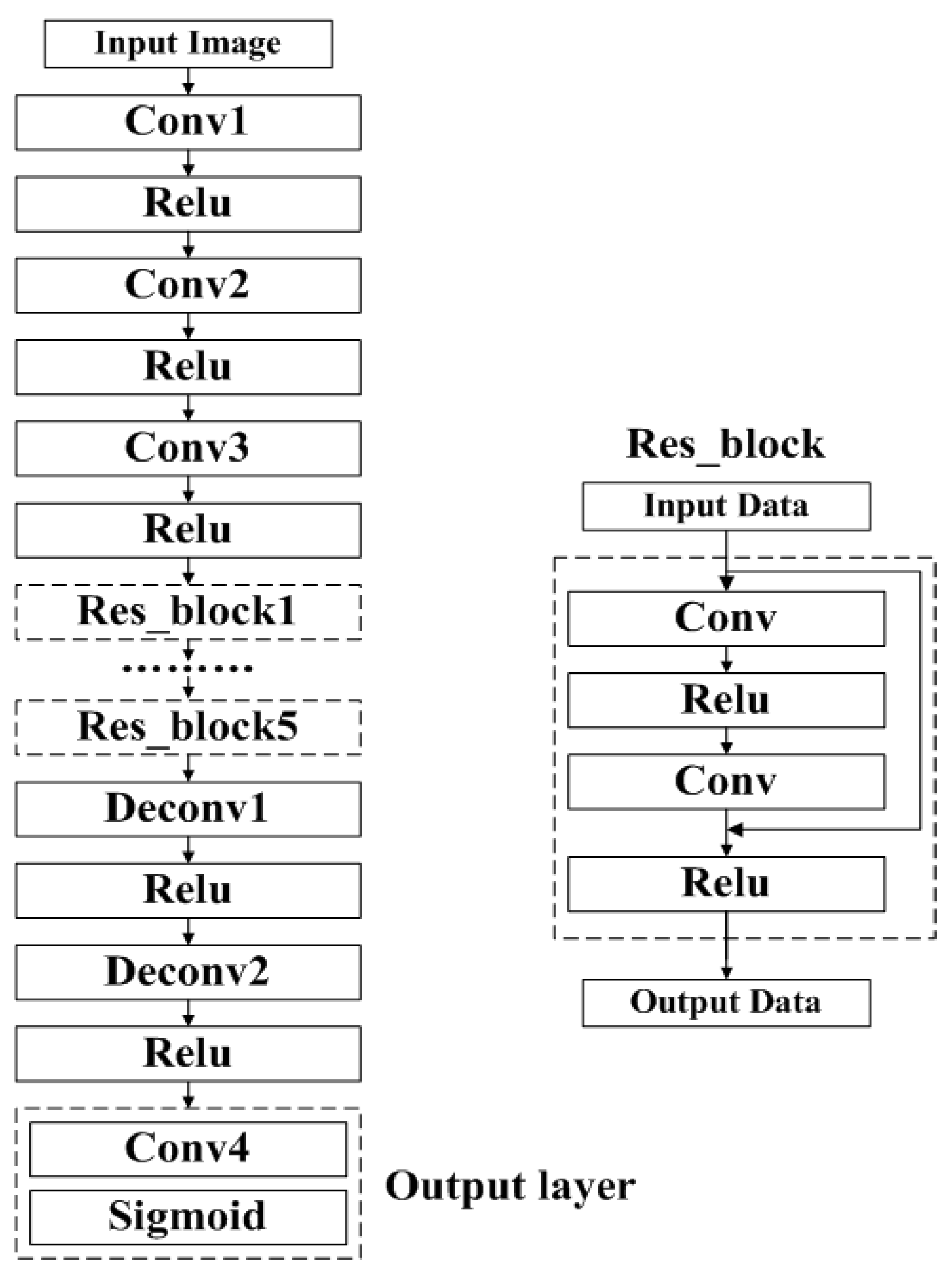

4.3. Style Transfer Network

The style transfer network is stacked by multiple convolution layers, residual blocks, and deconvolution layers. The convolution layers and deconvolution layers adopt short stride for down sampling and up sampling, respectively. Specifically, the style transfer network is composed of four convolution layers, five residual blocks, and two deconvolution layers. Besides the output layer, each convolution or deconvolution layer is followed by a Relu activation layer. The residua block is firstly represented by He et al. [

1] in 2016. After two liner transformations, the input data and its initial value is added through a “shortcut” and then the added value is inputted to the Relu activation layers. The whole structure of the style transfer network is shown as

Figure 5.

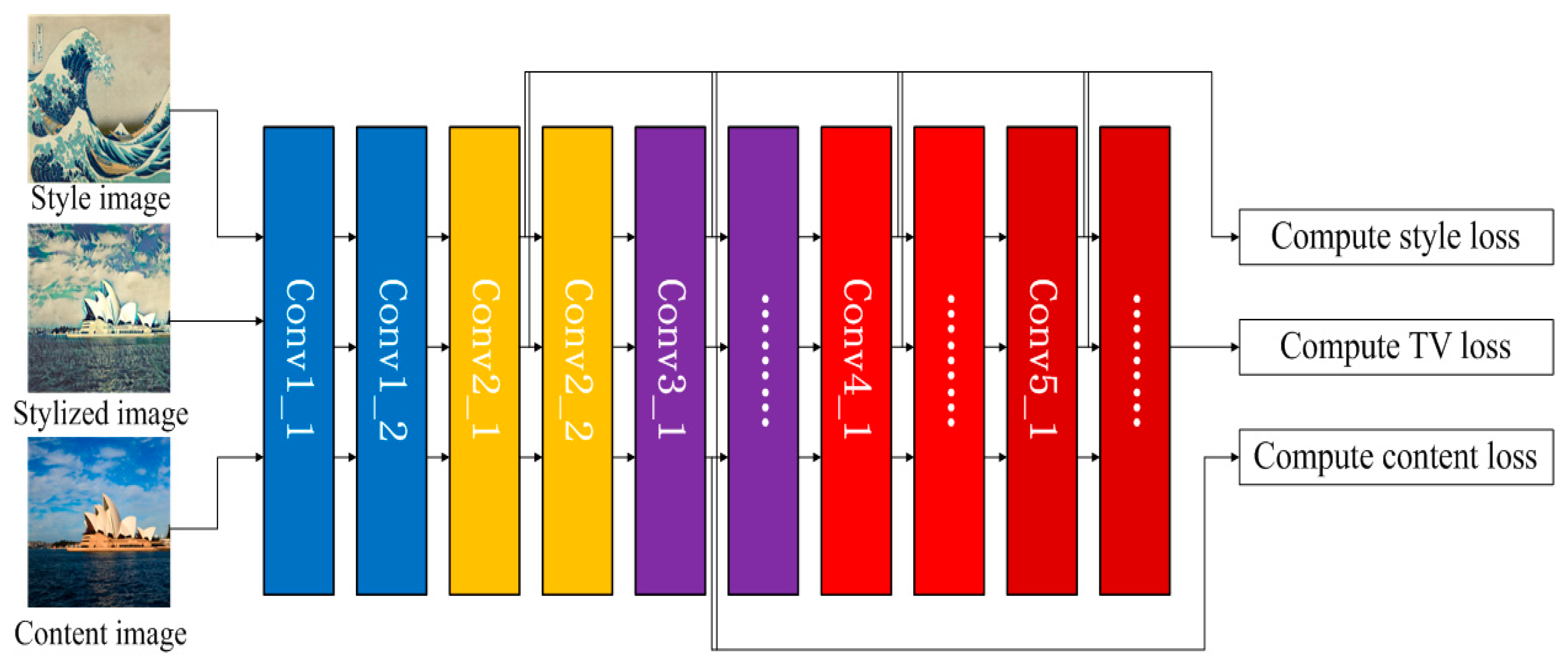

4.4. Loss Network and Loss Function

The input of the loss network contains three parts, the style image, the stylized image generated by the style transform network, and the content image. The loss network adopts the first few layers of the VGG19 to extract the features of images and its structure and workflow is shown as

Figure 6.

In previous sections, we analyze the ability of feature extraction for different layers in VGG19. The shallower convolutional layers extract the lower features of the image, thus preserving a large amount of detailed information. While the deeper convolution layers can extract higher features in the image, thereby preserving the style information of the image. According to the above rules, we finally adopt “Conv3_1” in VGG19 to extract the content features. Similarly, we adopt “Conv2_1”, “Conv3_1”, “Conv4_1”, and “Conv5_1” in VGG19 to extract the style features.

Content loss describes the difference of features between stylized image and content image. It can be calculated using Formula (5).

where

denotes the batch size of the input data.

,

and

denotes the height, width, and number of channels of the

l-th layer, respectively.

and

represent the

-th value of the

k-th channel after the content image and stylized image are activated by the

l-th layer of VGG19.

Gram matrix can be seen as an eccentric covariance matrix between features of an image. It can reflect the correlation between the two features. Additionally, the diagonal elements of the Gram matrix also reflect the trend of each feature that appears in the image. Gram matrix can measure the features of each dimension and the relationship between different dimensions. Therefore, it can reflect the general style of the entire image. We only need to compare the Gram matrix between different images to represent the difference of their styles. The gram matrix can be calculated using Formula (6).

where

and

both denote the number of channels in the

-th layer.

Style loss means the difference between the Gram matrix of the stylized image and the Gram matrix of the style image. It can be calculated using Formula (7).

where

and

denote the Gram matrix of extracted features in

-th layer for the style image and stylized image.

Then we define the total style loss as the weight sum of all layers and it can be defined as Formula (8).

where

denotes the weight of

-th layer.

In order to make the generated stylized image smoother, TV loss is introduced to be a regularizer to increase the smoothness of the generated image. TV loss calculates the square of the difference between each pixel and the next pixel in the horizontal and vertical directions. TV loss can be calculated using Formula (9).

Finally, we can define the total loss as the weight sum of

,

and

. The total loss can be represented as Formula (10).

where

,

and

are three adjustment factors and their values can be adjusted according to the actual demand. We will have a discussion on their values in

Section 5.3.

The final target in the training phase is to minimize . The weight of the style transfer network will be updated according to the total loss by gradient descent algorithm.

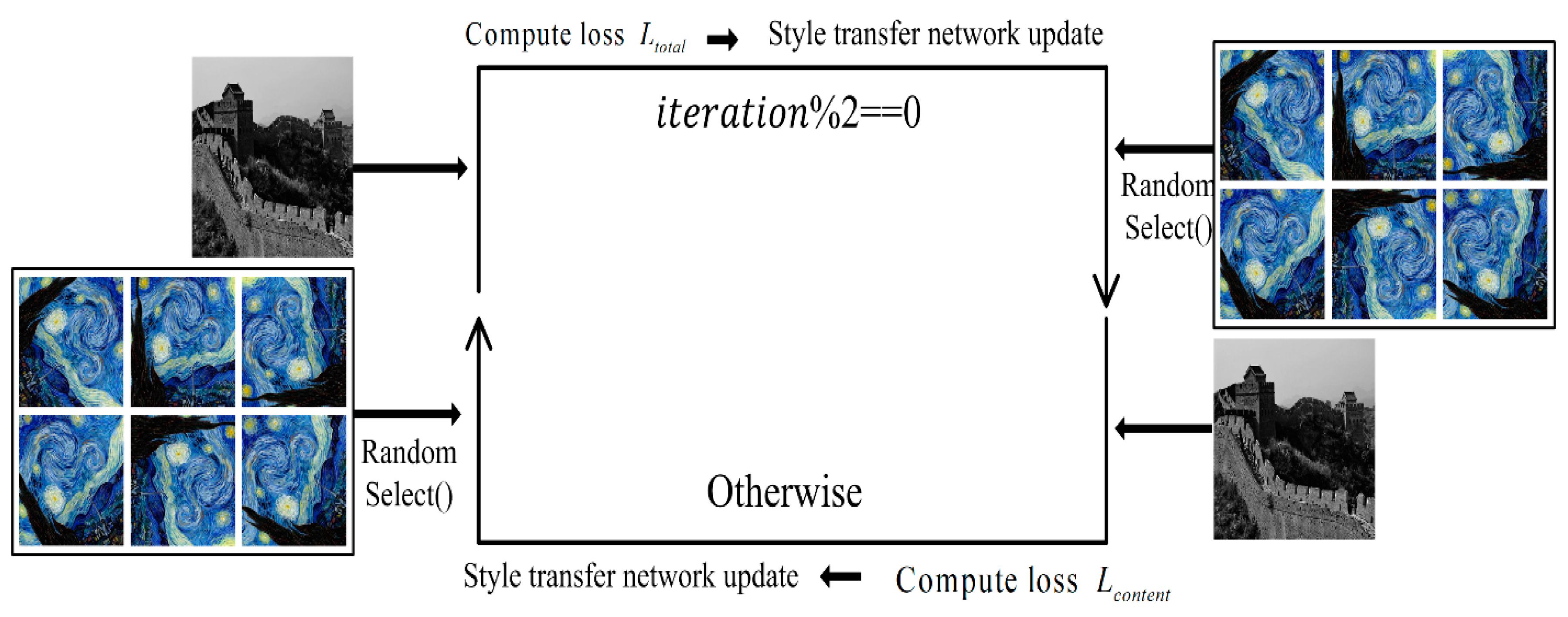

4.5. Cross Training Strategy

In order to preserve as much content information as possible, we use a cross training method by rotationally adopting different loss function. When the num of iteration is even, we adopt the original total loss as loss function, otherwise, the content loss will be chosen as the loss function. The loss function can be defined as Formula (11).

The workflow of the training process is shown as

Figure 7.

5. Experiments and Analysis

5.1. Experiment Environment and Parameters

In order to have an evaluation of our presented algorithm, we compare it with the classic neural style transfer algorithm proposed by Gatys et al. The experiment environment is a workstation using the Ubuntu operation system. The workstation is also equipped with a GTX 1080 Ti (10G Memory) graphics card to accelerate the training process of the style transfer network. The relevant software versions are shown in the table below (

Table 1).

The specific information of convolution kernels in the style transfer network are illustrated in

Table 2.

The relevant parameters of the network are listed in

Table 3.

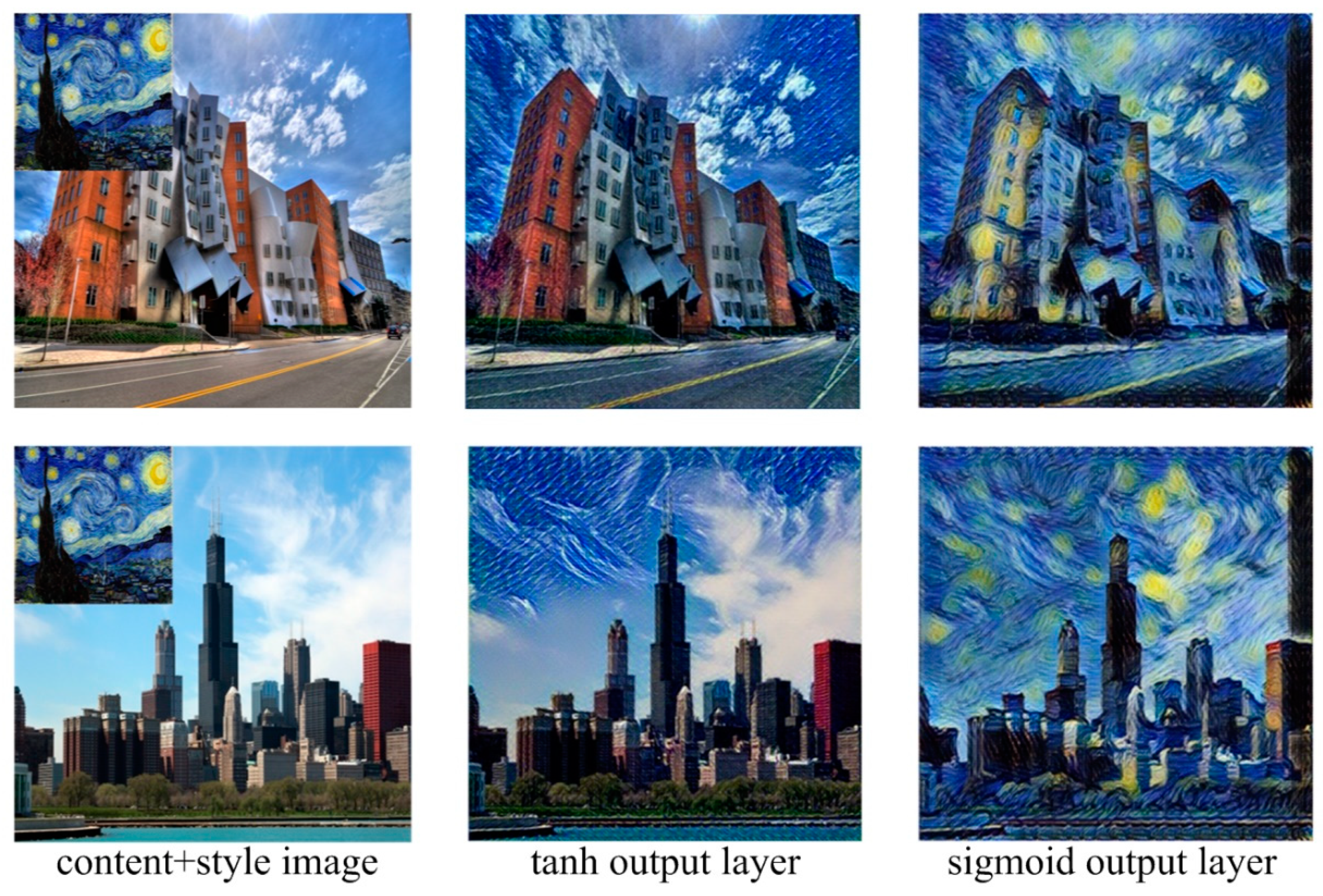

5.2. Activation Function in Output Layer

In our proposed algorithm, the output layer of the style transfer network can adopt tanh or sigmoid as its activation function. When adopting tanh as the activation function, the final output will adopt Formula (12).

where

is the output value of the previous layer. When adopting sigmoid as the activation function, the final output will adopt Formula (13). In both ways, the output value of the output layer can be between 0 and 255.

In order to have an evaluation of two different activation functions in the output layer, we test the performance of the network using the same content and style image. The experiment result is shown as

Figure 8. We can clearly see from

Figure 8 that when adopting tanh as the activation function, the performance is poor and only part of the image is stylized. Whereas, the style image can be well integrated into the content image by the method of adopting sigmoid as the activation function in the output layer.

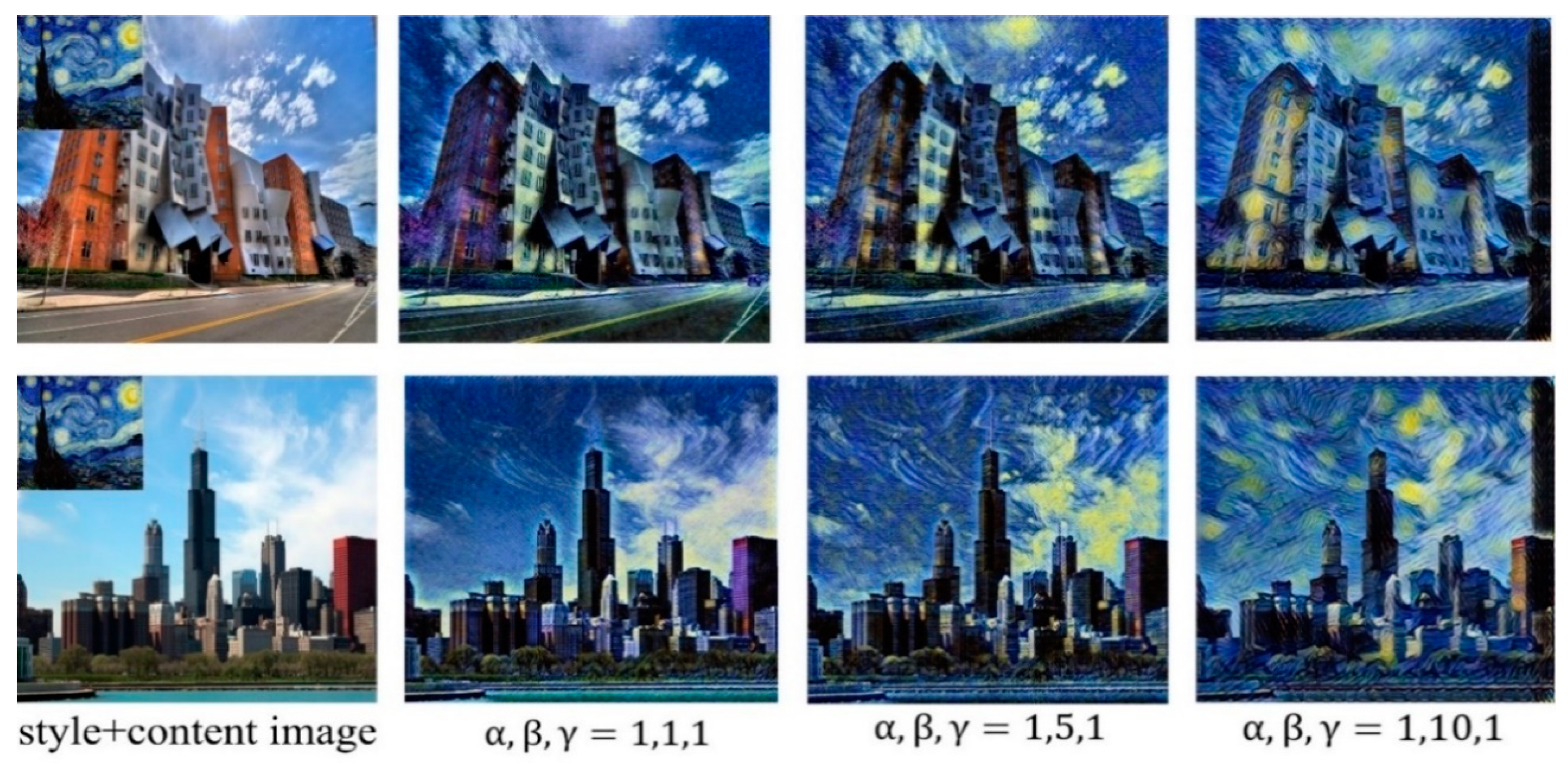

5.3. Loss Control Factors Adjustment

As we have discussed in the loss network,

are three parameters to adjust the proportion of content loss, style loss, and TV loss. The proportion of content loss and style loss has a significant influence on the levels of stylization. When the content loss accounts for a larger proportion, the stylized image will preserve more information of the content image. On the contrary, the stylized image will be better rendered with the value of

increasing. TV loss only affects the smoothness of the stylized image and it owns a small impact on the overall rendering effect. The rendering can be strengthened by decreasing the value of

or increasing the value of

. Different applications can retrofit the values of

and

based on their requirements. As

Figure 9 illustrates, when

is 1, the stylization is shallow and when

is increased to 10, the stylization is obvious.

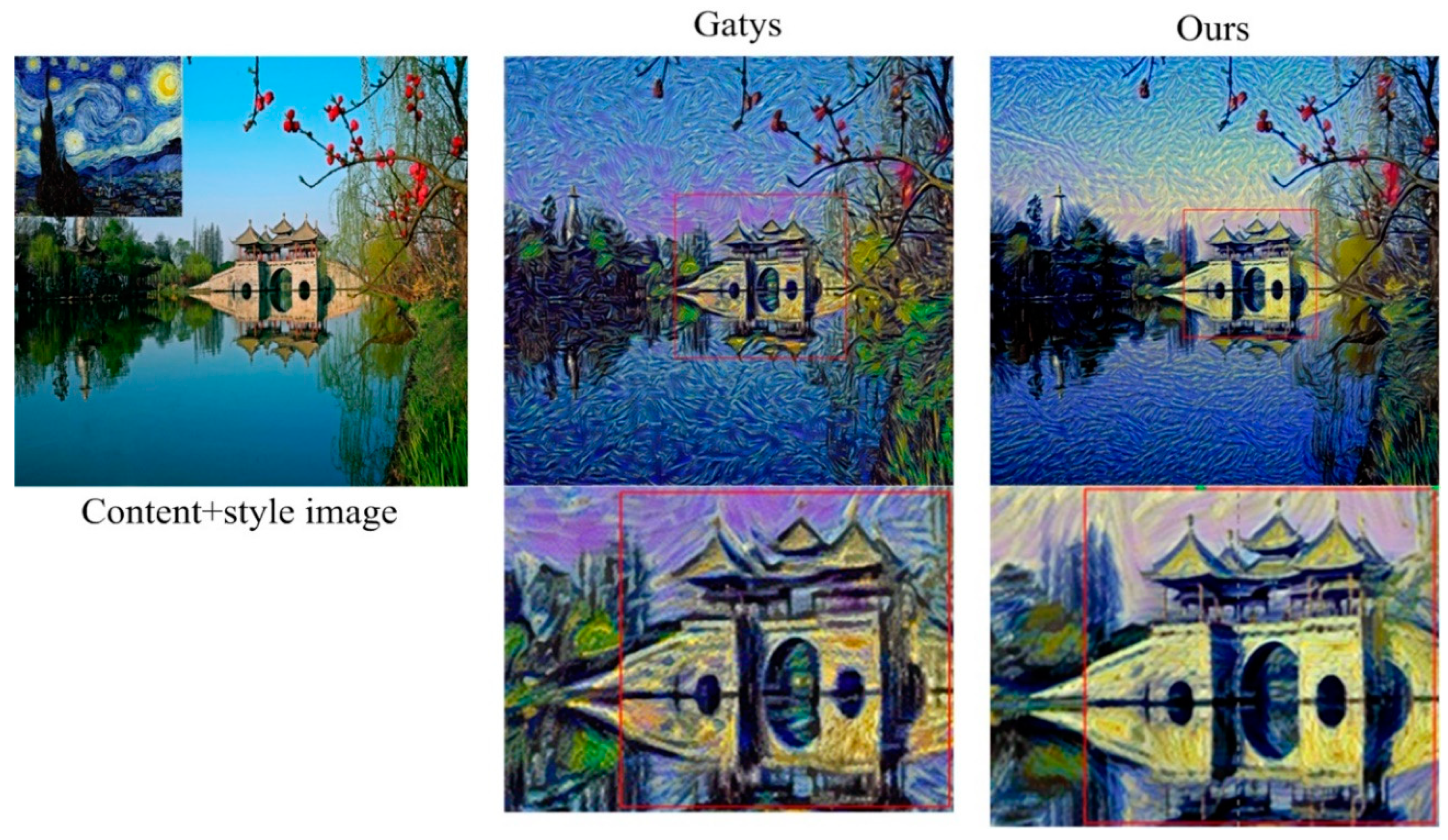

5.4. Comparison of Details Preserving

In a stylized image, we expect the objects in it are still recognizable while the background is well rendered. Classic neural style transfer achieves a great performance in image rendering, however, it’s weak to preserve the details in the content image. In order to evaluate the performance of the presented algorithm, we compare it with the classic neural style transfer in terms of details preserving. Both two algorithms adopt the same content and style image with

pixels. Classic neural style transfer iterates 1000 times for fully rendering while our presented algorithm iterates 100 epochs for fully training. The experiment result is shown as

Figure 10. As

Figure 10 illustrates, the classic neural style transfer destroyed partial details from the content image. As you can clearly see in the enlarged picture, that the pillars and the roof of the pavilion have different degrees of missing. While, in our improved algorithm, those details are preserved and the background is well rendered.

5.5. Comparison of Characters Rendering

Sometimes, characters are contained in the content image and commonly, we expect those characters can preserve their original features rather than be rendered. In order to have an evaluation of the presented algorithm in terms of characters rendering, we compare it with the classic neural style transfer. Both two algorithms use the same content and style image with 1024 × 1024 pixels. Classic neural style transfer iterates only 500 times to preserve more features of characters and our presented algorithm still iterates 100 epochs for fully training. The experiment result is shown as

Figure 11. As we can clearly see from

Figure 11, that both two algorithms achieve a good performance in stylization. However, the classic neural style transfer algorithm stylizes the characters the same as the background which results in the facial features, contours, etc., of the characters become blurred and distorted. This can be explained as that classic neural style transfer adopts the optimization method to convert the original image to the stylized image. Therefore, the network will treat each pixel in the picture indiscriminately, making the content image close to the style image. While in our presented algorithm, the trained deep neural network will recognize the characters in the image and separate them from the background. Thus, the characters still keep complete features and clear outlines.

5.6. Comparison of Time Consuming

Finally, we have an evaluation of our presented algorithm compared with the classic neural style transfer in terms of time consuming. Images with different pixels are tested respectively. Both in the classic neural style transfer and our present algorithm, the network needs to be adjusted to fit different sizes of input data. Graphics Processing Unit (GPU) is only used when execute the two different algorithms. Images with high resolution will have a better visual effect and meanwhile, it takes more time for the network to render. Experiment result is shown in

Table 4. As we can clearly see from

Table 4, the time consuming of both two algorithms increases with image pixel increasing. For the image with the same pixel, our proposed algorithm achieves an enhancement of three orders of magnitude compared with the classic neural style transfer. However, in our presented algorithm, a long time is needed to train the style transfer network.

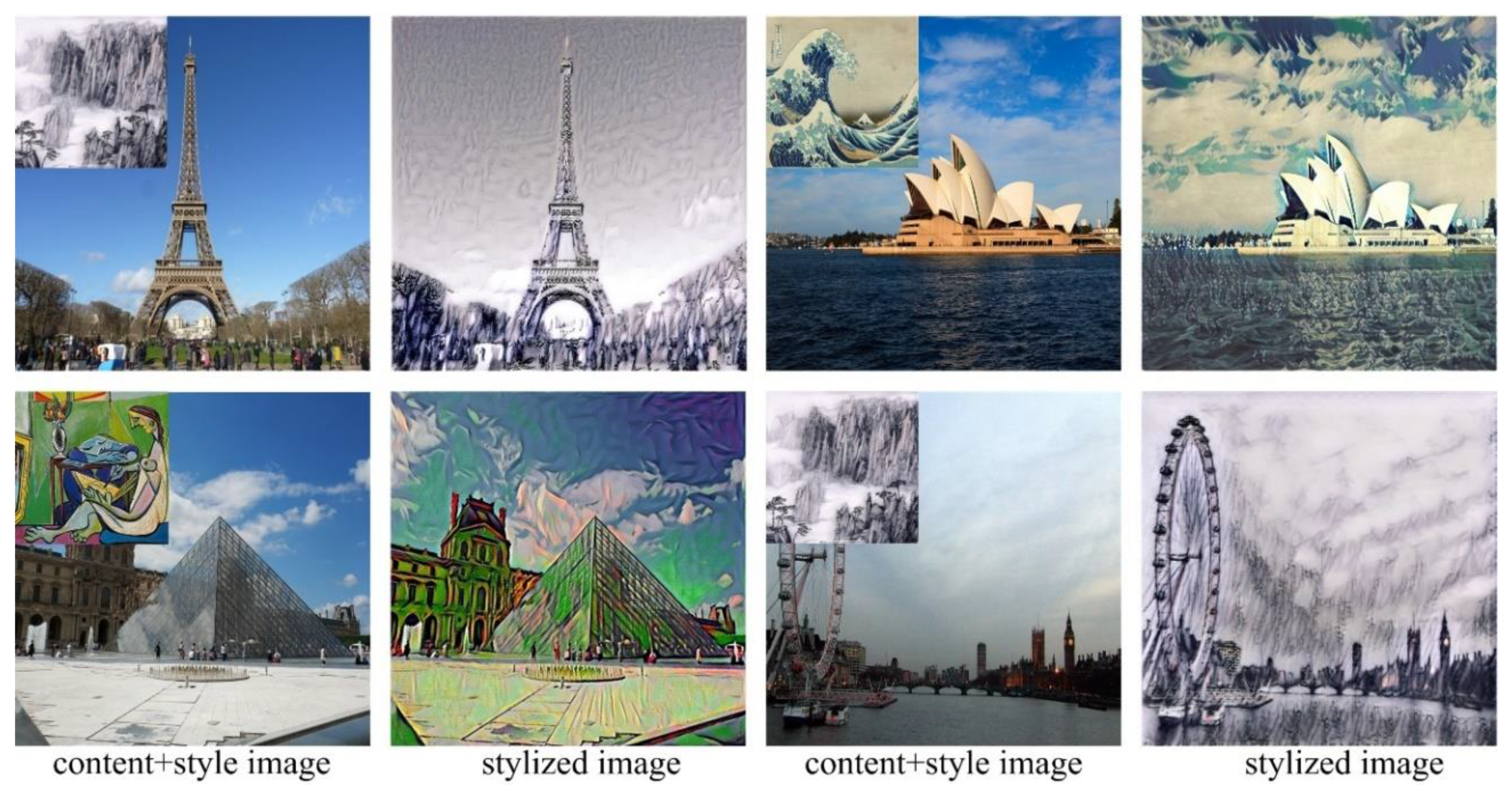

5.7. Other Examples Using Proposed Algorithm

Some other examples using the proposed algorithm are shown as

Figure 12.

6. Discussion

The convolution neural network owns an excellent ability for features extraction and it provides an alternative way for the feature comparison between different images. The classic neural style transfer algorithm regards the stylization task as an image-optimization-based online processing problem. While, our presented algorithm regards it as a model-optimization-based offline processing problem. The most prominent advantage of the presented algorithm is the short running time for stylization. Since the network model can be trained in advance, it’s suitable for those delay sensitive application especially real-time style transfer. Another advantage is that it can separate important targets from the background to avoid the content loss. Contrary to image-optimization, model-optimization aims to train a model to directly map the content image to the stylized image. The training process makes the model capable to recognize different objects such as characters and buildings, therefore, it can better preserve the details of those objects and separate them from the background.

Meanwhile, there are also some demerits of the proposed algorithm. It’s inflexible to switch the style. Once we want to change the style, we need to train a brand-new model which may take a lot of time. However, the image-optimization-based method only needs to change the style image and then iterates to the final solution. Additionally, our presented algorithm needs to run on the device with better performance such as computation and memory which increases the cost.

7. Conclusions

Classic neural style transfer has the demerits of time consuming and details losing. In order to accelerate the speed of stylization and improve the quality of the stylized image, in this paper, we present an improved style transfer algorithm based on a deep feedforward neural network. A style transfer network stacked by multiple convolution layers and a loss network work based on VGG19 are respectively constructed. Three different losses which represent the content, style, and smoothness are defined in the loss network. Then, the style transfer network is trained in advance, adopting the training set, and the loss is calculated by the loss network to update the weight of the style transfer network. Meanwhile, a cross training strategy is adopted during the training process. Our feature work will mainly focus on single model based multi-style transfer and special style transfer combined with Generative Adversarial Networks (GAN).