Identifying Factors Affecting the Quality of Teaching in Basic Science Education: Physics, Biological Sciences, Mathematics, and Chemistry

Abstract

1. Introduction

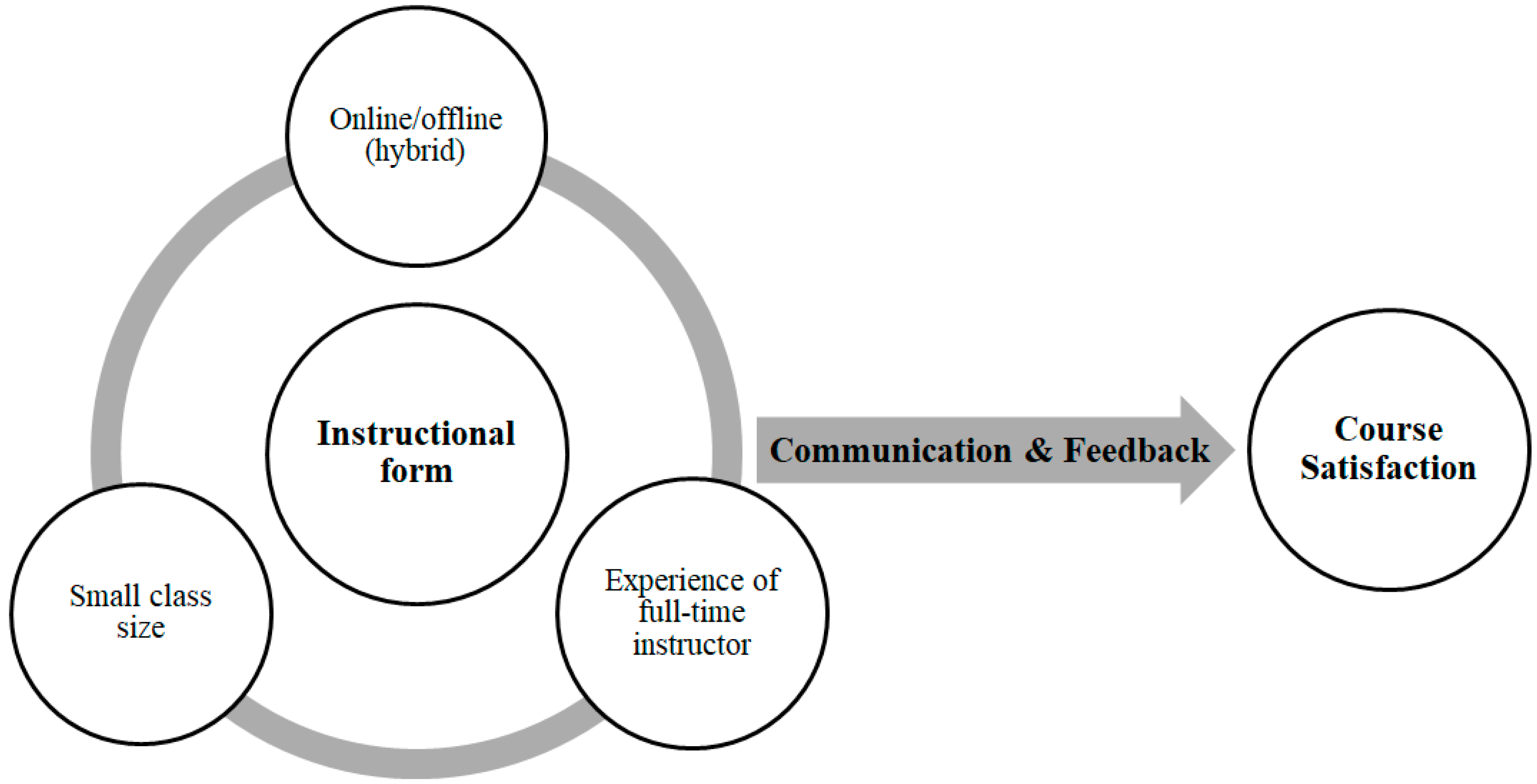

2. Theoretical Framework

2.1. Class Size Effect

2.2. Mode of Course Delivery Effect

2.3. Instructor Experience Effect

3. Student Evaluation Data and Empirical Models

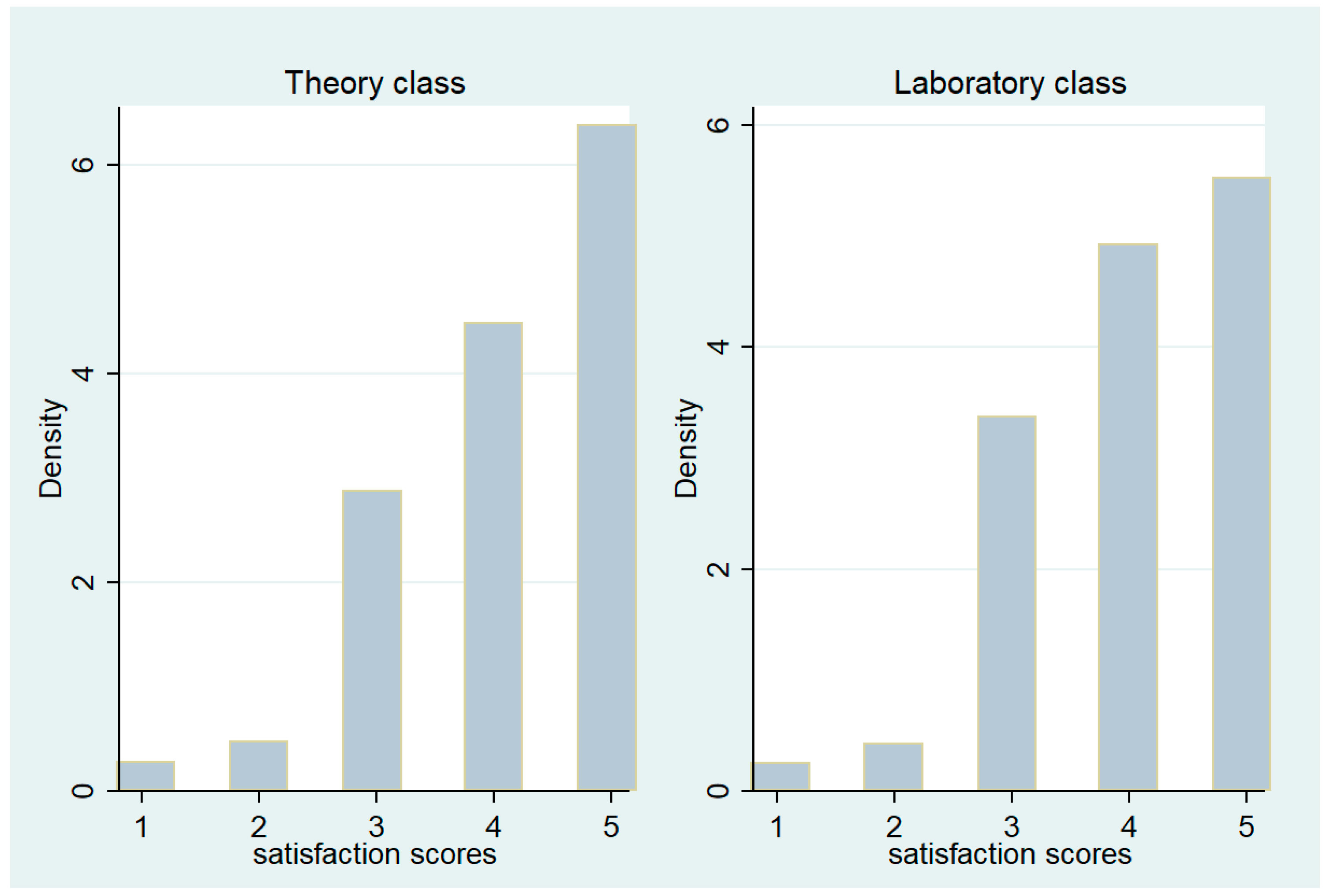

3.1. Dependent Variable

3.2. Independent Variables

4. Main Results

4.1. Class Size

4.2. Mode of Course Delivery

4.3. Instructor Experience

4.4. Interaction Effects of Class Type and Feedback-Related Factors

4.5. Other Determinants

4.6. Feedback Effects by Subjects

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Variable | Theory class: mean | Theory class: (SD) | Laboratory class (experiment practice): mean | Laboratory class (experiment practice): (SD) |
|---|---|---|---|---|
| Satisfaction scores | 3.94 | (0.94) | 3.92 | (0.97) |
| Instructor’s tenure (years) | 7.11 | (7.34) | 12.50 | (8.23) |
| Professor | 0.26 | (0.44) | 0.59 | (0.49) |
| Associate | 0.13 | (0.34) | 0.26 | (0.44) |
| Assistant | 0.15 | (0.36) | 0.13 | (0.34) |
| Lecturer | 0.46 | (0.50) | 0.02 | (0.13) |
| Class size | 79.04 | (26.93) | 27.96 | (5.81) |
| Hybrid course | 0.65 | (0.48) | 0.50 | (0.42) |
| Students’ age | 20.28 | (2.04) | 19.99 | (1.87) |
| Female student | 0.20 | (0.40) | 0.20 | (0.40) |
| Grade in the current course | 3.02 | (1.22) | 3.58 | (0.91) |
| Expected grade | 3.29 | (0.83) | 3.45 | (0.74) |
| Sample size | 51,399 | | 12,132 | |

| Variable | All | All |
|---|---|---|
| | (1) | (2) |
| Laboratory class | −0.078 *** | −0.159 *** |
| | (0.017) | (0.018) |
| Tenure (in years) | −0.004 *** | −0.005 *** |
| | (0.001) | (0.001) |
| Instructors’ rank | | |
| Professor | 0.098 *** | 0.106 *** |
| | (0.016) | (0.016) |
| Associate | 0.132 *** | 0.141 *** |
| | (0.012) | (0.012) |
| Assistant | 0.180 *** | 0.185 *** |
| | (0.011) | (0.011) |
| Log of class size | −0.045 *** | −0.047 *** |
| | (0.013) | (0.015) |
| Hybrid course | 0.068 *** | 0.063 *** |
| | (0.011) | (0.011) |
| Lab × Tenure | | 0.004 *** |
| | | (0.001) |
| Lab × Log(size) | | −0.008 *** |
| | | (0.003) |
| Lab × Hybrid | | 0.259 *** |
| | | (0.015) |
| Students’ age | 0.037 *** | 0.037 *** |
| | (0.002) | (0.002) |
| Female student | −0.096 *** | −0.096 *** |
| | (0.009) | (0.009) |
| Grade in the current course | −0.005 | −0.005 |
| | (0.004) | (0.004) |
| Expected grade | 0.421 *** | 0.421 *** |
| | (0.005) | (0.005) |
| Department | | |
| Biological Sciences | 0.062 *** | 0.060 *** |
| | (0.014) | (0.014) |
| Mathematics | 0.052 *** | 0.052 *** |
| | (0.011) | (0.011) |
| Chemistry | 0.071 *** | 0.074 *** |
| | (0.013) | (0.013) |
| Year | Yes | Yes |
| Constant | 1.820 *** | 1.842 *** |
| | (0.073) | (0.081) |
| Observations | 63,531 | 63,531 |
| R-squared | 0.145 | 0.145 |
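
The interaction rows in the table above can be combined with the main effects to read off separate slopes for theory and laboratory classes. The following is a minimal sketch of that arithmetic, not the authors' code. It assumes a linear specification and assumes that the three interaction coefficients belong to the same column as the main effects listed under (2); the dictionary and function names are hypothetical.

```python
# Coefficients copied from the regression table above.
# Assumption: the "Lab x ..." interaction terms enter the column (2) model.
MAIN = {"tenure": -0.005, "log_class_size": -0.047, "hybrid": 0.063}
LAB_INTERACTION = {"tenure": 0.004, "log_class_size": -0.008, "hybrid": 0.259}


def implied_slope(variable: str, laboratory: bool) -> float:
    """Return the implied slope of `variable` for one class type.

    In a linear model with an interaction term, the laboratory slope is
    the main effect plus the interaction coefficient; the theory slope is
    the main effect alone.
    """
    slope = MAIN[variable]
    if laboratory:
        slope += LAB_INTERACTION[variable]
    return round(slope, 3)


for name in MAIN:
    theory = implied_slope(name, laboratory=False)
    lab = implied_slope(name, laboratory=True)
    print(f"{name:>15}: theory {theory:+.3f}, laboratory {lab:+.3f}")
```

Under these assumptions the tenure slope moves from −0.005 in theory classes to −0.001 in laboratory classes, and the hybrid-course coefficient from 0.063 to 0.322. The sketch only adds point estimates; it says nothing about the statistical significance of the sums, which would require the coefficient covariances.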

| Variable | Theory | Laboratory |
|---|---|---|
| | (3) | (4) |
| Tenure (in years) | −0.003 ** | 0.004 *** |
| | (0.002) | (0.001) |
| Instructors’ rank | | |
| Professor | 0.066 | 0.083 *** |
| | (0.070) | (0.019) |
| Associate | 0.030 | 0.148 *** |
| | (0.068) | (0.013) |
| Assistant | 0.040 | 0.200 *** |
| | (0.071) | (0.012) |
| Log of class size | −0.003 | −0.083 *** |
| | (0.018) | (0.031) |
| Hybrid course | 0.269 | 0.062 *** |
| | (0.511) | (0.012) |
| Student’s age | 0.038 *** | 0.035 *** |
| | (0.002) | (0.005) |
| Female student | −0.089 *** | −0.121 *** |
| | (0.010) | (0.021) |
| Grade in the current course | −0.002 | −0.020 * |
| | (0.004) | (0.011) |
| Expected grade | 0.421 *** | 0.422 *** |
| | (0.006) | (0.013) |
| Department | | |
| Biological Sciences | 0.002 | 0.215 *** |
| | (0.017) | (0.028) |
| Mathematics | 0.010 | 0.181 *** |
| | (0.013) | (0.064) |
| Chemistry | 0.002 | 0.147 *** |
| | (0.019) | (0.019) |
| Year | Yes | Yes |
| Constant | 1.643 *** | 2.021 *** |
| | (0.094) | (0.153) |
| Observations | 51,399 | 12,132 |
| R-squared | 0.151 | 0.128 |

| Variable | Physics | Biological Sciences | Mathematics | Chemistry |
|---|---|---|---|---|
| | (5) | (6) | (7) | (8) |
| Laboratory class | −0.228 *** | 0.101 ** | 0.121 * | −0.095 * |
| | (0.031) | (0.050) | (0.069) | (0.054) |
| Tenure (in years) | −0.002 | 0.011 *** | 0.012 *** | 0.006 *** |
| | (0.002) | (0.002) | (0.001) | (0.002) |
| Instructors’ rank | | | | |
| Professor | 0.171 *** | −0.011 | 0.255 *** | 0.016 |
| | (0.050) | (0.042) | (0.026) | (0.037) |
| Associate | 0.175 *** | 0.136 *** | 0.133 *** | 0.069 *** |
| | (0.042) | (0.036) | (0.020) | (0.022) |
| Assistant | 0.251 *** | 0.101 | 0.240 *** | −0.014 |
| | (0.041) | (0.074) | (0.015) | (0.027) |
| Log of class size | −0.117 *** | −0.111 | −0.036 | −0.072 ** |
| | (0.031) | (0.076) | (0.023) | (0.034) |
| Hybrid course | 0.137 *** | 0.037 *** | 0.061 *** | 0.073 *** |
| | (0.039) | (0.032) | (0.015) | (0.012) |
| Student’s age | 0.043 *** | 0.037 *** | 0.036 *** | 0.036 *** |
| | (0.005) | (0.005) | (0.003) | (0.004) |
| Female student | −0.099 *** | −0.086 *** | −0.093 *** | −0.096 *** |
| | (0.021) | (0.022) | (0.015) | (0.017) |
| Grade in the current course | −0.013 | −0.049 *** | 0.010 * | −0.016 * |
| | (0.009) | (0.014) | (0.006) | (0.009) |
| Expected grade | 0.449 *** | 0.428 *** | 0.406 *** | 0.414 *** |
| | (0.011) | (0.017) | (0.008) | (0.011) |
| Year | Yes | Yes | Yes | Yes |
| Constant | 1.883 *** | 1.344 *** | 1.516 *** | 2.246 *** |
| | (0.172) | (0.340) | (0.124) | (0.204) |
| Observations | 14,729 | 7,445 | 25,875 | 15,482 |
| R-squared | 0.157 | 0.126 | 0.157 | 0.126 |
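
Because class size enters the regressions in logs, its coefficients are easier to read once converted into the predicted score change for a proportional change in class size. The sketch below illustrates that conversion for the subject-specific columns (5) to (8). It assumes a level-log specification, that is, the satisfaction score in levels regressed on the natural log of class size; the function name `score_change` is hypothetical.

```python
import math

# "Log of class size" coefficients from columns (5)-(8) of the table above.
LOG_SIZE_COEF = {
    "Physics": -0.117,
    "Biological Sciences": -0.111,
    "Mathematics": -0.036,
    "Chemistry": -0.072,
}


def score_change(coef: float, size_ratio: float) -> float:
    """Predicted score change when class size is multiplied by size_ratio.

    Assumes score = ... + coef * ln(class size), so the change equals
    coef * ln(size_ratio), holding the other regressors fixed.
    """
    return coef * math.log(size_ratio)


for subject, coef in LOG_SIZE_COEF.items():
    change = score_change(coef, 2.0)
    print(f"{subject:<20} doubling class size: {change:+.3f}")
```

Under this reading, doubling class size in Physics corresponds to a change of roughly −0.08 points on the satisfaction scale. The Biological Sciences and Mathematics coefficients carry no significance stars in the table, so their implied changes should be read with extra caution.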

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cho, J.; Baek, W. Identifying Factors Affecting the Quality of Teaching in Basic Science Education: Physics, Biological Sciences, Mathematics, and Chemistry. Sustainability 2019, 11, 3958. https://doi.org/10.3390/su11143958
