Evaluating Knowledge and Assessment-Centered Reflective-Based Learning Approaches

Abstract

1. Introduction

2. Method

2.1. Context

2.2. Participants

2.3. Sequential Methodologies and Conceptual Framework

2.4. Instruments and Measures

2.4.1. The RLQuest

2.4.2. Assessment of the Students’ Reflections

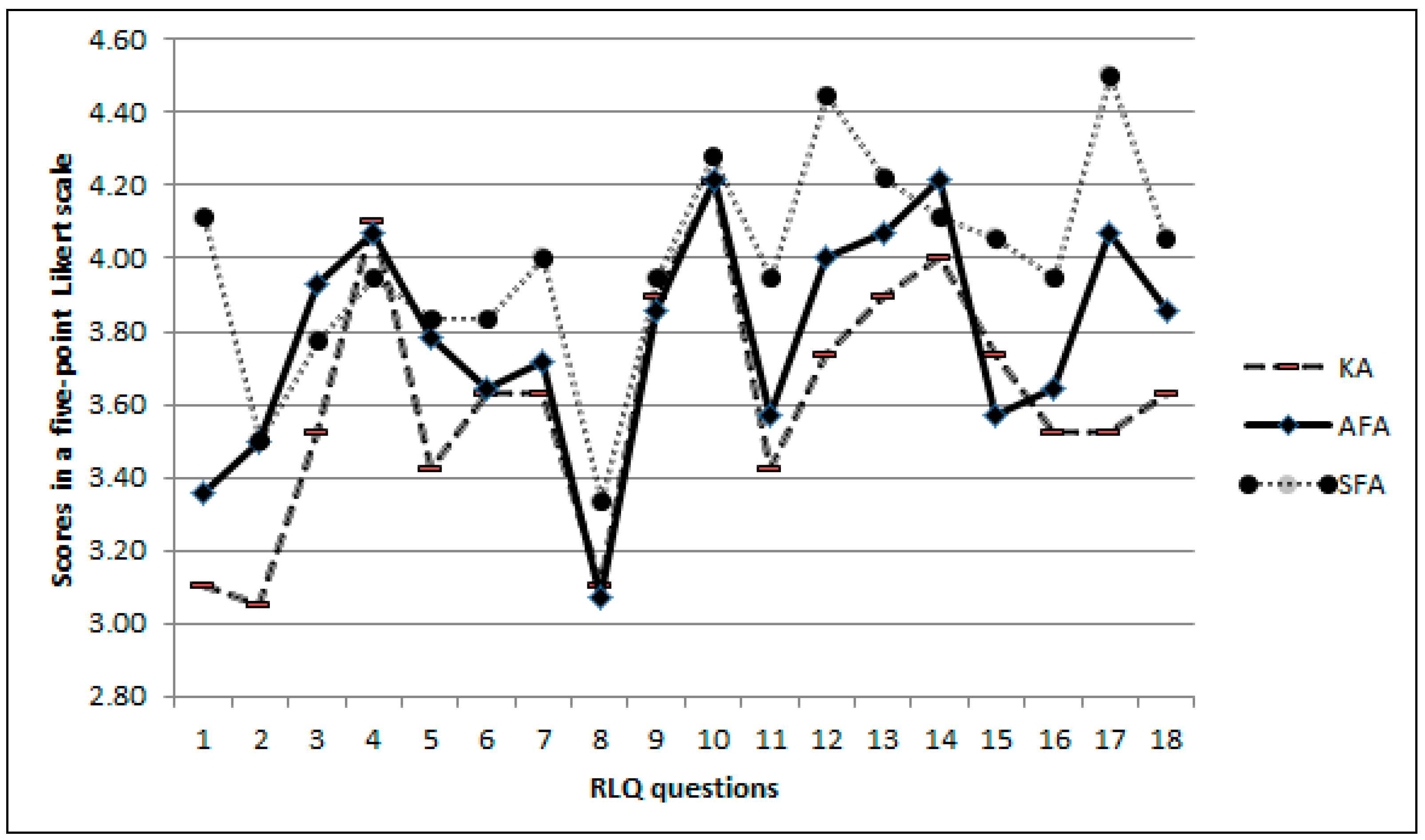

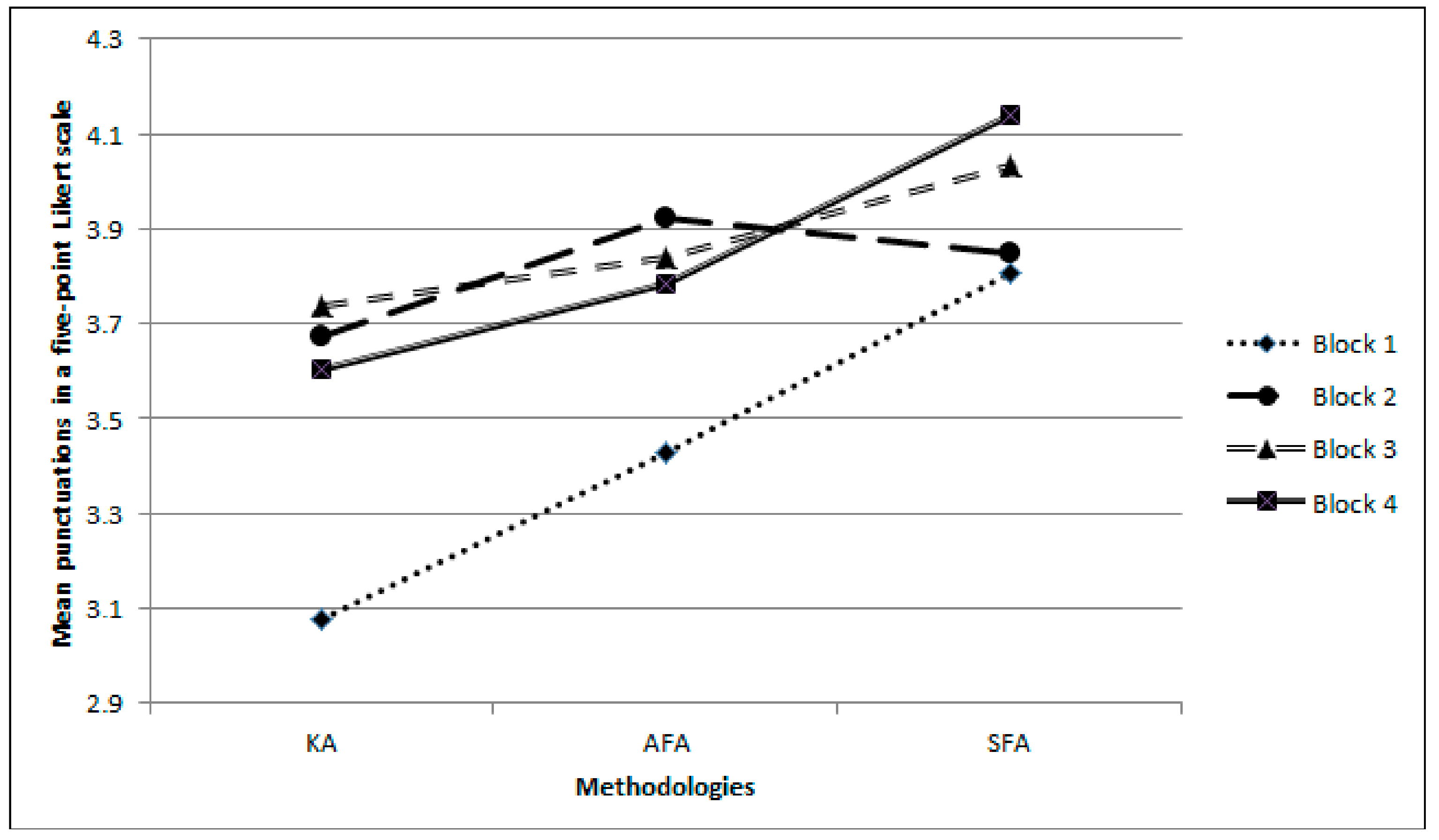

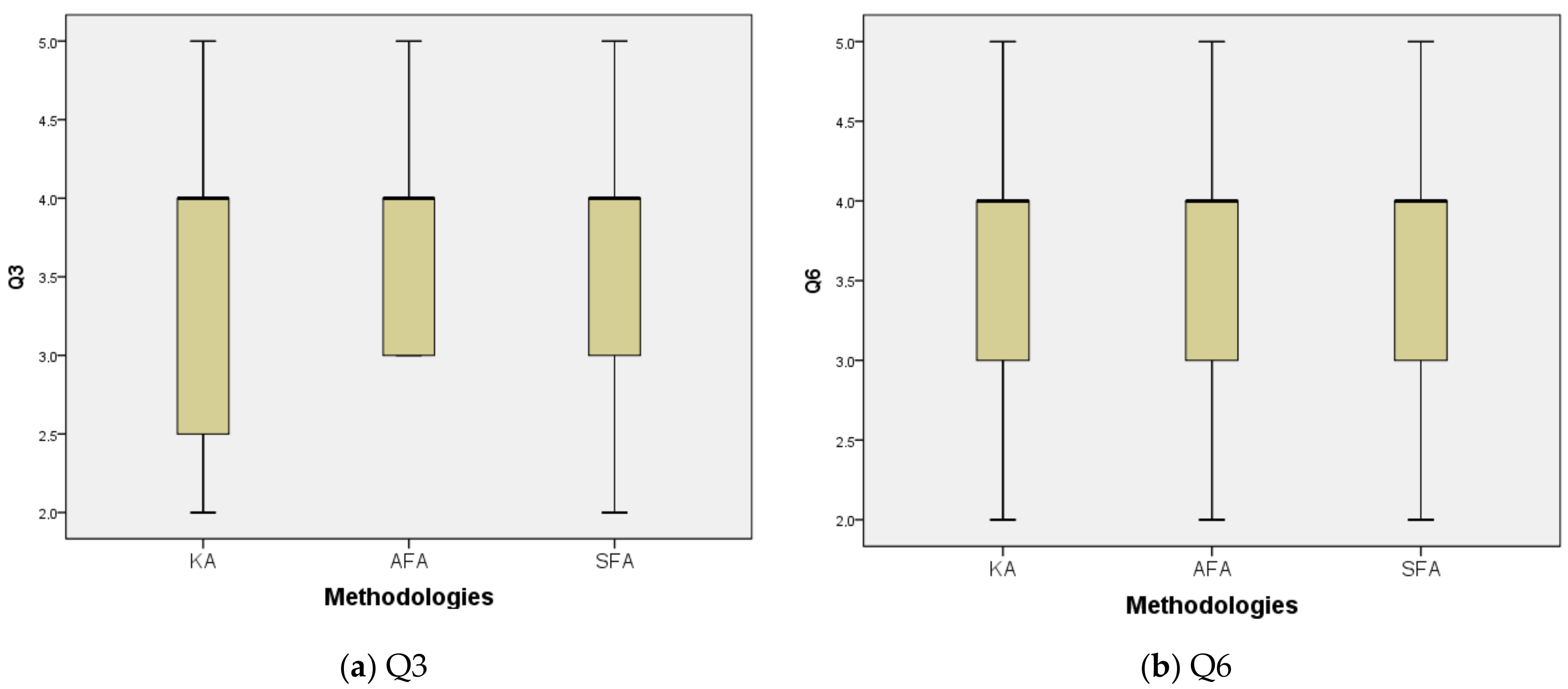

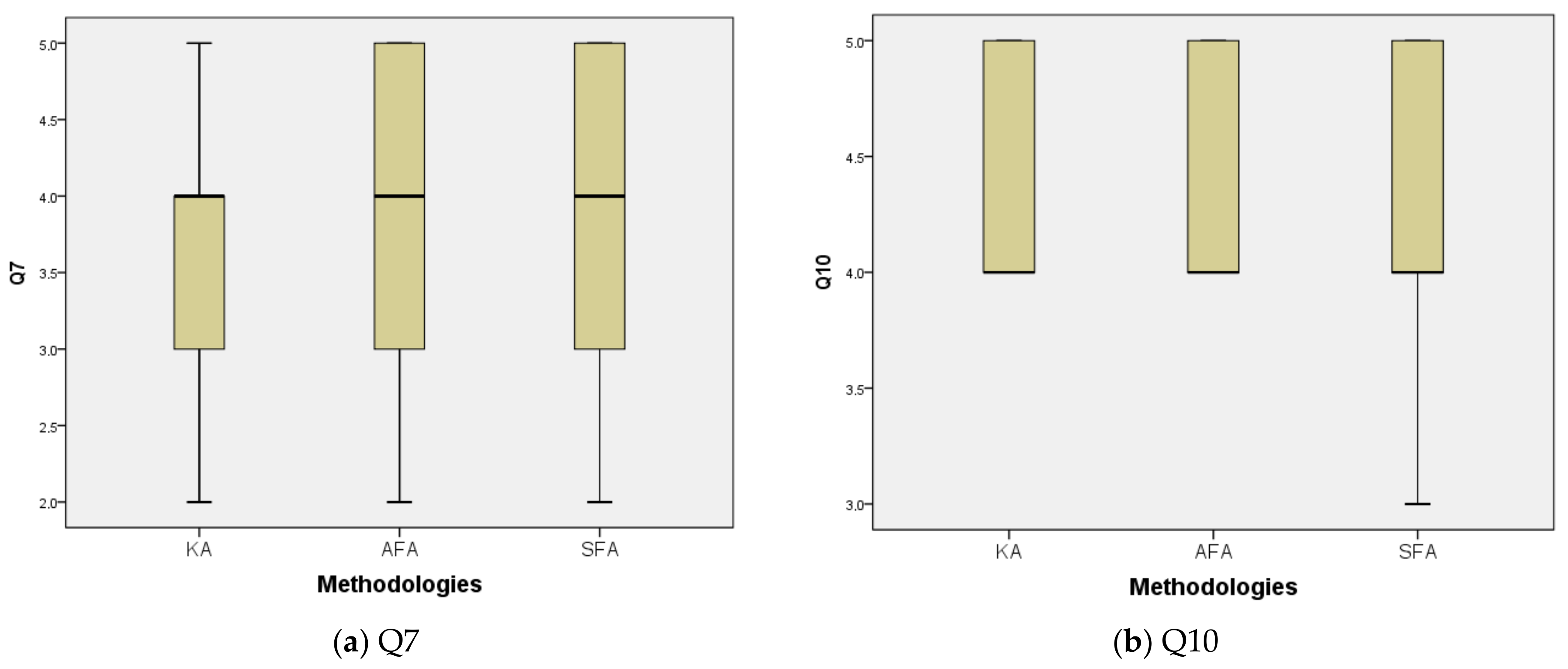

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Boud, D.; Keogh, R.; Walker, D. Reflection: Turning Experience into Learning; Routledge & Kegan Paul: London, UK, 1985.

- Tomkins, A. “It was a great day when…”: An exploratory case study of reflective learning through storytelling. J. Hosp. Leis. Sports Tour. Educ. 2009, 8, 123–131.

- Schön, D. The Reflective Practitioner: How Professionals Think in Action; Basic Books: New York, NY, USA, 1983.

- Dewey, J. How We Think; Prometheus Books: Buffalo, NY, USA, 1933.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice Hall: Englewood Cliffs, NJ, USA, 1984.

- Korthagen, F.A.J. Reflective teaching and preservice teacher education in the Netherlands. J. Teach. Educ. 1985, 36, 11–15.

- Kolb, A.Y.; Kolb, D.A. Learning Styles and Learning Spaces: Enhancing Experiential Learning in Higher Education. Acad. Manag. Learn. Educ. 2005, 4, 193–212.

- Felder, R.M.; Silverman, L.K. Learning and teaching styles in engineering education. Eng. Educ. 1988, 78, 674–681.

- Osterman, K.F.; Kottkamp, R.B. Reflective Practice for Educators: Improving Schooling through Professional Development; Corwin Press: Newbury Park, CA, USA, 1993.

- Scott, A.G. Enhancing reflection skills through learning portfolios: An empirical test. J. Manag. Educ. 2010, 34, 430–457.

- Healey, M.; Jenkins, A. Kolb’s experiential learning theory and its application in geography in higher education. J. Geogr. 2000, 99, 185–195.

- Beatty, I.D.; Gerace, W.J. Technology-enhanced formative assessment: A research-based pedagogy for teaching science with classroom response technology. J. Sci. Educ. Technol. 2009, 18, 146–162.

- Cowie, B.; Bell, B. A model of formative assessment in science education. Assess. Educ. Princ. Policy Pract. 1999, 6, 101–116.

- Heemsoth, T.; Heinze, A. Secondary school students learning from reflections on the rationale behind self-made errors: A field experiment. J. Exp. Educ. 2016, 84, 98–118.

- Cañabate, D.; Martínez, G.; Rodríguez, D.; Colomer, J. Analysing emotions and social skills in physical education. Sustainability 2018, 10, 1585.

- Herranen, J.; Vesterinen, V.-M.; Aksela, M. From learner-centered to learner-driven sustainability education. Sustainability 2018, 10, 2190.

- McKenna, A.F.; Yalvac, B.; Light, G.J. The role of collaborative reflection on shaping engineering faculty teaching approaches. J. Eng. Educ. 2009, 98, 17–26.

- Songer, N.B.; Ruiz-Primo, M.A. Assessment and science education: Our essential new priority? J. Res. Sci. Teach. 2012, 49, 683–690.

- Alonzo, A.C.; Kobarg, M.; Seidel, T. Pedagogical content knowledge as reflected in teacher-student interactions: Analysis of two video cases. J. Res. Sci. Teach. 2012, 49, 1211–1239.

- Abdulwahed, M.; Nagy, Z.K. Applying Kolb’s experiential learning cycle for laboratory education. J. Eng. Educ. 2009, 98, 283–294.

- Anderson, J.R.; Reder, L.M.; Simon, H.A. Situated learning and education. Educ. Res. 1996, 25, 5–11.

- Johri, A.; Olds, B.M. Situated engineering learning: Bridging engineering education research and the learning sciences. J. Eng. Educ. 2011, 100, 151–185.

- Crouch, C.H.; Mazur, E. Peer instruction: Ten years of experience and results. Am. J. Phys. 2001, 69, 970–977.

- Fraser, J.M.; Timan, A.L.; Miller, K.; Dowd, J.E.; Tucker, L.; Mazur, E. Teaching and physics education research: Bridging the gap. Rep. Prog. Phys. 2014, 77, 032401.

- Henderson, C.; Dancy, M. Impact of physics education research on the teaching of introductory quantitative physics in the United States. Phys. Rev. Spec. Top. Phys. Educ. Res. 2009, 5, 020107.

- May, D.B.; Etkina, E. College physics students’ epistemological self-reflection and its relationship to conceptual learning. Am. J. Phys. 2002, 70, 1249–1258.

- Fullana, J.; Pallisera, M.; Colomer, J.; Fernández, R.; Pérez-Burriel, M. Reflective learning in higher education: A qualitative study on students’ perceptions. Stud. High. Educ. 2016, 41, 1008–1022.

- Hake, R.R. Interactive-engagement versus traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. Am. J. Phys. 1998, 66, 64–74.

- Mezirow, J. An overview of transformative learning. In Lifelong Learning; Sutherland, P., Crowther, J., Eds.; Routledge: London, UK, 2006.

- Kember, D.; McKay, J.; Sinclair, K.; Wong, F.K.Y. A four-category scheme for coding and assessing the level of reflection in written work. Assess. Eval. High. Educ. 2008, 33, 369–379.

- Chamoso, J.M.; Cáceres, M.J. Analysis of the reflections of student-teachers of mathematics when working with learning portfolios in Spanish university classrooms. Teach. Teach. Educ. 2009, 25, 198–206.

- Prevost, L.B.; Haudek, K.C.; Henry, E.N.; Berry, M.C.; Urban-Lurain, M. Automated text analysis facilitates using written formative assessments for just-in-time teaching in large enrollment courses. In Proceedings of the ASEE Annual Conference and Exposition, Atlanta, GA, USA, 23–26 June 2013.

- Nicol, D.J.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. High. Educ. 2006, 31, 199–218.

- Jacoby, J.C.; Heugh, S.; Bax, C.; Branford-White, C. Enhancing learning through formative assessment. Innov. Educ. Teach. Int. 2014, 51, 72–83.

- Smith, D.M.; Kolb, D. Users’ Guide for the Learning Style Inventory: A Manual for Teachers and Trainers; McBer: Boston, MA, USA, 1986.

- Schellings, G. Applying learning strategy questionnaires: Problems and possibilities. Metacogn. Learn. 2011, 6, 91–109.

- Thomas, G.P.; Anderson, D.; Nashon, S. Development of an instrument designed to investigate elements of science students’ metacognition, self-efficacy and learning processes: The SEMLI-S. Int. J. Sci. Educ. 2008, 30, 1701–1724.

- Mokhtari, K.; Reichard, C. Assessing students’ metacognitive awareness of reading strategies. J. Educ. Psychol. 2002, 94, 249–259.

- Feldon, D.F.; Peugh, J.; Timmerman, B.E.; Maher, M.A.; Hurst, M.; Strickland, D.; Gilmore, J.A.; Stiegelmeyer, C. Graduate students’ teaching experiences improve their methodological research skills. Science 2011, 333, 1037–1039.

- Richardson, J.T.E. Methodological issues in questionnaire-based research on student learning in higher education. Educ. Psychol. Rev. 2004, 16, 347–358.

- Watts, M.; Lawson, M. Using a meta-analysis activity to make critical reflection explicit in teacher education. Teach. Teach. Educ. 2009, 25, 609–616.

- Creswell, J. Research Design: Qualitative, Quantitative and Mixed Methods Approaches, 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2003.

- Barrera, A.; Braley, R.T.; Slate, J.R. Beginning teacher success: An investigation into the feedback from mentors of formal mentoring programs. Mentor. Tutor. Partnersh. Learn. 2010, 18, 61–74.

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Gliem, J.A.; Gliem, R.R. Calculating, interpreting, and reporting Cronbach’s alpha reliability coefficient for Likert-type scales. In Proceedings of the Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education, The Ohio State University, Columbus, OH, USA, 8–10 October 2003; pp. 82–88.

- Kember, D.; Leung, D.Y.P.; Jones, A.; Loke, A.Y.; McKay, J.; Sinclair, K.; Tse, H.; Webb, C.; Wong, F.K.Y.; Wong, M.W.L.; Yeung, E. Development of a questionnaire to measure the level of reflective thinking. Assess. Eval. High. Educ. 2000, 25, 381–395.

- Black, P.E.; Plowright, D. A multi-dimensional model of reflective learning for professional development. Reflect. Pract. 2010, 11, 245–258.

- Koole, S.; Dornan, T.; Aper, L.; De Wever, B.; Scherpbier, A.; Valcke, M.; Cohen-Schotanus, J.; Derese, A. Using video-cases to assess student reflection: Development and validation of an instrument. BMC Med. Educ. 2012, 12, 22.

- Wolters, C.A.; Benzon, M.B. Assessing and predicting college students’ use of strategies for the self-regulation of motivation. J. Exp. Educ. 2013, 81, 199–221.

- Hargreaves, J. So how do you feel about that? Assessing reflective practice. Nurse Educ. Today 2004, 24, 196–201.

- Konak, A.; Clark, T.C.; Nasereddin, M. Using Kolb’s experiential learning cycle to improve student learning in virtual computer laboratories. Comput. Educ. 2014, 72, 11–22.

- Leijen, Ä.; Valtna, K.; Leijen, D.A.J.; Pedaste, M. How to determine the quality of students’ reflection. Stud. High. Educ. 2012, 37, 203–217.

- Stice, J.E. Using Kolb’s learning cycle to improve student learning. Eng. Educ. 1987, 77, 291–296.

- David, A.; Wyrick, P.; Hilsen, L. Using Kolb’s cycle to round out learning. In Proceedings of the 2002 American Society for Engineering Education Annual Conference & Exposition, Montreal, QC, Canada, 16–19 June 2002.

- Vygotsky, L.S.; Cole, M. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978.

- Colomer, J.; Pallisera, M.; Fullana, J.; Pérez-Burriel, M.; Fernández, R. Reflective learning in higher education: A comparative analysis. Procedia-Soc. Behav. Sci. 2013, 93, 364–370.

| Questions Q1 to Q18 of the RLQuest |

|---|

| 1. Knowledge about oneself |

| Q1. Analyze my behavior when confronting professional and daily situations. |

| Q2. Analyze what my emotions are when confronting professional and daily situations. |

| 2. Connecting the experience with knowledge |

| Q3. Connect knowledge with my own experiences, emotions and attitudes. |

| Q4. Select relevant information and data in a given situation. |

| Q5. Formulate/contrast hypotheses in a given situation. |

| Q6. Provide reasons/arguments for decision-making in a given situation. |

| 3. Self-reflection on the learning process |

| Q7. Improve my written communication skills. |

| Q8. Improve my oral communication skills. |

| Q9. Identify the positive aspects of my knowledge and skills. |

| Q10. Identify the negative aspects of my knowledge and skills. |

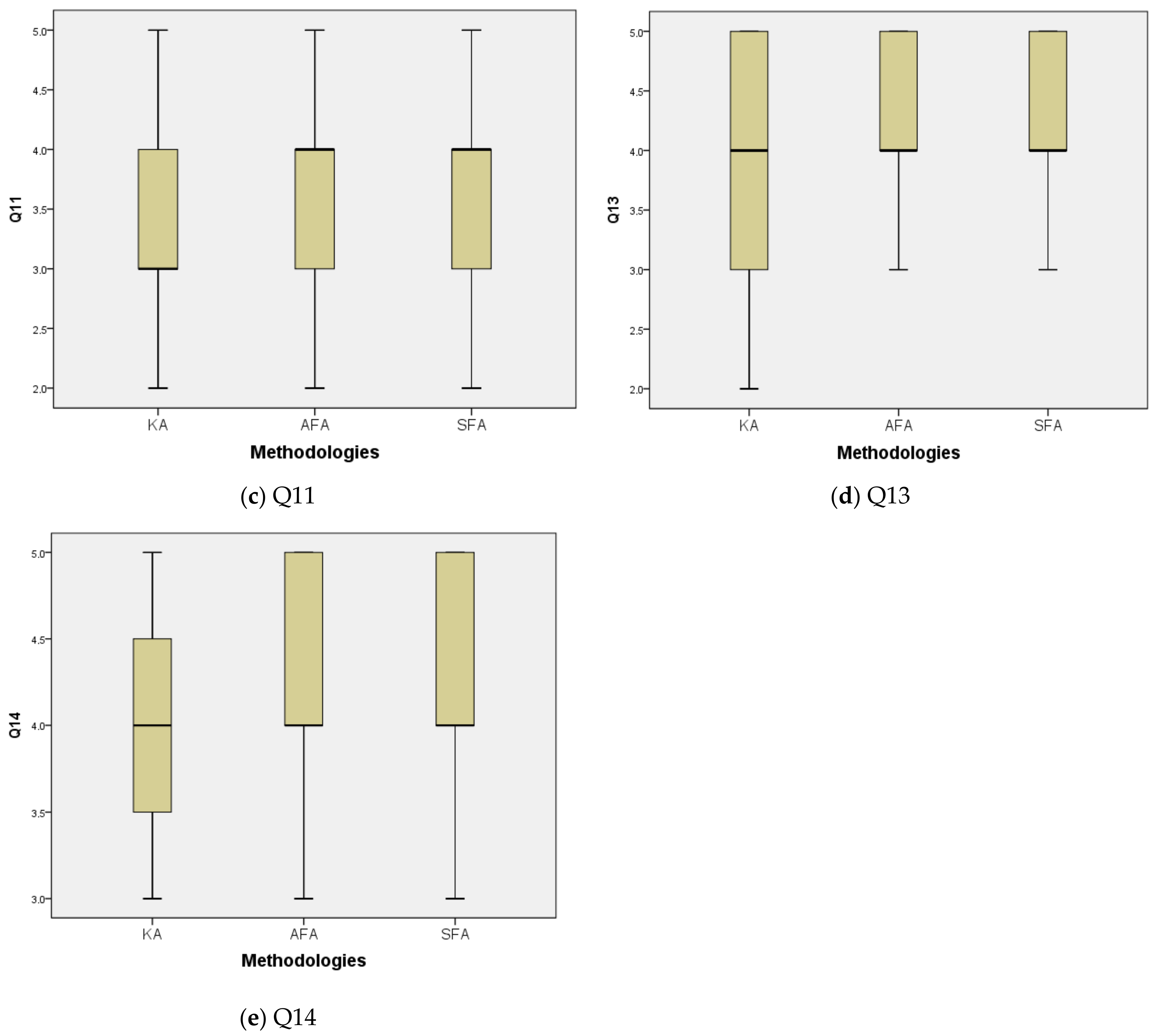

| Q11. Identify the positive aspects of my attitudes. |

| Q12. Identify the negative aspects of my attitudes. |

| Q13. Become aware of what I learn and how I learn it. |

| Q14. Understand that what I learn and how I learn it is meaningful for me. |

| 4. Self-regulating learning |

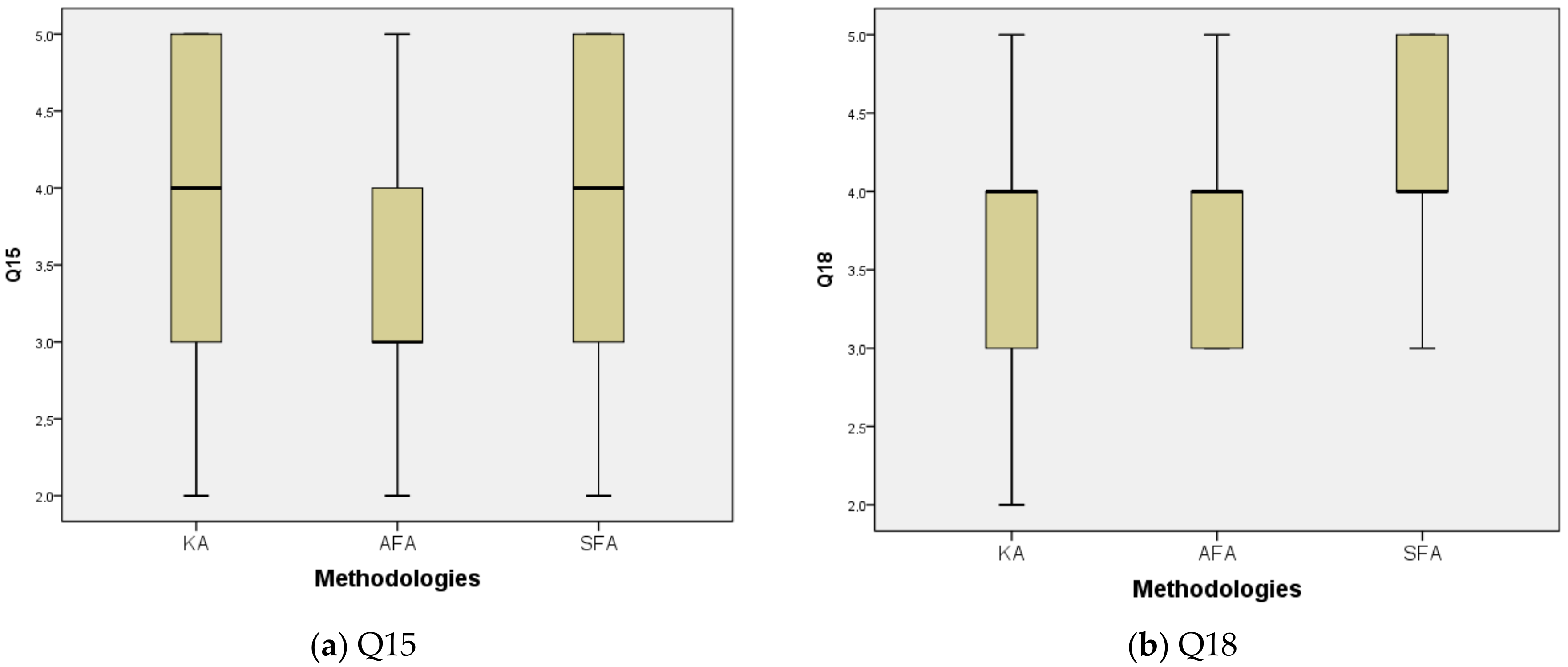

| Q15. Plan my learning: the steps to follow, organize material and time. |

| Q16. Determine who or what I need to consult. |

| Q17. Regulate my learning, analyzing the difficulties I have and evaluating how to resolve the problems I encounter. |

| Q18. Evaluate how I plan my learning, the result, and what I need to do to improve. |
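
The RLQuest groups its 18 items into four dimensions, and the reference list cites Cronbach's coefficient alpha (Cronbach, 1951; Gliem and Gliem, 2003) for Likert-type scales. The sketch below is a minimal illustration, not the authors' analysis: it encodes the item-to-dimension grouping from the table, computes a student's mean score per dimension, and computes alpha per dimension as k/(k - 1) · (1 - sum of item variances / variance of the summed score), where k is the number of items. The 1 to 5 response scale, the use of sample variances, and the four-student response matrix are assumptions made for demonstration; four respondents are far too few for a meaningful reliability estimate and serve only to exercise the code.

```python
from statistics import variance

# Item numbers per RLQuest dimension, following the grouping in the table.
DIMENSIONS = {
    "Knowledge about oneself": [1, 2],
    "Connecting the experience with knowledge": [3, 4, 5, 6],
    "Self-reflection on the learning process": [7, 8, 9, 10, 11, 12, 13, 14],
    "Self-regulating learning": [15, 16, 17, 18],
}


def subscale_means(response):
    """Mean item score per dimension for one student's {item: score} dict."""
    return {
        name: sum(response[q] for q in items) / len(items)
        for name, items in DIMENSIONS.items()
    }


def cronbach_alpha(responses, items):
    """Cronbach's alpha for the listed items across all students.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score),
    using sample variances (n - 1 denominator).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([r[q] for r in responses]) for q in items]
    total_var = variance([sum(r[q] for q in items) for r in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical 1-5 Likert responses for four students (NOT data from the study).
    rows = [
        [2, 2, 2, 3, 2, 2, 2, 3, 2, 2, 3, 2, 2, 3, 2, 2, 3, 2],
        [3, 4, 3, 3, 4, 3, 3, 3, 4, 3, 3, 4, 3, 3, 3, 4, 3, 3],
        [4, 4, 4, 5, 4, 4, 4, 4, 5, 4, 4, 4, 5, 4, 4, 4, 5, 4],
        [5, 4, 5, 4, 5, 5, 5, 5, 4, 5, 5, 5, 4, 5, 5, 5, 4, 5],
    ]
    responses = [dict(enumerate(row, start=1)) for row in rows]
    for name, items in DIMENSIONS.items():
        print(f"{name}: alpha = {cronbach_alpha(responses, items):.2f}")
```

Computing alpha per dimension rather than over all 18 items reflects the table's four-part grouping; whether the study reported alpha per dimension or for the whole questionnaire is not stated in this section.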

| Questions 1 to 10 for the AFA and SFA approaches |
|---|
| 1. Formulate searching questions that help to analyze your own actions/thoughts during the whole process of viewing–analyzing–writing the experience. |
| 2. What knowledge/feelings/values/former experiences do I use to formulate my answer(s)? |
| 3. What do I need to describe the experience? Do I identify the knowledge/skills that are necessary to describe the experiment? |
| 4. Who or what do I need to consult to describe the experiment? |
| 5. How do I organize myself to develop the experiment? |
| 6. I identify the difficulties behind the definition of the understanding of the experiment. |
| 7. I have planned the timing for the process of writing up the activities. |
| 8. What should I do to improve the process of the activity? |
| 9. What will the result of the process be? |
| 10. What did I learn going through the understanding of the experience? |

| Questions 1 to 6 for the SFA |
|---|
| 1. Description of the experiment. Summarize it by writing a short abstract describing the experiment. |
| 2. Describe the methodology steps used in the experiment. |
| 3. Write the hypothesis that was supported by the experiment. |
| 4. Describe the analysis of the data obtained from the experiment. |
| 5. Formulate questions derived from the experiment. |
| 6. List the new knowledge that you have created from the experiment. |

| Approach and activity | Category 1: Non-reflection (%) | Category 2: Understanding (%) | Category 3: Reflection (%) | Category 4: Critical reflection (%) |
|---|---|---|---|---|
| **KA** | | | | |
| Activity 1/2 | 24 | 57 | 12 | 7 |
| Activity 3 | 19 | 46 | 21 | 14 |
| Activity 4 | 11 | 33 | 39 | 17 |
| **AFA** | | | | |
| Activity 5/6 | 5 | 32 | 43 | 20 |
| Activity 7 | 4 | 25 | 48 | 23 |
| Activity 8 | 4 | 19 | 49 | 28 |
| **SFA** | | | | |
| Activity 9/10 | 3 | 27 | 43 | 27 |
| Activity 11 | 4 | 31 | 36 | 29 |
| Activity 12 | 3 | 29 | 42 | 26 |
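
Every row of the table sums to 100, so the values can be read as the percentage of reflections coded in each category; the category names match the four-category scheme of Kember et al. (2008) cited in the reference list. Assuming that reading, the sketch below re-enters the table and derives two summaries per activity: the share coded as reflection or critical reflection, and a mean level obtained by scoring the categories 1 to 4. Scoring ordinal categories as equally spaced numbers is an interval-scale assumption introduced here for illustration, not a method stated in this section.

```python
# Table values per activity, ordered as
# (non-reflection, understanding, reflection, critical reflection), in percent.
DISTRIBUTIONS = {
    "KA": {"Activity 1/2": (24, 57, 12, 7), "Activity 3": (19, 46, 21, 14), "Activity 4": (11, 33, 39, 17)},
    "AFA": {"Activity 5/6": (5, 32, 43, 20), "Activity 7": (4, 25, 48, 23), "Activity 8": (4, 19, 49, 28)},
    "SFA": {"Activity 9/10": (3, 27, 43, 27), "Activity 11": (4, 31, 36, 29), "Activity 12": (3, 29, 42, 26)},
}


def reflective_share(dist):
    """Percentage coded as reflection or critical reflection (categories 3 and 4)."""
    return dist[2] + dist[3]


def mean_level(dist):
    """Mean category when levels are scored 1-4 (assumes equal spacing)."""
    return sum(level * pct for level, pct in enumerate(dist, start=1)) / sum(dist)


if __name__ == "__main__":
    for approach, activities in DISTRIBUTIONS.items():
        for activity, dist in activities.items():
            assert sum(dist) == 100, f"{approach} {activity} does not sum to 100"
            print(f"{approach:3} {activity:13} reflective = {reflective_share(dist):3d}%"
                  f"  mean level = {mean_level(dist):.2f}")
```

Under this reading, the share coded as reflection or critical reflection rises from 19% to 56% across the KA activities and from 63% to 77% across the AFA activities, and stays between 65% and 70% across the SFA activities.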

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Colomer, J.; Serra, L.; Cañabate, D.; Serra, T. Evaluating Knowledge and Assessment-Centered Reflective-Based Learning Approaches. Sustainability 2018, 10, 3122. https://doi.org/10.3390/su10093122
