1. Introduction

In most parts of the world, with the advancement of industry, urban infrastructure is continuously increasing and developing. In the process of urban expansion, the numbers of tunnels on railways and roads are increasing each year, and the traffic volume of vehicles and people is also increasing. In recent years, the construction of long-distance tunnels with a length of more than 1 km has been rapidly increasing to connect cities and to provide smooth transportation. However, along with the increasing number of tunnels, car accidents in tunnels are also occurring more frequently. In particular, in the case of a fire caused by a car accident in a tunnel, the risk is extremely high because the space is limited and isolated from the outside. The greatest dangers are the smoke and high temperatures that accompany fires, which can cause large-scale casualties. In addition, unlike in above-ground structures, fires inside tunnels are very difficult to extinguish for the following reasons: toxic gas generation by incomplete combustion, difficulty in ventilation of combustion products, unexpected changes in air flow direction, confusion regarding the direction of evacuation, and so forth.

The Gotthard tunnel fire in Switzerland is an example of this type of accident. The Gotthard tunnel is a 16.918-km-long structure that carries two-way traffic. It is recognized as a dangerous tunnel in which an average of 4 fires occur annually. On 24 October 2001, approximately 1 km from the south exit, two trucks caught fire after a head-on collision; 11 people were killed, and 28 people were reported missing. In addition, the smoke spread rapidly, and 9 people suffocated. In the case of the Mont Blanc tunnel fire, on 24 March 1999 at 10:46 a.m., a leading driver’s cigarette butt flew into the air intake of a refrigerated truck loaded with 9 tons of margarine. At 10:50 a.m., although the visibility dropped by more than 30%, the vehicle continued to run, ignoring the sensor warning, and the truck exploded in 52 min. The driver abandoned the vehicle and informed the control center through an emergency call. However, the exact location of the accident could not be confirmed because the closed-circuit television (CCTV) video data could not be identified. In addition, the tunnel fire brigade arrived in 57 min, but due to dense smoke, access was difficult, and many casualties occurred. As illustrated by these cases, if a large-scale fire accident occurs in a tunnel, not only loss of vehicles but also large-scale personal injury may occur. In addition, the tunnel must be closed for a long time from accident to recovery, and the social and economic impact is also severe.

In this paper, we propose a method for rapid localization of vehicles and victims and for decision making by fire fighters, to support sustainable search and rescue activities at an accident site where smoke is rapidly filling the tunnel from the initial fire or heat source. We also propose a novel search solution and application using various sensors and Internet of Things (IoT) technology. We make the following assumptions regarding a concrete accident environment: (1) clear visibility cannot be secured due to thick smoke in the space surrounding the fire; (2) normal illumination is impossible due to disruption of the power supply; (3) there may be many victims in the closed space, including inside vehicles; (4) the search range is wide and a long time for search and rescue is required; and (5) a search for missing persons is needed after the end of the initial disaster response. The main contribution of the proposed system lies in judging and propagating the internal situation accurately and quickly even in emergency fire situations. To this end, the proposed system is classified into three types of response: initial situation detection and propagation using IoT sensors, robot operation for securing visibility in smoke, and localization of the fire brigade. In the first response, in addition to the existing temperature and smoke sensors, camera-based flame recognition and accident vehicle tracking using radar are performed simultaneously for accurate detection. The information collected is propagated through the optic fiber cable in the tunnel, and we also propose an ad hoc network technique that modifies the ‘better approach to mobile Ad hoc networking (BATMAN)’ protocol for stable communication, even when the infrastructure has collapsed due to an accident. In the second response, if the internal situation cannot be confirmed accurately despite the first response, the robot enters on behalf of the firefighter and an additional internal image is acquired. The robot not only ensures the safety of firefighters from the risk of further explosions, but also helps them acquire information faster than firefighters themselves can gather it. In the third response, after the firefighter completes the judgment of the internal situation, real-time location tracking of firefighters is performed using ultra-wideband (UWB) devices. For this purpose, in addition to the above-mentioned IoT sensors, UWB anchors are also installed at intervals of 40 m, and firefighters also carry mobile devices with embedded UWB.

The rest of this paper is organized as follows. In Section 2, we review the existing search and rescue equipment that can be used in fire sites and analyze the challenges related to addressing tunnel fires. In Section 3, we introduce various techniques and equipment for precise accident location tracking, and we consider their application in detail in an accident environment based on the above assumptions. We propose a concrete scenario for how the proposed search and rescue equipment can be linked with current firefighting response practices. Section 4 presents the performance analysis of the proposed equipment and applications. Section 5 presents additional discussion of the proposed system operations. Section 6 summarizes the proposed technologies and services, and concludes with directions for future work.

2. Related Works

2.1. IoT and Monitoring Technology

Recently, various sensors and situation cognitive technologies have been developed with advances in IoT technology, and accordingly, various solutions for fire and accident detection have been proposed for roads and tunnels. In Ref. [1], temperature sensors for detecting fires in tunnels were introduced. A sensor network using them was installed, and the sensing distance performance was measured. Although the transmission distance varied depending on the surface conditions and structure of the tunnel, it was shown that it is possible to sufficiently monitor an actual tunnel environment. In Ref. [2], it was demonstrated that IoT devices in these tunnels play a very important role in generating and delivering accurate information in the event of a fire.

Among the various IoT sensors, the most commonly used is the camera [3,4,5]. There are many vehicle-tracking approaches for roadside intersection monitoring, and they assume predictable vehicle motions [6]. Feature-based approaches can be used to handle partial occlusions in intersections [7]. There are also in-vehicle road monitoring solutions to estimate the pose and motion parameters of an oncoming vehicle by means of various signal processing filters [8,9]. However, such camera-based vehicle detection techniques have the disadvantage that the throughput is poor when the number of vehicles increases sharply or when there are many environmental obstacles to be processed on the road. Recently, alternative techniques that use radar systems for real-time tracking of vehicle speed and direction have been widely studied. Tan-Jan et al. [10] proposed a microwave radar detector that detects individual vehicles on the road by reducing the noise signal and side lobes within each traffic lane. Yichao et al. [11] proposed another radar-based vehicle detection method that mitigates the interference of urban obstacles, such as trees and buildings. Such radar systems have also been demonstrated in the relatively harsh environment of a tunnel [12,13].

In addition to vehicle accidents, methods for detecting fires have also been utilized in various ways. Typically, fire detection methods use cameras [14,15,16]. Rui et al. [14] proposed a novel flame-detection scheme based on multiple features, such as chromatic, dynamic, texture, and contour features, to improve the sensitivity and reliability. Martin et al. [15] reliably detected fire and rejected non-fire motion on a large dataset of real videos. In Ref. [16], probability density functions were generated to explore irregular flame patterns, especially under poor external conditions such as shadows, reflective surrounding area, and changing neon signs.

2.2. Firefighting Robot Technology

In general, due to the nature of fire disasters, there are many cases where fire fighters cannot enter the burning structure due to high temperatures and thick smoke. If these conditions cannot be overcome, many people may be injured. For this reason, robot technologies are being explored to secure fire fighters’ safety in such a dangerous environment and to support effective fire fighting activities [17,18,19]. Many robotic platforms with various forms, including ground driving and unmanned aerial vehicles (UAVs), have been developed. To help remote operators who are located far away from fire sites, Park et al. [20] proposed a robot-based remote monitoring system with a wireless image transmitter. In Ref. [21], a mobile rescue robot was employed to explore and monitor the nuclear accident at the Fukushima power plant; this study revealed that reliable communication between the operator and robot system is crucial to gather internal information. This means that interworking and communication with the previously mentioned IoT devices is important [22]. Kim et al. [23] proposed a portable fire evacuation guide robot system; it gathers temperature, O2 and CO gas information through wireless communication. In addition, it provides voice communication between firefighters and victims at remote sites. Recently, UAVs have been recognized as a promising option because they can replace firefighters’ activities in some situations and can be applied for the detection of spreading fires and fire suppression [24]. Huy et al. [25] proposed a distributed control framework designed for a group of UAVs that can monitor a wildfire in a large space from a remote and safe distance. Wolfgang et al. [26] also presented an integrated system for early forest fire detection by using a UAV equipped with gas sensors, a thermal camera and microwave sensors. Yuan et al. [27] proposed another UAV approach for forest fire detection and tracking using median filtering, color space conversion, morphological operation, and blob counter. Since these various types of robots have different advantages and disadvantages and fire scenes are so diverse and unpredictable, it is important to combine appropriate robot solutions. In particular, since it is not possible to use existing global positioning system (GPS) signals in the tunnel, it is very difficult to track the location of UAVs. Also, in a tunnel of 1 km or more, it is difficult to transfer large-capacity data (e.g., video and thermal image) through wireless local area network (WLAN)-based communication. Meanwhile, for cases where the various fire detection sensors are pre-distributed, several theoretical studies have been conducted to optimize the communication routing and the network design in order to efficiently acquire sensing data in the networks [28,29,30,31]. However, these studies assume that precise flight control and routing are possible beforehand, so they are still difficult to apply at a fire scene, which requires improvised and prompt countermeasures for unpredictable risk factors.

2.3. Localization and Tracking Technology

There is increasing interest in various location-based service (LBS) technologies to estimate the exact point of an accident and the location of a fire in a disaster scene. Generally, the global positioning system (GPS) enables positioning in any part of the world, but in indoor or underground spaces object tracking is difficult, due to the restriction of signal arrival. In particular, GPS devices cannot be used in tunnels due to their closed structure, so other radio frequency (RF)-based solutions should be utilized. The most representative examples are positioning methods using WiFi or Bluetooth. Liu et al. [32] proposed a WiFi-based peer-assisted localization approach to obtain accurate ranging estimation among peer devices and then map their locations. The ranging error showed about 2-m accuracy. Xudong et al. [33] proposed a Bluetooth-based indoor localization approach that jointly uses iBeacon [34] and inertial sensors in large open areas. Their performance showed 90% errors within 3.5-m ranging accuracy. In addition, UWB-based solutions [35,36] are being developed for more accurate indoor positioning. Because these positioning solutions have different strengths and weaknesses depending on their respective frequency bands, bandwidths, and installation costs, it is necessary to understand the disaster environment to be addressed in advance and to select the appropriate method or combination of methods.

3. Proposed Disaster Response Systems for Tunnel Accidents

This section describes vision technology and network technology for detection of fires in tunnels, radar technology for detection of accident vehicles, robot technology for initial discovery, localization technology for rescue team tracing, and redundant search avoidance techniques. It also describes the control server technology that can control the whole system.

3.1. Architecture of Smart Disaster Response System

We assume that there are at least several Internet protocol (IP) cameras, fire detection sensors (e.g., smoke, temperature, gas sensors, etc.) and radars for tracking moving objects in the tunnel. They are connected to a wired or wireless network (i.e., IoT network). The collected data are stored and monitored through the control server in the tunnel management office. At the initial ignition, the approximate fire point and the position of victims can be known through these IoT facilities. When an accident occurs, the tunnel manager first reports to the fire station after the initial response. The initial response includes operation of a tunnel entrance blocking facility, ventilation fan operation, emergency radio broadcast, and dispatch of tow vehicles when necessary.

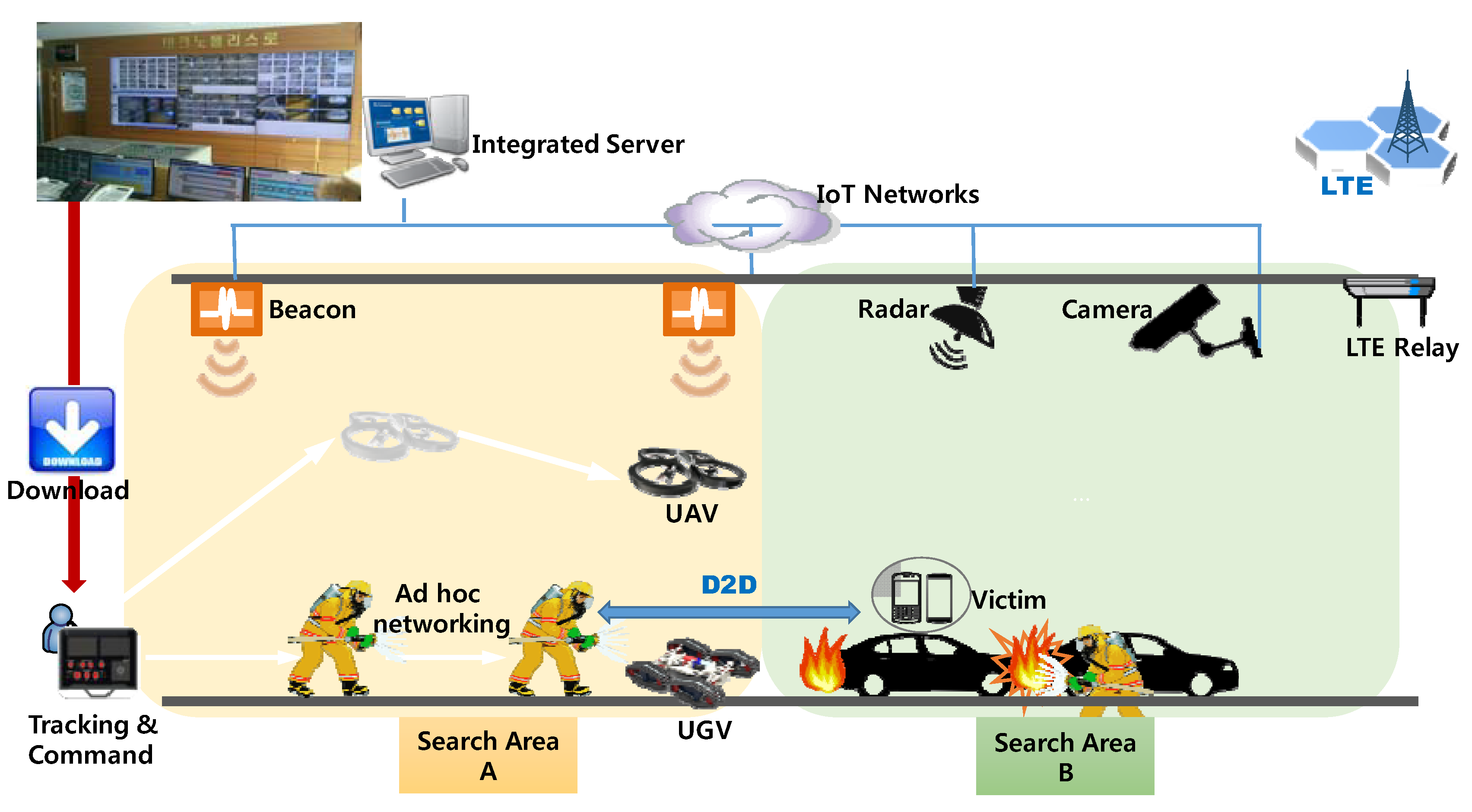

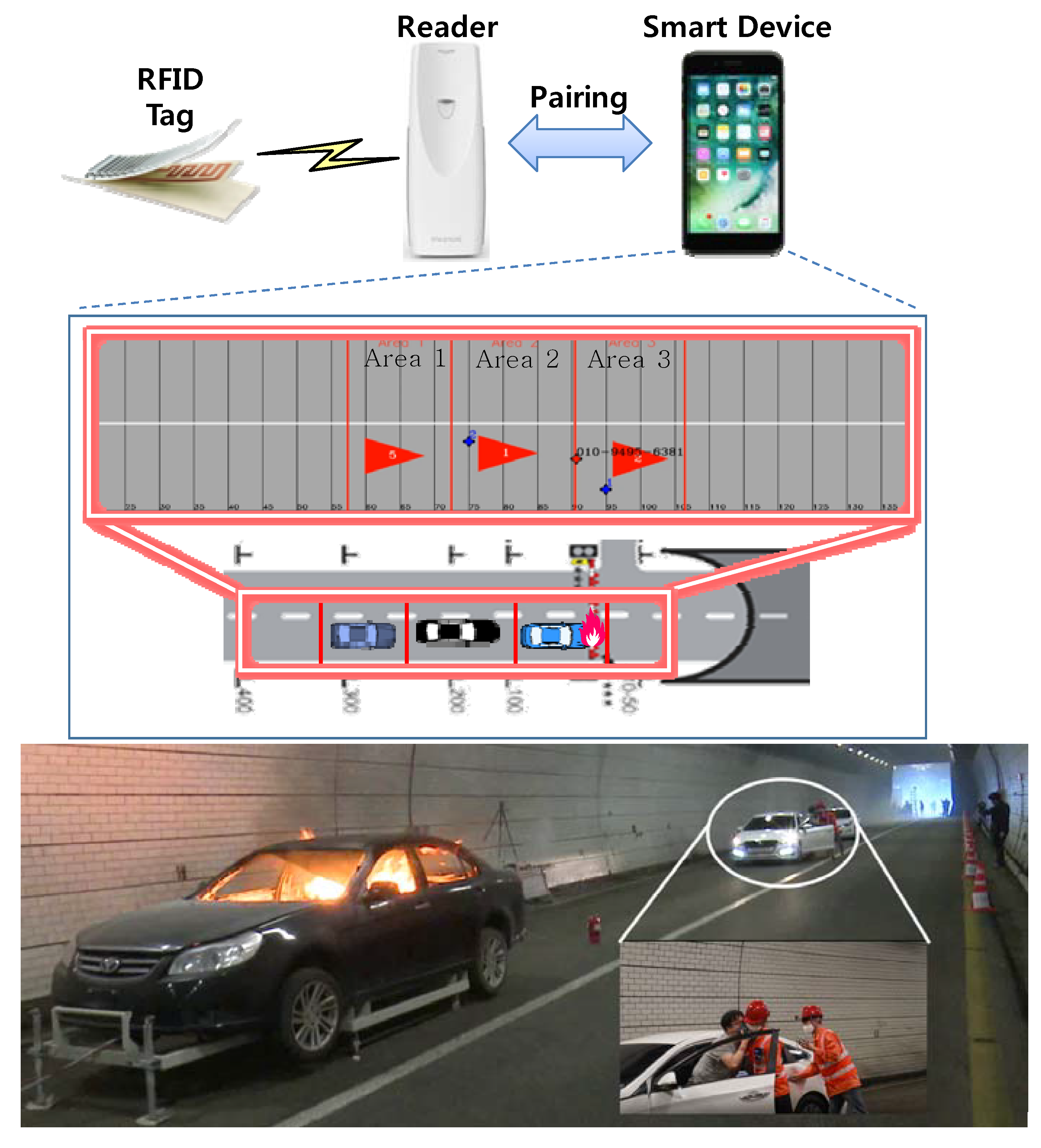

The principles of the general accident response manual specify arrival on the spot within 5 min after receipt of the first report, but this is difficult to achieve in practice for tunnels outside the city. For some off-urban highways it takes more than 15 min for fire fighting crews to arrive. Therefore, it is very important to actively work with patrol cars or management staff of the tunnel management agency. As part of the initial response of the on-site patrol officer, a UAV or unmanned ground vehicle (UGV) can be deployed for rapid internal navigation and information gathering. The disaster information obtained from the robot can be transmitted to the surrounding fire fighters through device-to-device (D2D) networking or IoT networks. After the fire fighters arrive, the ubiquitous sensor network (USN) server’s initially collected information (e.g., video, fire location) and on-site map data are downloaded to the fire fighters’ field command headquarters (or the computer in the fire truck). In addition, fire fighters can access the web server of the tunnel management office before arriving at the site through smart devices (e.g., smart phones, laptop computers) equipped with an Internet interface, so that the initial accident information can be easily downloaded in advance.

The field fire fighting commander determines the number of fire fighters to be sent in and the number of additional robots sent in by considering the initial information (e.g., fire location, fire type, map, etc.) obtained from the IoT sensors. The commanding computer uses the information to divide the search area in the tunnel into n areas (Area A and Area B in Figure 1) by accident point or search priority and then assigns a search area to each fire brigade to enter. At this time, according to the situation in the field, robots can be sent in first for the safety of the fire fighters, and the range and procedure of the search operation can be adjusted according to the commander’s judgment. Also, to perform an effective search and minimize unnecessary search delays, the unrecovered areas are reallocated to robots or fire fighters who have already completed their search missions.
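The division and reallocation step described above can be sketched as follows. This is only an illustrative sketch, not the commanding computer's actual logic: the names `SearchArea`, `divide_tunnel`, and `reallocate` are hypothetical, and splitting the tunnel at the midpoints between accident points is an assumed rule chosen for simplicity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SearchArea:
    name: str
    start_m: float
    end_m: float
    assigned_to: Optional[str] = None  # brigade or robot identifier
    searched: bool = False


def divide_tunnel(length_m: float, accident_points_m: List[float]) -> List[SearchArea]:
    """Split [0, length_m] into one area per accident point.

    Assumed rule: boundaries lie at the midpoints between consecutive
    accident points.
    """
    points = sorted(accident_points_m)
    bounds = [0.0]
    for a, b in zip(points, points[1:]):
        bounds.append((a + b) / 2)
    bounds.append(length_m)
    return [
        SearchArea(f"Area {chr(ord('A') + i)}", lo, hi)
        for i, (lo, hi) in enumerate(zip(bounds, bounds[1:]))
    ]


def reallocate(areas: List[SearchArea], free_units: List[str]) -> List[SearchArea]:
    """Assign each unsearched, unassigned area to a unit that has finished."""
    units = list(free_units)
    for area in areas:
        if not units:
            break
        if not area.searched and area.assigned_to is None:
            area.assigned_to = units.pop(0)
    return areas


if __name__ == "__main__":
    areas = divide_tunnel(1200.0, [300.0, 900.0])
    areas[0].assigned_to, areas[0].searched = "brigade-1", True
    reallocate(areas, ["brigade-1"])
    for area in areas:
        print(area)
```

In this sketch a unit becomes "free" only when the caller lists it in `free_units`, which mirrors the commander keeping the final say over who enters which area.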

The patrol robot uses its own sensors (e.g., IR camera, temperature sensor, gas sensor, position sensor, etc.) to obtain the position of isolated victims and the accurate fire location, and transmits this information through the wireless network to the surrounding firefighters or the command vehicle. At this time, it is assumed that the fire fighters have wireless terminals using the same wireless channel.

Meanwhile, it is very important to search for victims who cannot escape from the tunnel without assistance. The IP camera in the tunnel, the camera of a robot sent in, or a fire fighter may detect them, and a victim may also inform the fire fighter of his or her own position using a smart phone. This enables direct communication with fire fighters through long-term evolution (LTE) or WiFi-Direct technology [37]. If a victim does not carry a smart phone, there is no way to confirm his or her location in this way. However, tunnel accidents occur mainly in vehicles. Therefore, a precise search can be performed around the vehicle position obtained by radar, on the assumption that the victim is in or near the vehicle. The communication scenarios for each entity at an accident scene in a tunnel can be classified as follows.

Between robots: Robots form a wireless ad hoc network to support communication inside and outside the tunnel.

Between robots and fire fighters: The vehicle or victim information detected by a robot is broadcast to all surrounding fire fighters or the nearest fire fighter.

Between fire fighters: Fire fighters communicate and report mutual search and rescue collaboration messages as well as the commander’s instructions.

Between robots and victims: Robots and victims exchange their location information and evacuation guidance instructions.

Types of data: image/video, audio, position, various control information.

In the above communication scenario, it is difficult to transmit or receive data smoothly to the outside because the transmission range of conventional WiFi is limited in a long tunnel of 1 km or more. To overcome this problem, an ad hoc network is installed that serves as a relay for data transmission between equipment and fire fighters. In other words, by constructing a temporary network, it is possible to provide seamless communication services even in extreme situations, such as power outages in a tunnel or infrastructure collapse.

3.2. IoT and Vision Sensor for Early Fire Detection

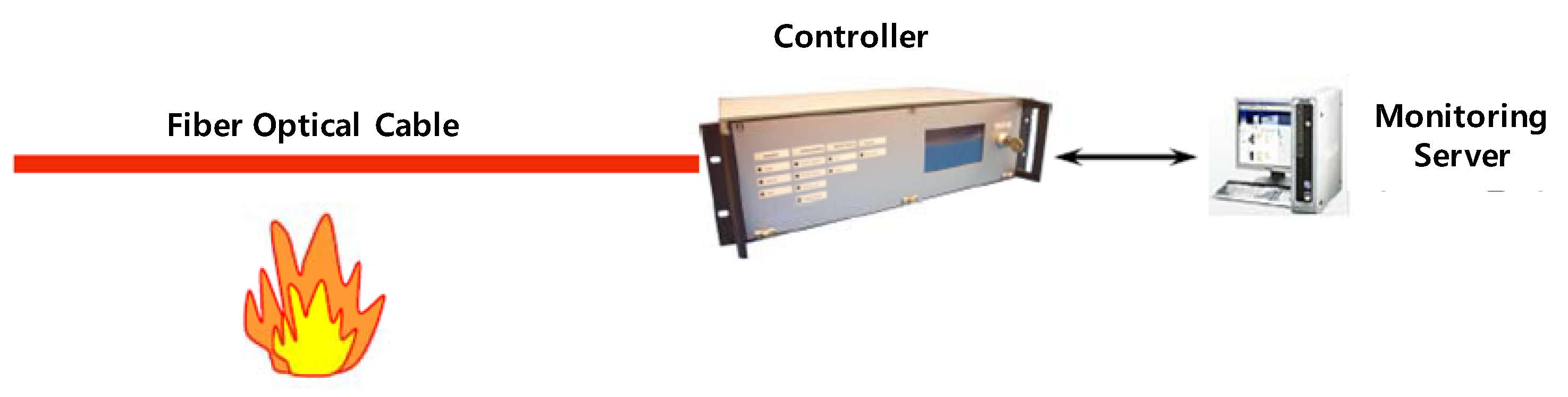

Most tunnel fires occur in vehicles that are running, so the fire is first detected by the driver. The driver makes an emergency stop and reports the situation to the control center via an emergency call, but if the driver is injured or unaware of the fire, the fire sensors installed in the tunnel must detect it. Currently, one of the most accurate and robust types of fire detection equipment is fiber optic cable, which measures the temperature distribution of objects and senses temperature changes by distance and region. The advantage of the fiber-optic method is that it has a low maintenance cost. In addition, it provides accurate measurements and is almost permanently usable. However, the initial installation cost is high and the scalability is low.

Figure 2 shows the overall architecture of the fiber optic cable system.

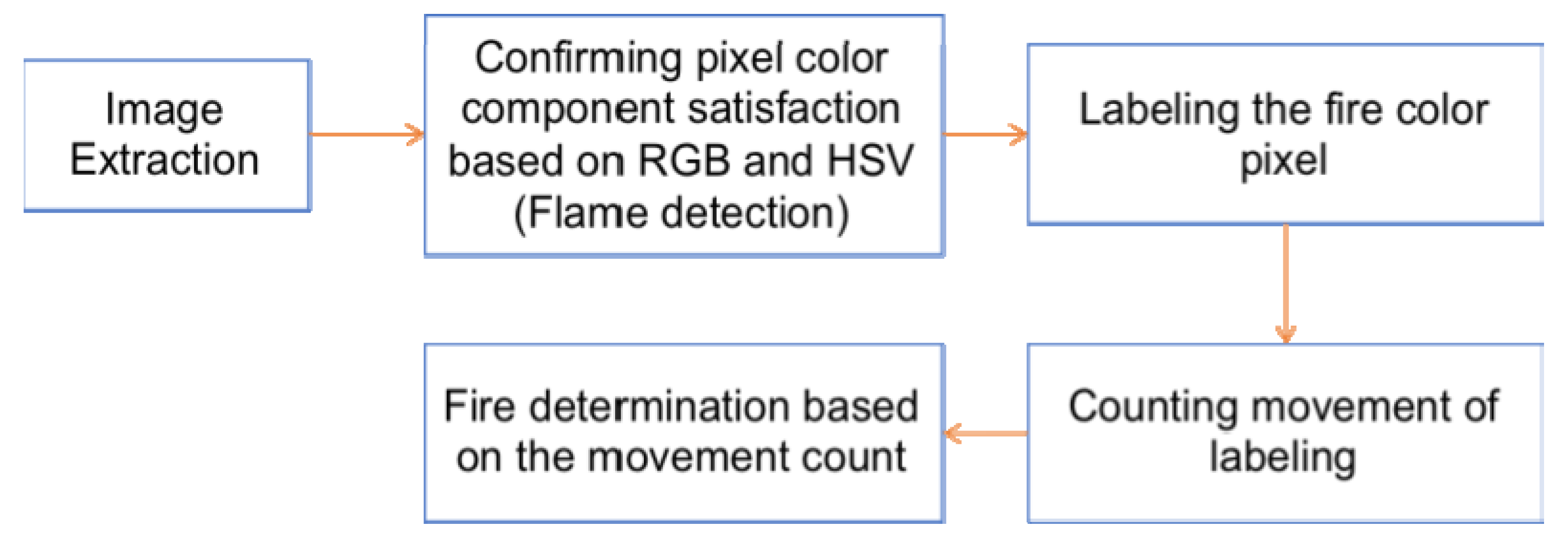

The second fire recognition system is a camera-based monitoring technique such as CCTV, which is relatively inexpensive to install and most commonly used. In general, a high-definition (HD) camera can detect objects at a range of 100 m to 150 m, and the situation can be judged because the field can be checked in real time. However, the administrator must constantly perform visual monitoring, which is inconvenient. To address this, objects and fire situations can be recognized in real time using IoT-based image-processing technology. In the proposed system, the occurrence of a fire is judged based on the diffusion pattern of flame and smoke. Although the flame shape tends to change irregularly with different environmental conditions, its color values fall within a specific range. In order to distinguish the flame color in that range, we convert the digital image into the hue-saturation-value (HSV) color space and use the hue values, setting aside the brightness and saturation. Then, the scheme extracts the red, green, blue (RGB), H, and V values of the pixels in order to configure the candidate region that is considered to be real flame. For this calculation, we define expression (1) as follows.

By applying expression (1) to each digital pixel, the proposed scheme generates a flame candidate binary image, called F_1. After the fire zone candidates are checked consecutively, if the count variable exceeds the threshold number of candidate zones (set to 100) and the candidates are concentrated in more than 3 areas, the candidate region is judged to be a real fire zone. The overall operations and recognition algorithm of the proposed scheme are summarized in Figure 3.

The proposed vision algorithm enables real-time detection without human intervention by ensuring the recognition time of 3 to 10 s after the first fire occurs.
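The candidate-and-count procedure can be illustrated with a minimal sketch. The pixel rule below is a placeholder, because expression (1) is not reproduced in this text: the hue and brightness limits (`HUE_MAX_DEG`, `MIN_VALUE`) and the `r > g > b` ordering are assumptions, and reading "concentrated in more than 3 areas" as "at least `MIN_AREAS` connected regions" is only one possible interpretation. Only the threshold of 100 candidates comes from the description above.

```python
import colorsys

# Placeholder limits: assumptions standing in for expression (1).
HUE_MAX_DEG = 60.0          # red-to-yellow hues (assumed)
MIN_VALUE = 0.6             # minimum brightness V (assumed)
CANDIDATE_THRESHOLD = 100   # threshold on the candidate count (from the text)
MIN_AREAS = 3               # number of candidate areas (interpretation assumed)


def is_flame_pixel(r, g, b):
    """Return True if an 8-bit RGB pixel passes the placeholder flame rule."""
    h, _s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return r > g > b and h * 360 <= HUE_MAX_DEG and v >= MIN_VALUE


def candidate_mask(image):
    """Build the binary flame-candidate image from rows of (r, g, b) tuples."""
    return [[is_flame_pixel(*px) for px in row] for row in image]


def count_regions(mask):
    """Count 4-connected regions of True cells using an iterative flood fill."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for i in range(rows):
        for j in range(cols):
            if not mask[i][j] or seen[i][j]:
                continue
            regions += 1
            seen[i][j] = True
            stack = [(i, j)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return regions


def is_fire(image):
    """Judge a frame as fire if enough candidates exist in enough areas."""
    mask = candidate_mask(image)
    count = sum(cell for row in mask for cell in row)
    return count > CANDIDATE_THRESHOLD and count_regions(mask) >= MIN_AREAS
```

A production detector would also track the candidates over consecutive frames, as the description implies; this sketch evaluates a single frame only.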

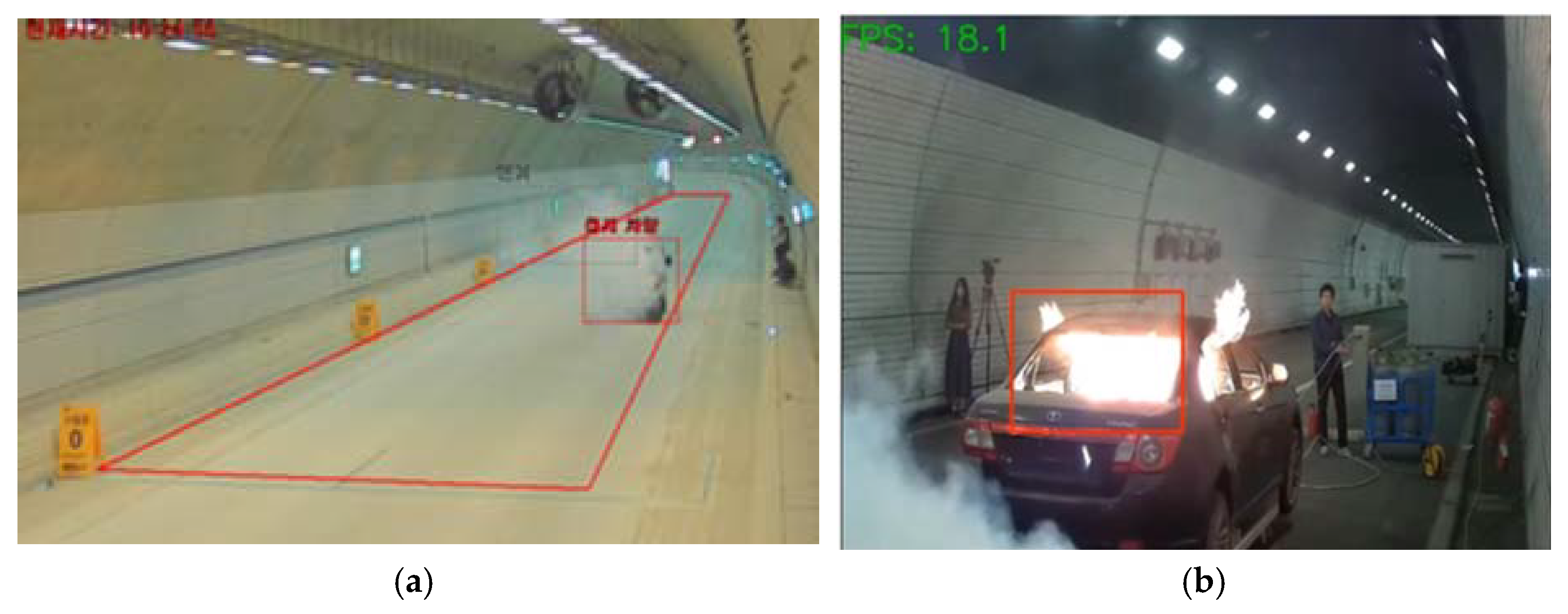

Figure 4 shows the technique of detecting smoke and flame through camera images.

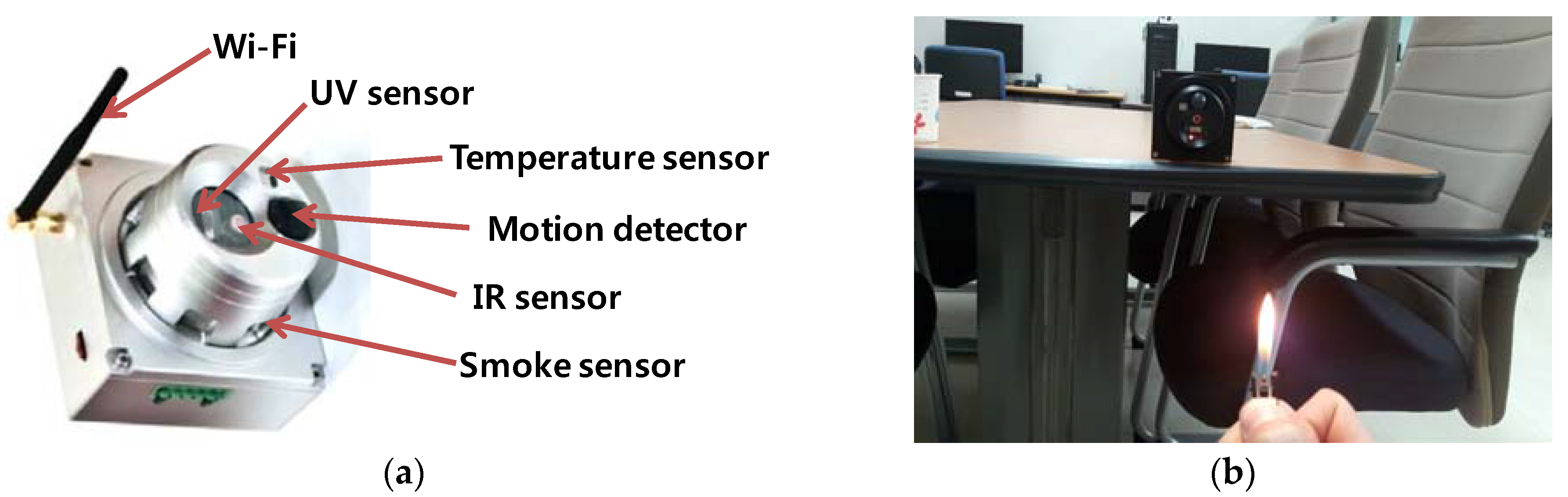

The third proposed device is a portable, scalable, wireless communication-based compact fire detector. Recently, the weight reduction of sensors has enabled the development of sensors that incorporate heat, smoke, flame, and ultraviolet (UV)/infrared (IR) sensors.

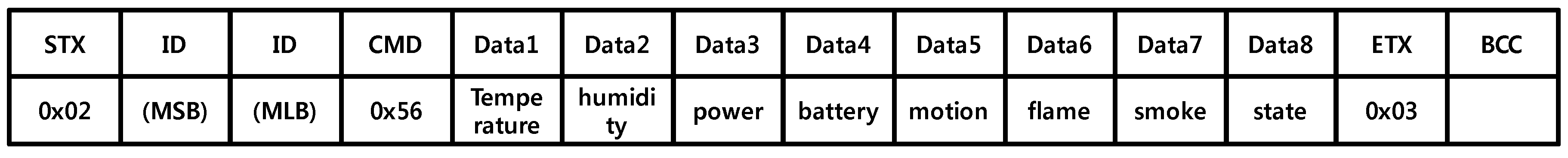

Figure 5 shows an integrated sensor platform for producing fire alerts using WiFi. The proposed system can be installed at intervals of 50 to 100 m depending on the WiFi transmission range. In addition to heat and smoke detection, motion sensors can be used to monitor emergency situations in a variety of environments, such as detecting activity of a victim. The detailed message format for transmission is shown in Figure 6. Each data field has 1 byte and the total message size is 14 bytes. This message is transmitted through the RS-485 interface at 9600 bps, which requires an additional Transmission Control Protocol and Internet Protocol (TCP/IP) converter for transmission via WiFi. The successfully received messages are stored in the database on the server for further investigation, as shown in Table 1.
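As a rough illustration of the message size and line rate, the sketch below unpacks a 14-byte message into fourteen 1-byte fields and estimates its serial transmission time. The field meanings are defined in Figure 6 and are not assumed here, so the fields are returned as an unnamed tuple; the 10 bits per byte on the wire (one start bit, eight data bits, one stop bit) is an assumption about the serial framing, which the text does not state.

```python
import struct

MESSAGE_SIZE = 14            # bytes, from the text
BAUD_RATE = 9600             # bps, from the text
BITS_PER_BYTE_ON_WIRE = 10   # assumption: 8N1 framing (start + 8 data + stop)


def parse_message(raw: bytes) -> tuple:
    """Unpack a 14-byte sensor message into fourteen unsigned 1-byte fields."""
    if len(raw) != MESSAGE_SIZE:
        raise ValueError(f"expected {MESSAGE_SIZE} bytes, got {len(raw)}")
    return struct.unpack("14B", raw)


def wire_time_ms(n_bytes: int = MESSAGE_SIZE) -> float:
    """Estimate the time to send n_bytes over the serial link, in milliseconds."""
    return n_bytes * BITS_PER_BYTE_ON_WIRE / BAUD_RATE * 1000


if __name__ == "__main__":
    print(parse_message(bytes(range(14))))
    print(f"{wire_time_ms():.2f} ms per message")
```

Under the assumed framing, one message occupies the RS-485 line for roughly 14.6 ms, so the serial link itself is unlikely to be the bottleneck for periodic sensor reports.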

In order to reliably transmit sensor data to the gateway using WiFi, proper routing protocol design is crucial, especially in wireless multi-hop networks. The basic routing technique is based on BATMAN [38], which is one of the most representative mesh network protocols. BATMAN periodically transmits originator messages (OGM) and uses a path quality metric called transmission quality (TQ). However, in order to reflect the emergency of the fire scene, the proposed system uses temperature information as a routing metric for calculation of the path TQ(p) for a path p having length n > 1 and traversing nodes (o_1, o_2, …, o_n), as shown in expression (2).

where α is a coefficient (0 < α < 1) that penalizes longer routes, and the remaining two terms are the current temperature of node o_i and the thermal resistance degree of node o_i, respectively. Temperature can be measured in real time because all fire sensors are installed. As a result, the collected data is transmitted along routes that avoid nodes whose temperature is approaching the heat resistance limit of the sensor platform. A user terminal that has no temperature sensor establishes its routing path based on the general TQ metric.
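To make the idea of a temperature-aware path metric concrete, the sketch below scores a path by combining link quality, a per-hop penalty, and a per-node thermal factor. It is not expression (2), which is not reproduced in this text: the multiplicative form, the factor `1 - T/R`, and the use of `alpha ** (n - 1)` are all assumptions chosen only to show how hot nodes could be steered around.

```python
from math import prod
from typing import Sequence


def thermal_factor(temp_c: float, limit_c: float) -> float:
    """Assumed factor: 1 for a cold node, falling to 0 at the thermal limit."""
    return max(0.0, 1.0 - temp_c / limit_c)


def path_tq(
    link_tq: Sequence[float],
    node_temps_c: Sequence[float],
    node_limits_c: Sequence[float],
    alpha: float = 0.9,
) -> float:
    """Score a path of n nodes and n - 1 links (higher is better).

    link_tq holds per-link quality values in [0, 1]; alpha (0 < alpha < 1)
    penalizes each additional hop. The combination rule is hypothetical.
    """
    n = len(node_temps_c)
    if n < 2 or len(link_tq) != n - 1 or len(node_limits_c) != n:
        raise ValueError("need n >= 2 nodes, n - 1 links, and n thermal limits")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    quality = prod(link_tq) * alpha ** (n - 1)
    for temp, limit in zip(node_temps_c, node_limits_c):
        quality *= thermal_factor(temp, limit)
    return quality


if __name__ == "__main__":
    cool = path_tq([0.90, 0.90], [25, 25, 25], [85, 85, 85])
    hot = path_tq([0.95, 0.95], [25, 80, 25], [85, 85, 85])
    print(f"cool path: {cool:.3f}, hot path: {hot:.3f}")
```

With these assumed values, the path through the node at 80 °C scores far lower even though its links are better, which is the qualitative behavior the temperature-based metric is meant to produce.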

3.3. Radar Sensor for Vehicle Detection

Recently, many intelligent transportation systems (ITS) have been studied to monitor the flow of vehicles on the road and to detect dangerous situations through radar. In particular, since radar uses radio wave transmission and reception based on the Doppler effect, it has the advantage that it can perform robust and accurate position tracking in extreme conditions, such as poor illumination, smoke, snow, and rain, in comparison with existing cameras or optical sensors. In a typical conventional positioning method, a global positioning system (GPS) sensor provides the position of a vehicle or an object in real time; however, it is impossible to receive a GPS signal in a closed space, such as a tunnel. Of course, radar signals are also very distorted due to diffuse reflection, signal fading, and the ghost effect [

39] in a tunnel. However, in the proposed system, a signal filtering [

13] process is introduced to enable real-time vehicle tracking in a tunnel. Vehicle accident detection using a radar sensor can be performed by checking whether the vehicle is moving at excessive speed or has performed an emergency stop. In addition, information regarding lane departure using the identification, speed, direction, and coordinates of the running vehicle can be obtained in real time. For example, if a vehicle suddenly stops in a tunnel, the radar suspects an accident first. This is because the tunnel is basically an area where parking is prohibited. However, detection of flame and smoke after the vehicle has stopped can be done by camera-based image analysis.

Figure 7 shows the installation of a radar in a tunnel to monitor the driving of vehicles and to display the acquired coordinates and the accident status to the control system. In

Figure 7a, the leading vehicle (ID 5) of the two moving vehicles has stopped 90 m into the tunnel, and the following vehicle (ID 9) has stopped. The exact x and y coordinate values are shown in

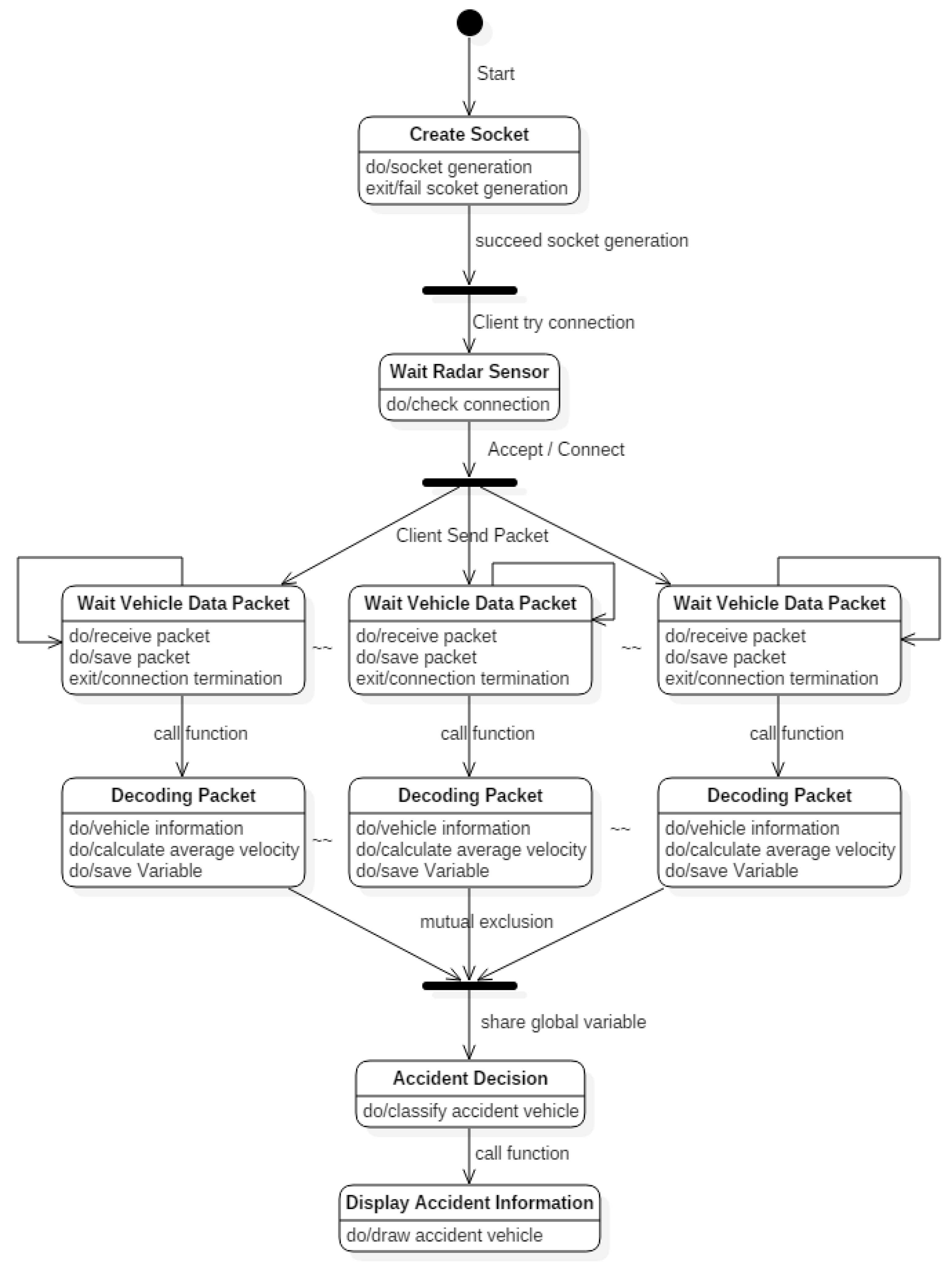

Figure 7c. The x coordinate is the distance the vehicle travels from the entrance, and the y coordinate is the horizontal distance away from the centerline of the tunnel. The radar sensor of the developed system has an accuracy of 0.25 m, and it can track up to 64 vehicles at the same time. The maximum detection distance per vehicle is about 250 m. Therefore, if two or three radars are installed in the tunnels at regular intervals, accurate vehicle position tracking and accident detection for all dangerous sections are possible. In addition, temperature, smoke, flame, humidity, and motion sensor measurements obtained by the previously mentioned fire sensor can be confirmed through the environmental information menu in the server. For multiple radar operations, we proposed a client/server based system architecture as shown in

Figure 8. Each radar device encodes its acquired information and transmits it as a packet, and the server system decodes it to display the moving vehicle throughout the tunnel and confirms whether or not an accident has occurred.
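The server-side check for a suddenly stopped vehicle can be sketched as follows. This is a simplified illustration rather than the developed system's logic: the `Track` record, the speed threshold `STOP_SPEED_KMH`, and the requirement of `STOP_FRAMES` consecutive low-speed frames are hypothetical choices, and the decoded radar packet is assumed to yield one list of tracks per frame.

```python
from dataclasses import dataclass
from typing import Dict, List

STOP_SPEED_KMH = 1.0   # hypothetical: below this a vehicle counts as stopped
STOP_FRAMES = 3        # hypothetical: consecutive stopped frames before flagging


@dataclass
class Track:
    vehicle_id: int
    x_m: float        # distance travelled from the tunnel entrance
    y_m: float        # horizontal offset from the tunnel centerline
    speed_kmh: float


def suspected_accidents(frames: List[List[Track]]) -> List[Track]:
    """Return the last known track of every vehicle stopped for STOP_FRAMES frames."""
    stopped_count: Dict[int, int] = {}
    last_seen: Dict[int, Track] = {}
    for frame in frames:
        for track in frame:
            last_seen[track.vehicle_id] = track
            if track.speed_kmh < STOP_SPEED_KMH:
                stopped_count[track.vehicle_id] = stopped_count.get(track.vehicle_id, 0) + 1
            else:
                stopped_count[track.vehicle_id] = 0
    flagged = sorted(vid for vid, count in stopped_count.items() if count >= STOP_FRAMES)
    return [last_seen[vid] for vid in flagged]


if __name__ == "__main__":
    frames = [
        [Track(5, 90.0, 0.5, 0.0), Track(9, 40.0, 0.5, 38.0)],
        [Track(5, 90.0, 0.5, 0.0), Track(9, 50.0, 0.5, 35.0)],
        [Track(5, 90.0, 0.5, 0.0), Track(9, 60.0, 0.5, 30.0)],
    ]
    for track in suspected_accidents(frames):
        print(f"vehicle {track.vehicle_id} stopped at x = {track.x_m} m")
```

Requiring several consecutive frames is a simple guard against flagging a vehicle on a single noisy speed reading; a flagged position would then be handed to the camera-based analysis to confirm flame or smoke.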

3.4. Robot for Prompt Search

When a fire occurs in a tunnel, the cause, scale, and location of the accident are accurately confirmed through CCTV, fire sensors, and radar, and this information is transmitted to the control server via the wired or wireless network in real time. If the fire is mild, the fire can be suppressed by the driver himself or herself. However, it usually takes 5 to 10 min for a fire truck to arrive at the scene, so the fire typically progresses rapidly, and smoke and fumes fill the tunnel. In this situation, it is possible to use fans for ventilation, but in small or old tunnels, fan installation may be insufficient. Thus, it is necessary to investigate the internal fire progress in detail because hasty entry may lead to the injury of fire fighters.

Of course, it is possible to monitor such a situation remotely through existing CCTV. However, since visibility is rapidly reduced by smoke and there are many blind spots, it is difficult to use existing camera equipment to observe tunnels. To overcome this problem, a robot that can collect information on the hot spot can be utilized in place of a fire fighter. There are various kinds of reconnaissance robots for disaster site deployment, but if a tunnel is longer than 1 km, the robot needs to have an average speed of 50 km/h to reach the fire within 1 to 2 min. For this purpose, a wheel-type ground-based robot is suitable for faster driving, in comparison to the caterpillar type. However, when a large number of vehicles are stuck in a tunnel due to an accident, access is difficult. Of course, if the fire brigade has secured a shoulder or emergency ramp, it will be possible to use a robot.
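The speed requirement can be checked with simple arithmetic. The helper below is a hypothetical convenience function that converts a distance and an average speed into a travel time; it ignores acceleration, obstacles, and the time needed to deploy the robot.

```python
def travel_time_s(distance_km: float, speed_kmh: float) -> float:
    """Return the time in seconds to cover distance_km at a constant speed_kmh."""
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    return distance_km * 3600 / speed_kmh


if __name__ == "__main__":
    for distance_km in (1.0, 1.5):
        seconds = travel_time_s(distance_km, 50.0)
        print(f"{distance_km} km at 50 km/h: {seconds:.0f} s ({seconds / 60:.1f} min)")
```

At an average of 50 km/h, a fire 1 km inside the tunnel is reached in 72 s, which falls inside the 1 to 2 min window mentioned above.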

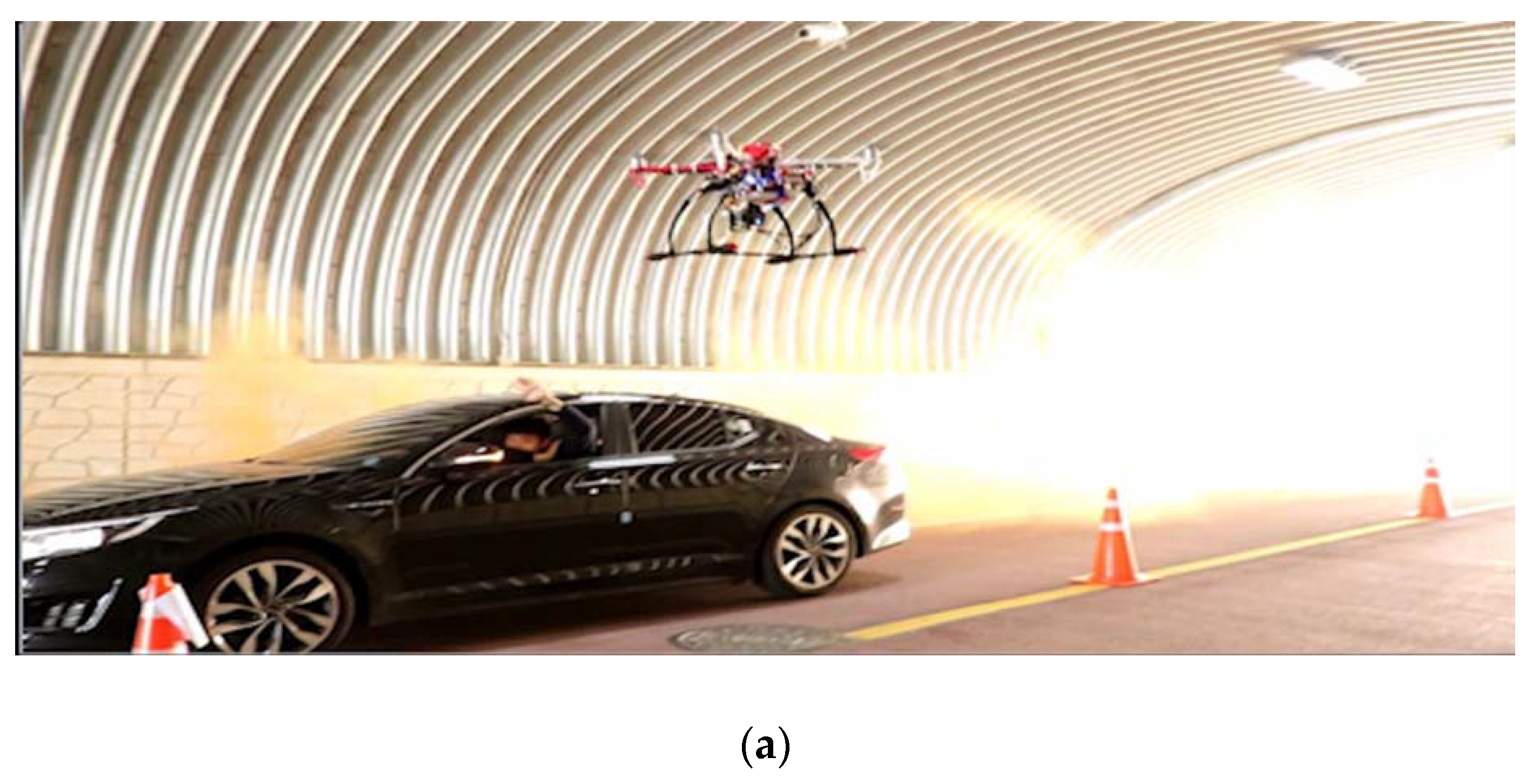

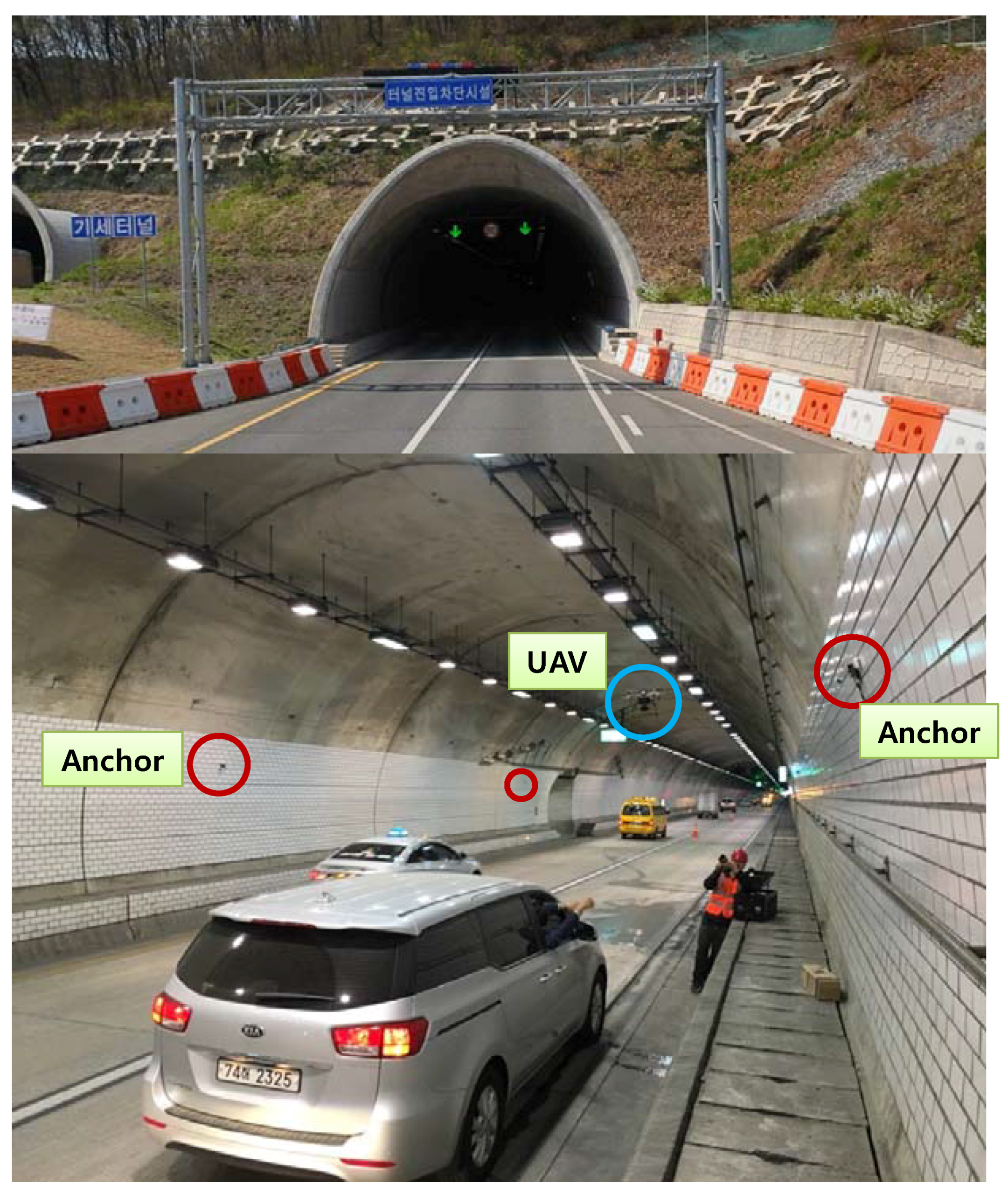

Another option is to use drones to quickly access the fire through the air, regardless of the vehicle’s identity. In particular, if the altitude is maintained at a certain height above the vehicles, straight flight is possible without collision with obstacles. It is important not only to have a high-resolution camera, but also to mount an IR camera on the robot to overcome the smoke and locate the fire and victims. Recently, as the drone platform has become popular, low-cost models and camera modules have been developed. In the proposed system, IR and red–green–blue (RGB) cameras are installed in a drone as shown in Figure 9. The photographed image data is directly transmitted to a fire fighter who is operating the drone. The data can also be transmitted to a remote control center through a WiFi-based access point (AP) built into the tunnel or a wireless interface mounted on the controller. However, since the actual transmission distance of WiFi is about 100 m, when multi-hop communication is used in a tunnel of 1 km or more, the throughput decreases drastically as the number of hops increases. Therefore, in order to smoothly transmit and receive image data in a tunnel of 1 km or more, an analog RF communication method is suitable. The proposed system extends the communication range to more than 1 km by using a 424 MHz RF module. Another consideration is whether the UAV should receive data from sensors installed in the tunnel and participate directly in the routing procedure. Although this could be developed, adding an RF module of the same specifications as the sensors deployed in the tunnel would increase the payload weight and the battery consumption rate. Moreover, due to the high mobility of the UAV, the additional handover delay increases. Therefore, the proposed fire response system provides the routing process only among the statically installed IoT sensors, for fast and stable transmission of emergency information considering the particularities of the tunnel. Despite this extended communication distance via analog RF communication, it is still difficult to use automatic flight, such as the waypoint flight of a drone, because it is impossible to track its position using GPS inside a tunnel. Therefore, the burden on pilots, who must operate drones by relying on camera screens, remains a challenging problem to be solved.
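The effect of hop count on WiFi relaying can be illustrated with a deliberately crude model. The functions below are hypothetical and assume a single shared channel on which only one hop transmits at a time, so the end-to-end rate is the single-hop rate divided by the number of hops; real throughput depends on interference, spatial reuse, and the protocol, and the 20 Mbps single-hop rate is an arbitrary example value.

```python
import math


def hops_needed(distance_m: float, hop_range_m: float) -> int:
    """Return the number of relay hops needed to span distance_m."""
    if distance_m <= 0 or hop_range_m <= 0:
        raise ValueError("distance and range must be positive")
    return math.ceil(distance_m / hop_range_m)


def shared_channel_throughput(single_hop_mbps: float, hops: int) -> float:
    """Estimate end-to-end throughput when only one hop can transmit at a time."""
    if hops < 1:
        raise ValueError("at least one hop is required")
    return single_hop_mbps / hops


if __name__ == "__main__":
    hops = hops_needed(1000.0, 100.0)
    rate = shared_channel_throughput(20.0, hops)
    print(f"{hops} hops, about {rate:.1f} Mbps end to end")
```

Under this model a 1 km tunnel with a 100 m WiFi range needs 10 hops and keeps only a tenth of the single-hop rate, which is consistent with the preference for a single long-range RF link for image data.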

3.5. Localization and Commanding for Firefighters

By using the IoT sensors and robots described above, the firefighters collect information on the situation inside the tunnel. If the situation is judged to be safe for the rescue crew, the fire brigade begins to enter. At this time, the most important duty of the fire brigade is to find and rescue the survivors in the vehicles involved in the accident. That is, it is important to identify the accurate locations of survivors and fire fighters to support efficient search and rescue operations. Survivors can announce their location by voice, but with a smartphone and radio frequency identification (RFID) technology they can also transmit their location information over the wireless network. Fire fighters can likewise transmit their locations to the control center using RFID readers and dedicated terminals. In the proposed system, RFID tags are installed in the tunnel at regular intervals, and a user carrying an RFID reader approaches a tag and records its coordinates and the time. The location information is then transmitted to the remote control center. Although the read range of RFID systems varies with the RF specification, tags are generally readable at distances of 5 to 30 m and are very cheap, so they can be installed over a wide area of the tunnel. A conceptual diagram of the constructed system is shown in Figure 10. When an RFID reader reads a tag installed in the tunnel in advance, the data can be identified through a smart device paired via Bluetooth, and the corresponding coordinates are displayed on the map.
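As a minimal sketch of the tag-to-coordinate step described above, the following code maps a tag read to a position along the tunnel and builds a report for the control center. The 10 m tag spacing, the integer tag numbering, and the names `LocationReport`, `tag_position_m` and `make_report` are hypothetical and not taken from the proposed system.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

TAG_SPACING_M = 10.0  # assumed spacing between consecutive tags


@dataclass(frozen=True)
class LocationReport:
    reader_id: str
    tag_id: int
    position_m: float  # distance from the tunnel entrance
    timestamp: str     # ISO 8601, UTC


def tag_position_m(tag_id: int, spacing_m: float = TAG_SPACING_M) -> float:
    """Tags are assumed to be numbered 0, 1, 2, ... from the entrance."""
    return tag_id * spacing_m


def make_report(reader_id: str, tag_id: int,
                now: Optional[datetime] = None) -> LocationReport:
    """Build the record a reader would send after reading a tag."""
    now = now or datetime.now(timezone.utc)
    return LocationReport(reader_id, tag_id,
                          tag_position_m(tag_id), now.isoformat())


print(make_report("firefighter-01", 12))
```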

Meanwhile, a fire fighter’s search for victims is repeated at least three times in the same area, because it is necessary to confirm that no victims have been overlooked in the field. To do this, we divide the search area by accident vehicle and report the number of completed searches of each area to the control center. The control center then notifies the firefighters of the sections that have been searched fewer than three times, so that no unconfirmed sections remain. The completion of a search in an area must be entered explicitly through the application on the firefighter’s mobile terminal. In other words, it is up to the firefighter’s personal judgment to decide whether the search of a given area is complete. In Figure 10, three search areas are defined, one for each of the three cars involved in a chain collision. The positions of the three vehicles can be confirmed using the previously proposed radar system, and by default the control server dynamically divides the accident spot into three search areas based on the locations of the stopped vehicles. Of course, the search area can be adjusted manually according to the fire commander’s field judgment.
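The bookkeeping for repeated searches can be sketched as follows. This is an illustrative outline of the control-center logic only; the class name `SearchTracker` and the area identifiers are hypothetical.

```python
REQUIRED_SEARCHES = 3  # each area must be searched at least three times


class SearchTracker:
    """Counts completed searches per area and lists areas still pending."""

    def __init__(self, area_ids):
        self.counts = {area: 0 for area in area_ids}

    def report_completed(self, area_id: str) -> None:
        """Called when a firefighter confirms a completed search in the app."""
        if area_id not in self.counts:
            raise KeyError(f"unknown search area: {area_id}")
        self.counts[area_id] += 1

    def pending_areas(self):
        """Areas searched fewer than REQUIRED_SEARCHES times."""
        return [a for a, n in self.counts.items() if n < REQUIRED_SEARCHES]


tracker = SearchTracker(["area1", "area2", "area3"])
for _ in range(3):
    tracker.report_completed("area1")
tracker.report_completed("area2")
print(tracker.pending_areas())  # ['area2', 'area3']
```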

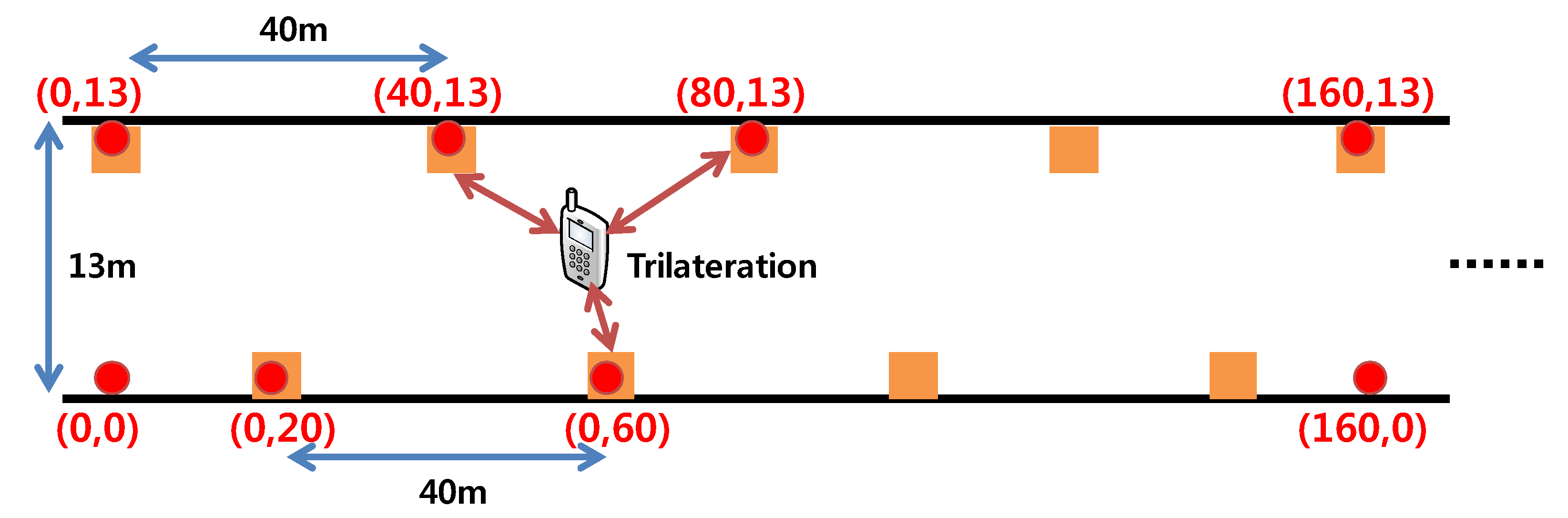

If more precise localization than that achieved by RFID is needed, the system can adopt UWB technology; the proposed system is also compatible with UWB RF. It has been confirmed that the Decawave DW1000 (a commercial product) [40] provides indoor positioning accuracy within 20 cm. As shown in Figure 11, the basic positioning model measures the x, y coordinates through trilateration, using distances obtained from UWB signals exchanged with three anchor nodes. Bluetooth pairing is also possible for interworking with existing smart devices. The measured data is displayed in real time on the map program in the terminal or on the server computer. In Figure 10, two fire fighters, indicated by blue dots, are sent into search area 2 and search area 3. In addition, a red dot indicates a victim, which helps the adjacent fire fighter to rescue the victim.
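The trilateration step described above can be sketched as follows. Subtracting the first circle equation from the other two gives a 2 × 2 linear system in x and y. This is a minimal noise-free illustration, not the DW1000 firmware or the authors' implementation, and the anchor coordinates are hypothetical.

```python
import math


def trilaterate(anchors, distances):
    """Estimate (x, y) from three anchor positions and measured ranges."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    # Linear system obtained by subtracting circle 1 from circles 2 and 3.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        raise ValueError("anchors are collinear; position is ambiguous")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# Hypothetical anchors on the two walls of a 13 m wide tunnel.
anchors = [(0.0, 0.0), (40.0, 0.0), (20.0, 13.0)]
target = (10.0, 5.0)
ranges = [math.dist(a, target) for a in anchors]
print(trilaterate(anchors, ranges))
```

With real UWB ranges the three circles rarely intersect at a single point, so a practical system would typically use more anchors and a least-squares fit.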

When the system is installed inside a tunnel, the accuracy can degrade to within 3 m due to wireless physical error and the loss of line of sight (LOS) caused by obstruction from surrounding vehicles and walls. However, assuming that a typical passenger vehicle is 5 m long, there is no great difficulty in estimating the position of an accident vehicle. To obtain optimal accuracy, it is important to arrange the anchor nodes appropriately. In the proposed system, anchors are arranged at intervals of 40 m along each wall, as shown in Figure 12. The installation height of the anchors is set to about 4 m to overcome the non-line-of-sight (NLOS) problem caused by vehicles. The target tunnel is a 1.9-km-long motorway tunnel (latitude: 35.776131, longitude: 128.497783) located in Daegu city, Korea. The tunnel consists of two lanes; the road width is 13 m, and the height from the floor to the ceiling is 7.5 m. The target tunnel reflects the latest tunnel design, and vehicles travel through it at speeds of 80 km/h or more. It is also difficult to access the inside because this tunnel is more than 1 km long, as in the Gottard tunnel fire case. The shape of the inside of the tunnel and the progress of the experiment are shown in Figure 13.
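The anchor layout described above can be enumerated with a short sketch. It uses the stated 40 m spacing, 4 m mounting height, 13 m road width and 1.9 km length, and additionally assumes that anchors on the two walls face each other and that the first pair sits at the tunnel entrance; the actual layout in Figure 12 may differ.

```python
def anchor_positions(tunnel_length_m: float = 1900.0,
                     width_m: float = 13.0,
                     spacing_m: float = 40.0,
                     height_m: float = 4.0):
    """Return (x, y, z) anchor coordinates along both tunnel walls."""
    per_wall = int(tunnel_length_m // spacing_m) + 1
    anchors = []
    for i in range(per_wall):
        x = i * spacing_m
        anchors.append((x, 0.0, height_m))      # wall at y = 0
        anchors.append((x, width_m, height_m))  # opposite wall
    return anchors


anchors = anchor_positions()
print(len(anchors), anchors[0], anchors[-1])
```

Under these assumptions the tunnel needs 48 anchors per wall, 96 in total.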

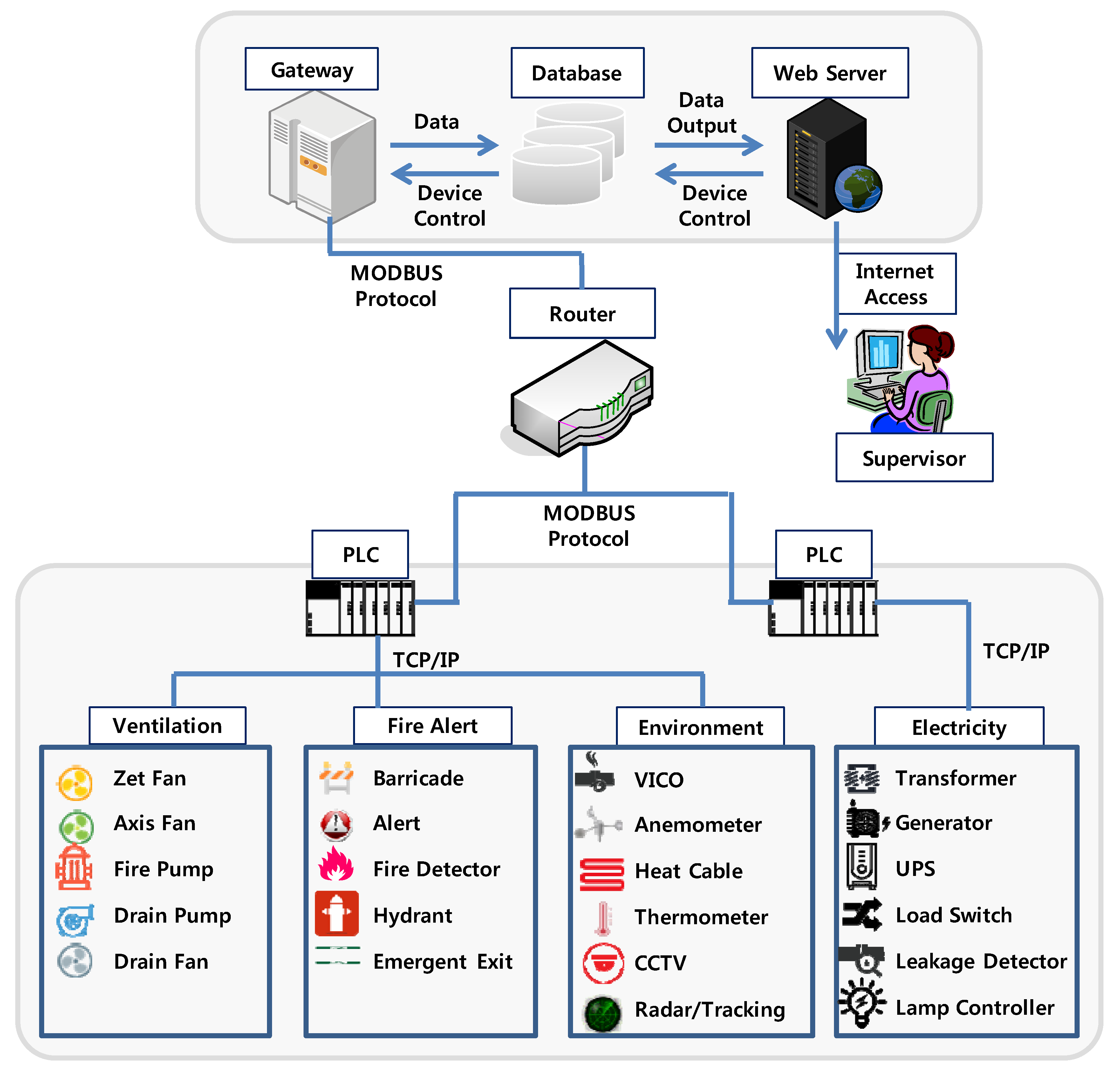

3.6. Integrated Remote Control System

To control and monitor the search and rescue equipment effectively, it is necessary to develop a control system that operates in an integrated manner. In addition, a system should be developed to control the electricity and disaster prevention facilities in the tunnel in real time so that the manager can respond quickly to emergency situations. In general, to monitor and respond rapidly, the data collection period should be shortened to 100 ms. In addition, the data transmission rate should be maintained at 95% or more by adopting the TCP/IP protocol for reliable data transmission and reception. The proposed system is therefore designed to perform web-based monitoring and control so that it can be accessed easily by related organizations (e.g., fire agency, police agency) that need to share information. The system was designed to provide the commander in charge of a fire and accident scene with as much information as possible regarding conditions inside the tunnel. Thus, the proposed system supports a prompt response by providing accurate and abundant situation information to firefighters in the disaster field.

Figure 14 shows the overall architecture of the proposed control system. Basically, it can monitor the status of disaster prevention equipment (e.g., ventilators, drainage pumps, barriers, generators, transformers, etc.) in a tunnel. In addition, it can control accident monitoring equipment, such as CCTV and fire sensors. The communication protocol between the various types of equipment maintains compatibility with legacy equipment by using MODBUS [41]. The main advantage of the proposed integrated system is that it can monitor multiple tunnels and various facilities from one computer and can control these facilities in real time. In particular, it is easily accessible through the web from any place with an Internet connection, and a separate database is maintained to provide the operation history and statistics of the equipment.
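To make the MODBUS polling concrete, the following sketch builds a standard "read holding registers" request (function code 0x03). It assumes Modbus TCP framing, since the text mentions TCP/IP but does not state which MODBUS variant is used. The unit ID and register addresses are hypothetical, and the function name `build_read_holding_registers` is introduced only for illustration.

```python
import struct


def build_read_holding_registers(transaction_id: int, unit_id: int,
                                 start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP request frame for function code 0x03."""
    if not 1 <= quantity <= 125:
        raise ValueError("quantity must be between 1 and 125 registers")
    # PDU: function code, starting address, number of registers.
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction ID, protocol ID (0), length, unit ID.
    # The length field counts the unit ID byte plus the PDU.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


# Hypothetical poll: read 10 registers from unit 1 starting at address 0.
frame = build_read_holding_registers(1, 1, 0, 10)
print(frame.hex())  # 00010000000601030000000a
```

A monitoring loop would send such a frame over a TCP socket to each device at the chosen collection period and parse the register values from the reply.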

4. Experiments and Discussion

4.1. Temperature and Smoke Experiments

It is important to understand the characteristics of tunnels, especially regarding heat and smoke, so that the search and rescue equipment developed in this study can be effectively used in the actual field. For this purpose, a fire dynamic simulator (FDS) [

42] was used to analyze the fire behavior in the tunnel. The experimental conditions of the tunnel were assumed to be 1 km in length, 4.5 m in height, and 13 m in width. In the simulated fire situation, one car was burning in the center of the tunnel, and the temperature inside the tunnel was set to 10 °C with 1 atm. The outside temperature was set to 15 °C. The condition of the fire source had a heat release rate (HRR) of 2500 kW, and the size of the fire source was set to 3 × 2 × 0.5 m. Experiments were carried out to measure the changes in temperature and smoke over time. The results are shown in

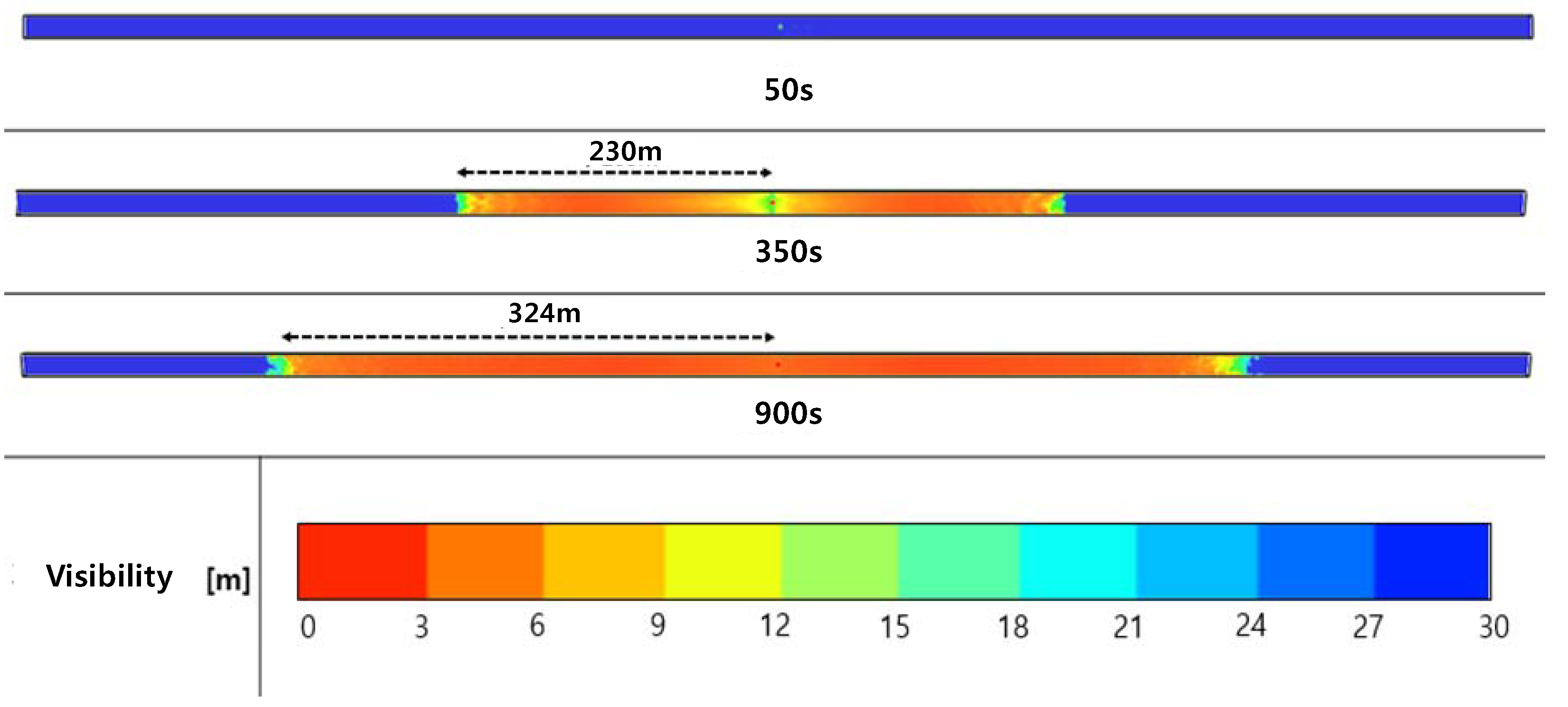

Figure 15 and 16. As seen in

Figure 15, the heat is not easily diffused and the temperature rises to a maximum of 450 °C at a height of 10 m above the source of the fire. In addition, it can be seen that the temperature rises to 300 °C near the ceiling above the fire source. This means that for the initial search operations, a robot or fire brigade can reach the fire up to 10 m distance without heat protection equipment. Of course, if the fire brigade is equipped with heat resistant clothing or equipment, it is possible to reach a fire source up to 1 m distance. However, when a vehicle fire spreads to a large number of neighboring vehicles, the amount of heat generated is further increased, and the temperature is expected to increase sharply.

Figure 16 shows the behavior of smoke over time under the same conditions as in

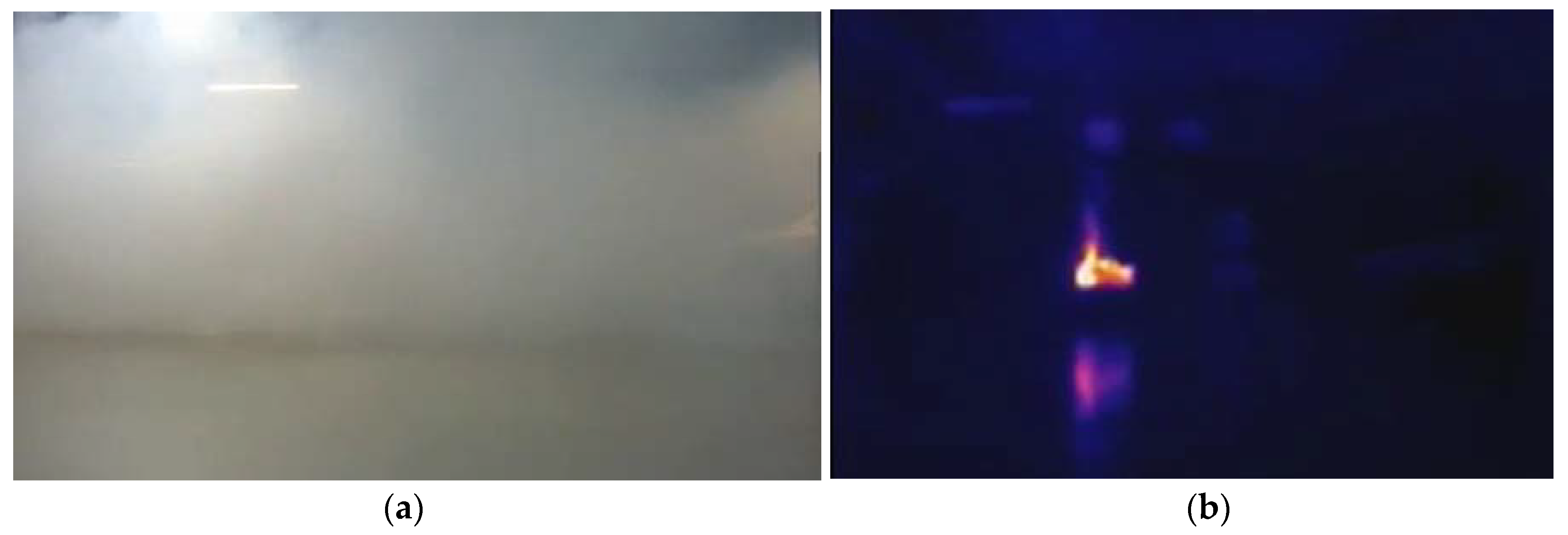

Figure 15, and smoke diffusion was not observed at 50 s after ignition. At 350 s, the visible distance of about 200 m from the ignition point was about 9 m, and when 900 s had elapsed, the visible distance to the vicinity of 320 m in both directions was confirmed to be about 9 m. This means that as the fire brigade’s input would be delayed; the smoke would spread and it would become very difficult to perform search and rescue operations. However, if an IR camera is used, images can be transmitted through the smoke, so it would not be difficult to locate the fire and victims.

Figure 17 shows that this is possible in an environment with an actual visibility distance of 9 m or less. Although it is not possible to identify a fire point by a conventional camera, an IR camera accurately recognizes a fire point.

Table 2 summarizes the fire recognition performance of the vision system proposed in Section 3.2. Experiments were carried out to determine whether the fire alarm was generated successfully and to measure the recognition ratio according to the distance between the fire point and the camera, the illumination (day, night), and the fire type (gasoline, heptane, wood). The experimental results show that the alert message is transmitted successfully in all cases, and that the recognition ratio is higher when the camera is closer to the fire spot and the surroundings are darker.

4.2. Communication Experiments

In the event of a fire or an accident, it is important that the data obtained from the various sensors arrive correctly at the control server through the wired or wireless networks. For this purpose, we defined the data delivery ratio (the number of packets successfully received divided by the total number of transmitted packets) and verified the performance. The experimental environment is based on the conceptual diagram in Figure 1, and the gathered data is transmitted to the server via a WiFi network. The WiFi uses the IEEE 802.11 b/g/n/ac specifications and maintains a communication distance of 50 m. The experimental results are summarized in Table 3 by data type and data delivery ratio. Since the proposed system shows a delivery ratio of more than 97% in the wireless environment, it is considered able to receive emergency signals accurately and successfully. Nevertheless, due to the characteristics of the wireless environment, reception errors may occur because of fading, distortion, packet collision, and so forth. These errors can be sufficiently overcome if TCP/IP-based retransmission or error control is adopted.
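The delivery ratio defined above, and the benefit of retransmission, can be illustrated with a short sketch. The retransmission estimate assumes that packet losses are independent between attempts, which is a simplification that real tunnel channels with bursty fading may not satisfy. The packet counts are illustrative, not the values in Table 3.

```python
def delivery_ratio(received: int, transmitted: int) -> float:
    """Packets successfully received divided by packets transmitted."""
    if transmitted <= 0:
        raise ValueError("transmitted must be positive")
    return received / transmitted


def ratio_with_retries(single_try_ratio: float, max_retries: int) -> float:
    """Delivery probability after retries, assuming independent losses."""
    loss = 1.0 - single_try_ratio
    return 1.0 - loss ** (max_retries + 1)


ratio = delivery_ratio(97, 100)
print(ratio, ratio_with_retries(ratio, 2))
```

Under the independence assumption, a 97% single-attempt ratio with two retransmissions leaves a residual loss of about 0.03³, or roughly 0.003%.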

In addition to the data delivery ratio, we also analyzed the transmission delay time and the server response time for each data transmission. The transmission delay time is the delay between a packet leaving the buffer of the sending node and its successful reception at the receiving node. The server response time is the elapsed time until the server interprets the data and approves the connection. The delay time for each data type is shown in Table 4. The transmission time is close to real time, at most about 500 ms. However, the transmission of the image data takes about 1.8 s, longer than that of the other sensor data, because after the preprocessing of an image is finished, additional software computation time is required to recognize it as an image of fire. Even so, an accident can be reported within 2 s. Therefore, the delay poses no great difficulty for actual rescue activities.

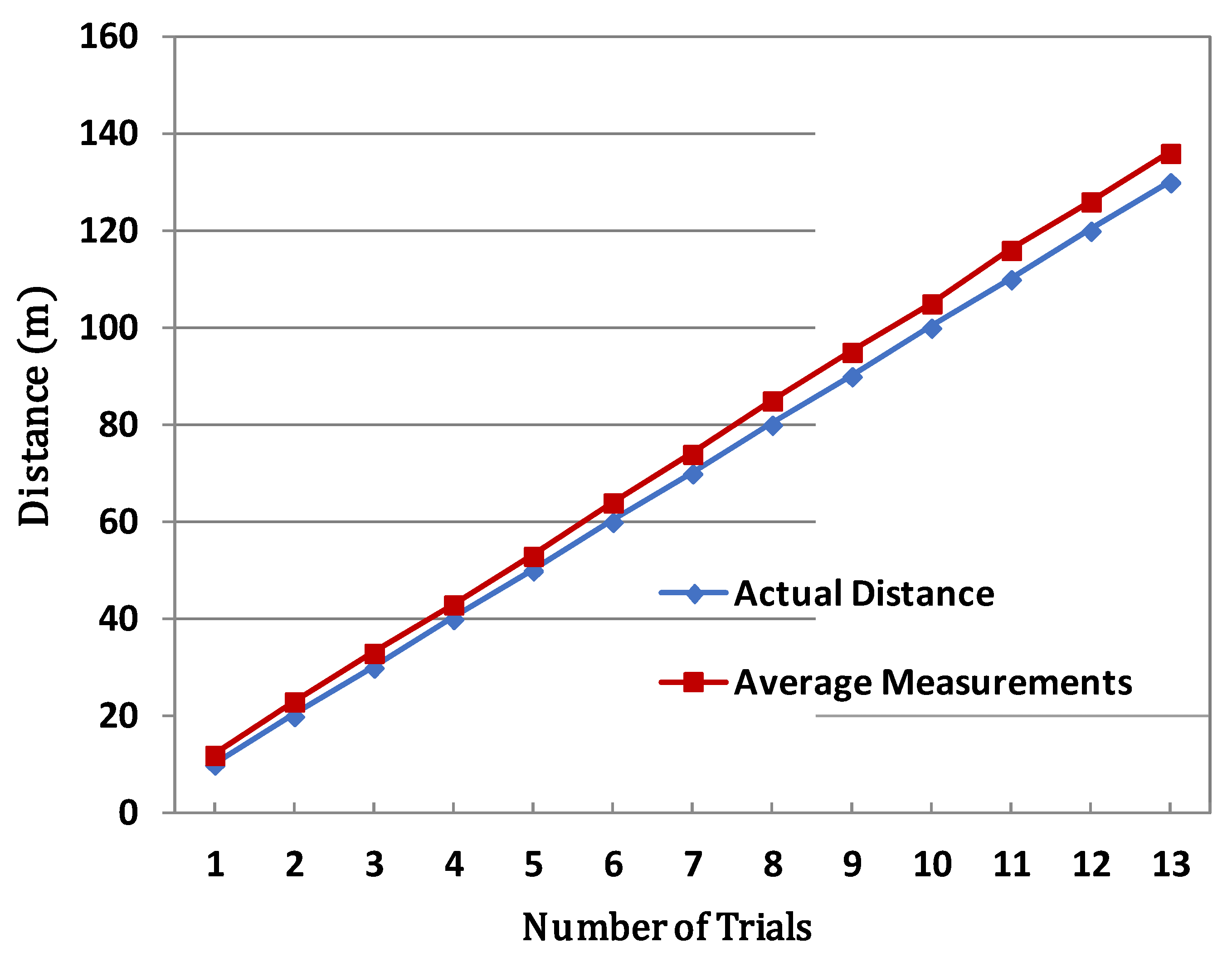

4.3. Localization Experiments

For efficient search and rescue when an accident involving a tunnel fire occurs, it is important to measure the positions of stopped vehicles, victims, and fire fighters in the tunnel. In an experiment to assess whether the system can accurately measure the position of the fire brigade, a firefighter carrying a UWB terminal repeatedly moved within the anchor’s radio range. We assumed that the anchor is already installed in the tunnel, as shown in

Figure 8. The measurement results are shown in

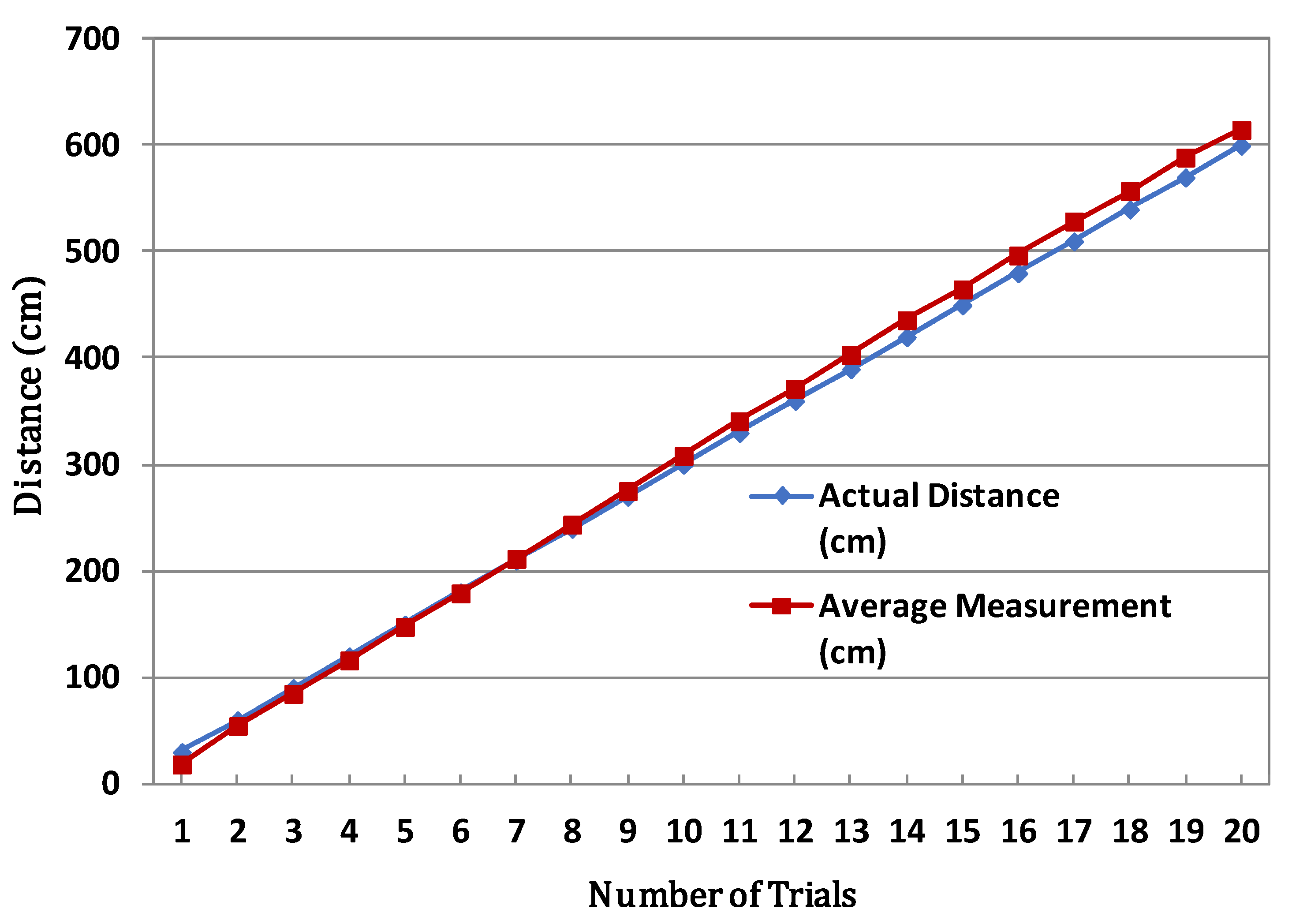

Figure 18, which shows a distance error of less than 20 cm in most sections. If the UWB infrastructure is successfully constructed, it would be possible to observe in real time how many fire fighters are working in which area and at what time. Thus, this system can efficiently support decisions regarding input personnel as well as the sequence and route of rescue activities according to the situation of the disaster site.

Figure 19 shows a comparison of the distance moved by an actual vehicle and the distance measured by the radar system. The radar was a Smartmicro T-32 model [43], installed in an emergency parking lot in a tunnel. The average distance was calculated by driving the vehicle repeatedly, more than 10 times. Of course, due to the nature of the radar antenna, the measurement is affected by surrounding obstacles and by physical vibration of the platform. However, when measured under optimal conditions without any obstacles, the distance error is less than 5 m in most sections. In some sections, errors of 6 to 7 m occurred, because signal distortion and clutter diffusion increase with distance. Some curved or sloping sections of roads and tunnels may also degrade the accuracy. However, considering that a sedan-type car is about 5 m long, an accuracy of 5 m is very helpful for accident point estimation and situation judgment.

5. Discussion

The proposed search and rescue system introduced various technologies to cope with tunnel fires. In general, disaster response can be divided into two processes, initial detection and rescue. The most important factor is the accurate detection of accidents. This is also important in securing the golden time for recovery. For this purpose, the proposed system can monitor the whole accident situation through the use of heat, smoke, and flame sensors, camera-based image processing technology, and radar to detect the location of burning vehicles when a fire occurs. If the initial response fails, a fire brigade is dispatched. Thereafter, a robot capable of gathering data to assess the internal situation can be used while securing the safety of fire fighters. Finally, to support fire fighters’ efficient activities, a location-tracking device and an area search monitoring technology were proposed.

However, to make these proposed technologies more useful in the field, it is necessary to consider some of the following challenges:

Monitoring range and the number of sensors. Various kinds of sensors can be used to monitor fires and various algorithms are used to process the data gathered by them. However, when applied to a tunnel longer than 1 km, dozens of sensors must be arranged according to a certain pattern and rules. Typically, they should be installed at minimum intervals according to the sensor’s sensing range and wireless communication distance. The installation should not interfere with the traffic of the vehicles, and the detection performance should not be impaired by external factors, such as weather conditions. In particular, the degree of robustness against rain, snow, wind, and temperature changes may affect overall performance. Additionally, battery and power supply issues should be considered for long-term use. Regarding CCTV and radar, it is also important to secure the optimal detection distance and LOS visibility. Although recent performance improvements have been made with the introduction of signal filtering and pan–tilt–zoom (PTZ) technologies, it is still necessary to review the installation height, angle, and software calibration for optimal performance.

Communication and networking. The data obtained from various disaster-detection sensors must be transmitted accurately and quickly to the correct administrator or control server. Various communication solutions based on IoT (e.g., WiFi, Bluetooth, ZigBee, long-term evolution (LTE), etc.) can be applied for this purpose. The proposed system basically adopts a fiber-optic cable built into the tunnel as a backbone and mainly uses WiFi for the backbone network connection. The most reliable communication method is to deploy APs at certain intervals to ensure traffic distribution and network connectivity. However, if there is an infrastructure failure, such as a power outage or building collapse due to a fire, a mobile ad hoc network can be established to ensure network connectivity. To this end, routing protocols, such as ad hoc on-demand distance vector (AODV) [44], dynamic source routing (DSR) [45], and optimized link state routing (OLSR) [46], have already been proposed as international standards, and recent open-source-based BATMAN [47] algorithms are also applicable. However, when constructing a wireless network, physical errors due to unstable channel conditions (e.g., distortion, attenuation, interference), the hidden-terminal problem, and the exposed-terminal problem should be considered. In addition, since the devices carried by a fire brigade are mobile, signal disconnection due to delayed reconnection and routing failures are potential degradation factors. To cope with these problems, various solutions, such as active route maintenance, error control, and congestion control, can be considered.

Robot operation. As the types of tunnels and buildings have diversified and tunnels have become longer, the damage caused by fires is also increasing rapidly. Therefore, robots capable of acting as avatars for fire fighters are attracting increasing research interest. In particular, in a long space such as a tunnel, a fast approach is important, and a high-speed flying robot can be a good solution. However, in the simulated fire experiment using this system, we found that the usefulness of a robot depends heavily on the ability to control the platform. In other words, since no fully autonomous platform exists so far, pilot intervention within a certain section is essential. Although a few autonomous algorithms make partial obstacle avoidance possible, precise approach and rotation depend on the pilot’s personal capability and experience. Moreover, if a robot depends on camera images in a dark tunnel, it has a high probability of colliding with the surrounding vehicles or walls. Of course, the durability of robots can be improved by developing a platform that is robust against collision; however, a collision with a moving vehicle or with a victim can cause more serious damage. Therefore, a trained professional pilot is needed when a robot is operated manually, and it is necessary to confirm the entire navigation path and the presence of obstacles around the robot. To mitigate these risks, various safety techniques, including attitude control, have been studied. Another problem is the limited battery capacity of the robot. Currently, most robots have only about 20 min of operation time, and the charging time is relatively long. Therefore, if a robot is to be used over a long period of time, the battery operating time must be considered in advance. In a tunnel, the battery power consumption may increase due to wind and smoke resistance, so it is also necessary to confirm the environmental characteristics in advance.

Interaction with firefighters’ operations. The authors of [48,49] emphasized that fire fighters must arrive at the site of a fire within 10 min and perform rescue and fire suppression in order to complete the fire fighting activities successfully, because a vehicle fire usually reaches its peak within 5 to 10 min. If it is difficult to check the inside of a tunnel after arrival, it is recommended to use various monitoring devices and reconnaissance equipment. Although the CCTV, IoT sensors, radar devices, and robots proposed in this study are effective for initial information collection, additional fire activity information can also be collected and provided during the fire suppression process. For example, when a large number of vehicles are involved in an accident with multiple collisions, and the flames spread to nearby vehicles due to explosions and wind, it is important to collect additional information, such as the direction of fire diffusion, the risk of further explosions, and the locations of victims, during the fire suppression procedure. Therefore, if smoke is spreading due to additional fire, or if dangerous materials make it difficult to access the field, it is necessary to periodically share and confirm the collected information in the control center via the Internet or wireless networks. In addition, a robot can be another solution for scouting or delivering relief items to spaces that fire fighters cannot easily approach because of vehicle congestion. However, if a fire has already reached its peak and the equipment cannot easily gain access, the fire brigade should use defensive tactics to prevent the fire from spreading and then perform aggressive search and rescue activities after the fire has been suppressed to some extent. In other words, the proposed system should be combined appropriately with search and rescue activities before and after fire suppression rather than with direct fire suppression alone.

Table 5 summarizes the overall operation scenario of how the proposed system can collaborate with the conventional responses of the fire brigade and tunnel supervisors. The proposed system is categorized into three responses. The first response is the detection of the accident through the pre-installed IoT sensors, the second response is the acquisition of images through the smoke by the robot, and the third response is the position tracking of the firefighters. Since there are three ways to cope with a fire, even if one response fails, search and rescue can still be completed with the remaining responses.

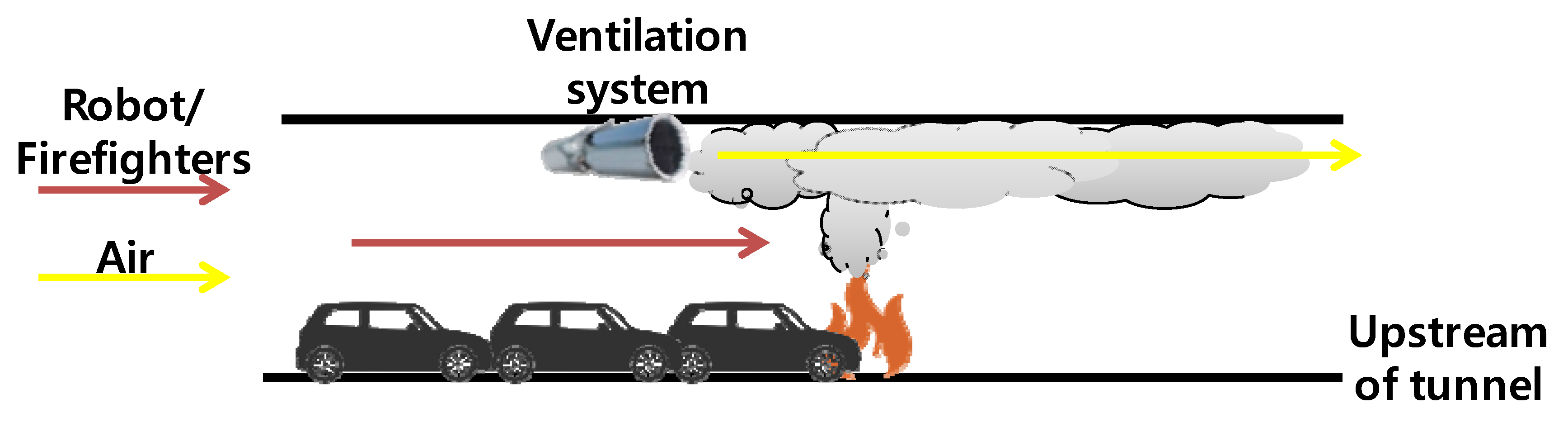

Cooperation with tunnel facilities. In the event of a fire, CCTV, radar, and IoT sensors first detect and report the danger, but the related facilities for further response are operated through the disaster prevention equipment built into the tunnel. Typically, these include ventilation fans that remove smoke, radio equipment that broadcasts emergency announcements, and barriers that prevent additional vehicles from entering the tunnel. In particular, the ventilation fans must discharge smoke toward the exit on the opposite side from the entrance where the fire brigade enters. However, it is important to keep the speed of the ventilation fans below the critical speed at which the fire can spread.

Figure 20 illustrates the concept of the operation of a ventilation system and the entrance direction for fire fighters.

Future investigations. In this system, we propose a countermeasure based on three technologies, IoT, robots, and localization, in case of a fire in a motorway tunnel. However, due to the nature of fire, the rate of temperature increase, the direction of smoke diffusion, and the evacuation routes may vary depending on the type and structure of the tunnel. For example, the width, height, slope, and degree of curvature of a road can directly affect the fire, and the effectiveness of fire facilities, including ventilation fans, is also significantly affected after a fire. In addition, when the proposed equipment uses wireless communication, unexpected signal distortion, attenuation, interference, disconnection, and so on may occur due to high temperature and internal obstacles. To overcome these problems, it is necessary to provide quick data collection, analysis, and decision-making on the latest fire as well as on-site environmental analysis. Future research will introduce artificial intelligence and big data-based analysis technology to address these uncertainties.

6. Conclusions

Since a tunnel is a type of enclosed space, when a fire occurs it is very difficult to perform search and rescue operations, due to the rapid spread of fire and thick smoke. In particular, searching and evacuation take a long time because the space is long and wide. To cope effectively with such a difficult environment, it is important to promptly detect and recognize the location, causes, and type of accident, and to identify the positions of victims.

In this paper, we proposed a fire and accident detection system based on IoT technology, and also proposed a remote monitoring system to which it is applied. However, since it is still difficult to predict the spread pattern of a fire or disaster, it is necessary to equip firefighters with specialized equipment to check the internal situation directly. For this purpose, we proposed a system for adopting and operating ground reconnaissance robots and aerial reconnaissance robots. These sensors, robots, and fire fighters should be able to communicate with each other, and their positions should also be known in real time. For this, UWB- and Bluetooth-based location tracking technologies that can be operated in tunnels were analyzed, and a navigation technique using these devices was presented. The detection sensors, reconnaissance robots, and positioning technology proposed in this paper can be used alone, but the efficiency is expected to be maximized if the three technologies are used in a complementary manner.

In future work, we plan to study technologies that more actively recognize accidents and respond to them immediately. We will also pursue the development of technologies to ensure the quick and efficient evacuation and safety of casualties and survivors.