Abstract

This study aimed to examine the interrater agreement of scores on the Critical-Care Pain Observation Tool-Neuro (CPOT-Neuro), a newly developed tool for pain assessment in critically ill patients with a brain injury, between raters using two rating methods (bedside versus video) during standard care procedures (i.e., non-invasive blood pressure measurement and turning). The bedside raters were research staff, and the two video raters had different backgrounds (health and non-health disciplines). All raters received a standardized 45 min training session from the principal investigator. Video recordings of 56 patient participants with a brain injury at different levels of consciousness were included. Interrater agreement was supported, with Intraclass Correlation Coefficients (ICCs) ≥ 0.65 for all pairs of raters and for each procedure in the total sample. Interrater agreement was highest during turning in the conscious group, with ICCs ranging from 0.79 to 0.90. Some behaviors (i.e., tearing, face flushing) were difficult to observe on video because of factors such as lighting and camera angle. For the video rater from a non-health discipline, ventilator alarms were also difficult to distinguish from other sources. Following standardized training, video technology was useful in achieving acceptable interrater agreement of CPOT-Neuro scores between bedside and video raters for research purposes.

1. Introduction

Pain assessment in patients with a critical illness and a brain injury is challenging, as many of them may present with an altered level of consciousness (LOC). Although brain tissue itself is insensitive to pain, pain can arise from lesions of the external structures of the brain and from other sources, such as the invasive equipment required for mechanical ventilation [1] and standard care procedures common in the intensive care unit (ICU) [2]. Some standard care procedures may act as noxious stimuli, leading to nociception and pain. Nociception is the neural physiologic process of encoding noxious stimuli, which may trigger autonomic (e.g., increased heart rate) or behavioral (e.g., motor withdrawal reflex) responses [3]. Nociception may also lead to pain, which is a personal experience best described by the person experiencing it. The patient’s self-report is considered the “reference standard” measure of pain and should be accepted and respected [3]. However, in the ICU, patients with a brain injury often cannot self-report their pain verbally because of altered LOC, sedation, or mechanical ventilation, all of which may impair their ability to communicate [4]. This inability to self-report does not negate the possibility that a patient experiences pain [3]. In such situations, nonverbal behaviors provide vital information [3], and behavioral assessment tools are recommended as an alternative method for pain assessment [4,5].

Several behavioral assessment tools currently exist for critically ill adults [6]. The Behavioral Pain Scale (BPS) [7] and the Critical-Care Pain Observation Tool (CPOT) [8] have been widely validated in ICU patients with various diagnoses; however, their content may be less applicable to patients with a brain injury and an altered LOC [4]. Indeed, patients with a critical illness and a brain injury exhibit various behaviors indicative of pain that may differ from those exhibited by medical or surgical ICU patients. For instance, in ICU patients with a brain injury, contraction of the upper face (i.e., brow lowering, orbit tightening) was observed more frequently than contraction of the lower face (i.e., upper lip raising, clenched teeth) or simultaneous contraction of the upper and lower face (grimace) [9,10]. Tearing and face flushing were also newly described in ICU patients with a brain injury [9,10]. Therefore, refinements to these tools are warranted to detect pain more accurately in this vulnerable patient group.

Based on this evidence, the content of the CPOT was adapted to refine the description of behaviors and their scoring and to include other behaviors indicative of pain in patients with a critical illness and a brain injury. The refined scale, called the CPOT-Neuro, was recently validated in a multi-site study [11]. Our findings supported the ability of the CPOT-Neuro to discriminate between standard care procedures involving noxious stimuli (e.g., turning, endotracheal suctioning) and those involving non-noxious stimuli (e.g., soft touch, non-invasive blood pressure [NIBP] measurement) [6], as well as its correlation with self-reported pain intensity. The interrater agreement between two bedside raters (i.e., research staff and ICU nursing staff) was also supported [11]. As part of this research study, patient participants were video-recorded during standard care procedures. In this report, conducted in the context of an undergraduate student project, we aimed to examine the interrater agreement of CPOT-Neuro scores between raters using two rating methods (bedside versus video). The Guidelines for Reporting Reliability and Agreement Studies (GRRAS) were followed [12].

2. Materials and Methods

2.1. Design

A prospective cohort design with repeated measures was used for the larger validation study [11]. This design allowed data to be collected across non-noxious and noxious procedures performed as part of standard care. To achieve the study objective, NIBP measurement and turning were selected as the non-noxious and noxious procedures, respectively, as both are commonly performed in ICU patients with a brain injury. Both procedures were performed by ICU nursing staff as part of standard care on the same day. Video recordings showing both the patient’s face and body were included in this interrater agreement study.

2.2. Sample and Setting

A sample size of more than 33 patients was required to detect a minimal Intraclass Correlation Coefficient (ICC) of 0.60 between two raters, with an alpha of 0.05 and a power of 80% [13]. Video recordings of patients were performed in two university ICUs of large tertiary trauma centers in Montreal with similar bed capacities, admitting, on average, 1000–1500 patients annually. We used a subsample of the larger validation study [11]. Using consecutive sampling, patients were considered eligible if they (1) were 18 years or older; (2) had been admitted to the ICU for a brain injury (trauma-related or not) for less than 4 weeks; and (3) had a Glasgow Coma Scale (GCS) score ≥ 4. Patients were excluded if they had sustained a spinal cord injury affecting the motor activity of all four limbs, had cognitive deficits or psychiatric conditions prior to the brain injury, had been previously diagnosed with epilepsy, were receiving neuromuscular blocking agents, had a score of −5 (unarousable) on the Richmond Agitation Sedation Scale (RASS), or had suspected brain death. This study was approved by the research ethics committee of each participating site (#15-994 at site 1 and #2015-1164 at site 2). Patients capable of consenting, or representatives qualified to consent on behalf of suddenly incapable patients, provided written consent for this research study, and incapable patients who later recovered their ability to consent provided informed written consent.
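The sample size requirement can be checked against the approximation of Walter, Eliasziw and Donner [13] for reliability studies. The sketch below is a hypothetical reconstruction, not the authors' calculation: the source reports only the target ICC of 0.60, so the null value ρ0 = 0.20 and the two-sided alpha are assumptions, chosen because they yield a requirement just above 33 patients. The function name `walter_sample_size` is illustrative.

```python
from math import ceil, log
from statistics import NormalDist


def walter_sample_size(rho0: float, rho1: float, n_raters: int = 2,
                       alpha: float = 0.05, power: float = 0.80,
                       two_sided: bool = True) -> float:
    """Approximate number of subjects k needed to reject H0: ICC = rho0
    when the true ICC is rho1 (Walter, Eliasziw and Donner approximation):

        k = 1 + 2 (z_alpha + z_beta)^2 n / ((ln C0)^2 (n - 1)),
        C0 = (1 + n * rho0 / (1 - rho0)) / (1 + n * rho1 / (1 - rho1)).
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2) if two_sided else z(1 - alpha)
    z_beta = z(power)
    theta0 = rho0 / (1 - rho0)
    theta1 = rho1 / (1 - rho1)
    c0 = (1 + n_raters * theta0) / (1 + n_raters * theta1)
    return 1 + (2 * (z_alpha + z_beta) ** 2 * n_raters) / (
        log(c0) ** 2 * (n_raters - 1)
    )


# Assumed inputs: null ICC 0.20 (not stated in the source), target ICC 0.60.
k = walter_sample_size(rho0=0.20, rho1=0.60)
print(f"k = {k:.1f}, round up to {ceil(k)} patients")  # k = 33.6, round up to 34 patients
```

With a one-sided alpha the same inputs give a smaller requirement, so the choice of null value and sidedness should be confirmed against the original protocol.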

2.3. Procedures

Video recordings were captured for the duration of the procedures at the bedside using two cameras: (a) one for the face view (held by the research staff) and (b) one for the body view (on a tripod at the foot of the bed). As described in the larger study [11], patient participants were assessed for pain using the CPOT-Neuro by the research staff at the bedside. To achieve the objective of this study, patient participants were also assessed for pain with the CPOT-Neuro from their video recordings, which were viewed independently by two video raters (VR): (a) an undergraduate student trainee in neuroscience, newly trained in 2022 (VR1, VN), and (b) a trained research staff member (PhD candidate in nursing) with 7 years of experience using the CPOT and the CPOT-Neuro (VR2, MRL) [11,14]. Interrater agreement between the CPOT-Neuro scores of the two video raters and the bedside raters (research staff) during NIBP measurement and turning was examined. The bedside raters comprised 5 research staff members: an experienced clinical research coordinator, 3 undergraduate nursing trainees, and a graduate nursing trainee (who also served as VR2).

2.4. Instruments

2.4.1. Critical-Care Pain Observation Tool–Neuro

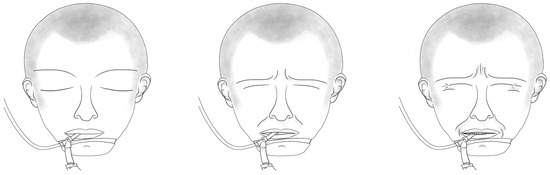

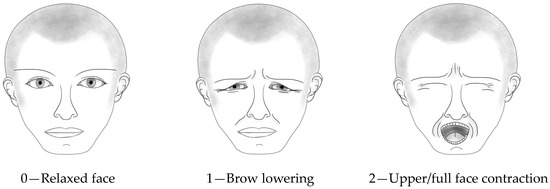

We used the CPOT-Neuro to assess pain, at the bedside and on video, during the non-noxious and noxious procedures. The development of the CPOT-Neuro has been described previously [11]. Briefly, the tool includes five behavioral items: (a) facial expression, (b) body movements, (c) ventilator compliance or vocalization (depending on whether the patient is mechanically ventilated), (d) muscle tension, and (e) autonomic responses (tearing and/or face flushing). Facial expression (inspired by key facial responses to pain [15,16]), body movements, and ventilator compliance/vocalization are each rated from 0 to 2 (see Figure 1 for facial expression), while muscle tension and autonomic responses are rated as absent (0) or present (1). Muscle tension is evaluated by performing passive flexion and extension of the patient’s arm to feel any resistance to movement. The total score can therefore range from 0 to 8, similar to the scoring of the original CPOT [8]. The validity of its use in ICU patients with a brain injury was supported by higher CPOT-Neuro scores during noxious than during non-noxious procedures and by a moderate positive correlation (Spearman rho = 0.63) with self-reported pain intensity during turning [11]. The interrater agreement of CPOT-Neuro scores obtained during turning at the bedside between research staff and ICU nursing staff was supported, with an ICC of 0.76 (95% confidence interval (CI): 0.68–0.82) [11].
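The scoring rule described above (three items scored 0 to 2, two items scored 0 or 1, total 0 to 8) can be sketched as a small validation-and-sum routine. The class and field names are hypothetical and chosen only for illustration; the sketch does not encode the behavioral descriptors that determine each item score.

```python
from dataclasses import asdict, dataclass

# Maximum score per item, following the item ranges described in the text.
MAX_ITEM_SCORES = {
    "facial_expression": 2,
    "body_movements": 2,
    "ventilator_or_vocalization": 2,
    "muscle_tension": 1,
    "autonomic_responses": 1,
}


@dataclass
class CpotNeuroObservation:
    """Hypothetical record of one CPOT-Neuro rating (field names are illustrative)."""
    facial_expression: int           # 0 to 2 (see Figure 1)
    body_movements: int              # 0 to 2
    ventilator_or_vocalization: int  # 0 to 2: ventilator compliance if ventilated, else vocalization
    muscle_tension: int              # 0 = absent, 1 = present
    autonomic_responses: int         # 0 = absent, 1 = tearing and/or face flushing present

    def total(self) -> int:
        """Check each item against its allowed range, then return the 0 to 8 total."""
        items = asdict(self)
        for name, max_score in MAX_ITEM_SCORES.items():
            if not 0 <= items[name] <= max_score:
                raise ValueError(f"{name} must be 0..{max_score}, got {items[name]}")
        return sum(items.values())


print(CpotNeuroObservation(1, 2, 1, 1, 0).total())  # 5
```

Validating each item before summing keeps an out-of-range entry (for example, a muscle tension score of 2) from silently inflating the total.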

Figure 1.

Facial expression scoring in CPOT-Neuro. © Céline Gélinas.

Bedside raters and the two video raters (VR1 and VR2) were trained by the principal investigator and author of the CPOT-Neuro (CG). This 45 min training has been described previously [14]. Briefly, it covered the description of the tool’s items and scoring, practice scoring with patient videos, and a competence test with three selected patient videos. To pass the competence test, a rater’s CPOT-Neuro scores had to be exact or differ by no more than one point.
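The pass criterion can be expressed as a simple tolerance check. This is a hypothetical sketch: the text does not say what the rater's scores were compared against, so it assumes one reference total score per test video and requires every video to be within one point.

```python
def passes_competence_test(rater_scores: list[int], reference_scores: list[int],
                           tolerance: int = 1) -> bool:
    """Return True if every video score is exact or within `tolerance` points.

    Assumption: each rater total is compared with a reference total for the
    same video, and all test videos must fall within the tolerance.
    """
    if len(rater_scores) != len(reference_scores):
        raise ValueError("one rater score is needed per reference video")
    return all(abs(score - ref) <= tolerance
               for score, ref in zip(rater_scores, reference_scores))


# Three hypothetical test videos with totals on the 0 to 8 scale.
print(passes_competence_test([3, 5, 1], [3, 6, 1]))  # True: largest difference is 1
print(passes_competence_test([3, 7, 1], [3, 5, 1]))  # False: second video differs by 2
```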

2.4.2. Sociodemographic and Clinical Variables

Sociodemographic data, including age, sex, and ethnicity, were collected from each participant. Clinical data, including diagnosis, severity of illness (Acute Physiology and Chronic Health Evaluation II [APACHE II]) [17], and level of consciousness (GCS score), were collected from the patient’s medical chart. The GCS was used to evaluate the patient’s LOC, which was classified into three categories: (a) unconscious (GCS ≤ 8), (b) altered LOC (GCS = 9–12), and (c) conscious (GCS = 13–15) [18].
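The LOC grouping above is a direct mapping from the GCS total. A minimal sketch follows; the function name is illustrative, and the accepted range of 3 to 15 is the range of the GCS itself (the study additionally required GCS ≥ 4 for eligibility).

```python
def loc_category(gcs: int) -> str:
    """Map a Glasgow Coma Scale total (3 to 15) to the study's three LOC groups."""
    if not 3 <= gcs <= 15:
        raise ValueError(f"GCS total must be between 3 and 15, got {gcs}")
    if gcs <= 8:
        return "unconscious"
    if gcs <= 12:
        return "altered LOC"
    return "conscious"


print([loc_category(g) for g in (4, 8, 9, 12, 13, 15)])
# ['unconscious', 'unconscious', 'altered LOC', 'altered LOC', 'conscious', 'conscious']
```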

2.5. Data Analysis

SPSS software (version 24.0) was used for data analysis. Descriptive statistics (i.e., frequencies, medians, and interquartile ranges (IQRs)) were calculated for CPOT-Neuro scores and for sociodemographic and clinical data. The ICC (two-way mixed model) was used to examine interrater agreement between the CPOT-Neuro scores of the bedside and video raters. An ICC > 0.60 was required to represent acceptable interrater agreement for research purposes [12].
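For readers who want to reproduce the coefficient outside SPSS, the single-measures ICC from a two-way ANOVA can be sketched as below. SPSS's two-way mixed ICC can be requested with either a consistency or an absolute-agreement definition, and the text does not say which was used, so the sketch returns both; the single-measures form, complete data, and the function name are assumptions, and no confidence intervals are computed. The usage example is the classic 6-subject, 4-judge dataset from Shrout and Fleiss (1979), which is not study data.

```python
from statistics import fmean


def icc_two_way_single(scores: list[list[float]]) -> dict[str, float]:
    """Single-measures ICCs from a complete n-subjects x k-raters table.

    scores[i][j] is the score given to subject i by rater j.
    Returns the consistency form, ICC(3,1), and the absolute-agreement form,
    ICC(A,1), both computed from two-way ANOVA mean squares.
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete n x k table with n >= 2 and k >= 2")

    grand = fmean(x for row in scores for x in row)
    row_means = [fmean(row) for row in scores]                           # per subject
    col_means = [fmean(scores[i][j] for i in range(n)) for j in range(k)]  # per rater

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    consistency = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    # Absolute agreement also penalizes systematic differences between raters.
    agreement = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    return {"consistency": consistency, "absolute_agreement": agreement}


# Classic 6-subject x 4-judge example from Shrout and Fleiss (1979).
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print({name: round(v, 2) for name, v in icc_two_way_single(ratings).items()})
# {'consistency': 0.71, 'absolute_agreement': 0.29}
```

The gap between the two values in this example shows why the definition matters: when one rater scores systematically higher or lower than another, consistency can remain high while absolute agreement drops.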

3. Results

3.1. Sample Description

In total, videos of 56 patient participants (31 conscious, 17 with altered LOC, and 8 unconscious) were assessed using the CPOT-Neuro. Participants were middle-aged, and most were Caucasian males admitted to the ICU for a traumatic brain injury (TBI). Patients with an altered LOC or who were unconscious (45% of the sample) were unable to self-report their pain, and most of them were mechanically ventilated. Mean APACHE II scores tended to increase as the level of consciousness decreased, reflecting greater illness severity. The patient sample is described in Table 1.

Table 1.

Sample description of patient participants.

3.2. CPOT-Neuro Scores of the Bedside and Video Raters

CPOT-Neuro scores were low during NIBP measurement and higher during turning. Based on the descriptive statistics of CPOT-Neuro scores (Table 2), scores appeared to differ somewhat between raters, especially during turning. VR1 gave the lowest CPOT-Neuro scores during turning in unconscious patients.

Table 2.

CPOT-Neuro scores (median and IQR) of the bedside and video raters for each procedure.

3.3. Interrater Agreement of CPOT-Neuro Scores between Pairs of Bedside and Video Raters

The interrater agreement coefficients of CPOT-Neuro scores between pairs of bedside and video raters for each procedure are presented in Table 3. ICCs for the pair of bedside and VR2 raters were calculated on a smaller sample (n = 35) because VR2 had also served as the bedside rater for 21 patient participants. In the total sample (n = 56), the ICCs for all three pairs of raters were ≥ 0.65. ICCs were also acceptable within each LOC group, except for the pair of bedside and VR1 raters during NIBP measurement in the altered LOC group, which had a low ICC of 0.44. Of note, the ICC could not be obtained for the pair of bedside and VR2 raters in the unconscious group because of the very small sample (n = 3). Interrater agreement was highest for all pairs of raters during turning in the conscious group, with ICCs ranging from 0.79 to 0.90.

Table 3.

Intraclass Correlation Coefficient (95% confidence interval) of CPOT-Neuro scores between pairs of bedside and video raters for each procedure.

4. Discussion

This is the first study to report on the interrater agreement of the CPOT-Neuro using video recordings. Overall, interrater agreement between pairs of bedside and video raters was supported, with ICCs > 0.60, which is appropriate for research purposes [12], particularly given that two different rating methods were used (bedside versus video). ICCs were higher during turning (0.74–0.80) than during NIBP measurement (0.65–0.69). A similar ICC (0.76) was reported during turning between bedside raters (research staff and ICU nursing staff) in the larger validation study [11]. Interestingly, ICCs tended to be higher in the conscious group than in the altered LOC and unconscious groups. This may be because conscious ICU patients with a brain injury are more likely to exhibit common pain behaviors such as a grimace, whereas those who are unconscious or have an altered LOC express a variety of subtle behaviors that may be indicative of pain [1,9,10].

The use of video technology in behavioral observation is a key method for collecting research data [19]. Video rating offers several advantages over bedside rating. With video recordings, raters can replay and review observational data as many times as needed, allowing a level of observation and analysis that may not be attainable at the bedside. However, video recordings may lack the real-time contextual information that bedside observation provides [19,20,21].

Some technical challenges were identified with the use of video technology. Video recordings may not capture all of the patient’s facial expressions and movements, and nursing staff in the room often accidentally obstructed the camera’s view. The lighting in the room and the angle of the camera made it difficult for the video raters to recognize tearing and face flushing in some patients. Another challenge of video rating was evaluating muscle tension while watching the bedside rater perform passive flexion and extension of the patient’s arm. This item is easier to assess in real time at the bedside, where the rater can feel the muscle resistance. Conversely, because video raters could replay the recordings as many times as needed, they may have identified behaviors that were missed during real-time observation at the bedside, which could contribute to discrepancies between bedside and video scores.

The training appeared adequate to achieve good interrater agreement between video raters with different backgrounds. Our results therefore support that this type of training can be provided to people with and without a health-related background, which is consistent with other pain studies in individuals in rehabilitation or with dementia [16,20]. Scoring practice with patient videos and discussion of behavioral observations are important aspects of the training. Notably, VR1 (from a non-health discipline) found it more challenging to distinguish ventilator alarms from other sources in the video recordings. Training in the use of the CPOT-Neuro is essential for proper pain assessment in this vulnerable population, for both research and clinical purposes. The CPOT-Neuro could be further validated in acute or rehabilitation contexts of care.

Limitations

The sample was small, limiting the estimation of interrater agreement within LOC groups, especially the altered LOC and unconscious groups. Patients with non-traumatic brain injury were also underrepresented. The bedside and video raters were not blinded to the procedures and may therefore have been more attentive to behaviors during turning, a procedure known to be painful. The ICC could not be obtained for the pair of bedside and VR2 raters in the unconscious group because of the very small sample size. ICCs were good for research purposes but modest for clinical purposes [12].

5. Conclusions

The interrater agreement of CPOT-Neuro scores between bedside and video raters was supported for research purposes, with ICCs ranging from 0.65 to 0.80 in this sample of 56 ICU patients with a brain injury. Training strategies should include patient video scoring and a discussion of behavioral observations. Video technology was also useful for examining interrater agreement; in future studies where interrater agreement is low, it could also be used to assess intrarater agreement and thereby locate the source of unreliability (i.e., between and/or within raters) [22].

Author Contributions

Conceptualization, C.G.; methodology, C.G.; formal analysis, C.G. and V.N.; investigation, C.G., V.N. and M.R.-L.; resources, C.G. and M.R.-L.; data curation, C.G., V.N. and M.R.-L.; writing—original draft preparation, C.G., V.N. and M.R.-L.; writing—review and editing, C.G., V.N. and M.R.-L.; visualization, C.G. and V.N.; supervision, C.G.; project administration, C.G.; funding acquisition, C.G. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by funding from the Canadian Institutes of Health Research (CIHR Funding #119486).

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Research Ethics Board of McGill University Health Center (protocol code MUHC-15-994 approved 19 June 2015) and Research Ethics Committee Centre intégré universitaire de santé et de services sociaux du Nord-de-l’Île-de-Montréal (protocol code 2015-1164 approved 2 July 2015).

Informed Consent Statement

Patients capable of consenting or representatives qualified to consent for suddenly incapable patients provided written consent for this research study, and incapable patients who later recovered their ability to consent provided informed written consent.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to ethical guidelines.

Public Involvement Statement

There was no public involvement in any aspect of this research.

Guidelines and Standards Statement

This manuscript was drafted following the Guidelines for Reporting Reliability and Agreement Studies (GRRAS).

Acknowledgments

The authors would like to express their gratitude to all ICU nurses, patients and their representatives who took part in this research study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Roulin, M.J.; Ramelet, A.S. Generating and selecting pain indicators for brain-injured critical care patients. Pain Manag. Nurs. 2015, 16, 221–232.

- Puntillo, K.A.; Max, A.; Timsit, J.F.; Vignoud, L.; Chanques, G.; Robleda, G.; Roche-Campo, F.; Mancebo, J.; Divatia, J.V.; Soares, M.; et al. Determinants of procedural pain intensity in the intensive care unit. The Europain® study. Am. J. Respir. Crit. Care Med. 2014, 189, 39–47.

- Raja, S.N.; Carr, D.B.; Cohen, M.; Finnerup, N.B.; Flor, H.; Gibson, S.; Keefe, F.J.; Mogil, J.S.; Ringkamp, M.; Sluka, K.A.; et al. The revised International Association for the Study of Pain definition of pain: Concepts, challenges, and compromises. Pain 2020, 161, 1976–1982.

- Devlin, J.W.; Skrobik, Y.; Gélinas, C.; Needham, D.M.; Slooter, A.J.C.; Pandharipande, P.P.; Watson, P.L.; Weinhouse, G.L.; Nunnally, M.E.; Rochwerg, B.; et al. Clinical practice guidelines for the prevention and management of pain, agitation/sedation, delirium, immobility, and sleep disruption in adult patients in the ICU. Crit. Care Med. 2018, 46, e825–e873.

- Herr, K.; Coyne, P.J.; Ely, E.; Gélinas, C.; Manworren, R.C.B. Pain assessment in the patient unable to self-report: Clinical practice recommendations in support of the ASPMN 2019 position statement. Pain Manag. Nurs. 2019, 20, 404–417.

- Gélinas, C.; Joffe, A.M.; Szumita, P.M.; Payen, J.F.; Bérubé, M.; Shahiri, T.S.; Boitor, M.; Chanques, G.; Puntillo, K. A psychometric analysis update of behavioral pain assessment tools for noncommunicative, critically ill adults. AACN Adv. Crit. Care 2019, 30, 365–387.

- Payen, J.F.; Bru, O.; Bosson, J.L.; Lagrasta, A.; Novel, E.; Deschaux, I.; Lavagne, P.; Jacquot, C. Assessing pain in critically ill sedated patients by using a behavioral pain scale. Crit. Care Med. 2001, 29, 2258–2263.

- Gélinas, C.; Fillion, L.; Puntillo, K.A.; Viens, C.; Fortier, M. Validation of the Critical-Care Pain Observation Tool in adult patients. Am. J. Crit. Care 2006, 15, 420–427.

- Gélinas, C.; Boitor, M.; Puntillo, K.A.; Arbour, C.; Topolovec-Vranic, J.; Cusimano, M.D.; Choiniere, M.; Streiner, D.L. Behaviors indicative of pain in brain-injured adult patients with different levels of consciousness in the intensive care unit. J. Pain Symptom Manag. 2019, 57, 761–773.

- Roulin, M.J.; Ramelet, A.S. Behavioral changes in brain-injured critical care adults with different levels of consciousness during nociceptive stimulation: An observational study. Intensive Care Med. 2014, 40, 1115–1123.

- Gélinas, C.; Bérubé, M.; Puntillo, K.A.; Boitor, M.; Richard-Lalonde, M.; Bernard, F.; Williams, V.; Joffe, A.M.; Steiner, C.; Marsh, R.; et al. Validation of the Critical-Care Pain Observation Tool-Neuro in brain-injured adults in the intensive care unit: A prospective cohort study. Crit. Care 2021, 25, 142.

- Kottner, J.; Audige, L.; Brorson, S.; Donner, A.; Gajewski, B.J.; Hrobjartsson, A.; Roberts, C.; Shoukri, M.; Streiner, D.L. Guidelines for Reporting Reliability and Agreement Studies (GRRAS) were proposed. Int. J. Nurs. Stud. 2011, 48, 661–671.

- Walter, S.D.; Eliasziw, M.; Donner, A. Sample size and optimal designs for reliability studies. Stat. Med. 1998, 17, 101–110.

- Richard-Lalonde, M.; Bérubé, M.; Williams, V.; Bernard, F.; Tsoller, D.; Gélinas, C. Nurses’ Evaluations of the feasibility and clinical utility of the use of the Critical-Care Pain Observation Tool-Neuro in critically ill brain-injured patients. Sci. Nurs. Health Pract. 2019, 2, 1–18.

- Prkachin, K.M. The consistency of facial expressions of pain: A comparison across modalities. Pain 1992, 51, 297–306.

- Solomon, P.E.; Prkachin, K.M.; Farewell, V. Enhancing sensitivity to facial expression of pain. Pain 1997, 71, 279–284.

- Knaus, W.A.; Draper, E.A.; Wagner, D.P.; Zimmerman, J.E. APACHE II: A severity of disease classification system. Crit. Care Med. 1985, 13, 818–829.

- Teasdale, G.; Murray, G.; Parker, L.; Jennett, B. Adding up the Glasgow Coma Score. Acta Neurochir. Suppl. 1979, 28, 13–16.

- Haidet, K.K.; Tate, J.; Divirgilio-Thomas, D.; Kolanowski, A.; Happ, M.B. Methods to improve reliability of video-recorded behavioral data. Res. Nurs. Health 2009, 32, 465–474.

- Ammaturo, D.A.; Hadjistavropoulos, T.; Williams, J. Pain in Dementia: Use of Observational Pain Assessment Tools by People Who Are Not Health Professionals. Pain Med. 2017, 18, 1895–1907.

- Kaasa, T.; Wessel, J.; Darrah, J.; Bruera, E. Inter-rater reliability of formally trained and self-trained raters using the Edmonton Functional Assessment Tool. Palliat. Med. 2000, 14, 509–517.

- Streiner, D.L.; Norman, G.R.; Cairney, J. Health Measurement Scales: A Practical Guide to Their Development and Use, 5th ed.; Oxford University Press: New York, NY, USA, 2015; pp. 159–199.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).