The Energy Management Strategies for Fuel Cell Electric Vehicles: An Overview and Future Directions

Abstract

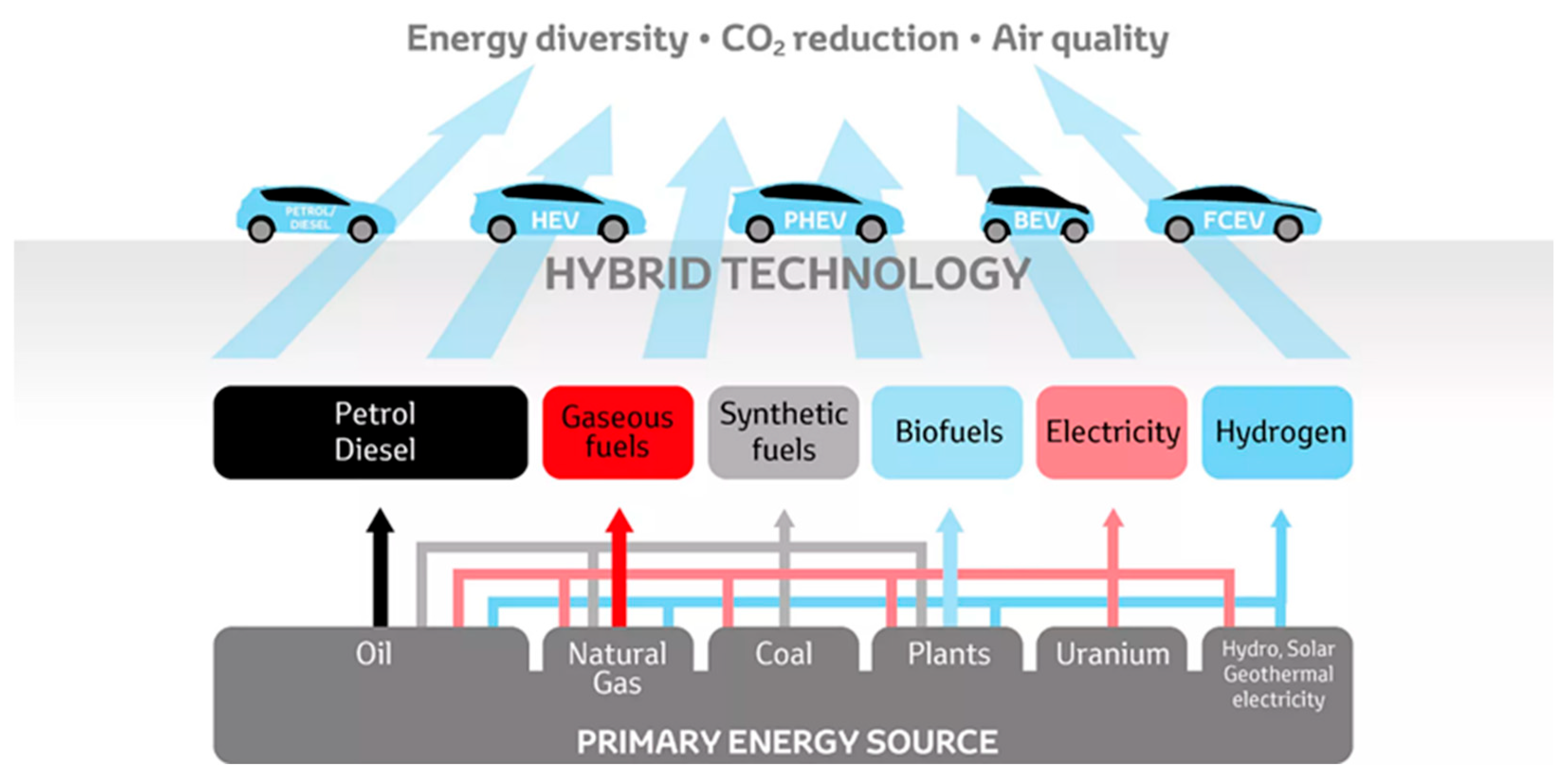

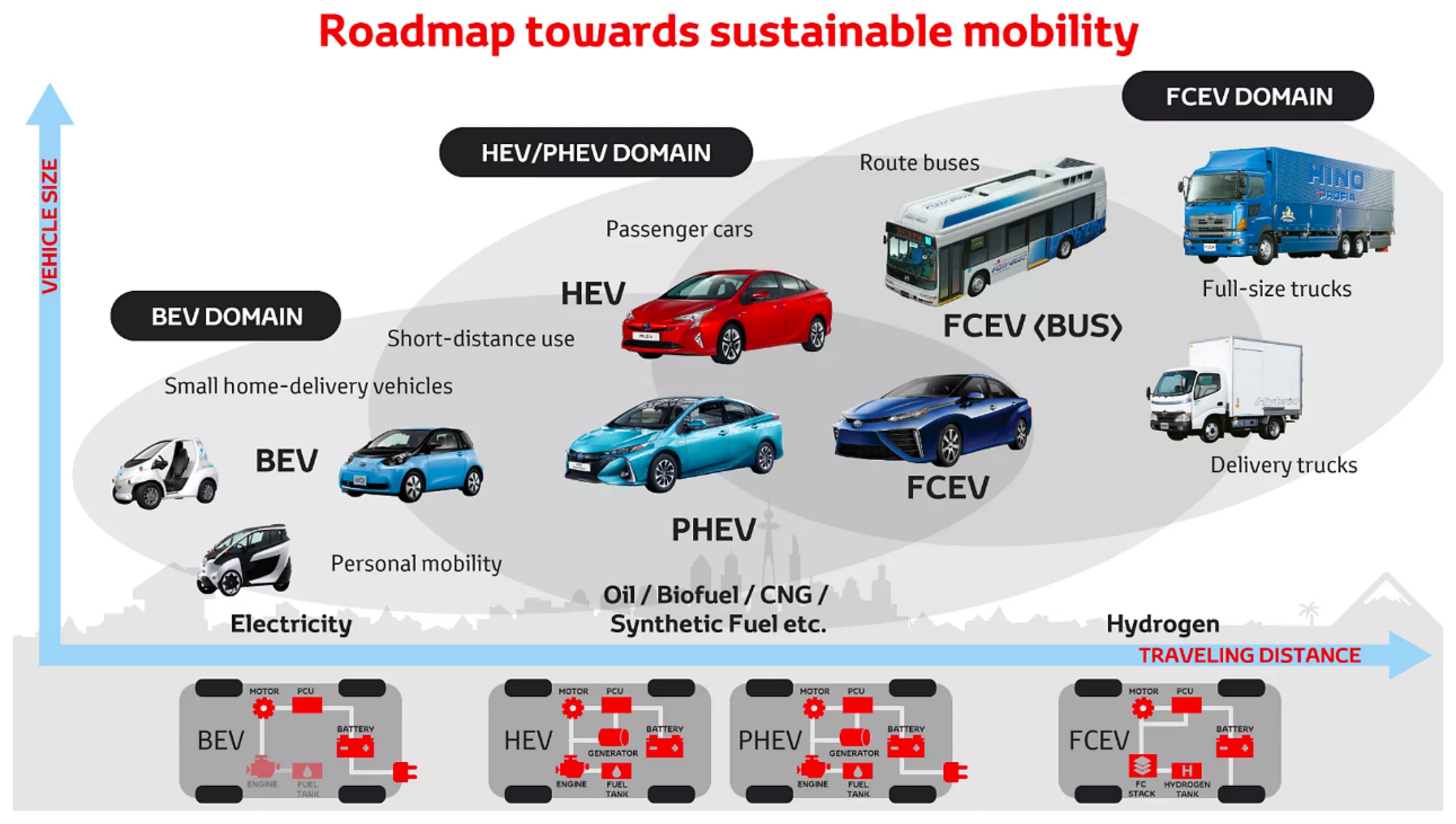

1. Introduction

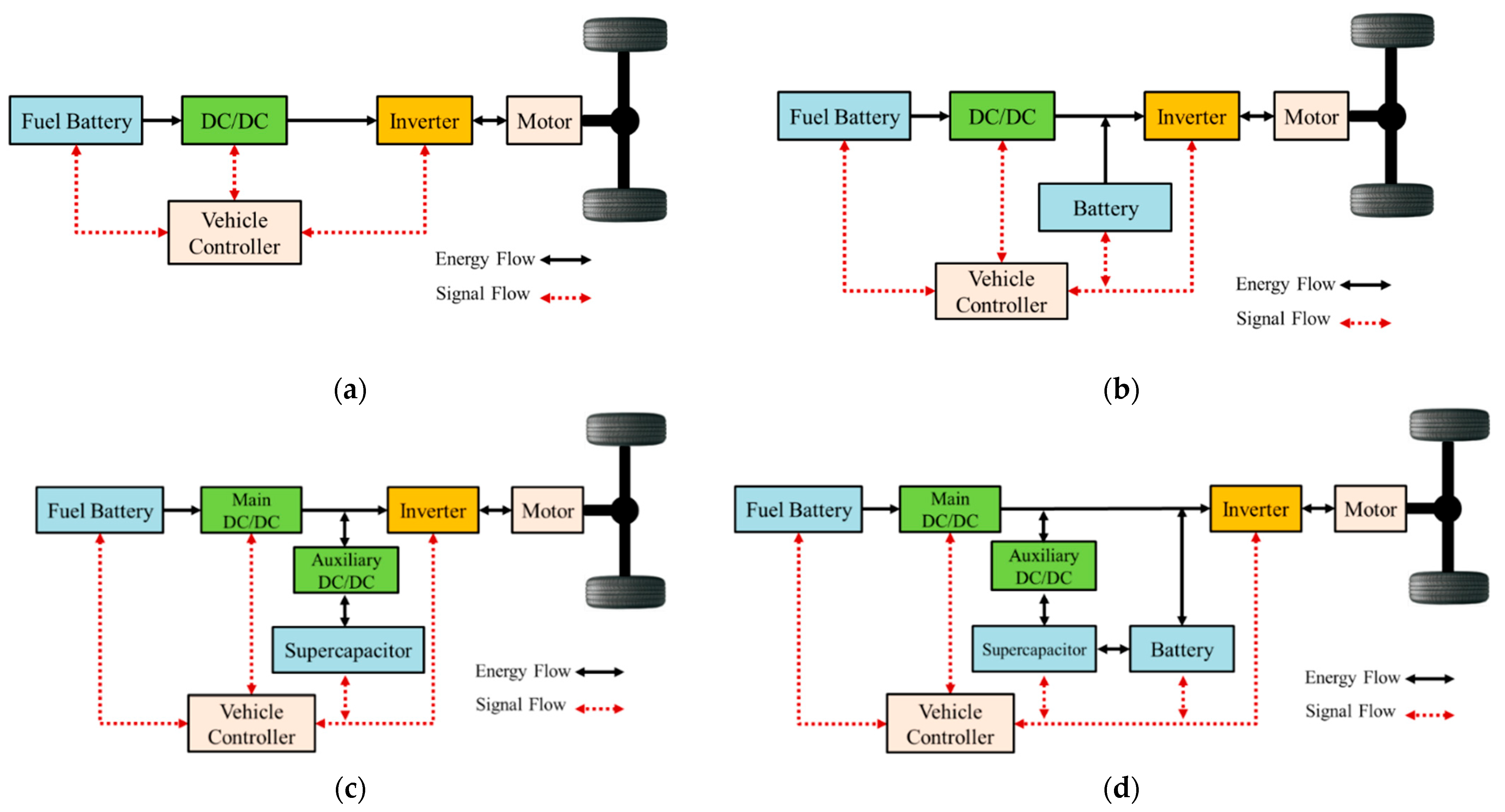

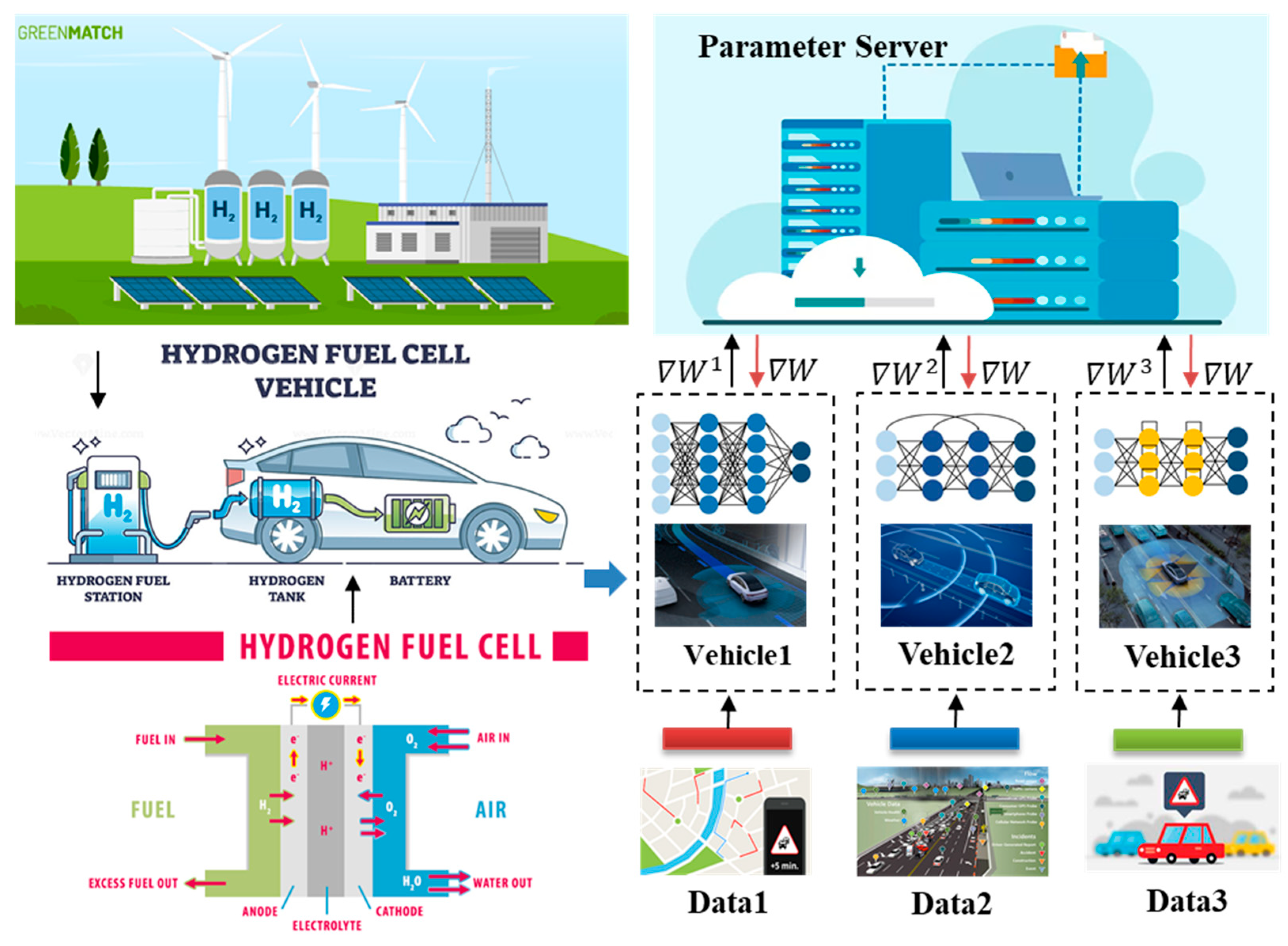

2. The Structural Design of Fuel Cell Hybrid Powertrain System

2.1. The Fuel Cell Standalone Powertrain System

2.2. The Fuel Cell-Battery Hybrid Powertrain System

2.3. The Fuel Cell-Supercapacitor Hybrid Powertrain System

2.4. The Fuel Cell-Battery-Supercapacitor Hybrid Powertrain System

2.5. Comparative Summary of Powertrain Architectures

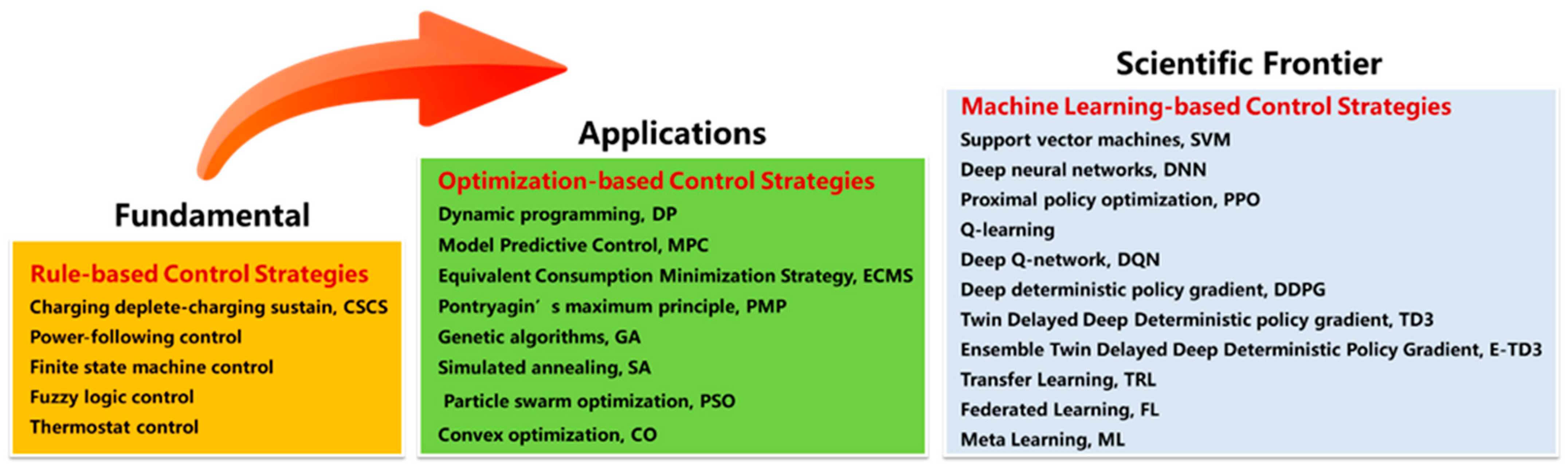

3. Research Progress on Energy Management Strategies

3.1. The Rule-Based Energy Management Strategy

3.2. The Optimization-Based Energy Management Strategy

3.2.1. The Global Optimization Control Strategy

3.2.2. The Real-Time Optimization Control Strategy

3.3. The Machine Learning-Based Energy Management Strategy

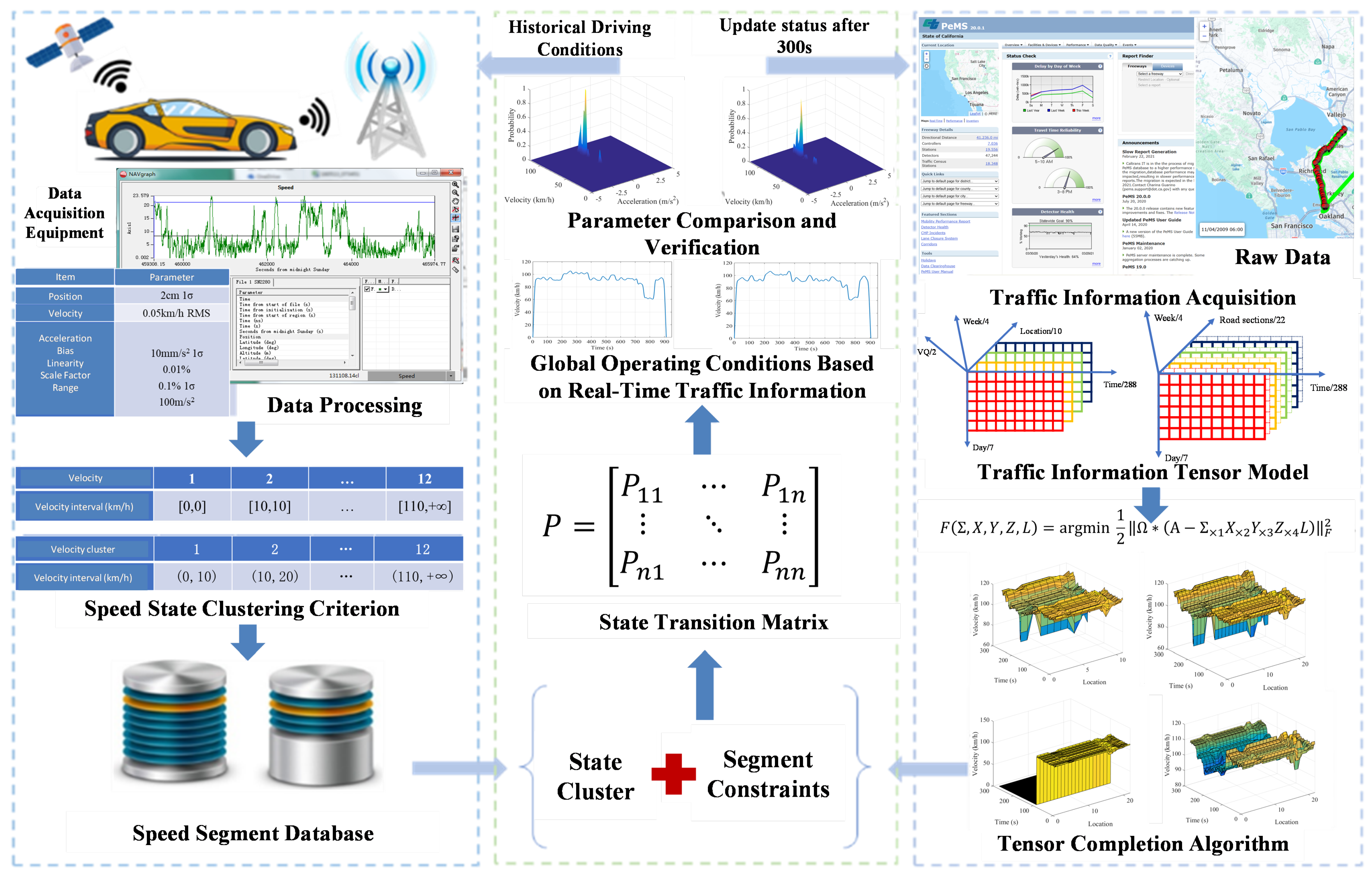

3.3.1. Supervised/Unsupervised Learning-Based Energy Management Strategy

3.3.2. The Reinforcement Learning-Based Energy Management Strategy

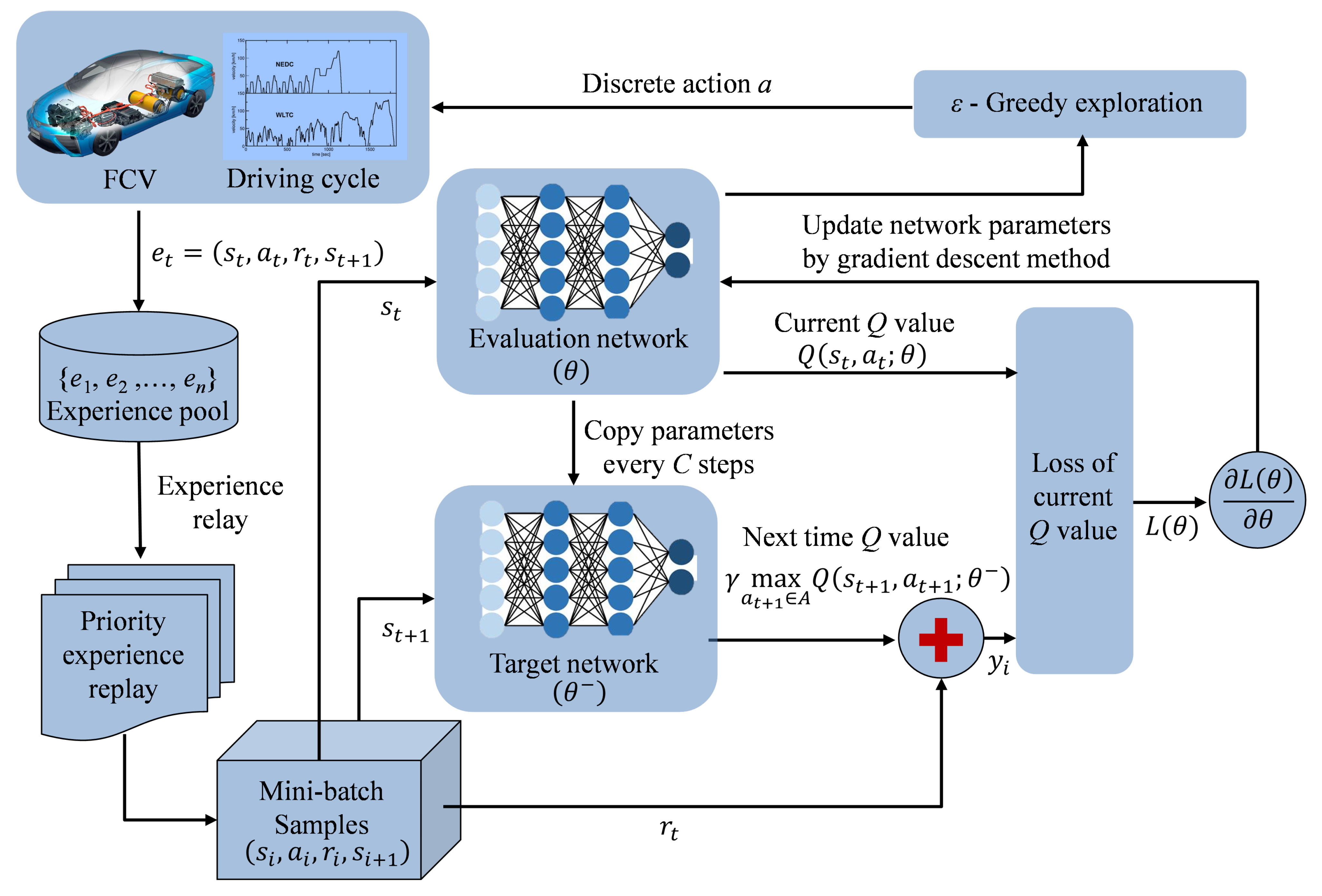

- Value Function-based Method

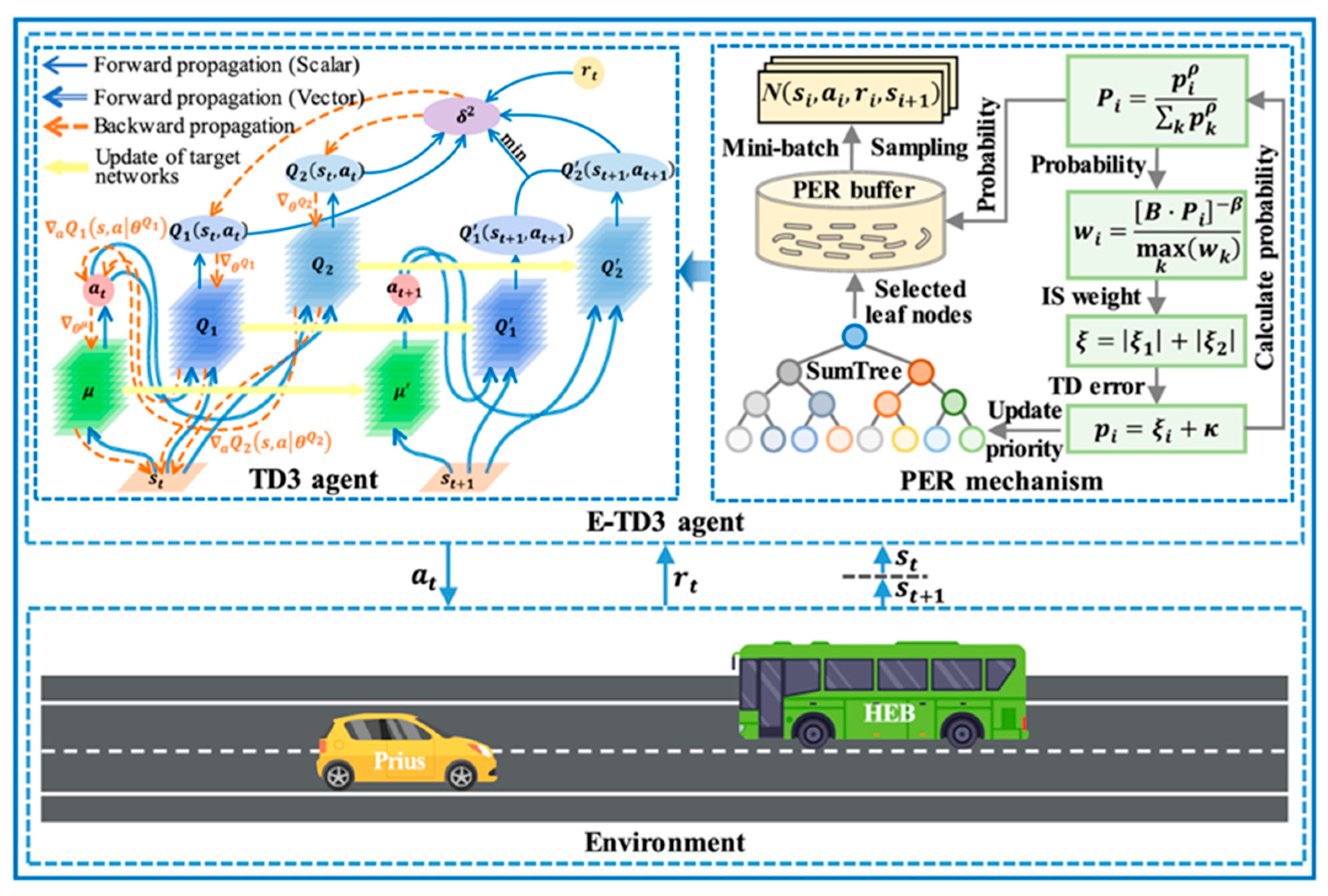

- Deep Deterministic Policy Gradient-based Method

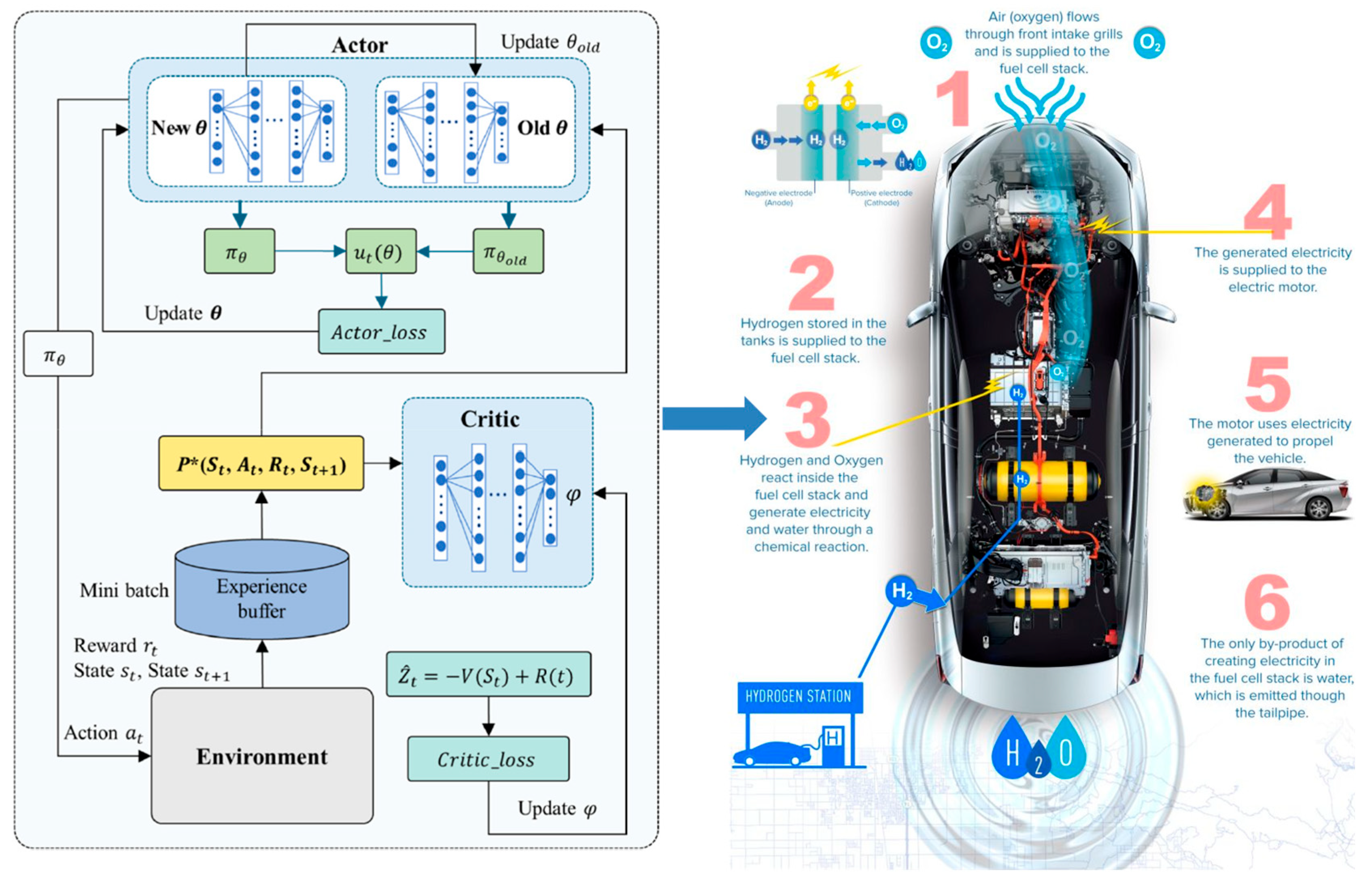

- Proximal Policy Optimization-based Method

- Real-Time Deployability and Embedded Inference Analysis of DRL Methods

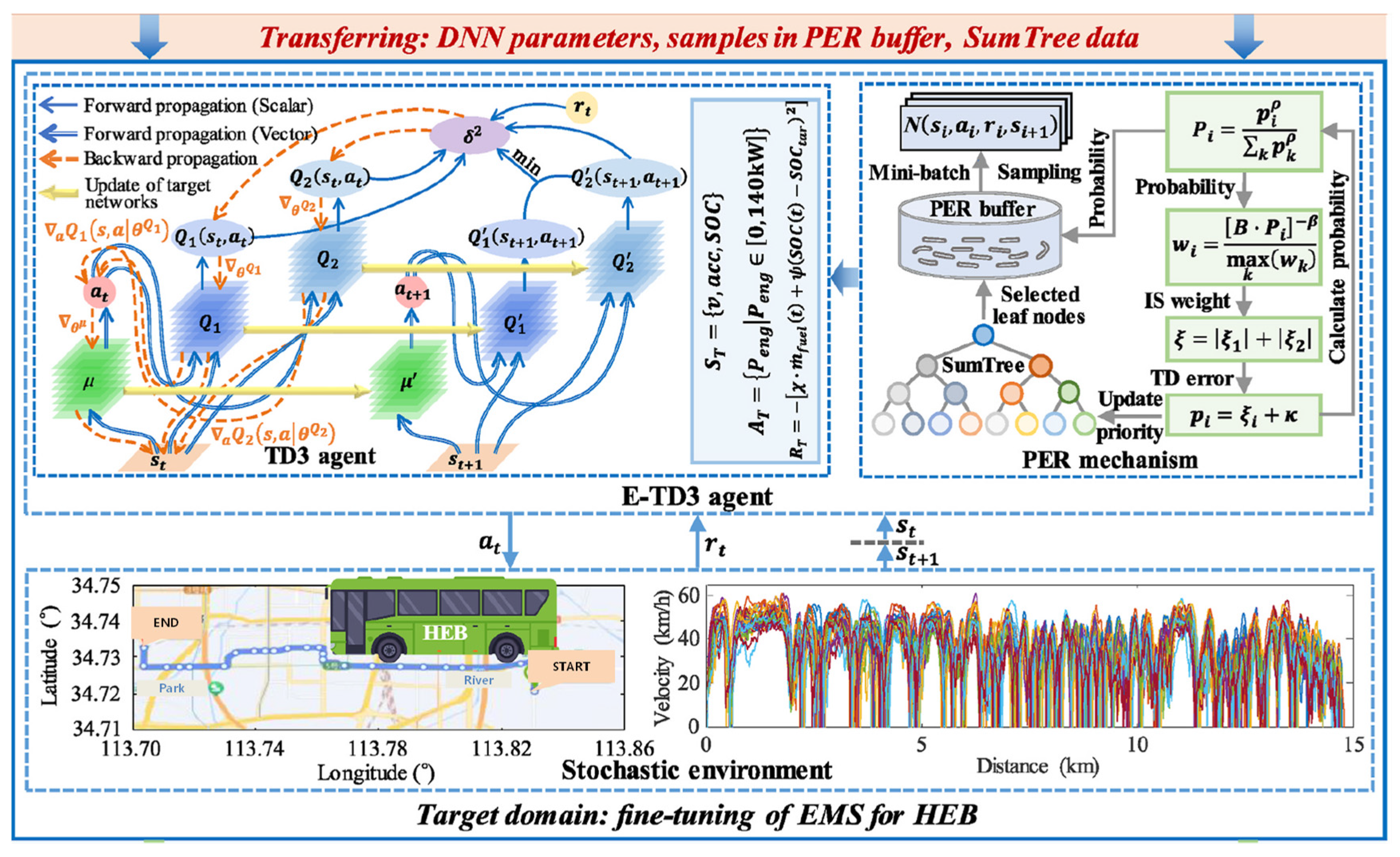

3.3.3. Transfer Learning-Based Energy Management Strategy

3.3.4. Federated Learning-Based Energy Management Strategy

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- The Right Car, in the Right Place, at the Right Time. January 2022. Available online: https://www.toyota.co.uk/discover-toyota/sustainability/sustainable-mobility (accessed on 1 January 2022).

- Nonobe, Y. Development of the fuel cell vehicle Mirai. IEEJ Trans. Electr. Electron. Eng. 2017, 12, 5–9.

- Kojima, K.; Fukazawa, K. Current status and future outlook of fuel cell vehicle development in Toyota. ECS Trans. 2015, 69, 213.

- Manoharan, Y.; Hosseini, S.E.; Butler, B.; Alzhahrani, H.; Senior, B.T.F.; Ashuri, T.; Krohn, J. Hydrogen fuel cell vehicles; current status and future prospect. Appl. Sci. 2019, 9, 2296.

- Shao, Z.; Yi, B. Developing trend and present status of hydrogen energy and fuel cell development. Bull. Chin. Acad. Sci. 2019, 34, 469–477.

- Xiong, S.; Song, Q.; Guo, B.; Zhao, E.; Wu, Z. Research and development of on-board hydrogen-producing fuel cell vehicles. Int. J. Hydrogen Energy 2020, 45, 17844–17857.

- Muthukumar, M.; Rengarajan, N.; Velliyangiri, B.; Omprakas, M.A.; Rohit, C.B.; Raja, U.K. The development of fuel cell electric vehicles—A review. Mater. Today Proc. 2021, 45, 1181–1187.

- Pramuanjaroenkij, A.; Kakaç, S. The fuel cell electric vehicles: The highlight review. Int. J. Hydrogen Energy 2023, 48, 9401–9425.

- He, H.; Meng, X. A Review on Energy Management Technology of Hybrid Electric Vehicles. Trans. Beijing Inst. Technol. 2022, 42, 773–783.

- Wang, Y.; Fan, Y.; Ou, K.; Wei, Z.; Zhang, J. Research Progress on Traffic Information-Integrated Energy Management for Fuel Cell Vehicles. Automot. Eng. 2024, 46, 2314–2328.

- Trencher, G.; Taeihagh, A.; Yarime, M. Overcoming barriers to developing and diffusing fuel-cell vehicles: Governance strategies and experiences in Japan. Energy Policy 2020, 142, 111533.

- Ehsani, M.; Gao, Y.; Longo, S.; Ebrahimi, K. Modern Electric, Hybrid Electric, and Fuel Cell Vehicles; CRC Press: Boca Raton, FL, USA, 2018.

- Ahmadi, S.; Bathaee, S.M.T.; Hosseinpour, A.H. Improving fuel economy and performance of a fuel-cell hybrid electric vehicle (fuel-cell, battery, and ultra-capacitor) using optimized energy management strategy. Energy Convers. Manag. 2018, 160, 74–84.

- Caux, S.; Gaoua, Y.; Lopez, P. A combinatorial optimisation approach to energy management strategy for a hybrid fuel cell vehicle. Energy 2017, 133, 219–230.

- Lü, X.; Wu, Y.; Lian, J.; Zhang, Y.; Chen, C.; Wang, P.; Meng, L. Energy management of hybrid electric vehicles: A review of energy optimization of fuel cell hybrid power system based on genetic algorithm. Energy Convers. Manag. 2020, 205, 112474.

- Zhao, X.; Wang, L.; Zhou, Y.; Pan, B.; Wang, R.; Wang, L.; Yan, X. Energy management strategies for fuel cell hybrid electric vehicles: Classification, comparison, and outlook. Energy Convers. Manag. 2022, 270, 116179.

- Marzougui, H.; Amari, M.; Kadri, A.; Bacha, F.; Ghouili, J. Energy management of fuel cell/battery/ultracapacitor in electrical hybrid vehicle. Int. J. Hydrogen Energy 2017, 42, 8857–8869.

- Gu, H.; Yin, B.; Yu, Y.; Sun, Y. Energy management strategy considering fuel economy and life of fuel cell for fuel cell electric vehicles. J. Energy Eng. 2023, 149, 04022054.

- Min, D.; Song, Z.; Chen, H.; Zhang, T. Genetic algorithm optimized neural network based fuel cell hybrid electric vehicle energy management strategy under start-stop condition. Appl. Energy 2022, 306, 118036.

- Showers, S.O.; Raji, A.K. State-of-the-art review of fuel cell hybrid electric vehicle energy management systems. AIMS Energy 2022, 10, 458–485.

- Munoz, P.M.; Correa, G.; Gaudiano, M.E.; Fernández, D. Energy management control design for fuel cell hybrid electric vehicles using neural networks. Int. J. Hydrogen Energy 2017, 42, 28932–28944.

- Davis, K.; Hayes, J.G. Fuel cell vehicle energy management strategy based on the cost of ownership. IET Electr. Syst. Transp. 2019, 9, 226–236.

- Zhou, D.; Al-Durra, A.; Gao, F.; Ravey, A.; Matraji, I.; Simoes, M.G. Online energy management strategy of fuel cell hybrid electric vehicles based on data fusion approach. J. Power Sources 2017, 366, 278–291.

- Carignano, M.G.; Costa-Castelló, R.; Roda, V.; Nigro, N.M.; Junco, S.; Feroldi, D. Energy management strategy for fuel cell-supercapacitor hybrid vehicles based on prediction of energy demand. J. Power Sources 2017, 360, 419–433.

- Carignano, M.; Roda, V.; Costa-Castelló, R.; Valiño, L.; Lozano, A.; Barreras, F. Assessment of energy management in a fuel cell/battery hybrid vehicle. IEEE Access 2019, 7, 16110–16122.

- Song, K.; Ding, Y.; Hu, X.; Xu, H.; Wang, Y.; Cao, J. Degradation adaptive energy management strategy using fuel cell state-of-health for fuel economy improvement of hybrid electric vehicle. Appl. Energy 2021, 285, 116413.

- Chen, W.; Wang, Y.; Li, B. Overview on current research status and development trends of hydrogen-powered rail transit. Electr. Drive Locomot. 2023, 3, 1–11.

- Teng, T.; Zhang, X.; Dong, H.; Xue, Q. A comprehensive review of energy management optimization strategies for fuel cell passenger vehicle. Int. J. Hydrogen Energy 2020, 45, 20293–20303.

- Aminudin, M.A.; Kamarudin, S.K.; Lim, B.H.; Majilan, E.H.; Masdar, M.S.; Shaari, N. An overview: Current progress on hydrogen fuel cell vehicles. Int. J. Hydrogen Energy 2023, 48, 4371–4388.

- Han, X.; Li, F.; Zhang, T.; Song, K. Economic energy management strategy design and simulation for a dual-stack fuel cell electric vehicle. Int. J. Hydrogen Energy 2017, 42, 11584–11595.

- Shen, D.; Lim, C.C.; Shi, P. Fuzzy model based control for energy management and optimization in fuel cell vehicles. IEEE Trans. Veh. Technol. 2020, 69, 14674–14688.

- Wu, J.; Peng, J.; Li, M.; Wu, Y. Enhancing fuel cell electric vehicle efficiency with TIP-EMS: A trainable integrated predictive energy management approach. Energy Convers. Manag. 2024, 310, 118499.

- Kandidayeni, M.; Trovão, J.P.; Soleymani, M.; Boulon, L. Towards health-aware energy management strategies in fuel cell hybrid electric vehicles: A review. Int. J. Hydrogen Energy 2022, 47, 10021–10043.

- Fu, Z.; Li, Z.; Si, P.; Tao, F. A hierarchical energy management strategy for fuel cell/battery/supercapacitor hybrid electric vehicles. Int. J. Hydrogen Energy 2019, 44, 22146–22159.

- Li, X.; Wang, Y.; Yang, D.; Chen, Z. Adaptive energy management strategy for fuel cell/battery hybrid vehicles using Pontryagin’s Minimal Principle. J. Power Sources 2019, 440, 227105.

- Wang, Z.; Xie, Y.; Sun, W.; Zang, P. Modeling and Energy Management Strategy Research of Fuel Cell Bus. J. Tongji Univ. (Nat. Sci. Ed.) 2019, 47, 97–103.

- Serpi, A.; Porru, M. Modelling and design of real-time energy management systems for fuel cell/battery electric vehicles. Energies 2019, 12, 4260.

- Abdeldjalil, D.; Negrou, B.; Youssef, T.; Samy, M.M. Incorporating the best sizing and a new energy management approach into the fuel cell hybrid electric vehicle design. Energy Environ. 2025, 36, 616–637.

- Ahluwalia, R.K.; Wang, X.; Star, A.G.; Papadias, D.D. Performance and cost of fuel cells for off-road heavy-duty vehicles. Int. J. Hydrogen Energy 2022, 47, 10990–11006.

- Sorlei, I.S.; Bizon, N.; Thounthong, P.; Varlam, M.; Carcadea, E.; Culcer, M.; Raceanu, M. Fuel cell electric vehicles—A brief review of current topologies and energy management strategies. Energies 2021, 14, 252.

- Rezk, H.; Nassef, A.M.; Abdelkareem, M.A.; Alami, A.H.; Fathy, A. Comparison among various energy management strategies for reducing hydrogen consumption in a hybrid fuel cell/supercapacitor/battery system. Int. J. Hydrogen Energy 2021, 46, 6110–6126.

- Zhang, Y.; Zhang, C.; Fan, R.; Deng, C.; Wan, S.; Chaoui, H. Energy management strategy for fuel cell vehicles via soft actor-critic-based deep reinforcement learning considering powertrain thermal and durability characteristics. Energy Convers. Manag. 2023, 283, 116921.

- Liu, Y.; Liu, J.; Qin, D.; Li, G.; Chen, Z.; Zhang, Y. Online energy management strategy of fuel cell hybrid electric vehicles based on rule learning. J. Clean. Prod. 2020, 260, 121017.

- Liu, J.; Ren, F.; Yan, F.; Wu, Y.; Sun, Y.; Hu, D.; Chen, N. Energy Management Strategy for Hydrogen Fuel Cell Vehicle Considering Fuel Cell Stack Lifespan. Chin. J. Automot. Eng. 2023, 13, 517–527.

- Shi, J.; Xie, J.; Zhao, Y. Research on durability control strategy of vehicle fuel cell. Mod. Manuf. Eng. 2021, 8, 56–63.

- Jia, C.; Zhou, J.; He, H.; Li, J.; Wei, Z.; Li, K.; Shi, M. A novel energy management strategy for hybrid electric bus with fuel cell health and battery thermal- and health-constrained awareness. Energy 2023, 271, 127105.

- Yan, M.; Xu, H.; Jin, L.; He, H.; Li, M.; Liu, H. Co-optimization for fuel cell buses integrated with power system and air conditioning via multi-dimensional prediction of driving conditions. Energy Convers. Manag. 2022, 271, 116339.

- Wu, J.; Zhang, Y.; Ruan, J.; Liang, Z.; Liu, K. Rule and optimization combined real-time energy management strategy for minimizing cost of fuel cell hybrid electric vehicles. Energy 2023, 285, 129442.

- Zhou, D.; Ravey, A.; Al-Durra, A.; Gao, F. A comparative study of extremum seeking methods applied to online energy management strategy of fuel cell hybrid electric vehicles. Energy Convers. Manag. 2017, 151, 778–790.

- Chen, B.; Ma, R.; Zhou, Y.; Ma, R.; Jiang, W.; Yang, F. Co-optimization of speed planning and cost-optimal energy management for fuel cell trucks under vehicle-following scenarios. Energy Convers. Manag. 2024, 300, 117914.

- İnci, M.; Büyük, M.; Demir, M.H.; İlbey, G. A review and research on fuel cell electric vehicles: Topologies, power electronic converters, energy management methods, technical challenges, marketing and future aspects. Renew. Sustain. Energy Rev. 2021, 137, 110648.

- Lee, H.; Cha, S.W. Energy management strategy of fuel cell electric vehicles using model-based reinforcement learning with data-driven model update. IEEE Access 2021, 9, 59244–59254.

- Zhou, D.; Al-Durra, A.; Matraji, I.; Ravey, A.; Gao, F. Online energy management strategy of fuel cell hybrid electric vehicles: A fractional-order extremum seeking method. IEEE Trans. Ind. Electron. 2018, 65, 6787–6799.

- Song, K.; Wang, X.; Li, F.; Sorrentino, M.; Zheng, B. Pontryagin’s minimum principle-based real-time energy management strategy for fuel cell hybrid electric vehicle considering both fuel economy and power source durability. Energy 2020, 205, 118064.

- Yuan, J.; Yang, L.; Chen, Q. Intelligent energy management strategy based on hierarchical approximate global optimization for plug-in fuel cell hybrid electric vehicles. Int. J. Hydrogen Energy 2018, 43, 8063–8078.

- Zhao, Z.; Wang, T.; Li, M.; Wang, H.; Wang, Y. Optimization of fuzzy control energy management strategy for fuel cell vehicle power system using a multi-island genetic algorithm. Energy Sci. Eng. 2021, 9, 548–564.

- Vimalraj, C.; Sivaraju, S.S.; Ranganayaki, V.; Elanthirayan, R. Economic analysis and effective energy management of fuel cell and battery integrated electric vehicle. J. Energy Storage 2024, 101, 113719.

- Liu, T.; Huo, W.; Lu, B. Time-convolution based energy management strategy for fuel cell vehicles. J. Chongqing Univ. Technol. (Nat. Sci.) 2024, 38, 93–101.

- Gao, F.; Gao, X.; Zhang, H.; Yang, K.; Song, Z. Management Strategy for Fuel Cell Trams with Both Global and Transient Characteristics. Trans. China Electrotech. Soc. 2023, 38, 5923–5938.

- Togun, H.; Aljibori, H.S.S.; Abed, A.M.; Biswas, N.; Alshamkhani, M.T.; Niyas, H.; Paul, D. A review on recent advances on improving fuel economy and performance of a fuel cell hybrid electric vehicle. Int. J. Hydrogen Energy 2024, 89, 22–47.

- Zhang, H.; Yang, J.; Zhang, J.; Xu, X. Multiple-population Firefly Algorithm-based Energy Management Strategy for Vehicle-mounted Fuel Cell DC Microgrid. Proc. CSEE 2021, 41, 833–846.

- Zhang, R.; Chen, Z.; Liu, S. Adaptive Energy Management Strategy for High Power Hydrogen Fuel Cell Heavy-Duty Truck Based on Low Pass Filter. Automot. Eng. 2021, 43, 1693–1701.

- Li, Q.; Wang, X.; Meng, X.; Zhang, G.; Chen, W. Comprehensive Energy Management Method of PEMFC Hybrid Power System Based on Online Identification and Minimal Principle. Proc. CSEE 2020, 40, 6991–7002.

- Fu, J.; Fu, Z.; Song, S. Best equivalent hydrogen consumption control for fuel cell vehicle based on Markov decision process. Control Theory Appl. 2021, 38, 1219–1228.

- Liu, N.; Yu, B.; Guo, A. Analysis of Power Tracking Management Strategy for Fuel Cell Hybrid System. J. Southwest Jiaotong Univ. 2020, 55, 1147–1154.

- Ji, C.; Li, X.; Liang, C. Simulation of Energy Management for Hybrid Power System of Vehicle Fuel Cell Lithium Ion Power Battery Based on LMS AMESim. J. Beijing Univ. Technol. 2020, 46, 58–67.

- Wang, Y.; Sun, B.; Li, W. Energy Management Strategy of Fuel Cell Electric Vehicles Based on Wavelet Rules. J. Univ. Jinan (Sci. Technol.) 2021, 35, 322–328.

- Lu, D.; Bao, Y. Control Parameter Optimization of Thermostatically Controlled Loads Based on a Genetic Algorithm. J. Electr. Power 2021, 36, 355–362.

- Zeng, F.; Yu, Y.; Bu, J. Research on energy management strategy for military integrated starter generator hybrid vehicle based on finite state machine. Sci. Technol. Eng. 2020, 20, 7472–7483.

- Zhang, F.; Hu, B.; Zhang, H. An Energy Management Strategy for Fuel Cell Incremental Power System. Trans. Beijing Inst. Technol. 2024, 44, 51–59.

- Li, J.; Zhu, Y.; Xu, Y. Research on Control Strategy Optimization in Power Transmission System of Hybrid Electric Vehicle. Automot. Eng. 2016, 38, 10–14.

- Liu, J.; Xiao, P.; Fu, B.; Wang, J.; Zhao, Y.; He, L.; Chen, J. Research on Mode Switching Control Strategy of Parallel Hybrid Electric Vehicle. China J. Highw. Transp. 2020, 33, 42–50.

- Wang, Z.; Xie, Y.; Zang, P. Energy management strategy of fuel cell bus based on Pontryagin’s minimum principle. J. Jilin Univ. (Eng. Technol. Ed.) 2020, 50, 36–43.

- Hong, Z.; Li, Q.; Chen, W. An Energy Management Strategy Based on PMP for the Fuel Cell Hybrid System of Locomotive. Proc. CSEE 2019, 39, 3867–3879.

- Meng, X.; Li, Q.; Chen, W.; Zhang, G. An Energy Management Method Based on Pontryagin Minimum Principle Satisfactory Optimization for Fuel Cell Hybrid Systems. Proc. CSEE 2019, 39, 782–792.

- Zhang, A.; Fang, L.; Hu, H. Energy Management Strategy of Plug-in Fuel Cell Tram Based on Speed Optimization, PSO. Urban Mass Transit 2022, 25, 249–254.

- Wei, X.; Liu, B.; Leng, J. Research on Eco-Driving of Fuel Cell Vehicles via Convex Optimization. Automot. Eng. 2022, 44, 851–858.

- Zhang, Y.; Ma, R.; Zhao, D.; Huangfu, Y.; Liu, W. An online efficiency optimized energy management strategy for fuel cell hybrid electric vehicles. IEEE Trans. Transp. Electrif. 2022, 9, 3203–3217.

- Shen, D.; Lim, C.C.; Shi, P. Robust fuzzy model predictive control for energy management systems in fuel cell vehicles. Control Eng. Pract. 2020, 98, 104364.

- Chatterjee, D.; Biswas, P.K.; Sain, C.; Roy, A.; Ahmad, F.; Rahul, J. Bi-LSTM predictive control-based efficient energy management system for a fuel cell hybrid electric vehicle. Sustain. Energy Grids Netw. 2024, 38, 101348.

- Wei, X.; Sun, C.; Ren, Q.; Zhou, F.; Huo, W.; Sun, F. Application of alternating direction method of multipliers algorithm in energy management of fuel cell vehicles. Int. J. Hydrogen Energy 2021, 46, 25620–25633.

- Zhou, Y.; Li, H.; Ravey, A.; Péra, M.C. An integrated predictive energy management for light-duty range-extended plug-in fuel cell electric vehicle. J. Power Sources 2020, 451, 227780.

- Mazouzi, A.; Hadroug, N.; Alayed, W.; Iratni, A.; Kouzou, A. Comprehensive optimization of fuzzy logic-based energy management system for fuel-cell hybrid electric vehicle using genetic algorithm. Int. J. Hydrogen Energy 2024, 81, 889–905.

- Hosseini, S.M.; Kelouwani, S.; Kandidayeni, M.; Amammou, A.; Soleymani, M. Fuel efficiency through co-optimization of speed planning and energy management in intelligent fuel cell electric vehicles. Int. J. Hydrogen Energy 2025, 126, 9–21.

- Xin, W.; Zheng, W.; Qin, J.; Wei, S.; Ji, C. Energy management of fuel cell vehicles based on model prediction control using radial basis functions. J. Sens. 2021, 2021, 9985063.

- Du, C.; Huang, S.; Jiang, Y.; Wu, D.; Li, Y. Optimization of energy management strategy for fuel cell hybrid electric vehicles based on dynamic programming. Energies 2022, 15, 4325.

- Lee, H.; Cha, S.; Kim, H.; Kim, S. Energy Management Strategy of Hybrid Electric Vehicle Using Stochastic Dynamic Programming; SAE Technical Paper; SAE International: Warrendale, PA, USA, 2015.

- Gao, F.; Zhang, H. Adaptive Instantaneous Equivalent Energy Consumption Optimization of Hydrogen Fuel Cell Hybrid Electric Tram. J. Mech. Eng. 2023, 59, 226–238.

- Nagem, N.A.; Ebeed, M.; Alqahtani, D.; Jurado, F.; Khan, N.H.; Hafez, W.A. Optimal design and three-level stochastic energy management for an interconnected microgrid with hydrogen production and storage for fuel cell electric vehicle refueling stations. Int. J. Hydrogen Energy 2024, 87, 574–587.

- Ghaderi, R.; Kandidayeni, M.; Soleymani, M.; Boulon, L.; Trovão, J.P.F. Online health-conscious energy management strategy for a hybrid multi-stack fuel cell vehicle based on game theory. IEEE Trans. Veh. Technol. 2022, 71, 5704–5714.

- Zhang, Y.; Ma, R.; Zhao, D.; Huangfu, Y.; Liu, W. A novel energy management strategy based on dual reward function Q-learning for fuel cell hybrid electric vehicle. IEEE Trans. Ind. Electron. 2021, 69, 1537–1547.

- Guo, J.; He, H.; Sun, C. ARIMA-based road gradient and vehicle velocity prediction for hybrid electric vehicle energy management. IEEE Trans. Veh. Technol. 2019, 68, 5309–5320.

- Zhou, Y.; Ravey, A.; Péra, M. Multi-mode predictive energy management for fuel cell hybrid electric vehicles using Markov driving pattern recognizer. Appl. Energy 2020, 258, 114057.

- Lin, X.Y.; Zhang, G.J.; Wei, S.S. Velocity prediction using Markov Chain combined with driving pattern recognition and applied to Dual-Motor Electric Vehicle energy consumption evaluation. Appl. Soft Comput. 2021, 101, 106998.

- Zhang, X.; Chen, Z.; Wang, W.; Fang, X. Prediction Method of PHEV Driving Energy Consumption Based on the Optimized CNN Bi-LSTM Attention Network. Energies 2024, 17, 2959.

- Zhang, Z.; Wang, Y.X.; He, H.; Sun, F. A short- and long-term prognostic associating with remaining useful life estimation for proton exchange membrane fuel cell. Appl. Energy 2021, 304, 117841.

- Lin, X.; Ye, J.; Wang, Z. Trip distance adaptive equivalent hydrogen consumption minimization strategy for fuel-cell electric vehicles integrating driving cycle prediction. Chin. J. Eng. 2024, 46, 376–384.

- Li, C.; Li, M.; Yu, M.; Wang, Z.; Zhao, X. Research on short-time speed prediction based on WSO-optimized CNN-BiLSTM. J. Chongqing Univ. Technol. (Nat. Sci.) 2024, 38, 38–47.

- Yan, M.; Xu, H.; Li, M.; He, H.; Bai, Y. Hierarchical predictive energy management strategy for fuel cell buses entering bus stops scenario. Green Energy Intell. Transp. 2023, 2, 100095.

- Wei, X.; Sun, C.; Liu, B.; Huo, W.; Ren, Q.; Sun, F. Co-Optimization of Vehicle Speed and Energy for Fuel Cell Vehicles. J. Mech. Eng. 2023, 59, 204–212.

- Zhang, H.; Yang, J.; Zhang, J.; Song, P.; Xu, X. Pareto-Based Multi-Objective Optimization of Energy Management for Fuel Cell Tramway. Acta Autom. Sin. 2019, 45, 2378–2392.

- Yang, J.; Xu, X.; Zhang, J.; Song, P. Multi-objective Optimization of Energy Management Strategy for Fuel Cell Tram. J. Mech. Eng. 2018, 54, 153–159.

- Yu, K.; Wang, S.; Yang, D.; Fu, H.; Liao, Y. Real-time Energy Management Strategy of Fuel Cell Vehicles Based on Multi-Objective Optimization. J. Zhengzhou Univ. (Eng. Sci.) 2024, 45, 80–88.

- Liu, P.; Li, Z.; Jiang, P.; Shu, H.; Liu, Z. A New Method for Vehicle Speed Planning and Model Prediction Control under V2I. J. Chongqing Univ. Technol. (Nat. Sci.) 2022, 41, 27–33.

- He, H.; Guo, J.; Peng, J.; Tan, H.; Chao, S. Real-time Global Driving Cycle Construction Method and the Application in Global Optimal Energy Management in Plug-in Hybrid Electric Vehicles. Energy 2018, 152, 95–107.

- Guo, J.; He, H.; Wei, Z.; Li, J. An economic driving energy management strategy for the fuel cell bus. IEEE Trans. Transp. Electrif. 2022, 9, 5074–5084.

- Jia, C.; Liu, W.; He, H.; Chau, K.T. Deep reinforcement learning-based energy management strategy for fuel cell buses integrating future road information and cabin comfort control. Energy Convers. Manag. 2024, 321, 119032.

- Guo, J.; He, H.; Li, J.; Liu, Q. Driving information process system-based real-time energy management for the fuel cell bus to minimize fuel cell engine aging and energy consumption. Energy 2022, 248, 123474.

- Lin, X.; Li, X.; Lin, H. Optimization Feedback Control Strategy Based ECMS for Plug-in FCHEV Considering Fuel Cell Decay. China J. Highw. Transp. 2019, 32, 153–161.

- Wang, Y.; Yu, Q.; Wang, X. Adaptive Optimal Energy Management Strategy of Fuel Cell Vehicle by Considering Fuel Cell Performance Degradation. J. Traffic Transp. Eng. 2022, 22, 190–204.

- Lin, X.; Xia, Y.; Wei, S. Energy management control strategy for plug-in fuel cell electric vehicle based on reinforcement learning algorithm. Chin. J. Eng. 2019, 41, 1332–1341.

- Li, X.; He, H.; Wu, J. Knowledge-guided deep reinforcement learning for multi-objective energy management of fuel cell electric vehicles. IEEE Trans. Transp. Electrif. 2024, 11, 2344–2355.

- Quan, S.; He, H.; Wei, Z.; Chen, J.; Zhang, Z.; Wang, Y. Customized Energy Management for Fuel Cell Electric Vehicle Based on Deep Reinforcement Learning-Model Predictive Control Self-Regulation Framework. IEEE Trans. Ind. Inform. 2024, 20, 13776–13785.

- Huang, R.; He, H.; Su, Q. An intelligent full-knowledge transferable collaborative eco-driving framework based on improved soft actor-critic algorithm. Appl. Energy 2024, 375, 124078.

- Gao, F.; Zhang, H.; Wang, W.; Li, M.; Gao, X. Energy Saving Operation Optimization of Hybrid Energy Storage System for Hydrogen Fuel Cell Tram. Trans. China Electrotech. Soc. 2022, 37, 686–696.

- Liu, P.; Li, Q.; Meng, X.; Luo, S.; Li, L.; Liu, S.; Chen, W. Collaborative Optimization of Hydrogen Fuel Cell Urban Emu Operation Based on Multi-directional Differential Evolution Algorithm. Proc. CSEE 2024, 44, 1007–1019.

- Huang, R.; He, H.; Su, Q.; Härtl, M.; Jaensch, M. Type- and task-crossing energy management for fuel cell vehicles with longevity consideration: A heterogeneous deep transfer reinforcement learning framework. Appl. Energy 2025, 377, 124594.

- Li, M.; Liu, H.; Yan, M.; Wu, J.; Jin, L.; He, H. Data-driven bi-level predictive energy management strategy for fuel cell buses with algorithmics fusion. Energy Convers. Manag. X 2023, 20, 100414.

- Jui, J.J.; Ahmad, M.A.; Molla, M.M.I.; Rashid, M.I.M. Optimal energy management strategies for hybrid electric vehicles: A recent survey of machine learning approaches. J. Eng. Res. 2024, 12, 454–467.

- Jouda, B.; Al-Mahasneh, A.J.; Mallouh, M.A. Deep stochastic reinforcement learning-based energy management strategy for fuel cell hybrid electric vehicles. Energy Convers. Manag. 2024, 301, 117973.

- Ghaderi, R.; Kandidayeni, M.; Boulon, L.; Trovao, J.P. Q-learning based energy management strategy for a hybrid multi-stack fuel cell system considering degradation. Energy Convers. Manag. 2023, 293, 117524.

- Wang, D.; Mei, L.; Xiao, F.; Song, C.; Qi, C.; Song, S. Energy management strategy for fuel cell electric vehicles based on scalable reinforcement learning in novel environment. Int. J. Hydrogen Energy 2024, 59, 668–678.

- Deng, P.; Wu, X.; Yang, J.; Yang, G.; Jiang, P.; Yang, J.; Bian, X. Optimal online energy management strategy of a fuel cell hybrid bus via reinforcement learning. Energy Convers. Manag. 2024, 300, 117921.

- Yin, Y.; Zhang, X.; Pan, X. Equivalent factor of energy management strategy for fuel cell hybrid electric vehicles based on Q-Learning. J. Automot. Saf. Energy 2022, 13, 785–795.

- Tang, X.; Chen, J.; Liu, T.; Qin, Y.; Cao, D. Distributed deep reinforcement learning-based energy and emission management strategy for hybrid electric vehicles. IEEE Trans. Veh. Technol. 2021, 70, 9922–9934.

- Zheng, C.; Zhang, D.; Xiao, Y.; Li, W. Reinforcement learning-based energy management strategies of fuel cell hybrid vehicles with multi-objective control. J. Power Sources 2022, 543, 231841.

- Chen, W.; Peng, J.; Ren, T.; Zhang, H.; He, H.; Ma, C. Integrated velocity optimization and energy management for FCHEV: An eco-driving approach based on deep reinforcement learning. Energy Convers. Manag. 2023, 296, 117685.

- Jia, C.; He, H.; Zhou, J.; Li, J.; Wei, Z.; Li, K.; Li, M. A novel deep reinforcement learning-based predictive energy management for fuel cell buses integrating speed and passenger prediction. Int. J. Hydrogen Energy 2025, 100, 456–465.

- Huang, R.; He, H.; Su, Q.; Härtl, M.; Jaensch, M. Enabling cross-type full-knowledge transferable energy management for hybrid electric vehicles via deep transfer reinforcement learning. Energy 2024, 305, 132394.

- Peng, J.; Ren, T.; Chen, Z.; Chen, W.; Wu, C.; Ma, C. Efficient training for energy management in fuel cell hybrid electric vehicles: An imitation learning-embedded deep reinforcement learning framework. J. Clean. Prod. 2024, 447, 141360.

- Chen, H.; Guo, G.; Tang, B.; Hu, G.; Tang, X.; Liu, T. Data-driven transferred energy management strategy for hybrid electric vehicles via deep reinforcement learning. Energy Rep. 2023, 10, 2680–2692.

- Li, B.; Cui, Y.; Xiao, Y.; Fu, S.; Choi, J.; Zheng, C. An improved energy management strategy of fuel cell hybrid vehicles based on proximal policy optimization algorithm. Energy 2025, 317, 134585.

- Ajani, T.S.; Imoize, A.L.; Atayero, A.A. An overview of machine learning within embedded and mobile devices–optimizations and applications. Sensors 2021, 21, 4412.

- González, M.L.; Ruiz, J.; Andrés, L.; Lozada, R.; Skibinsky, E.S.; Fernández, J.; García-Vico, Á.M. Deep Learning Inference on Edge: A Preliminary Device Comparison. In International Conference on Intelligent Data Engineering and Automated Learning; Springer Nature: Cham, Switzerland, 2024; pp. 265–276.

- Kumar, R.; Sharma, A. Edge AI: A Review of Machine Learning Models for Resource-Constrained Devices. Artif. Intell. Mach. Learn. Rev. 2024, 5, 1–11.

- Chen, B.; Wang, M.; Hu, L.; Zhang, R.; Li, H.; Wen, X.; Gao, K. A hierarchical cooperative eco-driving and energy management strategy of hybrid electric vehicle based on improved TD3 with multi-experience. Energy Convers. Manag. 2025, 326, 119508.

- Farooq, M.A.; Shariff, W.; Corcoran, P. Evaluation of thermal imaging on embedded GPU platforms for application in vehicular assistance systems. IEEE Trans. Intell. Veh. 2022, 8, 1130–1144.

- Lucan Orășan, I.; Seiculescu, C.; Căleanu, C.D. A brief review of deep neural network implementations for ARM cortex-M processor. Electronics 2022, 11, 2545.

- Niu, Z.; He, H. A data-driven solution for intelligent power allocation of connected hybrid electric vehicles inspired by offline deep reinforcement learning in V2X scenario. Appl. Energy 2024, 372, 123861.

- Mesdaghi, A.; Mollajafari, M. Improve performance and energy efficiency of plug-in fuel cell vehicles using connected cars with V2V communication. Energy Convers. Manag. 2024, 306, 118296.

| Architecture Type | Energy Efficiency | Cost (USD/kW) | Size/Weight (kg/kW) | Dynamic Performance | Typical Applications |
|---|---|---|---|---|---|
| Standalone Fuel Cell | 40–55% (sensitive to load fluctuations) | 800–1200 | 8–12 | Poor | Low-speed, stable-load vehicles |
| Fuel Cell–Battery Hybrid | 45–60% (optimized FC operation) | 500–800 | 10–15 | Good | Urban buses, logistics vehicles |
| Fuel Cell–Supercapacitor Hybrid | 45–58% (effective transient support) | 600–900 | 9–13 | Excellent | Short-distance buses, delivery vans |
| Fuel Cell–Battery–Supercapacitor Hybrid | 50–65% (full-range optimization) | 900–1500 | 12–18 | Excellent | Long-haul buses, heavy-duty trucks |
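
To make the per-kilowatt figures in the table above concrete, the following minimal sketch scales the cost and mass ranges to a hypothetical 100 kW powertrain. The 100 kW rating, the function name `scale_to_rating`, and the assumption that cost and mass scale linearly with rated power are illustrative choices, not statements from the source.

```python
# Ranges copied from the table above: (cost in USD/kW, mass in kg/kW).
ARCHITECTURES = {
    "Standalone Fuel Cell": ((800, 1200), (8, 12)),
    "Fuel Cell-Battery Hybrid": ((500, 800), (10, 15)),
    "Fuel Cell-Supercapacitor Hybrid": ((600, 900), (9, 13)),
    "Fuel Cell-Battery-Supercapacitor Hybrid": ((900, 1500), (12, 18)),
}


def scale_to_rating(rated_kw):
    """Scale per-kW ranges to a rated power, assuming linear scaling.

    Returns {name: ((cost_lo, cost_hi) in USD, (mass_lo, mass_hi) in kg)}.
    """
    scaled = {}
    for name, (cost, mass) in ARCHITECTURES.items():
        scaled[name] = (
            (cost[0] * rated_kw, cost[1] * rated_kw),
            (mass[0] * rated_kw, mass[1] * rated_kw),
        )
    return scaled


# Hypothetical 100 kW system (the rating is an assumption for illustration).
for name, (cost, mass) in scale_to_rating(100).items():
    print(f"{name}: {cost[0]:,}-{cost[1]:,} USD, {mass[0]:,}-{mass[1]:,} kg")
```

Under these assumptions, the fuel cell-battery hybrid comes out cheapest at this rating, while the three-source architecture carries the highest cost and mass, in line with the qualitative ranking in the table.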

| Feature/Metric | DP (Dynamic Programming) | ADP (Approximate DP) | SDP (Stochastic DP) |
|---|---|---|---|
| Applicable Problem | Deterministic Optimization | Deterministic with Complexity | Optimization under Uncertainty |
| State Transition | | | |
| Objective Function | | | |
| Optimality | Global Optimum | Near-Optimal (Approximate) | Expected Value Optimum |
| Computational Complexity | Very High (offline only) | Medium (online feasible) | High (longer convergence) |
| Typical Runtime [85,86] | >1000 s (UDDS, MATLAB R2021b, i7-10750H) | 10–100 s (WLTC, MATLAB R2021b, i7-10750H) | 60–300 s (UDDS, MATLAB R2021b, i7-10750H) |
| Fuel Economy Improvement (vs. Rule) [87] | About 18–20% UDDS, WLTC (SOC ∈ (30, 70)) | About 12–15% WLTC (SOC ∈ (30, 70)) | About 10–13% UDDS (SOC ∈ (30, 70)) |
| Real-Time Applicability | No | Yes | Partial (depends on modeling) |
| Representative Use Case | Offline benchmark generation | Embedded control in EV/FCV | Adaptive strategies under traffic |
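
The deterministic DP column of the table above can be illustrated with a toy backward recursion for a fuel cell/battery power split. This is a minimal sketch, not the formulation used in the cited studies: the integer energy grid, the convex hydrogen cost `h2_cost`, the charge-sustaining terminal condition, and all function and parameter names are assumptions introduced for illustration.

```python
import math


def dp_power_split(demand, soc0, soc_max, fc_levels, h2_cost):
    """Backward dynamic programming for a toy fuel cell/battery power split.

    demand    : integer power demand per time step
    soc0      : initial battery energy (integer units); also the minimum
                allowed final energy (charge-sustaining assumption)
    soc_max   : battery capacity (integer units)
    fc_levels : allowed fuel cell power levels (integers)
    h2_cost   : function mapping fuel cell power to hydrogen cost per step
    Returns (minimum total cost, list of fuel cell powers).
    """
    n = len(demand)
    # J[k][x] is the minimum cost-to-go from step k with battery energy x.
    J = [[math.inf] * (soc_max + 1) for _ in range(n + 1)]
    policy = [[None] * (soc_max + 1) for _ in range(n)]
    for x in range(soc0, soc_max + 1):
        J[n][x] = 0.0  # feasible terminal states carry no extra cost
    # Backward pass over time steps, states, and controls.
    for k in range(n - 1, -1, -1):
        for x in range(soc_max + 1):
            for u in fc_levels:
                x_next = x - (demand[k] - u)  # battery covers the remainder
                if 0 <= x_next <= soc_max:
                    cost = h2_cost(u) + J[k + 1][x_next]
                    if cost < J[k][x]:
                        J[k][x] = cost
                        policy[k][x] = u
    if math.isinf(J[0][soc0]):
        raise ValueError("no feasible power split for this demand profile")
    # Forward pass: recover the optimal control sequence from soc0.
    x, controls = soc0, []
    for k in range(n):
        u = policy[k][x]
        controls.append(u)
        x -= demand[k] - u
    return J[0][soc0], controls


# Hypothetical four-step demand profile and quadratic hydrogen cost.
total_cost, fc_power = dp_power_split(
    demand=[2, 4, 1, 3],
    soc0=3,
    soc_max=5,
    fc_levels=range(0, 5),
    h2_cost=lambda u: u + 0.5 * u * u,
)
print(total_cost, fc_power)
```

The triple loop over time steps, states, and controls is what makes exact DP expensive on realistic grids, which is consistent with the "offline only" entry in the table. Because the hydrogen cost in this sketch is convex, the optimal solution spreads fuel cell power as evenly as the battery limits allow.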

| Algorithm | Typical Model Size | Inference Latency (on Jetson/ARM) | Control Frequency | Deployment Feasibility |
|---|---|---|---|---|
| DQN [133] | About 0.5–2 M | 10–30 ms @ Jetson Xavier NX, FP32, batch = 1 | Low (1–10 Hz) | Moderate (needs pruning) |
| DDPG [134] | About 1–3 M | 15–40 ms @ Jetson TX2, FP16, batch = 1 | Medium (5–20 Hz) | Feasible |
| PPO [135] | About 1–2 M | 20–50 ms @ ARM Cortex-A72, PyTorch 1.8, FP32 | Medium (5–12 Hz) | Computationally heavier |
| TD3/E TD3 [136] | About 2–4 M | 25–60 ms @ Jetson Xavier, FP32, batch = 1 | Medium (5–10 Hz) | Needs optimization |
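
One way to read the latency and control-frequency columns together is to check how much of a control period a single inference consumes. The sketch below pairs the slowest reported latency with the fastest reported control rate for each algorithm. That worst-case pairing, and the function name `worst_case_utilization`, are assumptions of this example; the table does not state which latency corresponds to which rate.

```python
# Figures copied from the table above: latency in ms, control frequency in Hz.
DRL_DEPLOYMENT = {
    "DQN": {"latency_ms": (10, 30), "freq_hz": (1, 10)},
    "DDPG": {"latency_ms": (15, 40), "freq_hz": (5, 20)},
    "PPO": {"latency_ms": (20, 50), "freq_hz": (5, 12)},
    "TD3/E TD3": {"latency_ms": (25, 60), "freq_hz": (5, 10)},
}


def worst_case_utilization(latency_ms, freq_hz):
    """Fraction of the shortest control period used by the slowest inference."""
    period_ms = 1000.0 / max(freq_hz)  # shortest period, from the fastest rate
    return max(latency_ms) / period_ms


for name, row in DRL_DEPLOYMENT.items():
    util = worst_case_utilization(row["latency_ms"], row["freq_hz"])
    print(f"{name}: worst-case inference uses {util:.0%} of the control period")
```

A utilization below 1 means inference alone fits within the control period under this pairing. The remaining margin still has to cover sensing, communication, and actuation, which this sketch does not model.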

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, J.; He, H.; Jia, C.; Guo, S. The Energy Management Strategies for Fuel Cell Electric Vehicles: An Overview and Future Directions. World Electr. Veh. J. 2025, 16, 542. https://doi.org/10.3390/wevj16090542
