1. Introduction

The permanent magnet synchronous motor (PMSM) is widely used in industrial, transportation, and new energy fields due to its high efficiency, high torque density, and excellent control performance. Because its internal permanent magnets are highly sensitive to temperature, excessive temperature may lead to insulation failure and degradation of magnetic performance, reducing operating efficiency and service life. Accurate prediction of rotor temperature is therefore of great significance for improving system performance and reducing energy consumption and risk. However, because the rotor permanent magnets rotate at high speed inside the motor and the rotor structure is complex, sensors are difficult to install, and installing them increases cost and introduces potential safety hazards [1,2].

Traditional temperature prediction methods mainly fall into three types: Computational Fluid Dynamics (CFD), Finite Element Analysis (FEA), and the Lumped-Parameter Thermal Network (LPTN) [3,4]. Dong et al. used CFD modeling to evaluate the temperature distribution of high-speed permanent magnet motors [5]. Sun et al. applied 2-D finite element analysis to estimate the temperature rise in motor rotors [6]. However, CFD and FEA are constrained by idealized modeling conditions and by model accuracy. Moreover, they involve complex calculations and consume substantial computing resources, which makes them generally unsuitable for temperature monitoring with real-time requirements [7]. The LPTN method builds an equivalent network model based on thermodynamic theory [8,9]; it is faster than CFD and FEA, but due to the complexity of the PMSM’s structure and materials, an LPTN model may fail to capture the detailed distribution of the thermal field.

In recent years, Physics-Informed Neural Networks (PINNs), hybrid methods that integrate deep learning with physical principles, have begun to be applied to real-time thermal prediction [10]. However, for motor rotor temperature prediction, PINNs have two key limitations. First, they rely heavily on accurate prior physical equations and precise quantification of physical parameters. During actual motor operation, variable operating conditions, such as sudden load changes and transient speed changes, cause physical parameters to vary with time, resulting in a mismatch between the fixed physical constraints in PINNs and the actual operating conditions [11]. Second, embedding physical constraints increases the model complexity of the PINN [12]. When processing the same motor temperature dataset, PINNs require more training time than data-driven deep learning models, which weakens their real-time advantage in on-board applications.

Classic deterministic methods for time series have long been applied in the field of temperature prediction. The Autoregressive Integrated Moving Average (ARIMA) model captures linear temporal dependencies by combining Autoregressive (AR) and Moving Average (MA) components, but it is unable to handle the nonlinear relationships in motor rotor temperature [13]. Exponential smoothing models (such as the Holt–Winters model) predict future values by assigning exponentially decreasing weights to historical data; however, they are sensitive to outliers in temperature data and cannot capture long-term temporal correlations [14]. These limitations have prompted researchers to adopt deep learning techniques, which offer greater advantages in nonlinear feature extraction and long-sequence modeling, for motor rotor temperature prediction.

By contrast, deep learning (DL), with its robust nonlinear modeling capability and data-driven nature, has demonstrated significant advantages in temperature prediction. Typical DL networks, represented by DNNs, LSTM, and CNNs, do not rely on explicit physical equation constraints, thus avoiding errors caused by incomplete physical assumptions. They can achieve efficient and accurate temperature estimation based solely on operating data such as current, voltage, and rotational speed. They also offer excellent real-time performance, high cost-effectiveness, and strong adaptability, making them better aligned with the practical requirements of motor rotor temperature monitoring [1,15,16,17,18,19,20].

In the field of time-series modeling, various deep learning techniques exhibit different modeling advantages based on their unique network structure designs, providing diverse technical pathways for motor temperature prediction. Long Short-Term Memory (LSTM) networks address the gradient vanishing problem of the traditional recurrent neural network (RNN) through a gating mechanism. They can effectively capture the long-term temporal dependencies of dynamic systems, making them particularly suitable for temperature sequence prediction involving complex transient features. Temporal Convolutional Networks (TCNs) adopt a dilated convolution structure, which can flexibly expand the receptive field by adjusting the dilation coefficient. While maintaining computational efficiency, TCNs capture multi-scale local temporal features, and their parallel computing capability is significantly superior to that of recurrent neural networks. Differential Feedforward Neural Networks (DFNNs) enhance the ability to model the rate of change in input features by introducing differential operators, demonstrating unique advantages in handling the nonlinear mapping relationship between motor operating parameters and temperature. Convolutional Neural Networks (CNNs) leverage the local receptive field and weight sharing mechanism to efficiently extract spatial correlation features from temperature sequences. Bidirectional Long Short-Term Memory (BiLSTM) networks utilize hidden layer structures in both forward and backward directions, enabling them to simultaneously capture the impact of future states on current temperatures. This makes BiLSTM more suitable for modeling bidirectional temporal processes such as motor start-up and shutdown.

The aforementioned technologies have been validated in the field of PMSM temperature prediction, and the relevant research findings provide important references for subsequent technical optimization. Oliver Wallscheid et al. [17] were the first to apply LSTM to PMSM temperature time-series prediction, using a particle swarm optimization algorithm to search for the optimal hyperparameters of the model. However, this optimization method evaluates each candidate solution in the search space only once, making it difficult to fully traverse the global optimal domain, which limits the hyperparameter optimization accuracy to a certain extent. The TCN model constructed by Wilhelm Kirchgässner et al. [1] achieved a mean squared error (MSE) of 3.04. Compared with the traditional RNN, this validates its efficiency in motor temperature series modeling. The Deep Feedforward Neural Network-Nonlinear AutoRegressive with eXogenous inputs (DFNN-NARX) model proposed by Jun Lee et al. [18] demonstrates significantly better performance than traditional feedforward neural networks in the temperature estimation of stator windings and permanent magnets. Hosseini et al. [19] compared the prediction performance of CNNs and LSTM and found that CNNs are more effective in predicting the temperatures of stators and rotor permanent magnets, achieving an MSE of 2.64 and an average coefficient of determination ($R^2$) of 0.9924. Mohammed Bouziane et al. [20] used a recurrent neural network with BiLSTM units to model the complex relationships of motor parameters; the $R^2$ score of temperature prediction on the test set reached 0.99, confirming its modeling accuracy for nonlinear correlations.

Numerous studies have confirmed the effectiveness of the attention mechanism in time-series forecasting. Wang and Zhang [21] proposed a multi-stage attention network: they leveraged the attention mechanism to capture the differential impacts of non-forecast sequences on target sequences, incorporated a score adjustment module to avoid the omission of key information, and combined a gated recurrent unit (GRU)-based LSTM network to enhance the capture of abrupt change information, with convergence optimized via the AdaHMG algorithm. When tested on the Nasdaq100 and PM2.5 datasets, the mean absolute error (MAE) and root mean square error (RMSE) of this network were reduced by 10.16–33.01% and 12.81–37.55%, respectively, compared with those of the dual-stage attention-based recurrent neural network (DA-RNN). Notably, the more non-forecast sequences there were, the more significant this advantage became. To address the high-dimensionality and nonlinearity issues of multivariate time-series data, Cheng et al. proposed the dual attention-based bidirectional long short-term memory (DABi-LSTM). This model uses input attention to screen key driving sequences, employs BiLSTM to bidirectionally extract temporal features, and integrates LSTM to optimize long-term dependency learning, thereby forming a collaborative architecture [22]. In convolution-based temporal modeling, Wang and Zhang [21] adapted the temporal attention mechanism to the time dimension: they convolved the output of each layer of the TCN, generated dynamic weights through sigmoid mapping, and achieved performance improvements. Compared with LSTM and GRU, the RMSE and MAE of this modified model were reduced by an average of 10–37%; compared with the basic TCN, these metrics were further reduced by 0.8–10%, breaking the limitation of the “fixed receptive field” in traditional convolution.

Studies by Oliver Wallscheid [17], Wilhelm Kirchgässner [1], Mohammed Bouziane [20], and others have shown that TCNs and BiLSTM exhibit excellent performance in time-series modeling. However, existing research also indicates that single deep learning methods have limited performance under complex operating conditions [23,24], and there is an urgent need to further improve model performance.

To solve the above problems, this paper proposes a TCN-BiLSTM-MHA prediction model based on a Hybrid Grey Wolf Optimization (H-GWO) algorithm. The model comprehensively utilizes the TCN to extract local time-series features, BiLSTM to capture bidirectional dependencies in sequences, and multi-head attention (MHA) to model the importance of different time steps of multi-dimensional information, thereby realizing in-depth mining of the relationship between rotor temperature and other features. At the same time, the H-GWO algorithm is introduced to optimize the model hyperparameters to improve the overall prediction accuracy and generalization ability.

The main contributions of this paper are as follows:

A hybrid prediction model integrating a TCN, BiLSTM and MHA is proposed, which achieves good experimental results in motor rotor temperature prediction.

A Hybrid Grey Wolf Optimization algorithm combining Tent chaotic mapping and differential evolution is used to optimize the key parameters of the prediction model, including the number of TCN channels, the number of neurons in BiLSTM hidden layers, and the learning rate, effectively improving the prediction performance of the model.

Ablation experiments and comparative experiments are carried out on public datasets, verifying the feasibility of the proposed model for rotor temperature prediction and providing a new idea for non-contact prediction of motor rotor temperature.

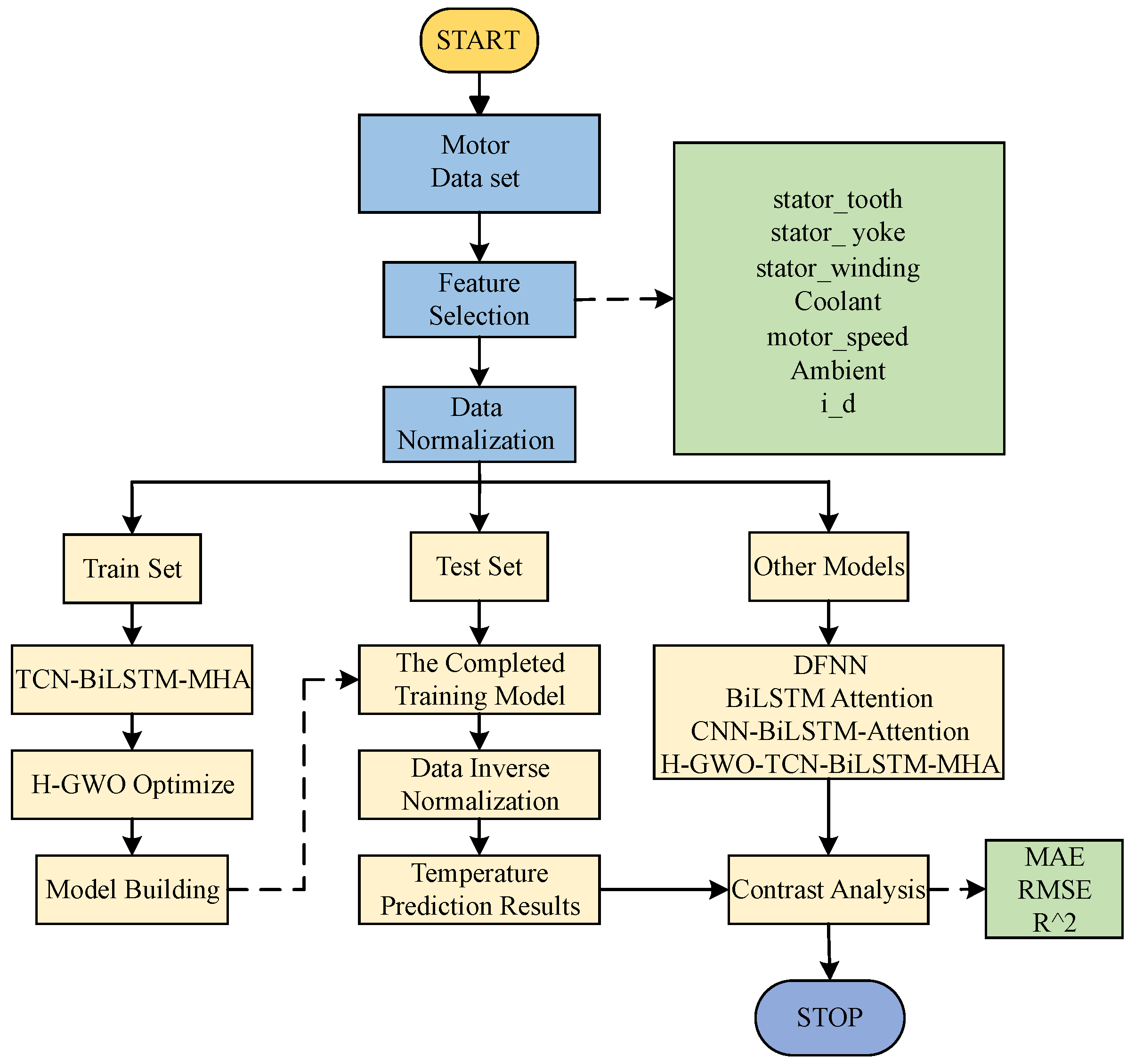

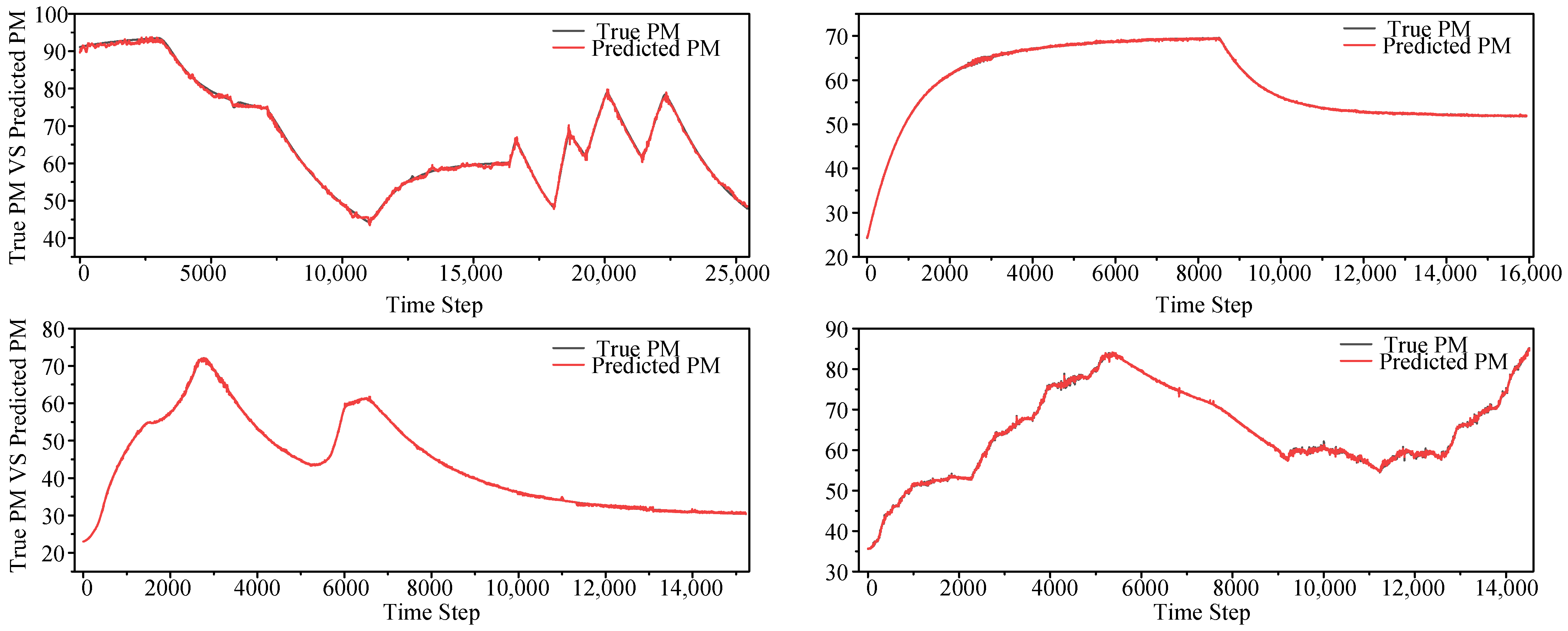

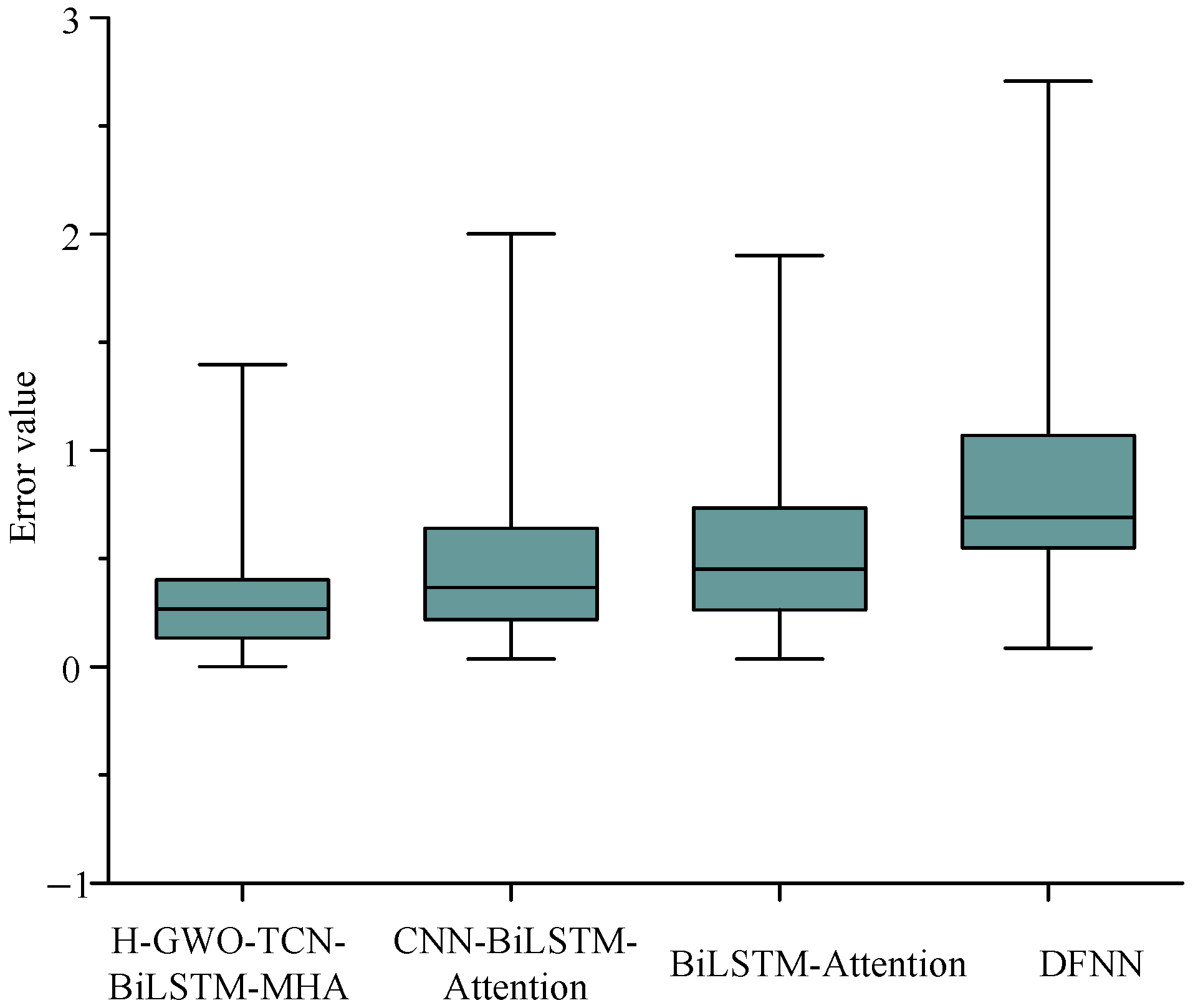

The overall framework of the paper is shown in Figure 1. The paper uses a public motor dataset, selects appropriate input features, and divides the data into a training set and a test set in proportion. The training set is then input into the constructed temperature prediction model for training, and the model parameters are optimized by H-GWO. After that, the test set is fed into the trained model to obtain the temperature predictions. Finally, the model is compared with the DFNN, BiLSTM-Attention, and CNN-BiLSTM-Attention models to demonstrate the advantages of the H-GWO-TCN-BiLSTM-MHA model.

2. TCN-BiLSTM-MHA Rotor Temperature Prediction Model

To address the insufficient prediction accuracy and generalization ability of the standalone TCN or BiLSTM model, this paper constructs a composite model integrating time-series modeling, feature extraction, and a multi-head attention mechanism, namely, TCN-BiLSTM-MHA, from three aspects: input feature selection, model structure design, and parameter optimization strategies. Its structure is shown in Figure 2. Firstly, through normalization and feature correlation analysis, the model inputs are ensured to be representative and stable, improving the modeling quality from the source. Secondly, by combining the TCN with BiLSTM, the TCN uses a one-dimensional convolutional structure to effectively extract important features within local time windows while maintaining good parallelism. This enhances the ability to model long-term dependent information and avoids the gradient vanishing problem in traditional recurrent neural networks. On this basis, the bidirectional recurrent structure of BiLSTM is introduced to fully explore the correlation of temperature changes in the time series, improving the model’s ability to perceive global dynamic features. Finally, since the TCN and BiLSTM have a limited ability to model the importance of different time steps and feature dimensions, which may lead to insufficient information utilization, MHA is introduced to weight the high-dimensional sequence representations extracted by BiLSTM, thereby highlighting the time points and feature channels that are more critical to the prediction task.

The combination of the TCN, BiLSTM, and MHA achieves hierarchical optimization from local feature extraction to temporal dependency modeling and then to key feature attention, significantly enhancing the model’s ability to predict rotor temperature under multi-variable and complex operating conditions. This effectively overcomes the shortcomings of single models in temporal modeling and feature weight allocation.

2.1. Temporal Convolutional Network

TCNs have shown certain advantages in multiple sequence data modeling tasks [

25], and their advantages stem from causal convolution operations. The causal convolution of the TCN appropriately pads the input data on the basis of one-dimensional convolution operations so that the input sequence

corresponds to the output sequence

, and the predicted value at time

t can only be related to the input values at time

t and before

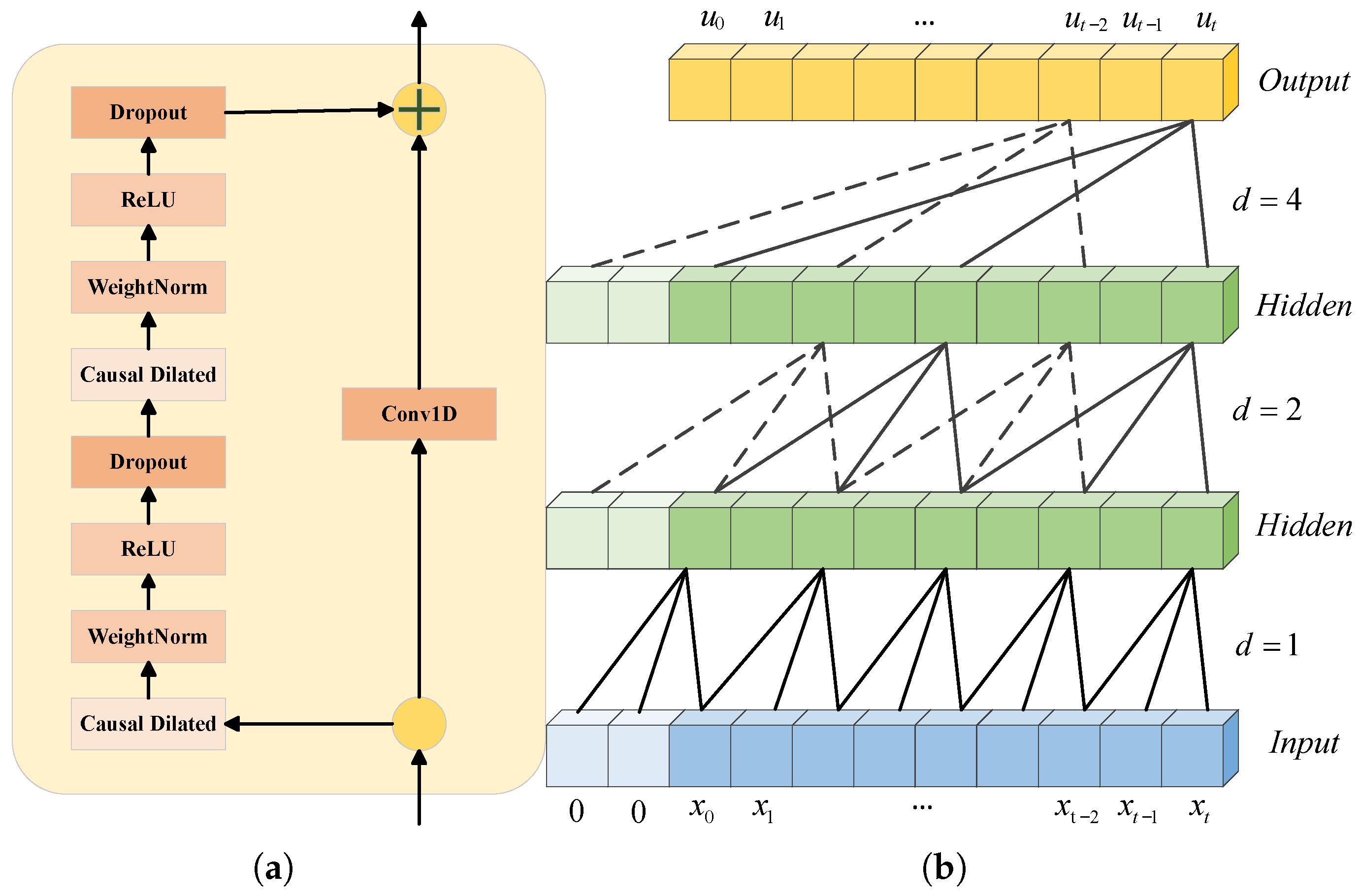

t. In addition, the TCN incorporates dilated convolution, where the convolution kernel performs jump sampling on the input sequence, expanding the receptive field in a hierarchical manner and covering longer dependencies with fewer layers. The causal convolution structure of the TCN is illustrated in

Figure 3a. Moreover, residual connection is an effective approach for the TCN to transmit information across layers. By leveraging the connectivity of residual blocks, connecting multiple residual blocks can effectively mitigate the gradient explosion issue and expand the model’s receptive field [

26]; the structure of the residual block is presented in

Figure 3b. The operation of the dilated causal convolution of the TCN on the convolution kernel

is shown in the following equation:

where

guides the past direction, and

is the input time series;

d is the dilation factor;

k is the size of the filter; and

represents the

convolution weight.
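To make the indexing of the dilated causal convolution concrete, the following is a minimal pure-Python sketch. The function name `dilated_causal_conv` and the toy input are illustrative assumptions, not the authors' implementation; inputs before the start of the sequence are treated as zero, which corresponds to left padding.

```python
def dilated_causal_conv(x, weights, dilation):
    """Compute y[s] = sum_i weights[i] * x[s - dilation * i].

    Indices before the start of the sequence are treated as zero
    (left padding), so y has the same length as x and y[s] never
    depends on inputs later than s.
    """
    k = len(weights)
    y = []
    for s in range(len(x)):
        total = 0.0
        for i in range(k):
            idx = s - dilation * i
            if idx >= 0:  # skip the zero-padded region
                total += weights[i] * x[idx]
        y.append(total)
    return y


# Toy example: kernel size 3, dilation 2, so y[s] = x[s] + x[s-2] + x[s-4].
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = dilated_causal_conv(x, weights=[1.0, 1.0, 1.0], dilation=2)
```

Changing a later input value leaves all earlier outputs unchanged, which is the causality property described above.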

The input layer feeds time-series data into the TCN layers through sliding windows. Each TCN layer includes convolution, normalization, and ReLU activation operations. The four TCN layers are connected via residual links, each employing one-dimensional convolution with a kernel size of 5 and different dilation rates (1, 2, 4, 8). This allows the TCN layers to model the historical accumulation of rotor temperature rise and the changing trends of operating conditions, achieving multi-dimensional local feature extraction.
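As a quick check of how far back such a stack can look, the receptive field can be computed from the kernel size and dilation rates. The sketch below assumes one convolution per TCN layer, as described above; the helper name `receptive_field` and the `convs_per_layer` parameter are illustrative, and a residual block containing two convolutions would enlarge the result accordingly.

```python
def receptive_field(kernel_size, dilations, convs_per_layer=1):
    """Number of input time steps that can influence one output step."""
    return 1 + convs_per_layer * (kernel_size - 1) * sum(dilations)


# Kernel size 5 with dilation rates 1, 2, 4, 8, as stated in the text.
rf_single = receptive_field(5, [1, 2, 4, 8])
# Hypothetical variant with two convolutions per residual block.
rf_double = receptive_field(5, [1, 2, 4, 8], convs_per_layer=2)
```

Under the one-convolution assumption the stack covers 61 time steps.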

2.2. Bidirectional Long Short-Term Memory

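BiLSTM is an improved recurrent neural network based on LSTM. By introducing input gates, forget gates, and output gates, LSTM overcomes the problems of gradient vanishing and gradient explosion in RNNs [27]. It regulates the information flow by retaining important information and discarding irrelevant information, thus extracting long-term information from time series [27]. The equations for the forget gate, input gate, and output gate that constitute the LSTM unit, written here in their standard form, are shown in (2):

$$\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned} \tag{2}$$

where $x_t$ represents the input to the LSTM layer at time $t$; $\sigma$ represents the sigmoid activation function; $f_t$ is the output vector of the forget gate at time $t$; $W_f$ and $b_f$ are the weight matrix and bias vector of the forget gate; $i_t$ is the output vector of the input gate at time $t$; $W_i$ and $b_i$ are the weight matrix and bias vector of the input gate; $\tilde{C}_t$ is the candidate state, with weight matrix $W_c$ and bias vector $b_c$; $C_t$ represents the information stored in the state unit at time $t$; $o_t$ is the output value of the output gate; $h_t$ represents the output value of the state unit at time $t$; and $W_o$ and $b_o$ are the weight matrix and bias vector of the output gate.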
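The gating logic can be illustrated with a single-unit LSTM step in pure Python. This is a sketch of the standard LSTM cell under simplifying assumptions (scalar input and hidden state; the `params` layout and the names `lstm_step` and `sigmoid` are hypothetical), not the configuration used in the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    params maps each of "f", "i", "c", "o" to a tuple (w_h, w_x, b):
    the weights on the previous hidden state and the current input,
    plus the bias.
    """
    def pre_activation(name):
        w_h, w_x, b = params[name]
        return w_h * h_prev + w_x * x_t + b

    f_t = sigmoid(pre_activation("f"))        # forget gate
    i_t = sigmoid(pre_activation("i"))        # input gate
    c_tilde = math.tanh(pre_activation("c"))  # candidate state
    c_t = f_t * c_prev + i_t * c_tilde        # updated cell state
    o_t = sigmoid(pre_activation("o"))        # output gate
    h_t = o_t * math.tanh(c_t)                # hidden state / output
    return h_t, c_t


# With all weights and biases at zero, every gate equals 0.5.
zero_params = {name: (0.0, 0.0, 0.0) for name in ("f", "i", "c", "o")}
h_t, c_t = lstm_step(x_t=1.0, h_prev=0.0, c_prev=2.0, params=zero_params)
```

A large positive forget-gate bias together with a large negative input-gate bias makes the cell keep its previous state almost unchanged, which is the mechanism behind retaining long-term information.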

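BiLSTM integrates two complementary LSTM structures: one advances along the time axis in the forward direction, simulating the information flow from the past to the present; the other proceeds in the reverse direction, from the future to the past, capturing the impact of future information on the current moment. For the input sequence $X$, where $X = (x_1, x_2, \ldots, x_T)$, BiLSTM captures bidirectional dependencies in the sequence and obtains a forward sequence $\overrightarrow{h}_t$ and a reverse sequence $\overleftarrow{h}_t$. The final output sequence $Y$, $Y = (y_1, y_2, \ldots, y_T)$, is obtained by the following equation, written here in its standard form:

$$y_t = W_{\overrightarrow{h}}\, \overrightarrow{h}_t + W_{\overleftarrow{h}}\, \overleftarrow{h}_t + b_y \tag{3}$$

where $W_{\overrightarrow{h}}$ and $W_{\overleftarrow{h}}$ are weights, and $b_y$ is the bias.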

2.3. Multi-Head Attention Mechanism

The attention mechanism is a computational model that simulates human visual and cognitive processes. It was initially introduced in machine translation tasks to address the problem of long-distance dependencies. In recent years, the attention mechanism has been extensively researched in the field of deep learning and applied to various domains, such as natural language processing, computer vision, and audio and video processing [28]. Whether in sequence data or spatial data, the attention mechanism can effectively capture key information, quickly extract the most useful information from a large amount of data, and reduce the impact of invalid information on model training [29].

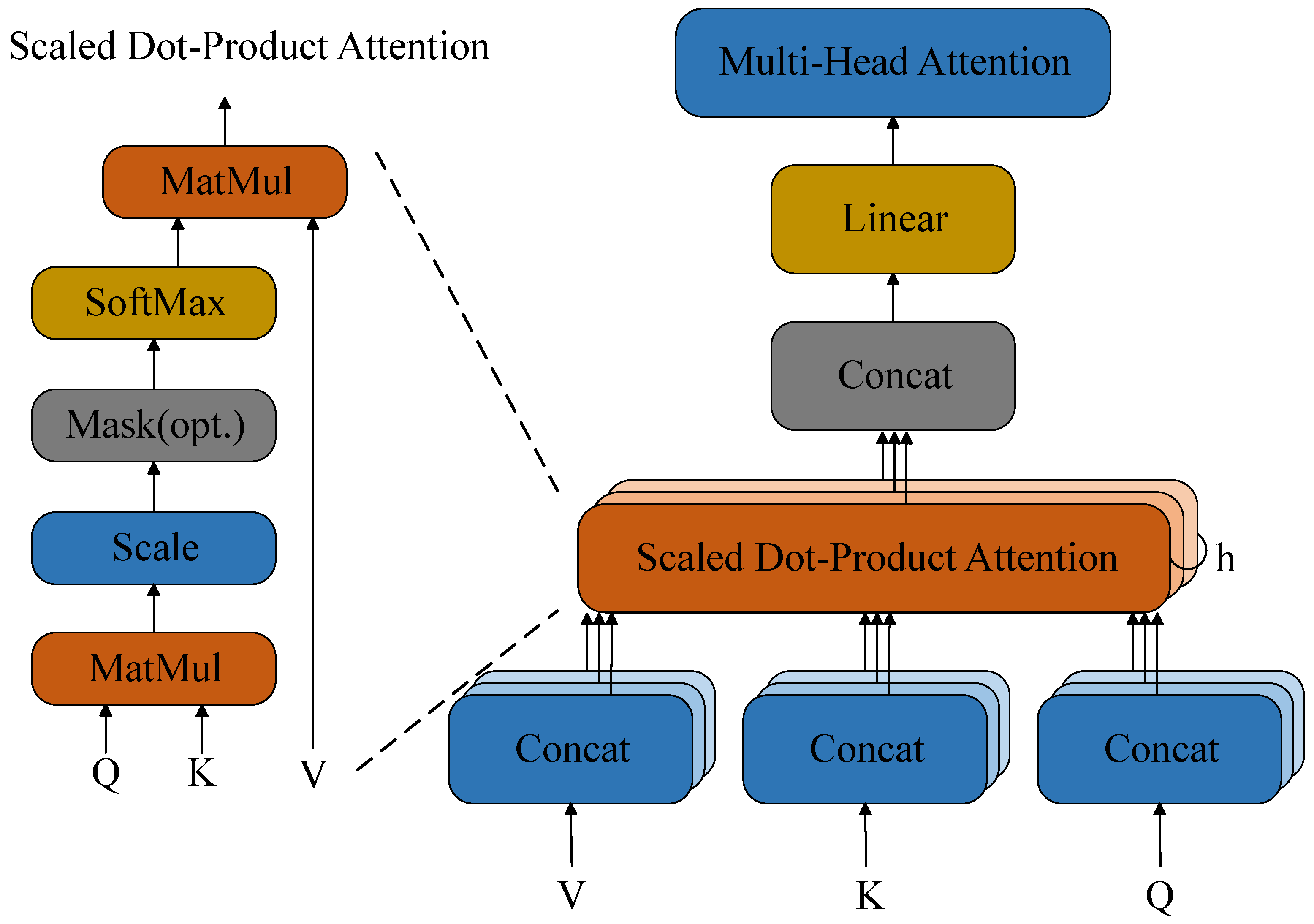

MHA is a combination of multiple self-attention structures [30], as shown in Figure 4. Compared with the single-head attention mechanism, different heads can focus on different patterns and extract richer information. In this paper, MHA is set with eight attention heads, which divide the original features into eight subspaces. First, three groups of linear transformations are performed on the input sequence in the feature dimension, with each head processing 16-dimensional data, as shown in Equation (4), to obtain the corresponding Query (Q), Key (K), and Value (V) vectors. Then, after the input features are projected into low-dimensional subspaces, the attention distributions are calculated in parallel within different subspaces, as shown in Equation (5). Subsequently, the attention outputs of all heads are concatenated in the feature dimension and fused through a linear transformation, as shown in Equation (6), to generate the final multi-head attention output sequence $Z$. The equations are written here in their standard form:

$$Q_i = X_i W_i^{Q}, \quad K_i = X_i W_i^{K}, \quad V_i = X_i W_i^{V} \tag{4}$$

$$\mathrm{head}_i = \mathrm{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i \tag{5}$$

$$Z = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O} \tag{6}$$

$\mathrm{head}_i$ represents the $i$-th attention head; the input sequence of the $i$-th head is $X_i \in \mathbb{R}^{T \times d}$, where $T$ is the number of time steps and $d$ denotes the dimension of the input vector for each head. $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are three weight matrices, and $d_k$ indicates the size of the feature dimension for each head. $W^{O}$ represents the weight matrix, and $h$ denotes the number of heads.
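The split, attend, and concatenate flow can be sketched in pure Python as follows. This is a simplified illustration, not the authors' implementation: the learned projection matrices for the queries, keys, values, and output are omitted (treated as identity), so each head simply attends over its own slice of the features. All function names are hypothetical.

```python
import math


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for one head (lists of T row vectors)."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[t] * V[t][j] for t in range(len(V)))
                        for j in range(len(V[0]))])
    return outputs, weights


def split_heads(X, num_heads):
    """Slice every feature vector into num_heads equal chunks."""
    d = len(X[0]) // num_heads
    return [[row[h * d:(h + 1) * d] for row in X] for h in range(num_heads)]


def multi_head_self_attention(X, num_heads):
    """Self-attention per head, then concatenation along the feature axis."""
    heads = [attention(Xh, Xh, Xh)[0] for Xh in split_heads(X, num_heads)]
    return [sum((head[t] for head in heads), []) for t in range(len(X))]


# 128 features split over 8 heads gives 16 features per head, as in the text.
toy = [[float(t + j) for j in range(128)] for t in range(4)]
per_head = split_heads(toy, 8)
out = multi_head_self_attention(toy, 8)
```

The output keeps the input shape (time steps by features), so the attention block can be placed after the BiLSTM without changing the sequence dimensions.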

2.4. Output Module

To convert the temporal features from the multi-head attention mechanism into the final temperature prediction values, an output module consisting of an adaptive average pooling layer, a Dropout layer, and a fully connected layer is designed at the end of the model.

First, an average pooling operation is used to compress the sequence data $Z = (z_1, z_2, \ldots, z_T)$ output by the attention mechanism in the temporal dimension, averaging the features of different time steps to obtain a fixed-length representation:

$$z_{\mathrm{pool}} = \frac{1}{T} \sum_{t=1}^{T} z_t \tag{7}$$

To enhance the generalization ability of the model and prevent overfitting, a Dropout operation is employed to randomly discard some neurons from the pooled feature vector:

$$z_{\mathrm{drop}} = \mathrm{Dropout}(z_{\mathrm{pool}}) \tag{8}$$

Finally, a linear fully connected layer is used to map the processed features to the final temperature prediction value:

$$\hat{y} = W z_{\mathrm{drop}} + b \tag{9}$$

where $W$ represents the weight matrix, and $b$ represents the bias vector.
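At inference time the output module reduces to a mean over time steps followed by a linear map, since dropout is inactive outside training. The sketch below illustrates this with toy numbers; the function name `output_module` and the weights are assumptions made for illustration only.

```python
def output_module(Z, W, b):
    """Average the sequence over time, then apply a linear layer.

    Z is a list of T feature vectors, W a weight vector with one entry
    per feature, and b a scalar bias. Dropout is omitted because it is
    inactive at inference time.
    """
    T = len(Z)
    pooled = [sum(step[j] for step in Z) / T for j in range(len(Z[0]))]
    return sum(w * p for w, p in zip(W, pooled)) + b


# Two time steps with two features each; the pooled vector is [2.0, 3.0].
Z = [[1.0, 2.0], [3.0, 4.0]]
y_hat = output_module(Z, W=[0.5, -1.0], b=0.1)
```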

2.5. Module Integration

In this study, the TCN, BiLSTM, and MHA modules are integrated in a sequential and complementary manner to process the temperature time series of the motor rotor. During model operation, 64 windowed data samples are input per batch (batch_size), with each window having a data length (seq_length) of 64 and a feature dimension of 7; the time step of the sliding window is set to 1. The model starts with four cascaded TCN modules, each using a convolution kernel of size 5, with dilation factors of 1, 2, 4, and 8, respectively. The input data is fed through these four TCN modules sequentially, and the feature dimension of the output of the last TCN module is mapped to 128, resulting in a sequence output with the shape $(64, 64, 128)$, that is, (batch size, time steps, features). Subsequently, the local feature map extracted by the TCN is input into the BiLSTM module. Each of the two LSTM layers in the BiLSTM (forward and backward) is configured with 64 neurons, and together they process the 64-time-step data from the TCN in both directions. Concatenating the two 64-dimensional outputs yields a sequence that maintains the shape $(64, 64, 128)$. Finally, the data is input into the MHA module. The MHA layer assigns weights to the correlations between the current time step and other time steps, and its output is a weighted key feature sequence with the shape $(64, 64, 128)$, which highlights the information critical to temperature prediction. The average pooling layer then compresses the time dimension to 1, and the fully connected layer compresses the feature dimension to 1, generating one predicted value for each time window.
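The windowing step and the tensor shapes described above can be traced with a short sketch. The helper names `make_windows` and `trace_shapes`, the (batch, time, feature) ordering of the tuples, and the squeezed shape after pooling are assumptions made for illustration; only the numeric settings come from the text.

```python
def make_windows(series, seq_length=64, step=1):
    """Cut a list of per-time-step feature rows into overlapping windows."""
    last_start = len(series) - seq_length
    return [series[s:s + seq_length] for s in range(0, last_start + 1, step)]


def trace_shapes(batch=64, seq_length=64, n_features=7,
                 tcn_channels=128, lstm_hidden=64, heads=8):
    """Return the tensor shape after each stage as (batch, time, features)."""
    bilstm_features = 2 * lstm_hidden  # forward and backward outputs concatenated
    if bilstm_features % heads != 0:
        raise ValueError("feature dimension must be divisible by the head count")
    return {
        "input": (batch, seq_length, n_features),
        "tcn": (batch, seq_length, tcn_channels),
        "bilstm": (batch, seq_length, bilstm_features),
        "mha": (batch, seq_length, bilstm_features),
        "pooled": (batch, bilstm_features),
        "output": (batch, 1),
    }


# 100 time steps of 7 features give 100 - 64 + 1 = 37 windows of length 64.
series = [[0.0] * 7 for _ in range(100)]
windows = make_windows(series)
shapes = trace_shapes()
```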

3. Parameter Optimization Algorithm H-GWO Based on Chaos Map and Differential Evolution

In the task of motor rotor temperature prediction, model parameters such as sequence length, batch size, and number of convolution channels directly affect the model’s ability to extract time-series features and the final prediction accuracy. Traditional methods such as manual empirical tuning or grid search suffer from low efficiency, poor scalability to higher dimensions, and a tendency to become trapped in local optima, which makes it difficult to meet the optimization needs of complex model structures in practical engineering. To this end, this paper introduces the Grey Wolf Optimizer (GWO) to automatically search for and optimize the key hyperparameters of the model. The GWO is a swarm intelligence optimization algorithm that simulates the hunting behavior of grey wolf populations. Compared with other optimization algorithms [31,32], it has a stronger global search ability for nonlinear, non-convex problems with little gradient information. It is particularly suitable for deep learning models with complex parameter spaces and large computational overhead, such as TCN-BiLSTM-MHA.

This algorithm simulates the wolf pack in nature and sets up a four-level pyramid hierarchy consisting of $\alpha$, $\beta$, $\delta$, and $\omega$ wolves (the current optimal solution, the suboptimal solution, the third optimal solution, and the remaining individuals), with three hunting behaviors: tracking, encircling, and attacking prey [33,34]. The GWO algorithm has proven advantageous in many fields, but it still has drawbacks, such as being prone to falling into local optima, slow convergence, and low precision. To address this, Yukun Zheng et al. [35] proposed an improved hybrid GWO (H-GWO) algorithm, which introduces the mutation and crossover strategies of the differential evolution (DE) algorithm and further incorporates opposition-based learning to overcome the standard GWO’s tendency to fall into local optima and its insufficient population diversity. The block diagram of the H-GWO algorithm is shown in Figure 5.

3.1. Population Initialization Based on Tent Map and Opposition-Based Learning

According to the set random value $z_{0,j}$, a chaotic sequence $z_{i,j}$ is recursively generated using the Tent chaotic mapping method, written here in its classic form:

$$z_{i,j} = \begin{cases} 2 z_{i-1,j}, & 0 \le z_{i-1,j} < 0.5 \\ 2\,(1 - z_{i-1,j}), & 0.5 \le z_{i-1,j} \le 1 \end{cases} \tag{10}$$

where $i = 1, 2, \ldots, N$; $N$ represents the number of individuals in the population, set to 20; and $j$ indexes the parameter to be optimized.

The obtained chaotic sequence is mapped to the search space to get the initial population $X^{0}$, the individual $X_i^{0} = (x_{i,1}, x_{i,2}, \ldots)$, and

$$x_{i,j} = lb_j + z_{i,j}\,(ub_j - lb_j) \tag{11}$$

Meanwhile, Opposition-Based Learning (OBL) is introduced to generate the opposite individual $\tilde{X}_i^{0}$ of $X_i^{0}$ and form the population $\tilde{X}^{0}$. For the opposite individual,

$$\tilde{x}_{i,j} = lb_j + ub_j - x_{i,j} \tag{12}$$

where $lb_j$ and $ub_j$ represent the lower boundary and upper boundary of the $j$-th parameter, respectively.

Finally, the mean squared error produced on the validation set by the model configured with an individual's parameters is used as the fitness function. From the union of the populations $X^{0}$ and $\tilde{X}^{0}$, the top $N$ individuals with the best fitness are selected to form the optimized initial population $X$.
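The initialization procedure can be sketched as follows. This is an illustrative sketch, not the authors' code: it assumes the classic Tent map with slope 2, and it replaces the real fitness (validation MSE of a trained model) with a cheap stand-in function. The names `tent_map`, `init_population`, and `toy_fitness`, as well as the bounds, are hypothetical.

```python
import random


def tent_map(z):
    """Classic Tent map on [0, 1] (assumed form with slope 2)."""
    return 2.0 * z if z < 0.5 else 2.0 * (1.0 - z)


def init_population(n, lower, upper, fitness, seed=0):
    """Tent-map initialization followed by opposition-based selection."""
    rng = random.Random(seed)
    dim = len(lower)
    z = [rng.uniform(0.01, 0.99) for _ in range(dim)]  # random starting values
    population = []
    for _ in range(n):
        # Note: with slope 2, floating-point values collapse to 0 after
        # roughly 50 iterations; this is not an issue for n = 20.
        z = [tent_map(v) for v in z]
        population.append([lower[j] + z[j] * (upper[j] - lower[j])
                           for j in range(dim)])
    # Opposite individuals: mirror each component within its bounds.
    opposite = [[lower[j] + upper[j] - ind[j] for j in range(dim)]
                for ind in population]
    # Keep the n individuals with the lowest (best) fitness.
    return sorted(population + opposite, key=fitness)[:n]


def toy_fitness(ind):
    """Stand-in for the validation MSE (hypothetical target values)."""
    target = [64.0, 0.001]
    return sum((a - b) ** 2 for a, b in zip(ind, target))


# Two hypothetical hyperparameters: hidden units and learning rate.
population = init_population(20, [16.0, 0.0001], [128.0, 0.01], toy_fitness)
```

In practice each fitness evaluation means training and validating the prediction model once, so the 2N evaluations of this step are the expensive part.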

3.2. Position Update

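According to the fitness of each individual in the population $X$, the $\alpha$, $\beta$, $\delta$, and $\omega$ wolves are distinguished. All $\omega$ wolves update their positions to track the prey based on the $\alpha$, $\beta$, and $\delta$ wolves, as Equations (13) and (14) show, written here in the standard GWO form:

$$D_\alpha = \left| C_1 \odot X_\alpha(t) - X_i(t) \right|, \quad D_\beta = \left| C_2 \odot X_\beta(t) - X_i(t) \right|, \quad D_\delta = \left| C_3 \odot X_\delta(t) - X_i(t) \right| \tag{13}$$

$$X_i(t+1) = \frac{1}{3} \left[ \left( X_\alpha(t) - A_1 \odot D_\alpha \right) + \left( X_\beta(t) - A_2 \odot D_\beta \right) + \left( X_\delta(t) - A_3 \odot D_\delta \right) \right] \tag{14}$$

where $t$ represents the current iteration; $X_i(t)$ denotes the current position of the $i$-th individual; $X_\alpha(t)$, $X_\beta(t)$, and $X_\delta(t)$ represent the current positions of the $\alpha$, $\beta$, and $\delta$ wolves, respectively; $D_\alpha$, $D_\beta$, and $D_\delta$ represent the distances between the current $\alpha$, $\beta$, and $\delta$ wolves and the $i$-th individual; and $A_1$, $A_2$, $A_3$, $C_1$, $C_2$, and $C_3$ are the coefficient vectors used by the $i$-th individual for $\alpha$, $\beta$, and $\delta$, computed as

$$A_k = 2a \cdot r_1 - a, \quad C_k = 2 r_2, \quad k = 1, 2, 3$$

During the iteration process, $a$ linearly decreases from 2 to 0, and $r_1$ and $r_2$ represent random vectors with components in $[0, 1]$.

The $\omega$ wolves in the population are updated, and then a new population is formed, together with the $\alpha$, $\beta$, and $\delta$ wolves, as $X'$.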
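The position update of a single wolf can be sketched as below. The code follows the standard GWO update and is only an illustration; the function names `gwo_update` and `linear_a` are hypothetical, and fresh random numbers are drawn per leader and per dimension, which is one common implementation choice.

```python
import random


def linear_a(t, max_iter):
    """Control parameter a, decreasing linearly from 2 to 0."""
    return 2.0 * (1.0 - t / max_iter)


def gwo_update(position, alpha, beta, delta, a, rng):
    """Move one omega wolf toward the alpha, beta, and delta wolves."""
    new_position = []
    for j in range(len(position)):
        candidates = []
        for leader in (alpha, beta, delta):
            A = 2.0 * a * rng.random() - a        # coefficient in [-a, a]
            C = 2.0 * rng.random()                # coefficient in [0, 2]
            D = abs(C * leader[j] - position[j])  # distance to this leader
            candidates.append(leader[j] - A * D)
        new_position.append(sum(candidates) / 3.0)  # average of the three moves
    return new_position


rng = random.Random(1)
wolf = [5.0, 5.0]
# Early iteration: a is large, so the step can overshoot the leaders (exploration).
explore = gwo_update(wolf, [1.0, 1.0], [2.0, 2.0], [3.0, 3.0], linear_a(0, 10), rng)
# Final iteration: a = 0, so the wolf lands on the mean of the three leaders.
exploit = gwo_update(wolf, [1.0, 1.0], [2.0, 2.0], [3.0, 3.0], linear_a(10, 10), rng)
```

As $a$ shrinks, the magnitude of the $A$ coefficients shrinks with it, so the search moves from exploration toward exploitation around the three best wolves.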

3.3. Population Optimization Based on Differential Evolution Algorithm

According to the updated population $X'$, the classic “DE/best/1” strategy in DE is used to generate $N$ mutant individuals $V_i$. These $N$ mutant individuals form a mutant population $V$. For each mutant individual, in the standard form of this strategy,

$$V_i = X_\alpha + F \cdot \left( X_{r_1} - X_{r_2} \right)$$

where $X_{r_1}$ and $X_{r_2}$ are randomly selected individuals from $X'$; $X_\alpha$ represents the $\alpha$ wolf at this time; and $F$ is the differential weight (scaling factor), a fixed constant used to increase the diversity of the search. A larger $F$ value will lead to a population with higher diversity, while a smaller value will result in faster convergence.

Next, a crossover operation is performed between $X'_i$ and $V_i$ to generate experimental individuals $U_i$, forming a population $U$. For each experimental individual,

$$u_{i,j} = \begin{cases} v_{i,j}, & \mathrm{rand}_j \le CR \ \text{or} \ j = j_{\mathrm{rand}} \\ x'_{i,j}, & \text{otherwise} \end{cases}$$

where $CR$ represents the crossover rate within the range of $[0, 1]$, set to 0.8 here; $\mathrm{rand}_j$ refers to a uniformly distributed random number; and $j_{\mathrm{rand}}$ represents the randomly selected index.

To prevent individuals from going out of bounds, a boundary constraint is applied to the newly generated individuals so that every component stays within the lower and upper boundaries of its parameter.

Then, the greedy selection strategy is used to retain the $N$ best individuals from $X'$ and $U$:

$$X_i(t+1) = \begin{cases} U_i, & f(U_i) \le f(X'_i) \\ X'_i, & \text{otherwise} \end{cases}$$

where $f$ represents the fitness function.

Finally, the algorithm checks whether the maximum number of iterations has been reached. If so, the optimal individual and its fitness are output; otherwise, the position update and the differential-evolution-based population optimization are repeated.
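One differential evolution pass over the population can be sketched as follows. The sketch makes several assumptions that are not stated in the text: the scaling factor `F=0.5` is a placeholder value, out-of-range components are clipped to the bounds, selection is pairwise between each individual and its trial vector, and the sphere function stands in for the validation MSE. The function name `de_step` is hypothetical.

```python
import random


def de_step(population, best, fitness, lower, upper, F=0.5, CR=0.8, rng=None):
    """One DE/best/1 pass: mutation, binomial crossover, clipping, selection."""
    rng = rng or random.Random(0)
    dim = len(best)
    next_population = []
    for i, x in enumerate(population):
        # Two distinct random individuals, both different from the current one.
        others = [k for k in range(len(population)) if k != i]
        r1, r2 = rng.sample(others, 2)
        mutant = [best[j] + F * (population[r1][j] - population[r2][j])
                  for j in range(dim)]
        # Binomial crossover; j_rand guarantees at least one mutant component.
        j_rand = rng.randrange(dim)
        trial = [mutant[j] if (rng.random() <= CR or j == j_rand) else x[j]
                 for j in range(dim)]
        # Assumed boundary handling: clip each component to its bounds.
        trial = [min(max(v, lower[j]), upper[j]) for j, v in enumerate(trial)]
        # Greedy selection: keep the trial only if it is at least as good.
        next_population.append(trial if fitness(trial) <= fitness(x) else x)
    return next_population


def sphere(ind):
    """Stand-in fitness to minimize (hypothetical)."""
    return sum(c * c for c in ind)


rng = random.Random(42)
lower, upper = [-5.0, -5.0], [5.0, 5.0]
pop = [[rng.uniform(-5.0, 5.0) for _ in range(2)] for _ in range(10)]
best = min(pop, key=sphere)
new_pop = de_step(pop, best, sphere, lower, upper, rng=rng)
```

Because of the greedy selection, no individual's fitness can get worse in this step, which is what stabilizes the population after the GWO position update.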

3.4. Parameter Optimization

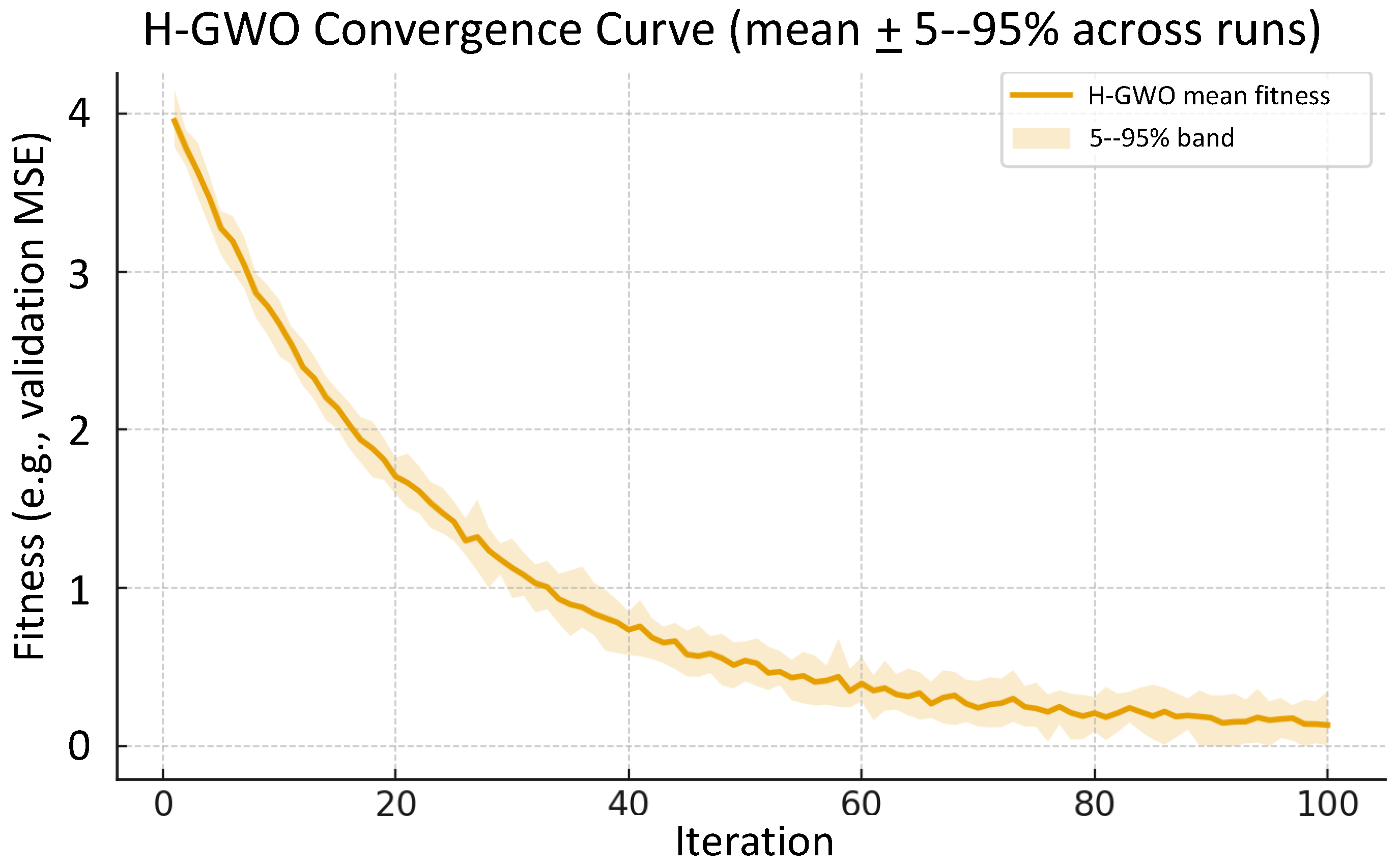

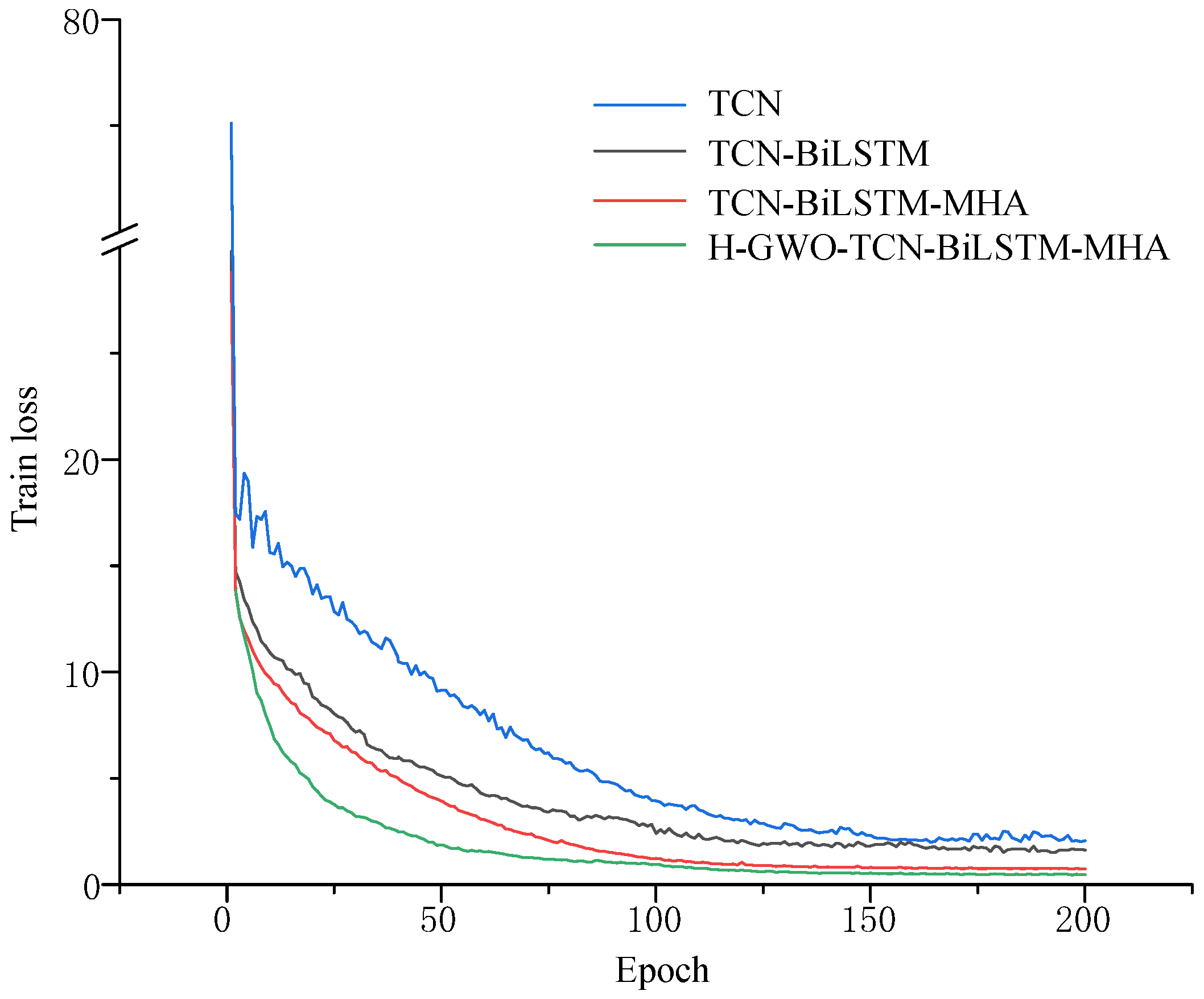

To evaluate the effectiveness of H-GWO in hyperparameter search, the convergence curve of fitness values during the optimization process was plotted, as shown in Figure 6. It can be observed that the fitness value decreases rapidly in the early stage, suggesting that the algorithm is able to quickly locate promising solutions in the search space. As the number of iterations increases, the curve gradually stabilizes, indicating that H-GWO has essentially converged and identified an optimal set of hyperparameters at the global level. This result demonstrates the strong global search ability and stability of the proposed method in the optimization process.

H-GWO optimizes the convolution kernel size of the TCN layers, the number of BiLSTM neurons, the dropout rate, the learning rate, and the batch size for the prediction model. The output data dimension of the TCN layer and the activation function of the BiLSTM layer are selected by manual comparison. After optimization, the optimal parameter combination of the TCN-BiLSTM-MHA model is shown in Table 1.