YOLOv5s-F: An Improved Algorithm for Real-Time Monitoring of Small Targets on Highways

Abstract

1. Introduction

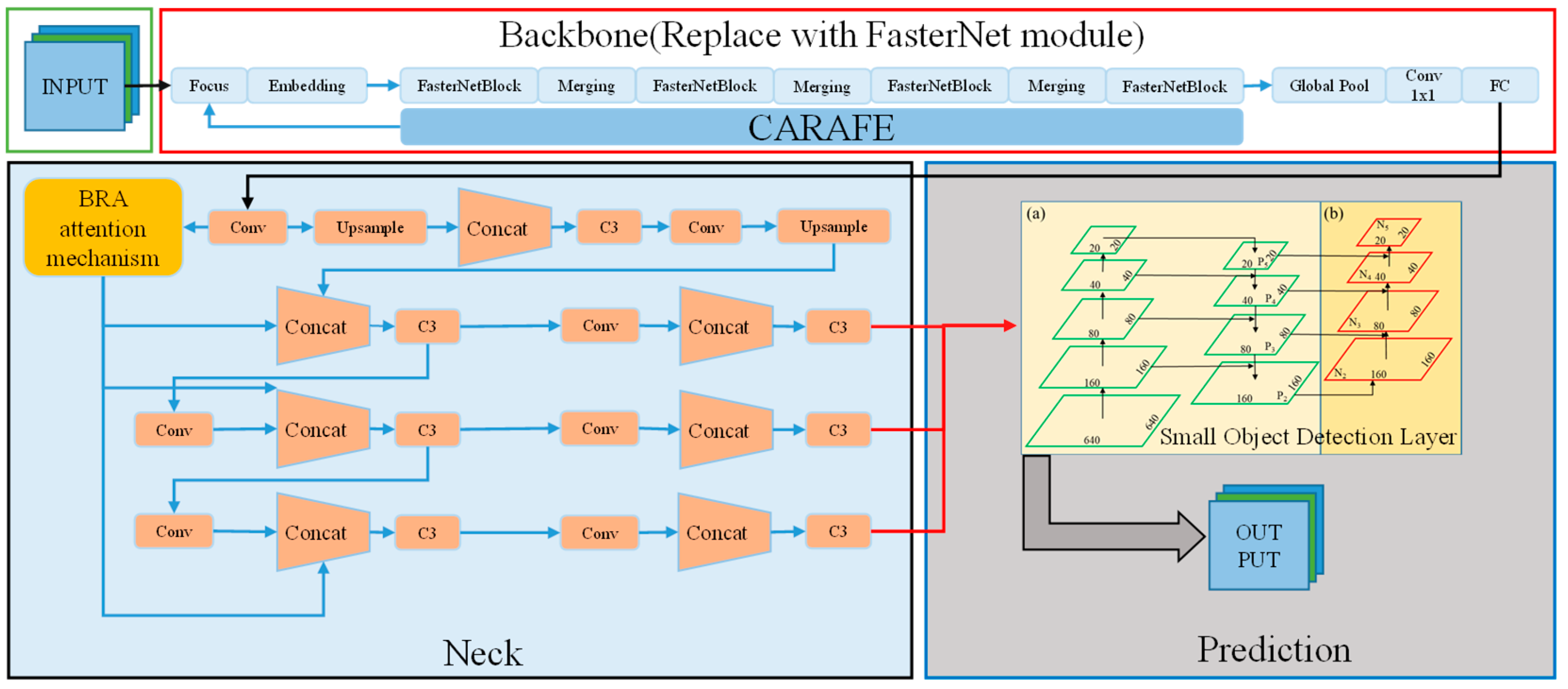

2. Improving the YOLOv5s Object Detection Algorithm

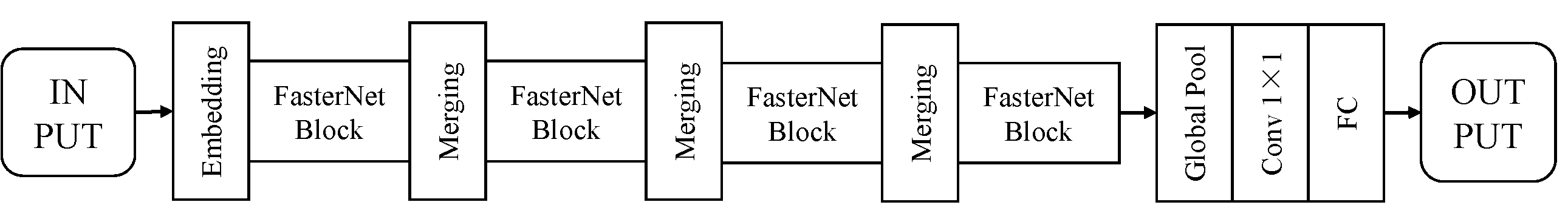

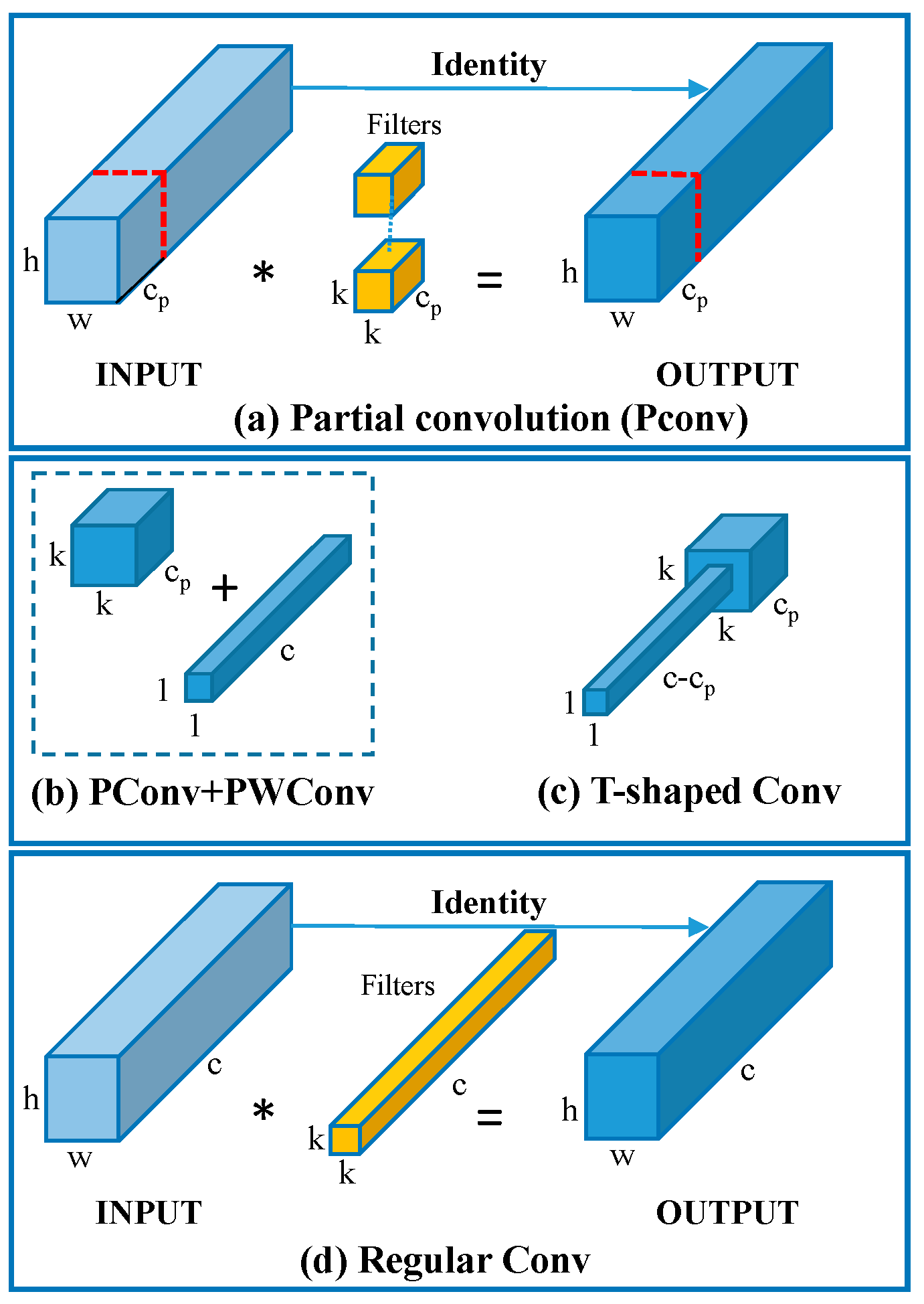

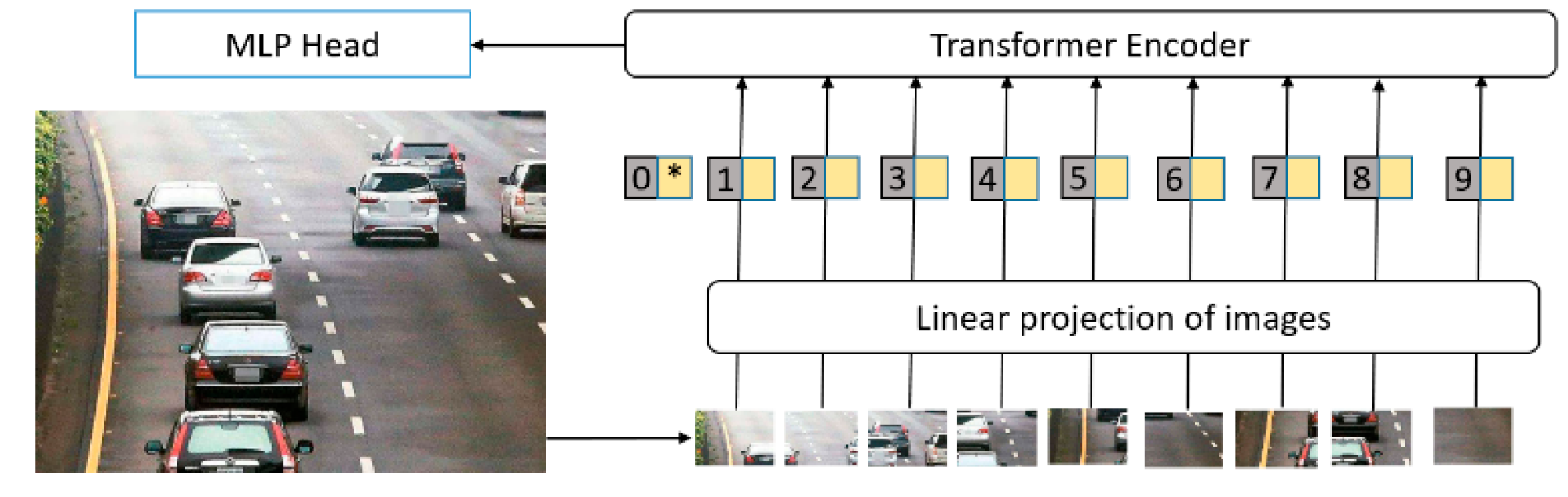

2.1. Design of Lightweight Backbone Network

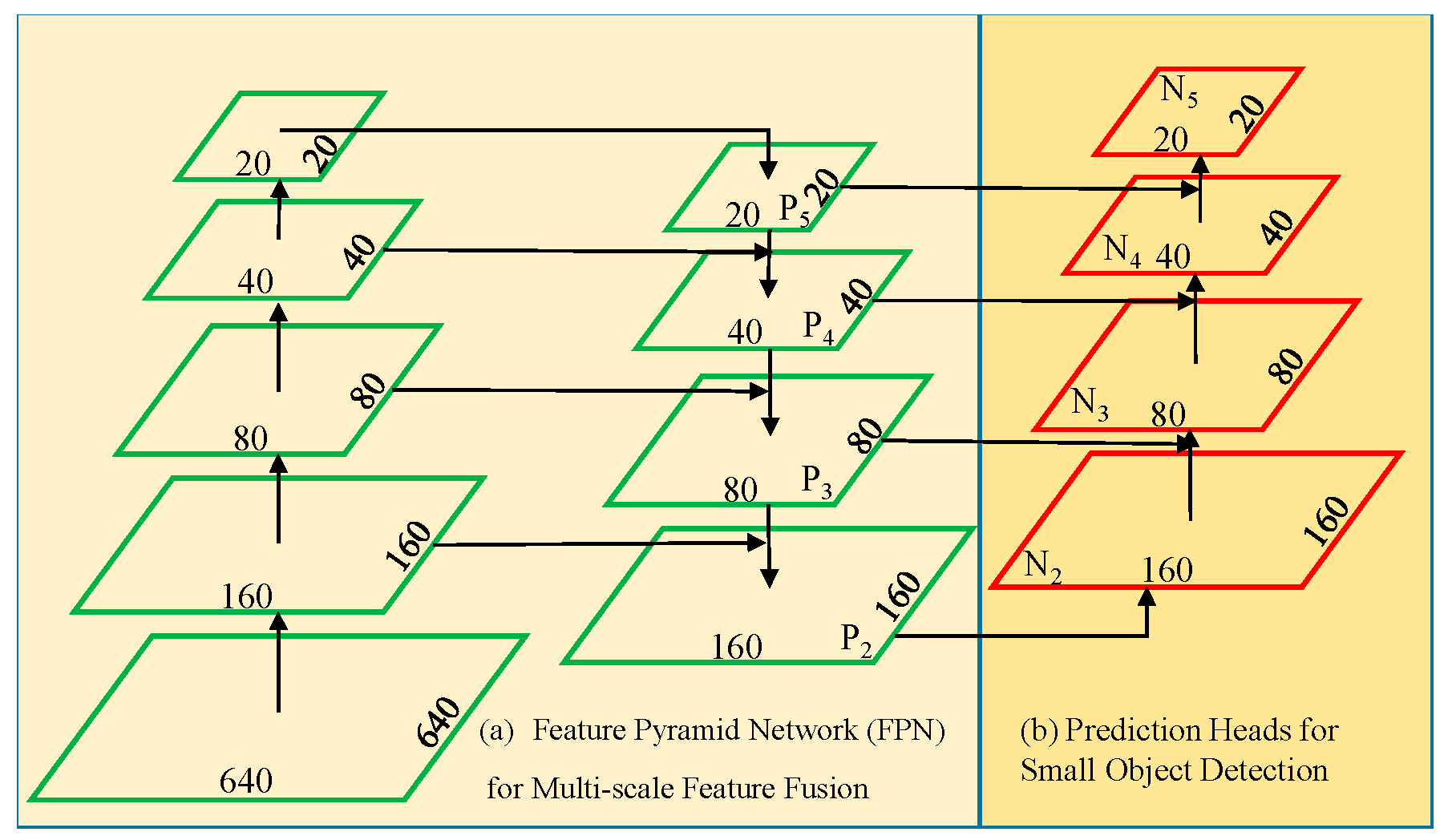

2.2. Design of Multi-Scale Feature Fusion Network

2.2.1. Small-Object Detection Layer

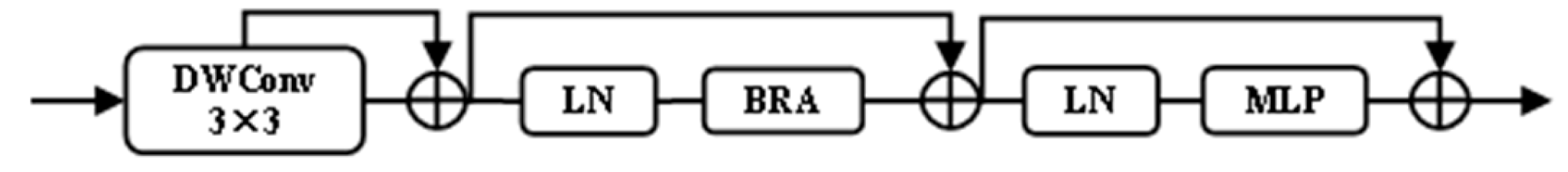

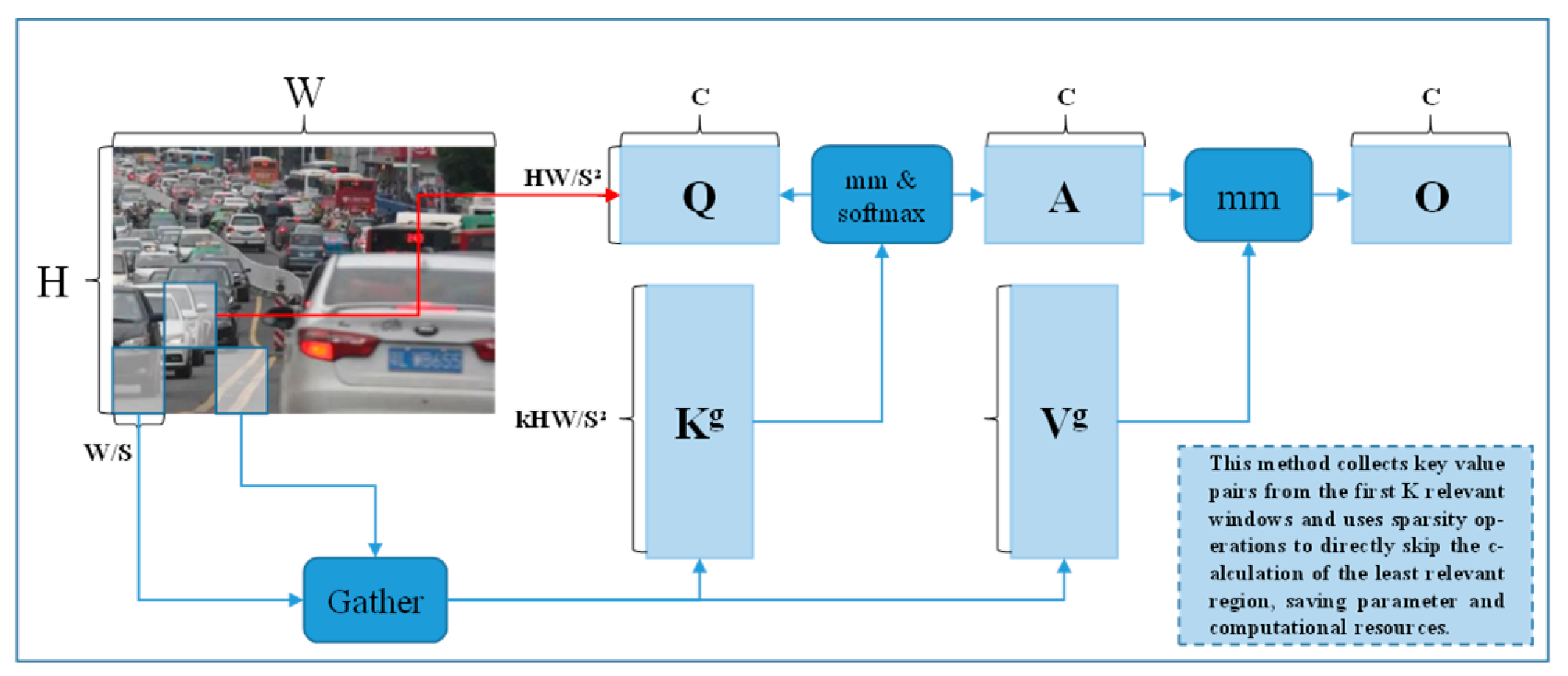

2.2.2. Attention Mechanism

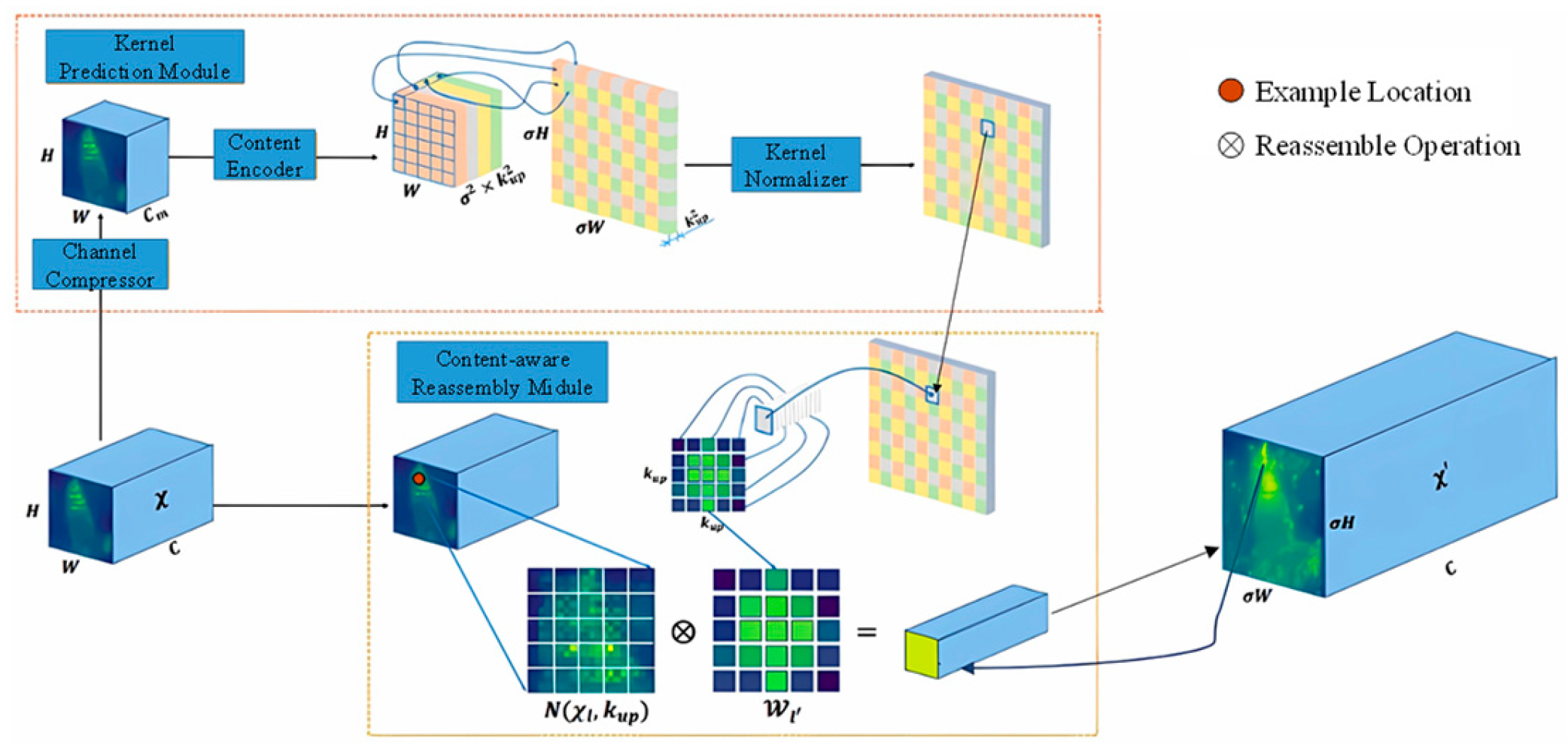

2.2.3. Adding the Lightweight Upsampling Operator CARAFE

2.3. Focal EIoU Loss Function

2.4. Problem–Solution Correspondence

3. Experimental Results and Analysis of YOLOv5s-F

3.1. Experimental Dataset

3.2. Experimental Environment

3.3. Experimental Hyperparameters

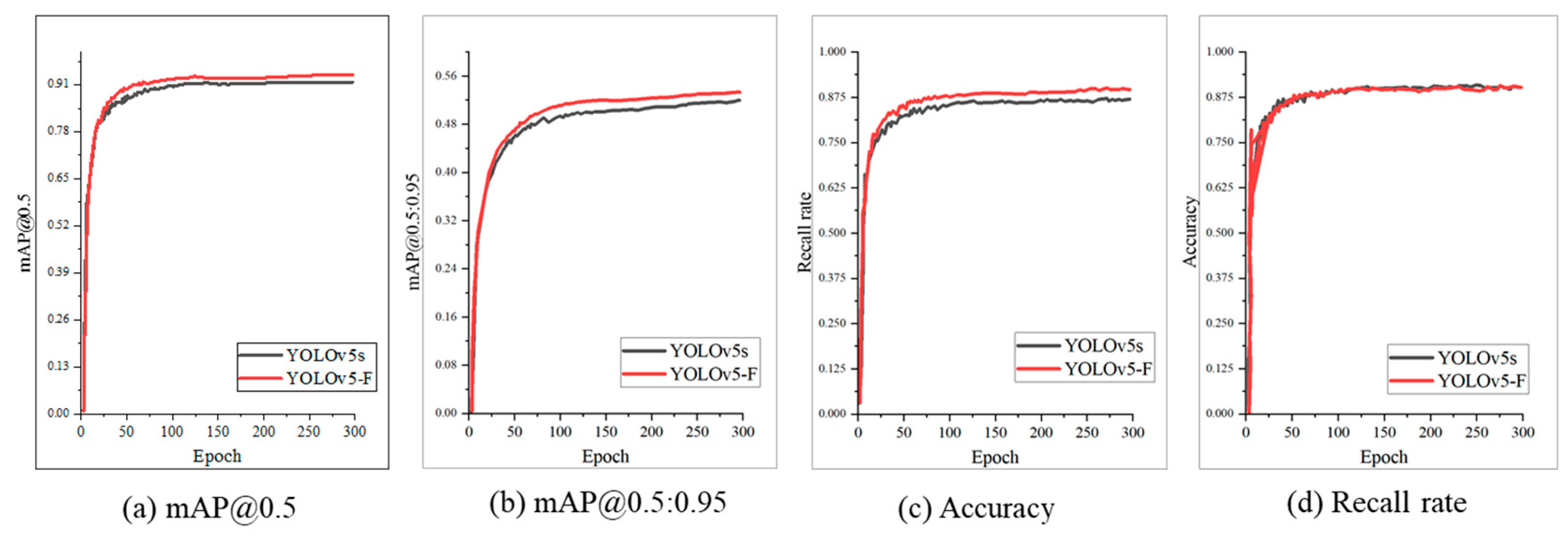

3.4. Experimental Results and Analysis

3.4.1. Comparative Experiments on Modifications to the YOLOv5s Backbone Network

3.4.2. Ablation Experiments on the YOLOv5s-F Network Model

3.4.3. Loss Function Comparison Experiment

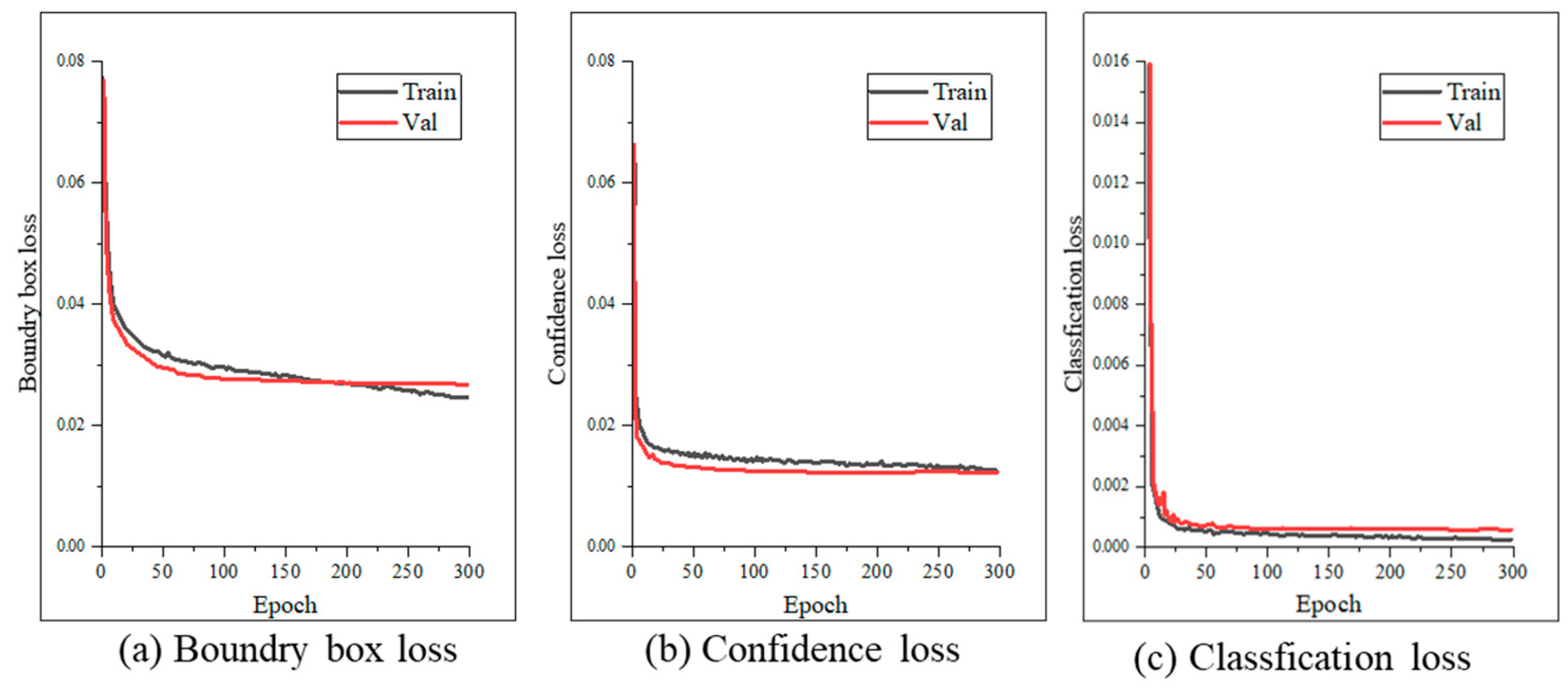

3.4.4. Convergence Speed and Tracking Continuity of the YOLOv5s-F Algorithm

3.4.5. Generalization Ability

- (1) Adaptability: YOLOv5s-F can adapt to different scenarios and tasks, such as drone vision, pedestrian detection, and vehicle detection, without requiring specific adjustments or optimizations for each task.

- (2) Transferability: YOLOv5s-F can effectively perform transfer learning on different datasets, utilizing knowledge from a source dataset to enhance detection performance on a target dataset.

- (3) Robustness: YOLOv5s-F tolerates adverse environmental factors such as lighting variations, occlusions, and background interference, which helps it maintain detection accuracy.

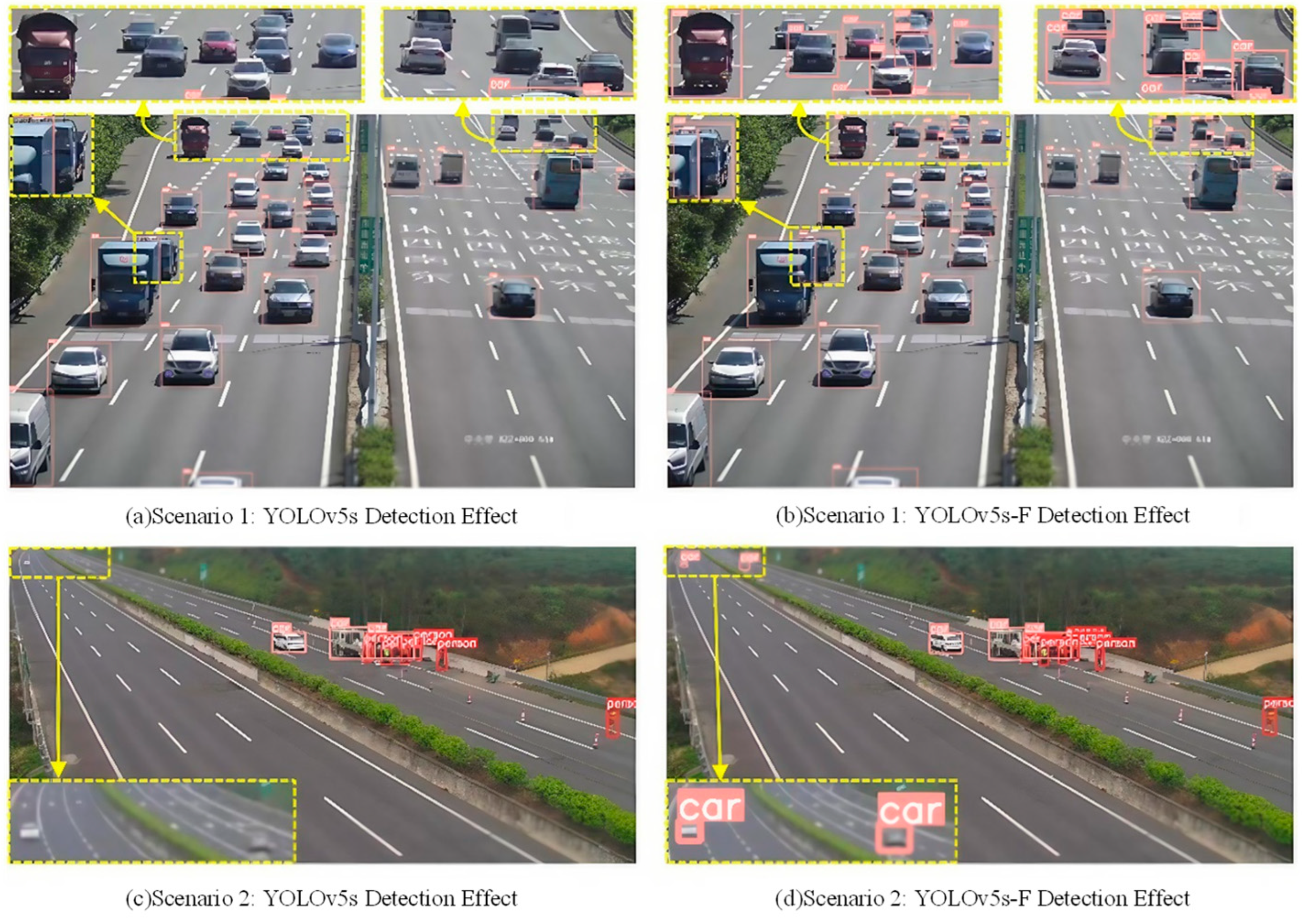

3.4.6. Detection Performance

3.4.7. Inference Performance and Real-Time Validation on Deployment Hardware

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| SIFT | Scale-Invariant Feature Transform |
| HOG | Histogram of Oriented Gradients |
| SVM | Support Vector Machine |
| CNN | Convolutional Neural Network |
| R-CNN | Region-based Convolutional Neural Network |
| YOLO | You Only Look Once |
| SSD | Single Shot MultiBox Detector |
| SNIP | Scale Normalization for Image Pyramids |
| CBS | Conv + BN + SiLU |
| BRA | Bi-level Routing Attention |
| CARAFE | Content-Aware ReAssembly of Features |
| FLOPs | Floating Point Operations |
| AP | Average Precision |
| mAP | mean Average Precision |
| FPS | Frames Per Second |


| Core Research Challenges in Highway Small-Target Detection | Proposed Improvements in YOLOv5s-F | Key Differences from YOLOv5s |
|---|---|---|
| 1. High miss rate for distant small targets (<32 × 32 pixels) due to limited feature retention. | Integration of a dedicated 160 × 160 detection layer, fused with the 80 × 80 layer via skip connections to preserve shallow spatial details of small targets. | YOLOv5s lacks a 160 × 160 layer and relies on only three coarser scales (80 × 80, 40 × 40, 20 × 20), so small-target features are lost in the deeper layers. |
| 2. Bounding box jitter caused by high-speed motion blur, reducing localization stability. | Adoption of Focal EIoU loss, which splits the aspect ratio loss into separate width and height differences and suppresses low-quality boxes to enhance positioning consistency. | YOLOv5s uses CIoU loss, which neither decomposes width/height errors nor suppresses noisy predictions effectively, resulting in more jitter in high-speed scenarios. |
| 3. Trade-off between real-time performance (FPS > 30) and detection accuracy, with lightweight models sacrificing precision. | Synergistic design of the FasterNet backbone (Partial Convolution reduces FLOPs by 10.1%) and BRA (efficient global dependency modeling without excessive computation). | YOLOv5s uses C3 modules with higher computational overhead; its fixed convolutional receptive fields limit global feature capture, so it balances speed and accuracy less effectively. |
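The second row of the table above describes Focal EIoU loss only in words. As a minimal sketch, assuming the formulation of Zhang et al. (EIoU = 1 − IoU + center-distance term + width term + height term, weighted by IoU^γ), the loss for a single pair of boxes could be computed as follows. The function name `focal_eiou_loss`, the `(x1, y1, x2, y2)` box layout, and the default `gamma=0.5` are assumptions made for illustration, not details taken from this paper.

```python
def focal_eiou_loss(pred, target, gamma=0.5, eps=1e-9):
    """Focal-EIoU loss for one pair of boxes given as (x1, y1, x2, y2).

    Illustrative sketch only: the box layout and gamma are assumptions.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Widths and heights of the predicted and ground-truth boxes.
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared center distance, normalized by the squared enclosing diagonal.
    dx = (px1 + px2) / 2 - (tx1 + tx2) / 2
    dy = (py1 + py2) / 2 - (ty1 + ty2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Width and height differences are penalized separately, each
    # normalized by the corresponding side of the enclosing box.
    width_term = (pw - tw) ** 2 / (cw * cw + eps)
    height_term = (ph - th) ** 2 / (ch * ch + eps)

    eiou = 1.0 - iou + center_term + width_term + height_term

    # Focal weighting: boxes with low IoU contribute less to the loss.
    return (iou ** gamma) * eiou


loss_partial = focal_eiou_loss((0, 0, 2, 2), (1, 1, 3, 3))
loss_same = focal_eiou_loss((0, 0, 2, 2), (0, 0, 2, 2))
loss_disjoint = focal_eiou_loss((0, 0, 1, 1), (2, 2, 3, 3))
```

The separate width and height terms correspond to the "splits the aspect ratio loss into width and height differences" wording in the table, and the IoU^γ factor is one way to realize the suppression of low-quality boxes. Note that with this weighting a completely non-overlapping pair receives zero loss.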

| Dataset Composition | Original Scale | Added Samples | Updated Scale | Key Improvement Points |
|---|---|---|---|---|
| Original mixed dataset (COCO, etc.) | 10,947 frames | - | 10,947 frames | Retain basic samples |
| Highway-100K | - | 8000 frames | 8000 frames | Supplement standard samples for high-speed vehicle scenarios |
| Total | 10,947 frames | 8000 frames | 18,947 frames | Increase the proportion of highway vehicle scene samples to 54.2% |

| Component | Configuration |
|---|---|
| CPU | Intel Core i7-11800 |
| Memory | 32 GB |
| Graphics Card | NVIDIA GeForce RTX 2080Ti |
| Operating System | Windows 10 |
| Programming Language | Python 3.9.10 |
| Deep Learning Framework | PyTorch 1.12.1 |
| Integrated Development Environment | PyCharm Community Edition 2022.3.2 |
| CUDA | 12.0 |
| cuDNN | 8.3.2 |

| Hyperparameter | Value |
|---|---|
| Initial learning rate | 0.01 |
| Final learning rate factor (OneCycle; final LR = initial LR × factor) | 0.01 |
| Momentum | 0.937 |
| Weight decay coefficient | 0.0005 |
| Warmup epochs | 3.0 |
| Warmup momentum | 0.8 |
| Warmup initial bias learning rate | 0.1 |
| Bounding box regression loss coefficient | 0.05 |
| Classification loss coefficient | 0.5 |
| Confidence (objectness) loss coefficient | 1.0 |
| Positive-sample weight in the objectness BCE loss | 1.0 |
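The values in the hyperparameter table match the defaults commonly shipped with YOLOv5. A minimal sketch of how they might be expressed as a configuration is given below. The key names (`lr0`, `lrf`, `obj_pw`, and so on) follow the YOLOv5 hyperparameter file convention, and the mapping from table rows to keys is an assumption, as are the helper functions `final_learning_rate` and `weighted_loss`.

```python
# Hypothetical mapping of the table rows to YOLOv5-style hyperparameter keys.
hyp = {
    "lr0": 0.01,             # initial learning rate
    "lrf": 0.01,             # final learning rate factor (final LR = lr0 * lrf)
    "momentum": 0.937,       # SGD momentum
    "weight_decay": 0.0005,  # weight decay coefficient
    "warmup_epochs": 3.0,    # number of warmup epochs
    "warmup_momentum": 0.8,  # momentum at the start of warmup
    "warmup_bias_lr": 0.1,   # bias learning rate at the start of warmup
    "box": 0.05,             # bounding box regression loss coefficient
    "cls": 0.5,              # classification loss coefficient
    "obj": 1.0,              # confidence (objectness) loss coefficient
    "obj_pw": 1.0,           # positive-sample weight in the objectness BCE loss
}


def final_learning_rate(h):
    """Learning rate reached at the end of the schedule."""
    return h["lr0"] * h["lrf"]


def weighted_loss(box_loss, obj_loss, cls_loss, h):
    """Simplified weighted sum of the three loss components.

    Omits the per-layer, per-class, and batch-size scaling that a full
    training loop may apply.
    """
    return h["box"] * box_loss + h["obj"] * obj_loss + h["cls"] * cls_loss


end_lr = final_learning_rate(hyp)
example_total = weighted_loss(1.0, 1.0, 1.0, hyp)
```

Under these assumptions the schedule decays from 0.01 to 0.0001, and the box term is weighted twenty times less than the confidence term.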

| Model | Backbone | AP@0.5 (Pedestrian)/% | AP@0.5 (Vehicle)/% | mAP@0.5/% | FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| YOLOv5s | C3Net | 89.3 | 95.3 | 92.3 | 26.6 | 7.02 | 15.8 |
| YOLOv5s-S2 | ShuffleNetV2 | 86.7 | 92.3 | 90.4 | 48.7 | 3.36 | 9.0 |
| YOLOv5s-F | FasterNet | 88.1 | 94.9 | 91.5 | 46.5 | 4.02 | 9.5 |
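To make the trade-off in the backbone comparison easier to read, the relative changes of the FasterNet variant against the YOLOv5s baseline can be derived directly from the table values. The sketch below does only that arithmetic; the dictionary layout and the helper name `relative_change` are illustrative choices.

```python
def relative_change(baseline, new):
    """Percentage change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


# Values copied from the backbone comparison table.
yolov5s = {"fps": 26.6, "params_m": 7.02, "flops_g": 15.8}
yolov5s_f = {"fps": 46.5, "params_m": 4.02, "flops_g": 9.5}

changes = {
    key: round(relative_change(yolov5s[key], yolov5s_f[key]), 1)
    for key in yolov5s
}

# mAP@0.5 is compared in absolute percentage points, not relatively.
map_delta_points = round(91.5 - 92.3, 1)

for key, value in changes.items():
    print(f"{key}: {value:+.1f}%")
print(f"mAP@0.5: {map_delta_points:+.1f} points")
```

On these numbers the FasterNet backbone runs roughly 75% faster with about 43% fewer parameters and 40% fewer FLOPs, at a cost of 0.8 mAP@0.5 points.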

| Serial Number | Modules Enabled (one √ per module, out of Focal EIoU, BRA, CARAFE, 160 × 160 Head) | AP@0.5 (Pedestrian)/% | AP@0.5 (Vehicle)/% | mAP@0.5/% | mAP@0.5 (Small Target)/% | FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| 1 | none | 88.1 | 94.9 | 91.5 | 88.1 | 46.5 | 4.02 | 9.5 |
| 2 | √ | 88.3 | 95.2 | 91.8 | 88.3 | 45.4 | 4.02 | 9.5 |
| 3 | √ √ | 88.6 | 95.3 | 91.9 | 88.6 | 40.1 | 4.17 | 10.2 |
| 4 | √ √ | 89.2 | 95.4 | 92.3 | 90.0 | 39.0 | 4.25 | 9.9 |
| 5 | √ | 90.1 | 95.4 | 92.7 | 90.1 | 38.6 | 4.40 | 10.9 |
| 6 | √ √ √ | 91.5 | 95.4 | 93.5 | 92.5 | 34.0 | 4.85 | 12.5 |
| 7 | √ √ √ √ | 93.1 | 95.5 | 94.4 | 93.1 | 30.7 | 5.31 | 14.2 |
| YOLOv8 | - | 91.8 | 95.9 | 93.8 | 90.0 | 29.6 | 11.1 | 28.4 |

| Model | mAP@0.5/% | mAP@0.5 (Small Target)/% | FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|
| YOLOv5s-F | 94.4 | 93.1 | 30.7 | 5.31 | 14.2 |
| YOLOv8s | 93.8 | 90.0 | 29.6 | 11.1 | 28.4 |
| PP-YOLOE-s | 93.5 | 89.2 | 32.1 | 7.97 | 17.5 |
| EfficientDet-D2 | 91.2 | 86.5 | 24.3 | 8.10 | 22.6 |

| Loss Function | mAP@0.5 (%) | Small-Target AP@0.5 (%) | FPS | Convergence Epochs | Bounding Box Std Dev | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| IoU | 89.2 | 85.6 | 47.2 | 210 | 1.8 | 5.31 | 14.2 |
| GIoU | 90.1 | 86.3 | 46.8 | 195 | 1.6 | 5.31 | 14.2 |
| DIoU | 90.7 | 87.1 | 46.5 | 180 | 1.5 | 5.31 | 14.2 |
| CIoU | 91.5 | 88.1 | 46.5 | 170 | 1.4 | 5.31 | 14.2 |
| EIoU | 92.0 | 88.7 | 46.2 | 160 | 1.3 | 5.31 | 14.2 |
| Focal EIoU | 92.3 | 89.5 | 45.8 | 150 | 1.2 | 5.31 | 14.2 |

| Loss Function | Center Coordinate Std Dev (Pixels) | IoU Fluctuation (Variance) |
|---|---|---|
| IoU | 3.2 | 0.086 |
| GIoU | 2.8 | 0.072 |
| DIoU | 2.5 | 0.065 |
| CIoU | 2.1 | 0.058 |
| EIoU | 1.8 | 0.051 |
| Focal EIoU | 1.2 | 0.032 |

| Loss Function | Average Overlap Ratio of Consecutive Frames (%) |
|---|---|
| CIoU | 78.3 |
| EIoU | 82.5 |
| Focal EIoU (Ours) | 89.7 |
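The table above reports an average overlap ratio between consecutive frames without defining how it is computed. One plausible reading, assumed here purely for illustration, is the mean IoU between a tracked target's bounding boxes in adjacent frames, expressed as a percentage. The function names `box_iou` and `mean_consecutive_overlap` and the `(x1, y1, x2, y2)` box layout are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_consecutive_overlap(track):
    """Mean IoU (in percent) between boxes of one target in adjacent frames.

    `track` is a list of boxes, one per frame, for a single target.
    """
    pairs = list(zip(track, track[1:]))
    if not pairs:
        raise ValueError("a track needs at least two frames")
    return 100.0 * sum(box_iou(a, b) for a, b in pairs) / len(pairs)


steady = mean_consecutive_overlap([(0, 0, 2, 2), (0, 0, 2, 2), (0, 0, 2, 2)])
shifted = mean_consecutive_overlap([(0, 0, 2, 2), (1, 0, 3, 2)])
```

Under this reading, a detector whose boxes jitter less between frames scores closer to 100%, which is consistent with the ordering CIoU < EIoU < Focal EIoU in the table.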

| Type | YOLOv5s P/% | YOLOv5s R/% | YOLOv5s AP@0.5/% | YOLOv5s-F P/% | YOLOv5s-F R/% | YOLOv5s-F AP@0.5/% |
|---|---|---|---|---|---|---|
| Pedestrian | 48.5 | 39.7 | 40.7 | 56.4 | 41.7 | 45.6 |
| People | 45.2 | 35.6 | 33.5 | 49.8 | 35.2 | 36.4 |
| Bicycle | 29.1 | 16.9 | 13.8 | 29.0 | 16.7 | 14.5 |
| Car | 64.0 | 73.5 | 74.4 | 67.2 | 80.9 | 81.1 |
| Van | 47.5 | 36.9 | 36.8 | 50.7 | 41.8 | 42.2 |
| Truck | 55.3 | 30.9 | 32.2 | 47.0 | 31.6 | 32.3 |
| Tricycle | 40.7 | 23.1 | 19.9 | 44.2 | 25.1 | 24.6 |
| Awning-tricycle | 24.0 | 11.6 | 10.4 | 26.7 | 15.4 | 13.3 |
| Bus | 61.1 | 43.8 | 46.8 | 62.5 | 50.2 | 53.3 |
| Motor | 48.0 | 43.2 | 39.1 | 53.7 | 45.3 | 44.6 |

| Metric | YOLOv5s | YOLOv5s-F |
|---|---|---|
| mAP@0.5/% | 34.8 | 38.8 |
| mAP@0.5:0.95/% | 19.2 | 22.1 |
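The mAP@0.5 values in the table above are the unweighted mean of the ten per-class AP@0.5 values reported for the same models. The short check below reproduces both figures from the per-class numbers; the list and function names are illustrative.

```python
# Per-class AP@0.5 (%) in table order: Pedestrian, People, Bicycle, Car, Van,
# Truck, Tricycle, Awning-tricycle, Bus, Motor.
ap_yolov5s = [40.7, 33.5, 13.8, 74.4, 36.8, 32.2, 19.9, 10.4, 46.8, 39.1]
ap_yolov5s_f = [45.6, 36.4, 14.5, 81.1, 42.2, 32.3, 24.6, 13.3, 53.3, 44.6]


def mean_ap(per_class_ap):
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


map_yolov5s = round(mean_ap(ap_yolov5s), 1)
map_yolov5s_f = round(mean_ap(ap_yolov5s_f), 1)
gain_points = round(mean_ap(ap_yolov5s_f) - mean_ap(ap_yolov5s), 1)

print(map_yolov5s, map_yolov5s_f, gain_points)
```

The two means round to 34.8 and 38.8, matching the table, which corresponds to a gain of about 4.0 percentage points for YOLOv5s-F.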

| Dataset | Model | mAP@0.5 (%) | Small-Target AP@0.5 (%) | FPS |
|---|---|---|---|---|
| VisDrone2019 | YOLOv5s-F | 38.8 | 32.1 | 30.2 |
| Highway-100K | YOLOv5s-F | 92.5 | 89.7 | 30.5 |
| Custom Collected | YOLOv5s-F | 91.8 | 88.3 | 30.1 |

| Hardware Device | Model | Avg Inference Time (ms) | FPS | Peak Memory Usage (GB) | Power Consumption (W, Embedded Only) | Meets Real-Time Requirement (FPS > 30) |
|---|---|---|---|---|---|---|
| NVIDIA RTX 2080Ti | YOLOv5s | 37.6 | 26.6 | 4.2 | - | No |
| NVIDIA RTX 2080Ti | YOLOv8s | 33.8 | 29.6 | 6.8 | - | Near (slightly below 30) |
| NVIDIA RTX 2080Ti | YOLOv5s-F | 32.6 | 30.7 | 3.1 | - | Yes |
| NVIDIA RTX 3090 | YOLOv5s-F | 18.2 | 54.9 | 3.3 | - | Yes (exceeds real-time requirements) |
| NVIDIA Jetson Nano | YOLOv5s | 152.3 | 6.6 | 1.8 | 7.5 | No (suitable for low-frame scenarios) |
| NVIDIA Jetson Nano | YOLOv5s-F | 118.5 | 8.4 | 1.2 | 6.8 | No |
| NVIDIA Jetson Xavier NX | YOLOv5s-F | 35.2 | 28.4 | 2.1 | 15.2 | Near (achievable with quantization) |
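The FPS column in the deployment table follows from the average inference time as FPS = 1000 / latency in milliseconds, and the real-time criterion is FPS > 30. The sketch below recomputes both from the reported latencies; the tuple layout and the helper name `fps_from_latency_ms` are illustrative.

```python
REAL_TIME_FPS = 30.0  # real-time threshold used in the table (FPS > 30)


def fps_from_latency_ms(latency_ms):
    """Frames per second implied by an average per-frame latency."""
    return 1000.0 / latency_ms


# (hardware, model, average inference time in ms), copied from the table.
measurements = [
    ("NVIDIA RTX 2080Ti", "YOLOv5s", 37.6),
    ("NVIDIA RTX 2080Ti", "YOLOv8s", 33.8),
    ("NVIDIA RTX 2080Ti", "YOLOv5s-F", 32.6),
    ("NVIDIA RTX 3090", "YOLOv5s-F", 18.2),
    ("NVIDIA Jetson Nano", "YOLOv5s", 152.3),
    ("NVIDIA Jetson Nano", "YOLOv5s-F", 118.5),
    ("NVIDIA Jetson Xavier NX", "YOLOv5s-F", 35.2),
]

fps_values = [round(fps_from_latency_ms(ms), 1) for _, _, ms in measurements]
real_time = [fps > REAL_TIME_FPS for fps in fps_values]

for (device, model, ms), fps, ok in zip(measurements, fps_values, real_time):
    print(f"{device:<24} {model:<10} {ms:>6.1f} ms  {fps:>5.1f} FPS  real-time: {ok}")
```

Equivalently, the FPS > 30 requirement means the average latency must stay below about 33.3 ms per frame, which only the YOLOv5s-F rows on the RTX 2080Ti and RTX 3090 satisfy.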

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, J.; Geng, G.; Sun, L.; Ji, Z. YOLOv5s-F: An Improved Algorithm for Real-Time Monitoring of Small Targets on Highways. World Electr. Veh. J. 2025, 16, 483. https://doi.org/10.3390/wevj16090483