A Self-Attention-Enhanced 3D Object Detection Algorithm Based on a Voxel Backbone Network

Abstract

1. Introduction

- For objects whose points are corrupted by noise, low-resolution voxels struggle to preserve discriminative features, which degrades detection robustness.

- Traditional 3D convolutions have fixed, local receptive fields. They therefore cannot adaptively capture multi-scale contextual relationships, and modeling long-range dependencies with them requires deeper stacks or larger kernels, which significantly increases computational cost.
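The receptive-field argument can be made concrete with a standard back-of-the-envelope comparison (general facts about stride-1 convolutions and attention, not a derivation taken from this paper). For $L$ stacked stride-1 convolutions with cubic kernel size $k$, the receptive field along each axis grows only linearly with depth, whereas full self-attention over $N$ non-empty voxels with $C$ channels relates every voxel pair in a single layer, at quadratic cost:

```latex
r_L = 1 + L\,(k - 1), \qquad k = 3 \;\Rightarrow\; r_L = 1 + 2L
\quad (\text{e.g. } r_L = 41 \text{ voxels requires } L = 20 \text{ layers})

\mathcal{C}_{\text{conv}} = \mathcal{O}\!\left(N k^{3} C^{2}\right) \text{ per layer},
\qquad
\mathcal{C}_{\text{attn}} = \mathcal{O}\!\left(N^{2} C + N C^{2}\right) \text{ per layer}
```

Strided or dilated convolutions enlarge the receptive field faster, but the field is still fixed once the architecture is chosen.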

2. Related Work

3. Materials and Methods

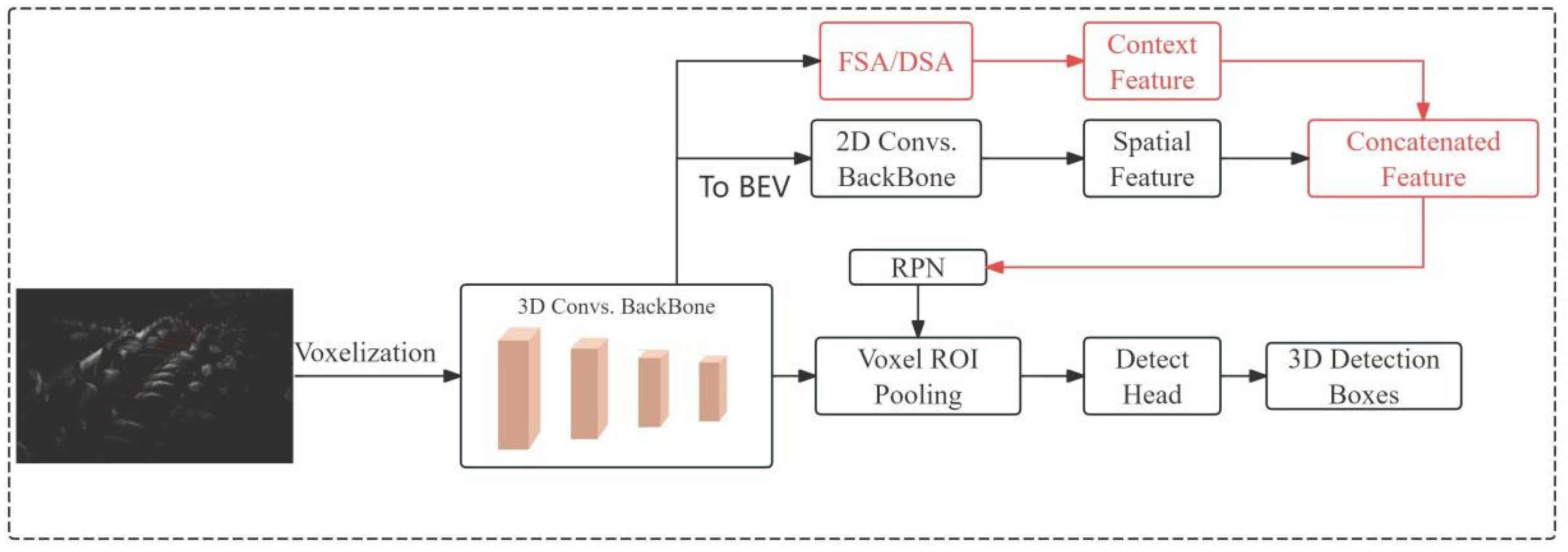

3.1. Overall Architecture

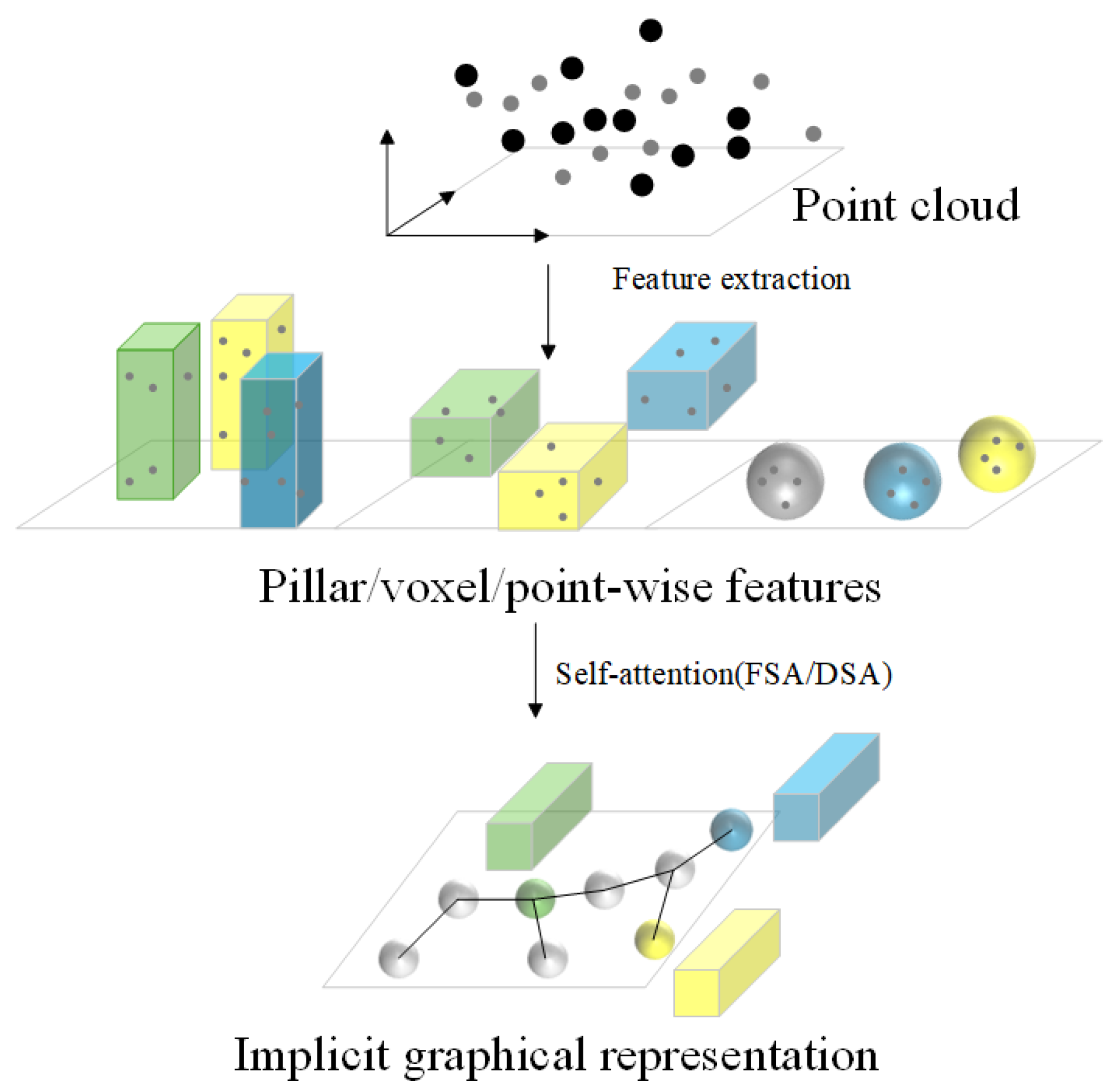

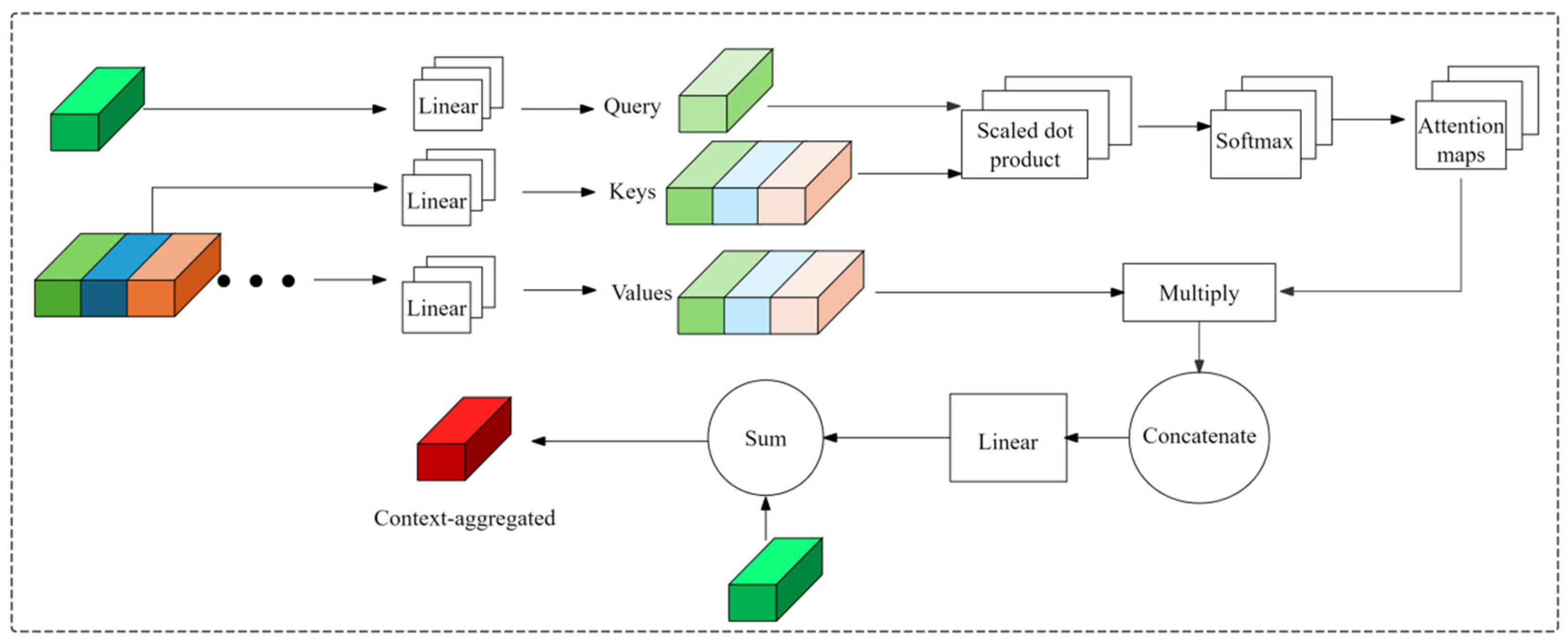

3.2. Self-Attention Module
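The module builds on the attention mechanism of Vaswani et al., and the ablation study varies N_h, presumably the number of attention heads. Assuming the module follows standard multi-head scaled dot-product self-attention over the features of non-empty voxels, a minimal NumPy sketch is shown below. The function names and the random projection matrices are hypothetical placeholders, not the authors' implementation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(feats, w_q, w_k, w_v, w_o, num_heads):
    """Multi-head scaled dot-product self-attention over voxel features.

    feats: (N, C) features of N non-empty voxels.
    w_q, w_k, w_v, w_o: (C, C) projection matrices (hypothetical parameters).
    Returns the (N, C) attended features and the (num_heads, N, N) weights.
    """
    n, c = feats.shape
    assert c % num_heads == 0, "channels must be divisible by num_heads"
    d = c // num_heads
    # Project, then split channels into heads: (num_heads, N, d)
    q = (feats @ w_q).reshape(n, num_heads, d).transpose(1, 0, 2)
    k = (feats @ w_k).reshape(n, num_heads, d).transpose(1, 0, 2)
    v = (feats @ w_v).reshape(n, num_heads, d).transpose(1, 0, 2)
    # Every voxel attends to every other voxel, per head: (num_heads, N, N)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d), axis=-1)
    # Weighted sum of values, then merge heads back into (N, C)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, c)
    return out @ w_o, attn
```

For example, with 6 voxels, 64 channels and `num_heads=4`, the function returns a (6, 64) feature array and a (4, 6, 6) weight tensor whose rows each sum to 1.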

3.3. Full Self-Attention Module

1. Handling Irregular Spatial Distribution:

2. Multi-Scale Geometric Context Integration:

3. Adaptive Density-Aware Processing:
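The first goal listed above, handling an irregular spatial distribution, is commonly addressed by gathering only the non-empty voxels, padding each sample to a common length, and masking the padded entries out of the softmax. The sketch below illustrates that idea under our own simplifying assumptions: a single head, Q/K/V projections omitted, a hypothetical linear positional encoding `w_pos` computed from voxel coordinates, and at least one non-empty voxel per sample. It is an illustration of full (all-pairs) self-attention, not the module described in this paper.

```python
import numpy as np


def masked_full_self_attention(feats, mask, coords, w_pos):
    """Single-head full self-attention over padded sparse-voxel batches.

    feats:  (B, N_max, C) voxel features, zero-padded to N_max per sample.
    mask:   (B, N_max) bool, True where a real (non-empty) voxel exists.
    coords: (B, N_max, 3) voxel centre coordinates.
    w_pos:  (3, C) hypothetical linear positional-encoding weights.
    Returns (B, N_max, C) residual-updated features (padded rows zeroed)
    and the (B, N_max, N_max) attention weights.
    """
    c = feats.shape[-1]
    x = feats + coords @ w_pos                        # inject geometry into features
    scores = x @ x.transpose(0, 2, 1) / np.sqrt(c)    # all voxel pairs: (B, N_max, N_max)
    # Padded keys must get zero weight: set their scores to -inf before softmax
    scores = np.where(mask[:, None, :], scores, -np.inf)
    scores -= scores.max(axis=-1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    context = weights @ x                             # each voxel aggregates all real voxels
    out = feats + context                             # residual connection
    return np.where(mask[..., None], out, 0.0), weights
```

Masking the keys rather than dropping rows keeps the batch as one dense tensor, which is the usual trade-off when the number of non-empty voxels differs between scenes.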

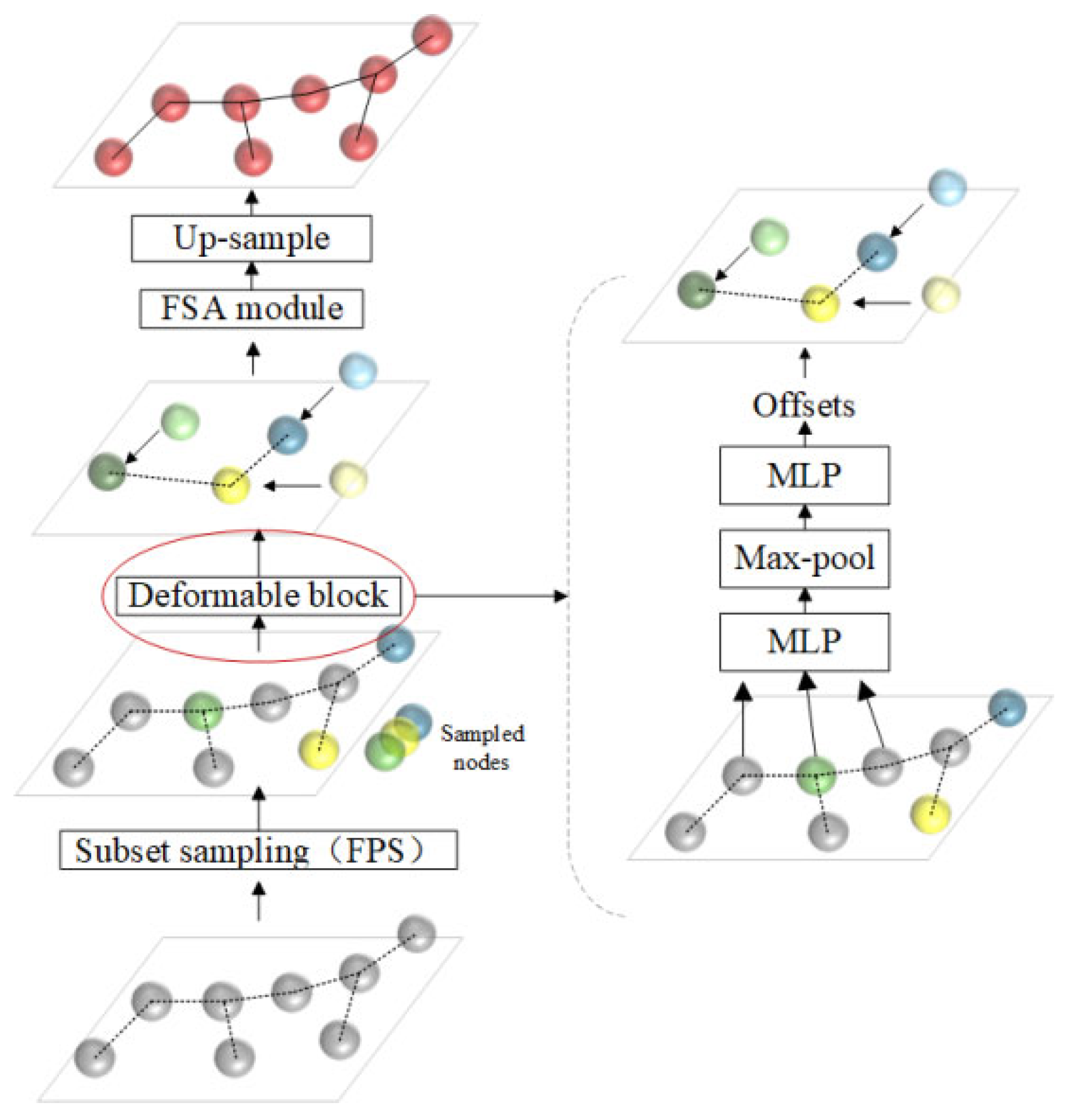

3.4. Deformable Self-Attention Module
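The ablation study varies N_keypts, which suggests that this module operates on a sampled subset of voxels. One common way to choose such a subset is farthest point sampling (FPS). The sketch below assumes FPS and lets every voxel attend only to the K sampled keypoints, which reduces the pairwise cost from O(N^2) to O(NK). The learned offsets that make an attention module "deformable" are deliberately omitted, and all function names are hypothetical.

```python
import numpy as np


def farthest_point_sampling(coords: np.ndarray, k: int, start: int = 0) -> np.ndarray:
    """Greedy FPS: return indices of k points spread out in space.

    coords: (N, 3) voxel centre coordinates.
    """
    n = coords.shape[0]
    k = min(k, n)
    chosen = np.empty(k, dtype=np.int64)
    chosen[0] = start
    # Distance from every point to its nearest already-chosen point
    nearest = np.linalg.norm(coords - coords[start], axis=1)
    for i in range(1, k):
        chosen[i] = int(np.argmax(nearest))           # farthest from the current set
        d = np.linalg.norm(coords - coords[chosen[i]], axis=1)
        nearest = np.minimum(nearest, d)
    return chosen


def keypoint_self_attention(feats: np.ndarray, coords: np.ndarray, num_keypoints: int):
    """Each voxel attends only to FPS-sampled keypoints: O(N*K) instead of O(N^2).

    feats: (N, C) voxel features; coords: (N, 3) voxel coordinates.
    Q/K/V projections and learned deformable offsets are omitted (assumption).
    """
    idx = farthest_point_sampling(coords, num_keypoints)
    keys = feats[idx]                                  # (K, C)
    scores = feats @ keys.T / np.sqrt(feats.shape[1])  # (N, K)
    scores -= scores.max(axis=1, keepdims=True)        # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return feats + weights @ keys, idx                 # residual update
```

Because FPS spreads the keypoints over the scene, a small K can still cover distant regions, which is one plausible reason why accuracy in the keypoint ablation saturates beyond a few thousand keypoints.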

3.5. Datasets

3.6. Implementation Details

4. Results

4.1. Results on KITTI Dataset

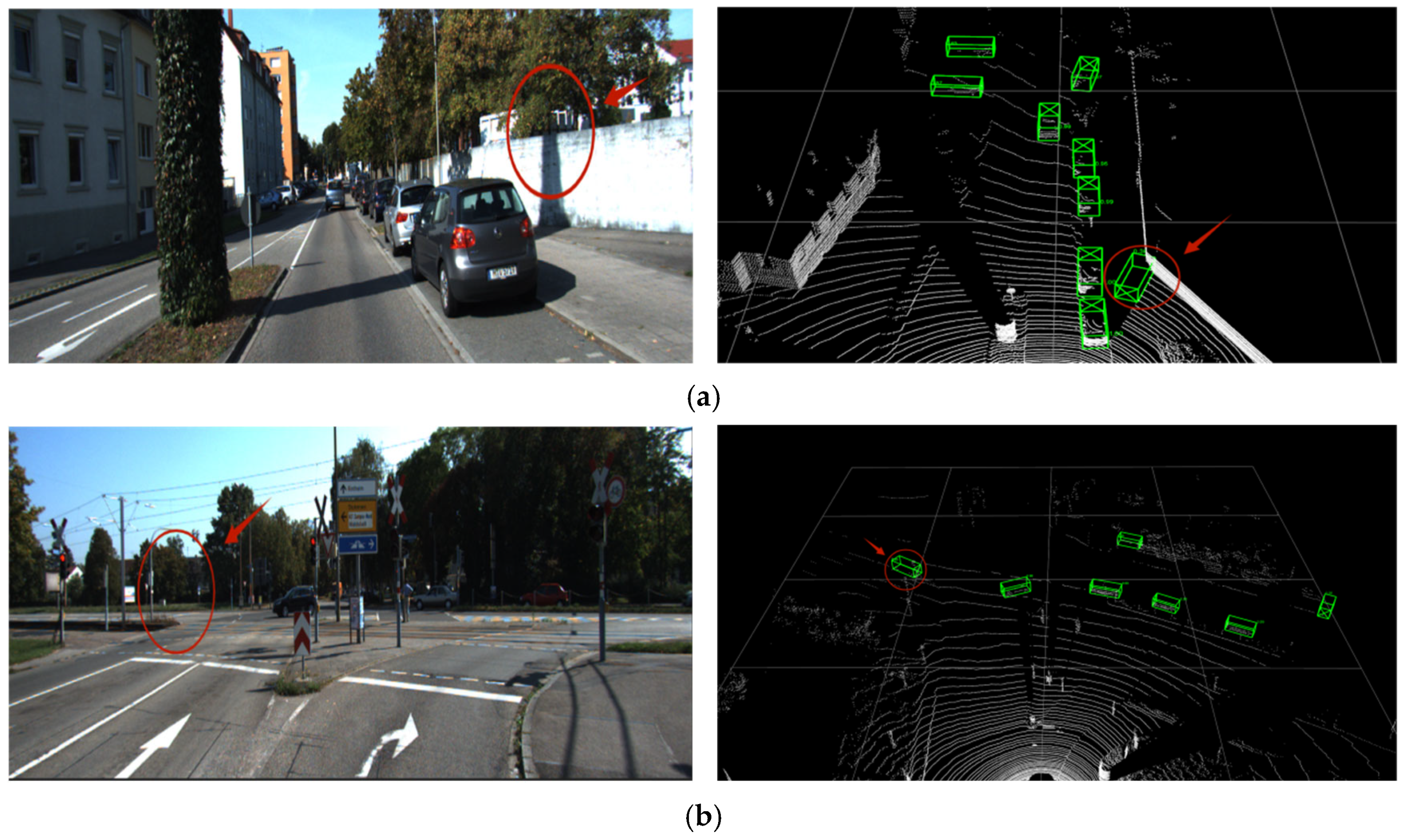

4.2. Visualization

4.3. Results on the Waymo Open Dataset

4.4. Ablation Study

4.5. Individual Module Analysis and Failure Cases

4.5.1. Individual Module Contributions

4.5.2. Failure Case Analysis

4.6. Statistical Significance Analysis

5. Conclusions

5.1. Main Contributions

5.2. Key Findings

5.3. Practical Contributions

5.4. Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A survey on 3D object detection methods for autonomous driving applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

2. Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

3. Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

4. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017.

5. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

6. Chen, Y.; Liu, S.; Shen, X.; Jia, J. Fast Point R-CNN. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9775–9784.

7. Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

8. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

9. Qian, R.; Lai, X.; Li, X. 3D object detection for autonomous driving: A survey. Pattern Recognit. 2022, 130, 108796.

10. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel feature set abstraction for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

11. Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3D object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.

12. Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards high performance voxel-based 3D object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 1201–1209.

13. Huang, B.; Li, Y.; Xie, E.; Liang, F.; Wang, L.; Shen, M.; Liu, F.; Wang, T.; Luo, P.; Shao, J. Fast-BEV: Towards real-time on-vehicle bird’s-eye view perception. arXiv 2023, arXiv:2301.07870.

14. Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. VoxelNeXt: Fully sparse VoxelNet for 3D object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21674–21683.

15. Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2023, 131, 531–551.

16. Xiao, H.; Li, Y.; Du, J.; Mosig, A. CT3D: Tracking microglia motility in 3D using a novel cosegmentation approach. Bioinformatics 2011, 27, 564–571.

17. Chen, Y.; Yang, Z.; Zheng, X.; Chang, Y.; Li, X. Pointformer: A dual perception attention-based network for point cloud classification. In Proceedings of the Asian Conference on Computer Vision, Macau, China, 4–8 December 2022; pp. 3291–3307.

18. Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.L. BEVFusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2774–2781.

19. Guo, Y.; Wang, Y.; Wang, L.; Wang, Z.; Cheng, C. CVCNet: Learning cost volume compression for efficient stereo matching. IEEE Trans. Multimed. 2022, 25, 7786–7799.

20. He, C.; Li, R.; Li, S.; Zhang, L. Voxel set transformer: A set-to-set approach to 3D object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8417–8427.

21. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

22. Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-based 3D single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.

23. Zhang, G.; Fan, L.; He, C.; Lei, Z.; Zhang, Z.; Zhang, L. Voxel Mamba: Group-free state space models for point cloud based 3D object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 81489–81509.

24. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017.

25. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

26. Mei, J.; Zhu, A.Z.; Yan, X.; Yan, H.; Qiao, S.; Chen, L.-C.; Kretzschmar, H. Waymo Open Dataset: Panoramic video panoptic segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 53–72.

27. Lyu, J.; Qi, Y.; You, S.; Meng, J.; Meng, X.; Kodagoda, S.; Wang, S. CaLiJD: Camera and LiDAR Joint Contender for 3D Object Detection. Remote Sens. 2024, 16, 4593.

28. Song, Z.; Zhang, G.; Liu, L.; Lei, Y. RoboFusion: Towards robust multi-modal 3D object detection via SAM. arXiv 2024, arXiv:2401.03907.

29. Noh, J.; Lee, S.; Ham, B. HVPR: Hybrid Voxel-Point Representation for Single-Stage 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14605–14614.

30. Jiang, H.; Ren, J.; Li, A. 3D object detection under urban road traffic scenarios based on dual-layer voxel features fusion augmentation. Sensors 2024, 24, 3267.

| Method | IoU Thresh. | AP3D Easy (%) | AP3D Mod. (%) | AP3D Hard (%) | APBEV Easy (%) | APBEV Mod. (%) | APBEV Hard (%) |
|---|---|---|---|---|---|---|---|
| Voxel-RCNN | 0.7 | 92.27 | 85.16 | 82.48 | 95.34 | 91.17 | 88.68 |
| SA-VoxelRCNN | 0.7 | 93.94 | 86.62 | 83.54 | 95.43 | 91.29 | 90.03 |
| Improvement | 0.7 | +1.67 | +1.46 | +1.06 | +0.09 | +0.12 | +1.35 |

| Method | FPS (Hz) | AP3D Easy (%) | AP3D Mod. (%) | AP3D Hard (%) |
|---|---|---|---|---|
| LiDAR+RGB | | | | |
| MV3D (2017) | - | 74.97 | 63.63 | 54.00 |
| UberATG-MMF (2019) | - | 88.40 | 77.43 | 70.22 |
| 3D-CVF (2019) | - | 88.84 | 79.72 | 72.80 |
| PI-RCNN (2020) | - | 88.27 | 78.53 | 77.75 |
| MAFF-Net (2022) | - | 88.88 | 79.37 | 74.68 |
| CaLiJD [27] (2024) | - | 93.05 | 84.49 | 78.09 |
| RoboFusion [28] (2024) | - | 90.90 | 82.93 | 80.62 |
| LiDAR-only | | | | |
| Point-based: | | | | |
| PointRCNN (2019) | 10.0 | 86.69 | 75.64 | 70.70 |
| STD (2019) | 12.5 | 87.65 | 79.11 | 75.09 |
| 3DSSD (2020) | 26.3 | 88.36 | 79.57 | 74.55 |
| Point-GNN (2020) | - | 87.89 | 78.34 | 72.38 |
| Point–Voxel: | | | | |
| Fast Point-RCNN (2019) | 15.0 | 84.28 | 75.73 | 67.39 |
| PV-RCNN (2020) | 8.9 | 90.25 | 81.43 | 76.82 |
| HVPR [29] (2021) | 36.1 | 91.14 | 82.05 | 79.49 |
| Voxel-based: | | | | |
| VoxelNet (2018) | - | 77.46 | 65.12 | 57.73 |
| SECOND (2018) | 30.4 | 83.34 | 72.55 | 65.82 |
| PointPillars (2019) | 42.4 | 82.58 | 74.31 | 68.99 |
| TANet (2020) | 28.7 | 85.94 | 75.76 | 68.32 |
| SA-SSD (2020) | 25.0 | 88.75 | 79.79 | 74.16 |
| Voxel-RCNN (2021) | 25.2 | 90.90 | 81.62 | 77.06 |
| VoxSeT (2022) | 30.0 | 88.53 | 82.06 | 77.46 |
| SECOND+DL-VFFA [30] (2024) | 11.0 | 87.79 | 79.92 | 61.48 |
| SA-VoxelRCNN | 22.3 | 90.17 | 84.11 | 78.93 |

| Point Category | Mean Attention Weight | Noise Suppression Rate |
|---|---|---|
| Vehicle centers | 0.847 | N/A |
| Tree foliage | 0.078 | 89.21% |
| Traffic signs | 0.092 | 87.64% |
| Sensor artifacts | 0.063 | 92.56% |
| Road surface | 0.231 | N/A |

| Method | Overall | 0–30 m | 30–50 m | 50 m–Inf |
|---|---|---|---|---|
| LEVEL 1 3D mAP (IoU = 0.7): | | | | |
| Voxel-RCNN (2021) | 75.54 | 92.25 | 73.89 | 53.26 |
| SA-VoxelRCNN | 78.23 | 93.51 | 76.51 | 55.13 |
| LEVEL 1 BEV mAP (IoU = 0.7): | | | | |
| Voxel-RCNN (2021) | 88.07 | 97.53 | 87.24 | 77.60 |
| SA-VoxelRCNN | 90.13 | 98.32 | 88.35 | 79.14 |
| LEVEL 2 3D mAP (IoU = 0.7): | | | | |
| Voxel-RCNN (2021) | 66.35 | 91.46 | 67.79 | 40.54 |
| SA-VoxelRCNN | 73.97 | 92.87 | 72.83 | 46.34 |
| LEVEL 2 BEV mAP (IoU = 0.7): | | | | |
| Voxel-RCNN (2021) | 80.87 | 95.66 | 81.15 | 62.89 |
| SA-VoxelRCNN | 82.34 | 96.53 | 82.09 | 63.24 |
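The Waymo table reports absolute mAP values only. The short script below derives the per-distance-bin gain of SA-VoxelRCNN over Voxel-RCNN, in mAP points, from exactly those numbers; it adds no data beyond the table, and the names `RESULTS` and `absolute_gains` are illustrative.

```python
# Values copied from the Waymo table above, in the order
# (Overall, 0-30 m, 30-50 m, 50 m-Inf): (Voxel-RCNN, SA-VoxelRCNN).
BINS = ("Overall", "0-30 m", "30-50 m", "50 m-Inf")
RESULTS = {
    "LEVEL 1 3D mAP": ((75.54, 92.25, 73.89, 53.26), (78.23, 93.51, 76.51, 55.13)),
    "LEVEL 1 BEV mAP": ((88.07, 97.53, 87.24, 77.60), (90.13, 98.32, 88.35, 79.14)),
    "LEVEL 2 3D mAP": ((66.35, 91.46, 67.79, 40.54), (73.97, 92.87, 72.83, 46.34)),
    "LEVEL 2 BEV mAP": ((80.87, 95.66, 81.15, 62.89), (82.34, 96.53, 82.09, 63.24)),
}


def absolute_gains(baseline, ours):
    """Per-bin gain of SA-VoxelRCNN over Voxel-RCNN, in mAP points."""
    return {b: round(o - v, 2) for b, v, o in zip(BINS, baseline, ours)}


for metric, (baseline, ours) in RESULTS.items():
    print(metric, absolute_gains(baseline, ours))
```

For example, the LEVEL 2 3D mAP gain works out to +7.62 points overall and +5.80 points in the 50 m–Inf bin.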

| Method | N_filters | N_h | Mod. AP3D (%) |
|---|---|---|---|
| Baseline | (64, 128, 256) | - | 80.09 |
| | (64, 64, 128) | - | 79.65 |
| (a) | (64, 64, 128) | 2 | 80.17 |
| (b) | (64, 64, 128) | 4 | 80.73 |
| (c) | (64, 128, 256) | 4 | 81.12 |

| Method | N_l | N_keypts | Mod. AP3D (%) |
|---|---|---|---|
| Baseline | - | - | 81.12 |
| (A) | 1 | - | 81.63 |
| | 2 | 512 | 82.27 |
| | 4 | 512 | 81.26 |
| (B) | 2 | 1024 | 82.30 |
| | 2 | 2048 | 82.33 |
| | 2 | 4096 | 82.31 |

| Configuration | FSA | DSA | Mod. AP3D (%) | Hard AP3D (%) |
|---|---|---|---|---|
| Baseline | ✗ | ✗ | 80.09 | 76.85 |
| +FSA only | ✓ | ✗ | 81.34 | 78.12 |
| +DSA only | ✗ | ✓ | 81.78 | 78.46 |
| +FSA+DSA | ✓ | ✓ | 82.33 | 79.23 |
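Reading the moderate and hard columns of the FSA/DSA ablation as gains over the baseline, in AP points, makes the interaction between the two modules explicit: in both columns the combined gain is smaller than the sum of the individual gains, i.e., the contributions are sub-additive.

```latex
\text{Mod.:}\quad
\Delta_{\text{FSA}} = 81.34 - 80.09 = 1.25,\quad
\Delta_{\text{DSA}} = 81.78 - 80.09 = 1.69,\quad
\Delta_{\text{FSA+DSA}} = 82.33 - 80.09 = 2.24 < 1.25 + 1.69 = 2.94

\text{Hard:}\quad
\Delta_{\text{FSA}} = 78.12 - 76.85 = 1.27,\quad
\Delta_{\text{DSA}} = 78.46 - 76.85 = 1.61,\quad
\Delta_{\text{FSA+DSA}} = 79.23 - 76.85 = 2.38 < 1.27 + 1.61 = 2.88
```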

| Scenario | Baseline AP (%) | Our Method AP (%) | Improvement (pp) | Cost Increase |
|---|---|---|---|---|
| Sparse scenes | 65.24 | 65.76 | +0.52 | +15% |
| Dense cluttered | 78.42 | 78.89 | +0.47 | +120% |
| Uniform backgrounds | 82.13 | 82.41 | +0.28 | +8% |
| Normal scenes | 80.09 | 82.33 | +2.24 | +8% |

| Comparison | Mean Improvement (pp) | Standard Deviation (pp) | t-Statistic | p-Value |
|---|---|---|---|---|
| Ours vs. Baseline: | | | | |
| Moderate | +2.49 | 0.31 | 8.03 | <0.001 |
| Hard | +1.87 | 0.26 | 7.19 | <0.001 |
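Statistics of this kind are usually obtained with a paired t-test on per-run scores. The sketch below, which uses only the Python standard library, computes the mean difference, its sample standard deviation, and the paired t-statistic $t = \bar{d} / (s_d / \sqrt{n})$ with $n - 1$ degrees of freedom. It assumes matched runs (for example, identical random seeds) for both methods; the function name and the numbers in the usage lines are hypothetical, not the paper's runs. For the p-value, `scipy.stats.ttest_rel` performs the same paired test and also returns the two-sided p-value.

```python
import math
import statistics


def paired_t_statistic(ours, baseline):
    """Paired t-statistic for per-run AP scores of two methods.

    ours, baseline: equal-length sequences of AP values from matched runs.
    Returns (mean_diff, sd_diff, t, degrees_of_freedom).
    """
    if len(ours) != len(baseline) or len(ours) < 2:
        raise ValueError("need at least two matched runs of equal length")
    diffs = [o - b for o, b in zip(ours, baseline)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)        # sample standard deviation (n - 1)
    t = mean_d / (sd_d / math.sqrt(n))    # divide by the standard error of the mean
    return mean_d, sd_d, t, n - 1


if __name__ == "__main__":
    ours = [82.3, 82.6, 82.1, 82.5, 82.4]      # hypothetical per-run moderate AP
    base = [80.0, 80.2, 79.9, 80.1, 80.3]      # hypothetical baseline runs
    print(paired_t_statistic(ours, base))
```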

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Huang, X. A Self-Attention-Enhanced 3D Object Detection Algorithm Based on a Voxel Backbone Network. World Electr. Veh. J. 2025, 16, 416. https://doi.org/10.3390/wevj16080416

Chicago/Turabian Style: Wang, Zhiyong, and Xiaoci Huang. 2025. "A Self-Attention-Enhanced 3D Object Detection Algorithm Based on a Voxel Backbone Network" World Electric Vehicle Journal 16, no. 8: 416. https://doi.org/10.3390/wevj16080416