The optimization benefits demonstrated thus far focus exclusively on inter-AP movement without considering the operational efficiency of data collection at each access point. This represents a significant analytical gap, as the time and energy required for hovering and data transmission can vary dramatically based on how stations are spatially distributed within the coverage area of each AP.

When a drone visits an individual station directly, it hovers above the station with optimal signal strength and minimal communication time. However, when visiting an access point, the drone positions itself at the AP center and must communicate with all assigned stations within the coverage radius. Stations located near the coverage edge experience weaker signal strength and higher packet loss rates, and they require longer transmission times than stations clustered near the AP center.

In other words, the distribution pattern of stations within each AP coverage area directly affects operational efficiency. A drone requires significantly more time to collect data from an AP whose stations are scattered across the coverage perimeter than from one whose stations cluster near the center.

Up to this point, the proposed optimization addresses only the travel component and ignores the potentially significant energy costs of the hovering and communication phases. This observation highlights the need to develop metrics that evaluate AP quality based on station distribution characteristics and to conduct further experiments.

3.4.1. Access Point Quality Metrics

The evaluation of access point data collection efficiency requires a comprehensive assessment framework that captures how sensor spatial distribution patterns affect operational performance. While the ILP optimization successfully minimizes access point count and ensures coverage, it does not account for the quality of sensor clustering within each coverage area, which directly impacts the communication efficiency and hover time requirements.

To address this gap, we develop a composite quality assessment methodology that integrates multiple geometric and operational characteristics into unified metrics. The notation for all variables used in Equations (1) through (4) is defined in Table 1.

Building upon the components defined in Table 1, we propose a composite AP quality score, defined in Equation (1), that integrates multiple geometric and operational characteristics into a unified assessment. For access point $i$, the score combines three fundamental aspects of station distribution within the coverage area:

$$q_i = 1 - \left( w_1\, C^{\mathrm{comp}}_i + w_2\, C^{\max}_i + w_3\, C^{\mathrm{cons}}_i \right) \tag{1}$$

where $w_1$, $w_2$, and $w_3$ represent the relative importance weights of the three quality dimensions. This formulation treats each component as a penalty factor: higher component values indicate a poorer AP configuration, and the overall quality score approaches 1.0 for optimal configurations. The parametric weighting allows the metric to be reconfigured for unforeseen scenarios in which operational priorities or mission constraints deviate from standard assumptions.

Compactness Component

The compactness component $C^{\mathrm{comp}}_i$, defined in Equation (2), evaluates how efficiently stations utilize the available coverage area:

$$C^{\mathrm{comp}}_i = \frac{\bar{d}_i}{r}, \qquad \bar{d}_i = \frac{1}{n_i} \sum_{s \in S_i} \lVert s - c_i \rVert \tag{2}$$

where $\bar{d}_i$ represents the average distance from the AP center $c_i$ to all $n_i$ assigned stations in the set $S_i$, and $r$ denotes the maximum coverage radius. Values approaching 0 indicate stations clustered near the AP center, enabling optimal communication efficiency, while values near 1.0 indicate stations distributed along the coverage perimeter, requiring maximum transmission power and extended collection times.

Maximum Distance Component

The maximum distance component $C^{\max}_i$, defined in Equation (3), captures the worst-case communication scenario within each AP:

$$C^{\max}_i = \frac{d^{\max}_i}{r}, \qquad d^{\max}_i = \max_{s \in S_i} \lVert s - c_i \rVert \tag{3}$$

where $d^{\max}_i$ represents the maximum distance from the AP center $c_i$ to any assigned station $s$. Low values indicate that all stations remain close to the AP center, while values approaching 1.0 signal that some stations operate at the limit of the communication range, potentially requiring additional transmission attempts and extended hover times.

Consistency Component

The consistency component $C^{\mathrm{cons}}_i$, defined in Equation (4), quantifies the uniformity of the sensor distribution around the AP center:

$$C^{\mathrm{cons}}_i = \frac{\sigma_{d,i}}{r} \tag{4}$$

where $\sigma_{d,i}$ represents the standard deviation of the distances from the AP center to the assigned stations. Values near 0 indicate uniform station clustering with predictable communication requirements, while higher values suggest irregular distribution patterns that complicate mission planning and introduce operational uncertainty. Normalizing by the coverage radius keeps the consistency measure comparable across different AP configurations and deployment scenarios.

This composite metric combines all quality factors into a single score where values approaching 1.0 indicate optimal AP configurations for efficient data collection.

Table 2 summarizes how the quality score of an access point (AP) configuration affects the data collection efficiency and required hover time. Higher scores (closer to 1.0) indicate excellent performance with no additional hover time needed. As the score decreases, collection efficiency degrades, and additional hover time is required to compensate—ranging from 10 to 20% for good scores to over 40% for poor ones. APs with poor quality metrics may experience longer hover times compared to well-clustered configurations. In large networks where our method achieves the greatest travel distance reductions, this hovering penalty could offset some of the energy savings from optimized routing.

The weighted combination of these three components provides a comprehensive assessment of AP quality. The compactness factor receives the primary emphasis (the largest weight, $w_1$) due to its direct impact on communication efficiency, while the maximum distance and consistency factors contribute equally ($w_2 = w_3$) to capture the reliability and predictability of data collection operations.
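The per-AP score of Equations (1) through (4) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the weights are hypothetical placeholders (the text only says that compactness receives the largest weight and the other two components share equal weights), distances are assumed Euclidean, and the population standard deviation is assumed for $\sigma_{d,i}$.

```python
import math
from statistics import mean, pstdev

Point = tuple[float, float]

# Hypothetical weights: the text only says w1 is the largest and w2 == w3.
W_COMPACT, W_MAX, W_CONSIST = 0.5, 0.25, 0.25


def ap_quality(center: Point, stations: list[Point], r: float) -> float:
    """Per-AP quality score q_i = 1 - (w1*C_comp + w2*C_max + w3*C_cons)."""
    if not stations:
        raise ValueError("an access point needs at least one assigned station")
    dists = [math.dist(center, s) for s in stations]  # Euclidean distances to c_i
    c_comp = mean(dists) / r      # Eq. (2): average distance over radius
    c_max = max(dists) / r        # Eq. (3): worst-case distance over radius
    c_cons = pstdev(dists) / r    # Eq. (4): population std of distances over radius
    return 1.0 - (W_COMPACT * c_comp + W_MAX * c_max + W_CONSIST * c_cons)


print(ap_quality((0.0, 0.0), [(0.0, 0.0)], r=100.0))                  # 1.0
print(ap_quality((0.0, 0.0), [(100.0, 0.0), (0.0, 100.0)], r=100.0))  # 0.25
```

A single station located at the AP center scores exactly 1.0 because all three penalties vanish, while two stations on the coverage edge score 0.25 under these placeholder weights.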

3.4.2. Operational Impact-Based Quality Assessment

Building upon the individual AP quality metrics described in the previous section, practical drone deployment scenarios require a more comprehensive evaluation technique that captures the real-world operational consequences of AP placement decisions. While the standard deviation distance, maximum distance, and compactness ratio provide valuable insights into station clustering characteristics, they do not directly translate into actionable information about mission duration, energy consumption, or operational reliability.

The operational impact-based approach shifts focus from geometric properties to mission-critical performance indicators. This methodology recognizes that drone operators are primarily concerned with total mission time and operational predictability rather than abstract clustering metrics. The notation for all variables used in Equations (5) through (10) is defined in Table 3.

Using the mathematical components defined in Table 3, the assessment methodology quantifies how AP quality variations translate into tangible operational outcomes, such as extended hover times, increased communication overhead, and reduced mission reliability.

The operational assessment methodology evaluates each access point using a technique that incorporates both quality-based time penalties and distance-dependent degradation of communication efficiency. For access points whose quality scores fall below the operational threshold $\theta_{\mathrm{op}}$, the system calculates the additional hover time using two complementary approaches. The quality-based penalty applies a linear relationship between quality degradation and time overhead, while the distance-based penalty incorporates realistic communication physics to estimate retransmission and positioning requirements.

The operational extra time for AP $i$ combines these factors as shown in Equation (5), taking the maximum to capture the dominant limiting factor:

$$T^{\mathrm{extra}}_i = \begin{cases} \max\left( T^{q}_i,\ T^{d}_i \right), & q_i < \theta_{\mathrm{op}} \\ 0, & \text{otherwise} \end{cases} \tag{5}$$

where the quality-based time penalty is given by Equation (6):

$$T^{q}_i = \left( 1 - q_i \right) n_i\, t_0 \tag{6}$$

with the variables defined in Table 3: $q_i \in [0, 1]$ is the quality score of AP $i$ calculated in Section 3.4.1 using Equation (1), $n_i$ denotes the number of stations assigned to AP $i$, and $t_0$ represents the baseline collection time per station under optimal conditions.

The distance-based penalty, defined in Equation (7), accounts for communication degradation as sensors operate farther from their assigned access point center, which requires additional time for reliable data transmission:

$$T^{d}_i = n_i\, t_0 \left( \frac{1}{\eta_i} - 1 \right) \tag{7}$$

The communication efficiency factor $\eta_i$ (see Table 3), defined in Equation (8), models distance-dependent wireless signal degradation using the average station distance within the coverage area:

$$\eta_i = \max\left( \eta_{\min},\ 1 - P_i^{\alpha} \right) \tag{8}$$

where $\eta_{\min}$ establishes the minimum communication efficiency, $\alpha$ controls the degradation rate, and $P_i$ is the distance penalty of Equation (9). A non-linear degradation function is employed because real-world wireless communication exhibits non-linear, distance-dependent behavior rather than simple linear degradation: signal strength drops with the square of the distance according to the inverse square law [38], packet loss increases exponentially as the signal weakens [39], and retransmission needs grow rapidly near the communication limits [40]. The selected value of $\alpha$ balances mathematical realism against practical applicability, expecting wireless communication to be fairly robust at moderate distances but to degrade more noticeably as sensors approach the edge of the coverage area. A value of $\alpha = 2$ (a quadratic relationship) would create overly harsh efficiency drops that degrade too quickly with distance, while $\alpha = 1$ would produce linear behavior that fails to capture the rapid, non-linear growth of wireless communication degradation. An intermediate exponent $1 < \alpha < 2$ provides moderate non-linear degradation that aligns with empirical wireless behavior observed in drone-sensor communication scenarios, offering a reasonable approximation of the communication physics without excessive computational complexity.

In Equation (8), the term $1 - P_i^{\alpha}$ converts the penalty into an efficiency (a higher penalty yields a lower efficiency), and the outer maximum with $\eta_{\min} = 0.5$ ensures a minimum efficiency of 50% in the worst case.

The distance penalty $P_i$ (defined in Table 3) is calculated in Equation (9) as

$$P_i = \max\left( 0,\ \frac{\rho_i - \rho_{\mathrm{opt}}}{1 - \rho_{\mathrm{opt}}} \right), \qquad \rho_i = \frac{\bar{d}_i}{r} \tag{9}$$

where $\rho_i$ represents the normalized average distance for AP $i$ and $\rho_{\mathrm{opt}} = 0.3$ defines the optimal distance ratio (all variables are defined in Table 3). This penalty quantifies how far beyond the optimal distance ratio the sensors are positioned, with a value of 0 indicating optimal positioning and values approaching 1 representing maximum deviation.

The reasoning behind Equation (9) is to create a penalty that scales the effect of sensor distribution on communication efficiency appropriately. The goal is to convert the distance ratio, which ranges from 0 to 1, into a distance penalty that equals 0 when the distance ratio is at or below $\rho_{\mathrm{opt}}$ (0.3, the optimal zone), equals 1 when the distance ratio reaches 1.0 (the coverage edge), and scales linearly between these two points.

The transformation involves two steps. First, the origin is shifted by subtracting 0.3 from the distance ratio ($\rho_i - 0.3$), so that the shifted value is 0 when the distance ratio equals 0.3 and 0.7 when it equals 1.0; distance ratios below 0.3 give negative shifted values, which the outer maximum in Equation (9) clamps to 0. Second, the shifted value is scaled to the 0–1 range by dividing by $0.7 = 1.0 - 0.3$, which maps 0 to $0/0.7 = 0$ and 0.7 to $0.7/0.7 = 1$. These values reflect the usable range within the coverage area: the full range from 0.0 to 1.0 spans the coverage radius, the optimal range from 0.0 to 0.3 is the no-penalty zone where sensors operate at maximum communication efficiency, and the penalty range from 0.3 to 1.0 is where graduated penalties are applied according to distance from the access point center. The denominator 0.7 is therefore the width of this penalty-applicable range, the portion of the coverage area where communication efficiency begins to degrade.

Alternative formulations show why this specific form is needed. Using the distance ratio directly as the penalty would incorrectly penalize stations inside the optimal zone from 0 to 0.3, while applying only the shift ($\rho_i - 0.3$) would produce negative values inside the optimal zone and reach only 0.7 at the coverage edge. Neither achieves the desired 0–1 penalty mapping for drone-sensor communication.
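To make the chain of Equations (5) through (9) concrete, the following Python sketch computes the extra hover time of one AP from its quality score, station count, and normalized average distance. It is an illustration under assumptions: it takes $T^{d}_i = n_i t_0 (1/\eta_i - 1)$ for Equation (7), $\eta_i = \max(\eta_{\min}, 1 - P_i^{\alpha})$ for Equation (8), and $T^{q}_i = (1 - q_i) n_i t_0$ for Equation (6). Only $\rho_{\mathrm{opt}} = 0.3$ and $\eta_{\min} = 0.5$ come from the text; the values of $\alpha$, $\theta_{\mathrm{op}}$, and $t_0$ are hypothetical placeholders.

```python
# Constants stated in the text.
RHO_OPT = 0.3   # optimal distance ratio (no-penalty zone), Eq. (9)
ETA_MIN = 0.5   # minimum communication efficiency, Eq. (8)
# Hypothetical placeholders: the text gives no values for these.
ALPHA = 1.5     # degradation exponent, only known to lie between 1 and 2
THETA_OP = 0.8  # operational quality threshold of Eq. (5)
T0 = 2.0        # baseline collection time per station, seconds


def distance_penalty(rho: float) -> float:
    """Eq. (9): 0 for rho <= RHO_OPT, rising linearly to 1 at rho = 1."""
    return max(0.0, (rho - RHO_OPT) / (1.0 - RHO_OPT))


def comm_efficiency(rho: float) -> float:
    """Assumed form of Eq. (8): max(ETA_MIN, 1 - P**ALPHA)."""
    return max(ETA_MIN, 1.0 - distance_penalty(rho) ** ALPHA)


def extra_hover_time(q: float, n: int, rho: float) -> float:
    """Eqs. (5)-(7): extra hover time of one AP (quality q, n stations, ratio rho)."""
    if q >= THETA_OP:
        return 0.0                                            # no penalty above threshold
    t_quality = (1.0 - q) * n * T0                            # Eq. (6), assumed linear form
    t_distance = n * T0 * (1.0 / comm_efficiency(rho) - 1.0)  # Eq. (7), assumed form
    return max(t_quality, t_distance)                         # Eq. (5): dominant factor
```

Evaluating `distance_penalty` at ratios of 0.3, 0.65, and 1.0 gives 0, 0.5, and 1, matching the mapping described above, whereas the shift-only variant would return a negative value (about −0.2) at a ratio of 0.1.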

This operational technique also identifies problematic access points that require special attention during mission planning, as defined in Equation (10). Access points with quality scores $q_i < \theta_{\mathrm{prob}}$ are flagged, as these locations typically experience significant communication challenges and extended collection times. The number of such problematic APs within a flight plan is quantified as

$$N_{\mathrm{prob}} = \sum_{i=1}^{N_{\mathrm{AP}}} \mathbb{1}\left[ q_i < \theta_{\mathrm{prob}} \right] \tag{10}$$

where $N_{\mathrm{AP}}$ denotes the total number of access points in the flight plan, $\mathbb{1}[\cdot]$ is the indicator function, and $\theta_{\mathrm{prob}} = 0.5$ is the problematic quality threshold, chosen based on the values in Table 2. This count of access points with quality scores below the problematic threshold serves as a metric for the reliability and operational risk of the flight plan.

3.4.3. Composite Quality Index

While operational impact assessment provides crucial insights into individual AP performance, mission planners require a unified metric that synthesizes multiple quality dimensions into a single interpretable score. The complexity of modern drone operations involves balancing numerous competing factors, including average performance, worst-case scenarios, operational consistency, time efficiency, and system reliability. Individual metrics, while valuable for detailed analysis, prove insufficient for holistic flight plan evaluation and comparative assessment between alternative routing strategies.

The proposed composite quality index addresses this limitation by integrating five distinct performance dimensions through weighted aggregation. To simplify the notation, let $Q$ denote the composite quality score, and let $Q_1$ through $Q_5$ represent the individual quality components defined in Table 4.

Each component captures a specific aspect of flight plan quality, with weights assigned according to operational priority and impact on mission success. The components are combined as shown in Equation (11):

$$Q = \sum_{k=1}^{5} w_k\, Q_k = w_1 Q_1 + w_2 Q_2 + w_3 Q_3 + w_4 Q_4 + w_5 Q_5 \tag{11}$$

The average quality component $Q_1 = \frac{1}{N_{\mathrm{AP}}} \sum_{i=1}^{N_{\mathrm{AP}}} q_i$ is the arithmetic mean of the individual AP quality scores across the entire flight plan, providing insight into overall system performance. This metric captures the baseline operational efficiency expected during normal mission execution.

The worst-case quality component $Q_2 = \min_i q_i$ is the minimum quality score encountered within the flight plan, highlighting potential operational bottlenecks. This factor ensures that the composite score reflects the practical reality that mission performance often depends on the weakest component rather than on average performance.

The consistency component $Q_3$, defined in Equation (12), quantifies the variation of quality across APs using the standard deviation:

$$Q_3 = 1 - \sigma_q \tag{12}$$

where $\sigma_q$ represents the standard deviation of the AP quality scores $q_i$. High consistency, with $\sigma_q$ approaching zero, indicates predictable operational conditions, while significant variation suggests uneven performance that complicates mission planning and execution. The standard deviation provides an intuitive measure of quality spread, expressed in the same units as the quality scores themselves.

The time efficiency component $Q_4$, defined in Equation (13), relates the baseline collection time to quality-induced delays:

$$Q_4 = \frac{T_{\mathrm{base}}}{T_{\mathrm{base}} + T_{\mathrm{extra}}} \tag{13}$$

where $T_{\mathrm{base}}$ represents the collection time under ideal conditions and $T_{\mathrm{extra}}$ quantifies the additional time required due to quality degradation.

The reliability component $Q_5$, defined in Equation (14), is based on the detection of problematic APs:

$$Q_5 = \exp\left( -2\, \frac{N_{\mathrm{prob}}}{N_{\mathrm{AP}}} \right) \tag{14}$$

where $N_{\mathrm{prob}}$ denotes the number of problematic APs as defined in Equation (10) and $N_{\mathrm{AP}}$ denotes the total number of access points in the flight plan. The factor −2 in the exponent is the decay rate that controls how quickly reliability drops as the proportion of problematic APs increases. To select this coefficient, we analyzed how different decay rates affect reliability scores across varying proportions of problematic access points, as shown in Table 5.

A decay rate of −1 produces penalties that are too gentle, maintaining 90% reliability even when 10% of access points are problematic, which may not adequately reflect the operational risk. Conversely, a decay rate of −3 creates excessively harsh penalties, dropping reliability to 74% with only 10% problematic access points, which could be unnecessarily conservative for most operational scenarios.

The value of −2 provides realistic operational impact modeling by producing reliability scores of 82% with 10% problematic access points, 61% with 25% problematic access points, and 37% with 50% problematic access points. These values align well with practical expectations about how flight plan reliability should degrade as the proportion of problematic access points increases.

The selection of the exponential approach with the exponent set to −2 reflects the balance needed for standard operational contexts where reliability assessment should be neither overly optimistic nor unnecessarily pessimistic. This decay rate ensures that small numbers of problematic access points receive proportionate penalties without completely undermining confidence in the flight plan, while still appropriately reflecting the increased operational risk associated with higher proportions of problematic access points.
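The decay-rate comparison can be reproduced with a short Python sketch of Equation (14). The function name `reliability` is illustrative; each printed row is computed directly from $e^{-k x}$ for decay rates $k = 1, 2, 3$ and problematic fractions $x = 0.10, 0.25, 0.50$.

```python
import math


def reliability(frac_problematic: float, decay: float = 2.0) -> float:
    """Eq. (14): Q5 = exp(-decay * N_prob / N_AP)."""
    return math.exp(-decay * frac_problematic)


for decay in (1.0, 2.0, 3.0):
    row = [f"{reliability(f, decay):.0%}" for f in (0.10, 0.25, 0.50)]
    print(f"decay -{decay:.0f}:", row)
# decay -1: ['90%', '78%', '61%']
# decay -2: ['82%', '61%', '37%']
# decay -3: ['74%', '47%', '22%']
```

The middle row reproduces the 82%, 61%, and 37% values quoted above, and the first column gives the 90% and 74% figures cited for decay rates of −1 and −3.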

The weighting scheme prioritizes average quality ($w_1$) as the primary performance indicator, followed by time efficiency ($w_4$) and worst-case performance ($w_2$) as secondary factors. Consistency ($w_3$) and reliability ($w_5$) add further nuance to the composite assessment while keeping the focus on operational outcomes.

This composite technique enables direct comparison between alternative flight plans and provides mission planners with a unified metric for evaluating overall operational effectiveness. The resulting scores range from 0 to 1 (Table 6), with higher values indicating superior operational characteristics and improved mission efficiency. By consolidating multiple performance dimensions into a single interpretable value, the composite quality index facilitates the systematic evaluation of different access point configurations and routing strategies, allowing operators to select flight plans based on a comprehensive quality assessment rather than on the optimization of individual metrics.
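Equations (10) through (14) can be combined into one function for comparing flight plans. In this Python sketch the five weights are hypothetical placeholders chosen only to respect the ordering described above (average quality first, then time efficiency and worst case, then consistency and reliability), $Q_1$ through $Q_5$ follow the order in which this section presents them, and the sketch assumes $Q_3 = 1 - \sigma_q$ (population standard deviation) and $Q_4 = T_{\mathrm{base}} / (T_{\mathrm{base}} + T_{\mathrm{extra}})$.

```python
import math
from statistics import mean, pstdev

THETA_PROB = 0.5  # problematic-AP threshold, Eq. (10)
# Hypothetical weights (w1..w5) that only respect the ranking in the text.
WEIGHTS = (0.4, 0.2, 0.1, 0.2, 0.1)


def composite_quality(ap_scores, t_base, t_extra, weights=WEIGHTS):
    """Flight-plan score Q = sum_k w_k * Q_k, Eq. (11); returns (Q, (Q1..Q5))."""
    n_ap = len(ap_scores)
    n_prob = sum(1 for q in ap_scores if q < THETA_PROB)  # Eq. (10)
    components = (
        mean(ap_scores),                 # Q1: average AP quality
        min(ap_scores),                  # Q2: worst-case AP quality
        1.0 - pstdev(ap_scores),         # Q3: consistency, assumed 1 - sigma_q
        t_base / (t_base + t_extra),     # Q4: time efficiency, assumed form
        math.exp(-2.0 * n_prob / n_ap),  # Q5: reliability, Eq. (14)
    )
    score = sum(w * c for w, c in zip(weights, components))
    return score, components


# ILP-stage AP scores from Section 3.4.4; t_base = 22.0 s is a hypothetical value.
score, parts = composite_quality([1.00, 0.35, 0.64, 0.51], t_base=22.0, t_extra=11.66)
print(round(score, 3), [round(p, 3) for p in parts])
```

With the ILP-stage AP scores reported in Section 3.4.4 (1.00, 0.35, 0.64, 0.51), the sketch yields $Q_1 = 0.625$, $Q_2 = 0.35$, and $Q_5 = e^{-0.5} \approx 0.61$ regardless of the weights; the overall score depends on the placeholder weights and on $T_{\mathrm{base}}$, so it is not expected to reproduce the 0.62 reported in Table 7.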

3.4.4. Quality-Based Post-Processing Enhancement

While the composite quality index provides a comprehensive technique for evaluating flight plan effectiveness, the practical application of ILP-based access point placement reveals inherent limitations that can significantly impact operational efficiency. To demonstrate these challenges and their resolution, an examination of the example configuration presented in

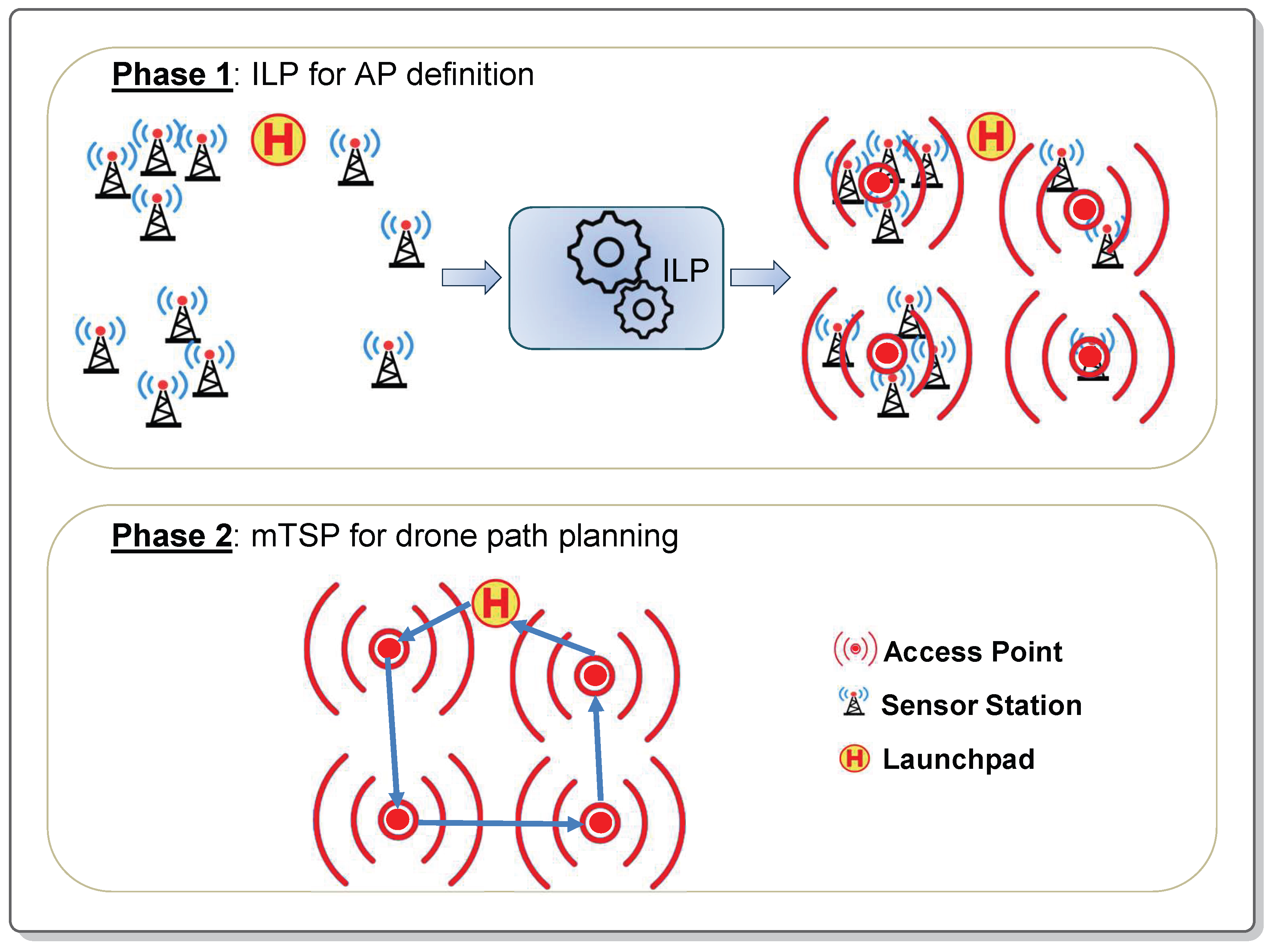

Figure 6 is conducted, which illustrates an 11-station deployment scenario processed through our two-phase optimization approach.

Figure 6a presents the initial distribution of 11 sensor stations across the operational area. Following ILP optimization for access point placement, Figure 6b displays the resulting four access points with their associated coverage areas.

Figure 6c reproduces the same configuration using color coding to delineate the access point assignments and their respective station allocations. Upon closer examination of this ILP-generated solution in Figure 6d, a critical operational challenge regarding station-to-access-point assignments becomes apparent.

The ILP formulation, while successfully minimizing the total number of access points, does not guarantee optimal station-to-AP assignments from a communication efficiency perspective. Stations may be assigned to access points that satisfy coverage constraints but are not necessarily the nearest available option. This phenomenon occurs because the ILP objective function prioritizes minimizing access point count over optimizing individual station assignment distances. Consequently, some stations operate closer to the communication range limits of their assigned access points rather than benefiting from proximity to potentially closer alternatives.

Applying the quality assessment (Equation (11)) to the ILP-generated configuration reveals the operational impact of these suboptimal assignments (Figure 6c, Table 7). The analysis yields a composite quality score of 0.62, indicating fair operational performance with room for significant improvement. More critically, the predicted extra hover time reaches 11.66 s, a substantial operational overhead that directly affects mission efficiency and energy consumption.

The detailed access point analysis exposes considerable quality variation across the four AP locations. AP1 achieves perfect quality (1.00) due to its single assigned station, while AP2 demonstrates poor performance (0.35) resulting from four stations distributed across varying distances from the access point center. AP3 and AP4 exhibit moderate quality scores of 0.64 and 0.51, respectively, indicating mixed operational characteristics that contribute to the overall system inefficiency.

This quality degradation stems directly from the ILP constraint structure, which ensures coverage and minimizes access point count but does not incorporate distance optimization in station assignments. While the ILP solution successfully maintains the minimum number of access points required for complete coverage, the resulting station distribution patterns create communication challenges that translate into extended collection times and reduced operational efficiency.

To address this limitation, an approach is proposed that involves post-processing the ILP-generated access point locations to reassign stations to their nearest available access points while preserving the optimal access point count achieved through integer programming. Algorithm 2 implements this enhancement strategy by maintaining the ILP-determined access point positions but redistributing station assignments based on proximity criteria rather than the original ILP assignment constraints.

| Algorithm 2 Assign sensor station locations to the nearest access point. |
|:---|
| Require: list of station coordinates $S$, list of access point centers $C$, coverage radius $r$ |
| Ensure: list of access points, each with a center and its assigned stations |
| 1: Initialize empty map $M$ ▹ center → access point |
| 2: for all $c \in C$ do |
| 3: $M[c] \leftarrow$ new access point with center $c$ and radius $r$ |
| 4: end for |
| 5: for all $s \in S$ do |
| 6: $V \leftarrow \{ c \in C : \lVert s - c \rVert \le r \}$ ▹ Valid centers within radius |
| 7: if $V \neq \emptyset$ then |
| 8: $c^{*} \leftarrow \arg\min_{c \in V} \lVert s - c \rVert$ ▹ Nearest center |
| 9: Add $s$ to $M[c^{*}]$ |
| 10: end if |
| 11: end for |
| 12: $A \leftarrow$ list of access points in $M$; return $A$ |

The post-processing algorithm iterates through all station locations, calculating distances to each available access point within communication range, and assigns each station to the nearest feasible option. This approach preserves the fundamental optimization achievement of minimizing access point count while improving the geometric distribution of stations around their respective collection points.
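A direct Python transcription of Algorithm 2 follows as a sketch. The `AccessPoint` dataclass and the `assign_to_nearest` function are hypothetical names; distances are assumed Euclidean, centers must be hashable coordinate tuples, ties go to the first center in the input list, and stations outside every coverage radius are left unassigned, as in line 7 of the algorithm.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float]


@dataclass
class AccessPoint:
    """Hypothetical container for one AP center and its assigned stations."""
    center: Point
    radius: float
    stations: list[Point] = field(default_factory=list)


def assign_to_nearest(stations: list[Point], centers: list[Point], r: float) -> list[AccessPoint]:
    """Algorithm 2: attach each station to the nearest AP center within radius r."""
    aps = {c: AccessPoint(c, r) for c in centers}               # lines 1-4
    for s in stations:                                          # line 5
        valid = [c for c in centers if math.dist(s, c) <= r]    # line 6: valid centers
        if valid:                                               # line 7
            nearest = min(valid, key=lambda c: math.dist(s, c)) # line 8 (first wins ties)
            aps[nearest].stations.append(s)                     # line 9
    return list(aps.values())                                   # line 12


for ap in assign_to_nearest([(3.0, 0.0), (6.0, 0.0), (20.0, 0.0)],
                            [(0.0, 0.0), (10.0, 0.0)], r=8.0):
    print(ap.center, ap.stations)
# (0.0, 0.0) [(3.0, 0.0)]
# (10.0, 0.0) [(6.0, 0.0)]
```

The returned list always has one entry per input center, so this post-processing never adds access points; since the ILP solution covers every station, the unassigned case in the toy example (the station at (20, 0)) does not arise in practice.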

Reapplying the quality assessment metrics (Equation (11)) after Algorithm 2 post-processing demonstrates substantial operational improvements (Figure 6d, Table 8). The composite quality score increases from 0.62 to 0.76, a significant enhancement in overall flight plan effectiveness. More importantly, the predicted extra hover time decreases from 11.66 s to 5.77 s, a reduction of approximately 50% in operational overhead achieved through improved station-to-access-point assignments.

The redistributed access point analysis reveals more balanced quality characteristics across all four AP locations. AP1 now serves three well-distributed stations with reasonable quality (0.67), while AP2 achieves similar effectiveness (0.65) with its two assigned stations. AP3 accommodates four stations with moderate quality (0.51), and AP4 demonstrates good performance (0.75) with its two assigned stations. This more uniform quality distribution improves operational predictability and reduces mission complexity.

The post-processing approach effectively addresses the limitation inherent in pure ILP formulations while maintaining the fundamental optimization objective of minimizing access point count. By decoupling the access point placement problem from the station assignment optimization, this two-stage approach achieves superior operational characteristics without compromising the mathematical guarantees provided by integer linear programming for coverage and access point minimization.