A Review of Lithium-Ion Battery State of Charge Estimation Methods Based on Machine Learning

Abstract

1. Introduction

- The limitations of traditional SOC estimation methods are summarized. The advantages and necessity of machine-learning SOC estimation are presented.

- The datasets commonly used by researchers, and the methods used to process them, are summarized.

- The models of SOC estimation using traditional neural networks and deep neural networks are introduced, and the advantages and disadvantages of various models are compared.

- The impact of model hyperparameter settings and tuning methods on model performance is discussed. Factors affecting model performance are explored, and reference methods for model validation are established.

- The research needs, challenges faced, and areas requiring improvement for machine-learning-based SOC estimation are clarified.

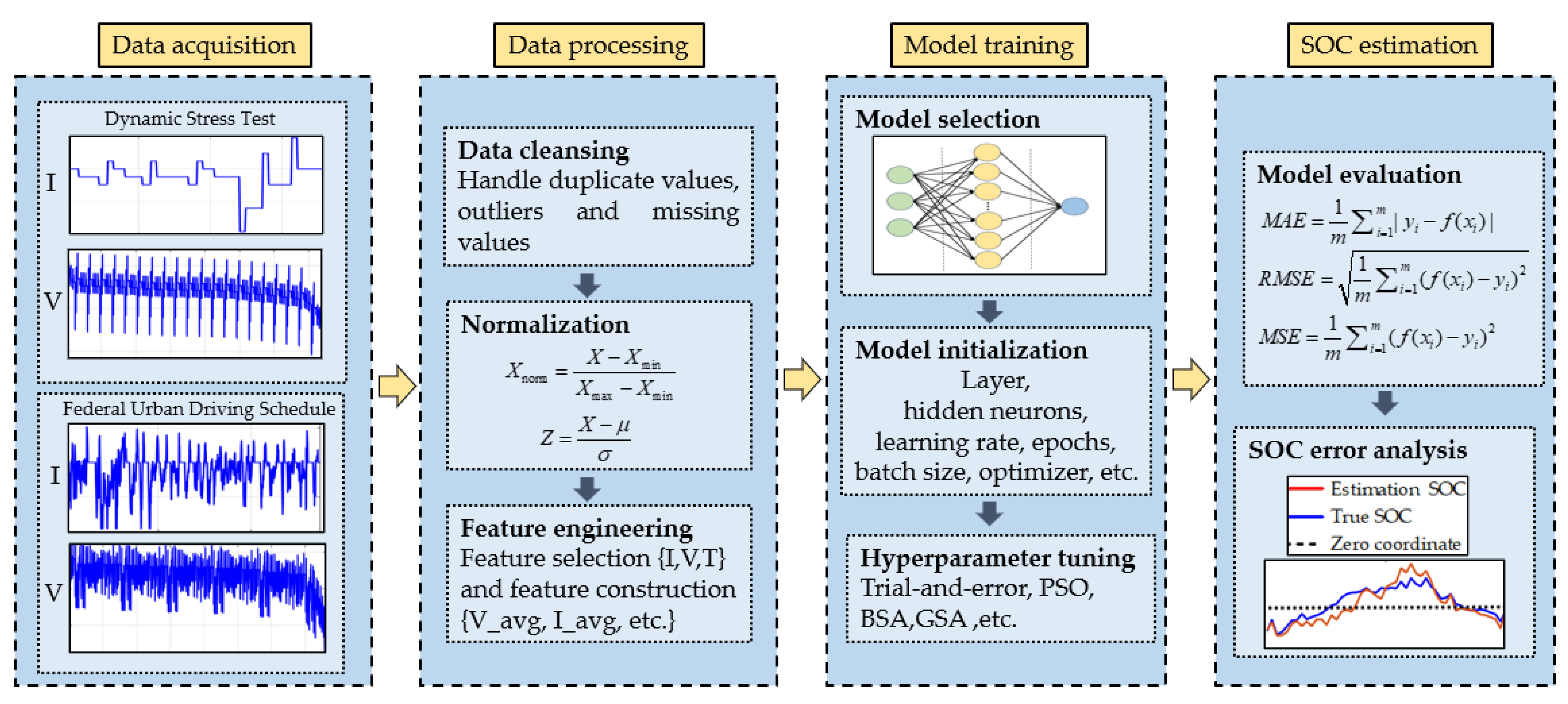

2. Methods

3. Data Collection and Preparation

3.1. Data Collection

3.2. Data Preparation

4. Model Selection and Training

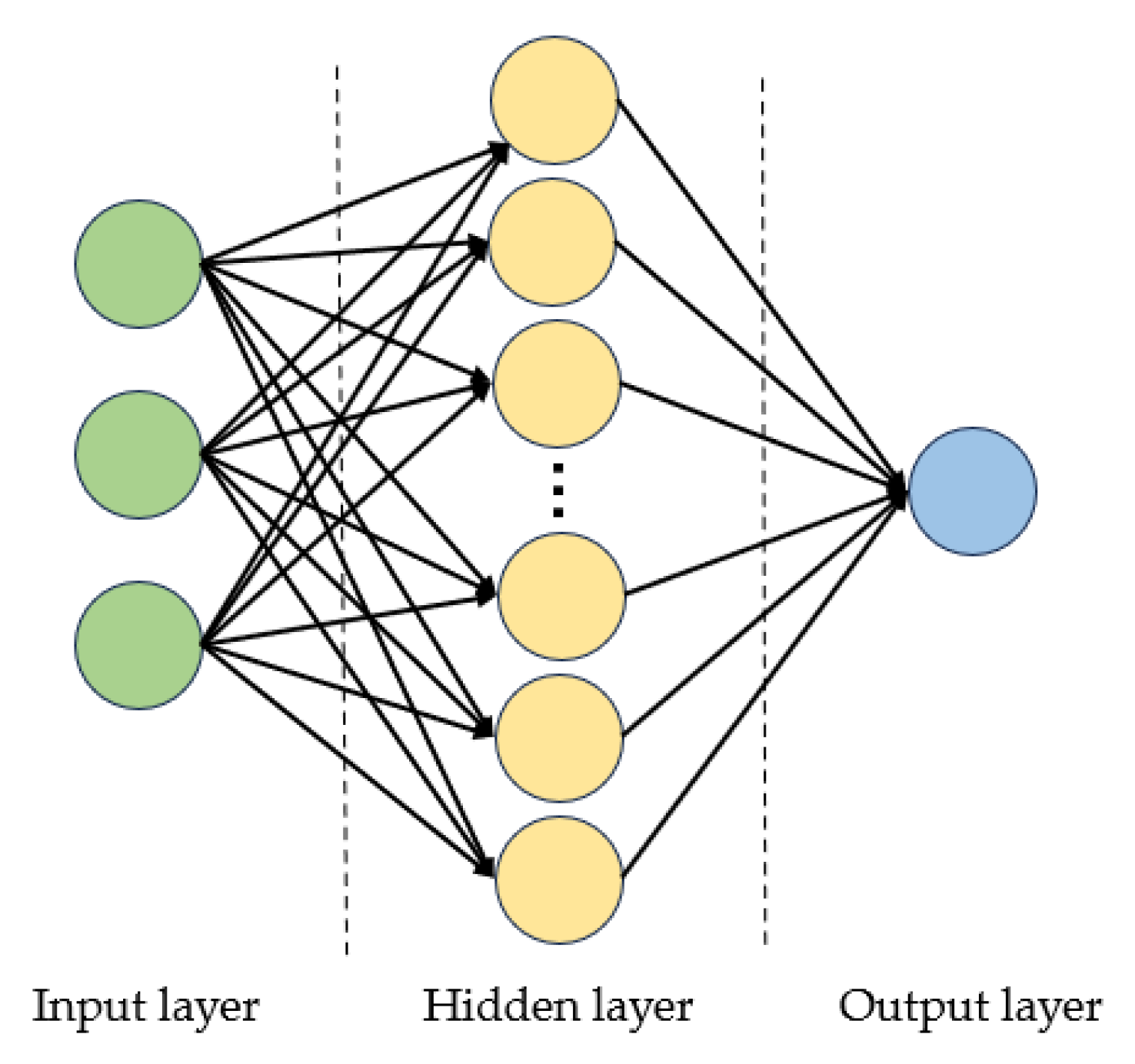

4.1. Artificial Neural Networks

4.1.1. BPNN Network

4.1.2. RBFNN Network

4.1.3. ELM and WNN Networks

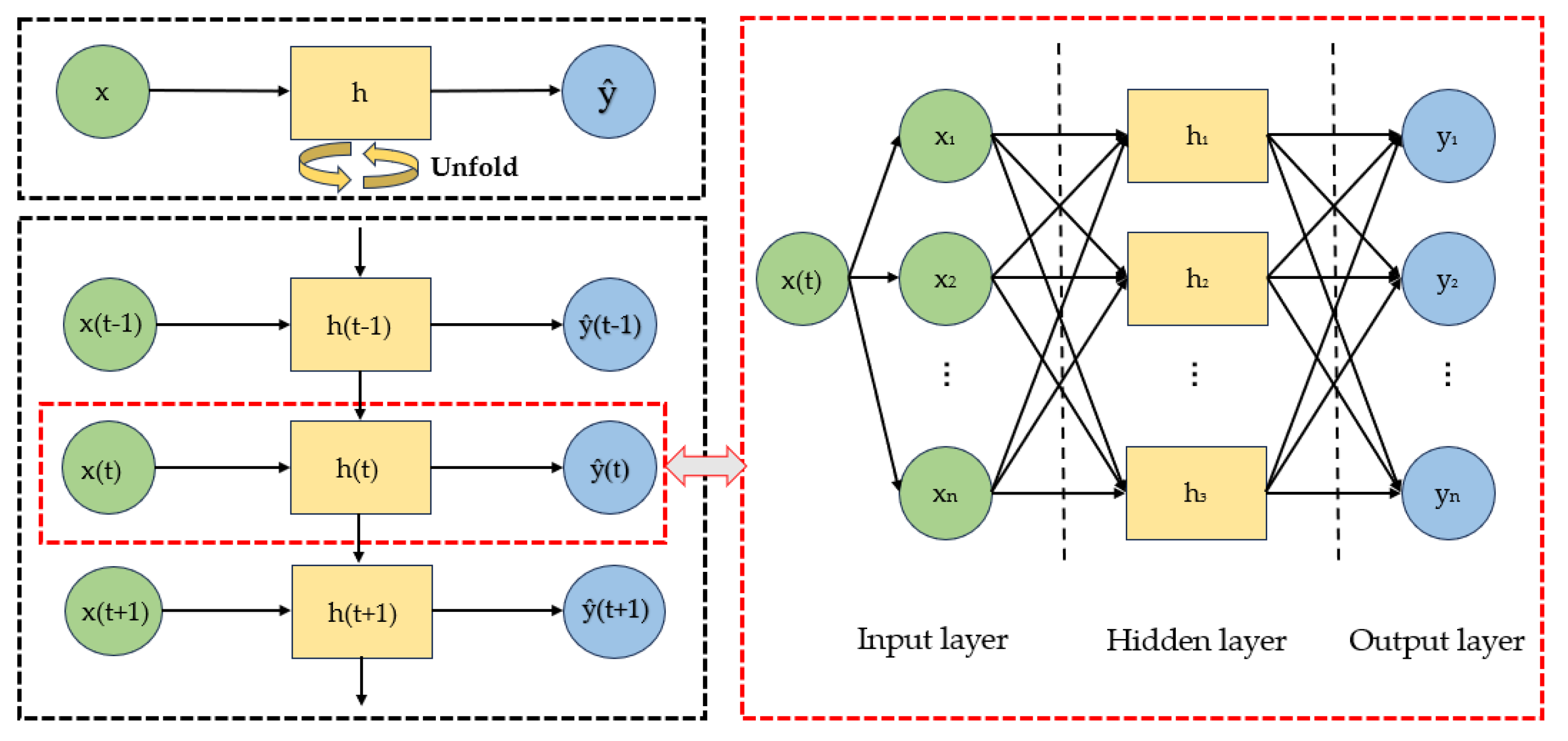

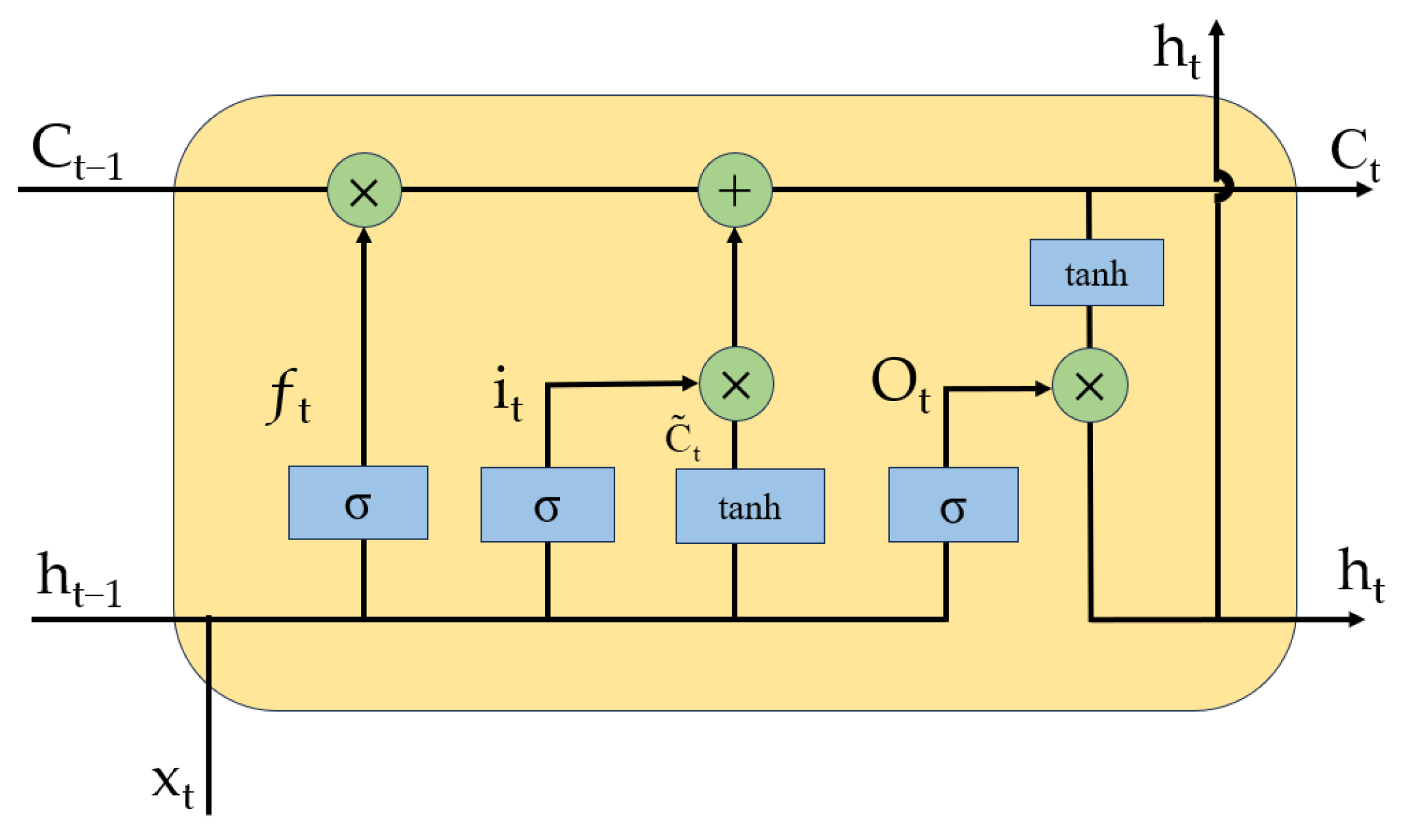

4.2. Deep Neural Network

4.3. Support Vector Machine

4.4. Other Methods of Machine Learning

4.4.1. Transfer Learning

4.4.2. Random Forest

4.4.3. Hybrid Models

5. Model Evaluation and Tuning

5.1. Evaluation Indicators of the Model

5.2. Hyperparameter Tuning

5.3. Model Evaluation Guidelines

5.3.1. The Effect of Hyperparameters

- Use of a Unified Dataset: Table 2 summarizes the high-quality publicly available datasets. Researchers should use the same dataset for model comparison, including identical data cleaning, preprocessing, and feature engineering steps. Models should be trained, tuned, and tested on training, validation, and test sets split in the same proportion, with a recommended ratio of 7:2:1.

- Model Hyperparameters of the Same Order of Magnitude: For example, the hyperparameters to be determined for neural networks include the number of layers in the network structure, the number of neurons per layer, learning rate, batch size, and the number of epochs. It is also crucial to ensure the use of the same hyperparameter tuning methods to keep the number of parameters consistent across different models.

- Equivalent Computing Resources: When comparing models, ensure that all the models run in the same or equivalent computing environments. This ensures similar computational costs and memory usage, avoiding biases due to differences in hardware performance.

- Uniform Evaluation Metrics: Applying the same evaluation metrics across all model assessments is vital for a fair and objective comparison of model performance.
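
The guidelines above can be illustrated with a minimal sketch of a shared evaluation harness: one fixed 7:2:1 split and one set of metrics applied to every model. The function names `split_7_2_1` and `soc_metrics` are hypothetical and are not taken from any of the reviewed works. The random per-sample shuffle is an assumption made for brevity; for sequence models, splitting by whole drive cycle may be more appropriate.

```python
import numpy as np


def split_7_2_1(n_samples, seed=0):
    """Return index arrays for a 7:2:1 train/validation/test split.

    A fixed seed keeps the split identical for every model being compared.
    Random per-sample shuffling is an illustrative assumption.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = n_samples * 7 // 10
    n_val = n_samples * 2 // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def soc_metrics(y_true, y_pred):
    """Compute MAE, RMSE, and maximum absolute error in the units of the inputs."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return {
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAX": float(np.max(np.abs(err))),
    }


# Usage: every candidate model is scored on the same held-out indices.
train_idx, val_idx, test_idx = split_7_2_1(1000)
scores = soc_metrics([0.50, 0.60], [0.50, 0.80])
```

Reusing the same seed and the same metric function for all models removes two common sources of unfair comparison: different held-out samples and differently defined errors.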

5.3.2. Other Influencing Factors

6. Discussion

- Challenges Faced

- Areas for Improvement

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Acronym | Explanation |

|---|---|

| SOC | State of Charge |

| SOH | State of Health |

| KF | Kalman Filter |

| EKF | Extended Kalman Filter |

| UKF | Unscented Kalman Filter |

| AKF | Adaptive Kalman Filter |

| PF | Particle Filter |

| CC–CV | Constant Current–Constant Voltage |

| DST | Dynamic Stress Test |

| FUDS | Federal Urban Driving Schedule |

| US06 | Highway Driving Schedule |

| LA92 | Los Angeles 1992 |

| NEDC | New European Driving Cycle |

| EUDC | Extra-Urban Driving Cycle |

| UDDS | Urban Dynamometer Driving Schedule |

| BN | Batch Normalization |

| PCA | Principal Component Analysis |

| L–M | Levenberg–Marquardt |

| RBF | Radial Basis Function |

| ANN | Artificial Neural Network |

| FNN | Feedforward Neural Network |

| BPNN | Back Propagation Neural Network |

| RBFNN | Radial Basis Function Neural Network |

| ELM | Extreme Learning Machine |

| WNN | Wavelet Neural Network |

| CNN | Convolutional Neural Network |

| RNN | Recurrent Neural Network |

| LSTM | Long Short-Term Memory Network |

| Bi-LSTM | Bi-directional Long Short-Term Memory |

| SBi-LSTM | Stacked Bidirectional Long Short-Term Memory |

| GRU | Gated Recurrent Unit |

| FCN | Fully Convolutional Network |

| Bi-GRU | Bidirectional Gated Recurrent Unit |

| SVM | Support Vector Machine |

| LMWNN | Three-layer WNN optimized by L–M |

| LMMWNN | Multi-hidden layer WNN optimized by L–M |

| PLMMWNN | LMMWNN optimized by piecewise network |

| PSO | Particle Swarm Optimization |

| BSA | Backtracking Search Algorithm |

| SSA | Salp Swarm Algorithm |

| WOA | Whale Optimization Algorithm |

| GA | Genetic Algorithm |

| GSA | Gravitational Search Algorithm |

| OLSA | Orthogonal Least Squares Algorithm |

| AGA | Adaptive Genetic Algorithm |

| HBA | Honey Badger Algorithm |

| DSA | Differential Search Algorithm |

| DSOP | Double Search Optimization Process |

| MAE | Mean Absolute Error |

| MSE | Mean Squared Error |

| RAE | Relative Absolute Error |

| RMSE | Root Mean Square Error |

References

- Hannan, M.A.; Lipu, M.S.H.; Hussain, A.; Mohamed, A. A review of lithium-ion battery state of charge estimation and management system in electric vehicle applications: Challenges and recommendations. Renew. Sustain. Energy Rev. 2017, 78, 834–854.

- Ng, K.S.; Moo, C.S.; Chen, Y.P.; Hsieh, Y.C. Enhanced coulomb counting method for estimating state-of-charge and state-of-health of lithium-ion batteries. Appl. Energy 2009, 86, 1506–1511.

- Iryna, S.; William, R.; Evgeny, V.; Afifa, B.; Peter, H.L. Battery open-circuit voltage estimation by a method of statistical analysis. J. Power Sources 2006, 159, 1484–1487.

- Xie, W. Study on SOC Estimation of Lithium-Ion Battery Module Based on Thevenin Equivalent Circuit Model. Ph.D. Thesis, Shanghai Jiao Tong University, Shanghai, China, 2013.

- Liu, X.; Li, K.; Wu, J.; He, Y.; Liu, X. An extended Kalman filter based data-driven method for state of charge estimation of Li-ion batteries. J. Energy Storage 2021, 40, 102655.

- Shang, Y.; Zhang, C.; Cui, N. State of charge estimation for lithium-ion batteries based on extended Kalman filter optimized by fuzzy neural network. Control Theory Appl. 2016, 33, 212–220.

- Tian, S.; Lv, Q.; Li, Y. SOC estimation of lithium-ion power battery based on STEKF. J. South China Univ. Technol. 2020, 48, 69–75.

- Zhou, W.; Jiang, W. SOC estimation of lithium battery based on genetic algorithm optimization extended Kalman filter. J. Chongqing Univ. Technol. 2019, 33, 33–39.

- Zhang, H.; Fu, Z.; Tao, F. Improved sliding mode observer-based SOC estimation for lithium battery. AIP Conf. Proc. 2019, 2122, 020058.

- Yang, L. SOC estimation of lithium battery based on second-order discrete sliding mode observer. Electr. Appl. Energy Eff. Manag. Technol. 2018, 3, 43–46+52.

- Xu, J.; Mi, C.; Cao, B.; Deng, J.; Chen, Z.; Li, S. The State of Charge Estimation of Lithium-Ion Batteries Based on a Proportional-Integral Observer. IEEE Trans. Veh. Technol. 2014, 63, 1614–1621.

- Sakile, R.; Sihua, U.K. Estimation of State of Charge and State of Health of Lithium-Ion Batteries Based on a New Adaptive Nonlinear Observer. Adv. Theory Simul. 2021, 4, 2100258.

- Tran, N.; Khan, A.B.; Nguyen, T.T.; Kin, D.W.; Choi, W. SOC Estimation of Multiple Lithium-Ion Battery Cells in a Module Using a Nonlinear State Observer and Online Parameter Estimation. Energies 2018, 11, 1620.

- Dini, P.; Colicelli, A.; Saponara, S. Review on Modeling and SOC/SOH Estimation of Batteries for Automotive Applications. Batteries 2024, 10, 34.

- Narendra, K.S.; Annaswamy, A.M. Stable Adaptive Systems; Prentice Hall: Englewood Cliffs, NJ, USA, 1989.

- Marino, R.; Tomei, P. Nonlinear Control Design: Geometric, Adaptive, and Robust; Prentice Hall: Hertfordshire, UK, 1995.

- Gan, Z. Research on SOC Estimation of Battery Electric Vehicle Based on Machine Learning. Ph.D. Thesis, South China University of Technology, Guangzhou, China, 2022.

- ADVISOR2002. Battery Documentation. Available online: http://adv-vehicle-sim.sourceforge.net/advisor_doc.html (accessed on 6 May 2023).

- NASA. Datasets-Battery. Available online: https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/ (accessed on 13 September 2023).

- CALCE. Lithium-Ion Battery Experimental Data. Available online: https://calce.umd.edu/battery-data (accessed on 6 May 2023).

- LG 18650HG2 Li-ion Battery Data. Available online: https://data.mendeley.com/datasets/cp3473x7xv/3 (accessed on 6 May 2023).

- Severson, K.A.; Attia, P.M.; Jin, N.; Perkins, N.; Jiang, B.; Yang, Z.; Chen, M.H.; Aykol, M.; Herring, P.K.; Fraggedakis, D.; et al. Data-driven prediction of battery cycle life before capacity degradation. Nat. Energy 2019, 4, 383–391.

- Attia, P.M.; Grover, A.; Jin, N.; Severson, K.A.; Markov, T.M.; Liao, Y.-H.; Chen, M.H.; Cheong, B.; Perkins, N.; Yang, Z.; et al. Closed-loop optimization of fast-charging protocols for batteries with machine learning. Nature 2020, 578, 397–402.

- Birkl, C. Oxford Battery Degradation Dataset. Available online: https://ora.ox.ac.uk/objects/uuid%3A03ba4b01-cfed-46d3-9b1a-7d4a7bdf6fac (accessed on 6 May 2023).

- Battery Dataset. Sandia National Laboratories. Available online: https://www.batteryarchive.org/snl_study.html (accessed on 6 May 2023).

- Battery Dataset. Poznan University of Technology Laboratories. Available online: https://data.mendeley.com/datasets/k6v83s2xdm/1 (accessed on 6 May 2023).

- Panasonic 18650PF Li-Ion Battery Data. Available online: https://doi.org/10.17632/wykht8y7tg.1#folder-96f196a8-a04d-4e6a-827d-0dc4d61ca97b (accessed on 6 May 2023).

- Liu, H. Research on the Estimation Method of SOC and SOH of Lithium-Ion Battery Based on Deep Learning. Ph.D. Thesis, Tianjin University, Tianjin, China, 2020.

- Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. PMLR 2015, 37, 448–456.

- Li, C.; Chen, Z.; Cui, J.; Wang, Y.; Zou, F. The lithium-ion battery state-of-charge estimation using random forest regression. In Proceedings of the Prognostics and System Health Management Conference, (PHM-2014 Hunan), Zhangjiajie, China, 24–27 August 2014.

- Xie, Y.; Cheng, X. Overview of machine learning methods for state estimation of lithium-ion batteries. Automot. Eng. 2021, 43, 1720–1729.

- Ren, Z.; Du, C. A review of machine learning state-of-charge and state-of-health estimation algorithms for lithium-ion batteries. Energy Rep. 2023, 9, 2993–3021.

- Zhang, L.; Shen, Z.; Sajadi, S.M.; Prabuwono, A.S.; Mahmoud, M.Z.; Cheraghian, G.; El Din, E.M.T. The machine learning in lithium-ion batteries: A review. Eng. Anal. Bound. Elem. 2022, 141, 1–16.

- Rauf, H.; Khalid, M.; Arshad, N. A novel smart feature selection strategy of lithium-ion battery degradation modelling for electric vehicles based on modern machine learning algorithms. J. Energy Storage 2023, 68, 107577.

- Rimsha; Murawwat, S.; Gulzar, M.M.; Alzahrani, A.; Hafeez, G.; Khan, F.A.; Abed, A.M. State of charge estimation and error analysis of lithium-ion batteries for electric vehicles using Kalman filter and deep neural network. J. Energy Storage 2023, 72, 108039.

- Ma, Y.; Yao, M.; Liu, H.; Tang, Z. State of Health estimation and Remaining Useful Life prediction for lithium-ion batteries by Improved Particle Swarm Optimization-Back Propagation Neural Network. J. Energy Storage 2022, 52, 104750.

- Sun, S.; Gao, Z.; Jia, K. State of charge estimation of lithium-ion battery based on improved Hausdorff gradient using wavelet neural networks. J. Energy Storage 2023, 64, 107184.

- Chen, P.; Mao, Z.; Wang, C.; Lu, C.; Li, J. A novel RBFNN-UKF-based SOC estimator for automatic underwater vehicles considering a temperature compensation strategy. J. Energy Storage 2023, 72, 108373.

- Hou, X.; Gou, X.; Yuan, Y.; Zhao, K.; Tong, L.; Yuan, C.; Teng, L. The state of health prediction of Li-ion batteries based on an improved extreme learning machine. J. Energy Storage 2023, 70, 108044.

- Liu, X.; Dai, Y. Energy storage battery SOC estimate based on improved BP neural network. J. Phys. Conf. Ser. 2022, 2187, 012042.

- Yin, A.; Zhang, W.; Zhao, H.; Jiang, H. Research on SOC prediction of lithium iron phosphate battery based on neural network. J. Electron. Meas. Instrum. 2011, 25, 433–437.

- Yu, Z.; Lu, J.; Wang, X. SOC estimation of Li-ion battery based on GA-BP neural network. Chin. J. Power Sources 2020, 44, 337–340.

- Xu, Y.; Fu, Y.; Wu, T. Estimation of the SOC of lithium-ion battery based on WOA-BP neural network. Battery 2023, 53, 38–42.

- Zhang, Y. Prediction System of Lithium Battery SOC Based on RBF Neural Network. Ph.D. Thesis, Anhui Normal University, Wuhu, China, 2019.

- Li, Z.; Shi, Y.; Zhang, H.; Sun, J. Hybrid estimation algorithm for SOC of lithium-ion batteries based on RBF-BSA. J. Huazhong Univ. Sci. Technol. 2019, 47, 67–72.

- Chang, W. Estimation of the state of charge for a LFP battery using a hybrid method that combines a RBF neural network, an OLS algorithm and AGA. Int. J. Electr. Power Energy Syst. 2013, 53, 603–611.

- Cui, D. Research on SOC Estimation Technology of Lithium-Ion Battery Based on Wavelet Neural Network. Ph.D. Thesis, Tsinghua University, Beijing, China, 2020.

- Xie, S.; Wang, P.; Wang, Z. Application of improved WNN in battery SOC estimation. J. Power Supply 2020, 18, 199–206.

- Song, S.; Wang, Z.; Lin, X. Research on SOC estimation of lithium iron phosphate battery based on extreme learning machine. Power Technol. 2018, 42, 806–808+881.

- Anandhakumar, C.; Murugan, N.S.S.; Kumaresan, K. Extreme Learning Machine Model with Honey Badger Algorithm Based State-of-Charge Estimation of Lithium-Ion Battery. Expert Syst. Appl. 2023, 238, 121609.

- Sesidhar, D.V.S.R.; Badachi, C.; Green II, R.C. A review on data-driven SOC estimation with Li-Ion batteries: Implementation methods & future aspirations. J. Energy Storage 2023, 72, 108420.

- Di Luca, G.; Di Blasio, G.; Gimelli, A.; Misul, D.A. Review on Battery State Estimation and Management Solutions for Next-Generation Connected Vehicles. Energies 2024, 17, 202.

- Zhou, F.; Jin, L.; Dong, J. Review of Convolutional Neural Networks. Chin. J. Comput. 2017, 40, 1229–1251.

- Yang, L.; Wu, Y.; Wang, J.; Liu, Y. Review of the research on circular neural network. Comput. Appl. 2018, 38 (Suppl. S2), 1–6+26.

- Li, J.; Huang, X.; Tang, X.; Guo, J.; Shen, Q.; Chai, Y.; Lu, W.; Wang, T.; Liu, Y. The state-of-charge prediction of lithium-ion battery energy storage system using data-driven machine learning. Sustain. Energy Grids Netw. 2023, 34, 101020.

- Kim, K.-H.; Oh, K.-H.; Ahn, H.-S.; Choi, H.-D. Time–Frequency Domain Deep Convolutional Neural Network for Li-Ion Battery SoC Estimation. IEEE Trans. Power Electron. 2024, 39, 125–134.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, J.; Li, W.; Sun, Y.; Xu, J.; Zhang, D. Prediction of battery SOC based on convolution-bidirectional long-term and short-term memory network. Power Technol. 2022, 46, 532–535.

- Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Naguib, M.; Kollmeyer, P.J.; Emadi, A. State of Charge Estimation of Lithium-Ion Batteries: Comparison of GRU, LSTM, and Temporal Convolutional Deep Neural Networks. In Proceedings of the 2023 IEEE Transportation Electrification Conference & Expo (ITEC), Detroit, MI, USA, 21–23 June 2023.

- Hannan, M.A.; How, D.N.T.; Lipu, M.S.H.; Ker, P.J.; Dong, Z.Y.; Mansur, M.; Blaabjerg, F. SOC Estimation of Li-ion Batteries with Learning rate-optimized Deep Fully Convolutional Network. IEEE Trans. Power Electron. 2020, 36, 7349–7353.

- Zhang, S. Study on the Prediction of the State of Charge and Health of Lithium Batteries Based on Machine Learning. Ph.D. Thesis, Guangxi Normal University, Guilin, China, 2022.

- Ma, L.; Hu, C.; Cheng, F. State of charge and state of energy estimation for lithium-ion batteries based on a long short-term memory neural network. J. Energy Storage 2021, 37, 102440.

- Li, C.; Xiao, F.; Fan, Y. An Approach to State of Charge Estimation of Lithium-Ion Batteries Based on Recurrent Neural Networks with Gated Recurrent Unit. Energies 2019, 12, 1592.

- Li, C.; Xiao, F.; Fan, Y.; Zhang, Z.; Yang, G. A simulation modeling method of lithium-ion battery at high pulse rate based on LSTM-RNN. J. China Electr. Eng. 2020, 40, 3031–3042.

- Han, Y. Wide-Temperature SOC Estimation of Lithium-Ion Battery Based on Gated Cyclic Unit Neural Network. Ph.D. Thesis, Harbin Institute of Technology, Harbin, China, 2021.

- Bian, C.; He, H.; Yang, S. Stacked bidirectional long short-term memory networks for state-of-charge estimation of lithium-ion batteries. Energy 2020, 191, 116538.

- Zhou, D. Research on SOC/SOH Estimation Algorithm of Lithium-Ion Battery Based on Recurrent Neural Network. Ph.D. Thesis, Southwest Jiaotong University, Chengdu, China, 2022.

- Chatterjee, S.; Gatla, R.K.; Sinha, P.; Jena, C.; Kundu, S.; Panda, B.; Nanda, L.; Pradhan, A. Fault detection of a Li-ion battery using SVM based machine learning and unscented Kalman filter. Mater. Today Proc. 2023, 74, 703–707.

- Hou, J.; Li, T.; Zhou, F.; Zhao, D.; Zhong, Y.; Yao, L.; Zeng, L. A Review of Critical State Joint Estimation Methods of Lithium-Ion Batteries in Electric Vehicles. World Electr. Veh. J. 2022, 13, 159.

- Yu, Y.; Ji, S.; Wei, K. Estimating method for power battery state of charge based on LS-SVM. Chin. J. Power Sources 2012, 3, 61–63.

- Li, R.; Xu, S.; Li, S.; Zhou, Y.; Zhou, K.; Liu, X.; Yao, J. State of charge prediction algorithm of lithium-ion battery based on PSO-SVR cross validation. IEEE Access 2020, 8, 10234–10242.

- Qin, P.; Zhao, L. A Novel Transfer Learning-Based Cell SOC Online Estimation Method for a Battery Pack in Complex Application Conditions. IEEE Trans. Ind. Electron. 2024, 71, 1606–1615.

- Eleftheriadis, P.; Giazitzis, S.; Leva, S.; Ogliari, E. Transfer Learning Techniques for the Lithium-Ion Battery State of Charge Estimation. IEEE Access 2024, 12, 993–1004.

- Eleftheriadis, P.; Leva, S.; Ogliari, E. Bayesian Hyperparameter Optimization of Stacked Bidirectional Long Short-Term Memory Neural Network for the State of Charge Estimation. Sustain. Energy Grids Netw. 2023, 36, 101160.

- Zhang, X.; Wang, Z.; Li, H.; Zhao, B.; Sun, L.; Zang, M. State-of-Charge Estimation of Lithium Batteries based on Bidirectional Gated Recurrent Unit and Transfer Learning. In Proceedings of the 35th Chinese Control and Decision Conference (CCDC), Yichang, China, 20–22 May 2023.

- Lipu, M.H.; Hannan, M.A.; Hussain, A.; Ansari, S.; Rahman, S.A.; Saad, M.H.; Muttaqi, K.M. Real-Time State of Charge Estimation of Lithium-Ion Batteries Using Optimized Random Forest Regression Algorithm. IEEE Trans. Intell. Veh. 2023, 8, 639–648.

- Yang, Y.; Zhao, L.; Yu, Q.; Liu, S.; Zhou, G.; Shen, W. State of Charge Estimation for Lithium-Ion Batteries Based on Cross-Domain Transfer Learning with Feedback Mechanism. J. Energy Storage 2023, 70, 108037.

- Yang, F.F.; Zhang, S.; Li, W.; Miao, Q. State-of-Charge Estimation of Lithium-Ion Batteries Using LSTM and UKF. Energy 2020, 201, 117664.

- Huang, H.; Bian, C.; Wu, M.; An, D.; Yang, S. A Novel Integrated SOC–SOH Estimation Framework for Whole-Life-Cycle Lithium-Ion Batteries. Energy 2024, 288, 129801.

- Xia, B.; Cui, D.; Sun, Z.; Lao, Z.; Zhang, R.; Wang, W.; Sun, W.; Lai, Y.; Wang, M. State of charge estimation of lithium-ion batteries using optimized Levenberg-Marquardt wavelet neural network. Energy 2018, 153, 694–705.

- Cui, D.; Xia, B.; Zhang, R.; Sun, Z.; Lao, Z.; Wang, W.; Sun, W.; Lai, Y.; Wang, M. A novel intelligent method for the state of charge estimation of lithium-ion batteries using a discrete wavelet transform-based wavelet neural network. Energies 2018, 11, 995.

- Wang, L.; Zheng, N.; Wang, C. The hidden nodes of BP neural nets and the activation functions. In Proceedings of the International Conference on Circuits and Systems, Shenzhen, China, 16–17 June 1991; pp. 77–79.

- Yao, Y.; Chen, Z.; Wu, L.; Cheng, S.; Lin, P. An estimation method of lithium-ion battery health based on improved grid search and generalized regression neural network. Electr. Technol. 2021, 22, 32–37.

- Hannan, M.A.; Lipu, M.S.H.; Hussain, A.; Saad, M.H.; Ayob, A. Neural network approach for estimating state of charge of lithium-ion battery using backtracking search algorithm. IEEE Access 2018, 6, 10069–10079.

- Dou, J.; Ma, H.; Zhang, Y.; Wang, S.; Ye, Y.; Li, S.; Hu, L. Extreme learning machine model for state-of-charge estimation of lithium-ion battery using salp swarm algorithm. J. Energy Storage 2022, 52, 104996.

- Li, F.; Zuo, W.; Zhou, K.; Li, Q.; Huang, Y. State of charge estimation of lithium-ion batteries based on PSO-TCN-Attention neural network. J. Energy Storage 2024, 84, 110806.

- Lipu, M.S.H.; Hannan, M.A.; Hussain, A.; Saad, M.H.; Ayob, A.; Uddin, M.N. Extreme learning machine model for state-of-charge estimation of lithium-ion battery using gravitational search algorithm. IEEE Trans. Ind. Appl. 2019, 55, 4225–4234.

- Hu, J.N.; Hu, J.J.; Lin, H.; Li, X.; Jiang, C.; Qiu, X.; Li, W. State-of-charge estimation for battery management system using optimized support vector machine for regression. J. Power Sources 2014, 269, 682–693.

- Chemali, E.; Kollmeyer, P.J.; Preindl, M.; Emadi, A. State-of-charge estimation of li-ion batteries using deep neural networks: A machine learning approach. J. Power Sources 2018, 400, 242–255.

- Khalid, A.; Sundararajan, A.; Acharya, I.; Sarwat, A.I. Prediction of li-ion battery state of charge using multilayer perceptron and long short-term memory models. In Proceedings of the 2019 IEEE Transportation Electrification Conference and Expo (ITEC), Detroit, MI, USA, 19–21 June 2019.

- Lin, M.; Zeng, X.; Wu, J. State of health estimation of lithium-ion battery based on an adaptive tunable hybrid radial basis function network. J. Power Sources 2021, 504, 230063.

- Bhattacharjee, A.; Verma, A.; Mishra, S.; Saha, T.K. Estimating state of charge for xEV batteries using 1D convolutional neural networks and transfer learning. IEEE Trans. Veh. Technol. 2021, 70, 3123–3135.

- Alvarez Anton, J.C.; Garcia Nieto, P.J.; Blanco Viejo, C.; Vilan Vilan, J.A. Support vector machines used to estimate the battery state of charge. IEEE Trans. Power Electron. 2013, 28, 5919–5926.

- Song, X.; Yang, F.; Wang, D.; Tsui, K.L. Combined CNN-LSTM network for state-of-charge estimation of lithium-ion batteries. IEEE Access 2019, 7, 88894–88902.

- Chen, J.; Zhang, Y.; Wu, J.; Cheng, W.; Zhu, Q. SOC estimation for lithium-ion battery using the LSTM-RNN with extended input and constrained output. Energy 2023, 262, 125375.

- Xiao, B.; Liu, Y.; Xiao, B. Accurate state-of-charge estimation approach for lithium-ion batteries by gated recurrent unit with ensemble optimizer. IEEE Access 2019, 7, 54192–54202.

- Huang, Z.; Yang, F.; Xu, F.; Song, X.; Tsui, K.L. Convolutional gated recurrent unit-recurrent neural network for state-of-charge estimation of lithium-ion batteries. IEEE Access 2019, 7, 93139–93149.

- Fang, X.; Xu, M.; Fan, Y. SOC-SOH Estimation and Balance Control Based on Event-Triggered Distributed Optimal Kalman Consensus Filter. Energies 2024, 17, 639.

| Category | Method | Advantage | Disadvantage |

|---|---|---|---|

| Direct method | Ampere-Hour Integration | | |

| | Open Circuit Voltage | | |

| Model method | Electrochemical Model | | |

| | Equivalent Circuit Model | | |

| | Kalman Filter (KF) | | |

| | Extended Kalman Filter (EKF) | | |

| | Unscented Kalman Filter (UKF) | | |

| | Particle Filter (PF) | | |

| Nonlinear observer | Sliding Mode Observer | | |

| No. | Data Source | Data Content | Data Characteristics |

|---|---|---|---|

| 1 | Dataset of new energy vehicle data platform [17]. | Includes the total voltage, monomer current, cell voltage, temperature, etc., and the error identification code. | Encompasses core vehicle system parameters and BeiDou navigation position data. |

| 2 | ADVISOR2002 dataset of advanced vehicle simulation software [18]. | Simulation software provides the voltage, current, temperature, and SOC values. | Simulation mimics comprehensive electric vehicle battery data under varied conditions. |

| 3 | NASA dataset [19]. | Features charge/discharge data across conditions, temperature states, and aging, with randomness and uncertainty. | Battery cycles through random current conditions. |

| 4 | University of Maryland Battery Laboratory (CALCE) [20]. | Discharge data under three different discharge conditions, including voltage, current, temperature, and other parameters. | Discharge conditions include DST, US06, and FUDS. |

| 5 | Panasonic 18650PF lithium-ion battery dataset of McMaster University, Canada [21]. | Includes time information, discharge capacity, power, battery voltage, current, energy, battery shell temperature, incubator temperature, etc. | Covers temperatures of 0 °C, 10 °C, and 25 °C. |

| Method | Main Operation |

|---|---|

| Data cleaning [17] | Remove duplicate values, handle abnormal values and fill in missing values. |

| Statistical characteristics [17] | Calculate statistical measures like the mean, variance, max, min, and median to characterize battery data distribution and variation. |

| Data normalization [28,29,30] | Normalize features across dimensions using min–max and standardization. |

| Data conversion [29] | Apply logarithmic and exponential transformations for nonlinear data to linearize and enhance model fit. |

| Data dimensionality reduction [31] | Use PCA and other techniques for dimensionality reduction, converting high-dimensional data into a compact feature space. |

| Data balance [32] | Employ under-sampling and over-sampling to balance the dataset and improve the minority class recognition. |

| Time characteristics [32] | Extract time features from timestamps to identify periodicity and trends. |

| Data smoothing [32] | Smooth data to lessen noise and sharp fluctuations. |

| Differential feature [32] | Perform differential analysis of battery data, using the differences between consecutive time points as features. |
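
Several of the operations in the table above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the preprocessing pipeline of any cited work: the function names are hypothetical, and the moving-average window of 3 is an arbitrary choice.

```python
import numpy as np


def min_max(x):
    """Min-max normalization: rescale a feature to the range [0, 1]."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    return (x - x.min()) / span if span > 0 else np.zeros_like(x)


def standardize(x):
    """Standardization: shift to zero mean and scale to unit variance."""
    x = np.asarray(x, dtype=float)
    std = x.std()
    return (x - x.mean()) / std if std > 0 else np.zeros_like(x)


def moving_average(x, window=3):
    """Data smoothing with a simple moving average (window size is an assumption)."""
    kernel = np.ones(window) / window
    return np.convolve(np.asarray(x, dtype=float), kernel, mode="valid")


def first_difference(x):
    """Differential feature: difference between consecutive time points."""
    return np.diff(np.asarray(x, dtype=float))


# Usage on a short, made-up voltage trace (values are illustrative only).
voltage = [3.0, 3.5, 4.0]
scaled = min_max(voltage)              # values 0.0, 0.5, 1.0
delta_v = first_difference(voltage)    # values 0.5, 0.5
```

To avoid leaking information from held-out data, the minimum, maximum, mean, and standard deviation would normally be computed on the training set and reused unchanged for the validation and test sets.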

| No. | Model Name | Model Advantage | Model Disadvantage |

|---|---|---|---|

| 1 | BPNN | Mature technology with strong nonlinear mapping, self-learning, adaptive, generalization, and fault-tolerance abilities. | Slow convergence, long training time, ad hoc parameter selection, and a tendency to fall into local minima. |

| 2 | RBFNN | Local approximation, good interpolation ability, simple calculation and fast convergence. | Easily influenced by the basis function and easily overfitted. |

| 3 | ELM | Simple structure, few model parameters, fast convergence speed and high accuracy. | Parameter selection has great influence on the performance of the ELM. |

| 4 | WNN | Superior to the BP network in design, approximation sensitivity, and fault tolerance. | Parameters are difficult to determine. |

| 5 | CNN | Handles complex, nonlinear functions and high-dimensional data effortlessly, ensuring higher accuracy. | Long training time and high cost. |

| 6 | RNN | Captures temporal dependencies with short-term memory. | Prone to gradient explosion. |

| 7 | LSTM | Long-term memory, controllable memory ability, high prediction accuracy, and improves the gradient attenuation problem in the RNN. | Complicated structure, increased calculation and long training time. |

| 8 | GRU | Simple structure, fewer parameters than LSTM (reducing the overfitting risk), easy parameter adjustment, high prediction accuracy, and mitigation of gradient vanishing or explosion. | Cannot fully solve gradient vanishing. |

| 9 | SVM | Effectively addresses underfitting and overfitting, and is suitable for high-dimensional, small-sample data. | Sensitive to parameters and noise, with high computational complexity; unsuitable for large datasets. |

| Model | RMSE (%) | MAE (%) | MAX (%) |

|---|---|---|---|

| CNN-GRU | 0.39 | 0.30 | 2.18 |

| CNN-LSTM | 0.72 | 0.52 | 6.63 |

| LSTM | 0.96 | 0.86 | 6.86 |

| GRU | 0.83 | 0.73 | 2.79 |

| BPNN | 1.24 | 9.6 | 8.99 |

| Model (Mean/Max) | NEDC | UDDS | UKBC | EUDC |

|---|---|---|---|---|

| PLMMWNN | 1.0%/6.7% | 1.0%/9.7% | 1.2%/7.6% | 1.5%/9.7% |

| EKF | 1.7%/5.8% | 2.1%/5.1% | 2.4%/7.7% | 3.0%/11.1% |

| CNN Hyperparameter | Explanation |

|---|---|

| Convolutional layers | Determines the depth of the feature hierarchy. |

| Convolution kernel size | Influences the local scope of feature extraction. |

| Stride | Step length of the kernel as it slides across the input. |

| Padding | Fills zeros around the input data. |

| Activation function | Determines the nonlinearity of the network. |

| Pooling | Reduces the feature dimension. |
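
How kernel size, stride, and padding interact can be made concrete with the standard output-length relation for a one-dimensional convolution, floor((n + 2p - k) / s) + 1. The sketch below assumes symmetric zero padding and no dilation; the function name `conv1d_output_length` is hypothetical.

```python
def conv1d_output_length(n_in, kernel_size, stride=1, padding=0):
    """Length of a 1D convolution output, assuming symmetric zero padding
    and no dilation: floor((n_in + 2*padding - kernel_size) / stride) + 1."""
    if n_in + 2 * padding < kernel_size:
        raise ValueError("kernel does not fit inside the padded input")
    return (n_in + 2 * padding - kernel_size) // stride + 1


# Usage on a hypothetical input window of 50 time steps.
same_length = conv1d_output_length(50, kernel_size=3, stride=1, padding=1)  # 50
downsampled = conv1d_output_length(50, kernel_size=3, stride=2, padding=0)  # 24
```

The same relation applies to a pooling layer when the pooling window is treated as the kernel, which is why pooling reduces the feature dimension.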

| RNN Hyperparameter | Explanation |

|---|---|

| Learning rate | Affects the convergence speed and stability of model training. |

| Layers | Determines the structural depth of the model. |

| Hidden neurons | Limits the capacity of the model. |

| Epochs | The number of times all samples are traversed by the model. |

| Batch size | The number of samples used for each training iteration (weight update). |

| Optimizer | Affects the efficiency of model training. |
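
The relationship between batch size and epochs can be shown with simple arithmetic: together with the dataset size, they fix how many weight updates a training run performs. The helper names below are hypothetical, and the sample counts are illustrative rather than taken from any dataset in this review.

```python
import math


def updates_per_epoch(n_samples, batch_size):
    """Number of weight updates in one pass over the data
    (the last batch may be smaller than batch_size)."""
    return math.ceil(n_samples / batch_size)


def total_updates(n_samples, batch_size, epochs):
    """Total number of weight updates over the whole training run."""
    return updates_per_epoch(n_samples, batch_size) * epochs


# Usage: 1000 samples, batch size 64, 10 epochs.
per_epoch = updates_per_epoch(1000, 64)   # 16
overall = total_updates(1000, 64, 10)     # 160
```

Holding the number of epochs fixed while changing the batch size therefore changes the number of updates, which is one reason these two hyperparameters are usually tuned together with the learning rate.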

| Method | Advantage | Disadvantage |

|---|---|---|

| Trial-and-error method | Simple and convenient | Constant trial and error. |

| Grid search | Traverses all hyperparameter combinations; suited to small datasets. | Large amount of computation and long run time. |

| BSA | High flexibility and large search space. | High time complexity and strong randomness. |

| SSA | Few parameters, simple structure, easy operation, etc. | Slow convergence, prone to local optima. |

| PSO | Minimal initialization restrictions, quick convergence, low computational complexity. | Cannot guarantee a global search; easily trapped in local optima. |

| GSA | Fast convergence, strong global search ability, and avoids falling into local optima. | High computational complexity. |

| DSOP | The search space is reduced at each step. | Few applications and little research to date. |
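Grid search, as listed in the table above, evaluates every combination of candidate hyperparameter values and keeps the best one. The following is a minimal sketch; `toy_validation_error` is a hypothetical stand-in for training a model and returning its validation error, and the candidate values are assumptions chosen for illustration.

```python
import math
from itertools import product


def grid_search(param_grid, score_fn):
    """Exhaustively evaluate all combinations; lower score is better."""
    names = list(param_grid)
    best_params, best_score, n_evals = None, float("inf"), 0
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        n_evals += 1
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evals


def toy_validation_error(params):
    # Hypothetical surrogate: NOT a real model, just a function with a known minimum.
    return ((params["hidden_neurons"] - 64) ** 2 / 1000
            + abs(math.log10(params["learning_rate"]) + 3)
            + 0.1 * (params["layers"] - 1))


grid = {
    "hidden_neurons": [16, 32, 64, 128],
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "layers": [1, 2],
}
best_params, best_score, n_evals = grid_search(grid, toy_validation_error)
print(best_params, n_evals)
```

The number of evaluations is the product of the list lengths (here 4 × 3 × 2 = 24), which illustrates why the cost grows quickly as hyperparameters or candidate values are added.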

| Method | Inputs | Hidden Neurons and Layers | Parameter Total | MAE |

|---|---|---|---|---|

| FNN [73] | {V, I, T, V_avg, I_avg} | Hidden neurons = 55 Layers = 2 | 3466 | 1.5~3.5% |

| LSTM [74] | {V, I, T} | Hidden neurons = 55 Layer = 1 | 3376 | 1.2~1.55% |
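The parameter totals in the table above can be cross-checked by counting weights and biases. The sketch below assumes fully connected layers with biases, a single SOC output, and the standard LSTM parameterization with one bias vector per gate (some frameworks keep two bias vectors per gate, which would change the count); the helper names are illustrative.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def fnn_params(n_inputs, hidden_sizes, n_outputs=1):
    """Total parameters of a feedforward network with the given hidden layers."""
    sizes = [n_inputs, *hidden_sizes, n_outputs]
    return sum(dense_params(a, b) for a, b in zip(sizes, sizes[1:]))


def lstm_params(n_inputs, hidden, n_outputs=1):
    """One LSTM layer (four gates, one bias each) followed by a dense output."""
    gates = 4 * (hidden * (n_inputs + hidden) + hidden)
    return gates + dense_params(hidden, n_outputs)


print(fnn_params(5, [55, 55]))  # 3466
print(lstm_params(3, 27))       # 3376
print(lstm_params(3, 55))       # 13036
```

Under these assumptions the FNN row is reproduced exactly (5 inputs, two hidden layers of 55 neurons, 3466 parameters). For the LSTM row, the listed total of 3376 corresponds to 27 hidden units in this formula, whereas 55 hidden units would give 13,036, so the hidden-neuron entry and the parameter total may refer to different configurations or to a different counting convention.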

| Method | Dataset | Inputs | Hyperparameters | Evaluation at 25 °C | Ref. |

|---|---|---|---|---|---|

| BPNN | CALCE | {V, I, T} | Hidden neurons = 24 | RMSE = 0.91% (FUDS) MAE = 0.59% (FUDS) | [86] |

| FNN | Panasonic 18650PF | {V, I, T, V_avg, I_avg} | Hidden neurons = 55 Layers = 2 | MAE = 1.5%~3.5% | [91] |

| RBFNN | NASA dataset | {V, I, T} | Adaptive control | RMSE = 0.72% MAE = 0.61% | [93] |

| WNN | Laboratory data | {V, I} | Hidden neurons = 10 | MAE = 0.8% (UDDS) | [82] |

| CNN | Panasonic NCR18650PF | {V, I, T} | Convolutional Layers = 2 Filters = 8 | MAE = 0.8% (US06) MAE = 4.72% (US06) | [94] |

| SVM | Laboratory data | {V, I, T} | Support vectors = 903 Kernel width = 1 | RMSE = 0.4% (DST) MAE < 4% (DST) | [95] |

| LSTM | CALCE | {V, I, T} | Hidden neurons = 256 Layer = 1 Time steps = 50 | RMSE = 1.71% (UDDS) MAE = 1.39% (UDDS) | [64] |

| LSTM | Panasonic 18650PF | {V, I, T} | Hidden neurons = 55 Layer = 1 | MAE = 1.2~1.55% | [92] |

| LSTM-RNN-UKF | Laboratory data | {V, I, T} | Hidden neurons = 300 Layer = 1 Epochs = 8000 | RMSE = 1.11% MAE = 0.97% | [80] |

| CNN-LSTM | Laboratory data | {V, I, T, V_avg, I_avg} | Hidden neurons = 300 Filters = 6 | RMSE = 1.31% MAE = 0.92% (DST, US06, and FUDS) | [96] |

| RNN-LSTM | CALCE | {V, I, T} | Hidden layers = 1 Hidden neurons = 30 Batch size = 64 Epochs = 150 | RMSE = 2.3% (FUDS) MAE = 11.5% (FUDS) RMSE = 1.8% (US06) MAE = 10.6% (US06) | [97] |

| (Extended input) RNN-LSTM | CALCE | {V, I, T} | Hidden layers = 1 Hidden neurons = 30 Batch size = 64 Epochs = 151 | RMSE < 1.3% (FUDS) MAE = 3.2% (FUDS) | [97] |

| Bi-LSTM | Panasonic 18650PF, CALCE | {V, I, T} | Hidden neurons = 64 Layers = 2 | MAE = 0.56% (US06) MAE = 0.46% (HWFET) MAE = 0.84% (FUDS) | [68] |

| GRU | CALCE | {V, I, T} | Hidden neurons = 260 GRU layer = 1 | RMSE = 0.64% (FUDS) MAE = 0.49% (FUDS) | [98] |

| GRU-CNN | Laboratory data | {V, I, T} | Hidden neurons = 150 Filters = 8 GRU layer = 2 GRU neurons = 80 | RMSE = 1.54% (FUDS) MAE = 1.26% (FUDS) | [99] |

| GRU-RNN | CALCE | {V, I, T} | Hidden neurons = 30 Hidden layer = 1 Batch size = 64 Epochs = 300 | RMSE = 1.7% (US06) MAXE = 9.4% (US06) RMSE = 2.0% (FUDS) MAXE = 11.6% (FUDS) | [97] |

| GRU-RNN-AKF | CALCE | {V, I, T} | Hidden neurons = 30 Hidden layer = 1 Batch size = 64 Epochs = 300 | RMSE = 0.2% (US06) MAXE = 0.6% (US06) RMSE = 0.5% (FUDS) MAXE = 0.9% (FUDS) | [97] |

| Temperature (°C) | LSTM RMSE (%) | LSTM MAE (%) | LSTM-UKF RMSE (%) | LSTM-UKF MAE (%) |

|---|---|---|---|---|

| 0 | 1.46 | 1.31 | 0.73 | 0.63 |

| 10 | 1.06 | 0.88 | 0.29 | 0.21 |

| 20 | 2.04 | 1.60 | 1.11 | 0.97 |

| 27 | 1.93 | 1.58 | 1.06 | 0.93 |

| 30 | 1.68 | 1.40 | 0.93 | 0.82 |

| 40 | 1.86 | 1.38 | 0.92 | 0.81 |

| 50 | 1.93 | 1.51 | 1.03 | 0.89 |
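The effect of adding the UKF stage can be summarized by the relative RMSE reduction at each temperature. The sketch below uses only the RMSE values from the table above; the helper names are illustrative, and averaging across temperatures is a choice made here for the summary, not a figure reported in the source.

```python
# RMSE (%) per temperature (°C), copied from the table above.
lstm_rmse = {0: 1.46, 10: 1.06, 20: 2.04, 27: 1.93, 30: 1.68, 40: 1.86, 50: 1.93}
lstm_ukf_rmse = {0: 0.73, 10: 0.29, 20: 1.11, 27: 1.06, 30: 0.93, 40: 0.92, 50: 1.03}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


reductions = {
    temp: relative_reduction(lstm_rmse[temp], lstm_ukf_rmse[temp])
    for temp in lstm_rmse
}
for temp, red in reductions.items():
    print(f"{temp:>2} °C: RMSE reduced by {red:.1f}%")
print(f"mean RMSE: LSTM {mean(lstm_rmse.values()):.2f}%, "
      f"LSTM-UKF {mean(lstm_ukf_rmse.values()):.2f}%")
```

On these values the filter roughly halves the RMSE at every temperature, with the largest relative gain at 10 °C.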

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, F.; Guo, Y.; Chen, B. A Review of Lithium-Ion Battery State of Charge Estimation Methods Based on Machine Learning. World Electr. Veh. J. 2024, 15, 131. https://doi.org/10.3390/wevj15040131
