1. Introduction

The development of autonomous driving technology has increased the demand for diverse and realistic test scenarios. Naturalistic driving data (NDD) provide valuable information for testing autonomous driving systems under various driving conditions, and scene-based testing is an effective approach for reducing testing costs and improving testing efficiency. Well-known open-source datasets such as NGSIM [1], KITTI [2], and High-D [3] have been widely applied to scenario identification and to the validation of autonomous driving algorithms. Collecting and analyzing NDD gives a better understanding of the patterns in real driving environments, which supports the construction of test scenarios. The extracted test scenarios can also be used to evaluate and compare the performance and limitations of autonomous driving systems, thereby guiding system optimization.

Numerous scholars have studied the identification of test scenarios from NDD. Some have focused on mining features from NDD, such as road types, road conditions, and traffic situations, to support the generation of test scenarios for automated driving. Gu et al. [4] used NDD for scene graph generation, integrating external knowledge and image reconstruction techniques to improve the accuracy and reliability of the generated scenes. Ries et al. [5] proposed a network that combines a convolutional neural network (CNN) with a long short-term memory (LSTM) network to identify scenes from videos, encoding the positions of the ego vehicle and the surrounding traffic participants in a grid-based format. Du et al. [6] extracted scenario features from NDD using LightGBM (LGBM) decision trees and combined them with CIDAS accident data to reconstruct scenarios. Ding et al. [7] proposed the conditional multiple-trajectory synthesizer (CMTS), which generates safety-critical scenarios by interpolating between normal and collision trajectories in the latent space. Although extracting test scenarios from NDD has shown promising progress, it still faces several challenges: accurately identifying scenes in massive datasets, automating annotation for efficient data labeling, and handling diverse data sources and formats. Using NDD effectively to improve the accuracy and efficiency of scenario identification has therefore become a critical concern for researchers, and both industry and academia urgently need automated data labeling approaches that reduce labeling costs.

Existing unsupervised and semi-supervised methods [8,9] can reduce labeling effort but are not suitable for large volumes of high-dimensional time-series data. In addition, most existing NDD-based research focuses on specific scenarios, such as lane changing [10,11] and car following [12,13], and therefore cannot fully exploit the scenario information contained in NDD. The challenge is thus to identify many different types of scenes at scale from massive NDD. This problem can be addressed using rule-based [14] or machine learning-based approaches [15,16,17,18]. Rule-based approaches rely on expert experience. Zhao et al. [19] modeled driving scenarios with an ontology that integrates data from multiple sensors and built a rule library for driving scenarios from expert knowledge and legal regulations; this library was used to support the development and testing of various functions of intelligent connected vehicles. Sun et al. [20] identified dangerous scenarios in NDD by setting thresholds on parameters such as speed and acceleration. Wachenfeld et al. [21] introduced a dangerous-scenario extraction method based on the worst-case time-to-collision. Machine learning-based identification, by contrast, mines the intrinsic features and patterns in the data to classify scenes. Tan et al. [22] fed the current state of the autonomous vehicle and a high-definition map into an LSTM network trained on NDD to generate naturalistic driving scenarios. Rocklage et al. [23] presented a retrospective-based approach for automatic scene extraction and generation that can randomly generate static or dynamic scenes. Fellner et al. [24] proposed a heuristic-guided branch search algorithm for scene generation. Spooner et al. [25] introduced the Ped-Cross generative adversarial network (Ped-Cross GAN) to generate pedestrian crosswalk scenes. Importance sampling [26] and Monte Carlo search [27] have also been widely applied to generate critical scenarios.

Although rule-based approaches are efficient and simple, formulating the rules often introduces subjective choices of rules and thresholds. Such rules do not generalize well across different NDD and can lead to subjective errors. In contrast, machine learning-based methods learn features automatically and identify scenarios directly from data, which makes them more adaptive. Machine learning has therefore become one of the mainstream methodologies for scene identification and is likely to remain important in the future.

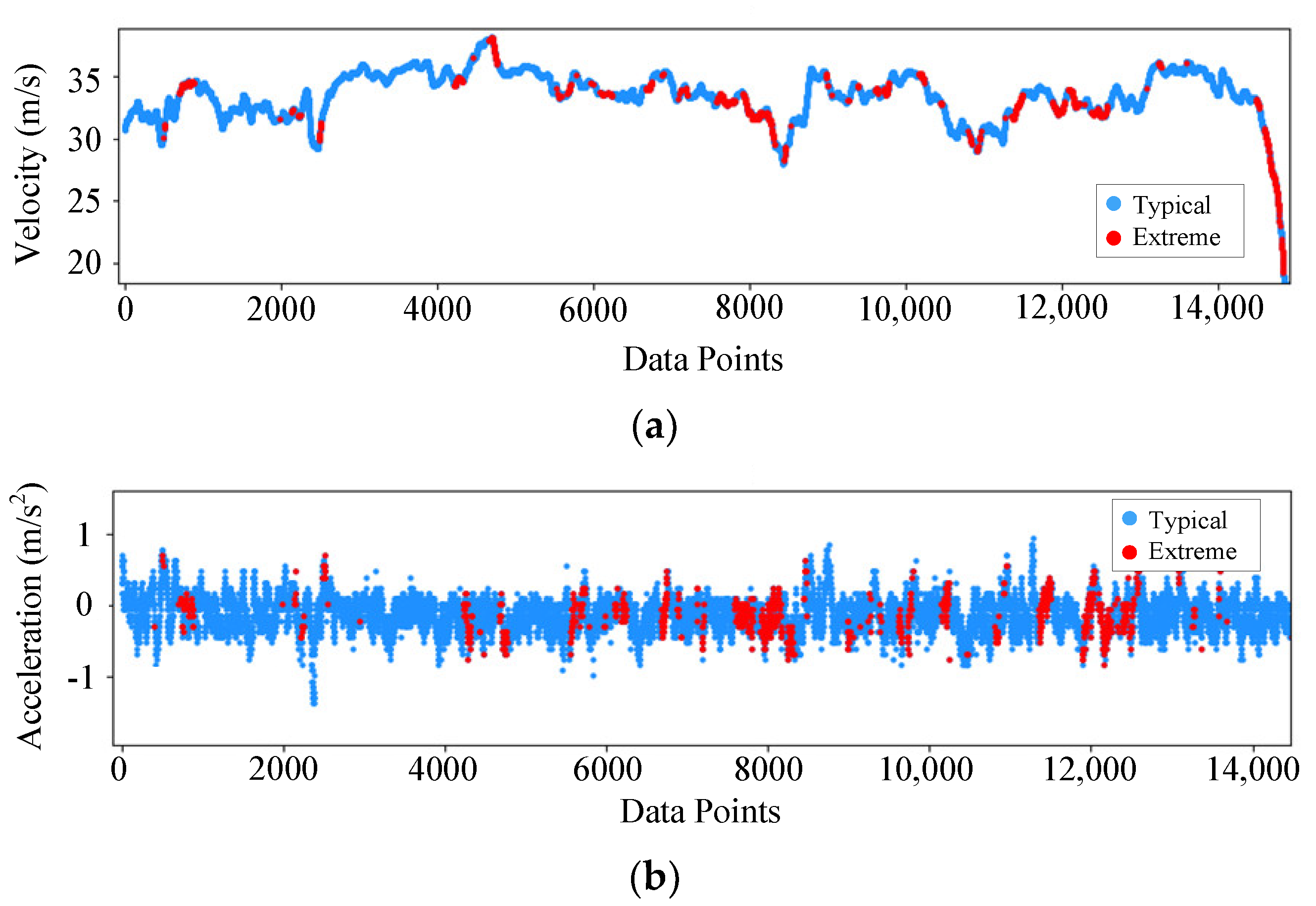

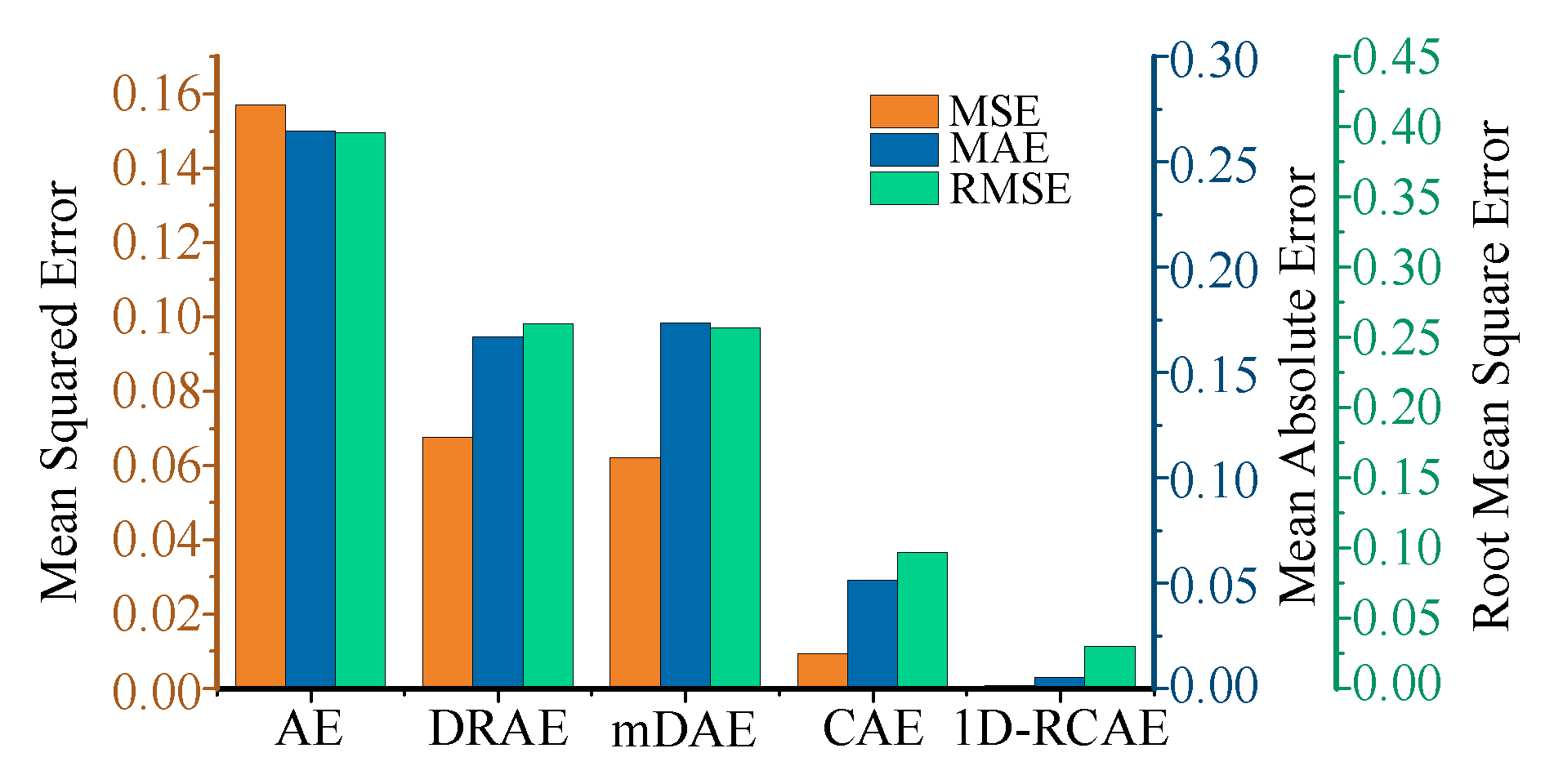

To obtain scenarios under both extreme and typical driving conditions, the original NDD must be divided into extreme and typical subsets. Isolation forest (IF) [28] is a fast, efficient, and unsupervised anomaly detection method suited to this segmentation. It builds an ensemble of trees that recursively partition the data using randomly chosen features and split values; samples that are isolated after only a few splits (short average path lengths) are regarded as extreme, whereas samples that require many splits are regarded as typical. Because the raw data are high-dimensional, training directly on them would be inefficient, so a compact feature representation is needed; autoencoders [29] are widely used for such feature extraction. A conventional autoencoder (AE) uses fully connected layers, which results in a large number of parameters; on high-dimensional data it is prone to overfitting and lacks local perception. Convolutional neural networks, by contrast, consist of convolutional and pooling layers whose kernels are shared across input positions, which reduces the number of model parameters and accelerates training. However, deeper networks are harder and costlier to train and can suffer from vanishing or exploding gradients. Residual learning [30], which adds shortcut connections around groups of layers, helps gradients propagate through the network and thereby improves training performance.
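The isolation principle described above can be sketched from scratch in a few dozen lines, following the original isolation forest formulation of Liu, Ting, and Zhou. This is a minimal illustration under stated assumptions, not the authors' implementation: the two features (speed and longitudinal acceleration), the contamination fraction used to cut typical from extreme samples, and all function names are hypothetical, and in practice a library implementation such as scikit-learn's `IsolationForest` would normally be used.

```python
import math
import random


def c_factor(n):
    """Average path length of an unsuccessful BST search over n points (IF normaliser)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # H(n-1) approximated via Euler's constant
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Grow one isolation tree using a random feature and a random split value."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # cannot split on a constant feature; treat as a leaf
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x reaches a leaf, plus the expected depth of the unbuilt subtree."""
    if "split" not in node:
        return depth + c_factor(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def anomaly_scores(data, n_trees=100, sample_size=256, seed=0):
    """s(x) = 2^(-E[h(x)] / c(psi)); values close to 1 are easy to isolate (extreme)."""
    assert len(data) >= 2, "need at least two samples"
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = c_factor(psi)
    return [2.0 ** (-sum(path_length(x, t) for t in trees) / n_trees / norm) for x in data]


def split_typical_extreme(data, contamination=0.05, **kwargs):
    """Hypothetical rule: the top `contamination` fraction of scores is 'extreme'."""
    scores = anomaly_scores(data, **kwargs)
    n_extreme = max(1, round(contamination * len(data)))
    ranked = sorted(range(len(data)), key=lambda i: scores[i], reverse=True)
    extreme_idx = set(ranked[:n_extreme])
    typical = [p for i, p in enumerate(data) if i not in extreme_idx]
    extreme = [p for i, p in enumerate(data) if i in extreme_idx]
    return typical, extreme


if __name__ == "__main__":
    gen = random.Random(42)
    # Hypothetical frames: (speed in m/s, longitudinal acceleration in m/s^2)
    frames = [(20 + gen.gauss(0, 1), gen.gauss(0, 0.3)) for _ in range(200)]
    frames += [(60.0, -8.0), (0.0, 8.0), (70.0, 9.0)]  # injected extreme frames
    typical, extreme = split_typical_extreme(frames, contamination=0.015)
    print(len(typical), len(extreme))  # -> 200 3
```

Cutting at a fixed contamination fraction is only one option; a fixed score threshold (for example s > 0.6) is another common choice, and which one suits a given NDD set is a judgment call.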

Identifying test scenarios is crucial in the research and development of autonomous driving systems. The identified scenarios can be used to evaluate the performance of autonomous driving algorithms objectively: testing the behavior and performance of autonomous vehicles in various scenarios reveals an algorithm's strengths and limitations and guides its improvement and optimization. Because different test scenarios involve different risks and challenges, accurately identifying and categorizing them can help autonomous vehicles respond proactively to potential hazards, thereby ensuring safety. Identifying test scenarios from the available data resources is therefore a critical issue that requires urgent attention in the development of autonomous driving technology.

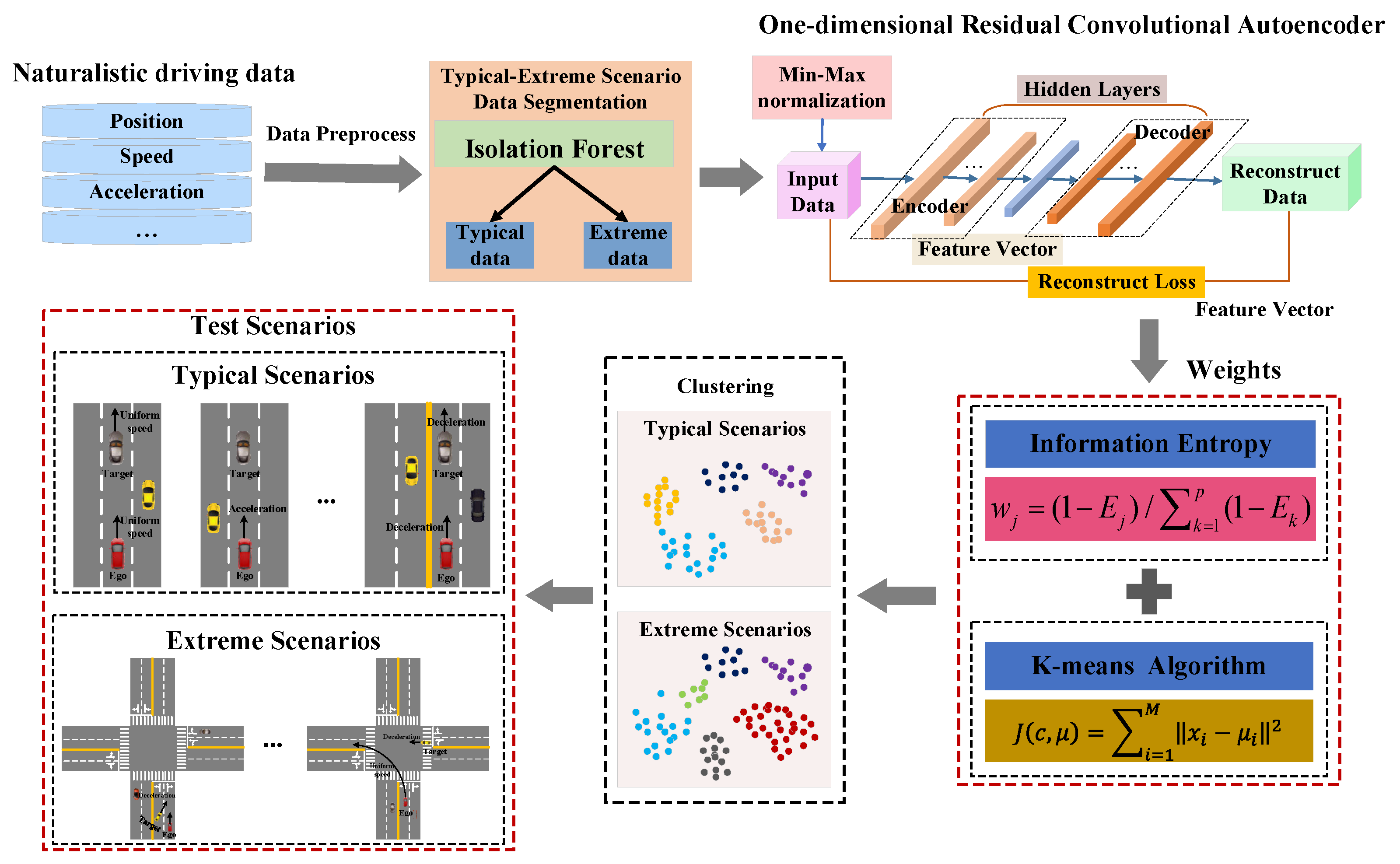

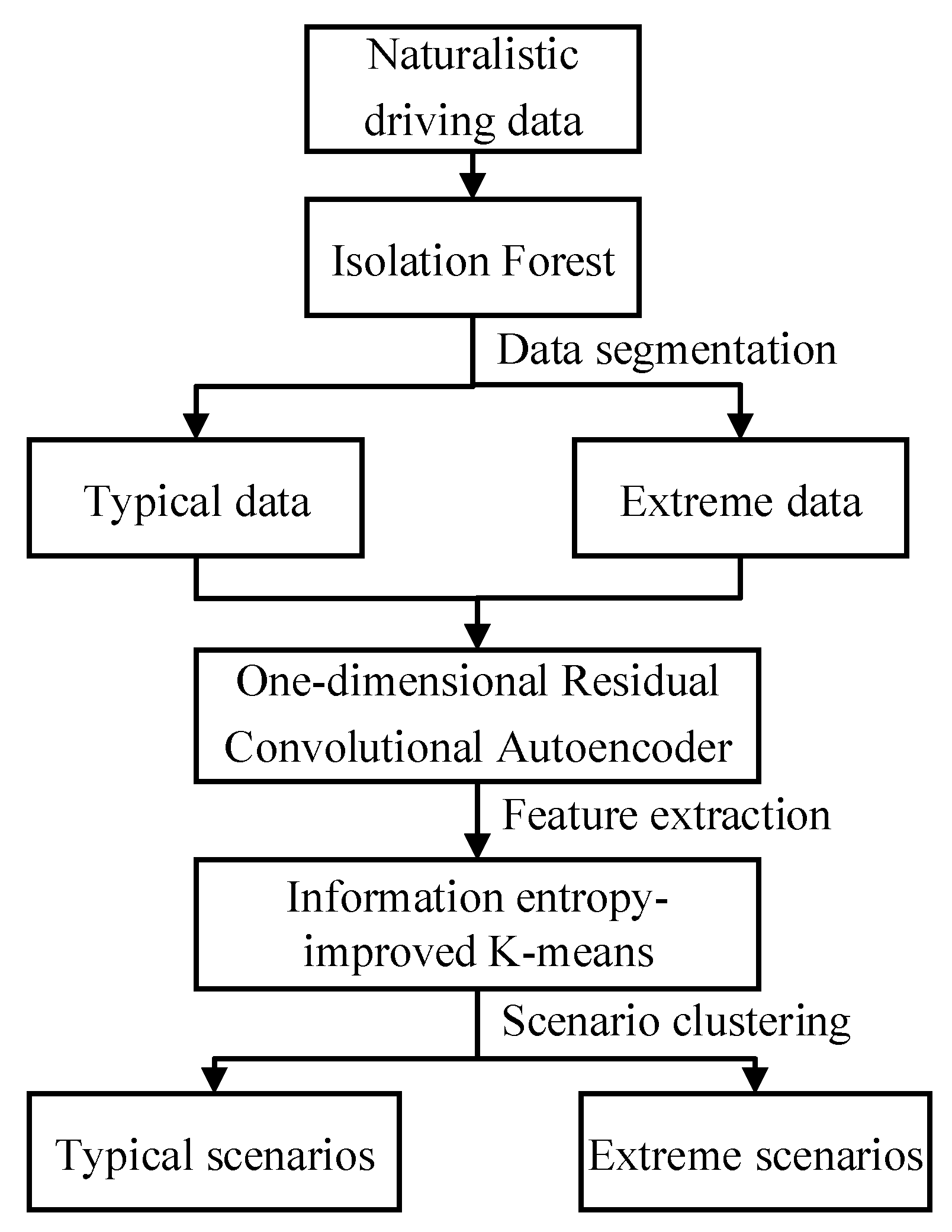

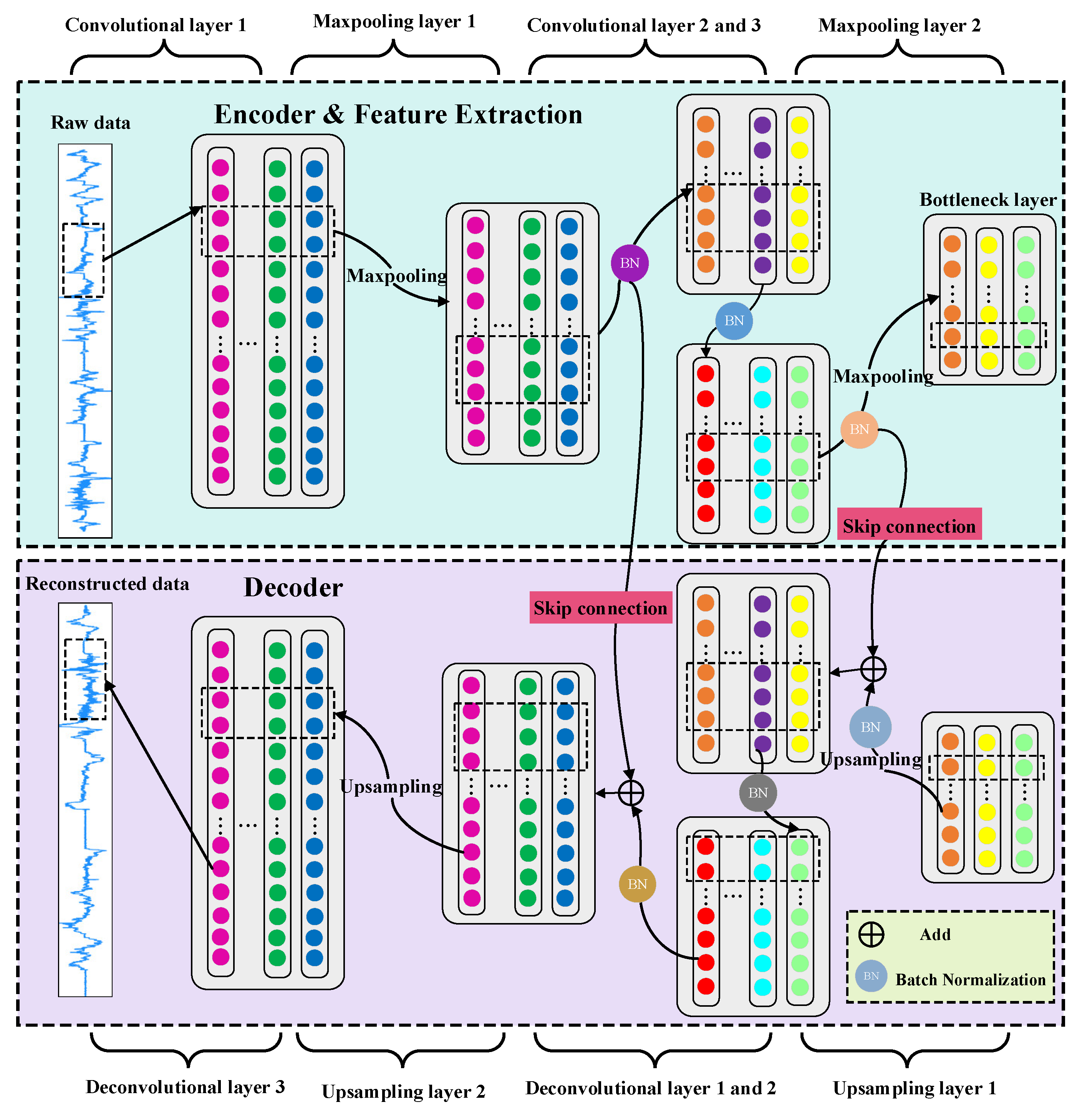

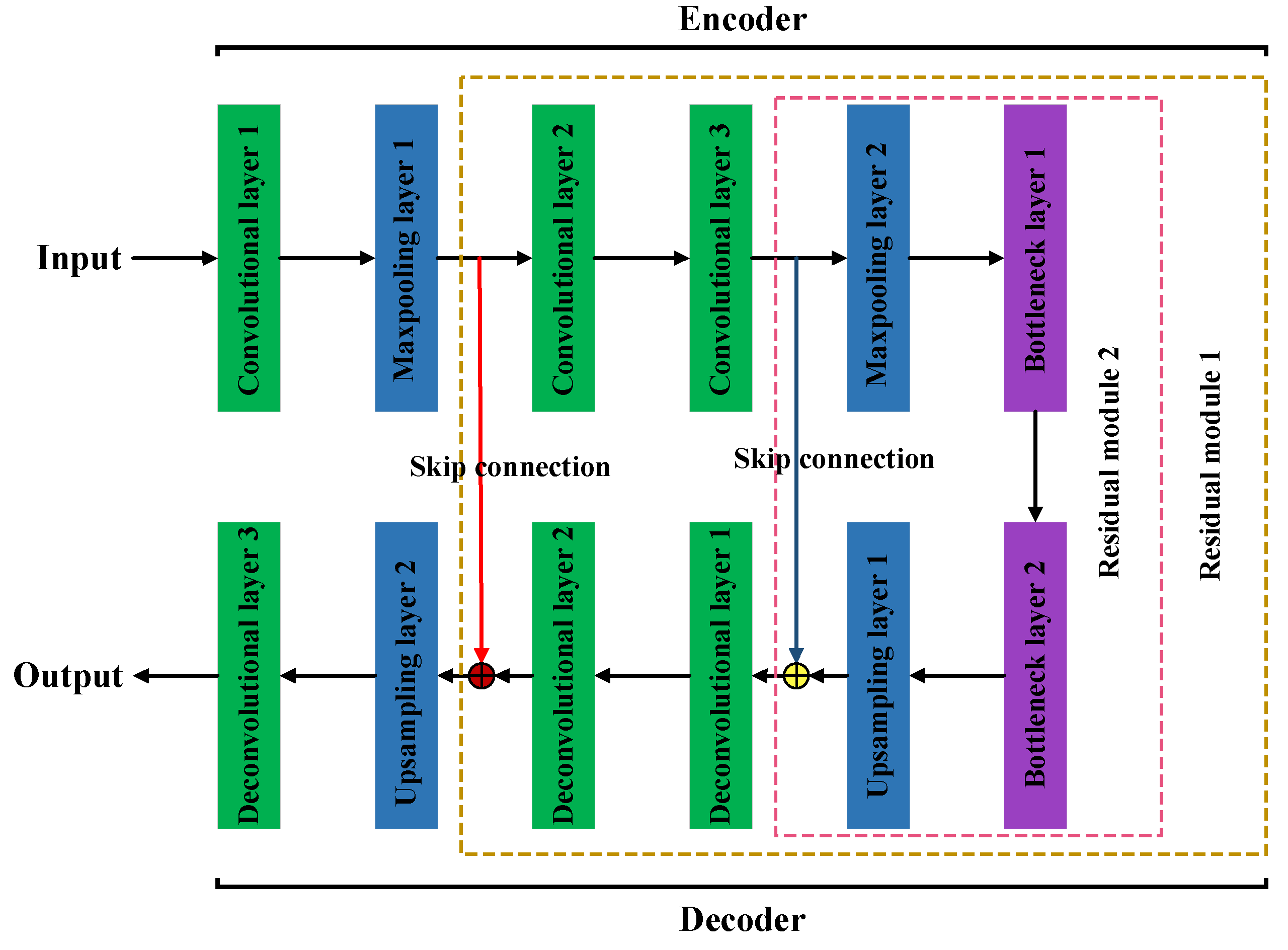

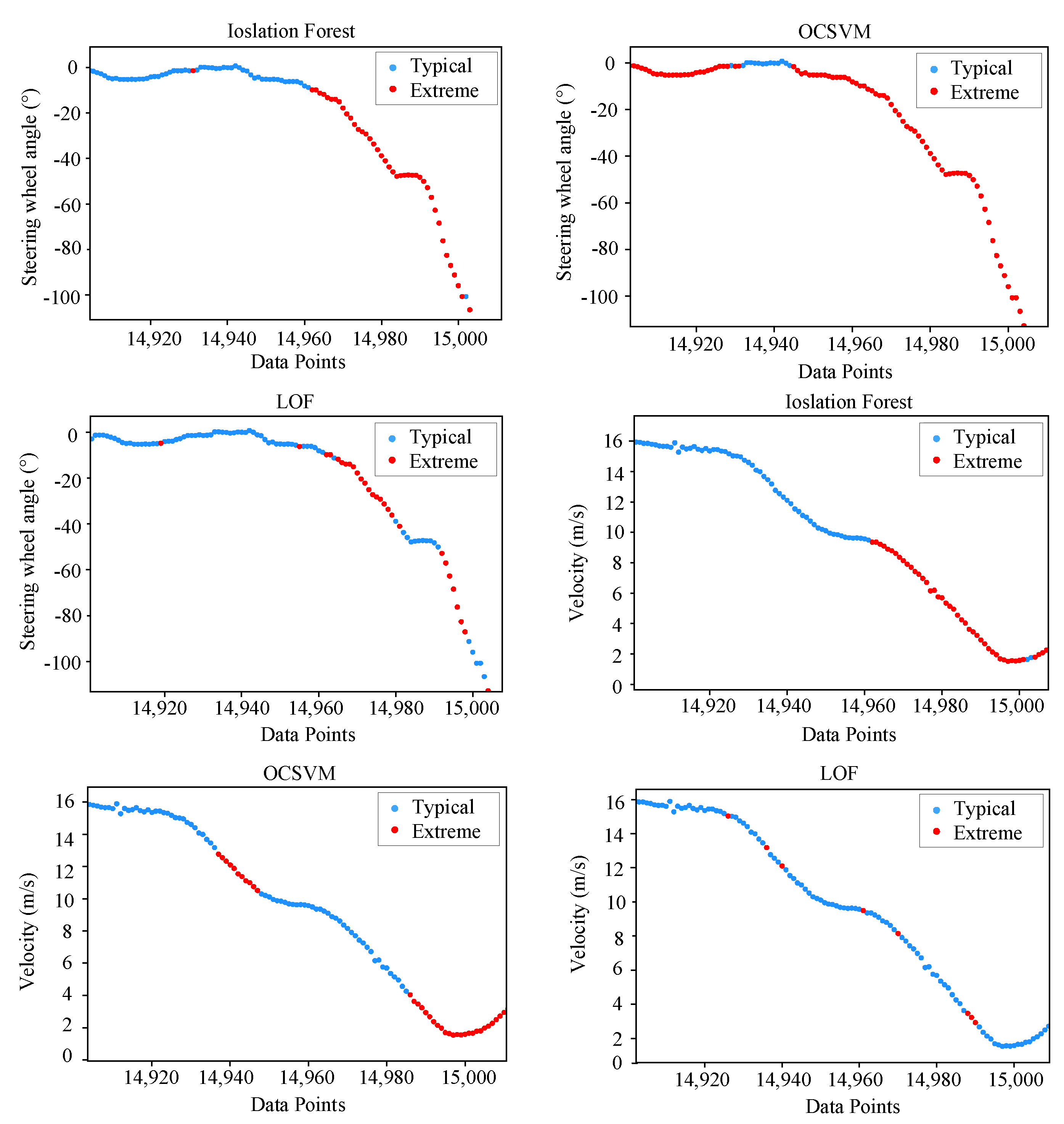

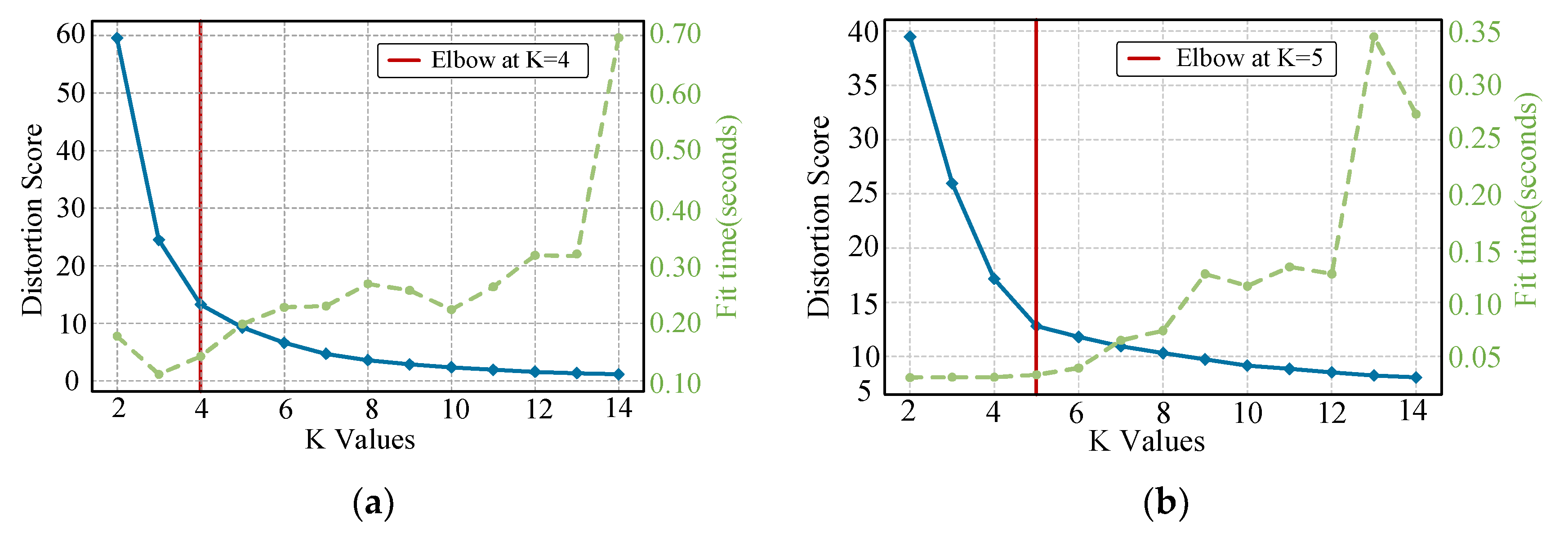

This paper addresses the challenges posed by large-scale, complex, unlabeled NDD by proposing a deep unsupervised learning framework for autonomous driving test scenario identification that combines IF, a one-dimensional residual convolutional autoencoder (1D-RCAE), and an information entropy (IE)-optimized K-means algorithm. The method is also robust to the complex noise and high-dimensional features present in NDD. The main contributions of this study are as follows: (1) IF is used to segment typical and extreme driving scenarios, yielding separate datasets for extracting typical and extreme scenes. (2) A novel neural network, the 1D-RCAE, is designed to learn and extract features from data without labels, in contrast to traditional machine learning methods. (3) A residual learning mechanism is introduced to optimize the training process and enhance the feature extraction capability of the network. (4) IE is applied to optimize the K-means algorithm, improving the accuracy and robustness of the clustering. The framework of the proposed method is shown in Figure 1.
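The exact architecture of the 1D-RCAE is not given in this section, so the single-channel sketch below only illustrates the two building blocks that contributions (2) and (3) rely on: a 1D convolution whose kernel weights are shared across all time steps, and a residual block of the form y = ReLU(x + F(x)). The kernel values, layer sizes, and function names are hypothetical; a real 1D-RCAE would use multi-channel encoder and decoder layers in a deep learning framework.

```python
def relu(xs):
    return [max(0.0, v) for v in xs]


def conv1d_same(signal, kernel, bias=0.0):
    """Single-channel 1D convolution with zero 'same' padding (odd kernel length).

    As in deep-learning layers this is a cross-correlation: the same len(kernel)
    weights slide over every time step, i.e. parameters are shared across time.
    """
    k = len(kernel)
    assert k % 2 == 1, "use an odd kernel length so the output keeps the input length"
    pad = k // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [bias + sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(signal))]


def residual_block(x, kernel1, kernel2):
    """y = ReLU(x + F(x)) with F(x) = conv(ReLU(conv(x))); the shortcut adds x back unchanged."""
    residual = conv1d_same(relu(conv1d_same(x, kernel1)), kernel2)
    return relu([xi + ri for xi, ri in zip(x, residual)])


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv1d_params(kernel_size, c_in, c_out):
    """Weights plus biases of a 1D convolutional layer (independent of sequence length)."""
    return kernel_size * c_in * c_out + c_out


if __name__ == "__main__":
    speed = [20.0, 20.5, 21.0, 21.2, 21.1]
    # With all-zero kernels F(x) = 0, so the block passes non-negative input straight through.
    print(residual_block(speed, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]))  # -> [20.0, 20.5, 21.0, 21.2, 21.1]
    # Hypothetical sizes: 200 time steps x 8 signals, flattened (dense) vs. convolved (k=3).
    print(dense_params(200 * 8, 200 * 8), conv1d_params(3, 8, 8))  # -> 2561600 200
```

The zero-kernel case shows why residual learning eases training: a residual block can represent the identity mapping simply by driving F(x) toward zero, so adding layers need not degrade the network. The parameter counts illustrate the sharing argument for convolutional over fully connected layers.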

5. Conclusions

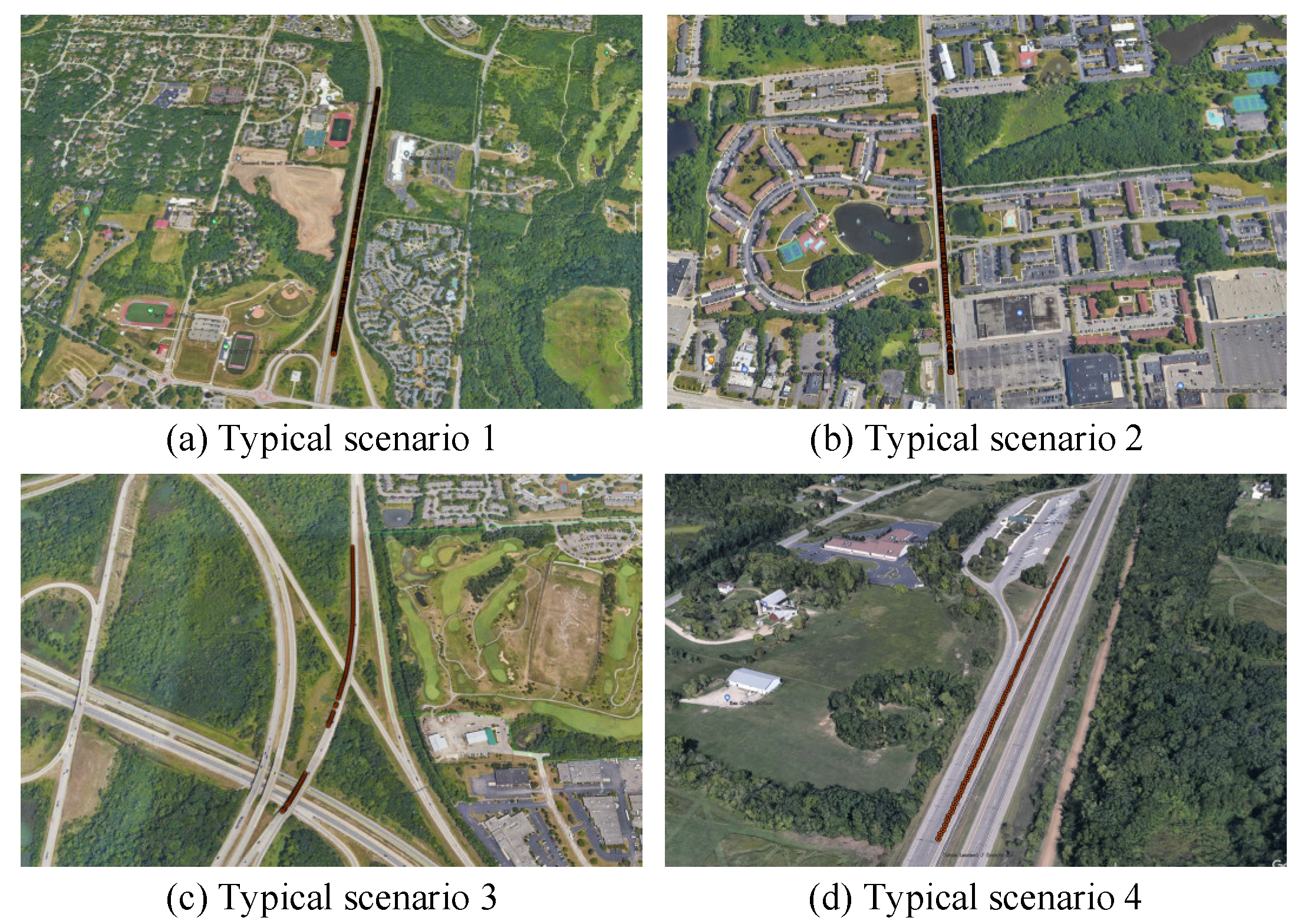

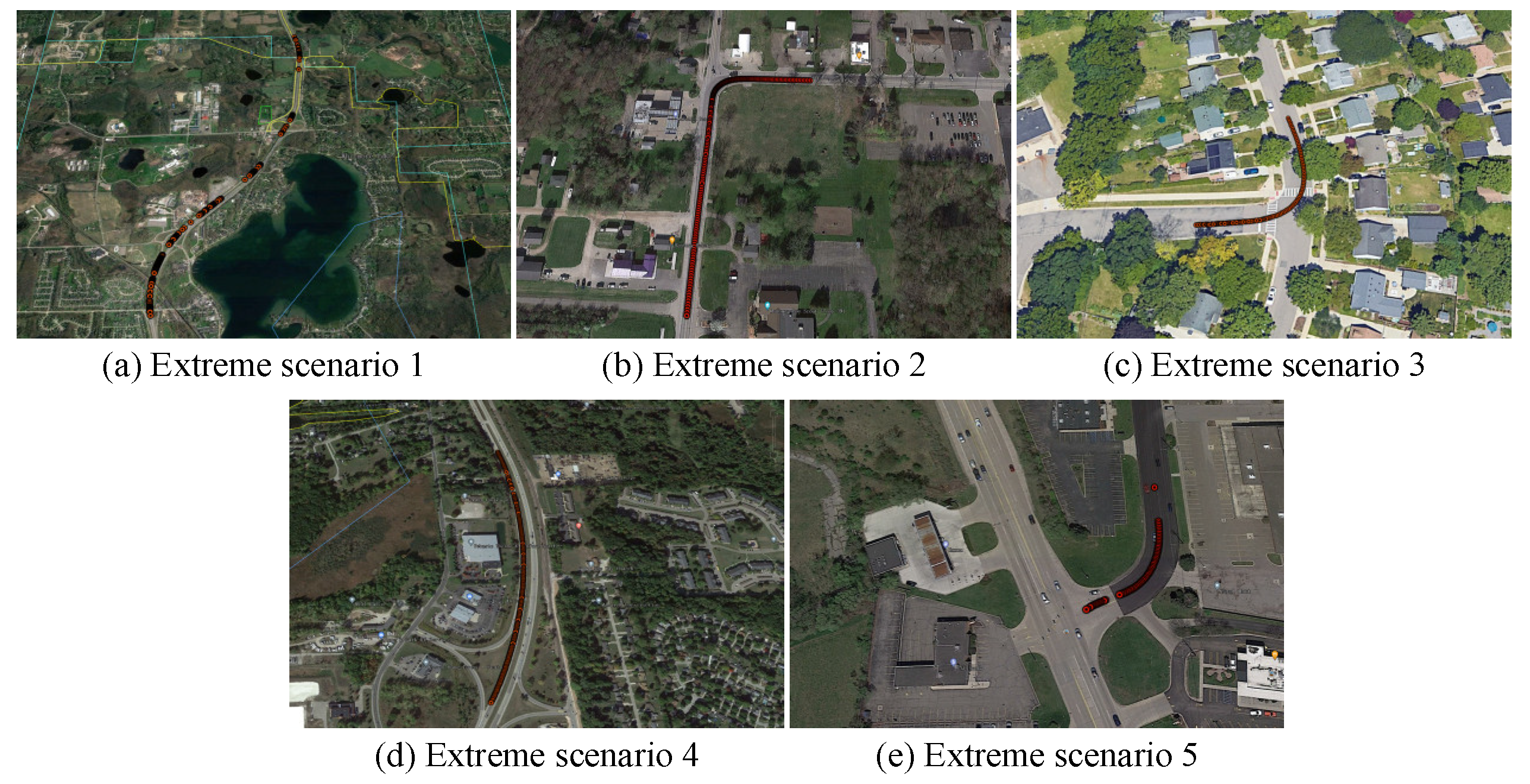

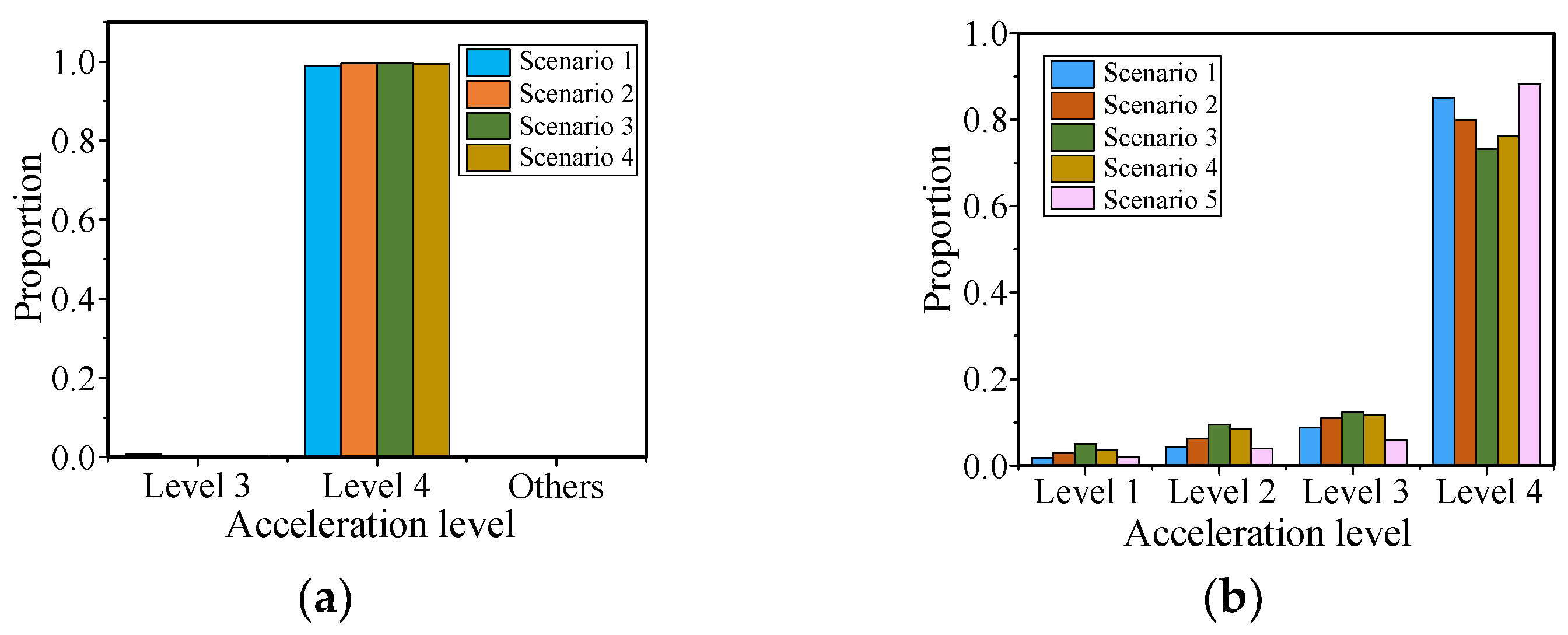

This paper proposes a deep unsupervised learning method for identifying autonomous driving test scenarios that combines IF, 1D-RCAE, and an IE-optimized K-means algorithm. First, data variables that represent the ego vehicle state and the surrounding environment are selected as the segmentation criteria, and IF is employed for typical–extreme scenario segmentation; the results show that IF yields more interpretable segmentation results than the local outlier factor (LOF) and the one-class support vector machine (OCSVM). Second, a novel network, the 1D-RCAE, is designed to extract scene features; it outperforms the other networks compared, demonstrating its superior feature extraction capability. Finally, because different features differ in importance, the K-means algorithm is optimized using IE and used to cluster the extracted scene features. Analyzing the characteristics of each scene parameter across categories yields four typical scenes and five extreme scenes. Compared with other commonly applied clustering algorithms, the IE-optimized K-means algorithm performs best. The identified scenes can provide strong support for the construction of an autonomous driving test scenario library.
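This section does not specify exactly how IE enters K-means. The sketch below assumes one common reading, the entropy weight method: each min-max-normalized feature j receives an information entropy e_j = -(1/ln n) Σ_i p_ij ln p_ij with p_ij = x_ij / Σ_i x_ij, its weight is w_j ∝ 1 - e_j, and K-means then clusters with the weighted squared Euclidean distance Σ_j w_j (x_j - c_j)^2. The feature values, the deterministic farthest-point initialization (k-means++ is the usual randomized choice), and all function names are hypothetical; this is not the authors' implementation.

```python
import math


def min_max_normalize(data):
    """Scale every feature (column) to [0, 1]; constant columns become all zeros."""
    scaled_cols = []
    for col in zip(*data):
        lo, hi = min(col), max(col)
        scaled_cols.append([(v - lo) / (hi - lo) if hi > lo else 0.0 for v in col])
    return [list(row) for row in zip(*scaled_cols)]


def entropy_weights(norm_data):
    """Entropy weight method: lower-entropy (more discriminative) features get larger weights."""
    n, m = len(norm_data), len(norm_data[0])
    divergence = []
    for j in range(m):
        col = [row[j] for row in norm_data]
        total = sum(col)
        if total == 0:
            e_j = 1.0  # constant feature: maximal entropy, no discriminative information
        else:
            p = [v / total for v in col]
            e_j = -sum(pj * math.log(pj) for pj in p if pj > 0) / math.log(n)
        divergence.append(1.0 - e_j)
    s = sum(divergence)
    return [d / s for d in divergence] if s > 0 else [1.0 / m] * m


def weighted_sq_dist(a, b, w):
    return sum(wj * (aj - bj) ** 2 for aj, bj, wj in zip(a, b, w))


def weighted_kmeans(points, k, w, max_iter=100):
    # Farthest-point initialisation: start at the first point, then repeatedly add the
    # point whose weighted distance to its nearest existing centre is largest.
    centers = [list(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(weighted_sq_dist(p, c, w) for c in centers))
        centers.append(list(far))
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: weighted_sq_dist(p, centers[c], w)) for p in points]
        if new_labels == labels:
            break  # assignments are stable: converged
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centre if a cluster becomes empty
                centers[c] = [sum(vals) / len(members) for vals in zip(*members)]
    return labels, centers


def identify_scenes(data, k):
    """Normalise, weight features by entropy, then cluster with weighted K-means."""
    norm = min_max_normalize(data)
    w = entropy_weights(norm)
    labels, _ = weighted_kmeans(norm, k, w)
    return labels, w


if __name__ == "__main__":
    # Hypothetical scene feature vectors; the third feature is constant and carries no information.
    scenes = [(0.0, 0.0, 5.0), (0.1, 0.2, 5.0), (0.2, 0.1, 5.0),
              (10.0, 10.0, 5.0), (10.1, 9.9, 5.0), (9.8, 10.2, 5.0)]
    labels, weights = identify_scenes(scenes, 2)
    print(labels)  # -> [0, 0, 0, 1, 1, 1]
    print(weights)
```

Because the weights are per-feature constants, the centroid update is still the ordinary mean, so the only change from standard K-means is the distance used in the assignment step.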

In future work, virtual test scenarios will be constructed from the identified scenes to test autonomous driving systems, and an assessment system will be built to evaluate the test results quantitatively and suggest how the systems can be optimized. Building on this foundation, research will also be conducted on scene generalization to generate various types of scenarios and enrich the automated driving test scenario library. These scenarios will be used to verify automated driving algorithms, identify deficiencies through scenario testing, and make improvements that enhance the safety of autonomous vehicles.