Research on a Lightweight Panoramic Perception Algorithm for Electric Autonomous Mini-Buses

Abstract

1. Introduction

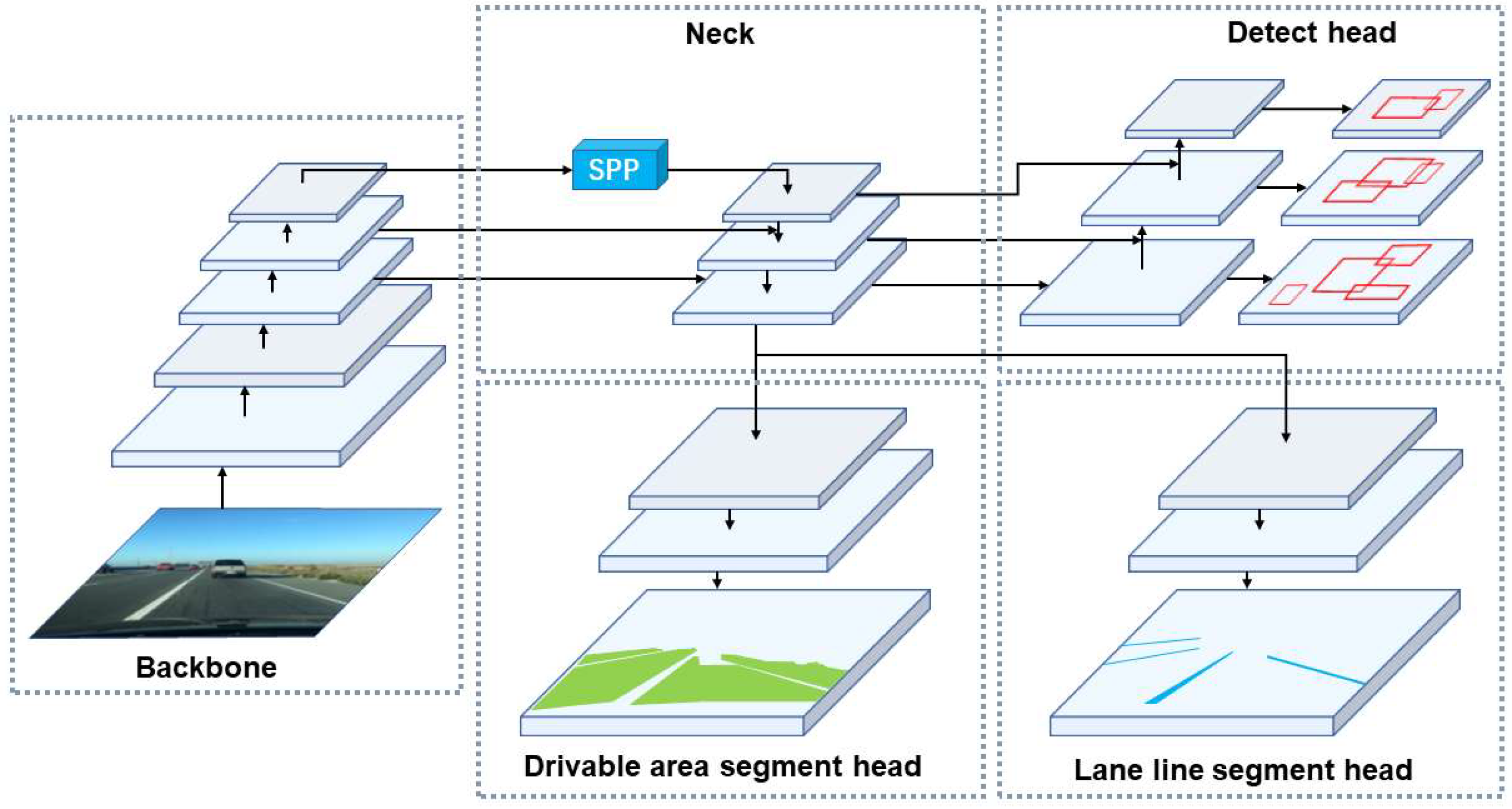

2. Algorithm YOLOP-E

2.1. Algorithm YOLOP

2.2. Lightweighting of Backbone Network
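The ablation results attribute most of the parameter reduction (42.4 M to 26.9 M) to the EfficientNet backbone. EfficientNet-family networks are built largely from MBConv blocks, whose core is a depthwise-separable convolution. EfficientNetV2 also uses Fused-MBConv blocks with regular convolutions in its early stages. The arithmetic below shows why splitting a standard convolution into a depthwise and a pointwise convolution saves so many weights. It uses a hypothetical 3×3 layer mapping 128 to 256 channels and is not a count of the actual YOLOP-E backbone. The function names are illustrative.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv (biases omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixes channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, 256 output channels
standard = conv_params(3, 128, 256)                  # 294912
separable = depthwise_separable_params(3, 128, 256)  # 1152 + 32768 = 33920
print(standard, separable, round(separable / standard, 3))  # 294912 33920 0.115
```

The ratio is exactly 1/C_out + 1/k², so for 3×3 kernels the factorized layer needs only a little more than one ninth of the weights.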

2.3. Optimization of Attention Mechanism
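CBAM (Woo et al.) refines a feature map in two steps. First, a shared MLP turns the globally average-pooled and max-pooled channel descriptors into a channel-attention vector. Second, a 7×7 convolution over the channel-wise average and max maps produces a spatial-attention map. The ablation results list a CBAM attention mechanism as one of the YOLOP-E modifications. Where it is inserted and which reduction ratio it uses are not stated here, so the NumPy sketch below only illustrates the standard module on a single (C, H, W) feature map. The weights are random placeholders, biases are omitted, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(f, w0, w1):
    """f: (C, H, W); w0: (C//r, C) and w1: (C, C//r) form the shared MLP."""
    def mlp(v):
        return w1 @ np.maximum(w0 @ v, 0.0)  # hidden layer with ReLU
    avg = f.mean(axis=(1, 2))  # (C,) global average pooling
    mx = f.max(axis=(1, 2))    # (C,) global max pooling
    return sigmoid(mlp(avg) + mlp(mx))  # (C,) channel weights


def spatial_attention(f, k):
    """k: (2, s, s) kernel applied to the stacked channel-wise mean and max maps."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W)
    pad = k.shape[-1] // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero "same" padding
    h, w = f.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            window = padded[:, i:i + k.shape[1], j:j + k.shape[2]]
            out[i, j] = np.sum(window * k)  # cross-correlation, as in conv layers
    return sigmoid(out)  # (H, W) spatial weights


def cbam(f, w0, w1, k):
    f = f * channel_attention(f, w0, w1)[:, None, None]  # channel refinement first
    return f * spatial_attention(f, k)[None, :, :]       # then spatial refinement


rng = np.random.default_rng(0)
C, H, W, r = 8, 5, 6, 4
feat = rng.standard_normal((C, H, W))
w0 = rng.standard_normal((C // r, C))
w1 = rng.standard_normal((C, C // r))
kernel = rng.standard_normal((2, 7, 7))
print(cbam(feat, w0, w1, kernel).shape)  # (8, 5, 6)
```

A useful sanity check: with all-zero weights, both attention maps equal sigmoid(0) = 0.5, so the module returns exactly 0.25 times its input.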

2.4. Optimization of Loss Function
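The ablation results pair a Focal-EIoU box loss with a symmetric cross-entropy (SCE) loss. In the standard formulations, EIoU (Zhang et al.) adds three penalties to 1 − IoU: the squared centre distance normalized by the squared diagonal c² of the smallest enclosing box, and the squared width and height differences normalized by that box's C_w² and C_h². Focal-EIoU then scales this sum by IoU^γ. SCE (Wang et al.) is α·CE + β·RCE, where the reverse term RCE swaps prediction and label and clamps log 0 to a constant A. The sketch below implements these textbook forms. The values γ = 0.5, α = 0.1, β = 1.0 and A = −4 are illustrative assumptions, not the YOLOP-E settings, which are not given here.

```python
import numpy as np


def focal_eiou_loss(pred, gt, gamma=0.5):
    """Focal-EIoU for one pair of boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph, gw, gh = px2 - px1, py2 - py1, gx2 - gx1, gy2 - gy1
    inter = (max(0.0, min(px2, gx2) - max(px1, gx1))
             * max(0.0, min(py2, gy2) - max(py1, gy1)))
    iou = inter / (pw * ph + gw * gh - inter)
    cw = max(px2, gx2) - min(px1, gx1)  # width of the smallest enclosing box
    ch = max(py2, gy2) - min(py1, gy1)  # height of the smallest enclosing box
    # squared distance between the two box centres
    rho2 = ((px1 + px2 - gx1 - gx2) / 2) ** 2 + ((py1 + py2 - gy1 - gy2) / 2) ** 2
    l_eiou = (1.0 - iou
              + rho2 / (cw ** 2 + ch ** 2)  # centre-distance penalty
              + (pw - gw) ** 2 / cw ** 2    # width penalty
              + (ph - gh) ** 2 / ch ** 2)   # height penalty
    return iou ** gamma * l_eiou


def sce_loss(probs, labels, alpha=0.1, beta=1.0, log_zero=-4.0):
    """Symmetric cross entropy; probs: (N, K) probabilities, labels: (N,) class ids."""
    onehot = np.eye(probs.shape[1])[labels]
    ce = -np.sum(onehot * np.log(np.clip(probs, 1e-7, 1.0)), axis=1)
    # reverse CE swaps prediction and label; log(0) of the one-hot label is clamped to A
    log_label = np.where(onehot > 0, 0.0, log_zero)
    rce = -np.sum(probs * log_label, axis=1)
    return float(np.mean(alpha * ce + beta * rce))


print(focal_eiou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
print(sce_loss(np.array([[0.7, 0.2, 0.1]]), np.array([0])))
```

The IoU^γ factor multiplies the whole EIoU term. Low-IoU boxes therefore get a small weight, and non-overlapping boxes get zero loss, which is the reweighting toward high-quality boxes that the focal term is meant to provide. For a per-pixel segmentation head, the same SCE expression would be applied at every pixel.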

3. Experimental Design and Verification

3.1. Experimental Setup

3.2. Dataset Setting

3.3. Implementation Details

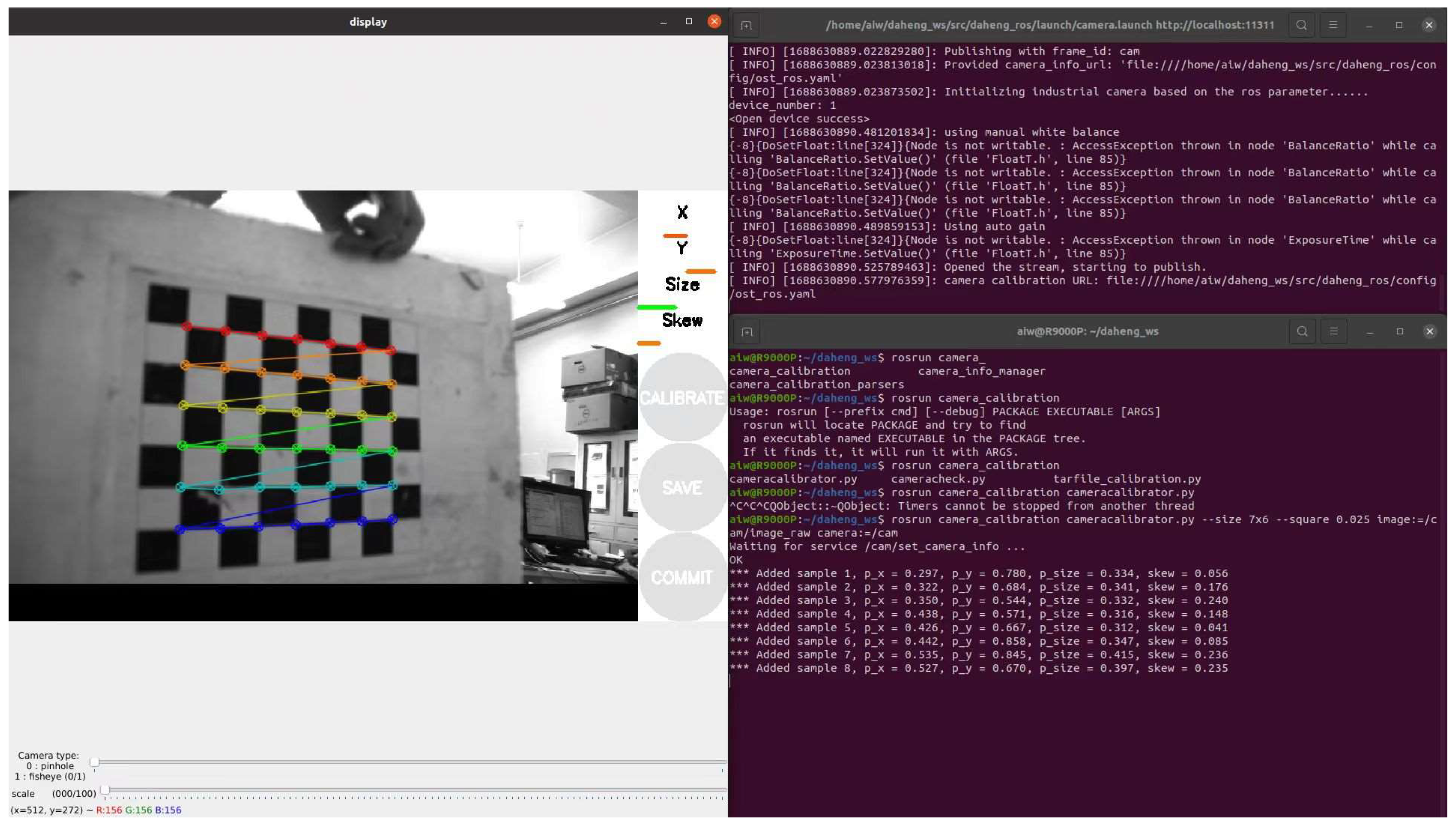

3.4. Real-Vehicle Experimental Setup

4. Experimental Results

4.1. Experimental Results and Analysis of YOLOP-E and YOLOP

4.2. Comparative Experiments with Other Algorithms

4.3. Real Vehicle Verification and Ablation Experiments

4.4. Detection Performance

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Resolution | 2448 × 2048 |
| Pixel size | 3.45 μm × 3.45 μm |
| Storage temperature | −20 to 70 °C |
| Operating temperature | 0 to 45 °C |
| Operating humidity | 10% to 80% |
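The camera table gives enough to work out the sensor's pixel count and active area. The sketch below assumes that the 3.45 μm figure is the pixel pitch, so that width and height are simply pixel count × pitch.

```python
import math

# Values from the camera parameter table; 3.45 um is taken to be the pixel pitch
width_px, height_px = 2448, 2048
pixel_pitch_um = 3.45

total_pixels = width_px * height_px                    # 5,013,504 pixels (about 5.0 MP)
sensor_w_mm = width_px * pixel_pitch_um / 1000.0       # active width, 8.4456 mm
sensor_h_mm = height_px * pixel_pitch_um / 1000.0      # active height, 7.0656 mm
sensor_diag_mm = math.hypot(sensor_w_mm, sensor_h_mm)  # about 11.01 mm

print(f"{total_pixels} px, {sensor_w_mm:.4f} x {sensor_h_mm:.4f} mm, "
      f"diagonal {sensor_diag_mm:.2f} mm")
```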

| Model | FPS | mAP50 (%) | Params (M) |
|---|---|---|---|
| YOLOv5-m | 73 | 75.3 | 18.4 |
| EfficientDet | 87.5 | 72.4 | 18.2 |
| Ours (detection only) | 69.4 | 79.4 | 26.4 |
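The detection comparison reports mAP50: average precision (AP) averaged over object classes, where a detection counts as a true positive only if its IoU with an unmatched ground-truth box is at least 0.5. The sketch below computes AP50 for one class on one image. It uses greedy matching in score order and all-point interpolation of the precision-recall curve, as in PASCAL VOC 2010 and later. The evaluation protocol behind the table is not stated, and COCO-style tools use 101-point interpolation instead. The function names are hypothetical, and at least one ground-truth box is assumed.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def ap50(preds, gts):
    """preds: list of (score, box); gts: non-empty list of ground-truth boxes."""
    preds = sorted(preds, key=lambda p: -p[0])  # highest confidence first
    matched = [False] * len(gts)
    tp = []
    for _, box in preds:
        ious = [box_iou(box, g) for g in gts]
        j = int(np.argmax(ious))
        if ious[j] >= 0.5 and not matched[j]:
            matched[j] = True
            tp.append(1.0)
        else:
            tp.append(0.0)  # low IoU or duplicate detection
    tp = np.array(tp)
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / len(gts)
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1e-12)
    # all-point interpolation: make precision monotonically non-increasing
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (50, 50, 60, 60)), (0.8, (0, 0, 10, 10))]
print(ap50(preds, gts))  # 0.25
```

mAP50 would then be the mean of these per-class AP values, accumulated over the whole test set rather than a single image.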

| Model | FPS | mIoU (%) | Params (M) |
|---|---|---|---|
| ENet | 83 | 35.2 | 18.4 |
| SCNN | 22.6 | 37.6 | 48.2 |
| Ours (segmentation only) | 61.3 | 73.7 | 26.4 |
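The segmentation comparison reports mIoU. For each class, IoU is the number of pixels where prediction and ground truth agree on that class, divided by the number of pixels where either of them assigns it. mIoU is the mean over classes. The sketch below computes it from a confusion matrix built with `np.bincount`. Skipping classes absent from both maps is an assumption here, since conventions for this case differ between toolkits. The function name is hypothetical.

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """mIoU from integer label maps of equal shape (classes 0..num_classes-1)."""
    # confusion matrix: rows = ground-truth class, columns = predicted class
    idx = target.ravel() * num_classes + pred.ravel()
    cm = np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    inter = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - inter
    valid = union > 0  # skip classes absent from both maps
    return float(np.mean(inter[valid] / union[valid]))


target = np.array([[0, 1], [0, 1]])
pred = np.array([[0, 1], [1, 1]])
print(mean_iou(pred, target, num_classes=2))  # (1/2 + 2/3) / 2, about 0.583
```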

| Configuration | FPS | mAP50 (%) | mIoU (%) | Params (M) |
|---|---|---|---|---|
| YOLOP | 16.2 | 76.5 | 70.5 | 42.4 |
| EfficientNet backbone | 36.2 | 72.4 | 62.4 | 26.9 |
| CBAM attention mechanism | 34.4 | 77.2 | 71.2 | 27.2 |
| Focal-EIoU loss and SCE loss | 19.2 | 78.6 | 71.6 | 42.7 |
| Ours | 41.6 | 79.2 | 73.4 | 27.6 |
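The trade-offs in the ablation table can be checked directly. The snippet below copies the table's rows and expresses each configuration relative to the YOLOP baseline. It reports speed as a ratio, parameters as a percentage change, and accuracy as percentage-point differences. The helper name is illustrative.

```python
# Rows copied from the ablation table: (FPS, mAP50 %, mIoU %, Params M)
rows = {
    "YOLOP": (16.2, 76.5, 70.5, 42.4),
    "EfficientNet backbone": (36.2, 72.4, 62.4, 26.9),
    "CBAM attention mechanism": (34.4, 77.2, 71.2, 27.2),
    "Focal-EIoU loss and SCE loss": (19.2, 78.6, 71.6, 42.7),
    "Ours": (41.6, 79.2, 73.4, 27.6),
}


def relative_to_baseline(name, baseline="YOLOP"):
    fps, map50, miou, params = rows[name]
    b_fps, b_map50, b_miou, b_params = rows[baseline]
    return {
        "speedup": fps / b_fps,                                  # FPS ratio
        "param_change_pct": 100.0 * (params / b_params - 1.0),   # negative = smaller
        "map50_gain_pts": map50 - b_map50,                       # percentage points
        "miou_gain_pts": miou - b_miou,                          # percentage points
    }


for name in rows:
    if name != "YOLOP":
        d = relative_to_baseline(name)
        print(f"{name}: {d['speedup']:.2f}x FPS, {d['param_change_pct']:+.1f}% params, "
              f"{d['map50_gain_pts']:+.1f} mAP50, {d['miou_gain_pts']:+.1f} mIoU")
```

For the final row, this gives about 2.57 times the YOLOP frame rate with 34.9% fewer parameters, plus 2.7 points of mAP50 and 2.9 points of mIoU.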

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Li, G.; Hao, L.; Yang, Q.; Zhang, D. Research on a Lightweight Panoramic Perception Algorithm for Electric Autonomous Mini-Buses. World Electr. Veh. J. 2023, 14, 179. https://doi.org/10.3390/wevj14070179

Liu Y, Li G, Hao L, Yang Q, Zhang D. Research on a Lightweight Panoramic Perception Algorithm for Electric Autonomous Mini-Buses. World Electric Vehicle Journal. 2023; 14(7):179. https://doi.org/10.3390/wevj14070179

Chicago/Turabian Style: Liu, Yulin, Gang Li, Liguo Hao, Qiang Yang, and Dong Zhang. 2023. "Research on a Lightweight Panoramic Perception Algorithm for Electric Autonomous Mini-Buses" World Electric Vehicle Journal 14, no. 7: 179. https://doi.org/10.3390/wevj14070179

APA Style: Liu, Y., Li, G., Hao, L., Yang, Q., & Zhang, D. (2023). Research on a Lightweight Panoramic Perception Algorithm for Electric Autonomous Mini-Buses. World Electric Vehicle Journal, 14(7), 179. https://doi.org/10.3390/wevj14070179