Request Expectation Index Based Cache Replacement Algorithm for Streaming Content Delivery over ICN

Abstract

1. Introduction

1.1. Motivation and Contributions

1.2. Organization

2. Scenario Description and Assumptions

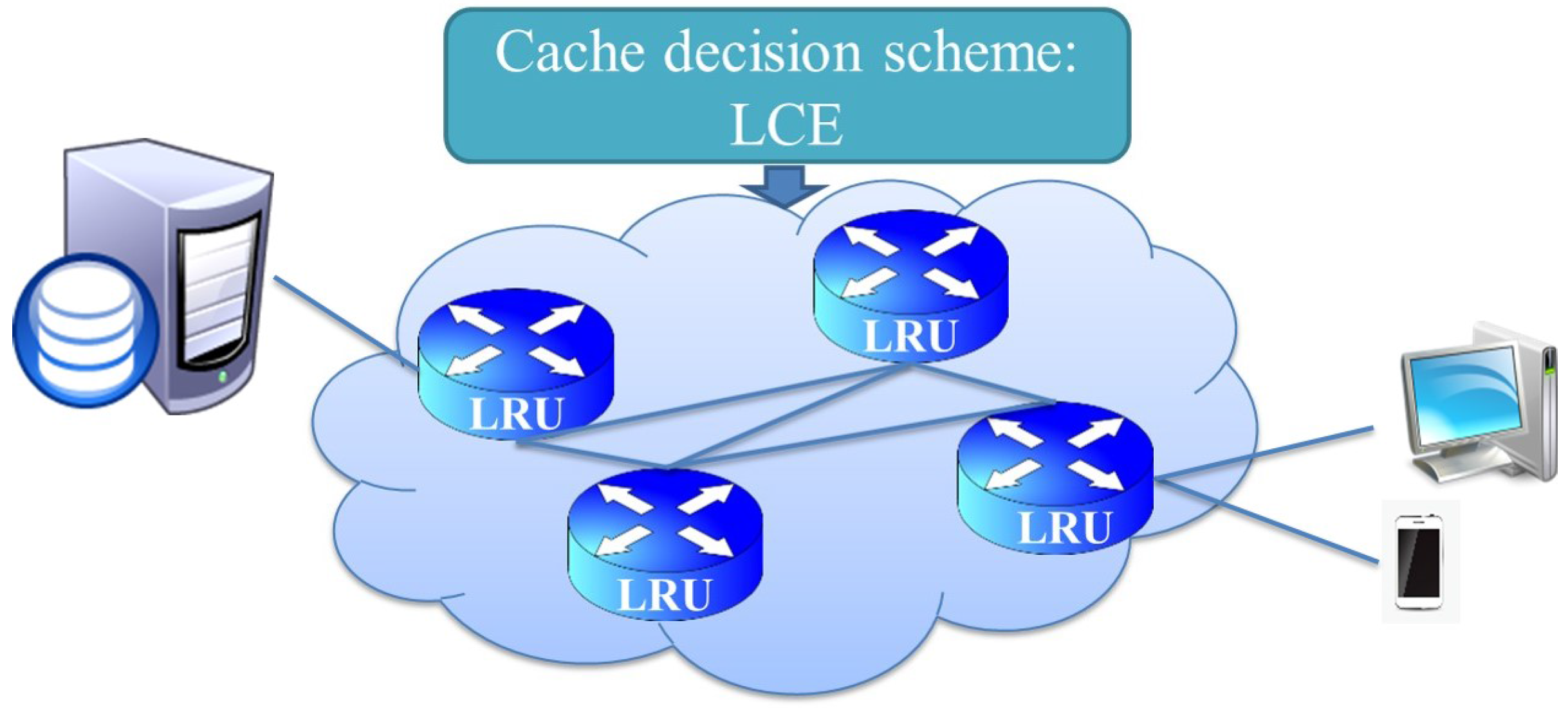

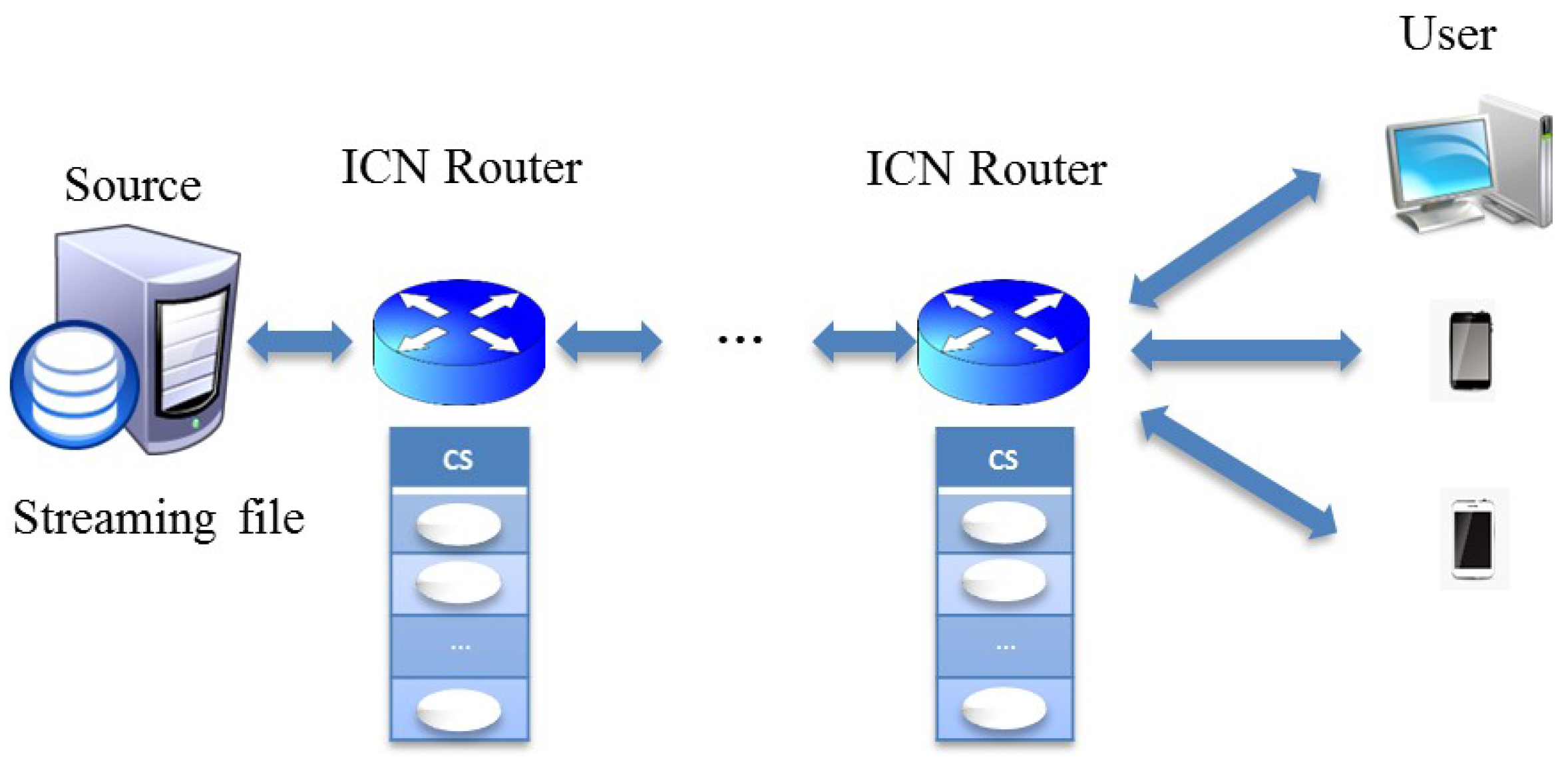

2.1. In-Network Caching for Streaming Content Delivery Scenarios

2.2. Definitions and Assumptions

- The name of the streaming chunk in ICN is abstracted as two components: the streaming file name i and the chunk sequence number j, which can be used to distinguish different chunks of the file (e.g., /3/9).

- The chunk sequence number j is ordered based on the streaming sequence (e.g., the sequence number of the first chunk is 1, the second is 2, etc.).

- Accordingly, a streaming file i consists of a set of chunks ordered by the sequence number j, and every chunk is assumed to have the same size.

- We assume that the streaming chunks are requested in sequence starting with the first chunk.

- The request duration of file i (the period between the start and end of one continuous user request for that file) follows a request duration distribution. For example, in the case of video, viewers may quit the session before the end of the video.
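The naming and request assumptions above can be illustrated with a minimal Python sketch. The names `ChunkName`, `parse_chunk_name`, and `session_requests` are hypothetical, and the timing rule (chunk j is requested at time (j - 1) × interval until the request duration elapses) is an assumption made only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChunkName:
    file_id: int  # streaming file name i
    seq: int      # chunk sequence number j (the first chunk is 1)

    def __str__(self) -> str:
        return f"/{self.file_id}/{self.seq}"


def parse_chunk_name(name: str) -> ChunkName:
    """Parse a name such as '/3/9' into file i = 3 and chunk j = 9."""
    parts = name.strip("/").split("/")
    if len(parts) != 2:
        raise ValueError(f"expected '/<file>/<chunk>', got {name!r}")
    return ChunkName(int(parts[0]), int(parts[1]))


def session_requests(file_id: int, total_chunks: int,
                     duration: float, interval: float) -> list[ChunkName]:
    """Chunks requested in one session, in order, starting with chunk 1.

    Assumption: chunk j is requested at time (j - 1) * interval, and the
    viewer quits once `duration` has elapsed, possibly before the last chunk.
    """
    if interval <= 0:
        raise ValueError("interval must be positive")
    requested = min(total_chunks, int(duration // interval) + 1)
    return [ChunkName(file_id, j) for j in range(1, requested + 1)]
```

A viewer who quits early therefore only ever requests a prefix of the file, which is why chunks with small sequence numbers tend to be requested more often than later ones.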

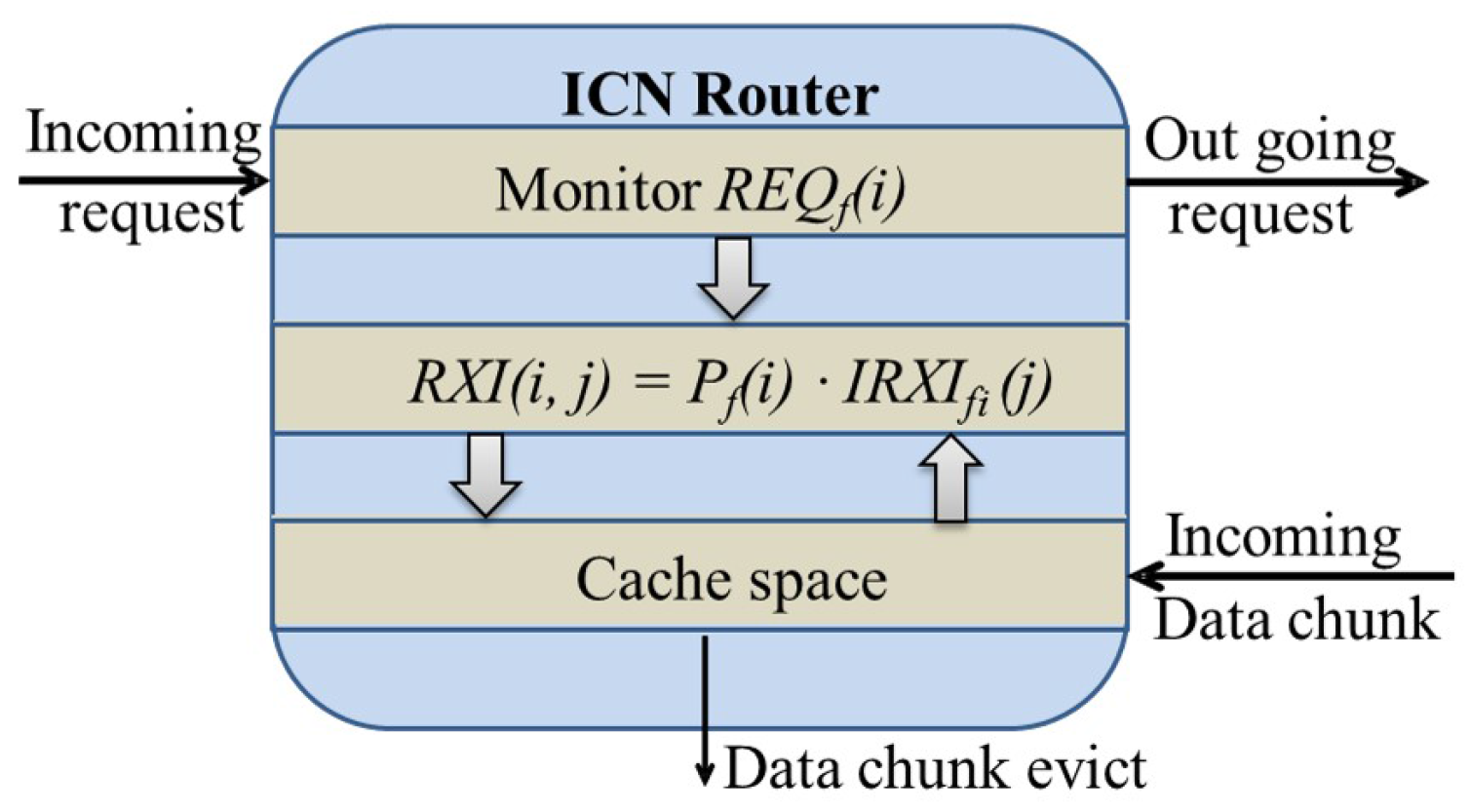

3. Request Expectation Index Based Cache Replacement Algorithm

3.1. Request Expectation Index

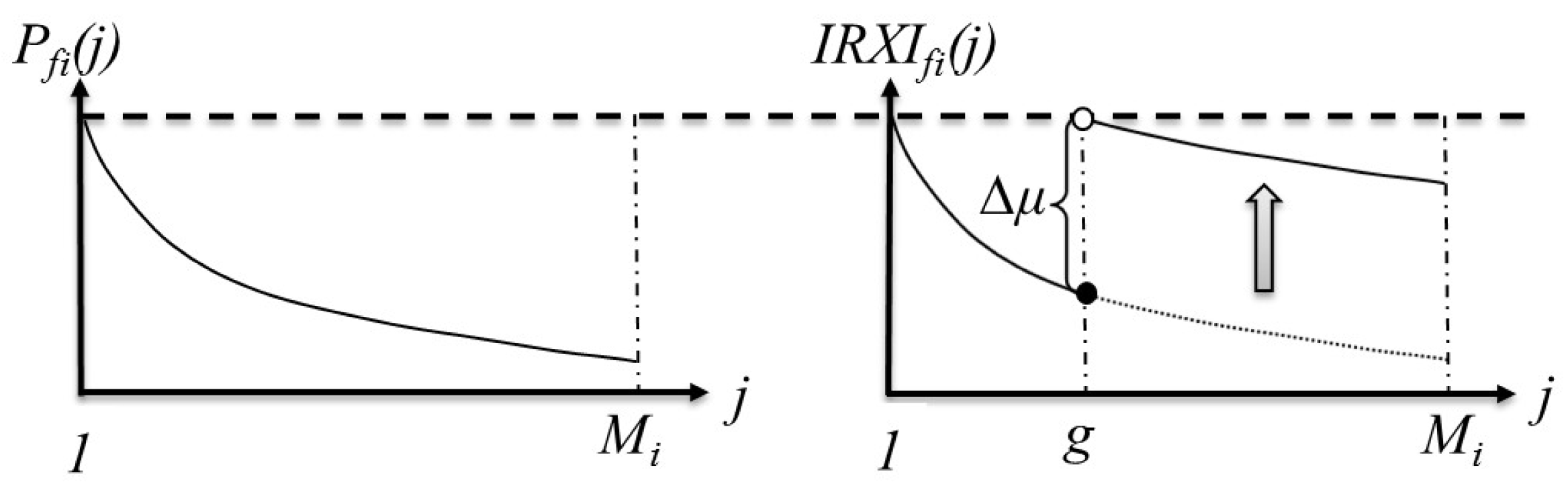

3.1.1. File-Level Request Probability

3.1.2. Chunk-Level Internal Request Probability

3.1.3. Chunk-Level Internal Request Expectation Index

- The chunk has been cached in the cache space of the ICN router.

- Updating only occurs when this ICN router receives a request for a chunk of the same file i.

- The sequence number j of the cached chunk is larger than the sequence number of the received request.
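The three conditions above can be read as a filter over the cache contents. The following is a minimal sketch under that reading; `chunks_to_update` and the representation of the cache as a set of `(file, sequence)` pairs are assumptions, and the sketch only selects the affected chunks without computing any index value.

```python
def chunks_to_update(cache: set[tuple[int, int]],
                     req_file: int, req_seq: int) -> list[tuple[int, int]]:
    """Return the cached chunks (i, j) whose index would be updated.

    A chunk qualifies when it is cached (condition 1), belongs to the file
    named in the received request (condition 2), and has a sequence number
    larger than the requested one (condition 3).
    """
    return sorted(
        (i, j) for (i, j) in cache
        if i == req_file and j > req_seq
    )


# Example: the router caches chunks of files 3 and 4 and receives /3/4.
cache = {(3, 2), (3, 5), (3, 9), (4, 7)}
affected = chunks_to_update(cache, req_file=3, req_seq=4)
```

In this example only chunks /3/5 and /3/9 are selected: /3/2 lies behind the current request, and /4/7 belongs to a different file.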

Algorithm 1: Update/Recover

3.2. RXI-Based Cache Replacement Algorithm

Algorithm 2: RXI-based Cache Replacement
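As a rough illustration of index-based replacement in general, the sketch below evicts the cached chunk with the lowest score when the cache is full. It is not a reproduction of Algorithm 2: the class `MinScoreCache`, the always-admit insertion rule, and the caller-supplied `score` function (standing in for an RXI value) are all assumptions.

```python
from typing import Callable, Hashable, Optional


class MinScoreCache:
    """Fixed-capacity cache that evicts the entry with the lowest score."""

    def __init__(self, capacity: int, score: Callable[[Hashable], float]):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.score = score          # hypothetical stand-in for an RXI lookup
        self.store: dict = {}

    def lookup(self, name: Hashable):
        """Return the cached data for `name`, or None on a cache miss."""
        return self.store.get(name)

    def insert(self, name: Hashable, data) -> Optional[Hashable]:
        """Cache `data` under `name`; return the evicted name, if any."""
        if name in self.store:
            self.store[name] = data
            return None
        evicted = None
        if len(self.store) >= self.capacity:
            # Linear scan over the cached chunks for the minimum score.
            evicted = min(self.store, key=self.score)
            del self.store[evicted]
        self.store[name] = data
        return evicted


# Example with made-up scores for three chunk names.
scores = {"/1/1": 0.9, "/1/2": 0.2, "/2/1": 0.5}
cache = MinScoreCache(capacity=2, score=scores.__getitem__)
first = cache.insert("/1/1", b"a")
second = cache.insert("/1/2", b"b")
victim = cache.insert("/2/1", b"c")
```

The linear scan keeps the sketch short; a priority queue would reduce the eviction cost but would need re-ordering whenever scores change.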

4. Simulations and Results

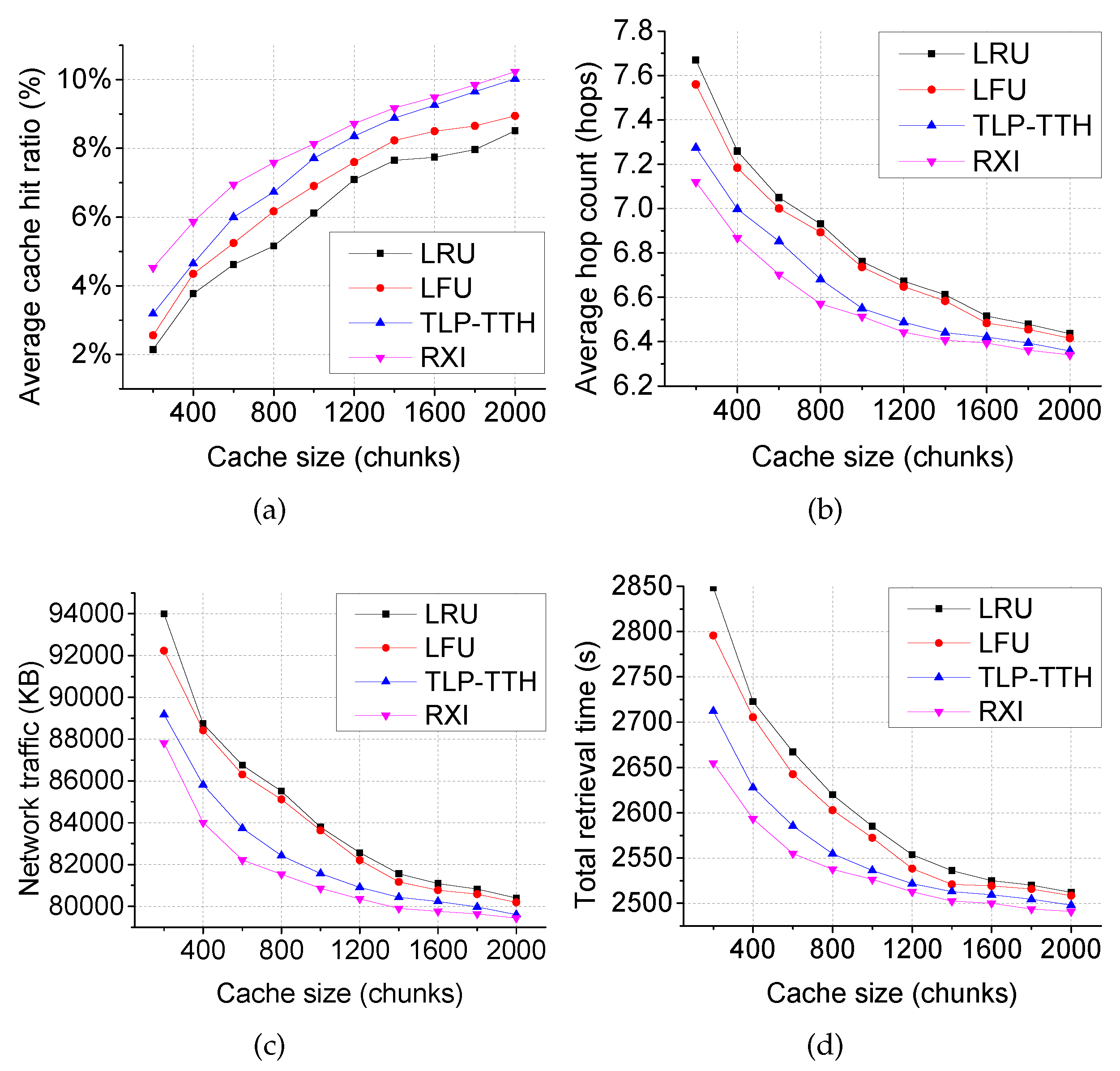

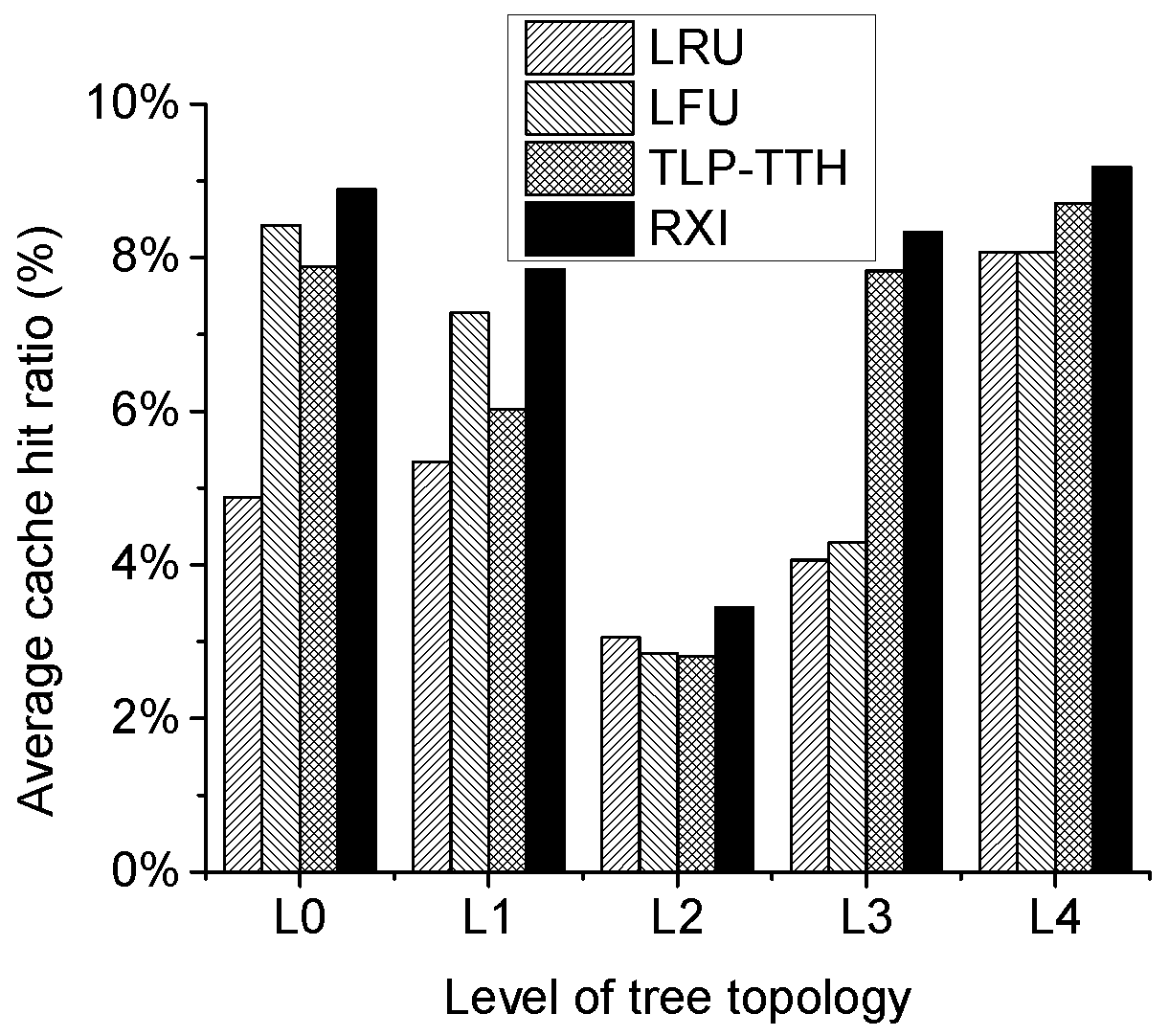

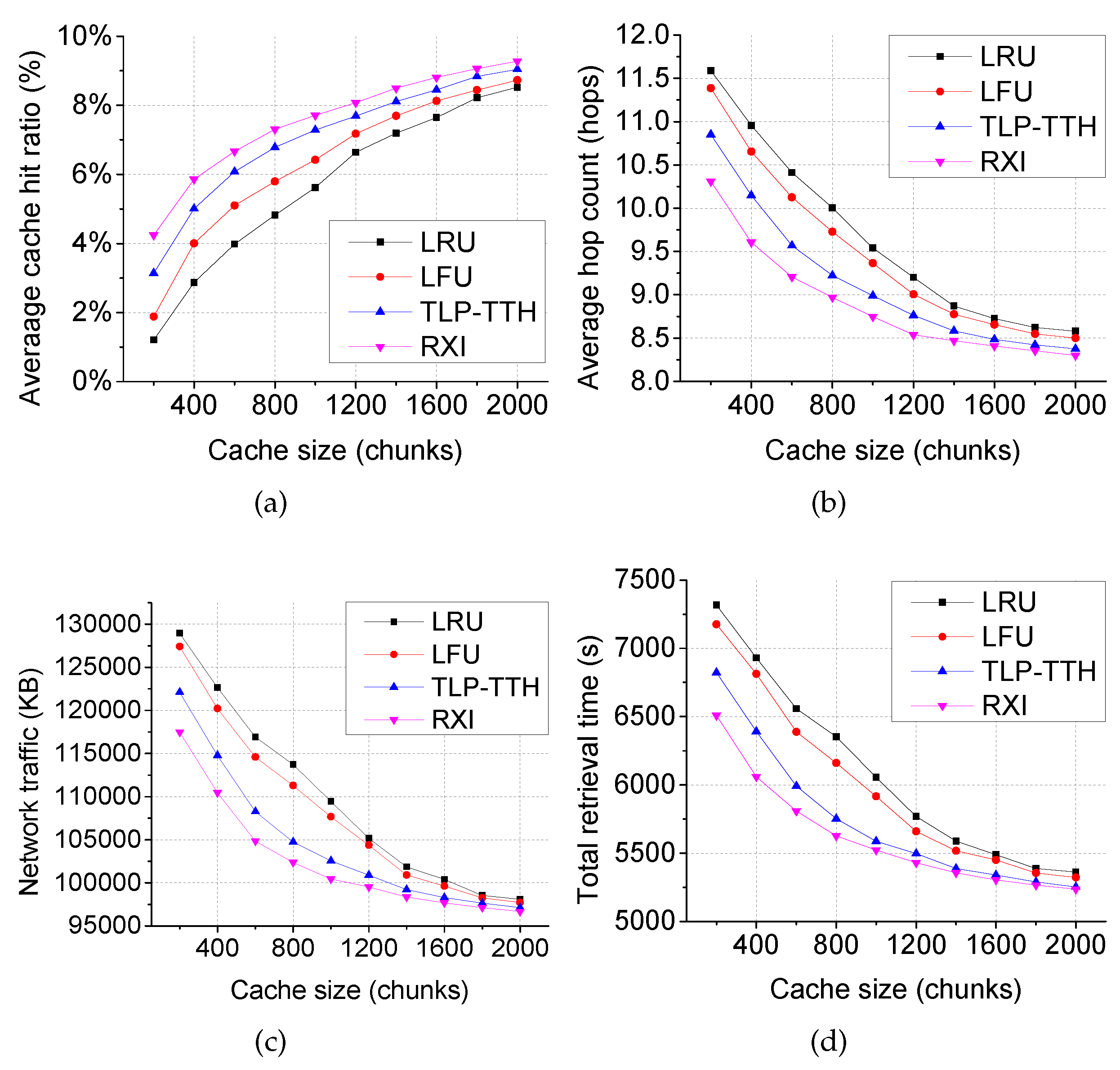

- Average cache hit ratio is used as the primary performance metric to measure the cache utilization efficiency. In our simulation, the cache hit ratio is calculated individually by each router and represents the number of cache hits divided by the number of requests received by each router.

- Average hop count indicates the average transmission round-trip distance (the sum of the hops of one pair of request and data packets transferred) for each video chunk delivery.

- Network traffic represents the sum of the data forwarded by every ICN router during the entire simulation.

- Total retrieval time denotes the sum, over all requests, of the time between a request being sent and the corresponding data being received by the user.
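The four metrics above could be computed from simulation logs roughly as follows. The record types `RouterStats` and `Delivery` and their fields are hypothetical; averaging the per-router hit ratios without weighting, and skipping routers that received no requests, are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class RouterStats:
    hits: int             # cache hits observed at this router
    requests: int         # requests received by this router
    bytes_forwarded: int  # data forwarded by this router


@dataclass
class Delivery:
    request_hops: int   # hops travelled by the request packet
    data_hops: int      # hops travelled by the returned data packet
    sent_at: float      # time the user sent the request
    received_at: float  # time the user received the data


def summarize(routers: list[RouterStats], deliveries: list[Delivery]) -> dict:
    """Compute the four metrics from per-router and per-delivery records."""
    # Hit ratio is computed per router, then averaged over active routers.
    ratios = [r.hits / r.requests for r in routers if r.requests > 0]
    # Hop count is the round trip: request hops plus data hops.
    hops = [d.request_hops + d.data_hops for d in deliveries]
    return {
        "avg_hit_ratio": sum(ratios) / len(ratios) if ratios else 0.0,
        "avg_hop_count": sum(hops) / len(hops) if hops else 0.0,
        "network_traffic": sum(r.bytes_forwarded for r in routers),
        "total_retrieval_time": sum(d.received_at - d.sent_at
                                    for d in deliveries),
    }


# Example with made-up numbers.
result = summarize(
    [RouterStats(5, 10, 1000), RouterStats(1, 4, 500)],
    [Delivery(2, 2, 0.0, 0.1), Delivery(3, 3, 1.0, 1.3)],
)
```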

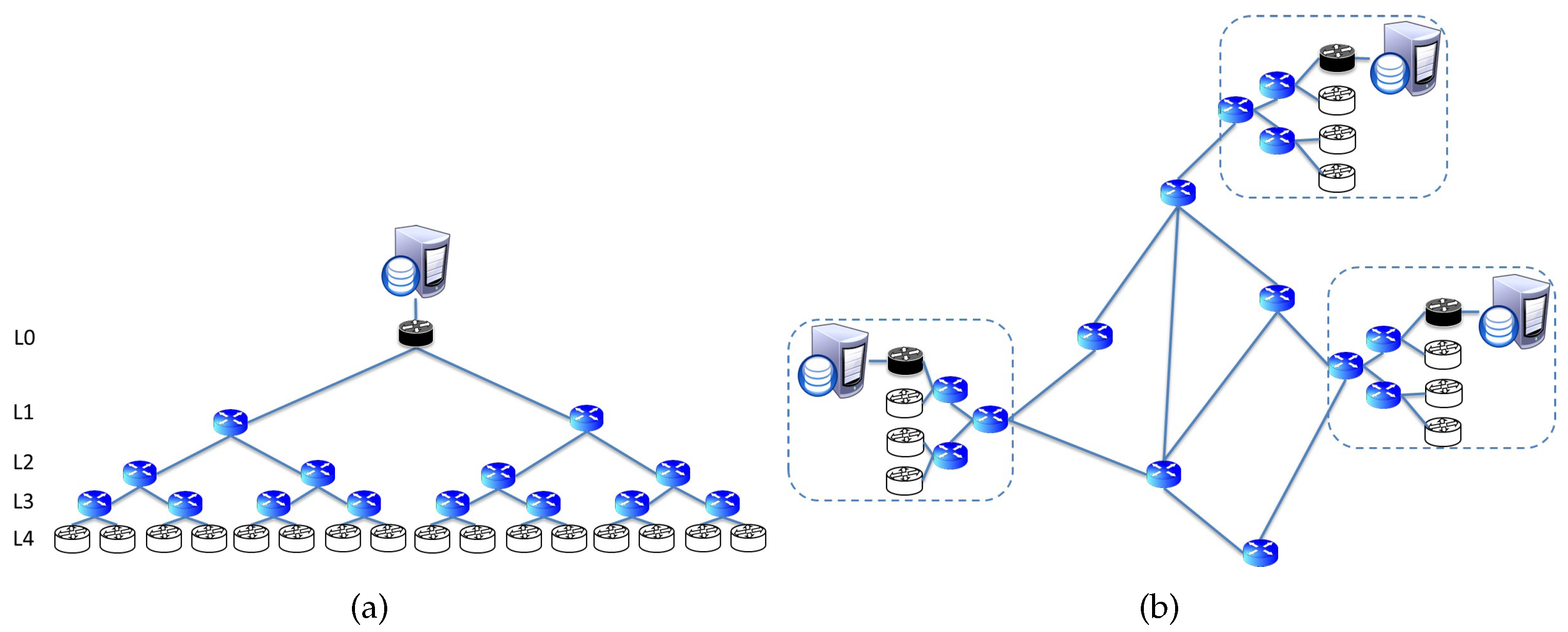

4.1. Scenario 1: Hierarchical Topology with One Producer

4.2. Scenario 2: Hybrid Topology with Multiple Producers

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Symbol | Description |
| --- | --- |
|  | File-level request probability of file i among different files at an Information-Centric Networking (ICN) router. |
|  | Local request ratio of file i measured at each ICN router. |
|  | The time unit of the measurement (for example, recalculation each second). |
|  | Chunk-level internal request probability of chunk j, i.e., the request probability of chunk j among all chunks within streaming file i. |
|  | Internal request expectation index (IRXI) of chunk j of file i. |
|  | Request expectation index (RXI) of chunk j of file i. |
|  | Request duration distribution. |
|  | Probability density function of the request duration distribution. |
|  | Average request interval of consecutive chunks in file i. |
|  | Total number of chunks in file i. |
| n | Total number of chunks cached in a router. |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, H.; Nakazato, H.; Ahmed, S.H. Request Expectation Index Based Cache Replacement Algorithm for Streaming Content Delivery over ICN. Future Internet 2017, 9, 83. https://doi.org/10.3390/fi9040083
