Abstract

The proliferation of sensor technology has led to an explosion in data volume, making the retrieval of specific information from large repositories increasingly challenging. While Retrieval-Augmented Generation (RAG) can enhance Large Language Models (LLMs), they often lack precision in specialized domains. Taking the Civil IoT Taiwan Data Service Platform as a case study, this study addresses this gap by developing a dialogue engine enhanced with a GraphRAG framework, aiming to provide accurate, context-aware responses to user queries. Our method involves constructing a domain-specific knowledge graph by extracting entities (e.g., ‘Dataset’, ‘Agency’) and their relationships from the platform’s documentation. For query processing, the system interprets natural language inputs, identifies corresponding paths within the knowledge graph, and employs a recursive self-reflection mechanism to ensure the final answer aligns with the user’s intent. The final answer transformed into natural language by utilizing the TAIDE (Trustworthy AI Dialogue Engine) model. The implemented framework successfully translates complex, multi-constraint questions into executable graph queries, moving beyond keyword matching to navigate semantic pathways. This results in highly accurate and verifiable answers grounded in the source data. In conclusion, this research validates that applying a GraphRAG-enhanced engine is a robust solution for building intelligent dialogue systems for specialized data platforms, significantly improving the precision and usability of information retrieval and offering a replicable model for other knowledge-intensive domains.

1. Introduction

The proliferation of Internet of Things (IoT) devices and advanced sensing technologies has led to an explosive growth in observational data, particularly in the environmental and civil domains [1,2]. While this data abundance offers unprecedented opportunities for scientific research and public services, it simultaneously creates significant challenges in data retrieval and integration. Data is often stored in isolated silos across different agencies, lacking standardized formats and effective interconnections. Consequently, users, especially non-domain experts, face considerable obstacles when attempting to discover and utilize relevant information through traditional keyword-based search systems, which are ill-equipped to handle complex, multi-faceted queries [1,3]. This information retrieval bottleneck impedes the efficient use of valuable data resources, highlighting the urgent need for more intelligent and intuitive data access solutions.

Large Language Models (LLMs) have demonstrated remarkable capabilities in understanding and generating human language, presenting a promising avenue for creating natural language interfaces for complex databases [4,5,6,7]. However, when applied to specialized, fact-intensive domains, general-purpose LLMs are prone to factual inaccuracies and “hallucinations”, where they generate plausible but incorrect information [8,9,10,11,12,13]. To anchor these models in factual knowledge, Retrieval-Augmented Generation (RAG) has emerged as a state-of-the-art technique [14,15,16,17,18]. The standard RAG framework enhances an LLM’s prompt with relevant information retrieved from an external knowledge base, thereby grounding its responses in verifiable data. Despite its success, conventional RAG typically operates on unstructured text documents, which are segmented into independent chunks. This approach often fails to capture the intricate, underlying relationships between different pieces of information, limiting its ability to perform complex, multi-hop reasoning tasks that require synthesizing information across multiple documents or data points [4,19,20,21].

To overcome the limitations of text-based retrieval, recent research has increasingly focused on integrating the structured nature of Knowledge Graphs (KGs) into the RAG pipeline, a paradigm termed GraphRAG [4,19]. A KG represents information as a network of entities (nodes) and their relationships (edges), offering a structured, machine-readable format for domain knowledge [19,22,23,24,25]. By leveraging this structure, GraphRAG can perform retrieval over interconnected data, enabling more sophisticated reasoning and enhancing the transparency of the generation process [4,19,26]. Instead of retrieving isolated text chunks, GraphRAG can identify and retrieve relevant subgraphs or reasoning paths that directly address the logic of a user’s query [27,28,29,30]. This graph-based approach not only improves the factual consistency of the generated answers but also enhances their explainability, as responses can be traced back to specific entities and relations within the KG [12,19,22]. However, the practical implementation of GraphRAG faces its own challenges, including the resource-intensive process of constructing high-quality, domain-specific KGs and the complexity of accurately translating natural language queries into graph traversal logic [19,23,31].

This study presents a practical application of the GraphRAG methodology to enhance the dialogue service of the Civil IoT Taiwan Data Service Platform. This platform serves as a critical national infrastructure, aggregating vast amounts of real-time sensor data from multiple government agencies across domains such as air quality, hydrology, meteorology, and seismology. Given the heterogeneity and scale of its data, users often struggle to formulate queries that span multiple datasets or require specific domain knowledge. Our research aims to develop a sophisticated dialogue engine by building domain-specific KG from the platform’s documentation and data. This system leverages the TAIDE (Trustworthy AI Dialogue Engine) model, a generative AI developed in Taiwan, augmented with our GraphRAG framework to provide users with an intuitive, accurate, and trustworthy conversational interface for data exploration.

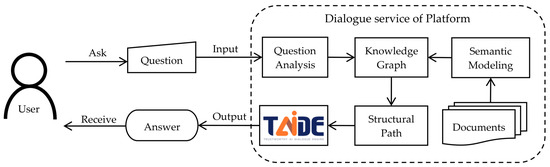

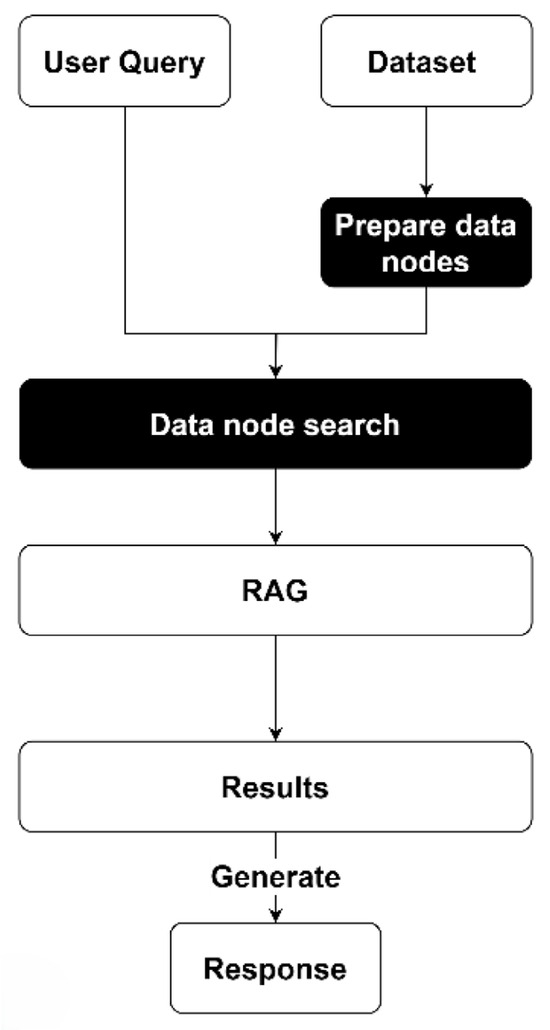

This paper proposes an end-to-end framework for building and utilizing a GraphRAG-powered dialogue system tailored for a complex IoT data platform (see Figure 1). Our methodology begins with the semi-automatic construction of a semantically rich KG from the diverse and unstructured documents based on the platform. We then detail a multi-stage process for reasoning, which includes: (1) performing named entity recognition and coreference resolution on the user’s query; (2) retrieving relevant paths from the KG based on the inferred query structure; and (3) implementing a reflexion and recursive prompting mechanism to validate the retrieved path and refine the final answer. Based on the TAIDE model, the final answer in natural language will be produced and then replied through the platform interface. Compared with Advanced RAG method, the answers from the GraphRAG in this research showed more detailed and correct answers. The primary contribution of this work is the design and implementation of a robust dialogue system that significantly improves the accessibility and usability of a national IoT data repository. Ultimately, this research demonstrates the tangible value of GraphRAG in transforming complex data platforms into interactive and intelligent knowledge services.

Figure 1.

Framework of Dialogue service in Civil IoT Taiwan Data Service Platform.

The remainder of this paper is organized as follows: Section 2 describes the materials utilized in this study, including the Civil IoT Taiwan-Data Service Platform, which integrates heterogeneous sensor data. Section 3 outlines the methods employed, detailing the semi-automatic knowledge graph construction process and the multi-stage GraphRAG framework for translating natural language queries into executable graph queries. Section 4 presents the experimental results, showcasing case studies that validate the system’s performance and effectiveness in addressing complex domain-specific questions. Section 5 discusses the broader implications of the findings, acknowledges limitations, and proposes directions for future research. Finally, Section 6 summarizes the key contributions of this work and concludes the paper.

2. Materials

This section details the primary data source and the foundational language model employed in this study. We describe the architecture and data characteristics of the Civil IoT Taiwan Data Service Platform and introduce the TAIDE large language model, which serves as the core of our dialogue engine.

2.1. Civil IoT Taiwan Data Service Platform

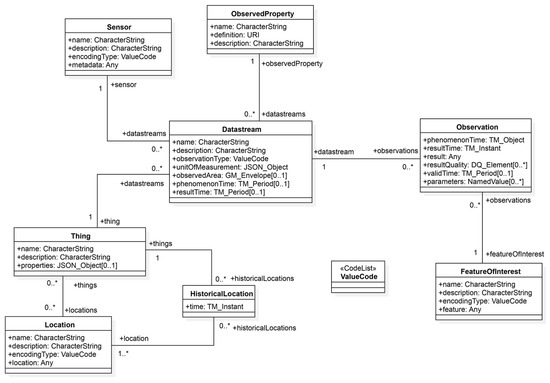

The core data for this study is sourced from the Civil IoT Taiwan Data Service Platform (hereafter “the Platform”), a comprehensive national infrastructure established to aggregate and standardize environmental sensor data from various governmental agencies [32,33]. The Platform addresses the significant challenge of data heterogeneity arising from disparate sources, formats, and terminologies by implementing the Open Geospatial Consortium (OGC) SensorThings API as a unified data exchange standard [34]. The UML (Unified Modeling Language) data model is shown in Figure 2. This strategic adoption facilitates structured integration and enables cross-thematic query capabilities, which are essential for advanced data analysis and application development.

Figure 2.

Data model of OGC SensorThings API [34].

The Platform currently hosts a vast repository of information, encompassing over 55 distinct datasets and accumulating more than two billion observation records. These datasets are organized into six primary themes: Air Quality, Seismic Activity, Water Resources, Meteorology, Disaster Warning and Notification, and Closed-Circuit Television (CCTV) [35]. Among these, water resources constitute the largest category with 26 datasets. The well-defined, multi-level information architecture of the Platform—which clearly delineates data by themes, providing agencies, specific datasets, and observational attributes—offers a rich, structured foundation. This inherent structure is leveraged in our study to model the nodes and relationships within the knowledge graph, providing the semantic backbone required for our GraphRAG approach.

2.2. TAIDE (Trustworthy AI Dialogue Engine)

The conversational reasoning in our framework is powered by the TAIDE (Trustworthy AI Dialogue Engine), a generative large language model (LLM) developed by Taiwan’s National Science and Technology Council (NSTC) in 2023 [36]. TAIDE was specifically created to provide a high-performing, reliable, and secure foundational model deeply rooted in Taiwan’s unique language, culture, and values. It is crucial to clarify that the term ‘trustworthy’ in its name reflects this design philosophy rather than a specific technical feature evaluated in this study. The model’s trustworthiness is derived from two key principles: (1) Cultural and Linguistic Alignment, ensuring responses align with Taiwan’s local context and ethical standards; and (2) Data Sovereignty and Security, aiming to establish a sovereign AI by reducing reliance on foreign technologies and ensuring compliance with local regulations.

The specific model employed in this research is the Llama3-TAIDE-LX-8B, which is based on the Llama 3 8B architecture and features 8 billion parameters. This model has undergone extensive fine-tuning with a high-quality corpus of over 114 billion tokens, focusing on tasks pertinent to local needs, including summarization, text generation, and question answering (QA) in Traditional Chinese [37]. Consequently, TAIDE was selected as the core dialogue engine for this study due to its advanced proficiency in understanding and generating nuanced Traditional Chinese, its alignment with the specific context of the Civil IoT Taiwan platform, and its design focus on providing safe and accurate responses to user queries.

3. Methods

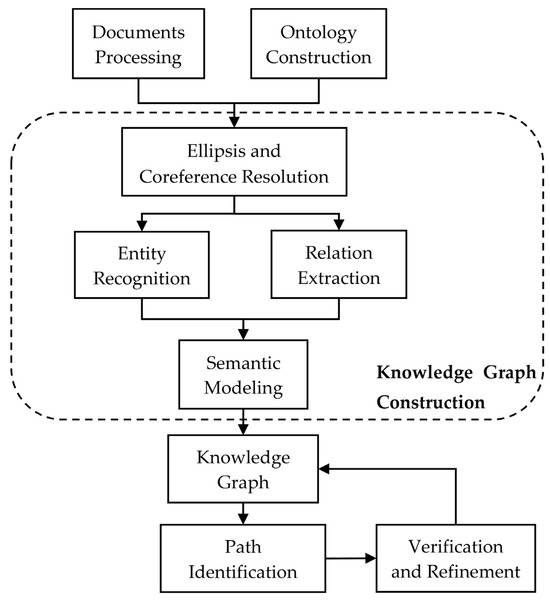

The methodological framework of this study is designed to construct a robust knowledge graph from the data sources and leverage it for a high-fidelity natural language question-answering system. The workflows covering GraphRAG process in this study are shown in Figure 3. The process is divided into three primary stages: (1) Knowledge Graph Construction, which involves data preprocessing, information extraction, and semantic modeling; (2) Natural Language Query Processing, which translates user questions into executable graph queries; and (3) Post-Query Verification, which ensures the relevance and accuracy of the retrieved answers.

Figure 3.

Workflow of GraphRAG in this research.

3.1. Knowledge Graph Construction

The foundation of the QA system is a knowledge graph built from domain-specific documents, such as technical manuals, official web pages, and data definition tables sourced from collaborative platforms like the Civil IoT Data Service Platform. The construction process is systematic, ensuring that the resulting graph is both comprehensive and semantically precise. To establish the semantic schema for the KG, we defined a set of entity and relation types based on the data architecture of the Civil IoT Data Service Platform. These definitions are presented in Table 1 and Table 2, respectively.

Table 1.

Defined entity types based on the contents of Civil IoT data Service Platform.

Table 2.

Defined relation types between entities.

3.1.1. Data Preprocessing: Document Chunking

The initial step addresses the challenge of processing source documents that are often loosely structured and semantically complex, covering diverse topics ranging from policy backgrounds to technical specifications. A direct application of information extraction on such large documents can lead to low accuracy and context misinterpretation [1,23,38]. To mitigate this, each source file, such as content from a webpage or a technical manual, is treated as a single document, corresponding to a specific dataset or thematic area. Documents are segmented into smaller, semantically self-contained chunks using a Python (ver. 3.12.9)-based script. This segmentation is performed based on structural and logical cues, including natural paragraphs and heading hierarchies. Each chunk represents a coherent unit of information, such as the description of a dataset provider or its update frequency. This chunking strategy effectively constrains the scope for entity extraction, ensuring that identified relationships are supported by a clear and immediate context, thereby reducing the risk of cross-paragraph misinterpretations [4,12].

3.1.2. Semantic Entity and Relation Extraction

Following chunking, key semantic information is extracted from each text chunk to identify meaningful entities and the relationships between them. To handle the complexity of natural language, where the same entity may be referred to by different names or pronouns across the text, coreference resolution techniques are applied. This is crucial for linking all mentions to a single, canonical entity, a common challenge in conversational QA and long-form documents [39]. Models based on deep bidirectional transformers (e.g., BERT) or sequence-to-sequence architectures are employed for this task [40,41].

The named entity recognition (NER) process leverages a combination of established NLP techniques. Standard NER tools, such as Stanford NER [42] or spaCy NER [43], are utilized to identify and classify entities within the text [4,19,23]. These tools can recognize geopolitical entities (GPE), organizations (ORG), and other relevant types. The scope of NER is configured to be broad, capturing not only formal locations but also organizations like schools or government agencies that serve as critical contextual entities. With the continuous advancement of natural language processing technologies, GPT models have shown substantial potential in performing NER tasks with a notable degree of accuracy [12,20,44,45]. In this study, we adopt this advanced approach. The entire extraction task is orchestrated using LangChain (ver. 0.3.26), which serves as a framework to manage calls to GPT-4.1 (version dated 14 April 2025). For each chunk, the model is prompted to perform both NER—to identify and classify key entities (e.g., ‘Agency’, ‘Theme’, ‘Dataset’)—and subsequent relation extraction to determine the semantic links between them (e.g., ‘provides’, ‘belongs_to’). The output of this stage is a collection of structured entity-relationship, which serves as the raw material for the graph modeling phase described in the following section.

3.1.3. Semantic Schema and Graph Modeling

To ensure the knowledge graph is machine-interpretable and supports complex reasoning, a defined semantic schema is imposed on its nodes and edges. Each entity identified in the previous step becomes a node in the graph. Nodes are enriched with a set of structured attributes to make them self-descriptive semantic units, including:

- label: A category tag indicating the node’s type (e.g., Theme, Dataset, Agency).

- description: A human-readable summary derived from the source text to provide semantic context.

- source: A reference to the original document and chunk, enabling full traceability and verification.

Relationships between entities are represented as directed edges, each explicitly typed and described, including:

- relationship_type: A standardized semantic type from a predefined ontology (e.g., belongs_to, provides, integrates).

- relationship_description: A concise explanation of the relationship, extracted from the source text.

- directionality: The inherent direction of the relationship is preserved (e.g., Agency → provide → Dataset).

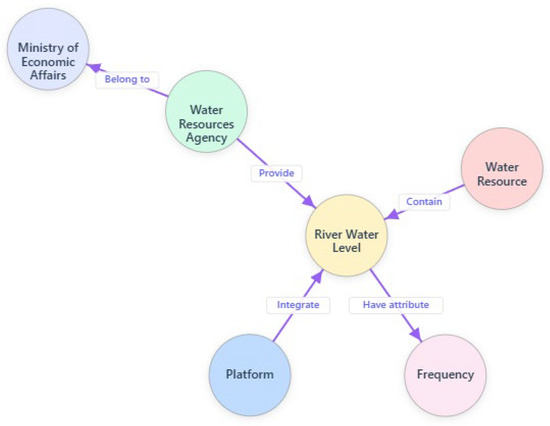

To maintain the logical integrity of the graph, constraints are enforced on how nodes can be connected. For instance, “attribute nodes” (e.g., Update Frequency, Observation Property) are prohibited from directly connecting to high-level entities like Agency. Instead, they must be connected via an intermediary Dataset node, ensuring all paths in the graph are semantically valid and meaningful. The final step involves importing the structured entity-relationship into a Neo4j (ver. 2 June 2025) property graph database to construct the knowledge graph. Neo4j’s property graph model is particularly suited for this task, as it allows nodes and relationships to be enriched with descriptive attributes, enabling advanced reasoning and insights. An example of knowledge graph was shown in Figure 4. In the Theme [Water Resources], [River Water Level] Dataset has the attribute [Frequency] and was provided by [Water Resources Agency], which belongs to the [Ministry of Economic Affairs].

Figure 4.

Example of knowledge graph related to River Water Level Dataset.

3.2. Natural Language Query Processing

The knowledge graph is constructed and serves as the backbone for the QA system. The system is designed to interpret user queries in natural language and translate them into formal graph traversal and filtering operations. Using the Gemma 3-12B-IT model as the inference engine, it is integrated locally via the Ollama API. The query syntax inferred by Gemma 3-12B-IT is sent to Neo4j (ver. 2 June 2025) for data retrieval, and the query results are returned to Llama-3.1-TAIDE-LX-8B-Chat for semantic integration and response generation.

3.2.1. Query Understanding and Path Identification

User’s question was first parsed to understand its underlying intent. This involves identifying the core entities, the relationships being queried, and any specified constraints. Given the conversational nature of many user interactions, the system is designed to handle elliptical or incomplete utterances (e.g., “and what about air quality?”) by resolving context from previous turns. Based on this understanding, the system identifies all potential structural paths within the knowledge graph that could logically answer the query. For example, a query about datasets provided by a specific agency would map to the path Agency → provides → Dataset.

3.2.2. Condition Translation and Value Filtering

After identifying the structural path, the system translates specific values mentioned in the query into filtering conditions. This step ensures that the query’s constraints are precisely reflected in the graph traversal logic and follow a constrained value.

- Value Mapping: Any tagged value in the query (e.g., [Theme: Air Quality], [Observation Property: PM2.5]) is converted into a WHERE clause to filter nodes along the identified path.

- Logical Operators: The translation mechanism supports standard logical operations. If multiple values are provided for the same attribute (e.g., [Theme: Air Quality] and [Theme: Seismic Activity]), they are combined using an IN operator (semantic OR). Conditions on different attributes (e.g., Theme and Agency) are combined using AND. Negations (e.g., “not air quality data”) are translated into NOT conditions.

- Unspecified Values: If a query mentions an entity type without a specific value (e.g., “which agencies provide data?”), no filter is applied to that node type, allowing it to serve as the target of the query or a pass-through point in the path.

This systematic translation of natural language constrains into a formal query structure ensures that the user’s intent is faithfully executed against the knowledge graph.

3.3. Post-Query Verification and Refinement

To maximize the reliability of the system, a final verification stage is implemented to analyze the results before they are presented to the user.

Result Reflection and Deviation Detection: This mechanism systematically assesses the semantic consistency between the user’s original query and the generated answer. It performs a validation check to confirm that the query path and filtering logic correctly captured the user’s intent. If a potential semantic deviation is detected (e.g., the results do not align with an implicit assumption in the query), the system can pinpoint the source of the error in the query interpretation or execution pipeline and suggest a correction. This reflective process enhances the transparency and accuracy of the final response, building user trust in the system.

4. Experiments and Results

4.1. Advanced RAG

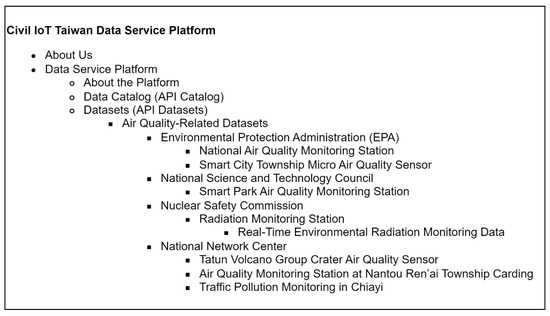

Traditional Retrieval-Augmented Generation, often termed Naive RAG, integrates retrieval and generation steps to produce responses from large-scale data sources. However, this approach exhibits significant limitations when applied to heterogeneous, hierarchical, or structurally complex datasets [15,46,47]. Key drawbacks include insufficient precision in data retrieval, where queries often return a high volume of irrelevant information, and a vulnerability to noisy or low-quality data, which can degrade the quality of the generated output [14,47]. Furthermore, Naive RAG’s reliance on direct term matching is often insufficient for broad or interdisciplinary queries, leading to retrieval outcomes that lack the necessary thematic focus. To overcome these challenges, we employ an Advanced RAG methodology centered on node-structured data point filtering. This paradigm shifts from a simple retrieve-then-generate pipeline to a more sophisticated process that involves structuring and filtering data prior to retrieval. The core of this approach is the organization of the knowledge base into a hierarchical node model [15]. In this model, each node represents a specific topic or category, creating a structured map of the information landscape (see Figure 5). For instance, data can be categorized into distinct service platforms and further into specific dataset types, such as air quality metrics. This structure allows the system to first identify the nodes most relevant to a user’s query and then conduct a targeted retrieval exclusively within those nodes, significantly reducing the processing of irrelevant data and enhancing the precision of the retrieved context.

Figure 5.

The workflow of Advanced RAG.

A critical component for operationalizing this advanced framework is enabling the model to comprehend and navigate the defined data structure. For this, we utilize the Self-Instruct method, a technique for automatically generating large-scale, high-quality instruction-following data without reliance on extensive manual annotation [48]. By defining a target node structure from high-level categories down to the specific attributes of individual data points (see Figure 6), Self-Instruct can be used to generate a diverse set of instructions for a node classification task. The model then automatically generates data points and corresponding labels that align with these instructions, creating a robust training dataset.

Figure 6.

Example of data node classification for the Public IoT Data Service Platform.

The integrated workflow of Advanced RAG with Self-Instruct proceeds as follows. First, a node classification model is trained using the dataset generated via Self-Instruct. This endows the model with the ability to accurately map unstructured or semi-structured information to the predefined hierarchical node structure. For example, it can learn to differentiate between air quality datasets originating from distinct government agencies based on their intrinsic properties. During inference, when a user query is received, the system first leverages this trained classifier to filter and organize the entire knowledge base, identifying and isolating the specific nodes that are semantically aligned with the query’s intent. Only after this targeted filtering and structuring does the retrieval mechanism extract context, which is then passed to the LLM for generation.

4.2. Testing Cases

This section shows the practical user cases for testing. The process of both GraphRAG and Advanced RAG are listed and compared.

4.2.1. Query Available Data

Query 1. I want to know which ministries provide air quality datasets that include the ‘PM2.5’ observation property?

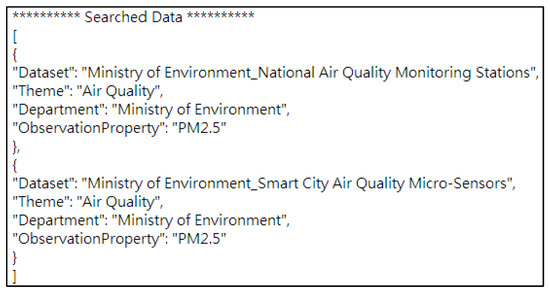

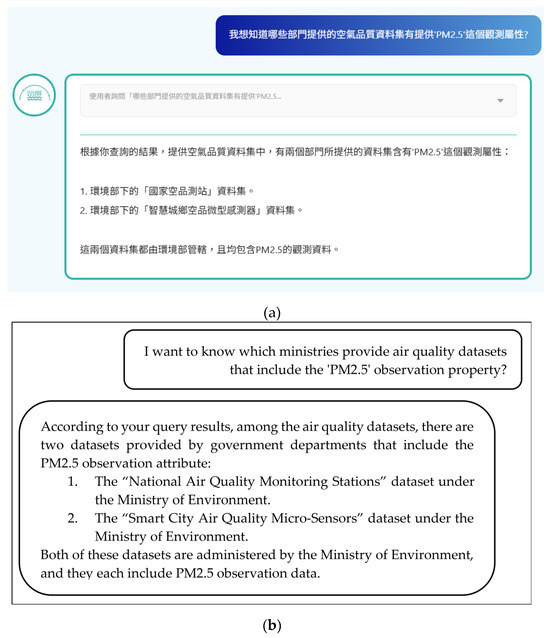

The PM2.5 data is available on the platform. Therefore, the exact dataset directories and the relevant information can be retrieved and validated. After the GraphRAG processing, two results are shown as Figure 7. Based on the results and the TAIDE model, Figure 8 illustrates the original platform response (a) alongside its English translation (b). The message provides two correct directories of datasets on the platform for the user. The whole process detail of GraphRAG is shown in Appendix A.1.

Figure 7.

Searched Data from Knowledge Graph_Query 1.

Figure 8.

Response from the Civil IoT Taiwan Data Service Platform with GraphRAG_Query 1: (a) snapshot of the original response; (b) corresponding English translation of the QA.

The result of the Query 1. retrieved by Advanced RAG was shown in Appendix A.2, the response first gives an overview of the datasets in the platform. Three sources of ministries/agencies are then listed. However, the contents only give a general condition of data authority other than the specific description in this platform. Users who follow the response are unable to retrieve the corresponding datasets.

4.2.2. Query Unavailable Data

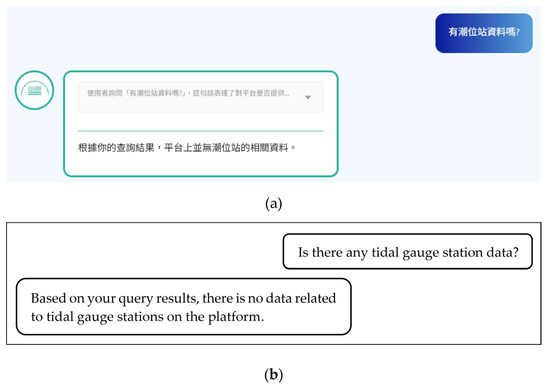

Query 2. Is there any tidal gauge station data?

In Taiwan, the tidal gauge stations have the same competent authorities as some of the datasets on this platform. However, the data from the tidal gauge stations are not included yet. Therefore, Query 2 might be a fragile process but unavailable in the end.

The results of Query 2, including the platform response and its English translation, are displayed in Figure 9. The message indicates “Based on your query results, there is no data related to tidal gauge stations on the platform”. The whole detailed process is in Appendix B.1.

Figure 9.

Response from the Civil IoT Taiwan Data Service Platform with GraphRAG_Query 2: (a) snapshot of the original response; (b) corresponding English translation of the QA.

In the case of querying unavailable dataset by retrieving with the help of Advanced RAG, the response shows the dataset overview same as previous testing case. The specific directory and datasets related to “water” are thereafter mentioned. The hallucination happened in this case (see Appendix B.2).

4.3. Quantitative Performance Evaluation

To quantitatively assess the accuracy of our proposed method in determining the correct knowledge graph path after interpreting a user’s query, an evaluation was conducted. We employed GPT-o4-mini (version dated 16 April 2025) to generate a set of potential user queries. The generation prompt provided a description of the seven entity types defined in Table 1 of this study but did not include the specific names of the nodes within each category. The model was instructed to randomly select entities to compose questions under the scenario of a user who is unfamiliar with the environmental sensing data platform.

To reflect the characteristics of typical user inquiries on a QA platform, the number of entities per question was set to either two or three. A total of 40 random questions were generated, with 20 questions for each configuration (2-entity and 3-entity queries). The KG paths determined by our method for these generated questions were then manually compared against a ground truth to verify their accuracy. The performance was quantified using three standard metrics: Precision (P), Recall (R), and the F1-Score (F1).

As shown in Table 3, the results of this accuracy assessment demonstrate strong performance, with all three metrics (Precision, Recall, and F1-Score) exceeding 0.7 for queries containing both two and three entity types. Notably, the accuracy for queries composed of two entities was consistently higher than for those with three entities. Specifically, the recall for two-entity questions reached a high of 0.824. These findings indicate that the proposed method is particularly effective at processing queries with fewer constraints.

Table 3.

Quantitative Evaluation of KG Path Accuracy.

5. Discussion

While our proposed GraphRAG framework demonstrates significant potential for enhancing domain-specific QA, we acknowledge several limitations that offer clear avenues for future research. This discussion addresses these limitations by examining the challenges in knowledge graph construction and maintenance, the framework’s generalizability and application costs, and promising directions for future work.

5.1. Methodological Limitations and Scalability

The current methodology for constructing and maintaining the knowledge graph presents challenges related to scalability, dynamism, and potential bias, which are critical for enterprise-level deployment.

- Scalability of KG Construction: A primary consideration is the scalability of the construction process. The current semi-automatic approach relies on manually defined entity types and relationships. While this method ensures high semantic precision and control within the specific domain of the Civil IoT Taiwan platform, it introduces a potential bottleneck when scaling to vastly larger or more diverse datasets. The manual effort required for schema definition and validation may not be feasible for corpora that are orders of magnitude larger or that lack well-defined documentation.

- Maintenance in Dynamic Environments: The current knowledge graph is static, representing a snapshot of the data platform at the time of its creation. However, real-world information ecosystems are dynamic, with datasets being added, updated, or deprecated continuously. This presents a significant challenge for maintaining the graph’s currency and accuracy. Without a mechanism for dynamic updates, the graph risks becoming obsolete, leading to outdated or incorrect responses.

- Potential for Inherent Bias: The entity and relationship extraction process, being reliant on an LLM, is susceptible to the inherent biases present in the model’s training data. This could lead to a skewed or incomplete knowledge representation, where certain concepts are over-represented while others are marginalized. Such biases could subtly influence the query results and perpetuate existing informational disparities.

5.2. Generalizability, Transferability, and Application Cost

While the framework was successfully validated on a specific public IoT data platform, its broader applicability requires careful consideration of its transferability, the associated costs, and the development of a generalized workflow.

- From Specific Case to General Framework: We position the current implementation as a successful proof-of-concept that validates the core principles of our GraphRAG architecture. However, its strong focus on the Civil IoT Taiwan platform means that its direct transferability to other platforms is not guaranteed. The framework’s components exhibit varying degrees of domain independence. The core query reasoning engine, which deconstructs user intent into structural paths and filters, is largely generic. In contrast, the data preprocessing scripts and the specific entity/relationship schema are domain-dependent and would require customization for new applications.

- Cost of Application: Transferring this approach to a new platform would involve several cost components.

- 1.

- Initial Setup Costs: This includes the significant human-in-the-loop effort required from domain experts to define the initial ontology (entities, relationships), validate the extracted knowledge, and fine-tune the LLM prompts for the new domain’s specific terminology and structure.

- 2.

- Operational and Maintenance Costs: These recurring costs encompass API call fees for the underlying LLMs (e.g., TAIDE), computational resources for hosting the graph database (e.g., Neo4j), and the engineering effort needed to maintain and periodically update the knowledge graph as the source data evolves.

- Proposed Generalized Workflow: To facilitate adoption, we propose a modular and domain-agnostic workflow that can be adapted to various data sources. This pipeline would consist of distinct, configurable stages:

- Data Ingestion and Preprocessing: A flexible module to connect to diverse data sources (APIs, databases, documents) and normalize the content.

- Automated Schema Suggestion: An LLM-powered stage to analyze the source data and suggest a preliminary ontology (entity types, relationships), which domain experts can then refine.

- Iterative Knowledge Extraction: A semi-automated process where an LLM extracts entities and relationships, followed by a validation step to ensure accuracy.

- Graph-Based Query Engine: The core reasoning module that translates natural language questions into formal graph queries.

- Response Synthesis and Grounding: An LLM generates a natural language response based on the retrieved graph data, with verifiable citations back to the source nodes.

5.3. Future Research Directions

The limitations and challenges discussed above naturally lead to several promising directions for future research that aim to enhance the framework’s robustness, intelligence, and applicability.

- Automating KG Lifecycle Management: To address scalability and dynamism, future work will focus on developing techniques for automated ontology learning and schema induction from unstructured text, reducing the reliance on manual definitions. Furthermore, we plan to implement mechanisms for dynamic graph updates, such as incremental construction pipelines triggered by data changes and temporal reasoning capabilities to manage time-sensitive information.

- Enhancing Conversational Capabilities: The current system excels at processing complex, single-turn queries but is not optimized for multi-turn conversational dialogues. Future iterations will integrate more sophisticated dialogue state tracking mechanisms to maintain context, resolve coreferences, and handle ellipsis across an extended conversation, enabling a more natural and effective user interaction model.

- Quantitative Evaluation and Cross-Domain Validation: The current evaluation is qualitative and based on a limited number of test cases. A critical next step is to introduce a comprehensive quantitative evaluation framework. This will involve developing a benchmark of diverse query types and using metrics such as answer accuracy, completeness, and source traceability. We also plan to conduct user studies to measure task success rates and user satisfaction. Inspired by recent work, we may employ LLM-based evaluators to assess criteria like Directness, Comprehensiveness, and Diversity in the generated answers. Subsequently, we will apply the generalized workflow to new domains, such as biomedical or financial data, to rigorously test its adaptability and refine the automated schema mapping techniques.

6. Conclusions

This paper introduced a robust framework for enhancing natural language question-answering by grounding queries in a semantically rich knowledge graph. We addressed the critical challenge of semantic ambiguity in user queries, which often leads to inaccurate or irrelevant results in traditional data retrieval systems. Our approach successfully bridges the gap between unstructured natural language and structured data by implementing a multi-stage, structure-oriented query reasoning architecture. This architecture ensures that every generated query is not only syntactically correct but also semantically aligned with the user’s original intent and the underlying structure of the knowledge graph.

The primary contribution of this work is the development of a methodology that systematically deconstructs a user’s query into its core components: the query target, structural paths, and value-based constraints. By first identifying the true informational goal of the query—distinguishing it from entities that merely serve as filters—our system can construct logical pathways through the graph that accurately reflect the relationships between concepts. The subsequent translation of specific values (e.g., “Air Quality,” “Ministry of Environment”) into precise filtering conditions ensures that the query remains focused and constrained as intended by the user. Furthermore, the design of our knowledge graph, where nodes and edges are enriched with descriptive metadata and source information, provides an essential layer of transparency and verifiability, making it a trusted source for enterprise-level QA. This structured approach has proven effective in handling complex queries involving multiple entities and conditions within a single turn.

Looking ahead, a significant opportunity lies in the deeper integration of our knowledge graph-based framework with LLMs. While our system ensures the retrieval of factually accurate and verifiable data, LLMs can be leveraged to synthesize these results into more natural, explanatory, and user-friendly narratives. The knowledge graph can serve as a powerful factual backbone to mitigate LLM hallucinations, providing grounded information that the LLM can then articulate. This synergistic combination would merge the structured reasoning and verifiability of our framework with the generative fluency of LLMs, paving the way for a new generation of highly reliable and intelligent conversational AI systems for complex data exploration and discovery.

Author Contributions

Conceptualization, H.-H.Y.; validation, H.-H.Y. and C.-W.K.; Programing K.-M.L.; formal analysis and investigation W.-T.L., K.-M.L. and C.-W.K.; writing—original draft preparation, W.-T.L. and K.-M.L.; writing—review and editing, W.-T.L. and H.-H.Y.; Project administration, C.-C.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The successful completion of this research was made possible by the academic resources and advanced research infrastructure provided by the National Center for High-Performance Computing, National Institutes of Applied Research (NIAR), Taiwan. We gratefully acknowledge their invaluable support.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| CCTV | Closed-circuit television |

| GPE | Geopolitical entities |

| GraphRAG | Graph retrieval-augmented generation |

| IoT | Internet of thing |

| KG | Knowledge graph |

| LLM | Large language model |

| NER | Named entity recognition |

| NSTC | National Science and Technology Council |

| OGC | Open Geospatial Consortium |

| QA | Question answering |

| RAG | Retrieval-augmented generation |

| TAIDE | Trustworthy AI dialogue engine |

| UML | Unified modeling language |

Appendix A. Process of Query Available Data

Appendix A.1. GraphRAG

- ********** Original Sentence **********

I want to know which ministries provide air quality datasets that include the ‘PM2.5’ observation property?

- ********** Annotation Rationale **********

First, the sentence expresses the user’s desire to query whether the “air quality dataset” contains the “PM2.5” observation property. This indicates that the user is concerned with datasets related to air quality and whether these datasets include a specific observation property. Therefore, the “Air Quality” theme should be tagged first, and from this, the “Dataset” tag can be inferred.

Next, the sentence mentions “which ministries,” indicating that the user wants to know the ministries that provide these air quality datasets. According to the tag category list, a ministry is a competent authority within the government, responsible for promoting policies in a specific field and for the coordination and supervision of data from its subordinate agencies. Therefore, the “Ministry” tag should be applied.

Finally, “PM2.5” mentioned in the sentence is an observation property of the dataset. According to the tag category list, an observation property corresponds to SensorThings’ ObservedProperty, so the “ObservationProperty” tag should be applied.

In summary, four tags should be applied: “Theme,” “Dataset,” “Ministry,” and “ObservationProperty.”

[Theme:Quality], [Dataset], [Ministry], [ObservationProperty:PM2.5]

- ********** Path Design Rationale **********

The user wants to know which ministries provide air quality datasets that include the ‘PM2.5’ observation property. This means the user wishes to query for ministries that provide air quality data, and these datasets must include the ‘PM2.5’ observation property. Therefore, the query target is [Dataset], which needs to be filtered by [Theme], [Ministry], and [ObservationProperty].

First, based on the “Air Quality” theme proposed by the user, it is necessary to find datasets related to that theme. Then, based on the “PM2.5” observation property provided by the user, filter out the datasets that contain this property. Finally, based on the ministry to which the dataset belongs, find the ministries that provide these datasets.

Therefore, the query path should start from [Theme], connect to [Dataset], then connect to [Ministry], and filter on [ObservationProperty].

- ********** Path Design **********

[Theme] → [Dataset]; [Dataset] ← [Ministry]; [Dataset] → [ObservationProperty]

- ********** Path Filter **********

Theme = “Air Quality” AND ObservationProperty = “PM2.5”

- ********** Final Entity Tag **********

[Dataset]

- ********** Convert to Syntax **********

MATCH

(topic) -[]-> (dataset),

(dataset) <-[]- (dept),

(dataset) -[]-> (obsProps)

WHERE topic.id = “Air Quality” AND obsProps.id = “PM2.5”

RETURN DISTINCT dataset.id AS Dataset, topic.id AS Theme, dept.id AS Ministry, obsProps.id AS ObservationProperty

- ********** Searched Data **********

[

{

“Dataset”: “Ministry of Environment_National Air Quality Monitoring Stations”,

“Theme”: “Air Quality”,

“Ministry”: “Ministry of Environment”,

“ObservationProperty”: “PM2.5”

},

{

“Dataset”: “Ministry of Environment_Smart City Air Quality Micro-Sensors”,

“Theme”: “Air Quality”,

“Ministry”: “Ministry of Environment”,

“ObservationProperty”: “PM2.5”

}

]

- ********** TAIDE Response **********

Based on your query results, among the air quality datasets, two datasets provided by one ministry contain the ‘PM2.5’ observation property:

The “National Air Quality Monitoring Stations” dataset under the Ministry of Environment.

The “Smart City Air Quality Micro-Sensors” dataset under the Ministry of Environment.

Both datasets are managed by the Ministry of Environment and include PM2.5 observation data.

Appendix A.2. Advanced RAG

- ********** Original Sentence **********

I want to know which departments provide air quality datasets that include the ‘PM2.5’ observation property?

- ********** Searched Data **********

A total of 47 datasets have been accumulated, with over 2 billion sensor data entries. Among these, there are 11 datasets in the air quality category, 27 in the water resources category, 6 in the seismic activity category, and 3 in the disaster warning and reporting category.

- ********** TAIDE Response **********

Based on the classification of datasets in the Civil IoT Taiwan platform, the air quality datasets that provide the “PM2.5” observation property are primarily contributed by the following departments:

- Environmental Protection Administration Air Quality Monitoring Stations: This department collects extensive PM2.5 data through air quality monitoring stations across the country. These data cover variations in PM2.5 concentrations across different regions and times, aiding in the analysis and monitoring of PM2.5’s impact on air quality.

- Local Government Environmental Protection Bureaus: Many local government environmental protection bureaus also operate air quality monitoring stations, collecting data on PM2.5 and other air quality indicators. These data help local governments understand air quality conditions and implement policies and measures to improve air quality as needed.

- Central Weather Bureau of the Ministry of Transportation and Communications: Although the Central Weather Bureau primarily focuses on meteorological observations, it also provides air quality-related data, including PM2.5 concentrations. These data help understand the impact of meteorological conditions on PM2.5 concentrations.

The datasets provided by these departments offer rich PM2.5 observation data, enabling researchers and policymakers to analyze the impact of PM2.5 on public health and formulate effective policies to reduce PM2.5 emissions.

References

- Li, H.; Yue, P.; Wu, H.; Teng, B.; Zhao, Y.; Liu, C. A Question-Answering Framework for Geospatial Data Retrieval Enhanced by a Knowledge Graph and Large Language Models. Int. J. Digit. Earth 2025, 18, 2510566. [Google Scholar] [CrossRef]

- Guo, H.; Nativi, S.; Liang, D.; Craglia, M.; Wang, L.; Schade, S.; Corban, C.; He, G.; Pesaresi, M.; Li, J.; et al. Big Earth Data Science: An Information Framework for a Sustainable Planet. Int. J. Digit. Earth 2020, 13, 743–767. [Google Scholar] [CrossRef]

- Sudmanns, M.; Tiede, D.; Lang, S.; Bergstedt, H.; Trost, G.; Augustin, H.; Baraldi, A.; Blaschke, T. Big Earth Data: Disruptive Changes in Earth Observation Data Management and Analysis? Int. J. Digit. Earth 2020, 13, 832–850. [Google Scholar] [CrossRef] [PubMed]

- Edge, D.; Trinh, H.; Cheng, N.; Bradley, J.; Chao, A.; Mody, A.; Truitt, S.; Metropolitansky, D.; Ness, R.O.; Larson, J. From Local to Global: A Graph RAG Approach to Query-Focused Summarization. arXiv 2024, arXiv:2404.16130. [Google Scholar] [CrossRef]

- Mohammadjafari, A.; Maida, A.S.; Gottumukkala, R. From Natural Language to SQL: Review of LLM-Based Text-to-SQL Systems. arXiv 2024, arXiv:2410.01066. [Google Scholar]

- Tai, C.-Y.; Chen, Z.; Zhang, T.; Deng, X.; Sun, H. Exploring Chain of Thought Style Prompting for Text-to-SQL. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 September 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 5376–5393. [Google Scholar]

- Liu, M.; Xu, J. NLI4DB: A Systematic Review of Natural Language Interfaces for Databases. arXiv 2025, arXiv:2503.02435. [Google Scholar] [CrossRef]

- Xu, Z.; Jain, S.; Kankanhalli, M. Hallucination Is Inevitable: An Innate Limitation of Large Language Models. arXiv 2024, arXiv:2401.11817. [Google Scholar] [CrossRef]

- Xiao, Y.; Wang, W.Y. On Hallucination and Predictive Uncertainty in Conditional Language Generation. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; Merlo, P., Tiedemann, J., Tsarfaty, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 2734–2744. [Google Scholar]

- Tonmoy, S.M.T.I.; Zaman, S.M.M.; Jain, V.; Rani, A.; Rawte, V.; Chadha, A.; Das, A. A Comprehensive Survey of Hallucination Mitigation Techniques in Large Language Models. arXiv 2024, arXiv:2401.01313. [Google Scholar] [CrossRef]

- Shen, Y.; Heacock, L.; Elias, J.; Hentel, K.D.; Reig, B.; Shih, G.; Moy, L. ChatGPT and Other Large Language Models Are Double-Edged Swords. Radiology 2023, 307, e230163. [Google Scholar] [CrossRef]

- Pusch, L.; Conrad, T.O.F. Combining LLMs and Knowledge Graphs to Reduce Hallucinations in Question Answering. arXiv 2024, arXiv:2409.04181. [Google Scholar] [CrossRef]

- Farquhar, S.; Kossen, J.; Kuhn, L.; Gal, Y. Detecting Hallucinations in Large Language Models Using Semantic Entropy. Nature 2024, 630, 625–630. [Google Scholar] [CrossRef] [PubMed]

- Guu, K.; Lee, K.; Tung, Z.; Pasupat, P.; Chang, M.-W. REALM: Retrieval-Augmented Language Model Pre-Training. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; JMLR.org: Norfolk, MA, USA, 2020; Volume 119, pp. 3929–3938. [Google Scholar]

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; pp. 9459–9474. [Google Scholar]

- Wang, Z.; Araki, J.; Jiang, Z.; Parvez, M.R.; Neubig, G. Learning to Filter Context for Retrieval-Augmented Generation. arXiv 2023, arXiv:2311.08377. [Google Scholar] [CrossRef]

- Siriwardhana, S.; Weerasekera, R.; Wen, E.; Kaluarachchi, T.; Rana, R.; Nanayakkara, S. Improving the Domain Adaptation of Retrieval Augmented Generation (RAG) Models for Open Domain Question Answering. Trans. Assoc. Comput. Linguist. 2023, 11, 1–17. [Google Scholar] [CrossRef]

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv 2024, arXiv:2312.10997. [Google Scholar] [CrossRef]

- Han, H.; Wang, Y.; Shomer, H.; Guo, K.; Ding, J.; Lei, Y.; Halappanavar, M.; Rossi, R.A.; Mukherjee, S.; Tang, X.; et al. Retrieval-Augmented Generation with Graphs (GraphRAG). arXiv 2025, arXiv:2501.00309. [Google Scholar]

- Ni, B.; Wang, Y.; Cheng, L.; Blasch, E.; Derr, T. Towards Trustworthy Knowledge Graph Reasoning: An Uncertainty Aware Perspective. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 12417–12425. [Google Scholar] [CrossRef]

- Ram, O.; Levine, Y.; Dalmedigos, I.; Muhlgay, D.; Shashua, A.; Leyton-Brown, K.; Shoham, Y. In-Context Retrieval-Augmented Language Models. Trans. Assoc. Comput. Linguist. 2023, 11, 1316–1331. [Google Scholar] [CrossRef]

- Abu-Rasheed, H.; Weber, C.; Fathi, M. Knowledge Graphs as Context Sources for LLM-Based Explanations of Learning Recommendations. In Proceedings of the 2024 IEEE Global Engineering Education Conference (EDUCON), Kos, Greece, 8–11 May 2024; pp. 1–5. [Google Scholar]

- Kommineni, V.K.; König-Ries, B.; Samuel, S. From Human Experts to Machines: An LLM Supported Approach to Ontology and Knowledge Graph Construction. arXiv 2024, arXiv:2403.08345. [Google Scholar] [CrossRef]

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A Survey on Knowledge Graphs: Representation, Acquisition, and Applications. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 494–514. [Google Scholar] [CrossRef]

- Pan, S.; Luo, L.; Wang, Y.; Chen, C.; Wang, J.; Wu, X. Unifying Large Language Models and Knowledge Graphs: A Roadmap. IEEE Trans. Knowl. Data Eng. 2024, 36, 3580–3599. [Google Scholar] [CrossRef]

- Luo, L.; Li, Y.-F.; Haffari, G.; Pan, S. Reasoning on Graphs: Faithful and Interpretable Large Language Model Reasoning. arXiv 2023, arXiv:2310.01061. [Google Scholar]

- Guo, K.; Shomer, H.; Zeng, S.; Han, H.; Wang, Y.; Tang, J. Empowering GraphRAG with Knowledge Filtering and Integration. arXiv 2025, arXiv:2503.13804. [Google Scholar] [CrossRef]

- Luo, H.; Zhang, J.; Li, C. Causal Graphs Meet Thoughts: Enhancing Complex Reasoning in Graph-Augmented LLMs. arXiv 2025, arXiv:2501.14892. [Google Scholar]

- Wang, Y.; Lipka, N.; Rossi, R.A.; Siu, A.; Zhang, R.; Derr, T. Knowledge Graph Prompting for Multi-Document Question Answering. In Proceedings of the Thirty-Eighth AAAI Conference on Artificial Intelligence and Thirty-Sixth Conference on Innovative Applications of Artificial Intelligence and Fourteenth Symposium on Educational Advances in Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; AAAI Press: Washington, DC, USA, 2024; Volume 38, pp. 19206–19214. [Google Scholar]

- Sequeda, J.; Allemang, D.; Jacob, B. Knowledge Graphs as a Source of Trust for LLM-Powered Enterprise Question Answering. J. Web Semant. 2025, 85, 100858. [Google Scholar] [CrossRef]

- Zahera, H.M.; Ali, M.; Sherif, M.A.; Moussallem, D.; Ngonga, A. Generating SPARQL from Natural Language Using Chain-of-Thoughts Prompting. In Proceedings of the International Conference on Semantic Systems, Amsterdam, The Netherlands, 17–19 September 2024. [Google Scholar]

- Lee, C.-M.; Kuo, W.-L.; Tung, T.-J.; Huang, B.-K.; Hsu, S.-H.; Hsieh, S.-H. Government Open Data and Sensing Data Integration Framework for Smart Construction Site Management. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction, Banff, AB, Canada, 21–24 May 2019. [Google Scholar]

- Lin, Y.-F.; Chang, T.-Y.; Su, W.-R.; Shang, R.-K. IoT for Environmental Management and Security Governance: An Integrated Project in Taiwan. Sustainability 2021, 14, 217. [Google Scholar] [CrossRef]

- Liang, S.; Khalafbeigi, T.; van der Schaaf, H. OGC SensorThings API Part 1: Sensing Version 1.1. Available online: https://docs.ogc.org/is/18-088/18-088.html (accessed on 1 August 2025).

- Civil IoT Taiwan-Data Service Platform. Available online: https://ci.taiwan.gov.tw/dsp/Views/_EN/index.aspx (accessed on 31 July 2025).

- TAIDE. Available online: https://en.taide.tw/ (accessed on 31 July 2025).

- Lee, C.-S.; Wang, M.-H.; Chen, C.-Y.; Yang, S.-C.; Reformat, M.; Kubota, N.; Pourabdollah, A. Integrating Quantum CI and Generative AI for Taiwanese/English Co-Learning. Quantum Mach. Intell. 2024, 6, 64. [Google Scholar] [CrossRef]

- Hu, Y.; Mai, G.; Cundy, C.; Choi, K.; Lao, N.; Liu, W.; Lakhanpal, G.; Zhou, R.Z.; Joseph, K. Geo-Knowledge-Guided GPT Models Improve the Extraction of Location Descriptions from Disaster-Related Social Media Messages. Int. J. Geogr. Inf. Sci. 2023, 37, 2289–2318. [Google Scholar] [CrossRef]

- Aralikatte, R.; Lamm, M.; Hardt, D.; Søgaard, A. Ellipsis Resolution as Question Answering: An Evaluation. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; Merlo, P., Tiedemann, J., Tsarfaty, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 810–817. [Google Scholar]

- Lin, C.-J.; Huang, C.-H.; Wu, C.-H. Using BERT to Process Chinese Ellipsis and Coreference in Clinic Dialogues. In Proceedings of the 2019 IEEE 20th International Conference on Information Reuse and Integration for Data Science (IRI), Los Angeles, CA, USA, 30 July–1 August 2019; pp. 414–418. [Google Scholar]

- Zhang, W.; Wiseman, S.; Stratos, K. Seq2seq Is All You Need for Coreference Resolution. arXiv 2023, arXiv:2310.13774. [Google Scholar] [CrossRef]

- Finkel, J.R.; Grenager, T.; Manning, C. Incorporating Non-Local Information into Information Extraction Systems by Gibbs Sampling. In Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics—ACL ’05, Ann Arbor, MI, USA, 25–30 June 2005; Association for Computational Linguistics: Stroudsburg, PA, USA, 2005; pp. 363–370. [Google Scholar]

- spyCy Industrial-Strength Natural Language Processing in Python. Available online: https://spacy.io/ (accessed on 1 August 2025).

- Covas, E. Named Entity Recognition Using GPT for Identifying Comparable Companies. arXiv 2023, arXiv:2307.07420. [Google Scholar] [CrossRef]

- Wang, S.; Sun, X.; Li, X.; Ouyang, R.; Wu, F.; Zhang, T.; Li, J.; Wang, G.; Guo, C. GPT-NER: Named Entity Recognition via Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2025, Albuquerque, NM, USA, 29 April–4 May 2025; Chiruzzo, L., Ritter, A., Wang, L., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2025; pp. 4257–4275. [Google Scholar]

- Karpukhin, V.; Oguz, B.; Min, S.; Lewis, P.; Wu, L.; Edunov, S.; Chen, D.; Yih, W. Dense Passage Retrieval for Open-Domain Question Answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 6769–6781. [Google Scholar]

- Izacard, G.; Grave, E. Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. arXiv 2021, arXiv:2007.01282. [Google Scholar] [CrossRef]

- Wang, Y.; Kordi, Y.; Mishra, S.; Liu, A.; Smith, N.A.; Khashabi, D.; Hajishirzi, H. Self-Instruct: Aligning Language Models with Self-Generated Instructions. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 13484–13508. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).