Prompt-Driven and Kubernetes Error Report-Aware Container Orchestration

Abstract

1. Introduction

2. Background and Related Work

2.1. Large Language Models

2.2. Prompt Engineering

2.3. Related Work

3. Prompting Set-Up

- Deployment resources: Every generated manifest set must include a Deployment object, ensuring that the service runs with managed pod replicas, automatic restarts, and revision-tracked rolling updates.

- Persistent storage: If the service requires data persistence, a PersistentVolumeClaim (PVC) must be generated. This ensures that critical application data is not lost when pods are rescheduled.

- Volume mounting: Any defined PersistentVolumeClaim must be properly mounted into the application container, thereby linking storage to the running service.

- Security context: Containers must explicitly declare a securityContext with privileged set to false. This restriction reduces the risk of privilege escalation attacks and enforces least-privilege principles.

- Resource management: Containers must declare both resource requests and limits. This supports predictable performance, helps prevent resource starvation, and improves cluster stability under load.

- Service exposure: A Service object must be generated to expose the required network ports, thereby enabling communication between the deployed application and other workloads or clients.

- Network policies: Finally, a NetworkPolicy must be defined to restrict incoming and outgoing traffic to only the namespaces that are strictly required. This constraint enforces a zero-trust networking model and limits the attack surface.
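To make these constraints concrete, the following Python sketch assembles one manifest bundle that satisfies all seven of them, expressed as plain dictionaries (the structure Kubernetes expects once serialized to YAML or JSON). It is an illustration under stated assumptions, not one of the LLM outputs studied here: the function names, image, port, mount path, storage size, and CPU/memory figures are hypothetical placeholders, and the NetworkPolicy admits only pods of the same namespace in both directions.

```python
from typing import Any

Manifest = dict[str, Any]


def _meta(name: str, namespace: str, labels: dict[str, str]) -> dict[str, Any]:
    # A fresh dict per object, so YAML serializers emit no shared anchors.
    return {"name": name, "namespace": namespace, "labels": dict(labels)}


def _same_namespace() -> list[dict[str, Any]]:
    # A peer with an empty podSelector (and no namespaceSelector) matches every
    # pod in the policy's own namespace and nothing outside it.
    return [{"podSelector": {}}]


def compliant_bundle(name: str, image: str, port: int, namespace: str) -> list[Manifest]:
    """Hypothetical bundle; all concrete values below are illustrative assumptions."""
    labels = {"app": name}
    claim = f"{name}-data"
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        "securityContext": {"privileged": False},            # security context
        "resources": {                                        # requests and limits
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        },
        "volumeMounts": [{"name": "data", "mountPath": "/data/db"}],  # volume mounting
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": _meta(name, namespace, labels),
        "spec": {
            "replicas": 1,
            "strategy": {"type": "Recreate"},  # single RWO volume: stop old pod first
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [container],
                    "volumes": [{"name": "data",
                                 "persistentVolumeClaim": {"claimName": claim}}],
                },
            },
        },
    }
    pvc = {                                                   # persistent storage
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": _meta(claim, namespace, labels),
        "spec": {"accessModes": ["ReadWriteOnce"],
                 "resources": {"requests": {"storage": "1Gi"}}},
    }
    service = {                                               # service exposure
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": _meta(name, namespace, labels),
        "spec": {"selector": dict(labels),
                 "ports": [{"port": port, "targetPort": port}]},
    }
    netpol = {                                                # network policy
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": _meta(f"{name}-same-namespace", namespace, labels),
        "spec": {
            "podSelector": {"matchLabels": dict(labels)},
            "policyTypes": ["Ingress", "Egress"],
            "ingress": [{"from": _same_namespace(),
                         "ports": [{"protocol": "TCP", "port": port}]}],
            "egress": [{"to": _same_namespace()}],
        },
    }
    return [deployment, pvc, service, netpol]
```

Serialized, for example with PyYAML's `yaml.safe_dump_all(...)`, such a bundle could be applied with `kubectl apply -f -`. The `Recreate` strategy is a design choice of this sketch: with a single `ReadWriteOnce` volume, a rolling update could leave the new pod unable to mount a volume still attached to the old one. Likewise, restricting egress to the namespace also blocks cluster DNS, which a real deployment may need to allow explicitly.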

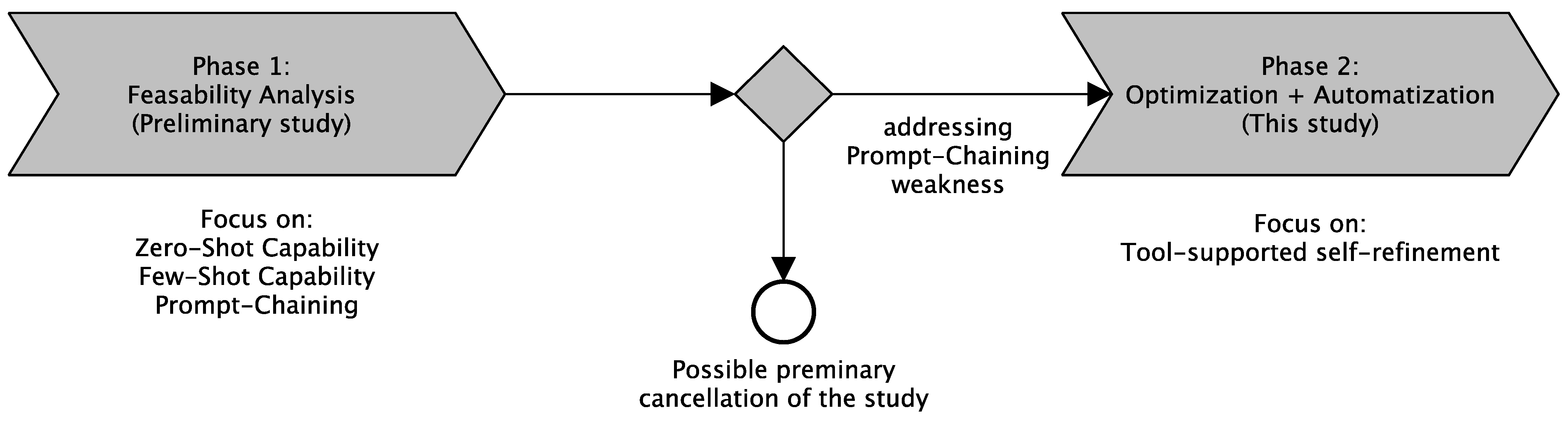

4. Methodology

4.1. Phase 1: Feasibility Analysis

- RQ 1 (Zero-Shot Capability): We aim to determine how well LLMs can generate Kubernetes manifests out-of-the-box.

- RQ 2 (Few-Shot Capability): We examine whether larger LLMs produce better results in few-shot settings.

- RQ 3 (Prompt-Chaining Capability): We aim to determine whether Kubernetes manifests can be gradually refined with prompt chaining in order to add capabilities that LLMs do not “retrieve from their memory” by default in zero-shot settings.

4.1.1. Analyzed Use Case (NoSQL DB) Considering Real-World Constraints

- The database or application containers should not run with elevated privileges (securityContext.privileged: false).

- The database/application should be accessible only within its namespace, necessitating the correct generation of a NetworkPolicy.

- The database/application containers should not monopolize resources, requiring the generation of memory and CPU resource limits.

- Deployment (or a StatefulSet with volumeClaimTemplates).

- Correct volume mounts in the Deployment/StatefulSet.

- PersistentVolumeClaim (unless the LLM opts for a StatefulSet).

- Service.
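The either/or in this resource list (a Deployment with a separately generated PersistentVolumeClaim, or a StatefulSet that carries its own `volumeClaimTemplates`) can be expressed as a single check. The following Python sketch is a hypothetical illustration that operates on manifests already parsed into dictionaries (for example with PyYAML); the function names are ours, not the paper's.

```python
from typing import Any

Manifest = dict[str, Any]


def _pod_spec(workload: Manifest) -> dict[str, Any]:
    return workload.get("spec", {}).get("template", {}).get("spec", {})


def _mounted(workload: Manifest) -> set[str]:
    """Names of all volumes mounted by any container of the workload."""
    return {vm["name"] for c in _pod_spec(workload).get("containers", [])
            for vm in c.get("volumeMounts", []) if "name" in vm}


def storage_requirement_met(manifests: list[Manifest]) -> bool:
    """Deployment mounting a generated PVC, or StatefulSet mounting a claim template."""
    pvc_names = {m["metadata"]["name"] for m in manifests
                 if m.get("kind") == "PersistentVolumeClaim" and "name" in m.get("metadata", {})}
    for w in manifests:
        if w.get("kind") == "StatefulSet":
            # The StatefulSet controller creates one claim per pod from each template;
            # containers mount it under the template's metadata.name.
            claims = {t.get("metadata", {}).get("name")
                      for t in w.get("spec", {}).get("volumeClaimTemplates", [])}
            if claims & _mounted(w):
                return True
        elif w.get("kind") == "Deployment":
            # A pod volume must reference a separately generated PVC by claimName.
            claims = {v.get("name") for v in _pod_spec(w).get("volumes", [])
                      if v.get("persistentVolumeClaim", {}).get("claimName") in pvc_names}
            if claims & _mounted(w):
                return True
    return False
```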

4.1.2. Generation and Evaluation Strategy

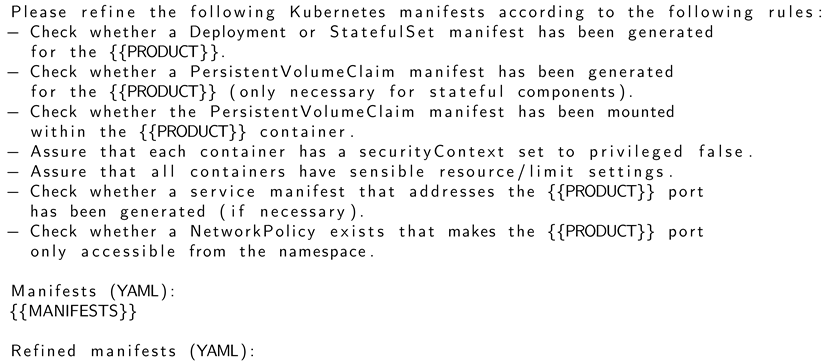

- Verify that a Deployment manifest has been generated for the database.

- Verify that a PersistentVolumeClaim manifest has been generated for the database.

- Ensure that the PersistentVolumeClaim manifest is mounted within the database container.

- Ensure that the container’s securityContext sets privileged: false.

- Ensure that the containers declare appropriate resource requests and limits.

- Verify that a Service manifest exposing the database port has been generated.

- Ensure that a NetworkPolicy restricts access to the database port to the namespace.
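The paper does not state how these checks were carried out. As a hypothetical illustration, the following Python sketch automates the seven checks for the Deployment-based variant, operating on manifests already parsed into dictionaries. The reading of "restricts access within the namespace" (every ingress rule lists only `podSelector` peers, and the database port is named explicitly) is our assumption, as are all function names.

```python
from typing import Any

Manifest = dict[str, Any]


def _kind(manifests: list[Manifest], kind: str) -> list[Manifest]:
    return [m for m in manifests if m.get("kind") == kind]


def _pod_spec(deployment: Manifest) -> dict[str, Any]:
    return deployment.get("spec", {}).get("template", {}).get("spec", {})


def evaluate(manifests: list[Manifest], db_port: int) -> dict[str, bool]:
    """Hypothetical automation of the seven checks; one boolean per check."""
    deployments = _kind(manifests, "Deployment")
    pvc_names = {m.get("metadata", {}).get("name")
                 for m in _kind(manifests, "PersistentVolumeClaim")} - {None}
    containers = [c for d in deployments for c in _pod_spec(d).get("containers", [])]

    def pvc_mounted(d: Manifest) -> bool:
        pod = _pod_spec(d)
        claim_volumes = {v.get("name") for v in pod.get("volumes", [])
                         if v.get("persistentVolumeClaim", {}).get("claimName") in pvc_names}
        return any(vm.get("name") in claim_volumes
                   for c in pod.get("containers", []) for vm in c.get("volumeMounts", []))

    def service_exposes_port(s: Manifest) -> bool:
        return any(db_port in (p.get("port"), p.get("targetPort"))
                   for p in s.get("spec", {}).get("ports", []))

    def policy_restricts_port(n: Manifest) -> bool:
        rules = n.get("spec", {}).get("ingress", [])
        covers_port = any(p.get("port") == db_port for r in rules for p in r.get("ports", []))
        # Peers carrying only a podSelector match pods of the policy's own namespace;
        # a rule without "from" would admit every source.
        same_namespace_only = all(
            r.get("from") and all(set(peer) == {"podSelector"} for peer in r["from"])
            for r in rules)
        return covers_port and same_namespace_only

    return {
        "deployment": bool(deployments),
        "pvc": bool(pvc_names),
        "pvc_mounted": any(pvc_mounted(d) for d in deployments),
        "not_privileged": bool(containers) and all(
            c.get("securityContext", {}).get("privileged") is False for c in containers),
        "requests_and_limits": bool(containers) and all(
            {"requests", "limits"} <= set(c.get("resources", {})) for c in containers),
        "service": any(service_exposes_port(s) for s in _kind(manifests, "Service")),
        "network_policy": any(policy_restricts_port(n)
                              for n in _kind(manifests, "NetworkPolicy")),
    }
```

Returning one boolean per check, rather than a single pass/fail flag, makes it visible which constraint a generated manifest set misses.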

- Zero-Shot: The prompt did not explicitly specify the constraints to be met. Consequently, the refinement stage depicted in Figure 2 was not executed.

- Zero-Shot + Constraints: The prompt explicitly specified the constraints to be met. However, no incremental refinement was carried out for each constraint individually. Therefore, the refinement stage shown in Figure 2 was not executed in this case either.

- Few-Shot + Refinement: The prompt did not specify the constraints to be met. However, the draft stage results were explicitly refined iteratively for each constraint during the refinement stage illustrated in Figure 2.
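The three strategies differ only in what is sent to the model and how often. The following Python sketch contrasts them, assuming a hypothetical `llm` callable that maps a prompt string to the model's reply. The base prompt follows the zero-shot prompt in Appendix A.1; the wording of the constraint and refinement prompts is our placeholder, not the template of Listing A2. The function `draft_then_refine` corresponds to the "Few-Shot + Refinement" strategy: a draft followed by one chained refinement prompt per constraint.

```python
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns the model's answer (manifest text).
LLM = Callable[[str], str]

# Base prompt as in Appendix A.1; all other prompt wording below is hypothetical.
BASE_PROMPT = "Create required manifests to deploy a {product} in Kubernetes."


def zero_shot(llm: LLM, product: str) -> str:
    # No constraints in the prompt, no refinement stage.
    return llm(BASE_PROMPT.format(product=product))


def zero_shot_with_constraints(llm: LLM, product: str, constraints: list[str]) -> str:
    # All constraints in a single prompt, still no refinement stage.
    bullet_list = "\n".join(f"- {c}" for c in constraints)
    return llm(BASE_PROMPT.format(product=product)
               + "\nThe manifests must satisfy these constraints:\n" + bullet_list)


def draft_then_refine(llm: LLM, product: str, constraints: list[str]) -> str:
    # Draft stage without constraints, then one refinement prompt per constraint;
    # each answer becomes the input of the next prompt in the chain.
    manifests = llm(BASE_PROMPT.format(product=product))
    for constraint in constraints:
        manifests = llm("Refine the following Kubernetes manifests so that they also "
                        f"satisfy this constraint: {constraint}\n\n{manifests}")
    return manifests
```

With seven constraints, `draft_then_refine` issues eight model calls instead of one, which is the cost side of the refinement stage in Figure 2.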

4.2. Phase 2: Optimization and Automatization

- RQ 4 (Self-Refinement Capability): Is it possible to increase the quality of generated container orchestration manifest files with tool-based self-refinement?
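Tool-based self-refinement closes the loop with an external validator instead of relying on the model's own judgment. The following Python sketch shows one way to do this, assuming `kubectl` is installed and configured for a reachable cluster: manifests are submitted with `kubectl apply --dry-run=server -f -`, and any error report is fed back to a hypothetical `llm` callable. The prompt wording, the `max_rounds` limit, and all function names are assumptions; the error-correction template actually used is the one in Listing A3.

```python
import subprocess
from typing import Callable, Optional

LLM = Callable[[str], str]                   # hypothetical: prompt in, manifests out
Validator = Callable[[str], Optional[str]]   # returns None if valid, else an error report


def kubectl_dry_run(manifests: str) -> Optional[str]:
    """Submit manifests to the API server without persisting them (needs cluster access)."""
    result = subprocess.run(
        ["kubectl", "apply", "--dry-run=server", "-f", "-"],
        input=manifests, capture_output=True, text=True,
    )
    return None if result.returncode == 0 else result.stderr


def self_refine(llm: LLM, manifests: str, validate: Validator = kubectl_dry_run,
                max_rounds: int = 3) -> tuple[str, bool]:
    """Feed dry-run error reports back to the LLM until the manifests validate."""
    for _ in range(max_rounds):
        error = validate(manifests)
        if error is None:
            return manifests, True
        manifests = llm(
            "Applying the following Kubernetes manifests failed with this error:\n"
            f"{error}\nReturn corrected manifests only.\n\n{manifests}"
        )
    # Final check after the last correction attempt.
    return manifests, validate(manifests) is None
```

The round limit matters because some failures cannot be resolved without information the model lacks (e.g., which namespace to use); such cases end with a `False` flag and can be handed to a human instead of looping indefinitely. A server-side dry run can also report problems that a purely client-side check cannot, such as a reference to a namespace that does not exist, at the cost of requiring cluster access.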

5. Results

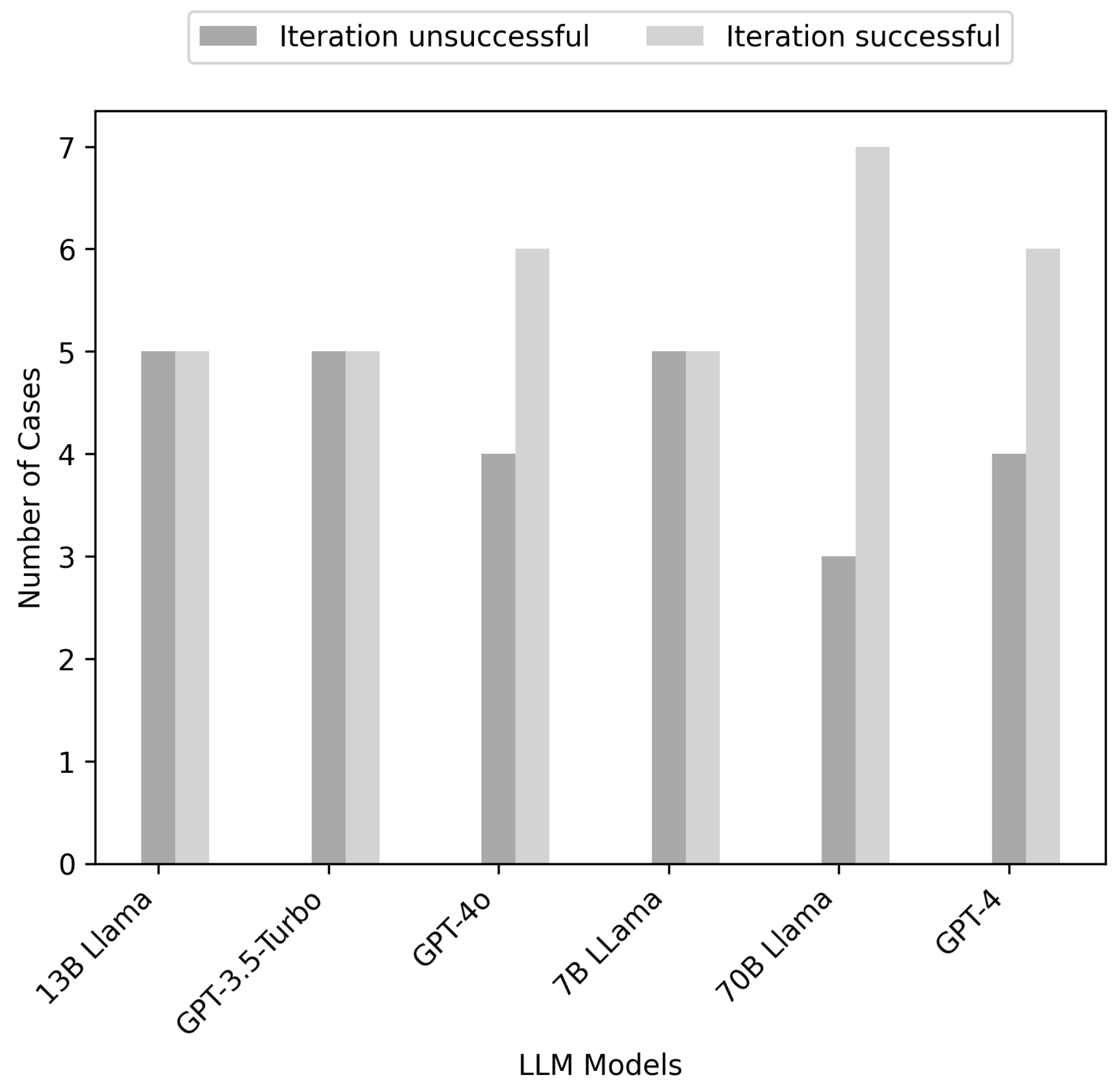

5.1. Explanation of Phase 1 Results

5.2. Explanation of Phase 2 Results

5.3. Discussion of Results

5.4. Limitations to Consider

6. Conclusions and Outlook

Key Take-Aways

- LLMs can generate Kubernetes manifests out-of-the-box, but most outputs need refinement or corrections.

- GPT-4 and GPT-3.5 performed best, sometimes enabling fully automated deployments.

- Open-source models (LLaMA2/3, Mistral) worked, but had lower success rates; performance did not always scale with model size.

- Prompt-only iterative refinement was ineffective: it sometimes worsened results for smaller models.

- Tool-based self-refinement (using Kubernetes dry-runs) greatly improved outcomes, fixing most failed cases automatically.

- Some failures remained unrecoverable without human input, usually due to missing contextual info (e.g., namespaces).

- Best practice: Combine LLMs with automated validation tools for reliable DevOps automation.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Appendix A

Appendix A.1. Zero-Shot Prompt

- Create required manifests to deploy a MongoDB database in Kubernetes.

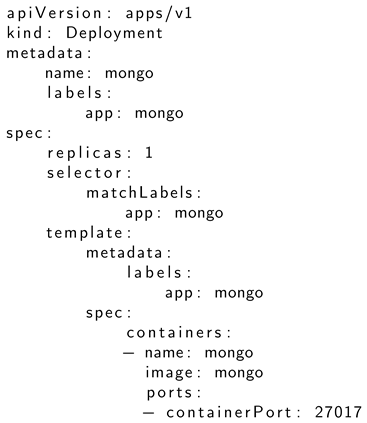

Given this prompt, one can only “hope” that a valid Kubernetes manifest file like the one below will be created. In fact, GPT-4 generated the following example (Listing A1):

Listing A1. Generated example MongoDB Deployment in Kubernetes.

- Create required manifests to deploy a {{PRODUCT}} in Kubernetes.
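The template differs from the MongoDB prompt above only in its `{{PRODUCT}}` placeholder. A minimal Python rendering helper, a hypothetical sketch rather than the tooling used in the study, could fill it and refuse to emit a prompt that still contains unfilled placeholders:

```python
import re

TEMPLATE = "Create required manifests to deploy a {{PRODUCT}} in Kubernetes."


def render(template: str, **values: str) -> str:
    """Replace {{NAME}} placeholders; fail loudly if one is left unfilled."""
    for name, value in values.items():
        template = template.replace("{{" + name + "}}", value)
    leftover = re.findall(r"\{\{(\w+)\}\}", template)
    if leftover:
        raise ValueError(f"unfilled placeholders: {leftover}")
    return template
```

For instance, `render(TEMPLATE, PRODUCT="MongoDB database")` reproduces the zero-shot prompt used for the MongoDB use case.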

Appendix A.2. Refinement Prompting

Listing A2. Refinement prompt template.

Appendix A.3. Error Correction Prompting

Listing A3. Error correction prompt template.

References

1. Kratzke, N. Cloud-Native Computing: Software Engineering von Diensten und Applikationen für die Cloud; Carl Hanser Verlag GmbH Co KG: München, Germany, 2023.

2. Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Zhu, K.; Chen, H.; Yang, L.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. arXiv 2023, arXiv:2307.03109.

3. Kaddour, J.; Harris, J.; Mozes, M.; Bradley, H.; Raileanu, R.; McHardy, R. Challenges and applications of large language models. arXiv 2023, arXiv:2307.10169.

4. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435.

5. Zhao, X.; Lu, J.; Deng, C.; Zheng, C.; Wang, J.; Chowdhury, T.; Li, Y.; Cui, H.; Zhang, X.; Zhao, T.; et al. Domain specialization as the key to make large language models disruptive: A comprehensive survey. arXiv 2023, arXiv:2305.18703.

6. Quint, P.C.; Kratzke, N. Towards a Lightweight Multi-Cloud DSL for Elastic and Transferable Cloud-native Applications. arXiv 2019, arXiv:1802.03562.

7. Kratzke, N.; Drews, A. Don’t Train, Just Prompt: Towards a Prompt Engineering Approach for a More Generative Container Orchestration Management. In Proceedings of the 14th International Conference on Cloud Computing and Services Science—Volume 1: CLOSER. INSTICC, SciTePress, Angers, France, 2–4 May 2024; pp. 248–256.

8. Madaan, A.; Tandon, N.; Gupta, P.; Hallinan, S.; Gao, L.; Wiegreffe, S.; Alon, U.; Dziri, N.; Prabhumoye, S.; Yang, Y.; et al. Self-Refine: Iterative Refinement with Self-Feedback. arXiv 2023, arXiv:2303.17651.

9. Tosatto, A.; Ruiu, P.; Attanasio, A. Container-based orchestration in cloud: State of the art and challenges. In Proceedings of the 2015 Ninth International Conference on Complex, Intelligent, and Software Intensive Systems, Santa Catarina, Brazil, 8–10 July 2015; pp. 70–75.

10. Sultan, S.; Ahmad, I.; Dimitriou, T. Container security: Issues, challenges, and the road ahead. IEEE Access 2019, 7, 52976–52996.

11. Petroni, F.; Rocktäschel, T.; Lewis, P.; Bakhtin, A.; Wu, Y.; Miller, A.H.; Riedel, S. Language models as knowledge bases? arXiv 2019, arXiv:1909.01066.

12. Hou, X.; Zhao, Y.; Liu, Y.; Yang, Z.; Wang, K.; Li, L.; Luo, X.; Lo, D.; Grundy, J.; Wang, H. Large language models for software engineering: A systematic literature review. arXiv 2023, arXiv:2308.10620.

13. Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 1–35.

14. Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering in Large Language Models: A comprehensive review. arXiv 2023, arXiv:2310.14735.

15. Lanciano, G.; Stein, M.; Hilt, V.; Cucinotta, T. Analyzing Declarative Deployment Code with Large Language Models. CLOSER 2023, 2023, 289–296.

16. Xu, Y.; Chen, Y.; Zhang, X.; Lin, X.; Hu, P.; Ma, Y.; Lu, S.; Du, W.; Mao, Z.M.; Zhai, E.; et al. CloudEval-YAML: A Realistic and Scalable Benchmark for Cloud Configuration Generation. arXiv 2024, arXiv:2401.06786.

17. Sarda, K.; Namrud, Z.; Rouf, R.; Ahuja, H.; Rasolroveicy, M.; Litoiu, M.; Shwartz, L.; Watts, I. ADARMA Auto-Detection and Auto-Remediation of Microservice Anomalies by Leveraging Large Language Models. In Proceedings of the 33rd Annual International Conference on Computer Science and Software Engineering, Las Vegas, NV, USA, 11–14 September 2023; CASCON ’23. pp. 200–205.

18. Liu, X.; Thomas, A.; Zhang, C.; Cheng, J.; Zhao, Y.; Gao, X. Refining Salience-Aware Sparse Fine-Tuning Strategies for Language Models. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 31932–31945.

19. Wei, J.; Bosma, M.; Zhao, V.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned Language Models Are Zero-Shot Learners. arXiv 2021, arXiv:2109.01652.

20. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

21. Kaplan, J.; McCandlish, S.; Henighan, T.J.; Brown, T.B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; Amodei, D. Scaling Laws for Neural Language Models. arXiv 2020, arXiv:2001.08361.

22. Topsakal, O.; Akinci, T.C. Creating Large Language Model Applications Utilizing LangChain: A Primer on Developing LLM Apps Fast. In Proceedings of the International Conference on Applied Engineering and Natural Sciences, Konya, Turkey, 10–12 July 2023.

23. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Chi, E.H.; Xia, F.; Le, Q.; Zhou, D. Chain of Thought Prompting Elicits Reasoning in Large Language Models. arXiv 2022, arXiv:2201.11903.

24. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models are Zero-Shot Reasoners. arXiv 2022, arXiv:2205.11916.

25. Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.H.; Zhou, D. Self-Consistency Improves Chain of Thought Reasoning in Language Models. arXiv 2022, arXiv:2203.11171.

26. Liu, J.; Liu, A.; Lu, X.; Welleck, S.; West, P.; Le Bras, R.; Choi, Y.; Hajishirzi, H. Generated Knowledge Prompting for Commonsense Reasoning. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022.

27. Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.L.; Cao, Y.; Narasimhan, K. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv 2023, arXiv:2305.10601.

28. Long, J. Large Language Model Guided Tree-of-Thought. arXiv 2023, arXiv:2305.08291.

29. Paranjape, B.; Lundberg, S.M.; Singh, S.; Hajishirzi, H.; Zettlemoyer, L.; Ribeiro, M.T. ART: Automatic multi-step reasoning and tool-use for large language models. arXiv 2023, arXiv:2303.09014.

30. Gao, L.; Madaan, A.; Zhou, S.; Alon, U.; Liu, P.; Yang, Y.; Callan, J.; Neubig, G. PAL: Program-aided Language Models. arXiv 2022, arXiv:2211.10435.

31. Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; Cao, Y. ReAct: Synergizing Reasoning and Acting in Language Models. arXiv 2022, arXiv:2210.03629.

32. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020. NIPS’20.

33. National Security Agency (NSA); Cybersecurity Directorate; Endpoint Security; Cybersecurity and Infrastructure Security Agency (CISA). Kubernetes Hardening Guide. 2022. Available online: https://media.defense.gov/2022/Aug/29/2003066362/-1/-1/0/CTR_KUBERNETES_HARDENING_GUIDANCE_1.2_20220829.PDF (accessed on 7 September 2025).

34. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

35. Ye, J.; Chen, X.; Xu, N.; Zu, C.; Shao, Z.; Liu, S.; Cui, Y.; Zhou, Z.; Gong, C.; Shen, Y.; et al. A Comprehensive Capability Analysis of GPT-3 and GPT-3.5 Series Models. arXiv 2023, arXiv:2303.10420.

36. Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

37. Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; Casas, D.d.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.

38. Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Yang, A.; Fan, A.; et al. The llama 3 herd of models. arXiv 2024, arXiv:2407.21783.

39. Lin, J.; Tang, J.; Tang, H.; Yang, S.; Dang, X.; Han, S. AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration. arXiv 2023, arXiv:2306.00978.

40. Chen, M.; Tworek, J.; Jun, H.; Yuan, Q.; Pinto, H.P.d.; Kaplan, J.; Edwards, H.; Burda, Y.; Joseph, N.; Brockman, G.; et al. Evaluating Large Language Models Trained on Code. arXiv 2021, arXiv:2107.03374.

| LLM | Service | GPU | VRAM (GiB) | Phase | Remarks |
|---|---|---|---|---|---|
| GPT-4 | Managed | ? | ? | 1 + 2 | OpenAI (details unknown, [34]) |
| GPT-4o | Managed | ? | ? | 1 | OpenAI (details unknown, [34]) |
| GPT-3.5-turbo | Managed | ? | ? | 1 + 2 | OpenAI (details unknown, [35]) |
| Llama2 13B | Self-host | A6000 | 46.8 | 1 | Chat model [36] |
| Llama2 7B | Self-host | A2 or A4000 | 14.7 | 1 | Chat model [36] |
| Mistral 7B | Self-host | A2 or A4000 | 10.8 | 1 | Fine-tuned for coding [37] |
| Llama3 70B | Self-host | A6000 | 47.8 | 2 | Instruct model [38] |
| Llama3 13B | Self-host | A6000 | 23.3 | 2 | Instruct model [38] |
| Llama3 8B | Self-host | A2 or A4000 | 14.3 | 2 | Instruct model [38] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Beuter, N.; Drews, A.; Kratzke, N. Prompt-Driven and Kubernetes Error Report-Aware Container Orchestration. Future Internet 2025, 17, 416. https://doi.org/10.3390/fi17090416
