1. Introduction

Notwithstanding their generative fluency, Large Language Models (LLMs) are fundamentally constrained by their static pretraining data, often resulting in outdated or unverifiable content, particularly in dynamic or knowledge-intensive settings [1].

To mitigate this, retrieval-augmented generation (RAG) has emerged as a popular paradigm that supplements LLMs with external documents retrieved at inference time [2, 3]. However, RAG approaches typically depend on auxiliary infrastructure (search engines, knowledge graphs, or vector databases) that complicates deployment in offline, privacy-sensitive, or resource-constrained scenarios [4]. Moreover, when external sources are unavailable or incomplete, hallucinations may persist.

In contrast, prompt engineering and augmentation offer infrastructure-light alternatives to steer LLMs more effectively without model retraining [5]. However, these methods often depend on heuristic rules or static templates, lack explainability, and cannot dynamically prioritize the most relevant information, especially in complex or open-domain tasks [6, 7].

To address these challenges, we propose a novel framework that replaces external retrieval systems with an orchestrated workflow of lightweight LLM modules. Rather than relying on a document store, we treat a dedicated LLM module as a neural knowledge component that evaluates the salience of information already present in the prompt. This approach eliminates infrastructure dependencies while maintaining adaptability and transparency.

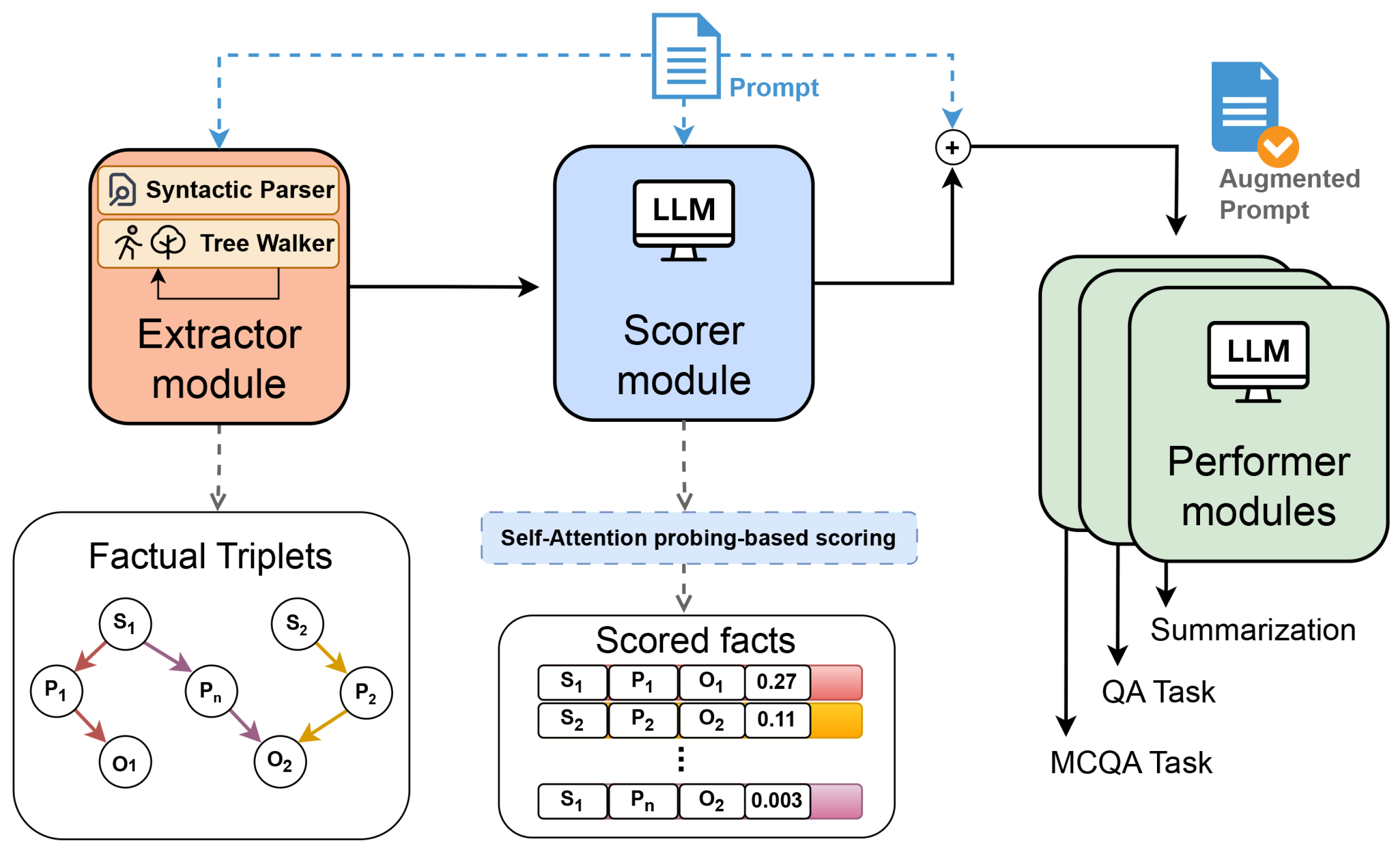

We introduce LLMs in Staging, an explainable orchestration pipeline for prompt augmentation that operates without external structured retrieval. Inspired by theatrical staging, our workflow decomposes the augmentation process into three coordinated roles: (1) an extractor module identifies factual triples from the input by combining syntactic parsing with a rule-based tree traversal, a representation that preserves explicit semantic relationships while remaining compact and machine-readable; (2) a dedicated scorer module, based on a generic lightweight LLM, evaluates the importance of each triple using self-attention signals that reflect the model’s internal beliefs, and because it operates on fact triples it can trace back and reason over discrete relational units, which makes the entire workflow more transparent and interpretable; (3) a performer module processes the augmented prompt for downstream tasks in supervised fine-tuning or zero-shot settings.

Figure 1 shows the main elements of the proposed architecture.

This design is grounded in the view that LLMs encode knowledge through self-attention and can act as modular, updatable components [8, 9]. By delegating the scoring task to a dedicated LLM, we can independently refine how importance is judged, for example, by swapping in a domain-specific scorer, without retraining the performer model or relying on external data sources.

Our architecture addresses three critical limitations in existing systems: (a) retrieval-infrastructure dependency, which hinders deployment in constrained environments; (b) heuristic fragility, by replacing static, rule-based selection with model-informed fact evaluation; and (c) opacity in augmentation, by introducing a traceable and interpretable process that facilitates auditability for high-trust applications [10, 11].

Our contributions are as follows:

We introduce an explainable and infrastructure-free prompt augmentation framework, replacing external retrieval with an orchestrated LLM workflow.

We propose a novel fact scoring technique that uses an LLM’s self-attention signals to identify salient information based solely on the prompt, without requiring external content.

We empirically validate our method on multiple model types (encoder-only, decoder-only, encoder–decoder) and tasks, including multiple-choice QA, open-book QA, and summarization.

We demonstrate that decoupling extraction, scoring, and execution enhances modularity, supports structured audit trails, and enables lightweight improvements.

This paper is organized as follows: Section 2 provides an overview of related research on prompt augmentation and workflow-based orchestration of large language models, and positions our approach within this context. Section 3 details the proposed methodology, describing the extractor, scorer, and performer modules. Section 4 outlines the experimental setup, including the tasks, datasets, evaluation metrics, and prompting strategies. Section 5 presents and analyzes the empirical results across multiple downstream architectures and tasks, highlighting both overall performance and ablation insights. Finally, Section 6 discusses the implications of our findings, and Section 7 concludes the paper.

2. Literature Review

2.1. Prompt Augmentation

Prompt augmentation has emerged as a lightweight alternative to model retraining, with the aim of guiding LLMs towards more accurate, coherent, or task-specific outputs. Early approaches relied on manually crafted templates and demonstrations [5, 12], with few-shot learning becoming a widely adopted strategy. Subsequent work introduced dynamic prompt composition, such as Chain-of-Thought (CoT) prompting [13], which encourages intermediate reasoning steps, and Self-Consistency [14], which aggregates multiple sampled outputs to improve reliability. More recent advances explore automatic augmentation via retrieved examples, retrieved explanations [15], or model-generated knowledge [16]. However, most of these techniques depend on external retrieval pipelines or heuristic sampling, lacking structured and interpretable mechanisms for prioritizing the most salient information within the prompt itself.

While RAG [2, 3, 17] significantly improves factuality by injecting retrieved documents into the prompt context, its reliance on search engines, knowledge graphs, or dense retrieval systems [4] imposes operational complexity. Furthermore, hallucinations may persist when retrieval fails, and maintaining up-to-date external indexes is nontrivial in dynamic or privacy-sensitive settings [18]. Infrastructure-free alternatives capable of enriching model inputs without external dependencies are, therefore, highly desirable. Retrieval-free methods probe internal model knowledge, for example, through factual probing [8], knowledge neurons [9], or self-reflective prompting [19], showing that LLMs can be queried as implicit neural knowledge bases. These insights motivate intra-model harvesting strategies, where knowledge extracted by one prompt or module can be restructured to guide subsequent tasks [20].

2.2. Workflow-Based Orchestration of LLMs

A parallel line of research explores structuring LLM applications into explicit workflows, where different modules or prompting stages are coordinated in pipelines. Toolformer [21] and related systems demonstrate how LLMs can call external tools in structured sequences. Frameworks such as LangChain (https://www.langchain.com, accessed on 21 November 2025) and Semantic Kernel (https://learn.microsoft.com/en-us/semantic-kernel, accessed on 21 November 2025) generalize this idea by enabling modular orchestration and integration with procedural components. Reasoning pipelines like Program of Thoughts [22] or Reflexion [23] organize multi-step prompting to enhance consistency and correctness. Other works explore orchestration of multiple LLMs for task decomposition, planning, or role-playing [24, 25, 26], highlighting the potential of coordinated modular architectures. However, these approaches mainly address complex reasoning, tool use, or planning, and rarely target structured prompt augmentation or internal fact scoring.

2.3. Positioning of Our Work

In contrast to both retrieval-based augmentation and workflow approaches focused on planning or tool use, our work introduces an orchestrated LLM workflow specifically designed for explainable prompt augmentation. By structuring the process into dedicated modules (fact extractor, importance scorer, and performer), we enable infrastructure-free, auditable, and dynamically adaptive input enhancement based solely on internal model reasoning. This situates our method at the intersection of prompt augmentation and workflow orchestration, offering a lightweight, interpretable, and low-cost alternative to RAG for static, constrained, or high-trust deployment contexts.

3. Methodology

The overall design is conceptually inspired by the multi-agent paradigm, where independent yet concurrent actors contribute to a common objective by exchanging asynchronous messages [27]. Our framework adopts a cooperative workflow in which the augmentation process is decomposed into three specialized components that interact and exchange information, each fulfilling a distinct role: (1) an extractor module identifies structured information from the input text in the form of factual triples; (2) a scorer module evaluates these triples by ranking them according to its internal attention-based beliefs; and (3) a performer module processes the augmented prompt, constructed by concatenating the original input text with the corresponding factual triples, to support downstream tasks.

We represent extracted knowledge as triples because this structured format provides a clear, interpretable abstraction of factual relationships while remaining lightweight and domain-agnostic. Triples naturally align with the organization of knowledge graphs, facilitating modular updates, integration into downstream reasoning processes, and explicit traceability between the extracted content and the scorer’s internal assessments. This structured representation thus enhances both transparency and interpretability throughout the workflow.

Much as in a theatrical staging, the supporting modules work “behind the scenes” to improve the quality of the performer’s final output (see Figure 1 for an overview of the architecture).

3.1. Extractor Module

The extractor module is a task-agnostic module that leverages a probabilistic language model in conjunction with a probabilistic context-free grammar to identify factual information embedded in the original prompt, guided by its syntactic structure. Extraction is performed at the sentence level, facilitating deployment in resource-constrained environments. It generates an interpretable factual skeleton, which serves as the foundational structure for the scorer module to derive its own belief representation to support the performer module. The extractor module represents the factual information in an input text as triples. Formally, given an input text X, the extractor module produces a set of factual triples, each consisting of a subject, a relation, and an object, where each of these components is itself a set of words.

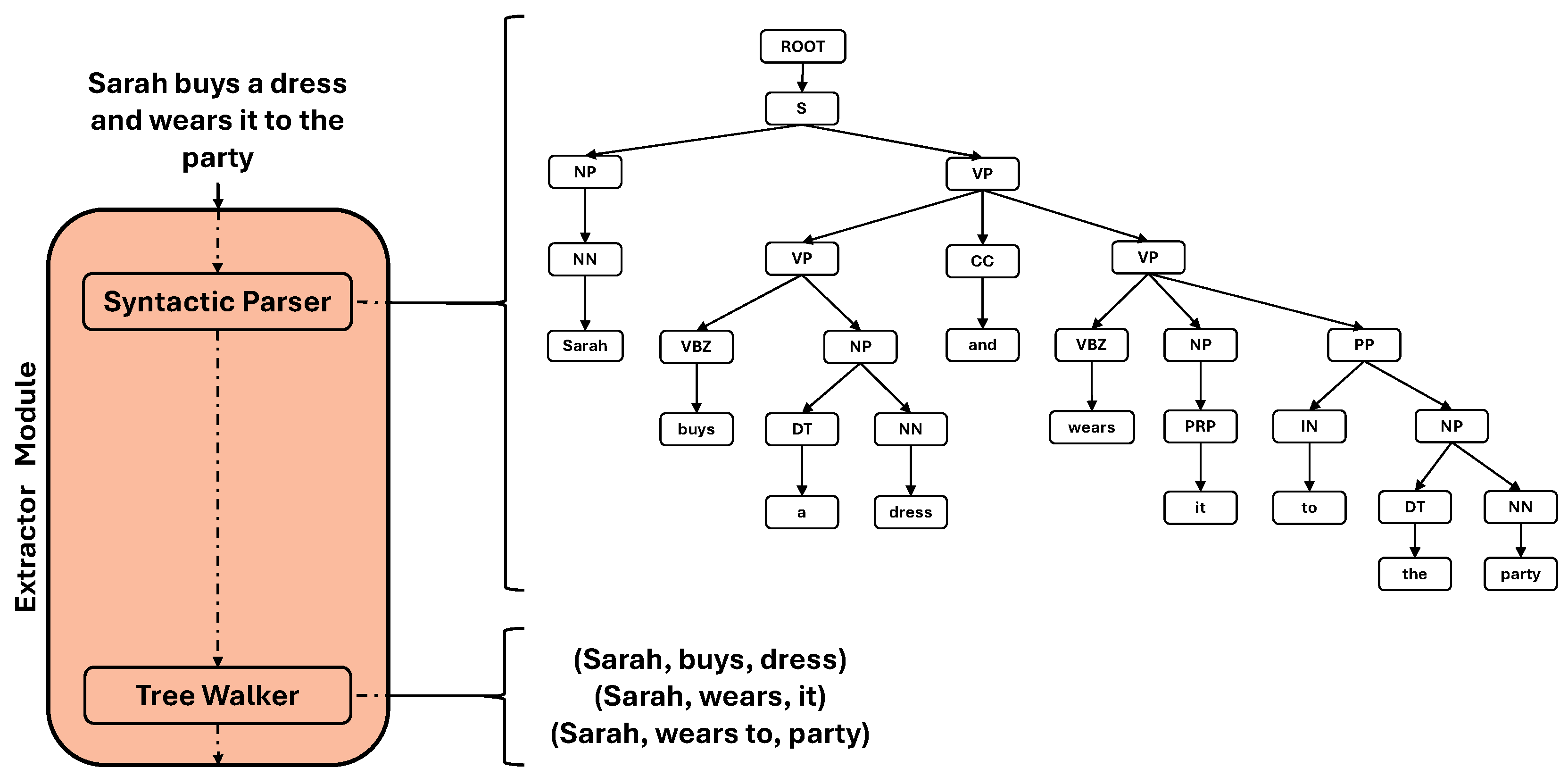

To carry out the extraction, the module employs a hybrid approach that combines syntactic parsing with a rule-based traversal of the resulting constituency tree to extract factual triples.

3.1.1. Syntactic Parsing

As an initial step, we apply the constituency parser from Stanza [28] to each sentence in the input text. This component produces a phrase-structure tree that captures the hierarchical grammatical organization of the sentence, based on a probabilistic context-free grammar.

Specifically, the parser (1) tokenizes the sentence, (2) performs Part-of-Speech (POS) tagging, and (3) generates a constituency parse tree representing the syntactic structure. An illustrative example of the resulting parse is shown in Figure 2.

3.1.2. Facts Extraction via Tree Traversal

Given the constituency trees, the extractor module leverages a suite of mutually recursive procedures, each specialized for a particular constituent type (see Table 1 for a complete list and definitions), to identify candidate triples (see Figure 2 for an example). Although this implementation superficially resembles a recursive-descent parser, it does not perform any grammar-based analysis or grammaticality checks of the text. Instead, it operates solely on the precomputed constituency parse trees, without relying on any grammar rules, look-ahead mechanisms, or runtime production checks. In other words, the algorithm functions purely as a recursive tree-walker that applies a fixed set of pattern-driven rules to traverse the parse structure and extract relational triples.

At a high level, the algorithm proceeds as follows:

Root scan: Identify all top-level clauses (S, SQ, SINV, SBAR) and dispatch them to parseS or parseSBAR.

Subject extraction: In parseS, locate noun-phrase (NP) children and collect them as subjects through the parseNP procedure.

Modifier attachment: Still in parseS, if subjects are found, look for adjacent PPs, ADJPs, and ADVPs to emit extra triples using an inferred-relation.

Predicate-object discovery: Recurse into verb-phrase (VP) nodes through the parseVP procedure and collect verb tokens. Then, for each embedded NP/PP/ADJP/ADVP, recurse to parse its object and emit new candidate triples. If no explicit object appears, emit an inferred-object triple.

Substructure recursion: Whenever a nested clause or coordination (e.g., UCP, S/SBAR) is encountered, re-invoke parseS, parseSBAR, parseNP, etc., threading through any active subject or verb context.

Post-processing: Combine triples with identical or , and remove any exact duplicates to avoid redundancy.

Thus, no stage of the extraction pipeline, including root scan, subject extraction, or any subsequent procedure, performs grammatical verification beyond the structural information already encoded in the input parse trees.
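The traversal steps above can be illustrated with a minimal sketch. It is not the authors' implementation: the `Node` class, the `leaf` helper, the `<inferred-object>` placeholder string, and the reduced rule set (only NP subjects, VP predicates, and NP/PP/ADJP/ADVP complements, with no SBAR handling or modifier attachment) are all assumptions made for illustration. It walks a precomputed constituency tree and never consults a grammar.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    label: str                                   # constituent label or POS tag
    children: list = field(default_factory=list)
    word: str = ""                               # set only on leaf nodes

def leaf(tag, word):
    """Hypothetical helper that builds a POS-tagged leaf."""
    return Node(tag, word=word)

def words(node):
    """Collect the surface words under a node, left to right."""
    if node.word:
        return [node.word]
    return [w for child in node.children for w in words(child)]

INFERRED_OBJECT = "<inferred-object>"            # placeholder name (assumption)

def parse_vp(node, subject, triples, verbs=()):
    """Verb-phrase rule: gather verb tokens, then emit one triple per complement."""
    verbs = verbs + tuple(c.word for c in node.children if c.label.startswith("VB"))
    complements = [c for c in node.children if c.label in {"NP", "PP", "ADJP", "ADVP"}]
    nested = [c for c in node.children if c.label == "VP"]
    for comp in complements:
        triples.append((subject, verbs, tuple(words(comp))))
    for vp in nested:                            # thread the verb context downwards
        parse_vp(vp, subject, triples, verbs)
    if not complements and not nested:           # no explicit object was found
        triples.append((subject, verbs, (INFERRED_OBJECT,)))

def parse_s(node, triples):
    """Clause rule: NP children become subjects, VP children are recursed into."""
    subjects = [tuple(words(c)) for c in node.children if c.label == "NP"]
    for child in node.children:
        if child.label == "VP":
            for subject in subjects:
                parse_vp(child, subject, triples)

def extract(root):
    """Root scan: dispatch each top-level clause, then drop exact duplicates."""
    triples, stack = [], [root]
    while stack:
        node = stack.pop()
        if node.label in {"S", "SQ", "SINV"}:
            parse_s(node, triples)
        else:
            stack.extend(reversed(node.children))
    return list(dict.fromkeys(triples))          # order-preserving deduplication

# Usage: a hand-built tree for "The committee decided".
tree = Node("ROOT", [Node("S", [
    Node("NP", [leaf("DT", "The"), leaf("NN", "committee")]),
    Node("VP", [leaf("VBD", "decided")]),
])])
print(extract(tree))
```

Because every triple is appended by exactly one rule, each output can be traced back to the node pattern that produced it, which is the property the tree-walker design relies on.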

3.1.3. Inferred Elements

During traversal, the extractor may generate additional triples involving either an inferred-relation or an inferred-object.

An inferred-relation is emitted when a modifier (e.g., prepositional phrase or adjective phrase) attaches to an identified subject or object but lacks an explicit predicate, thereby implying a relation of the form . For example, from the sentence “I bought a new red shirt this morning”, the triple represents an inferred relation between the noun and its adjectival modifiers.

Conversely, an inferred-object is produced when a verb phrase lacks an explicit syntactic complement, allowing the system to capture underspecified relations of the form . For instance, from the sentence “The committee decided”, the triple represents an inferred object, since the verb lacks a direct complement.

3.1.4. Plug-and-Play Design and Interpretability

As a task-agnostic component, the extractor module is designed to be plug-and-play and can be seamlessly integrated into existing NLP workflows. Unlike neural relation-extraction architectures, which typically require extensive supervised training and yield models with limited interpretability [29], our tree-traversal strategy provides a fully transparent and deterministic mechanism for identifying factual triples. Each extracted triple can be explicitly traced back to a specific syntactic pattern within the constituency tree, ensuring complete explainability of both the extraction logic and the resulting output.

This design not only enhances interpretability but also maintains a low computational footprint: the entire extraction process runs efficiently on standard CPU hardware, without requiring GPUs or large-scale inference pipelines. Consequently, the approach achieves a good balance between transparency, domain-agnostic generality, and computational efficiency.

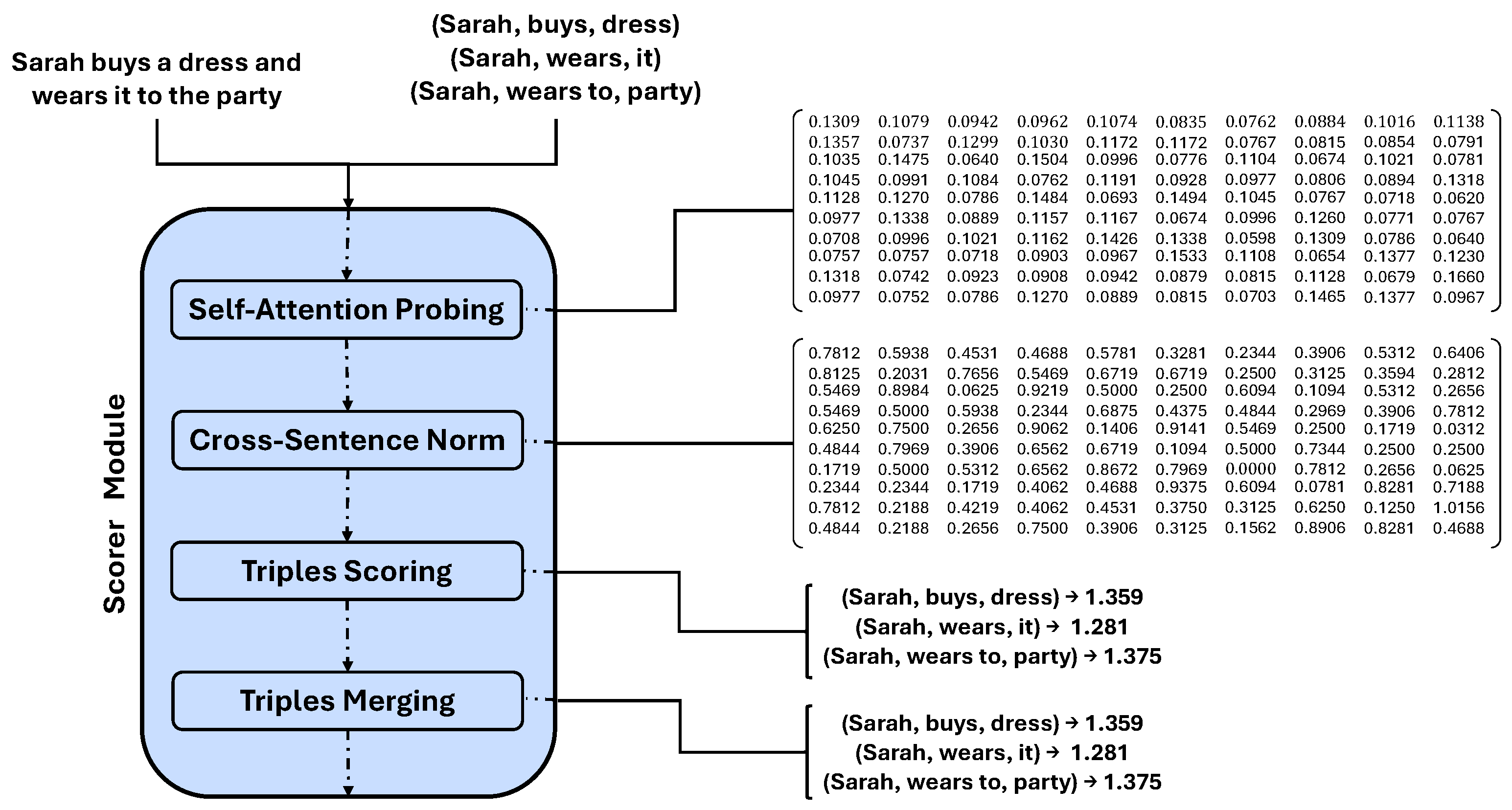

3.2. Scorer Module

The scorer module operates as a lightweight module built upon a single pre-trained encoder-only transformer architecture, in our implementation DeBERTa-v3 [30]. It assigns each candidate triple a non-negative relevance score, reflecting how strongly the model “attends” to the relation in its internal representations, which we interpret as a proxy for its internal belief about factual relevance. This module acts as a neural knowledge base by leveraging the relationships identified during generic English pre-training. Formally, given an input text X and a set of factual triples T produced by the extractor module, the scorer module computes a relevance score for each triple in T.

An illustrative example of a scoring pipeline is provided in Figure 3.

3.2.1. Model Selection and Explainability

An encoder-only design is critical, since full bidirectional self-attention over all token pairs is required to meaningfully assess inter-token relations. Decoder-only or encoder–decoder models either lack “future” token context or underutilize their decoder parameters, respectively. In contrast, encoder-only architectures support complete context modeling and can be continuously pre-trained to incorporate up-to-date domain knowledge [31]. Among state-of-the-art encoders, DeBERTa-v3 achieves leading benchmark results in contextual understanding compared with other lightweight models, such as BERT and RoBERTa, making it a suitable backbone for our scoring module [30].

The scorer remains fully transparent: raw self-attention weights are directly inspected and aggregated to yield , without any opaque fine-tuning or additional neural generation layers. This interpretability distinguishes it from neural relation-scoring architectures, which typically obscure the rationale behind individual scores and require resource-intensive inference. By contrast, our scorer maintains both analytical transparency and computational efficiency, enabling traceable and reproducible evaluation of each extracted triple.

3.2.2. Self-Attention Probing

To compute a relevance score for each triple, the scorer module probes its internal self-attention weights. Each transformer layer and attention head produces an n × n attention matrix, where n is the number of tokens in the sentence. The module first aggregates these token-level weights into word-level matrices, whose side length is the number of words in the sentence, via a simple pooling over sub-token spans. Next, it pools layers and heads by taking an element-wise maximum and applies a row-wise softmax so that each row sums to one.
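The probing steps can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names are hypothetical, attention is passed in as nested lists indexed `[layer][head][row][column]`, word spans are half-open token ranges, and max is assumed for the sub-token pooling, which the text only describes as "a simple pooling".

```python
import math

def to_word_level(token_attn, spans):
    """Pool a token-level matrix into a word-level one (max over sub-token spans)."""
    return [[max(token_attn[a][b] for a in range(*src) for b in range(*dst))
             for dst in spans] for src in spans]

def pool_layers_heads(word_mats):
    """Element-wise maximum over all (layer, head) word-level matrices."""
    size = len(word_mats[0])
    return [[max(mat[i][j] for mat in word_mats) for j in range(size)]
            for i in range(size)]

def row_softmax(matrix):
    """Row-wise softmax, so that every row sums to one."""
    result = []
    for row in matrix:
        top = max(row)                               # subtract max for stability
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

def probe(attentions, spans):
    """attentions[layer][head] is an n x n matrix; spans are (start, end) token ranges."""
    word_mats = [to_word_level(head, spans) for layer in attentions for head in layer]
    return row_softmax(pool_layers_heads(word_mats))

# Usage: one layer, two heads, three tokens; the second word covers tokens 1 and 2.
head_a = [[0.2, 0.5, 0.3], [0.1, 0.1, 0.8], [0.6, 0.2, 0.2]]
head_b = [[0.9, 0.05, 0.05], [0.3, 0.3, 0.4], [0.3, 0.4, 0.3]]
print(probe([[head_a, head_b]], spans=[(0, 1), (1, 3)]))
```

With a real encoder, the nested lists would be filled from the model's per-layer attention outputs and the spans from the tokenizer's word alignment.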

3.2.3. Cross-Sentence Normalization

Unnormalized attention matrices vary in scale with sentence length: longer sentences generally yield lower attention weights, while shorter ones yield higher weights. Consider the unnormalized attention matrices of k different sentences. To make scores comparable across sentences, the module applies:

A natural logarithm transform, converting multiplicative distances to additive scales;

A length-adjustment term to each entry, counteracting the dilution effect in longer inputs;

A global shift by subtracting the minimum value observed from all sentence matrices.

Thus, for each sentence i, we obtain a normalized matrix that guarantees non-negative, length-compensated attention scores suitable for cross-sentence comparison.
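The three normalization steps can be sketched as below. The exact length-adjustment term is not reproduced in the text, so this sketch assumes it is the natural logarithm of the number of words in the sentence; that choice, like the function name, is an assumption made for illustration. Entries are expected to be strictly positive, as they are after a softmax.

```python
import math

def normalize_across_sentences(matrices):
    """Apply log transform, length adjustment, and a global shift to word-level matrices."""
    adjusted = []
    for mat in matrices:
        # ASSUMPTION: the length-adjustment term is log(number of words).
        length_term = math.log(len(mat))
        adjusted.append([[math.log(v) + length_term for v in row] for row in mat])
    # Global shift: subtract the minimum observed over all sentence matrices.
    global_min = min(v for mat in adjusted for row in mat for v in row)
    return [[[v - global_min for v in row] for row in mat] for mat in adjusted]

# Usage: uniform attention over 2 words and over 3 words.
short = [[0.5, 0.5], [0.5, 0.5]]
longer = [[1 / 3] * 3 for _ in range(3)]
print(normalize_across_sentences([short, longer]))
```

Under this assumed term, uniform attention in a two-word and a three-word sentence maps to (numerically) the same score, which is the kind of length compensation the normalization is meant to provide.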

3.2.4. Triple-Level Scoring

For each candidate triple, the scorer module extracts six pairwise attention scores by taking the maximum entry in the normalized attention matrix between the word spans of each pair of elements. The final relevance score combines these pairwise scores, capturing both forward and backward attention strength along the predicate chain.

This metric enables the retention of only the most salient relations, mitigating noisy or redundant extractions. This is crucial, for example, to satisfy practical constraints, such as input token limits, where selecting a subset of high-impact triples is necessary.

In our implementation, we adopt max-pooling when aggregating attention values between token spans. We empirically compared this with mean-pooling and weighted-mean pooling strategies and observed that max-pooling provides more robust relevance estimation. This effect can be attributed to the highly peaked nature of attention distributions in transformer models: averaging operations tend to flatten these spikes, thereby attenuating the most informative cross-token interactions.
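The six pairwise scores can be illustrated with the following sketch, which applies max-pooling between the word spans of every ordered pair of triple elements. The function names and the dictionary layout are hypothetical, and the sketch deliberately stops before the final combination step, since that formula is not reproduced here.

```python
from itertools import permutations

def span_max(matrix, src, dst):
    """Maximum attention from any word in `src` to any word in `dst`."""
    return max(matrix[i][j] for i in src for j in dst)

def pairwise_scores(matrix, triple):
    """Return the six ordered-pair scores for a triple.

    `triple` maps 'subject', 'relation', and 'object' to lists of word indices
    into the word-level attention matrix (a hypothetical layout).
    """
    return {(a, b): span_max(matrix, triple[a], triple[b])
            for a, b in permutations(("subject", "relation", "object"), 2)}

# Usage: a toy 4-word sentence where the object spans words 2 and 3.
attn = [
    [0.1, 0.6, 0.2, 0.1],
    [0.3, 0.1, 0.5, 0.1],
    [0.2, 0.2, 0.1, 0.5],
    [0.4, 0.1, 0.4, 0.1],
]
triple = {"subject": [0], "relation": [1], "object": [2, 3]}
for pair, score in pairwise_scores(attn, triple).items():
    print(pair, score)
```

Each ordered pair appears once, so the forward direction (for example subject to relation) and the backward direction (relation to subject) are kept as separate scores.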

3.2.5. Triples Merging

To capture multi-hop dependencies, the scorer module merges sequences of triples sharing overlapping arguments. Given a chain of such triples, the module forms an extended tuple and assigns it an aggregate score. This formulation rewards cumulative relevance while penalizing excessively long or speculative paths, thereby producing concise, high-impact fact sequences for downstream use.

3.2.6. Efficient Parallel Execution and Scalability

Because both attention extraction and triple generation operate on the same input text, the extractor and scorer modules can execute in parallel, further reducing end-to-end latency. Subsequent operations (i.e., matrix pooling, aggregation, normalization, and max-pooling) are all GPU-optimized tensor operations, making the scoring process practically instantaneous. Moreover, the training-free design affords plug-and-play integration with any transformer encoder, ensuring broad applicability across domains without architectural modification.

3.3. Performer Module

The performer module represents the terminal stage of our workflow, in charge of executing the downstream task on an enriched version of the original prompt. Unlike the extractor and scorer modules, which operate “behind the scenes”, the performer is the visible actor whose output defines the system’s observable behavior. Formally, given an input text X, a set of factual triples T, and their relevance scores, the performer module carries out the downstream task on the augmented prompt, which is the input fed into the downstream model.

3.3.1. Augmented Prompt Construction

The performer module receives three inputs (X, T, and the relevance scores) and, based on task specifications and runtime constraints (e.g., token budget, model capacity), selects a subset of the highest-scoring triples. These selected triples are then serialized and incorporated into the prompt by prepending, appending, or interleaving them with the original text. The resulting augmented prompt provides explicit factual grounding, intended to boost performance on tasks such as classification, summarization, or question answering.
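A minimal sketch of budget-constrained prompt construction is given below. The serialization format, the "Facts:" header, the whitespace token counter, and the function name are all assumptions for illustration; a real deployment would count tokens with the performer's own tokenizer. The sketch appends triples, which is only one of the three placements mentioned above.

```python
def build_augmented_prompt(text, triples, scores, token_budget, count_tokens=None):
    """Append the highest-scoring triples to `text` while staying within a budget.

    The budget covers the original text plus the serialized triple lines;
    the short header line is not counted in this sketch.
    """
    if count_tokens is None:
        count_tokens = lambda s: len(s.split())      # crude whitespace approximation
    ranked = sorted(zip(triples, scores), key=lambda pair: pair[1], reverse=True)
    remaining = token_budget - count_tokens(text)
    lines = []
    for (subject, relation, obj), _ in ranked:
        line = f"({subject}; {relation}; {obj})"     # hypothetical serialization
        cost = count_tokens(line)
        if cost > remaining:                         # stop at the first triple that does not fit
            break
        lines.append(line)
        remaining -= cost
    if not lines:
        return text
    return text + "\n\nFacts:\n" + "\n".join(lines)

# Usage with toy inputs.
prompt = build_augmented_prompt(
    "The committee approved the budget on Monday.",
    [("committee", "approved", "budget"), ("budget", "approved on", "Monday")],
    [0.9, 0.4],
    token_budget=12,
)
print(prompt)
```

Stopping at the first triple that does not fit keeps the selection strictly rank-ordered; skipping it and trying lower-ranked, shorter triples would be an equally plausible policy.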

3.3.2. Plug-and-Play Compatibility with Downstream Models

Because prompt augmentation occurs entirely at the input level, the performer module imposes no requirements on the internal architecture of the downstream model. This model-agnostic design supports seamless integration in both supervised fine-tuning and zero-shot scenarios, without any additional training or parameter modifications. To demonstrate this versatility, we benchmark the performer with three different types of LLM architectures: encoder-only, encoder–decoder, and decoder-only.

4. Experimental Setup

This section outlines the experimental design used to assess the effectiveness of the proposed LLMs in Staging framework. We evaluate its impact across a range of natural language understanding and generation tasks by comparing the performance of performer modules under our augmented prompting strategy versus the standard prompt. The following subsections detail the tasks, datasets, evaluation metrics, model configurations, and baselines employed in our study.

4.1. Tasks, Datasets, and Evaluation Metrics

We benchmark our approach across three representative task categories, covering both reasoning-oriented and generative use cases. An overview of tasks and datasets is provided in Table 2.

4.1.1. Multiple-Choice Question Answering (MCQA)

In this task, the model is given a question along with a fixed set of candidate answers, exactly one of which is correct. This setting assesses the model’s capacity for discrete reasoning, factual recall, and contextual inference. We evaluate performance on three datasets: RACE [32] and MCTest [33], which involve reading comprehension over relatively short prompts, and the more challenging QuALITY [34], which features substantially longer contexts. The evaluation metric is Accuracy, defined as the proportion of correctly predicted answers over the total number of questions.

4.1.2. Open-Book Question Answering (QA)

In this task, the model must extract or synthesize answers from background documents, simulating scenarios that require factual grounding and document-level comprehension. We consider two datasets: (1) MS MARCO [35], which focuses on general-purpose QA over short web snippets, emphasizing concise fact-based responses, and (2) GovReportQA [36], which requires deeper comprehension and multi-hop reasoning across lengthy government reports. For this task, we report BLEURT [38] scores, which capture semantic similarity and correlate well with human judgments of answer quality.

4.1.3. Text Summarization

This task involves generating concise and coherent summaries that preserve the core information of longer source documents. It evaluates the model’s ability to abstract, condense, and prioritize salient content. We use the GovReport [37] dataset, consisting of over 19,000 U.S. government documents, such as Congressional Budget Office (CBO) and Government Accountability Office (GAO) reports, paired with expert-written executive summaries. This benchmark is particularly suited for assessing summarization quality in information-dense, high-stakes domains.

We evaluate using the ROUGE metrics (ROUGE-1, ROUGE-2, and ROUGE-L) [39], which measure the overlap of unigrams, bigrams, and longest common subsequences between the generated and reference summaries. ROUGE remains the standard for automatic summarization evaluation, offering a good balance between content coverage and linguistic fluency.
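To make the n-gram overlap concrete, the following sketch computes ROUGE-N precision, recall, and F1 with clipped n-gram counts. It is a simplified illustration rather than the evaluation code used in the experiments: it assumes lowercased whitespace tokenization, applies no stemming, and does not cover ROUGE-L; the function names are hypothetical.

```python
from collections import Counter

def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n(candidate, reference, n=1):
    """ROUGE-N precision, recall, and F1 from clipped n-gram overlap."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())     # Counter intersection clips the counts
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}

# Usage: unigram and bigram overlap for a toy pair.
print(rouge_n("the cat sat", "the cat sat down", n=1))
print(rouge_n("the cat sat", "the cat sat down", n=2))
```

Setting `n=1` and `n=2` corresponds to ROUGE-1 and ROUGE-2, respectively.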

Alternative metrics such as BLEU [40] and METEOR [41], though widely used in machine translation, are less suitable for summarization, as they tend to over-penalize lexical or phrasal variation and are less sensitive to paraphrasing. On the other hand, BERTScore [42] leverages contextual embeddings to capture semantic similarity, but it does not directly measure factual consistency with the reference, which is crucial in the summarization setting. For these reasons, we adopt ROUGE as the most appropriate and reliable metric for evaluating summarization performance in our study.

4.2. Core Prompting Strategies

We define two primary prompting configurations used to evaluate the LLMs in Staging framework. These serve as the core experimental conditions, against which all ablation variants are compared.

4.2.1. Vanilla Prompting

In this baseline condition, the performer operates in isolation from the upstream modules. The input consists solely of the original prompt, typically a context document, and a task-specific instruction. No factual extraction or scoring is performed, and neither the extractor nor the scorer module is invoked. This configuration establishes a reference point for measuring the contribution of structured prompt augmentation.

4.2.2. LLMs-in-Stage Augmentation

This condition reflects the complete instantiation of our proposed framework. The input document is first processed by the extractor, which generates a set of factual triples that capture salient content. These triples are then ranked by the scorer according to their contextual relevance. The performer receives an augmented prompt, comprising the original document and a subset of top-ranked triples. The number of triples is determined based on the available token budget and the context length of the performer. This approach introduces an explicit relevance-guided structure to the prompt, designed to improve the performer’s task effectiveness.

4.3. Ablation Prompting Strategies

To isolate the contributions of the extractor and scorer modules, we perform an ablation study with two configurations, each designed to selectively disable components of the framework.

4.3.1. Random-Scoring Augmentation

This variant targets the scorer’s role in the augmentation pipeline. The extractor produces factual triples as in the full framework. However, instead of relevance scoring, a dummy scorer assigns random scores to the triples. The augmented prompt is then constructed following the same token budget and formatting constraints used in LLMs-in-Stage Augmentation. This variant evaluates the importance of the scorer’s relevance estimation in the augmentation process.

4.3.2. Duplication Augmentation

In this configuration, the extractor and scorer modules are disabled and replaced with a dummy module that returns the input document unaltered. The resulting prompt, provided to the performer, consists of the original document concatenated with a duplicate. This condition tests whether performance improvements in the full framework result from meaningful augmentation or simply increased input length or repetition.
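The four prompting conditions (vanilla, full augmentation, random scoring, and duplication) can be contrasted with a small sketch. The condition names, the function signature, and the use of a fixed number of triples `k` in place of a token budget are simplifying assumptions made for illustration.

```python
import random

def make_prompt(document, triples, scores, condition, k, seed=0):
    """Build the performer input for one experimental condition.

    condition: 'vanilla', 'staged', 'random', or 'duplication' (hypothetical names).
    k: number of triples to keep; a stand-in for the token-budget rule.
    """
    if condition == "vanilla":
        return document                              # no upstream modules involved
    if condition == "duplication":
        return document + "\n\n" + document          # same text repeated once
    if condition == "random":
        rng = random.Random(seed)                    # dummy scorer: random scores
        scores = [rng.random() for _ in triples]
    elif condition != "staged":
        raise ValueError(f"unknown condition: {condition}")
    ranked = sorted(zip(scores, range(len(triples))), reverse=True)[:k]
    facts = [" ".join(triples[i]) for _, i in ranked]
    return document + "\n\n" + "\n".join(facts)

# Usage with toy inputs.
doc = "The committee approved the budget on Monday."
facts = [("committee", "approved", "budget"), ("budget", "approved on", "Monday")]
for name in ("vanilla", "staged", "random", "duplication"):
    print(name, "->", repr(make_prompt(doc, facts, [0.9, 0.4], name, k=1)))
```

Keeping the selection and formatting code identical for the 'staged' and 'random' conditions ensures that any difference between them is attributable to the scores alone.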

5. Results

This section presents and analyzes the empirical performance of the LLMs in Staging framework, based on the experimental settings described in Section 4. Unlike traditional Retrieval-Augmented Generation (RAG) pipelines, our approach operates entirely without access to external knowledge sources. As detailed in Section 3, it leverages only the information already present within the original prompt. This design is intentional: LLMs in Staging is aimed explicitly at scenarios where RAG is not feasible, such as when no external documents are available, and only pretrained language models can be used. In these settings, our extractor and scorer modules (Section 3.1 and Section 3.2) restructure and enrich the given prompt to transparently enhance the model’s downstream performance.

5.1. Downstream LLMs’ Architectures

To assess the adaptability of our framework, we evaluate its performance across three major LLM architectures: encoder-only, encoder–decoder, and decoder-only. These categories represent distinct reasoning and context-handling paradigms, enabling a comprehensive analysis of prompt augmentation robustness.

Table 3 summarizes the full configuration space. For encoder-only models, we evaluate ModernBERT [43] and EuroBERT [44] on MCQA, using all prompting strategies. In the case of longer-input tasks, such as QuALITY, a capped strategy is applied, limiting input to either N tokens or the model’s maximum context window, where N is the document length. Encoder–decoder models LongT5 [45] and PegasusX [46] are assessed on QA and summarization tasks, respectively. Finally, decoder-only Qwen2.5 [47] models (0.5B, 7B) are evaluated in a zero-shot setting across all tasks, probing whether instruction-tuned models inherently benefit from structured factual augmentation.

5.2. MCQA Results

We evaluate accuracy improvements across three multiple-choice QA (MCQA) benchmarks using both encoder-only and decoder-only models under a range of prompting strategies (Table 4).

5.2.1. Overall Gains

The LLMs-in-Stage augmentation consistently outperforms vanilla prompting and ablation baselines, particularly for smaller models. Specifically,

ModernBERT achieves gains of up to on RACE, on MCTest, and on QuALITY.

EuroBERT sees improvements of up to , , and on the respective datasets.

Qwen2.5-0.5B demonstrates substantial gains: on RACE, on MCTest, and on QuALITY. In contrast, the larger Qwen2.5-7B experiences slight performance drops, ranging from to .

These results suggest that both encoder-only and decoder-only architectures benefit from our structured prompt augmentation, though in different ways. Encoder-only models, which are less receptive to instructions, exhibit only modest improvements. Decoder-only models, however, show more substantial gains, particularly at smaller scales, indicating they are better able to leverage structured prompt augmentation. For larger decoder-only models, the marginal benefit diminishes, likely due to their already strong baseline capabilities. Moreover, as expected given the nature of our prompting strategy, tasks with longer prompts (QuALITY) benefit more than those with shorter ones (RACE and MCTest). Note also that decoder-only models are used in a zero-shot setting, which supports the plug-and-play benefits of the architecture.

5.2.2. Ablation Insights

Document-Duplication consistently underperforms compared to Vanilla prompting, with relative changes ranging from to . This suggests that simply repeating the context does not enhance discrete reasoning capabilities. In contrast, LLMs-in-Stage augmentation significantly outperforms Document-Duplication, with improvements of up to , except in the case of larger models (e.g., Qwen2.5-7B), where the gains are less pronounced. These findings highlight the effectiveness of our structured prompt augmentation, which integrates contributions from the extractor and scorer modules. By selectively surfacing relevant information already present in the input, this collaboration enables the performer module to focus more precisely on the most salient facts, thereby enhancing the performance.

Random-scoring yields better results than both Vanilla and Document-Duplication, but falls short (up to ) of LLMs-in-Stage’s performance across all configurations. These findings underscore the key contribution of the scorer module in enhancing the performer’s performance.
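To make the compared prompting strategies concrete, the sketch below builds the four prompt variants side by side. It is illustrative only: the prompt templates, the function names, the `top_k` cutoff, and the reading of Random-scoring as "same pipeline with the scorer replaced by seeded random scores" are assumptions, not the exact configuration used in the experiments.

```python
import random


def vanilla_prompt(document, question):
    # Baseline: the document and the question, nothing else.
    return f"{document}\n\nQuestion: {question}"


def document_duplication_prompt(document, question):
    # Ablation: the context is simply repeated, with no extracted facts.
    return f"{document}\n\n{document}\n\nQuestion: {question}"


def augmented_prompt(document, question, triples, score_fn, top_k=2):
    # Rank (subject, predicate, object) triples by score and surface the top ones.
    ranked = sorted(triples, key=score_fn, reverse=True)[:top_k]
    facts = "\n".join(f"- {s} | {p} | {o}" for s, p, o in ranked)
    return f"{document}\n\nKey facts:\n{facts}\n\nQuestion: {question}"


def random_score_fn(seed=0):
    # Ablation scorer: a stable random score per triple (hypothetical stand-in
    # for the attention-based scorer).
    rng = random.Random(seed)
    cache = {}

    def score(triple):
        if triple not in cache:
            cache[triple] = rng.random()
        return cache[triple]

    return score


if __name__ == "__main__":
    triples = [("Mary", "bought", "a car"), ("the car", "is", "red")]
    print(augmented_prompt("Mary bought a red car.", "What did Mary buy?",
                           triples, random_score_fn(seed=0), top_k=1))
```

Swapping `random_score_fn` for a real scorer changes only the ranking step, which is why the gap between the two variants isolates the scorer's contribution.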

5.3. Open-Book QA Results

We assess the performance of LongT5 and Qwen2.5 (0.5B and 7B) on two open-book QA benchmarks (MS MARCO, GovReportQA) using BLEURT scores as the evaluation metric (Table 5). The main difference from the MCQA setting is that this is a pure text-generation task.

5.3.1. Overall Gains

The LLMs-in-Stage augmentation yields consistent performance improvements across all models and datasets. Specifically,

LongT5 achieves gains of up to on MS MARCO and on GovReportQA.

Qwen2.5-0.5B shows notable improvements, with increases of up to and on the respective datasets.

Unlike the MCQA task, which is inherently discriminative, open-book QA requires coherent and factually grounded text generation. In this generative setting, our structured prompt augmentation proves especially effective, enhancing not only answer relevance but also generation quality.

Decoder-only models, in a zero-shot setting, benefit most from the added context and focus introduced by the extractor and scorer modules, with Qwen2.5-0.5B (LLMs-in-Stage augmentation) achieving even higher performance () than Qwen2.5-7B (Vanilla prompting). LongT5’s modest improvements are probably due to its existing strengths in handling structured generative tasks.

5.3.2. Ablation Insights

Document-Duplication performs similarly to Vanilla prompting, with relative differences ranging from to , reinforcing the notion that context repetition offers limited value for factual grounding in generative settings. In contrast, Random-Scoring leads to modest gains over both baselines but still lags behind LLMs-in-Stage augmentation. These trends underscore the pivotal role of the extractor in identifying and surfacing relevant content, while the scorer module contributes further refinement, helping focus the performer model’s generative capabilities on high-quality, contextually relevant information.
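For readers reproducing the comparisons, a relative difference between a strategy and the Vanilla baseline can be computed as below. This is a small sketch under the assumption that "relative" means percentage change with respect to the baseline score; the numbers in the usage lines are placeholders and are not results from the paper.

```python
def relative_change(baseline, variant):
    """Percentage change of `variant` with respect to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline score must be non-zero")
    return (variant - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Placeholder scores for illustration only.
    print(relative_change(50.0, 55.0))
    print(relative_change(40.0, 38.0))
```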

5.4. Summarization Results

We evaluate summarization performance on the GovReport dataset using PegasusX and Qwen2.5 (0.5B and 7B), with ROUGE scores reported in Table 6.
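As a reminder of what ROUGE-L measures, the following sketch computes an F1-style ROUGE-L score from the longest common subsequence (LCS) of reference and candidate tokens. It is a simplified illustration, assuming lowercased whitespace tokenization and no stemming; it is not the official ROUGE package and may differ numerically from the scores reported in the paper.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of sequences a and b."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L F1 over whitespace tokens (simplified: no stemming)."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of candidate tokens in the LCS
    recall = lcs / len(ref)      # share of reference tokens in the LCS
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l_f1("the cat sat on the mat", "the cat lay on the mat"))
```

Because the LCS respects word order without requiring contiguity, ROUGE-L rewards summaries that preserve the reference's sentence-level structure.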

5.4.1. Overall Gains

LLMs-in-Stage augmentation consistently outperforms all baselines across both models. Specifically,

As with open-book QA, summarization is a generative task that requires not just factual grounding but also fluency, coherence, and compression. Our structured prompt augmentation proves highly effective in this setting, enabling models to better identify and focus on the most salient content. Notably, Qwen2.5-0.5B (LLMs-in-Stage augmentation) performs similarly () to Qwen2.5-7B (Vanilla prompting). The gains are particularly pronounced in larger decoder-only models, with performance improvements scaling proportionally to the number of model parameters.

5.4.2. Ablation Insights

Two key patterns emerge from the ablation analysis:

For PegasusX, Document-Duplication improves ROUGE-L by over Vanilla prompting but still underperforms relative to LLMs-in-Stage ().

For Qwen2.5, Document-Duplication performs comparably to Vanilla, ranging from to , yet it consistently lags behind LLMs-in-Stage by margins of to .

Random-scoring also trails LLMs-in-Stage across all configurations. These findings further reinforce the complementary roles of the extractor and scorer modules: the extractor surfaces key content from lengthy source documents, while the scorer prioritizes the most relevant elements to guide more focused and coherent summary generation. The benefit is especially evident in instruction-tuned architectures, but the encoder–decoder model (PegasusX) also leverages the augmented prompts effectively when appropriately guided.

6. Discussion

This section provides a reflective examination of the methodological choices and empirical findings presented in the paper. We outline how the proposed scorer leverages self-attention patterns, discuss the architectural decisions underlying its design, and identify both the limitations and prospective extensions of our approach. Together, these elements clarify the broader implications of our results and situate the method within ongoing research on factual grounding and model interpretability.

6.1. Interpretability of Self-Attention Beliefs

The scorer module relies on the self-attention patterns of an encoder-only transformer to quantify how strongly the model associates entities within a factual triple.

While attention weights do not directly measure causality, prior work has shown moderate correlations between attention intensity and interpretability in transformer architectures.

In our experiments, high attention activations often emerged between linguistically salient pairs, such as (subject, predicate) or (predicate, object), suggesting that the learned attention structure implicitly encodes relational salience. We therefore interpret these activations as self-attention beliefs, i.e., representational indicators of what the model considers relevant within a sentence, while acknowledging that this notion remains an approximation rather than a formal measure of factual grounding. Further investigation using controlled probing or causal attribution methods would be a valuable direction for future research.
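The idea of reading a salience signal from attention between the elements of a triple can be illustrated with a toy computation. The sketch below is an assumption-laden simplification and not the scoring function used in this work: it averages attention over heads, then takes the symmetrized mean attention between the subject and predicate spans and between the predicate and object spans. The function names, the symmetrization, and the equal weighting of the two pairs are hypothetical choices.

```python
def mean_head_attention(heads):
    """Element-wise average of a list of (seq x seq) attention matrices."""
    n = len(heads)
    size = len(heads[0])
    return [[sum(h[i][j] for h in heads) / n for j in range(size)]
            for i in range(size)]


def span_attention(attn, src, dst):
    """Mean attention between two token-index spans, averaged over both directions."""
    forward = sum(attn[i][j] for i in src for j in dst)
    backward = sum(attn[j][i] for i in src for j in dst)
    return (forward + backward) / (2 * len(src) * len(dst))


def triple_belief(heads, subj, pred, obj):
    """Toy salience score for a (subject, predicate, object) triple."""
    attn = mean_head_attention(heads)
    return 0.5 * (span_attention(attn, subj, pred)
                  + span_attention(attn, pred, obj))


if __name__ == "__main__":
    # Two hypothetical heads over a 3-token sentence: [subject, predicate, object].
    head_a = [[0.2, 0.6, 0.2], [0.3, 0.4, 0.3], [0.1, 0.7, 0.2]]
    head_b = [[0.4, 0.2, 0.4], [0.5, 0.0, 0.5], [0.3, 0.3, 0.4]]
    print(triple_belief([head_a, head_b], subj=[0], pred=[1], obj=[2]))
```

In practice the matrices would come from the encoder's attention outputs for the sentence containing the triple, with the spans given by the token positions of each element.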

6.2. Choice of Scorer Architecture

We selected DeBERTa-v3 as the backbone of the scorer module because it offers a favorable trade-off between semantic precision and computational efficiency.

Although lighter architectures achieve lower latency and parameter counts, they exhibit weaker representational power and produce less discriminative attention distributions, which are critical to our scoring function. Benchmark evidence [30] and internal validation confirmed that DeBERTa-v3 achieves stable, high-quality relevance estimates while remaining fast enough for integration into the full pipeline.

In future work, we plan to explore multi-agent scorer systems combining heterogeneous backbones, where smaller models could serve more latency-sensitive tasks without compromising overall factual quality.

6.3. Limitations and Future Work

Our method assumes that attention intensity can serve as a proxy for factual relevance. While empirically supported by prior analyses, this assumption may not universally hold across domains or tasks. Moreover, the current implementation uses a single encoder model; incorporating ensembles or cross-model calibration could enhance robustness.

Finally, although the present study focuses on summarization, question answering, and multiple-choice QA, the same mechanism could generalize to other tasks requiring factual grounding or consistency verification, such as fact verification, dialogue, and long-document reasoning.

7. Conclusions

We introduce LLMs in Staging, a retrieval-free LLM-based workflow that enables explainable and structured prompt augmentation for large language models. Our method yields significant improvements on both discriminative and generative tasks without external retrieval, and it is suitable for zero-shot use with decoder-only instruction-tuned models. The modular, explainable pipeline is plug-and-play across diverse LLM architectures, making it well suited to resource-constrained and privacy-sensitive settings.

Encoder-only and encoder–decoder models show modest yet consistent gains from augmentation, likely due to their limited ability to exploit structured prompts, while ablations confirm the value of cooperative interaction among the extractor, scorer, and performer modules.

In particular, the scorer’s attention-based mechanism demonstrates that transformer self-attention can serve as a practical indicator of factual salience: high-intensity attention patterns frequently align with semantically relevant subject–predicate–object relations, supporting the interpretability of our self-attention belief formulation.

The choice of DeBERTa-v3 as scorer backbone represents an effective compromise between semantic precision and computational efficiency, though future versions may explore hybrid architectures including lighter encoders.

Future work will extend the scorer module with mechanistic interpretability tools such as TunedLens to deepen the modeling of internal beliefs and enhance factual reasoning. We also plan to experiment with alternative salience signals, latency-optimized scorer ensembles, and integration with trustworthy-reasoning frameworks from recent fact-checking research, toward a more transparent and reliable use of internal model knowledge.

Author Contributions

Conceptualization, G.L.; methodology, G.T. and G.L.; software, G.T.; validation, M.T., G.T. and G.L.; resources, A.P. and S.C.; writing—original draft preparation, G.T. and G.L.; writing—review and editing, M.T. and S.C.; supervision, A.P.; funding acquisition, G.L. and S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the University of Parma through the Azione B grant “AGILE: Agentic Graph-based Interpretability and Layer-wise Extraction for Efficient Trust Verification in Large Language Models” (Principal Investigator: Dr. Gianfranco Lombardo). The Ph.D. fellowship of Giuseppe Trimigno is funded by Regione Emilia-Romagna under PR FSE+ 2021-2027.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All datasets supporting the reported results can be found on Hugging Face (https://huggingface.co/, accessed on 21 November 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 248.

2. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

3. Izacard, G.; Grave, E. Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. In Proceedings of the EACL 2021—16th Conference of the European Chapter of the Association for Computational Linguistics, Kyiv, Ukraine, 19–23 April 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 874–880.

4. Shuster, K.; Poff, S.; Chen, M.; Kiela, D.; Weston, J. Retrieval Augmentation Reduces Hallucination in Conversation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 3784–3803.

5. Zhou, D.; Schärli, N.; Hou, L.; Wei, J.; Scales, N.; Wang, X.; Schuurmans, D.; Cui, C.; Bousquet, O.; Le, Q.V.; et al. Least-to-Most Prompting Enables Complex Reasoning in Large Language Models. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

6. Guu, K.; Lee, K.; Tung, Z.; Pasupat, P.; Chang, M. Retrieval augmented language model pre-training. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 13–18 July 2020; pp. 3929–3938.

7. Borgeaud, S.; Mensch, A.; Hoffmann, J.; Cai, T.; Rutherford, E.; Millican, K.; Van Den Driessche, G.B.; Lespiau, J.B.; Damoc, B.; Clark, A.; et al. Improving language models by retrieving from trillions of tokens. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 2206–2240.

8. Petroni, F.; Rocktäschel, T.; Riedel, S.; Lewis, P.S.; Bakhtin, A.; Wu, Y.; Miller, A.H. Language Models as Knowledge Bases? In Proceedings of the EMNLP/IJCNLP, Hong Kong, China, 3–7 November 2019.

9. Dai, D.; Dong, L.; Hao, Y.; Sui, Z.; Chang, B.; Wei, F. Knowledge Neurons in Pretrained Transformers. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1: Long Papers, pp. 8493–8502.

10. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. arXiv 2021, arXiv:2108.07258.

11. Lombardo, G.; Trimigno, G.; Pellegrino, M.; Cagnoni, S. Language Models Fine-Tuning for Automatic Format Reconstruction of SEC Financial Filings. IEEE Access 2024, 12, 31249–31261.

12. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

13. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

14. Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.V.; Chi, E.H.; Narang, S.; Chowdhery, A.; Zhou, D. Self-Consistency Improves Chain of Thought Reasoning in Language Models. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

15. Verma, S. Contextual compression in retrieval-augmented generation for large language models: A survey. arXiv 2024, arXiv:2409.13385.

16. Gao, L.; Madaan, A.; Zhou, S.; Alon, U.; Liu, P.; Yang, Y.; Callan, J.; Neubig, G. Pal: Program-aided language models. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 10764–10799.

17. Hang, C.N.; Yu, P.D.; Tan, C.W. TrumorGPT: Graph-Based Retrieval-Augmented Large Language Model for Fact-Checking. IEEE Trans. Artif. Intell. 2025, 6, 3148–3162.

18. Adosoglou, G.; Park, S.; Lombardo, G.; Cagnoni, S.; Pardalos, P.M. Lazy Network: A Word Embedding-Based Temporal Financial Network to Avoid Economic Shocks in Asset Pricing Models. Complexity 2022, 2022, 9430919.

19. Liu, F.; AlDahoul, N.; Eady, G.; Zaki, Y.; Rahwan, T. Self-Reflection Makes Large Language Models Safer, Less Biased, and Ideologically Neutral. arXiv 2024, arXiv:2406.10400.

20. Lombardo, G.; Tomaiuolo, M.; Mordonini, M.; Codeluppi, G.; Poggi, A. Mobility in unsupervised word embeddings for knowledge extraction—The scholars’ trajectories across research topics. Future Internet 2022, 14, 25.

21. Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Raileanu, R.; Lomeli, M.; Hambro, E.; Zettlemoyer, L.; Cancedda, N.; Scialom, T. Toolformer: Language models can teach themselves to use tools. Adv. Neural Inf. Process. Syst. 2023, 36, 68539–68551.

22. Chen, W.; Ma, X.; Wang, X.; Cohen, W.W. Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks. arXiv 2023.

23. Shinn, N.; Cassano, F.; Gopinath, A.; Narasimhan, K.; Yao, S. Reflexion: Language agents with verbal reinforcement learning. Adv. Neural Inf. Process. Syst. 2023, 36, 8634–8652.

24. Du, Y.; Li, S.; Torralba, A.; Tenenbaum, J.B.; Mordatch, I. Improving factuality and reasoning in language models through multiagent debate. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

25. Li, G.; Hammoud, H.; Itani, H.; Khizbullin, D.; Ghanem, B. Camel: Communicative agents for “mind” exploration of large language model society. Adv. Neural Inf. Process. Syst. 2023, 36, 51991–52008.

26. Roitero, K.; Wright, D.; Soprano, M.; Augenstein, I.; Mizzaro, S. Efficiency and Effectiveness of LLM-Based Summarization of Evidence in Crowdsourced Fact-Checking. In Proceedings of the SIGIR’25, New York, NY, USA, 13–18 July 2025; pp. 457–467.

27. Angiani, G.; Fornacciari, P.; Lombardo, G.; Poggi, A.; Tomaiuolo, M. Actors based agent modelling and simulation. In Highlights of Practical Applications of Agents, Multi-Agent Systems, and Complexity: The PAAMS Collection, Proceedings of the International Conference on Practical Applications of Agents and Multi-Agent Systems, Toledo, Spain, 20–22 June 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 443–455.

28. Qi, P.; Zhang, Y.; Zhang, Y.; Bolton, J.; Manning, C.D. Stanza: A Python Natural Language Processing Toolkit for Many Human Languages. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Seattle, WA, USA, 5–10 July 2020.

29. Cheng, J.; Liu, X.; Zheng, K.; Ke, P.; Wang, H.; Dong, Y.; Tang, J.; Huang, M. Black-Box Prompt Optimization: Aligning Large Language Models without Model Training. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; Volume 1: Long Papers, pp. 3201–3219.

30. He, P.; Gao, J.; Chen, W. DeBERTaV3: Improving DeBERTa using ELECTRA-Style Pre-Training with Gradient-Disentangled Embedding Sharing. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

31. Diera, A.; Galke, L.; Karl, F.; Scherp, A. Efficient Continual Learning for Small Language Models with a Discrete Key-Value Bottleneck. arXiv 2024, arXiv:2412.08528.

32. Lai, G.; Xie, Q.; Liu, H.; Yang, Y.; Hovy, E. RACE: Large-scale ReAding Comprehension Dataset From Examinations. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 785–794.

33. Richardson, M.; Burges, C.J.; Renshaw, E. MCTest: A Challenge Dataset for the Open-Domain Machine Comprehension of Text. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 193–203.

34. Pang, R.Y.; Khashabi, D.; Chen, W.t.; Sabharwal, A.; Clark, P.; Jia, R. QuALITY: Question Answering with Long Input Texts, Yes! In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 4599–4619.

35. Bajaj, P.; Campos, D.; Craswell, N.; Deng, L.; Gao, J.; Liu, X.; Majumder, R.; McNamara, A.; Mitra, B.; Nguyen, T.; et al. MS MARCO: A human generated machine reading comprehension dataset. In Proceedings of the Workshop on Cognitive Computing (ICRC), San Diego, CA, USA, 17–19 October 2016.

36. Cao, S.; Wang, L. HIBRIDS: Attention with Hierarchical Biases for Structure-aware Long Document Summarization. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Volume 1: Long Papers, pp. 786–807.

37. Huang, L.; Cao, S.; Parulian, N.; Ji, H.; Wang, L. Efficient Attentions for Long Document Summarization. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 1419–1436.

38. Sellam, T.; Das, D.; Parikh, A. BLEURT: Learning Robust Metrics for Text Generation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7881–7892.

39. Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out, Proceedings of the ACL-04 Workshop, Barcelona, Spain, 25–26 July 2004; Anthology ID: W04-1013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2004; pp. 74–81.

40. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, ACL’02, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

41. Lavie, A.; Agarwal, A. Meteor: An automatic metric for MT evaluation with high levels of correlation with human judgments. In Proceedings of the Second Workshop on Statistical Machine Translation, StatMT’07, Prague, Czech Republic, 23 June 2007; pp. 228–231.

42. Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. arXiv 2019, arXiv:1904.09675.

43. Warner, B.; Chaffin, A.; Clavié, B.; Weller, O.; Hallström, O.; Taghadouini, S.; Gallagher, A.; Biswas, R.; Ladhak, F.; Aarsen, T.; et al. Smarter, better, faster, longer: A modern bidirectional encoder for fast, memory efficient, and long context finetuning and inference. arXiv 2024, arXiv:2412.13663.

44. Boizard, N.; Gisserot-Boukhlef, H.; Alves, D.M.; Martins, A.; Hammal, A.; Corro, C.; Hudelot, C.; Malherbe, E.; Malaboeuf, E.; Jourdan, F.; et al. EuroBERT: Scaling multilingual encoders for European languages. arXiv 2025, arXiv:2503.05500.

45. Guo, M.; Ainslie, J.; Uthus, D.C.; Ontanon, S.; Ni, J.; Sung, Y.H.; Yang, Y. LongT5: Efficient Text-To-Text Transformer for Long Sequences. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2022, Seattle, WA, USA, 10–15 July 2022; pp. 724–736.

46. Phang, J.; Zhao, Y.; Liu, P.J. Investigating Efficiently Extending Transformers for Long Input Summarization. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 3946–3961.

47. Qwen; Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2.5 Technical Report. arXiv 2025, arXiv:2412.15115.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).