Detection and Mitigation in IoT Ecosystems Using oneM2M Architecture and Edge-Based Machine Learning

Abstract

1. Introduction

- We deployed an edge-based DDoS detector tightly integrated with oneM2M, enabling on-device, per-attack responses in a smart-home setting.

- We combined packet-level features with system signals (CPU/memory/traffic) to improve robustness under resource contention.

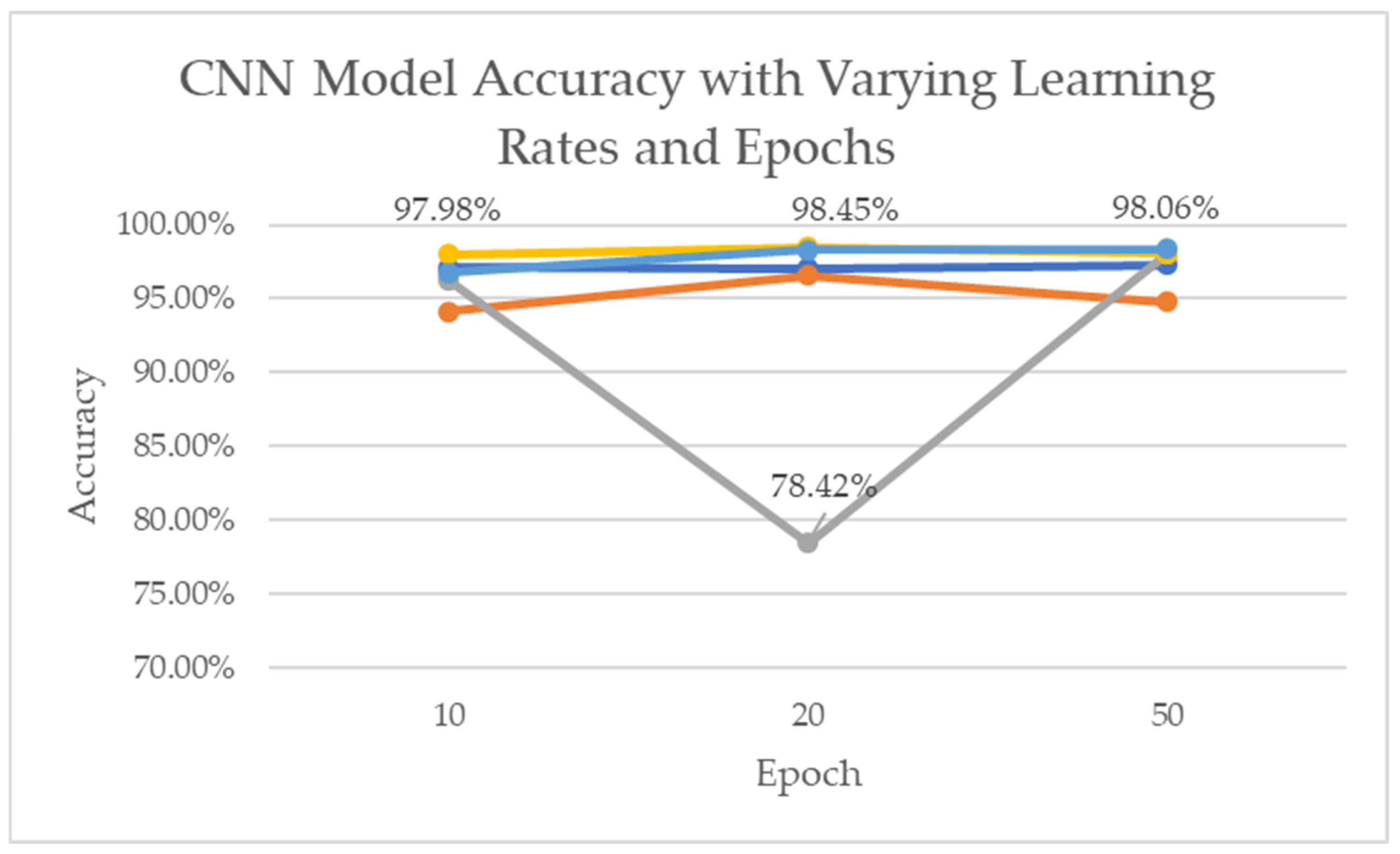

- We designed an efficient 2D-CNN that achieves 98.45% accuracy with low inference overhead on edge hardware, outperforming the LSTM and Decision Tree models in our tests.

- We demonstrated attack-specific mitigations (SYN cookies and iptables rate limits) and quantified end-to-end impact under SYN/UDP/ICMP floods.
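The attack-specific mitigations named in the last contribution can be pictured as a lookup from the predicted attack class to host-level commands. The sketch below is illustrative only and is not taken from the paper: the class-to-mitigation mapping, the rate-limit values, and the helper names `rate_limit_rules` and `plan_mitigation` are assumptions. Only the `net.ipv4.tcp_syncookies` sysctl and the iptables `limit` match are standard Linux facilities. Commands are returned as argument lists and are never executed here.

```python
from typing import Dict, List

# Hypothetical rate limits; the source does not state the values it used.
UDP_LIMIT = "100/second"
ICMP_LIMIT = "10/second"


def rate_limit_rules(proto: str, limit: str) -> List[List[str]]:
    """Accept packets of `proto` up to `limit`, then drop the excess."""
    return [
        ["iptables", "-A", "INPUT", "-p", proto,
         "-m", "limit", "--limit", limit, "-j", "ACCEPT"],
        ["iptables", "-A", "INPUT", "-p", proto, "-j", "DROP"],
    ]


# Assumed mapping from predicted class to mitigation commands.
MITIGATIONS: Dict[str, List[List[str]]] = {
    "SYN flood": [["sysctl", "-w", "net.ipv4.tcp_syncookies=1"]],
    "UDP flood": rate_limit_rules("udp", UDP_LIMIT),
    "ICMP flood": rate_limit_rules("icmp", ICMP_LIMIT),
}


def plan_mitigation(attack_type: str) -> List[List[str]]:
    """Return the commands for a predicted class; none for normal traffic."""
    return MITIGATIONS.get(attack_type, [])
```

Keeping the plan as data separates the decision (which class was detected) from the privileged step of applying the rules, which a deployment would presumably run under its own access controls.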

2. Related Work

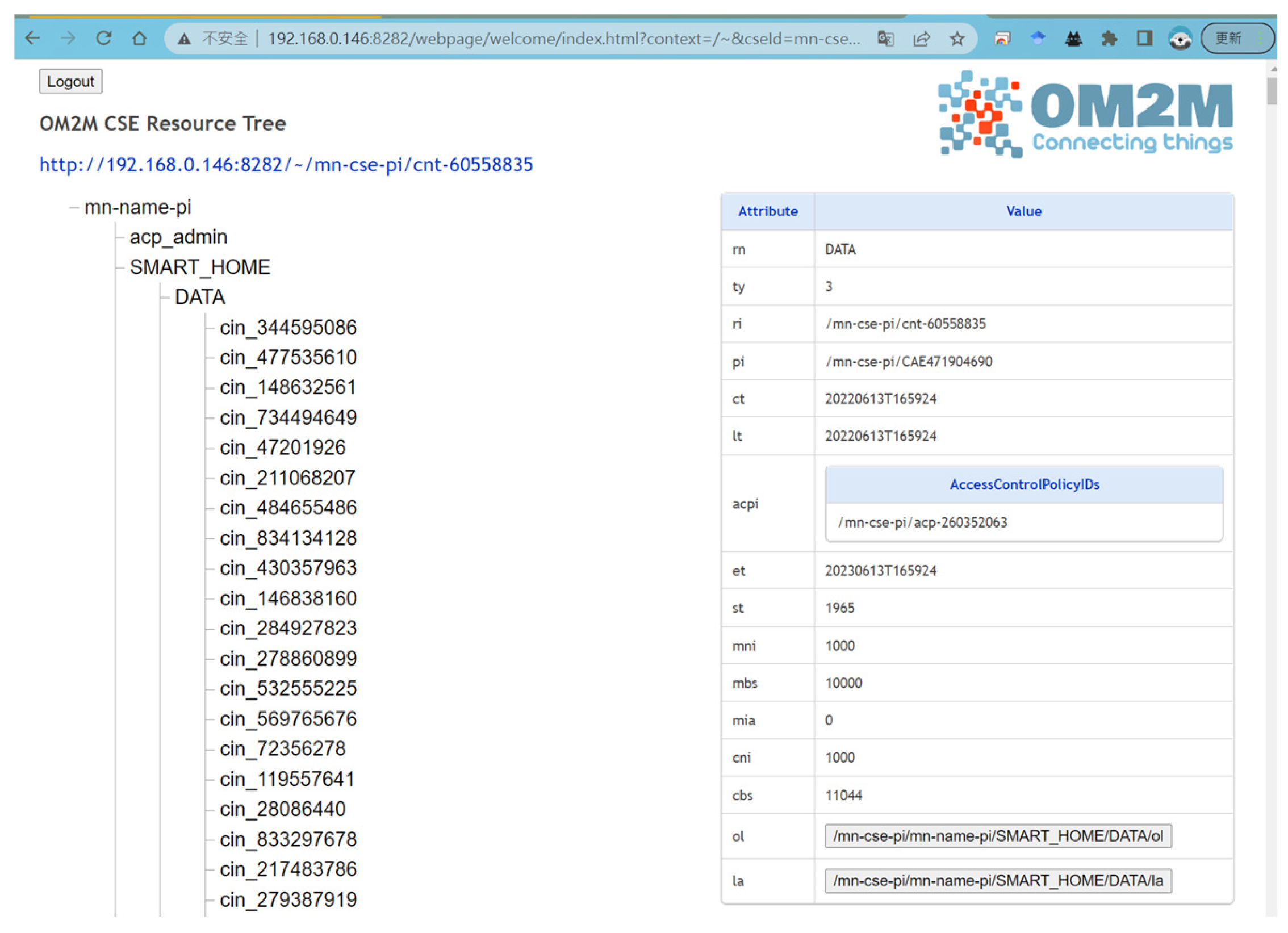

2.1. oneM2M

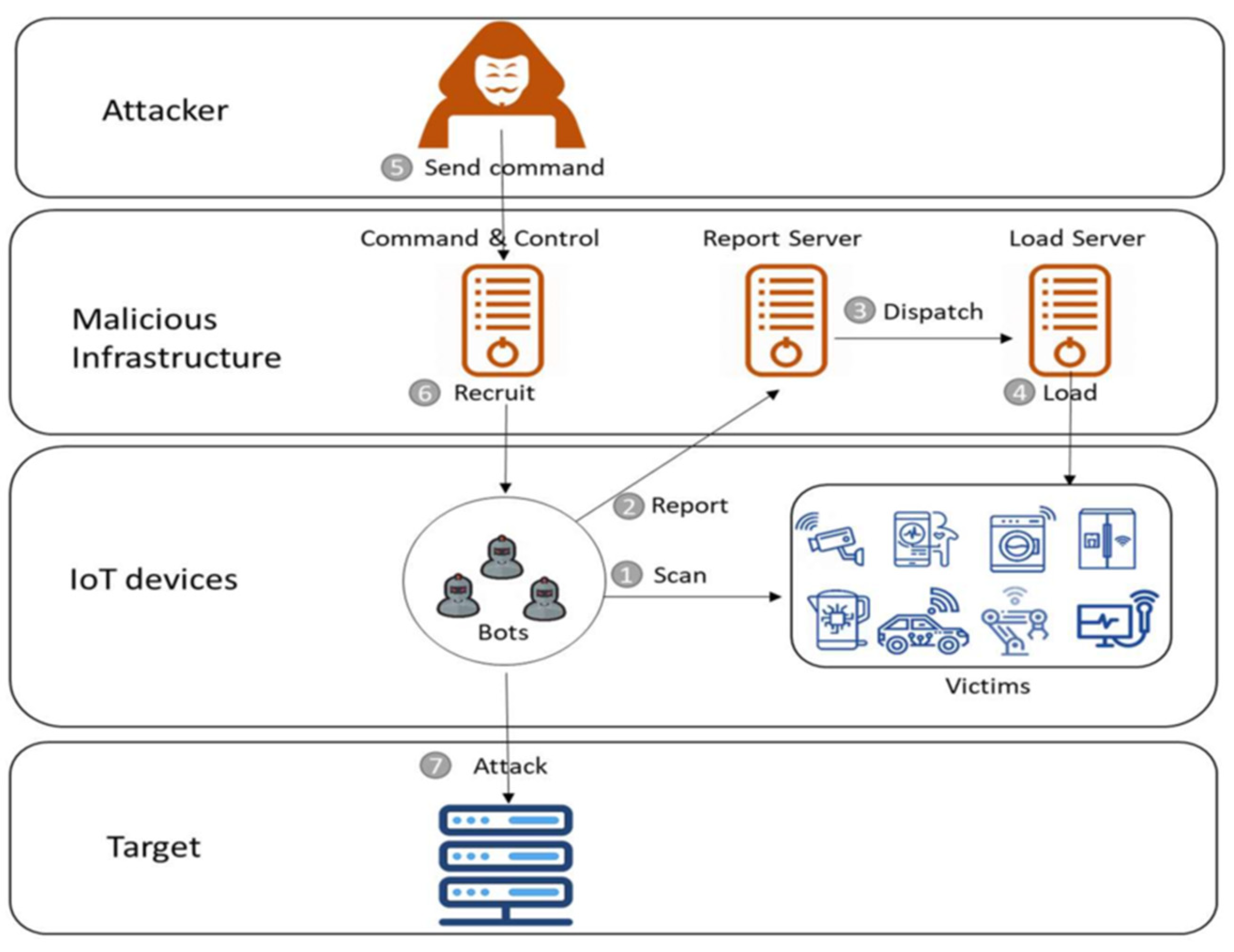

2.2. DDoS

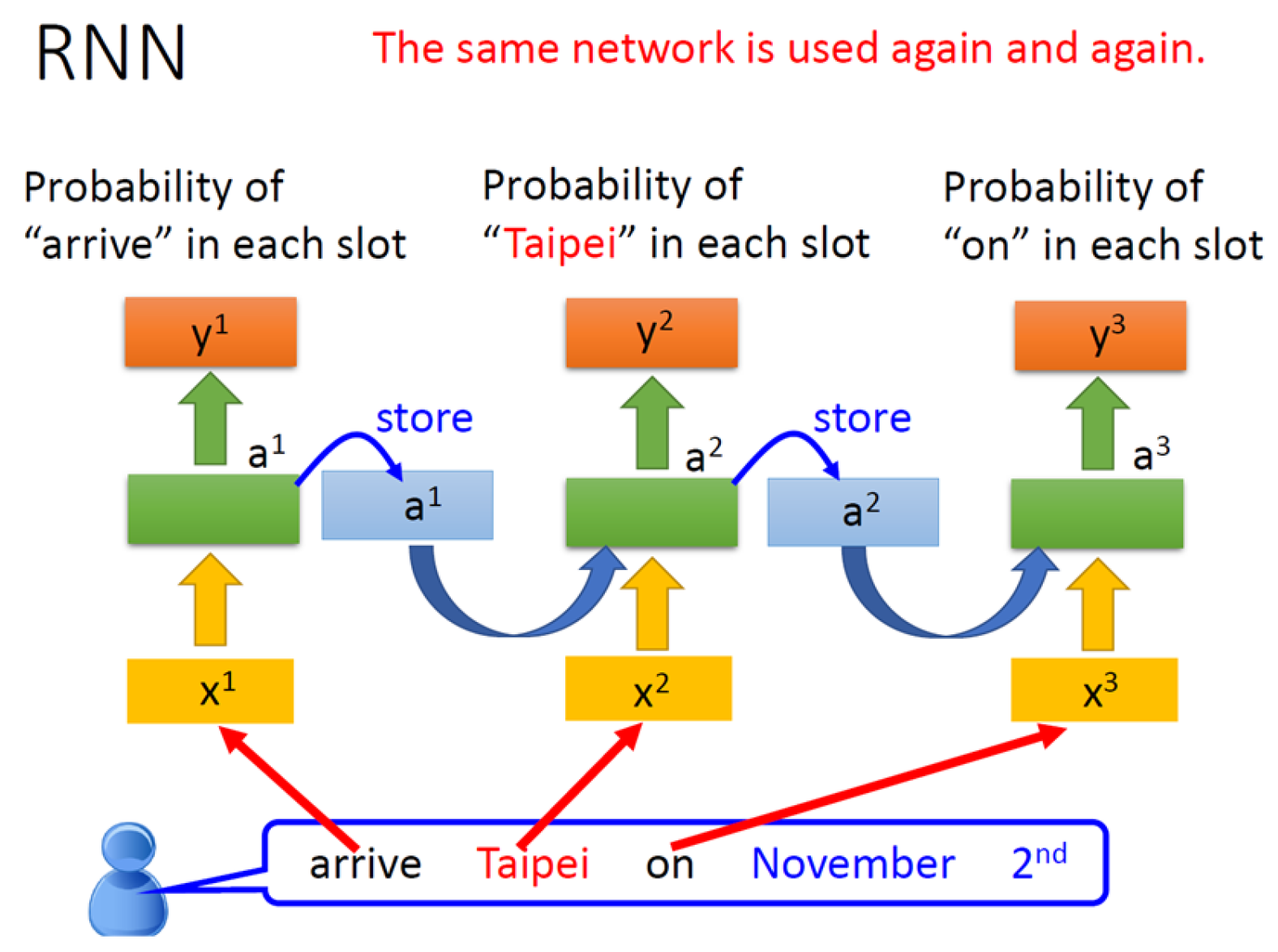

2.3. LSTM

2.4. Recent Baselines and Our Positioning

3. Materials and Methods

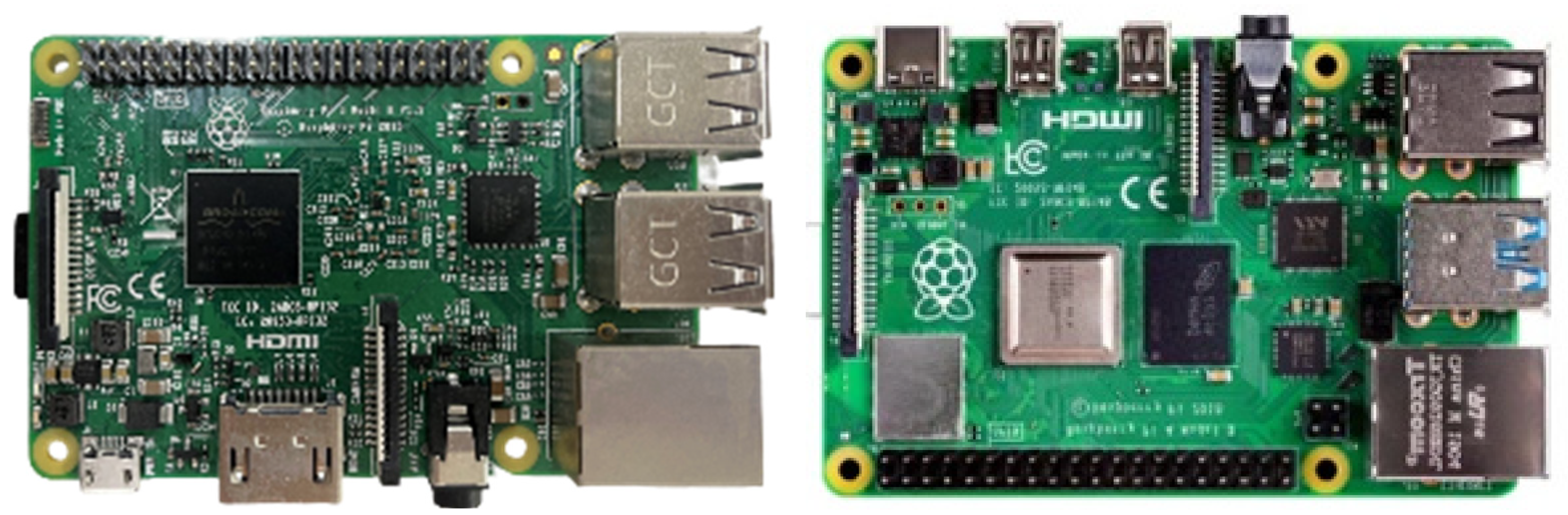

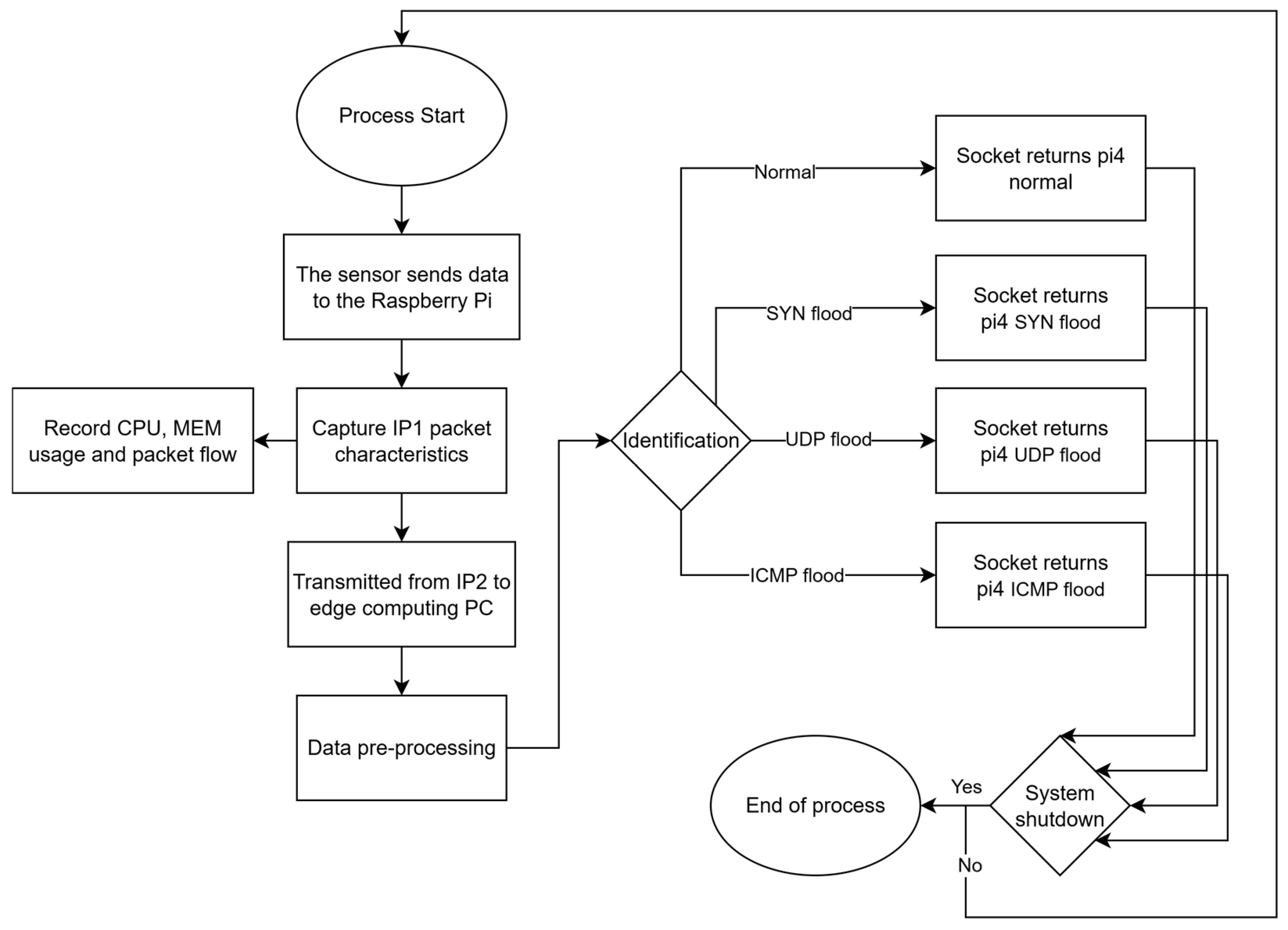

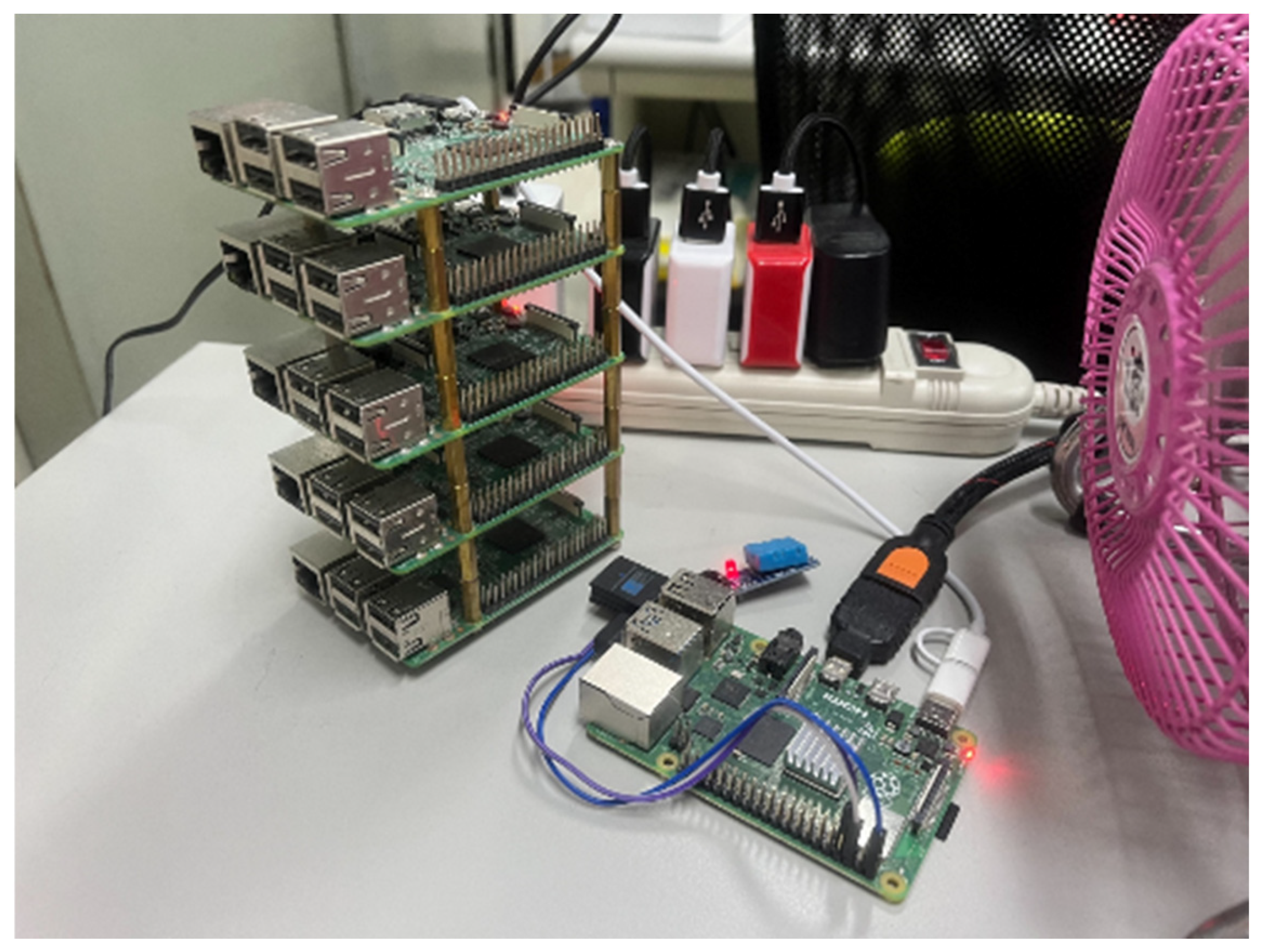

3.1. System Architecture

3.2. Real-Time Detection System Architecture

Theory and Rationale

3.3. Dataset and Model Training

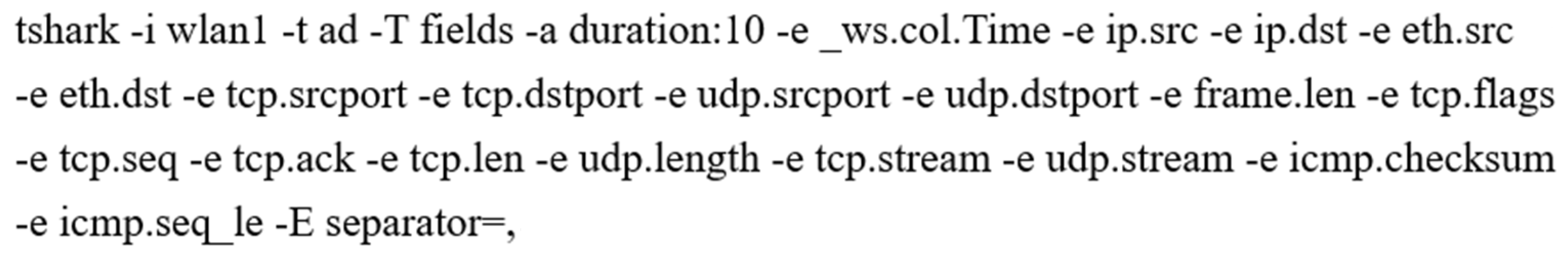

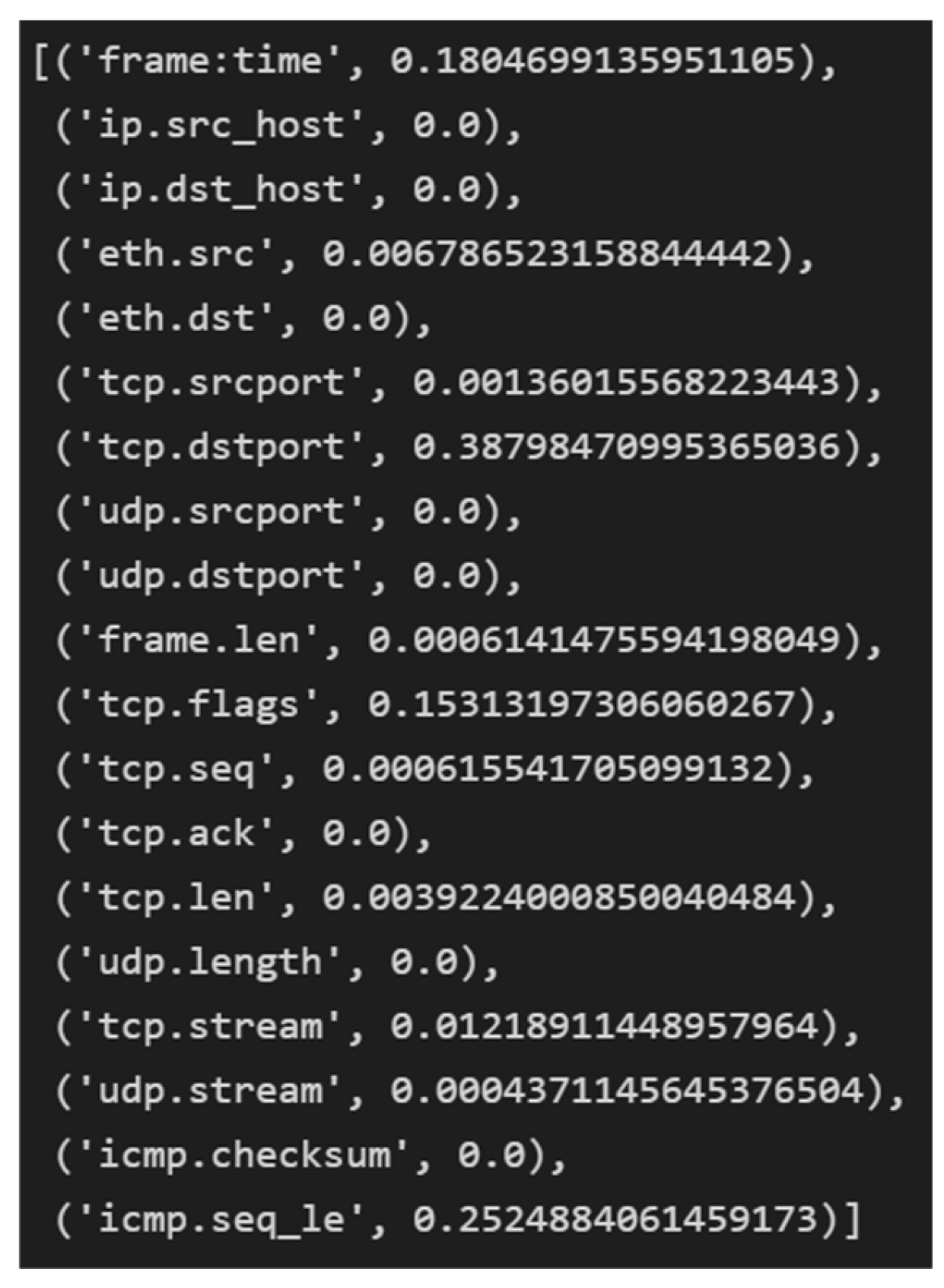

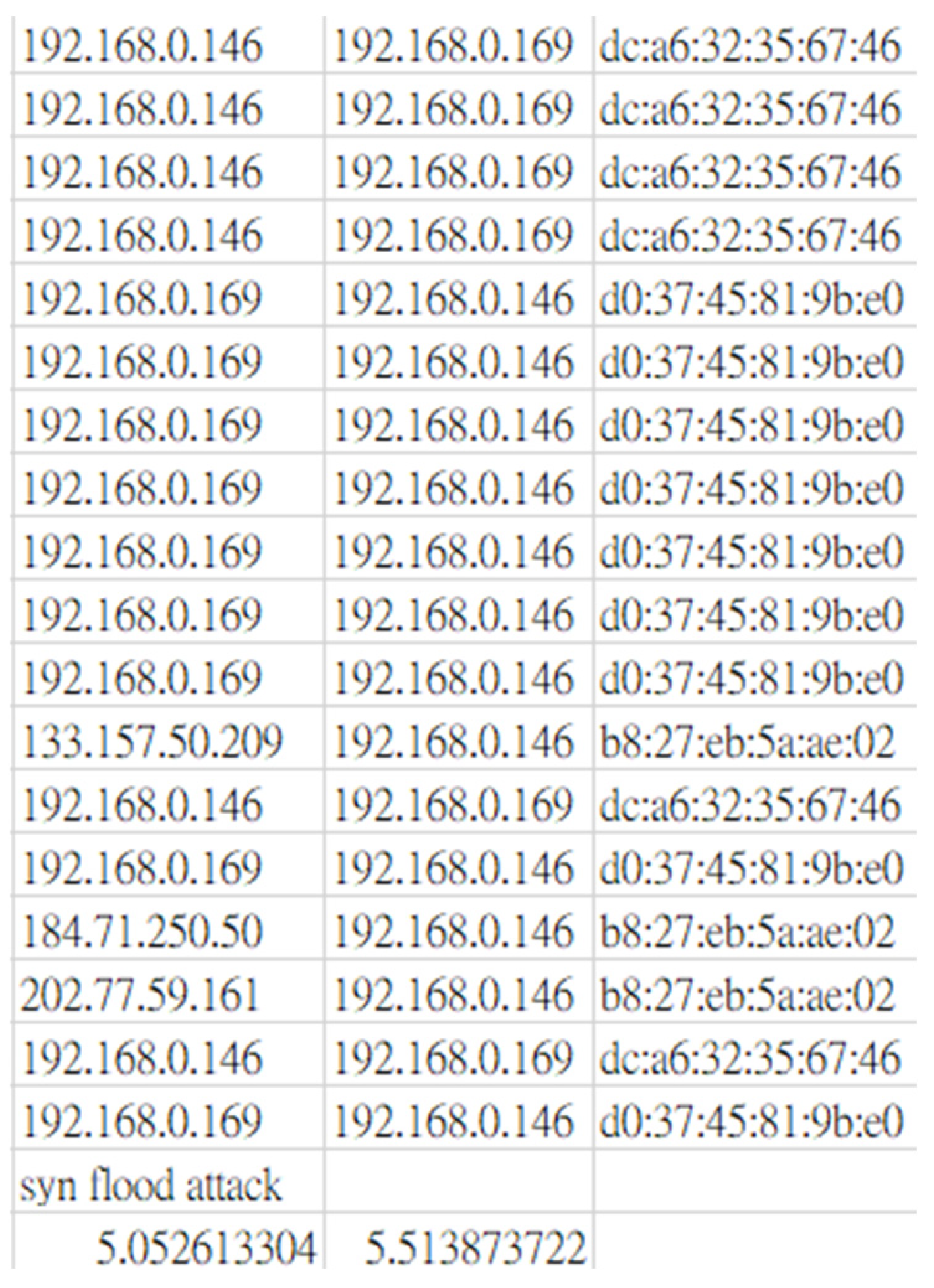

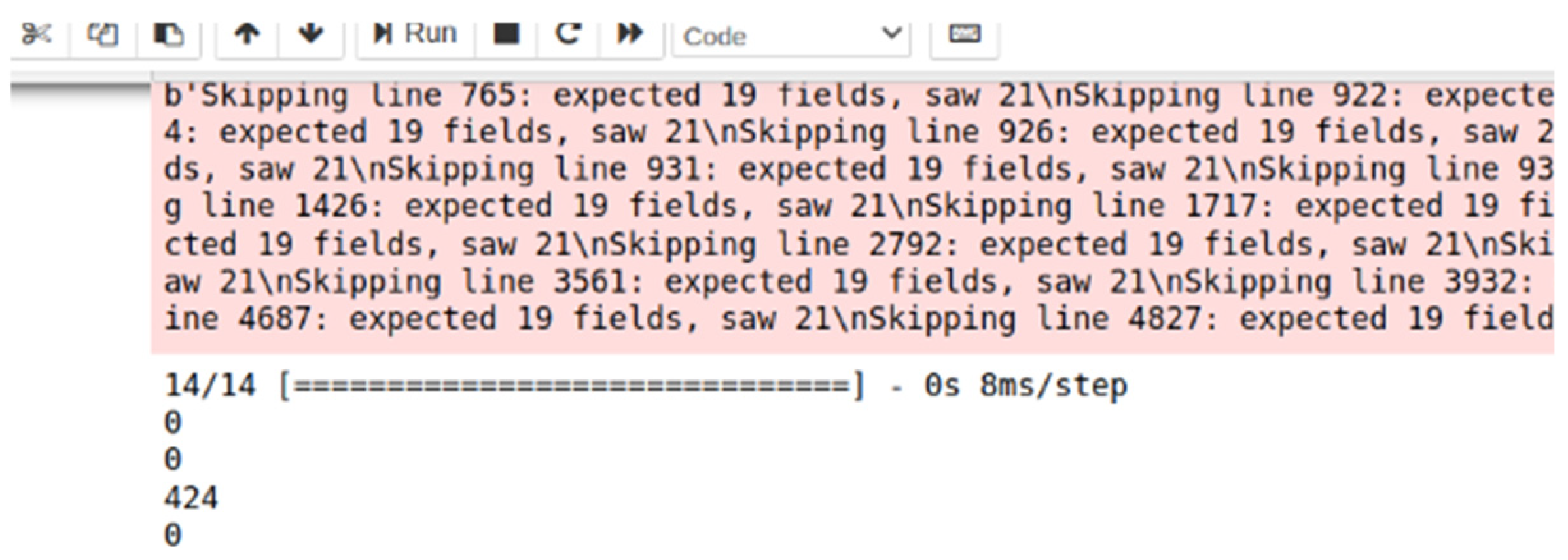

3.4. Packet Capture and Dataset Construction

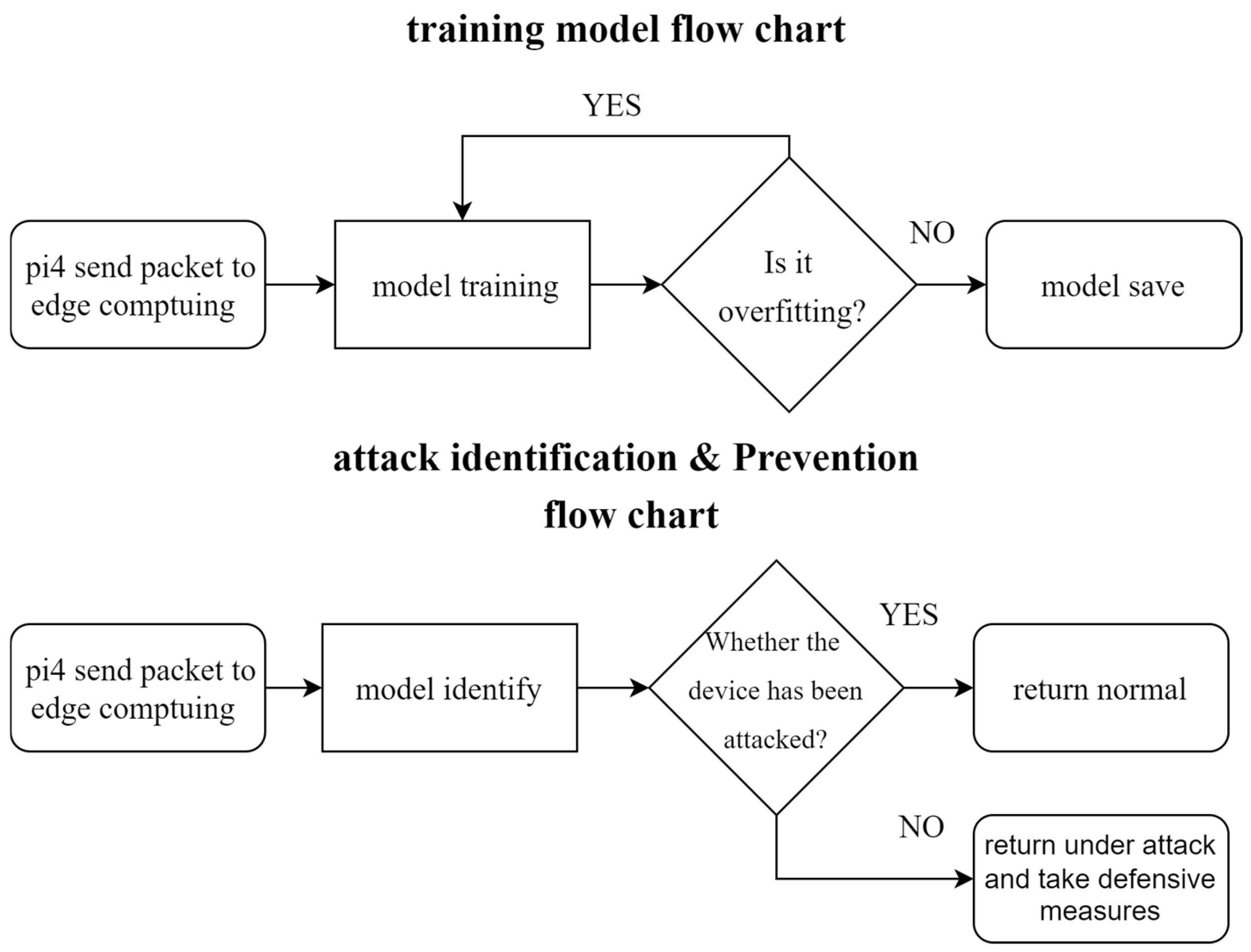

3.5. Model Training

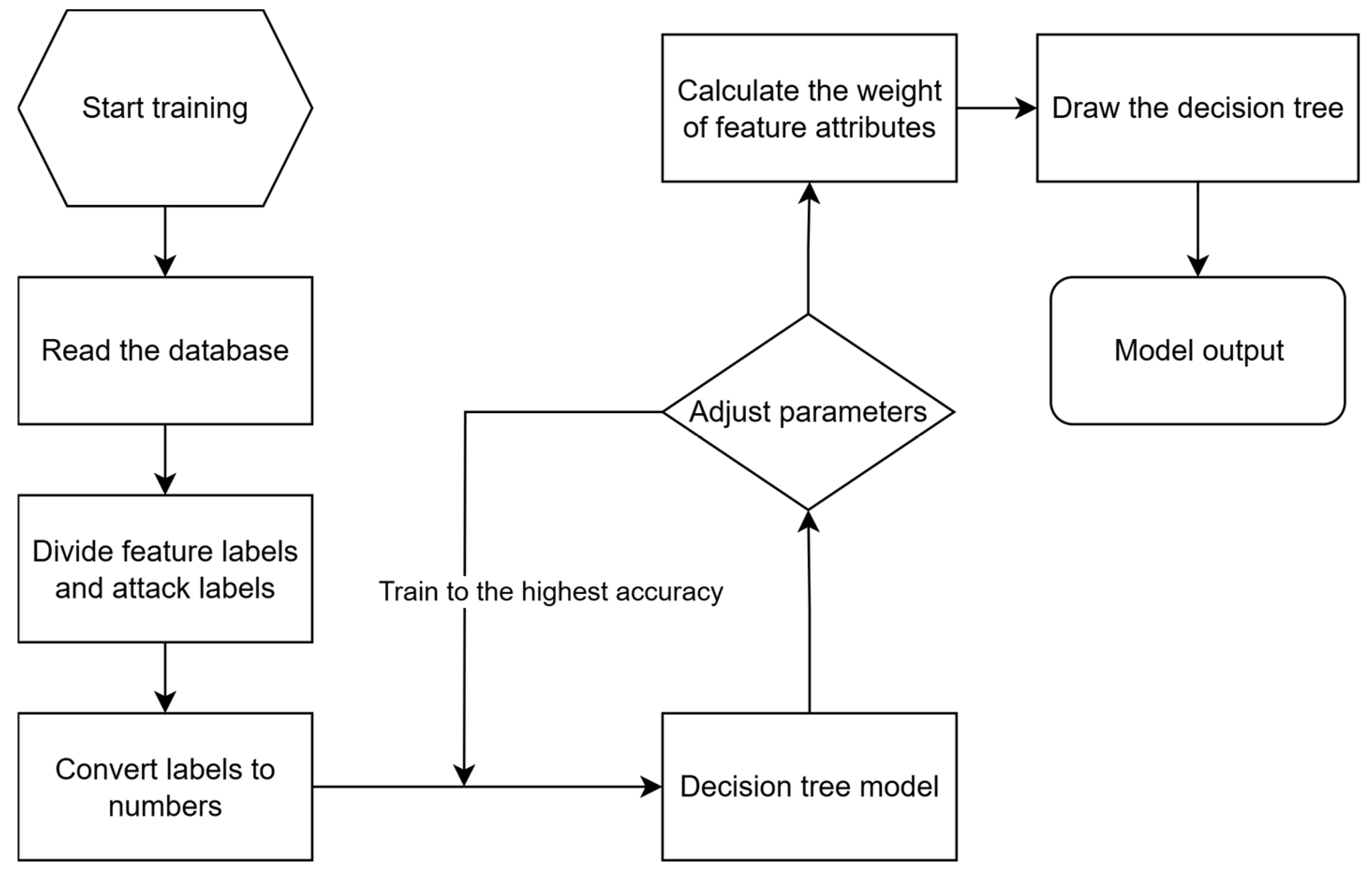

3.5.1. Decision Tree (DT)

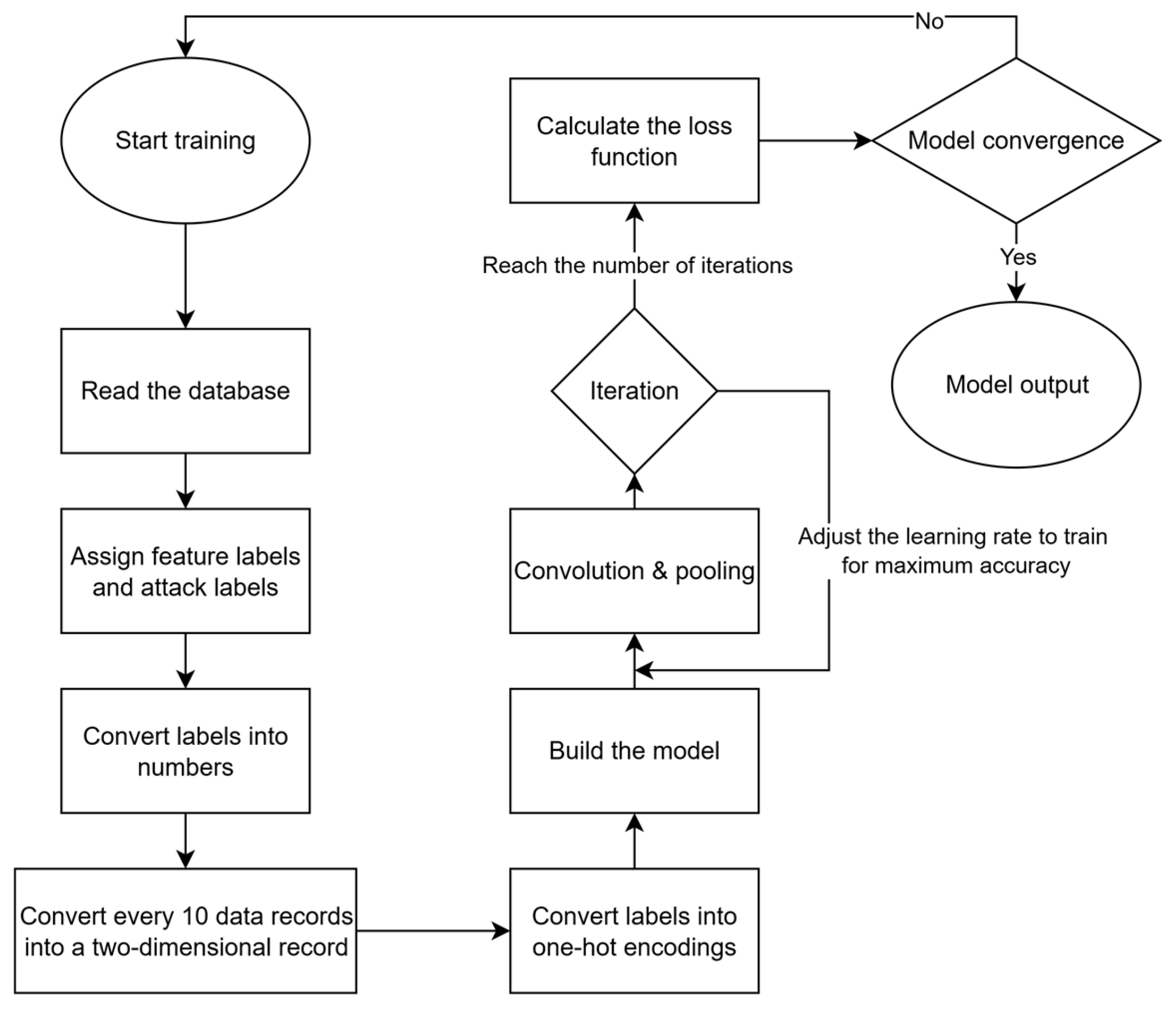

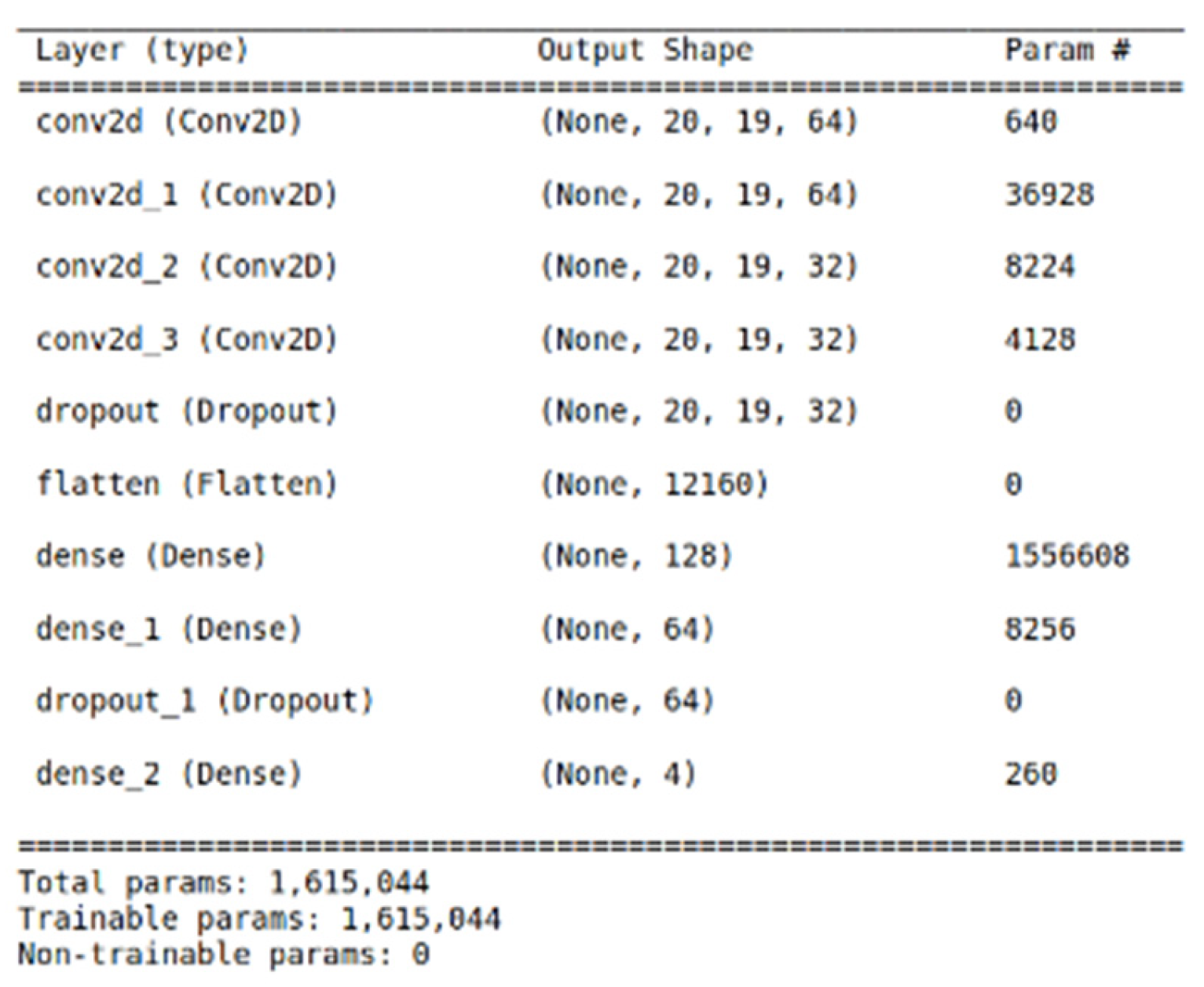

3.5.2. Convolutional Neural Network (CNN)

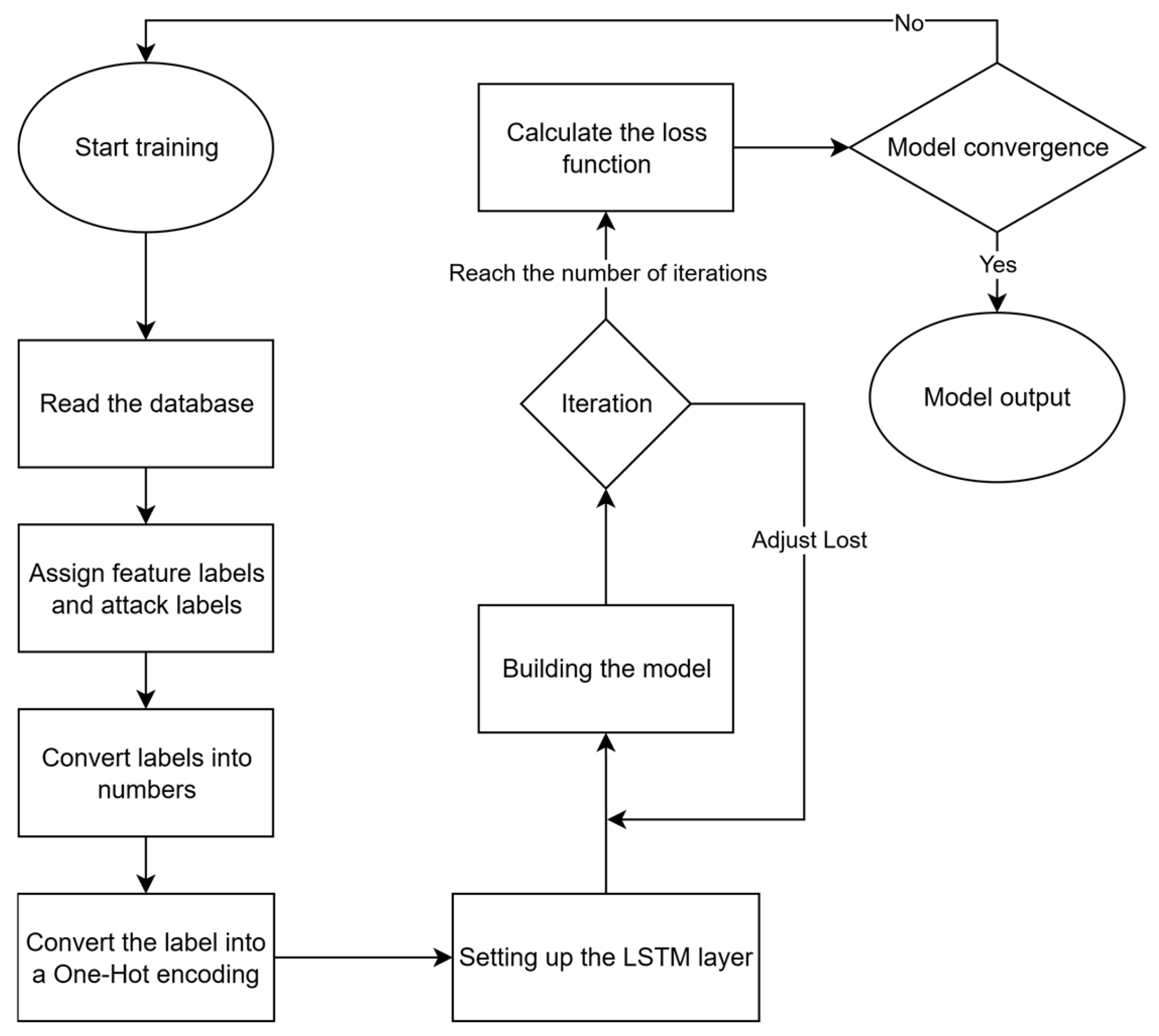

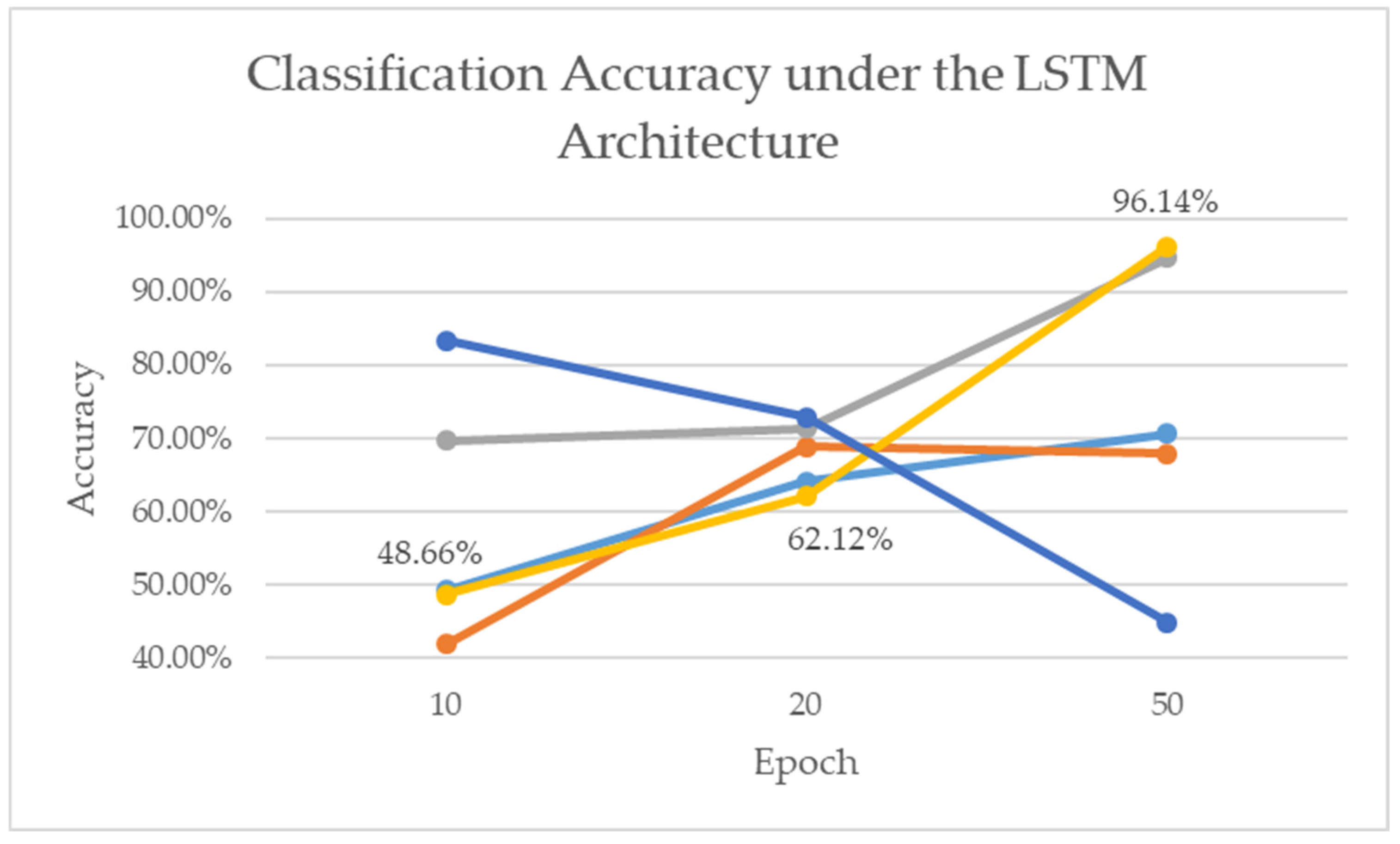

3.5.3. Long Short-Term Memory (LSTM)

4. Results

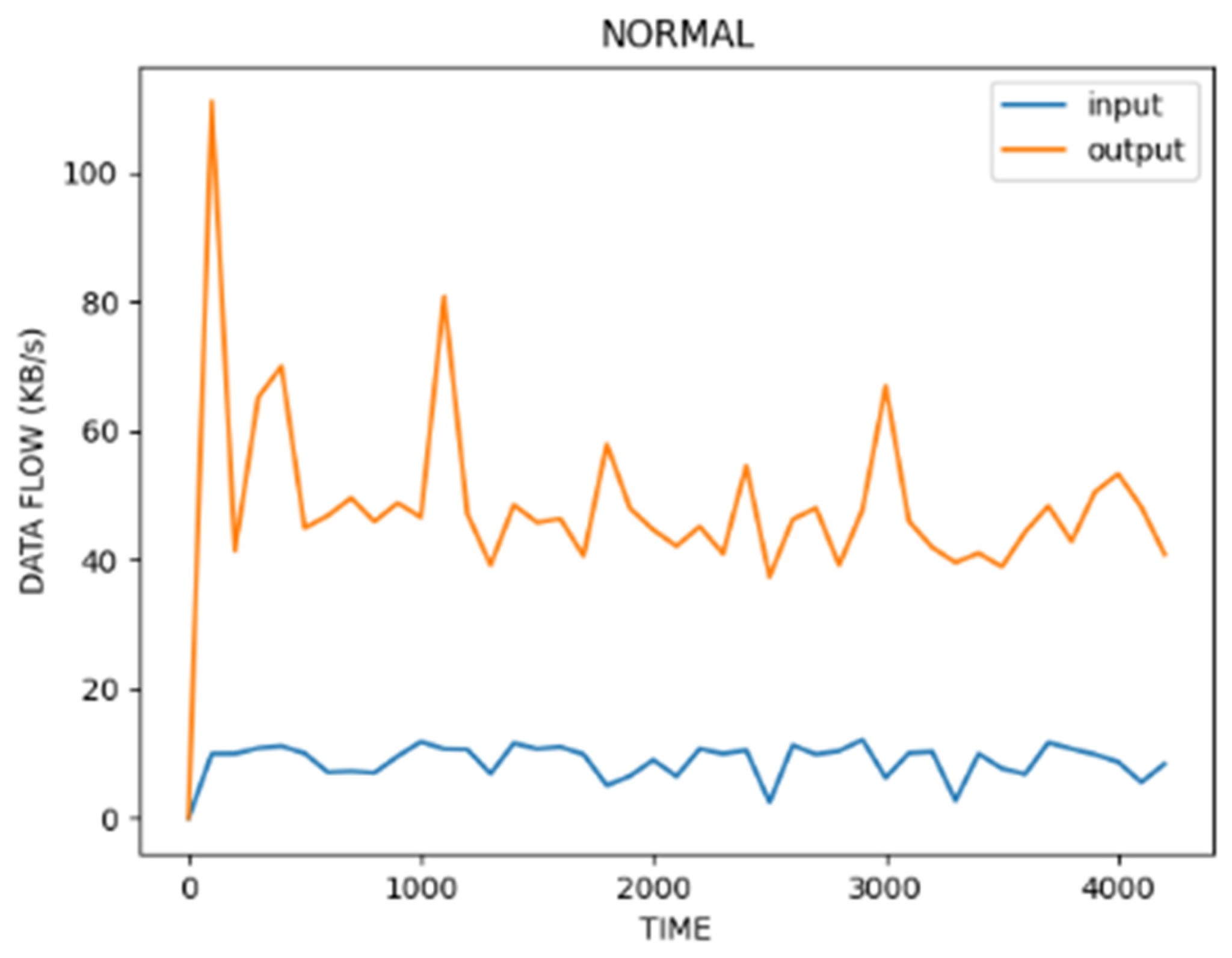

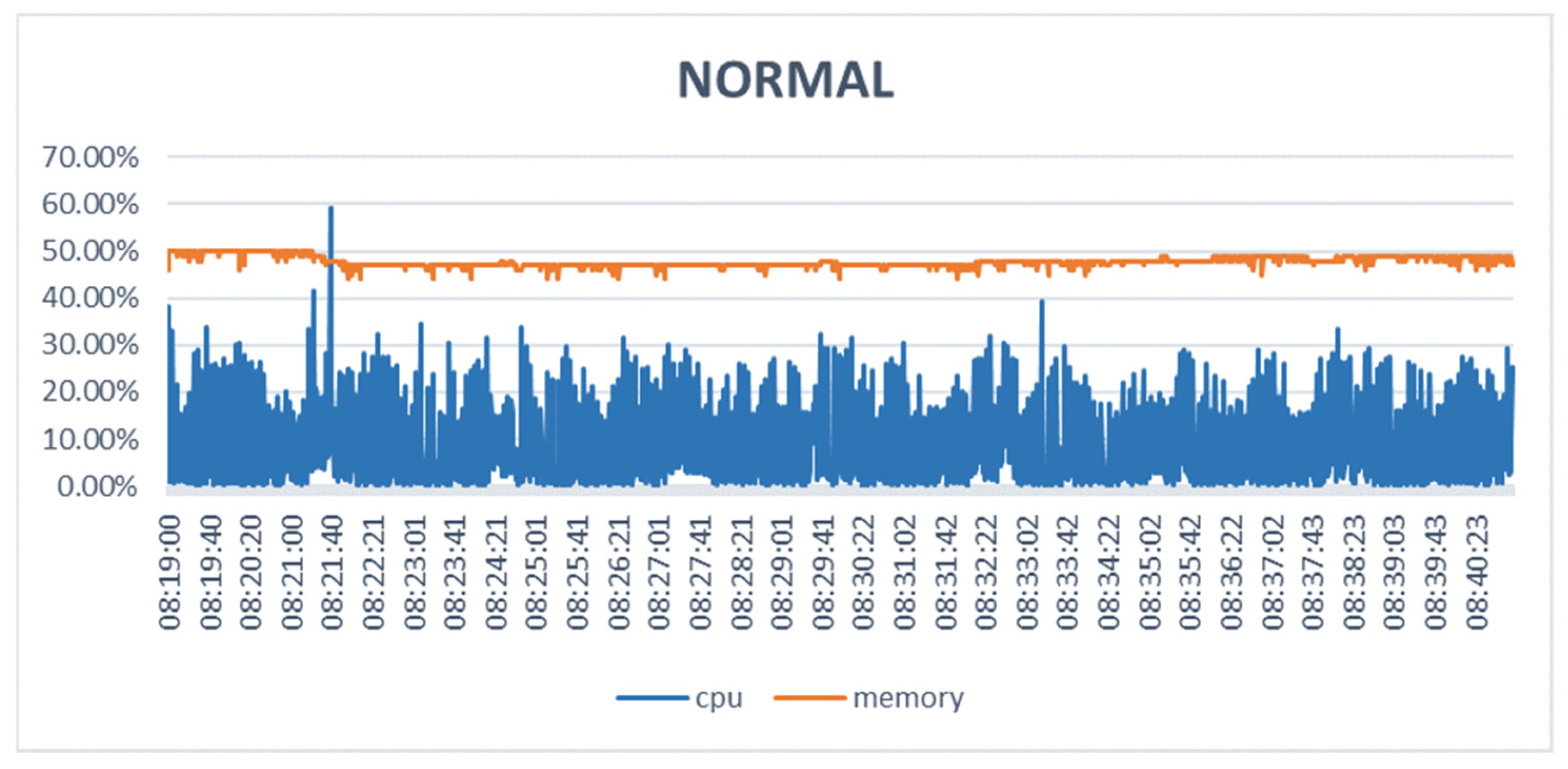

4.1. Data Transmission from IoT Sensing Node

4.2. Data Access

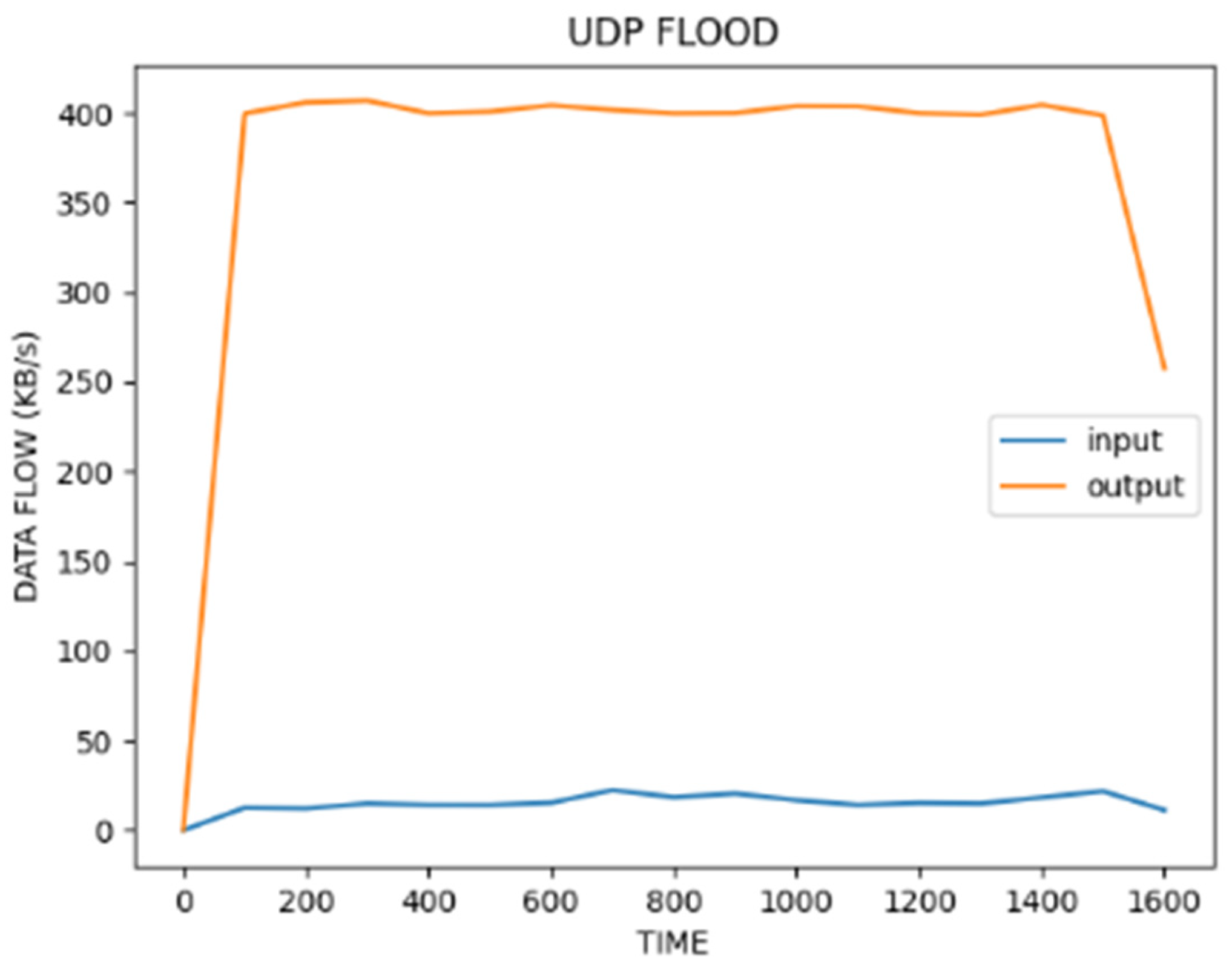

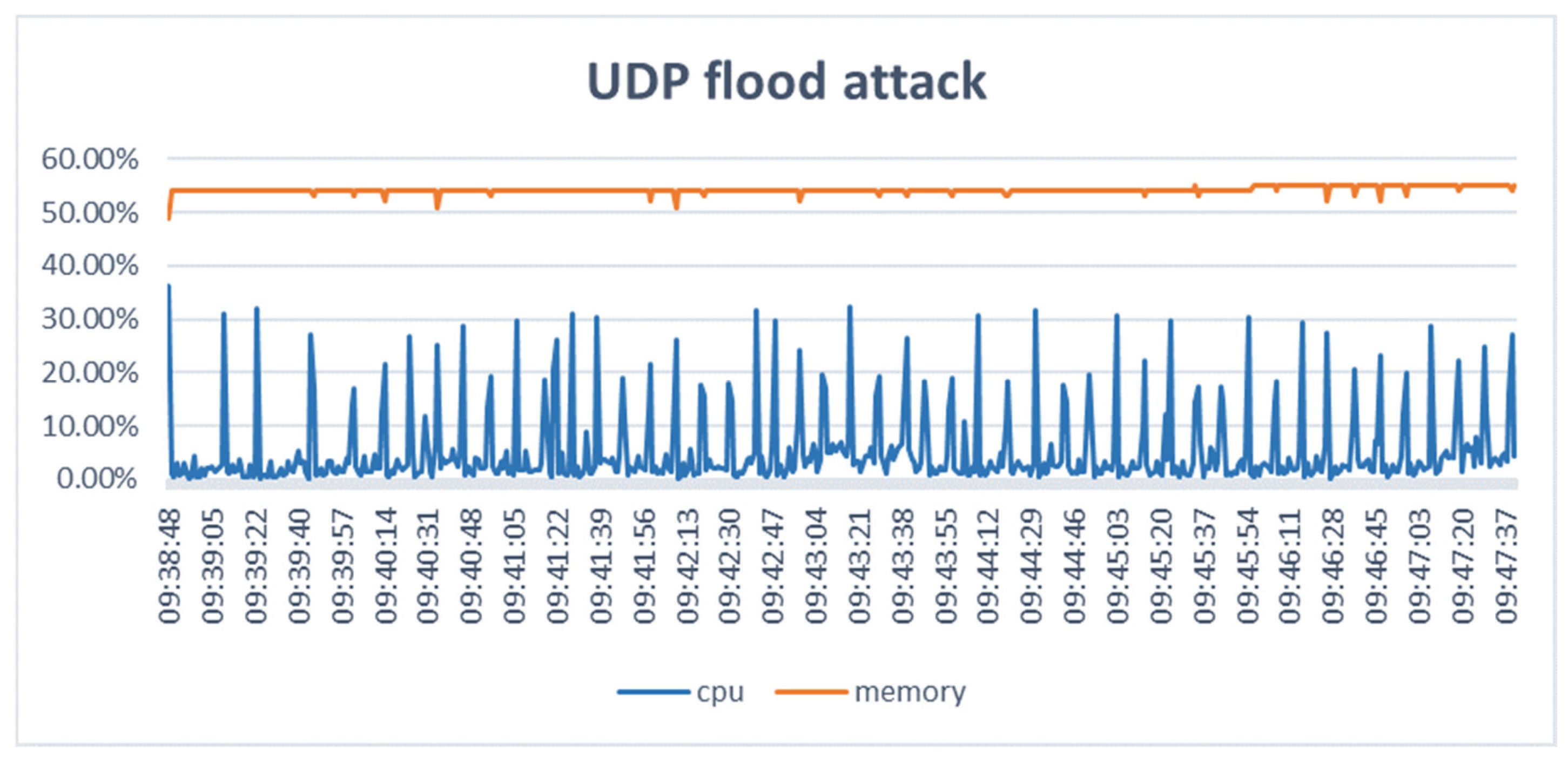

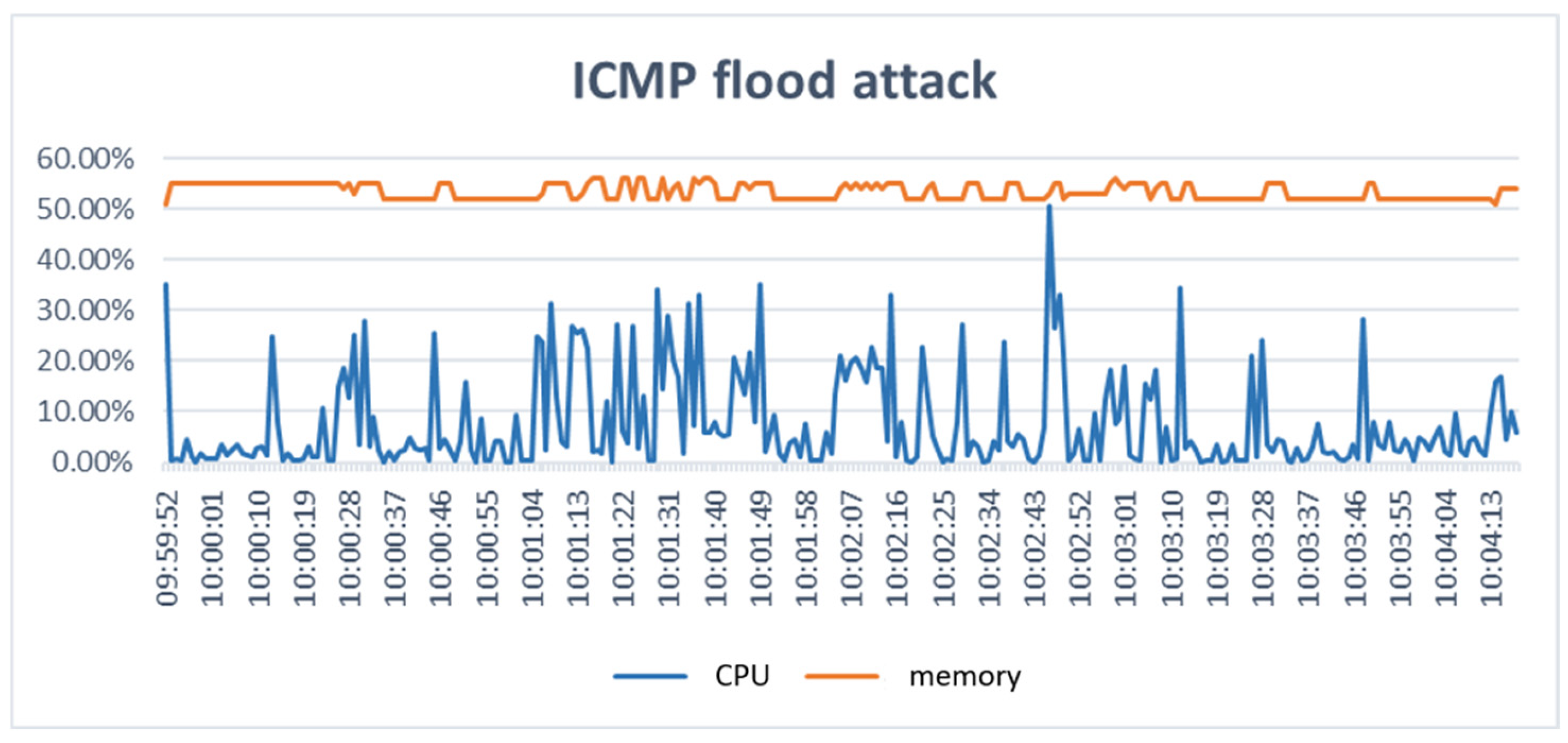

4.3. DoS Attack Architecture and Its Impact on the Server

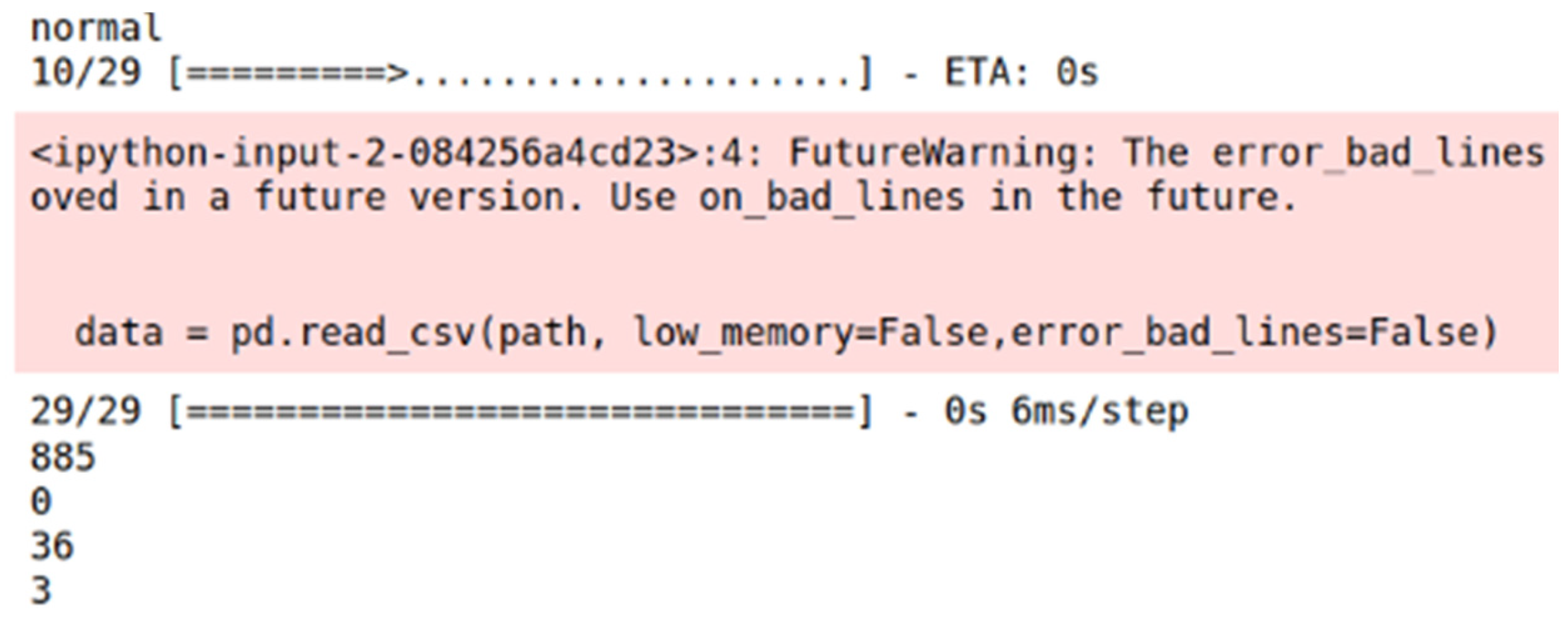

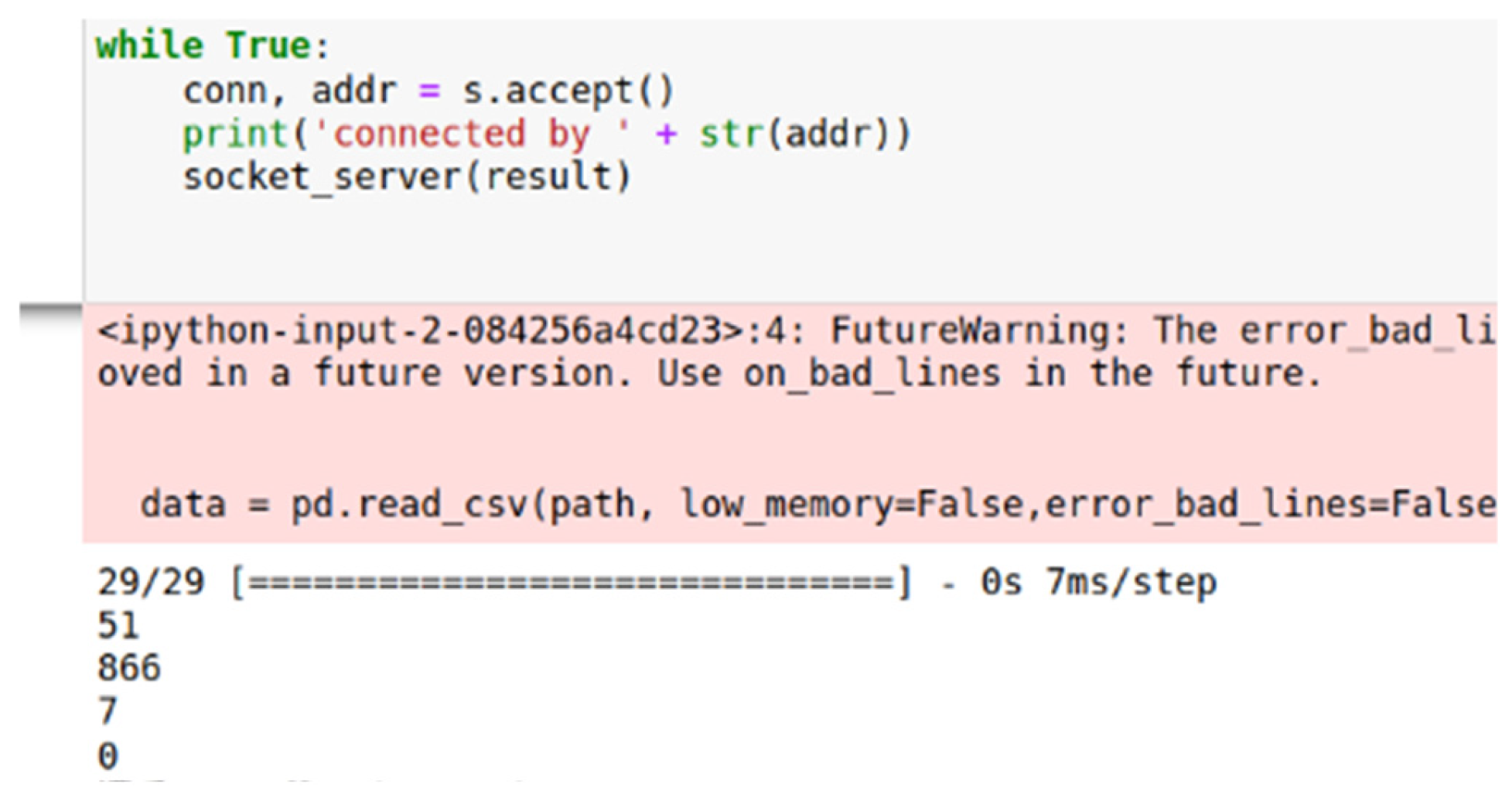

4.4. Edge Computing System and Model Training Process

4.4.1. Decision Tree Model

4.4.2. Two-Dimensional Convolutional Neural Network (2D-CNN)

4.4.3. LSTM Model

4.5. Practical Application

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. | Name | Protocol | Type | Description |
|---|---|---|---|---|
| 1 | frame.time | Frame | Date and time | The timestamp when the packet was received. |
| 2 | ip.src_host | IP | Character string | The IP address of the source host. |
| 3 | ip.dst_host | IP | Character string | The IP address of the destination host. |
| 4 | eth.src | MAC | Character string | The MAC address of the sending device. |
| 5 | eth.dst | MAC | Character string | The MAC address of the receiving device. |
| 6 | tcp.srcport | TCP | Unsigned integer | The source port number of the TCP packet. |
| 7 | tcp.dstport | TCP | Unsigned integer | The destination port number of the TCP packet. |
| 8 | udp.srcport | UDP | Unsigned integer | The source port number of the UDP packet. |
| 9 | udp.dstport | UDP | Unsigned integer | The destination port number of the UDP packet. |
| 10 | frame.len | Frame | Unsigned integer | The total length of the packet in bytes. |
| 11 | tcp.flags | TCP | Label | The control flags set in the TCP header. |
| 12 | tcp.seq | TCP | Unsigned integer | The sequence number assigned to the TCP segment. |
| 13 | tcp.ack | TCP | Unsigned integer | The acknowledgment number, indicating the next expected byte. |
| 14 | tcp.len | TCP | Unsigned integer | The length of the TCP segment payload in bytes. |
| 15 | udp.length | UDP | Unsigned integer | The total length of the UDP packet. |
| 16 | tcp.stream | TCP | Unsigned integer | The stream identifier for the TCP connection. |
| 17 | udp.stream | UDP | Unsigned integer | The stream identifier for the UDP flow. |
| 18 | icmp.checksum | ICMP | Unsigned integer | The checksum value used for error detection. |
| 19 | icmp.seq_le | ICMP | Unsigned integer | The sequence number in ICMP echo requests or replies. |
| 20 | Attack_label | / | Number | Binary label: 0 for normal traffic and 1 for attack traffic. |
| 21 | Attack_type | / | Character string | The specific type of attack (e.g., SYN flood, UDP flood, or ICMP flood). |
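As a rough illustration of how rows with these fields might be turned into model inputs, the sketch below converts one record (a mapping from field name to text, as a CSV reader would produce) into a numeric vector and a label. It is an assumption-laden sketch rather than the authors' preprocessing: the choice of numeric fields, the zero-fill for fields that are empty because the packet uses another protocol, and the helper names `to_number` and `encode_row` are all hypothetical. Address, timestamp, and flag columns are left out for brevity.

```python
from typing import Dict, List, Tuple

# Numeric packet fields from the feature table; other columns are
# omitted from this sketch.
NUMERIC_FIELDS = [
    "tcp.srcport", "tcp.dstport", "udp.srcport", "udp.dstport",
    "frame.len", "tcp.seq", "tcp.ack", "tcp.len", "udp.length",
    "tcp.stream", "udp.stream", "icmp.checksum", "icmp.seq_le",
]


def to_number(value: str) -> float:
    """Parse decimal or 0x-prefixed hex text; empty fields become 0.0."""
    text = value.strip()
    if not text:
        return 0.0  # assumed fill value for fields absent in this packet
    if text.lower().startswith("0x"):
        return float(int(text, 16))
    return float(text)


def encode_row(row: Dict[str, str]) -> Tuple[List[float], int]:
    """Return (feature vector, binary label) for one captured packet."""
    features = [to_number(row.get(name, "")) for name in NUMERIC_FIELDS]
    label = int(row["Attack_label"])
    return features, label
```

For example, a UDP packet leaves every TCP and ICMP field empty, so those positions come out as 0.0 while `udp.srcport`, `udp.length`, and `frame.len` carry the signal.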

| Actual \ Predicted | Predicted Normal Transmission | Predicted to Be Under Attack |
|---|---|---|
| Normal Transmission | TP (correct judgment, normal transmission) | FN (incorrect judgment, normal transmission) |
| Under Attack | FP (incorrect judgment, abnormal transmission) | TN (correct judgment, abnormal transmission) |
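The four cells of this matrix are enough to compute the usual summary metrics. The sketch below follows the table's convention that normal transmission is the positive class; the function name `classification_metrics` and the zero fallback for empty denominators are illustrative assumptions, and the formulas are the standard definitions rather than anything specific to this paper.

```python
from typing import Dict


def classification_metrics(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Standard metrics with 'normal transmission' as the positive class."""

    def ratio(num: float, den: float) -> float:
        # Return 0.0 instead of raising when a denominator is empty.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fn + fp + tn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }
```

With made-up counts of 90 TP, 10 FN, 5 FP, and 95 TN, the accuracy is (90 + 95) / 200 = 0.925 and the recall is 90 / 100 = 0.9.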

| Parameter Type \ Parameter Value | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| max_depth | 48.71% | 87.45% | 88.36% | 92.42% | 93.27% | 92.42% | 93.92% | 93.71% | 94.17% | 93.86% |
| min_samples_leaf | X | X | X | X | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% |
| min_samples_split | X | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% | 94.17% |
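One simple way to read the `max_depth` row is to pick the shallowest tree that reaches the best accuracy, optionally allowing a small tolerance in exchange for a smaller model. The sketch below applies that rule to the accuracies reported in the table; the rule itself, the tolerance parameter, and the name `pick_smallest_best` are assumptions for illustration and may not match how the authors chose their setting.

```python
from typing import Dict

# Accuracies (%) copied from the max_depth row of the table above.
MAX_DEPTH_ACCURACY: Dict[int, float] = {
    1: 48.71, 2: 87.45, 3: 88.36, 4: 92.42, 5: 93.27,
    6: 92.42, 7: 93.92, 8: 93.71, 9: 94.17, 10: 93.86,
}


def pick_smallest_best(scores: Dict[int, float], tolerance: float = 0.0) -> int:
    """Smallest parameter value within `tolerance` points of the best score."""
    best = max(scores.values())
    return min(value for value, acc in scores.items() if acc >= best - tolerance)
```

With no tolerance this selects a depth of 9, the only setting that reaches 94.17%. Allowing 0.3 percentage points selects a depth of 7 (93.92%), which may matter on constrained edge hardware.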

| min_impurity_decrease | 0.1 | 0.01 | 0.005 | 0.001 | 0.0005 |
|---|---|---|---|---|---|
| Validation set accuracy | 87.45% | 88.33% | 93.51% | 94.17% | 94.22% |
| Test set accuracy | 88.47% | 89.32% | 93.76% | 93.77% | 45.71% |
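The last column shows why validation accuracy alone can mislead: the setting with the highest validation score has by far the lowest test score. A small check like the one below, run as a sanity step after model selection, makes such a gap explicit. The values are copied from the table, while the 5-point threshold and the name `flag_generalization_gaps` are hypothetical.

```python
from typing import Dict, List, Tuple

# (validation %, test %) copied from the min_impurity_decrease table above.
RESULTS: Dict[float, Tuple[float, float]] = {
    0.1: (87.45, 88.47),
    0.01: (88.33, 89.32),
    0.005: (93.51, 93.76),
    0.001: (94.17, 93.77),
    0.0005: (94.22, 45.71),
}

MAX_GAP = 5.0  # hypothetical threshold, in percentage points


def flag_generalization_gaps(
    results: Dict[float, Tuple[float, float]], max_gap: float = MAX_GAP
) -> List[float]:
    """Settings whose validation accuracy exceeds test accuracy by > max_gap."""
    return [
        param
        for param, (validation, test) in results.items()
        if validation - test > max_gap
    ]
```

On the reported values this flags only 0.0005, where validation accuracy is 94.22% but test accuracy drops to 45.71%. The other four settings stay within about one percentage point.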

| Splitter | Random | Best |
|---|---|---|
| Validation set accuracy | 94.17% | 97.53% |
| Test set accuracy | 93.77% | 41.81% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luo, Y.-Y.; Chiu, Y.-H.; Cheng, C.-H. Detection and Mitigation in IoT Ecosystems Using oneM2M Architecture and Edge-Based Machine Learning. Future Internet 2025, 17, 411. https://doi.org/10.3390/fi17090411
