1. Introduction

The pandemic-induced lockdown spurred the continuous digital evolution of society in numerous ways. Remote work and virtual learning paved the path for novel modes of collective engagement. E-commerce platforms sought to provide a more personal shopping experience for their clients. The pandemic-induced shifts, though temporary, were facilitated by heavily centralized cloud infrastructures, which subsequently became the main focus of Distributed Denial of Service (DDoS) attacks. Post-pandemic threats have introduced fresh methods and tools to enhance success rates. With regard to data center security, it is crucial to develop and implement a new set of protective methods to effectively bolster security against complex and intense DDoS attacks. Our survey is focused on presenting profiles of post-pandemic DDoS attacks, along with their detection and mitigation strategies, surpassing prior studies in technical depth. Additionally, we gathered samples of attacks within an actual data center environment and have made them publicly accessible (refer to related references in Section V). As such, our primary emphasis is on discussing threats from the vantage point of data center networks.

Many industrial stakeholders, such as Cisco [1], Akamai [2], and Cloudflare [3], predict that DDoS attacks will become “bigger” and more frequent in the coming years. Some recent DDoS attacks in 2020 already reached 2.3 Tbps (AWS) and then 2.5 Tbps (Google), and a record-setting attack at the time exceeded 3.8 Tbps (Cloudflare) in October 2024, which is much larger than the Mirai botnet attack against the DNS provider Dyn, estimated to have been as high as 1.5 Tbps in 2016. A more sophisticated, multi-vector Mirai botnet variant attack, reaching almost 2 Tbps, was also captured by Cloudflare at the end of 2021. In 2023, high-volume HTTP-based DDoS attacks peaked beyond 200 million requests per second [3]. These incidents dominated news outlets worldwide. However, there are countless attack cases that never hit the front page, although their relative impact on the given (less widely used) service or (less known) company could be much more pronounced. How can we keep pace with our adversaries? It is not just a matter of bolstering our defense with more equipment; our detection methods and mitigation techniques need to be more responsive and more precise.

To combat attackers, a range of detection and mitigation methods is at our disposal. The specific methods employed hinge on the type of attack, but success is heavily contingent on the speed of detection and the accuracy of mitigation. Among the three primary attack categories (volumetric, protocol-based, and application-specific), the more traditional approach of attackers tends to rely on brute force. However, the new wave of DDoS attacks typically falls into one of two categories: massive volume amplification or volatile presence. Effectively countering amplified attacks necessitates correctly identifying the involved parties, while combating volatile attacks requires extremely fast detection.

While there exist numerous survey papers on this topic, including our previous definitive paper [4], our current study surpasses them in terms of delivering a timely presentation of new-generation DDoS attacks, as well as providing greater technical depth focusing on the architecture and vulnerabilities of data center networks as primary targets and the hardware acceleration of protection tasks. Accordingly, we place a specific emphasis on the precision and speed of threat detection and mitigation.

It is very important to extend and tailor DDoS detection and mitigation mechanisms to the specific features of high-capacity data center networks. To this end, we also analyze the properties of data center network (DCN) infrastructures and the architectural options for a high-performance detection system. These related topics support the discussion of the quantitative and qualitative criteria that we propose to help in the development of novel detection and mitigation methods.

Among the various overviews available, some noteworthy survey papers on the subject include the work of Peng, Leckie, and Ramamohanarao [5] in 2007, which surveyed “network-based” defense mechanisms against DDoS attacks. Their paper already incorporated many of the terms, architectures, and mechanisms that serve as fundamental reference points today.

Zargar, Joshi, and Tipper [6] conducted one of the earliest comprehensive surveys on modern defense mechanisms against DDoS flooding attacks in 2013. In 2016, Masdari and Jalali [7] provided an extensive taxonomy of DDoS attack types, expanding the focus to encompass cloud infrastructures. That same year, Yan et al. [8] described DDoS attacks within the context of Software-Defined Networking (SDN), which is the foundational technological building block of a cloud infrastructure. They outlined research issues and challenges, some of which remain open to this day. As of 2018–2019, while Yan’s challenges had not yet been entirely resolved, significant strides were made in fortifying SDN against DDoS attacks [9,10,11].

Volumetric attacks have gained increased prominence and now employ a wider array of methods, particularly in the fusion of various strategies. Devices and botnets are now commonly rented, leading to an expansion in the user base. These shifts have prompted this current article to surpass earlier discussions on the subject.

This paper offers the following contributions:

We establish clear definitions for key terms related to DDoS analysis.

We present a tutorial-style overview of the security concerns in modern data center networks.

We provide a condensed comparison of the new breeds of DDoS attacks and discuss the related detection and mitigation methods.

We furnish actual DDoS traffic traces that have been captured in real-world scenarios, along with an analysis to enhance overall understanding.

At the end of each section, we offer a highlight of lessons learned on the discussed topic.

The structure of the paper is the following. Section 2 overviews new attack methods and tools; moreover, it also discusses the challenges raised by their existence. Section 3 briefly summarizes the modern data center architectures and the related security issues, highlighting the DDoS-related vulnerabilities. Section 4 surveys the modern acceleration methods for supporting DDoS detection, including those based on OS kernel features and hardware acceleration. Section 5 characterizes the challenges of DDoS mitigation, whereas Section 6 surveys the various mitigation techniques and introduces qualitative and quantitative criteria for designing new detection and mitigation methods in response to the discussed challenges. Finally, Section 7 gives an outlook on DDoS trends in the future, and Section 8 concludes the paper.

2. New Profiles of DDoS Attacks: Methods, Tools, and Challenges

A generic definition of a Denial-of-Service (DoS) attack is a particular type of malicious traffic that attempts to make an online service unavailable to its normal users. Its distributed version (Distributed DoS, DDoS) enhances the threat’s effectiveness by concurrently generating malicious traffic from many contributing sources (usually many thousands or even more) toward a single target. Distributing the traffic sources enables a much larger traffic volume (nowadays, it may well exceed one terabit per second) to be developed and directed toward the targeted host or service. In the last decade, we have faced a new wave of DDoS methods and attacks that have become the most common threats on the Internet due to their relatively easy and automated execution. DDoS attempts usually target the resources of service and cloud providers. The new breed of DDoS threats may involve key novelties: (i) vulnerable IoT devices, whose security suites often lack even basic protection tools, (ii) short-lived and pulsating volatile traffic patterns that stay under the radar even of state-of-the-art IDS/IPS systems, (iii) a very high volume of cumulative traffic generated by various amplification techniques, and (iv) composite malicious traffic that combines various DDoS types (so-called vectors) to construct a multi-vector attack.

There are multiple ways to categorize DDoS attacks; we follow the scheme of three categories (volumetric, protocol-based, and application-specific) suggested by various surveys [12,13,14]. This categorization is expressive enough for our purposes, yet remains simpler than other taxonomies. Other commonly used categorization methods include layer-based categorization (network/transport vs. application), targeted resource-based categorization (bandwidth vs. HW resource vs. application runtime), and attack intention (disruption vs. extortion vs. distraction). While volumetric attacks focus on saturating bandwidth on the server’s local network, protocol-based variants target the exhaustion of server-side hardware resources, i.e., system memory, CPU, and IO bus. From a complexity perspective, application-specific attacks involve significantly more sophisticated operations that specifically target a web service or other application.

In the next subsection, we provide reasons and arguments for the appearance of multi-vector attacks during the pandemic.

2.1. New Methods and Tools

The new breed of DDoS threats has two major novelties over the more conventional DDoS operational patterns: massive volume amplification and volatile presence. Together, these two features challenge the security systems of data centers and cloud services and call for a new generation of DDoS detection methods and implementations. Using the latest techniques, an attacker does not even need to gain control over large-scale botnet resources to achieve a substantial attack volume. Instead, new attack techniques make one or many public service hosts send their response messages to a spoofed address, i.e., to the address of the targeted server host, which the attacker has forged as the source of the requests. An alternative way to amplify malicious traffic is to send a small-sized request message to the targeted host with a spoofed source address, which triggers a large response message to that address. This asymmetry between request and response messages results in low resource utilization on the attacker side and may exhaust all resources on the server side.
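The request/response asymmetry described above can be quantified with a simple ratio. The following Python sketch is only an illustration: the function names and the packet sizes are our own assumptions, and it presumes that every spoofed request is answered.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bandwidth amplification: response size divided by request size."""
    if request_bytes <= 0:
        raise ValueError("request_bytes must be positive")
    return response_bytes / request_bytes


def reflected_volume_bps(attacker_bps: float, factor: float) -> float:
    """Traffic arriving at the victim, assuming all spoofed requests are answered."""
    return attacker_bps * factor


# Hypothetical sizes: a 60-byte request that elicits a 3000-byte response.
factor = amplification_factor(request_bytes=60, response_bytes=3000)
# Hypothetical attacker uplink of 100 Mbps spent entirely on spoofed requests.
victim_bps = reflected_volume_bps(attacker_bps=100e6, factor=factor)
print(f"amplification x{factor:.0f}, victim receives {victim_bps / 1e9:.1f} Gbps")
```

Under these assumed numbers, a modest uplink on the attacker side turns into a multi-gigabit flow at the victim, which is the economic core of reflection-based attacks.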

Amplification/reflection: By sending spoofed requests, the attacker triggers responses from a group of open DNS or NTP servers back to the victim’s address (Figure 1). Since the reply is typically larger than the request, the cumulative traffic of the targeted response messages can saturate the network path between the attacked host and the Internet.

Volatile (hit-and-run) attack: In contrast to conventional DDoS threats, volatile attacks apply a periodic on/off strategy for controlling their presence on the network. The ON period is short, typically lasting only milliseconds to minutes, and is followed by an extended OFF period. This behavior is often successful since the detection time of most IDS/IPS systems today is in the range of seconds. Thus, these malicious traffic transients can reach the target host under the detection radar.

Multi-vector attack: It combines multiple methods and techniques to overconsume the resources of the target system in various ways. Mitigating these attacks can be challenging and often requires a multi-layer mitigation strategy. An efficient way to make an attack successful is to generate a complex traffic pattern that is easy to blend with regular traffic. Thus, multi-vector attacks may increase the probability of false positive detection that can block out an indefinite portion of user traffic, along with the malicious one. The most popular component vectors are DNS reflection/amplification, TCP-Syn, TCP-Ack, TCP-Syn/Ack, TCP-Rst, and ICMP flood.
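To illustrate why the short ON periods of a volatile attack can slip past a detector with a long measurement window, the following Python sketch compares a coarse and a fine averaging window on a synthetic trace. The trace, the threshold, and the function name are hypothetical; real IDS/IPS systems use far richer features than a mean packet rate.

```python
def windowed_alarms(rate_per_ms, window_ms, threshold):
    """Return start times (ms) of non-overlapping windows whose mean rate exceeds threshold."""
    alarms = []
    for start in range(0, len(rate_per_ms) - window_ms + 1, window_ms):
        window = rate_per_ms[start:start + window_ms]
        if sum(window) / window_ms > threshold:
            alarms.append(start)
    return alarms


# Hypothetical trace: 1000 ms at a baseline of 10 packets/ms,
# with a single 50 ms burst of 200 packets/ms starting at t = 400 ms.
trace = [10] * 1000
for t in range(400, 450):
    trace[t] = 200

threshold = 50  # assumed alarm level in packets/ms

coarse = windowed_alarms(trace, window_ms=1000, threshold=threshold)
fine = windowed_alarms(trace, window_ms=10, threshold=threshold)
print("1 s window alarms:", coarse)
print("10 ms window alarms:", fine)
```

Averaged over the full second, the burst raises the mean rate to only 19.5 packets/ms, which stays below the assumed threshold, whereas the 10 ms windows covering the burst exceed it clearly.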

2.2. Today’s Mitigation Challenges

Constantly increasing traffic volume: Increasing traffic volume requires ever more protective network resources. Volumetric attacks can quickly exhaust even the largest Internet access capacity. DDoS attacks have surged in both volume and frequency. Reports indicate a dramatic rise, with some sources noting a 112% increase in attacks from 2022 to 2023 and a 111% surge in the first half of 2024 compared with the same period in 2023. For instance, Cloudflare reported mitigating 7.3 million DDoS attacks in Q2 2025, including a record-breaking 7.3 terabits per second (Tbps) attack targeting a hosting provider [15,16].

Shared botnets (many available for hire): Renting out a botnet is a viable business option for botnet masters. In this model, hired resources are often accounted and paid for on a time basis. There is a major economic challenge here: a significant asymmetry between the expense of the attack and that of the defense. Renting botnet resources for a 10-min attack costs as little as 35 cents [17].

Linux-based DDoS malware: The latest Windows versions enable running a complete Linux run-time environment on a Windows-based laptop or desktop computer. This feature opened the possibility for malware authors to cross-compile botnet code to run on both Windows and Linux systems. This option raises crucial challenges in the defense strategy: (i) a high number of IoT devices with common security vulnerabilities run a Linux-based operating system, and (ii) Linux-based data center servers possess a high amount of computational and bandwidth resources to execute a heavy-hitter DDoS attack [18].

Launching attacks by non-technical users: Volumetric attacks can be initiated with dedicated control programs and scripts available on the darknet or offered to the attacker by the bot master of the rented botnet. These tools are easy to use; therefore, even a non-technical user can initiate and control a powerful attack.

Attack from mobile and IoT equipment: The increasing computational power of handheld devices, the transmission capacity of 4G and 5G networks, and the machine-to-machine type IoT use cases open the way to deliver a wide range of botnet malware to mobile and IoT devices. Moreover, mobile security suites typically have a lower level of defense against malware deployment. Thus, handheld devices may become the next target of the bot master (a person who owns the botnet). Reputation-based detection is inefficient for identifying infected mobile devices since user equipment’s IP addresses frequently change in mobile communication networks [18].

Hit-and-run and multi-vector attacks continue to evolve [19]: Hit-and-run is still popular due to its low cost and ease of deployment. At the same time, multi-vector variants are very effective in bypassing traditional mitigation strategies [20]. Recently, we have seen a significant rise in the popularity of multi-vector attacks incorporating 15 or more vectors. Combining the hit-and-run and multi-vector strategies resulted in a shorter attack duration with an increased success rate. Since attackers often rent a shared botnet to execute the DDoS attack, their ambition to reduce the duration is reasonable. Moreover, the shortened attack has a higher probability of bypassing security systems with a larger detection window.

Browser-based bot attacks: Websites are attractive platforms to deliver malware to a high number of user devices via popular web browsers. JavaScript-based code does not depend on the operating system and exploits vulnerabilities of the web browser or of the underlying protocols [21]. While this code stops running when the user quits the browser application, it is re-downloaded and re-initialized whenever the user revisits the compromised web page.

Emerging encrypted attacks: TLS- and ESP-based attacks have two key advantages for the attacker: (i) they force the target to spend extra CPU resources on encryption and decryption, and (ii) many DDoS detection systems do not support the inspection of TLS- and ESP-encrypted traffic [22].

Distributed targets: From the infrastructural perspective, popular cloud-based services are distributed across many physical servers, and many of them are often located in dedicated IP subnets. Instead of attacking a single IP node, this type of DDoS threat increases the success rate by targeting an entire IP subnet, incorporating a set of servicing nodes.

Application-specific attacks: The majority of application-specific attacks [23] target a specific service and not a service type in general, e.g., they are developed to attack one specific streaming service. This means that no single attack is capable of targeting streaming services universally. Meanwhile, a recent method called mimicked user browsing is very effective against a large number of web applications. It is a web-based application-specific attack type developed to imitate the behavior of real user interaction with the service provider nodes. The major challenge is detecting it with a low false-positive rate, since its traffic pattern is identical to that of a real user. Due to this similarity, it can easily maintain its success rate even when using a large number of participating botnet nodes.

2.3. Lessons Learned in DDoS Challenges

Recent research works propose several methods and tools for effectively detecting the new-generation DDoS attack types (see Section 4). However, a new breed of attack techniques (especially the combination of hit-and-run and multi-vector attacks) still challenges protection systems (see Table 1) with a more sophisticated traffic pattern combined with a large traffic volume within a very short time period [24]. In addition, the new types of application-layer attacks, such as mimicked user browsing, target a specific service with a high success rate. In Section 4, we discuss the major scientific works for detecting the presented threat types. Since modern cloud services rely on high-capacity data centers that offer large scaling capacities in terms of computational power and storage space, we next provide an overview of data center networks, focusing on their security challenges and vulnerabilities to DDoS attacks.

3. DDoS and Data Center Networks

Nowadays, it is impossible to discuss DDoS without discussing DCNs. As of 2023, every facet of Internet access depends on DCNs. Internet service providers, 5G mobile cores, and IT clouds are located inside the same DCN. The aforementioned services usually do not own their IPX, peering, and IP transit links, but rather choose to be a “tenant” of an existing DCN with access to Tier 1 transit and IPX. The single most important security feature of a large DCN is DDoS protection. Tenants, depending on the throughput of their connection, have limited ability to defend against DDoS attacks. For example, if the 5G core has 2 × 100 GbE connections to the transit core and carries 100 Gbps of traffic, then DDoS attacks over 100 Gbps will have an impact on the quality of service of the 5G core. Since recent large-scale DDoS attacks preferably target public data centers and their cloud services [17] and thus challenge the resource pools of their underlying physical infrastructure, we briefly overview the key properties of a modern data center network.
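The 5G core example above is simple headroom arithmetic, which can be sketched as follows. The function names are our own, and the sketch assumes that both links are active and that their capacity can be fully used; with an active/standby pair, the headroom would be smaller.

```python
def ddos_headroom_gbps(link_count: int, link_gbps: float, legit_gbps: float) -> float:
    """Spare capacity left for unwanted traffic before legitimate traffic is squeezed."""
    capacity = link_count * link_gbps
    return max(capacity - legit_gbps, 0)


def is_service_impacted(attack_gbps: float, link_count: int,
                        link_gbps: float, legit_gbps: float) -> bool:
    """True when the attack volume exceeds the tenant's spare link capacity."""
    return attack_gbps > ddos_headroom_gbps(link_count, link_gbps, legit_gbps)


# Values from the example in the text: 2 x 100 GbE uplinks, 100 Gbps of regular traffic.
headroom = ddos_headroom_gbps(link_count=2, link_gbps=100, legit_gbps=100)
print("headroom:", headroom, "Gbps")
print("80 Gbps attack impacts service:", is_service_impacted(80, 2, 100, 100))
print("120 Gbps attack impacts service:", is_service_impacted(120, 2, 100, 100))
```

The calculation makes the tenant's dependence on the hosting DCN explicit: any attack above the headroom has to be absorbed or filtered upstream of the tenant's own links.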

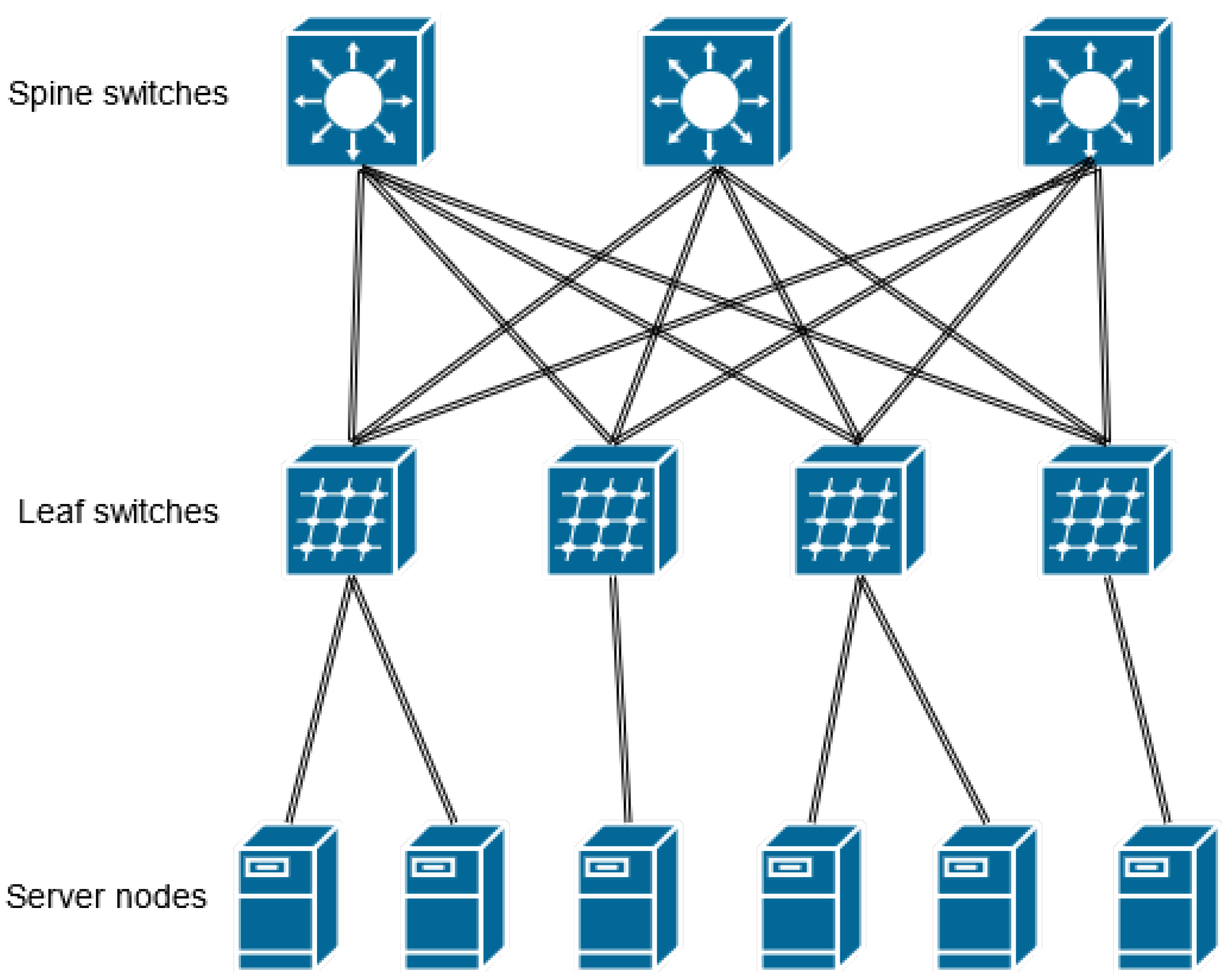

3.1. Spine-Leaf Versus Traditional 3-Tier Network Topology

Traditional data centers incorporate a 3-tier network infrastructure. The 3-tier design covers three network layers, i.e., the core, aggregation, and access layers. While the core and aggregation layers consist of routers performing L3 network functions, the access layer is composed of L2 switches. Network, computing, storage, and application resources are organized to provide services according to a modular design pattern called point of delivery (PoD). Since the topology has redundant L2 paths by design, a loop-free topology must be assured using an appropriate protocol, e.g., the Spanning-Tree Protocol (STP) [25]. The emergence of virtualization technologies required more data-link layer flexibility and resulted in an extended L2 domain involving the aggregation layer. Thus, an L2 domain can be extended across all the PoDs, enabling a centralized resource pool of computing, storage, and networking elements. Modern software-based services have components that are distributed across multiple physical or virtual machines within the data center. This novel service architecture generates an increasing amount of east–west traffic between data center servers. Accordingly, the dominance of north–south data flows in terms of traffic volume is diminishing. In the traditional three-tier architecture, the server-to-server network latency may vary with the network path selected between the two servers. Meanwhile, the spine-leaf network topology maintains low latency between server nodes since it assures a fixed number of hops towards the destination node (see Figure 2). Also, the spine-leaf topology makes the Spanning-Tree Protocol unnecessary, and thus redundant paths can be concurrently active. This feature lowers the over-subscription ratio of link capacity. A new generation of routing protocols (e.g., Equal-Cost Multipath (ECMP) [26] and MP-BGP EVPN [27]) supports the spine-leaf topology by balancing traffic across all available redundant paths.
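As a minimal illustration of how traffic can be balanced across concurrently active redundant paths, the following Python sketch selects a spine switch by hashing the flow 5-tuple, which is the general idea behind ECMP forwarding. The switch names and addresses are hypothetical, and SHA-256 merely stands in for the vendor-specific hardware hash functions used in real switches.

```python
import hashlib


def ecmp_next_hop(flow, next_hops):
    """Pick one of the equal-cost next hops by hashing the flow 5-tuple.

    All packets of a flow map to the same next hop, which avoids reordering.
    """
    key = "|".join(str(field) for field in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical leaf switch with four equal-cost uplinks to the spine layer.
spines = ["spine-1", "spine-2", "spine-3", "spine-4"]

# 1000 hypothetical TCP flows (src IP, dst IP, protocol, src port, dst port).
flows = [("10.0.1.5", "10.0.2.9", 6, 49152 + i, 443) for i in range(1000)]

load = {}
for flow in flows:
    hop = ecmp_next_hop(flow, spines)
    load[hop] = load.get(hop, 0) + 1
print(load)
```

Because the decision is made per flow rather than per packet, a single high-volume flow still lands on one path, which is one reason why volumetric attacks can congest individual links even in a well-balanced fabric.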

3.2. Security Issues

Traditionally, internal networks are protected from the public Internet using in-line firewall systems. In the context of a data center, north–south traffic is considered less trustworthy. Accordingly, most security solutions focus on inspecting north–south traffic. With the advent of spine-leaf architectures, east–west traffic became more dominant in terms of data volume. This property raises the risk of a malicious actor affecting internal service tasks and activities or spreading from one service to another. Until recently, the typical professional view was that east–west traffic is internal and thus does not require dedicated security monitoring and protection. This assumption holds only as long as no external threat successfully infects a data center server. As soon as new malware gets through a north–south firewall or IDS system and enters the internal network, the firewall no longer provides any protection against it. It becomes an insider threat that is much harder to detect and has a higher probability of becoming a permanent hazard inside the data center network.

The major question in data center security is how the infrastructure (network elements as well as server nodes) can be simultaneously protected against both external and internal threats. Accordingly, we provide a short overview of the key security tools within a data center network.

3.3. Key Security Terms and Elements

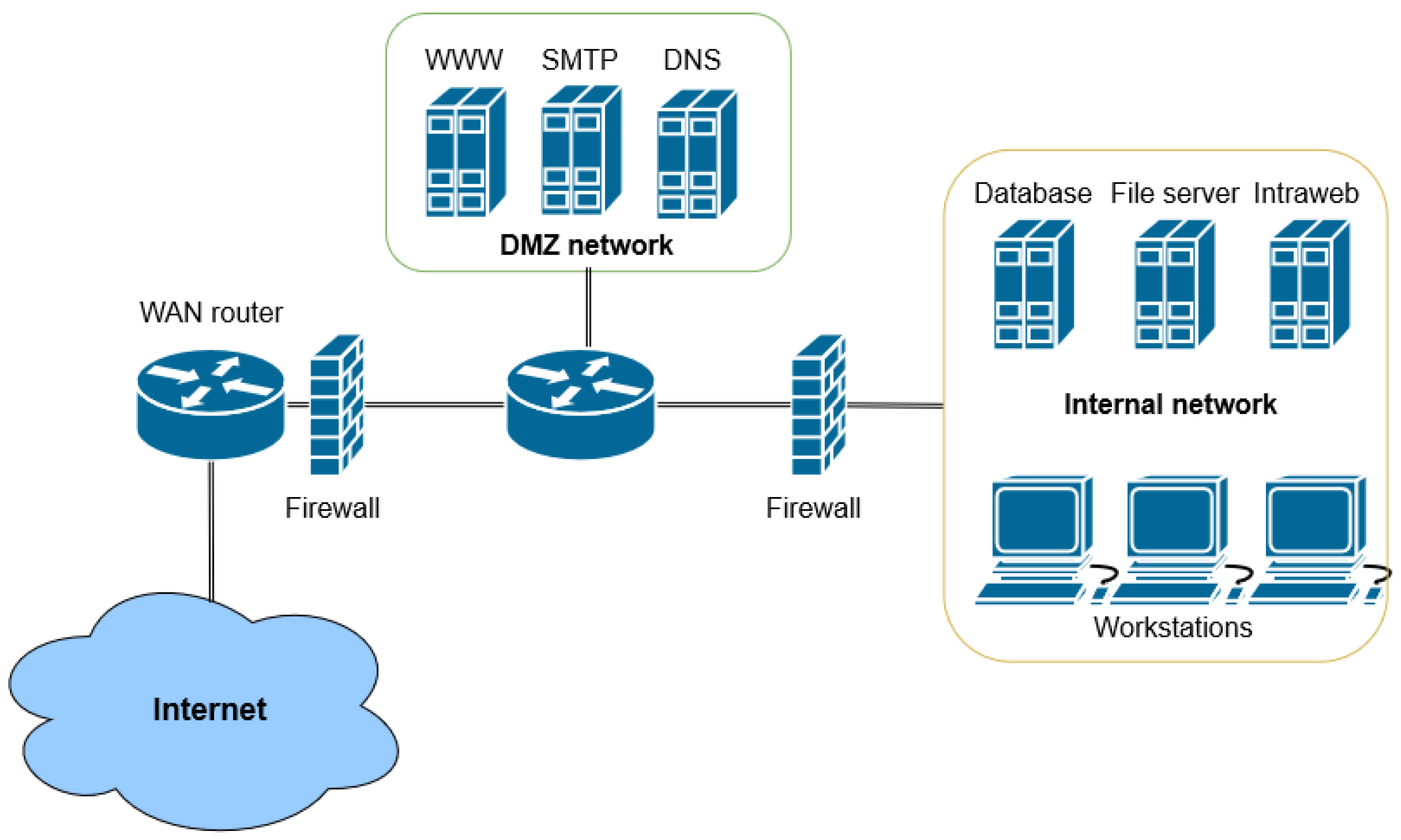

Demilitarized Zone (DMZ) [28]: A DMZ network separates the internal enterprise or data center network from the untrusted public Internet (see Figure 3). It acts as an additional security layer. Servers offering public services on the Internet typically reside in a DMZ network segment. This additional layer prevents the internal network nodes from being directly accessed from the Internet.

Network firewalls [29]: The standard firewall function is the stateful traffic inspection that incorporates IP addresses, ports, and protocol state information. The filtering decision is made upon pre-defined rules or context (e.g., information from previous connections). The majority of firewall systems operate on the north–south path of the data center to protect the internal network. With the evolution of firewall systems, new functions and features were added to the next-generation firewalls, such as application awareness, integrated intrusion detection, and antivirus capabilities. Consequently, the threat focus is the most important novelty of next-generation firewall systems.

Deep packet inspection (DPI) [30]: In order to make a decision on a traffic flow, the related packets or a sampled subset of them are examined (e.g., for traffic classification or filtering purposes). As its name suggests, Deep Packet Inspection goes deeper than the packet header and inspects the payload. Some DPI methods focus merely on the payload, while others analyze both the headers and the payload. The DPI technique is frequently applied in security systems.

Network Intrusion Detection and Prevention Systems [31]: An IDS/IPS system monitors the network for a wide range of malicious activities. During the analysis process, these systems can incorporate two distinct classes of detection methods, i.e., signature-based (recognizing patterns of malware activity) and anomaly-based ones (typically applying machine learning techniques to detect the presence of any unusual traffic).

Virtualized security functions [32]: Like most fundamental network functions, security-related services have also become available as a software product optimized for a virtualized environment, and can be operated and scaled on commodity server hardware.
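The difference between the signature-based and anomaly-based detection classes mentioned above for IDS/IPS systems can be sketched in a few lines of Python. This is a deliberately simplified illustration: the byte pattern and its label are invented placeholders rather than real signatures, and a plain z-score on the packet rate stands in for the machine learning models that anomaly-based systems typically apply.

```python
from statistics import mean, pstdev

# Hypothetical byte pattern standing in for a real signature database.
SIGNATURES = {b"EVIL-PAYLOAD": "example-flood-tool"}


def signature_match(payload: bytes):
    """Signature-based check: return the label of the first known pattern found."""
    for pattern, label in SIGNATURES.items():
        if pattern in payload:
            return label
    return None


def is_anomalous(history, current, z_threshold=3.0):
    """Anomaly-based check: flag a rate far above the recent baseline (z-score)."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        return current > baseline
    return (current - baseline) / spread > z_threshold


# Hypothetical packets-per-second samples observed during normal operation.
history = [100, 110, 90, 105, 95]

print(signature_match(b"xx EVIL-PAYLOAD yy"))  # known pattern is recognized
print(signature_match(b"regular request"))     # unknown traffic passes
print(is_anomalous(history, 500))              # large deviation from the baseline
print(is_anomalous(history, 108))              # within normal variation
```

The sketch also shows the complementary weaknesses: the signature check cannot recognize anything that is missing from its database, while the anomaly check flags any large deviation, including legitimate traffic peaks.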

Despite the evolved security policies and the high-performance protection systems, large-scale data centers, as the host sites of public cloud services, have become the primary target of new-generation DDoS attacks [10,17]. In Section IV, we will discuss all of the novelties (including methods and tools) that recent DDoS attacks feature in order to increase their success rate in disrupting public services. Also, we provide an overview of how they challenge the underlying physical infrastructure of the cloud services.

The ultimate victims of DDoS attacks are the servers in the universal sense. Among these, the ones serving the masses nowadays reside in data centers, and many of them are designed as cloud infrastructure. While it is outside of the scope of the current article to detail cloud architectures, it is worth showing how the cloud domain experts approach the problem from the cloud infrastructure point of view.

An interesting discussion from this approach is given by Deka et al. [33]. They show how the classic cloud service models (i.e., IaaS, PaaS, SaaS) could be impacted by DDoS attacks. Among the various taxonomies, they differentiate DDoS attacks targeting the “Browser”, “Application”, “Server” and “Network” levels, which reflects a time when the historical shift from server farms to modern cloud infrastructures had just started. On the other hand, the potential impacts listed in [33], including the high density of victims, low network performance, resource hunger, and the need for adapting mitigation to the ever-changing types of DDoS attacks, are all still valid nowadays.

Although there seem to be various surveys that explicitly deal with the effects of DDoS on the cloud infrastructure [34,35,36,37,38,39,40], most of them are brief DDoS tutorials with some reference to cloud resource violations. In their general overview paper [34], Deshmukh and Devadkar provide a basic tutorial on DDoS types before the first major Mirai botnet attack, and suggest some general concerns related to their effect on cloud environments. A similarly brief overview is provided by Balobaid et al. [35], and later by Gautam and Tokekar [36]. The special findings of the more explicitly cloud service-related surveys [37,38,39] will be described in the following subsections that categorize the attacks and impacts.

3.4. Direct and Indirect Impacts

Besides categorizing prevention, detection, and mitigation techniques just as other authors do, Srinivasan et al. uniquely highlighted the direct and indirect impacts of DDoS attacks on the cloud environment [37]. As examples of direct impacts on the cloud environment, they list service downtime, economic loss due to downtime, business and revenue loss, as well as disruptions to dependent services. Some of the indirect impacts are economic loss due to resources (not just profit but profitability), energy consumption loss, attack mitigation cost, loss of client trust, associated legal expenses, and effects on brand prestige. Srinivasan et al. have built quite convincing cases on these direct and indirect impacts [37], making it clear to decision makers that the cloud infrastructure cannot run without a strong DDoS defense.

The issue of collateral damage to non-targets is discussed in [41,42]. The authors focus on multi-tenant cloud infrastructures where, in addition to the targeted server(s) or tenant, other stakeholders could also be affected. These are often co-hosted virtual or physical servers or networking devices of other cloud service providers. The collateral damage is the most significant for virtual servers when the targeted VM shares hardware resources with other virtual tenants within a single chassis or rack. Accordingly, the exhaustion of computing and network resources directly affects its co-hosts. The authors identified the key spots of the effects as well as the requirements for threat mitigation to minimize the damage.

A new type of DDoS attack called Economic Denial of Sustainability (EDoS) is evaluated in [43]. EDoS targets the financial resources of an organization. A large amount of traffic is sent from the botnet towards a cloud service, which thus has to scale up its resources under pressure. This scaling is reflected in the billing as well: the cloud user pays for the resources consumed by the EDoS attack itself, which may eventually exhaust its financial resources. The authors developed an analytical model to investigate the impact of EDoS attacks involving key performance metrics, such as end-to-end response time, consumed computing resources, throughput, and the cost of extra resources triggered by the attack. The model was verified against a simulation, which showed a strong correlation with the analytical results.
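The billing effect of an EDoS attack can be illustrated with a back-of-the-envelope calculation. The Python sketch below is not the analytical model of [43]; it is a much simpler illustration that assumes linear autoscaling, a fixed per-instance capacity, and a flat hourly price, all of which are hypothetical values.

```python
import math


def instances_needed(requests_per_s: float, capacity_per_instance: float) -> int:
    """Number of instances an autoscaler would run for the given load (at least one)."""
    return max(1, math.ceil(requests_per_s / capacity_per_instance))


def edos_extra_cost(legit_rps: float, attack_rps: float, capacity_per_instance: float,
                    price_per_instance_hour: float, hours: float) -> float:
    """Cost of the additional instances that only exist because of the attack traffic."""
    baseline = instances_needed(legit_rps, capacity_per_instance)
    under_attack = instances_needed(legit_rps + attack_rps, capacity_per_instance)
    return (under_attack - baseline) * price_per_instance_hour * hours


# Hypothetical service: 2000 legitimate requests/s, 1000 requests/s per instance,
# 0.5 currency units per instance-hour, and a 24-hour attack adding 18,000 requests/s.
extra = edos_extra_cost(legit_rps=2000, attack_rps=18000, capacity_per_instance=1000,
                        price_per_instance_hour=0.5, hours=24)
print("extra cost caused by the attack:", extra)
```

In this assumed scenario, the service scales from 2 to 20 instances, so the cloud user pays for 18 instances that serve attack traffic only. The attack never has to take the service down to be harmful.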

3.5. Attackers Internal and External to the Cloud

Darwish, Ouda, and Capretz [38] categorize DDoS attacks not only by their vector but also by the “residence” of the attacking agents, distinguishing between external and internal attackers. This classification proves essential when designing mitigation strategies. Most traditional DDoS defense mechanisms assume a threat model where malicious traffic originates from external sources, targeting cloud services via public-facing interfaces. Accordingly, mitigation mechanisms such as firewall rules, ACLs, and scrubbing services are typically placed at network edges. However, when attackers are internal, e.g., operating from compromised VMs or containers, these defenses may be bypassed entirely, making external mitigation efforts ineffective.

This internal/external dichotomy is further explored by Shaar and Efe [39], who emphasize that internal DDoS attacks are particularly problematic. Internal bots may masquerade as legitimate tenants within a multi-tenant cloud environment and perform attacks that degrade shared resources such as bandwidth, CPU, memory, or disk I/O. Such resource-exhaustion attacks are harder to detect because they do not necessarily exhibit high-volume traffic patterns; instead, they may use subtle, low-rate strategies that cumulatively exhaust shared system capabilities. These attacks challenge traditional volumetric anomaly detection, which is optimized for identifying external floods.

Moreover, in a cloud context, internal DDoS attacks often exploit lateral movement and privilege escalation. An attacker might compromise a lower-privilege VM and use it to initiate low-level attacks on other internal nodes [44]. If the attacker gains access to orchestration tools or APIs, they might even schedule malicious containers or VMs at scale, turning the cloud’s elasticity against itself. From the perspective of cloud providers, such attacks blur the boundary between infrastructure misuse and coordinated denial-of-service behavior, making enforcement and forensics more difficult.

Additionally, the concept of a “DMZ” can be undermined in cloud environments where trust boundaries are more fluid. Attackers can plant sleeping bots inside the infrastructure (e.g., through malicious workloads or compromised containers) and activate them simultaneously through command-and-control signals or time-based triggers. These internal bots can launch devastating east–west attacks (intra-cloud traffic) that never cross the cloud perimeter, thus evading detection by traditional north–south monitoring tools [45]. This also has implications for billing and resource allocation; if such internal attacks are not detected early, they can incur substantial costs to the victim organization or the provider itself.

Given these risks, DDoS detection and mitigation mechanisms must be extended to the internal segments of the infrastructure. Internal flow monitors, hypervisor-level anomaly detectors, and machine-learning-based behavioral analysis can help identify misuse of internal resources. Furthermore, cloud-native zero-trust models can help prevent lateral movement and mitigate the impact of internal attacks.

3.6. Attacks Targeting Different Areas of the Cloud

Direct DDoS attacks can be categorized by their direct target in the cloud infrastructure. A categorization—based on the one described in [

39]—can be the following:

Attacks against the cloud infrastructure itself usually target its control environment, such as the HVAC or the energy distribution systems. These are heavy, infrastructure-oriented, industrial-flavored attacks.

IaaS—Attacks against Infrastructure as a Service can target the actual networking or processing resources through brute-force DDoS, or the virtualization layer, by exploiting vulnerabilities of the hypervisor or the cloud scheduler.

PaaS—Attacks against Platform as a Service focus on the virtual machines. They can exploit VM-specific vulnerabilities or target the time window in which VM migration is executed. Since VMs are often set up similarly for given applications, sprawling attacks can destroy similarly built systems, e.g., 1.6 million WordPress websites in 2021, because of four plug-ins that introduced a certain vulnerability.

SaaS—Attacks against Applications or Software as a Service can exploit certain vulnerabilities (e.g., in web services), or can just as well use brute-force volumetric attacks to bring a given SaaS to its knees.

While categorizing DDoS attacks by cloud service layers provides a structural understanding, it is equally important to consider the distinct mitigation requirements at each layer [

10]. Attacks on IaaS often focus on overwhelming bandwidth or compute resources, necessitating mitigation strategies such as rate limiting, upstream filtering, or elastic scaling. Here, solutions like BGP FlowSpec or cloud-based scrubbing centers can play a critical role. However, when the hypervisor or virtualization infrastructure itself is targeted, such as in resource starvation attacks, traditional network-layer defense tools are insufficient. These cases call for hypervisor-aware anomaly detection and possibly kernel-level telemetry, which can observe and respond to irregular resource consumption or unexpected scheduling behavior.
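Rate limiting, the first IaaS-level mitigation named above, is commonly realized with a token bucket. The following is a minimal sketch under stated assumptions: the class name, the parameters `rate` and `burst`, and the per-packet cost are illustrative choices, not taken from any cited system.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, stores at most `burst`."""
    rate: float          # sustained allowance, e.g. packets per second (assumed unit)
    burst: float         # maximum short-term burst size
    tokens: float = 0.0  # current number of stored tokens
    last: float = 0.0    # timestamp of the previous refill, in seconds

    def __post_init__(self) -> None:
        self.tokens = self.burst  # start with a full bucket

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if a packet of weight `cost` arriving at time `now` may pass."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def count_passed(bucket: TokenBucket, arrivals: list[float]) -> int:
    """Count how many packets with the given arrival times are admitted."""
    return sum(1 for t in arrivals if bucket.allow(t))


if __name__ == "__main__":
    flood = TokenBucket(rate=10.0, burst=5.0)
    print(count_passed(flood, [0.0] * 20))   # a burst of 20 packets at t = 0
    paced = TokenBucket(rate=10.0, burst=5.0)
    print(count_passed(paced, [0.0, 0.2, 0.4, 0.6, 0.8]))  # a paced sender
```

A flow that stays within the configured rate is unaffected, while a flood is clipped to the burst size plus the refill rate, which is why rate limiting degrades rather than denies service.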

In contrast, attacks on the PaaS layer often exploit the uniformity and scale of cloud platforms. VM migration, orchestration, or automated scaling processes introduce windows of vulnerability. DDoS attacks launched during VM migration can exhaust available resources [

46], delay migration, or disrupt load balancing across the cluster. Since PaaS environments frequently rely on templated or containerized deployments, sprawling attacks—those that spread horizontally across similarly configured instances—can have a systemic impact. Mitigation here requires both horizontal scaling protections (such as per-instance rate-limiting) and segmented infrastructure, so that a vulnerability in one platform instance cannot easily propagate to others. Monitoring tools with workload awareness can help detect such distributed effects early.

SaaS-level attacks, which often include application-layer DDoS such as HTTP floods or slow-rate attacks (e.g., Slowloris), demand application-aware mitigation tools. Traditional volumetric defenses may fail to detect these subtler attack patterns, as they mimic legitimate user behavior. In response, SaaS providers increasingly deploy web application firewalls, rate-limiting based on user behavioral models, and CAPTCHA to separate bots from users [

47]. In modern architectures, SaaS components are often distributed across microservices, making service mesh observability and inter-service traffic profiling essential for identifying cascading slowdowns caused by attacks. Crucially, effective mitigation in SaaS environments must balance security with usability—overly aggressive filtering may result in false positives and degraded user experience, especially for high-traffic or latency-sensitive applications.

The authors of [

48] studied the impact of two common DDoS attacks, i.e., HTTP and SYN floods, on Linux- and Windows-based web servers. They found that different web servers responded differently to these threats. Windows-based IIS 10.0 managed to maintain acceptable response times during an HTTP attack, while Linux-based Apache 2.0 showed more stability under TCP SYN flood attacks.

A good example of analyzing the effect of a given attack type as a use case is provided by Yevsieieva and Helalat, who inspected the impact of slow HTTP DoS and DDoS attacks on the cloud environment [

40].

As an incremental work, paper [

49] evaluates the performance of IIS 10.0 and Apache 2.0 during attacks in a clustered configuration. The web servers applied different load balancing implementations, NLB for IIS and HAProxy for Apache. The operation of the balancing mechanisms showed a significant performance difference between the two server implementations. Results showed that clustered IIS 10.0 preserved more of its responsiveness and stability than the HAProxy-based Apache cluster. The discrepancy between the results of [

48,

49] is rooted in the difference between the applied load-balancing mechanisms.

3.7. Reducing the Impact of Congestion Detection

DCNs incorporate high-bandwidth delay product paths throughout the data center. While there are paths that are primarily used by server nodes for east–west communication, they are also affected by DDoS attacks coming from the public network, which temporarily fill up the small (4–16 MB) shared buffers in intermediate network switches. This results in a variation in RTT on the east–west paths as well, which directly impacts TCP performance and thus service responsiveness. In addition to the internal congestion control of TCP, Explicit Congestion Notification (ECN), defined in RFC 3168 [

50], enables switches to notify their connected nodes about the presence of congestion without informing them of its extent. Using ECN, even a light congestion can lead to a significant TCP throughput decline. Data Center TCP enhances the effectiveness of ECN by estimating the amount of data affected by the congestion, and sets the congestion window based on this estimate.
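The difference between the two reactions can be sketched in a few lines. This is an illustrative model, assuming the window update rule commonly described for Data Center TCP (a running estimate `alpha` of the marked fraction, with the gain `g = 1/16` as an assumed default); the function names are hypothetical and the code is not a transport implementation.

```python
def dctcp_alpha(alpha: float, marked: int, total: int, g: float = 1 / 16) -> float:
    """Update the running estimate of the fraction of ECN-marked packets."""
    frac = marked / total if total else 0.0
    return (1 - g) * alpha + g * frac


def classic_ecn_cwnd(cwnd: float, marked: int) -> float:
    """Classic ECN reaction: any mark in the window halves the congestion window."""
    return cwnd / 2 if marked > 0 else cwnd


def dctcp_cwnd(cwnd: float, alpha: float, marked: int) -> float:
    """Data Center TCP reaction: shrink the window in proportion to the estimate."""
    return cwnd * (1 - alpha / 2) if marked > 0 else cwnd


if __name__ == "__main__":
    cwnd = 100.0
    # Light congestion: 1 of 16 packets in the last window carried a mark.
    alpha = dctcp_alpha(0.0, marked=1, total=16)
    print("classic ECN:", classic_ecn_cwnd(cwnd, marked=1))
    print("DCTCP      :", dctcp_cwnd(cwnd, alpha, marked=1))
```

With a single marked packet the classic reaction halves the window, whereas the proportional rule reduces it only marginally; when every packet is marked persistently, `alpha` approaches 1 and the two reactions coincide.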

3.8. Lessons Learned

As DCNs have been consolidating into larger and larger networks, east–west traffic has become dominant over north–south. More and more services have moved to the cloud. It has become easier to build attack potential inside the DMZ. There are multiple layers of anonymity between the owner of the DCN infrastructure and the malicious actors. Most of the time, only the north–south links of the DCN are monitored for DDoS traffic. In a few years, we might hit ground zero again, when targeted east–west DDoS will be available on demand.

The primary QoS management goal in a data center network is to avoid the exploitation of resources, which are directly impacted by DDoS attacks on the cloud infrastructure. Indirect impacts—such as loss of profitability, client trust, and effects on brand prestige—should also be considered when calculating mitigation costs. Attackers are usually considered external to the cloud environment, but they can also be internal, without their intention or knowledge, when the DMZ is compromised.

Although brute force attacks against network queues are the most common, the attacks themselves could target different areas of the cloud setup, such as the hypervisors, virtual machines, or even the infrastructure control itself.

In order to avoid network congestion, many signaling protocols or protocol extensions have been developed in the past. The most widespread solution is the Explicit Congestion Notification (ECN) protocol defined in RFC 3168 [

50], and refined in its latest update and relaxation, RFC 8311 [

51]. However, ECN is ineffective in the case of light congestion events, since even these can result in serious TCP throughput degradation. Accordingly, Data Center TCP (DC-TCP) is a superior solution tailored to the requirements of data center networking. Besides the network and transport layer avoidance techniques, many services implement QoS protection at the application level by using specific load-balancing methods. While DDoS attacks target a specific server host or group of hosts, other co-hosted virtual or physical servers may also be affected. There is a direct connection between DDoS attacks and the billing of virtual resources [

52].

4. Supporting DDoS Mitigation with Kernel and HW Level Acceleration

The detection of DDoS attacks is traditionally conducted by software running on CPUs, and recently, partially by GPUs for AI/ML models. The computational demands of real-time DDoS detection stem from the need to process massive packet volumes at line rates (e.g., 300 Mpps for 100 Gbps links with 64-byte packets). Traditional CPU-based systems, even with multi-core architectures, struggle to scale due to the von Neumann bottleneck, where memory access and sequential processing limit throughput. For instance, software-based detection using tools like Snort requires multiple CPU cores to handle 10 Gbps, often exceeding 50% CPU utilization for basic packet inspection [

53].
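The packet rates quoted above follow from simple arithmetic on the Ethernet frame size. The sketch below assumes the standard 20 bytes of per-frame overhead on the wire (8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap); the function name is illustrative. Under this assumption a 100 Gbps link carries roughly 149 Mpps of 64-byte frames per direction, so a figure of about 300 Mpps is consistent with counting both directions of a full-duplex link. That reading is an assumption, not something the text states.

```python
ETH_OVERHEAD_BYTES = 20  # preamble + SFD (8 bytes) and inter-frame gap (12 bytes)


def line_rate_pps(link_bps: float, frame_bytes: int = 64) -> float:
    """Maximum packets per second on one direction of an Ethernet link."""
    bits_on_wire = (frame_bytes + ETH_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


if __name__ == "__main__":
    one_way = line_rate_pps(100e9)
    print(f"64-byte frames, one direction : {one_way / 1e6:.1f} Mpps")
    print(f"64-byte frames, both directions: {2 * one_way / 1e6:.1f} Mpps")
    print(f"per-packet time budget         : {1e9 / one_way:.2f} ns")
```

The per-packet time budget of a few nanoseconds is what makes sequential, memory-bound processing on general-purpose CPUs difficult at these rates.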

As part of modern IDS, AI/ML models trained on network traffic patterns have emerged as a powerful tool, in general [

54], and also specialized for detecting sophisticated and evolving DDoS attacks [

55,

56]. These models can analyze high-dimensional data, identify subtle anomalies, and adapt to novel attack signatures far more efficiently than traditional rule-based systems. However, real-time detection at scale requires significant computational resources. This is where hardware acceleration—through GPUs, FPGAs, and dedicated network processors—plays an important role. By offloading AI inference tasks to specialized hardware, detection systems can maintain high throughput and low latency even under heavy load, making them viable for deployment at network edges or within core infrastructure.

Low-latency detection is essential to mitigate DDoS attacks before they disrupt services. Latency in detection includes packet processing time, decision-making, and mitigation activation. Software-based systems often introduce delays of 10–100 ms due to context switching and queuing [

53]. Hardware and kernel-bypass solutions aim to reduce this to microsecond or millisecond ranges.

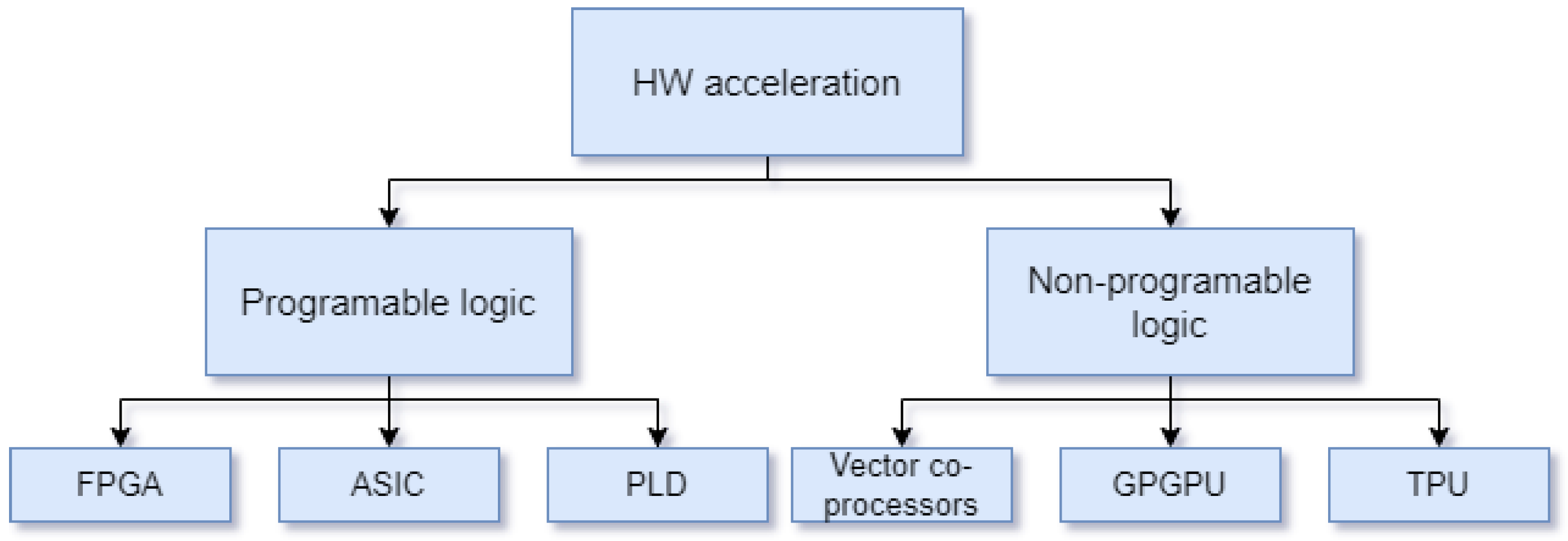

This tradition has two main offshoots: hardware-based (FPGA, ASIC, GPGPU, see

Figure 4), and Kernel bypass acceleration. These two options are implemented not as stand-alone systems but rather heterogeneous systems, where vital, compute-intensive tasks are offloaded to DPDK, eBPF, or HW engines, but still, most functions like management, UI, and logging run on a regular CPU.

As we discussed in

Section 3, modern cloud services rely on high-density data centers where computational and storage elements are interconnected with high-speed (10–100 Gbps) links and networking devices. Typically, these data centers are connected to the public Internet with multiple 100/400 Gbps links. Consequently, there has been a serious industrial effort to use hardware and ML for scaling up the performance of the intrusion detection tasks to meet the security requirements of large data centers.

Although many successful hardware-based industrial solutions have been developed, hardware-based intrusion detection receives little research attention. The trade-off is twofold: (1) the main advantages of hardware are performance and low latency; (2) its main drawback is the extended development time compared with a software implementation, which makes testing novel methods or algorithms impractical. Hardware is not only a way of implementation, but also a way of thinking. Hardware scales much better than software; many methods that are feasible in hardware cannot reach even semi-decent performance on CPUs.

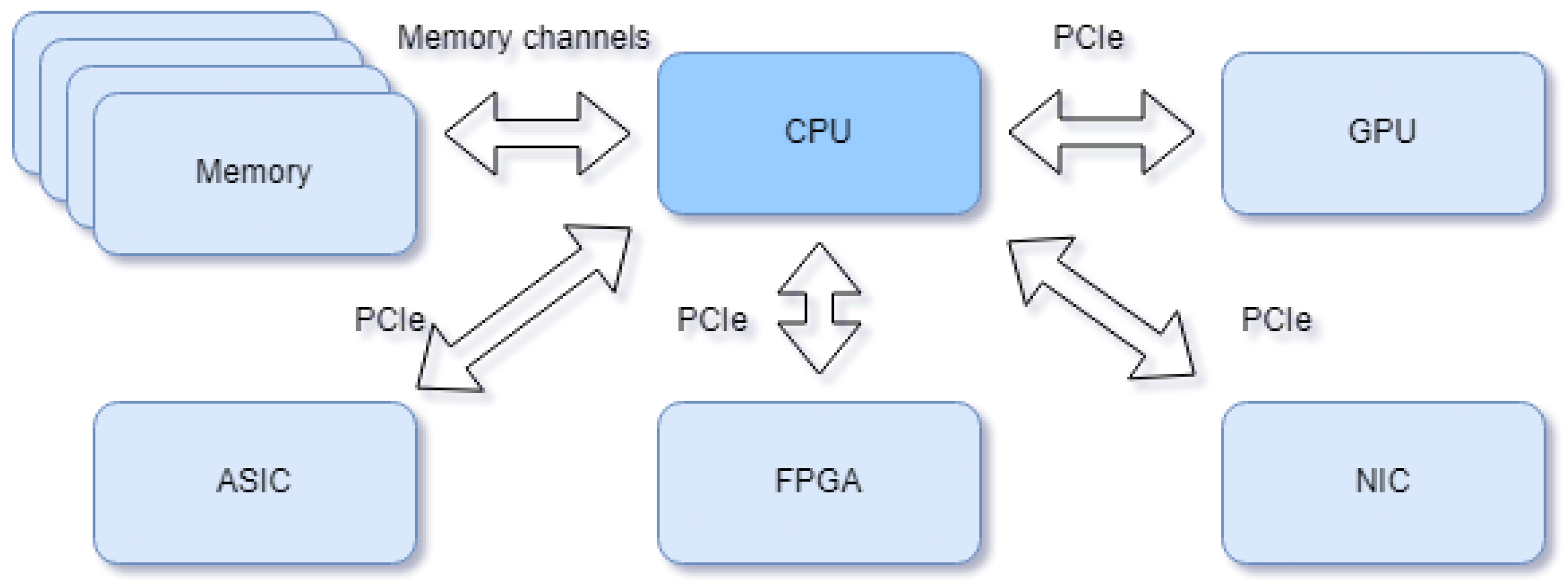

Developers have made a serious effort to have the next-generation architecture be based on the high-performance computing heterogeneous system paradigm, see

Figure 5. In the heterogeneous paradigm, a system is not implemented for an FPGA, ASIC, or CPU, but each subsystem is implemented for the medium that is most beneficial for the target function. This paradigm makes the development of complex systems more resource-efficient and robust. The Xilinx Versal ACAP is an excellent example of what the next generation may look like.

4.1. DDoS Acceleration with ASIC and FPGA

In this section, we will showcase how custom ASICs, FPGAs, and smart NICs are applied to support low-latency detection and mitigation. First, let us discuss the least relevant of the three, ASICs. ASICs that can handle the required traffic volume become prohibitively costly. FPGAs can also do what ASICs do, but at a fraction of the cost, for most manufacturing volume. For example, even for Microsoft, it was more economical to use FPGAs instead of ASICs for Project Catapult and Project Brainwave. A single FPGA chip is capable of processing 10 million packets per second in real-time without loss. Achieving equivalent throughput in software necessitates the utilization of multiple CPU cores.

Chao et al. [

57] propose a novel ASIC hardware that implements the critical functionalities of cybersecurity. These key issues are intrusion detection, firewalling, DDoS detection/mitigation, encryption and decryption. This proposed ASIC can operate at a line rate of 10 Gbps.

Commercial NICs have evolved considerably in recent years; they now offer features that were available only in FPGAs a few years ago. Intel E810 NICs, for example, offer N-tuple lookup and action execution, L5+ parsing, and deep header inspection. High-throughput NICs are also extremely cheap compared with the other options. However, there is very little research on how these new resources can be utilized.

FPGA is currently the most researched and used accelerator of the three options (see

Table 2). FPGAs remove many of the limitations of classical processors and open up considerable algorithmic opportunities.

Kuka et al. propose a method to filter the malicious traffic of reflection-based DDoS attacks from high-speed links [

60]. This method was implemented for an FPGA and tested by the authors. A very similar method was published by the authors of this survey a year earlier in [

59,

61,

62].

The authors of this survey propose a novel FPGA accelerated architecture, called NEDD, to detect DDoS attacks with high certainty and low reaction-time [

59,

61]. The NEDD architecture uses the massive parallelization capabilities of the FPGAs to parse and process every packet on the network interface for up to 100 Gbps per interface and 300 Mpps. The processing of each packet enables millisecond range detection times [

59] and a very low false detection rate [

61].

Hoque et al. [

58] propose a correlation-based analysis method, NaHiD, which correlates network objects to each other to detect DDoS attacks. This method is implemented using an FPGA platform to accelerate the decision of the NaHiD; by using HW accelerators, they achieve microsecond range decision time, not on raw traffic, but on already extracted objects.

4.2. Deployment Scenarios

Deployment scenarios vary based on infrastructure scale, budget, and performance requirements. The choice of acceleration method impacts feasibility and effectiveness.

Data Centers: Large-scale data centers (e.g., AWS, Google) handling 100–400 Gbps links require FPGA or eBPF/DPDK solutions for real-time detection. FPGAs are deployed at network edges for inline processing, as in Microsoft’s Project Catapult, where they handle 40–100 Gbps with sub-millisecond latency [

63]. eBPF is preferred for cloud-native environments due to its integration with Kubernetes and scalability with virtual machines. However, eBPF fails in scenarios requiring deep packet analysis of small packets (e.g., 64-byte UDP floods), where FPGAs excel.

Enterprise Networks: Medium-sized enterprises with 10–40 Gbps links often use smart NICs with eBPF/DPDK for cost-effective mitigation. Intel E810 NICs, for instance, support DPI and flow-based filtering, achieving 10 Gbps with low latency [

53]. FPGAs are less common due to high initial costs (e.g.,

–50,000 per FPGA board).

IoT and Edge Networks: IoT environments with constrained devices rely on lightweight eBPF implementations for basic filtering, as full DPI is computationally infeasible. FPGAs are rarely used due to power and cost constraints, but smart NICs with partial offloading are emerging as a solution for edge [

64].

4.3. Concluding Remarks and Lessons Learned

The detection and mitigation of DDoS attacks are among the more performance-sensitive network tasks because data centers and internet service providers have traffic in the range of 100–1000 Gbps. This traffic must be parsed and processed to decide on a packet-by-packet basis whether it is malicious. This packet-by-packet decision takes a lot of processing power, which in many cases cannot be economically provided by Neumann–Harvard architecture CPUs, not even with hardware-adjacent frameworks like DPDK or XDP. Hardware acceleration provides an excellent opportunity to increase the throughput of all DDoS detection methods.

In

Table 3, we collected the acceleration methods of the previous two sections and compared their inherent characteristics. We felt that a direct comparison of the proposed scientific articles would be extremely unfair because of the vast differences in the proposed feature sets, use cases (e.g., data center vs. IoT), requirements, and times of publication. Our comparison aligns quite well with the industrial trends; traditional user-space software and ASIC-based mitigation have become extreme niches for general-purpose DDoS mitigation. Most key stakeholders are using eBPF, DPDK, or FPGA-based solutions. Currently, eBPF seems to be the number one solution, thanks to its flexibility, relatively fast development cycle, and great synergy with cloud infrastructure (it can be scaled together with cloud resources). The main limitation of eBPF/DPDK is line-rate processing of very small (64-byte) packets in scenarios where NIC n-tuple filters or BGP FlowSpec pre-filtering cannot be used; this is the area where FPGAs shine.

The authors of this article recommend eBPF as the go-to acceleration method for most use cases. Traditional software should be used for quick prototyping and testing novel concepts, and environments where eBPF is not available. FPGA should be used where there is a need to meet the most stringent QoS requirements and complementary cloud scaling is not available, or for mitigation algorithms that scale poorly with CPUs.

5. The Characteristics of DDoS Mitigation

Many studies only concentrate on accurate detection and false detection rates. However, the DDoS detection process has many more similarly important characteristics. This section will discuss the common characteristics of DDoS detection, like detection and mitigation time, false detection rate, and the ability to detect day 0 attacks.

5.1. False Detection

The basic principle of DDoS detection is that regular and DDoS traffic are classified as attacks and non-attacks; see

Table 4. A false detection is an event where network traffic is miscategorized during the DDoS detection/identification process. There are two sub-types of false detection: (I) a DDoS attack is not detected as an attack, which is called a false negative detection; (II) regular user traffic is categorized as DDoS, which is called a false positive detection. Generally speaking, false positives are more harmful than false negatives for two reasons: (a) mitigation based on a false positive can cause a partial or complete denial of service for the application whose packets were categorized as an attack, whereas in the case of a false negative, the attack will likely achieve only a partial denial of service or QoS drop, because high-throughput attacks, which can achieve complete DoS, are generally easier to identify; (b) most network traffic is periodic, so a false positive is likely to recur regularly, whereas an undetected attack will most likely be a one-of-a-kind event. However, as a security solution provider, we have observed attacks that recur periodically for weeks, months, and years against the same target.
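The two sub-types of false detection map directly onto the usual confusion-matrix rates. The sketch below is illustrative: the function name and the sample counts are made up for the example and do not come from any cited measurement.

```python
def false_detection_rates(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Compute false positive and false negative rates from detection counts.

    tp: attack traffic flagged as attack      fn: attack traffic missed
    fp: regular traffic flagged as attack     tn: regular traffic left alone
    """
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return {"false_positive_rate": fpr, "false_negative_rate": fnr}


if __name__ == "__main__":
    # Hypothetical counts: 100 attack events and 1000 regular events.
    rates = false_detection_rates(tp=90, fp=5, tn=995, fn=10)
    for name, value in rates.items():
        print(f"{name}: {value:.3%}")
```

Because regular traffic usually outnumbers attack traffic by a wide margin, even a small false positive rate can affect many legitimate flows in absolute terms.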

Making false decisions (see

Table 4) is easier than ever because there are a growing number of high-throughput, widespread applications, like VPN nodes and encrypted corporate tunnels, whose traffic characteristics resemble DDoS traffic much more than regular network traffic. At the same time, DDoS attacks have become much more sophisticated, blurring the line between DDoS and regular traffic.

False detection is probably the most researched challenge the industry is facing today [

23] because (I) DDoS attacks became so numerous and expert work hours so expensive that manual decision-making became infeasible for many; (II) there is a very high cost associated with false detection; (III) automatic decision-making is still much more prone to false detection than a good security expert.

Table 5 summarizes the discussed research on the detection characteristics.

There are two typical solutions to circumvent the false detection problem [

65]: (I) Tri-state logic, in which events are annotated as attack/suspect/normal, is a convenient solution for avoiding false detection. The usual workflow of tri-state logic is that high-confidence attacks are automatically mitigated, suspects are forwarded for post-processing or manual decision, and normal traffic is ignored. (II) Rate limitation is another good tool to mitigate the effect of false positive detection. By rate-limiting specific flows, the effect of false mitigation can be reduced from complete denial-of-service to QoS drop. The congestion control of normal traffic will adapt to the limited bandwidth, while DDoS attacks are reduced to a manageable volume.
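The tri-state workflow described above can be sketched as a two-threshold decision. This is a minimal illustration, assuming the detector emits a confidence score in [0, 1]; the threshold values and all names are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    NORMAL = "normal"
    SUSPECT = "suspect"
    ATTACK = "attack"


def classify(score: float, suspect_threshold: float = 0.5,
             attack_threshold: float = 0.9) -> Verdict:
    """Map a detector confidence score to one of three states."""
    if score >= attack_threshold:
        return Verdict.ATTACK
    if score >= suspect_threshold:
        return Verdict.SUSPECT
    return Verdict.NORMAL


def action(verdict: Verdict) -> str:
    """Return the workflow step associated with each state."""
    return {
        Verdict.ATTACK: "mitigate automatically",
        Verdict.SUSPECT: "forward for post-processing or manual decision",
        Verdict.NORMAL: "ignore",
    }[verdict]


if __name__ == "__main__":
    for score in (0.97, 0.70, 0.10):
        verdict = classify(score)
        print(f"score={score:.2f} -> {verdict.value}: {action(verdict)}")
```

Widening the gap between the two thresholds trades automatic coverage for fewer false mitigations, since more events land in the suspect state.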

In “Low-Rate DDoS Attacks Detection and Traceback by Using New Information Metrics” [

66] Xiang et al. showcase the problem mentioned above, how some application-specific network traffic and low-rate DDoS attacks evolved to resemble each other, making correct decisions harder than ever. They propose a generalized entropy metric that can accelerate and fortify the detection of DDoS attacks.
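To illustrate what a generalized entropy metric measures, the sketch below computes the order-α (Rényi) entropy of a traffic feature distribution, which reduces to Shannon entropy as α approaches 1. Using the Rényi form here is an assumption made for illustration; the function names and the sample distributions are hypothetical and do not reproduce the cited method.

```python
import math
from collections import Counter


def distribution(items: list[str]) -> list[float]:
    """Empirical probability distribution of a traffic feature (e.g. source IP)."""
    counts = Counter(items)
    total = sum(counts.values())
    return [c / total for c in counts.values()]


def generalized_entropy(probs: list[float], alpha: float) -> float:
    """Order-alpha entropy in bits; alpha = 1 gives the Shannon entropy."""
    probs = [p for p in probs if p > 0]
    if abs(alpha - 1.0) < 1e-9:
        return -sum(p * math.log2(p) for p in probs)
    return math.log2(sum(p ** alpha for p in probs)) / (1 - alpha)


if __name__ == "__main__":
    balanced = [0.25, 0.25, 0.25, 0.25]
    skewed = [0.97, 0.01, 0.01, 0.01]   # one value dominates the feature
    for alpha in (1.0, 2.0, 5.0):
        print(f"alpha={alpha}: balanced={generalized_entropy(balanced, alpha):.4f} "
              f"skewed={generalized_entropy(skewed, alpha):.4f}")
```

Higher orders weight the dominant values more strongly, so the gap between a balanced and a concentrated distribution can be tuned through α.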

In “Modelling Behavioural Dynamics for Asymmetric Application Layer DDoS Detection” [

67] Praseed et al. extensively discuss the false positive detection problem in the case of application layer DDoS attacks. They demonstrate how close HTTP DDoS attacks are to user traffic. They propose a new method based on Probabilistic Timed Automata (PTA) and a suspicion scoring mechanism to achieve lower false detection than the current SotA while having a low overhead on the web server.

5.2. Detection and Mitigation Time

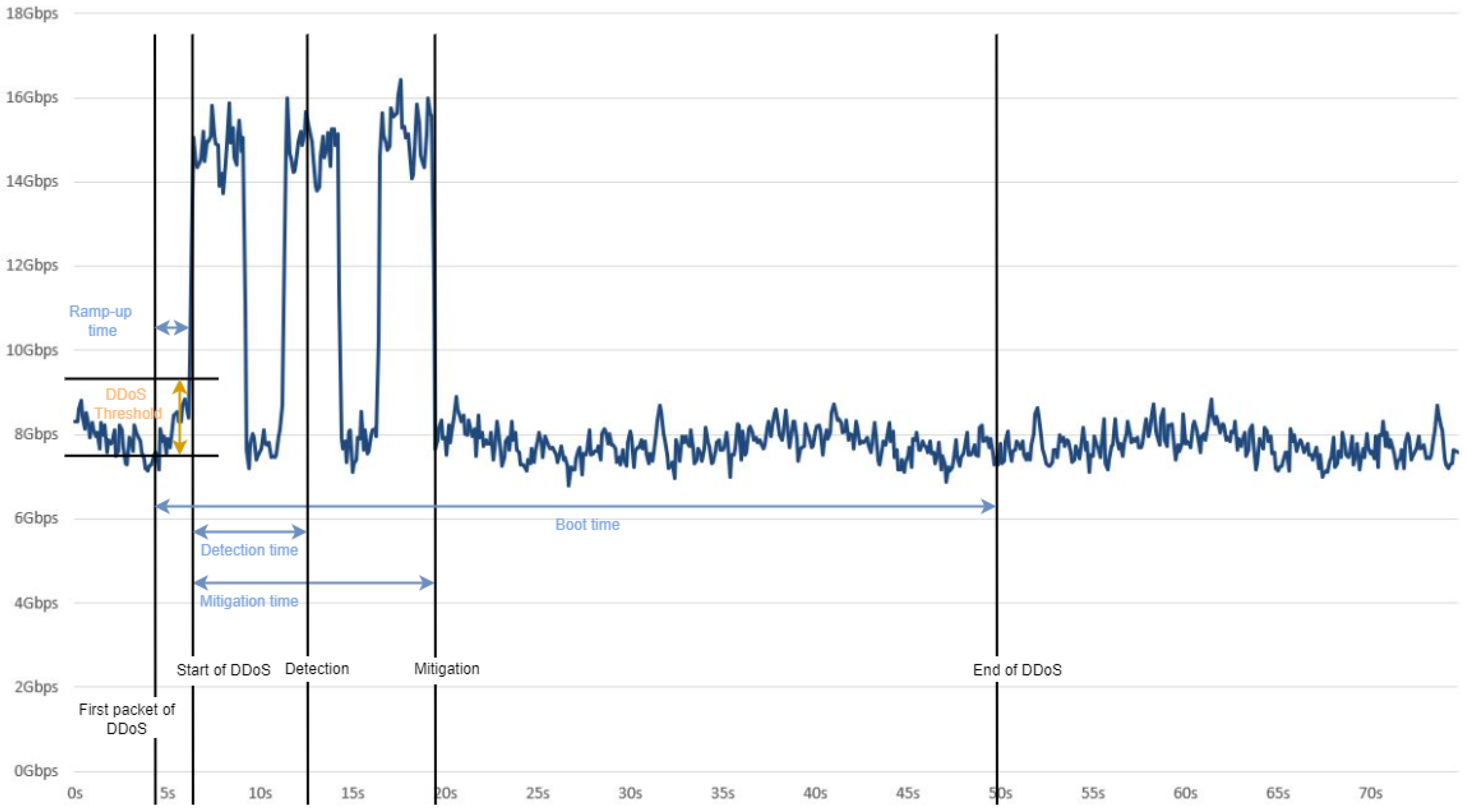

Detection time is the elapsed time from the start of the attack to the detection of the attack. Mitigation time is the time from the start of the attack to the mitigation of the attack, see

Figure 6. Another essential characteristic of DDoS detection is the detection and mitigation time because (I) if the attack is shorter than the detection time, then there is a high chance that the attack will go undetected; (II) in the case of many real-time applications, like high-frequency trading, streaming, and online gaming, if DDoS attacks are not mitigated in a very short time, critical disruption can occur. Many analytic- and low-overhead-based methods have severe problems with detection time.

The authors of this survey in “Detecting DDoS attacks within milliseconds by using FPGA-based hardware acceleration” [

59], and “Low-reaction time FPGA-based DDoS detector” [

61] propose a novel FPGA-accelerated architecture, called NEDD, to detect DDoS attacks with high certainty and low reaction time. The NEDD architecture uses the massive parallelization capabilities of the FPGAs to parse and process every packet on the network interface for up to 100 Gbps per interface and 300 Mpps. The processing of each packet enables millisecond range detection times [

59] and a very low false detection rate [

61].

5.3. The Ability to Detect Day 0 New Attacks

A critical characteristic of DDoS detection, which studies do not discuss much, is the ability to detect day 0 new attacks. Many studies use datasets from the 1990s to verify their solutions, which is a severe hazard, since today’s internet looks nothing like the internet of that era. DDoS attacks have also completely transformed since the 1990s, so many researchers validate their methods against completely outdated data. For example, the Slowloris attack was a threat to Apache web servers between 2007 (the year of the inception of the attack tool) and 2010 (when Apache mitigated the vulnerability); during this period, no IEEE papers used Slowloris to test their solutions. Since 2016, there have been ten papers that seriously discussed this type of attack.

Detecting day 0 attacks is almost impossible for a rule-based DDoS detector because this kind of detector has the known attacks hardwired as rules; new rules must be added for new attacks. This is the problem with supervised learning-based DDoS detection as well. Heuristics-based and unsupervised learning fare much better against day 0 attacks.

In “Detection of DoS attack and Zero Day Threat with SIEM” [

68] Sornalakshmi argues that heuristic algorithms running on system logs are enough to detect day 0 attacks. The author proposes nine heuristics, which check whether the server is being exploited. This paper showcases the main problem of heuristic-based systems: the nine heuristics are rather simplistic and do not defend against many common DDoS techniques. For example, “rule 3” presumes that the DDoS tool cannot spoof browser agent information (or that the attack comes from a DDoS tool rather than an infected botnet), and “rule 4” depends on unspoofed source addresses.

In “Coping with 0-Day Attacks through Unsupervised Network Intrusion Detection” [

69] Casas et al. demonstrate an unsupervised learning method based on clustering algorithms and outlier detection (UNIDS). They report that the UNIDS method is three times more efficient in detecting day 0 attacks than traditional detection methods. However, they use an extremely small dataset to validate their hypothesis.

Table 5.

Overview of articles on the topic of detection characteristics.

| Author(s) | Reference | Characteristic | Novelty | Results |
|---|---|---|---|---|
| Xiang et al. | [66] | False detection | Method | Low false detection, low detection time |
| Praseed et al. | [67] | False detection | Method | Low false detection, low overhead |
| Nagy et al. | [59] | Detection time | Architecture | Millisecond-range detection time, detection of hit-and-run attacks |
| Casas et al. | [69] | Day 0 detection | Method | Significant improvement over the SotA in day 0 attack detection efficiency |

6. Mitigation

Mitigation is the process or result of making something less severe, dangerous, harsh, or damaging. In the context of DDoS attacks, it prevents some or all packets of the incoming attack from passing through the actuator and reduces the quality of service drop. In this section, we try to answer three crucial questions:

What can be considered a successful mitigation, and what are its metrics?

What is the process of mitigation of DDoS attacks?

What are the State-of-the-Art methods of mitigation?

Regarding a comprehensive survey, Agrawal et al. provide a state-of-the-art survey on DDoS detection and mitigation in the cloud environment in “Defense Mechanisms Against DDoS Attacks in a Cloud Computing Environment: State-of-the-Art and Research Challenges” [

70].

6.1. What Does Successful Mitigation Mean?

The first question can be approached from two different perspectives.

6.1.1. Security Provider’s Perspective

From the perspective of the security provider, we count the total number of packets of the attack and the number of attack packets that pass through the actuator. This is an effective metric to benchmark the quality of the security equipment. We can directly compare the performance of various devices by this metric. As an example, if solution “A” on average lets through 4% of all malicious packets and solution “B” lets through 1% of all malicious packets, then solution “B” should be better than “A”. Nevertheless, is this a sufficient metric to describe mitigation? The answer is no, and here comes the other perspective.

6.1.2. Security Solution Customer’s Perspective

Whether a mitigation is successful can also be judged from the perspective of the protected application or its users. This is the responsibility of the customers, who want to provide the same QoS to their clients during a DDoS attack as without it. So, the second metric is the drop in QoS during an attack. This is much more complex to calculate than the security provider’s metric. However, it is much closer to what a potential user would be interested in.

In their paper, Cao et al. [

71] demonstrate how the commonly used solution of redirecting all traffic to well-equipped CDNs (Content Delivery Networks) like Akamai and using their DDoS mitigation services might be insufficient. They also propose a solution that helps the service provider work with their ISP to mitigate DDoS attacks.

In “Using whitelisting to mitigate DDoS attacks on critical Internet sites” [

72] Yoon et al. propose a fundamentally different approach to mitigate the effect of DDoS attacks by positive filtering. In their scheme, only the connections that are on a whitelist are permitted through the firewall of the system; all other incoming traffic is blocked. This is an inspiring way of thinking, since CAM (content-addressable memory) LUT (lookup table) performance has greatly improved in the past decade. This method also has a very low false negative ratio because only trusted sources are let through the firewall, and it can be combined with most existing mitigation architectures. The problem with this approach is twofold: (I) the whitelist must be maintained dynamically, which is more computing-intensive than maintaining a blacklist for most applications; (II) the members of the whitelist are explicitly trusted, so if the attacker learns the member list and can forge IP source addresses (IP spoofing), they can deliver 100% of the malicious packets to the target. Their experiments show great promise in using this method to mitigate attacks successfully.
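Positive filtering can be illustrated with a small source-address whitelist. The sketch below is an assumption-laden illustration: the class name and the documentation prefixes are hypothetical, and the linear scan stands in for the CAM lookup that a hardware implementation would use.

```python
import ipaddress


class Whitelist:
    """Permit only packets whose source address falls inside a trusted prefix."""

    def __init__(self, prefixes: list[str]) -> None:
        self.networks = [ipaddress.ip_network(p) for p in prefixes]

    def permits(self, src_ip: str) -> bool:
        """Return True if the source address matches any whitelisted prefix."""
        addr = ipaddress.ip_address(src_ip)
        return any(addr in net for net in self.networks)


if __name__ == "__main__":
    # Hypothetical trusted sources (RFC 5737 documentation ranges).
    wl = Whitelist(["192.0.2.0/24", "198.51.100.7/32"])
    for src in ("192.0.2.10", "198.51.100.7", "203.0.113.5"):
        print(src, "->", "pass" if wl.permits(src) else "drop")
```

The check relies on the source address alone, so a packet that spoofs a whitelisted address passes it; this is the second weakness noted above.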

Using the flow-based application data to automatically create packet-based blacklist rules for firewalls is an interesting, novel method proposed by Simpson et al. in [

73]. Harada et al. propose a similar method for IoT networks in “Quick Suppression of DDoS Attacks by Frame Priority Control in IoT Backhaul With Construction of Mirai-Based Attacks” [

74]: a DDoS attack suppression system that minimizes the discarding of normal traffic from non-infected Internet of Things (IoT) devices by prioritizing frames within the network. Their experimental results demonstrate that the system effectively prevented normal traffic loss within seconds during attack scenarios, including a Mirai-based DDoS attack, and autonomously blocked malicious traffic at network entry points.

6.1.3. A Simple Example of Mitigation Success

Let us demonstrate the difference between the two approaches through a simple example, illustrated in Figure 7. DCN “A” is leasing two 100 GbE links from its local ISP (Internet Service Provider). It has 50 Gbps of user traffic and is attacked by a 350 Gbps DDoS attack. Altogether, this is 400 Gbps, whereas the leased line is only 200 Gbps. Let us see what happens.

Note that in this example, 1% of dropped user traffic counts as a 1% loss of quality of service, and 1 Gbps DDoS reaching the DCN causes a 0.5% loss of quality of service.

Solution “A” guarantees that 99% of the attack packets never reach the DCN, and it is installed right before the core routers of the DCN. Solution “B” is offered by the ISP and guarantees that 96% of the attack packets never get to the DCN, and it is installed in the ISP’s network.

Assuming a random packet drop in the ISP network with solution “A”, 175 Gbps of attack traffic and 25 Gbps of user traffic reach the actuator (altogether 200 Gbps). Due to the mitigation capability, merely 1% (1.75 Gbps) of this attack traffic enters the DCN. However, because of the packet drops that already occurred in the ISP network due to the over-subscription of the 2 × 100 GbE link, merely 50% of the user traffic goes through; hence, the total QoS drop is 50.875% (i.e., 50% from packet drop and 0.875% from the 1.75 Gbps of malicious traffic reaching the inside of the DCN).

Using solution “B”, 350 Gbps of attack traffic and 50 Gbps of user traffic reach the actuator, which mitigates 336 Gbps of attack traffic; 50 Gbps of user traffic and 14 Gbps of attack traffic enter the DCN, resulting in a 7% drop in QoS.
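The arithmetic of this example can be reproduced with a short script. It is a sketch of the toy model only: the function name `qos_loss` and its parameters are hypothetical, the link is assumed to drop user and attack packets in equal proportion when over-subscribed, and the penalty of 0.5% per Gbps of attack traffic inside the DCN is the rule stated above.

```python
def qos_loss(user_gbps, attack_gbps, link_gbps, block_ratio, upstream):
    """Return the QoS loss (in percent) of the toy model.

    upstream=True  : the actuator sits in the ISP network, before the link.
    upstream=False : the actuator sits at the DCN edge, after the link.
    """
    if upstream:
        # Attack traffic is filtered first; only the remainder loads the link.
        attack_offered = attack_gbps * (1 - block_ratio)
        post_link_block = 0.0
    else:
        # The full attack loads the link; filtering happens afterwards.
        attack_offered = attack_gbps
        post_link_block = block_ratio

    offered = user_gbps + attack_offered
    # Random drop: every packet survives the link with the same probability.
    pass_ratio = min(1.0, link_gbps / offered)

    user_in = user_gbps * pass_ratio
    attack_in = attack_offered * pass_ratio * (1 - post_link_block)

    user_loss_pct = 100.0 * (1 - user_in / user_gbps)  # 1% dropped = 1% loss
    attack_penalty_pct = 0.5 * attack_in               # 0.5% per Gbps inside
    return user_loss_pct + attack_penalty_pct


loss_a = qos_loss(50, 350, 200, block_ratio=0.99, upstream=False)
loss_b = qos_loss(50, 350, 200, block_ratio=0.96, upstream=True)
```

Although solution “A” blocks a larger share of the attack packets, `loss_a` is far higher than `loss_b` because the leased link is already saturated before the traffic reaches the actuator.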

6.2. The Process of DDoS Mitigation

For the second question, the answer is generally simple; it is mostly a standard process with the following steps:

Detection of the attack (see earlier sections);

Creation of mitigation rules, which are generally n-tuple matching rules;

Proliferation of mitigation rules, which have to be sent to every actuator in the system;

Validation of the mitigation;

Tuning of the mitigation rules based on feedback;

Removal of the mitigation rules.

There are several ways of handling attack traffic: (I) simply dropping every packet that matches the mitigation rules, (II) rate-limiting the matching traffic, or (III) encapsulating and forwarding the rule-matching traffic to a different mitigation system.
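The n-tuple matching rules and the three handling options above can be sketched as follows. This is an illustrative data model under our own assumptions: the names `Rule`, `Packet`, `Action`, and `decide`, as well as the example addresses, are hypothetical and do not correspond to any vendor's rule format.

```python
from dataclasses import dataclass
from enum import Enum
from ipaddress import ip_address, ip_network
from typing import Optional


class Action(Enum):
    DROP = "drop"              # (I) discard every matching packet
    RATE_LIMIT = "rate-limit"  # (II) police the matching traffic
    REDIRECT = "redirect"      # (III) tunnel to another mitigation system


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    proto: int   # IP protocol number (6 = TCP, 17 = UDP)
    sport: int
    dport: int


@dataclass(frozen=True)
class Rule:
    """5-tuple rule; a field left as None acts as a wildcard."""
    action: Action
    src_net: Optional[str] = None
    dst_net: Optional[str] = None
    proto: Optional[int] = None
    sport: Optional[int] = None
    dport: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        if self.src_net and ip_address(pkt.src) not in ip_network(self.src_net):
            return False
        if self.dst_net and ip_address(pkt.dst) not in ip_network(self.dst_net):
            return False
        if self.proto is not None and pkt.proto != self.proto:
            return False
        if self.sport is not None and pkt.sport != self.sport:
            return False
        if self.dport is not None and pkt.dport != self.dport:
            return False
        return True


def decide(rules, pkt):
    """Return the action of the first matching rule, or None to forward."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return None


# Hypothetical rule set: drop DNS reflection traffic towards one host and
# rate-limit HTTPS traffic towards the surrounding prefix.
RULES = [
    Rule(Action.DROP, dst_net="203.0.113.10/32", proto=17, sport=53),
    Rule(Action.RATE_LIMIT, dst_net="203.0.113.0/24", proto=6, dport=443),
]
```

First-match semantics are chosen here for simplicity; a real actuator may order or merge rules differently.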

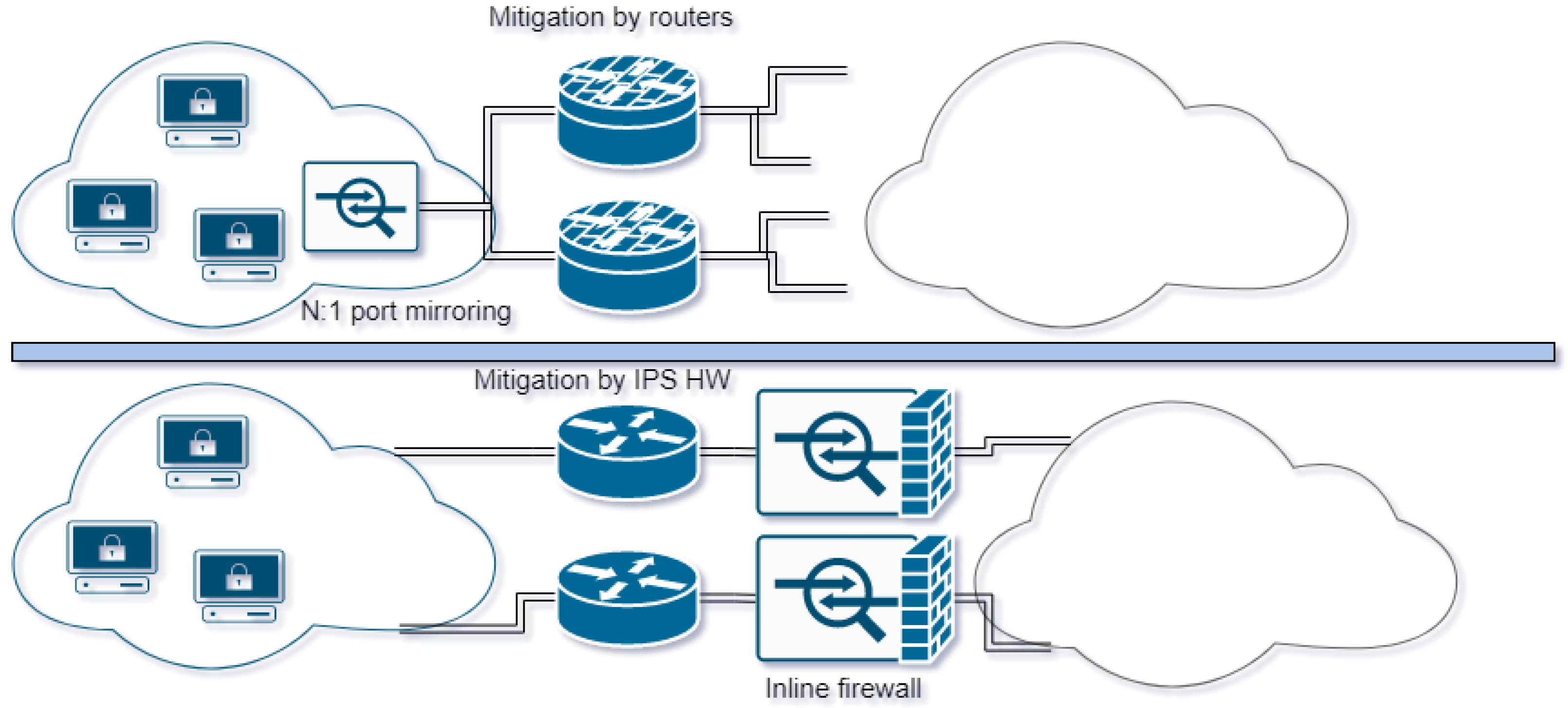

Two kinds of actuators are used for mitigation in modern systems, as shown by Figure 8:

Inline DDoS mitigation hardware/firewall. This actuator is straightforward: the operator installs a box between their infrastructure and their Internet provider; legitimate traffic passes through, and DDoS traffic is dropped or forwarded. This solution is generally deployed for small-to-medium users.

Network equipment as actuator. Mitigation is conducted by the network equipment itself, using switches and routers as DDoS mitigation actuators. This is a more interesting architecture for mitigation since there is no need for additional hardware, and it can be deployed without redundancy concerns. It is generally cheaper and simpler for big providers with hundreds or even thousands of physical links.

6.3. Scaling Cloud Resources to Mitigate DDoS

Furthermore, there is an actuator-less approach in the cloud environment, which allocates resources smartly to attacked customers. The proponents of this method argue that if we have a large enough resource pool, we can always allocate enough resources to the victims because there are few concurrent DDoS attacks.

In their paper entitled “Can We Beat DDoS Attacks in Clouds?”, Yu et al. [75] propose to conduct DDoS mitigation by dynamically allocating computing and networking resources to attacked systems. A DDoS attack achieves its goal by exhausting the resources of the target. Cloud environments have massive amounts of resources; by allocating excess resources to the attacked system, the provider mitigates the effects of the attack.

Liu et al. propose a novel DDoS mitigation system called MiddlePolice [76]. Based on a wide-spectrum survey of key potential victims, they identify three essential requirements that a new DDoS mitigation system must fulfill: (I) the system has to be deployable without extensive modification of the Internet, (II) it has to be independent of vendor- or protocol-specific traffic control policies, and (III) it has to solve the traffic-bypass vulnerability of existing cloud system service providers. They design and implement a discrete defense system, the mbox, which can be installed between the client and the ISP, providing a transparent defense layer.

As a rapid mitigation of cloud DDoS attacks, Somani et al. [77] propose a metric to quantify the resource usage of one unit of attack, the “resource utilization factor”. They measure the resource utilization factor of various attacks. Their mitigation method reduces the resource utilization factor of the measured attacks by tailoring the victim’s processes to consume minimal local resources under attack. It is not a standalone defense system but provides an always-on layer of mitigation, which keeps the victim’s service online until the more sophisticated mitigation measures are armed. By deploying this additional layer of defense, Somani et al. decreased the downtime of their test services by 95%.

6.4. Network Hardware as a DDoS Mitigation Tool

Several advanced concepts leverage network infrastructure as an actuator. Firstly, for most users, network infrastructure represents a heterogeneous system, typically comprising equipment from various vendors such as Cisco and Juniper, which requires consistent and homogeneous configuration. BGP FlowSpec, a standardized BGP-based protocol for DDoS mitigation, appears poised to address this challenge. BGP FlowSpec facilitates the dissemination of n-tuple matching-based DDoS mitigation rules across heterogeneous systems, making it particularly noteworthy due to its broad adoption by leading vendors in both networking equipment and Intrusion Detection Systems (IDS).

The second critical concern pertains to the finite resources of networking equipment, which must be allocated between DDoS mitigation efforts and essential networking functions. This limitation raises significant concerns that during a DDoS attack, the network equipment could become overwhelmed, impairing its ability to perform both mitigation and core networking tasks effectively.

In the context of statistical application fingerprinting for DDoS attack mitigation, Ahmed et al. [78] introduce a novel mitigation method based on the generation of per-application traffic fingerprints. These fingerprints are subsequently used to identify and filter out attacks that deviate from the established fingerprint patterns. The method demonstrated a 97% success rate in identifying attacks, with a misclassification rate of only 2.5%. This represents a significant advancement in the field, as comparable techniques typically achieve precision rates ranging from 80% to 90%, as reported by Hajimaghsoodi et al. [79].

6.5. Mitigation of Spoofed Attacks

Packet spoofing should theoretically have been eradicated by 2023, as all service providers are expected to filter their uplink traffic for spoofed packets. However, in practice, many DDoS attacks continue to exploit packet spoofing. Mitigating spoofed attacks presents a significant challenge because the true sender’s address is obscured, rendering traditional detection and mitigation techniques, such as reputation databases, ineffective. Additionally, most routing algorithms discard packets on a flow-fair basis, aiming to drop an equivalent number of packets from each flow. This approach often results in a higher rate of legitimate traffic being dropped compared with the spoofed DDoS traffic, where each packet may represent a unique flow [80,81].
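The flow-fairness effect described above can be illustrated with a small numerical sketch. We model flow-fair dropping as max-min fair sharing of a congested link, which is our simplifying assumption rather than the behavior of any specific router. The function names and the traffic mix (50 legitimate flows of 1 Gbps each and 35,000 spoofed flows of 0.01 Gbps each on a 200 Gbps link) are hypothetical.

```python
def max_min_fair(demands, capacity):
    """Max-min fair (water-filling) allocation of capacity among flows."""
    alloc = [0.0] * len(demands)
    remaining = capacity
    # Serve flows in order of increasing demand; each flow receives at most
    # an equal share of whatever capacity is still unallocated.
    order = sorted(range(len(demands)), key=lambda i: demands[i])
    for pos, i in enumerate(order):
        share = remaining / (len(order) - pos)
        alloc[i] = min(demands[i], share)
        remaining -= alloc[i]
    return alloc


def delivered_fraction(demands, alloc):
    """Fraction of the offered traffic that is actually delivered."""
    return sum(alloc) / sum(demands)


legit = [1.0] * 50           # 50 Gbps of user traffic in a few large flows
spoofed = [0.01] * 35_000    # 350 Gbps of attack traffic in tiny flows
alloc = max_min_fair(legit + spoofed, capacity=200.0)

legit_delivered = delivered_fraction(legit, alloc[:len(legit)])
spoofed_delivered = delivered_fraction(spoofed, alloc[len(legit):])
```

Because every flow receives the same share of the link, the few large legitimate flows lose nearly all of their traffic, while the many tiny spoofed flows keep more than half of theirs.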

Due to these complexities, the mitigation of spoofed attacks requires specialized approaches and should be treated as a distinct subject. This area has been relatively under-researched, and we have compiled the most significant contributions on this topic:

Kavisankar et al., in their work “Efficient SYN Spoofing Detection and Mitigation Scheme for DDoS Attack” [82], address the limitations of reputation-based attack detection methods against spoofed attacks. They propose enhancing trust-based filtering with a TCP probing method, demonstrating that TCP probing can effectively overcome the spoofing vulnerabilities inherent in traditional trust-based detection systems.

In another approach, Sahri et al. propose a collaborative spoofing detection and mitigation solution through an SDN-based looping authentication mechanism for DNS services. This method protects DNS servers from being exploited by spoofed requests, preventing their use as DDoS reflectors [83]. The CAuth method, developed using DNS and OpenFlow protocols without modification, leverages OpenFlow to authenticate each DNS request, offering a robust solution for blocking spoofed requests in OpenFlow-enabled networks. Cloud security researchers increasingly view collaborative mitigation as a promising strategy for combating DDoS attacks [84].

Maheshwari et al. introduce a novel adaptation of Hop Count Filtering (HCF) in their study [85]. HCF works by comparing the hop count of incoming packets with the expected distance based on the source IP address, filtering out packets where the two metrics do not align. They enhance this method by incorporating Round Trip Time (RTT) calculations, allowing them to filter out 99% of spoofed packets, provided sufficient computational resources are available.
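The basic hop-count check can be sketched as follows. This is a simplified illustration of plain HCF only and does not include the RTT extension of Maheshwari et al. The list of initial TTL values, the function names, the tolerance parameter, and the example table are our assumptions.

```python
# Assumed set of initial TTL values used by common operating systems.
COMMON_INITIAL_TTLS = (32, 64, 128, 255)


def infer_hop_count(observed_ttl):
    """Estimate the hops travelled from the TTL seen at the receiver.

    The initial TTL is guessed as the smallest common value that is not
    below the observed TTL.
    """
    if not 0 <= observed_ttl <= 255:
        raise ValueError("TTL must be in the range 0..255")
    for initial in COMMON_INITIAL_TTLS:
        if observed_ttl <= initial:
            return initial - observed_ttl


def is_probably_spoofed(src_ip, observed_ttl, hop_table, tolerance=0):
    """Flag a packet whose hop count disagrees with the learned value."""
    expected = hop_table.get(src_ip)
    if expected is None:
        # Unknown source: this sketch makes no judgement about it.
        return False
    return abs(infer_hop_count(observed_ttl) - expected) > tolerance


# Hypothetical table learned from earlier, verified connections.
HOP_TABLE = {"198.51.100.7": 12}
```

An attacker who forges the source address 198.51.100.7 generally cannot also match the TTL that genuine packets from that host arrive with, so the inferred hop count differs from the learned value and the packet is flagged.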

Furthermore, Alenezi et al. propose an innovative approach for autonomously and efficiently tracing the origin of DDoS packets, thereby determining whether the packets are spoofed and identifying the source of the attack [86]. Their method relies on probabilistic packet marking within an autonomous system, which reveals the originating AS without disclosing the full topology of the ISP. This approach represents a significant advancement over traditional probabilistic packet marking (PPM) techniques, though its benefits compared with ingress filtering of spoofed packets remain under discussion. Similar work has been conducted by Rajam et al. [87].

Overall, detecting and mitigating spoofed attacks remains a daunting challenge. Most techniques involve probing the source address to verify the legitimacy of the packet, which can be effective. However, fabricating a spoofed packet is significantly less resource-intensive than probing a source address. Given the vast number of potential IP addresses, it becomes evident that such methods are only feasible for mitigating attacks of limited scope.

6.6. Concluding Remarks and Lessons Learned

In this section, we examined effective strategies for mitigating DDoS attacks and explored the methodologies used to differentiate between malicious attack packets and legitimate network traffic (see Table 6). We provided a detailed discussion on the mitigation process and highlighted the significant challenges posed by packet spoofing, including its persistence in network environments and the methods available for its mitigation. Additionally, we identified key research gaps in the field, particularly in the areas of spoofed packet identification and the timely filtering of hit-and-run attacks.

In line with the criteria introduced in the Abstract, Table 7 and Table 8 provide a comparative assessment of FPGA-, software-, eBPF-, and AI-based mitigation methods. The tables illustrate how each approach performs against both quantitative metrics, such as mitigation latency, throughput, and accuracy, and qualitative considerations, including adaptability, integration potential, and operational overhead. This synthesis demonstrates that no single solution is universally optimal: while an FPGA offers unparalleled raw performance, it lacks flexibility, whereas AI excels in adaptability but requires significant resources. The proposed criteria, therefore, serve as a balanced framework for evaluating trade-offs and guiding the selection or combination of mitigation strategies suited to emerging DDoS threat profiles.

As a first observation, we have learned that defining success in DDoS mitigation is inherently multidimensional. Evaluating mitigation solely from the security provider’s perspective—by measuring the proportion of attack traffic blocked—can be misleading if it fails to preserve QoS for legitimate users. Instead, effective mitigation must balance two perspectives: packet-level blocking performance and the end-user experience. The examples shown illustrate that a solution blocking more attack traffic is not necessarily better if it results in higher user traffic loss due to suboptimal placement or insufficient provisioning. This underlines the importance of considering both network-level and application-level impacts when designing or deploying mitigation strategies.