Reinforcement Learning in Medical Imaging: Taxonomy, LLMs, and Clinical Challenges

Abstract

1. Introduction

1.1. Key Contributions

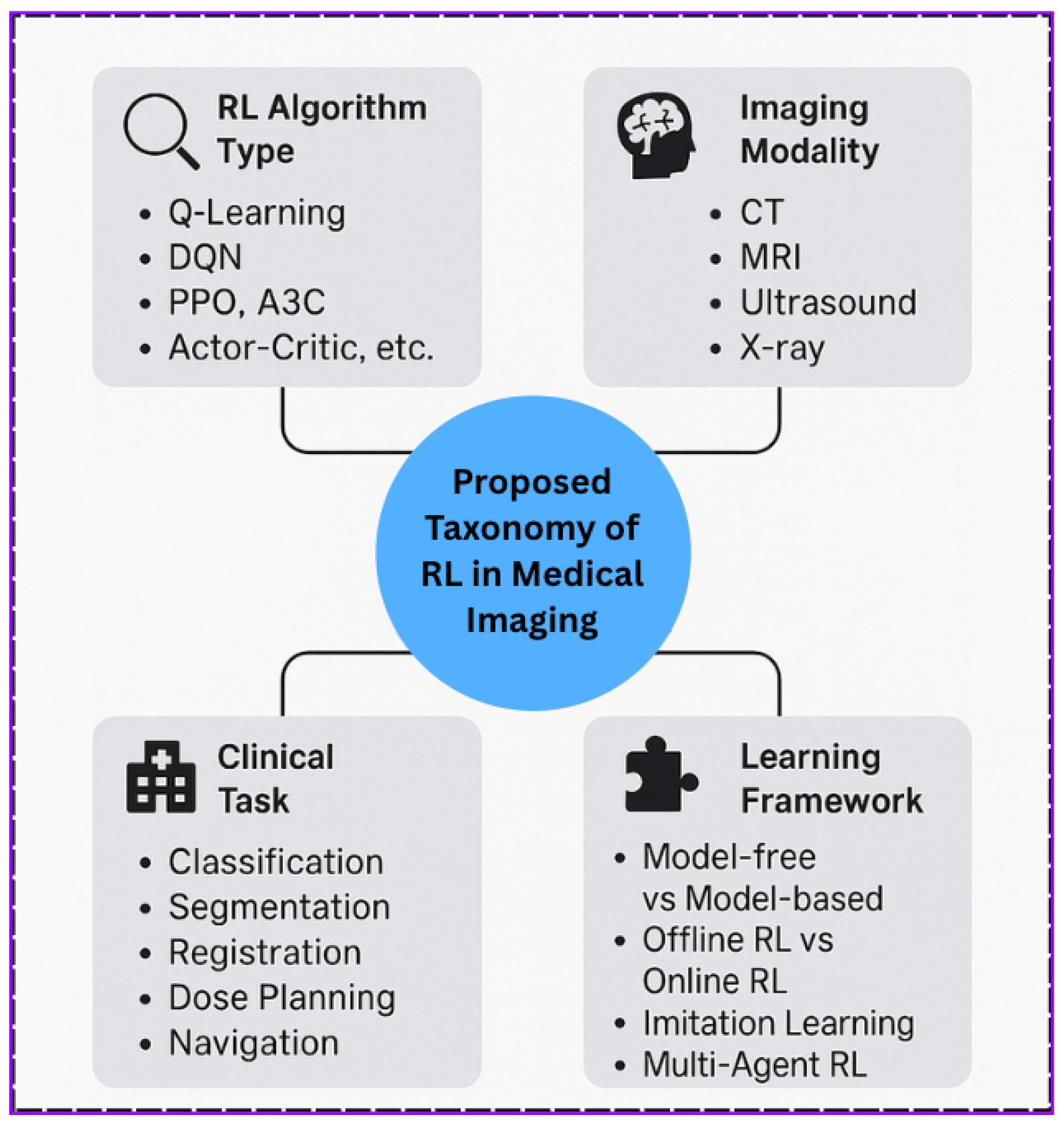

- We propose a unified taxonomy for reinforcement learning in medical imaging, categorizing over 100 studies by algorithm type, imaging modality, and learning framework.

- We identify emerging trends and underexplored intersections, including multi-agent RL, offline RL, and model-based RL in rare modalities.

- We explore the role of Large Language Models (LLMs) in automating literature synthesis, highlighting research gaps, and improving interpretability.

- We analyze privacy-preserving strategies such as Differential Privacy (DP) and Federated Learning (FL) for ethical deployment in clinical environments.

- We provide a comprehensive discussion on challenges, limitations, and future research opportunities for deploying RL-based systems in real-world healthcare settings.

1.2. Paper Organization

2. Literature Review and Related Works

3. Background and Motivation

3.1. Reinforcement Learning Fundamentals

- S: The set of environment states;

- A: The set of available actions;

- P: The state transition probability function;

- R: The reward function;

- γ: The discount factor (0 ≤ γ ≤ 1) controlling the agent’s valuation of future rewards.
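For reference, the five elements above (S, A, P, R and the discount factor γ) define a Markov decision process, and the quantities that value-based methods such as Q-learning estimate can be written as below. This is the standard textbook form rather than a formula taken from the surveyed studies. Writing the reward as R(s, a, s′) is an assumption, since the text does not fix its signature, and γ < 1 is assumed so that the infinite sum stays finite.

```latex
% Discounted return from time step t (r_{t+k+1} is the reward received k+1 steps later)
G_t = \sum_{k=0}^{\infty} \gamma^{k} \, r_{t+k+1}, \qquad 0 \le \gamma < 1

% Bellman optimality equation for the optimal action-value function Q^*
Q^{*}(s, a) = \sum_{s' \in S} P(s' \mid s, a) \left[ R(s, a, s') + \gamma \max_{a' \in A} Q^{*}(s', a') \right]
```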

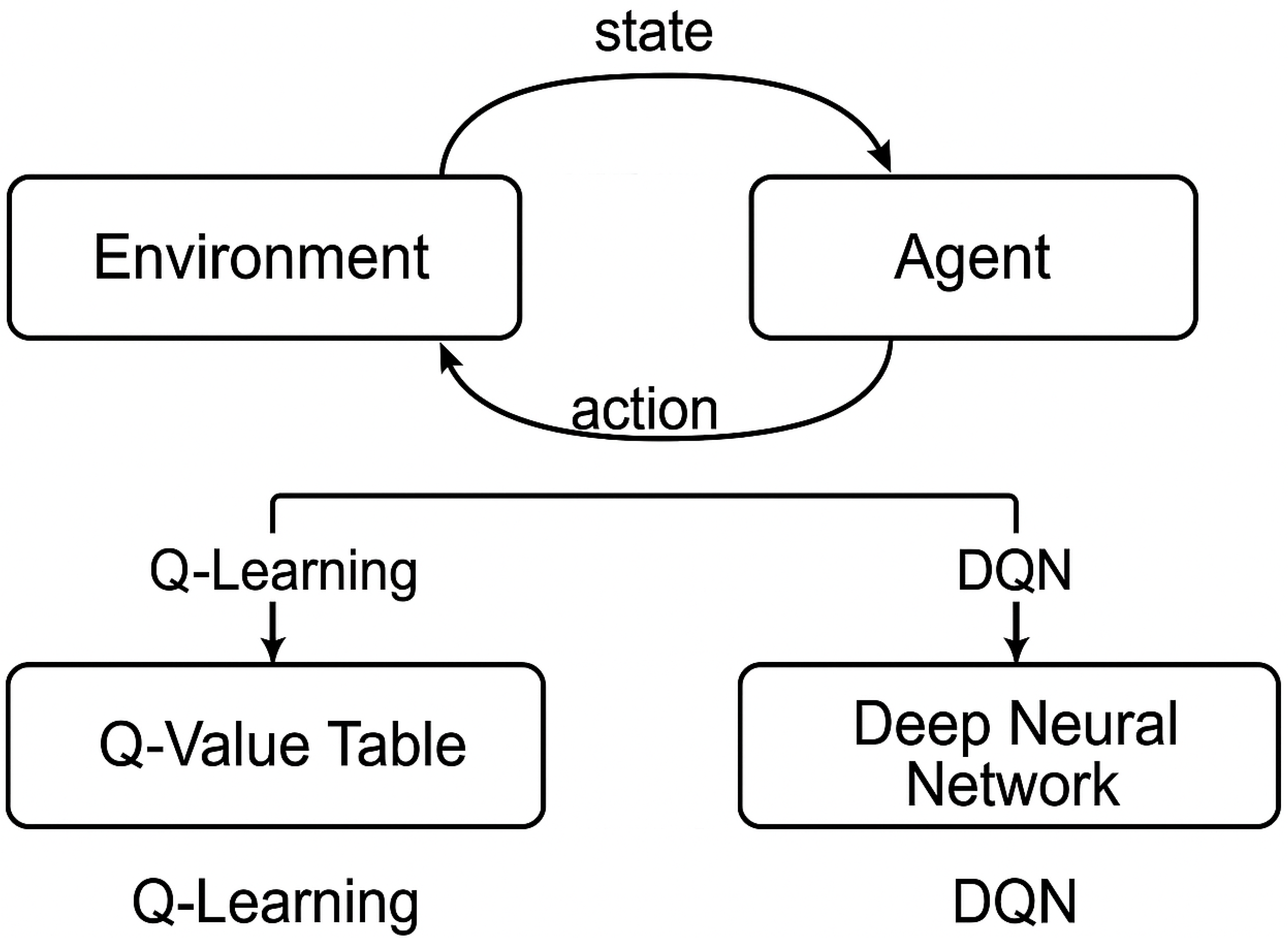

- Q-Learning: A model-free, off-policy approach that learns the optimal action–value function by updating estimates using the Bellman equation [22].

- Deep Q-Networks (DQNs): Introduced by Mnih et al. [23], DQNs extend Q-learning by using deep neural networks to approximate the action–value function Q(s, a), enabling RL in high-dimensional spaces such as images.
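The Q-learning bullet can be made concrete with a minimal tabular sketch. The function names, the toy corridor environment, and the hyperparameters (α = 0.1, γ = 0.9, ε = 0.2) are illustrative assumptions, not settings from any surveyed study. A DQN replaces the table `Q` with a neural network trained toward the same bootstrapped target.

```python
import random
from collections import defaultdict


def q_learning_update(Q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9, terminal=False):
    """One off-policy Q-learning update, applied in place to the table Q."""
    # Bootstrapped target r + gamma * max_a' Q(s', a'); terminal states do not bootstrap.
    best_next = 0.0 if terminal else max(Q[(next_state, a)] for a in actions)
    td_error = reward + gamma * best_next - Q[(state, action)]
    Q[(state, action)] += alpha * td_error
    return Q[(state, action)]


def epsilon_greedy(Q, state, actions, epsilon, rng):
    """Explore with probability epsilon; otherwise act greedily, breaking ties at random."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    best = max(Q[(state, a)] for a in actions)
    return rng.choice([a for a in actions if Q[(state, a)] == best])


def train_corridor(n_states=5, episodes=200, max_steps=50, seed=0):
    """Toy 1-D corridor (hypothetical): start at cell 0, reward +1 on reaching the last cell."""
    rng = random.Random(seed)
    actions = [-1, 1]  # move left / move right
    Q = defaultdict(float)
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            a = epsilon_greedy(Q, s, actions, epsilon=0.2, rng=rng)
            s_next = min(max(s + a, 0), n_states - 1)
            done = s_next == n_states - 1
            q_learning_update(Q, s, a, 1.0 if done else 0.0, s_next, actions, terminal=done)
            s = s_next
            if done:
                break
    return Q
```

Because rewards are 0 or 1 and γ < 1, every table entry stays in [0, 1]; on this toy problem the greedy action in each non-terminal cell should usually settle on +1, which is the expected behaviour of tabular Q-learning rather than a guarantee for a given seed.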

3.2. Artificial Intelligence in Medical Imaging

3.3. Why Reinforcement Learning for Medical Imaging?

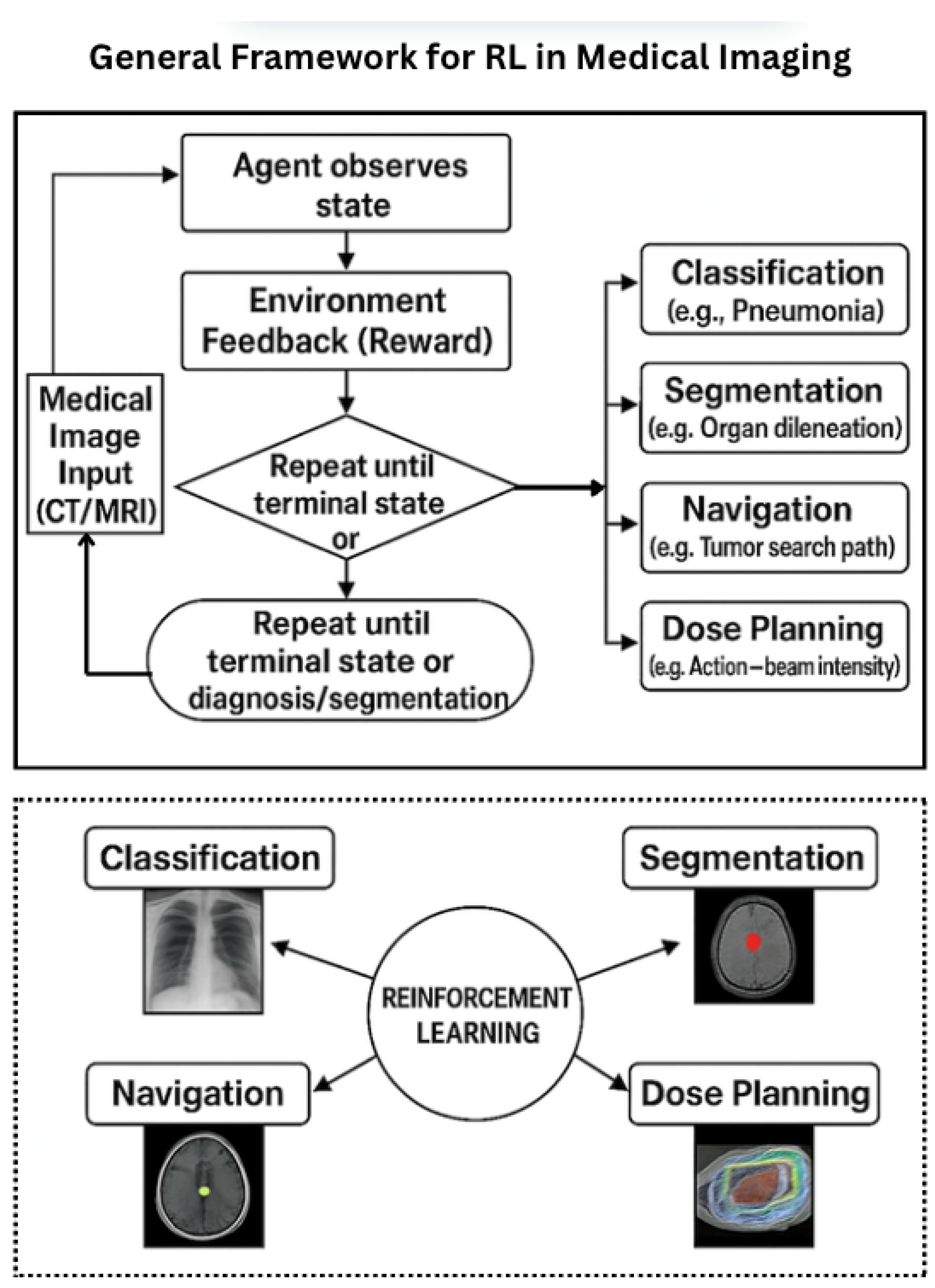

- Navigating and localizing targets within 3D volumetric data (e.g., CT, MRI).

- Performing interactive segmentation in collaboration with clinicians.

- Planning dose distributions in radiotherapy based on projected treatment outcomes.

- Iteratively refining registration and image enhancement tasks.

3.4. Problem Formulation: Pneumonia Detection in Chest X-Rays

- Navigational actions (e.g., move left/right/up/down and zoom).

- Terminal actions (e.g., classify as pneumonia or normal, or defer to a human reader).

- +1 for correct classification;

- −1 for incorrect predictions;

- Small penalties for unnecessary steps to encourage efficiency.
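The action space and reward scheme above can be expressed as a small environment. This is a hypothetical sketch: the class name `CxrGlimpseEnv`, the 2-D list standing in for an X-ray, the glimpse size, the −0.01 step penalty, and the zero reward for deferral are all assumptions, because the formulation does not specify them.

```python
from dataclasses import dataclass

# Hypothetical action names mirroring the navigational and terminal actions listed above.
MOVES = {"left": (0, -1), "right": (0, 1), "up": (-1, 0), "down": (1, 0)}
TERMINAL = {"pneumonia", "normal", "defer"}


@dataclass
class CxrGlimpseEnv:
    image: list                  # 2-D list of pixel intensities standing in for a chest X-ray
    has_pneumonia: bool          # ground-truth label for this image
    window: int = 4              # side length of the square glimpse (the agent's view)
    step_penalty: float = -0.01  # assumed size of the "small penalty" per step
    defer_reward: float = 0.0    # assumption: the text does not specify a deferral reward
    max_steps: int = 50          # episode truncation limit
    row: int = 0
    col: int = 0
    steps: int = 0

    def observe(self):
        """Return the current glimpse as a window x window crop."""
        rows = self.image[self.row:self.row + self.window]
        return [r[self.col:self.col + self.window] for r in rows]

    def step(self, action):
        """Apply an action and return (observation, reward, done)."""
        self.steps += 1
        if action in TERMINAL:
            if action == "defer":
                return self.observe(), self.defer_reward, True
            correct = (action == "pneumonia") == self.has_pneumonia
            return self.observe(), (1.0 if correct else -1.0), True
        height, width = len(self.image), len(self.image[0])
        if action in MOVES:
            dr, dc = MOVES[action]
            self.row += dr
            self.col += dc
        elif action == "zoom_in":
            self.window = max(2, self.window // 2)
        elif action == "zoom_out":
            self.window = min(height, width, self.window * 2)
        else:
            raise ValueError(f"unknown action: {action}")
        # Keep the glimpse inside the image after moving or zooming.
        self.row = min(max(self.row, 0), height - self.window)
        self.col = min(max(self.col, 0), width - self.window)
        return self.observe(), self.step_penalty, self.steps >= self.max_steps
```

A policy (for example, a DQN over a CNN encoding of `observe()`) would choose among these actions; whether deferral should carry a small penalty or bonus is a clinical design decision that this sketch leaves open.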

- Localized Attention: Focuses on clinically significant regions instead of full-frame processing.

- Interpretability: Enables traceable decision paths aligned with clinical workflows.

- Data Efficiency: Operates under semi-supervised or weakly labeled conditions.

- Adaptive Computation: Adjusts exploration dynamically based on image complexity.

4. Taxonomy of AI-Based Reinforcement Learning in Medical Imaging

4.1. By Imaging Task

- Segmentation: Agents iteratively refine the boundary of organs or lesions, e.g., in brain MRI or ultrasound [32].

- Detection and Localization: RL agents navigate 3D scans to identify key anatomical landmarks or pathologies [33].

- Classification: RL policies guide active learning, view selection, or confidence calibration in diagnosis tasks [32].

- Image Registration: Agents learn transformation policies to align multimodal or time-series images [34].

- Image Synthesis: RL controls adaptive denoising or contrast enhancement in dynamic imaging [35].

- Treatment Planning: In radiotherapy, RL optimizes beam angles and dose distributions [36].
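To illustrate how registration maps onto the RL loop, the sketch below treats one-pixel translations as actions and the decrease in sum of squared differences (SSD) as the reward. Everything here is an assumption for illustration: integer translations only, wrap-around shifting, and SSD, which suits same-modality pairs (multimodal registration typically uses measures such as mutual information). `greedy_register` is a one-step-lookahead stand-in for a learned policy, not an RL algorithm.

```python
# Four integer translations of the moving image, one pixel at a time.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def shift(img, dy, dx):
    """Translate a 2-D list by (dy, dx) pixels with wrap-around (a simplifying assumption)."""
    h, w = len(img), len(img[0])
    return [[img[(r - dy) % h][(c - dx) % w] for c in range(w)] for r in range(h)]


def ssd(a, b):
    """Sum of squared intensity differences; lower means better aligned."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def registration_step(fixed, moving, offset, action):
    """Apply one translation action; the reward is the reduction in SSD."""
    dy, dx = ACTIONS[action]
    new_offset = (offset[0] + dy, offset[1] + dx)
    before = ssd(fixed, shift(moving, *offset))
    after = ssd(fixed, shift(moving, *new_offset))
    return new_offset, before - after


def greedy_register(fixed, moving, max_steps=20):
    """Stand-in policy: take the action with the best immediate reward until none helps."""
    offset = (0, 0)
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: registration_step(fixed, moving, offset, a)[1])
        new_offset, reward = registration_step(fixed, moving, offset, action)
        if reward <= 0:
            break
        offset = new_offset
    return offset
```

A trained agent would replace the greedy lookahead with a policy that can accept short-term SSD increases, which matters when the similarity landscape has local minima.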

4.2. By Reinforcement Learning Algorithm

- Tabular Methods: Classical Q-learning with a lookup table is used in simpler settings with small, discrete state and action spaces [22].

- Deep RL: Methods like DQN, PPO, and A3C are common in high-dimensional visual tasks [6].

- Model-Based RL: Surrogate environment models enhance sample efficiency [37].

- Multi-Agent RL: Applied in cooperative detection, segmentation, or dose-planning tasks [38].
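Of the deep RL methods listed, PPO [25] is widely used because its clipped surrogate objective limits how far a single update can move the policy. A minimal, framework-free sketch of that objective follows; in practice it would be computed on tensors inside an autodiff library, and `clip_eps = 0.2` is a common default rather than a value from the surveyed work.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (to be maximized) for a batch of actions."""
    if not (len(new_log_probs) == len(old_log_probs) == len(advantages)):
        raise ValueError("inputs must have equal length")
    total = 0.0
    for lp_new, lp_old, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With an advantage of +1 and a probability ratio of 1.5, the term is capped at 1.2, so the update gains nothing from pushing the ratio further.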

4.3. By Imaging Modality

- Ultrasound: Real-time landmark detection and prostate segmentation [39].

- MRI: Used in neurological or musculoskeletal applications for segmentation and registration [40].

- CT: Applied in volumetric analysis for lesion detection or navigation [33].

- PET/SPECT: Supports functional synthesis and multimodal alignment [41].

- X-ray: Utilized in view planning and active lesion search [5].

4.4. By Learning Framework

- Offline RL: Leverages static annotated datasets; preferred for safety and reproducibility [9].

- Online RL: Involves real-time interaction with simulators or clinical environments [42].

- Human-in-the-Loop: Clinician feedback informs agent policies [43].

- Imitation Learning: Behavior cloning or inverse RL captures expert strategies [44].
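Behavior cloning, the simplest imitation-learning variant, reduces to supervised learning on expert (state, action) pairs. The tabular sketch below assumes discrete, hashable states (the region names in the test are hypothetical); image-based systems would instead train a classifier such as a CNN on the same pairs. Falling back to deferral in unseen states is an illustrative safety choice, not part of the cited method.

```python
from collections import Counter, defaultdict


def behavior_clone(demonstrations):
    """Fit a tabular policy: each state maps to the expert's most frequent action."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


def act(policy, state, fallback="defer"):
    """Follow the cloned policy; fall back (here: defer) in states never demonstrated."""
    return policy.get(state, fallback)
```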

4.5. Visual Summary

4.6. Advantages and Research Gap Bridging

- Modality-Specific RL Strategies: Few studies tailor RL techniques to the unique temporal, spatial, and resolution characteristics of modalities like PET or ultrasound. Our taxonomy encourages targeted algorithm design [41].

- Underutilization of Model-Based and Multi-Agent RL: Despite their potential for sample efficiency and collaboration, these algorithms remain underrepresented in imaging tasks beyond treatment planning. The taxonomy surfaces these areas for future exploration [38].

- Lack of Integration Between Human Expertise and RL Systems: While offline and imitation learning are growing, human-in-the-loop frameworks are rarely applied in real-time settings, especially in high-stakes domains like radiotherapy [43].

- Imbalanced Coverage of Imaging Tasks: Tasks like image synthesis and adaptive contrast enhancement receive significantly less RL attention compared to classification or segmentation. Our structure exposes these disparities [45].

4.7. Taxonomy Construction and Counting Methodology

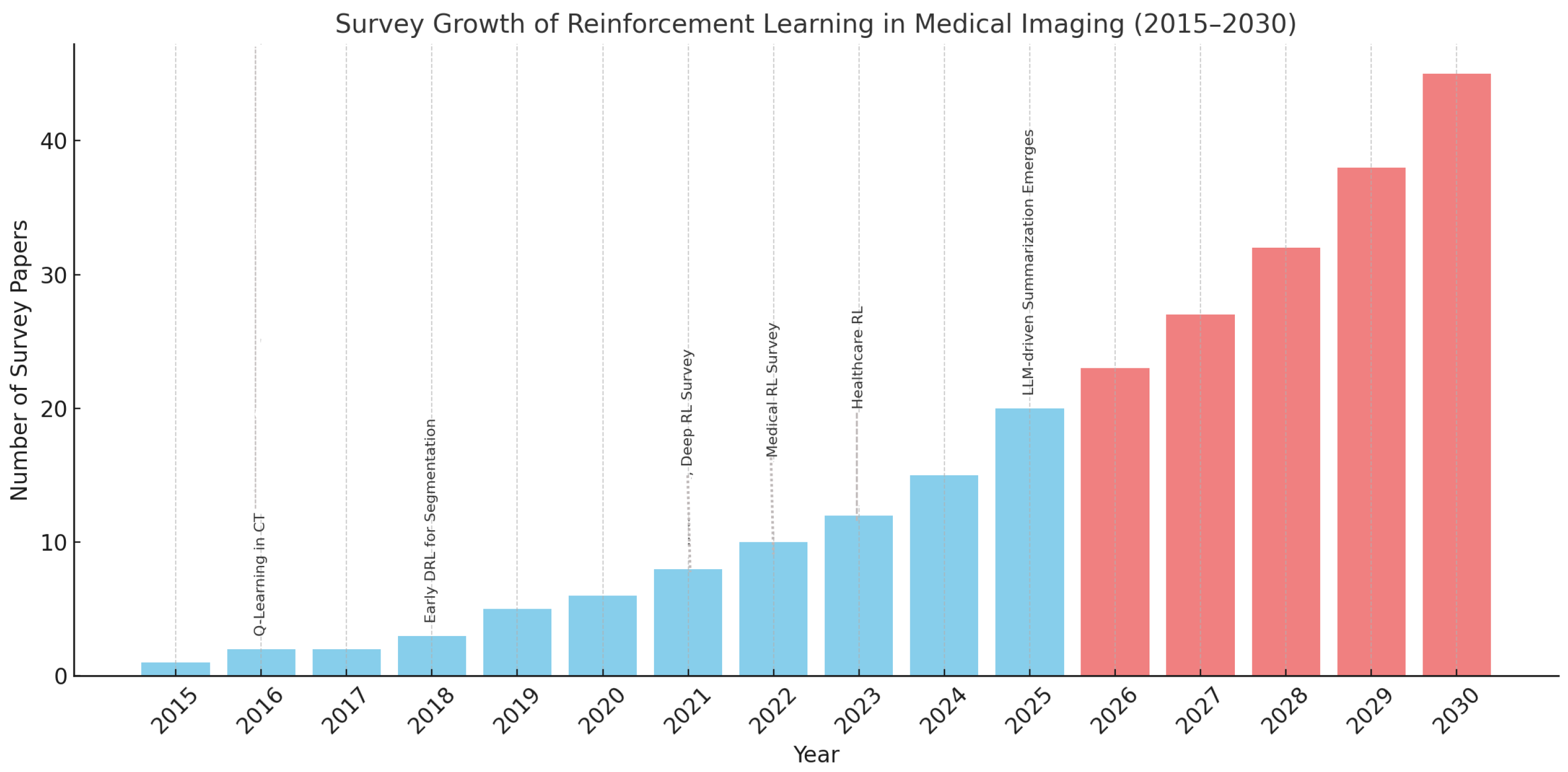

5. Trend Analysis and Future Outlook

5.1. Decade-Long Survey Trends

5.2. Projections (2026–2030): The Role of LLMs

- Extract modality-task-algorithm mappings automatically from the literature.

- Suggest underexplored combinations using structured prompts.

- Co-author survey drafts with human experts for rapid dissemination.
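One way to operationalize automatic modality–task–algorithm extraction is to constrain the LLM to the taxonomy's own vocabularies and to reject any reply that does not validate. The sketch below only builds the prompt and checks a reply; no specific LLM API is assumed, and the template wording and label strings (taken from the category names in Section 4) are illustrative.

```python
import json

# Vocabularies taken from the taxonomy dimensions in Section 4 (task, algorithm, modality).
TASKS = {"Segmentation", "Detection and Localization", "Classification",
         "Image Registration", "Image Synthesis", "Treatment Planning"}
ALGORITHMS = {"Tabular Methods", "Deep RL", "Model-Based RL", "Multi-Agent RL"}
MODALITIES = {"Ultrasound", "MRI", "CT", "PET/SPECT", "X-ray"}

PROMPT_TEMPLATE = (
    "Classify the reinforcement learning study described below. Reply with JSON only, "
    'using the keys "modality", "task" and "algorithm", and pick each value from its list.\n'
    "modality: {modalities}\ntask: {tasks}\nalgorithm: {algorithms}\n\nAbstract:\n{abstract}"
)


def build_prompt(abstract):
    """Fill the (hypothetical) prompt template with the allowed labels and one abstract."""
    return PROMPT_TEMPLATE.format(
        modalities=", ".join(sorted(MODALITIES)),
        tasks=", ".join(sorted(TASKS)),
        algorithms=", ".join(sorted(ALGORITHMS)),
        abstract=abstract,
    )


def parse_mapping(reply):
    """Return (modality, task, algorithm) if the reply is valid JSON within the taxonomy, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    triple = (data.get("modality"), data.get("task"), data.get("algorithm"))
    if not all(isinstance(v, str) for v in triple):
        return None
    modality, task, algorithm = triple
    if modality in MODALITIES and task in TASKS and algorithm in ALGORITHMS:
        return triple
    return None  # out-of-vocabulary answers are left for human review
```

Rejecting out-of-vocabulary answers keeps the LLM in a co-authoring role: anything it cannot map cleanly is routed to a human expert instead of silently extending the taxonomy.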

5.3. The Role of LLMs in Accelerating This Growth

- Model-based RL: Rarely applied in high-dimensional medical scenarios.

- Multi-agent Systems: Underrepresented in cooperative imaging tasks.

- Human-in-the-loop Frameworks: Limited adoption in clinical settings.

- Modality Bias: Sparse exploration in PET/SPECT, ultrasound, and temporal imaging.

5.4. Decade-Long Survey Trends

5.5. Discussion: Application and Impact of RL Taxonomy in Medical Imaging

5.6. Design Guidance and Benchmarking

- Select suitable RL algorithms based on task and modality characteristics;

- Design modular agents with well-defined state, action, and reward functions;

- Align with reproducible benchmarks across common clinical tasks.

5.7. Identification of Research Gaps

- Model-based RL for PET/SPECT imaging;

- Human-in-the-loop strategies for image registration;

- Multi-agent RL in real-time segmentation scenarios.

5.8. Automation via Large Language Models (LLMs)

- Survey Synthesis: Automate the extraction of reward-policy-state designs from large literature sets.

- Trend Analysis: Detect shifts in task or modality focus over time.

- Content Drafting: Generate LaTeX figures, tables, and summaries.

- Gap Identification: Highlight underrepresented algorithm–modality combinations.
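Gap identification of this kind can start from simple counts over tagged studies. The helper below is a hypothetical sketch: it assumes each study has already been labeled (manually or by an LLM) with one algorithm family and one modality, and the toy records in the test are placeholders, not the survey's data.

```python
from collections import Counter
from itertools import product


def find_gaps(studies, algorithms, modalities, min_count=1):
    """List algorithm-modality pairs tagged in fewer than min_count studies."""
    counts = Counter((s["algorithm"], s["modality"]) for s in studies)
    return sorted(pair for pair in product(algorithms, modalities) if counts[pair] < min_count)
```

Raising `min_count` turns the same helper from a detector of empty cells into a detector of sparse ones, which is closer to the "underrepresented" combinations described above.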

5.9. Impact and Future Potential

- Curriculum Development: A foundation for training in RL and clinical AI.

- Framework Standardization: Promotes interoperable RL components across applications.

- Collaborative Research: Bridges AI researchers, clinicians, and imaging specialists.

5.10. Challenges and Future Outlook

6. LLM Integration for Explainability and Semantic Reinforcement

Conceptual Roles of LLMs in RL for Medical Imaging

7. Privacy Preservation in Medical RL

7.1. Privacy-Preserving RL in Clinical Environments

7.2. Federated Reinforcement Learning for Cross-Institutional Collaboration

7.3. Summary of Design Considerations and Privacy Strategies

7.4. Privacy-Enhancing Strategies

7.5. Conceptual Framework Blueprint

- Reward Function: Binary feedback with +1 for correct and −1 for incorrect classifications.

- RL Algorithms: Deep Q-Network (DQN) and Proximal Policy Optimization (PPO) for learning and stability comparisons.

- Feature Encoder: Pretrained ResNet-18 for visual feature extraction.

- LLM Integration: Post-decision explanation using large language models (LLMs), such as GPT, to enhance interpretability [66].

- Privacy Controls: DP-SGD and FL to limit patient-data exposure during training and support regulatory compliance.
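The privacy controls named in this blueprint can be sketched with plain Python lists: a DP-SGD step following Abadi et al. [72] (per-example clipping plus Gaussian noise) and FedAvg aggregation following McMahan et al. [75]. The sketch rests on several assumptions: the parameter vectors stand in for DQN or PPO weights, the noise multiplier and clipping norm are arbitrary, and no privacy accounting (ε, δ) is performed. A real deployment would rely on a maintained library with a privacy accountant, such as Opacus or TensorFlow Privacy.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum, add Gaussian noise, average."""
    summed = [0.0] * len(weights)
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            summed[i] += g
    sigma = noise_multiplier * max_norm  # noise scale is tied to the clipping bound
    n = len(per_example_grads)
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / n for s in summed]
    return [w - lr * g for w, g in zip(weights, noisy_mean)]


def fed_avg(client_weights, client_sizes):
    """FedAvg aggregation: average client parameter vectors weighted by local sample counts."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


# One simulated round with two hypothetical sites (toy gradients, no real data).
rng = random.Random(0)
global_w = [0.0, 0.0]
site_a = dp_sgd_step(global_w, [[3.0, 4.0], [0.0, 2.0]], lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng)
site_b = dp_sgd_step(global_w, [[1.0, -1.0]], lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng)
global_w = fed_avg([site_a, site_b], client_sizes=[2, 1])
```

Only the noisy local updates leave each site, and the server sees nothing but parameter vectors; the "performance degradation under DP and FL conditions" criterion below would compare models trained this way against a centrally trained, noise-free baseline.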

7.6. Evaluation Criteria

- RL Dynamics: Reward trajectory, episode length, convergence behavior.

- Diagnostic Accuracy: AUROC, Precision, Recall, F1-score.

- Interpretability: SHAP, LIME, and GPT-generated rationales.

- Privacy Robustness: Performance degradation under DP and FL conditions.
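The diagnostic and RL-dynamics criteria can be computed with a few lines of standard-library Python. This sketch assumes binary labels (1 = pneumonia, 0 = normal) and computes AUROC by pairwise comparison, which is exact but quadratic in the number of cases; scikit-learn's `roc_auc_score` and `precision_recall_fscore_support` provide equivalent functions for larger test sets. How deferred cases are counted is left open, since the blueprint does not specify it.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall and F1 (1 = pneumonia, 0 = normal)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auroc(y_true, scores):
    """AUROC as P(score of a random positive > score of a random negative), ties counting 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def moving_average(rewards, window):
    """Smoothed episode-reward trajectory for monitoring convergence."""
    return [sum(rewards[i - window + 1:i + 1]) / window for i in range(window - 1, len(rewards))]
```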

8. Challenges and Limitations

8.1. Reward Design and Clinical Alignment

8.2. Sample Inefficiency and Training Instability

8.3. Generalization and Domain Transfer

8.4. Interpretability and Clinical Trust

8.5. Data Availability and Interactive Environments

8.6. Regulatory and Deployment Barriers

9. Open Research Directions

9.1. Human-in-the-Loop Reinforcement Learning (HITL RL)

9.2. Explainability in RL for Medical Imaging

9.3. Model-Based and Sample-Efficient RL

9.4. Hierarchical and Modular Policies

9.5. Explainability and Policy Transparency

- Saliency-based policy attention maps.

- Action-region heatmaps for anatomical interpretability.

- Trajectory visualizations aligned with clinical annotations.
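An action-region heatmap can be built by accumulating the glimpse windows an agent visits, and its agreement with clinical annotations can be summarized as the fraction of attention that falls inside a mask. The sketch assumes trajectories are recorded as top-left (row, col) positions of a square glimpse; both helper names and the agreement score are hypothetical illustrations, not established metrics from the cited work.

```python
def visit_heatmap(trajectories, height, width, window):
    """Normalized count of how often each pixel fell inside the agent's square glimpse."""
    heat = [[0.0] * width for _ in range(height)]
    for trajectory in trajectories:
        for top, left in trajectory:  # top-left corner of the glimpse at each step
            for r in range(max(top, 0), min(top + window, height)):
                for c in range(max(left, 0), min(left + window, width)):
                    heat[r][c] += 1.0
    peak = max(max(row) for row in heat)
    return [[v / peak for v in row] for row in heat] if peak > 0 else heat


def fraction_in_annotation(heat, mask):
    """Share of total attention that falls inside a clinician's binary annotation mask."""
    total = sum(sum(row) for row in heat)
    inside = sum(h for hr, mr in zip(heat, mask) for h, m in zip(hr, mr) if m)
    return inside / total if total else 0.0
```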

9.6. Interactive and Human-in-the-Loop RL

9.7. Benchmarks and Simulation Environments

- Open-source task-specific simulators for medical RL.

- RL-ready CXR and CT datasets with feedback structures.

- Public leaderboards for tasks like landmark localization or tool tracking.

9.8. Clinical Integration and Continual Learning

10. Conclusions

Funding

Conflicts of Interest

References

- Islam Riad, A.B.M.K.; Barek, M.A.; Rahman, M.M.; Akter, M.S.; Islam, T.; Rahman, M.A.; Mia, M.R.; Shahriar, H.; Wu, F.; Ahamed, S.I. Enhancing HIPAA Compliance in AI-driven mHealth Devices Security and Privacy. In Proceedings of the 2024 IEEE 48th Annual Computers, Software, and Applications Conference (COMPSAC), Osaka, Japan, 2–4 July 2024; pp. 2430–2435.

- Pinto-Coelho, L. How Artificial Intelligence Is Shaping Medical Imaging Technology: A Survey of Innovations and Applications. Bioengineering 2023, 10, 1435.

- Chen, X.; Wang, X.; Zhang, K.; Fung, K.; Thai, T.C.; Moore, K.; Mannel, R.S.; Liu, H.; Zheng, B.; Qiu, Y. Recent Advances and Clinical Applications of Deep Learning in Medical Image Analysis. Med. Image Anal. 2022, 79, 102444.

- Feng, J.; Phillips, R.V.; Malenica, I.; Bishara, A.; Hubbard, A.E.; Celi, L.A.; Pirracchio, R. Clinical Artificial Intelligence Quality Improvement: Towards Continual Monitoring and Updating of AI Algorithms in Healthcare. npj Digit. Med. 2022, 5, 66.

- Alansary, A.; Oktay, O.; Li, Y.; Folgoc, L.L.; Hou, B.; Vaillant, G.; Kamnitsas, K.; Vlontzos, A.; Glocker, B.; Kainz, B.; et al. Evaluating reinforcement learning agents for anatomical landmark detection. Med. Image Anal. 2019, 53, 156–164.

- Zhou, S.K.; Le, H.N.; Luu, K.; Nguyen, H.V.; Ayache, N. Deep Reinforcement Learning in Medical Imaging: A Literature Review. Med. Image Anal. 2021, 70, 102193.

- Hu, J.; Luo, Z.; Wang, X.; Sun, S.; Yin, Y.; Cao, K.; Song, Q.; Lyu, S.; Wu, X. End-to-End Multimodal Image Registration via Reinforcement Learning. Med. Image Anal. 2022, 68, 101878.

- Ebrahimi, S.; Lim, G. A Reinforcement Learning Approach for Finding Optimal Policy of Adaptive Radiation Therapy Considering Uncertain Tumor Biological Response. Artif. Intell. Med. 2021, 121, 102193.

- Hu, M.; Zhang, J.; Matkovic, L.; Liu, T.; Yang, X. Reinforcement Learning in Medical Image Analysis: Concepts, Applications, Challenges, and Future Directions. J. Appl. Clin. Med. Phys. 2023, 24, e13898.

- Al, W.A.; Yun, I.D. Partial Policy-based Reinforcement Learning for Anatomical Landmark Localization in 3D Medical Images. arXiv 2018, arXiv:1807.02908.

- Zhang, C.; Shahriar, H.; Riad, A.B.M.K. Security and Privacy Analysis of Wearable Health Device. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 1767–1772.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88.

- Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Saha, B.; Islam, M.S.; Riad, A.K.; Tahora, S.; Shahriar, H.; Sneha, S. BlockTheFall: Wearable Device-based Fall Detection Framework Powered by Machine Learning and Blockchain for Elderly Care. In Proceedings of the 2023 IEEE 47th Annual Computers, Software, and Applications Conference (COMPSAC), Torino, Italy, 26–30 June 2023; pp. 1412–1417.

- Luo, Z.; Hu, J.; Wang, X.; Hu, S.; Kong, B.; Yin, Y.; Song, Q.; Wu, X.; Lyu, S. Stochastic Planner–Actor–Critic (SPAC) for Unsupervised Deformable Image Registration. Proc. AAAI Conf. Artif. Intell. 2022, 36, 1917–1925.

- Sahba, F.; Tizhoosh, H.R.; Salama, M.M.A. Application of Reinforcement Learning for Medical Image Segmentation. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 16–21 July 2006; pp. 511–517.

- Xu, C.; Zhang, D.; Song, Y.; Bittencourt, L.K.; Tirumani, S.H.; Li, S. Synthesis of Gadolinium-Enhanced Liver Tumors on Nonenhanced Liver MR Images Using Pixel-Level Graph Reinforcement Learning. Med. Image Anal. 2021, 70, 101976.

- Ghesu, F.C.; Georgescu, B.; Zheng, Y.; Grbic, S.; Maier, A.; Hornegger, J.; Comaniciu, D. Multi-scale Deep Reinforcement Learning for Real-Time 3D-Landmark Detection in CT Scans. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 176–189.

- Sun, Y.; Li, R.; Li, X.; Fan, Y. Robust Multimodal Image Registration Using Deep Recurrent Reinforcement Learning. Comput. Methods Programs Biomed. 2020, 189, 105323.

- Liu, Y.; Yuan, D.; Xu, Z.; Zhan, Y.; Zhang, H.; Lu, J.; Lukasiewicz, T. Pixel-level Deep Reinforcement Learning for Accurate and Robust Medical Image Segmentation. Sci. Rep. 2025, 15, 8213.

- Judge, A.; Judge, T.; Duchateau, N.; Sandler, R.A.; Sokol, J.Z.; Bernard, O.; Jodoin, P.-M. Domain Adaptation of Echocardiography Segmentation Via Reinforcement Learning (RL4Seg). arXiv 2024, arXiv:2406.17902.

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Williams, R.J. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Mach. Learn. 1992, 8, 229–256.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. arXiv 2016, arXiv:1602.01783.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2015, arXiv:1509.02971.

- Yamashita, R.; Nishio, M.; Do, R.; Togashi, K. Convolutional Neural Networks: An Overview and Application in Radiology. Insights Imaging 2018, 9, 611–629.

- Tian, Y.; Xu, Z.; Ma, Y.; Ding, W.; Wang, R.; Gao, Z.; Cheng, G.; He, L.; Zhao, X. Survey on Deep Learning in Multimodal Medical Imaging for Cancer Detection. arXiv 2023, arXiv:2312.01573.

- Liu, Z.; Kainth, K.; Zhou, A.; Deyer, T.W.; Fayad, Z.A.; Greenspan, H.; Mei, X. A Review of Self-Supervised, Generative, and Few-Shot Deep Learning Methods for Data-Limited Magnetic Resonance Imaging Segmentation. NMR Biomed. 2024, 37, e5143.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv 2017, arXiv:1711.05225.

- Yu, Y.; Hou, X.; Ren, H. Efficient Active Contour Model for Medical Image Segmentation and Correction Based on Edge and Region Information. Expert Syst. Appl. 2022, 194, 116436.

- Browning, J.; Kornreich, M.; Chow, A.; Pawar, J.; Zhang, L.; Herzog, R.; Odry, B. Uncertainty-Aware Deep Reinforcement Learning for Anatomical Landmark Detection in Medical Images. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Strasbourg, France, 27 September–1 October 2021; pp. 190–198.

- Dalca, A.V.; Yu, E.; Golland, P.; Fischl, B.; Sabuncu, M.R.; Iglesias, J.E. Unsupervised Deep Learning for Bayesian Brain MRI Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2019, Shenzhen, China, 13–17 October 2019; Lecture Notes in Computer Science. Volume 11766, pp. 356–365.

- Shen, C.; Gonzalez, Y.; Chen, L.; Jiang, S.B.; Jia, X. Intelligent Parameter Tuning in Optimization-based Iterative CT Reconstruction via Deep Reinforcement Learning. arXiv 2017, arXiv:1711.00414.

- Xu, L.; Shen, S.; Shen, C. Deep Reinforcement Learning and Its Applications in Medical Imaging and Radiation Therapy: A Review. Phys. Med. Biol. 2022, 67, 22TR02.

- Barnoy, Y.; Vaidyanathan, T.R.; Bergeles, E.; Burgner-Kahrs, J. Control of Magnetic Surgical Robots With Model-Based Simulators and Reinforcement Learning. IEEE Trans. Med. Robot. Bionics 2022, 4, 945–956.

- Liao, X.; Fu, C.-W.; Xing, L.; Heng, P.-A. Iteratively-Refined Interactive 3D Medical Image Segmentation With Multi-Agent Reinforcement Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 9391–9399.

- Ghosh, S.; Vadali, G.; Singh, A.; Zhou, Y.; Felfeliyan, B.; Wahd, A.; Knight, J.; Panicker, M.R.; Jaremko, J.L.; Hareendranathan, A.R. Shoulder Rotator Cuff Tear Detection from Ultrasound Videos Using Deep Reinforcement Learning. In Proceedings of the 2025 IEEE 22nd International Symposium on Biomedical Imaging (ISBI), Houston, TX, USA, 14–17 April 2025; pp. 1–4.

- Shekhar, S.; Dubey, S.; Jothikumar, C.; Ashokkumar, C.; Shanmugam, S. A Reinforcement Learning-Based Adaptive Learning Rate Scheduler for Optimizing Brain Tumor Detection. In Proceedings of the 2024 First International Conference for Women in Computing (InCoWoCo), Pune, India, 14–15 November 2024; pp. 1–5.

- Smith, R.L.; Ackerley, I.M.; Wells, K.; Bartley, L.; Paisey, S.; Marshall, C. Reinforcement Learning for Object Detection in PET Imaging. In Proceedings of the 2019 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC), Manchester, UK, 26 October–2 November 2019; pp. 1–4.

- Wen, Y.; Si, J.; Brandt, A.; Gao, X.; Huang, H.H. Online Reinforcement Learning Control for the Personalization of a Robotic Knee Prosthesis. IEEE Trans. Cybern. 2020, 50, 2346–2356.

- Luo, B.; Wu, Z.; Zhou, F.; Wang, B.-C. Human-in-the-Loop Reinforcement Learning in Continuous-Action Space. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 4123–4135.

- Xiao, D.; Wang, B.; Sun, Z.; He, X. Behavioral Cloning Based Model Generation Method for Reinforcement Learning. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; pp. 6776–6781.

- Ding, H.; Zhang, K.; Huang, N. DM-GAN: A Data Augmentation-Based Approach for Imbalanced Medical Image Classification. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 3160–3165.

- Stevens, A.T.H.; de Bruijn, F.J.; Nguyen, L.S. Reinforcement Learning for Ultrasound Image Analysis: A Decade-Long Review. arXiv 2025, arXiv:2502.14995.

- Elmekki, H.; Islam, S.; Alagha, A.; Sami, H.; Spilkin, A.; Zakeri, E.; Zanuttini, A.M.; Bentahar, J.; Kadem, L.; Xie, W.F.; et al. Comprehensive Review of Reinforcement Learning for Medical Ultrasound Imaging. Artif. Intell. Rev. 2025, 58, 284.

- Barek, M.A.; Rahman, M.M.; Akter, S.; Riad, A.B.M.K.I.; Rahman, M.A.; Shahriar, H.; Rahman, A.; Wu, F. Mitigating Insecure Outputs in Large Language Models (LLMs): A Practical Educational Module. In Proceedings of the 2024 IEEE 48th Annual Computers, Software, and Applications Conference (COMPSAC), Osaka, Japan, 2–4 July 2024; pp. 2424–2429.

- Brattain, L.J.; Telfer, B.A.; Dhyani, M.; Grajo, J.R.; Samir, A.E. Machine Learning for Medical Ultrasound: Status, Methods, and Future Opportunities. Abdom. Radiol. 2018, 43, 786–799.

- Zhou, M.; Nie, X.; Liu, Y.; Li, D. Parallel Transformer-CNN Model for Medical Image Segmentation. In Proceedings of the 2024 5th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 12–14 April 2024; pp. 1048–1051.

- Ghislain, M.; Martin, F.; Dausort, M.; Dasnoy-Sumell, D.; Barragan Montero, A.M.; Macq, B. Optimal Fractionation Scheduling for Radiotherapy using Reinforcement Learning. Biomedicines 2025, 13, 1367.

- Moradi, M.; Jiang, R.; Liu, Y.; Madondo, M.; Wu, T.; Sohn, J.J.; Yang, X.; Hasan, Y.; Tian, Z. Automated Treatment Planning for Interstitial HDR Brachytherapy for Locally Advanced Cervical Cancer using Deep Reinforcement Learning. arXiv 2025, arXiv:2506.11957.

- Madondo, M.; Shao, Y.; Liu, Y.; Zhou, J.; Yang, X.; Tian, Z. Patient-Specific Deep Reinforcement Learning for Automatic Replanning in Head-and-Neck Cancer Proton Therapy. arXiv 2025, arXiv:2506.10073.

- Mosqueira-Rey, E.; Hernández-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascarán, J.; Fernández-Leal, Á. Human-in-the-loop machine learning: A state of the art. Artif. Intell. Rev. 2023, 56, 3005–3054.

- Zhang, K.; Wang, H.; Du, J.; Chu, B.; Arévalo, A.R.; Kindle, R.; Celi, L.A.; Doshi-Velez, F. An interpretable RL framework for pre-deployment modeling in ICU hypotension management. npj Digit. Med. 2022, 5, 173.

- Ivliev, I. G-CCACS (Generalized Comprehensible Configurable Adaptive Cognitive Structure): A Reference Architecture for Transparent, Ethical, and Auditable AI in High-Stakes Domains. SSRN Preprint. 2025. Available online: https://papers.ssrn.com/sol3/Delivery.cfm/5195300.pdf?abstractid=5195300 (accessed on 1 January 2020).

- Akter, M.S.; Barek, M.A.; Rahman, M.M.; Riad, A.B.M.K.I.; Rahman, M.A.; Mia, M.R.; Shahriar, H.; Chu, W.; Ahamed, S.I. HIPAA Technical Compliance Evaluation of Laravel-Based mHealth Apps. In Proceedings of the 2024 IEEE International Conference on Digital Health (ICDH), Shenzhen, China, 7–13 July 2024; pp. 58–67.

- Rahman, M.A.; Barek, M.A.; Riad, A.B.M.K.I.; Rahman, M.M.; Rashid, M.B.; Ambedkar, S.; Miaa, M.R.; Wu, F.; Cuzzocrea, A.; Ahamed, S.I. Embedding with Large Language Models for Classification of HIPAA Safeguard Compliance Rules. arXiv 2024, arXiv:2410.20664.

- Wang, S.; Zhao, Z.; Ouyang, X.; Liu, T.; Wang, Q.; Shen, D. Interactive computer-aided diagnosis on medical image using large language models. Commun. Eng. 2024, 3, 133.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. Adv. Neural. Inf. Process. Syst. 2020, 33, 1877–1901.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.H.; Le, Q.V.; Zhou, D. Chain of Thought Prompting Elicits Reasoning in Large Language Models. arXiv 2022, arXiv:2201.11903.

- Jiang, B.; Yang, Y.; Yin, M.; Wang, Z.; Qin, J.; Leung, P.A.K. Fetal Ultrasound Standard Plane Extraction using Orthogonal Triple-slice Deep Reinforcement Learning Agent. In Proceedings of the 2024 IEEE Ultrasonics, Ferroelectrics, and Frequency Control Joint Symposium (UFFC-JS), Taipei, Taiwan, 22–26 September 2024; pp. 1–4.

- Qi, Y.; Lin, L.; Wang, J.; Zhang, B.; Zhang, J. Multi-modal Evidential Fusion Network for Trustworthy PET/CT Tumor Segmentation. arXiv 2024, arXiv:2406.18327.

- Song, B.; Doe, J.; Smith, A. SMuRF: Deep Learning–Based Fusion of CT and Pathology for Survival Prediction. eBioMedicine 2025, 114, 105663.

- Yao, F.; Lin, H.; Xue, Y.-N.; Zhuang, Y.-D.; Bian, S.-Y.; Zhang, Y.-Y.; Yang, Y.-J.; Pan, K.-H. Multimodal imaging deep learning model for predicting extraprostatic extension in prostate cancer using mpMRI and 18 F-PSMA-PET/CT. Cancer Imaging 2025, 25, 103.

- Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large Language Models in Medicine. Nat. Med. 2023, 29, 19–29.

- Javed, H.; El-Sappagh, S.; Abuhmed, T. Robustness in Deep Learning Models for Medical Diagnostics: Security and Adversarial Challenges Towards Robust AI Applications. Artif. Intell. Rev. 2025, 58, 12.

- Lee, C.S.; Kim, H.-J.; Jeon, M. Federated Learning for CT-Based Liver Tumor Detection with Teacher-Student Slice-Aware Network. BMC Med. Imaging 2025, 25, 1020.

- Pati, S.; Baid, U.; Edwards, B.; Sheller, M.; Wang, S.-H.; Reina, G.A.; Foley, P.; Gruzdev, A.; Karkada, D.; Davatzikos, C.; et al. Federated Learning Enables Big Data for Rare Cancer Boundary Detection. Nat. Commun. 2022, 13, 1103.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-Ray8: Hospital-Scale Chest X-Ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3462–3471.

- NVIDIA. NVIDIA Clara Federated Learning (Clara FL). NVIDIA Developer Blog. April 2020. Available online: https://developer.nvidia.com/clara (accessed on 1 January 2020).

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282. Available online: https://proceedings.mlr.press/v54/mcmahan17a/mcmahan17a.pdf (accessed on 1 January 2020).

- Kaissis, G.; Makowski, M.R.; Rückert, D.R.; Braren, B. End-to-End Privacy-Preserving Deep Learning on Multi-Institutional Medical Imaging. Nat. Mach. Intell. 2021, 3, 473–484.

- Ibrahim, S.; Mostafa, M.; Jnadi, A.; Salloum, H.; Osinenko, P. Comprehensive Overview of Reward Engineering and Shaping in Advancing Reinforcement Learning Applications. IEEE Access 2024, 12, 175473–175500.

- Liu, X.-Y.; Wang, Z.; Chen, S.; Zhang, Y.; Li, Q. DOMAIN: Mildly Conservative Model-Based Offline Reinforcement Learning. IEEE Trans. Syst. Man Cybern. Syst. 2025, 1–14.

- Chen, H.; Gomez, C.; Huang, C.M.; Unberath, M. Explainable Medical Imaging AI Needs Human-Centered Design: Guidelines and Evidence from a Systematic Review. npj Digit. Med. 2022, 5, 156.

- Arun, N.; Gaw, N.; Singh, P.; Chang, K.; Aggarwal, M.; Chen, B.; Hoebel, K.; Gupta, S.; Patel, J.; Gidwani, M.; et al. Assessing the (Un)Trustworthiness of Saliency Maps for Localizing Abnormalities in Medical Imaging. Radiol. Artif. Intell. 2020, 2, e190026.

- Kim, W.; Shin, Y.; Park, J.; Sung, Y. Sample-Efficient and Safe Deep Reinforcement Learning via Reset Deep Ensemble Agents. arXiv 2023, arXiv:2310.20287.

- U.S. Food and Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. Available online: https://www.fda.gov/media/145022/download (accessed on 27 July 2025).

- European Medicines Agency. Reflection Paper on the Use of Artificial Intelligence in the Medicinal Product Lifecycle. 2023. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline/reflection-paper-use-artificial-intelligence-ai-medicinal-product-lifecycle_en.pdf (accessed on 1 January 2020).

- Pateria, S.; Subagdja, B.; Tan, A.-H.; Quek, C. Hierarchical Reinforcement Learning: A Comprehensive Survey. ACM Comput. Surv. 2021, 54, 109.

- Chung, M.; Won, J.B.; Kim, G.; Kim, Y.; Ozbulak, U. Evaluating Visual Explanations of Attention Maps for Transformer-Based Medical Imaging. arXiv 2025, arXiv:2503.09535.

- Cardoso, M.J.; Li, W.; Brown, R.; Ma, N.; Kerfoot, E.; Wang, Y.; Murrey, B.; Myronenko, A.; Zhao, C.; Yang, D.; et al. MONAI: An Open-Source Framework for Deep Learning in Healthcare. arXiv 2022, arXiv:2211.02701.

| Study | Focus Area | Modality | RL Method | Remarks/Limitations |
|---|---|---|---|---|
| Zhou et al. (2021) [6] | General RL in medical imaging | Multimodality (CT, MRI, Ultrasound) | DQN, Policy Gradient | Broad review; lacks a detailed taxonomy and clinical translation perspectives |
| Hu et al. (2023) [9] | RL in medical imaging | CT, MRI, X-ray | DQN, PPO, A2C | Comprehensive; discusses taxonomy and clinical challenges |
| Ghesu et al. (2019) [18] | Anatomical landmark detection | 3D CT, MRI | Deep Q-Network (DQN) | Pioneering single-agent DQN for spatial localization, extended to multi-agent coordination |
| Sahba et al. (2006) [16] | Prostate segmentation in TRUS | Ultrasound | Q-learning | State-action design for low-label segmentation; focused on prostate-specific TRUS images |
| Xu et al. (2021) [17] | Image synthesis | Liver MRI | PixGRL (pixel-level graph RL) | Synthesizes gadolinium-enhanced liver tumor images from non-enhanced scans. Limited to liver MRI; lacks generalizability, interpretability, and privacy-aware deployment |
| Ebrahimi et al. (2021) [8] | Radiotherapy planning | CT (prostate) | RL with tumor response modeling | Optimizes dose plans under biological uncertainty; lacks real-time feedback integration and privacy-preserving deployment support |
| Litjens et al. (2017) [12] | Deep learning in medical imaging | Multimodality | N/A | Comprehensive CNN review; lacks coverage of sequential decision-making frameworks |
| Shen et al. (2017) [13] | Deep learning survey | Multimodality | N/A | Focused on supervised deep learning; no exploration of RL or hybrid adaptive learning |
| Luo et al. (2022) [15] | Image registration | CT, MRI | Stochastic Planner–Actor–Critic (SPAC) | Sequential deformation modeling; unsupervised learning; lacks clinical workflow integration and explainability |
| Sun et al. (2020) [19] | Multimodal registration | CT–MRI | Recurrent Actor–Critic RL | Multimodal alignment with lookahead planning; lacks real-time validation and privacy-awareness |
| Liu et al. (2025) [20] | Medical image segmentation | Cardiac, brain MRI | Pixel-level A3C RL | Direct pixel-by-pixel segmentation; more accurate boundaries; needs evaluation on larger clinical datasets; interpretability not yet assessed |
| Judge et al. (2024) [21] | Echocardiography segmentation | Ultrasound (echocardiography) | RL4Seg (domain-adaptive RL) | 99% anatomical validity with limited labels. Limited to echo images; privacy/federated deployment not addressed |

| Aspect | Supervised Learning | Unsupervised Learning | Reinforcement Learning (RL) |
|---|---|---|---|
| Learning Objective | Learn from labeled data to minimize prediction error | Discover hidden patterns or structures in unlabeled data | Learn policies to maximize cumulative reward via interaction |
| Common Applications | Classification, segmentation (e.g., tumor detection) | Clustering, anomaly detection (e.g., lesion discovery) | Landmark localization, adaptive segmentation, active diagnosis |
| Data Requirement | Requires large annotated datasets | Can operate on unlabeled data | Requires interaction-based feedback (environment or simulator) |
| Feedback Type | Ground-truth labels | Data distribution or structural similarity | Reward signal (sparse, delayed, or shaped) |
| Challenges | Annotation cost, domain shift | Ambiguous grouping, lack of interpretability | Reward design, sample inefficiency, generalization |
| Use in Imaging | Gold standard in classification and segmentation tasks | Useful in feature learning, image synthesis | Suited for sequential tasks, human-in-the-loop systems |
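
To make the "Feedback Type" row concrete, the following is a minimal sketch, not taken from any reviewed study, of an agent that learns only from a scalar reward rather than from labels. It assumes a toy 1-D environment: a hypothetical line of 10 positions with a landmark at position 7, where the agent moves left or right and receives +1 when it gets closer to the landmark and −1 otherwise, loosely mirroring the distance-based rewards used in landmark localization. Tabular Q-learning with the Bellman update Q(s, a) ← Q(s, a) + α [r + γ max_a′ Q(s′, a′) − Q(s, a)] stands in for the deep Q-networks discussed in this survey; all names and hyperparameters (`N_POSITIONS`, `TARGET`, `alpha`, `gamma`, `epsilon`) are illustrative assumptions.

```python
import random
from typing import List, Tuple

N_POSITIONS = 10    # hypothetical 1-D "scan line" of 10 positions
TARGET = 7          # hypothetical landmark position
ACTIONS = (-1, +1)  # index 0: move left, index 1: move right


def step(pos: int, action_idx: int) -> Tuple[int, float, bool]:
    """Apply an action; reward +1 if the agent got closer to the landmark, else -1."""
    new_pos = min(max(pos + ACTIONS[action_idx], 0), N_POSITIONS - 1)
    reward = 1.0 if abs(new_pos - TARGET) < abs(pos - TARGET) else -1.0
    return new_pos, reward, new_pos == TARGET


def greedy(q_row: List[float]) -> int:
    """Pick the action with the highest Q-value (ties go to 'left')."""
    return 0 if q_row[0] >= q_row[1] else 1


def train_q_learning(episodes: int = 2000, alpha: float = 0.5, gamma: float = 0.9,
                     epsilon: float = 0.2, max_steps: int = 30, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_POSITIONS)]
    starts = [p for p in range(N_POSITIONS) if p != TARGET]
    for _ in range(episodes):
        pos = rng.choice(starts)
        for _ in range(max_steps):
            # Epsilon-greedy exploration
            a = rng.randrange(2) if rng.random() < epsilon else greedy(q[pos])
            new_pos, r, done = step(pos, a)
            # Bellman target; no bootstrapping from the terminal (landmark) state
            target = r if done else r + gamma * max(q[new_pos])
            q[pos][a] += alpha * (target - q[pos][a])
            pos = new_pos
            if done:
                break
    return q


policy = [greedy(row) for row in train_q_learning()]
```

After training, the greedy policy moves right (action 1) from every position left of the landmark and left (action 0) from every position to its right, even though no position was ever labeled with a correct action.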

| Task/Modality | CT | MRI | X-Ray | Ultrasound | PET-CT | Multimodal |
|---|---|---|---|---|---|---|
| Segmentation | 22 | 19 | 15 | 6 | 4 | 3 |
| Detection | 14 | 11 | 9 | 5 | 2 | 1 |
| Planning | 8 | 5 | – | 3 | 2 | 2 |
| Navigation | 5 | – | – | 4 | – | 1 |
| Reconstruction | 7 | 6 | 2 | 1 | 1 | 1 |
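
The task-by-modality counts above can be reduced to marginal totals and a list of empty intersections, which is the kind of gap analysis the taxonomy relies on. The sketch below is a minimal illustration under two stated assumptions: "–" is read as zero studies, and a study may be counted in more than one cell, so the grand total need not equal the number of distinct studies. `COUNTS`, `task_totals`, `modality_totals`, and `sparse_cells` are hypothetical names.

```python
from typing import Dict, List, Tuple

MODALITIES = ["CT", "MRI", "X-Ray", "Ultrasound", "PET-CT", "Multimodal"]

# Counts transcribed from the task-by-modality table; "–" is assumed to mean 0
COUNTS: Dict[str, List[int]] = {
    "Segmentation":   [22, 19, 15, 6, 4, 3],
    "Detection":      [14, 11, 9, 5, 2, 1],
    "Planning":       [8, 5, 0, 3, 2, 2],
    "Navigation":     [5, 0, 0, 4, 0, 1],
    "Reconstruction": [7, 6, 2, 1, 1, 1],
}


def task_totals(counts: Dict[str, List[int]]) -> Dict[str, int]:
    """Row sums: number of cell entries per task."""
    return {task: sum(row) for task, row in counts.items()}


def modality_totals(counts: Dict[str, List[int]]) -> Dict[str, int]:
    """Column sums: number of cell entries per modality."""
    return {m: sum(row[i] for row in counts.values()) for i, m in enumerate(MODALITIES)}


def sparse_cells(counts: Dict[str, List[int]], threshold: int = 0) -> List[Tuple[str, str]]:
    """(task, modality) pairs with at most `threshold` studies: candidate research gaps."""
    return [(task, MODALITIES[i])
            for task, row in counts.items()
            for i, c in enumerate(row) if c <= threshold]
```

Applied to the table, CT accounts for 56 of the 159 cell entries while PET-CT and multimodal work account for only 9 and 8, and the four empty intersections are planning on X-ray and navigation on MRI, X-ray, and PET-CT. Raising `threshold` to 1 widens the list to near-empty cells.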

| Year | Count | Key References/Trends | Focus Area |
|---|---|---|---|
| Actual Survey Publications (2015–2025) | | | |
| 2015 | 1 | – | RL intro in imaging |
| 2016 | 2 | Q-learning in CT [22] | Landmark navigation |
| 2017 | 2 | – | Episodic segmentation |
| 2018 | 3 | Early DRL for segmentation [12] | DQN usage |
| 2019 | 5 | PPO, DQN adoption [9] | Policy learning |
| 2020 | 6 | DRL in registration [23] | Spatial alignment |
| 2021 | 8 | DRL Survey [6] | Algorithm review |
| 2022 | 10 | Clinical RL [7] | Deployment pipelines |
| 2023 | 12 | Healthcare RL [9] | Generalization focus |
| 2024 | 15 | Ultrasound-focused surveys [46] | Real-time analysis |
| 2025 | 20 | Human-in-the-loop RL [47] | Interactive training |
| Projected Survey Publications (LLM-Driven, 2026–2030) | | | |
| 2026 | 23 | LLM-assisted review generation | NLP-aided taxonomy |
| 2027 | 27 | Auto taxonomies from LLMs | Programmatic analysis |
| 2028 | 32 | RL in PET/SPECT with FL | Rare modality focus |
| 2029 | 38 | Multi-agent explainable RL | Team-based diagnosis |
| 2030 | 45 | Co-authored LLM + human surveys | Autonomous meta-review |
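
The growth implied by this table can be checked with a compound annual growth rate (CAGR), (end / start)^(1 / years) − 1. The sketch below transcribes the counts from the table; the names `ACTUAL`, `PROJECTED`, `cagr`, and `year_over_year` are illustrative, and the code only describes the listed numbers. It does not reproduce the method used to generate the 2026–2030 projections, which the table does not state.

```python
from typing import Dict

# Survey counts transcribed from the publication-trend table
ACTUAL: Dict[int, int] = {2015: 1, 2016: 2, 2017: 2, 2018: 3, 2019: 5, 2020: 6,
                          2021: 8, 2022: 10, 2023: 12, 2024: 15, 2025: 20}
PROJECTED: Dict[int, int] = {2026: 23, 2027: 27, 2028: 32, 2029: 38, 2030: 45}


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two counts."""
    return (end / start) ** (1.0 / years) - 1.0


def year_over_year(series: Dict[int, int]) -> Dict[int, float]:
    """Growth of each listed year relative to the previous listed year."""
    years = sorted(series)
    return {y: series[y] / series[p] - 1.0 for p, y in zip(years, years[1:])}


historical_rate = cagr(ACTUAL[2015], ACTUAL[2025], 2025 - 2015)   # about 0.349
projected_rate = cagr(ACTUAL[2025], PROJECTED[2030], 2030 - 2025)  # about 0.176
total_actual = sum(ACTUAL.values())                                # 84
```

The observed series grows by roughly 35% per year (from 1 survey in 2015 to 20 in 2025), whereas the projection implies roughly 18% per year (from 20 to 45 by 2030): the absolute yearly counts keep rising, but the projected relative growth is about half the historical rate.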

| LLM Capability | Contribution to Survey Growth |
|---|---|
| Automated Extraction | Extracts task, modality, and algorithm types from hundreds of papers to generate structured taxonomies. |
| Trend Analysis | Tracks term frequency (e.g., PPO, prostate segmentation) to detect emerging focus areas. |
| Drafting | Auto-generates abstracts, captions, LaTeX tables, and comparison matrices. |
| Gap Highlighting | Identifies underexplored intersections of task, modality, and method. |
| Human–AI Collaboration | Enables co-authored surveys, expanding interdisciplinary literature creation. |
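
The "Trend Analysis" row can be approximated, without any LLM, by counting how many documents per year mention each term. The sketch below is a keyword-matching stand-in for the LLM-based extraction described in the table: an LLM pipeline would replace the regular expressions with model-based tagging of task, modality, and algorithm. `term_trends` is a hypothetical helper, and the three records are made-up toy inputs, not data from the surveyed corpus.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def term_trends(records: Iterable[Tuple[int, str]], terms: Iterable[str]) -> Dict[int, Counter]:
    """Count, per year, how many documents mention each term (case-insensitive, whole words)."""
    patterns = {t: re.compile(r"\b" + re.escape(t) + r"\b", re.IGNORECASE) for t in terms}
    trends: Dict[int, Counter] = defaultdict(Counter)
    for year, text in records:
        for term, pattern in patterns.items():
            if pattern.search(text):
                trends[year][term] += 1  # document frequency, not raw occurrence count
    return dict(trends)


# Hypothetical abstracts, for illustration only
records = [
    (2022, "A DQN agent for landmark detection in CT"),
    (2023, "PPO for prostate segmentation in ultrasound"),
    (2023, "PPO-based deformable registration"),
]
trends = term_trends(records, ["DQN", "PPO", "prostate segmentation"])
```

Counting documents rather than raw occurrences keeps a single abstract that repeats a term many times from dominating a year's count.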

| Task | Reward Function Design | Clinical Validation Methods |
|---|---|---|
| Radiotherapy Planning | Dose-distribution-based rewards; penalization of radiation to organs-at-risk (OARs); trade-off between tumor coverage and toxicity minimization | Expert oncologist scoring; dose–volume histogram (DVH) comparisons; policy audit trails for reproducibility |
| Lesion Detection/Localization | Localization accuracy (IoU with ground-truth bounding boxes); sensitivity–specificity trade-offs; penalties for false negatives (missed lesions) | Radiologist agreement scoring; Cohen’s kappa for inter-rater consistency; clinical case-based decision checks |
| Navigation (e.g., endoscopy and biopsy guidance) | Trajectory-based rewards (minimizing distance to target); safety constraints (avoiding critical anatomical regions); smoothness penalties to reduce abrupt movements | Expert-in-the-loop path evaluation; consistency with clinical navigation guidelines; retrospective comparison against gold-standard trajectories |
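
The reward designs listed above can be written as simple scalar functions. The sketch below gives one illustrative form per row, assuming 2-D axis-aligned boxes `(x1, y1, x2, y2)` for localization, Euclidean positions and move vectors for navigation, and per-OAR doses with hypothetical dose limits (both assumed to be in Gy) for radiotherapy. The function names, weights (`safety_penalty`, `smooth_weight`, `oar_weight`), and functional forms are assumptions for illustration, not the reward definitions of any reviewed study; the localization reward follows the common pattern of rewarding the sign of the IoU change.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), axis-aligned


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    def area(r: Box) -> float:
        return max(0.0, r[2] - r[0]) * max(0.0, r[3] - r[1])
    inter = area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def localization_reward(prev_box: Box, new_box: Box, gt_box: Box) -> float:
    """+1 if the action increased IoU with the ground-truth box, -1 otherwise."""
    return 1.0 if iou(new_box, gt_box) > iou(prev_box, gt_box) else -1.0


def navigation_reward(prev_pos: Sequence[float], new_pos: Sequence[float],
                      target: Sequence[float], prev_move: Sequence[float],
                      new_move: Sequence[float], in_critical_region: bool,
                      safety_penalty: float = 10.0, smooth_weight: float = 0.1) -> float:
    """Progress toward the target, minus a smoothness penalty and a safety penalty."""
    progress = math.dist(prev_pos, target) - math.dist(new_pos, target)
    abruptness = math.dist(prev_move, new_move)  # change between consecutive moves
    penalty = safety_penalty if in_critical_region else 0.0
    return progress - smooth_weight * abruptness - penalty


def dose_reward(tumor_coverage: float, oar_doses: Sequence[float],
                oar_limits: Sequence[float], oar_weight: float = 1.0) -> float:
    """Tumor coverage (fraction 0..1) minus weighted OAR dose above each limit."""
    overdose = sum(max(0.0, d - lim) for d, lim in zip(oar_doses, oar_limits))
    return tumor_coverage - oar_weight * overdose
```

Because tumor coverage is a fraction while OAR overdose is measured in dose units, the terms live on different scales; the weights are where the clinical trade-off between coverage and toxicity would actually be encoded and would need tuning with oncologists.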

| LLM Role | Example Task | Added Value (Conceptual) | Representative Work | Validation Method |
|---|---|---|---|---|
| Pipeline Automation | Modality–task–algorithm mapping for MRI lesion workflows | Reduces manual trial-and-error; suggests suitable RL families (e.g., actor–critic vs. DQN) from textual protocols and prior art | Shen et al. (LLMs in medicine) [66] | Cross-check with protocol databases, benchmark comparisons |
| Annotation and Reporting | CXR region suggestions, structured report drafting | Accelerates expert labeling; standardizes terminology and evidence statements for RL feedback loops | Javed et al. (robustness/ops) [67] | Expert-in-the-loop scoring, inter-rater agreement |
| Cross-Modal Integration | PET-CT fusion reasoning; ultrasound navigation narratives | Bridges heterogeneous inputs with natural-language rationales for RL state/context augmentation | Elmekki et al. (ultrasound RL review) [47] | Consistency check across modalities, clinician review |
| Clinical Decision Support | Trajectory summarization; policy explanation for treatment planning | Improves interpretability and clinician trust via guideline-aware, case-linked narratives | Shen et al. (LLMs in medicine) [66] | Cohen’s kappa with clinicians, policy audit trails |
| Ethics and Compliance | HIPAA/GDPR-aligned annotation pipelines, privacy-preserving summaries | Ensures safe deployment in regulated healthcare settings; flags privacy/security risks | Ivliev (G-CCACS architecture) [56] | Regulatory audit, compliance checklist validation |
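
Cohen’s kappa appears both here and in the reward-design table as a validation metric. It corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed fraction of items on which two raters give the same label and p_e is the agreement expected from each rater’s own label frequencies. The following is a minimal standard-library sketch; `cohens_kappa` is a hypothetical helper name, and the ratings in the usage line are invented for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label for every item: perfect agreement
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical accept (1) / reject (0) judgments of eight LLM-generated explanations
kappa = cohens_kappa([1, 1, 0, 1, 0, 1, 1, 0], [1, 0, 0, 1, 0, 1, 1, 1])
```

In this example the raters agree on 6 of 8 items (p_o = 0.75), but because both accept most items, chance agreement is already p_e ≈ 0.53, so κ ≈ 0.47 signals only moderate agreement despite the 75% raw match.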

| Component | Description |
|---|---|
| Reward Design | Binary reward: correct = +1, incorrect = −1 |
| RL Algorithms | DQN vs. PPO |
| Encoder Backbone | Pretrained ResNet-18 |
| LLM Integration | GPT-based post-action explanation |
| Privacy Mechanisms | Differential Privacy (DP), Federated Learning (FL) |
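
The setup above pairs a binary reward with DP and FL. The sketch below shows one way these pieces could fit together, assuming model updates are flat lists of floats and the server runs a DP-FedAvg-style round: each client’s update is clipped to an L2 norm `clip_norm`, the clipped updates are averaged, and Gaussian noise with standard deviation `noise_multiplier × clip_norm / n` is added for n clients. All names are hypothetical, the DQN/PPO learners and the ResNet-18 encoder are omitted, and no privacy accounting is performed, so the sketch does not by itself certify any particular (ε, δ) guarantee.

```python
import math
import random
from typing import List, Optional, Sequence

Vector = List[float]


def binary_reward(predicted: int, label: int) -> float:
    """Binary reward from the setup table: +1 if correct, -1 if incorrect."""
    return 1.0 if predicted == label else -1.0


def clip_to_norm(update: Sequence[float], clip_norm: float) -> Vector:
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_fedavg_round(global_weights: Sequence[float],
                    client_updates: Sequence[Sequence[float]],
                    clip_norm: float = 1.0,
                    noise_multiplier: float = 1.0,
                    rng: Optional[random.Random] = None) -> Vector:
    """One server round: clip each client update, average, then add Gaussian noise."""
    rng = rng or random.Random()
    n = len(client_updates)
    clipped = [clip_to_norm(u, clip_norm) for u in client_updates]
    mean_update = [sum(col) / n for col in zip(*clipped)]
    # One client can shift the mean of clipped updates by at most clip_norm / n
    sigma = noise_multiplier * clip_norm / n
    return [w + m + rng.gauss(0.0, sigma) for w, m in zip(global_weights, mean_update)]
```

Setting `noise_multiplier` to 0 reduces the round to plain federated averaging of clipped updates, which is a convenient way to check the aggregation logic before enabling noise.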

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Islam Riad, A.B.M.K.; Barek, M.A.; Shahriar, H.; Francia, G., III; Ahamed, S.I. Reinforcement Learning in Medical Imaging: Taxonomy, LLMs, and Clinical Challenges. Future Internet 2025, 17, 396. https://doi.org/10.3390/fi17090396


Chicago/Turabian Style: Islam Riad, A. B. M. Kamrul, Md. Abdul Barek, Hossain Shahriar, Guillermo Francia, III, and Sheikh Iqbal Ahamed. 2025. "Reinforcement Learning in Medical Imaging: Taxonomy, LLMs, and Clinical Challenges" Future Internet 17, no. 9: 396. https://doi.org/10.3390/fi17090396

APA Style: Islam Riad, A. B. M. K., Barek, M. A., Shahriar, H., Francia, G., III, & Ahamed, S. I. (2025). Reinforcement Learning in Medical Imaging: Taxonomy, LLMs, and Clinical Challenges. Future Internet, 17(9), 396. https://doi.org/10.3390/fi17090396