Technical Review: Architecting an AI-Driven Decision Support System for Enhanced Online Learning and Assessment

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Objectives of the Review

- To systematically evaluate the architectural components, AI techniques, and integration strategies of AI-DSS frameworks within online learning platforms, emphasizing scalability and adaptability.

- To assess design considerations, including data requirements, model selection, and user-centric features, alongside performance metrics such as accuracy, scalability, computational efficiency, and learner satisfaction.

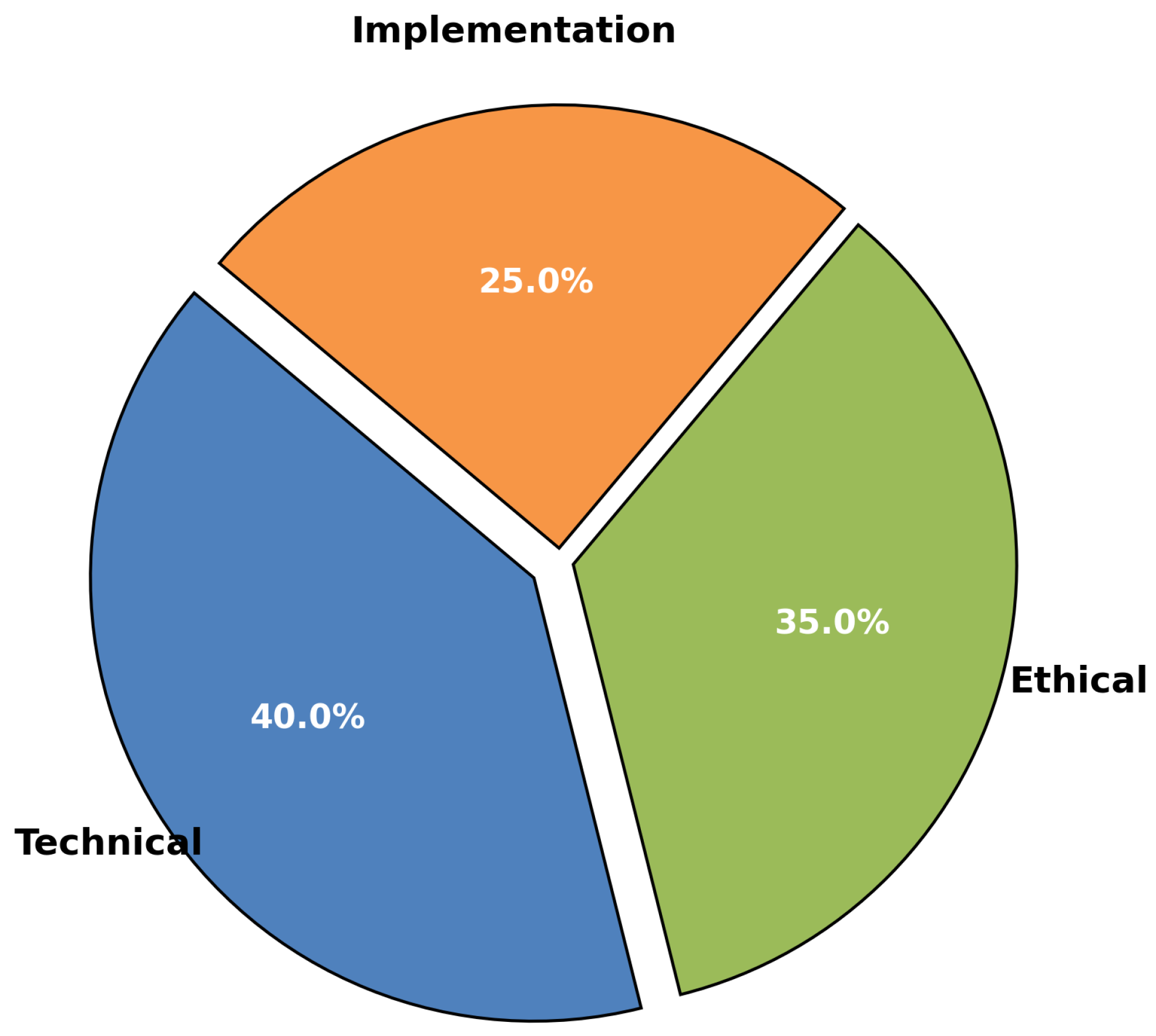

- To identify technical, ethical, and practical challenges in AI-DSS implementation, including data privacy, algorithmic bias, and system interoperability, while proposing directions for future research to advance the field.

1.3. Scope and Methodology

2. Related Work

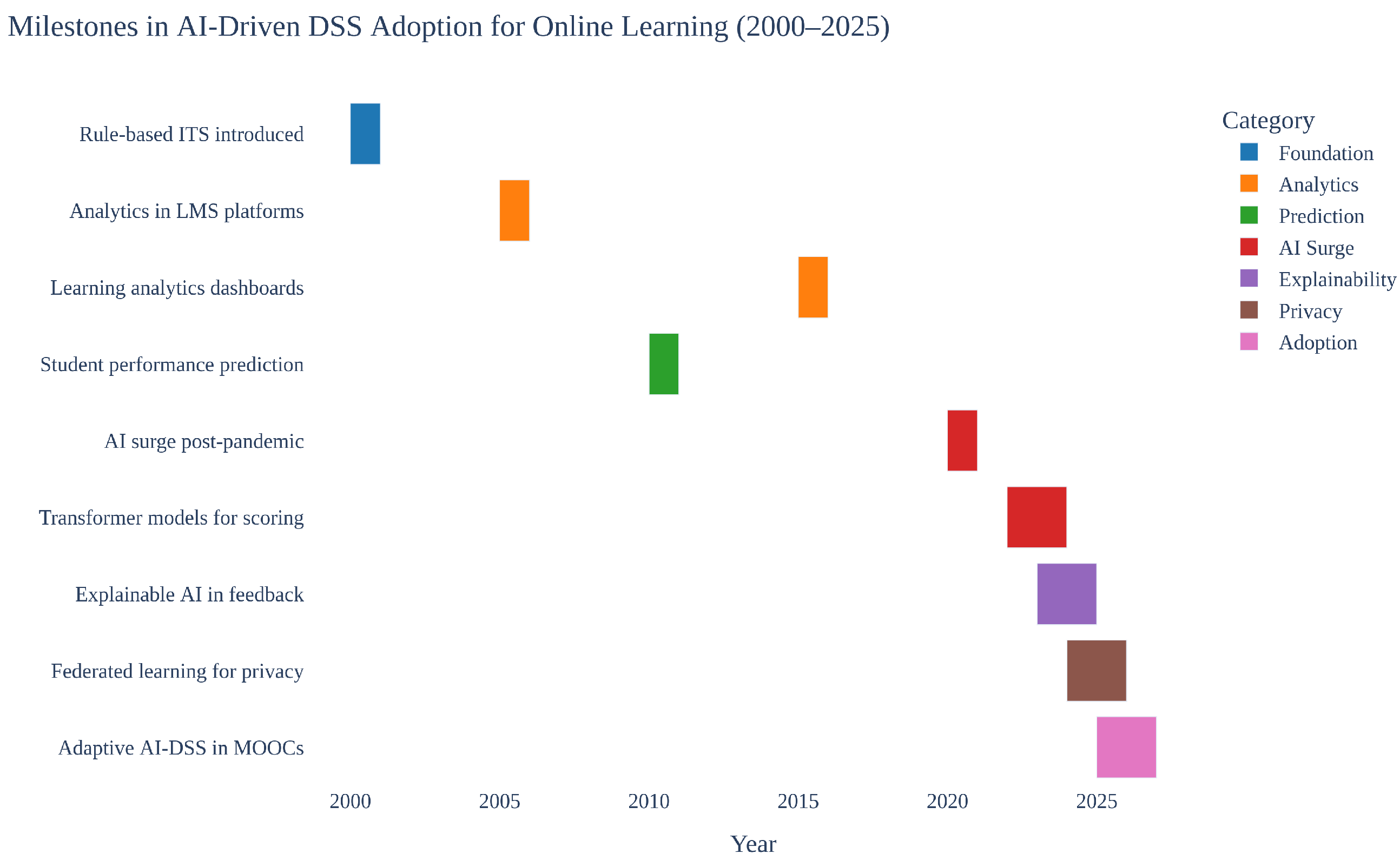

2.1. Evolution of Online Learning Systems

2.2. AI Techniques in Decision Support Systems

2.3. Existing AI-DSS Applications

3. Design Considerations for AI-Driven Decision Support Systems

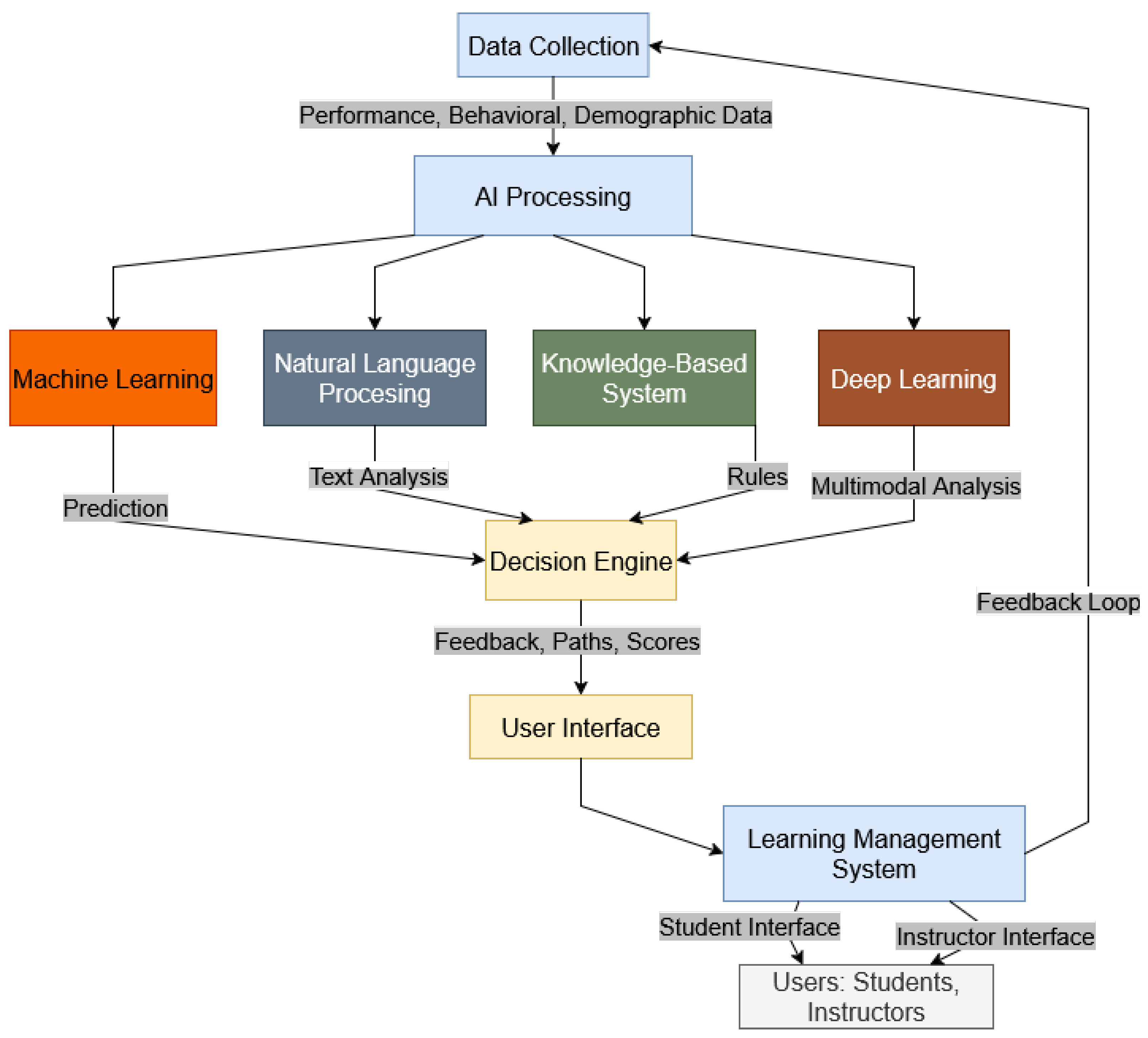

3.1. Proposed System Architecture

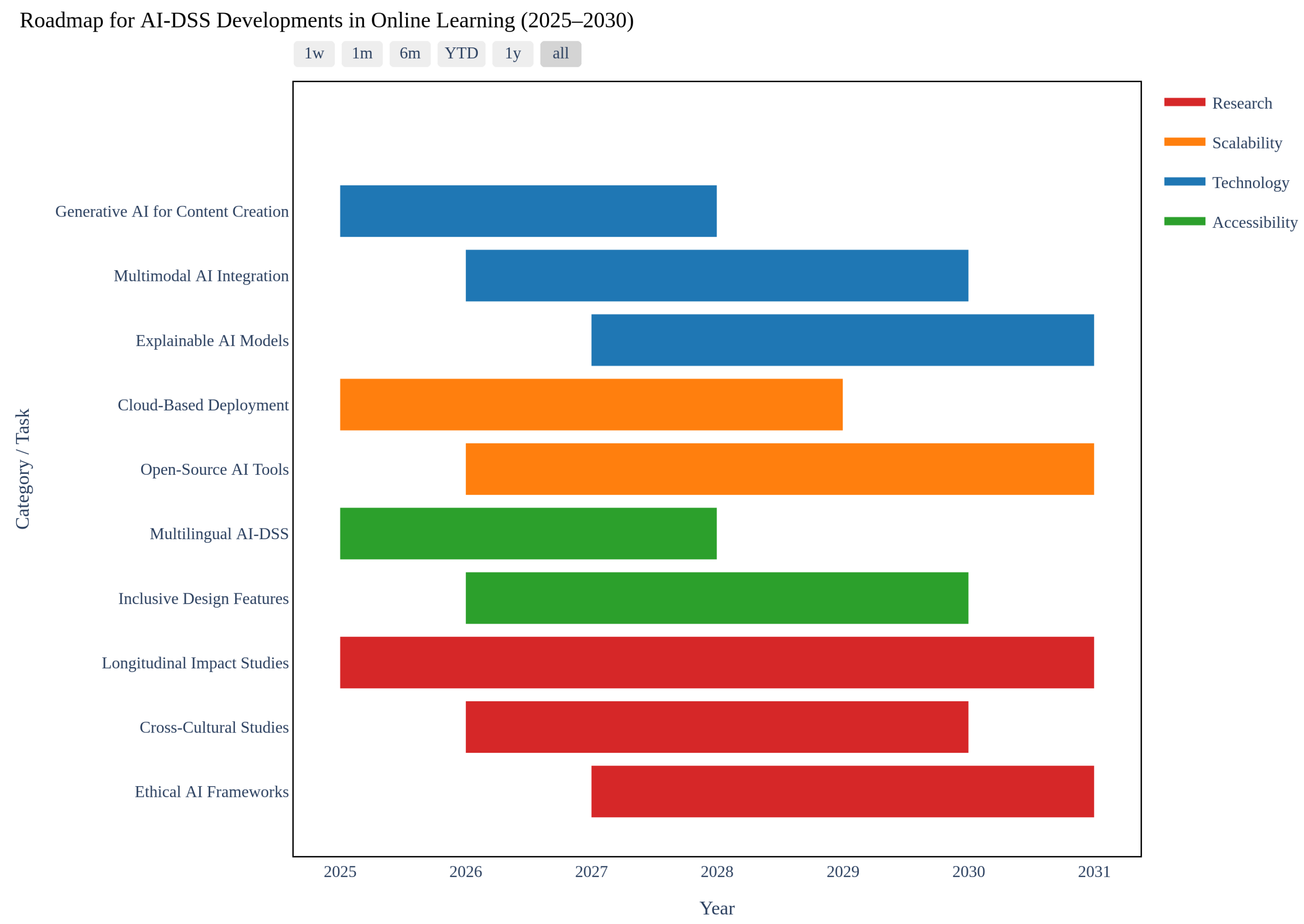

Implementation and Validation Roadmap

3.2. Data Requirements and Challenges

3.3. Model Selection Strategies

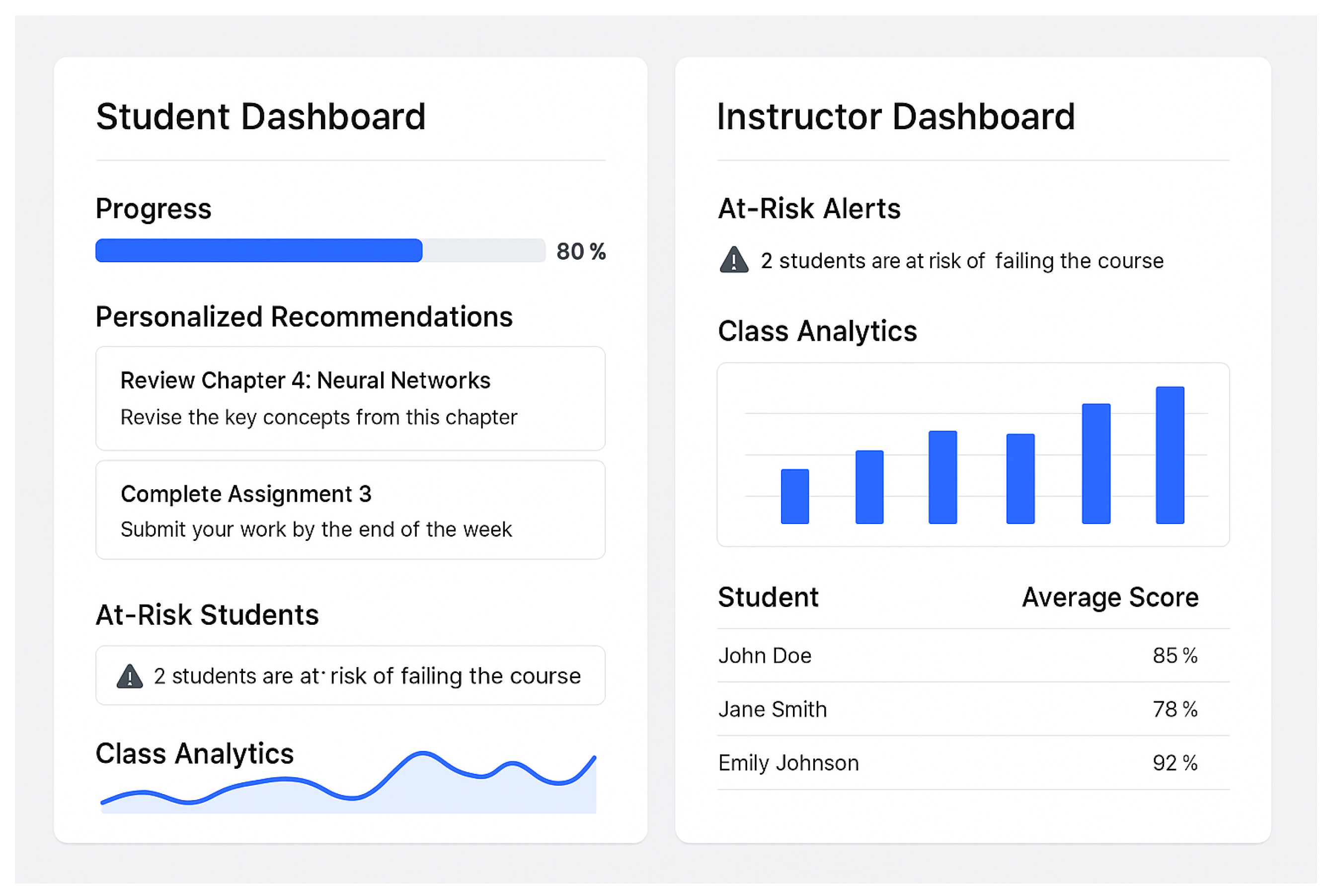

3.4. User-Centric Design Principles

4. Performance Metrics and Evaluation

4.1. Metrics for AI-DSSs

4.2. Evaluation Frameworks

4.3. Benchmarking

5. Challenges and Limitations

5.1. Technical Challenges

5.2. Ethical and Privacy Concerns

5.3. Implementation Barriers

6. Case Studies

6.1. Case Study 1: AI-DSS in a MOOC Platform

6.2. Case Study 2: Adaptive Learning System

7. Future Directions

7.1. Emerging AI Technologies

7.2. Scalability and Accessibility

7.3. Research Gaps

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Xiong, Y.; Ling, Q.; Li, X. Ubiquitous e-Teaching and e-Learning: China’s Massive Adoption of Online Education and Launching MOOCs Internationally during the COVID-19 Outbreak. Wirel. Commun. Mob. Comput. 2021, 2021, 6358976.

- Likovič, A.; Rojko, K. E-Learning and a Case Study of Coursera and edX Online Platforms. Res. Soc. Change 2022, 14, 94–120.

- Garrett, R.; Legon, R.; Fredericksen, E.E.; Simunich, B. CHLOE 5: The Pivot to Remote Teaching in Spring 2020 and Its Impact. In The Changing Landscape of Online Education; Quality Matters & Eduventures Research: Annapolis, MD, USA, 2020; Available online: https://www.qualitymatters.org/qa-resources/resource-center/articles-resources/CHLOE-project (accessed on 10 December 2022).

- Gm, D.; Goudar, R.; Kulkarni, A.A.; Rathod, V.N.; Hukkeri, G.S. A digital recommendation system for personalized learning to enhance online education: A review. IEEE Access 2024, 12, 34019–34041.

- Zawacki-Richter, O.; Marín, V.I. Artificial Intelligence in Education: A Systematic Review of Decision Support Systems. Educ. Technol. Res. Dev. 2023, 71, 987–1012.

- Panda, S.P. AI in Decision Support Systems. In SSRN eJournal; Elsevier SSRN: Rochester, NY, USA, 2021; ISSN 2994-3981.

- Daniel, J. Running Distance Education at Scale: Open Universities, Open Schools, and MOOCs. In Handbook of Open, Distance and Digital Education; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–18.

- Amit, S.; Karim, R.; Kafy, A.A. Mapping emerging massive open online course (MOOC) markets before and after COVID 19: A comparative perspective from Bangladesh and India. Spat. Inf. Res. 2022, 30, 655–663.

- Pesovski, I.; Santos, R.; Henriques, R.; Trajkovik, V. Generative AI for Customizable Learning Experiences. Sustainability 2024, 16, 3034.

- Wang, H.; Li, J. Scalability Issues in Online Learning Platforms: A Technical Perspective. J. Educ. Comput. Res. 2022, 60, 1123–1145.

- Lee, D.; Park, J. Personalized Learning in Online Environments: Challenges and Opportunities. Int. J. Artif. Intell. Educ. 2020, 30, 567–589.

- Kumar, V.; Boulanger, D. Automated Essay Scoring: Advances and Challenges in AI-Based Assessment. Comput. Educ. Artif. Intell. 2023, 4, 100098.

- Zhang, L.; Huang, R. Natural Language Processing for Automated Assessment in Online Learning. IEEE Trans. Learn. Technol. 2021, 14, 789–802.

- Albreiki, B.; Zaki, N.; Alashwal, H. A systematic literature review of student’ performance prediction using machine learning techniques. Educ. Sci. 2021, 11, 552.

- Miller, T.; Durlik, I.; Łobodzińska, A.; Dorobczyński, L.; Jasionowski, R. AI in context: Harnessing domain knowledge for smarter machine learning. Appl. Sci. 2024, 14, 11612.

- Mahamuni, A.J.; Parminder; Tonpe, S.S. Enhancing educational assessment with artificial intelligence: Challenges and opportunities. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 1–5.

- Trajkovski, G.; Hayes, H. AI-Assisted Assessment in Education: Transforming Assessment and Measuring Learning; Springer: Berlin/Heidelberg, Germany, 2025.

- Pantelimon, F.V.; Bologa, R.; Toma, A.; Posedaru, B.S. The evolution of AI-driven educational systems during the COVID-19 pandemic. Sustainability 2021, 13, 13501.

- Shah, D. Global Trends in E-Learning: Enrollment and Technology Adoption (2020–2024). IEEE Trans. Learn. Technol. 2024, 17, 123–135.

- Chinnadurai, J.; Karthik, A.; Ramesh, J.V.N.; Banerjee, S.; Rajlakshmi, P.; Rao, K.V.; Sudarvizhi, D.; Rajaram, A. Enhancing online education recommendations through clustering-driven deep learning. Biomed. Signal Process. Control 2024, 97, 106669.

- Al-Imamy, S.Y.; Zygiaris, S. Innovative students’ academic advising for optimum courses’ selection and scheduling assistant: A blockchain based use case. Educ. Inf. Technol. 2022, 27, 5437–5455.

- Fagbohun, O.; Iduwe, N.P.; Abdullahi, M.; Ifaturoti, A.; Nwanna, O. Beyond traditional assessment: Exploring the impact of large language models on grading practices. J. Artif. Intell. Mach. Learn. Data Sci. 2024, 2, 1–8.

- Alruwais, N.; Zakariah, M. Student-engagement detection in classroom using machine learning algorithm. Electronics 2023, 12, 731.

- Chen, X.; Zhang, Y. Machine Learning for Student Performance Prediction in Online Learning. Comput. Educ. 2022, 178, 104398.

- Liu, Q.; Chen, Z. Reinforcement Learning for Adaptive Learning Paths in Online Education. J. Educ. Data Min. 2023, 15, 45–67.

- Amin, S.; Uddin, M.I.; Alarood, A.A.; Mashwani, W.K.; Alzahrani, A.; Alzahrani, A.O. Smart E-learning framework for personalized adaptive learning and sequential path recommendations using reinforcement learning. IEEE Access 2023, 11, 89769–89790.

- Winder, P. Reinforcement Learning; O’Reilly Media: Santa Rosa, CA, USA, 2020.

- Assami, S.; Daoudi, N.; Ajhoun, R. Personalization Criteria for Enhancing Learner Engagement in MOOC Platforms. In Proceedings of the 2018 IEEE Global Engineering Education Conference (EDUCON), Santa Cruz de Tenerife, Canary Islands, Spain, 18–20 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1265–1272.

- Li, Y. Reinforcement learning in practice: Opportunities and challenges. arXiv 2022, arXiv:2202.11296.

- Stasolla, F.; Zullo, A.; Maniglio, R.; Passaro, A.; Di Gioia, M.; Curcio, E.; Martini, E. Deep Learning and Reinforcement Learning for Assessing and Enhancing Academic Performance in University Students: A Scoping Review. AI 2025, 6, 40.

- Ludwig, S.; Mayer, C.; Hansen, C.; Eilers, K.; Brandt, S. Automated essay scoring using transformer models. Psych 2021, 3, 897–915.

- Hadi, M.U.; Al Tashi, Q.; Qureshi, R.; Shah, A.; Muneer, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Hassan, S.Z.; et al. Large language models: A comprehensive survey of its applications, challenges, limitations, and future prospects. Authorea Prepr. 2023, 1, 1–26.

- Chen, J.; Liu, Z.; Huang, X.; Wu, C.; Liu, Q.; Jiang, G.; Pu, Y.; Lei, Y.; Chen, X.; Wang, X.; et al. When large language models meet personalization: Perspectives of challenges and opportunities. World Wide Web 2024, 27, 42.

- Elsayed, H. The impact of hallucinated information in large language models on student learning outcomes: A critical examination of misinformation risks in AI-assisted education. North. Rev. Algorithmic Res. Theor. Comput. Complex. 2024, 9, 11–23.

- Chen, C.; Zhang, P.; Zhang, H.; Dai, J.; Yi, Y.; Zhang, H.; Zhang, Y. Deep learning on computational-resource-limited platforms: A survey. Mob. Inf. Syst. 2020, 2020, 8454327.

- Jain, V.; Singh, I.; Syed, M.; Mondal, S.; Palai, D.R. Enhancing educational interactions: A comprehensive review of AI chatbots in learning environments. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 14–15 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.

- Villegas-Ch, W.; García-Ortiz, J. Enhancing learning personalization in educational environments through ontology-based knowledge representation. Computers 2023, 12, 199.

- Novais, A.S.d.; Matelli, J.A.; Silva, M.B. Fuzzy soft skills assessment through active learning sessions. Int. J. Artif. Intell. Educ. 2024, 34, 416–451.

- Sridharan, T.B.; Akilashri, P.S.S. Multimodal learning analytics for students behavior prediction using multi-scale dilated deep temporal convolution network with improved chameleon Swarm algorithm. Expert Syst. Appl. 2025, 286, 128113.

- Chen, Y.; Li, X.; Wang, H. Early Prediction of Student Dropout in Online Courses Using Machine Learning Methods. Educ. Technol. Res. Dev. 2022, 70, 1123–1145.

- Boulhrir, T.; Hamash, M. Unpacking Artificial Intelligence in Elementary Education: A Comprehensive Thematic Analysis Systematic Review. Comput. Educ. Artif. Intell. 2025, 9, 100442.

- Kabudi, T.; Pappas, I.; Olsen, D.H. AI-enabled adaptive learning systems: A systematic mapping of the literature. Comput. Educ. Artif. Intell. 2021, 2, 100017.

- Šarić, I.; Grubišić, A.; Šerić, L.; Robinson, T.J. Data-Driven Student Clusters Based on Online Learning Behavior in a Flipped Classroom with an Intelligent Tutoring System. In Proceedings of the Intelligent Tutoring Systems, Kingston, Jamaica, 3–7 June 2019; Coy, A., Hayashi, Y., Chang, M., Eds.; Springer: Cham, Switzerland, 2019; pp. 72–81.

- Lu, C.; Cutumisu, M. Integrating Deep Learning into an Automated Feedback Generation System for Automated Essay Scoring. In Proceedings of the 14th International Conference on Educational Data Mining (EDM 2021), Paris, France, 29 June–2 July 2021; International Educational Data Mining Society: Worcester, MA, USA, 2021; pp. 615–621.

- Happer, C. Adaptive Multimodal Feedback Systems: Leveraging AI for Personalized Language Skill Development in Hybrid Classrooms. 2025. Available online: https://www.researchgate.net/publication/393047394_Adaptive_Multimodal_Feedback_Systems_Leveraging_AI_for_Personalized_Language_Skill_Development_in_Hybrid_Classrooms (accessed on 25 May 2025).

- Yim, I.H.Y.; Wegerif, R. Teachers’ perceptions, attitudes, and acceptance of artificial intelligence (AI) educational learning tools: An exploratory study on AI literacy for young students. Future Educ. Res. 2024, 2, 318–345.

- Bañeres, D.; Rodríguez, M.E.; Guerrero-Roldán, A.E.; Karadeniz, A. An early warning system to detect at-risk students in online higher education. Appl. Sci. 2020, 10, 4427.

- Islam, M.M.; Sojib, F.H.; Mihad, M.F.H.; Hasan, M.; Rahman, M. The integration of explainable ai in educational data mining for student academic performance prediction and support system. Telemat. Inform. Rep. 2025, 18, 100203.

- Hu, Y.; Mello, R.F.; Gašević, D. Automatic analysis of cognitive presence in online discussions: An approach using deep learning and explainable artificial intelligence. Comput. Educ. Artif. Intell. 2021, 2, 100037.

- Morales-Chan, M.; Amado-Salvatierra, H.R.; Medina, J.A.; Barchino, R.; Hernández-Rizzardini, R.; Teixeira, A.M. Personalized feedback in massive open online courses: Harnessing the power of LangChain and OpenAI API. Electronics 2024, 13, 1960.

- Zhou, Y.; Zou, S.; Liwang, M.; Sun, Y.; Ni, W. A teaching quality evaluation framework for blended classroom modes with multi-domain heterogeneous data integration. Expert Syst. Appl. 2025, 289, 127884.

- Heil, J.; Ifenthaler, D. Online Assessment in Higher Education: A Systematic Review. Online Learn. 2023, 27, 187–218.

- Shao, J.; Gao, Q.; Wang, H. Online learning behavior feature mining method based on decision tree. J. Cases Inf. Technol. (JCIT) 2022, 24, 1–15.

- Amrane-Cooper, L.; Hatzipanagos, S.; Marr, L.; Tait, A. Online assessment and artificial intelligence: Beyond the false dilemma of heaven or hell. Open Prax. 2024, 16, 687–695.

- Akinwalere, S.; Ivanov, V. Artificial Intelligence in Higher Education: Challenges and Opportunities. Bord. Crossing 2022, 12, 1–15.

- Ma, H.; Ismail, L.; Han, W. A bibliometric analysis of artificial intelligence in language teaching and learning (1990–2023): Evolution, trends and future directions. Educ. Inf. Technol. 2024, 29, 25211–25235.

- Kuzminykh, I.; Nawaz, T.; Shenzhang, S.; Ghita, B.; Raphael, J.; Xiao, H. Personalised feedback framework for online education programmes using generative AI. arXiv 2024, arXiv:2410.11904.

- Fodouop Kouam, A.W. The effectiveness of intelligent tutoring systems in supporting students with varying levels of programming experience. Discov. Educ. 2024, 3, 278.

- Susilawati, A. A Bibliometric Analysis of Global Trends in Engineering Education Research. ASEAN J. Educ. Res. Technol. 2024, 3, 103–110.

- Yousuf, M.; Wahid, A. The role of artificial intelligence in education: Current trends and future prospects. In Proceedings of the 2021 International Conference on Information Science and Communications Technologies (ICISCT), Tashkent, Uzbekistan, 3–5 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7.

- Demszky, D.; Liu, J.; Hill, H.C.; Jurafsky, D.; Piech, C. Can Automated Feedback Improve Teachers’ Uptake of Student Ideas? Evidence from a Randomized Controlled Trial. J. Learn. Anal. 2023, 10, 145–162.

- Ouyang, F.; Zheng, L.; Jiao, P. Artificial Intelligence in Online Higher Education: A Systematic Review of Empirical Research from 2011 to 2020. Educ. Inf. Technol. 2022, 27, 7893–7925.

- Popenici, S.A.D.; Kerr, S. Exploring the Impact of Artificial Intelligence on Teaching and Learning in Higher Education. Res. Pract. Technol. Enhanc. Learn. 2020, 15, 22.

- Chen, X.; Xie, H.; Tao, X.; Wang, F.L.; Cao, J. Leveraging text mining and analytic hierarchy process for the automatic evaluation of online courses. Int. J. Mach. Learn. Cybern. 2024, 15, 4973–4998.

- Alawneh, Y.J.J.; Sleema, H.; Salman, F.N.; Alshammat, M.F.; Oteer, R.S.; ALrashidi, N.K.N. Adaptive Learning Systems: Revolutionizing Higher Education through AI-Driven Curricula. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 1–5.

- Murtaza, M.; Ahmed, Y.; Shamsi, J.A.; Sherwani, F.; Usman, M. AI-based personalized e-learning systems: Issues, challenges, and solutions. IEEE Access 2022, 10, 81323–81342.

- de León López, E.D. Managing Educational Quality through AI: Leveraging NLP to Decode Student Sentiments in Engineering Schools. In Proceedings of the 2024 Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, USA, 4–8 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–10.

- Albreiki, B.; Habuza, T.; Zaki, N. Framework for automatically suggesting remedial actions to help students at risk based on explainable ML and rule-based models. Int. J. Educ. Technol. High. Educ. 2022, 19, 49.

- Yang, E.; Beil, C. Ensuring data privacy in AI/ML implementation. New Dir. High. Educ. 2024, 2024, 63–78.

- Cole, J.P. The Family Educational Rights and Privacy Act (FERPA): Legal Issues. CRS Report R46799, Version 1. Congressional Research Service. 2021. Available online: https://crsreports.congress.gov/product/pdf/R/R46799 (accessed on 2 June 2025).

- Lam, P.X.; Mai, P.Q.H.; Nguyen, Q.H.; Pham, T.; Nguyen, T.H.H.; Nguyen, T.H. Enhancing educational evaluation through predictive student assessment modeling. Comput. Educ. Artif. Intell. 2024, 6, 100244.

- Hayadi, B.H.; Hariguna, T. Predictive Analytics in Mobile Education: Evaluating Logistic Regression, Random Forest, and Gradient Boosting for Course Completion Forecasting. Int. J. Interact. Mob. Technol. 2025, 19, 210.

- Li, Y.; Chen, H.; Xu, S.; Ge, Y.; Tan, J.; Liu, S.; Zhang, Y. Fairness in recommendation: Foundations, methods, and applications. ACM Trans. Intell. Syst. Technol. 2023, 14, 1–48.

- Onyelowe, K.C.; Kamchoom, V.; Ebid, A.M.; Hanandeh, S.; Llamuca Llamuca, J.L.; Londo Yachambay, F.P.; Allauca Palta, J.L.; Vishnupriyan, M.; Avudaiappan, S. Optimizing the utilization of Metakaolin in pre-cured geopolymer concrete using ensemble and symbolic regressions. Sci. Rep. 2025, 15, 6858.

- Yoon, M.; Lee, J.; Jo, I.H. Video learning analytics: Investigating behavioral patterns and learner clusters in video-based online learning. Internet High. Educ. 2021, 50, 100806.

- Song, C.; Shin, S.Y.; Shin, K.S. Implementing the dynamic feedback-driven learning optimization framework: A machine learning approach to personalize educational pathways. Appl. Sci. 2024, 14, 916.

- Anis, M. Leveraging artificial intelligence for inclusive English language teaching: Strategies and implications for learner diversity. J. Multidiscip. Educ. Res. 2023, 12, 54–70.

- Srinivasan, A.; Sitaram, S.; Ganu, T.; Dandapat, S.; Bali, K.; Choudhury, M. Predicting the performance of multilingual nlp models. arXiv 2021, arXiv:2110.08875.

- Varona, D.; Suárez, J.L. Discrimination, bias, fairness, and trustworthy AI. Appl. Sci. 2022, 12, 5826.

- Liang, W.; Tadesse, G.A.; Ho, D.; Fei-Fei, L.; Zaharia, M.; Zhang, C.; Zou, J. Advances, challenges and opportunities in creating data for trustworthy AI. Nat. Mach. Intell. 2022, 4, 669–677.

- Al-Huthaifi, R.; Li, T.; Huang, W.; Gu, J.; Li, C. Federated learning in smart cities: Privacy and security survey. Inf. Sci. 2023, 632, 833–857.

- Kavzoglu, T.; Teke, A. Predictive performances of ensemble machine learning algorithms in landslide susceptibility mapping using random forest, extreme gradient boosting (XGBoost) and natural gradient boosting (NGBoost). Arab. J. Sci. Eng. 2022, 47, 7367–7385.

- Ramesh, D.; Sanampudi, S.K. An automated essay scoring systems: A systematic literature review. Artif. Intell. Rev. 2022, 55, 2495–2527.

- Lu, Y.; Wang, D.; Chen, P.; Zhang, Z. Design and evaluation of trustworthy knowledge tracing model for intelligent tutoring system. IEEE Trans. Learn. Technol. 2024, 17, 1661–1676.

- Park, S.; Kim, H. Deep Learning for Adaptive Assessments in Online Learning Platforms. IEEE Trans. Learn. Technol. 2024, 17, 567–580.

- Harrati, N.; Bouchrika, I.; Tari, A.; Ladjailia, A. Exploring user satisfaction for e-learning systems via usage-based metrics and system usability scale analysis. Comput. Hum. Behav. 2016, 61, 463–471.

- Huang, A.Y.; Lu, O.H.; Yang, S.J. Effects of artificial Intelligence–Enabled personalized recommendations on learners’ learning engagement, motivation, and outcomes in a flipped classroom. Comput. Educ. 2023, 194, 104684.

- Gao, X.; He, P.; Zhou, Y.; Qin, X. Artificial intelligence applications in smart healthcare: A survey. Future Internet 2024, 16, 308.

- Phillips-Wren, G.; Mora, M.; Forgionne, G.; Gupta, J. An integrative evaluation framework for intelligent decision support systems. Eur. J. Oper. Res. 2009, 195, 642–652.

- Yin, R.; Zhu, C.; Zhu, J. Decision Support System for Evaluating Corpus-Based Word Lists for Use in English Language Teaching Contexts. IEEE Access 2025, 13, 106369–106386.

- Farhan, W.; Razmak, J.; Demers, S.; Laflamme, S. E-learning systems versus instructional communication tools: Developing and testing a new e-learning user interface from the perspectives of teachers and students. Technol. Soc. 2019, 59, 101192.

- Wang, D.; Yang, Q.; Abdul, A.; Lim, B.Y. Designing Theory-Driven User-Centric Explainable AI. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, Scotland, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–15.

- Suresh, V.; Agasthiya, R.; Ajay, J.; Gold, A.A.; Chandru, D. AI based Automated Essay Grading System using NLP. In Proceedings of the 2023 7th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 17–19 May 2023; pp. 547–552.

- Geethanjali, K.S.; Umashankar, N. Enhancing Educational Outcomes with Explainable AI: Bridging Transparency and Trust in Learning Systems. In Proceedings of the 2025 International Conference on Emerging Systems and Intelligent Computing (ESIC), Bhubaneswar, India, 8–9 February 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 325–328.

- Tursunalieva, A.; Alexander, D.L.; Dunne, R.; Li, J.; Riera, L.; Zhao, Y. Making sense of machine learning: A review of interpretation techniques and their applications. Appl. Sci. 2024, 14, 496.

- Chinta, S.V.; Wang, Z.; Yin, Z.; Hoang, N.; Gonzalez, M.; Quy, T.L.; Zhang, W. FairAIED: Navigating fairness, bias, and ethics in educational AI applications. arXiv 2024, arXiv:2407.18745.

- Olayinka, O.H. Big data integration and real-time analytics for enhancing operational efficiency and market responsiveness. Int. J. Sci. Res. Arch. 2021, 4, 280–296.

- Atadoga, A.; Farayola, O.A.; Ayinla, B.S.; Amoo, O.O.; Abrahams, T.O.; Osasona, F. A comparative review of data encryption methods in the USA and Europe. Comput. Sci. IT Res. J. 2024, 5, 447–460.

- Viberg, O.; Kizilcec, R.F.; Wise, A.F.; Jivet, I.; Nixon, N. Advancing equity and inclusion in educational practices with AI-powered educational decision support systems (AI-EDSS). Br. J. Educ. Technol. 2024, 55, 1974–1981.

- Siddique, S.; Haque, M.A.; George, R.; Gupta, K.D.; Gupta, D.; Faruk, M.J.H. Survey on machine learning biases and mitigation techniques. Digital 2023, 4, 1–68.

- Naveed, Q.N.; Qahmash, A.I.; Al-Razgan, M.; Qureshi, K.M.; Qureshi, M.R.N.M.; Alwan, A.A. Evaluating and prioritizing barriers for sustainable E-learning using analytic hierarchy process-group decision making. Sustainability 2022, 14, 8973.

- Ali, S.I.; Abdulqader, D.M.; Ahmed, O.M.; Ismael, H.R.; Hasan, S.; Ahmed, L.H. Consideration of web technology and cloud computing inspiration for AI and IoT role in sustainable decision-making for enterprise systems. J. Inf. Technol. Inform. 2024, 3, 4.

- Bulathwela, S.; Pérez-Ortiz, M.; Holloway, C.; Cukurova, M.; Shawe-Taylor, J. Artificial intelligence alone will not democratise education: On educational inequality, techno-solutionism and inclusive tools. Sustainability 2024, 16, 781.

- Ormerod, C.M.; Malhotra, A.; Jafari, A. Automated essay scoring using efficient transformer-based language models. arXiv 2021, arXiv:2102.13136.

- Mizumoto, A.; Eguchi, M. Exploring the potential of using an AI language model for automated essay scoring. Res. Methods Appl. Linguist. 2023, 2, 100050.

- Agrawal, R.; Mishra, H.; Kandasamy, I.; Terni, S.R.; WB, V. Revolutionizing subjective assessments: A three-pronged comprehensive approach with NLP and deep learning. Expert Syst. Appl. 2024, 239, 122470.

- Beseiso, M.; Alzubi, O.A.; Rashaideh, H. A novel automated essay scoring approach for reliable higher educational assessments. J. Comput. High. Educ. 2021, 33, 727–746.

- Elmassry, A.M.; Zaki, N.; Alsheikh, N.; Mediani, M. A Systematic Review of Pretrained Models in Automated Essay Scoring. IEEE Access 2025, 13, 121902–121917.

- Mizumoto, A.; Yasuda, S.; Tamura, Y. Identifying ChatGPT-Generated Texts in EFL Students’ Writing: Through Comparative Analysis of Linguistic Fingerprints. Appl. Corpus Linguist. 2024, 4, 100106.

- Nimy, E.; Mosia, M.; Chibaya, C. Identifying at-risk students for early intervention—a probabilistic machine learning approach. Appl. Sci. 2023, 13, 3869.

- Cheng, Q.; Benton, D.; Quinn, A. Building a motivating and autonomy environment to support adaptive learning. In Proceedings of the 2021 IEEE Frontiers in Education Conference (FIE), Lincoln, NE, USA, 13–16 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7.

| Feature | Traditional LMS | AI-Driven DSS |
|---|---|---|
| Scalability | Limited by manual processes | High, automated processing |
| Personalization | Static, uniform content | Adaptive, learner-specific |
| Feedback Speed | Delayed, manual grading | Real-time, automated |
| Assessment Type | Primarily multiple-choice | Diverse, including open-ended |
| Bias in Grading | Subjective, human-dependent | Objective, algorithm-driven |

| Component | Details |
|---|---|
| Databases | IEEE Xplore, Scopus, Web of Science |
| Keywords | “AI-based decision support,” “online learning,” “automated assessment,” “adaptive learning” |
| Search Period | January 2020–July 2025 |
| Inclusion Criteria | Peer-reviewed journal articles, conference papers, technical reports; empirical evaluations or practical AI-DSS implementations |
| Exclusion Criteria | Non-peer-reviewed sources, studies lacking technical depth |
| Analysis Approach | Case studies, comparative analysis of AI-DSS implementations |

| Period | Platform Features | DSS Features |
|---|---|---|
| 2000–2010 | Static content delivery, manual assessments | Rule-based systems for administrative tasks |
| 2010–2020 | Scalable MOOCs, basic personalization | Predictive analytics for enrollment and retention |
| 2020–2025 | AI-driven adaptive content, automated grading, real-time feedback | Advanced AI-DSS with predictive analytics, personalized feedback, and multimodal data integration |

| Technique | Application | Strengths | Limitations |
|---|---|---|---|
| Machine learning | Performance prediction, content recommendation, reinforcement learning for adaptive paths | High accuracy (85–88%), scalable, dynamic adaptation (12–18% retention gains) | Requires large, clean datasets; reinforcement learning is sample-inefficient and needs extensive interactions |
| Natural language processing | Automated essay scoring, chatbots, LLM-based feedback generation | Fast, reduces subjectivity (0.85 correlation with human grading), high consistency (90%) | Limited by linguistic complexity; LLMs risk hallucinations (15–25%) and bias amplification |
| Knowledge-based systems | Personalized learning paths | Rule-based precision | Limited adaptability to new contexts |
| Deep learning | Adaptive assessments, multimodal analysis | High precision (92%), versatile | High computational cost |
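
The strengths column above quotes accuracy, precision, and a correlation with human grading. As a minimal sketch of how such figures are typically computed, the plain-Python functions below implement the standard definitions. The function names and the toy data are illustrative assumptions, and Pearson's r is assumed for the correlation because the table does not say which coefficient the cited studies used.

```python
from math import sqrt


def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Share of predicted positives that are truly positive."""
    flagged = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not flagged:
        return 0.0  # nothing was flagged as positive
    return sum(t == positive for t in flagged) / len(flagged)


def pearson(xs, ys):
    """Pearson correlation, e.g. between model scores and human scores."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sd_x * sd_y)


# Hypothetical toy data: 1 = at-risk learner, 0 = not at risk.
labels = [1, 0, 1, 1, 0, 1, 0, 0]
predicted = [1, 0, 1, 0, 0, 1, 1, 0]
print(accuracy(labels, predicted))   # 0.75
print(precision(labels, predicted))  # 0.75
```

Accuracy and precision answer different questions: accuracy counts every correct decision, while precision only asks how trustworthy the positive flags are, which matters when a flag triggers an intervention.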

| Paper | Method | Result | Model | Performance Quality |

|---|---|---|---|---|

| Chen et al. [40] | Supervised ML with regression analysis | Predicted student dropout with 88% accuracy | Random forest | High, validated across 500+ students |

| Ludwig et al. [31] | NLP with Transformer models | Achieved 0.85 correlation with human grading | BERT | High, reduced grading time by 70% |

| Amin et al. [26] | Reinforcement learning with Q-learning | Optimized learning paths, improved retention by 15% | Q-learning network | Moderate, scalable but resource-intensive |

| Sridharan and Akilashri [39] | Deep learning with CNNs | Assessed multimodal data with 92% precision | Convolutional neural network | High, but computationally demanding |

| Boulhrir and Hamash [41] | Systematic review of 71 studies | Identified AIEd trends in profiling and prediction | N/A | High, comprehensive synthesis |

| Kabudi et al. [42] | Content analysis of 434 papers | Highlighted adaptive systems’ effectiveness | N/A | High, broad coverage of AIEd |

| Šarić et al. [43] | Cluster analysis on behavioral data | Grouped learners for tailored interventions | K-Means Clustering | Moderate, effective for small cohorts |

| Lu and Cutumisu [44] | Neural network with backpropagation | Automated grading with 87% accuracy | Backpropagation Neural Network | High, efficient for large classes |

| Happer [45] | Mixed-method review | Emphasized feedback’s role in learning gains | N/A | High, balanced qualitative-quantitative insights |

| Yim and Wegerif [46] | Survey of 120 educators | Noted 25% engagement boost with AI tools | N/A | Moderate, subjective but insightful |

| Novais et al. [38] | Fuzzy expert system design | Assessed soft skills with 80% reliability | Fuzzy logic system | Moderate, reliable for specific skills |

| Bañeres et al. [47] | Predictive modeling with ML | Identified at-risk students with 85% accuracy | Logistic regression | High, practical for early intervention |

| Islam et al. [48] | Small-sample experiment with AI tool | Predicted performance with 83% accuracy | XGBoost | Moderate, limited by sample size |

| Hu et al. [49] | AI-enabled discussion analysis | Measured curiosity with 90% consistency | Discussion analytics model | High, reduced teacher workload |

| Morales-Chan et al. [50] | Generative AI for assignment design | Generated 100+ personalized tasks | GPT-based model | High, innovative but untested long-term |

| Zhou et al. [51] | Deep learning with HCI integration | Assessed teaching quality at 7/10 score | Deep learning system | Moderate, needs refinement |

| Heil and Ifenthaler [52] | Systematic review of 138 studies | Found 18.8% used automated grading | N/A | High, robust evidence base |

| Shao et al. [53] | ML analytics on behavior data | Improved understanding of student patterns | Decision tree | High, actionable insights |

| Amrane-Cooper et al. [54] | Review of proctored exams | Highlighted AI’s role in 20% efficiency gain | N/A | Moderate, context-specific results |

| Akinwalere and Ivanov [55] | Learning analytics with predictive modeling | Identified at-risk learners with 89% accuracy | Gradient Boosting | High, effective for early intervention |

| Ma et al. [56] | Systematic review and bibliometric analysis | Mapped AI roles in language learning | N/A | High, extensive coverage of trends |

| Kuzminykh et al. [57] | Personalized feedback system design | Improved engagement by 30% | Adaptive feedback model | High, tailored to student demographics |

| Fodouop Kouam [58] | Experimental study on adaptive tutoring | Enhanced learning outcomes by 22% | Intelligent tutoring system | High, context-specific benefits |

| Susilawati [59] | Longitudinal bibliometric analysis | Traced AI evolution in engineering education | N/A | High, detailed historical insight |

| Yousuf and Wahid [60] | Multi-perspective analysis of AI tools | Highlighted learner, teacher, system benefits | N/A | High, broad conceptual framework |

| Demszky et al. [61] | Randomized controlled trial on feedback | Increased teacher uptake by 35% | NLP feedback model | High, scalable across courses |

| Liu and Chen [25] | Theoretical framework on AI assessment | Proposed real-time feedback systems | N/A | Moderate, theoretical but innovative |

| Ouyang et al. [62] | Empirical study on online HE AIEd | Improved retention by 18% | Adaptive learning system | High, validated in online settings |

| Popenici and Kerr [63] | Qualitative analysis of AI impact | Identified ethical and practical challenges | N/A | Moderate, insightful for policy |

| System | Data Handling | AI Integration | Key Innovation | Superiority |
|---|---|---|---|---|
| Proposed AI-DSS | Federated learning | ML, NLP, RL hybrid | Dynamic feedback loop | Enhanced privacy (15% bias reduction); adaptive retention (18% gain) |
| Coursera | Centralized | ML recommendations | Profile-based personalization | Limited privacy; our model adds RL for dynamic paths |
| edX | LMS-integrated | NLP grading | Scalable assessments | Static; our hybrid improves multimodality and explainability |
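The federated data handling listed for the proposed AI-DSS can be illustrated with a minimal aggregation sketch. The table names federated learning only; the size-weighted averaging rule used here (commonly called FedAvg), the function name `federated_average`, and the example weights are assumptions made for illustration, not the authors' implementation.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-client model weights into one global weight vector.

    Each client (e.g., an institution) trains locally and shares only its
    weights; raw learner records never leave the client. Weights are
    averaged in proportion to each client's number of training samples.
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("one sample count is required per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


# Hypothetical example: two institutions with 100 and 300 learners.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])
print(global_weights)
```

In this sketch the larger client contributes three quarters of the global model, which is the usual motivation for size weighting; a production system would also need secure transport and repeated training rounds.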

| Data Type | Role in AI-DSS |
|---|---|
| Student performance data | Predict academic outcomes and identify at-risk learners for targeted interventions |
| Behavioral data | Analyze engagement and study habits to optimize content delivery and learning paths |
| Demographic data | Personalize interventions and ensure inclusivity for diverse learner populations |

| Model | Application | Strengths | Limitations |
|---|---|---|---|
| Supervised machine learning | Performance prediction, student success forecasting | High prediction accuracy, scalable for various datasets | Requires large labeled datasets, potential bias in data |
| Natural language processing (Transformers) | Automated essay scoring, intelligent chatbots, feedback generation | High precision in language tasks, fast processing with contextual understanding | Struggles with complex semantics, requires large computational resources |
| Knowledge-based systems | Adaptive curriculum sequencing, rule-based tutoring systems | Transparent decision-making, interpretable rules | Limited adaptability to dynamic learning contexts |
| Deep learning models | Multimodal assessments, image/audio analysis, adaptive learning systems | Versatile across data types, high precision with large datasets | High computational cost, often lacks explainability |

| Metric | Definition | Trade-Offs |
|---|---|---|
| Accuracy | Ratio of correct predictions to total predictions | High DL accuracy (92%) increases latency (30–60%); balance via hybrid models. |
| Response time | Delay before feedback is delivered | Real-time response (<1 s) trades off accuracy in complex tasks; edge computing mitigates resource use. |
| User satisfaction | Survey-based rating of utility | High personalization boosts satisfaction (85%), but satisfaction may decline if bias persists. |
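The response-time row above can be checked empirically. The following is a minimal sketch, assuming a nearest-rank 95th percentile as the summary statistic and the 1 s real-time budget from the table; `hypothetical_feedback` is a stand-in for a real feedback component and is not part of any system described in the review.

```python
import math
import time


def nearest_rank_percentile(samples, percent):
    """Return the nearest-rank percentile (0 < percent <= 100) of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(percent * len(ordered) / 100)
    return ordered[rank - 1]


def measure_latency(feedback_fn, inputs):
    """Time one call of feedback_fn per input; return latencies in seconds."""
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        feedback_fn(item)
        latencies.append(time.perf_counter() - start)
    return latencies


def hypothetical_feedback(answer):
    """Placeholder feedback rule used only to have something to time."""
    return "correct" if answer.strip().lower() == "paris" else "try again"


latencies = measure_latency(hypothetical_feedback, ["Paris", "Rome", " paris "])
p95 = nearest_rank_percentile(latencies, 95)
print(f"p95 latency: {p95:.6f} s, within 1 s budget: {p95 < 1.0}")
```

Reporting a high percentile rather than the mean is a design choice: it reflects the delay experienced by the slowest interactions, which is what a real-time budget is meant to bound.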

| Metric | Definition |
|---|---|
| Accuracy | The proportion of correct predictions made by the AI model over total predictions. |
| Precision | The ratio of true positive predictions to the total predicted positive cases. |
| Recall | The ratio of true positive predictions to the total actual positive cases. |
| User Satisfaction | The perceived usefulness, usability, and acceptance of the system, often measured through validated surveys or questionnaires. |
| Engagement | Behavioral indicators such as interaction frequency, task completion rates, or time-on-task, reflecting user involvement. |
| Scalability | The system’s ability to maintain effectiveness and efficiency when handling increased data volume or user load. |
| Response Time | The time taken by the system to generate feedback, results, or recommendations after user input. |
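The accuracy, precision, and recall definitions above translate directly into code. The sketch below is illustrative only: the labels are hypothetical (1 marks an at-risk learner), and the helper names `confusion_counts`, `accuracy`, `precision`, and `recall` are introduced here rather than taken from any reviewed system.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # predicted positive, actually positive
    fp: int  # predicted positive, actually negative
    tn: int  # predicted negative, actually negative
    fn: int  # predicted negative, actually positive


def confusion_counts(y_true, y_pred, positive=1):
    """Tally the four confusion-matrix cells for a binary task."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return ConfusionCounts(tp, fp, tn, fn)


def accuracy(c):
    """Correct predictions over total predictions."""
    total = c.tp + c.fp + c.tn + c.fn
    return (c.tp + c.tn) / total if total else 0.0


def precision(c):
    """True positives over all predicted positives."""
    predicted_pos = c.tp + c.fp
    return c.tp / predicted_pos if predicted_pos else 0.0


def recall(c):
    """True positives over all actual positives."""
    actual_pos = c.tp + c.fn
    return c.tp / actual_pos if actual_pos else 0.0


# Hypothetical labels: 1 = at-risk learner, 0 = not at risk.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
print(accuracy(counts), precision(counts), recall(counts))  # 0.75 0.75 0.75
```

For early-intervention use cases, recall is often the metric to watch, since a missed at-risk learner (a false negative) is usually costlier than an unnecessary check-in.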

| Challenge | Mitigation Strategy |
|---|---|
| Model Interpretability | Adopt explainable AI models (e.g., decision trees) and visualization tools |
| Algorithmic Bias | Use diverse, representative datasets and bias detection algorithms |
| Scalability | Implement cloud-based architectures and optimize computational efficiency |
| Data Security | Employ encryption and anonymization, and ensure compliance with GDPR/FERPA |
| Ethical Implications | Establish ethical design frameworks and include stakeholder input |
| Cost and Infrastructure | Utilize open-source tools and cost-effective cloud infrastructure |
| Resistance to Adoption | Offer training and emphasize evidence-based pedagogical benefits |
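As one concrete reading of the "bias detection algorithms" mitigation above, the sketch below compares positive-prediction rates across learner groups. The choice of demographic parity as the fairness check, the function names, and the sample data are all assumptions for illustration; the review does not prescribe a specific bias metric.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups, positive=1):
    """Return the share of positive predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][1] += 1
        if pred == positive:
            counts[group][0] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def demographic_parity_gap(predictions, groups, positive=1):
    """Largest difference in positive-prediction rate between any two groups.

    A gap of 0.0 means every group is flagged at the same rate; larger
    values indicate the model treats groups differently and warrants review.
    """
    rates = positive_rate_by_group(predictions, groups, positive)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical "at-risk" flags for two learner groups, A and B.
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rate_by_group(predictions, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(predictions, groups))  # 0.5
```

A large gap does not prove unfairness on its own, since base rates may differ between groups, but it is an inexpensive signal for deciding where a closer audit is needed.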

| Platform | AI Technique | Outcome | Limitations |
|---|---|---|---|
| MOOC Platform | NLP (automated grading) | 70% reduction in grading time; 15% increase in satisfaction | Bias in non-standard responses; high computational cost |
| Adaptive system | Reinforcement learning | 12% grade improvement; 20% engagement increase | Computational complexity; limited learner autonomy |

| Research Gap | Recommended Study |
|---|---|
| Long-term effects on student outcomes | Multi-year longitudinal research to monitor knowledge retention and career progression over 5–10 years |
| Cross-cultural relevance | Cross-regional comparative analyses to refine AI-DSS algorithms for diverse cultural settings |
| Ethical considerations | Studies on mitigating biases and assessing the psychological impacts of AI-generated feedback |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mahamad, S.; Chin, Y.H.; Zulmuksah, N.I.N.; Haque, M.M.; Shaheen, M.; Nisar, K. Technical Review: Architecting an AI-Driven Decision Support System for Enhanced Online Learning and Assessment. Future Internet 2025, 17, 383. https://doi.org/10.3390/fi17090383